A Novel Electrocardiogram Biometric Identification Method Based on Temporal-Frequency Autoencoding

Abstract

1. Introduction

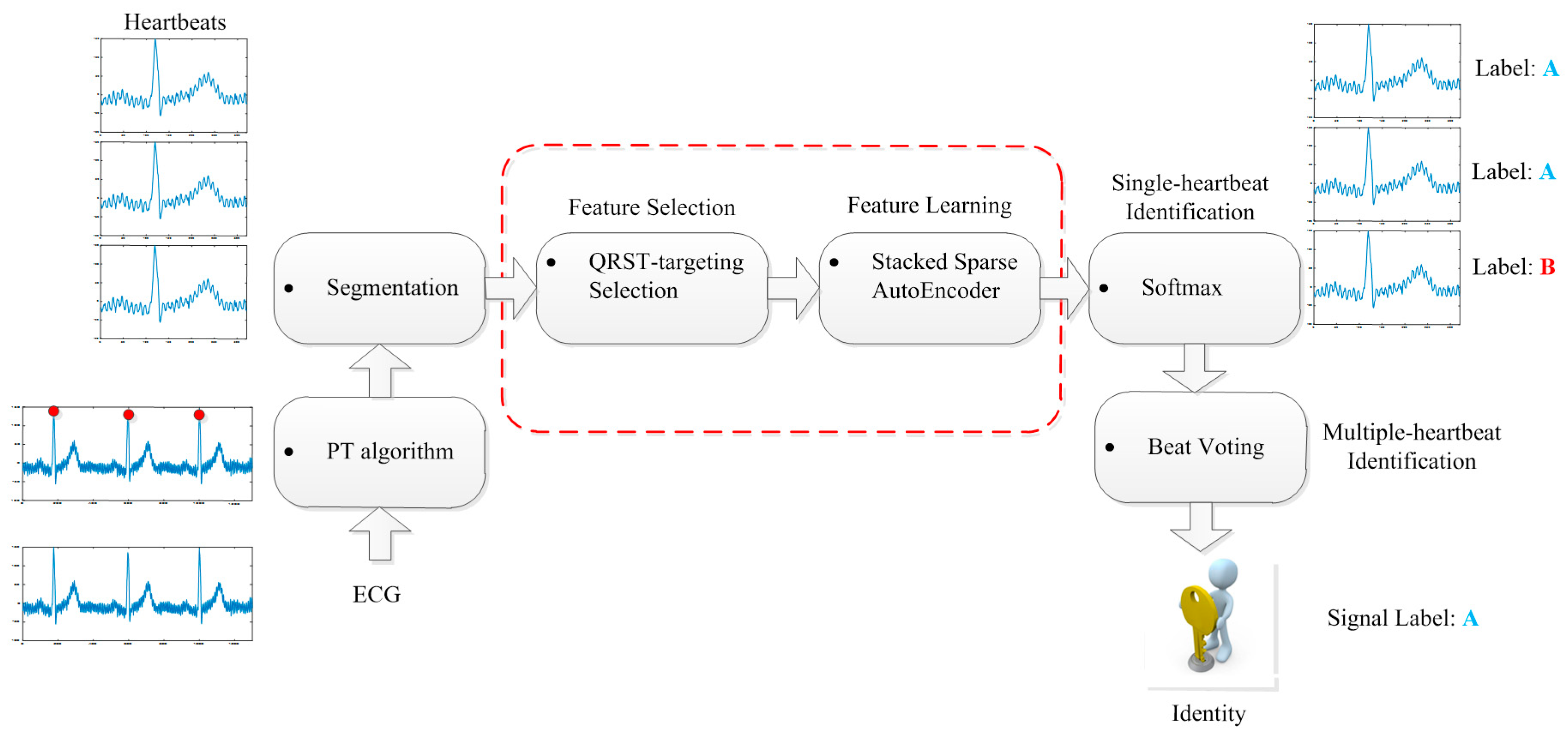

2. Database

3. Proposed Methodology

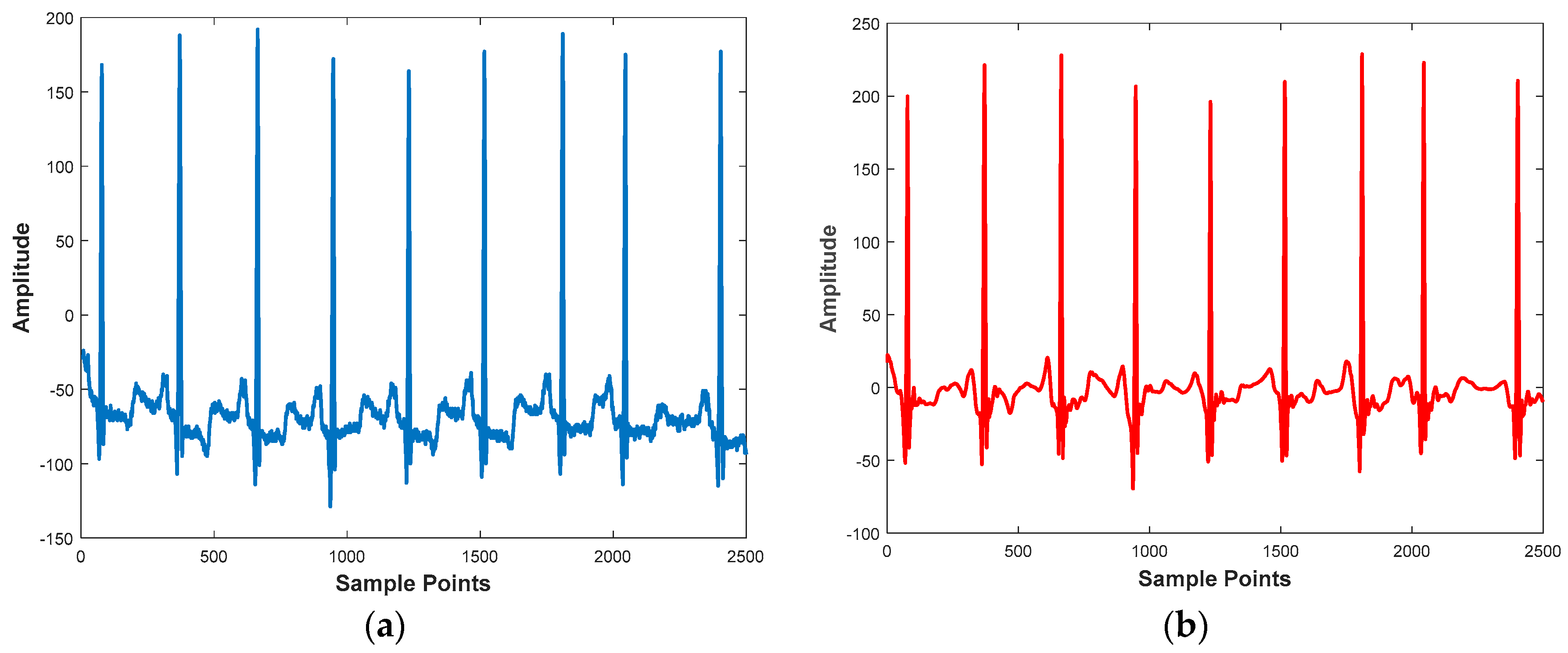

3.1. Preprocessing

3.1.1. Denoising

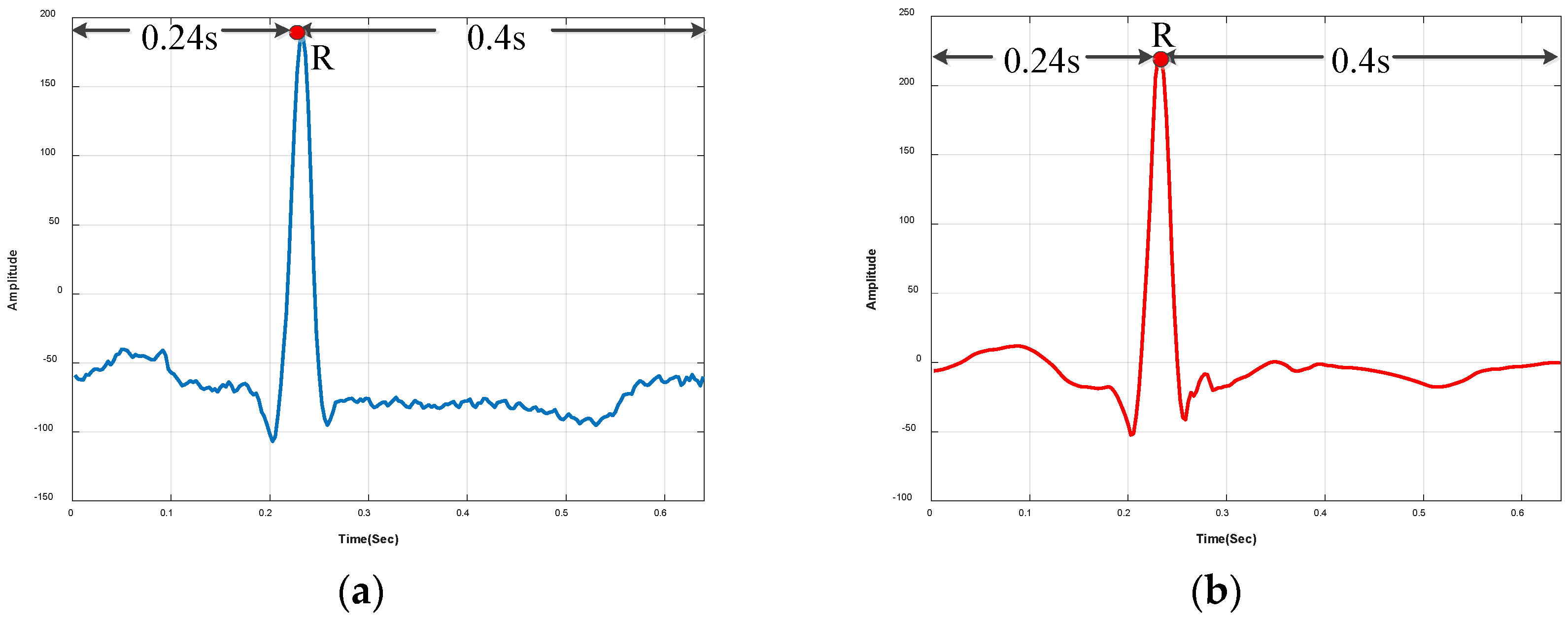

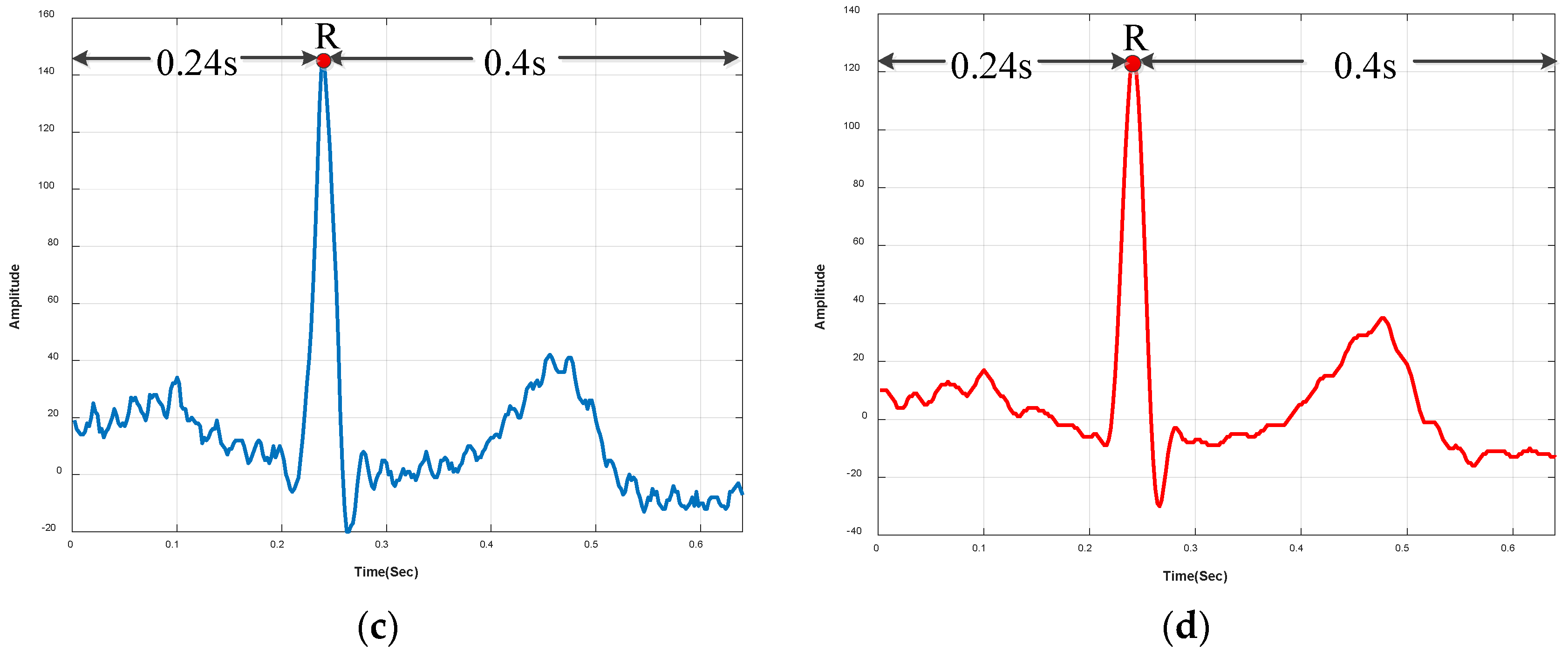

3.1.2. R-Peak Detection and Heartbeat Segmentation

3.2. Feature Selection

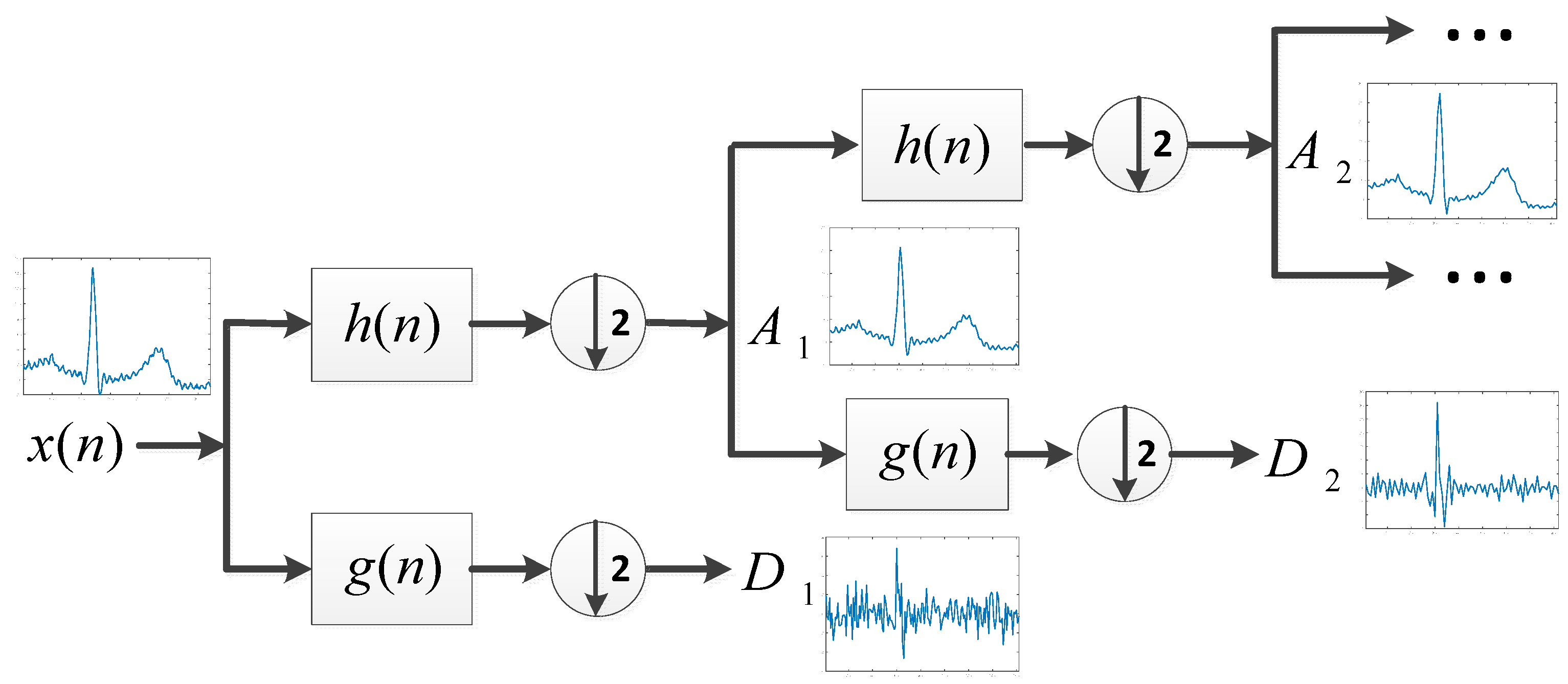

3.2.1. Decomposition

3.2.2. Selection

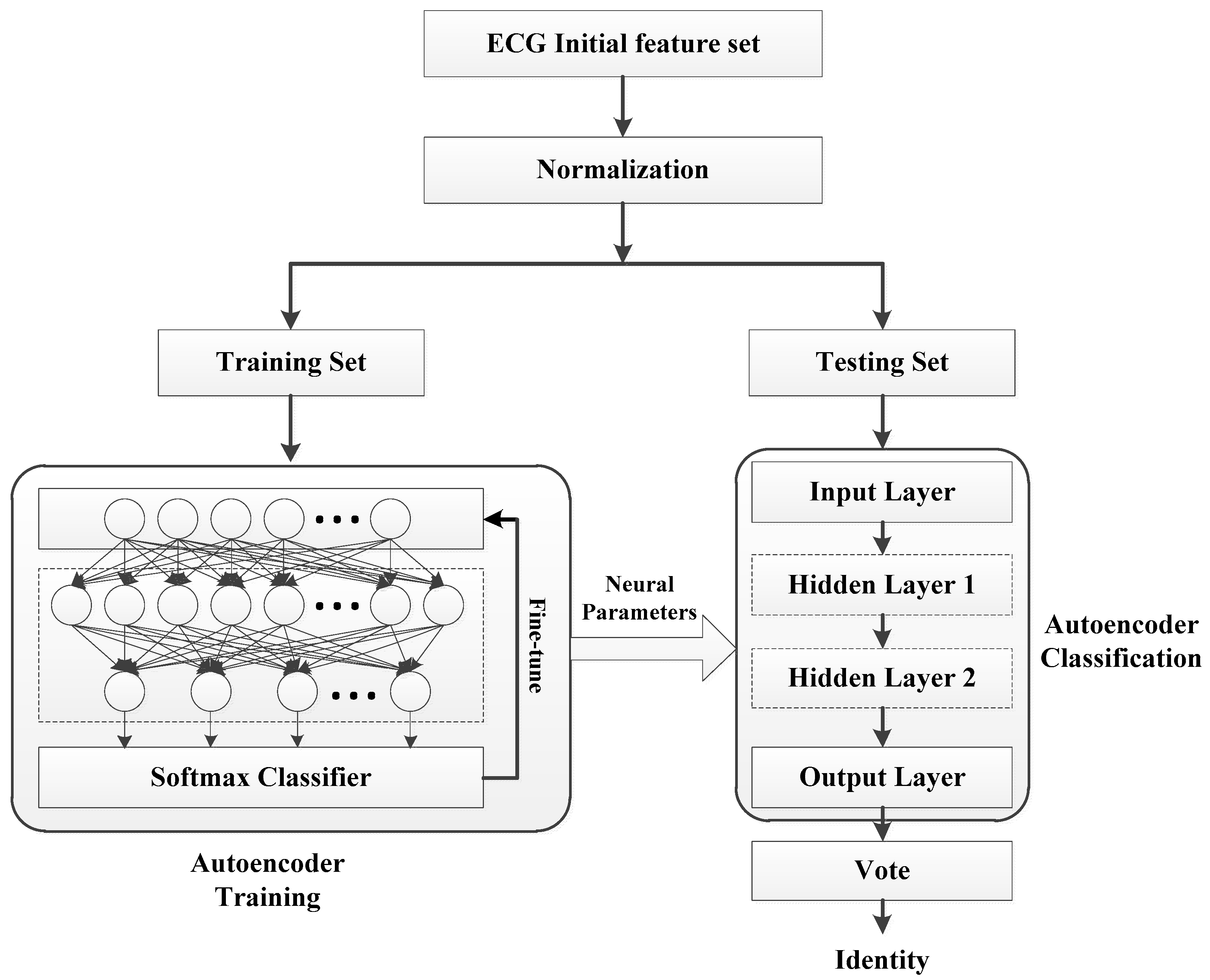

3.3. Feature Learning

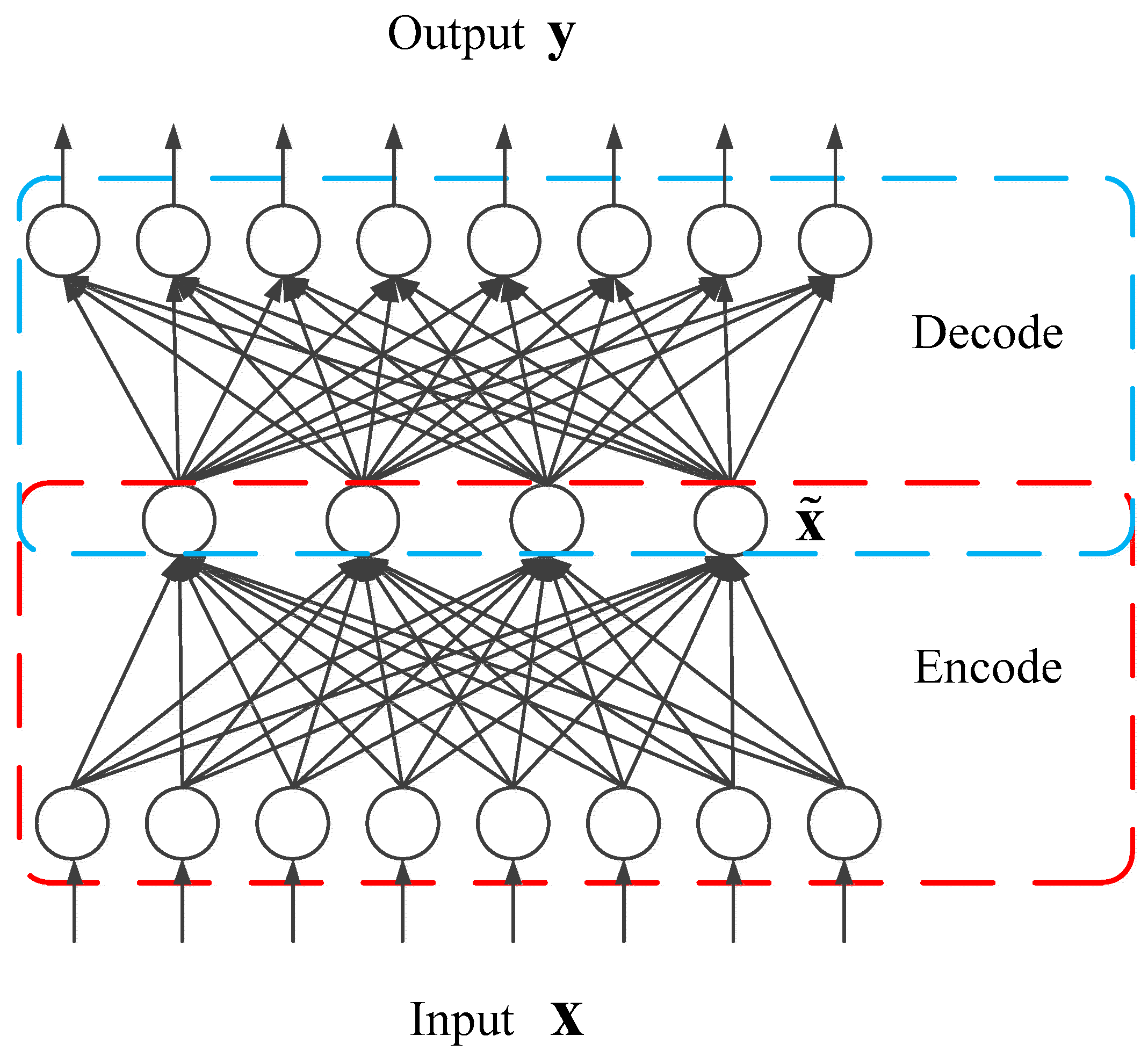

3.3.1. Sparse Autoencoder (S-AE)

3.3.2. Softmax Classifier

3.3.3. Stacked Sparse Autoencoder

3.4. Multiple-Heartbeat Identification

4. Experiments

4.1. Experimental Setup

4.2. Results

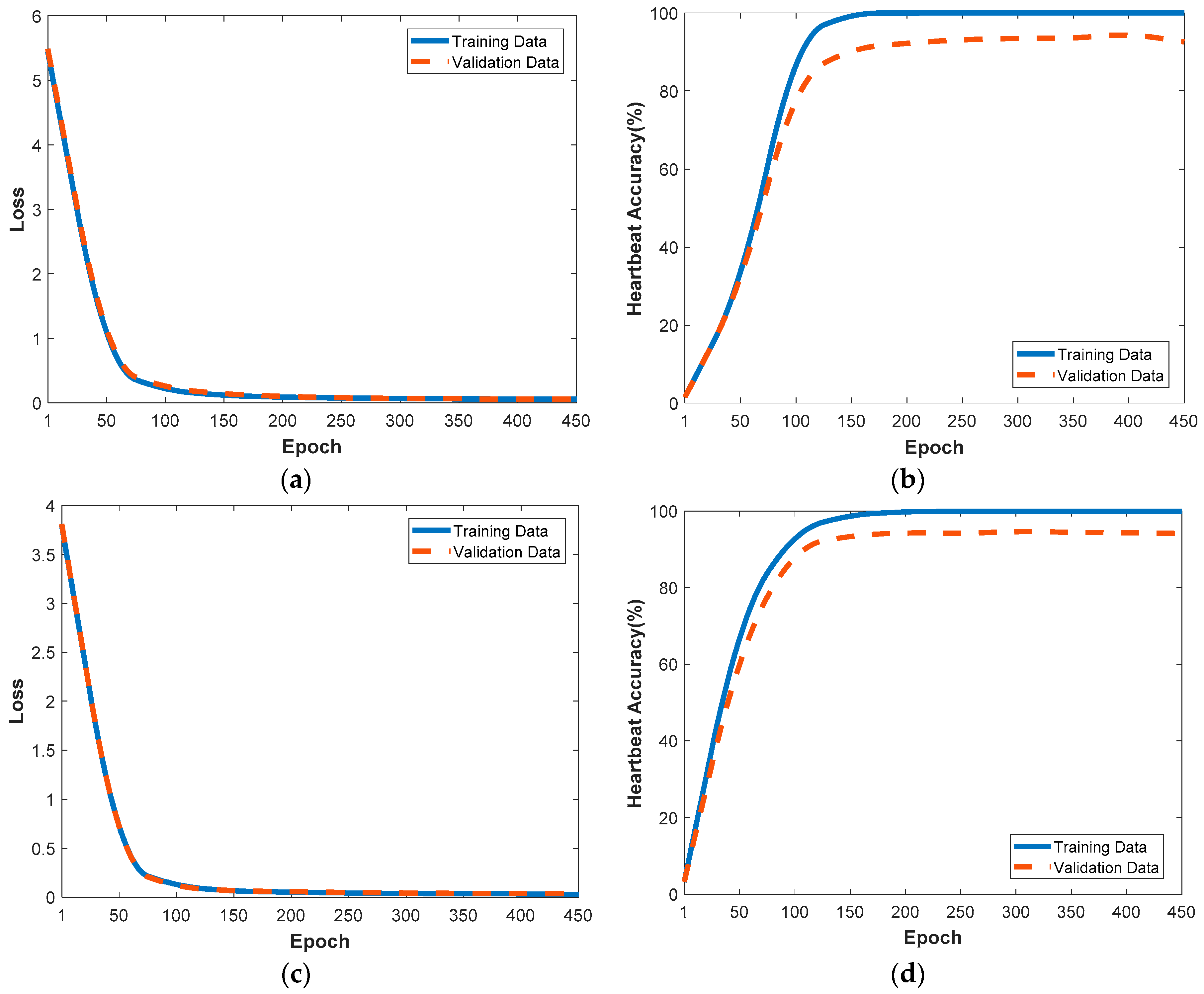

4.2.1. Performance Evaluation with the Training and Validation Data

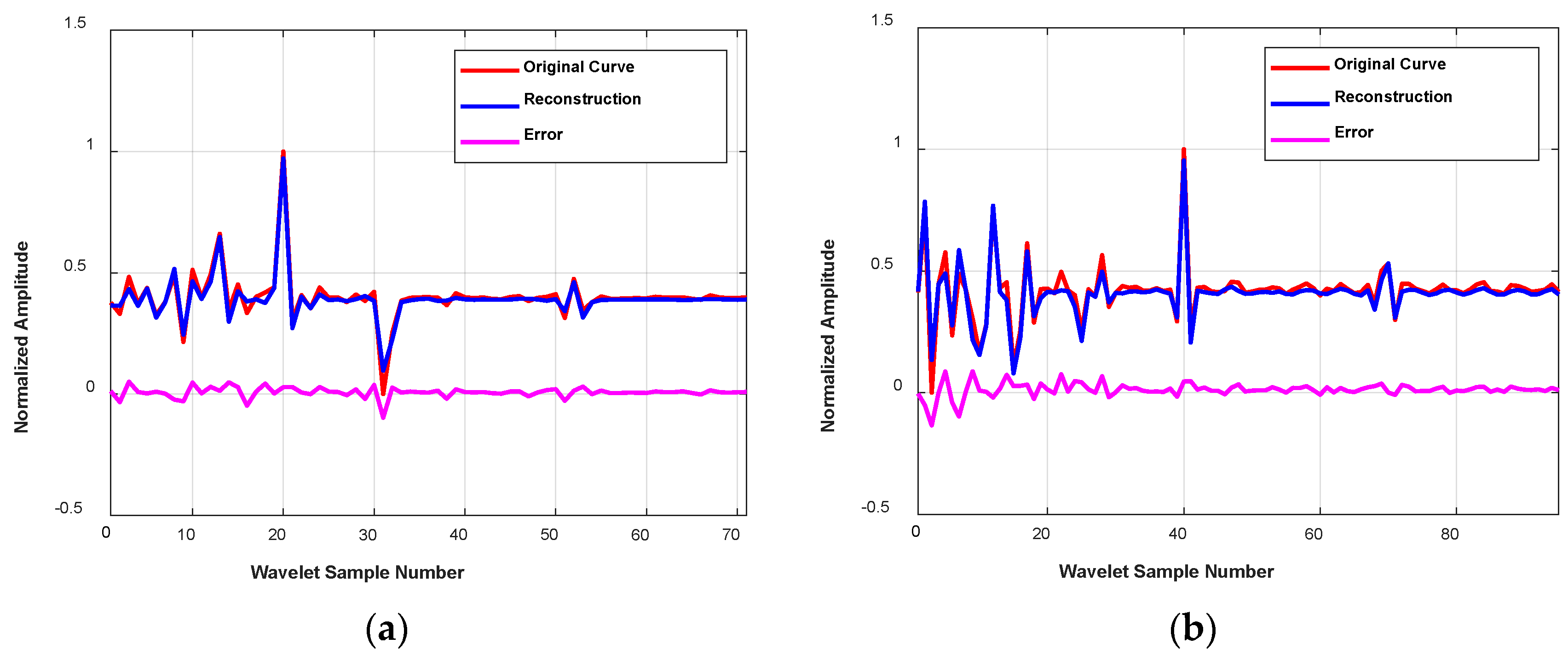

4.2.2. Reconstruction of Temporal-Frequency Curves with S-AEs

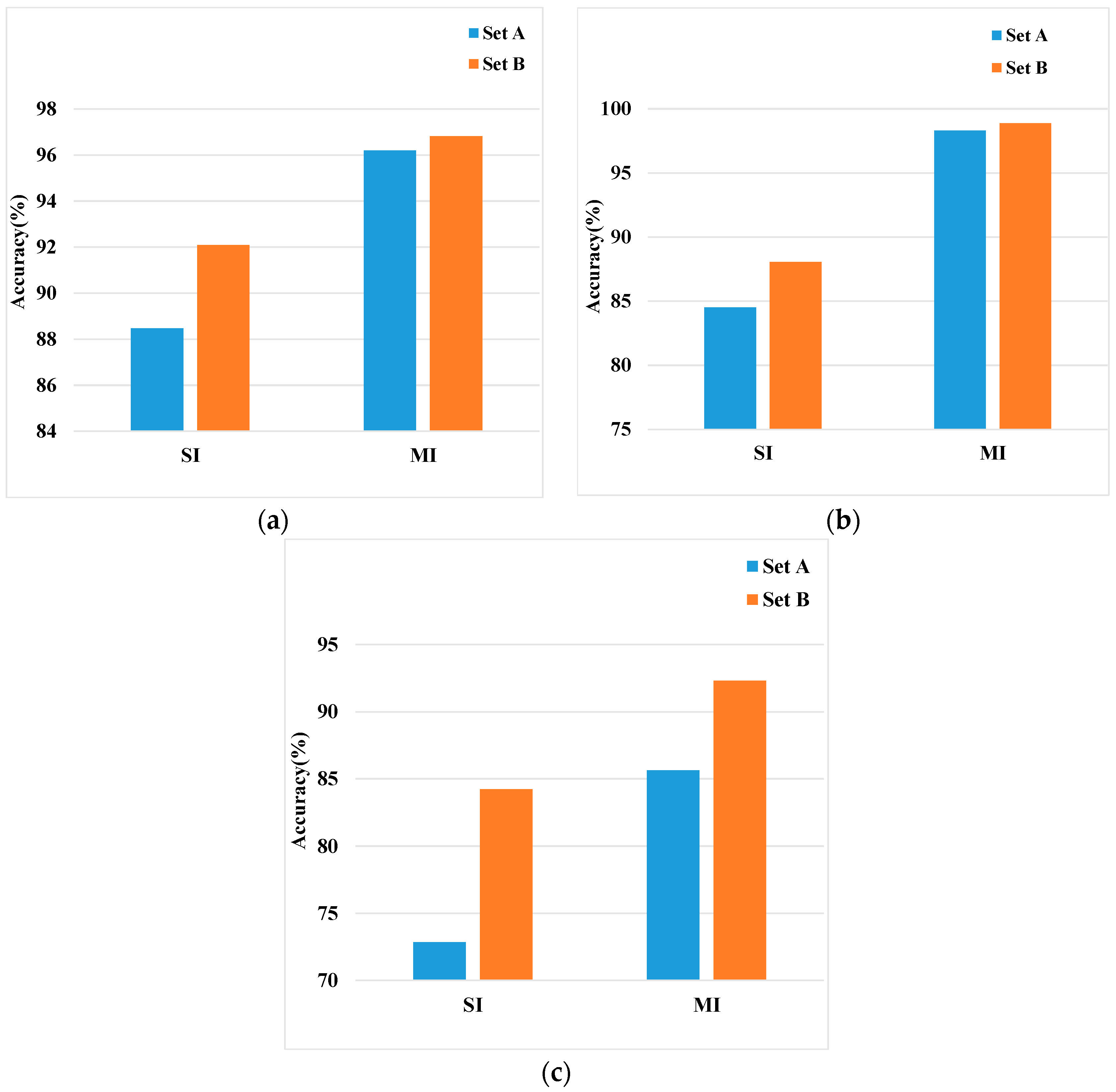

4.2.3. Noisy vs. Denoised

4.2.4. Comparison of Different Features

- Time domain feature: after ECG signal segmentation, we directly used the waveform of each cardiac cycle as the feature vector. It describes the amplitude, slope, and angle of the original signal in the time domain but contains no explicit frequency information, hence the name time domain feature.

- FFT (Fast Fourier Transform) feature: the FFT is widely used in signal processing. Unlike the DWT, it represents the signal with sines and cosines spanning the whole cardiac cycle, so it describes the signal from a global perspective and ignores information localized in time and frequency.

- DWT Feature-Selected: following prior literature, we selected as features the wavelet coefficients whose frequency bands correspond to the QRST components. Specifically, this feature consists of the detail coefficients at levels 3 to 9, covering 0.488 Hz to 62.5 Hz for ECG-ID and 0.351 Hz to 45 Hz for MIT-BIH-AHA.

- DWT Feature-Low: in addition to the DWT Feature-Selected coefficients, this feature contains the approximation coefficients, which correspond to 0–0.488 Hz for ECG-ID (0–0.351 Hz for MIT-BIH-AHA).

- DWT Feature-High: this feature consists of the detail coefficients covering 0.488 Hz to 125 Hz for ECG-ID (0.351 Hz to 90 Hz for MIT-BIH-AHA), i.e., the DWT Feature-Selected bands plus the level-2 detail band.
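The three DWT variants differ only in which coefficient bands are concatenated. The sketch below is a minimal illustration, assuming a Haar wavelet and a 9-level decomposition; the wavelet actually used in the paper is not stated in this section, and `haar_step`, `wavedec_haar`, and `dwt_features` are hypothetical names. The level-to-feature mapping is read off the frequency table of decomposition levels.

```python
import math
from typing import Dict, List, Tuple


def haar_step(x: List[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar DWT: returns (approximation, detail)."""
    if len(x) % 2:
        x = x + [x[-1]]  # pad odd-length input by repeating the last sample
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail


def wavedec_haar(x: List[float], levels: int) -> Tuple[List[float], Dict[int, List[float]]]:
    """Multilevel Haar decomposition: final approximation plus details keyed by level."""
    details: Dict[int, List[float]] = {}
    approx = list(x)
    for level in range(1, levels + 1):
        approx, details[level] = haar_step(approx)
    return approx, details


def dwt_features(x: List[float], kind: str, levels: int = 9) -> List[float]:
    """Concatenate coefficient bands for one DWT feature variant (hypothetical mapping).

    selected: details at levels 3-9 (0.488-62.5 Hz at 500 Hz sampling)
    low:      selected + level-9 approximation (0-0.488 Hz)
    high:     details at levels 2-9 (0.488-125 Hz)
    """
    approx, details = wavedec_haar(x, levels)
    first = {"selected": 3, "low": 3, "high": 2}[kind]
    feats: List[float] = []
    for level in range(levels, first - 1, -1):  # coarse to fine
        feats.extend(details[level])
    if kind == "low":
        feats = approx + feats
    return feats
```

Because the Haar transform is orthonormal, the coefficients preserve the beat's energy, which makes the band split easy to sanity-check; a production version would more likely call a wavelet library and the paper's own mother wavelet.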

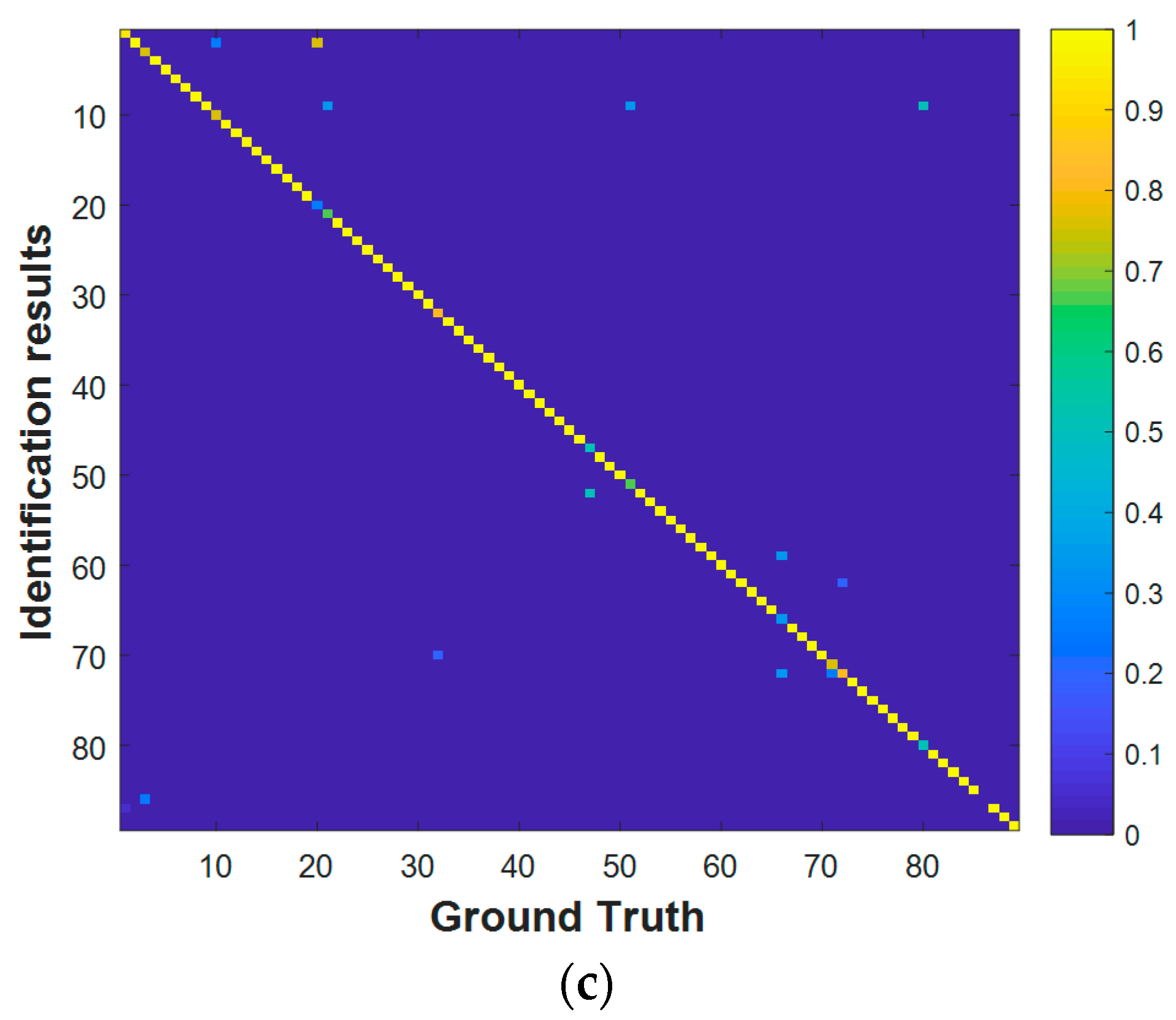

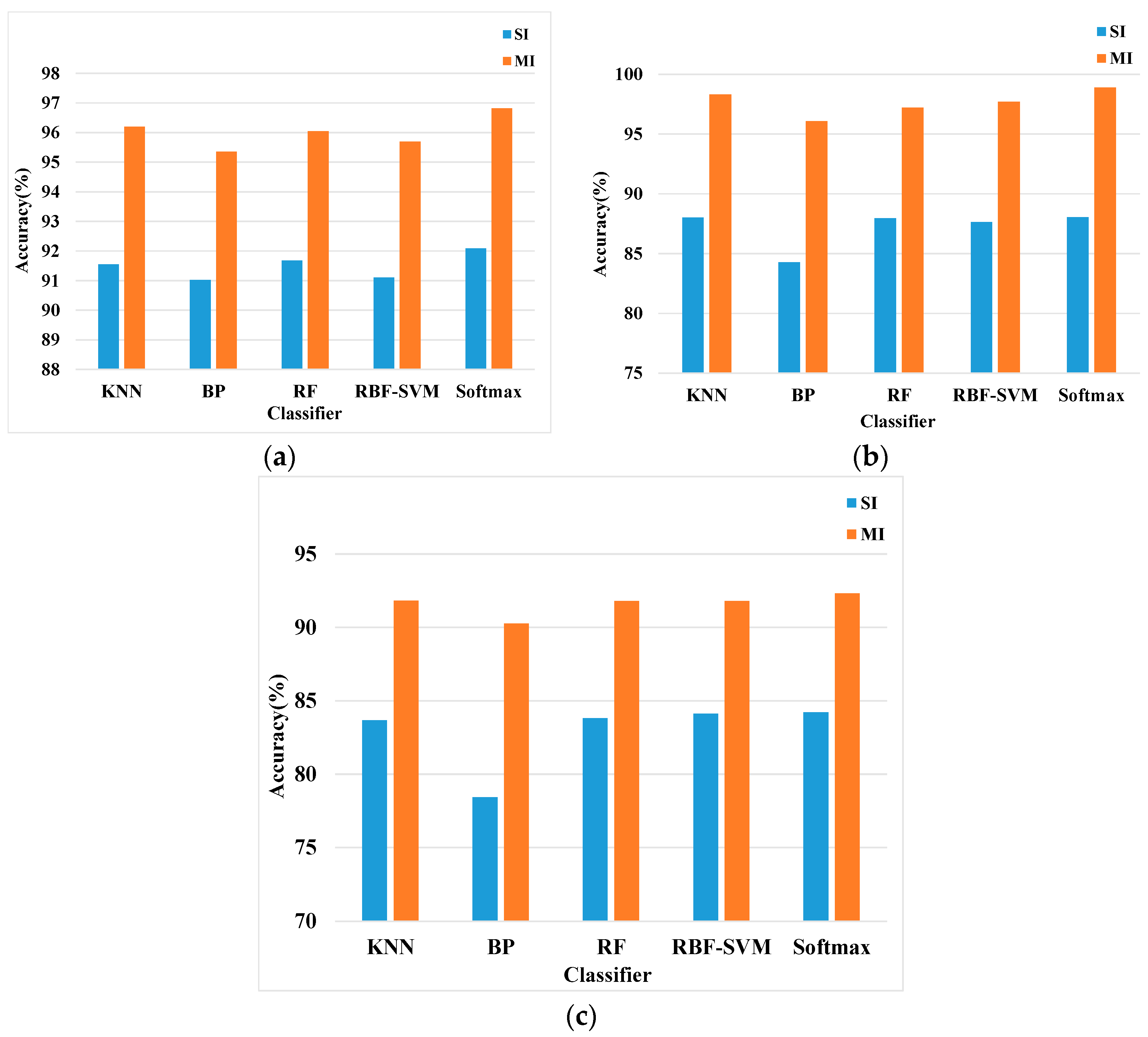

4.2.5. Classification with Different Classifiers

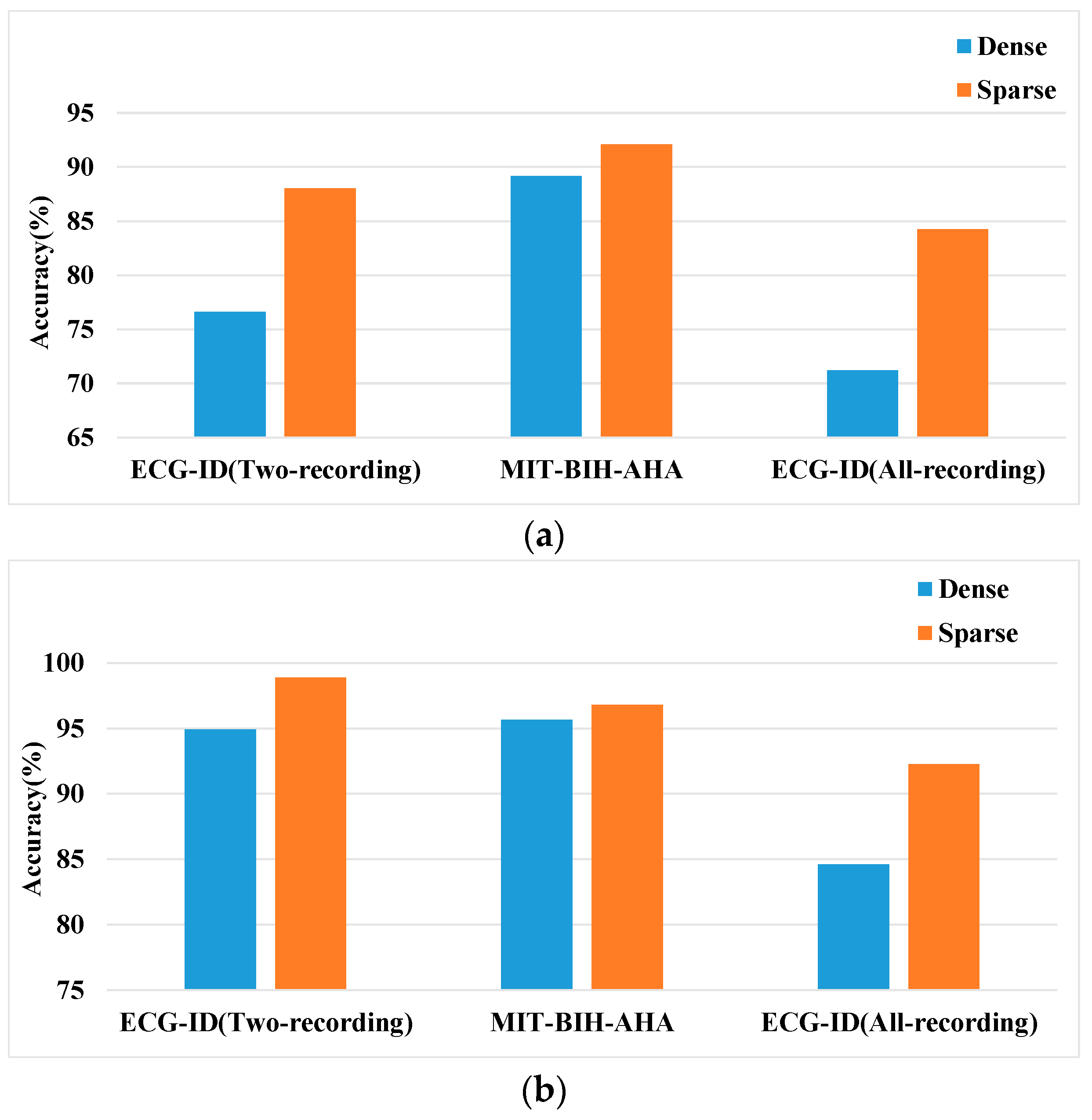

4.2.6. Sparse vs. Dense

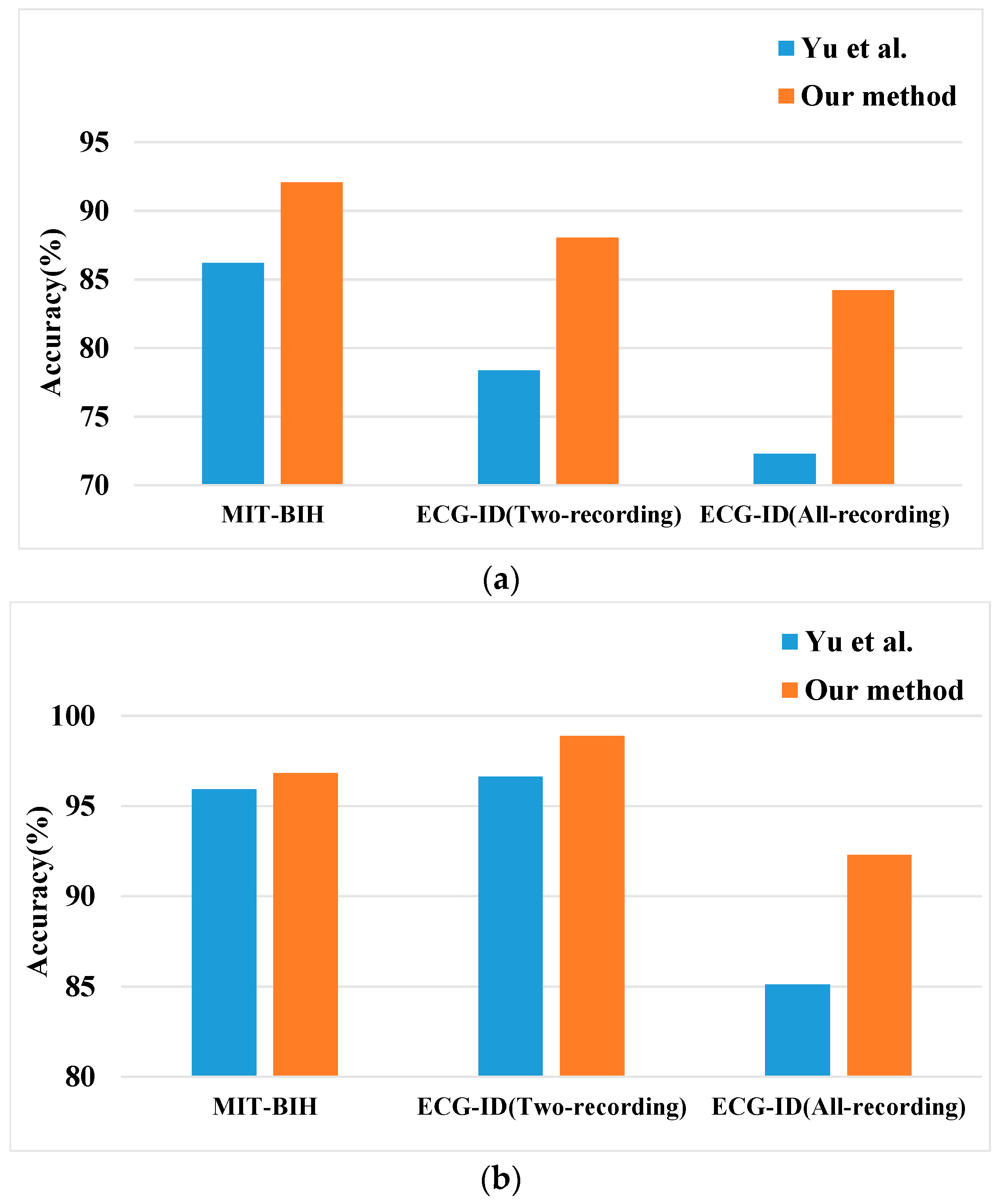

4.2.7. Comparison with Existing Literature

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Karimian, N.; Guo, Z.; Tehranipoor, M.; Forte, D. Highly Reliable Key Generation from Electrocardiogram (ECG). IEEE Trans. Biomed. Eng. 2017, 64, 1400–1411.

- Pinto, J.R.; Cardoso, J.S.; Lourenco, A. Evolution, Current Challenges, and Future Possibilities in ECG Biometrics. IEEE Access 2018, 6, 34746–34776.

- Fratini, A.; Sansone, M.; Bifulco, P.; Cesarelli, M. Individual identification via electrocardiogram analysis. Biomed. Eng. Online 2015, 14, 1–23.

- Biel, L.; Pettersson, O.; Philipson, L.; Wide, P. ECG analysis: A new approach in human identification. IEEE Trans. Instrum. Meas. 2001, 50, 808–812.

- Nemirko, A.; Lugovaya, T. Biometric human identification based on electrocardiogram. In Proceedings of the XIIIth Russian Conference on Mathematical Methods of Pattern Recognition, Moscow, Russia, 20–26 June 2005; pp. 387–390.

- Irvine, J.M.; Israel, S.A.; Scruggs, W.T.; Worek, W.J. eigenPulse: Robust human identification from cardiovascular function. Pattern Recognit. 2008, 41, 3427–3435.

- Sidek, K.A.; Khalil, I. Enhancement of low sampling frequency recordings for ECG biometric matching using interpolation. Comput. Methods Programs Biomed. 2013, 109, 13–25.

- Odinaka, I.; Lai, P.H.; Kaplan, A.D.; O’Sullivan, J.A.; Sirevaag, E.J.; Rohrbaugh, J.W. ECG Biometric Recognition: A Comparative Analysis. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1812–1824.

- Zhang, Q.; Zhou, D.; Zeng, X. HeartID: A Multiresolution Convolutional Neural Network for ECG-Based Biometric Human Identification in Smart Health Applications. IEEE Access 2017, 5, 11805–11816.

- Zhao, Z.; Zhang, Y.; Deng, Y.; Zhang, X. ECG authentication system design incorporating a convolutional neural network and generalized S-Transformation. Comput. Biol. Med. 2018, 102, 168–179.

- Blanco-Velasco, M.; Weng, B.; Barner, K.E. ECG signal denoising and baseline wander correction based on the empirical mode decomposition. Comput. Biol. Med. 2008, 38, 1–13.

- Wang, D.; Si, Y.; Yang, W.; Zhang, G.; Liu, T. A Novel Heart Rate Robust Method for Short-Term Electrocardiogram Biometric Identification. Appl. Sci. 2019, 9, 201.

- Dar, M.N.; Akram, M.U.; Shaukat, A.; Khan, M.A. ECG Based Biometric Identification for Population with Normal and Cardiac Anomalies Using Hybrid HRV and DWT Features. In Proceedings of the 2015 5th International Conference on IT Convergence and Security (ICITCS), Kuala Lumpur, Malaysia, 24–27 August 2015; pp. 1–5.

- Chen, Z.; Li, W. Multisensor Feature Fusion for Bearing Fault Diagnosis Using Sparse Autoencoder and Deep Belief Network. IEEE Trans. Instrum. Meas. 2017, 66, 1693–1702.

- Zhang, Y.; Zhang, E.; Chen, W. Deep neural network for halftone image classification based on sparse auto-encoder. Eng. Appl. Artif. Intell. 2016, 50, 245–255.

- Chen, L.L.; He, Y. Identification of Atrial Fibrillation from Electrocardiogram Signals Based on Deep Neural Network. J. Med. Imaging Health Inform. 2019, 9, 838–846.

- Oh, S.L.; Ng, E.Y.K.; Tan, R.S.; Acharya, U.R. Automated beat-wise arrhythmia diagnosis using modified U-net on extended electrocardiographic recordings with heterogeneous arrhythmia types. Comput. Biol. Med. 2019, 105, 92–101.

- Gogna, A.; Majumdar, A.; Ward, R. Semi-supervised Stacked Label Consistent Autoencoder for Reconstruction and Analysis of Biomedical Signals. IEEE Trans. Biomed. Eng. 2017, 64, 2196–2205.

- Eduardo, A.; Aidos, H.; Fred, A. ECG-based Biometrics using a Deep Autoencoder for Feature Learning: An Empirical Study on Transferability. In Proceedings of the 6th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2017), Porto, Portugal, 24–26 February 2017; pp. 463–470.

- Goldberger, A.L.; Amaral, L.A.N.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, E215–E220.

- Jané, R.; Laguna, P.; Thakor, N.V.; Caminal, P. Adaptive baseline wander removal in the ECG: Comparative analysis with cubic spline technique. In Proceedings of Computers in Cardiology, Durham, NC, USA, 11–14 October 1992; pp. 143–146.

- Date, A.A.; Ghongade, R.B. Performance of Wavelet Energy Gradient Method for QRS Detection. In Proceedings of the 4th International Conference on Intelligent and Advanced Systems (ICIAS2012), Kuala Lumpur, Malaysia, 12–14 June 2012; pp. 876–881.

- Rakshit, M.; Das, S. An efficient ECG denoising methodology using empirical mode decomposition and adaptive switching mean filter. Biomed. Signal Process. Control 2018, 40, 140–148.

- Agante, P.M.; Marques de Sa, J.P. ECG noise filtering using wavelets with soft-thresholding methods. In Proceedings of the 26th Annual Meeting on Computers in Cardiology (AMCC 1999), Hannover, Germany, 26–29 September 1999; pp. 535–538.

- Kabir, M.A.; Shahnaz, C. Denoising of ECG signals based on noise reduction algorithms in EMD and wavelet domains. Biomed. Signal Process. Control 2012, 7, 481–489.

- Donoho, D.L.; Johnstone, I.M. Adapting to unknown smoothness via wavelet shrinkage. J. Am. Stat. Assoc. 1995, 90, 1200–1224.

- Donoho, D.L.; Johnstone, I.M. Ideal spatial adaptation by wavelet shrinkage. Biometrika 1994, 81, 425–455.

- Yao, C.; Si, Y. ECG P, T wave complex detection algorithm based on lifting wavelet. J. Jilin Univ. Technol. Ed. 2013, 43, 177–182.

- Pan, J.; Tompkins, W.J. A real-time QRS detection algorithm. IEEE Trans. Biomed. Eng. 1985, 32, 230–236.

- Complete Pan Tompkins Implementation ECG QRS Detector. Available online: https://www.mathworks.com/matlabcentral/fileexchange/45840-complete-pan-tompkins-implementation-ecg-qrs-detector/content/pantompkin.m (accessed on 23 April 2019).

- Tuerxunwaili; Nor, R.M.; Rahman, A.W.B.A.; Sidek, K.A.; Ibrahim, A.A. Electrocardiogram Identification: Use a Simple Set of Features in QRS Complex to Identify Individuals. In Proceedings of the 12th International Conference on Computing and Information Technology (IC2IT), Khon Kaen, Thailand, 7–8 July 2016; pp. 139–148.

- Gargiulo, F.; Fratini, A.; Sansone, M.; Sansone, C. Subject identification via ECG fiducial-based systems: Influence of the type of QT interval correction. Comput. Methods Programs Biomed. 2015, 121, 127–136.

- Simova, I.; Bortolan, G.; Christov, I. ECG attenuation phenomenon with advancing age. J. Electrocardiol. 2018, 51, 1029–1034.

- Matveev, M.; Christov, I.; Krasteva, V.; Bortolan, G.; Simov, D.; Mudrov, N.; Jekova, I. Assessment of the stability of morphological ECG features and their potential for person verification/identification. In Proceedings of the 21st International Conference on Circuits, Systems, Communications and Computers (CSCC 2017), Crete Island, Greece, 14–17 July 2017; pp. 1–4.

- Mallat, S.G. A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 674–693.

- Guler, I.; Ubeyli, E.D. ECG beat classifier designed by combined neural network model. Pattern Recognit. 2005, 38, 199–208.

- Polikar, R.; Udpa, L.; Udpa, S.S.; Taylor, T. Frequency invariant classification of ultrasonic weld inspection signals. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 1998, 45, 614–625.

- Deeplearntoolbox for MATLAB. Available online: https://github.com/rasmusbergpalm/DeepLearnToolbox (accessed on 18 May 2019).

- MATLAB Code of minFunc Toolkit. Available online: https://github.com/ganguli-lab/minFunc (accessed on 18 May 2019).

- Salloum, R.; Kuo, C.C.J. ECG-based biometrics using recurrent neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 2062–2066.

- Sarkar, A.; Abbott, A.L.; Doerzaph, Z. ECG Biometric Authentication Using a Dynamical Model. In Proceedings of the 7th International Conference on Biometric Theory, Applications and Systems (BTAS), Arlington, VA, USA, 8–11 September 2015; pp. 1–6.

- Altan, G.; Kutlu, Y.; Yeniad, M. ECG based human identification using Second Order Difference Plots. Comput. Methods Programs Biomed. 2019, 170, 81–93.

- Yu, J.; Si, Y.; Liu, X.; Wen, D.; Luo, T.; Lang, L. ECG identification based on PCA-RPROP. In Proceedings of the International Conference on Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management, Vancouver, BC, Canada, 9–14 July 2017; pp. 419–432.

- Tan, R.; Perkowski, M. ECG Biometric Identification Using Wavelet Analysis Coupled with Probabilistic Random Forest. In Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016; pp. 182–187.

- Dar, M.N.; Akram, M.U.; Usman, A.; Khan, S.A. ECG Biometric Identification for General Population Using Multiresolution Analysis of DWT Based Features. In Proceedings of the 2015 2nd International Conference on Information Security and Cyber Forensics (InfoSec 2015), Cape Town, South Africa, 15–17 November 2015; pp. 5–10.

- Maaten, L.v.d.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Level | ECG-ID Approximation (Hz) | ECG-ID Detail (Hz) | MIT-BIH-AHA Approximation (Hz) | MIT-BIH-AHA Detail (Hz) |
|---|---|---|---|---|
| 1 | 0–125 | 125–250 | 0–90 | 90–180 |
| 2 | 0–62.5 | 62.5–125 | 0–45 | 45–90 |
| 3 | 0–31.25 | 31.25–62.5 | 0–22.5 | 22.5–45 |
| 4 | 0–15.625 | 15.625–31.25 | 0–11.25 | 11.25–22.5 |
| 5 | 0–7.812 | 7.812–15.625 | 0–5.625 | 5.625–11.25 |
| 6 | 0–3.906 | 3.906–7.812 | 0–2.812 | 2.812–5.625 |
| 7 | 0–1.953 | 1.953–3.906 | 0–1.406 | 1.406–2.812 |
| 8 | 0–0.976 | 0.976–1.953 | 0–0.703 | 0.703–1.406 |
| 9 | 0–0.488 | 0.488–0.976 | 0–0.351 | 0.351–0.703 |
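Each row follows from dyadic halving of the Nyquist band: at level j, the approximation nominally covers 0 to f_s/2^(j+1) and the detail covers f_s/2^(j+1) to f_s/2^j. The level-1 detail bands (125–250 Hz and 90–180 Hz) imply sampling rates of 500 Hz for ECG-ID and 360 Hz for MIT-BIH-AHA. A short sketch that regenerates the table under that ideal-filter assumption (`dwt_bands` is a hypothetical name):

```python
from typing import List, Tuple

Band = Tuple[float, float]


def dwt_bands(fs: float, levels: int) -> List[Tuple[int, Band, Band]]:
    """Return (level, approximation band, detail band) in Hz for an ideal dyadic DWT."""
    rows = []
    for j in range(1, levels + 1):
        split = fs / 2 ** (j + 1)  # boundary between approximation and detail at level j
        rows.append((j, (0.0, split), (split, fs / 2 ** j)))
    return rows


for name, fs in (("ECG-ID", 500.0), ("MIT-BIH-AHA", 360.0)):
    print(name)
    for j, (a_lo, a_hi), (d_lo, d_hi) in dwt_bands(fs, 9):
        print(f"  level {j}: approx {a_lo:g}-{a_hi:.4f} Hz, detail {d_lo:.4f}-{d_hi:.4f} Hz")
```

The table truncates these values to three decimals (for example, 0.9765625 Hz is listed as 0.976), and real wavelet filters have non-ideal transition bands, so the edges should be read as nominal.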

| Parameter | Value |
|---|---|
| Number of hidden layers | 2 |
| Nodes per layer | MIT-BIH-AHA: 71-200-50-47; ECG-ID: 95-200-50-89 |
| Sparsity parameter | 0.1 |
| Weight decay | 3 × 10⁻⁵ |
| Sparsity penalty weight | 3 |
| Maximum epochs | 400 |
| Activation function | Sigmoid |
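The sparsity parameter (ρ = 0.1), weight decay (λ = 3 × 10⁻⁵), and sparsity penalty weight (β = 3) listed above match the three terms of the widely used sparse autoencoder objective: half the mean squared reconstruction error, λ/2 times the sum of squared weights, and β times the KL divergence between ρ and each hidden unit's mean activation. Assuming that standard formulation (this section does not state the loss explicitly), a pure-Python sketch of the cost of one S-AE layer with sigmoid activations could be written as follows; `sae_cost`, `kl`, and `affine_sigmoid` are hypothetical names.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine_sigmoid(W: Matrix, b: List[float], x: List[float]) -> List[float]:
    """Compute sigmoid(W x + b) for one sample."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bi) for row, bi in zip(W, b)]


def kl(rho: float, rho_hat: float) -> float:
    """KL divergence between Bernoulli(rho) and Bernoulli(rho_hat)."""
    return rho * math.log(rho / rho_hat) + (1 - rho) * math.log((1 - rho) / (1 - rho_hat))


def sae_cost(X: List[List[float]], W1: Matrix, b1: List[float], W2: Matrix, b2: List[float],
             rho: float = 0.1, lam: float = 3e-5, beta: float = 3.0) -> float:
    """Reconstruction error + weight decay + KL sparsity penalty (defaults from the table).

    Assumes inputs scaled to [0, 1], since the decoder also uses a sigmoid.
    """
    m = len(X)
    hidden = [affine_sigmoid(W1, b1, x) for x in X]
    recon = [affine_sigmoid(W2, b2, h) for h in hidden]
    mse = sum(sum((r - xi) ** 2 for r, xi in zip(rx, x)) for rx, x in zip(recon, X)) / (2 * m)
    decay = lam / 2 * sum(w * w for W in (W1, W2) for row in W for w in row)
    rho_hat = [sum(h[j] for h in hidden) / m for j in range(len(b1))]  # mean activation per unit
    sparsity = beta * sum(kl(rho, r) for r in rho_hat)
    return mse + decay + sparsity
```

For the stacked configuration in the table (for example 71-200-50-47 on MIT-BIH-AHA), the two hidden layers would presumably each be trained with a cost of this form (71 to 200, then 200 to 50) before the 50-unit code reaches the softmax layer; an actual optimizer such as minFunc would also need the gradient, which this sketch omits.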

| Feature | ECG-ID (Two-Recording) SI (%) | ECG-ID (Two-Recording) MI (%) | MIT-BIH-AHA SI (%) | MIT-BIH-AHA MI (%) | ECG-ID (All-Recording) SI (%) | ECG-ID (All-Recording) MI (%) |
|---|---|---|---|---|---|---|
| Time domain feature | 83.78 | 96.62 | 91.08 | 96.48 | 81.66 | 88.71 |
| FFT feature | 57.51 | 75.28 | 84.48 | 94.34 | 57.17 | 71.79 |
| DWT Feature-Low | 62.99 | 76.96 | 87.84 | 95.67 | 60.56 | 77.43 |
| DWT Feature-High | 89.44 | 98.31 | 91.64 | 96.80 | 79.89 | 89.74 |
| DWT Feature-Selected | 88.04 | 98.87 | 92.09 | 96.82 | 84.22 | 92.3 |
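The MI columns report identification from several heartbeats rather than one. The fusion rule is not spelled out in this excerpt; the sketch below assumes plain majority voting over per-beat predicted labels, and `majority_vote` and `mi_accuracy` (including the tie-breaking rule) are hypothetical choices.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def majority_vote(beat_labels: Sequence[Hashable]) -> Hashable:
    """Return the most frequent per-beat label; ties go to the label seen first."""
    if not beat_labels:
        raise ValueError("beat_labels must be non-empty")
    counts = Counter(beat_labels)
    best = max(counts.values())
    return next(label for label in beat_labels if counts[label] == best)


def mi_accuracy(groups: List[Sequence[Hashable]], truth: List[Hashable]) -> float:
    """Accuracy when each group of beats is identified by a single voted label."""
    hits = sum(majority_vote(g) == t for g, t in zip(groups, truth))
    return hits / len(truth)
```

Under such a scheme a few misclassified beats can be outvoted, which is consistent with MI exceeding SI in every row of the table.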

| Classifier Type | Parameter Setting |
|---|---|
| KNN | Number of nearest neighbors: 3 |
| BP | Network layers: 3; hidden units: 50 |
| RF | Number of decision trees: 500 |
| RBF-SVM | Error penalty factor: 1; kernel parameter: 0.1 |
| Softmax | Input units: 50; output units: 89 (ECG-ID), 47 (MIT-BIH-AHA) |
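The softmax classifier in the last row maps the 50-dimensional code from the second S-AE to one probability per enrolled subject (89 for ECG-ID, 47 for MIT-BIH-AHA). A numerically stable sketch of its forward pass, with hypothetical weights `W` (one row per subject) and bias `b`:

```python
import math
from typing import List


def softmax(z: List[float]) -> List[float]:
    """Stable softmax: subtracting the max logit avoids overflow in exp."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict_subject(W: List[List[float]], b: List[float], code: List[float]) -> int:
    """Index of the most probable subject for one code vector (e.g. 50-dimensional)."""
    logits = [sum(w * c for w, c in zip(row, code)) + bi for row, bi in zip(W, b)]
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)
```

Training such a layer would typically minimize the cross-entropy between these probabilities and the subject labels; at test time the predicted identity is simply the arg-max.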

| Related Works | Traditional Denoising Algorithm Required? | Individuals | Feature Extraction Method | Classifier | MI Accuracy |
|---|---|---|---|---|---|
| Zhang et al. [9] | Yes | 47 | DWT + 1-CNN | Softmax | 91.1% |
| Sarkar et al. [41] | Yes | 45 | Fiducial features | LDA + KNN | 95% |
| Dar et al. [13] | Yes | 47 | Haar transform and HRV/GBFS | RF | 95.85% |
| Salloum et al. [40] | Yes | 47 | RNN / GRU / LSTM | Softmax | 93.6% / 96.8% / 100% |
| Altan et al. [42] | Yes | 47 | LGA on SODP | SFFS + KNN | 95.12% |
| Our Approach | No | 47 | DWT + S-AEs | Softmax | 96.82% |

| Related Works | Traditional Denoising Algorithm Required? | Individuals | Feature Extraction Method | Classifier | MI Accuracy |
|---|---|---|---|---|---|
| Salloum et al. [40] | Yes | 89 | RNN / GRU / LSTM | Softmax | 91.7% / 94.4% / 100% |
| Yu et al. [43] | Yes | 88 | PCA | RPROP | 96.60% |
| Tan et al. [44] | Yes | 89 | Temporal, amplitude, and angle + DWT coefficients | Random forests + WDIST KNN | 100% |
| Our Approach | No | 89 | DWT + S-AEs | Softmax | 98.87% |

| Related Works | Traditional Denoising Algorithm Required? | Individuals | Feature Extraction Method | Classifier | MI Accuracy |
|---|---|---|---|---|---|
| Dar et al. [45] | Yes | 90 | Haar transform/GBFS | KNN | 83.2% |
| Dar et al. [13] | Yes | 90 | Haar transform and HRV/GBFS | RF | 83.9% |
| Altan et al. [42] | Yes | 90 | LGA on SODP | SFFS + KNN | 91.26% |
| Our Approach | No | 89 | DWT + S-AEs | Softmax | 92.3% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, D.; Si, Y.; Yang, W.; Zhang, G.; Li, J. A Novel Electrocardiogram Biometric Identification Method Based on Temporal-Frequency Autoencoding. Electronics 2019, 8, 667. https://doi.org/10.3390/electronics8060667
