2.1. TSN Synchronous Approach

As the target applications of the TSN standard have diversified, two different forwarding approaches have emerged in the standard. The first is the synchronous approach, which includes the cyclic queuing and forwarding (CQF) [12] function. The CQF is rooted in the residential Ethernet TG era, which dealt with a small number of large-bandwidth flows. In the synchronous approach, time is divided into slots whose start and end times are synchronized across all the nodes in the network. In order to guarantee a bounded delay, the allocation of slots at all the nodes is precisely planned at the packet level: a predefined slot has to be allocated to a predefined packet at every node along the path of the packet. This allocation process, which is not defined in the standard, is quite burdensome even in networks with a moderate number of flows. The slot allocation problem is similar to the job-shop scheduling problem [13], which is NP-complete. It has been shown that with 200 flows the slot allocation takes around 200 s on a contemporary personal computer, even with a heuristic algorithm [13].
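To make the slot allocation problem concrete, the following is a minimal sketch under simplifying assumptions of our own, not those of the standard or of [13]: every flow sends one packet per cycle of `num_slots` slots, a packet sent in slot s on the first link of its path uses slot (s + h) mod `num_slots` on its h-th link (a CQF-like one-slot-per-hop pipeline), and each (link, slot) pair carries at most one packet. The function `allocate_slots` and the greedy first-fit rule are hypothetical illustrations, not the heuristic evaluated in [13].

```python
from typing import Dict, List, Optional, Set, Tuple

def allocate_slots(paths: Dict[str, List[str]], num_slots: int) -> Dict[str, Optional[int]]:
    """Greedy first-fit slot allocation (hypothetical heuristic).

    A flow that starts in slot s on the first link of its path is assumed to
    use slot (s + h) mod num_slots on its h-th link, and every (link, slot)
    pair can carry at most one packet per cycle."""
    taken: Set[Tuple[str, int]] = set()
    start_slot: Dict[str, Optional[int]] = {}
    for flow, path in paths.items():
        start_slot[flow] = None  # stays None if no conflict-free start exists
        for s in range(num_slots):
            cells = [(link, (s + hop) % num_slots) for hop, link in enumerate(path)]
            if not any(cell in taken for cell in cells):
                taken.update(cells)
                start_slot[flow] = s
                break
    return start_slot

if __name__ == "__main__":
    paths = {"f1": ["A", "B"], "f2": ["B", "C"], "f3": ["A", "B", "C"]}
    print(allocate_slots(paths, num_slots=2))  # f3 cannot be placed
    print(allocate_slots(paths, num_slots=3))
```

Even in this toy setting the outcome depends on the order in which flows are tried and on the cycle length; a real planner must additionally handle per-flow periods, packet sizes, and deadlines, which is why exact allocation quickly becomes expensive.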

The second is the asynchronous approach, in which the asynchronous traffic shaping (ATS) [14] function takes the central role. The ATS, also called the interleaved regulator, is basically a regulation module placed in front of a scheduler. In this approach there is no need for synchronization across the network. The ATS can be seen as a revision of an existing solution that stemmed from the IntServ framework, modified for a simpler implementation. In the following we will see how the ATS is derived.

2.2. Integrated Services (IntServ) and Latency-Rate (LR) Servers

Bounding end-to-end network delay is already achievable with the traditional IntServ framework [3]. Let us look at the theoretical background of IntServ, which has been actively researched since the 1990s. The core benefit of IntServ is that the maximum end-to-end delay of a packet is guaranteed if the following three conditions are met:

1. The arrival process of every incoming flow conforms to the flow's arrival curve.

2. The sum of the arrival rates of the flows on every link in the network is less than or equal to the link's bandwidth.

3. Every link in the network provides service to each flow with a service process that conforms to a service curve.

More specifically, Condition 1 means that the arrival process of a flow $i$ satisfies the following inequality:

$$A_i(\tau, t) \le \sigma_i + \rho_i (t - \tau), \quad (1)$$

where $A_i(\tau, t)$ is the amount of traffic arrived from flow $i$ in an arbitrary period $[\tau, t]$, $\rho_i$ is the average arrival rate, and $\sigma_i$ is the maximum burst size of flow $i$. A burst can be thought of as a large number of packets arriving together with almost no time interval between them. The curve $\sigma_i + \rho_i t$ is called the arrival curve.
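Inequality (1) can be checked directly on a recorded packet trace. The sketch below is a minimal illustration that assumes each packet arrives instantaneously at its timestamp; under that assumption it suffices to test every window that starts and ends at a packet arrival, since the worst case for (1) has $\tau$ at one arrival and $t$ at a later one. The function name `conforms` and the trace format are hypothetical.

```python
from typing import List, Tuple

def conforms(packets: List[Tuple[float, float]], sigma: float, rho: float) -> bool:
    """Check A(tau, t) <= sigma + rho * (t - tau) for a packet trace.

    packets: (arrival_time, size) pairs sorted by arrival time. Packets are
    assumed to arrive instantaneously, so only windows that start and end at
    packet arrivals need to be checked (O(n^2) in the number of packets)."""
    for i, (t_i, _) in enumerate(packets):
        amount = 0.0
        for t_j, size_j in packets[i:]:
            amount += size_j  # traffic arrived in the window [t_i, t_j]
            if amount > sigma + rho * (t_j - t_i) + 1e-9:
                return False
    return True

if __name__ == "__main__":
    trace = [(0.0, 1500), (0.0, 1500), (1.0, 1000)]  # sizes in bits, times in s
    print(conforms(trace, sigma=3000, rho=1000))
```

Here two back-to-back 1500-bit packets exactly use up the 3000-bit burst allowance, and the third packet one second later fits within the 1000 bit/s rate term.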

Now let us introduce a set of schedulers that conform to a flow's service curve and therefore meet Condition 3. This set of schedulers is called the latency-rate (LR) servers [10]. We will elaborate on the properties of the LR servers. The mathematical notations that frequently appear in this paper are summarized in Table 1.

In the following, the definitions and the properties are given according to [10].

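Definition 1. A flow busy period of flow $i$ is the maximum time period $[\tau_1, \tau_2]$ such that, for all $t \in [\tau_1, \tau_2]$, the inequality $A_i(\tau_1, t) \ge \rho_i (t - \tau_1)$ holds, where $A_i(\tau_1, t)$ is the amount of traffic arrived from flow $i$ during $[\tau_1, t]$.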

Note that a flow busy period is not the same as a flow backlogged period, during which the flow always has packet(s) waiting to be served in the scheduler. In the following, the start time and the finish time of a period are the arrival time of the first packet's last bit and the departure time of the last packet's last bit, respectively.

Definition 2. Let $\tau$ be the start time of a busy period of flow $i$ in server $S$ and $\tau^*$ the finish time of the busy period. Then server $S$ is an LR server if and only if a nonnegative constant $\theta$ can be found such that, at every instant $t$ in the period $[\tau, \tau^*]$,

$$W_{i,S}(\tau, t) \ge \max\left(0, \rho_i (t - \tau - \theta)\right), \quad (2)$$

where $W_{i,S}(\tau, t)$ is the amount of service given to flow $i$ during $[\tau, t]$. The minimum $\theta$ that satisfies (2) is the latency of flow $i$ at server $S$ and is denoted by $\Theta_i^S$. The curve $\max(0, \rho_i (t - \Theta_i^S))$ derived from inequality (2) is called the service curve. If the service given to a flow satisfies (2), the service conforms to the service curve. Condition 3 above means that a scheduler at an output port has to satisfy inequality (2). Therefore, LR servers are the key functional elements of the IntServ framework. For an LR server, only two parameters, the service rate $\rho_i$ and the latency $\Theta_i^S$, define the service curve. LR servers have the following properties [10].

Property 1. If a flow traverses two adjacent LR servers, it is equivalent for the flow to traverse a single LR server whose latency equals the sum of the latencies of the two adjacent LR servers.

Property 1 means that a series of LR servers can be seen as a single LR server whose latency equals the sum of the latencies of the LR servers in the series.
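Property 1 can also be seen through network calculus, where serving a flow by two servers in tandem corresponds to the min-plus convolution of their service curves. The sketch below checks numerically, on an integer time grid chosen only to keep the arithmetic exact, that convolving two LR service curves with the same rate $\rho_i$ and latencies 3 and 4 gives the LR service curve with latency 7. The helper names `rate_latency` and `min_plus_convolve` are hypothetical.

```python
from typing import Callable, List

Curve = Callable[[int], int]

def rate_latency(rate: int, latency: int) -> Curve:
    """LR service curve beta(t) = rate * max(0, t - latency), integer time."""
    return lambda t: rate * max(0, t - latency)

def min_plus_convolve(f: Curve, g: Curve, horizon: int) -> List[int]:
    """(f conv g)(t) = min over 0 <= s <= t of f(s) + g(t - s), for t = 0..horizon."""
    return [min(f(s) + g(t - s) for s in range(t + 1)) for t in range(horizon + 1)]

rho = 5
combined = min_plus_convolve(rate_latency(rho, 3), rate_latency(rho, 4), horizon=20)
expected = [rate_latency(rho, 3 + 4)(t) for t in range(21)]
print(combined == expected)  # True: one LR server with latency 3 + 4
```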

Property 2. If flow $i$ traverses only LR servers $S_1, S_2, \ldots, S_K$ in its path, then the end-to-end delay $D_i$ experienced by the packets of the flow is bounded by the following inequality:

$$D_i \le \frac{\sigma_i}{\rho_i} + \sum_{j=1}^{K} \Theta_i^{S_j}. \quad (3)$$

Property 2 means that the maximum end-to-end delay is the sum of the latencies of the LR servers plus a single delay term, $\sigma_i / \rho_i$, caused by the initial maximum burst. As Property 3 shows, the maximum burst of a flow increases as it passes through the LR servers, yet this increase does not affect the delay bound. This property is called “pay burst only once”.
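As a worked instance of (3), the sketch below assumes every hop is a packetized GPS server and uses the per-hop latency $L_i/\rho_i + L_{max}/r$ commonly reported for PGPS in the LR-server literature, where $L_i$ is the flow's maximum packet size, $L_{max}$ the largest packet size at the server, and $r$ the link rate. The flow parameters (a 12 kbit burst, 1 Mbit/s reservation, 12 kbit packets, five 1 Gbit/s hops) are hypothetical.

```python
from typing import Sequence

def pgps_latency(l_flow: float, rho: float, l_max: float, link_rate: float) -> float:
    """Assumed PGPS latency per hop: L_i / rho_i + L_max / r, in seconds."""
    return l_flow / rho + l_max / link_rate

def e2e_delay_bound(sigma: float, rho: float, latencies: Sequence[float]) -> float:
    """Inequality (3): sigma_i / rho_i plus the sum of per-hop latencies."""
    return sigma / rho + sum(latencies)

# Hypothetical flow: 12 kbit burst, 1 Mbit/s reserved, 5 hops of 1 Gbit/s links.
sigma, rho = 12_000.0, 1e6
hops = [pgps_latency(12_000.0, rho, 12_000.0, 1e9) for _ in range(5)]
print(f"{e2e_delay_bound(sigma, rho, hops) * 1e3:.3f} ms")
```

With these numbers each hop contributes about 12 ms, dominated by $L_i/\rho_i$, so the bound is roughly 72 ms; the burst term $\sigma_i/\rho_i$ appears only once.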

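Property 3. If flow $i$ conforms to the arrival curve with parameters $(\sigma_i, \rho_i)$, i.e., $A_i(\tau, t) \le \sigma_i + \rho_i (t - \tau)$, then after traversing an LR server $S$ it conforms to the curve $\sigma_i + \rho_i \Theta_i^S + \rho_i t$, i.e., the maximum burst increases by $\rho_i \Theta_i^S$. In other words, if $\tau$ is the start time of a busy period, then $W_{i,S}(\tau, t) \le \sigma_i + \rho_i \Theta_i^S + \rho_i (t - \tau)$.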
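The sketch below applies Property 3 hop by hop and contrasts two bounds: summing the single-server bound $\sigma_k/\rho_i + \Theta_k$ at every hop, where $\sigma_k$ is the grown burst entering hop $k$, versus the “pay burst only once” bound (3). Units (bits and milliseconds, with $\rho_i$ = 1000 bit/ms) and the parameter values are hypothetical, chosen to keep the arithmetic exact.

```python
from typing import List

def burst_growth(sigma: int, rho: int, latencies: List[int]) -> List[int]:
    """Max burst entering each hop and leaving the last one (Property 3)."""
    bursts = [sigma]
    for theta in latencies:
        bursts.append(bursts[-1] + rho * theta)  # burst grows by rho * Theta
    return bursts

def hop_by_hop_bound(sigma: int, rho: int, latencies: List[int]) -> float:
    """Sum of per-hop bounds sigma_k / rho + Theta_k: valid but loose."""
    bursts = burst_growth(sigma, rho, latencies)
    return sum(b / rho + th for b, th in zip(bursts, latencies))

def pay_burst_once_bound(sigma: int, rho: int, latencies: List[int]) -> float:
    """Inequality (3): the initial burst is paid only once."""
    return sigma / rho + sum(latencies)

if __name__ == "__main__":
    lat = [12, 12, 12]  # ms per hop
    print(burst_growth(12_000, 1_000, lat))
    print(hop_by_hop_bound(12_000, 1_000, lat), pay_burst_once_bound(12_000, 1_000, lat))
```

The burst grows from 12 kbit to 48 kbit over three hops, and adding up per-hop bounds gives 108 ms, whereas (3) gives 48 ms.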

Note that the service process of a flow at a scheduler is identical to the arrival process of the flow at the next scheduler. We have now defined the LR servers and their important properties. Fair schedulers that can guarantee a sustainable service rate to every flow fall into this category. The ideal generalized processor sharing (GPS) [4], packetized GPS [4], self-clocked fair queuing [5], and virtual clock [7], which are all based on the “virtual finish time”, are LR servers. The round-robin-based deficit round robin (DRR) [8] and weighted round robin (WRR) schedulers are also LR servers. Virtual finish time-based LR servers generally have smaller latencies but are more complex to implement. DRR and WRR are easier to implement and are therefore widely deployed. The latencies of these LR servers are proportional to the maximum packet size and the maximum burst size of a flow.
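To show why DRR is considered easy to implement, here is a minimal sketch of the deficit round robin idea: each backlogged flow receives a quantum of credit per round and sends head-of-line packets while its deficit counter covers them. It assumes all packets are backlogged at time zero and only computes the transmission order; the function `drr_schedule` and its inputs are hypothetical, not code from [8].

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

def drr_schedule(queues: Dict[str, List[int]], quantum: Dict[str, int]) -> List[Tuple[str, int]]:
    """Transmission order (flow, packet_size) produced by deficit round robin,
    assuming every packet is already queued at time zero."""
    q: Dict[str, Deque[int]] = {f: deque(p) for f, p in queues.items()}
    deficit = {f: 0 for f in q}
    active = deque(f for f in q if q[f])  # round-robin list of backlogged flows
    order: List[Tuple[str, int]] = []
    while active:
        flow = active.popleft()
        deficit[flow] += quantum[flow]    # grant this round's credit
        while q[flow] and q[flow][0] <= deficit[flow]:
            size = q[flow].popleft()
            deficit[flow] -= size
            order.append((flow, size))
        if q[flow]:
            active.append(flow)           # still backlogged: revisit next round
        else:
            deficit[flow] = 0             # an emptied flow does not keep credit
    return order

if __name__ == "__main__":
    print(drr_schedule({"a": [300] * 4, "b": [400, 400]}, {"a": 500, "b": 500}))
```

Each visit does a constant amount of bookkeeping per transmitted packet when the quantum is at least the maximum packet size, which is the source of DRR's low complexity compared with sorting by virtual finish times.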

The application service procedure in IntServ is composed of the connection establishment phase and the data transfer phase. In the connection establishment phase, a flow specifies its input parameters $(\sigma_i, \rho_i)$; the network then checks whether the traversing nodes have enough bandwidth, reserves the bandwidth for the flow, admits the flow, and guarantees the service. In the data transfer phase, each admitted flow is placed in its own queue and served fairly by the schedulers at the output ports of the relaying nodes. The LR servers in an IntServ network work as schedulers and provide a fair share of the resources. According to Property 1, the series of LR servers forms a single virtual LR server for a flow. The maximum delay of the flow is then bounded by (3).
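The bandwidth check in the connection establishment phase amounts to enforcing Condition 2 on every link of the path. The class below is a hypothetical, minimal sketch of that bookkeeping: it admits a flow only if adding its rate $\rho_i$ keeps every link's reserved rate within the link bandwidth, and it reserves nothing if any link would be overbooked. Signaling, path selection, and release of reservations are deliberately left out.

```python
from typing import Dict, List

class AdmissionControl:
    """Hypothetical per-link rate bookkeeping for IntServ-style admission."""

    def __init__(self, capacity: Dict[str, float]):
        self.capacity = dict(capacity)                    # link -> bandwidth
        self.reserved = {link: 0.0 for link in capacity}  # link -> sum of admitted rho

    def admit(self, rho: float, path: List[str]) -> bool:
        # Condition 2: the reserved rates on every link of the path must stay
        # within the link bandwidth after this flow is added.
        if any(self.reserved[link] + rho > self.capacity[link] for link in path):
            return False  # reject without reserving anything
        for link in path:
            self.reserved[link] += rho
        return True

if __name__ == "__main__":
    ac = AdmissionControl({"L1": 100.0, "L2": 50.0})
    print(ac.admit(40.0, ["L1", "L2"]), ac.admit(20.0, ["L1", "L2"]), ac.admit(20.0, ["L1"]))
```

The second request is rejected because link L2 would carry 60 units of reserved rate against a bandwidth of 50, while the third request, which avoids L2, is accepted.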

The flow-based scheduling function is a key component of IntServ, yet its complexity prohibits practical implementation. The flow-based schedulers suggested in the IntServ framework have a complexity of O(N) or O(log N), where N is the number of flows in the scheduler, and N can be tens of thousands in core networks. Due to such complexity, flow-based schedulers have not been deployed.

2.3. Alternatives to the IntServ

As a consequence, a simpler solution based on class-based scheduling emerged. A class is a coarser set of packets, namely a set of packets with similar performance requirements. This solution is adopted in the differentiated services (DiffServ) [15] framework. DiffServ categorizes the whole traffic into eight classes and schedules the queues according to the classes. In DiffServ, however, connection establishment and admission to a network are still on a per-flow basis. A flow specifies its input parameters $(\sigma_i, \rho_i)$; the network then checks whether the traversing nodes have enough bandwidth, reserves the bandwidth for the flow, admits the flow, and guarantees the service. After connection establishment, in the data transfer phase, a flow is regulated at the boundary of the network. Regulation is a process that forces the arrival process of a flow to conform to its arrival curve. Packets of a flow that do not conform to the negotiated arrival curve may be delayed or, if necessary, dropped. A properly regulated flow conforms to the arrival curve in (1) after the regulation. The leaky bucket [16] and the token bucket [17,18] are the commonly implemented regulators.
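A token bucket regulator that delays, rather than drops, nonconforming packets can be sketched as follows. The sketch assumes a bucket of depth $\sigma_i$ that is full at time zero, refills at rate $\rho_i$, and releases packets of a flow in FIFO order, each packet leaving as soon as the bucket holds enough tokens for it; packet sizes are assumed not to exceed $\sigma_i$. The function name `token_bucket_release` is hypothetical.

```python
from typing import List, Tuple

def token_bucket_release(packets: List[Tuple[float, float]], sigma: float, rho: float) -> List[float]:
    """Release times of packets shaped by a (sigma, rho) token bucket.

    packets: (arrival_time, size) in arrival order, with size <= sigma.
    The bucket is assumed to be full (sigma tokens) at time 0."""
    tokens, clock = sigma, 0.0
    releases: List[float] = []
    for arrival, size in packets:
        t = max(arrival, clock)                         # FIFO: not before the previous release
        tokens = min(sigma, tokens + rho * (t - clock))  # refill, capped at the bucket depth
        if tokens < size:
            wait = (size - tokens) / rho                # time until enough tokens accumulate
            t += wait
            tokens += rho * wait
        tokens -= size
        clock = t
        releases.append(t)
    return releases

if __name__ == "__main__":
    # Four 1500-bit packets arriving at once; 3000-bit bucket refilled at 1000 bit/s.
    print(token_bucket_release([(0.0, 1500.0)] * 4, sigma=3000.0, rho=1000.0))
```

The first two packets pass immediately on the initial burst allowance, and the remaining ones are spaced 1.5 s apart, so the released traffic satisfies (1) with the same $(\sigma_i, \rho_i)$.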

After the regulation process at the network boundary, a flow enters the relaying nodes of the network. At the output of each relaying node, the flow is mapped to a proper class. The packets of a class are placed into a single queue, and the queues of the different classes are then scheduled accordingly. The scheduler used in a DiffServ network is the strict priority (SP) scheduler. In an SP scheduler, among the queued packets, the packet with the highest priority is selected to be served. It has been shown that the SP scheduler is also an LR server [11] for a flow, but its latency is a function of the maximum burst sizes of the flows sharing the scheduler:

$$\Theta_i^S = F\left(\sigma^S\right), \qquad \sigma^S = \sum_{j \in S} \sigma_j,$$

where $\sigma^S$ is the sum of the maximum bursts of all the flows in the SP scheduler $S$.

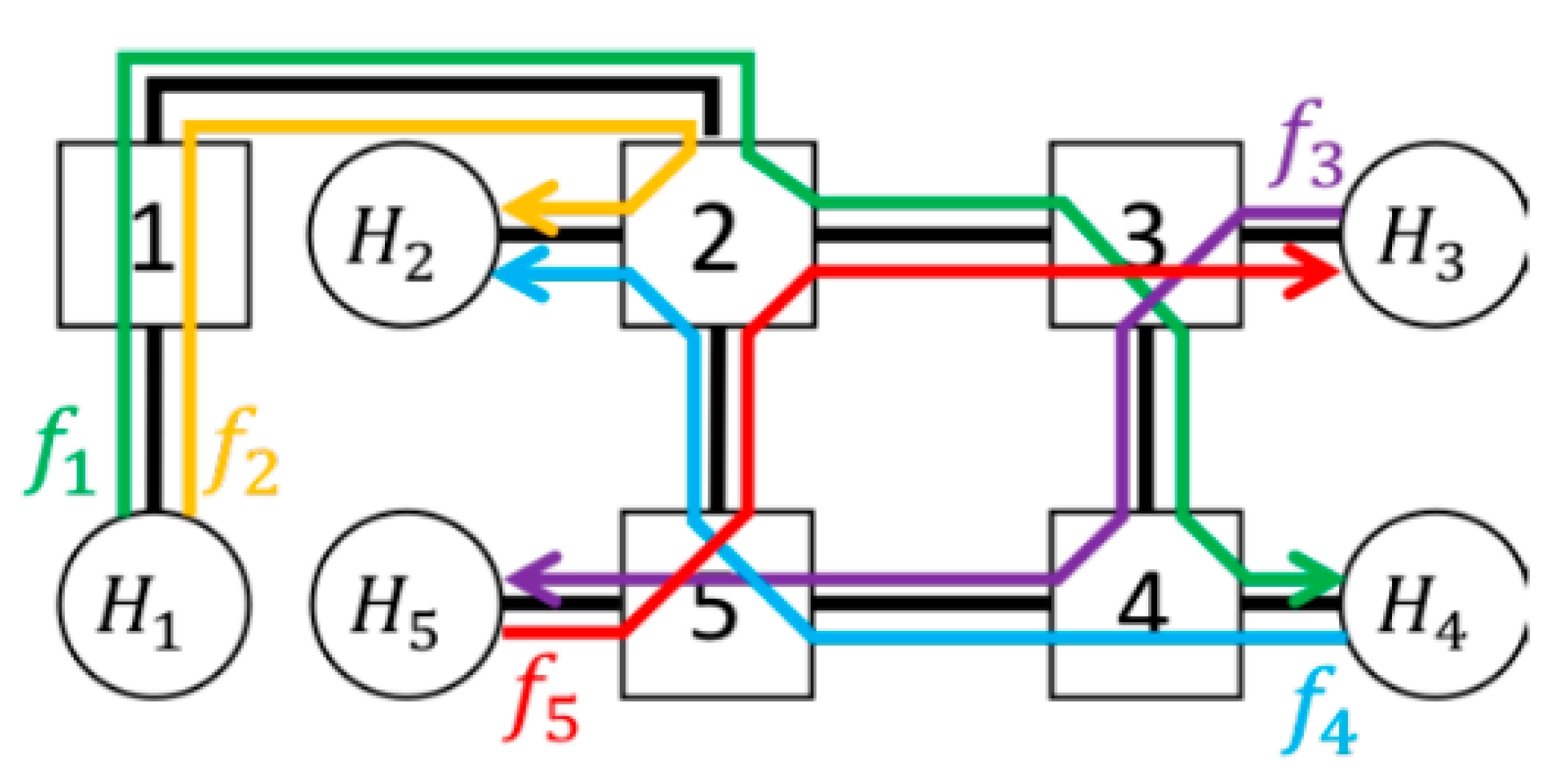

DiffServ is suitable for applications whose performance metrics are, for example, the average delay and the packet loss rate, but not for those with strict delay bounds. This is because the class-based SP scheduler in DiffServ cannot provide a guaranteed delay in networks with cycles: the burst increases infinitely along a cycle. Consider the network topology depicted in Figure 1. Nodes 1-4 form a cycle, and the flows traversing nodes 1-4 are higher-priority flows. They affect each other as follows. Flow 1 shares the queue at node 1 with flow 4. Flow 1's latency at node 1, $\Theta_1^1$, is a function of the max burst of flow 4 arriving at node 1, $\sigma_4^1$. Since the max burst of flow 1 arriving at node 2, $\sigma_1^2$, is a function of the latency $\Theta_1^1$ (Property 3), it is also a function of $\sigma_4^1$. In other words,

$$\sigma_1^2 = g\left(\sigma_4^1\right).$$

Now, flow 1 affects flow 2 the same way as flow 4 affects flow 1, i.e., $\sigma_2^3 = g\left(\sigma_1^2\right)$. Continuing around the cycle, it eventually evolves to the point where $\sigma_4^1 = g\left(g\left(g\left(g\left(\sigma_4^1\right)\right)\right)\right)$. The only value of $\sigma_4^1$ that satisfies this equation is infinity.

One possible solution to the infinitely increasing burst is per-flow regulation at every node. Therefore, a regulator placed right before the class-based scheduler has been considered [6,9].

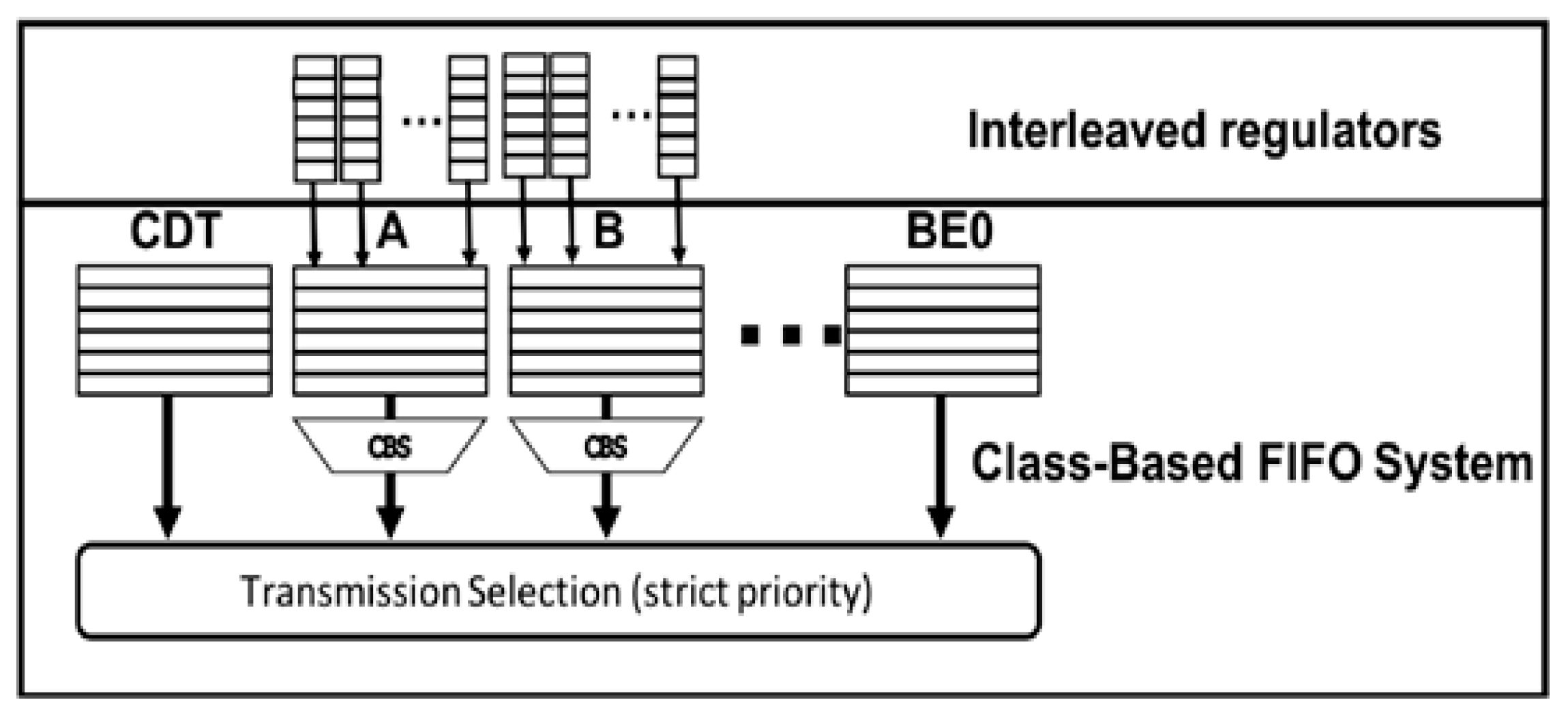

Figure 2 depicts such a system architecture [

6,

9]. In

Figure 2, a connection is synonymous to a flow. The max burst size of a flow at the output of the system in

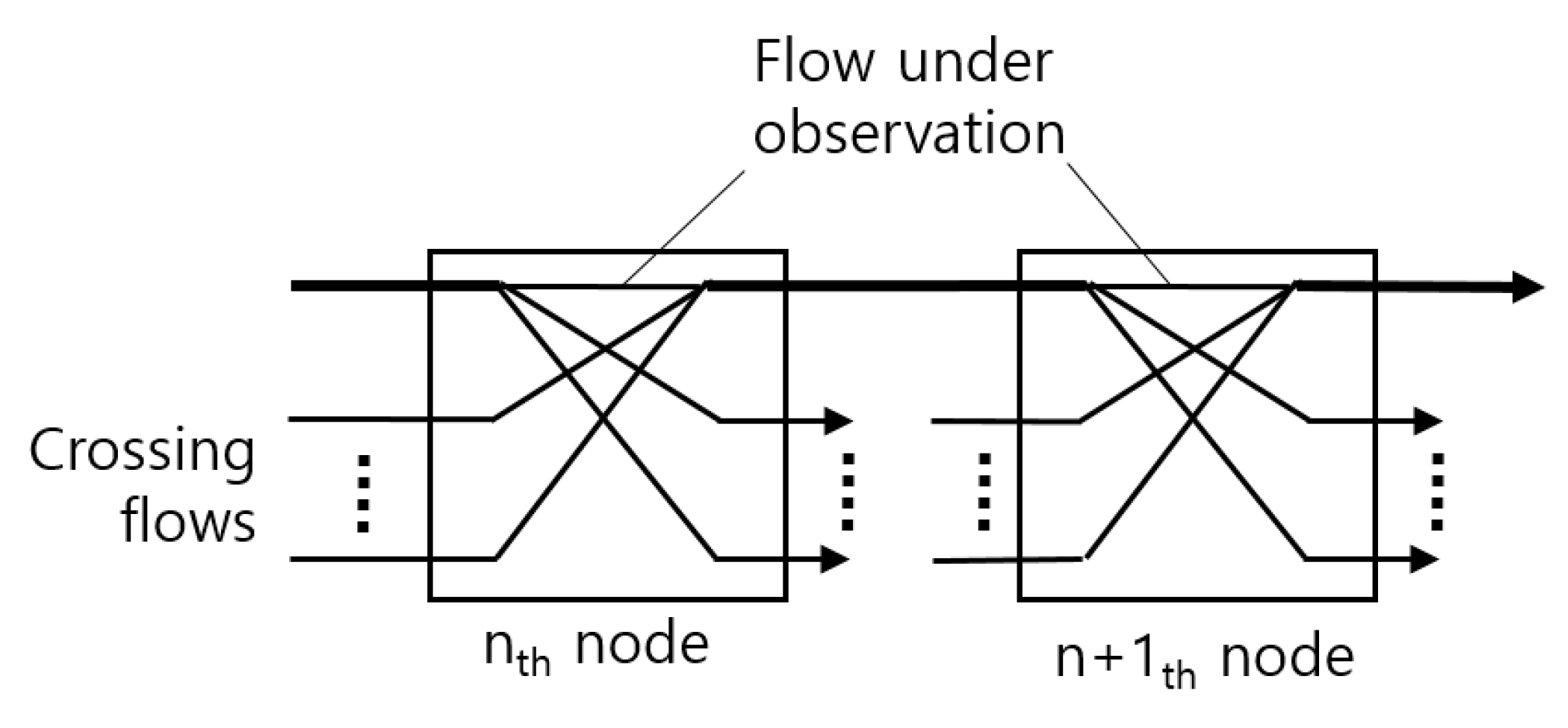

Figure 2 is proportional to the sum of all the flows in a queue. The max burst size, however, is reduced drastically by the regulator at the next node. The packets in the flow under regulation may suffer from additional delays at the regulator, but it is shown that the maximum delay does not increase with the addition of the regulator [

9,

19]. It can be interpreted that the packets with large enough delays are not delayed further at the regulator.

In the system of Figure 2, the central scheduler is relieved of the per-flow scheduling task. The complexity, however, moves to the parallel distributed regulators. Because this complexity is distributed over parallel regulators, the regulator-scheduler system is more plausible to implement than flow-based schedulers. This architecture has not been widely accepted, though, due to the massive number of regulators it requires.
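The regulator-scheduler architecture can be sketched as per-flow regulators feeding one shared class queue. In this minimal sketch, which assumes a single class (so the central scheduler reduces to FIFO in order of eligibility time), a token-bucket regulator per flow, and a non-preemptive link of rate `link_rate`, the only per-flow state lives in the regulators. The names `regulate` and `regulator_then_fifo` are hypothetical, and the sketch is not the design of [6] or [9].

```python
from typing import Dict, List, Tuple

Packet = Tuple[float, float]  # (arrival_time, size_bits)

def regulate(packets: List[Packet], sigma: float, rho: float) -> List[float]:
    """Per-flow (sigma, rho) token-bucket regulator; bucket full at time 0."""
    tokens, clock, out = sigma, 0.0, []
    for arrival, size in packets:
        t = max(arrival, clock)
        tokens = min(sigma, tokens + rho * (t - clock))
        if tokens < size:
            t += (size - tokens) / rho  # hold the packet until it is eligible
            tokens = size
        tokens -= size
        clock = t
        out.append(t)
    return out

def regulator_then_fifo(flows: Dict[str, Tuple[List[Packet], float, float]],
                        link_rate: float) -> List[Tuple[str, float]]:
    """Regulate each flow separately, then serve all eligible packets from a
    single FIFO class queue at link_rate. Returns (flow, departure_time)."""
    eligible = []
    for name, (packets, sigma, rho) in flows.items():
        for (_, size), t in zip(packets, regulate(packets, sigma, rho)):
            eligible.append((t, name, size))
    eligible.sort()                      # FIFO order = order of eligibility
    link_free, departures = 0.0, []
    for t, name, size in eligible:
        start = max(t, link_free)        # wait while the link is busy
        link_free = start + size / link_rate
        departures.append((name, link_free))
    return departures

if __name__ == "__main__":
    flows = {"f1": ([(0.0, 1000.0)] * 3, 2000.0, 500.0),
             "f2": ([(0.0, 1000.0)], 1000.0, 500.0)}
    print(regulator_then_fifo(flows, link_rate=1000.0))
```

The central queue never inspects flow identities, which mirrors the point that the scheduler is relieved of per-flow work, while one regulator instance per flow is needed, which is the scaling concern noted above.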