Abstract

With the fast-paced realization of the Industry 4.0 paradigm, completely centralized networking solutions will no longer be sufficient to meet the stringent requirements of the related industrial applications. Besides requiring fast response time and increased reliability, they will necessitate computational resources at the edge of the network, which demands advanced communication and data management techniques. In this paper, we provide an overview of the network communications and data management aspects for the Industry 4.0. Our global perspective is to understand the key communication and data management challenges and peculiarities for the effective realization of the fourth industrial revolution. To address these challenges, this paper proposes hybrid communications management and decentralized data distribution solutions supported by a hierarchical and multi-tier network architecture. The proposed solutions combine local and decentralized management with centralized decisions to efficiently use the available network resources and meet the requirements of Industry 4.0 applications. To this end, the distributed management entities interact in order to coordinate their decisions and ensure the correct operation of the whole network. Finally, the use of Radio Access Network (RAN) slicing is proposed to achieve the required flexibility to efficiently meet the stringent and varying communication and data management requirements of industrial applications.

1. Introduction

In the Industry 4.0 paradigm, the core of distributed industrial automation and manufacturing systems is essentially the reliable exchange of information. Any attempt to steer processes independently of continuous human interaction requires, in a very wide sense, the flow of information between sensors, controllers, and actuators [1]. The industrial automation sector has always been a pioneer of innovation, and most industrial systems come equipped with hundreds or thousands of installed smart devices. Wearable and smart devices will make it possible to interconnect, track, and integrate humans in the production process while, at the same time, the data generated will help engineers in increasingly complex decision-making processes [2]. Consequently, there are many connectivity options and data management techniques that can be used today. However, it is not rare that only limited kinds of data are used, while the great amount of other available data is simply dropped [3], especially in completely centralized industrial settings. Current models for cloud-enabled manufacturing propose architectures that are organizationally centralized: operation of the entire cloud network is guided and controlled by a single node or a small group of automated organizational nodes, which connect the demand for manufacturing services with their supply [4]. Such centralized operation of the manufacturing network poses a potential problem from the perspective of the system’s critical requirements, such as latency and reliability.

According to this vision, the next generation of smart factories will very likely be a system of combined networks relying on diverse Internet of Things (IoT) components [5]. Compared to traditional industrial environments, IoT-enabled smart factories should collect and transmit all relevant data: first, the massive electronic data generated in numeric control units or programmable logic controllers; second, operation status data from add-on sensors and measuring instruments on processing units; and third, environmental data from sensors surrounding processing units. These data can be used to form, for example, a cyber processing unit that mirrors the physical processing units in order to realize intelligent-machine applications such as the optimization of processing parameters and the safe functioning of processing units [6]. This transformation comes hand in hand with new challenges for the interplay between IoT and industrial manufacturing [7]; it also imposes a new set of critical and non-critical requirements that need to be addressed.

5G networks are also being designed to support industrial wireless networks. In fact, 5G is being designed to support several verticals, including the factories of the future, in an optimized way, either using low-band spectrum below 1 GHz, mid-band frequencies from 1 GHz to 6 GHz, or high-band spectrum above 6 GHz [8]. 5G is currently attracting extensive research interest from both industry and academia, but there are many challenges that need to be resolved, such as network coexistence among different radio access technologies, resource sharing and access with legacy devices, Quality of Experience (QoE) for users in the unlicensed band, environmental and propagation issues, and power and cost issues [9]. Research activities focusing on radio interface(s) and their interoperability, and access network related issues, traffic characteristics, and spectrum related issues are then encouraged for the development of 5G networks supporting the factories of the future [10].

Some industrial use cases impose stringent requirements on wireless IoT and 5G networks. These requirements include robustness, low latency, energy efficiency, constrained data rates, flexible management, and heterogeneity [11]. A basic enabler for satisfying those industrial requirements is the identification and control of the autonomous, interconnected entities within the production system and their operation without changes to the control applications in the rest of the production system [12]. In order to achieve this decentralized mode of operation, the communication network and the data management strategy must be built upon a flexible architecture capable of meeting the communication requirements of the industrial applications, with particular attention to time-critical automation [13]. Moreover, hybrid and hierarchical communication and data management strategies have to be employed.

In this paper, we provide an overview of the network communications and data management emerging trends for the Industry 4.0 and the related architectural design. Particularly, we propose the implementation of hybrid communications management solutions and decentralized data distribution schemes supported by a hierarchical and multi-tier network architecture. The contribution of this paper is part of the work carried out in the H2020 AUTOWARE project [14]. The objective of AUTOWARE is to build an open consolidated ecosystem that lowers the barriers of small, medium, and micro-sized enterprises for cognitive automation application development and application of autonomous manufacturing processes. More specifically, the contributions of this paper are:

- We focus on the emerging trends provided by the 3GPP and 5GPPP by exposing selected industrial use cases and extracting the related critical requirements. Our global perspective is to understand the key communication and data management challenges and peculiarities for the effective realization of the fourth industrial revolution and suggest a holistic communication and data management architectural scheme.

- We suggest a hierarchical multi-tier management scheme which combines local and decentralized management with centralized decisions to efficiently use the available communication resources and carry out the data management in the system. This scheme exploits the different capabilities of the available communication technologies (wired and wireless) to meet the wide range of requirements of the industrial applications.

- We analyze the hybrid communication management and the decentralized data distribution. In this type of management, the distributed communication and data entities interact in order to coordinate their decisions and ensure the correct operation of the whole network.

- We propose the use of RAN (Radio Access Network) slicing to efficiently manage the different communication and data management requirements of Industry 4.0 applications, and we present how the management of these RAN slices can be supported by the proposed hybrid management system.

In Section 2, we outline the identified industrial use cases related to different application areas, such as process and factory automation, logistics warehousing and monitoring, etc. Then, we extract the relevant critical requirements in terms of end-to-end latency, communication service availability, typical message size, and the typical number of devices. In Section 3, we outline the major general challenges and particularities of the factories of the future, and we highlight the specific communication and data management challenges. In Section 4, we expose the recent architectural trends for 5G enabled communications and data management in Industrial Wireless Networks. In Section 5, we detail the communications and data management architectural scheme that we propose so as to provide hierarchical and multi-tier management for Industrial Wireless Networks. At first, we suggest a hierarchical multi-tier management scheme which combines local and decentralized management with centralized decisions to efficiently use the available communication resources and carry out the data management in the system. Then, we analyze the hybrid communication management and the decentralized data distribution. Finally, we propose in Section 6 the use of RAN slicing to achieve the flexibility required to meet the varying and stringent communication and data management requirements of the factories of the future and analyze how RAN slicing can be implemented considering the proposed hybrid management system. In Section 7, we conclude the paper.

2. Use Cases and Requirements

2.1. Selected Industrial Use Cases

The evolution of current communications and data management solutions to support the factory of the future is driven by a series of envisioned use cases and their communication requirements. The International Society of Automation (ISA) classifies industrial applications into six different classes based on the importance of message timeliness [15]. The 5GPPP classifies industrial use cases into five families, each of them representing a different subset of communication requirements in terms of latency, reliability, availability, throughput, etc. [16]. ETSI has also investigated the communication requirements of industrial automation in [17] and classifies them into two broad groups: sensors and actuators for automation with real-time requirements, and communication at higher levels of the automation hierarchy, e.g., at the control or enterprise level, where throughput, security, and reliability become more important. The 3GPP has recently identified in TR 22.804 V16.1.0 [18] a set of use cases and their communication requirements for the factory of the future, taking into account all related studies for the design of the next generation of communication systems. The identified use cases are related to different application areas, such as process and factory automation, logistics and warehousing, and monitoring and maintenance, among others. Their main characteristics and communication requirements are described below.

Motion control. A motion control system is responsible for controlling moving and/or rotating parts of machines in a well-defined manner, for example in printing machines, machine tools or packaging machines. Motion control has the most stringent requirements in terms of latency and service availability. Currently Industrial Ethernet technologies such as PROFINET® IRT or EtherCAT® are used for motion control systems. In order to support motion control, the 5G system should support a highly deterministic cyclic data communication service, although it is expected that there will be a seamless coexistence between Industrial Ethernet and the 5G system in the future.

Control-to-control communication. This use case considers the communication between different industrial controllers (e.g., programmable logic controllers or motion controllers). It includes large machines (e.g., newspaper printing machines), where several controls are used to cluster machine functions that need to communicate with each other in real time, and individual machines that fulfil a common task (e.g., machines in an assembly line) and that require, e.g., controlling the handover of work pieces from one machine to another. Data transmission in control-to-control networks typically consists of cyclic and non-cyclic data transfers. Both types may have real-time requirements, but these are less demanding than for motion control.

Mobile control panels with safety functions. Control panels are mainly used for configuring, monitoring, and controlling production machinery and moving devices. Safety control panels are in addition typically equipped with an emergency stop button for emergency situations. This use case requires the simultaneous transmission of non-critical (acyclic) data and highly-critical (cyclic) safety traffic with stringent latency requirements.

Mobile robots. A mobile robot is basically a programmable machine able to execute multiple operations, following programmed paths to fulfil a large variety of tasks, including assistance in work steps and transport of goods. Mobile robots are normally controlled by a guidance control system that avoids collisions and assigns them driving jobs. In this case, non-real-time data, real-time streaming data (video-operated remote control), and critical real-time control data need to be transmitted over the same link and to the same mobile robot.

Massive wireless sensor networks. Wireless sensor networks (WSN) facilitate the complex task of monitoring an industrial environment to detect malfunctioning and broken elements in the surrounding environment. Given the simplicity of sensing devices, the data collected is processed by a centralized computing infrastructure such as a mobile data or data center cloud. The computation is referred to as fog computing, multi-access edge computing (MEC), and cloud computing. The traffic patterns generated by the sensor network vary with the type of measurement, and the number of devices in the factory is typically much higher than in the other use cases.

Augmented reality. Augmented reality will play an important role in supporting shop floor workers, for example through process monitoring, step-by-step instructions for specific tasks, or remote support, especially given the high flexibility and versatility of the factories of the future. Head-mounted AR devices with see-through displays are very attractive but often require offloading complex (e.g., video) processing tasks to the network in real time.

2.2. Industrial Requirements

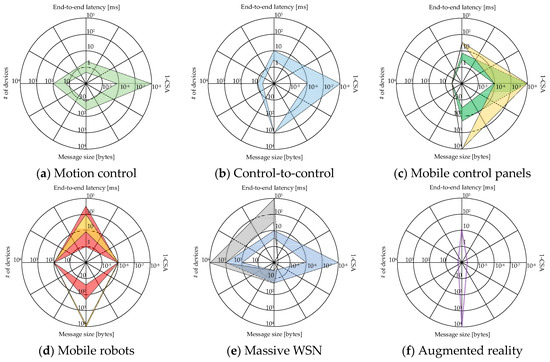

Table 1 shows the requirements of the selected use cases [18] defined in terms of end-to-end latency, communication service availability (CSA), typical message size, and the typical number of devices. The end-to-end latency is the time it takes to successfully transmit a packet from a source to a destination, measured at the communication interface, from the moment it is transmitted by the source to the moment it is successfully received at the destination. It is closely linked to the cycle time of control functions. The communication service availability is the percentage of time the communication service is considered available; the system is considered unavailable if an expected message is not received within the required end-to-end latency. Table 1 shows that the end-to-end latency required can be below 1 ms for some use cases, and the communication service availability up to 99.999999% (i.e., 1 − 10⁻⁸). Other use cases, however, require the transmission of video with less strict latency requirements. In particular, massive wireless sensor networks will require the deployment of a vast number of devices, in some cases with latencies as low as 5 ms. As can be observed, the requirements of the deployed devices will be very heterogeneous, and simultaneous transmissions with very different requirements will need to coexist (often in the same link between the same nodes, but also in the same factory). This heterogeneity can also be observed in Figure 1, where the requirements of the six use cases analyzed in this paper are depicted in polar plots. Each sub-figure shows the requirements of one of the use cases in terms of latency, CSA, packet size, and number of devices. Since a range of values is provided for each use case (e.g., latencies between 4 and 8 ms), the requirements are plotted as areas. For use cases that have two applications with very different requirements, two areas with different colors are depicted. This is the case of, for example, the massive WSN use case, which has significantly different requirements for safety and non-safety monitoring. While condition monitoring for safety requires between 5 and 10 ms latency, CSA up to 1 − 10⁻⁸, and up to 1000 nodes, interval-based and event-based monitoring require between 50 ms and 1 s latency, CSA up to 1 − 10⁻³, and up to 10,000 nodes.

Table 1.

Requirements of selected use cases for the factory of the future.

Figure 1.

Requirements of selected use cases for the factory of the future. Each area represents the range of requirements identified for each use case. Some use cases have two areas to highlight that different applications will require significantly different requirements.

3. Challenges

The identified use cases of the factory of the future and their requirements impose new challenges on the underlying communication system that will need to be addressed in the coming years to make the fourth industrial revolution possible. The major general challenges and particularities of the factories of the future include the following aspects.

Radio propagation. The radio propagation environment in a factory is typically characterized by very rich multipath, caused by a large number of metallic objects in the immediate surroundings of transmitter and receiver, as well as potentially high interference caused by electric machines, arc welding, etc. [19]. The radio propagation environment therefore can challenge the PHY mechanisms of any communication system that is deployed in a factory.

Industrial-grade quality of service. Many use cases impose stringent requirements in terms of end-to-end latency and communication service availability. The latency levels required are, in some cases, below the levels that can be achieved with current communication technologies, given their time resolution, their channel access time, and, in many cases, the need to request radio resources before transmitting. Achieving the communication service availability levels demanded will also require an evolution of the PHY and MAC solutions that are currently available in order to reliably handle the harsh radio propagation and efficiently manage interference [20].

Scalability. Some of the use cases identified require a large number of devices to be effective, such as condition monitoring through the massive deployment of WSN. Scalable solutions will be needed to efficiently and reliably support a high number of connections in the factory. Data management methods [21] and D2D (Device-to-Device) solutions [22] could be used to address this challenge thanks to their benefits in terms of spectral efficiency and reduced redundancy.

Heterogeneous use cases and requirements. There is not only a single class of use cases, but there are many different use cases with a wide variety of different requirements. This results in the need for flexible and adaptable solutions for the communication system, especially considering the adaptability of the future factory.

Mixed traffic. Different use cases require the simultaneous transmission of non-critical and highly-critical data over the same link and to the same device. Different use cases can also coexist in the same factory, thus also requiring the simultaneous transmission of data with different requirements in the same area. This requires that the communication system reliably handles connections with low and high requirements in terms of latency and communication service availability. Their performance should always be guaranteed, independently of each other, which also imposes strong requirements to the design of the underlying communications system and its architecture.

Seamless integration into the existing infrastructure. The communication system has to support a seamless integration into the existing connectivity infrastructure, such as with (Industrial) Ethernet systems. The communication system should be flexible enough to be able to be combined with other (wire-bound) technologies in the same machine or production line. This can be partly addressed with the adoption of certain mechanisms of the IEEE 802.1 protocol family, including IEEE 802.1Qbv (time-aware scheduling) and IEEE 802.1Q (VLANs).

Private network deployments. Due to security, liability, availability, and business reasons, many factory/plant owners require deployment of private networks. This is an inherent characteristic of wired networks deployed today, that becomes more relevant when providing wireless connectivity using a cellular network.

3.1. Communication Challenges

The identified communication requirements impose significant challenges on the underlying communication system. Extremely low latencies require the rapid allocation of the radio resources to transmit the data, or the pre-allocation of such resources. The maximum end-to-end latency tolerated limits the number of possible retransmissions to successfully deliver the data. Under relaxed latency constraints, a high number of retransmissions is possible, which relaxes the necessary PER (Packet Error Rate) and therefore the MCS (Modulation and Coding Scheme) to be used. We can indeed relate the CSA and the maximum tolerated PER together with the number of retransmissions using the following equation, considering that the same PER is experienced in all the retransmissions and that packet losses are independent of each other:

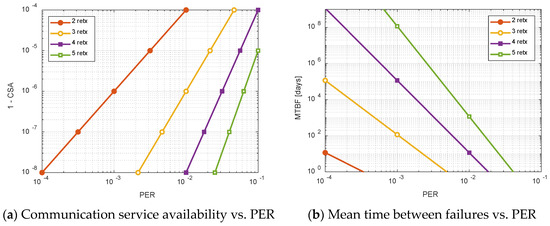

where retx is the maximum number of retransmissions that can be performed within the maximum latency. Figure 2a shows the relationship between PER and 1 − CSA for different values of the number of retransmissions using logarithmic scales for clarity. As can be observed, achieving a CSA between 1 − 10⁻⁶ and 1 − 10⁻⁸ requires a PER between 10⁻⁴ and 10⁻³ if only 2 retransmissions are allowed (which would be the case if the required latency is very low).
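The numerical example above can be checked with a short calculation. The following is a minimal sketch, not the authors' implementation: it assumes that the unavailability equals the probability that all retx attempts fail, i.e., 1 − CSA = PER^retx, which is the form consistent with the values quoted in the text. The function names `csa` and `max_per` are hypothetical.

```python
def csa(per: float, retx: int) -> float:
    """Communication service availability for a given PER.

    Assumption (not taken verbatim from the paper): a message is lost
    only if all `retx` independent attempts fail, so 1 - CSA = per**retx.
    """
    if not 0.0 <= per <= 1.0 or retx < 1:
        raise ValueError("per must be in [0, 1] and retx >= 1")
    return 1.0 - per ** retx


def max_per(unavailability: float, retx: int) -> float:
    """Largest PER that still keeps 1 - CSA at or below `unavailability`."""
    if not 0.0 < unavailability <= 1.0 or retx < 1:
        raise ValueError("unavailability must be in (0, 1] and retx >= 1")
    return unavailability ** (1.0 / retx)


if __name__ == "__main__":
    # Targets quoted in the text: CSA between 1 - 1e-6 and 1 - 1e-8.
    for target in (1e-6, 1e-8):
        print(f"1 - CSA = {target:.0e}, retx = 2 -> PER <= {max_per(target, 2):.1e}")
```

Under this assumption, two attempts require a PER of about 10⁻³ for a CSA of 1 − 10⁻⁶ and about 10⁻⁴ for a CSA of 1 − 10⁻⁸, in line with the range given above.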

Figure 2.

Communication service availability (CSA) and mean time between failures (MTBF) as a function of the packet error rate (PER) and the number of possible retransmissions (retx) for L = 10 ms.

The PER can also be used to estimate the mean time between failures (MTBF) [23], which is a popular indicator of the communication service reliability and can be defined as the average elapsed time between inherent failures of a system, during normal system operation. If we consider that a system failure is produced whenever a piece of data cannot be successfully transmitted within the required maximum end-to-end latency, we can estimate the MTBF from the underlying PER. Assuming a constant failure probability, the MTBF can be calculated as the reciprocal of the failure probability of the system [23]. Considering only communication failures, the failure probability can be expressed as:

since all the retransmissions would need to fail to produce the loss of data before the tolerated latency. The MTBF can be therefore expressed as a function of the PER, retx, and the maximum tolerated latency, L, as:

Figure 2b shows the MTBF as a function of the PER for different numbers of possible retransmissions considering L = 10 ms. As can be observed, for medium and high PER values, the system is very likely to fail and therefore MTBF values are low. As the PER decreases (as a result of, e.g., better interference management, channel access, or PHY layer performance), the MTBF can be significantly increased, especially if the number of possible retransmissions increases.
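To illustrate how the MTBF scales with the PER, the following minimal sketch evaluates it under two explicit assumptions that are ours and not necessarily the exact expressions used for Figure 2b: (i) the failure probability of one message is PER^retx, since all attempts must fail, and (ii) one message has to be delivered in every latency window L, so that MTBF = L / PER^retx. The names `failure_probability` and `mtbf_seconds` are hypothetical.

```python
def failure_probability(per: float, retx: int) -> float:
    """Probability that a message is not delivered within the latency bound.

    Assumption: all `retx` independent attempts must fail, each with
    probability `per`.
    """
    if not 0.0 < per <= 1.0 or retx < 1:
        raise ValueError("per must be in (0, 1] and retx >= 1")
    return per ** retx


def mtbf_seconds(per: float, retx: int, latency_s: float) -> float:
    """Mean time between failures, in seconds.

    Assumption: one message per latency window `latency_s`, constant
    failure probability, so MTBF = latency_s / failure_probability.
    """
    return latency_s / failure_probability(per, retx)


if __name__ == "__main__":
    latency_s = 0.010  # L = 10 ms, as in Figure 2
    for retx in (1, 2, 3):
        for per in (1e-1, 1e-2, 1e-3):
            hours = mtbf_seconds(per, retx, latency_s) / 3600.0
            print(f"retx={retx} PER={per:.0e} -> MTBF ~ {hours:.3g} h")
```

With these assumptions, lowering the PER by one order of magnitude multiplies the MTBF by 10^retx, which is why the gain is largest when more retransmissions fit within the latency bound.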

3.2. Data Management Challenges

The identified requirements also impose significant challenges on network data management and distribution. It is widely recognized that entirely centralized solutions to collect and manage data in industrial environments are not always suitable [24,25]. This is because, in order to ensure quick reaction, process monitoring and automation control may span multiple physical locations and include heterogeneous data sources, which pushes towards decentralization. Moreover, the adoption of IoT technologies with the associated massive amounts of generated data makes decentralized data management inevitable. Cloud technologies are considered a great opportunity to implement different types of data-centric automation services at reduced costs, but deploying control-related services in clouds also poses significant challenges. Sending data back and forth between the cloud and the factory over the Internet might result in loss of control over the data by the legitimate owners. A significant challenge is that when data are managed across multiple physical locations, data distribution needs to be carefully designed so as to ensure that industrial process control is not affected by the well-known issues related to communication delay and jitter [26,27]. While several approaches have been proposed to optimize data management and distributed processing, smart data distribution policies, e.g., data replication to locations from where they can be accessed when needed within appropriate deadlines, are still to be investigated.

4. 5G for Industrial Wireless Networks: Recent Architectural Trends

To support the necessary improvement of flexibility and versatility of industrial production and logistics, novel communication and data management solutions will be needed for the Industry 4.0. Supporting the use cases identified in Section 2 and addressing the challenges described in Section 3 will require a significant evolution of the current communication technologies and architectures available. In this section, we review the existing industrial wireless network architectures.

Traditional wired fieldbuses and Ethernet-based technologies can provide high communications reliability, but they are not able to fully meet the identified requirements and challenges of the Industry 4.0 in terms of flexibility, quick reconfigurability, and support for mobile machines and robots, among others. WirelessHART, ISA100.11a, and IEEE 802.15.4e are some of the wireless technologies developed to support industrial automation and control applications. They consider a central network management entity that is in charge of the configuration and management, at the data link and network levels, of the communications between the different devices (gateways, routers, and end-devices). The main objective of centralized network management is to achieve high communications reliability levels. However, the excessive overhead and reconfiguration time that result from the collection of state information by the central manager limit their reconfigurability and scalability [28,29].

To overcome this drawback, Li, S. et al. [30,31] proposed to divide a large network into multiple subnetworks, and considered a hierarchical management architecture. Each subnetwork is managed by a local manager and a global entity is in charge of the management and coordination of the entire network. Proposals in [31,32] rely on hierarchical architectures and also propose the integration of heterogeneous technologies to efficiently guarantee the different communication requirements of industrial applications. One representative example is the KoI project [33] that proposes in [34] a two-tier management approach for radio resource coordination to support mission-critical wireless communications in the factory of the future. They consider the deployment of multiple small cells to guarantee the capacity and scalability requirements of the industrial environment. Each of these small cells can implement a different wireless technology and has a Local Radio Coordinator (LRC) that is in charge of the fine-grained management of radio resources for devices in its cell. A single Global Radio Coordinator (GRC) carries out the radio resource management on a broader operational area and coordinates the use of radio resources by the different cells to avoid inter-system and inter-cell interference among them.

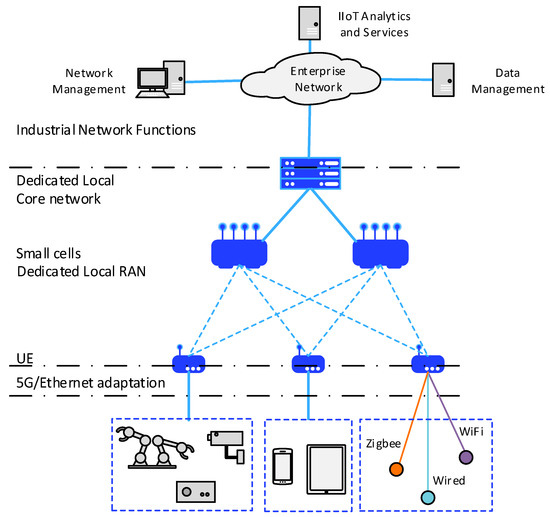

5G networks are also being designed to support industrial wireless networks and the stringent communication requirements identified [35]. Considering the requirement for private networks supporting non-public operation (as highlighted in Section 3), the use of Private 5G networks is proposed [36]. Private 5G networks will allow the implementation of local networks with dedicated radio equipment using shared and unlicensed spectrum, as well as locally dedicated licensed spectrum [18]. Two different types are defined: the so-called type-a network is a 3GPP network that is not for public use and for which service continuity and roaming with a PLMN (public land mobile network) is possible, whereas the type-b network is an isolated 3GPP network that does not interact with a PLMN. The design of these Private 5G networks to support industrial wireless applications considers the implementation of several small cells, integrated in the network architecture, to cover the whole industrial environment [36], as shown in Figure 3, which depicts an example of a Private 5G network of type-b. A variety of sensors, devices, machines, robots, actuators, and terminals communicate to coordinate and share data. Some of these devices may be directly connected to a local type-b network and some may be connected via gateway(s) to have access to the industrial network functions in the enterprise network. In addition, the integration of 5G networks with Time Sensitive Networks (TSN) is also considered to guarantee deterministic end-to-end industrial communications, as presented in [35]. TSN is a set of IEEE 802 Ethernet sub-standards that aim to achieve deterministic communication over Ethernet by using time synchronization and a schedule which is shared between all the components (i.e., end systems and switches) within the network.

Figure 3.

Factory of the future using a dedicated local type-b network for industrial automation.

5. Hierarchical and Multi-Tier Communication and Data Management for the Industry 4.0

In this Section, we detail the communications and data management architectural scheme that we propose so as to provide a relevant example of 5G hierarchical and multi-tier management architecture for industrial wireless networks. The architectural scheme we present is a part of the general architecture of the H2020 AUTOWARE project [37].

5.1. Hierarchical and Multi-Tier Network Architecture

Hierarchical management exploits the different capabilities of the available communication technologies (wired and wireless) to meet the wide range of requirements of industrial applications. The architectural scheme is well aligned with the concepts that are being studied for Industrial 5G networks. Key objectives of the designed architecture are the support of the very different communication requirements of a wide set of industrial applications (from time-critical applications to applications demanding ultra-high throughput) and the integration of different communication technologies (wired and wireless). In fact, the scheme focuses on the design of a communication architecture that is able to efficiently meet the varying and stringent communication requirements of the wide set of applications and services that will coexist within the factories of the future. This is in contrast to the architectures proposed in [32,34], which are mainly designed to guarantee the communication requirements of a given type of service (connectivity for a massive number of communication devices in [32], and mission-critical wireless communications in [34]). In addition, it goes a step further and analyzes the requirements of the communication architecture from the point of view of data management and distribution.

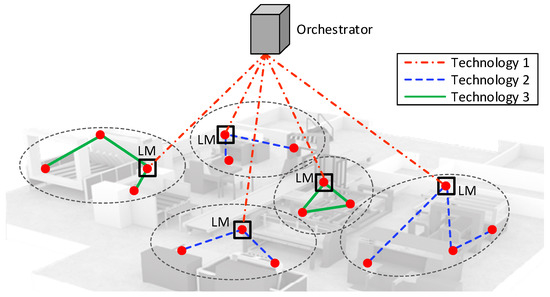

The architectural scheme considers an industrial environment covered by several cells that can implement heterogeneous communication technologies. These cells can overlap in space. In this case, network nodes or end-devices will connect to the cell that is able to most efficiently satisfy their communication needs. Figure 4 shows an example of an Industrial Wireless Network deploying heterogeneous technologies. Technologies 2 and 3 could represent WirelessHART and 5G technologies. Technology 1 is used to connect each cell through a local management entity, referred to as Local Manager (LM), to a central management entity represented as Orchestrator in Figure 4, and it could be implemented with TSN. The communication link between LMs and the Orchestrator could also be implemented by a multi-hop link that also uses heterogeneous technologies (for example, IEEE 802.11 and TSN) for improved flexibility and scalability.

Figure 4.

Hierarchical and heterogeneous Industrial Wireless Network architecture.

As presented in Figure 4, we consider a hierarchical management that combines local and decentralized management with centralized decisions to efficiently use the available communication resources and carry out the data management in the system. In terms of communication management, the Orchestrator is in charge of the global coordination of the whole network. It establishes constraints on the radio resource utilization that each cell has to comply with in order to guarantee coordination and interworking of different cells, and ultimately ensure the requirements of the industrial applications supported by the network. For example, the Orchestrator must avoid inter-cell interference between cells implementing the same licensed technology. It must also guarantee interworking among cells implementing wireless technologies using unlicensed spectrum bands in order to avoid inter-system interference, for example, by dynamically allocating non-interfering channels to different cells based on the current demand. LMs are implemented at each cell. An LM is in charge of the local management of the communications and radio resource usage within its cell and makes local decisions to ensure that the communication requirements of the nodes in its cell are satisfied. LMs are in charge of management functions that require very short response times, such as Radio Resource Allocation, Power Control or Scheduling functions; these functions locally coordinate the use of radio resources among the devices attached to the same cell. In addition, each LM reports the performance levels experienced within its cell to the Orchestrator. Thanks to the information reported by the LMs, the Orchestrator has a global vision of the whole network. The Orchestrator then has the information required and the ability to adapt the configuration of the network based on the current operating conditions.
For example, under changes in the configuration of the industrial plant or in the production system, the Orchestrator can reallocate frequency bands to cells implementing licensed technologies based on the new load conditions or the new communication requirements. It could also establish new interworking policies to control interference between different cells working in the unlicensed spectrum. The Orchestrator also decides which cell a new device is attached to, considering the communication capabilities of the device, the communication requirements of the application, and the current operating conditions of each cell. Finally, end-devices can also participate in the execution of some management functions such as Power Control or Scheduling, among others.
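The attachment decision described above can be sketched as a simple filter-and-rank step. The following Python fragment is a minimal sketch under stated assumptions: the `Cell` and `Device` records, the `max_load` threshold, and the rule of preferring the least-loaded feasible cell are all hypothetical choices made for illustration and are not prescribed by the architecture.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cell:
    name: str
    technology: str    # e.g. "5G" or "WirelessHART"
    latency_ms: float  # latency currently reported by the cell's LM
    load: float        # fraction of radio resources in use (0.0 to 1.0)


@dataclass
class Device:
    technologies: frozenset  # radio technologies the device supports
    max_latency_ms: float    # latency bound of the application it runs


def select_cell(device: Device, cells: List[Cell],
                max_load: float = 0.9) -> Optional[Cell]:
    """Pick the least-loaded cell the device can use that meets its latency bound."""
    candidates = [
        c for c in cells
        if c.technology in device.technologies      # device capabilities
        and c.latency_ms <= device.max_latency_ms   # application requirement
        and c.load < max_load                       # current operating conditions
    ]
    # Returns None when no cell qualifies.
    return min(candidates, key=lambda c: c.load, default=None)
```

The three filter conditions mirror the three inputs named in the text: device capabilities, application requirements, and the operating conditions of each cell.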

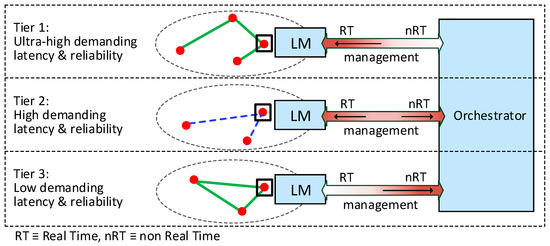

The management entity in charge of some management functions will depend on the particular communication requirements of the nodes connected to a cell. This fact will also influence the type of interactions between the LM of the cell and the Orchestrator. LMs of cells that support communication links with loose latency requirements can delegate some of their management functions to the Orchestrator. For these cells, a closer coordination between different cells could be achieved. For example, if the nodes supported in a cell do not have demanding latency requirements, the radio resource allocation of cells sharing the same unlicensed spectrum could be performed by the Orchestrator to better coordinate the use of resources and avoid inter-system interference. However, management decisions performed by LMs based on local information are preferred for applications with very demanding latency requirements. In this context, cells are organized in different tiers depending on the communication requirements of the industrial application they support, as illustrated in Figure 5. The different requirements in terms of latency and reliability of the application supported by a cell also affect the exact locations where data should be stored and replicated. For example, in time-critical applications, the lower the data access latency bound is, the closer to the destination the data should be replicated.

Figure 5.

Local Manager (LM)–Orchestrator interaction at different tiers of the management architecture.
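The delegation of radio resource allocation to the Orchestrator for cells sharing unlicensed spectrum can be illustrated with a short sketch. The code below is an assumption-laden example, not the allocation algorithm of the architecture: the delegation rule (compare the latency bound with the LM to Orchestrator round-trip time), the interference map, and the greedy colouring heuristic are all hypothetical choices.

```python
from typing import Dict, List, Set


def delegates_to_orchestrator(latency_bound_ms: float,
                              orchestrator_rtt_ms: float) -> bool:
    """Assumed rule: a cell may delegate a function only if its latency bound
    tolerates the round trip between its LM and the Orchestrator."""
    return latency_bound_ms > orchestrator_rtt_ms


def allocate_channels(interference: Dict[str, Set[str]],
                      channels: List[int]) -> Dict[str, int]:
    """Greedy colouring: give each cell the first channel not used by the
    cells it would interfere with."""
    assignment: Dict[str, int] = {}
    # Serve the most constrained cells (most interfering neighbours) first.
    for cell in sorted(interference, key=lambda c: len(interference[c]), reverse=True):
        used = {assignment[n] for n in interference[cell] if n in assignment}
        free = [ch for ch in channels if ch not in used]
        if not free:
            raise ValueError(f"no non-interfering channel left for cell {cell}")
        assignment[cell] = free[0]
    return assignment
```

Greedy colouring is a heuristic and does not always use the minimum number of channels; it is shown here only because it makes the coordination role of the Orchestrator concrete.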

5.2. Hybrid Communication Management

Communication systems must be able to support the high dynamism of the industrial environment that will result from the coexistence of different industrial applications, different types of sensors, the mobility of nodes (robots, machinery, vehicles and workers), and changes in the production demands. Industry 4.0 thus demands flexible and dynamic communication networks able to adapt their configuration to changes in the environment to seamlessly meet the communication requirements of industrial applications. To this end, communication management decisions must be based on current operating conditions and on the continuous monitoring of experienced performance. Our hierarchical communication and data management architecture allows the implementation of hybrid communication management schemes that integrate local and decentralized management decisions while maintaining a close coordination through a central management entity (the Orchestrator) with global knowledge of the performance experienced in the whole industrial communication network. The hybrid communication management introduces flexibility in the management of wireless connections and increases the capability of the network to detect and react to local changes in the industrial environment while efficiently guaranteeing the communication requirements of industrial applications and services supported by the whole network.

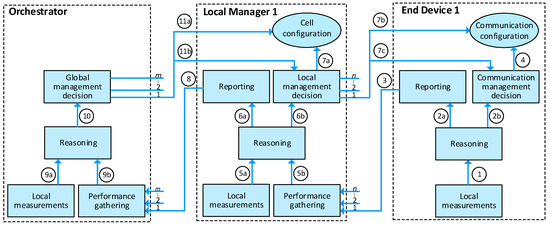

In hybrid management schemes, management entities must interact to coordinate their decisions and ensure the correct operation of the whole network. Figure 6 represents the interactions between the management entities of the hierarchical architecture: the Orchestrator, LMs, and end-devices (as presented in Section 5, end-devices might also participate in the communication management). Boxes within each management entity represent different functions executed at each entity:

Figure 6.

Hybrid communication management: interaction between management entities.

- Local measurements: This function measures physical parameters on the communication link, for example, received signal level (received signal strength indication or RSSI), signal to noise ratio (SNR), etc. In addition, this function also measures and evaluates the performance experienced in the communication, for example, throughput, delay, packet error rate (PER), etc. This function is performed by each entity on its communication links.

- Performance gathering: This function collects information about the performance experienced at the different cells. This function is performed at the LMs, which collect the performance information gathered by the end-devices within their cells, and at the Orchestrator, which receives the performance information gathered by the LMs.

- Reasoning: The reasoning function processes the data obtained by the local measurements and the performance gathering functions to synthesize higher-level performance information. The reasoning performed at each entity will depend on the particular application supported (and the communication requirements of the application), and on the particular management algorithm implemented. For example, if a cell supports time-critical control applications, the maximum value of latency experienced by the 99th percentile of packets transmitted might be of interest, while the average throughput achieved in the communication could be required to analyze the performance of a 3D visualization application.

- Reporting: This function sends periodic performance reports to the management entity in the higher hierarchical level. Particularly, end-devices send periodic reports to the LMs, and LMs report performance information to the Orchestrator.

- Global/Local/Communication management decision: This function executes the decision rule or decision policy. For example, Admission Control or Inter-Cell Interference Coordination algorithms can be executed as the Global management decision function in the Orchestrator; Power Control or Radio Resource Allocation within a cell can be executed as the Local management decision function in the LMs; and Scheduling or Power Control can be executed as the Communication management decision function at the end-devices.
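The reasoning function described in the list above can be sketched as a mapping from raw measurements to the indicator that matters for a given application. The following Python fragment is a minimal illustration; the application labels, the function names, and the choice of the nearest-rank percentile definition are assumptions made for this example.

```python
from statistics import mean
from typing import Dict, List


def percentile(samples: List[float], p: int) -> float:
    """Nearest-rank percentile for an integer p between 1 and 100."""
    ordered = sorted(samples)
    # ceil(p * n / 100) computed in integer arithmetic to avoid rounding issues.
    rank = max(1, (p * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def reason(application: str, latencies_ms: List[float],
           throughputs_mbps: List[float]) -> Dict[str, float]:
    """Turn raw measurements into the indicator relevant for the application."""
    if application == "time_critical_control":
        # Latency not exceeded by 99% of the transmitted packets.
        return {"p99_latency_ms": percentile(latencies_ms, 99)}
    # Throughput-oriented applications, e.g. 3D visualization.
    return {"avg_throughput_mbps": mean(throughputs_mbps)}
```

The output of such a function is what the reporting function would forward to the next level of the hierarchy, instead of the raw samples.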

As shown in Figure 6, an end-device performs local measurements of the quality and performance experienced in its communication links. This local data (1) is processed by the reasoning function, which provides high-level performance information (2a) that is reported to the LM in its cell (3). If the end-device has management capabilities, this high-level performance information can also be used by the end-device (2b) to reach a management decision (4) and configure its communication parameters. In this case, the management decisions taken by different end-devices in the same cell are coordinated by the LM in the cell, which can also configure some communication parameters of the end-devices (7b). Decisions taken by end-devices are constrained by the decisions taken by the LM (7c). If end-devices do not have management capabilities, the communication parameters for the end-devices are directly configured by the LM (7b). The Local management decisions taken by each LM are based on the performance information gathered by all end-devices in its cell (from 1 to n devices in the figure), and on local measurements performed by the LM itself. This data (5a and 5b) is processed by the reasoning function in the LM, and the resulting high-level performance information (6b) is used to take a local management decision and configure the communication parameters of the end-devices in its cell (7a, 7b, and 7c). Each LM also reports to the Orchestrator the processed information about the performance experienced in its cell (8). The Orchestrator receives performance information from all the LMs (from 1 to m LMs in the figure). The performance information gathered by the LMs (9b), together with local measurements performed by the Orchestrator in its communication links with the LMs (9a), is processed by the reasoning function in the Orchestrator.
The high-level performance information (10) is used by the Orchestrator to reach a global management decision and configure the radio resources to use at each cell (11a). The global management decisions taken by the Orchestrator constrain the local management decisions taken by the LMs (11b) to guarantee the coordination among the different LMs in the network, and ultimately ensure the communication requirements of the industrial applications and services supported by the network.
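The nested constraints of this interaction (Orchestrator constrains LMs, LMs constrain end-devices) can be made concrete with a toy example. The sketch below is purely illustrative: the use of transmit power as the configured parameter, the worst-latency summary, and the global rule in `orchestrate` are assumptions introduced for this example and are not management policies defined in this paper. Step numbers in the comments refer to Figure 6.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EndDevice:
    name: str
    tx_power_dbm: float = 0.0

    def decide(self, wanted_dbm: float, lm_limit_dbm: float) -> float:
        """Device decision (4), bounded by the LM constraint (7c)."""
        self.tx_power_dbm = min(wanted_dbm, lm_limit_dbm)
        return self.tx_power_dbm


@dataclass
class LocalManager:
    cell: str
    limit_dbm: float = 10.0  # constraint handed to end-devices (7c)
    reports: List[float] = field(default_factory=list)

    def gather(self, device_latency_ms: float) -> None:
        """Collect a performance report sent by an end-device (3)."""
        self.reports.append(device_latency_ms)

    def report(self) -> float:
        """Summarize the cell as its worst reported latency and report it (8)."""
        return max(self.reports)

    def apply(self, orchestrator_cap_dbm: float) -> None:
        """The local limit may never exceed the Orchestrator's cap (11b)."""
        self.limit_dbm = min(self.limit_dbm, orchestrator_cap_dbm)


def orchestrate(cell_latency_ms: Dict[str, float], target_ms: float,
                base_cap_dbm: float = 10.0,
                reduced_cap_dbm: float = 5.0) -> Dict[str, float]:
    """Toy global decision (10, 11b): if any cell misses the latency target,
    cells that meet it get a reduced power cap to limit the interference they cause."""
    any_violation = any(lat > target_ms for lat in cell_latency_ms.values())
    return {
        cell: reduced_cap_dbm if any_violation and lat <= target_ms else base_cap_dbm
        for cell, lat in cell_latency_ms.items()
    }
```

Whatever policy is actually used, the structural point is the same: each level takes its own decision with `min(...)` against the bound received from the level above.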

5.3. Decentralized Data Distribution

In terms of data management, the Orchestrator plays an important role in facilitating the development of novel smart data distribution solutions that cooperate with cloud-based service provisioning and communication technologies. Smart proactive data storage/replication techniques can be designed, ensuring that data is located where it can be accessed by appropriate decision makers in a timely manner based on the performance of the underlying communication infrastructure. Consequently, the Orchestrator offers an opportunity to implement different types of data-oriented automation functions at reduced costs, such as interactions with external data providers or requestors, inter-cell data distribution planning, and management and coordination of the LMs.

For the data management, allocation of roles to the Orchestrator, LMs, and individual devices is less precisely defined in general, and can vary significantly on a per-application and per-scenario basis. In general, we expect that the Orchestrator decides on which cells (controlled by one LM each) data need to be available and thus replicated. Also, it decides on which cells data must not be replicated due to ownership reasons. Thus, we expect the Orchestrator to be responsible for managing the heterogeneity issues related to managing data across a number of different cells, possibly owned and operated by different entities. LMs manage individual cells. They typically decide where, inside the cell, data need to be replicated, stored, and moved dynamically, based on the requirements of the specific applications, and the resources available at the individual nodes. Note that data in general are replicated across the individual nodes, and not exclusively at the LMs, to guarantee low delays and jitters, which might be excessive if the LMs operate as unique centralized data managers. In some cases, end-devices can also participate in management functions, for example, by exploiting D2D communications to directly exchange data between them, implementing localized data replication or storage policies. In those cases, the data routing is not necessarily regulated centrally, but can be efficiently distributed, using appropriate cooperation schemes. In the architecture, therefore, the control of data management schemes can be performed centrally at the Orchestrator, locally at the LMs, or even at individual devices, as appropriate. Data management operations become distributed, and exploit devices which lie between source and destination devices, like the use of proxies for data storage and access.
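The two decision levels described above can be sketched as two small functions: one for the Orchestrator (which cells receive a replica) and one for an LM (which node inside the cell stores it). This is a minimal sketch under stated assumptions. The function names, the linear path model, and the rule of picking the node farthest from the destination that still respects the latency bound (so that data is not moved further than needed) are hypothetical choices and are not the placement algorithms evaluated in [21,38,39].

```python
from typing import Iterable, List, Set


def cells_to_replicate(requesting_cells: Iterable[str],
                       forbidden_cells: Set[str]) -> List[str]:
    """Orchestrator level: replicate where data is needed, except in cells
    where ownership constraints forbid it."""
    return [c for c in requesting_cells if c not in forbidden_cells]


def choose_replica_node(path: List[str], hop_latency_ms: List[float],
                        bound_ms: float) -> str:
    """LM level: path runs from the data source to the destination and
    hop_latency_ms[i] is the latency of the link path[i] -> path[i + 1].
    Return the node farthest from the destination whose access latency to
    the destination still respects bound_ms."""
    chosen = path[-1]  # storing at the destination itself always meets the bound
    latency_to_destination = 0.0
    for i in range(len(path) - 2, -1, -1):
        latency_to_destination += hop_latency_ms[i]
        if latency_to_destination > bound_ms:
            break
        chosen = path[i]
    return chosen
```

With a tighter bound the chosen node moves toward the destination, which matches the observation in Section 5.1 that the lower the data access latency bound is, the closer to the destination the data should be replicated.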

Note that our architectural scheme enables the storing and replication of data between (i) (potentially mobile) nodes in the factory environment (e.g., the mobile nodes of the factory operators, nodes installed in work cells, nodes attached to mobile robots, etc.), (ii) edge nodes providing storage services for the specific (areas of the) factory, and (iii) remote cloud storage services. All three layers can be used in a synergic way, based on the properties of the data and the requirements of the users requesting it. Depending on these properties, data processing may need highly variable computational resources. Advanced scheduling and resource management strategies lie at the core of the usage of distributed infrastructure resources. However, such strategies must be tailored to the particular algorithm/data combination to be managed. Unlike in the past, the scheduling process no longer looks for smart ways to adapt the application to the execution environment; instead, it aims at selecting and managing the computational resources available on the distributed infrastructure to fulfil given performance indicators.

Our suggested architectural scheme has been used in order to efficiently deploy the data management functions over typical industrial IoT networks. Initial results show that the decentralized data management scheme of the proposed architecture can indeed enhance various target metrics when applied to various industrial IoT networking settings:

- Average end-to-end latency. In [21,38], we have provided an extensive experimental evaluation, both in a testbed and through simulations, and we demonstrated that the adoption of the proposed decentralized data management (i) guarantees that the access latency stays below the given threshold, and (ii) significantly outperforms traditional centralized and even distributed approaches, in terms of average data access latency guarantees.

- Maximum end-to-end latency. In [39], we demonstrated that the proposed decentralized data management (i) guarantees data access latency below the given threshold, and (ii) performs well in terms of network lifetime with respect to a theoretically optimal solution.

- Adaptiveness. In [40], based on the proposed decentralized data management, we provided several efficient algorithmic functions which locally reconfigure the paths of the data distribution process, when a communication link or a network node fails. We demonstrated through simulations increased performance gains of our method in terms of energy consumption and data delivery success rate.
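To give an intuition of what a local path reconfiguration after a link failure may look like, the following sketch repairs a data distribution path starting from the node immediately upstream of the failed link. It is an illustrative assumption and not the algorithmic functions proposed in [40]: the graph representation, the breadth-first search, and the function names `detour` and `repair` are all hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple


def detour(graph: Dict[str, List[str]], start: str, goal: str,
           failed_link: Tuple[str, str], blocked: Set[str]) -> Optional[List[str]]:
    """Breadth-first search from start to goal that avoids the failed link
    and the nodes already used upstream on the path."""
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt in parents or nxt in blocked:
                continue
            if {node, nxt} == set(failed_link):
                continue
            parents[nxt] = node
            queue.append(nxt)
    return None


def repair(path: List[str], graph: Dict[str, List[str]],
           failed_link: Tuple[str, str]) -> Optional[List[str]]:
    """Keep the path up to the node before the failed link and splice in a
    detour from there. Return None if no local detour exists, in which case
    the failure would have to be handled at a higher level."""
    upstream_node = failed_link[0]
    i = path.index(upstream_node)
    new_tail = detour(graph, upstream_node, path[-1], failed_link, set(path[:i]))
    return None if new_tail is None else path[:i] + new_tail
```

Only the part of the path downstream of the failure is recomputed, which is what makes the reconfiguration local.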

6. RAN Slicing for 5G Industrial Wireless Networks

In a factory environment, several applications with different communication requirements (Section 2) will coexist under the same cell. Supporting the heterogeneous use cases and the stringent and varying communication requirements of the industrial applications in the factories of the future is a challenge that has to be faced by industrial wireless networks, as presented in Section 3. Current wireless technologies can support more than one type of application, even belonging to different verticals, each of them with possibly radically different communication requirements. For example, LTE or 5G networks can be used to satisfy the ultra-high reliability and low-latency communication requirements of time-critical automation processes. Moreover, LTE or 5G networks can also support applications that require high throughput levels, such as augmented reality or 4K/8K ultra high definition video. These different communication requirements in terms of transmission rates, delay, or reliability call for specific and different communication management functions, and they cannot always be met through a common network setting. Industrial wireless networks must therefore be highly flexible and capable of implementing different communication management functions adapted to the communication requirements of each application. At the same time, they must ensure that the application-specific requirements are satisfied independently of the congestion and performance experienced by the other applications supported by the same cell (i.e., performance isolation needs to be guaranteed between different applications). This is typically achieved through network virtualization and slicing, which allow composing dedicated logical networks with specific management and functionalities.

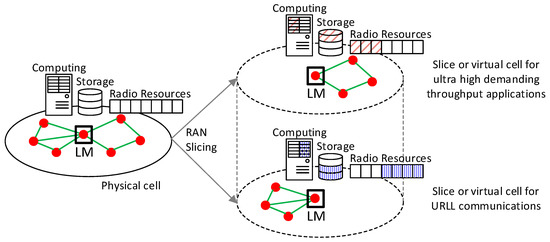

In this context, we propose the use of RAN Slicing to solve the above-mentioned issues. RAN Slicing is based on SDN (Software Defined Networking) and NFV (Network Function Virtualization) technologies and proposes to split the resources and management functions of a RAN into different slices to create multiple logical (virtual) networks on top of a common network infrastructure [41]. Each of these slices (in this case, virtual RANs) must contain the resources needed to meet the communication requirements of the application or service that the slice supports. As presented in [41], one of the main objectives of RAN Slicing is to assure isolation in terms of performance. In addition, isolation in terms of management must also be ensured, allowing the independent management of each slice as a separate network. As a result, RAN Slicing becomes a key technology to deploy a flexible communication and networking architecture capable of meeting the stringent and diverging communication requirements of industrial applications, and in particular, those of ultra-reliable and low-latency (URLL) communications. In the proposed architecture, each slice of a physical cell is referred to as a virtual cell, as shown in Figure 7. Each virtual cell implements the appropriate functions based on the requirements of the application supported and must be assigned the RAN resources (e.g., data storage, computing, radio resources, etc.) required to satisfy the requirements of the communication links it supports.

Figure 7.

Virtual cells based on Radio Access Network (RAN) Slicing.
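The relation between a physical cell, its virtual cells, and performance isolation can be illustrated with a simple reservation model. The sketch below is an assumption made for illustration: it models only radio resources (not storage or computing), counts them in abstract blocks, and uses hypothetical names (`PhysicalCell`, `create_virtual_cell`, `grant`). It is not a description of how RAN slicing is implemented in [41] or in 3GPP systems.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PhysicalCell:
    radio_blocks: int  # total radio resource blocks of the cell (assumed unit)
    virtual_cells: Dict[str, int] = field(default_factory=dict)  # slice -> reserved blocks

    def free_blocks(self) -> int:
        """Blocks not yet reserved by any virtual cell."""
        return self.radio_blocks - sum(self.virtual_cells.values())

    def create_virtual_cell(self, name: str, blocks: int) -> bool:
        """Reserve blocks for a new slice; refuse if that would take
        resources already reserved for other slices."""
        if name in self.virtual_cells or blocks > self.free_blocks():
            return False
        self.virtual_cells[name] = blocks
        return True

    def grant(self, name: str, requested: int) -> int:
        """A slice never gets more than its reservation, so congestion in one
        slice cannot reduce the blocks available to another."""
        return min(requested, self.virtual_cells[name])
```

Strict reservation is the simplest way to obtain isolation; it trades some efficiency for predictability, since blocks left unused by one slice are not lent to another in this model.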

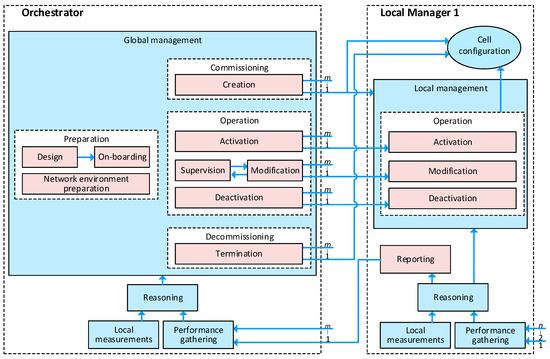

RAN slicing is already being studied by the 3GPP. System architecture and requirements to support network slicing in 5G networks are already defined in [42,43]. The 3GPP also describes the management aspects of a network slice in [44], which are organized in four lifecycle phases: preparation, commissioning, operation, and decommissioning. Within each of these phases, high-level tasks are also defined in [44]. The tasks included in each phase are described next. In addition, Figure 8 shows how the proposed hybrid management architecture can efficiently support the management of the slices (Figure 8 does not show end-devices since RAN slice management functions are the responsibility of the Orchestrator and the Local Managers).

Figure 8.

Management aspects of RAN slices supported by the hybrid communication management architecture (red squares represent RAN slices management tasks defined in [44]).

- Preparation: The preparation phase includes the evaluation of the RAN slice requirements, design and definition of the attributes of the new RAN slice, and on-boarding. In this phase, the network environment is also prepared. The tasks related to the preparation phase are the responsibility of the Orchestrator, which has global knowledge of the network. Thanks to the information collected from the different (physical/virtual) cells, the Orchestrator can evaluate the need for new slices to guarantee the requirements of the system/network, as well as the capacity of a new RAN slice to meet the communication requirements demanded based on the availability of resources.

- Commissioning: This phase includes the creation of the slice. This task is also performed by the Orchestrator. The Orchestrator allocates the needed resources to the new slice or virtual cell and configures them to accomplish the new slice requirements. The Orchestrator also creates the Local Manager in charge of the new virtual cell. In this case, the Orchestrator also configures the local management functions (for example, the scheduling or power control schemes) to be executed by the Local Manager to satisfy the requirements in terms of latency, reliability, and bandwidth, among others, of the application(s) or service(s) supported by the new slice or virtual cell.

- Operation: The operation phase includes the activation, supervision, performance reporting, modification, and deactivation of a slice. The management tasks of this phase are distributed between the Orchestrator and the Local Managers of each slice or virtual cell. The main decisions about the activation or deactivation of a RAN slice or virtual cell are the responsibility of the Orchestrator. However, once the Local Manager has received the activation/deactivation order from the Orchestrator, some tasks aimed at configuring or activating/deactivating communication services at the cell level could be performed by the Local Manager. During the operation phase, the Orchestrator is also in charge of monitoring and supervising the resource usage and whether the established slice requirements are met. To this end, the Local Manager is in charge of reporting information about the performance experienced in the slice or virtual cell. Based on the supervision outcome, the Orchestrator can order the modification of the slice or virtual cell. In this case, some modification actions can be performed by the Local Manager in order to change some configuration parameters or functions based on the modification instructions received from the Orchestrator. In addition, slice modification can also be triggered by new network slice requirements.

- Decommissioning: This phase is executed in the Orchestrator. The Orchestrator is in charge of terminating a slice or virtual cell when it is no longer required. Once a slice is terminated, it no longer exists.
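The four lifecycle phases listed above, together with the entity responsible for each, can be summarized as a small state machine. The following sketch is only an illustration of the description in this section: the class and table names are hypothetical, and the simplification that only the Orchestrator triggers phase changes is an assumption of this example rather than a rule stated in [44].

```python
from enum import Enum


class Phase(Enum):
    PREPARATION = "preparation"
    COMMISSIONING = "commissioning"
    OPERATION = "operation"
    DECOMMISSIONING = "decommissioning"


# Phase order as listed in the text.
NEXT_PHASE = {
    Phase.PREPARATION: Phase.COMMISSIONING,
    Phase.COMMISSIONING: Phase.OPERATION,
    Phase.OPERATION: Phase.DECOMMISSIONING,
}

# Entities involved in each phase according to the description above.
RESPONSIBLE = {
    Phase.PREPARATION: {"Orchestrator"},
    Phase.COMMISSIONING: {"Orchestrator"},
    Phase.OPERATION: {"Orchestrator", "Local Manager"},
    Phase.DECOMMISSIONING: {"Orchestrator"},
}


class SliceLifecycle:
    def __init__(self) -> None:
        self.phase = Phase.PREPARATION

    def advance(self, actor: str) -> Phase:
        """Move to the next phase; in this sketch only the Orchestrator may do so."""
        if actor != "Orchestrator":
            raise PermissionError("phase changes are decided by the Orchestrator")
        if self.phase not in NEXT_PHASE:
            raise ValueError("slice is already in its final phase")
        self.phase = NEXT_PHASE[self.phase]
        return self.phase
```

The `RESPONSIBLE` table makes explicit that the operation phase is the only one in which management tasks are shared between the Orchestrator and the Local Managers.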

With respect to data management, the data distribution functions operate on top of the virtual networks generated by RAN Slicing, and the requirements posed by data management determine part of the network traffic patterns. Therefore, the RAN Slicing defined by the Orchestrator might consider the traffic patterns resulting from data management operations in order to optimize the slicing itself. The interplay between RAN Slicing and decentralized data distribution (as opposed to cloud-based centralized middleware for data management) makes it convenient to manage data among different slices locally and, when needed, to exploit an additional level of infrastructure-based computation and data management (i.e., fixed edge devices, portable user devices and remote clouds). Instead of relying exclusively on storage and computation services provided by a global cloud provider, our scheme can distribute storage and computation tasks among slices, and can therefore separate the network into different planes (e.g., separate network and data planes).

7. Conclusions

In this paper, we highlight some emerging trends provided by the 3GPP and 5GPPP, by considering some typical industrial use cases and relevant requirements. We showcase the key communication and data management challenges and suggest a holistic communication and data management architectural scheme. Our scheme combines a hierarchical multi-tier management system which integrates local and decentralized management with centralized decisions, with the different capabilities of the available communication technologies (wired and wireless) to meet the wide range of requirements of industrial applications. It incorporates hybrid communication and data management, as well as RAN slicing and network virtualization techniques for meeting the communication and data management requirements of the factory of the future.

Author Contributions

Conceptualization, M.C.L.-E. and T.P.R.; Formal analysis, M.S.; Funding acquisition, M.S. and A.P.; Investigation, M.C.L.-E., M.S. and T.P.R.; Methodology, M.C.L.-E. and T.P.R.; Software, M.C.L.-E. and M.S.; Supervision, M.S., A.P. and M.C.; Validation, M.S. and A.P.; Writing-original draft, M.C.L.-E. and T.P.R.; Writing-review & editing, M.C.L.-E., M.S., T.P.R., A.P. and M.C.

Funding

This work has been funded by the European Commission through the FoF-RIA Project AUTOWARE: Wireless Autonomous, Reliable and Resilient Production Operation Architecture for Cognitive Manufacturing (No. 723909).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wollschlaeger, M.; Sauter, T.; Jasperneite, J. The Future of Industrial Communication: Automation Networks in the Era of the Internet of Things and Industry 4.0. IEEE Ind. Electron. Mag. 2017, 11, 17–27. [Google Scholar] [CrossRef]

- Bello, L.L.; Lombardo, A.; Milardo, S.; Patti, G.; Reno, M. Software-Defined Networking for Dynamic Control of Mobile Industrial Wireless Sensor Networks. In Proceedings of the IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA), Torino, Italy, 4–7 September 2018; pp. 290–296. [Google Scholar] [CrossRef]

- Ferrari, P.; Flammini, A.; Rinaldi, S.; Sisinni, E.; Maffei, D.; Malara, M. Impact of Quality of Service on Cloud Based Industrial IoT Applications with OPC UA. Electronics 2018, 7, 109. [Google Scholar] [CrossRef]

- Škulj, G.; Vrabič, R.; Butala, P.; Sluga, A. Decentralised network architecture for cloud manufacturing. Int. J. Comput. Integr. Manuf. 2015, 30, 395–408. [Google Scholar] [CrossRef]

- Zikria, Y.B.; Yu, H.; Afzal, M.K.; Rehmani, M.H.; Hahm, O. Internet of Things (IoT): Operating System, Applications and Protocols Design, and Validation Techniques. Future Gener. Comput. Syst. 2018, 88, 699–706. [Google Scholar] [CrossRef]

- Luo, Y.; Duan, Y.; Li, W.; Pace, P.; Fortino, G. A Novel Mobile and Hierarchical Data Transmission Architecture for Smart Factories. IEEE Trans. Ind. Inform. 2018, 14, 3534–3546. [Google Scholar] [CrossRef]

- Sisinni, E.; Saifullah, A.; Han, S.; Jennehag, U.; Gidlund, M. Industrial Internet of Things: Challenges, Opportunities, and Directions. IEEE Trans. Ind. Inform. 2018, 14, 4724–4734. [Google Scholar] [CrossRef]

- Morgado, A.; Huq, K.M.S.; Mumtaz, S.; Rodriguez, J. A survey of 5G technologies: Regulatory, standardization and industrial perspectives. Digit. Commun. Netw. 2018, 4, 87–97. [Google Scholar] [CrossRef]

- Afzal, M.K.; Zikria, Y.B.; Mumtaz, S.; Rayes, A.; Al-Dulaimi, A.; Guizani, M. Unlocking 5G Spectrum Potential for Intelligent IoT: Opportunities, Challenges, and Solutions. IEEE Commun. Mag. 2018, 56, 92–93. [Google Scholar] [CrossRef]

- Zikria, Y.B.; Kim, S.W.; Afzal, M.K.; Wang, H.; Rehmani, M.H. 5G Mobile Services and Scenarios: Challenges and Solutions. Sustainability 2018, 10, 3626. [Google Scholar] [CrossRef]

- Huang, V.K.L.; Pang, Z.; Chen, C.A.; Tsang, K.F. New Trends in the Practical Deployment of Industrial Wireless: From Noncritical to Critical Use Cases. IEEE Ind. Electron. Mag. 2018, 12, 50–58. [Google Scholar] [CrossRef]

- Schleipen, M.; Lüder, A.; Sauer, O.; Flatt, H.; Jasperneite, J. Requirements and concept for Plug-and-Work. at-Automatisierungstechnik 2018, 63, 801–820. [Google Scholar] [CrossRef]

- Lucas-Estañ, M.C.; Raptis, T.P.; Sepulcre, M.; Passarella, A.; Regueiro, C.; Lazaro, O. A Software Defined Hierarchical Communication and Data Management Architecture for Industry 4.0. In Proceedings of the 14th IEEE/IFIP Wireless On-Demand Network Systems and Services Conference (IEEE/IFIP WONS 2018), Isola, France, 6–8 February 2018; pp. 37–44. [Google Scholar] [CrossRef]

- H2020 AUTOWARE Project. Available online: http://www.autoware-eu.org/ (accessed on 6 December 2018).

- Zand, P.; Chatterjea, S.; Das, K.; Havinga, P. Wireless industrial monitoring and control networks: The journey so far and the road ahead. J. Sens. Actuator Netw. 2012, 1, 123–152. [Google Scholar] [CrossRef]

- 5GPPP. 5G and the Factories of the Future. October 2015. Available online: https://5g-ppp.eu/ (accessed on 6 December 2018).

- ETSI. Technical Report; Electromagnetic Compatibility and Radio Spectrum Matters (ERM); System Reference Document; Short Range Devices (SRD); Part 2: Technical Characteristics for SRD Equipment for Wireless Industrial Applications Using Technologies Different from Ultra-Wide Band (UWB); ETSI TR 102 889-2 V1.1.1; ETSI: Valbonne, France, 2011. [Google Scholar]

- 3GPP. Technical Specification Group Services and System Aspects; Study on Communication for Automation in Vertical Domains (Release 16); 3GPP TR 22.804 v16.1.0; 3GPP: Valbonne, France, 2018. [Google Scholar]

- Gozalvez, J.; Sepulcre, M.; Palazon, J.A. On the feasibility to deploy mobile industrial applications using wireless communications. Comput. Ind. 2014, 65, 1136–1146. [Google Scholar] [CrossRef]

- Lucas-Estañ, M.C.; Maestre, J.L.; Coll-Perales, B.; Gozalvez, J.; Lluvia, I. An Experimental Evaluation of Redundancy in Industrial Wireless Communications. In Proceedings of the IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA 2018), Torino, Italy, 4–7 September 2018. [Google Scholar]

- Raptis, T.P.; Passarella, A.; Conti, M. Performance Analysis of Latency-Aware Data Management in Industrial IoT Networks. Sensors 2018, 18, 2611. [Google Scholar] [CrossRef] [PubMed]

- Lucas-Estañ, M.C.; Gozalvez, J. Distributed Radio Resource Allocation for Device-to-Device Communications Underlaying Cellular Networks. J. Netw. Comput. Appl. 2017, 99, 120–130.

- Birolini, A. Reliability Engineering: Theory and Practice; Springer: Berlin/Heidelberg, Germany, 2013; ISBN 978-3-642-39534-5.

- Gaj, P.; Malinowski, A.; Sauter, T.; Valenzano, A. Guest Editorial: Distributed Data Processing in Industrial Applications. IEEE Trans. Ind. Inform. 2015, 11, 737–740.

- Wang, C.; Bi, Z.; Da Xu, L. IoT and cloud computing in automation of assembly modeling systems. IEEE Trans. Ind. Inform. 2014, 10, 1426–1434.

- Bi, Z.; Da Xu, L.; Wang, C. Internet of things for enterprise systems of modern manufacturing. IEEE Trans. Ind. Inform. 2014, 10, 1537–1546.

- Gaj, P.; Jasperneite, J.; Felser, M. Computer Communication within Industrial Distributed Environment: A Survey. IEEE Trans. Ind. Inform. 2013, 9, 182–189.

- Montero, S.; Gozalvez, J.; Sepulcre, M.; Prieto, G. Impact of Mobility on the Management and Performance of WirelessHART Industrial Communications. In Proceedings of the 17th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Kraków, Poland, 17–21 September 2012.

- Lu, C.; Saifullah, A.; Li, B.; Sha, M.; Gonzalez, H.; Gunatilaka, D.; Wu, C.; Nie, L.; Chen, Y. Real-Time Wireless Sensor-Actuator Networks for Industrial Cyber-Physical Systems. Proc. IEEE 2016, 104, 1013–1024.

- Li, S.; Li, D.; Wan, J.; Vasilakos, A.V.; Lai, C.F.; Wang, S. A review of industrial wireless networks in the context of Industry 4.0. Wirel. Netw. 2017, 23, 23–41.

- Gisbert, J.R. Integrated system for control and monitoring industrial wireless networks for labor risk prevention. J. Netw. Comput. Appl. 2014, 39, 233–252.

- Sámano-Robles, R.; Nordström, T.; Santonja, S.; Rom, W.; Tovar, E. The DEWI high-level architecture: Wireless sensor networks in industrial applications. In Proceedings of the 11th International Conference on Digital Information Management (ICDIM), Porto, Portugal, 19–21 September 2016; pp. 274–280.

- KoI Project Website. Available online: http://koi-projekt.de/index.html (accessed on 6 December 2018).

- Aktas, I.; Ansari, J.; Auroux, S.; Parruca, D.; Guirao, M.D.P.; Holfeld, B. A Coordination Architecture for Wireless Industrial Automation. In Proceedings of the European Wireless Conference, Dresden, Germany, 17–19 May 2017.

- Yavuz, M. How Will 5G Transform Industrial IoT? Qualcomm Technologies, Inc.: San Diego, CA, USA, 2018.

- Yavuz, M. Private LTE Networks Create New Opportunities for Industrial IoT; Qualcomm Technologies, Inc.: San Diego, CA, USA, 2017.

- Molina, E.; Lazaro, O.; Sepulcre, M.; Gozalvez, J.; Passarella, A.; Raptis, T.P.; Ude, A.; Nemec, B.; Rooker, M.; Kirstein, F.; et al. The AUTOWARE Framework and Requirements for the Cognitive Digital Automation. In Proceedings of the 18th IFIP Working Conference on Virtual Enterprises (PRO-VE), Vicenza, Italy, 18–20 September 2017.

- Raptis, T.P.; Passarella, A. A distributed data management scheme for industrial IoT environments. In Proceedings of the IEEE 13th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob), Rome, Italy, 9–11 October 2017; pp. 196–203.

- Raptis, T.P.; Passarella, A.; Conti, M. Maximizing industrial IoT network lifetime under latency constraints through edge data distribution. In Proceedings of the IEEE Industrial Cyber-Physical Systems (ICPS), Saint Petersburg, Russia, 15–18 May 2018; pp. 708–713.

- Raptis, T.P.; Passarella, A.; Conti, M. Distributed Path Reconfiguration and Data Forwarding in Industrial IoT Networks. In Proceedings of the 16th IFIP International Conference on Wired/Wireless Internet Communications (WWIC), Boston, MA, USA, 18–20 June 2018.

- Ordonez-Lucena, J.; Ameigeiras, P.; Lopez, D.; Ramos-Munoz, J.J.; Lorca, J.; Folgueira, J. Network Slicing for 5G with SDN/NFV: Concepts, Architectures, and Challenges. IEEE Commun. Mag. 2017, 55, 80–87.

- 3GPP. Technical Specification Group Services and System Aspects; System Architecture for the 5G System; Stage 2 (Release 15); 3GPP TS 23.501 v15.3.0; 3GPP: Valbonne, France, 2018.

- 3GPP. Technical Specification Group Services and System Aspects; Service requirements for the 5G system; Stage 1 (Release 16); 3GPP TS 22.261 v16.5.0; 3GPP: Valbonne, France, 2018.

- 3GPP. Technical Specification Group Services and System Aspects; Telecommunication management; Management and Orchestration; Concepts, Use Cases and Requirements (Release 15); 3GPP TS 28.530 v15.0.0; 3GPP: Valbonne, France, 2018.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).