Using a Reinforcement Q-Learning-Based Deep Neural Network for Playing Video Games

Abstract

1. Introduction

- A new reinforcement Q-learning-based deep neural network (RQDNN), which combines a deep principal component analysis network (DPCANet) with Q-learning, is proposed to determine the strategy for playing video games;

- The proposed approach greatly reduces computational complexity compared with traditional deep neural network architectures; and

- The trained RQDNN runs on a CPU alone, which lowers computing resource costs.

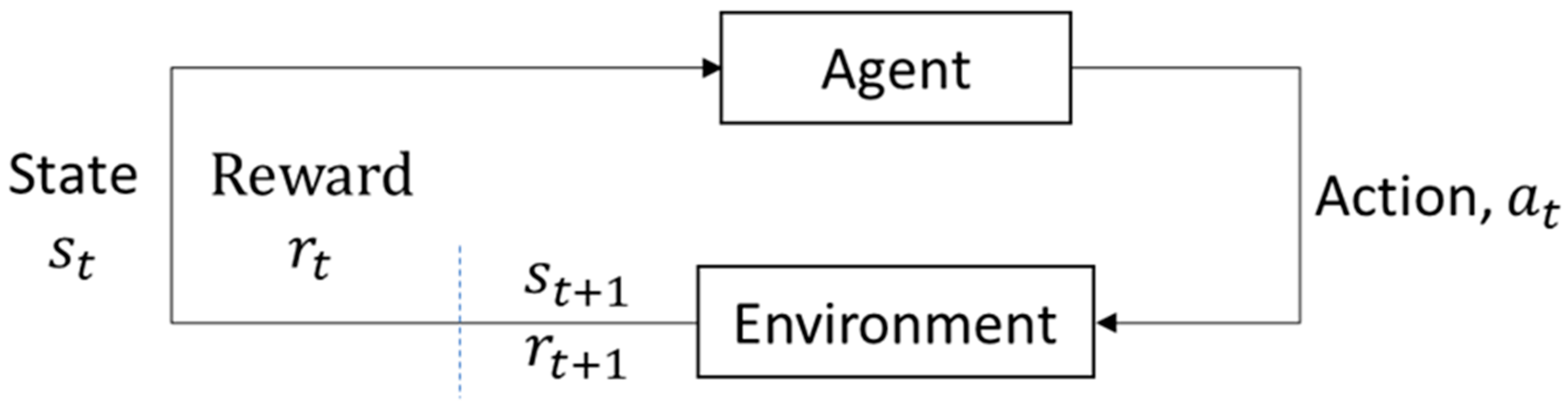

2. Overview of Deep Reinforcement Learning

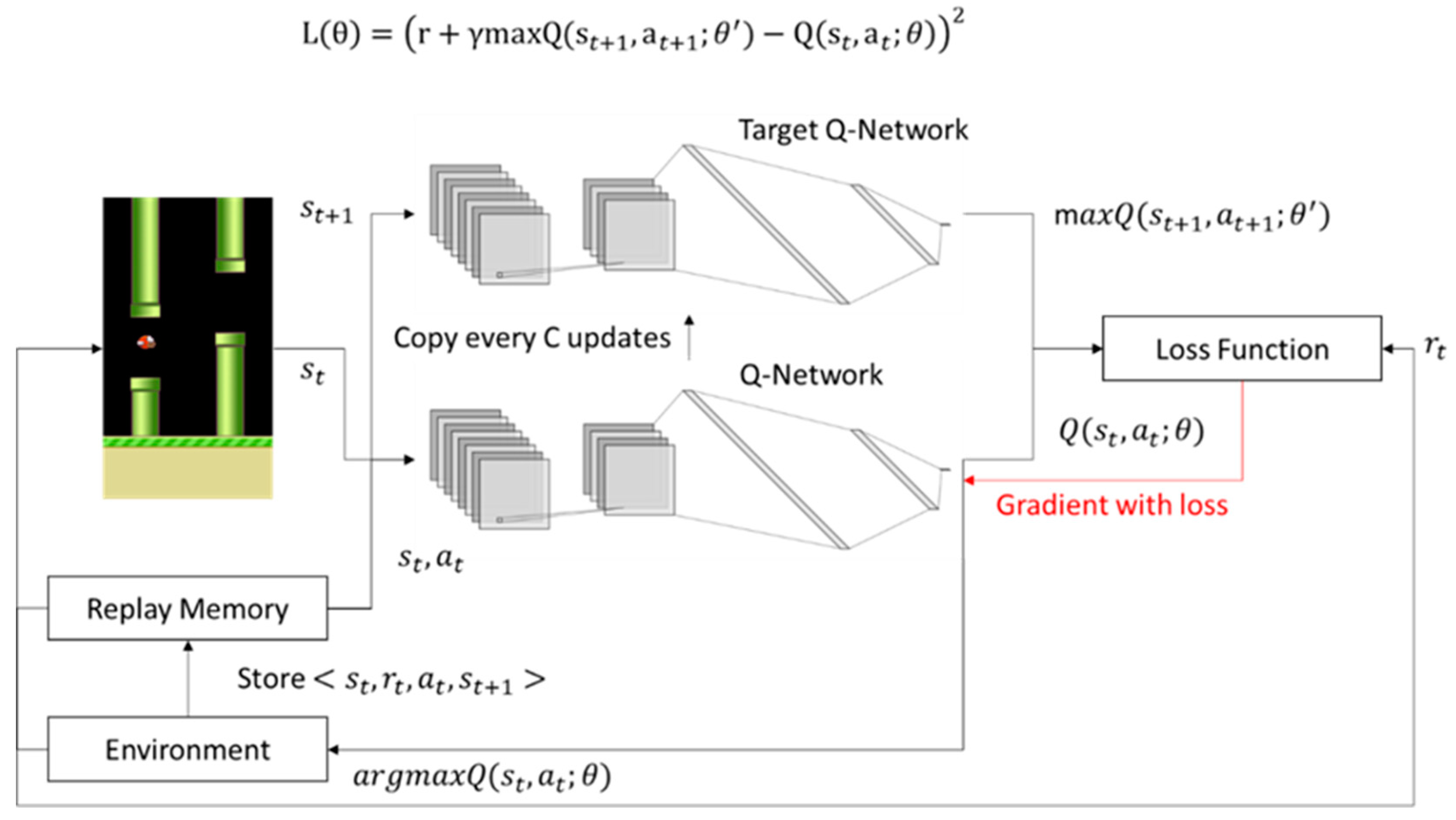

- (1)

- Original images are used as the input states for the convolution neural networks;

- (2)

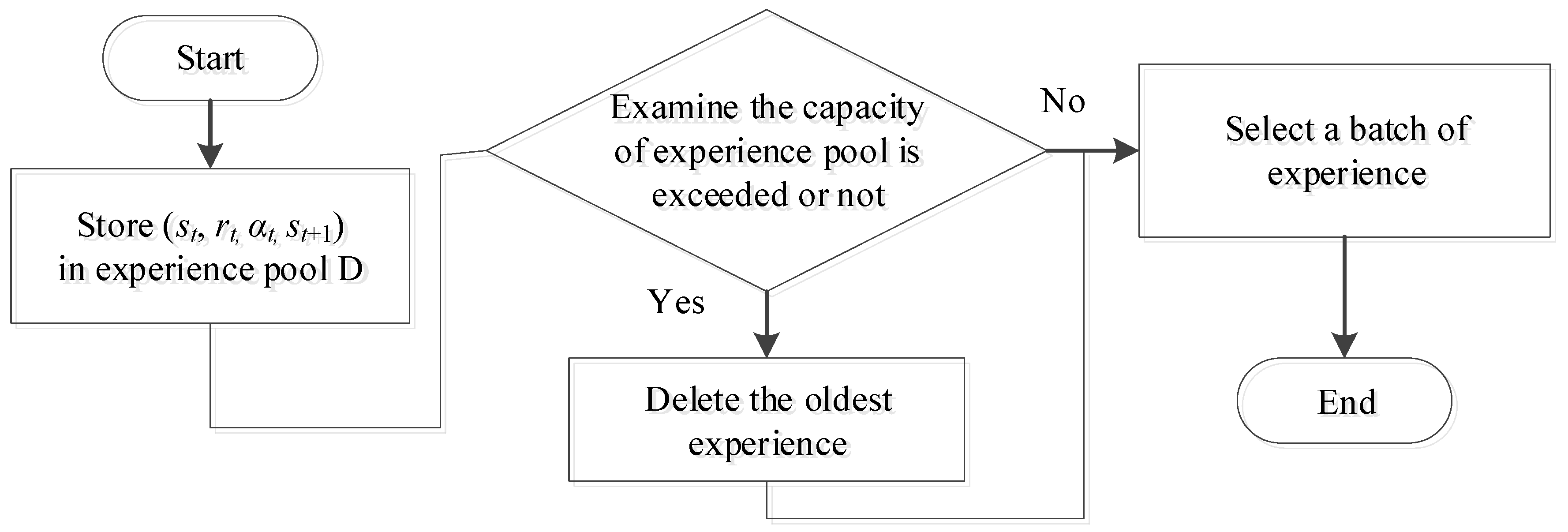

- A replay memory mechanism is added to enhance the learning efficiency of the algorithm; and

- (3)

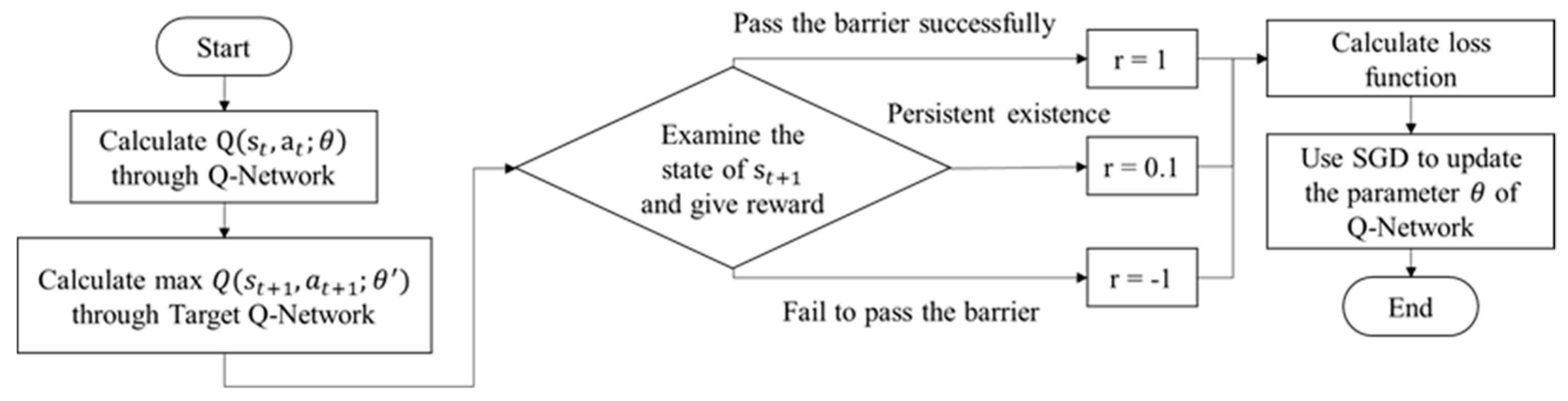

- Independent networks are introduced to estimate time difference errors more accurately and to stabilize algorithm training.
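The replay memory and the independent network listed above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' code: `ReplayMemory` and `td_targets` are hypothetical names, transitions are assumed to be `(state, action, reward, next_state, done)` tuples, and `target_q` is a stand-in for the separately maintained target network, assumed to return one Q-value per action.

```python
import random
from collections import deque
from typing import Callable, Deque, List, Sequence, Tuple

# Assumed transition layout: (state, action, reward, next_state, done)
Transition = Tuple[object, int, float, object, bool]


class ReplayMemory:
    """Fixed-capacity buffer; once full, the oldest transition is dropped."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.buffer: Deque[Transition] = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform sampling without replacement breaks the correlation
        # between consecutive game frames.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def td_targets(batch: Sequence[Transition],
               target_q: Callable[[object], Sequence[float]],
               gamma: float = 0.99) -> List[float]:
    """Targets y = r at terminal steps, else r + gamma * max_a' Q_target(s', a')."""
    targets = []
    for _state, _action, reward, next_state, done in batch:
        if done:
            targets.append(reward)
        else:
            targets.append(reward + gamma * max(target_q(next_state)))
    return targets


if __name__ == "__main__":
    memory = ReplayMemory(capacity=3)
    for t in range(5):
        memory.push((t, 0, 1.0, t + 1, t == 4))
    print(len(memory))  # capacity 3: only the last three transitions remain
    # Hypothetical target network returning fixed Q-values for two actions.
    print(td_targets(memory.sample(2), lambda s: [0.0, 2.0], gamma=0.5))
```

Sampling uniformly from the buffer decorrelates consecutive frames, while computing targets from a network that is refreshed only periodically keeps the regression target from shifting at every training step.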

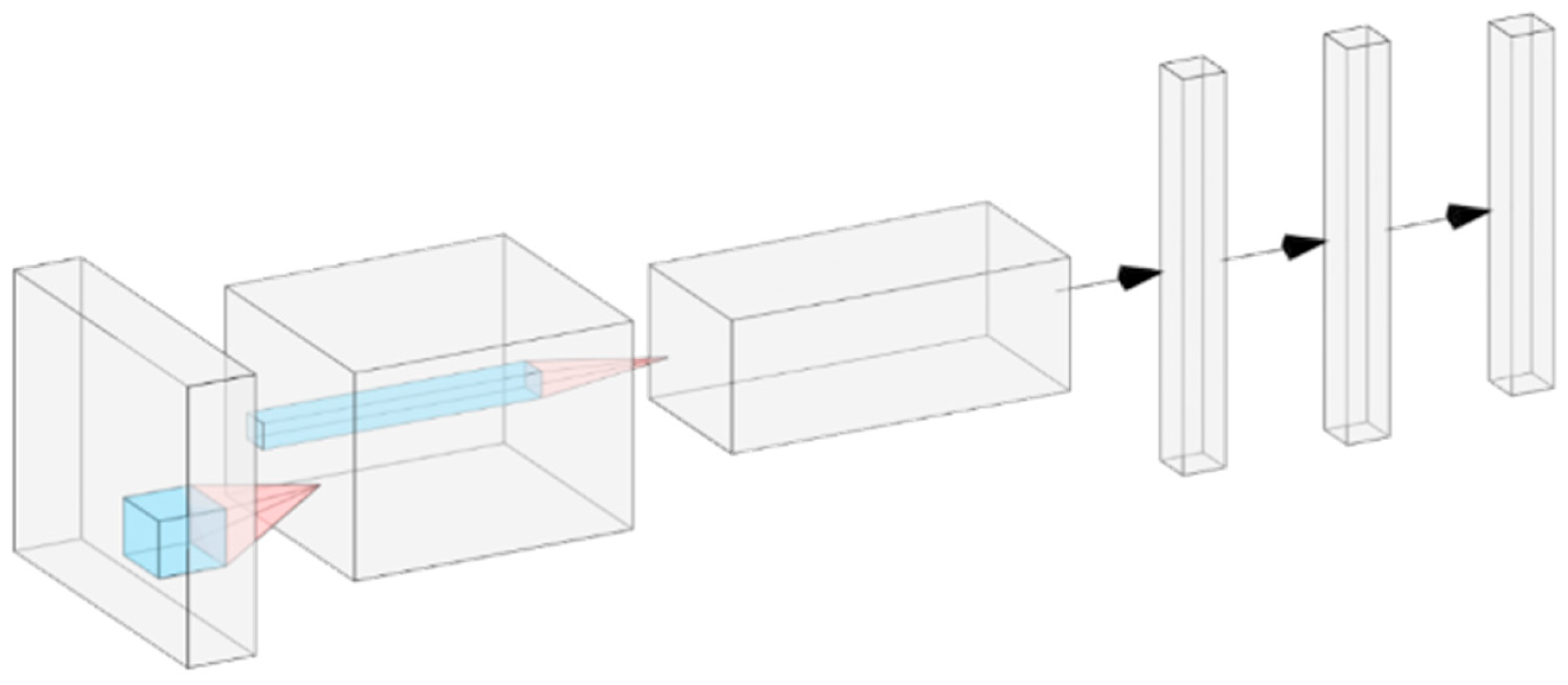

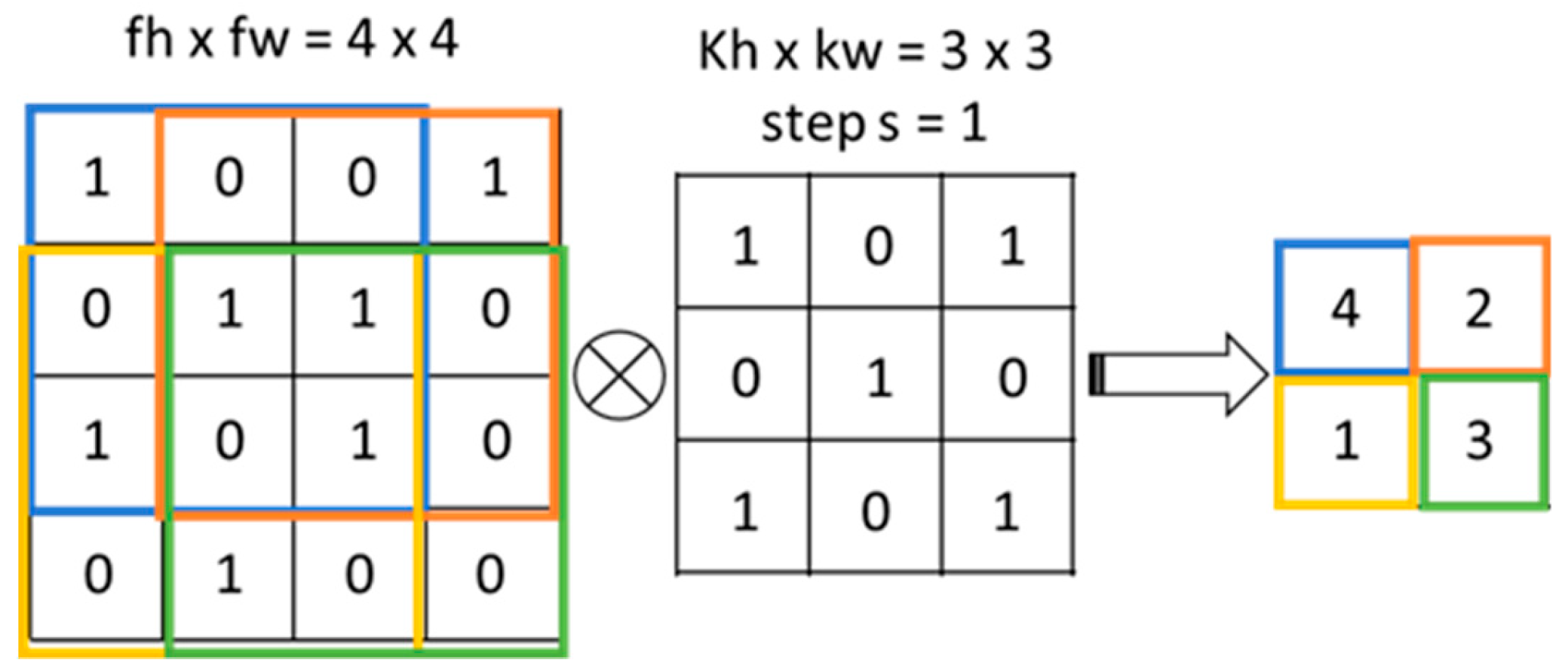

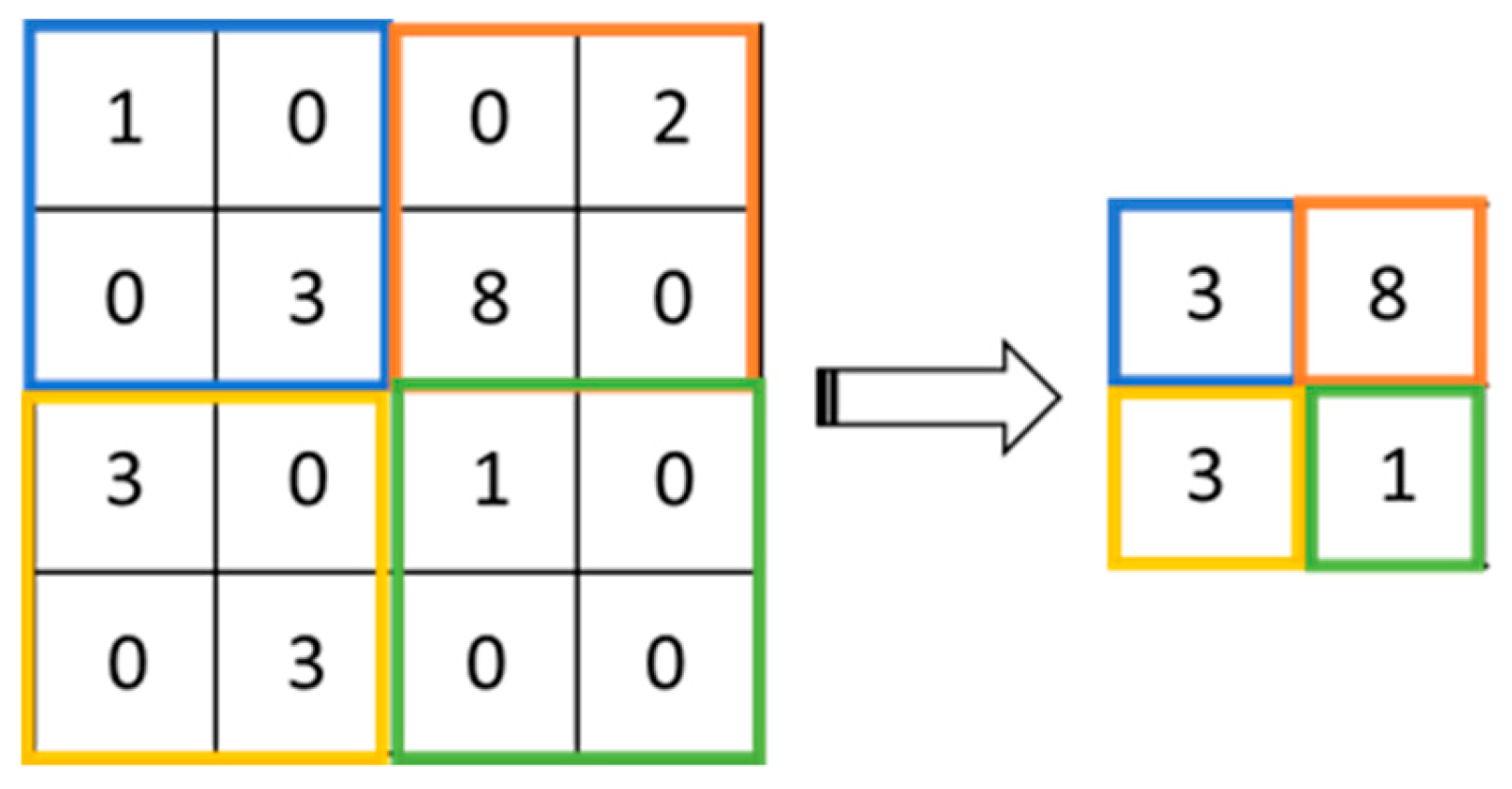

2.1. Convolutional Layer

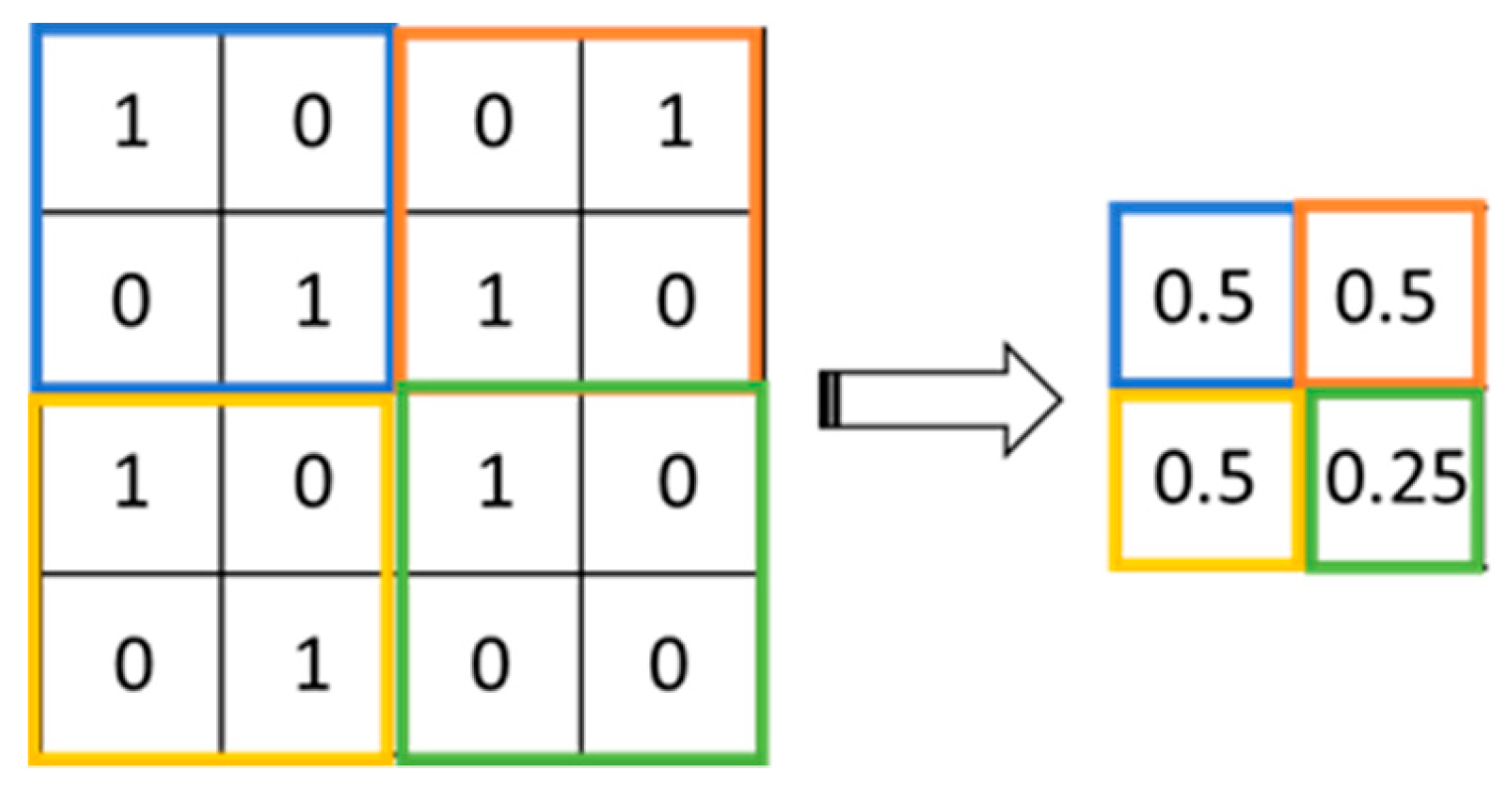
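For reference, the basic operation of a convolutional layer can be sketched in NumPy. This is a hedged, single-channel illustration with no padding and no bias; the name `conv2d` and the `stride` parameter are assumptions of this sketch. As in most deep learning libraries, the kernel is not flipped, so strictly speaking it computes a cross-correlation, and the output size is (H − k) / s + 1 per axis.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Valid (no padding, no bias) 2-D cross-correlation of one channel."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel with the window under it, summed.
            window = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(window * kernel)
    return out


if __name__ == "__main__":
    img = np.arange(16, dtype=float).reshape(4, 4)
    print(conv2d(img, np.ones((2, 2))))            # 3 x 3 feature map
    print(conv2d(img, np.ones((2, 2)), stride=2))  # 2 x 2 feature map
```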

2.2. Pooling Layer
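A pooling layer downsamples each feature map by summarizing small windows. The sketch below, using the hypothetical function `pool2d`, shows max and average pooling over a single-channel input; the default window size and stride of 2 are assumptions, not values taken from the paper.

```python
import numpy as np


def pool2d(x: np.ndarray, size: int = 2, stride: int = 2, mode: str = "max") -> np.ndarray:
    """Max or average pooling over a single-channel feature map (no padding)."""
    if mode not in ("max", "mean"):
        raise ValueError("mode must be 'max' or 'mean'")
    out_h = (x.shape[0] - size) // stride + 1
    out_w = (x.shape[1] - size) // stride + 1
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Each output value summarizes one size x size window of the input.
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = window.max() if mode == "max" else window.mean()
    return out


if __name__ == "__main__":
    fmap = np.arange(16, dtype=float).reshape(4, 4)
    print(pool2d(fmap))               # max pooling
    print(pool2d(fmap, mode="mean"))  # average pooling
```

With size equal to stride, the windows do not overlap, so a 4 × 4 map shrinks to 2 × 2.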

2.3. Activation Function Layer

3. The Proposed RQDNN

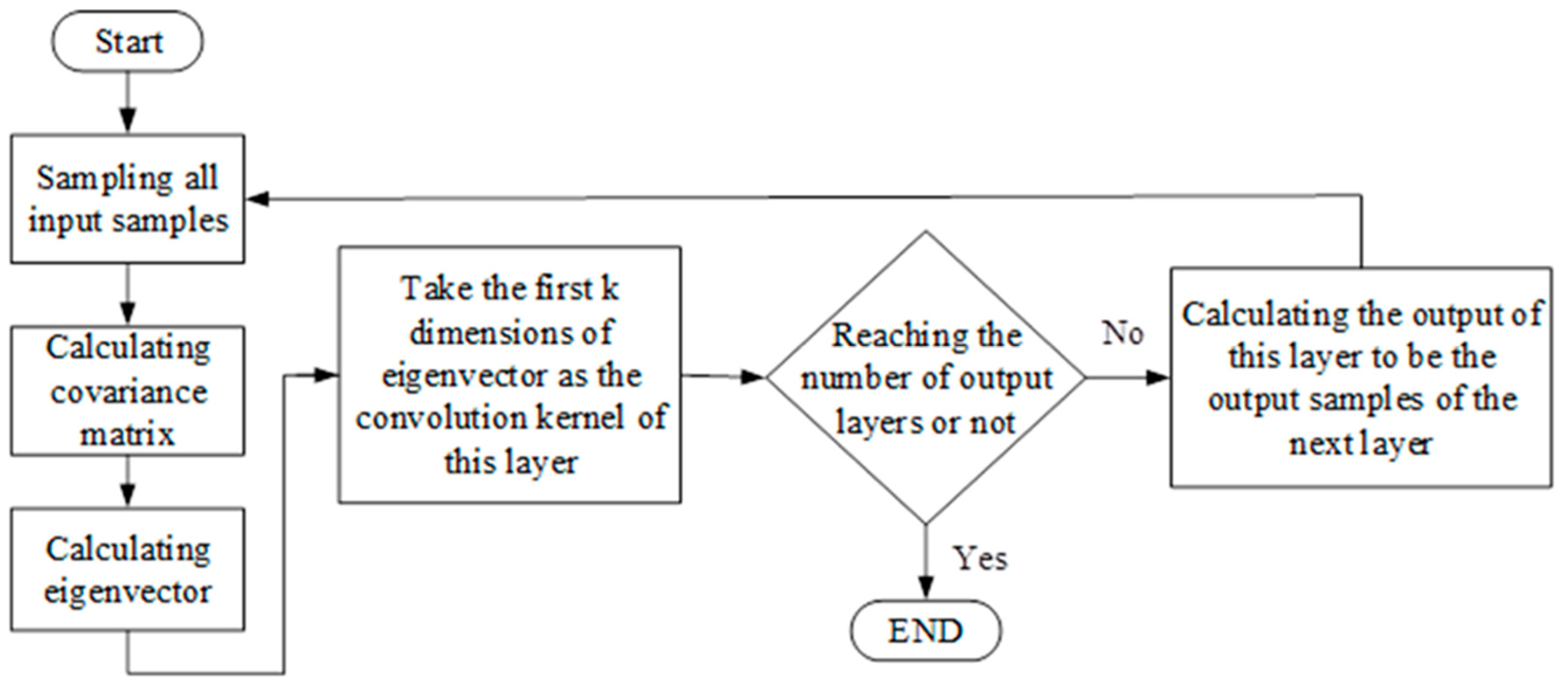

3.1. The Proposed DPCANet

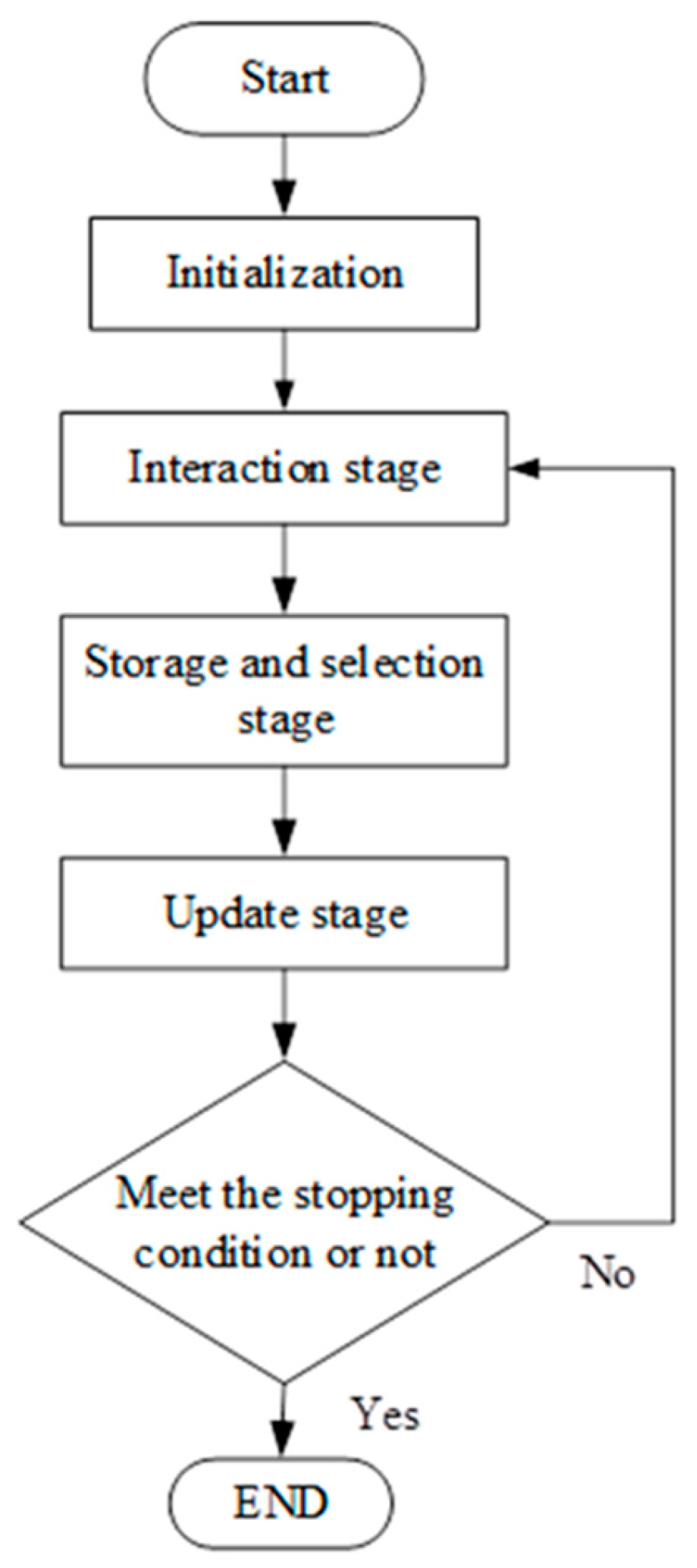

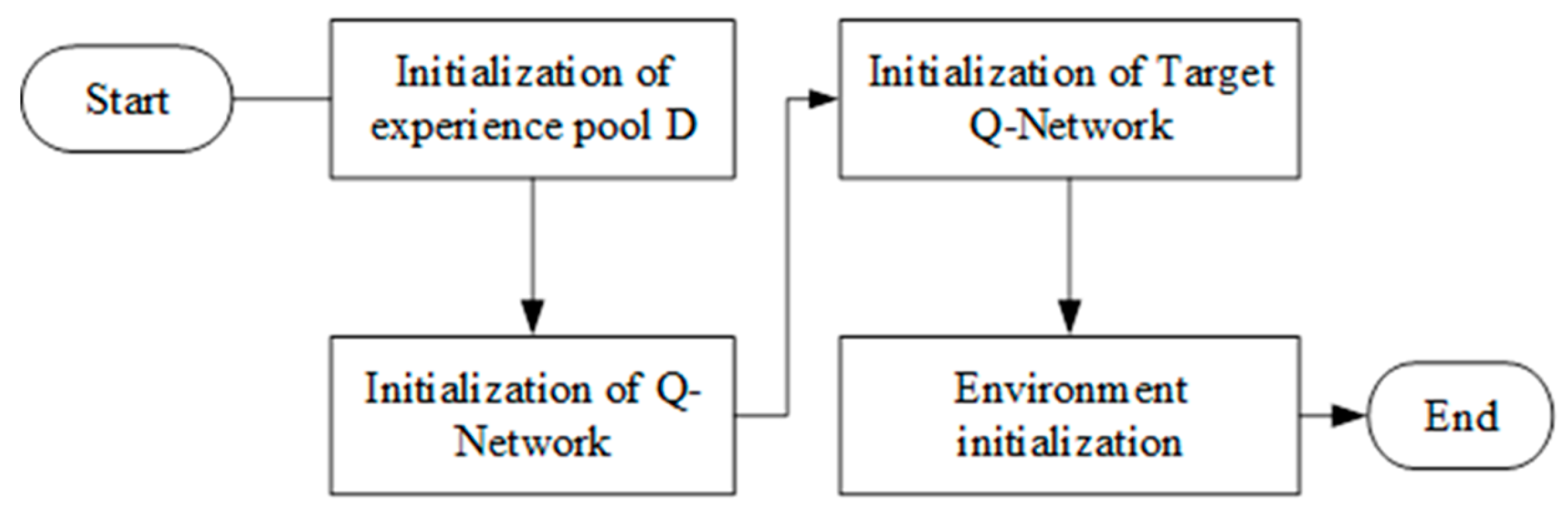

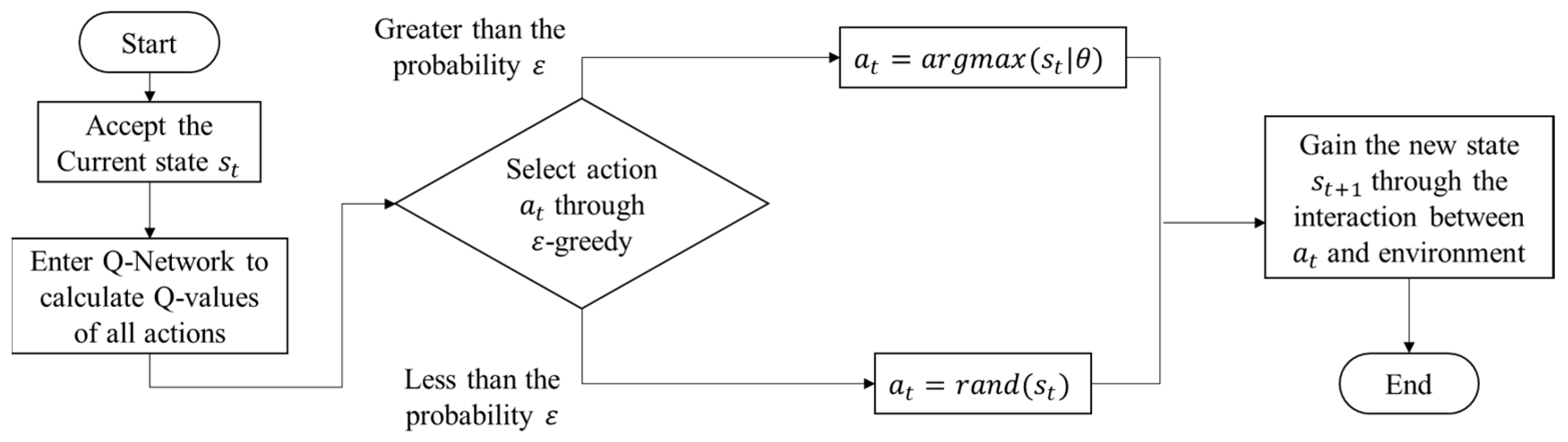
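The DPCANet details are not reproduced in this text. As a hedged sketch, assuming DPCANet follows the PCANet idea of Chan et al. (convolution filters taken as the leading principal components of mean-removed image patches instead of being trained by backpropagation), one filter-learning stage might look like the code below. `extract_patches`, `pca_filters`, the patch size `k`, and `num_filters` are illustrative names and parameters, not the authors' API.

```python
from typing import List, Sequence

import numpy as np


def extract_patches(images: Sequence[np.ndarray], k: int) -> np.ndarray:
    """Collect every k x k patch (stride 1), flatten it, and subtract its mean."""
    cols = []
    for img in images:
        h, w = img.shape
        for i in range(h - k + 1):
            for j in range(w - k + 1):
                p = img[i:i + k, j:j + k].astype(float).ravel()
                cols.append(p - p.mean())
    return np.stack(cols, axis=1)  # shape: (k * k, number_of_patches)


def pca_filters(images: Sequence[np.ndarray], k: int, num_filters: int) -> List[np.ndarray]:
    """Return the leading principal directions of the patches as k x k filters."""
    X = extract_patches(images, k)
    cov = X @ X.T / X.shape[1]              # (k*k, k*k) patch covariance
    eigvals, eigvecs = np.linalg.eigh(cov)  # symmetric solver, ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:num_filters]
    return [eigvecs[:, idx].reshape(k, k) for idx in order]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = [rng.random((8, 8)) for _ in range(5)]  # stand-in for game frames
    filters = pca_filters(frames, k=3, num_filters=4)
    print([f.shape for f in filters])
```

Filters obtained this way need only one eigendecomposition rather than iterative gradient descent, which is one plausible reason such a network can be trained on a CPU alone; a deeper stage would convolve the inputs with these filters and repeat the procedure on the outputs.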

3.2. The Proposed RQDNN

4. Experimental Results

4.1. Evaluation of Different Convolution Layers in DPCANet

4.2. The Flappy Bird Game

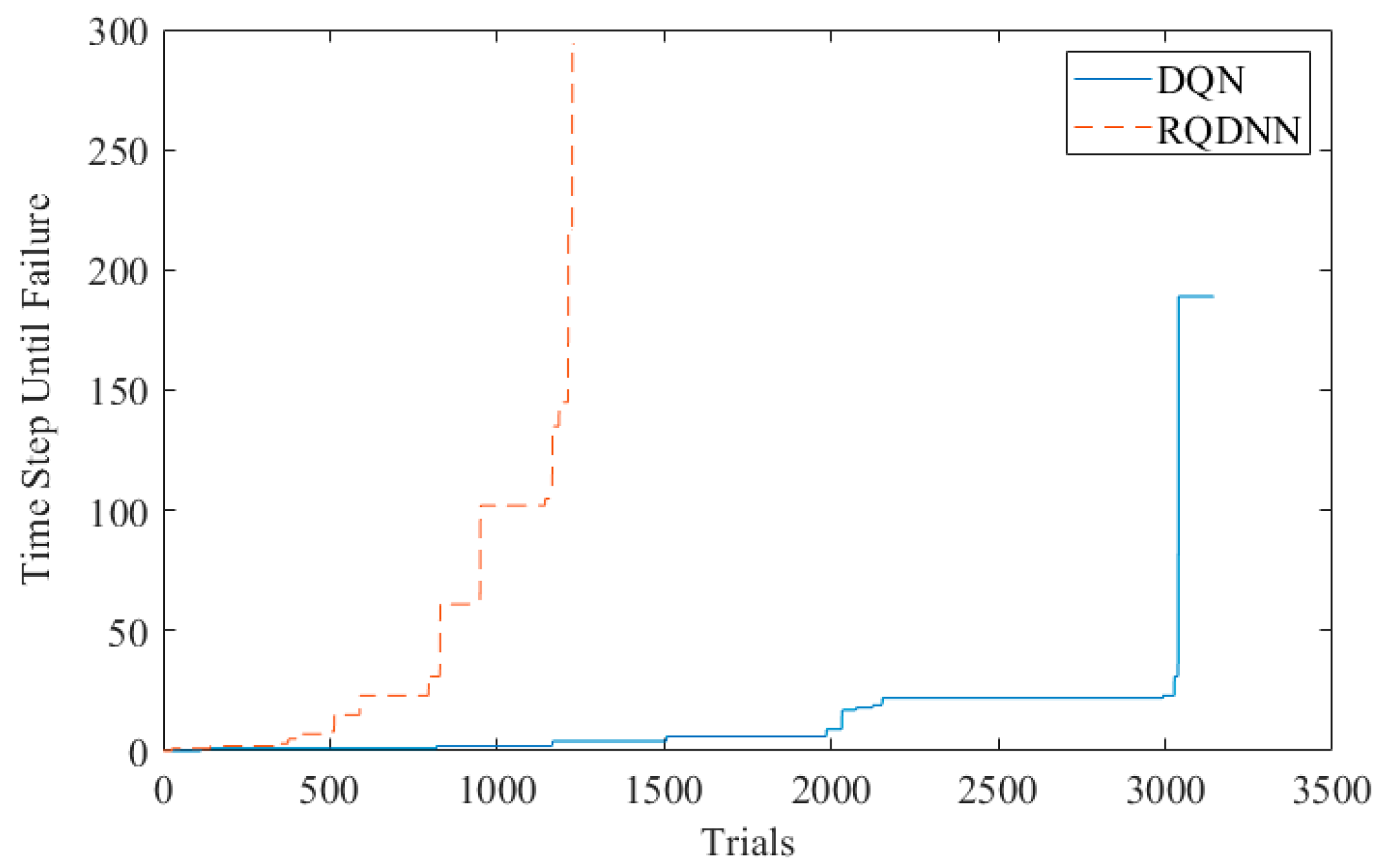

4.3. The Atari Breakout Game

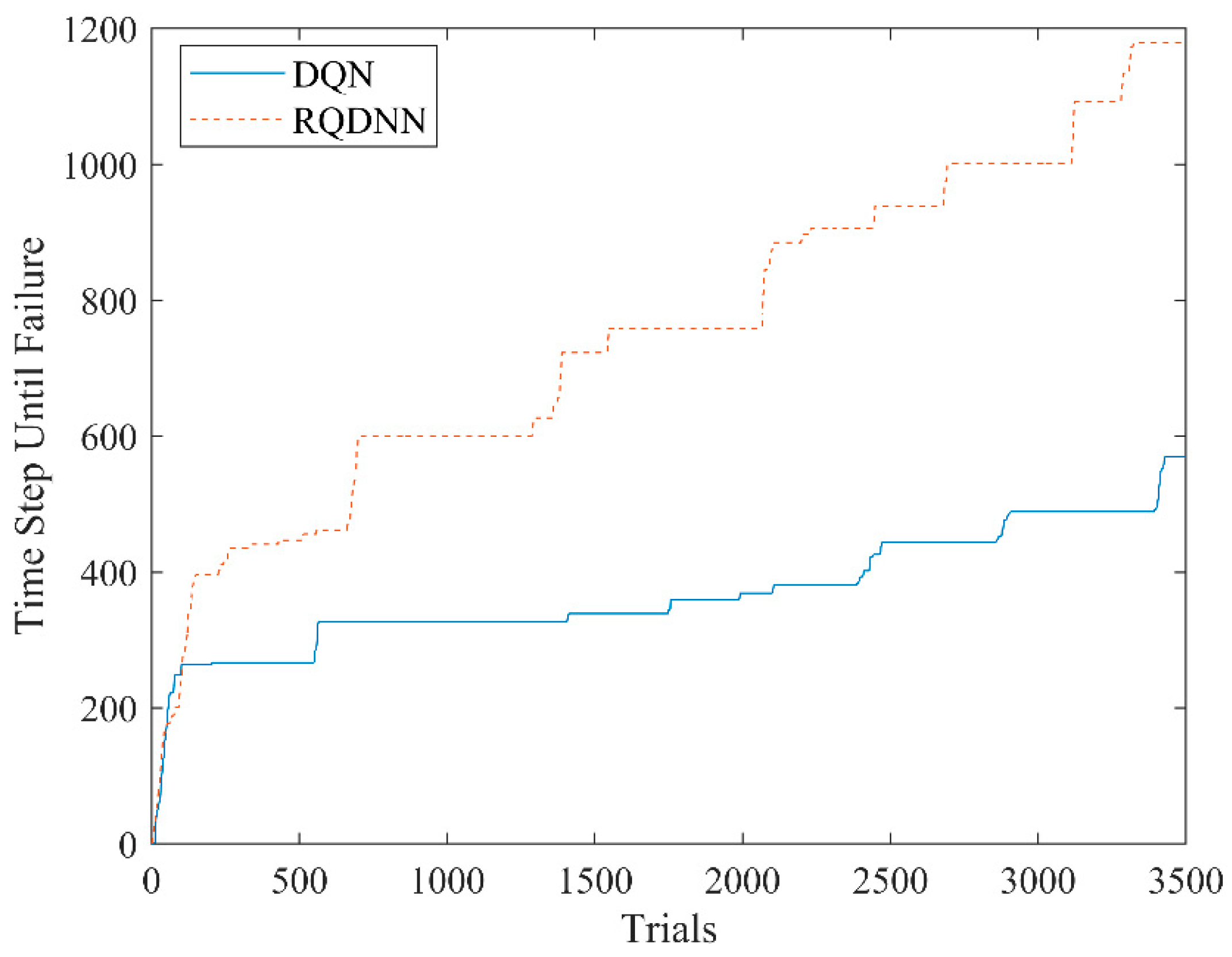

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Togelius, J.; Karakovskiy, S.; Baumgarten, R. The 2009 Mario AI Competition. In Proceedings of the IEEE Congress on Evolutionary Computation, Barcelona, Spain, 18–23 July 2010.
2. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M.A. Playing Atari with Deep Reinforcement Learning. arXiv 2013, arXiv:1312.5602.
3. Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.A.; Fidjeland, A.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.
4. Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized Experience Replay. arXiv 2016, arXiv:1511.05952.
5. van Hasselt, H.; Guez, A.; Silver, D. Deep reinforcement learning with double Q-Learning. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence (AAAI’16), Phoenix, AZ, USA, 12–17 February 2016.
6. Wang, Z.; Schaul, T.; Hessel, M.; van Hasselt, H.; Lanctot, M.; de Freitas, N. Dueling Network Architectures for Deep Reinforcement Learning. arXiv 2015, arXiv:1511.06581.
7. Li, D.; Zhao, D.; Zhang, Q.; Chen, Y. Reinforcement Learning and Deep Learning Based Lateral Control for Autonomous Driving. IEEE Comput. Intell. Mag. 2019, 14, 83–98.
8. Martinez, M.; Sitawarin, C.; Finch, K.; Meincke, L. Beyond Grand Theft Auto V for Training, Testing and Enhancing Deep Learning in Self Driving Cars. arXiv 2017, arXiv:1712.01397.
9. Yu, X.; Lv, Z.; Wu, Y.; Sun, X. Neural Network Modeling and Backstepping Control for Quadrotor. In Proceedings of the 2018 Chinese Automation Congress (CAC), Xi’an, China, 30 November–2 December 2018.
10. Kersandt, K.; Muñoz, G.; Barrado, C. Self-training by Reinforcement Learning for Full-autonomous Drones of the Future. In Proceedings of the 2018 IEEE/AIAA 37th Digital Avionics Systems Conference (DASC), London, UK, 23–27 September 2018.
11. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
12. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012.
13. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.
14. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.
15. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

| Q-Table (States \ Actions) | South (0) | North (1) | East (2) | West (3) | Pickup (4) | Dropoff (5) |
|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 328 | −2.30108 | −1.97092 | −2.30357 | −2.20591 | −10.3607 | −8.55830 |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 499 | 9.96984 | 4.02706 | 12.9602 | 29 | 3.32877 | 3.38230 |
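The Q-table above stores one value per state-action pair; its six actions and its states 0 to 499 appear to follow the layout of the Taxi task. A minimal tabular Q-learning sketch follows, assuming ε-greedy exploration, zero-initialized values, and the standard update $Q(s,a) \leftarrow Q(s,a) + \alpha\,[\,r + \gamma \max_{a'} Q(s',a') - Q(s,a)\,]$. The function names and the values of `alpha`, `gamma`, and `epsilon` are hypothetical, not taken from the paper.

```python
import random
from typing import List

# Action labels as listed in the Q-table above.
ACTIONS = ["South", "North", "East", "West", "Pickup", "Dropoff"]


def make_q_table(num_states: int, num_actions: int) -> List[List[float]]:
    """Initialize every Q-value to zero (an assumed, common choice)."""
    return [[0.0] * num_actions for _ in range(num_states)]


def choose_action(q_table: List[List[float]], state: int,
                  epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy: explore with probability epsilon, else take the best action."""
    row = q_table[state]
    if rng.random() < epsilon:
        return rng.randrange(len(row))
    return max(range(len(row)), key=row.__getitem__)


def q_update(q_table: List[List[float]], state: int, action: int, reward: float,
             next_state: int, done: bool, alpha: float = 0.1, gamma: float = 0.9) -> float:
    """Move Q(s, a) a fraction alpha toward r + gamma * max_a' Q(s', a')."""
    target = reward if done else reward + gamma * max(q_table[next_state])
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]


if __name__ == "__main__":
    q = make_q_table(500, len(ACTIONS))
    q[499] = [9.96984, 4.02706, 12.9602, 29.0, 3.32877, 3.38230]  # row from the table
    print(ACTIONS[choose_action(q, 499, epsilon=0.0, rng=random.Random(0))])
    print(q_update(q, 328, 2, -1.0, 499, False, alpha=0.5, gamma=0.9))
```

For example, with ε = 0 the greedy policy in state 499 selects West, the action with the largest value (29) in that row.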

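| Name | Functions | Derivatives |
|---|---|---|
| Sigmoid | $f(x)=\frac{1}{1+e^{-x}}$ | $f'(x)=f(x)\,(1-f(x))$ |
| tanh | $f(x)=\frac{e^{x}-e^{-x}}{e^{x}+e^{-x}}$ | $f'(x)=1-f(x)^{2}$ |
| ReLU | $f(x)=\max(0,x)$ | $f'(x)=\begin{cases}1, & x>0\\ 0, & x\le 0\end{cases}$ |
| Leaky ReLU ($0<\alpha<1$) | $f(x)=\begin{cases}x, & x>0\\ \alpha x, & x\le 0\end{cases}$ | $f'(x)=\begin{cases}1, & x>0\\ \alpha, & x\le 0\end{cases}$ |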
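The activation functions and derivatives in the table translate directly into code; the derivatives are what backpropagation multiplies through each layer. A minimal sketch in plain Python follows, using `math.tanh` for tanh itself. The Leaky ReLU slope `alpha = 0.01` is an assumed default, not a value from the paper, and the sigmoid branches on the sign of its input so that `math.exp` never overflows.

```python
import math


def sigmoid(x: float) -> float:
    # Branch on the sign so math.exp never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def d_sigmoid(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)


def d_tanh(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2


def relu(x: float) -> float:
    return max(0.0, x)


def d_relu(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # derivative at 0 taken as 0 by convention


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    return x if x > 0 else alpha * x


def d_leaky_relu(x: float, alpha: float = 0.01) -> float:
    return 1.0 if x > 0 else alpha


if __name__ == "__main__":
    for x in (-2.0, 0.0, 2.0):
        print(x, sigmoid(x), math.tanh(x), relu(x), leaky_relu(x))
```

Unlike ReLU, whose gradient is zero for every negative input, Leaky ReLU keeps a small gradient `alpha` there, so units that receive only negative inputs can still be updated.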

| Network | 1st-Layer Convolution Dimension | 2nd-Layer Convolution Dimension | 3rd-Layer Convolution Dimension | 4th-Layer Convolution Dimension | Testing Error Rate | Computation Time per Image (s) |
|---|---|---|---|---|---|---|
| Network 1 | 8 | – | – | – | 5.5% | 0.12 |
| Network 2 | 8 | 4 | – | – | 4.1% | 0.17 |
| Network 3 | 8 | 4 | 4 | – | 4.6% | 0.22 |
| Network 4 | 8 | 4 | 4 | 4 | 4.8% | 0.34 |

| | RQDNN | DQN [2] |
|---|---|---|
| CPU | Intel Xeon E3-1225 | Intel Xeon E3-1225 |
| GPU | None | GTX 1080 Ti |
| Training time | 2 h | 5 h |

| Method | Min | Max | Mean |
|---|---|---|---|
| Proposed method | 223 | 287 | 254.2 |
| V. Mnih et al. [2] | 176 | 234 | 213.4 |
| Human player | 10 | 41 | 21.6 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, C.-J.; Jhang, J.-Y.; Lin, H.-Y.; Lee, C.-L.; Young, K.-Y. Using a Reinforcement Q-Learning-Based Deep Neural Network for Playing Video Games. Electronics 2019, 8, 1128. https://doi.org/10.3390/electronics8101128
