A Spiking Neural Network Based on the Model of VO2–Neuron

Abstract

1. Introduction

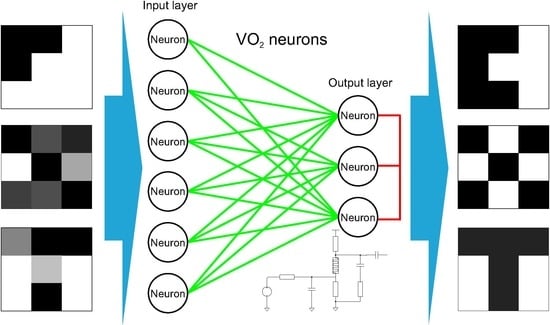

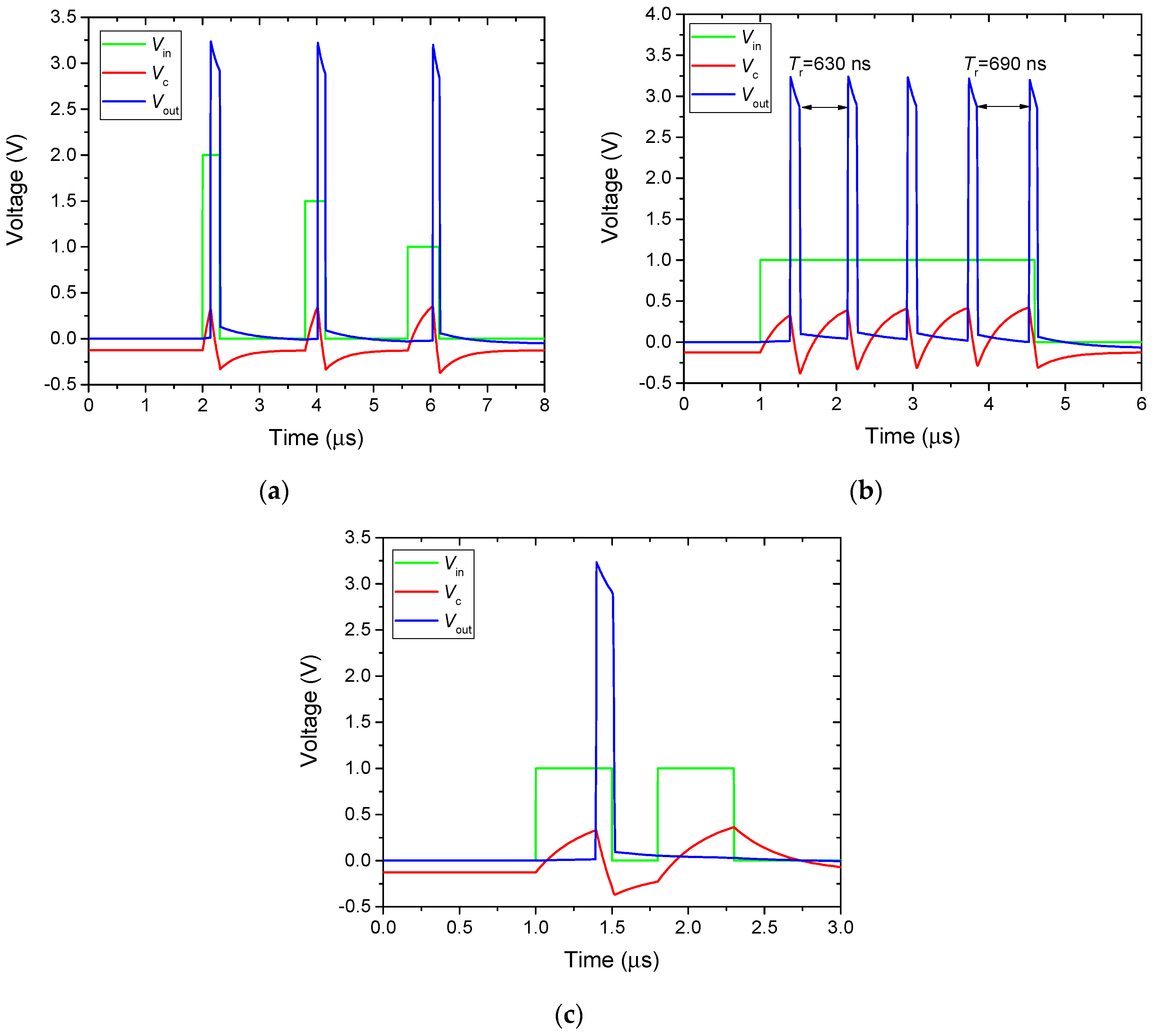

2. SNN Modeling Method

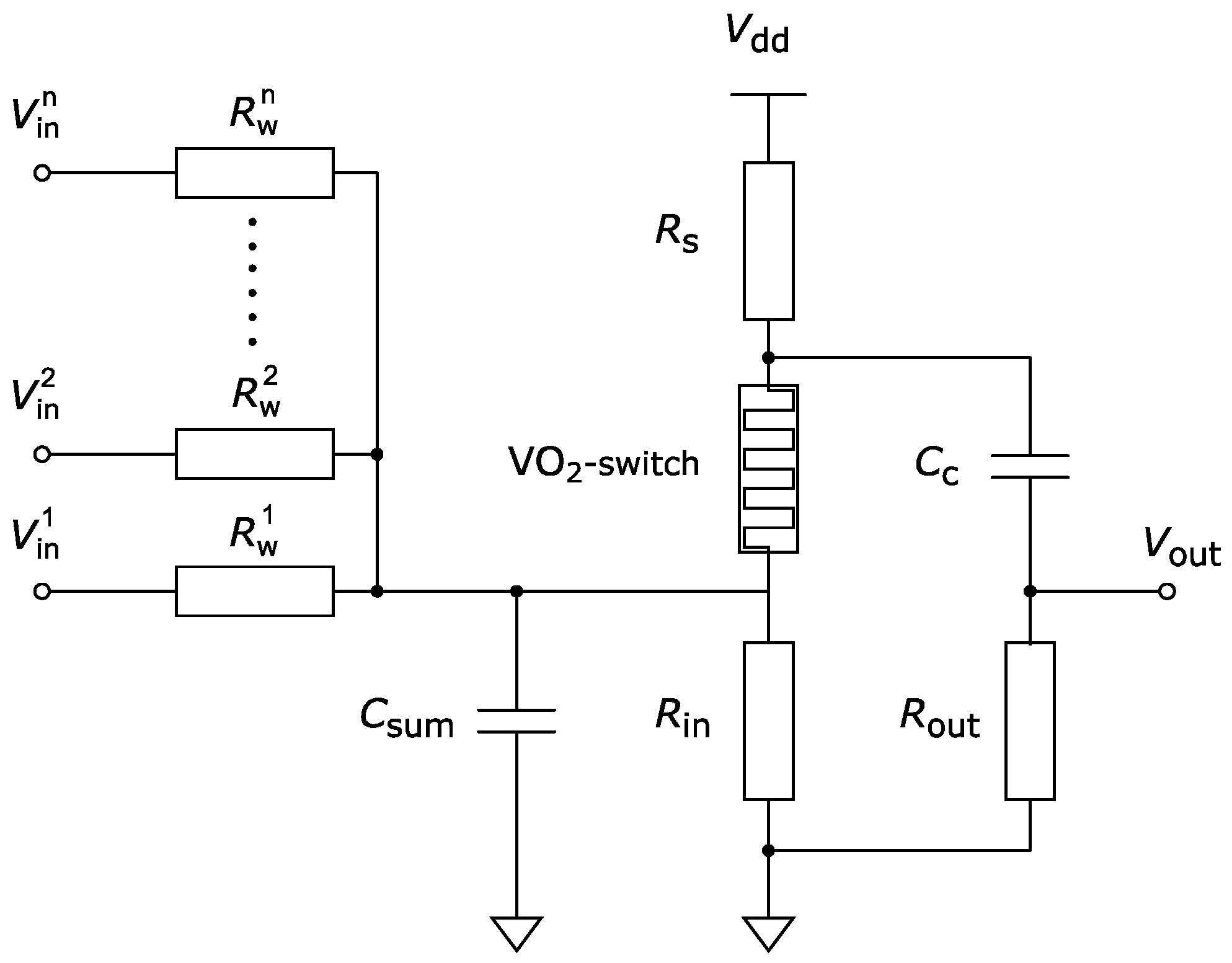

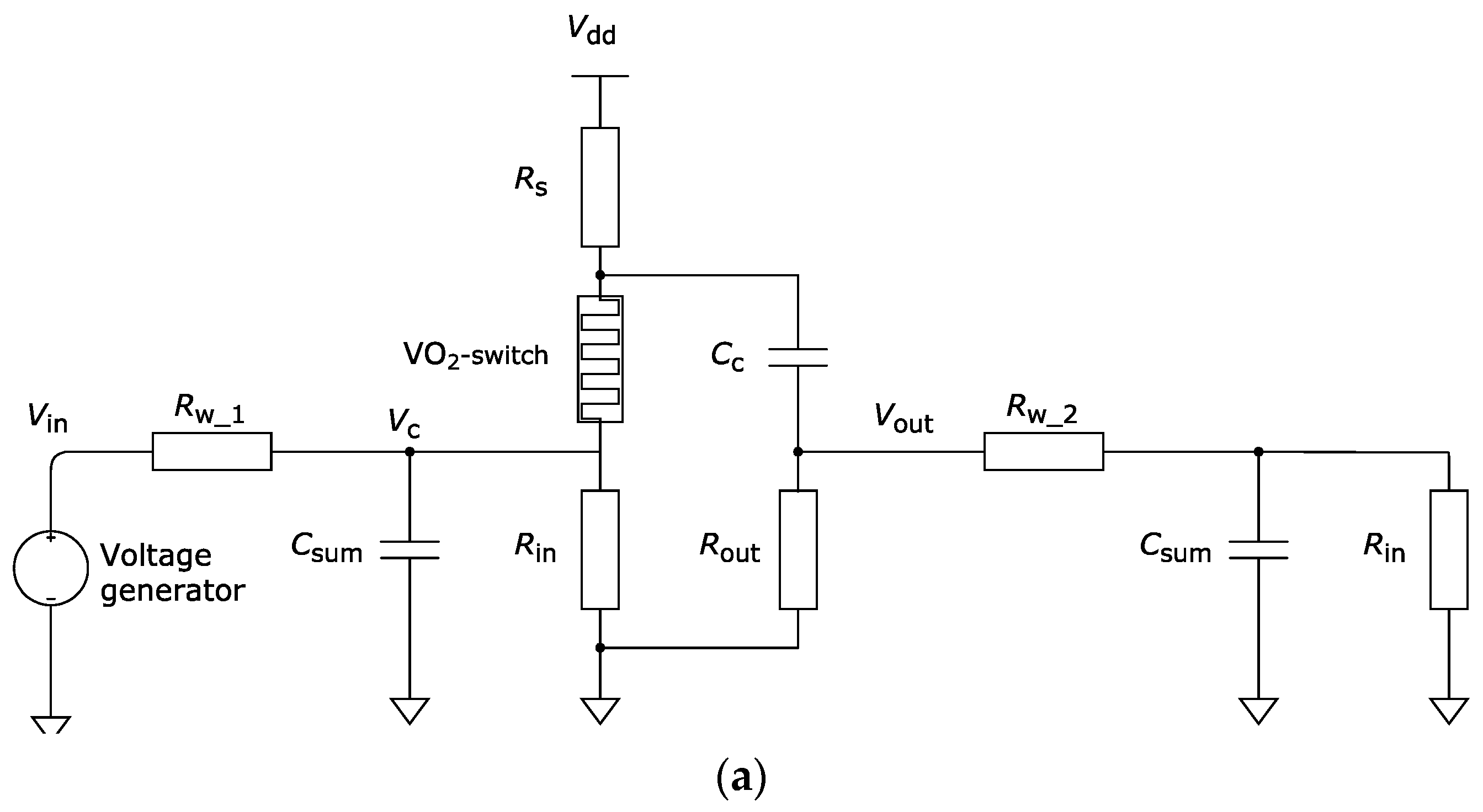

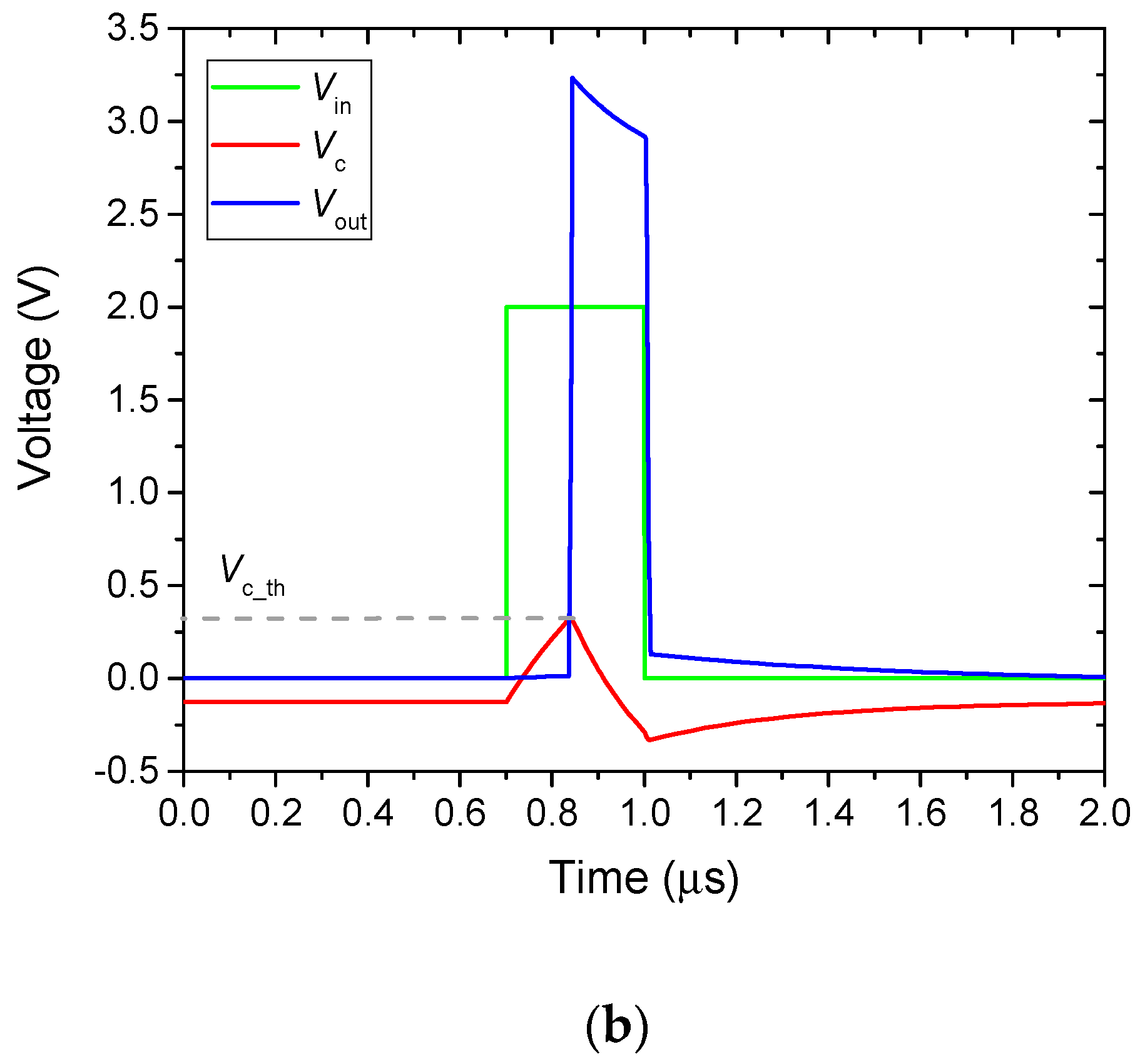

2.1. VO2 Neuron Model

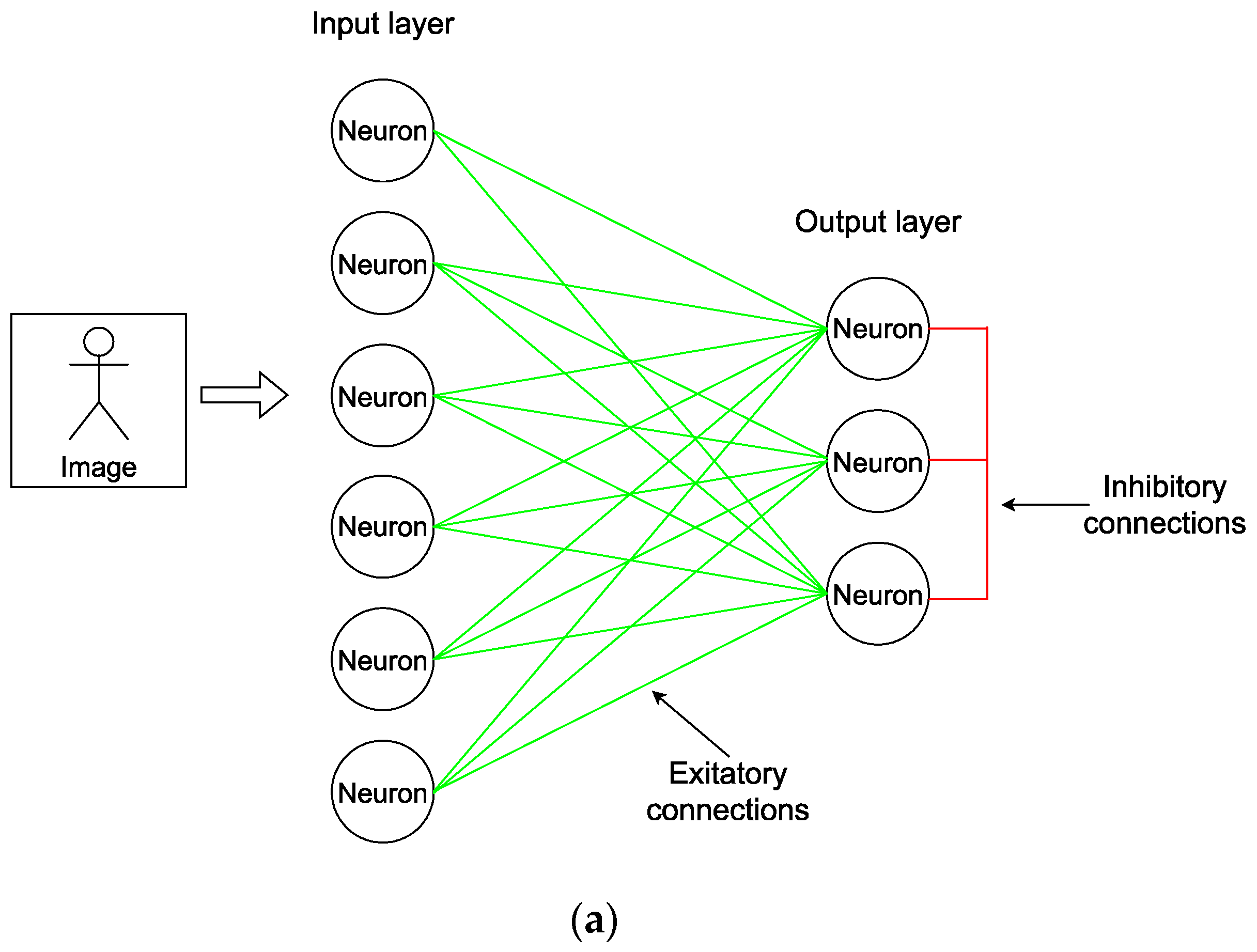
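The comparison table at the end of the article describes the VO2 neuron as leaky integrate-and-fire. A capacitor charges, and the VO2 element switches when the voltage reaches Vth ≈ 5.6 V. The sketch below models that mechanism in a generic way. Only Vth comes from the table. The capacitance, leak resistance and reset voltage are hypothetical placeholders, and the reset is assumed to be instantaneous.

```python
"""Hedged leaky integrate-and-fire (LIF) sketch of a VO2-style neuron.

Only V_TH (~5.6 V) comes from the comparison table; every other
parameter below is a hypothetical placeholder, not a fitted value.
"""

V_TH = 5.6        # VO2 switching threshold, V (from the comparison table)
V_RESET = 1.0     # hypothetical voltage left on the capacitor after discharge, V
C = 1e-9          # hypothetical integrating capacitance, F
R_LEAK = 10e3     # hypothetical leak resistance, ohm
DT = 1e-8         # forward-Euler time step, s


def simulate_lif(i_in, t_end):
    """Return spike times (s) for a constant input current i_in (A).

    The capacitor voltage follows C dV/dt = i_in - V / R_LEAK.
    When V reaches V_TH the VO2 element is treated as switching on,
    which instantaneously discharges the capacitor to V_RESET.
    """
    v = 0.0
    spikes = []
    steps = int(round(t_end / DT))
    for k in range(steps):
        v += DT * (i_in - v / R_LEAK) / C   # charging current minus leak
        if v >= V_TH:                       # threshold crossing -> spike
            spikes.append((k + 1) * DT)
            v = V_RESET                     # idealized instantaneous reset
    return spikes
```

With these placeholder values the time constant is R_LEAK·C = 10 μs. A 1 mA input drives the steady-state voltage toward 10 V and first fires after about τ·ln(10/4.4) ≈ 8.2 μs. A 0.5 mA input (steady state 5 V, below Vth) never fires. The sketch does not model the finite spike duration (about 500 ns in the table) or the circuit details of the actual device.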

2.2. SNN Architecture

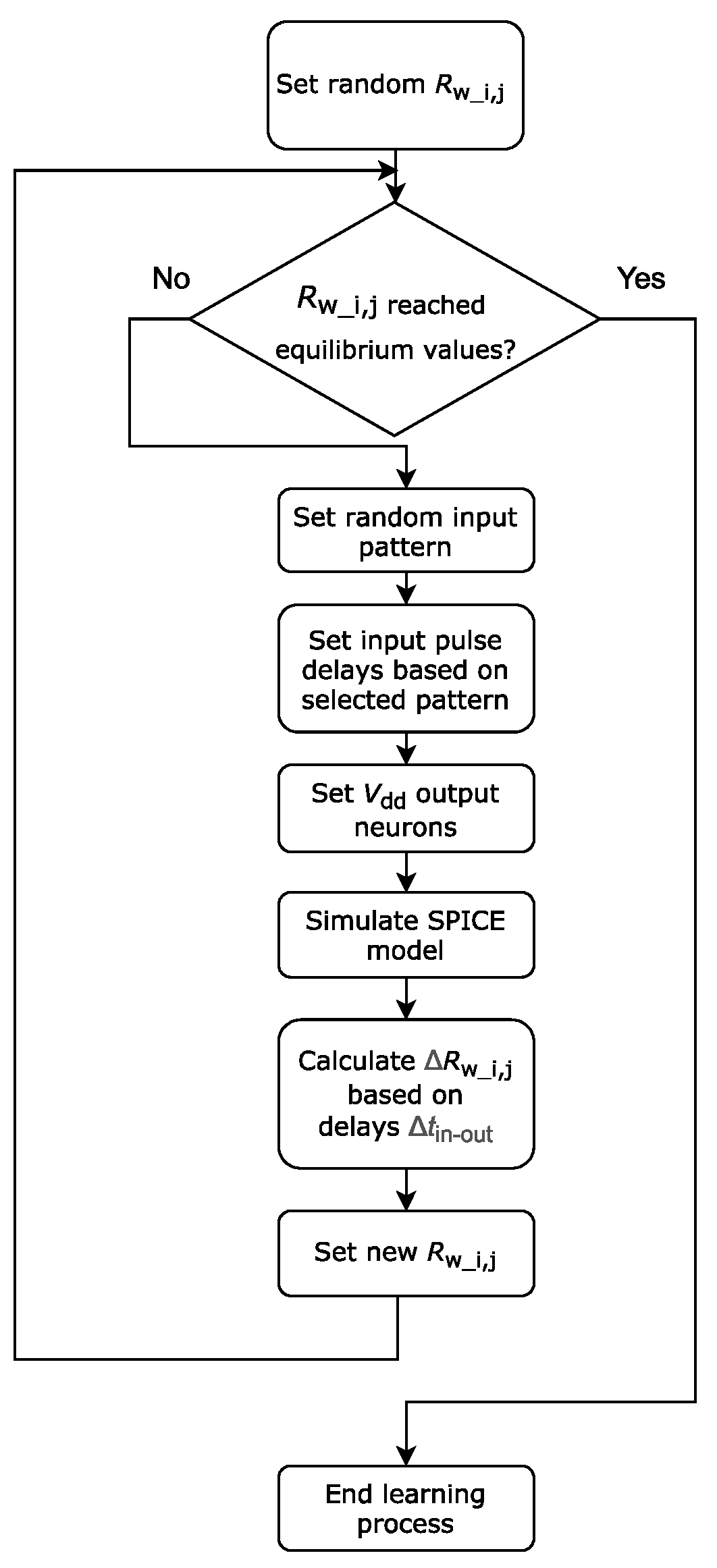

2.3. SNN Training

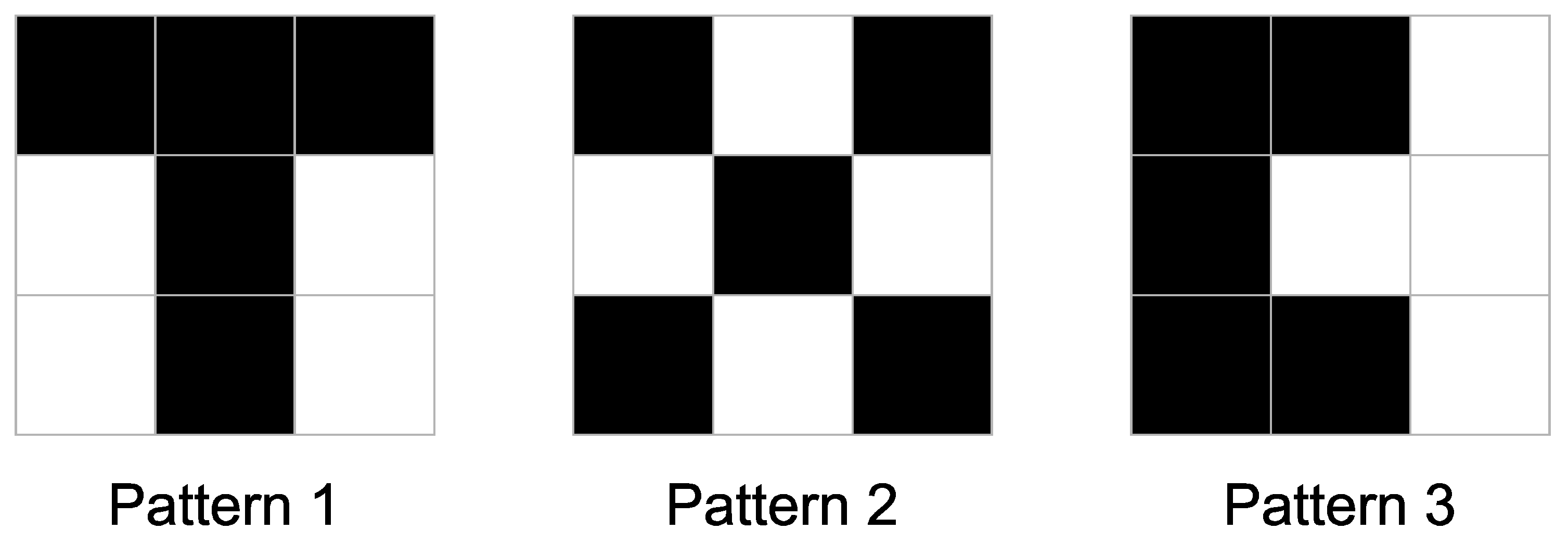

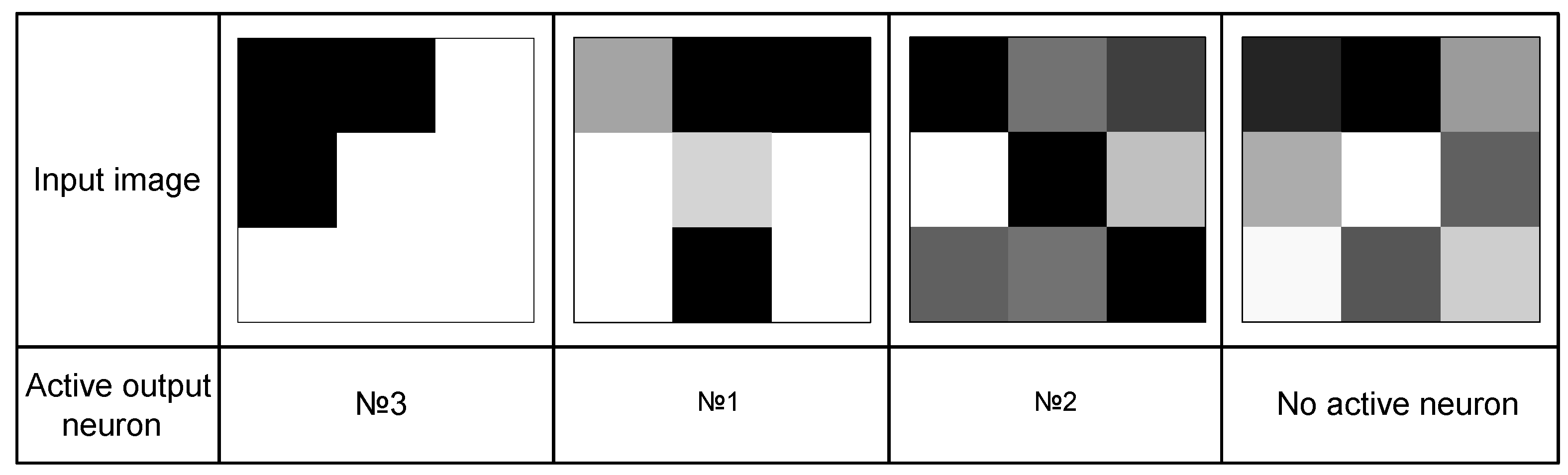

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Min, E.; Guo, X.; Liu, Q.; Zhang, G.; Cui, J.; Long, J. A Survey of Clustering with Deep Learning: From the Perspective of Network Architecture. IEEE Access 2018, 6, 39501–39514.
2. Cireşan, D.; Meier, U.; Schmidhuber, J. Multi-column deep neural networks for image classification. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3642–3649.
3. Abdel-Hamid, O.; Mohamed, A.-R.; Jiang, H.; Deng, L.; Penn, G.; Yu, D. Convolutional Neural Networks for Speech Recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1533–1545.
4. Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1110–1118.
5. Patterson, J.; Gibson, A. Deep Learning: A Practitioner’s Approach; O’Reilly: Sebastopol, CA, USA, 2017; ISBN 9781491924570.
6. Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.
7. Maass, W. Networks of spiking neurons: The third generation of neural network models. Neural Netw. 1997, 10, 1659–1671.
8. Ghosh-Dastidar, S.; Adeli, H. Spiking Neural Networks. Int. J. Neural Syst. 2009, 19, 295–308.
9. Bonabi, S.Y.; Asgharian, H.; Safari, S.; Ahmadabadi, M.N. FPGA implementation of a biological neural network based on the Hodgkin-Huxley neuron model. Front. Neurosci. 2014, 8, 379.
10. Cheung, K.; Schultz, S.R.; Luk, W. A Large-Scale Spiking Neural Network Accelerator for FPGA Systems; Springer Science and Business Media LLC: Berlin, Germany, 2012; Volume 7552, pp. 113–120.
11. Beyeler, M.; Dutt, N.D.; Krichmar, J.L. Categorization and decision-making in a neurobiologically plausible spiking network using a STDP-like learning rule. Neural Netw. 2013, 48, 109–124.
12. Diehl, P.U.; Cook, M. Unsupervised learning of digit recognition using spike-timing-dependent plasticity. Front. Comput. Neurosci. 2015, 9, 99.
13. Beyeler, M.; Oros, N.; Dutt, N.; Krichmar, J.L. A GPU-accelerated cortical neural network model for visually guided robot navigation. Neural Netw. 2015, 72, 75–87.
14. Tavanaei, A.; Ghodrati, M.; Kheradpisheh, S.R.; Masquelier, T.; Maida, A. Deep learning in spiking neural networks. Neural Netw. 2019, 111, 47–63.
15. Jeong, D.S.; Kim, K.M.; Kim, S.; Choi, B.J.; Hwang, C.S. Memristors for Energy-Efficient New Computing Paradigms. Adv. Electron. Mater. 2016, 2, 1600090.
16. Jeong, H.; Shi, L. Memristor devices for neural networks. J. Phys. D Appl. Phys. 2019, 52, 023003.
17. Srinivasan, G.; Sengupta, A.; Roy, K. Magnetic Tunnel Junction Based Long-Term Short-Term Stochastic Synapse for a Spiking Neural Network with On-Chip STDP Learning. Sci. Rep. 2016, 6, 29545.
18. Kim, H.; Hwang, S.; Park, J.; Park, B.-G. Silicon synaptic transistor for hardware-based spiking neural network and neuromorphic system. Nanotechnology 2017, 28, 405202.
19. Wang, Z.; Joshi, S.; Savel’ev, S.; Song, W.; Midya, R.; Li, Y.; Rao, M.; Yan, P.; Asapu, S.; Zhuo, Y.; et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 2018, 1, 137.
20. Wang, Z.; Crafton, B.; Gomez, J.; Xu, R.; Luo, A.; Krivokapic, Z.; Martin, L.; Datta, S.; Raychowdhury, A.; Khan, A.I. Experimental Demonstration of Ferroelectric Spiking Neurons for Unsupervised Clustering. In Proceedings of the 2018 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 1–5 December 2018; pp. 13.3.1–13.3.4.
21. Zhou, E.; Fang, L.; Yang, B. Memristive Spiking Neural Networks Trained with Unsupervised STDP. Electronics 2018, 7, 396.
22. Jerry, M.; Tsai, W.-Y.; Xie, B.; Li, X.; Narayanan, V.; Raychowdhury, A.; Datta, S. Phase transition oxide neuron for spiking neural networks. In Proceedings of the 2016 74th Annual Device Research Conference (DRC), Newark, DE, USA, 19–22 June 2016; pp. 1–2.
23. Jeong, D.S.; Thomas, R.; Katiyar, R.S.; Scott, J.F.; Kohlstedt, H.; Petraru, A.; Hwang, C.S. Emerging memories: Resistive switching mechanisms and current status. Rep. Prog. Phys. 2012, 75, 076502.
24. Li, Y.; Wang, Z.; Midya, R.M.; Xia, Q.; Yang, J.J. Review of memristor devices in neuromorphic computing: Materials sciences and device challenges. J. Phys. D Appl. Phys. 2018, 51, 503002.
25. Pergament, A.; Velichko, A.; Belyaev, M.; Putrolaynen, V. Electrical switching and oscillations in vanadium dioxide. Phys. B Condens. Matter 2018, 536, 239–248.
26. Crunteanu, A.; Givernaud, J.; Leroy, J.; Mardivirin, D.; Champeaux, C.; Orlianges, J.-C.; Catherinot, A.; Blondy, P. Voltage- and current-activated metal–insulator transition in VO2-based electrical switches: A lifetime operation analysis. Sci. Technol. Adv. Mater. 2010, 11, 065002.
27. Belyaev, M.A.; Boriskov, P.P.; Velichko, A.A.; Pergament, A.L.; Putrolainen, V.V.; Ryabokon’, D.V.; Stefanovich, G.B.; Sysun, V.I.; Khanin, S.D. Switching Channel Development Dynamics in Planar Structures on the Basis of Vanadium Dioxide. Phys. Solid State 2018, 60, 447–456.
28. Yi, W.; Tsang, K.K.; Lam, S.K.; Bai, X.; Crowell, J.A.; Flores, E.A. Biological plausibility and stochasticity in scalable VO2 active memristor neurons. Nat. Commun. 2018, 9, 4661.
29. Ignatov, M.; Ziegler, M.; Hansen, M.; Petraru, A.; Kohlstedt, H. A memristive spiking neuron with firing rate coding. Front. Neurosci. 2015, 9, 49.
30. Lin, J.; Guha, S.; Ramanathan, S. Vanadium Dioxide Circuits Emulate Neurological Disorders. Front. Neurosci. 2018, 12, 856.
31. Amer, S.; Hasan, M.S.; Adnan, M.M.; Rose, G.S. SPICE Modeling of Insulator Metal Transition: Model of the Critical Temperature. IEEE J. Electron Devices Soc. 2019, 7, 18–25.
32. Lin, J.; Sonde, S.; Chen, C.; Stan, L.; Achari, K.V.L.V.; Ramanathan, S.; Guha, S. Low-voltage artificial neuron using feedback engineered insulator-to-metal-transition devices. In Proceedings of the 2016 IEEE International Electron Devices Meeting (IEDM), San Francisco, CA, USA, 3–7 December 2016; p. 35.
33. Jerry, M.; Parihar, A.; Grisafe, B.; Raychowdhury, A.; Datta, S. Ultra-low power probabilistic IMT neurons for stochastic sampling machines. In Proceedings of the 2017 Symposium on VLSI Technology, Kyoto, Japan, 5–8 June 2017; pp. T186–T187.
34. Parihar, A.; Jerry, M.; Datta, S.; Raychowdhury, A. Stochastic IMT (Insulator-Metal-Transition) Neurons: An Interplay of Thermal and Threshold Noise at Bifurcation. Front. Neurosci. 2018, 12, 210.
35. Boriskov, P.; Velichko, A. Switch Elements with S-Shaped Current-Voltage Characteristic in Models of Neural Oscillators. Electronics 2019, 8, 922.
36. Oster, M.; Douglas, R.; Liu, S.-C. Computation with Spikes in a Winner-Take-All Network. Neural Comput. 2009, 21, 2437–2465.
37. Ponulak, F.; Kasinski, A. Introduction to spiking neural networks: Information processing, learning and applications. Acta Neurobiol. Exp. 2011, 71, 409–433.
38. Serrano-Gotarredona, T.; Masquelier, T.; Prodromakis, T.; Indiveri, G.; Linares-Barranco, B. STDP and STDP variations with memristors for spiking neuromorphic learning systems. Front. Neurosci. 2013, 7, 2.
39. Izhikevich, E. Which Model to Use for Cortical Spiking Neurons? IEEE Trans. Neural Netw. 2004, 15, 1063–1070.
40. Karda, K.; Mouli, C.; Ramanathan, S.; Alam, M.A. A Self-Consistent, Semiclassical Electrothermal Modeling Framework for Mott Devices. IEEE Trans. Electron Devices 2018, 65, 1672–1678.
41. Pergament, A.; Boriskov, P.; Velichko, A.; Kuldin, N. Switching effect and the metal–insulator transition in electric field. J. Phys. Chem. Solids 2010, 71, 874–879.
42. Querlioz, D.; Bichler, O.; Dollfus, P.; Gamrat, C. Immunity to Device Variations in a Spiking Neural Network with Memristive Nanodevices. IEEE Trans. Nanotechnol. 2013, 12, 288–295.
43. Shukla, A.; Kumar, V.; Ganguly, U. A software-equivalent SNN hardware using RRAM-array for asynchronous real-time learning. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 4657–4664.
44. Kwon, M.-W.; Baek, M.-H.; Hwang, S.; Kim, S.; Park, B.-G. Spiking Neural Networks with Unsupervised Learning Based on STDP Using Resistive Synaptic Devices and Analog CMOS Neuron Circuit. J. Nanosci. Nanotechnol. 2018, 18, 6588–6592.
45. Yousefzadeh, A.; Stromatias, E.; Soto, M.; Serrano-Gotarredona, T.; Linares-Barranco, B. On Practical Issues for Stochastic STDP Hardware With 1-bit Synaptic Weights. Front. Neurosci. 2018, 12, 665.
46. Saunders, D.J.; Siegelmann, H.T.; Kozma, R.; Ruszinkó, M. STDP Learning of Image Patches with Convolutional Spiking Neural Networks. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.
47. Bichler, O.; Querlioz, D.; Thorpe, S.J.; Bourgoin, J.-P.; Gamrat, C. Extraction of temporally correlated features from dynamic vision sensors with spike-timing-dependent plasticity. Neural Netw. 2012, 32, 339–348.
48. Tavanaei, A.; Maida, A. BP-STDP: Approximating backpropagation using spike timing dependent plasticity. Neurocomputing 2019, 330, 39–47.
49. Nishitani, Y.; Kaneko, Y.; Ueda, M. Supervised Learning Using Spike-Timing-Dependent Plasticity of Memristive Synapses. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 2999–3008.
50. Lee, C.; Panda, P.; Srinivasan, G.; Roy, K. Training Deep Spiking Convolutional Neural Networks With STDP-Based Unsupervised Pre-training Followed by Supervised Fine-Tuning. Front. Neurosci. 2018, 12, 435.
51. Garcia, C.; Delakis, M. Convolutional face finder: A neural architecture for fast and robust face detection. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1408–1423.
52. Kim, S.; Lin, C.-Y.; Kim, M.-H.; Kim, T.-H.; Kim, H.; Chen, Y.-C.; Chang, Y.-F.; Park, B.-G. Dual Functions of V/SiOx/AlOy/p++Si Device as Selector and Memory. Nanoscale Res. Lett. 2018, 13, 252.
53. Lin, C.-Y.; Chen, P.-H.; Chang, T.-C.; Chang, K.-C.; Zhang, S.-D.; Tsai, T.-M.; Pan, C.-H.; Chen, M.-C.; Su, Y.-T.; Tseng, Y.-T.; et al. Attaining resistive switching characteristics and selector properties by varying forming polarities in a single HfO2-based RRAM device with a vanadium electrode. Nanoscale 2017, 9, 8586–8590.
54. Sun, C.; Yan, L.; Yue, B.; Liu, H.; Gao, Y. The modulation of metal–insulator transition temperature of vanadium dioxide: A density functional theory study. J. Mater. Chem. C 2014, 2, 9283–9293.
55. Brown, B.L.; Nordquist, C.D.; Jordan, T.S.; Wolfley, S.L.; Leonhardt, D.; Edney, C.; Custer, J.A.; Lee, M.; Clem, P.G. Electrical and optical characterization of the metal-insulator transition temperature in Cr-doped VO2 thin films. J. Appl. Phys. 2013, 113, 173704.
56. Pergament, A.; Stefanovich, G.; Malinenko, V.; Velichko, A. Electrical Switching in Thin Film Structures Based on Transition Metal Oxides. Adv. Condens. Matter Phys. 2015, 2015, 654840.
57. Lepage, D.; Chaker, M. Thermodynamics of self-oscillations in VO2 for spiking solid-state neurons. AIP Adv. 2017, 7, 055203.
58. Sakai, J. High-efficiency voltage oscillation in VO2 planer-type junctions with infinite negative differential resistance. J. Appl. Phys. 2008, 103, 103708.
59. Velichko, A.; Belyaev, M.; Putrolaynen, V.; Perminov, V.; Pergament, A. Thermal coupling and effect of subharmonic synchronization in a system of two VO2 based oscillators. Solid-State Electron. 2018, 141, 40–49.
60. Velichko, A.; Belyaev, M.; Putrolaynen, V.; Perminov, V.; Pergament, A. Modeling of thermal coupling in VO2-based oscillatory neural networks. Solid-State Electron. 2018, 139, 8–14.
61. Savchenko, A. Sequential three-way decisions in multi-category image recognition with deep features based on distance factor. Inf. Sci. 2019, 489, 18–36.
62. Cruz-Albrecht, J.M.; Yung, M.W.; Srinivasa, N. Energy-Efficient Neuron, Synapse and STDP Integrated Circuits. IEEE Trans. Biomed. Circuits Syst. 2012, 6, 246–256.
63. Sourikopoulos, I.; Hedayat, S.; Loyez, C.; Danneville, F.; Hoel, V.; Mercier, E.; Cappy, A. A 4-fJ/Spike Artificial Neuron in 65 nm CMOS Technology. Front. Neurosci. 2017, 11, 1597.
64. LeCun, Y.; Cortes, C.; Burges, C. MNIST Handwritten Digit Database. Available online: http://yann.lecun.com/exdb/mnist/ (accessed on 9 November 2018).

| Class of the input image | Vdd of output neuron 1 (V) | Vdd of output neuron 2 (V) | Vdd of output neuron 3 (V) |
|---|---|---|---|
| Pattern 1 | −5.75 | 0 | 0 |
| Pattern 2 | 0 | −5.75 | 0 |
| Pattern 3 | 0 | 0 | −5.75 |
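Read row by row, the table gives exactly one output neuron Vdd = −5.75 V for each input pattern and leaves the other two at 0 V. Output neuron k is therefore paired with pattern k. The comparison table lists time to first spike as this SNN's coding mechanism. The sketch below shows one hypothetical way to decode it, assuming the predicted class is the pattern paired with the earliest-firing output neuron. The names `VDD_TABLE`, `driven_neuron` and `ttfs_readout` are illustrative and do not come from the article.

```python
# Supply voltages Vdd (V) of output neurons 1..3 for each input pattern,
# copied from the table above.
VDD_TABLE = {
    "Pattern 1": (-5.75, 0.0, 0.0),
    "Pattern 2": (0.0, -5.75, 0.0),
    "Pattern 3": (0.0, 0.0, -5.75),
}


def driven_neuron(pattern):
    """Return the 1-based index of the output neuron with a nonzero Vdd."""
    vdd = VDD_TABLE[pattern]
    # Unpacking enforces that exactly one neuron is driven per pattern.
    (index,) = [i + 1 for i, v in enumerate(vdd) if v != 0.0]
    return index


def ttfs_readout(first_spike_times):
    """Hypothetical time-to-first-spike readout.

    first_spike_times[i] is the first spike time of output neuron i + 1,
    or None if that neuron did not fire. Returns the pattern paired with
    the earliest-firing neuron, or None if no neuron fired.
    """
    fired = [(t, i + 1) for i, t in enumerate(first_spike_times) if t is not None]
    if not fired:
        return None
    _, winner = min(fired)  # earliest time wins; ties go to the lower index
    return f"Pattern {winner}"
```

For example, `ttfs_readout([3e-6, 1e-6, None])` returns `"Pattern 2"`. Ties fall to the lower neuron index because `min` compares `(time, index)` tuples. A hardware readout would need its own tie rule.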

| Device | Neuron type; material/platform | Active element size (a); neuron area (Sneuron) | Spike amplitude (Vspike), duration (Δtspike), peak power (Pmax) and energy per spike (Espike) | Integration and threshold mechanism; threshold voltage of the active element (Vth) | Coding mechanism in an object-recognition SNN |
|---|---|---|---|---|---|
| VO2 (current study) | Leaky integrate-and-fire; vanadium dioxide (VO2) | a ~ 3 μm | Vspike = 3.2 V; Δtspike ~ 500 ns; Pmax ~ 37 mW; Espike ~ 18 nJ | Capacitor charging; switching when Vth is reached; Vth(VO2) ~ 5.6 V | Time to first spike |
| Oxide neuron [35] | Piecewise-linear FitzHugh–Nagumo, FitzHugh–Rinzel; vanadium dioxide (VO2), niobium oxide (NbO2) | a ~ 3 μm | Vspike ~ 3.5 V; Δtspike ~ 100 μs; Pmax ~ 72 mW; Espike ~ 7 μJ | Capacitor charging and energy of the inductor's magnetic field; switching when Vth is reached; Vth(VO2) ~ 5.6 V, Vth(NbO2) ~ 0.9 V | - |
| Stochastic VO2 neuron [33] | Integrate-and-fire; vanadium dioxide (VO2) | a ~ 100 nm | Vspike ~ 0.5 V; Δtspike ~ 4 μs; Pmax ~ 12 μW; Espike ~ 50 pJ | Capacitor charging; switching when Vth is reached; Vth(VO2) ~ 1.7 V | Rate coding |
| CMOS neuron [62] | Leaky integrate-and-fire; CMOS | a ~ 90 nm; Sneuron = 442 μm² | Vspike = 0.6 V; Δtspike ~ 3 ms; Espike = 0.4 pJ | Capacitor charging; reset by comparator; Vth ~ 0.6 V | - |
| CMOS neuron [63] | Simplified Morris–Lecar model; CMOS | a ~ 65 nm; Sneuron = 35 μm² | Vspike = 112 mV; Δtspike ~ 18 μs; Espike = 4 fJ | Capacitor charging and discharging through transistors; Vth ~ 112 mV | - |
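Three oxide neurons in the table report a peak power. For these, the listed energy per spike can be cross-checked against the rectangular-pulse estimate Espike ≈ Pmax × Δtspike. This estimate is an upper bound when Δtspike covers the whole spike. The sketch uses only values from the table. The function name `energy_upper_bound` is illustrative.

```python
# (device, peak power Pmax in W, spike duration in s, listed Espike in J),
# taken from the comparison table above (rows that report Pmax only).
ROWS = [
    ("VO2 (current study)", 37e-3, 500e-9, 18e-9),
    ("Oxide neuron [35]", 72e-3, 100e-6, 7e-6),
    ("Stochastic VO2 neuron [33]", 12e-6, 4e-6, 50e-12),
]


def energy_upper_bound(p_max, duration):
    """Rectangular-pulse estimate: integral of P dt <= Pmax * duration."""
    return p_max * duration


for device, p_max, duration, listed in ROWS:
    estimate = energy_upper_bound(p_max, duration)
    print(f"{device}: Pmax*dt = {estimate:.3g} J, "
          f"listed ~{listed:.3g} J, ratio {estimate / listed:.2f}")
```

All three ratios fall within 5% of 1. This agrees with the rounded (~) values in the table. The CMOS rows give no Pmax, so they cannot be checked this way.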

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Belyaev, M.; Velichko, A. A Spiking Neural Network Based on the Model of VO2–Neuron. Electronics 2019, 8, 1065. https://doi.org/10.3390/electronics8101065