RTDNet: Modulation-Conditioned Attention Network for Robust Denoising of LPI Radar Signals

Abstract

1. Introduction

1.1. Related Work

1.2. Motivation and Contributions

1. Incorporating modulation information as prior knowledge enabled the network to tailor denoising to each modulation type with minimal distortion.

2. An attention mechanism that sharpens the distinction between signal and noise enabled effective denoising, and focusing on the relevant signal regions further improved noise removal.

3. The resulting time–frequency images (TFIs) improve the accuracy of the same downstream classifier and yield more precise parameter estimates than existing methods for nearly all parameters across the SNR range.
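The contributions describe injecting modulation information through attention, but the conditioning equations are not reproduced in this section. The NumPy sketch below shows one common way to build modulation-conditioned cross-attention: flattened TFI feature tokens act as queries, and keys and values come from a modulation prior. The per-class prior tokens (`prior_tokens`), the confidence-weighted mixing of those tokens, the projection matrices, and the residual connection are all hypothetical choices for illustration, not the authors' exact design.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def modulation_cross_attention(features, class_probs, prior_tokens, w_q, w_k, w_v):
    """Cross-attention from TFI feature tokens (queries) to a modulation prior (keys/values).

    features:      (N, d) flattened TFI feature tokens
    class_probs:   (C,) recognizer probabilities; a one-hot vector mimics the Oracle
    prior_tokens:  (C, T, d) hypothetical learned tokens, T per modulation class
    w_q, w_k, w_v: (d, d) hypothetical projection matrices
    """
    # Blend each class's prior tokens by the recognizer's confidence -> (T, d)
    context = np.einsum("c,ctd->td", class_probs, prior_tokens)
    q = features @ w_q                       # (N, d)
    k = context @ w_k                        # (T, d)
    v = context @ w_v                        # (T, d)
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product scores, (N, T)
    attn = softmax(scores, axis=-1)          # each feature token attends over the T prior tokens
    return features + attn @ v               # residual: prior information is added, not substituted


rng = np.random.default_rng(0)
N, d, T = 64, 32, 4
C = 13  # Rect, LFM, Costas, Barker, Frank, P1-P4, T1-T4 (waveforms in the parameter table)
features = rng.standard_normal((N, d))
prior_tokens = rng.standard_normal((C, T, d))
w_q, w_k, w_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))

oracle_label = np.eye(C)[4]  # true class supplied directly, as in the Oracle setting
out = modulation_cross_attention(features, oracle_label, prior_tokens, w_q, w_k, w_v)
print(out.shape)  # (64, 32)
```

Passing a one-hot vector mimics the Oracle variant, while passing a recognizer's softmax output corresponds loosely to the ResNet-34 and ResNet-101 variants; returning `features` unchanged instead of calling the function is a rough analogue of the w/o-CA ablation.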

2. Background

2.1. LPI Radar Signal Model

2.2. Choi-Williams Distribution (CWD)

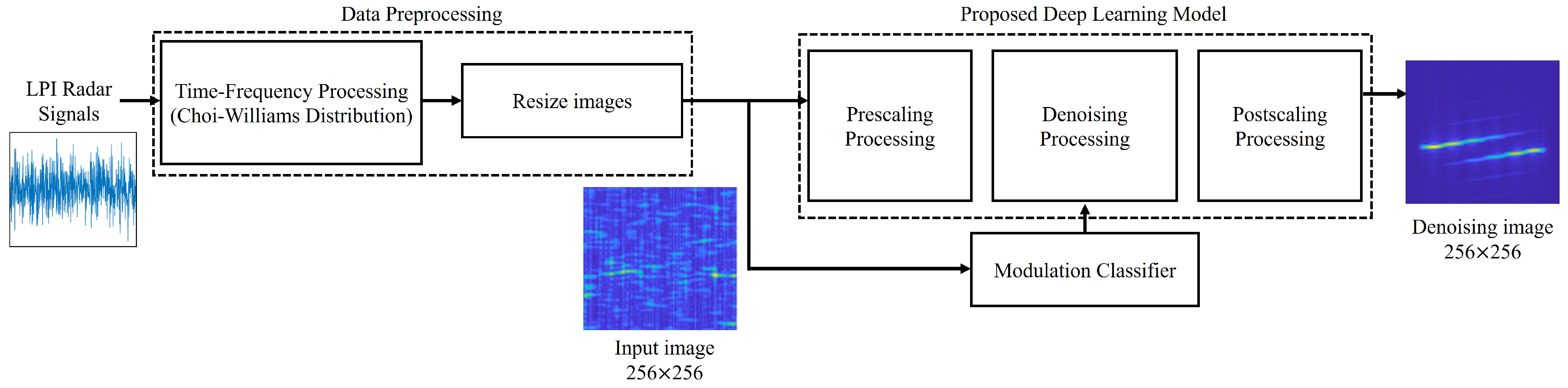

3. Proposed Method

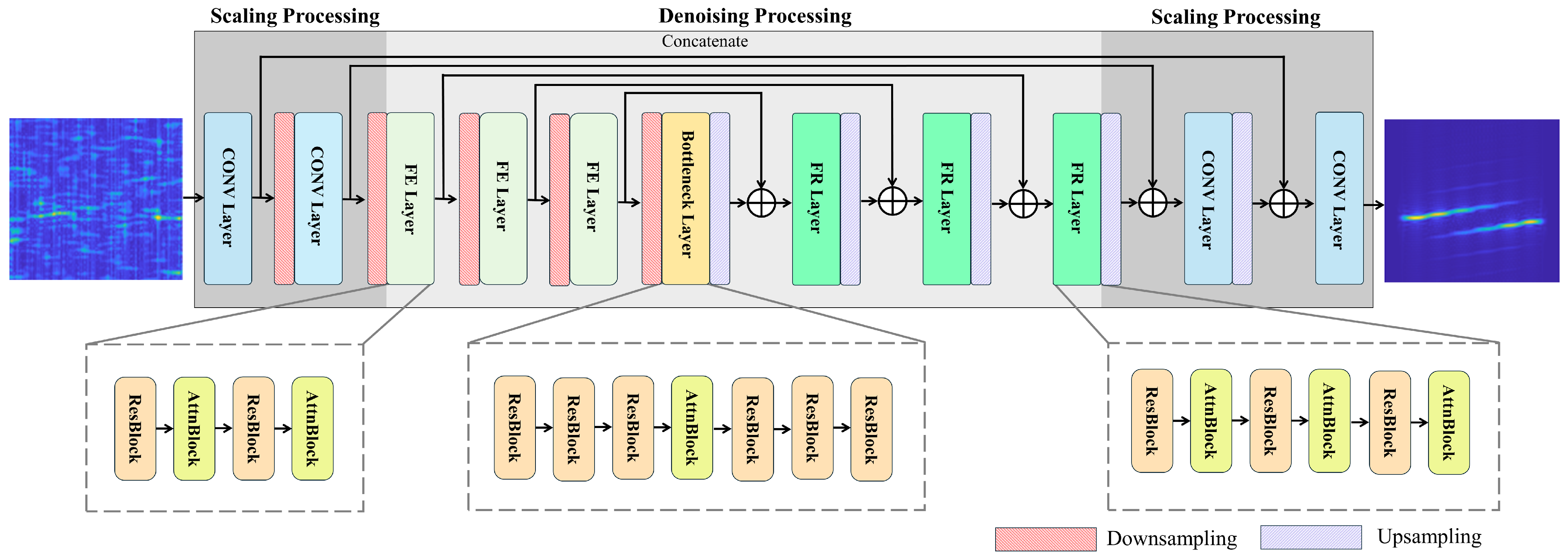

3.1. RTDNet Architecture

3.2. Scaling Processing

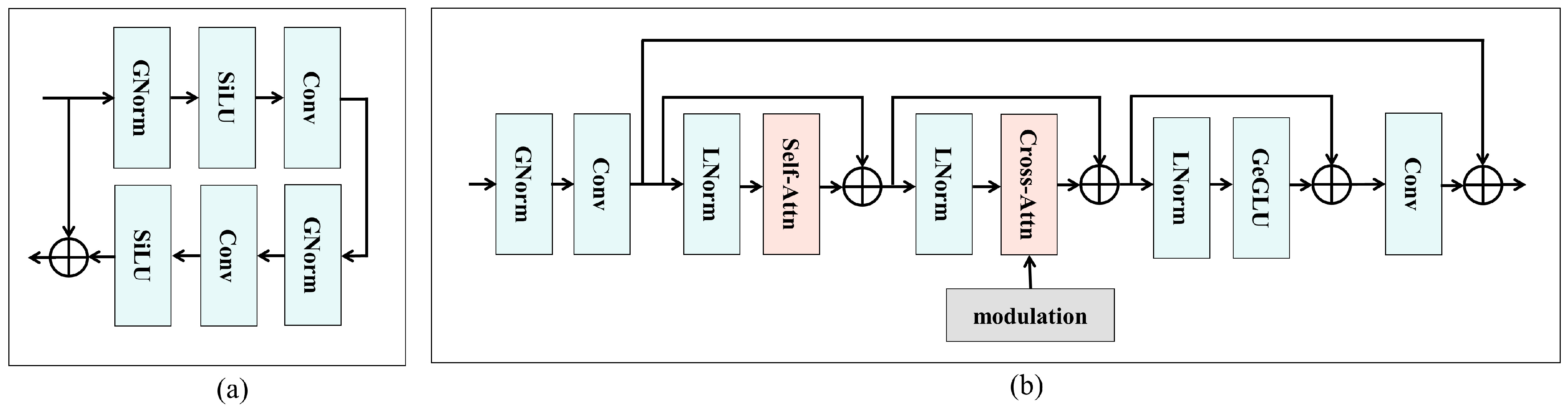

3.3. Denoising Processing

3.4. Attention Mechanism

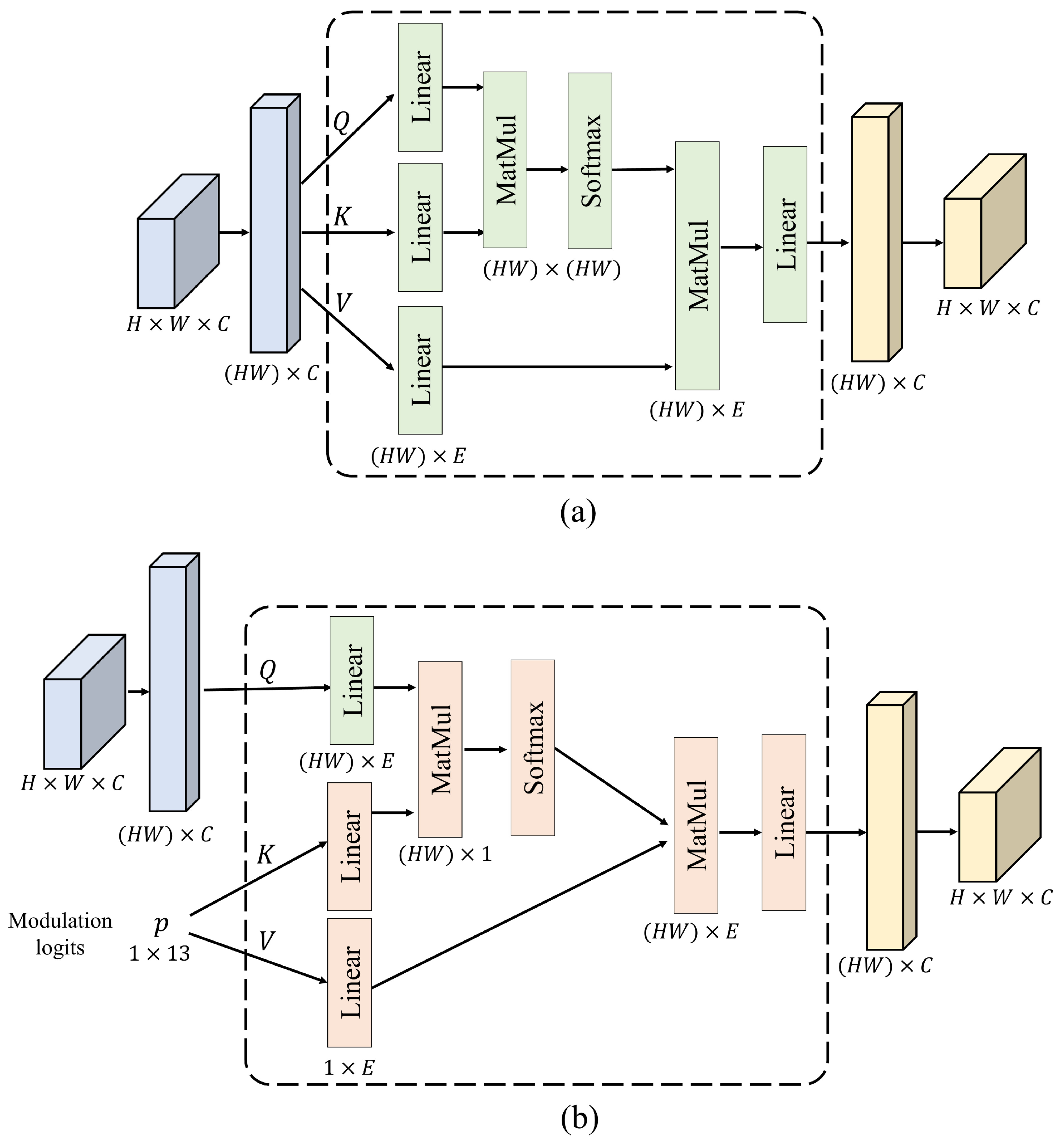

3.4.1. Self-Attention

3.4.2. Modulation-Conditioned Cross-Attention

3.5. Training Objectives

3.5.1. Modulation Recognition Stage

3.5.2. Denoising Stage

4. Simulation Results

4.1. Dataset and Simulation Setup

4.2. Compared Methods

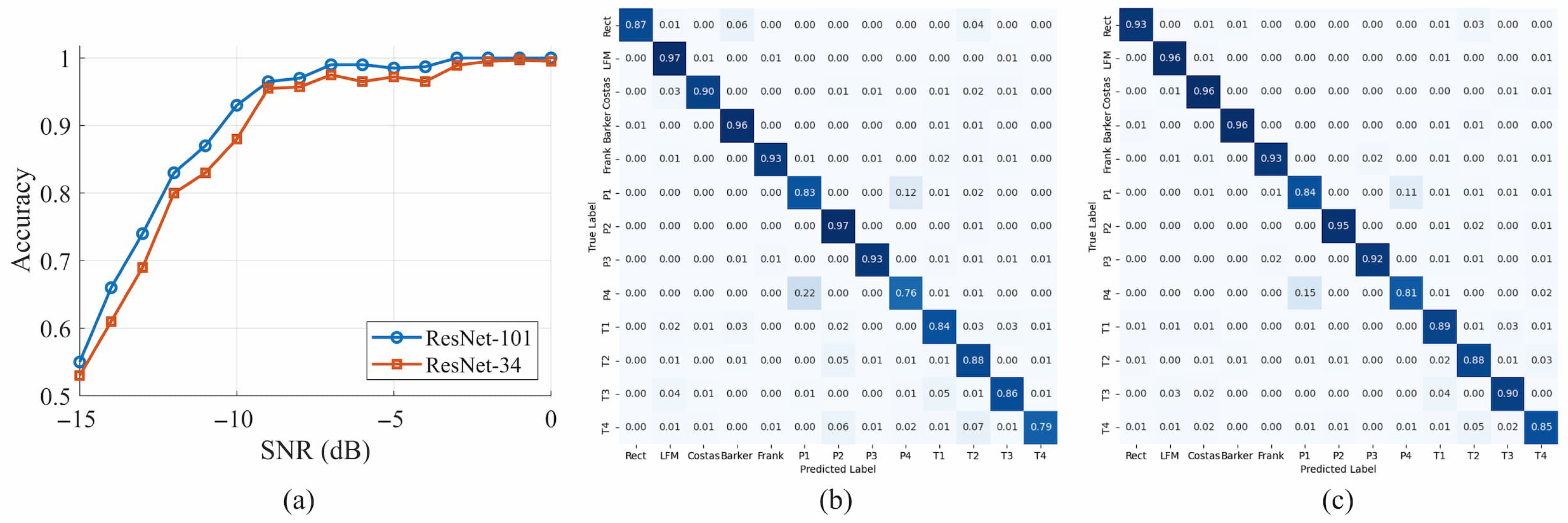

4.3. Modulation Recognition Performance

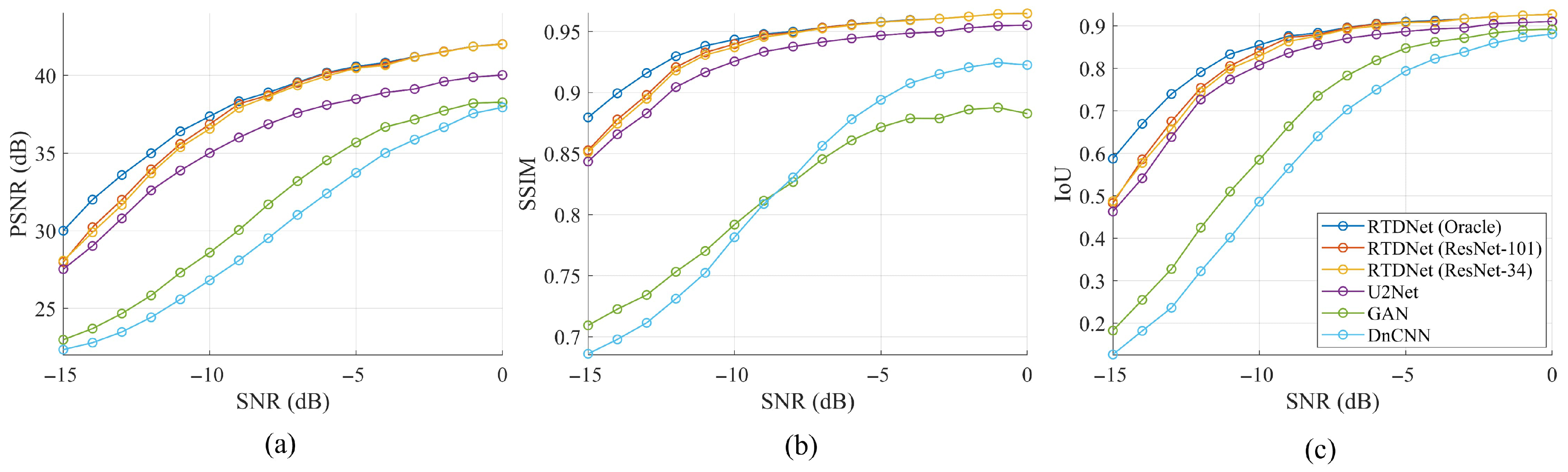

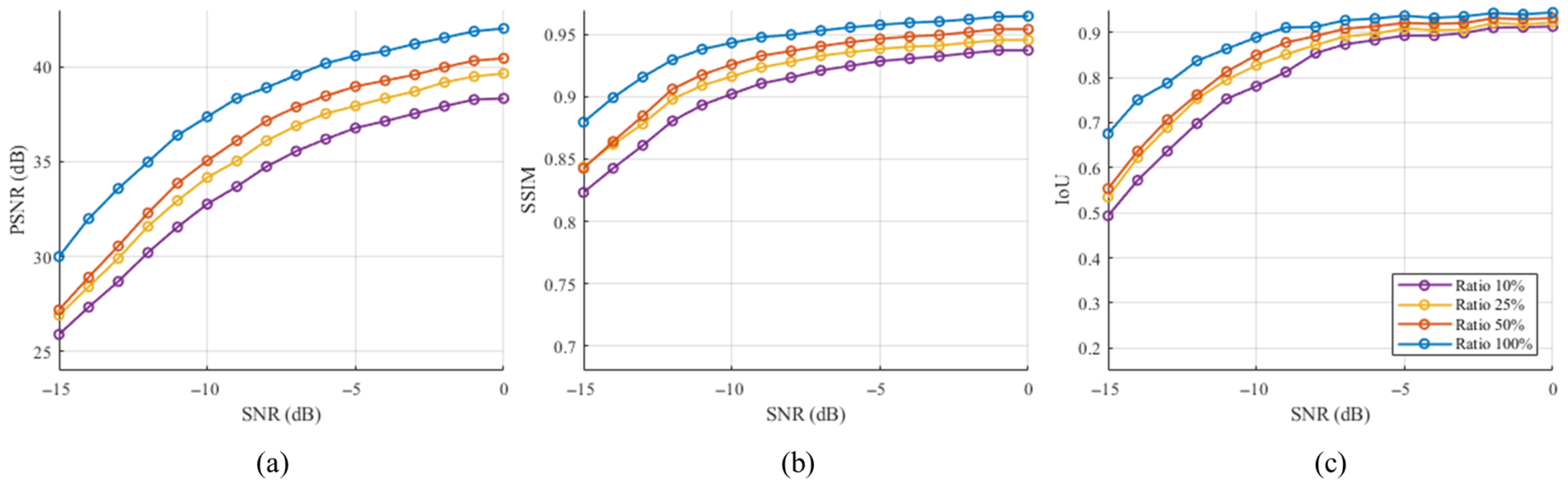

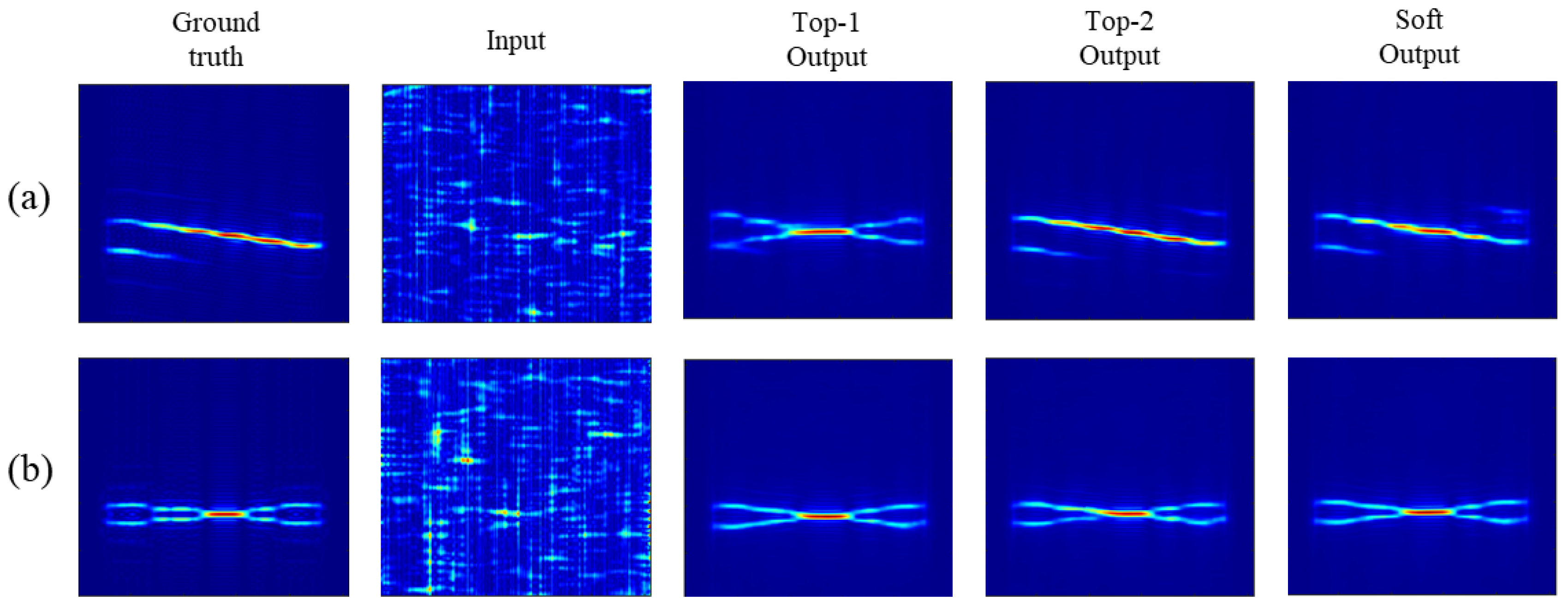

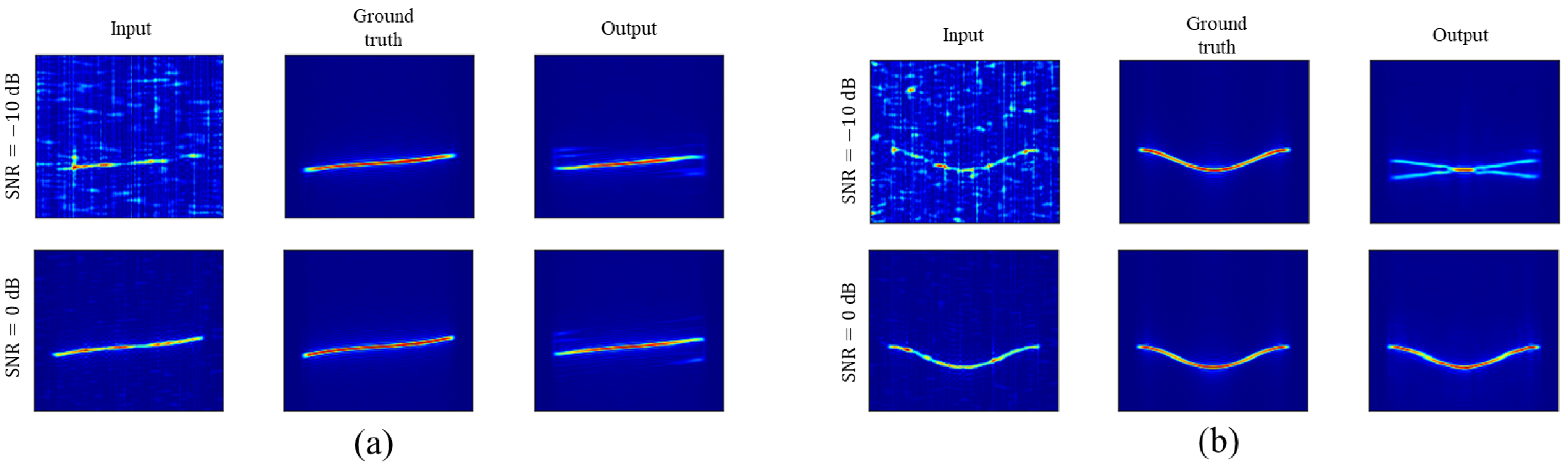

4.4. Denoising Quality

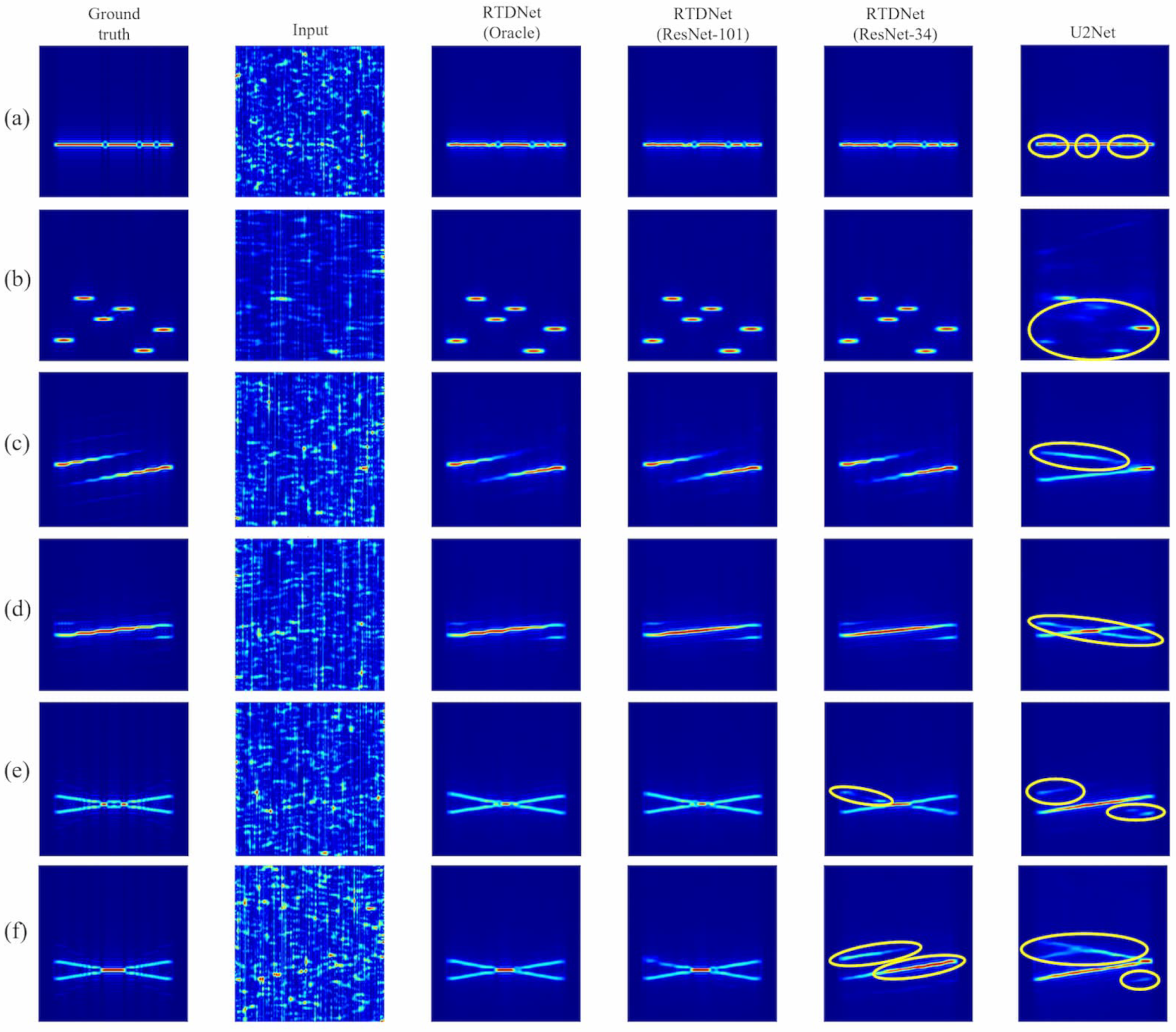

4.5. Qualitative Comparison

- Barker and Costas: When the classifier labels the waveform correctly, RTDNet suppresses noise while keeping the Barker inter-chip nulls and the Costas hopping grid intact.

- Polyphase: The staircase spectrum of the Frank and P1 codes stays sharp, and the noise between the steps is removed. This preservation of the step pattern suggests that the modulation prior helps the network suppress noise that would otherwise blur the staircase edges.

- Polytime: Under heavy noise, U2-Net often confuses these families because their phase patterns resemble those of LFM and other polyphase codes. The shallower ResNet-34 recognizer can misclassify them and produce the wrong pattern (yellow ellipses), whereas the deeper ResNet-101 recognizer rarely does so and closely matches the Oracle output.
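The polyphase bullet refers to the staircase spectrum of Frank codes. The short NumPy sketch below uses the standard Frank code definition, with phase 2π·i·j/M for step index i and chip index j (both 0-based), to show where the staircase comes from: each group of M chips is a single tone, and its frequency rises by 1/M cycles per chip from one group to the next, giving a stepped approximation of an LFM chirp. It assumes one complex baseband sample per chip and no carrier, unlike the simulation setup, which uses several cycles per phase code.

```python
import numpy as np


def frank_code(M):
    """Complex baseband Frank code of length M**2 (one sample per chip).

    Standard definition: phi[i, j] = 2*pi*i*j / M with 0-based step index i
    and chip index j; chips are ordered step by step.
    """
    i = np.arange(M)[:, None]  # frequency-step index
    j = np.arange(M)[None, :]  # chip index inside a step
    return np.exp(1j * 2 * np.pi * i * j / M).ravel()


def step_frequencies(code, M):
    """Dominant M-point FFT bin of each step (bin k means k/M cycles per chip)."""
    steps = code.reshape(M, M)
    return np.argmax(np.abs(np.fft.fft(steps, axis=1)), axis=1)


M = 6  # one of the Frank frequency-step counts in the parameter table
code = frank_code(M)
print(len(code), step_frequencies(code, M))  # 36 [0 1 2 3 4 5]
```

The monotonically increasing bin index per step is the staircase that appears in the TFI; noise that fills the gaps between these steps is what blurs the staircase edges.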

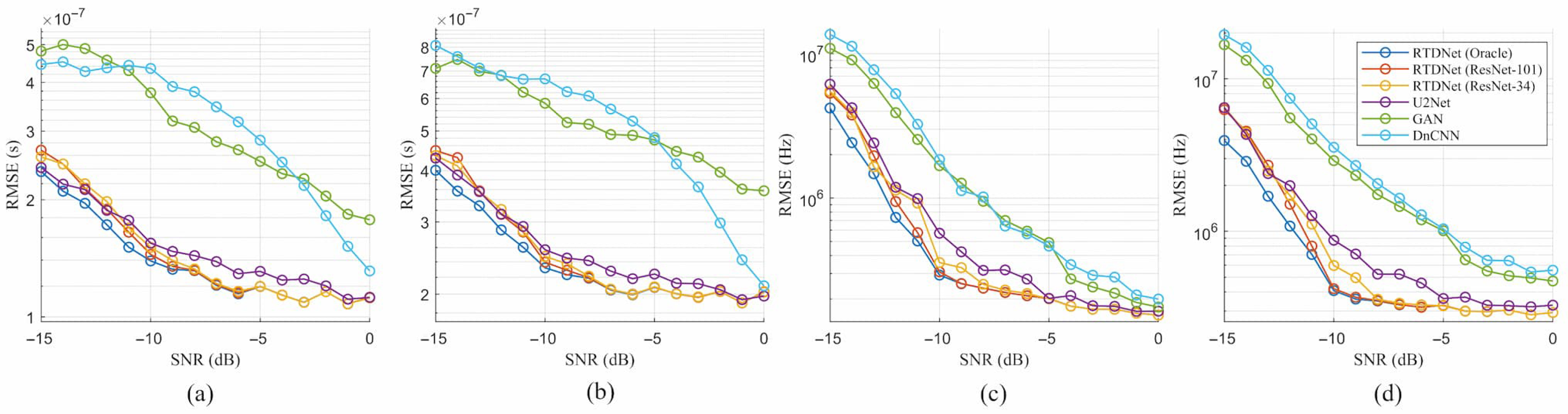

4.6. Parameter Estimation Accuracy

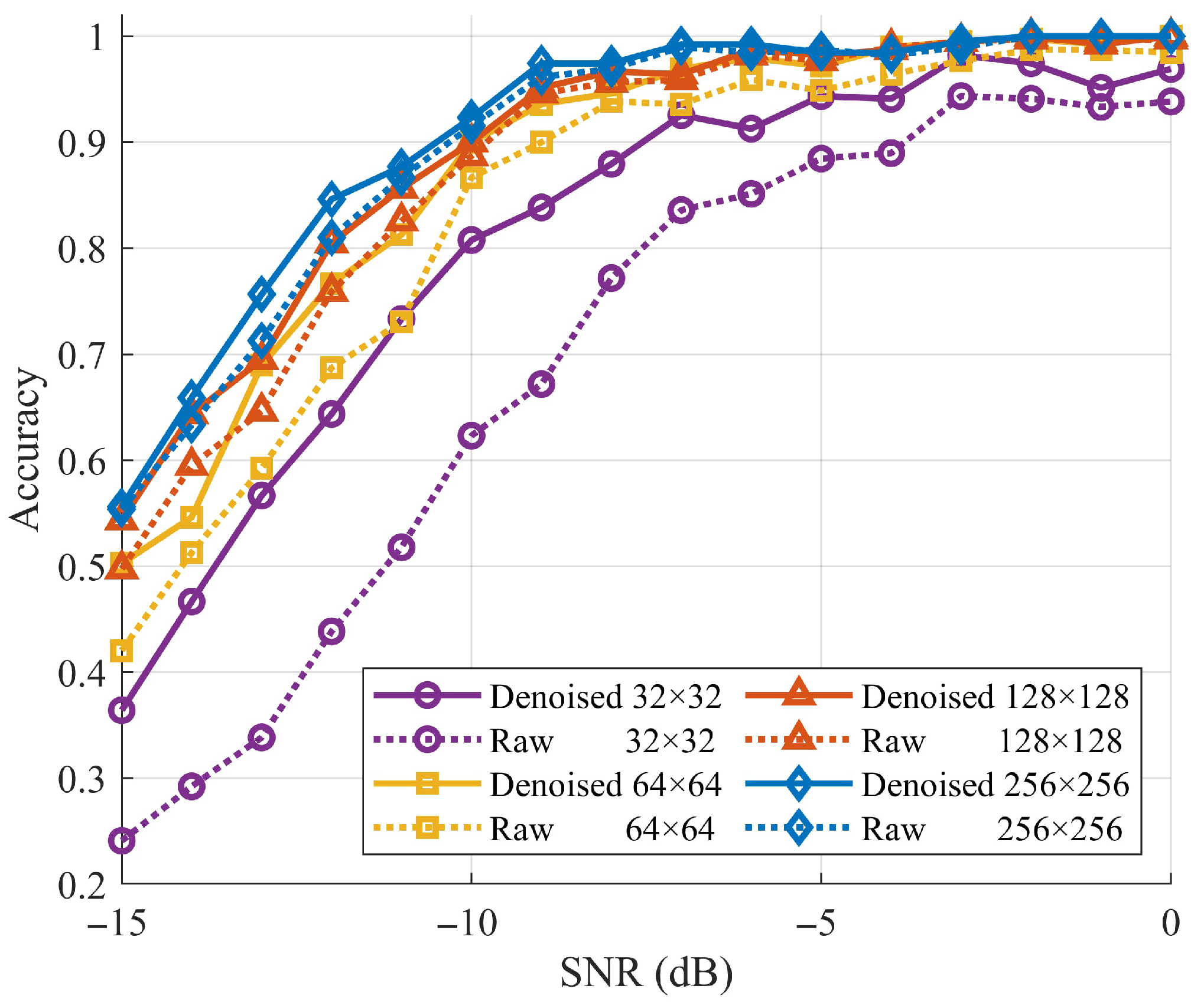

4.7. Post-Denoising Modulation Recognition

- High resolution (256 × 256): The classification accuracy of the raw TFIs was 89.7%. After denoising, it increased to 90.7%, a gain of 0.99 percentage points, which shows that RTDNet introduces almost no distortion at high SNR.

- Moderate downsampling (128 × 128 and 64 × 64): The classification accuracies for raw inputs were 87.5% and 83.7%. Denoising recovers 1.65 and 3.77 percentage points, respectively, almost matching the full-resolution curve shown in Figure 12.

- Severe downsampling (32 × 32): With heavy downsampling, the raw accuracy decreases to 69.5%. Denoising increases it to 80.6%, a gain of 11.17 percentage points. Below dB, the denoised curve in Figure 12 is up to 22% higher than the raw curve, indicating that RTDNet restores the key features even at severe downsampling.

| Input Size | Raw (%) | Denoised (%) | Gain (percentage points) |
|---|---|---|---|
| 32 × 32 | 69.46 | 80.63 | 11.17 |
| 64 × 64 | 83.70 | 87.47 | 3.77 |
| 128 × 128 | 87.53 | 89.18 | 1.65 |
| 256 × 256 | 89.73 | 90.72 | 0.99 |
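The Gain column is an absolute difference in accuracy, that is, percentage points. The sketch below recomputes it from the table and adds one further way to read the same numbers that is not reported in the paper: the share of the raw misclassifications that denoising removes, computed as gain / (100 − raw accuracy).

```python
# Raw and denoised accuracies (%) from the post-denoising recognition table
ACCURACY = {
    "32 x 32": (69.46, 80.63),
    "64 x 64": (83.70, 87.47),
    "128 x 128": (87.53, 89.18),
    "256 x 256": (89.73, 90.72),
}


def accuracy_gains(table):
    """Return (absolute gain in percentage points, share of raw errors removed in %)."""
    result = {}
    for size, (raw, denoised) in table.items():
        gain_pp = denoised - raw                      # reproduces the Gain column
        error_removed = 100 * gain_pp / (100 - raw)   # relative drop in misclassifications
        result[size] = (round(gain_pp, 2), round(error_removed, 1))
    return result


for size, (gain_pp, error_removed) in accuracy_gains(ACCURACY).items():
    print(f"{size}: +{gain_pp} pp, {error_removed}% of raw errors removed")
```

Read this way, the 32 × 32 case removes more than a third of the raw misclassifications, while the 256 × 256 case removes under a tenth.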

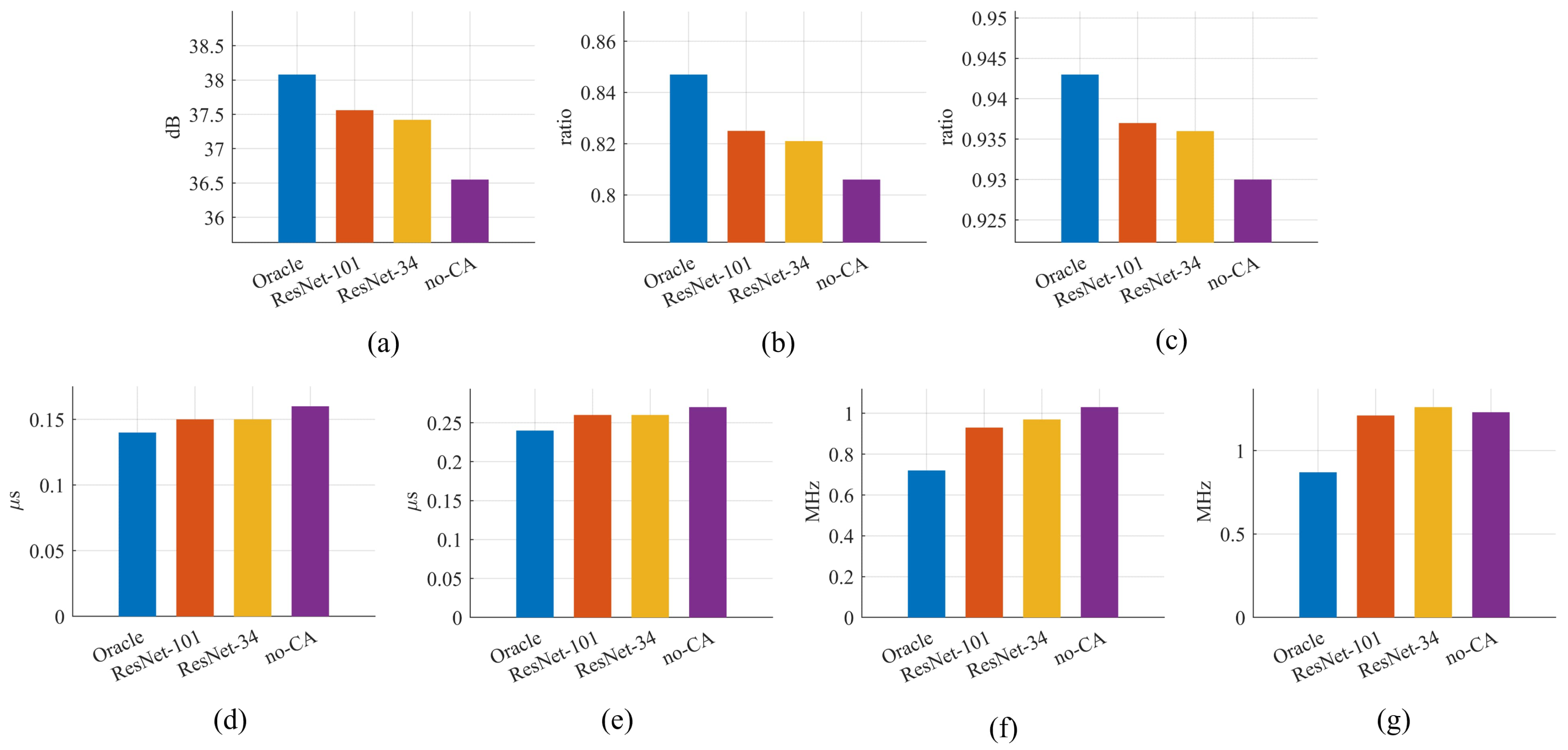

4.8. Ablation on Modulation Prior

- Image quality, Figure 13a–c: When cross-attention is removed (w/o-CA), all three metrics (PSNR, SSIM, and IoU) drop noticeably, confirming that modulation guidance is crucial for high-fidelity restoration. Switching from the lighter ResNet-34 recognizer to the deeper ResNet-101 yields a further, albeit modest, improvement, illustrating that better classification translates into cleaner reconstructions.

- Parameter RMSE, Figure 13d–g: Without cross-attention, the bandwidth error rises sharply and the errors of the other three parameters also increase, underscoring the importance of modulation conditioning for accurate parameter recovery. Replacing ResNet-34 with ResNet-101 consistently lowers every RMSE bar and brings RTDNet closer to the Oracle ceiling, showing that higher recognizer accuracy directly benefits downstream estimation.

4.9. Model Efficiency and Resource Utilization

5. Conclusions

6. DURC Statement

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Gupta, M.; Hareesh, G.; Mahla, A.K. Electronic warfare: Issues and challenges for emitter classification. Def. Sci. J. 2011, 61, 467–472.

2. Adamy, D. EW 101: A First Course in Electronic Warfare; Artech House: Norwood, MA, USA, 2001; Volume 101.

3. Pace, P.E. Detecting and Classifying Low Probability of Intercept Radar; Artech House: Norwood, MA, USA, 2004.

4. Schleher, D.C. LPI radar: Fact or fiction. IEEE Aerosp. Electron. Syst. Mag. 2006, 21, 3–6.

5. Chang, S.; Tang, S.; Deng, Y.; Zhang, H.; Liu, D.; Wang, W. An Advanced Scheme for Deceptive Jammer Localization and Suppression in Elevation Multichannel SAR for Underdetermined Scenarios. IEEE Trans. Aerosp. Electron. Syst. 2025; early access.

6. Wang, W.; Wu, J.; Pei, J.; Sun, Z.; Yang, J. Deception-Jamming Localization and Suppression via Configuration Optimization for Multistatic SAR. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

7. Tang, J.; Yang, Z.; Cai, Y. Wideband passive radar target detection and parameters estimation using wavelets. In Proceedings of the IEEE International Radar Conference, Alexandria, VA, USA, 7–12 May 2000; pp. 815–818.

8. Shin, J.-W.; Song, K.-H.; Yoon, K.-S.; Kim, H.-N. Weak radar signal detection based on variable band selection. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 1743–1755.

9. Wu, Z.; Huang, N.E. Ensemble empirical mode decomposition: A noise-assisted data analysis method. Adv. Adapt. Data Anal. 2009, 1, 1–41.

10. Qi, T.; Wei, X.; Feng, G.; Zhang, F.; Zhao, D.; Guo, J. A method for reducing transient electromagnetic noise: Combination of variational mode decomposition and wavelet denoising algorithm. Measurement 2022, 198, 111420.

11. Jiang, M.; Zhou, F.; Shen, L.; Wang, X.; Quan, D.; Jin, N. Multilayer decomposition denoising empowered CNN for radar signal modulation recognition. IEEE Access 2024, 12, 31652–31661.

12. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

13. Lee, S.; Nam, H. LPI radar signal recognition with U2-Net-based denoising. In Proceedings of the 14th International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 11–13 October 2023; pp. 1721–1724.

14. Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

15. Jiang, W.; Li, Y.; Tian, Z. LPI radar signal enhancement based on generative adversarial networks under small samples. In Proceedings of the IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 1314–1318.

16. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

17. Krull, A.; Buchholz, T.-O.; Jug, F. Noise2Void–Learning denoising from single noisy images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2129–2137.

18. Batson, J.; Royer, L. Noise2Self: Blind denoising by self-supervision. In Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 524–533.

19. Liao, Y.; Wang, X.; Jiang, F. LPI radar waveform recognition based on semi-supervised model all mean teacher. Digit. Signal Process. 2024, 151, 104568.

20. Huang, C.; Xu, X.; Fan, F.; Wei, S.; Zhang, X.; Liu, D.; Gu, M. A low-SNR-adaptive temporal network with smart mask attention for radar signal modulation recognition. Digit. Signal Process. 2025, 168, 105640.

21. Xu, S.; Liu, L.; Zhao, Z. Unsupervised recognition of radar signals combining multi-block TFR with subspace clustering. Digit. Signal Process. 2024, 151, 104552.

22. Cai, J.; Gan, F.; Cao, X.; Liu, W.; Li, P. Radar intra-pulse signal modulation classification with contrastive learning. Remote Sens. 2022, 14, 5728.

23. Chen, K.; Zhang, J.; Chen, S.; Zhang, S.; Zhao, H. Automatic modulation classification of radar signals utilizing X-Net. Digit. Signal Process. 2022, 123, 103396.

24. Liang, J.; Luo, Z.; Liao, R. Intra-pulse modulation recognition of radar signals based on efficient cross-scale aware network. Sensors 2024, 24, 5344.

25. Bhatti, S.G.; Taj, I.A.; Ullah, M.; Bhatti, A.I. Transformer-based models for intrapulse modulation recognition of radar waveforms. Eng. Appl. Artif. Intell. 2024, 136, 108989.

26. Wu, C.; Chen, S.; Sun, G. Automatic modulation recognition framework for LPI radar based on CNN and Vision Transformer. In Proceedings of the 2024 8th International Conference on Computer Science and Artificial Intelligence, Beijing, China, 6–8 December 2024.

27. Qi, Y.; Ni, L.; Feng, X.; Li, H.; Zhao, Y. LPI radar waveform modulation recognition based on improved EfficientNet. Electronics 2025, 14, 4214.

28. Huynh-The, T.; Doan, V.S.; Hua, C.H.; Pham, Q.V.; Nguyen, T.V.; Kim, D.S. Accurate LPI radar waveform recognition with CWD-TFA for deep convolutional network. IEEE Wirel. Commun. Lett. 2021, 10, 1638–1642.

29. Kong, S.H.; Kim, M.; Hoang, L.M.; Kim, E. Automatic LPI radar waveform recognition using CNN. IEEE Access 2018, 6, 4207–4219.

30. Choi, H.-I.; Williams, W.J. Improved time-frequency representation of multicomponent signals using exponential kernels. IEEE Trans. Acoust. Speech Signal Process. 1989, 37, 862–871.

31. Wu, Y.; He, K. Group normalization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

32. Elfwing, S.; Uchibe, E.; Doya, K. Sigmoid-weighted linear units for neural network function approximation in reinforcement learning. Neural Netw. 2018, 107, 3–11.

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

34. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

35. Shazeer, N. GLU variants improve transformer. arXiv 2020, arXiv:2002.05202.

36. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30, pp. 5998–6008.

37. Yang, T.; Lan, C.; Lu, Y. Diffusion model with cross attention as an inductive bias for disentanglement. Adv. Neural Inf. Process. Syst. 2024, 37, 82465–82492.

38. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

39. Ward, C.M.; Harguess, J.; Crabb, B.; Parameswaran, S. Image quality assessment for determining efficacy and limitations of super-resolution convolutional neural network (SRCNN). In Applications of Digital Image Processing XL; SPIE: Bellingham, WA, USA, 2017; Volume 10396.

40. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

41. Bang, J.-H.; Park, D.-H.; Lee, W.; Kim, D.; Kim, H.-N. Accurate estimation of LPI radar pulse train parameters via change point detection. IEEE Access 2023, 11, 12796–12807.

| Stage | Layer | r | Input Size |
|---|---|---|---|
| Scaling Processing | Conv Layer | 1 | |
| | Downsampling | | |
| | Conv Layer | | |
| | Downsampling | | |
| Denoising Processing | FE Layer | 3 | |
| | Downsampling | | |
| | Bottleneck Layer | 1 | |
| | Upsampling | 3 | |
| | FR Layer | | |
| Scaling Processing | Upsampling | 1 | |
| | Conv Layer | | |
| | Upsampling | | |
| | Conv Layer | | |

| Types | Parameters | Range of Values |
|---|---|---|
| All | Number of signal samples | 512, 1024 |
| | Centre frequency (Hz) | |
| | SNR (dB) | |
| Rect † | – | – |
| LFM | Bandwidth (Hz) | |
| Costas | Hopping sequence length | {3, 4, 5, 6} |
| | Frequency spacing (Hz) | |
| Barker | Cycles per phase code | {2, 5} |
| | Barker length | {7, 11, 13} |
| Frank | Cycles per phase code | {3, 5} |
| | Number of frequency steps | {6, 7, 8} |
| P1, P2 | Cycles per phase code | {3, 5} |
| | Number of frequency steps | {6, 8} |
| P3, P4 | Cycles per phase code | {3, 5} |
| | Number of subcodes in a code | {36, 64} |
| T1, T2 | Number of phase states | 2 |
| | Number of segments | {4, 5, 6} |
| T3, T4 | Number of phase states | 2 |
| | Bandwidth (Hz) | |
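As an illustration of how the Barker entries in the parameter table translate into a training pulse, the sketch below builds a real-valued BPSK Barker pulse whose chip lasts a given number of carrier cycles and adds white Gaussian noise at a target SNR. The normalized carrier `fc`, sampling rate `fs`, the real-valued signal model, and the SNR definition (mean signal power over noise power) are assumptions, because the centre-frequency and SNR ranges are not reproduced here; placing the pulse in a 512- or 1024-sample window and computing its CWD are not shown.

```python
import numpy as np

# Standard binary Barker sequences for the lengths listed in the parameter table
BARKER = {
    7: [1, 1, 1, -1, -1, 1, -1],
    11: [1, 1, 1, -1, -1, -1, 1, -1, -1, 1, -1],
    13: [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1],
}


def barker_pulse(length, cycles_per_chip, fc, fs, snr_db, rng):
    """Real-valued BPSK Barker pulse plus white Gaussian noise at a given SNR.

    fc and fs are normalized, hypothetical values chosen for illustration.
    """
    code = np.array(BARKER[length], dtype=float)
    # Each chip lasts cycles_per_chip carrier periods
    samples_per_chip = int(round(cycles_per_chip * fs / fc))
    chips = np.repeat(code, samples_per_chip)
    t = np.arange(chips.size) / fs
    clean = chips * np.cos(2 * np.pi * fc * t)  # +1/-1 chips correspond to phase 0 or pi
    noise_power = np.mean(clean ** 2) / 10 ** (snr_db / 10)
    noisy = clean + rng.normal(scale=np.sqrt(noise_power), size=clean.shape)
    return clean, noisy


rng = np.random.default_rng(1)
clean, noisy = barker_pulse(13, cycles_per_chip=5, fc=0.25, fs=1.0, snr_db=-6, rng=rng)
print(clean.size)  # 260 = 13 chips x 20 samples per chip
```

Each sign flip between adjacent chips is a phase reversal of the carrier; these reversals are what create the inter-chip nulls mentioned in the qualitative comparison.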

| Model | Low SNR ( to dB) | | | Mid SNR ( to dB) | | | High SNR ( to 0 dB) | | |
|---|---|---|---|---|---|---|---|---|---|
| | PSNR (dB) | IoU | SSIM | PSNR (dB) | IoU | SSIM | PSNR (dB) | IoU | SSIM |
| RTDNet (Oracle) | 33.39 | 0.724 | 0.913 | 38.87 | 0.883 | 0.950 | 41.34 | 0.919 | 0.962 |
| RTDNet (ResNet-101) | 31.95 | 0.661 | 0.896 | 38.68 | 0.879 | 0.949 | 41.31 | 0.918 | 0.962 |
| RTDNet (ResNet-34) | 31.74 | 0.653 | 0.894 | 38.48 | 0.872 | 0.948 | 41.28 | 0.918 | 0.961 |
| U2-Net [13] | 30.76 | 0.629 | 0.883 | 36.71 | 0.850 | 0.936 | 39.33 | 0.900 | 0.951 |
| GAN [15] | 24.90 | 0.340 | 0.738 | 31.61 | 0.717 | 0.827 | 37.29 | 0.875 | 0.881 |
| DnCNN [12] | 23.73 | 0.254 | 0.716 | 29.57 | 0.629 | 0.831 | 36.13 | 0.845 | 0.914 |
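The denoising table reports PSNR, IoU, and SSIM between clean and denoised TFIs. The exact metric settings are not given in this section, so the sketch below assumes TFIs normalized to [0, 1], PSNR with a peak value of 1, and IoU computed on signal regions binarized at a hypothetical threshold of 0.5. SSIM is more involved; scikit-image provides `skimage.metrics.structural_similarity` for it, and it is omitted here to keep the example dependency-free.

```python
import numpy as np


def psnr(clean, denoised, peak=1.0):
    """Peak signal-to-noise ratio in dB, assuming images scaled to [0, peak]."""
    mse = np.mean((np.asarray(clean, float) - np.asarray(denoised, float)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))


def iou(clean, denoised, threshold=0.5):
    """Intersection over union of the binarized signal regions.

    The 0.5 threshold is a hypothetical choice for illustration.
    """
    a = np.asarray(clean) >= threshold
    b = np.asarray(denoised) >= threshold
    union = np.logical_or(a, b).sum()
    return 1.0 if union == 0 else float(np.logical_and(a, b).sum() / union)


# Toy 8 x 8 "TFI": a diagonal ridge, loosely resembling an LFM chirp
clean = np.eye(8)
denoised = 0.9 * clean   # ridge slightly attenuated by the denoiser
denoised[0, 7] = 0.6     # one spurious bright pixel left behind

print(round(psnr(clean, denoised), 2), round(iou(clean, denoised), 3))
```

The toy case shows why the two metrics complement each other: attenuating the ridge lowers PSNR without changing IoU, whereas the single spurious pixel above the threshold lowers IoU from 1 to 8/9.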

| Method | Low SNR ( to dB) | | | | Mid SNR ( to dB) | | | | High SNR ( to 0 dB) | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | TOA (µs) | PW (µs) | fc (MHz) | BW (MHz) | TOA (µs) | PW (µs) | fc (MHz) | BW (MHz) | TOA (µs) | PW (µs) | fc (MHz) | BW (MHz) |
| RTDNet (Oracle) | 0.194 | 0.327 | 1.867 | 2.061 | 0.128 | 0.216 | 0.243 | 0.353 | 0.113 | 0.200 | 0.172 | 0.301 |
| RTDNet (ResNet-101) | 0.217 | 0.366 | 2.516 | 3.167 | 0.130 | 0.219 | 0.246 | 0.358 | 0.113 | 0.200 | 0.172 | 0.301 |
| RTDNet (ResNet-34) | 0.218 | 0.362 | 2.623 | 3.249 | 0.132 | 0.222 | 0.277 | 0.424 | 0.113 | 0.200 | 0.172 | 0.300 |
| U2-Net [13] | 0.208 | 0.356 | 2.994 | 3.288 | 0.143 | 0.238 | 0.380 | 0.618 | 0.121 | 0.208 | 0.183 | 0.339 |
| GAN [15] | 0.471 | 0.692 | 6.536 | 9.781 | 0.310 | 0.521 | 1.036 | 1.925 | 0.213 | 0.411 | 0.265 | 0.614 |
| DnCNN [12] | 0.441 | 0.726 | 8.241 | 11.886 | 0.373 | 0.599 | 1.039 | 2.250 | 0.203 | 0.335 | 0.299 | 0.702 |
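The parameter-estimation table reports RMSE per parameter, in µs for TOA and PW and in MHz for fc and BW. The sketch below shows the RMSE definition and a relative-reduction helper for comparing two rows of the table; pooling all Monte Carlo trials within an SNR band into one RMSE is an assumption about how the bands were aggregated.

```python
import numpy as np


def rmse(estimates, truth):
    """Root-mean-square error between estimated and true parameter values."""
    err = np.asarray(estimates, dtype=float) - np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))


def rmse_reduction(reference, candidate):
    """Relative RMSE reduction (%) of candidate vs. reference; negative means worse."""
    return 100.0 * (reference - candidate) / reference


# U2-Net [13] (reference) vs. RTDNet (ResNet-101) (candidate), values from the table
print(round(rmse_reduction(0.618, 0.358), 1))  # mid-SNR BW: 42.1
print(round(rmse_reduction(0.208, 0.217), 1))  # low-SNR TOA: -4.3
```

The second value is negative because U2-Net's low-SNR TOA RMSE (0.208 µs) is slightly lower than that of RTDNet (ResNet-101) (0.217 µs), whereas the mid-SNR bandwidth error drops by roughly 42%.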

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.


Jeon, M.-W.; Park, D.-H.; Kim, H.-N. RTDNet: Modulation-Conditioned Attention Network for Robust Denoising of LPI Radar Signals. Electronics 2026, 15, 386. https://doi.org/10.3390/electronics15020386
