Abstract

This study presents a zero-shot object detection framework for corner casting detection in shipping container operations, leveraging edge computing for intelligent robotic perception and control. The proposed system runs Grounding DINO on a Raspberry Pi and uses Referring Expression Comprehension (REC) and Additional Feature Keywords (AFKs) to localize corner castings precisely without model retraining. This approach reduces computational overhead while remaining suitable for real-time deployment in robotics applications. A comparative evaluation against three SSD-based models (SSD320 MobileNet-V2 FPNLite, MobileNet-V2, and EfficientDet-Lite0) shows that Grounding DINO achieves a 7.14% higher detection score. Furthermore, an effect size analysis using Cohen’s d (d = 2.2) indicates a large performance advantage, reinforcing Grounding DINO’s efficacy in zero-shot scenarios. These findings underscore the potential of LLM-driven object detection in resource-constrained environments, offering a scalable and adaptable solution for intelligent perception and control in robotics.

1. Introduction

Detecting container corner castings is crucial for port automation, as these structural components ensure the safe lifting and transportation of shipping containers. Failures in detection can lead to serious safety risks and operational inefficiencies [1]. In port automation systems, robotic gantry arms are commonly employed to handle containers efficiently, which further emphasizes the importance of precise corner casting detection. With the increasing adoption of edge devices for intelligent perception and control in robotics, platforms like Raspberry Pi and NVIDIA Jetson have gained popularity due to their open-source nature and developer-friendly features. There is also a rising trend of deploying real-time computational models on edge devices such as Raspberry Pi for various applications, including microscopy [2], drone operations [3], and detection-based counting systems [4]. Raspberry Pi, in particular, has been widely recognized for its suitability in resource-constrained edge computing environments, supporting real-time and low-cost applications in IoT and industrial monitoring [5,6].

Object detection, a core tool for intelligent perception, is commonly divided into single-shot detection (SSD) models and models based on region proposal networks (RPNs). RPN-based models, like Faster R-CNN [7], employ a two-stage process that, while accurate, tends to be slower than single-stage SSD models such as MobileNet-V2 [8] and EfficientDet [9]. Given their efficiency, SSD models are commonly used for corner casting detection. However, these models rely on supervised learning and suffer from data limitations [10], making zero-shot detection (ZSD) a promising alternative. ZSD models, such as Grounded Language-Image Pre-Training (GLIP) [11] and Grounding DINO [12], leverage large language models (LLMs) to recognize objects without prior training [13], making them particularly useful for detecting specialized objects like corner castings.

Grounding DINO uses self-supervised training to learn representations from unlabeled data, reducing the need for manual annotation. It allows open-set detection, which enables the model to detect objects belonging to classes that are not present in the training data. Open-set detection is integrated with Referring Expression Comprehension (REC) [14], allowing the model to localize objects in images based on descriptions provided by natural language.

Building on our previous studies [15,16] on single-shot object detection, this work extends the comparison to zero-shot detection on edge devices. Specifically, we evaluate Grounding DINO’s zero-shot detection against three SSD models: SSD320 MobileNetV2 FPNLite, MobileNetV2, and EfficientDet-Lite0. By comparing these models, we assess their efficiency and effectiveness in lightweight object detection. SSD320 MobileNetV2 FPNLite offers optimized feature extraction, MobileNetV2 serves as a baseline, and EfficientDet-Lite0 is designed for resource-constrained environments. This study provides a comprehensive analysis of lightweight detection architectures and validates the potential of zero-shot models in edge computing applications.

Research on container corner casting detection spans four key areas: 3D vision, LiDAR-based cameras, machine vision, and artificial intelligence. Zhang et al. [17] introduced a 3D vision-based method for detecting container positions, aiding stacker spreader adjustments. However, the need for depth camera calibration makes this approach time-consuming. Similarly, Zhang et al. [18] leveraged LiDAR for container positioning and trajectory planning, integrating a fuzzy adaptive PID control algorithm for crane positioning. While LiDAR is robust to environmental factors, its high cost and limited accuracy pose significant drawbacks [19].

Machine vision techniques have also been explored for corner casting detection. Diao et al. [20] combined hybrid machine vision with a multi-class support vector machine, while Shen et al. [21] applied image processing techniques such as HSV color space analysis and Hough Transform. However, these methods require parameter adjustments for different container sizes, making them less adaptable. Lee et al. [22] used recurrent neural networks (RNNs) and long short-term memory (LSTM) for detection, yet traditional machine vision methods remain sensitive to environmental variations like container color and contamination. In AI-based approaches, Zhang et al. [1] employed a modified Single-Shot Multibox Detector with a Programmable Logic Controller (PLC) for automated rail-mounted gantry (ARMG) control, achieving high accuracy but requiring specialized PLC knowledge and infrastructure, making it impractical for SMEs.

Zero-shot detection (ZSD) offers a promising alternative, though its adoption in engineering applications remains relatively limited. Researchers have harnessed CLIP’s capabilities to develop zero-shot object detection methods aimed at improving order accuracy in food packing [23]. Son et al. [24] demonstrated the feasibility of ZSD by utilizing Grounding DINO for auto-labeling CCTV and smartphone footage, significantly reducing costs. Building upon the potential of ZSD and Grounding DINO, this study aims to integrate zero-shot detection into automated corner casting detection, with a focus on cost-effective implementation through object detection in intelligent perception and robotic controls.

Our main contributions are as follows:

- Introducing a new prompt engineering framework based on Referring Expression Comprehension (REC) and Additional Feature Keywords (AFKs) to improve model implementation and deployment;

- Conducting a detailed analysis of zero-shot detection and single-shot detection (SSD) models for detecting shipping containers and corner castings;

- Evaluating the feasibility and efficiency of these detection models when deployed on edge devices, considering factors like mean Average Precision (mAP) and detection score.

2. Materials and Methods for Zero-Shot Detection

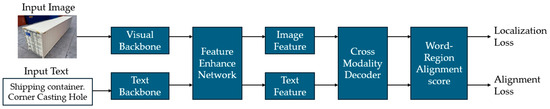

As shown in Figure 1, Grounding DINO begins by extracting fundamental image and text features using dedicated image and text backbones. These features are then processed through a feature enhancer network, which facilitates cross-modal fusion between image and text representations. Next, a language-guided query selection module identifies relevant cross-modality queries from the extracted image features. These queries are then fed into a cross-modality decoder, which refines and adapts them to extract meaningful bimodal features. This step ensures that the model effectively links textual descriptions with corresponding visual elements. Finally, the model generates object proposals for each detected object in the natural language input. These proposals incorporate diverse features such as color, shape, and texture, leveraging Grounding DINO’s extensive pre-training on datasets like O365, GoldG, Cap4M, and COCO, ensuring robust and accurate object detection.

Figure 1.

The framework of Grounding DINO.

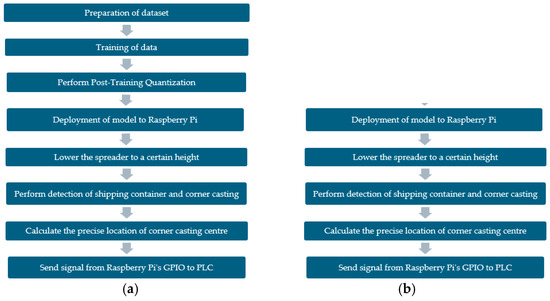

Figure 2 compares the conventional object detection workflow based on single-shot detection with the zero-shot detection workflow. The zero-shot workflow is notably shorter because it requires no dataset preparation or training, which makes the entire process significantly faster and easier to implement. Once the model on the Raspberry Pi has detected the shipping container, the next step is to pinpoint the locations of the corner casting holes using machine vision. Once the hole locations are determined, the Raspberry Pi transmits a signal to the Programmable Logic Controller (PLC) to begin the spreader-lowering procedure. This signal is transmitted through the General-Purpose Input/Output (GPIO) pins on the Raspberry Pi, which are directly connected to the PLC. The conical top is then lowered into the corner casting hole and rotated to secure the container in place.

Figure 2.

Flowchart for automated corner-casting detection: (a) SSD; (b) ZSD with Grounding DINO.

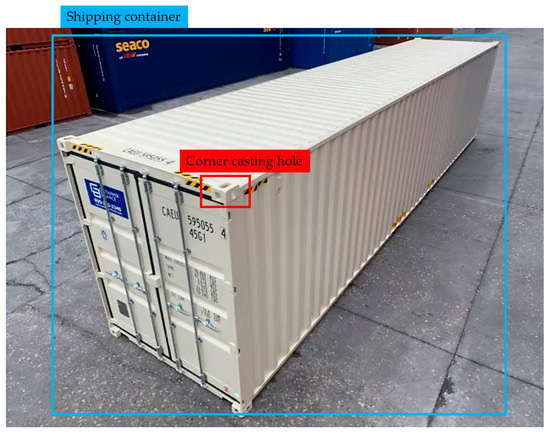

Grounding DINO is classified into three main tasks based on text type: open-vocabulary detection, phrase grounding, and Referring Expression Comprehension (REC). The model processes an (image, text) pair by generating multiple object box and noun phrase pairs, effectively linking visual elements to textual descriptions. As illustrated in Figure 3, the model identifies key objects, such as a shipping container and a corner casting hole, within an input image. It then extracts corresponding class labels, recognizing “container” and “hole” from the input text. This capability highlights Grounding DINO’s strength in associating textual prompts with visual features, facilitating precise object detection. This process leverages Natural Language Processing (NLP) to interpret and understand the textual input, enhancing the model’s ability to accurately detect and classify objects based on the provided descriptions.

Figure 3.

Two classes as labels—the shipping container and corner casting.

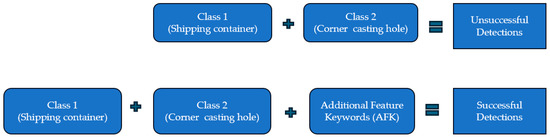

Our primary goal is to detect corner casting holes located on the top of shipping containers using a two-step approach. First, we identify the shipping container, followed by the detection of corner casting holes. This sequential method enhances accuracy by initially detecting the larger, more distinguishable container before narrowing focus to the smaller, intricate corner castings. This process is an integral part of intelligent perception and control in robotics, facilitating automated systems to interpret and interact with their environment effectively. To achieve this, we harness the capabilities of Large Language Models (LLMs) and employ Grounding DINO for object identification based on textual prompts. The model distinguishes key elements within a phrase, such as recognizing “container” and “corner hole” as separate entities in “container with corner hole.” Similarly, in “corner hole on the roof of container”, it identifies “corner hole” and “roof” as distinct objects, ensuring precise localization. As shown in Figure 4, we enhance detection accuracy by incorporating Additional Feature Keywords (AFKs)—descriptive terms that provide supplementary contextual information beyond standard class labels. Examples of AFKs include “extreme”, “roof”, and “top”, which help refine object identification and improve model performance in detecting corner castings in complex scenarios.

Figure 4.

Class and Additional Feature Keywords.

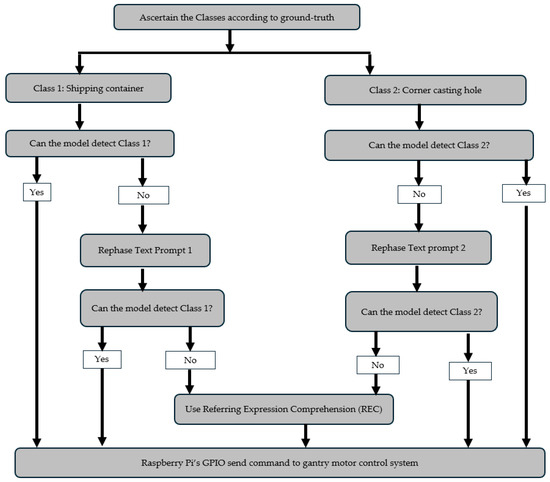

As depicted in Figure 5, the first step is to check the detected object classes against the predefined categories by comparing the detection results with the classes specified in the ground truth annotations. The ground truth annotations of the custom dataset are provided in Supplementary Material S2. The model may struggle with less common class names such as ‘corner casting hole’, which it may not readily recognize, making these objects difficult to identify and localize accurately. To address this issue, we revise the prompt to use more common phrases such as ‘corner hole’ or ‘extreme corner’. Once the model successfully detects both classes with the help of Referring Expression Comprehension (REC), a control command is transmitted to the gantry motor through the Raspberry Pi’s GPIO pins.

Figure 5.

Text prompt engineering.
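The final decision step of this workflow can be sketched as follows. This is a minimal illustration rather than the deployed code: the label strings, the 0.15 confidence floor, the pin number 17, and the `write_pin` callback are all assumptions introduced here so that the logic can run without GPIO hardware.

```python
from typing import Callable, List, Tuple

# Hypothetical values chosen for illustration only.
REQUIRED_LABELS = ("shipping container", "corner hole")
PLC_SIGNAL_PIN = 17  # assumed pin wired to a PLC input

Detection = Tuple[str, float]  # (label, confidence in [0, 1])


def both_classes_detected(detections: List[Detection],
                          min_confidence: float = 0.15) -> bool:
    """Return True when every required label has at least one confident hit."""
    found = {label for label, score in detections if score >= min_confidence}
    return all(label in found for label in REQUIRED_LABELS)


def maybe_signal_plc(detections: List[Detection],
                     write_pin: Callable[[int, bool], None]) -> bool:
    """Set the PLC pin high only if both classes were detected, low otherwise."""
    ready = both_classes_detected(detections)
    write_pin(PLC_SIGNAL_PIN, ready)
    return ready


# Stub pin writer that records calls instead of touching hardware.
pin_log = []
maybe_signal_plc(
    [("shipping container", 0.63), ("corner hole", 0.18)],
    lambda pin, value: pin_log.append((pin, value)),
)
print(pin_log)
```

On a Raspberry Pi, `write_pin` would wrap the output call of whichever GPIO library is in use; passing it in as a callback keeps the decision logic testable on a desktop machine.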

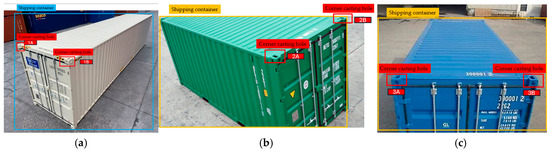

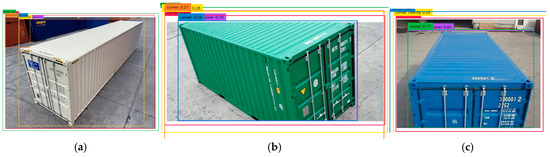

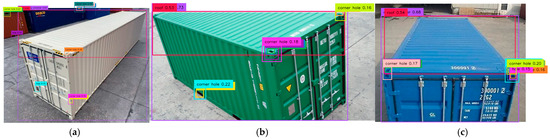

Our study focuses on detecting corner casting holes on container roofs, which are essential for securing spreaders. Using Grounding DINO’s zero-shot detection, we test three open-source images as shown in Figure 6, featuring containers from different perspectives—left, right, and center—within the camera’s field of view. Each container presents unique challenges. Container 1 has a white body that blends with the background, making detection difficult, and corner casting hole 1A is less visible. Container 2 is partially visible, but its corner casting hole 2A is clearly distinguishable. Container 3 offers a distinct test case, featuring two visible corner castings (3A and 3B) in a full front view. By analyzing these varying conditions—including occlusion, color similarity, and different viewing angles—we evaluate the model’s ability to accurately detect corner casting holes, ensuring robust performance in real-world applications. This is part of our broader effort in intelligent perception, aiming to enhance the model’s capability to interpret and understand complex visual environments.

Figure 6.

Test images with ground truths: (a) Container 1; (b) Container 2; (c) Container 3.

2.1. Box and Text Thresholds

Grounding DINO processes an image–text pair and generates 900 object boxes with confidence scores. Each box is evaluated using two thresholds: (1) box_threshold, which filters out low-confidence detections, and (2) text_threshold, which selects words with high similarity scores to the detected objects. For Container 1, we focus on corner casting C1A at the extreme front right, as the front left corner is harder to detect due to distance. For Container 2, C2A on the front left is used as the primary reference, while C2B, another clearly visible corner casting, is also included as ground truth. Container 3 has two front-facing corner castings (C3A and C3B) that serve as reference points due to their clear visibility. The final evaluation involves calculating the average successful detections of corner castings across all test cases, assessing the model’s accuracy and reliability in detecting these crucial structural elements.
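The role of the two thresholds can be illustrated with a small pure-Python sketch. It assumes that each candidate box carries one similarity score per prompt token, that a box is kept when its best token score exceeds `box_threshold`, and that the box label is built from the tokens scoring above `text_threshold`. The function name and the scores are hypothetical, and the sketch is not the library's own code.

```python
from typing import Dict, List, Tuple


def filter_predictions(
    token_scores: List[Dict[str, float]],
    box_threshold: float = 0.15,
    text_threshold: float = 0.15,
) -> List[Tuple[int, str, float]]:
    """Keep boxes whose best token score exceeds box_threshold and label each
    kept box with the prompt tokens whose score exceeds text_threshold."""
    kept = []
    for index, scores in enumerate(token_scores):
        best = max(scores.values(), default=0.0)
        if best <= box_threshold:
            continue  # low-confidence box is discarded
        phrase = " ".join(tok for tok, s in scores.items() if s > text_threshold)
        kept.append((index, phrase, best))
    return kept


# Hypothetical scores for three candidate boxes and the prompt "corner hole".
candidates = [
    {"corner": 0.18, "hole": 0.16},
    {"corner": 0.05, "hole": 0.09},
    {"corner": 0.08, "hole": 0.20},
]
print(filter_predictions(candidates))
```

In this toy input the third box is labeled with a single word because only one token clears `text_threshold`. This is one way a detection can end up labeled with only part of the prompt.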

2.2. REC with AFKs

Table 1 shows the evaluation method using prompt phase with REC. In total, there are 8 evaluation test cases with two classes, “shipping container” and “corner hole”. Our initial test involves applying Grounding DINO to simple text prompts such as ‘corner casting hole’ and ‘corner hole.’ This test aims to assess the system’s effectiveness in detecting straightforward prompts before proceeding to evaluate its performance with various Additional Feature Keywords (AFKs) in the REC.

Table 1.

Prompt phases with REC.

Careful keyword selection is vital for accurate detection. For example, “top” and “roof” have distinct meanings: “top” refers to a point above the container, while “roof” specifies the surface directly above the container. The term “only” narrows the focus, so that the model considers only the specified area and excludes other potential locations.

The AFKs are denoted by bold formatting for clarity:

- REC1: “Corner casting hole on top only”

- REC2: “Corner hole on top only”

- REC3: “Hole on extreme corner only”

- REC4: “Hole on extreme top corner”

- REC5: “Corner hole on roof of shipping container”

- REC6: “Corner hole located on roof of shipping container only”
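A class prompt and a REC phrase can be combined into a single text prompt with a small helper such as the one below. The helper name `build_prompt` is hypothetical, and lowercasing with a period after each phrase is an assumed formatting convention, not a requirement stated in this paper.

```python
from typing import List


def build_prompt(phrases: List[str]) -> str:
    """Join class and REC phrases into one lowercase, period-separated prompt."""
    cleaned = [p.strip().rstrip(".").lower() for p in phrases if p.strip()]
    return ". ".join(cleaned) + "."


# A class prompt followed by REC2, and REC5 used on its own.
print(build_prompt(["Shipping container", "Corner hole on top only"]))
print(build_prompt(["Corner hole on roof of shipping container"]))
```

Keeping the class prompt and the REC phrase as separate list items makes it easy to swap AFKs in and out while the rest of the prompt stays fixed.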

2.3. Prompt Engineering Based on Zero-Shot Detection

As detailed in Table 1, eight evaluation test cases were designed using prompt phases with REC to systematically assess detection performance. For improved clarity, enlarged and clearer versions of the ZSD prompt engineering results corresponding to these test cases are provided in Supplementary Material S1.

2.3.1. Prompt 1: “Corner Casting Hole”

The prompt “corner casting hole” was used to detect corner castings on three containers, as shown in Figure 7. No positive detections were found for Containers 1 and 3. For Container 2, corner casting C2A was detected with a confidence level of 15%, though the detected text was “hole” instead of “corner casting hole.” Another detection on Container 2 carried the correct text “corner casting hole” but was mislocated at the bottom, with a 35% confidence level. Additionally, entire container bodies were mistakenly detected as corner casting holes with high confidence levels (52% for Container 1, 38% for Container 2, and 46% for Container 3), indicating a possible misalignment of the term “casting” with the Grounding DINO training dataset. No shipping containers were detected, as they were not mentioned in the prompt.

Figure 7.

Prompt 1 results: (a) Container 1; (b) Container 2; (c) Container 3.

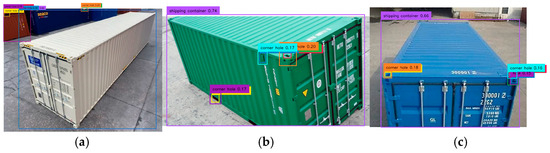

2.3.2. Prompt 2: “Corner Hole”

After analyzing the misdetections, we simplify the terminology in the prompt to “corner hole” by removing the less familiar term “casting.” As shown in Figure 8, only Container 2 exhibited a positive detection for the corner hole, while no detections were observed for Container 1 and Container 3. Misdetections persisted for Containers 1 and 2, where either the entire container or background holes were incorrectly identified as corner holes. For Container 2, a single detection was recorded for corner casting C2A, with a confidence level of 16% (orange).

Figure 8.

Prompt 2 results: (a) Container 1; (b) Container 2; (c) Container 3.

2.3.3. Prompt 3: “Shipping Container. Corner Casting Hole on Top Only”

To prevent misclassification of the container as a corner hole, the prompt “shipping container” was introduced, along with the Additional Feature Keywords (AFKs) “on top only” to refine positioning. These constraints shifted all detections upward, eliminating unwanted hole detections at the bottom of Containers 1 and 2, but also rendered corner casting 3A undetectable, as shown in Figure 9. The shipping container was detected with high confidence levels—71% for Container 1, 70% for Container 2, and 64% for Container 3—indicated by purple boxes. These results suggest the model can generalize well to the “shipping container” object, despite not being explicitly trained on it.

Figure 9.

Prompt 3 results: (a) Container 1; (b) Container 2; (c) Container 3.

2.3.4. Prompt 4: “Shipping Container. Corner Hole on Top Only”

To improve detection, we removed the word “casting”, and, as shown in Figure 10, Prompt 4 restored the detection of corner casting 2A (brown box) on Container 2 with a 20% confidence level. It also enabled the detection of corner casting 3A (brown, 18%) and corner casting 3B (cyan, 16%) on Container 3. However, Grounding DINO still failed to detect the corner casting on Container 1. Consistent with previous prompts, the shipping container was detected with high confidence levels—69% for Container 1, 74% for Container 2, and 66% for Container 3—indicated by purple boxes.

Figure 10.

Prompt 4 results: (a) Container 1; (b) Container 2; (c) Container 3.

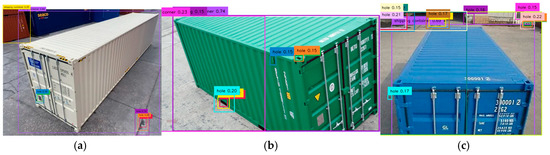

2.3.5. Prompt 5: “Shipping Container. Hole on Extreme Corner”

To reduce false detections of holes at the lower part of the container, Prompt 5 was designed using two Additional Feature Keywords (AFKs): “extreme” and “corner.” The AFK “extreme” helps the model focus on holes at the extreme edges. As shown in Figure 11, two damp patches on the extreme right bottom of the image were mistakenly detected as holes (purple and brown boxes). Container 2 correctly detected corner casting 2A (brown, 15%), while Container 3 had multiple false detections of background holes and one ground hole (cyan, 17%).

Figure 11.

Prompt 5 results: (a) Container 1; (b) Container 2; (c) Container 3.

2.3.6. Prompt 6: “Shipping Container. Hole on Extreme Top Corner”

To reduce false detections on the holes at the lower part of the container, we engineered Prompt 6 as a combination of three AFKs: “extreme”, “top”, and “corner”. It is similar to Prompt 5, except it has an additional AFK “top”. This helped to remove one of the ground detections on Container 1 and ground hole on Container 3, as shown in Figure 12. For Container 2, there was an accurate detection for corner casting 2A (yellow, 28%). The level of confidence was 13% higher than Prompt 5. As for the shipping container, it was detected with a 69% confidence level for Container 1, 74% for Container 2, and 68% for Container 3. All the detections are indicated with a purple box. Only Container 3 had a slight drop of 1% compared to Prompt 5.

Figure 12.

Prompt 6 results: (a) Container 1; (b) Container 2; (c) Container 3.

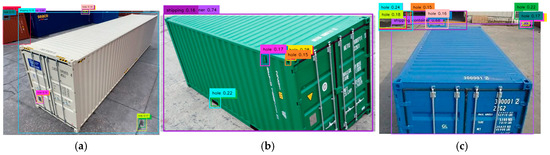

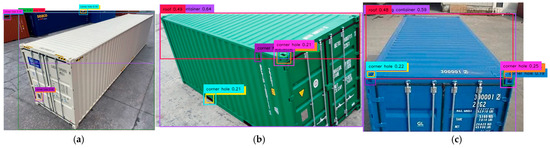

2.3.7. Prompt 7: “Corner Hole on Roof of Shipping Container”

Prompt 7 introduced the AFK “roof” to improve corner hole detection accuracy, successfully identifying corner castings across all containers. As shown in Figure 13, there were detections for Container 1’s corner casting 1A (18%) and Container 2’s corner castings 2A (18%) and 2B (16%)—the first detection of 2B. There were also identifications of Container 3’s corner castings 3A (17%) and 3B (20%). The prompt also enabled roof detection with confidence levels of 52% (Container 1), 53% (Container 2), and 54% (Container 3), marked by red boxes. Shipping container detection remained high at 63% (Container 1), 73% (Container 2), and 68% (Container 3), with only a slight 3% confidence drop for Container 1 compared to Prompt 6.

Figure 13.

Prompt 7 results: (a) Container 1; (b) Container 2; (c) Container 3.

2.3.8. Prompt 8: “Corner Hole Located on Roof of Shipping Container Only”

Adding the keywords “located” and “only” to REC5, which yields REC6, aimed to improve detection but led to the loss of corner casting 1A for Container 1, as shown in Figure 14. Detection was maintained for Container 2’s corner casting 2A at 18% confidence and for Container 3’s corner castings 3A (16%) and 3B (16%), though the latter two saw slight confidence drops of 1% and 4%, respectively, compared to Prompt 7. Shipping container detection also declined, with confidence levels of 57% (Container 1), 64% (Container 2), and 59% (Container 3), reflecting drops of 8%, 13%, and 12%. This suggests that the verbosity of REC6 may have negatively impacted accuracy, highlighting the need for simpler terms.

Figure 14.

Prompt 8 results: (a) Container 1; (b) Container 2; (c) Container 3.

2.4. Detection Score of Corner Castings

Table 2 presents a summary of detections for corner castings, detailing the number of corner castings detected and highlighting the highest detection score among the identified corner castings. Clearly, the text prompt “corner hole on roof of shipping container” yielded the most favorable results. Our analysis suggests that Grounding DINO exhibits enhanced detection capabilities when the AFK “roof” is employed. As Test Case 7 exhibited the highest detection rate (5 out of 6) and the highest average detection confidence, it served as the basis for calculating the sustainability score of ZSD. The use of AFKs such as ‘extreme’ and ‘only’ improved detection accuracy. ‘Only’ restricted the focus to targeted areas and ‘extreme’ guided the model towards boundary features, resulting in more precise corner casting localization.

Table 2.

Detection scores of corner castings.

2.5. Detection Scores of Shipping Containers

Table 3 shows that explicitly mentioning “shipping container” significantly improved detection confidence, with scores exceeding 71% when it was labeled as a separate prompt. Test Case 6 achieved the highest average detection score at 70.67%. The model’s ability to exclude detections when the prompt is unrelated (as seen in Test Cases 1 and 2) demonstrates strong language understanding.

Table 3.

Detection scores of shipping containers.

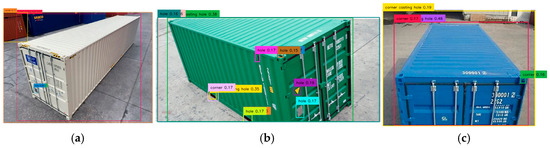

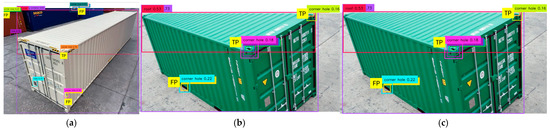

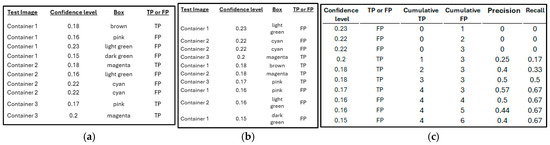

2.6. Average Precision of Corner Casting

The Average Precision (AP) metric evaluates the model’s performance in corner casting detection by combining precision and recall. Using a 0.15 box threshold and a 0.15 text threshold yielded the highest number of positive detections. Prompt 7 was selected to generate detection scores for the three images, with a focus on corner castings rather than shipping containers due to their smaller size and detection difficulty. The mean Average Precision (mAP) serves as a benchmark to compare zero-shot detection (ZSD) with supervised single-shot detection (SSD). The ground truth annotations, illustrated in Figure 15, are the reference against which detections are judged. Precision and recall are computed manually from the sorted textual output scores, using true positives (TPs) and false positives (FPs) to quantify detection accuracy for both corner castings and shipping containers.

Figure 15.

Labelling of TPs and FPs using Prompt 7: (a) Container 1; (b) Container 2; (c) Container 3.

As shown in Figure 16, predictions are sorted by descending confidence levels, and cumulative true positives and false positives are tabulated to calculate precision and recall. The precision–recall curve is summarized using the Average Precision (AP), which is calculated as the area under the curve using Simpson’s numerical integration method. Simpson’s rule, preferred for its accuracy over the trapezoidal rule, uses a quadratic polynomial approximation. The formula for Simpson’s rule is given below, where a quadratic polynomial passes through the points (x0, f0); (x1, f1); (x2, f2), where f1 = f(x1).
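For reference, the standard three-point form of Simpson’s rule is reproduced here from the textbook definition, under the assumption that the three points are equally spaced. In this setting, x is recall and f(x) is precision.

```latex
\int_{x_0}^{x_2} f(x)\,dx \;\approx\; \frac{h}{3}\left(f_0 + 4f_1 + f_2\right),
\qquad h = x_1 - x_0 = x_2 - x_1, \quad f_i = f(x_i)
```

When the curve has more than three points, the rule is applied to consecutive groups of three and the partial areas are summed.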

Figure 16.

Calculation of Average Precision using area under graph: (a) Unsorted confidence level; (b) sorted confidence level; (c) calculation of precision and recall.

Applying Simpson’s rule to the precision–recall curve, the mean Average Precision for Grounding DINO is calculated as 24.398%. The formulas for precision and recall used in the manual calculation of mAP are:

P = Cumulative TP/(Cumulative TP + Cumulative FP)

R = Cumulative TP/Total Ground Truths

Recall indicates the model’s ability to correctly identify all relevant instances. The denominator for recall is always 6, representing the number of ground truths (specifically, six corner castings).
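The cumulative precision and recall values can be tabulated with a short script such as the following sketch. The detection list is hypothetical and is not the data behind Figure 16. Only the two formulas above and the fixed denominator of six ground truths come from the text.

```python
from typing import List, Tuple


def precision_recall(
    detections: List[Tuple[float, bool]],
    total_ground_truths: int,
) -> List[Tuple[float, float]]:
    """Return (precision, recall) after each detection, highest confidence first."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    cumulative_tp = 0
    cumulative_fp = 0
    points = []
    for _, is_true_positive in ranked:
        if is_true_positive:
            cumulative_tp += 1
        else:
            cumulative_fp += 1
        precision = cumulative_tp / (cumulative_tp + cumulative_fp)
        recall = cumulative_tp / total_ground_truths
        points.append((precision, recall))
    return points


# Hypothetical detections as (confidence, is_true_positive), given unsorted.
example = [(0.17, False), (0.20, True), (0.16, True), (0.18, True)]
for p, r in precision_recall(example, total_ground_truths=6):
    print(f"precision={p:.3f} recall={r:.3f}")
```

The resulting (recall, precision) pairs are the points that a numerical integration rule would then use to estimate the area under the curve.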

3. Materials and Methods for Single-Shot Detection

3.1. Dataset

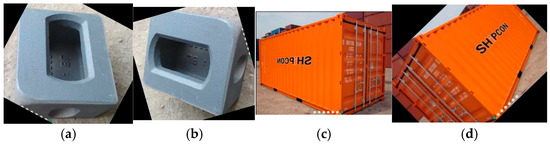

This project leverages a custom dataset comprising images sourced from the internet and repositories such as Roboflow Universe. The custom dataset has a total of 482 images and contains two types of workpieces, corner castings and containers, as shown in Figure 17. The colors and the sizes of the corner castings and containers vary, adding complexity and variability to the dataset. To ensure clarity and consistency across the evaluation, all SSD-based methods (SSD320 MobileNet FPNLite, MobileNet-V2, and EfficientDet-Lite0) were assessed using a common validation split derived from this dataset.

Figure 17.

Dataset of corner casting and container: (a) corner casting; (b) rotated corner casting; (c) shipping container; (d) rotated shipping container.

Table 4 provides the distribution of the two classes, indicating an even class balance that supports model performance. Each class, “shipping container” and “corner casting”, comprises 50% of the total images, ensuring a balanced representation. At the annotation level, approximately 53.2% of all annotations pertain to shipping containers and the remaining 46.8% to corner castings, so the annotation counts are also close to balanced. All SSD-based models in this study were trained on the same custom dataset of 482 annotated images. A consistent training and validation split was applied to all SSD models for fair comparison.

Table 4.

Distribution of custom dataset.

Similar to our previous works, we used the following deep learning process. First, the dataset was prepared and labeled using Roboflow, with the ground truth annotations illustrated in Supplementary Material S2.

The model was then trained in the cloud using Google Colab, with hyperparameter optimization. After training, the model was converted into the “.tflite” format and deployed to a Raspberry Pi for control and inference. To augment the image count, various processes were applied, as shown in Table 5. This included horizontal and vertical flips, adding Gaussian noise, converting to grayscale, and rotating the images by ±15 degrees. These augmentation techniques helped to increase the diversity of the dataset and improve the model’s ability to generalize to different variations of the input data.

Table 5.

Augmentation operations applied to the original images.
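Some of the listed augmentation operations are illustrated below in pure Python on a tiny nested-list image. This is only a sketch of what each operation does, with hypothetical function names. It is not the Roboflow pipeline used for the dataset, and the ±15 degree rotation is omitted because it requires interpolation.

```python
import random
from typing import List, Tuple

GrayImage = List[List[float]]                       # rows of intensities in [0, 255]
RgbImage = List[List[Tuple[float, float, float]]]   # rows of (r, g, b) pixels


def horizontal_flip(image: GrayImage) -> GrayImage:
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def vertical_flip(image: GrayImage) -> GrayImage:
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def to_grayscale(image: RgbImage) -> GrayImage:
    """Convert RGB to grayscale using the common 0.299/0.587/0.114 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in image]


def add_gaussian_noise(image: GrayImage, sigma: float, rng: random.Random) -> GrayImage:
    """Add zero-mean Gaussian noise and clip the result to [0, 255]."""
    return [[min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


tiny = [[10.0, 20.0], [30.0, 40.0]]
print(horizontal_flip(tiny))
print(vertical_flip(tiny))
noisy = add_gaussian_noise(tiny, sigma=5.0, rng=random.Random(0))
```

When geometric operations such as flips are applied to an annotated dataset, the bounding box coordinates must be transformed in the same way as the pixels.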

3.2. Hyperparameters

Below are the hyperparameters used, as detailed in Table 6. These hyperparameters were chosen to ensure that the models were trained effectively, taking into consideration the differences in model architecture and input sizes. For SSD320 FPNLite and MobileNetV2, the input size was set to 320 × 320 pixels, while for EfficientDet-Lite0, it was set to 512 × 512 pixels. All the models were trained for 200 epochs with a warm-up factor of 0.02666 and a momentum optimizer with a momentum value of 0.9.

Table 6.

Hyperparameter settings.

3.3. Mean Average Precision (mAP)

For our object detection, the evaluation criteria are the mean Average Precision (mAP) and detection scores. For SSD models, we use the Pascal VOC metric [25] to measure the overall performance of our custom object detection at a fixed Intersection over Union (IoU) threshold. Instead of the default 0.5 IoU, we set the threshold at 0.15, matching the box and text thresholds used for Grounding DINO.
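The effect of the IoU threshold on what counts as a true positive can be seen in a minimal example. The box coordinates are hypothetical, and the corner format (x_min, y_min, x_max, y_max) is an assumption made for this sketch.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(pred: Box, truth: Box, iou_threshold: float) -> bool:
    """A prediction matches a ground truth when their IoU reaches the threshold."""
    return iou(pred, truth) >= iou_threshold


# Hypothetical boxes that overlap by half their width (IoU = 1/3).
truth = (0.0, 0.0, 10.0, 10.0)
pred = (5.0, 0.0, 15.0, 10.0)
print(is_true_positive(pred, truth, 0.15), is_true_positive(pred, truth, 0.5))
```

The same prediction counts as a true positive at 0.15 IoU but as a false positive at 0.5 IoU, which is why mAP values at the two thresholds can differ substantially.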

Table 7 shows the mAP values at both 0.15 IoU and 0.5 IoU. The SSD320 FPNLite model performs best among the three, with the highest mAP at both IoU thresholds, suggesting strong overall detection capabilities. In contrast, the EfficientDet-Lite0 model shows a significant drop in performance when the IoU threshold increases from 0.15 to 0.5, suggesting it might struggle with stricter overlap criteria between predicted and ground truth bounding boxes. The MobileNetV2 model performs moderately: better than EfficientDet-Lite0 but not as well as SSD320 FPNLite, with a smaller drop in mAP than EfficientDet-Lite0 when the IoU threshold increases. Overall, SSD320 FPNLite has a higher average mAP than the other SSD models.

Table 7.

mAP at 0.15 IoU and 0.5 IoU.

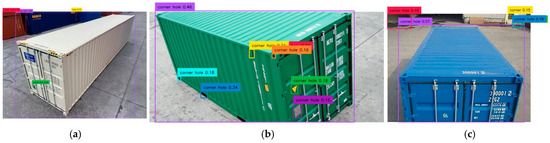

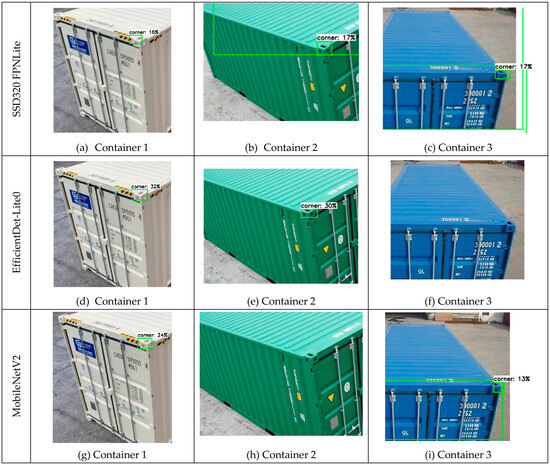

3.4. Detection Scores of SSD Models

As presented in Table 8, object detection was performed on three reference container images using a threshold of 0.15. The average detection scores were calculated based on six ground truth annotations, as there were six corner castings. For instance, corner casting 3A, 3B is represented as (31, 0), indicating that 3A was detected with a score of 31%, while 3B was not detected. The results demonstrate that MobileNetV2 achieved the highest detection scores when compared to the other two SSD models.

Table 8.

Detection scores of shipping containers.
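The averaging over six ground truths, with undetected corner castings counted as zero, can be written as a short helper. The identifiers and most scores below are hypothetical. Only the convention that a miss contributes 0 and the example score of 31% for 3A come from the text.

```python
from typing import Dict, List


def average_detection_score(scores: Dict[str, float],
                            ground_truth_ids: List[str]) -> float:
    """Mean score over all ground truths; undetected ones contribute 0."""
    return sum(scores.get(gt, 0.0) for gt in ground_truth_ids) / len(ground_truth_ids)


# Hypothetical identifiers for the six corner castings.
GROUND_TRUTHS = ["1A", "1B", "2A", "2B", "3A", "3B"]

# Hypothetical per-casting scores in percent; missing keys mean "not detected".
detected = {"1A": 20.0, "2A": 25.0, "3A": 31.0}

print(f"{average_detection_score(detected, GROUND_TRUTHS):.2f}")
```

Dividing by the number of ground truths rather than the number of detections penalizes a model for every corner casting it misses.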

Figure 18 illustrates the detection of shipping containers and corner castings using the three SSD models. The EfficientDet-Lite0 and MobileNetV2 models failed to detect the corner castings on Container 2, whereas the SSD320 FPNLite model detected them successfully.

Figure 18.

Comparison of detection scores for SSD models.

4. Comparison Between ZSD and SSD on Edge-Based AI Device

4.1. Mean Average Precision at 0.15 IoU

As shown in Table 9, the SSD320 FPNLite model achieved the highest mAP, at 62.50%, while the EfficientDet-Lite0 model had the lowest, at 55.42%. In comparison, Grounding DINO’s mAP was 33.83 percentage points lower than the average of the SSD models, as highlighted in Table 10. This significant performance gap underscores the trade-offs between supervised and zero-shot methods in object detection.

Table 9.

mAPs of SSD models.

Table 10.

Comparison of mAP.

4.2. Detection Scores

The detection scores of the SSD models for corner castings were computed against the ground truth, as presented in Table 11. The results in Table 12 indicate that Grounding DINO outperformed the SSD models, achieving a detection score 7.14 percentage points higher than the SSD average. This shows that, despite operating in a zero-shot configuration without any training, Grounding DINO can effectively identify and differentiate corner casting features by leveraging Referring Expression Comprehension (REC) and Additional Feature Keywords (AFKs).

Table 11.

Detection scores of SSD models.

Table 12.

Comparison of detection scores.

5. Discussion and Statistical Analysis

The observed performance gap underscores the inherent trade-offs between supervised and zero-shot approaches. Supervised models, such as SSD320 FPNLite, EfficientDet-Lite0, and MobileNetV2, benefit from large, annotated datasets, enabling higher accuracy. In contrast, zero-shot models like Grounding DINO excel in scenarios with limited labeled data but exhibit lower precision, as reflected by a mean Average Precision (mAP) of 24.4%. Nevertheless, Grounding DINO achieved a detection score 7.14 percentage points higher than the SSD models, highlighting its effectiveness despite the absence of training.

To further strengthen the analysis of Grounding DINO’s detection performance compared to the SSD models, an approximate Cohen’s d [26] analysis was performed. The standard deviation of the SSD models was computed as 2.52 and the resulting effect size was 2.2, indicating a large difference in detection performance. Cohen’s d is given by

$$d = \frac{M_2 - M_1}{SD_2}$$

where $M_2$ represents the detection score of Grounding DINO, $M_1$ is the average detection score of the SSD models, and $SD_2$ is the standard deviation of the SSD models.
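As an illustration of the definition above, the sketch below computes the approximate effect size from a single candidate score and a set of baseline scores. The function names and the input values are hypothetical placeholders rather than the values from Tables 11 and 12, and the use of the sample standard deviation (n − 1 denominator) is an assumption, since the text does not state which estimator was used.

```python
import statistics
from typing import Sequence


def cohens_d_approx(m2: float, m1: float, sd2: float) -> float:
    """Approximate Cohen's d: difference of means over one group's SD."""
    if sd2 <= 0:
        raise ValueError("sd2 must be positive")
    return (m2 - m1) / sd2


def effect_size_vs_baselines(candidate_score: float,
                             baseline_scores: Sequence[float]) -> float:
    """Effect size of one candidate model against several baseline models."""
    m1 = statistics.mean(baseline_scores)
    # Assumption: sample standard deviation; pstdev() would give the
    # population version and a different effect size.
    sd2 = statistics.stdev(baseline_scores)
    return cohens_d_approx(candidate_score, m1, sd2)


# Hypothetical detection scores (%), for illustration only.
baseline_scores = [8.0, 10.0, 12.0]
candidate_score = 20.0

print(effect_size_vs_baselines(candidate_score, baseline_scores))
```

Because only the baseline group has a spread, this form uses a single standard deviation in the denominator instead of the pooled standard deviation of the textbook two-sample Cohen’s d.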

The results demonstrate that Grounding DINO outperforms the SSD models, achieving a mean detection score of 17.8%, compared to 10.66% for the SSD models. This substantial difference highlights Grounding DINO’s superior ability to detect corner castings without prior training, leveraging Referring Expression Comprehension (REC) and Additional Feature Keywords (AFKs). The large effect size (Cohen’s d = 2.2) further reinforces the magnitude of this performance gap, emphasizing Grounding DINO’s enhanced detection capability compared to traditional SSD models. The improvement in detection accuracy achieved by Grounding DINO, despite a lower mAP, demonstrates practical relevance for applications in resource-constrained environments.

The large effect size (Cohen’s d = 2.2) observed in this study indicates that the performance difference compared to the SSD models is large in practical terms, supporting the effectiveness of prompt engineering in zero-shot detection. Similar use of effect size analysis in object detection, such as by Jung et al. [27], has highlighted the value of quantifying such differences to assess real-world impact. Moreover, improvements in detection accuracy have been shown to enhance operational efficiency and reliability in various industrial contexts, including defect detection in manufacturing [28] and real-time object recognition in intelligent transport systems [29]. These findings suggest that the performance gains achieved by Grounding DINO can contribute to more efficient and scalable automation solutions.

6. Validation

For the validation of our SSD models, we employ the widely recognized mAP 0.5:0.95 metric, which averages the average precision across IoU thresholds ranging from 0.5 to 0.95 in increments of 0.05. The Intersection over Union (IoU) quantifies the overlap between the predicted and ground truth bounding boxes of detected objects. For benchmarking, we use the COCO2017 dataset [30] and its associated mAP as a reference. The COCO2017 dataset is used here to benchmark Grounding DINO’s general object detection performance. This serves as a reference for the model’s baseline capabilities prior to specialization for the shipping container detection task. As demonstrated in Table 13, the results on our custom dataset surpass those on the COCO2017 dataset, with an average mAP improvement of 8.8%. This indicates that our model outperforms the baseline model, which was trained and evaluated using the COCO2017 dataset.
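The mAP 0.5:0.95 averaging can be sketched as follows. This is a minimal illustration in which the per-threshold mAP values are assumed to have been computed already; the function names and the numbers are hypothetical and are not results from Table 13.

```python
from typing import List, Sequence


def coco_iou_thresholds() -> List[float]:
    """The ten IoU thresholds 0.50, 0.55, ..., 0.95."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(map_per_threshold: Sequence[float]) -> float:
    """Average the mAP values obtained at each of the ten IoU thresholds.

    The values must be ordered to match coco_iou_thresholds().
    """
    thresholds = coco_iou_thresholds()
    if len(map_per_threshold) != len(thresholds):
        raise ValueError(f"expected {len(thresholds)} values, one per IoU threshold")
    return sum(map_per_threshold) / len(thresholds)


# Hypothetical mAP values that decrease as the IoU criterion becomes stricter.
example_maps = [0.90, 0.85, 0.80, 0.75, 0.70, 0.60, 0.50, 0.40, 0.25, 0.10]

print(coco_iou_thresholds())
print(round(map_50_95(example_maps), 3))
```

Since the stricter thresholds contribute equally to the average, a model with loosely fitting boxes scores noticeably lower on mAP 0.5:0.95 than on mAP at a single relaxed threshold.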

Table 13.

Validation of mAP with COCO2017.

7. Conclusions

In this work, we demonstrated the potential of the Grounding DINO model on a Raspberry Pi to effectively detect the corner castings of shipping containers from images. By using zero-shot detection for automated corner casting detection, we were able to prioritize cost-effectiveness in development. This is part of our broader effort in intelligent perception and automated rail-mounted gantry control, enhancing the model’s capability to interpret and understand complex visual environments.

We introduced an NLP framework combining Referring Expression Comprehension (REC) and Additional Feature Keywords (AFKs) to achieve successful detection on the Raspberry Pi without any model training. Additionally, we provided a comparison between zero-shot detection (ZSD) and single-shot detection (SSD). Grounding DINO was selected for the automated container lifting system due to its high detection scores: it detected objects and assigned scores that activated the Raspberry Pi’s GPIO pins once the scores exceeded a set threshold. This controlled the twist lock mechanism, ensuring precise and reliable container handling.
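The threshold-based GPIO activation described above might be sketched as follows. This is a minimal illustration under stated assumptions: the pin number, the threshold value, the rule that any single score above the threshold triggers the output, and all function names are hypothetical and are not taken from the deployed system. The decision logic is kept separate from the hardware access so that it can be run without a Raspberry Pi; the `RPi.GPIO` library is imported only when the hardware writer is requested.

```python
from typing import Callable, Iterable

SCORE_THRESHOLD = 0.15  # hypothetical activation threshold
TWIST_LOCK_PIN = 17     # hypothetical BCM pin wired to the twist lock driver

PinWriter = Callable[[int, bool], None]


def should_actuate(scores: Iterable[float],
                   threshold: float = SCORE_THRESHOLD) -> bool:
    """Assumed rule: actuate when any detection score exceeds the threshold."""
    return any(score > threshold for score in scores)


def drive_twist_lock(scores: Iterable[float], set_pin: PinWriter,
                     pin: int = TWIST_LOCK_PIN,
                     threshold: float = SCORE_THRESHOLD) -> bool:
    """Set the output pin high or low according to the detection scores."""
    active = should_actuate(scores, threshold)
    set_pin(pin, active)
    return active


def make_rpi_gpio_writer() -> PinWriter:
    """Pin writer backed by RPi.GPIO (importable only on a Raspberry Pi)."""
    import RPi.GPIO as GPIO

    GPIO.setmode(GPIO.BCM)
    configured = set()

    def write(pin: int, high: bool) -> None:
        if pin not in configured:
            GPIO.setup(pin, GPIO.OUT)
            configured.add(pin)
        GPIO.output(pin, GPIO.HIGH if high else GPIO.LOW)

    return write


# Dry run with a stand-in writer that only records the requested pin state.
recorded = []
drive_twist_lock([0.10, 0.31], lambda pin, high: recorded.append((pin, high)))
print(recorded)
```

On the Raspberry Pi, the stand-in writer would be replaced by `make_rpi_gpio_writer()`, leaving the decision logic unchanged.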

Our work highlighted that supervised models, such as SSD320 FPNLite, EfficientDet-Lite0, and MobileNetV2, benefit from large, annotated datasets, resulting in higher accuracy. In contrast, Grounding DINO, despite offering large language model (LLM) capabilities to auto-annotate objects, lagged in precision, as evidenced by its lower mAP, yet achieved a higher detection score with a large effect size (Cohen’s d = 2.2). For validation, we demonstrated that the mAP of our SSD models exceeded that of the COCO2017 dataset.

In summary, this study demonstrates the effective application of Grounding DINO for detecting shipping container corner castings on a Raspberry Pi, leveraging zero-shot detection as a cost-efficient solution. While Grounding DINO showcased significant potential, supervised models like SSD320 FPNLite exhibited higher precision. Given the critical importance of high detection accuracy in mitigating safety risks, future research will aim to optimize inference timing for real-time detection, further enhancing intelligent perception and automated rail-mounted gantry control systems through zero-shot detection.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/electronics14091887/s1, Supplementary Material S1: Enlarged Visualizations of AFK Impact; Supplementary Material S2: Ground truth annotations of Custom Dataset.

Author Contributions

Conceptualization, E.K. and J.J.C.; methodology, E.K., Z.J.C. and M.L.; validation, E.K., Z.J.C. and M.L.; formal analysis, E.K., Z.J.C. and M.L.; investigation, E.K.; writing—original draft preparation, E.K.; writing—review and editing, E.K. and J.J.C.; supervision, Z.J.C.; funding acquisition, J.J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Materials. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, Y.; Huang, Y.; Zhang, Z.; Postolache, O.; Mi, C. A vision-based container position measuring system for ARMG. Meas. Control 2023, 56, 596–605.

- Bueno, G.; Sanchez-Vargas, L.; Diaz-Maroto, A.; Ruiz-Santaquiteria, J.; Blanco, M.; Salido, J.; Cristobal, G. Real-Time Edge Computing vs. GPU-Accelerated Pipelines for Low-Cost Microscopy Applications. Electronics 2025, 14, 930.

- Rey, L.; Bernardos, A.M.; Dobrzycki, A.D.; Carramiñana, D.; Bergesio, L.; Besada, J.A.; Casar, J.R. A Performance Analysis of You Only Look Once Models for Deployment on Constrained Computational Edge Devices in Drone Applications. Electronics 2025, 14, 638.

- Sun, W.; Niu, X.; Wu, Z.; Guo, Z. Lightweight Detection Counting Method for Pill Boxes Based on Improved YOLOv8n. Electronics 2024, 13, 4953.

- Cortés, J.; Somov, A.; Fedorov, V. Deployment of Smart Cameras for IoT Applications Using Raspberry Pi. Sensors 2020, 20, 7237.

- Morales, R.; Royo, F.; Sales, A. A Low-Cost IoT Sensor Based on Raspberry Pi for a Real-Time Monitoring System in Industrial Applications. Sensors 2020, 20, 2497.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Tan, C.; Xu, X.; Shen, F. A survey of zero shot detection: Methods and applications. Cogn. Robot. 2021, 1, 159–167.

- Li, L.H.; Zhang, P.; Zhang, H.; Yang, J.; Li, C.; Zhong, Y.; Wang, L.; Yuan, L.; Zhang, L.; Hwang, J.-N.; et al. Grounded Language-Image Pre-Training. arXiv 2022, arXiv:2112.03857.

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H.; et al. Grounding DINO: Marrying DINO with grounded pre-training for open-set object detection. arXiv 2023, arXiv:2303.05499.

- Ma, B.; Xu, W. Efficient Fine Tuning for Fashion Object Detection. Sensors 2023, 23, 6083.

- Liu, J.; Wang, L.; Yang, M.H. Referring expression generation and comprehension via attributes. In Proceedings of the IEEE International Conference on Computer Vision, Online, 31 October 2017; pp. 4856–4864.

- Kee, E.; Chong, J.J.; Choong, Z.J.; Lau, M. Development of Smart and Lean Pick-and-Place System Using EfficientDet-Lite for Custom Dataset. Appl. Sci. 2023, 13, 11131.

- Kee, E.; Chong, J.J.; Choong, Z.J.; Lau, M. Object Detection with Hyperparameter and Image Enhancement Optimisation for a Smart and Lean Pick-and-Place Solution. Signals 2024, 5, 87–104.

- Zhang, H.; Cui, X. Container Position Detection Technology Based on Stacker 3D Vision. In Proceedings of the 2023 3rd International Conference on Computer Science and Blockchain (CCSB), Shenzhen, China, 17–19 November 2023; pp. 69–74.

- Zhang, L.; Cheng, W.M.; Qin, Q. Container positioning system based on lidar measurement technology. Electr. Drives 2022, 52, 75–80.

- Benkert, J.; Maack, R.; Meisen, T. Chances and Challenges: Transformation from a Laser-Based to a Camera-Based Container Crane Automation System. J. Mar. Sci. Eng. 2023, 11, 1718.

- Diao, Y.; Cheng, W.; Du, R.; Wang, Y.; Zhang, J. Vision-based detection of container lock holes using a modified local sliding window method. EURASIP J. Image Video Process. 2019, 2019, 69.

- A positioning lockholes of container corner castings method based on image recognition. Pol. Marit. Res. 2017, 24, 95–101.

- Deep learning–assisted real-time container corner casting recognition. Int. J. Distrib. Sens. Netw. 2019, 15, 1550147718824462.

- Gatti, M.; Rehman, A.U.; Gallo, I. A CLIP-Based Framework to Enhance Order Accuracy in Food Packaging. Electronics 2025, 14, 1420.

- Son, J.; Jung, H. Teacher–Student Model Using Grounding DINO and You Only Look Once for Multi-Sensor-Based Object Detection. Appl. Sci. 2024, 14, 2232.

- PascalVOC. 2012. Available online: https://paperswithcode.com/dataset/pascal-voc (accessed on 1 May 2025).

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Routledge: Oxfordshire, UK, 2013.

- Jung, M.; Yang, H.; Min, K. Improving deep object detection algorithms for game scenes. Electronics 2021, 10, 2527.

- Liu, Y.; Zhao, Y.; Zhu, Y. Improved Object Detection Algorithm for Real-Time Defect Detection in Industrial Products. IEEE Access 2020, 8, 118181–118192.

- Kumar, A.; Hansson, L. Enhanced Object Detection for Automated Crane Operations in Container Ports Using Deep Learning. Sensors 2021, 21, 1271.

- COCO2017 Dataset. Available online: https://cocodataset.org/ (accessed on 1 May 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).