VRBiom: A New Periocular Dataset for Biometric Applications of Head-Mounted Display

Abstract

1. Introduction

- Benchmarking the performance of existing methods/models on HMD data;

- Fine-tuning models to address domain shifts (introduced by different capturing angles, devices, resolutions, etc.);

- Developing new methods tailored to the unique requirements of HMDs.

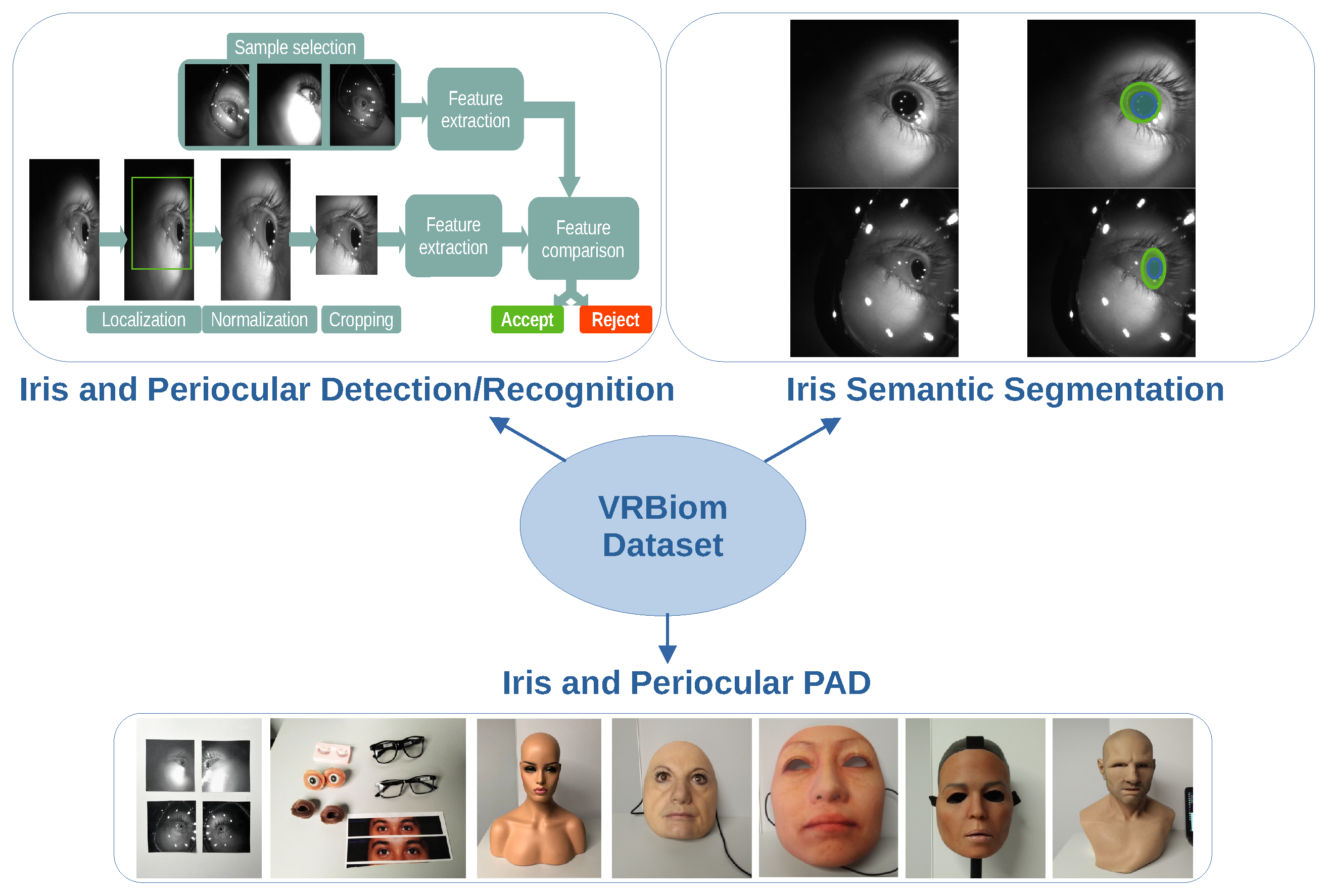

- We have created and publicly released the VRBiom dataset [20], a collection of more than 2000 periocular videos acquired with a VR device. To the best of our knowledge, this is the first publicly available dataset featuring realistic, non-frontal views of the periocular region for a variety of biometric applications.

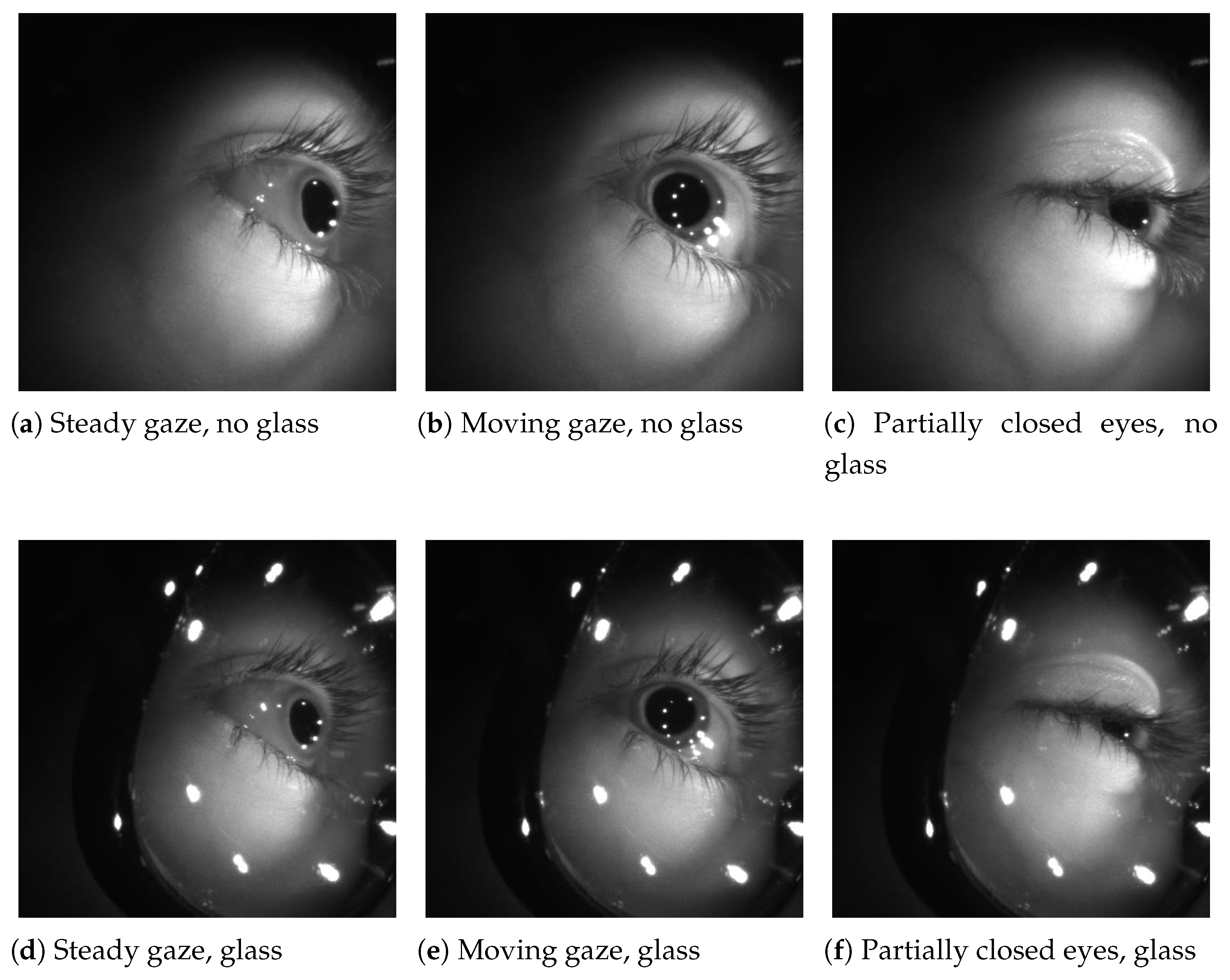

- The VRBiom dataset offers a range of realistic and challenging variations for the benchmarking and development of biometric applications. It includes recordings under three gaze conditions: steady gaze, moving gaze, and partially closed eyes. Additionally, it provides an equal split of recordings with and without glasses, facilitating the analysis of the impact of eye-wear on iris/periocular recognition.

- As part of VRBiom, we have also released more than 1100 presentation attack (PA) videos constructed from 92 presentation attack instruments (PAIs). These attacks combine different types of PAIs, such as prints, fake 3D eyeballs, and various masks, and provide a valuable resource for advancing presentation attack detection (PAD) research in a VR setup.

2. Related Datasets

2.1. Datasets for Iris/Periocular Biometrics

2.2. HMD Datasets for Iris/Periocular Biometrics

3. The VRBiom Dataset

3.1. Collection Setup

3.2. Bona Fide Recordings

- Steady Gaze: The subject maintains a nearly fixed gaze position by fixating their eyes on a specific (virtual) object.

- Moving Gaze: The subject’s gaze moves freely across the scene.

- Partially Closed Eyes: The subject keeps their eyes partially closed without fixating on any particular point.

3.3. PA Recordings

- Mannequins: We used a collection of seven plastic mannequins, each with its own eyes. These mannequins are generic (fake heads) as they do not represent any specific bona fide identity. Other PAIs require additional components to provide a stable platform, or extra equipment to stabilize the HMD. The mannequins, in contrast, offer an integrated and stable platform on which to place the HMD, which makes them particularly advantageous.

- Custom Rigid Masks (Type-I): This category includes ten custom rigid masks, five depicting men and five depicting women. The term custom implies that the masks were modelled after real individuals. Manufactured by REAL-f Co. Ltd. (Japan), these masks are made from a mixture of sandstone powder and resin. They represent real persons (though not part of the bona fide subjects in this work) and include eyes made of similar material with synthetic eyelashes attached.

- Custom Rigid Masks (Type-II): We used another collection of 14 custom rigid masks to construct PAs for VRBiom. These masks differ from the previous category in two ways: first, they were manufactured by Dig:ED (Germany) from amorphous powder compacted with resin; second, they have empty spaces at the eye locations, into which we inserted fake 3D glass eyeballs to construct an attack.

- Generic Flexible Masks: This category includes twenty flexible masks made of silicone. These full-head masks do not represent any specific identity, hence termed generic. The masks have empty holes for eyes, where we inserted printouts of periocular regions from synthetic identities. Using synthetic identities alleviates privacy concerns typically associated with creating biometric PAs.

- Custom Flexible Masks: These silicone masks represent real individuals. Similar to the previous attack categories, these masks have holes at the eye locations, where we inserted fake 3D eyes to create the attacks. We have 16 PAIs in this category.

- Vulnerability Attacks: For each subject in the bona fide collection, we created a print attack. For both eye-wear conditions (without and with glasses), we manually selected an appropriate frame from each subject's bona fide videos and printed it at true scale on a laser printer. The printouts were cut into periocular crops and placed on a mannequin so that they appear realistic when viewed by the HMD's tracking cameras. The resulting 25 print PAs can be used to assess the vulnerability of the corresponding biometric recognition system. These attacks simulate scenarios where an attacker gains access to (unencrypted) data of authorized individuals and constructs simple attacks using printouts.
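The vulnerability assessment mentioned above is commonly summarized by the impostor attack presentation match rate (IAPMR), the proportion of attack presentations that the recognition system accepts as a match to the targeted identity. The sketch below is illustrative only and is not the authors' evaluation protocol. It assumes similarity scores (higher means more similar) and fixes the decision threshold on zero-effort impostor scores at a chosen false match rate (FMR). The function names `threshold_at_fmr` and `iapmr` and the toy scores are hypothetical.

```python
import math
from typing import Sequence


def threshold_at_fmr(impostor_scores: Sequence[float], target_fmr: float) -> float:
    """Lowest threshold t such that the fraction of zero-effort impostor
    scores >= t does not exceed target_fmr (higher score = more similar)."""
    if not impostor_scores:
        raise ValueError("need at least one impostor score")
    n = len(impostor_scores)
    # FMR can only decrease as the threshold rises, so scan candidates upward.
    for t in sorted(set(impostor_scores)):
        fmr = sum(s >= t for s in impostor_scores) / n
        if fmr <= target_fmr:
            return t
    # Even the highest impostor score is too permissive: reject every impostor.
    return math.nextafter(max(impostor_scores), math.inf)


def iapmr(attack_scores: Sequence[float], threshold: float) -> float:
    """Fraction of attack presentations accepted at the given threshold."""
    if not attack_scores:
        raise ValueError("need at least one attack score")
    return sum(s >= threshold for s in attack_scores) / len(attack_scores)


# Toy scores, for illustration only (not VRBiom results).
impostors = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
attacks = [3, 9, 10, 12]
t = threshold_at_fmr(impostors, target_fmr=0.2)  # two of ten impostors reach 9
print(t, iapmr(attacks, t))  # 9 0.75
```

An IAPMR close to 1 would indicate that the print PAs are readily accepted by the recognition system. The operating point (an FMR of 20% on the toy data here) is a design choice that should match the intended application.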

3.4. Challenges

- Eyelashes: During the initial data capture experiments, we observed that the subjects' eyelashes appeared as a prominent feature, especially when capturing the periocular region. Most PAIs, where prints or 3D eyeballs were used to construct the attack, lack eyelashes; this absence would give an easy-to-detect but unrealistic cue for distinguishing attacks from bona fide presentations. To create a more realistic scenario, we decided to use false eyelashes from standard makeup kits.

- PA Recordings: Recording the PAs was challenging due to the lack of real-time feedback from the device or capture app. Achieving the correct position and angle for the various PAIs often required multiple attempts and recording trials before the data collectors obtained captures of the desired quality.

- NIR Camera's Over-exposure: The proximity between the attack instrument and the NIR illuminator/receiver often resulted in over-exposed recordings. Because the distances between the internal light source (illuminator), the subject's skin (or the material surface in the case of PAs), and the camera are fixed, there was limited flexibility to adjust the lighting conditions during acquisition. However, minor adjustments to the HMD's orientation and to the placement of the PAI mitigated the exposure issues to a considerable extent.

- Synthetic Eyes: One challenge we faced was the variability in the identity of synthetic eyes. To ensure accurate biometric measurements, we needed to carefully select synthetic eyes that closely resemble human eyes.

- Print Attack: The printout attacks designed for vulnerability assessment are inherently two-dimensional, whereas the periocular region is three-dimensional. To simulate a realistic appearance, we used small paper balls to give the printouts a rolled, curved shape, providing a structure closer to a 3D representation.

- Comfort and Safety: Prolonged use of VR devices (as well as smart and wearable devices) has often been associated with user discomfort, including symptoms such as dizziness or uneasiness, and with concerns about the Specific Absorption Rate (SAR) [36,37,38,39]. In our case, since each recording session lasted only a few minutes, these issues did not arise. However, we mention them for the benefit of future researchers who may intend to conduct longer-duration data collection.
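Because the capture setup gave no real-time feedback and over-exposure was a recurring issue, a simple offline check can help flag over-exposed NIR frames for review or re-recording. The sketch below is a hypothetical screening heuristic and not a step of the VRBiom acquisition protocol. It assumes 8-bit grayscale frames given as nested lists. The saturation level (250) and the maximum saturated fraction (20%) are illustrative assumptions that would need tuning on real recordings.

```python
from typing import Sequence

Frame = Sequence[Sequence[int]]  # rows of 8-bit grayscale intensities (0-255)


def saturated_fraction(frame: Frame, level: int = 250) -> float:
    """Fraction of pixels at or above `level` (near the 8-bit maximum)."""
    total = sum(len(row) for row in frame)
    if total == 0:
        return 0.0
    saturated = sum(1 for row in frame for px in row if px >= level)
    return saturated / total


def is_overexposed(frame: Frame, level: int = 250, max_fraction: float = 0.2) -> bool:
    """Flag a frame whose saturated-pixel fraction exceeds `max_fraction`."""
    return saturated_fraction(frame, level) > max_fraction


def flag_video(frames: Sequence[Frame], **kwargs) -> float:
    """Share of frames in a video that are flagged as over-exposed."""
    if not frames:
        return 0.0
    return sum(is_overexposed(f, **kwargs) for f in frames) / len(frames)


dark = [[40, 60], [55, 70]]
glare = [[255, 255], [252, 90]]
print(saturated_fraction(glare))  # 0.75
print(flag_video([dark, glare, glare]))
```

A per-video share of flagged frames, rather than a single frame decision, makes it easier to decide whether a whole trial should be repeated.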

4. Potential Use-Cases of the VRBiom Dataset

4.1. Iris and Periocular Recognition

4.2. Iris and Periocular PAD

4.3. Semantic Segmentation

5. Summary

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Radianti, J.; Majchrzak, T.A.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

2. Kamińska, D.; Sapiński, T.; Wiak, S.; Tikk, T.; Haamer, R.E.; Avots, E.; Helmi, A.; Ozcinar, C.; Anbarjafari, G. Virtual reality and its applications in education: Survey. Information 2019, 10, 318.

3. Xie, B.; Liu, H.; Alghofaili, R.; Zhang, Y.; Jiang, Y.; Lobo, F.D.; Li, C.; Li, W.; Huang, H.; Akdere, M.; et al. A review on virtual reality skill training applications. Front. Virtual Real. 2021, 2, 645153.

4. Chiang, F.K.; Shang, X.; Qiao, L. Augmented reality in vocational training: A systematic review of research and applications. Comput. Hum. Behav. 2022, 129, 107125.

5. Yeung, A.W.K.; Tosevska, A.; Klager, E.; Eibensteiner, F.; Laxar, D.; Stoyanov, J.; Glisic, M.; Zeiner, S.; Kulnik, S.T.; Crutzen, R.; et al. Virtual and augmented reality applications in medicine: Analysis of the scientific literature. J. Med. Internet Res. 2021, 23, e25499.

6. Javaid, M.; Haleem, A. Virtual reality applications toward medical field. Clin. Epidemiol. Glob. Health 2020, 8, 600–605.

7. Suh, I.; McKinney, T.; Siu, K.C. Current perspective of metaverse application in medical education, research and patient care. Virtual Worlds 2023, 2, 115–128.

8. Boutros, F.; Damer, N.; Raja, K.; Ramachandra, R.; Kirchbuchner, F.; Kuijper, A. Iris and periocular biometrics for head mounted displays: Segmentation, recognition, and synthetic data generation. Image Vis. Comput. 2020, 104, 104007.

9. Boutros, F.; Damer, N.; Raja, K.; Ramachandra, R.; Kirchbuchner, F.; Kuijper, A. On benchmarking iris recognition within a head-mounted display for AR/VR applications. In Proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Houston, TX, USA, 28 September–1 October 2020; pp. 1–10.

10. Arena, F.; Collotta, M.; Pau, G.; Termine, F. An overview of augmented reality. Computers 2022, 11, 28.

11. Scavarelli, A.; Arya, A.; Teather, R.J. Virtual reality and augmented reality in social learning spaces: A literature review. Virtual Real. 2021, 25, 257–277.

12. Zhang, C.; Chen, J.; Li, J.; Peng, Y.; Mao, Z. Large language models for human–robot interaction: A review. Biomim. Intell. Robot. 2023, 3, 100131.

13. Pfeuffer, K.; Geiger, M.J.; Prange, S.; Mecke, L.; Buschek, D.; Alt, F. Behavioural biometrics in VR: Identifying people from body motion and relations in virtual reality. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

14. Morales, A.; Fierrez, J.; Galbally, J.; Gomez-Barrero, M. Introduction to iris presentation attack detection. In Handbook of Biometric Anti-Spoofing: Presentation Attack Detection; Springer: Berlin/Heidelberg, Germany, 2019; pp. 135–150.

15. Czajka, A.; Bowyer, K.W. Presentation attack detection for iris recognition: An assessment of the state-of-the-art. ACM Comput. Surv. (CSUR) 2018, 51, 1–35.

16. Wang, Z.; Zhao, Y.; Liu, Y.; Lu, F. Edge-guided near-eye image analysis for head mounted displays. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy, 4–8 October 2021; pp. 11–20.

17. Clay, V.; König, P.; Koenig, S. Eye tracking in virtual reality. J. Eye Mov. Res. 2019, 12, 1–18.

18. Kapp, S.; Barz, M.; Mukhametov, S.; Sonntag, D.; Kuhn, J. ARETT: Augmented reality eye tracking toolkit for head mounted displays. Sensors 2021, 21, 2234.

19. Bozkir, E.; Özdel, S.; Wang, M.; David-John, B.; Gao, H.; Butler, K.; Jain, E.; Kasneci, E. Eye-tracked virtual reality: A comprehensive survey on methods and privacy challenges. arXiv 2023, arXiv:2305.14080.

20. Idiap Research Institute. VRBiom Dataset. 2024. Available online: https://www.idiap.ch/dataset/vrbiom (accessed on 31 March 2025).

21. Chinese Academy of Sciences. CASIA Iris Image Database. 2002. Available online: http://biometrics.idealtest.org (accessed on 31 March 2025).

22. Zanlorensi, L.A.; Laroca, R.; Luz, E.; Britto, A.S., Jr.; Oliveira, L.S.; Menotti, D. Ocular recognition databases and competitions: A survey. Artif. Intell. Rev. 2022, 55, 129–180.

23. Omelina, L.; Goga, J.; Pavlovicova, J.; Oravec, M.; Jansen, B. A survey of iris datasets. Image Vis. Comput. 2021, 108, 104109.

24. Yambay, D.; Becker, B.; Kohli, N.; Yadav, D.; Czajka, A.; Bowyer, K.W.; Schuckers, S.; Singh, R.; Vatsa, M.; Noore, A.; et al. LivDet iris 2017–Iris liveness detection competition 2017. In Proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 733–741.

25. Tinsley, P.; Purnapatra, S.; Mitcheff, M.; Boyd, A.; Crum, C.; Bowyer, K.; Flynn, P.; Schuckers, S.; Czajka, A.; Fang, M.; et al. Iris Liveness Detection Competition (LivDet-Iris)–The 2023 Edition. In Proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Ljubljana, Slovenia, 25–28 September 2023; pp. 1–10.

26. Proença, H.; Alexandre, L.A. UBIRIS: A noisy iris image database. In Proceedings of the International Conference on Image Analysis and Processing (ICIAP), Cagliari, Italy, 6–8 September 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 970–977.

27. Proença, H.; Filipe, S.; Santos, R.; Oliveira, J.; Alexandre, L.A. The UBIRIS.v2: A database of visible wavelength iris images captured on-the-move and at-a-distance. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1529–1535.

28. Teo, C.C.; Neo, H.F.; Michael, G.; Tee, C.; Sim, K. A robust iris segmentation with fuzzy supports. In Proceedings of the International Conference on Neural Information Processing (ICONIP), Sydney, Australia, 21–25 November 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 532–539.

29. Nguyen, K.; Proença, H.; Alonso-Fernandez, F. Deep learning for iris recognition: A survey. ACM Comput. Surv. 2024, 56, 1–35.

30. McMurrough, C.D.; Metsis, V.; Kosmopoulos, D.; Maglogiannis, I.; Makedon, F. A dataset for point of gaze detection using head poses and eye images. J. Multimodal User Interfaces 2013, 7, 207–215.

31. Tonsen, M.; Zhang, X.; Sugano, Y.; Bulling, A. Labelled pupils in the wild: A dataset for studying pupil detection in unconstrained environments. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14–17 March 2016; pp. 139–142.

32. Kim, J.; Stengel, M.; Majercik, A.; De Mello, S.; Dunn, D.; Laine, S.; McGuire, M.; Luebke, D. NVGaze: An anatomically-informed dataset for low-latency, near-eye gaze estimation. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–12.

33. Garbin, S.J.; Shen, Y.; Schuetz, I.; Cavin, R.; Hughes, G.; Talathi, S.S. OpenEDS: Open eye dataset. arXiv 2019, arXiv:1905.03702.

34. Palmero, C.; Sharma, A.; Behrendt, K.; Krishnakumar, K.; Komogortsev, O.V.; Talathi, S.S. OpenEDS2020: Open eyes dataset. arXiv 2020, arXiv:2005.03876.

35. Meta Platforms, Inc. Compare Devices. 2025. Available online: https://developers.meta.com/horizon/resources/compare-devices/ (accessed on 31 March 2025).

36. Chang, E.; Kim, H.T.; Yoo, B. Virtual reality sickness: A review of causes and measurements. Int. J. Hum.–Comput. Interact. 2020, 36, 1658–1682.

37. Chen, Y.; Wang, X.; Xu, H. Human factors/ergonomics evaluation for virtual reality headsets: A review. CCF Trans. Pervasive Comput. Interact. 2021, 3, 99–111.

38. Laganà, F.; Bibbò, L.; Calcagno, S.; De Carlo, D.; Pullano, S.A.; Pratticò, D.; Angiulli, G. Smart Electronic Device-Based Monitoring of SAR and Temperature Variations in Indoor Human Tissue Interaction. Appl. Sci. 2025, 15, 2439.

39. Vrellis, I.; Delimitros, M.; Chalki, P.; Gaintatzis, P.; Bellou, I.; Mikropoulos, T.A. Seeing the unseen: User experience and technology acceptance in Augmented Reality science literacy. In Proceedings of the 2020 IEEE 20th International Conference on Advanced Learning Technologies (ICALT), Tartu, Estonia, 6–9 July 2020; pp. 333–337.

40. Bowyer, K.W.; Burge, M.J. Handbook of Iris Recognition; Springer: Berlin/Heidelberg, Germany, 2016.

41. Yin, Y.; He, S.; Zhang, R.; Chang, H.; Han, X.; Zhang, J. Deep learning for iris recognition: A review. arXiv 2023, arXiv:2303.08514.

42. Alonso-Fernandez, F.; Bigun, J. A survey on periocular biometrics research. Pattern Recognit. Lett. 2016, 82, 92–105.

43. Zhang, M.; Liu, J.; Sun, Z.; Tan, T. The first ICB* competition on iris recognition. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–6.

44. Zhang, M.; Zhang, Q.; Sun, Z.; Zhou, S.; Ahmed, N.U. The BTAS competition on mobile iris recognition. In Proceedings of the 8th International Conference on Biometrics Theory, Applications and Systems (BTAS), Niagara Falls, NY, USA, 6–9 September 2016; pp. 1–7.

45. Boutros, F.; Damer, N.; Raja, K.; Ramachandra, R.; Kirchbuchner, F.; Kuijper, A. Periocular Biometrics in Head-Mounted Displays: A Sample Selection Approach for Better Recognition. In Proceedings of the International Workshop on Biometrics and Forensics (IWBF), Porto, Portugal, 29–30 April 2020; pp. 1–6.

46. Boyd, A.; Fang, Z.; Czajka, A.; Bowyer, K.W. Iris presentation attack detection: Where are we now? Pattern Recognit. Lett. 2020, 138, 483–489.

47. Raja, K.B.; Raghavendra, R.; Braun, J.N.; Busch, C. Presentation attack detection using a generalizable statistical approach for periocular and iris systems. In Proceedings of the International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 21–23 September 2016; pp. 1–6.

48. Hoffman, S.; Sharma, R.; Ross, A. Iris + Ocular: Generalized iris presentation attack detection using multiple convolutional neural networks. In Proceedings of the International Conference on Biometrics (ICB), Crete, Greece, 4–7 June 2019; pp. 1–8.

49. Liveness Detection Competitions. LivDet. 2025. Available online: https://livdet.org (accessed on 31 March 2025).

50. Adegoke, B.; Omidiora, E.; Falohun, S.; Ojo, J. Iris Segmentation: A survey. Int. J. Mod. Eng. Res. (IJMER) 2013, 3, 1885–1889.

51. Rot, P.; Vitek, M.; Grm, K.; Emeršič, Ž.; Peer, P.; Štruc, V. Deep sclera segmentation and recognition. In Handbook of Vascular Biometrics; Springer: Basel, Switzerland, 2020; pp. 395–432.

52. Chaudhary, A.K.; Kothari, R.; Acharya, M.; Dangi, S.; Nair, N.; Bailey, R.; Kanan, C.; Diaz, G.; Pelz, J.B. RITnet: Real-time semantic segmentation of the eye for gaze tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 3698–3702.

53. Perry, J.; Fernandez, A. MinENet: A dilated CNN for semantic segmentation of eye features. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019; pp. 3671–3676.

54. Valenzuela, A.; Arellano, C.; Tapia, J.E. Towards an efficient segmentation algorithm for near-infrared eyes images. IEEE Access 2020, 8, 171598–171607.

55. Wang, C.; Wang, W.; Zhang, K.; Muhammad, J.; Lu, T.; Zhang, Q.; Tian, Q.; He, Z.; Sun, Z.; Zhang, Y.; et al. NIR iris challenge evaluation in non-cooperative environments: Segmentation and localization. In Proceedings of the 2021 IEEE International Joint Conference on Biometrics (IJCB), Shenzhen, China, 4–7 August 2021; pp. 1–10.

56. Das, A.; Atreya, S.; Mukherjee, A.; Vitek, M.; Li, H.; Wang, C.; Zhao, G.; Boutros, F.; Siebke, P.; Kolf, J.N.; et al. Sclera Segmentation and Joint Recognition Benchmarking Competition: SSRBC 2023. In Proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Ljubljana, Slovenia, 25–28 September 2023; pp. 1–10.

| Dataset | Subset | #Subjects | #Images | Resolution | Types of Attacks | Collection Year | Institution(s) |
|---|---|---|---|---|---|---|---|
| CASIA-Iris series [21] | CASIA-IrisV1 | 108 * | 756 | | No | 2002 | Chinese Academy of Sciences |
| | CASIA-IrisV2 | 120 ** | 2400 | | No | 2004 | Chinese Academy of Sciences |
| | CASIA-IrisV3 | 700 | 22,034 | Various | No | 2005 | Chinese Academy of Sciences |
| | CASIA-IrisV4 | 2800 | 54,601 | Various | No | 2010 | Chinese Academy of Sciences |
| LivDet-Iris 2017 [24] | ND CLD 2015 | - | 7300 | | contact lens (live) | 2015 | University of Notre Dame |
| | IIITD-WVU | - | 7459 | | | 2017 | IIIT Delhi |
| | Clarkson | 50 | 8095 | | Print and contact lens (live) | 2017 | Clarkson University |
| | Warsaw | 457 | 12,013 | | Print and contact lens (print, live) | 2017 | Warsaw University |
| UBIRIS [26,27] | UBIRIS-V1 | 241 | 1877 | | No | 2004 | University of Beira |
| | UBIRIS-V2 | 261 | 11,102 | | No | 2009 | University of Beira |
| MMU [28,29] | MMU-V1 | 45 | 450 | - | No | 2010 | Multimedia University |
| | MMU-V2 | 100 | 995 | - | No | 2010 | Multimedia University |
| LivDet-Iris 2023 [25] | - | - | 13,332 *** | Various | Print, contact lens, fake/prosthetic eyes, and synthetic iris | 2023 | Multiple Institutions |

| Dataset | Collection Year | #Subjects | #Images | Resolution | Frame Rate (fps) | Presentation Attacks | Profile | Synthetic Eye Data |
|---|---|---|---|---|---|---|---|---|
| Point of Gaze (PoG) [30] | 2012 | 20 | – | | 30 | ✗ | Frontal | ✗ |
| LPW [31] | 2016 | 22 | 130,856 | | 95 | ✗ | Frontal | ✗ |
| NVGaze [32] | 2019 | 35 | 2,500,000 | | 30 | ✗ | Frontal | ✓ |
| OpenEDS2019 [33] | 2019 | 152 | 356,649 | | 200 | ✗ | Frontal | ✗ |
| OpenEDS2020 [34] | 2020 | 80 | 579,900 | | 100 | ✗ | Frontal | ✗ |
| VRBiom (this work) | 2024 | 25 | 1,262,520 | | 72 | ✓ | Non-Frontal | ✓ |

| Feature | Specification |
|---|---|
| Display Type | LCD with local dimming |
| Pixels Per Degree (PPD) | 22 PPD |
| Refresh Rate | Up to 90 Hz |
| Processor | Qualcomm Snapdragon XR2+ Gen 1 |
| RAM | 12 GB LPDDR5 |
| Tracking Capabilities | Head, hand, face, and eye tracking |
| Field of View | 106° horizontal × 90° vertical |
| Wi-Fi | Wi-Fi 6E capable |
| Battery Life | ≈2.5 h |

| #Subjects | #Gaze Conditions | #Eyewear Conditions | #Repetitions | #Eyes | #Total Videos |
|---|---|---|---|---|---|
| 25 | 3 | 2 | 3 | 2 | 900 |
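The total of 900 bona fide videos in the table above follows from a full factorial design: 25 subjects × 3 gaze conditions × 2 eyewear conditions × 3 repetitions × 2 eyes. The sketch below enumerates that design and derives a subject-disjoint train/test split of the kind often used in recognition and PAD experiments. Only the counts come from the table. The labels (subject IDs, condition names, `left`/`right`), the helper names, and the choice of five test subjects are hypothetical. They do not reflect the dataset's actual file naming or any official VRBiom protocol.

```python
from itertools import product
from typing import Iterable, List, Tuple

# Hypothetical labels; only the counts come from the table above.
SUBJECTS = [f"S{i:02d}" for i in range(1, 26)]   # 25 subjects
GAZE = ["steady", "moving", "partially_closed"]  # 3 gaze conditions
EYEWEAR = ["no_glasses", "glasses"]              # 2 eyewear conditions
REPETITIONS = [1, 2, 3]                          # 3 repetitions
EYES = ["left", "right"]                         # 2 eyes, one video each

Recording = Tuple[str, str, str, int, str]


def bona_fide_recordings() -> List[Recording]:
    """Every (subject, gaze, eyewear, repetition, eye) combination."""
    return list(product(SUBJECTS, GAZE, EYEWEAR, REPETITIONS, EYES))


def split_by_subject(records: Iterable[Recording], test_subjects: set):
    """Subject-disjoint split: no subject appears in both partitions."""
    records = list(records)
    train = [r for r in records if r[0] not in test_subjects]
    test = [r for r in records if r[0] in test_subjects]
    return train, test


recs = bona_fide_recordings()
train, test = split_by_subject(recs, set(SUBJECTS[:5]))
print(len(recs), len(recs) // len(SUBJECTS), len(train), len(test))  # 900 36 720 180
```

Splitting by subject rather than by video keeps every recording of a given person on one side of the split, so reported scores are not inflated by identity overlap.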

| Type | PA Series | Subtype | # Identities | # Videos | Attack Types |
|---|---|---|---|---|---|
| Bona fide | – | [steady gaze, moving gaze, partially closed] × [glass, no glass] | 25 | 900 | – |
| Presentation Attacks | 2 | Mannequins | 7 | 84 | Own eyes (same material) |
| | 3 | Custom Rigid Mask (Type I) | 10 | 120 | Own eyes (same material) |
| | 4 | Custom Rigid Mask (Type II) | 14 | 168 | Fake 3D eyeballs |
| | 5 | Generic Flexible Masks | 20 | 240 | Print attacks (synthetic data) |
| | 6 | Custom Silicone Masks | 16 | 192 | Fake 3D eyeballs |
| | 7 | Print Attacks | 25 | 300 | Print attacks (real data) |
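As a consistency check, the per-series counts in the PA table above reproduce the totals quoted in the Introduction: 92 PAIs and 1104 PA videos. That works out to 12 videos per PAI in every series, and to 2004 videos once the 900 bona fide recordings are added. The last step divides the 1,262,520 images listed for VRBiom in the dataset comparison table by those 2004 videos. It rests on two assumptions that the text does not state: that the image count covers every bona fide and PA frame, and that all videos have the same length. Under these assumptions, each video would hold 630 frames, about 8.75 s at 72 fps. The variable names are illustrative.

```python
# (series, subtype, #PAIs, #videos) as listed in the PA table above.
PA_SERIES = [
    (2, "Mannequins", 7, 84),
    (3, "Custom Rigid Mask (Type I)", 10, 120),
    (4, "Custom Rigid Mask (Type II)", 14, 168),
    (5, "Generic Flexible Masks", 20, 240),
    (6, "Custom Silicone Masks", 16, 192),
    (7, "Print Attacks", 25, 300),
]
BONA_FIDE_VIDEOS = 900
TOTAL_IMAGES = 1_262_520  # VRBiom "#Images" in the comparison table
FPS = 72                  # VRBiom frame rate in the comparison table

n_pais = sum(pais for _, _, pais, _ in PA_SERIES)
n_pa_videos = sum(videos for _, _, _, videos in PA_SERIES)
videos_per_pai = {name: videos / pais for _, name, pais, videos in PA_SERIES}
total_videos = BONA_FIDE_VIDEOS + n_pa_videos

# Assumption (not stated in the text): equal-length videos, #Images counts every frame.
frames_per_video, remainder = divmod(TOTAL_IMAGES, total_videos)

print(n_pais, n_pa_videos, total_videos)                    # 92 1104 2004
print(set(videos_per_pai.values()))                         # {12.0}
print(frames_per_video, remainder, frames_per_video / FPS)  # 630 0 8.75
```

The zero remainder is consistent with, but does not prove, a fixed clip length per recording.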

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kotwal, K.; Ulucan, I.; Özbulak, G.; Selliah, J.; Marcel, S. VRBiom: A New Periocular Dataset for Biometric Applications of Head-Mounted Display. Electronics 2025, 14, 1835. https://doi.org/10.3390/electronics14091835

Kotwal K, Ulucan I, Özbulak G, Selliah J, Marcel S. VRBiom: A New Periocular Dataset for Biometric Applications of Head-Mounted Display. Electronics. 2025; 14(9):1835. https://doi.org/10.3390/electronics14091835

Chicago/Turabian Style: Kotwal, Ketan, Ibrahim Ulucan, Gökhan Özbulak, Janani Selliah, and Sébastien Marcel. 2025. "VRBiom: A New Periocular Dataset for Biometric Applications of Head-Mounted Display" Electronics 14, no. 9: 1835. https://doi.org/10.3390/electronics14091835

APA Style: Kotwal, K., Ulucan, I., Özbulak, G., Selliah, J., & Marcel, S. (2025). VRBiom: A New Periocular Dataset for Biometric Applications of Head-Mounted Display. Electronics, 14(9), 1835. https://doi.org/10.3390/electronics14091835