Abstract

This paper investigates discrete-time distributed optimization for multi-agent systems (MASs) with time-varying objective functions. A novel fully distributed event-triggered discrete-time zeroing neural network (ET-DTZNN) algorithm is proposed to address the discrete-time distributed time-varying optimization (DTDTVO) problem without relying on periodic communication. Each agent determines the optimal solutions by relying solely on local information, such as its own objective function. Moreover, information is exchanged with neighboring agents exclusively when event-triggered conditions are satisfied, significantly reducing communication consumption. The ET-DTZNN algorithm is derived by discretizing a proposed event-triggered continuous-time ZNN (ET-CTZNN) model via the Euler formula. The ET-CTZNN model addresses the time-varying optimization problem in a semi-centralized framework under continuous-time dynamics. Theoretical analyses rigorously establish the convergence of both the ET-CTZNN model and the ET-DTZNN algorithm. Simulation results highlight the algorithm’s effectiveness, precision, and superior communication efficiency compared with traditional periodic communication-based approaches.

1. Introduction

The rapid advancement of large-scale networks and the progress in big data theory have significantly fueled the exploration of distributed optimization algorithms. These algorithms have found widespread application across various scientific and engineering domains [1,2,3,4], and they prove particularly valuable in contexts such as power economic dispatch [2], Nash equilibrium seeking [3], and smart grids [4]. Within distributed optimization architectures, the collective objective function of a multi-agent network is the sum of the individual agents’ local sub-objectives. During execution, each agent has exclusive access to its own sub-objective function and exchanges state information with neighboring agents. The global optimal solution is attained through consensus across all participating agents [1]. Recent advancements in distributed optimization have focused on various approaches to enhance efficiency and effectiveness. For example, Pan et al. presented a robust optimization method for active distribution networks with variable-speed pumped storage to mitigate the risks associated with new energy sources [5]. Luo et al. introduced a consensus-based distributed optimal dispatch algorithm for integrated energy microgrids, aiming to achieve economic operation and power supply–demand balance [6]. Additionally, Tariq et al. presented an optimal control strategy for centralized thermoelectric generation systems using the barnacles mating optimization algorithm to address nonuniform temperature distribution [7]. Further, Yang et al. proposed the heavy-ball Barzilai–Borwein algorithm, a discrete-time method combining heavy-ball momentum and Barzilai–Borwein step sizes for static distributed optimization over time-varying directed graphs [8]. Lastly, a privacy-preserving distributed optimization algorithm for economic dispatch over time-varying directed networks was developed by An et al. to protect sensitive information while ensuring fully distributed and efficient operation [9].

While prior research has extensively addressed distributed optimization with static objective functions, real-world implementations frequently require handling distributed optimization problems whose objective functions evolve over time [10,11,12,13]. Unlike distributed static optimization problems, which deal with fixed objective functions, the distributed time-varying optimization (DTVO) problem involves objective functions that change over time. This dynamic nature introduces several challenges, such as the need for continuous adaptation to changing conditions, ensuring convergence to time-dependent optimal solutions, and maintaining communication efficiency among agents [12]. Recent advancements in the DTVO problem have introduced several innovative algorithms to address dynamic challenges. Zheng et al. proposed a specified-time convergent algorithm tailored for distributed optimization problems characterized by time-varying objective functions. This algorithm guarantees convergence within a predefined timeframe [14]. Wang et al. developed a distributed algorithm for time-varying quadratic optimal resource allocation, focusing on applications like multi-quadrotor hose transportation [15]. Ding et al. developed a distributed algorithm based on output regulation theory to handle both equality and inequality constraints in time-varying optimization problems [16]. Rahili et al. studied the time-varying distributed convex optimization problem for continuous-time multi-agent systems and introduced control algorithms for single-integrator and double-integrator dynamics [17].

Introduced by Zhang et al. in 2002 [18], zeroing neural network (ZNN) models and ZNN algorithms offer a solution for tackling a variety of challenging time-varying problems. Thanks to their ability to handle parallel computing, their suitability for hardware implementation, and their distributed nature, ZNN models have been extensively applied to solve various time-varying challenges since their creation [19,20,21,22]. Moreover, in recent years, researchers have introduced several innovative algorithms to address distributed time-varying problems. For example, Xiao et al. proposed a distributed fixed-time ZNN algorithm designed to achieve fixed-time and robust consensus in multi-agent systems under switching topology [22]. Liao et al. introduced a novel ZNN algorithm for real-time management of multi-vehicle cooperation, illustrating its effectiveness and robustness in achieving real-time cooperation among multiple vehicles [23]. Yu et al. presented a data-driven control algorithm based on discrete ZNN for continuum robots, ensuring real-time control and adaptability to varying conditions [24]. Jin et al. introduced a distributed approach to collaboratively generate motion within a network of redundant robotic manipulators, utilizing a noise-tolerant ZNN to handle communication interferences and computational errors [25].

The aforementioned distributed ZNN models and algorithms tackle continuous-time DTVO problems. However, it is essential to develop discrete-time ZNN algorithms for the practical implementation of theoretical continuous-time solutions in digital systems, ensuring accurate and efficient real-time computations while leveraging the advantages of digital hardware [26]. While there is plenty of research on centralized discrete-time ZNN algorithms [26,27], research on distributed ZNN algorithms that tackle discrete-time distributed time-varying problems is scarce. Notably, conventional discrete-time ZNN algorithms predominantly employ periodic iteration mechanisms [26,27]. If applied to distributed ZNN algorithms, this architecture inherently incurs elevated communication consumption, particularly under small sampling intervals or reduced iteration step sizes, and communication consumption is a critical performance metric for assessing the efficacy of distributed algorithms. In contrast, event-triggered communication paradigms regulate inter-agent data transmission through state-dependent triggering criteria, thereby achieving substantial communication efficiency gains. Therefore, some researchers have dedicated their efforts to event-triggered ZNN algorithms, and the results have shown significant progress in addressing time-varying problems. For instance, Dai et al. introduced an adaptive fuzzy ZNN model integrated with an event-triggered mechanism to address the time-varying matrix inversion problem. Their approach achieved finite-time convergence and demonstrated robustness [28]. Kong et al. introduced a predefined-time fuzzy ZNN algorithm with an event-triggered mechanism for kinematic planning of manipulators, ensuring predefined-time convergence and reducing computational burden [29].

Motivated by the need to address the discrete-time DTVO (DTDTVO) problem, a novel event-triggered discrete-time ZNN (ET-DTZNN) algorithm is proposed. This algorithm is built upon an event-triggered continuous-time zeroing neural network (ET-CTZNN) model, which is specifically designed to address the continuous-time distributed time-varying optimization (CTDTVO) problem within a semi-centralized framework. By discretizing the ET-CTZNN model using the Euler formula, the ET-DTZNN algorithm enables agents to compute optimal solutions based on local time-varying objective functions and exchange information with neighbors only when specific event-triggered conditions are met. This algorithm significantly reduces communication consumption while preserving the temporal tracking capabilities inherent to ZNN algorithms. In this paper, the ET-DTZNN algorithm is designed to solve unconstrained distributed optimization problems; it assumes strong convexity of the agents’ objective functions.

The remainder of this paper consists of six sections. In Section 2, mathematical notations, graph theory preliminaries, and the DTDTVO problem formulation are introduced. Section 3 focuses on the ET-CTZNN model, encompassing the formulation of the CTDTVO problem in Section 3.1 and the detailed design process of the ET-CTZNN model in Section 3.2. The design process and description of the ET-DTZNN algorithm are provided in Section 4. Section 5 delivers theoretical proofs demonstrating the convergence of both the ET-CTZNN model and the ET-DTZNN algorithm. Numerical examples showcasing the effectiveness, precision, and enhanced communication efficiency of the ET-DTZNN algorithm are presented in Section 6. Finally, Section 7 concludes the paper. The key contributions of this research are outlined as follows.

- An innovative ET-CTZNN model is introduced to tackle the CTDTVO problem. The ET-CTZNN model operates within a semi-centralized framework, solving the CTDTVO problem in continuous time.

- The ET-CTZNN model is discretized using the Euler formula to develop the fully distributed ET-DTZNN algorithm. The ET-DTZNN algorithm enables agents to compute optimal solutions based on local time-varying objective functions and exchange state information with neighbors only when specific event-triggered conditions are met, significantly reducing communication consumption.

- Comprehensive theoretical analyses are conducted, establishing the convergence of both the ET-CTZNN model and the ET-DTZNN algorithm, ensuring their effectiveness in solving the DTVO problem.

2. Preliminaries and Problem Formulation for DTDTVO

To lay the basis for further investigation, some preliminaries concerning mathematical notations and graph theory are given in Section 2.1, and the formulation of the DTDTVO problem is given in Section 2.2.

2.1. Preliminaries

This subsection outlines key preliminaries related to mathematical notations and graph theory. The notation $\|\cdot\|_2$ denotes the 2-norm of a vector or a matrix, while $(\cdot)^{\mathrm{T}}$ signifies the transpose. Furthermore, $I_d \in \mathbb{R}^{d \times d}$ represents the identity matrix, $\mathbf{1}_d$ is a d-dimensional vector of ones, and ⊗ denotes the Kronecker product [30]. The Kronecker product is used to extend graph operators like the Laplacian matrix into higher-dimensional spaces, which is essential for distributed algorithms to handle multi-dimensional agent states.

The following paragraph introduces fundamental concepts from graph theory [31]. These principles establish the theoretical basis needed for modeling and analyzing MASs. The notation $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ denotes a weighted graph, $\mathcal{V} = \{1, \ldots, n\}$ represents the vertex set, and $\mathcal{E} \subseteq \mathcal{V} \times \mathcal{V}$ denotes the edge set. The adjacency matrix $A = [a_{ij}] \in \mathbb{R}^{n \times n}$ is defined such that $a_{ij} > 0$ if $(j, i) \in \mathcal{E}$ and $a_{ij} = 0$ otherwise. The neighbor set of the ith vertex is represented by $\mathcal{N}_i = \{j \in \mathcal{V} : (j, i) \in \mathcal{E}\}$. Graph $\mathcal{G}$ is classified as connected and undirected if there exists a path connecting any pair of vertices in $\mathcal{V}$ and if $(i, j) \in \mathcal{E}$ implies $(j, i) \in \mathcal{E}$. The degree matrix is given by $D = \mathrm{diag}(d_1, \ldots, d_n)$ with $d_i = \sum_{j=1}^{n} a_{ij}$. Moreover, the Laplacian matrix is defined as $L = D - A$. In distributed optimization, agents work together across a network to solve a shared problem. Consensus means that all agents agree on the same solution, which is essential for success. The Laplacian matrix reflects the network’s structure: it weights the differences between each agent’s state and its neighbors’ states, so driving these differences to zero aligns the agents’ solutions. This alignment aids the optimization process by coordinating local efforts.

Assumption 1.

Graph $\mathcal{G}$ is undirected and connected.

If $\mathcal{G}$ is not connected, the system may split into isolated subnetworks, preventing global consensus and leading to inconsistent optimization solutions across agents.
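As a minimal sketch of the graph quantities introduced above, the following Python snippet builds the Laplacian matrix from an adjacency matrix, checks the two requirements of Assumption 1, and verifies that a consensus state is mapped to zero by the Laplacian. The four-agent ring topology and the helper names (`laplacian`, `is_undirected`, `is_connected`) are illustrative assumptions and are not taken from the paper.

```python
from collections import deque


def laplacian(adj):
    """Return L = D - A for a weighted adjacency matrix given as nested lists."""
    n = len(adj)
    lap = [[-float(adj[i][j]) for j in range(n)] for i in range(n)]
    for i in range(n):
        # Degree of vertex i: sum of its edge weights (self-loops excluded).
        lap[i][i] = float(sum(adj[i][j] for j in range(n) if j != i))
    return lap


def is_undirected(adj):
    """An undirected graph has a symmetric adjacency matrix."""
    n = len(adj)
    return all(adj[i][j] == adj[j][i] for i in range(n) for j in range(n))


def is_connected(adj):
    """Breadth-first search from vertex 0; connected if every vertex is reached."""
    n = len(adj)
    seen = {0}
    queue = deque([0])
    while queue:
        i = queue.popleft()
        for j in range(n):
            if adj[i][j] > 0 and j not in seen:
                seen.add(j)
                queue.append(j)
    return len(seen) == n


# Hypothetical four-agent ring: 0 - 1 - 2 - 3 - 0, unit weights.
A = [[0, 1, 0, 1],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 1, 0]]
L = laplacian(A)

# A consensus state (all agents hold the same value) satisfies L x = 0.
consensus = [2.5] * 4
residual = [sum(L[i][j] * consensus[j] for j in range(4)) for i in range(4)]
print(is_undirected(A), is_connected(A), residual)
```

The check `L x = 0` for a consensus state is what allows the consensus requirement to be written as a linear equality constraint on the stacked agent states.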

2.2. Problem Formulation for DTDTVO

To establish a foundation for further exploration and discussion, this subsection provides the formulation of the DTDTVO problem. The details are outlined to facilitate a clear understanding of the problem structure and its associated constraints.

Let us consider the following DTDTVO problem with the optimal solution to be solved by a multi-agent system consisting of n agents at each computational time interval :

where . In addition, represents the local time-varying objective function of agent i. It is required that be strongly convex and second-order differentiable for all . Here, is the shared optimization variable. This variable is common across all agents in the MAS, and the goal is for the agents to collaboratively optimize it. The time-varying objective function is obtained by sampling at the time instant , referred to as . Here, represents the sampling interval, and denotes the update index.

Given that the communication graph is connected and undirected, the Laplacian matrices associated with facilitate consensus among agents. According to [32], DTDTVO Problem (1) can be transformed into an equivalent formulation, as described in the following lemma.

Lemma 1.

When the graph is connected and undirected, DTDTVO Problem (1) can be equivalently represented by the following formulations [32]:

with denoting the local solution of the shared optimization variable computed by agent i. In addition, the collective state of the whole MAS is defined as and the coefficient matrix is defined as .

In the real-time solving process of DTDTVO Problem (2)–(3), all computations must be executed using present and/or previous data. To address the DTDTVO problem in a fully distributed way, the data utilized are limited to local information, including the local objective function and observations of neighbors’ states. For example, at time instant , for agent i with a neighboring set , only information such as , , and with for is available. The future unknown information such as and is not computed yet and cannot be used for computing the future unknown during . And since is also computed during , it is also unknown to agent i. This paper aims to find the future unknown optimal solution during from present and/or previous data in a fully distributed manner.

3. Event-Triggered Continuous-Time ZNN Model

In this section, a CTZNN model is developed to solve the CTDTVO problem in Section 3.1. On the basis of the CTZNN model, an ET-CTZNN model is designed by introducing an event-triggered mechanism in Section 3.2.

3.1. Continuous-Time ZNN Model

To develop the distributed discrete-time ZNN algorithm to achieve the solution of (2)–(3) effectively, a continuous-time ZNN (CTZNN) model is constructed first. Let us consider the continuous-time counterpart of (2)–(3) expressed as follows:

with , where denotes the local solution of the shared optimization variable computed by agent i in continuous time.

Under Lemma 1, the consensus problem is redefined as Equality Constraint (5). While equality constraints are traditionally addressed using the Lagrange function in the conventional ZNN design method [26], this approach is not feasible for Equality Constraint (5) due to the inherent rank deficiency of the Laplacian matrix L. As a result, the coefficient matrix defined in Lemma 1 is also rank-deficient. Therefore, an alternative method is required to derive the optimal solution for CTDTVO Problem (4)–(5). A penalty function is introduced to handle the consensus problem. The penalty function is defined as

with being a large positive parameter. Clearly, as converges to , the value of tends toward zero. We select a quadratic penalty to smoothly enforce consensus by minimizing state differences across agents, leveraging its convexity for stable convergence. Alternatives like an absolute-value penalty could work but might slow convergence due to non-differentiability, though they would still ensure consensus. As a result, Problem (4)–(5) is reformulated into an equivalent CTDTVO problem without equality constraints, described as follows:

Assuming that exists and remains continuous, the optimal solution is determined by satisfying the following equation:

where

with

To derive the optimal solution of Equation (8), an error function is introduced as

To ensure that Error Function (9) converges to zero, its time derivative must steer every element of the error toward zero [26]. As a result, the ZNN design formula is applied as follows:

where denotes an array composed of activation functions, with the parameter playing a role in regulating the convergence rate. To meet the necessary conditions, the activation function must adhere to two fundamental properties: it needs to be odd and monotonically increasing. For simplicity, this paper selects the linear function as the activation function. Substituting Equation (10) into Equation (9) yields the following model:

where

with representing the Hessian matrix associated with agent i, where . The specific definition of is provided as follows:

where denotes the jth element of vector . In addition, the notation is defined as

in which .

By performing straightforward matrix computations, the distributed version of Equation (11), specifically designed for agent i, is derived as follows:

Let us define an auxiliary term as . Hence, the above Function (12) is formulated as

where represents the degree of the Laplacian matrix L corresponding to agent i, and . The matrix is defined as . Hence, one has

Therefore, the CTZNN model is finalized as

Theoretically speaking, is non-singular since strong convexity of ensures the Hessian is positive definite and (with and for connected agents) adds a positive shift, maintaining invertibility. In practice, numerical errors might make nearly singular, risking solution stability. If is not strongly convex due to parameter issues, convergence could fail. Regularization, such as with small , could stabilize computation by ensuring positive definiteness, though it slightly modifies the optimum.
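To make the design steps of this subsection concrete, the following worked example applies them to a single agent with a scalar objective. The objective $f(x,t) = (x - \sin t)^2$ and the symbol $\gamma > 0$ for the convergence parameter are assumptions chosen for illustration, and the consensus penalty is omitted because there is only one agent.

```latex
\begin{aligned}
e(t) &= \frac{\partial f}{\partial x}\bigl(x(t), t\bigr) = 2\bigl(x(t) - \sin t\bigr), \\
\dot e(t) &= 2\,\dot x(t) - 2\cos t = -\gamma\, e(t)
  \quad \text{(design formula with linear activation)}, \\
\dot x(t) &= \cos t - \gamma\bigl(x(t) - \sin t\bigr), \\
e(t) &= e(0)\,\mathrm{e}^{-\gamma t} \;\longrightarrow\; 0 \quad \text{as } t \to \infty .
\end{aligned}
```

In this example the Hessian is the constant 2, so the matrix inverse in the model reduces to a division. The term $\cos t$ is the feed-forward contribution of the time derivative of the gradient, which lets the state track the moving minimizer $\sin t$ rather than lag behind it.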

3.2. Event-Triggered Continuous-Time ZNN Model

The ET-CTZNN model incorporates an event-triggered mechanism, significantly reducing computational costs compared to the CTZNN model (13). The subsequent sections detail the mechanism and the event-triggered conditions of the ET-CTZNN model.

The following reference measurement is introduced to construct the event-triggering condition:

where represents the triggering time of the current event while corresponds to the next event’s triggering time. The interval between two consecutive triggered instants denotes the triggered time interval. The local distributed error serves as a metric to evaluate the system state within the time interval . The definition of is , and .

The event-triggered condition is designed on the basis of the value relation between the distributed system error and the reference value . When the difference between and exceeds a set range, the event is triggered, and the next triggering time is reached. Thus, the event-triggered condition for agent i is given as follows:

where parameter satisfies . Therefore, for agent i, when , the ET-CTZNN model is finalized as

The event-triggered mechanism’s use of frozen values between triggering events may lead to larger errors when the local functions vary rapidly over time, as the outdated information may not capture the current dynamics, though our theoretical analysis under controlled conditions (e.g., with bounded noise) in Section 5.2.1 indicates acceptable performance.
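A relative-threshold trigger of the kind described above can be sketched as follows. The exact form of Condition (14) is not reproduced here: the rule below, which fires when the deviation from the last broadcast error reaches a fraction `kappa` of the current error norm, is an assumed common form, and the names `should_trigger` and `kappa` are hypothetical.

```python
import math


def norm(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(c * c for c in v))


def should_trigger(e_now, e_last, kappa):
    """Return True when ||e_last - e_now|| >= kappa * ||e_now||.

    e_now  : current local error of the agent
    e_last : local error frozen at the most recent triggering instant
    kappa  : assumed threshold parameter, required to lie in (0, 1)
    """
    if not 0.0 < kappa < 1.0:
        raise ValueError("kappa must lie strictly between 0 and 1")
    deviation = norm([a - b for a, b in zip(e_last, e_now)])
    return deviation >= kappa * norm(e_now)


e_last = [1.0, 0.0]
# Small drift from the frozen value: no broadcast needed.
print(should_trigger([0.9, 0.0], e_last, 0.5))
# Larger drift relative to the current error: the agent broadcasts.
print(should_trigger([0.6, 0.0], e_last, 0.5))
```

Because the threshold scales with the current error norm, the test becomes stricter in absolute terms as the error shrinks, which keeps the frozen value useful near the optimum.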

4. Event-Triggered Discrete-Time ZNN Algorithm

For convenient implementation on digital hardware and the development of numerical algorithms, it is important to discretize the proposed ET-CTZNN model into a discrete-time algorithm. In Section 4.1, we first propose a semi-centralized ET-DTZNN model to solve the DTDTVO problem. Then, by introducing the Euler formula, a fully distributed ET-DTZNN algorithm is designed in Section 4.2. The proposed ET-DTZNN algorithm addresses the DTDTVO problem through a fully distributed approach, achieving a substantial reduction in communication overhead among agents.

4.1. Semi-Centralized ET-DTZNN Model

Following the CTZNN model (13), the discrete-time ZNN (DTZNN) model is given as

where , , and . Furthermore, based on the ET-CTZNN model (15) and the DTZNN model (16), an ET-DTZNN model is constructed. The event-triggered time of agent i in discrete time is denoted as . Under the discrete-time event-triggered mechanism, when , one has . Therefore, on the basis of the ET-CTZNN model (15), for agent i, the function that determines the triggered event in the ET-DTZNN model is defined as follows:

where and . For agent i, when , the ET-DTZNN model is finalized as

Upon examining the ET-CTZNN model (15) and the ET-DTZNN model (18), one observes that and are required for agent i to compute and , respectively. Consequently, if an analytical solution is required, before the first event-triggered time instant, instead of each agent independently computing and , the ET-CTZNN model (15) and the ET-DTZNN model (18) only compute and in a semi-centralized manner. In other words, while information exchange between agents is distributed, the ET-CTZNN model (15) and the ET-DTZNN model (18) are ultimately solved in a centralized way before the first event-triggered time instant. Furthermore, in real-world applications, directly knowing or obtaining the time derivatives and may be difficult. Therefore, it is necessary to develop a fully distributed ET-DTZNN algorithm that addresses the DTDTVO problem through a fully distributed approach.

4.2. Fully Distributed ET-DTZNN Algorithm

In traditional distributed optimization algorithms, agents achieve consensus by exchanging with their neighbors. However, as an alternative, this paper proposes that each agent retains a short-term memory of its neighbors’ states, eliminating the need for direct exchanges of . Using the Euler formula, agents can effectively estimate for based on the stored information, enabling an accurate approximation of .

The Euler formula referenced in this paper is commonly used for approximating derivatives in discrete systems [26]:

Each agent stores just one prior state per neighbor, sufficient for the Euler approximation. The truncation error associated with this Euler formula is of order . As a result, the approximation of can be expressed as follows:

By incorporating the two-step time-discretization (TD) formula [26]

the fully distributed ET-DTZNN algorithm, designed to solve the DTDTVO problem, is derived as follows:

where the convergence rate is regulated by parameter . Distinct from the ET-CTZNN model (15) and the ET-DTZNN model (18), the newly designed ET-DTZNN algorithm (22) tackles the DTDTVO problem in a fully distributed fashion. Importantly, the communication among agents is restricted to sharing their respective solutions , ensuring that essential local information, such as each agent’s objective function, stays completely private and hidden from neighboring agents.
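The derivative estimate that each agent forms from one stored neighbor state can be illustrated with a backward-difference sketch. The test signal `sin(t)`, the function names, and the symbol `tau` for the sampling gap are assumptions made for this example. The snippet checks numerically that halving `tau` roughly halves the approximation error, which is the behavior expected of a first-order backward difference.

```python
import math


def backward_euler_derivative(x_now, x_prev, tau):
    """Estimate dx/dt at the current instant from the current and previous samples."""
    return (x_now - x_prev) / tau


def derivative_error(tau, t=1.0):
    """Absolute error of the estimate for x(t) = sin(t), whose true derivative is cos(t)."""
    estimate = backward_euler_derivative(math.sin(t), math.sin(t - tau), tau)
    return abs(estimate - math.cos(t))


err_coarse = derivative_error(0.01)
err_fine = derivative_error(0.005)
ratio = err_coarse / err_fine  # close to 2 for a first-order formula
print(err_coarse, err_fine, ratio)
```

Only one past sample per neighbor is needed, which is why a short-term memory of neighbor states is enough to replace a direct exchange of derivative information.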

The performance of the ET-DTZNN algorithm depends on the parameters , , , and , which influence convergence speed, accuracy, communication efficiency, and consensus quality. The event-triggering threshold controls the sensitivity of the triggering condition: a smaller increases the frequency of updates, enhancing responsiveness and potentially speeding up convergence by reducing approximation errors, but at the cost of higher communication overhead, while a larger reduces communication, possibly slowing convergence. The convergence parameter accelerates convergence with larger values by increasing the speed of error correction, but if too large, it may lead to overshooting and instability due to overly aggressive updates. The sampling gap affects accuracy: a smaller improves accuracy by closely approximating the continuous-time dynamics, while a larger may degrade performance and risk instability if it exceeds stability limits. The penalty parameter enforces consensus, with larger values ensuring tighter agreement among agents but potentially increasing sensitivity to noise if too large.

The value of parameter should be chosen according to the desired balance between communication efficiency and convergence speed; a moderate value between 0.5 and 0.7 often provides a good trade-off, ensuring sufficient updates without excessive communication. The value of parameter should be chosen according to the balance between accuracy and computational efficiency, ensuring it remains within stability limits; for problems requiring high accuracy, a smaller is preferable, while less stiff problems may tolerate a larger . The value of parameter should be chosen according to the desired convergence speed, starting with a moderate value and adjusting based on and the problem’s stiffness to avoid overshooting. The value of parameter should be chosen according to the need for consensus strength, typically in the range of 10 to 100, depending on the network size and expected noise levels, to enforce agreement without over-penalizing.

5. Theoretical Analyses

This section establishes and proves the convergence theorem for the ET-CTZNN model (15) in Section 5.1. Section 5.2 provides a theoretical analysis of the model’s robustness and its ability to exclude Zeno behavior. In addition, Section 5.3 provides convergence theorems and investigates the maximum steady-state residual error (MSSRE) associated with the ET-DTZNN algorithm (22).

5.1. Convergence Theorem for ET-CTZNN Model

Based on the analysis outlined in Section 3, CTDTVO Problem (4)–(5) is reformulated as the time-varying Equation (8). Consequently, solving the CTDTVO problem equates to resolving the matrix Equation (8). The convergence theorem for ET-CTZNN Model (15) is presented as Theorem 1.

Theorem 1.

Consider CTDTVO Problem (4)–(5) under the following assumptions: (i) each function is at least twice continuously differentiable with respect to and continuous with respect to t; (ii) the communication graph is connected and undirected; (iii) the parameters satisfy and . Starting from any initial state , the residual error of the entire multi-agent system converges to zero, i.e., .

Proof of Theorem 1.

For agent i, a Lyapunov candidate function is defined as

Therefore, the time derivative of can be expressed as follows:

According to the event-triggered Condition (14) with the parameter satisfying , it follows that for all . Consequently, the inequality holds consistently for agent i. Based on the Lyapunov stability theorem [33], this ensures that converges to zero, which implies for . Since , it follows that . Furthermore, for all agents, globally converges to the optimal solution . Therefore, the entire system’s state vector also converges to . Thus, the proof is completed. □
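The core inequality of the proof can be illustrated in a hedged scalar form. The notation below is assumed for this sketch only: $e(t)$ is the current error, $e(t_k)$ the value frozen at the last triggering instant, $\varepsilon(t) = e(t_k) - e(t)$ their gap, $\gamma > 0$ the convergence parameter, and $0 < \kappa < 1$ the threshold. The trigger is assumed to keep $|\varepsilon(t)| \le \kappa\,|e(t)|$ between events, and the held dynamics are taken as $\dot e(t) = -\gamma\, e(t_k)$.

```latex
\begin{aligned}
V(t) &= \tfrac{1}{2}\, e^2(t), \\
\dot V(t) &= e(t)\,\dot e(t)
           = -\gamma\, e(t)\bigl(e(t) + \varepsilon(t)\bigr)
           = -\gamma\, e^2(t) - \gamma\, e(t)\,\varepsilon(t) \\
          &\le -\gamma\, e^2(t) + \gamma\,|e(t)|\,|\varepsilon(t)|
           \le -\gamma\,(1 - \kappa)\, e^2(t) \;\le\; 0 .
\end{aligned}
```

Since $1 - \kappa > 0$, this gives $\dot V \le -2\gamma(1-\kappa)V$, so $V$ decays at least as fast as $V(0)\,\mathrm{e}^{-2\gamma(1-\kappa)t}$. The requirement that the threshold stay below one is what keeps the decay rate positive.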

5.2. Robustness and Zeno Behavior Analysis for ET-CTZNN Model

In this subsection, we analyze the robustness and Zeno behavior of the ET-CTZNN model. The robustness analysis evaluates the model’s performance under bounded noise for each agent, while the Zeno behavior analysis ensures the absence of an infinite number of triggering events in a finite time, enhancing the practical feasibility of the model.

5.2.1. Analysis on Robustness for ET-CTZNN Model

We begin by introducing a bounded noise term to ET-CTZNN Model (15) to assess its robustness. For each agent i, we consider the local error function modified with an additive noise term , defined as

where is the noise affecting of agent i, bounded by for some constant . This noise may originate from measurement inaccuracies, communication delays, or computational errors.

To analyze the impact of bounded noise , we define the Lyapunov function for agent i as

Hence, the time derivative of under noisy environment is derived as

The Hessian matrix is bounded and with and being positive constants. Therefore, matrix is also bounded. We assume that bounded matrix satisfies where is a positive constant. Hence, based on the event-triggering Condition (14), we obtain

Subsequently, based on the values of , the analysis is segmented into the next three cases.

- Case 1: If , then we obtain , which implies that tends to decrease and converges towards . That is, .

- Case 2: If , then we obtain . (i) When , we determine that is a decreasing function, which implies that is less than , and the situation turns into Case 3. (ii) When , it indicates that remains on the surface of a sphere with a radius of . That is, .

- Case 3: If , then we have . (i) When , we determine that is a decreasing function, which indicates that . (ii) When , it indicates that remains on the surface of a sphere with a radius of less than . That is, . (iii) When , we determine that is an increasing function, which indicates that the residual error tends to converge towards as time increases. That is, .

In summary, the maximum boundary of the residual error with bounded noise is

indicating that with bounded noise, the residual error converges to a value proportional to the noise magnitude, scaled by the convergence parameter and event-triggering threshold.

5.2.2. Analysis on Zeno Behavior for ET-CTZNN Model

Zeno behavior occurs if an infinite number of triggering events happen in a finite time, undermining the practical utility of the event-triggered mechanism. In this subsection, we exclude Zeno behavior for the ET-CTZNN model.

If the lower bound of the inter-event time interval can be determined and inequality is always satisfied, then Zeno behavior is excluded. Since the event-triggering condition is based on the value relation between and , we investigate the time derivative of .

For , one has . Hence,

Since , one further obtains

Additionally, considering the event-triggering Condition (14), for , one obtains . Therefore, it is determined that . For the next event-triggering time instant , the following inequality holds: . Hence, it is determined that

The lower bound of the inter-event time interval can be expressed as

This formulation effectively demonstrates the nonexistence of Zeno behavior.
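The existence of a positive inter-event time can be checked numerically on a toy scalar system. This is a sketch under assumptions, not the paper's model: the error obeys `de/dt = -gamma * e_held` between events, an event fires when `|e_held - e| >= kappa * |e|`, and the names `simulate_events`, `gamma`, `kappa`, and `dt` are hypothetical. For this toy system the inter-event time works out to `kappa / (gamma * (1 + kappa))`, independent of the error magnitude.

```python
def simulate_events(gamma, kappa, e0=1.0, dt=1e-4, t_end=2.0):
    """Integrate the held-error dynamics and record the triggering instants."""
    e = e_held = e0
    events = [0.0]  # the initial instant counts as the first trigger
    steps = int(round(t_end / dt))
    for k in range(1, steps + 1):
        # Forward Euler step using the error frozen at the last event.
        e -= dt * gamma * e_held
        # Relative-threshold trigger: refresh the frozen value when it fires.
        if abs(e_held - e) >= kappa * abs(e):
            e_held = e
            events.append(k * dt)
    return events, e


gamma, kappa, dt = 5.0, 0.5, 1e-4
events, e_final = simulate_events(gamma, kappa, dt=dt)
gaps = [b - a for a, b in zip(events, events[1:])]
predicted = kappa / (gamma * (1.0 + kappa))
print(len(events), min(gaps), predicted)
```

The measured gaps stay at or just above the predicted value (up to one integration step), so the events cannot accumulate in finite time for this toy system.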

5.3. Convergence Theorems of ET-DTZNN Algorithm

This subsection provides a comprehensive theoretical analysis of ET-DTZNN Algorithm (22), which is regarded as a linear two-step algorithm. To aid in understanding, several relevant lemmas are introduced below [26].

Lemma 2.

A linear N-step method is formulated as . The characteristic polynomials of this method are and . For zero-stability, all complex roots of must satisfy . Furthermore, if , the corresponding root should be simple.

Lemma 3.

A linear N-step method is defined as . The truncation error’s order can be evaluated by calculating and . If while for all , the N-step method exhibits a truncation error of .

Lemma 4.

A linear N-step method is expressed as . Its characteristic polynomials are represented as and . To ensure consistency, conditions and must hold, where is the derivative of .

Lemma 5.

A linear N-step method is expressed as . The convergence of the N-step method is ensured if, and only if, the conditions of Lemmas 2 and 4 are satisfied. Specifically, an N-step method is convergent if, and only if, it is both zero-stable and consistent.
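The root condition of Lemma 2 and the consistency condition of Lemma 4 can be checked mechanically for a two-step method. The sketch below handles only quadratic first characteristic polynomials and assumes the convention that coefficients are listed from the highest power of the root variable to the lowest. The coefficient sets are standard textbook examples (the explicit midpoint rule, the two-step Adams-Bashforth method, and two deliberately unstable polynomials), not the coefficients of Algorithm (22), and the function names are hypothetical.

```python
import cmath


def quadratic_roots(a2, a1, a0):
    """Roots of a2*z**2 + a1*z + a0 (a2 must be nonzero), complex in general."""
    disc = cmath.sqrt(a1 * a1 - 4 * a2 * a0)
    return (-a1 + disc) / (2 * a2), (-a1 - disc) / (2 * a2)


def is_zero_stable(a2, a1, a0, tol=1e-9):
    """Root condition: all roots in the closed unit disk, unit-modulus roots simple."""
    r1, r2 = quadratic_roots(a2, a1, a0)
    if abs(r1) > 1 + tol or abs(r2) > 1 + tol:
        return False
    on_circle = abs(abs(r1) - 1) <= tol
    repeated = abs(r1 - r2) <= tol
    return not (on_circle and repeated)


def is_consistent(rho, sigma, tol=1e-9):
    """Check rho(1) = 0 and rho'(1) = sigma(1) for coefficient lists [c2, c1, c0]."""
    rho_at_1 = sum(rho)
    drho_at_1 = 2 * rho[0] + rho[1]
    sigma_at_1 = sum(sigma)
    return abs(rho_at_1) <= tol and abs(drho_at_1 - sigma_at_1) <= tol


# Explicit midpoint rule: rho(z) = z^2 - 1, sigma(z) = 2z.
print(is_zero_stable(1, 0, -1), is_consistent([1, 0, -1], [0, 2, 0]))
# Two-step Adams-Bashforth: rho(z) = z^2 - z, sigma(z) = (3z - 1)/2.
print(is_zero_stable(1, -1, 0), is_consistent([1, -1, 0], [0, 1.5, -0.5]))
# Double root at z = 1: violates the simplicity requirement.
print(is_zero_stable(1, -2, 1))
```

A method that passes both checks is convergent in the sense of Lemma 5; a polynomial with a repeated root on the unit circle, or any root outside it, fails the first check regardless of how accurate the formula is locally.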

Building upon the established lemmas, the convergence theorem of the ET-DTZNN algorithm (22) is proven. These lemmas provide the theoretical foundation to demonstrate the algorithm’s convergence properties rigorously.

Theorem 2.

Proof of Theorem 2.

In accordance with Lemma 5, ET-DTZNN Algorithm (22) achieves convergence provided it satisfies both zero-stability and consistency. Additionally, as highlighted in Lemma 2, the algorithm’s first characteristic polynomial is defined as follows:

The root of the characteristic polynomial is . It follows that all roots satisfy , and when , the root is simple. Hence, the ET-DTZNN algorithm (22) is zero-stable.

On the basis of (21) and (22), the ET-DTZNN algorithm (22) is described by the following equation:

According to Lemma 3, . Therefore, is calculated as

We compute for . Therefore, based on Lemma 3, ET-DTZNN Algorithm (22) possesses a truncation error order of . Additionally, the second characteristic polynomial of the ET-DTZNN algorithm (22) is given as . Consequently, it follows that . Thus, according to Lemma 4, the ET-DTZNN Algorithm (22) is consistent with an order of .

In alignment with Lemma 5, ET-DTZNN Algorithm (22) achieves convergence as it fulfills the criteria of zero-stability alongside consistency of order . Thus, the proof is concluded. □
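The checks used in this proof (root condition, order constants, and consistency) are mechanical and can be sketched numerically. The snippet below is a minimal illustration in standard multistep notation, where `alpha[i]` and `beta[i]` multiply the state and the derivative term at step k+i. The coefficients of Algorithm (22) are not reproduced here; the forward Euler coefficients are used as a stand-in because the algorithm is stated to be obtained via the Euler formula. All function names are hypothetical.

```python
import math

import numpy as np


def is_zero_stable(alpha, tol=1e-6):
    """Root condition on rho: all roots in the closed unit disk,
    and roots on the unit circle must be simple."""
    # numpy.roots expects coefficients from highest to lowest degree.
    roots = np.roots(np.asarray(alpha, dtype=float)[::-1])
    for r in roots:
        if abs(r) > 1.0 + tol:
            return False
        on_circle = abs(abs(r) - 1.0) <= tol
        repeated = sum(abs(r - s) <= tol for s in roots) > 1
        if on_circle and repeated:
            return False
    return True


def order_constants(alpha, beta, max_j=4):
    """Return [C_0, ..., C_max_j]; the first nonzero C_{p+1} gives order p."""
    constants = [sum(alpha)]
    for j in range(1, max_j + 1):
        constants.append(sum(
            (i ** j / math.factorial(j)) * a
            - (i ** (j - 1) / math.factorial(j - 1)) * b
            for i, (a, b) in enumerate(zip(alpha, beta))
        ))
    return constants


def is_consistent(alpha, beta, tol=1e-12):
    """Consistency: rho(1) = 0 and rho'(1) = sigma(1)."""
    rho_at_one = sum(alpha)
    rho_prime_at_one = sum(i * a for i, a in enumerate(alpha))
    sigma_at_one = sum(beta)
    return (abs(rho_at_one) <= tol
            and abs(rho_prime_at_one - sigma_at_one) <= tol)


# ASSUMPTION: forward Euler, x_{k+1} - x_k = tau * f_k, as a stand-in.
euler_alpha = [-1.0, 1.0]
euler_beta = [1.0, 0.0]

constants = order_constants(euler_alpha, euler_beta)
print("zero-stable:", is_zero_stable(euler_alpha))
print("consistent:", is_consistent(euler_alpha, euler_beta))
print("order constants:", constants)
```

For the Euler stand-in, $C_0 = C_1 = 0$ and $C_2 = 1/2$, so the sketch reports a truncation error of $\mathcal{O}(\tau^2)$ together with zero-stability and consistency.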

Theorem 3.

We consider CTDTVO Problem (4)–(5). We assume that, for every agent i, its local objective function possesses continuous second-order derivatives. With the design parameter and a sufficiently small sampling interval gap, the maximum steady-state residual error (MSSRE) produced by ET-DTZNN Algorithm (22), , is of .

Proof of Theorem 3.

We let represent the actual solution to the problem, i.e., when . According to Theorem 2, ET-DTZNN Algorithm (22) exhibits a truncation error of , implying that . By employing the Taylor expansion, it follows that

Hence, the MSSRE is deduced as

where denotes the Frobenius norm of a matrix. Given that is the actual solution of the problem, it follows that is a constant. Consequently, the MSSRE produced by ET-DTZNN Algorithm (22) is of . Thus, the proof is complete. □

6. Numerical Experiments

In this section, three numerical examples are provided to demonstrate the validity and effectiveness of the proposed ET-DTZNN Algorithm (22).

6.1. Example 1: DTDTVO Problem Solved by ET-DTZNN Algorithm

We consider a DTDTVO problem involving a multi-agent system comprising five agents. The DTDTVO problem is formulated as follows:

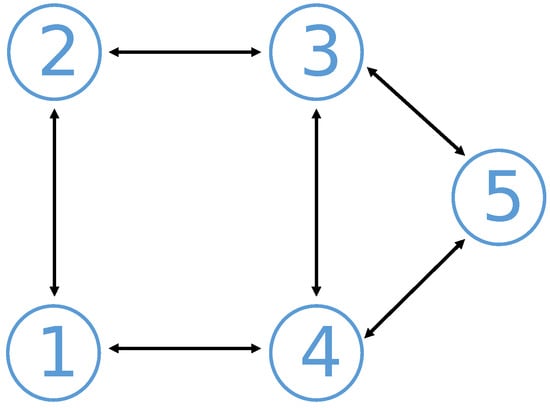

where . The structure of the network’s communication topology, represented by , is presented in Figure 1, illustrating the connections among the agents. Detailed time-varying local objective functions for each agent are provided in Table 1.

Figure 1.

Communication topology graph of multi-agent system in Examples 1 and 2.

Table 1.

Expressions of time-varying objective functions in Example 1.

Prior to the experiment, the parameters of ET-DTZNN Algorithm (22) need to be appropriately set. The operation duration is defined as s, with a sampling interval of s and parameter h configured at . For the penalty function, is assigned a value of 50. Furthermore, the initial states are randomly initialized within the range . The experimental results are showcased in Figure 2, Figure 3, Figure 4 and Figure 5.

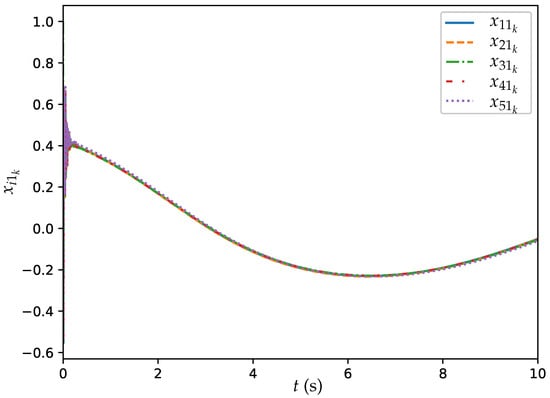

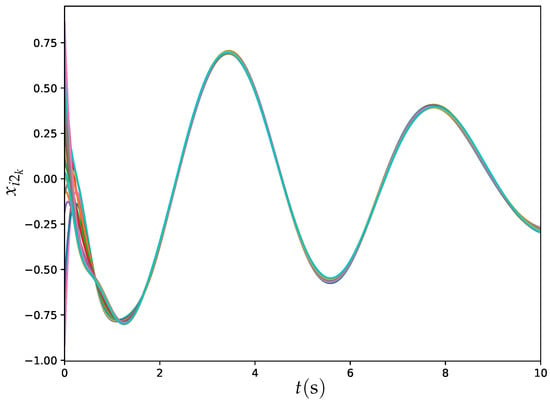

Figure 2.

Trajectories of for all agents synthesized by ET-DTZNN algorithm with s and .

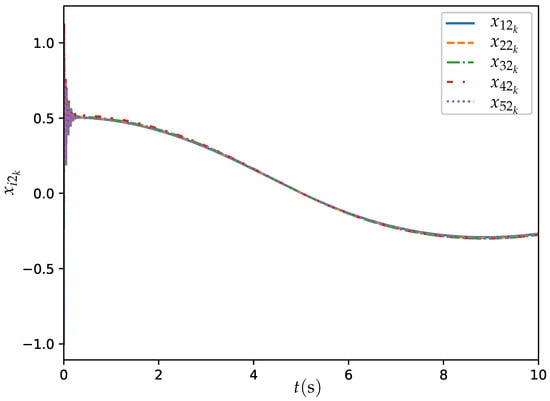

Figure 3.

Trajectories of for all agents synthesized by ET-DTZNN algorithm with s and .

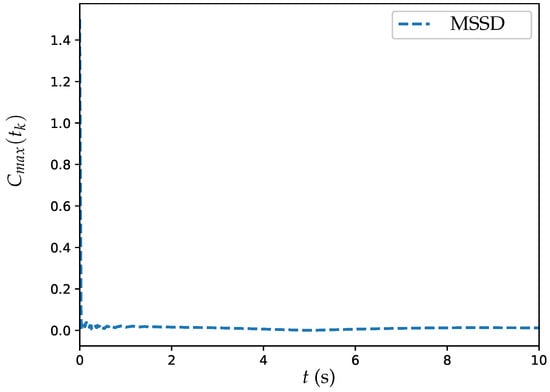

Figure 4.

Trajectory of MSSD for ET-DTZNN algorithm when solving DTDTVO problem with , , and s.

Figure 5.

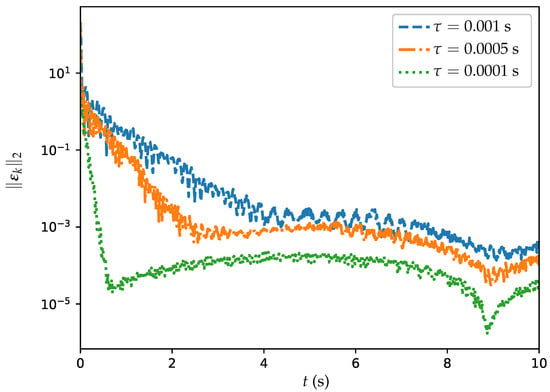

Trajectories of MSSRE for ET-DTZNN algorithm when solving DTDTVO problem with and different .

Figure 2 and Figure 3 illustrate the trajectories of and for all agents synthesized by ET-DTZNN Algorithm (22) with s and . Each figure includes five trajectories, each represented by a different colored dashed line: (blue), (orange), (green), (red), and (purple). The results in Figure 2 and Figure 3 show that ET-DTZNN Algorithm (22) achieves convergence and consensus for all agents. At the beginning of the simulation, all trajectories exhibit some initial oscillations as the agents adjust their states based on the event-triggered conditions and local objective functions. Over time, the trajectories of all agents converge to a steady state, indicating that ET-DTZNN Algorithm (22) successfully computes the optimal solutions for the time-varying objective functions.

Since achieving consensus is a central aspect of distributed optimization, we introduce a metric that captures the degree of consensus among the agents over time. We define the maximum steady-state deviation (MSSD) as . The corresponding trajectory of MSSD is illustrated in Figure 4. From Figure 4, it is evident that the MSSD declines to nearly zero within the initial 2 s. This swift decline indicates that the ET-DTZNN algorithm drives the state deviations across agents toward zero, so that the agents reach a common solution of the DTDTVO problem.
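A consensus metric of this kind is straightforward to evaluate from logged agent states. The sketch below is an illustration only. It ASSUMES that the deviation is measured as the largest Euclidean distance between any agent's state and the average state at a given time step; the exact MSSD definition used in the paper may differ. The function name and the sample states are hypothetical.

```python
import numpy as np


def max_state_deviation(states: np.ndarray) -> float:
    """Largest distance between an agent's state and the agent average.

    states has shape (n_agents, dim) and holds all agents' states at one
    time step. ASSUMPTION: Euclidean norm and deviation from the mean.
    """
    mean_state = states.mean(axis=0)
    return float(np.max(np.linalg.norm(states - mean_state, axis=1)))


# Hypothetical snapshot of five agents with two-dimensional states.
snapshot = np.array([
    [1.00, -0.50],
    [1.02, -0.49],
    [0.99, -0.51],
    [1.01, -0.50],
    [0.98, -0.50],
])
print("deviation at this step:", max_state_deviation(snapshot))
```

Evaluating the function at every time step and plotting the result on a logarithmic axis gives a curve of the kind discussed for Figure 4.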

Additionally, several experiments are conducted with ET-DTZNN Algorithm (22) solving the DTDTVO problem with different . The MSSRE for the whole system is defined as . The corresponding trajectories of MSSREs are presented in Figure 5 and Table 2.

Table 2.

MSSREs of ET-DTZNN algorithm when solving DTDTVO problem with different .

In Figure 5, the MSSREs are shown for various values of . The figure contains three trajectories: the blue dashed line represents a sampling interval of s, the orange dashed line corresponds to s, and the green dashed line indicates s. For s, the MSSRE decreases from approximately to about . For s, the MSSRE shows faster convergence, reducing to . For s, the MSSRE exhibits the most rapid decrease, reducing from to , highlighting the improved precision achieved with smaller time steps. The figure reveals an evident pattern: a decrease in leads to a reduction in MSSRE. From Figure 5 and Table 2, it can be observed that the MSSRE follows an order of , which is consistent with the theoretical analyses. It is worth pointing out that an MSSRE of is acceptable for applications like robotic coordination, where errors below are typically sufficient, whereas energy networks often demand stricter accuracy, such as or lower, which we achieve with smaller (e.g., MSSRE of at ).
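The relation between sampling interval and steady-state residual can be checked empirically by fitting a slope on a log-log scale. The sketch below does this on a scalar toy problem, minimizing $f(x,t) = \tfrac{1}{2}(x - \sin t)^2$ with an Euler-discretized zeroing-type update. The toy problem, the gain `h`, and all function names are assumptions made for illustration; the code does not reproduce Algorithm (22) or the data in Table 2.

```python
import math

import numpy as np


def steady_state_residual(tau, h=0.3, duration=10.0):
    """Max |x_k - sin(t_k)| over the second half of the run (toy problem)."""
    steps = int(round(duration / tau))
    x = 1.0  # arbitrary initial state
    worst = 0.0
    for k in range(steps):
        t = k * tau
        # Euler step of x' = cos(t) - lambda * (x - sin(t)), with h = tau * lambda.
        x = x + tau * math.cos(t) - h * (x - math.sin(t))
        if k + 1 >= steps // 2:
            worst = max(worst, abs(x - math.sin((k + 1) * tau)))
    return worst


def estimate_order(taus, errors):
    """Least-squares slope of log(error) against log(tau)."""
    slope, _intercept = np.polyfit(np.log(taus), np.log(errors), 1)
    return float(slope)


taus = [0.1, 0.01, 0.001]
errors = [steady_state_residual(tau) for tau in taus]
order = estimate_order(taus, errors)
print("residuals:", errors)
print("fitted order:", order)
```

For this toy problem the fitted slope comes out close to 2, meaning that each tenfold reduction of the sampling interval lowers the residual by roughly two orders of magnitude. The same fitting procedure can be applied to tabulated MSSRE values.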

Table 3.

Numbers of trigger events of ET-DTZNN algorithm when solving DTDTVO problem with s and different .

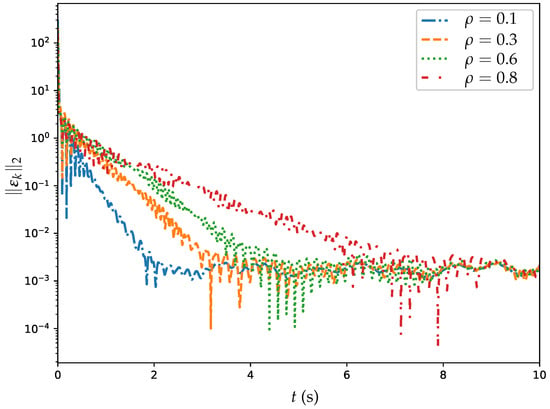

In addition, several experiments are conducted with ET-DTZNN Algorithm (22) solving the DTDTVO problem with different . The numbers of trigger events for ET-DTZNN Algorithm (22) with different values of for five agents are presented in Figure 6 and Table 3.

Figure 6.

Trajectories of MSSRE for ET-DTZNN algorithm when solving DTDTVO problem with s and different .

From Table 3, one sees that, for , the number of trigger events is significantly higher for all agents compared with and . Specifically, Agent 4 has the highest number of trigger events with 6459, followed by Agent 5 with 6161 and Agent 2 with 5141. As increases to 0.3, the number of trigger events decreases for all agents, indicating that a higher value leads to fewer trigger events. This trend continues as increases to 0.6, with the number of trigger events further decreasing for all agents. Agent 4 still has the highest number of trigger events at 2417 for and 2156 for , followed by Agent 5 with 2312 for and 2345 for . Figure 6 further illustrates the impact of on the convergence rate, showing trajectories of MSSRE for s. For , the convergence rate is the fastest, reaching a low MSSRE within approximately 2 s, while for and , the convergence slows, taking around 3 and 4 s, respectively. At , the convergence rate is significantly slower, requiring over 6 s to reach a similar MSSRE. This highlights that a smaller accelerates convergence by triggering more frequent updates. However, there is a diminishing return in balancing communication cost and convergence rate; for example, when , the communication cost (number of trigger events) does not reduce significantly compared to —e.g., Agent 4 has 1968 triggers at versus 2156 at —but the convergence rate is much slower, taking over 6 s compared to 4 s for . The results showcase that the ET-DTZNN algorithm effectively reduces the number of trigger events as increases, highlighting its efficiency in minimizing communication consumption, though at the cost of slower convergence for larger values.
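The trade-off between triggering threshold and communication load can be illustrated with a very small simulation. The sketch below ASSUMES a simplified absolute-threshold rule: an agent rebroadcasts its state only when the state has drifted from the last broadcast value by more than the threshold. This is a stand-in for illustration and is not the triggering condition of the paper; the signal, the function name, and the parameter values are hypothetical.

```python
import math


def count_triggers(threshold, tau=0.001, duration=10.0):
    """Count broadcasts of a scalar state sin(t) under a threshold rule."""
    steps = int(round(duration / tau))
    last_sent = math.sin(0.0)  # state broadcast at t = 0
    triggers = 1
    for k in range(1, steps + 1):
        state = math.sin(k * tau)
        # Broadcast only when the drift since the last broadcast is too large.
        if abs(state - last_sent) > threshold:
            last_sent = state
            triggers += 1
    return triggers


counts = {threshold: count_triggers(threshold) for threshold in (0.1, 0.3, 0.6)}
for threshold, n_events in counts.items():
    print(f"threshold={threshold}: {n_events} trigger events")
```

A periodic scheme with the same sampling interval would transmit at every one of the 10,000 steps, so even the smallest threshold here removes most transmissions, and larger thresholds remove more at the price of staler neighbor information.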

6.2. Example 2: Comparative Experiments

The gradient neural network (GNN) algorithm is widely used to address time-varying problems. In this subsection, a comparative experiment is performed to evaluate a GNN-based algorithm against the proposed ET-DTZNN algorithm.

First, based on the algorithm presented in [34], a periodic sampling GNN (PSGNN) algorithm is developed to address the DTDTVO problem. Under the PSGNN algorithm, agents periodically exchange their information with neighboring agents at time intervals given by . For agent i, the scalar-valued energy function is expressed as

where . By utilizing the gradient information of Energy Function (28), the following expression is derived:

where the parameter scales the convergence rate of the GNN algorithm [3]. Thus, the PSGNN algorithm for addressing the DTDTVO problem is defined as

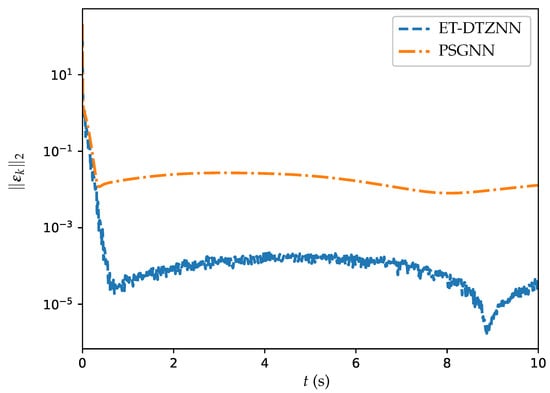

Subsequently, both PSGNN and ET-DTZNN algorithms are applied to address the DTDTVO problem. To ensure a fair comparison, the parameter and the initial states are kept consistent with those defined in Section 6.1, while parameters and are set to . The resulting experimental outcomes are displayed in Table 4 and Figure 7, which provide a detailed comparison of the performance of the PSGNN algorithm and the ET-DTZNN algorithm for solving the DTDTVO problem.

Table 4.

MSSREs of PSGNN and ET-DTZNN algorithms in solving DTDTVO problem with and different values of .

Figure 7.

Trajectories of MSSREs for PSGNN and ET-DTZNN algorithms when solving DTDTVO problem with and s.

Figure 7 illustrates the trajectories of the MSSRE over time t for two different algorithms: PSGNN and ET-DTZNN. The y-axis represents on a logarithmic scale, ranging from to . The blue dashed line represents the ET-DTZNN algorithm, which shows a rapid decrease in initially, stabilizing around . This indicates that the ET-DTZNN algorithm quickly reduces the residual errors and maintains a low error level throughout the experiment. On the other hand, the orange dash–dot line represents the PSGNN algorithm, which shows a slower decrease in and stabilizes around . This indicates that the PSGNN algorithm requires more time to decrease the residual errors and sustains a higher level of error when compared to the ET-DTZNN algorithm. Overall, the ET-DTZNN algorithm presents superior performance in terms of convergence rate and accuracy compared with the PSGNN algorithm. The analysis showcases the success of the ET-DTZNN algorithm in addressing the DTDTVO problem under the configuration of and s, highlighting its efficiency and precision.

Table 4 presents the MSSREs of the PSGNN and ET-DTZNN algorithms in solving the DTDTVO problem with different values of . From Table 4, it is observed that as the value of decreases, the MSSRE values for both algorithms also decrease. Specifically, for s, the MSSRE of PSGNN algorithm is and the MSSRE of ET-DTZNN algorithm is . For s, the MSSRE of PSGNN algorithm is and the MSSRE of ET-DTZNN algorithm is . Finally, for s, the MSSRE of PSGNN algorithm is and the MSSRE of ET-DTZNN algorithm is . Overall, the ET-DTZNN algorithm consistently outperforms the PSGNN algorithm in terms of precision across all tested values. The results highlight the superior performance of the ET-DTZNN algorithm in achieving more accurate solutions compared with the PSGNN algorithm.
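One intuition for such a precision gap is that a gradient-type update only reacts to the current residual, whereas a zeroing-type update also uses the time derivative of the problem data. The sketch below contrasts the two on a scalar toy problem, minimizing $f(x,t) = \tfrac{1}{2}(x - \sin t)^2$. It is an illustration under stated assumptions: it is neither the PSGNN algorithm nor Algorithm (22), it involves no communication between agents, and the names and parameter values are hypothetical.

```python
import math


def steady_state_error(use_time_derivative, tau=0.01, h=0.3, duration=10.0):
    """Max tracking error over the second half of the run (toy problem)."""
    steps = int(round(duration / tau))
    x = 1.0  # arbitrary initial state
    worst = 0.0
    for k in range(steps):
        t = k * tau
        gradient = x - math.sin(t)
        # Zeroing-type update adds a feedforward term from d/dt of the target.
        feedforward = tau * math.cos(t) if use_time_derivative else 0.0
        x = x + feedforward - h * gradient
        if k + 1 >= steps // 2:
            worst = max(worst, abs(x - math.sin((k + 1) * tau)))
    return worst


gradient_only_error = steady_state_error(use_time_derivative=False)
zeroing_type_error = steady_state_error(use_time_derivative=True)
print("gradient-type steady-state error:", gradient_only_error)
print("zeroing-type steady-state error:", zeroing_type_error)
```

In this toy setting the gradient-type update lags behind the moving optimum by an amount proportional to the sampling interval, while the zeroing-type update leaves a residual proportional to its square, which is why the second error is markedly smaller.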

6.3. Example 3: DTDTVO Problem Solved by ET-DTZNN Algorithm in Large MASs

In this subsection, two experiments employ the ET-DTZNN algorithm to tackle a DTDTVO problem within a large MAS under static and dynamic topology, respectively. We consider a DTDTVO problem involving a MAS with 20 agents. The DTDTVO problem is formulated as follows:

where . Each agent i () possesses a local time-varying objective function , where . The coefficients are , , , and , rendering the functions time-varying and distinct with a phase difference of 0.1 radians between consecutive agents.

Before the experiment begins, it is essential to configure the parameters of the ET-DTZNN algorithm properly. In both static and dynamic topology scenarios, the total operation duration is set at s, with parameter h defined as and assigned a value of . Due to the scale of the multi-agent system (MAS), the penalty function parameter is assigned a value of 200. Additionally, the initial states are randomly generated within the range of .

6.3.1. ET-DTZNN in Large MAS Under Static Topology

Under the static topology, the agents are linked via a ring-shaped communication topology, enabling each agent i to exchange information bidirectionally with neighbors and . The adjacency matrix A assigns for and 0 otherwise, with degree matrix and Laplacian , ensuring network connectivity for distributed consensus. The experimental results are illustrated through Figure 8, Figure 9 and Figure 10.
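The ring topology described above is easy to construct and verify numerically. The sketch below builds the adjacency, degree, and Laplacian matrices for 20 agents and checks connectivity through the second-smallest Laplacian eigenvalue. Unit edge weights are an ASSUMPTION of this sketch, and the function name is hypothetical.

```python
import numpy as np


def ring_laplacian(n):
    """Adjacency, degree, and Laplacian matrices of an undirected ring."""
    adjacency = np.zeros((n, n))
    for i in range(n):
        # Each agent is linked to its two neighbors, with wrap-around.
        adjacency[i, (i + 1) % n] = 1.0  # ASSUMPTION: unit edge weights
        adjacency[i, (i - 1) % n] = 1.0
    degree = np.diag(adjacency.sum(axis=1))
    laplacian = degree - adjacency
    return adjacency, degree, laplacian


adjacency, degree, laplacian = ring_laplacian(20)

# For an undirected graph, the Laplacian is symmetric; the graph is connected
# exactly when the second-smallest eigenvalue is strictly positive.
eigenvalues = np.linalg.eigvalsh(laplacian)
print("algebraic connectivity:", eigenvalues[1])
```

For a ring of n nodes with unit weights the second-smallest eigenvalue equals $2 - 2\cos(2\pi/n)$, which is small for n = 20. This reflects the fact that information needs many hops to travel around a ring.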

Figure 8.

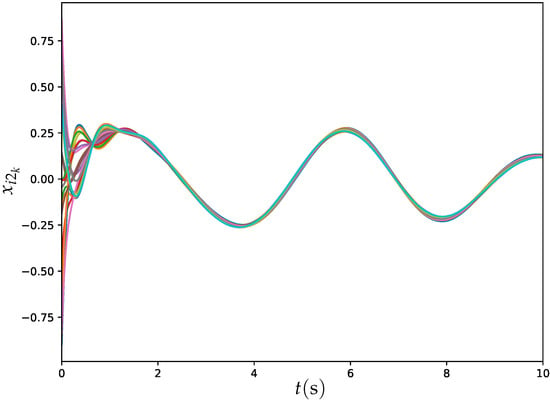

Trajectories of for all agents synthesized by ET-DTZNN algorithm when solving DTDTVO problem within 20 agents under the static topology with s.

Figure 9.

Trajectories of for all agents synthesized by ET-DTZNN algorithm when solving DTDTVO problem within 20 agents under static topology with s.

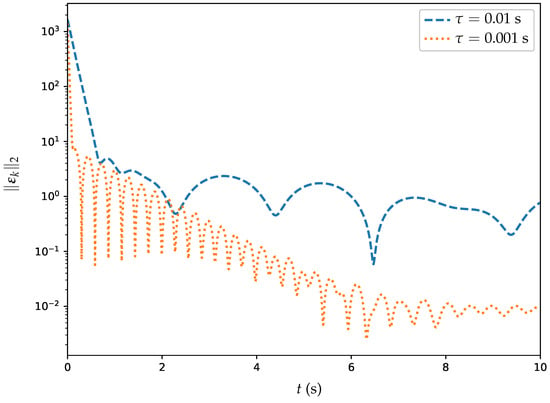

Figure 10.

Trajectories of MSSREs for ET-DTZNN algorithm when solving DTDTVO problem within 20 agents under static topology.

Figure 8 and Figure 9 illustrate the trajectories of and for all agents under static topology synthesized by ET-DTZNN Algorithm (22) with s and . The experimental findings, as shown in Figure 8 and Figure 9, demonstrate the capability of the ET-DTZNN algorithm to ensure both convergence and consensus across all agents within a large MAS. At the beginning of the simulation, all trajectories exhibit some initial deviations as the agents adjust their states based on the event-triggered conditions and local objective functions. As time progresses, the trajectories of all agents gradually stabilize, demonstrating the capability of the ET-DTZNN algorithm to effectively resolve the DTDTVO problem in large multi-agent systems.

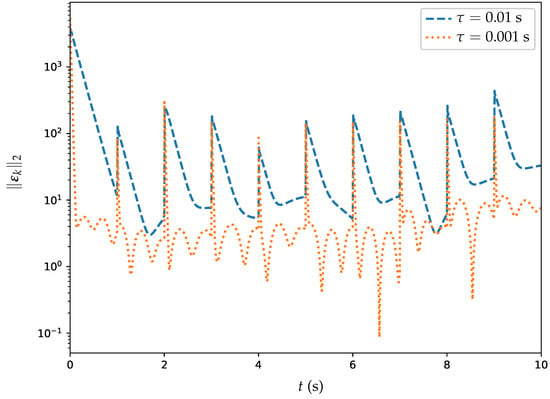

Additionally, experiments are conducted with the ET-DTZNN algorithm solving the DTDTVO problem in a large MAS with different . Figure 10 showcases a semi-log plot illustrating the MSSRE over a 10-second interval for the ET-DTZNN algorithm addressing a DTDTVO problem with 20 agents. This plot compares the results of two trials, where s is represented by the blue dashed line and s is depicted by the orange dotted line. The results show that the smaller sampling interval ( s) yields a low MSSRE of , while s exhibits periodic oscillations peaking at , driven by the time-varying objective function, confirming the theoretical MSSRE scaling of .

6.3.2. ET-DTZNN in Large MAS Under Dynamic Topology

Under the dynamic topology, the agents are linked via a time-varying communication structure, where the topology switches every 1 s to a new randomly generated connected undirected graph. At each switching instant, the adjacency matrix A is constructed using an Erdős–Rényi random graph model with a connection probability of 0.3 [31], ensuring bidirectional information exchange between connected agents. The generation process guarantees connectivity by verifying that the resulting graph contains a path between any pair of agents i and j; equivalently, the graph is strongly connected when each edge is viewed as a pair of directed edges. This dynamic, connected topology ensures network adaptability and persistent consensus capability despite frequent structural changes. The experimental results are illustrated through Figure 11, Figure 12 and Figure 13.
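One simple way to realize such a switching topology is rejection sampling: draw an Erdős–Rényi graph and redraw it until it is connected. The sketch below follows that idea. The rejection-sampling strategy, the seed, and the function names are assumptions of this illustration; the paper does not specify how connectivity is enforced beyond the verification step.

```python
import numpy as np


def is_connected(adjacency):
    """Depth-first search from node 0 over an undirected adjacency matrix."""
    n = adjacency.shape[0]
    seen = {0}
    stack = [0]
    while stack:
        node = stack.pop()
        for neighbor in np.flatnonzero(adjacency[node]):
            neighbor = int(neighbor)
            if neighbor not in seen:
                seen.add(neighbor)
                stack.append(neighbor)
    return len(seen) == n


def random_connected_graph(n, p, rng, max_tries=1000):
    """Sample G(n, p) repeatedly until the sampled graph is connected."""
    for _ in range(max_tries):
        # Draw each undirected edge once (strict upper triangle), then mirror.
        upper = np.triu(rng.random((n, n)) < p, k=1)
        adjacency = (upper | upper.T).astype(float)
        if is_connected(adjacency):
            return adjacency
    raise RuntimeError("no connected graph found; increase p or max_tries")


rng = np.random.default_rng(0)  # fixed seed for reproducibility (assumption)
# One topology per 1 s switching interval over a 10 s run.
topologies = [random_connected_graph(20, 0.3, rng) for _ in range(10)]
print("edges per topology:", [int(a.sum() // 2) for a in topologies])
```

With 20 agents and a connection probability of 0.3, most draws are already connected, so the rejection loop rarely needs more than a few attempts.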

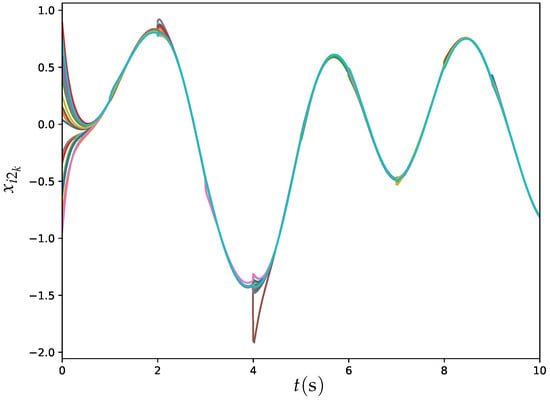

Figure 11.

Trajectories of for all agents synthesized by ET-DTZNN algorithm when solving DTDTVO problem within 20 agents under dynamic topology with s.

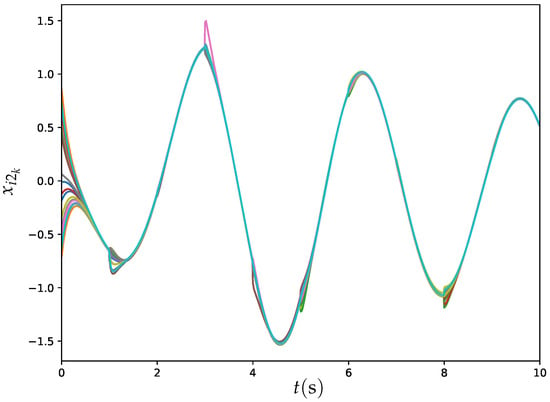

Figure 12.

Trajectories of for all agents synthesized by ET-DTZNN algorithm when solving DTDTVO problem within 20 agents under dynamic topology with s.

Figure 13.

Trajectories of MSSREs for ET-DTZNN algorithm when solving DTDTVO problem within 20 agents under dynamic topology.

Figure 11 and Figure 12 illustrate the trajectories of and for all agents synthesized by ET-DTZNN Algorithm (22) under dynamic topology with s and . The experimental results depicted in Figure 11 and Figure 12 illustrate the effectiveness of the ET-DTZNN algorithm in achieving convergence and consensus for all agents in a large MAS under dynamic topology. The dynamic topology introduces noticeable perturbations at each switching instant (e.g., at ), manifesting as slight discontinuities or oscillations in the trajectories. These perturbations are more pronounced in the early stages when the states are farther apart but diminish as consensus is approached, indicating the robustness of the ET-DTZNN algorithm to topological changes. The impact of the dynamic topology is evident in the small, periodic deviations from smooth convergence, yet the algorithm maintains overall stability and achieves consensus, highlighting its adaptability to frequent structural changes in the communication network.

Additionally, experiments are conducted with the ET-DTZNN algorithm solving the DTDTVO problem in a large MAS with different . Figure 13 displays a semi-log plot illustrating the MSSRE over a 10-second interval. This plot evaluates the ET-DTZNN algorithm’s performance in solving a DTDTVO problem with 20 agents under dynamic topology. Two trials are compared, with the blue dashed line representing s and the orange dotted line depicting s. The MSSRE trajectories demonstrate the algorithm’s convergence despite the dynamic topology, with both experiments showing a rapid initial decrease followed by periodic fluctuations. For s, the MSSRE drops from to around within the first second, while for s, it decreases to around , reflecting the theoretical error bound. The dynamic topology introduces noticeable spikes in the MSSRE at each switching instant (e.g., ), as the sudden change in communication structure perturbs the consensus process. These spikes are more pronounced for s due to the larger sampling interval, which limits the algorithm’s ability to quickly adapt to topological changes. In contrast, the smaller s, combined with a larger , results in a lower MSSRE and smaller fluctuations, indicating better adaptability to the dynamic topology. Despite these perturbations, the MSSRE remains bounded, underscoring the robustness of ET-DTZNN in handling frequent topological switches while maintaining effective distributed optimization.

It is worth mentioning that the fully distributed nature of the ET-DTZNN algorithm ensures that each agent’s computation time per iteration depends primarily on the size of its local neighborhood rather than the total number of agents in the MAS. As shown in Equation (22), each agent computes its update using only its own objective function and the states of its neighbors, resulting in a computational complexity that scales with the degree of the agent in graph G. This design keeps per-agent computation lightweight even as the system size grows. Furthermore, the event-triggered mechanism, governed by the condition in Equation (17), reduces communication overhead by limiting inter-agent exchanges to instances when the local error exceeds a threshold relative to . This approach reduces the number of transmissions and thus the exposure to communication delays, a critical factor in real-time applications, while retaining the rapid convergence observed in Figure 8 and Figure 11 across both static and dynamic topologies.

7. Conclusions

To address the DTDTVO problem for MASs characterized by time-varying objective functions, a novel ET-CTZNN model is introduced. This model effectively tackles the CTDTVO problem using a semi-centralized approach. Building upon the ET-CTZNN framework, the ET-DTZNN algorithm is developed. By discretizing the ET-CTZNN model using the Euler formula, the ET-DTZNN algorithm enables agents to compute optimal solutions based on local information and communicate with neighbors only when specific event-triggered conditions are met, significantly reducing communication consumption. For both the ET-CTZNN model and the ET-DTZNN algorithm, their convergence theorems are thoroughly validated. Numerical experiments highlight the effectiveness of the proposed ET-DTZNN algorithm, showcasing its precision and enhanced communication efficiency. Looking forward, the ET-DTZNN algorithm shows potential for applications in smart grids, sensor networks, and collaborative robotics, where it can optimize time-varying objectives while minimizing communication. However, the ET-DTZNN algorithm assumes strong convexity of objective functions, uses a static event-triggering threshold, and relies on Euler discretization. Future work may focus on real-world validation to address challenges like distributed non-convex optimization, integrating adaptive event-triggering for better adaptability, and exploring higher-order discretization methods like Runge–Kutta to enhance stability and accuracy.

Author Contributions

Conceptualization, L.H.; methodology, L.H. and H.C.; software, L.H.; validation, H.C. and Y.Z.; formal analysis, H.C. and L.H.; investigation, H.C. and L.H.; resources, L.H. and Y.Z.; data curation, L.H.; writing—original draft preparation, L.H.; writing—review and editing, L.H. and Y.Z.; visualization, L.H. and Y.Z.; supervision, H.C. and Y.Z.; project administration, Y.Z.; funding acquisition, H.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the China National Key R&D Program (Grant No. 2022ZD0119602), the National Natural Science Foundation of China (Grant No. 62376290), and the Natural Science Foundation of Guangdong Province (Grant No. 2024A1515011016).

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors express their sincere thanks to Sun Yat-sen University for its support and assistance in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this paper:

| Abbreviation | Definition |
|---|---|
| DTVO | Distributed Time-Varying Optimization |
| MASs | Multi-Agent Systems |
| ZNN | Zeroing Neural Network |
| CTDTVO | Continuous-Time Distributed Time-Varying Optimization |
| DTDTVO | Discrete-Time Distributed Time-Varying Optimization |
| CTZNN | Continuous-Time Zeroing Neural Network |
| DTZNN | Discrete-Time Zeroing Neural Network |
| ET-CTZNN | Event-Triggered Continuous-Time Zeroing Neural Network |
| ET-DTZNN | Event-Triggered Discrete-Time Zeroing Neural Network |
| MSSRE | Maximum Steady-State Residual Error |
| MSSD | Maximum Steady-State Deviation |
| GNN | Gradient Neural Network |
| PSGNN | Periodic Sampling Gradient Neural Network |

References

- Patari, N.; Venkataramanan, V.; Srivastava, A.; Molzahn, D.K.; Li, N.; Annaswamy, A. Distributed optimization in distribution systems: Use cases, limitations, and research needs. IEEE Trans. Power Syst. 2022, 37, 3469–3481.

- Cavraro, G.; Bolognani, S.; Carli, R.; Zampieri, S. On the need for communication for voltage regulation of power distribution grids. IEEE Trans. Control Netw. Syst. 2019, 6, 1111–1123.

- He, L.; Cheng, H.; Zhang, Y. Centralized and decentralized event-triggered Nash equilibrium-seeking strategies for heterogeneous multi-agent systems. Mathematics 2025, 13, 419.

- Molzahn, D.K.; Dörfler, F.; Sandberg, H.; Low, S.H.; Chakrabarti, S.; Baldick, R.; Lavaei, J. A survey of distributed optimization and control algorithms for electric power systems. IEEE Trans. Smart Grid 2017, 8, 2941–2962.

- Pan, P.; Chen, G.; Shi, H.; Zha, X.; Huang, Z. Distributed robust optimization method for active distribution network with variable-speed pumped storage. Electronics 2024, 13, 3317.

- Luo, S.; Peng, K.; Hu, C.; Ma, R. Consensus-based distributed optimal dispatch of integrated energy microgrid. Electronics 2023, 12, 1468.

- Tariq, M.I.; Mansoor, M.; Mirza, A.F.; Khan, N.M.; Zafar, M.H.; Kouzani, A.Z.; Mahmud, M.A.P. Optimal control of centralized thermoelectric generation system under nonuniform temperature distribution using barnacles mating optimization algorithm. Electronics 2021, 10, 2839.

- Yang, S.; Shen, Y.; Cao, J.; Huang, T. Distributed heavy-ball over time-varying digraphs with Barzilai-Borwein step sizes. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 4011–4021.

- An, W.; Ding, D.; Dong, H.; Shen, B.; Sun, L. Privacy-preserving distributed optimization for economic dispatch over balanced directed networks. IEEE Trans. Inf. Forensics Secur. 2024, 20, 1362–1373.

- Liu, M.; Li, Y.; Chen, Y.; Qi, Y.; Jin, L. A distributed competitive and collaborative coordination for multirobot systems. IEEE Trans. Mobile Comput. 2024, 23, 11436–11448.

- Huang, B.; Zou, Y.; Chen, F.; Meng, Z. Distributed time-varying economic dispatch via a prediction-correction method. IEEE Trans. Circuits Syst. I 2022, 69, 4215–4224.

- He, S.; He, X.; Huang, T. A continuous-time consensus algorithm using neurodynamic system for distributed time-varying optimization with inequality constraints. J. Franklin Inst. 2021, 358, 6741–6758.

- Sun, S.; Xu, J.; Ren, W. Distributed continuous-time algorithms for time-varying constrained convex optimization. IEEE Trans. Autom. Control 2023, 68, 3931–3945.

- Zheng, Y.; Liu, Q.; Wang, J. A specified-time convergent multiagent system for distributed optimization with a time-varying objective function. IEEE Trans. Autom. Control 2023, 68, 1590–1605.

- Wang, B.; Sun, S.; Ren, W. Distributed time-varying quadratic optimal resource allocation subject to nonidentical time-varying Hessians with application to multiquadrotor hose transportation. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 6109–6119.

- Ding, Z. Distributed time-varying optimization—An output regulation approach. IEEE Trans. Cybern. 2024, 54, 1362–1373.

- Rahili, S.; Ren, W. Distributed continuous-time convex optimization with time-varying cost functions. IEEE Trans. Autom. Control 2017, 62, 1590–1605.

- Zhang, Y.; Jiang, D.; Wang, J. A recurrent neural network for solving Sylvester equation with time-varying coefficients. IEEE Trans. Neural Netw. 2002, 13, 1053–1063.

- Zhang, Y.; He, L.; Hu, C.; Guo, J.; Li, J.; Shi, Y. General four-step discrete-time zeroing and derivative dynamics applied to time-varying nonlinear optimization. J. Comput. Appl. Math. 2019, 347, 314–329.

- Liao, B.; Han, L.; He, Y.; Cao, X.; Li, J. Prescribed-time convergent adaptive ZNN for time-varying matrix inversion under harmonic noise. Electronics 2022, 11, 1636.

- Zhou, P.; Tan, M.; Ji, J.; Jin, J. Design and analysis of anti-noise parameter-variable zeroing neural network for dynamic complex matrix inversion and manipulator trajectory tracking. Electronics 2022, 11, 824.

- Xiao, L.; Luo, J.; Li, J.; Jia, L.; Li, J. Fixed-time consensus for multiagent systems under switching topology: A distributed zeroing neural network-based method. IEEE Syst. Man Cybern. Mag. 2024, 10, 44–55.

- Liao, B.; Wang, T.; Cao, X.; Hua, C.; Li, S. A novel zeroing neural dynamics for real-time management of multi-vehicle cooperation. IEEE Trans. Intell. Veh. 2024, early access, 1–16.

- Tan, N.; Yu, P.; Zhong, Z.; Zhang, Y. Data-driven control for continuum robots based on discrete zeroing neural networks. IEEE Trans. Ind. Inform. 2023, 19, 7088–7098.

- Jin, L.; Li, S.; Xiao, L.; Lu, R.; Liao, B. Cooperative motion generation in a distributed network of redundant robot manipulators with noises. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 1715–1724.

- Chen, J.; Pan, Y.; Zhang, Y. ZNN continuous model and discrete algorithm for temporally variant optimization with nonlinear equation constraints via novel td formula. IEEE Trans. Syst. Man Cybern. Syst. 2024, 54, 3994–4004.

- Zuo, Q.; Li, K.; Xiao, L.; Wang, Y.; Li, K. On generalized zeroing neural network under discrete and distributed time delays and its application to dynamic Lyapunov equation. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 5114–5126.

- Dai, J.; Tan, P.; Xiao, L.; Jia, L.; He, Y.; Luo, J. A fuzzy adaptive zeroing neural network model with event-triggered control for time-varying matrix inversion. IEEE Trans. Fuzzy Syst. 2023, 31, 3974–3983.

- Kong, Y.; Zeng, X.; Jiang, Y.; Sun, D. A predefined time fuzzy neural solution with event-triggered mechanism to kinematic planning of manipulator with physical constraints. IEEE Trans. Fuzzy Syst. 2024, 32, 4646–4659.

- Golub, G.H.; Van Loan, C.F. Matrix Computations, 4th ed.; Johns Hopkins University Press: Baltimore, MD, USA, 2013; pp. 22–33.

- Diestel, R. Graph Theory, 5th ed.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–33.

- Gharesifard, B.; Cortés, J. Distributed continuous-time convex optimization on weight-balanced digraphs. IEEE Trans. Autom. Control 2014, 59, 781–786.

- Khalil, H.K. Nonlinear Systems, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2002; pp. 111–181.

- Zhang, Z.; Yang, S.; Zheng, L. A penalty strategy combined varying-parameter recurrent neural network for solving time-varying multi-type constrained quadratic programming problems. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 2993–3004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).