Abstract

Background: With rapid urbanization, the need to assess building safety and durability is becoming increasingly prominent. Objective: This paper reviews the strengths and weaknesses of the main non-destructive testing (NDT) techniques in construction engineering, with a focus on the application of deep learning in image-based NDT. Design: We surveyed more than 80 papers published within the last decade to assess the role of deep learning combined with NDT in automated construction inspection. Results: Deep learning significantly enhances defect detection accuracy and efficiency in construction NDT, particularly in image-based techniques such as infrared thermography, ground-penetrating radar, and ultrasonic inspection. Multi-technology fusion and data integration effectively address the limitations of single methods. However, challenges remain, including data complexity, resolution limitations, and insufficient sample sizes in NDT images, which hinder deep learning model training and optimization. Conclusions: This paper summarizes existing research results and discusses future optimization directions for object detection networks applied to NDT defect data, aiming to promote intelligent non-destructive testing of buildings and to provide more efficient and accurate solutions for building maintenance.

1. Introduction

In modern urbanization, concrete materials have become the main construction material for most buildings due to their good mechanical properties and cost-effectiveness [1]. However, during long-term service, buildings inevitably face multiple challenges such as material aging, environmental erosion, and load accumulation, leading to various forms of deterioration of concrete materials, such as cracks, delamination, spalling, voids, leakage, and corrosion of reinforcement [2]. These deterioration phenomena not only seriously affect the structural integrity and safety, but also pose a great challenge to the regular inspection and maintenance of buildings.

Regular inspection and maintenance are essential to ensure the safety and longevity of a building and to detect potential problems early. In practice, however, buildings often lack systematic maintenance inspections, rely on temporary repairs of external damage, and have missing historical inspection reports [3]. Traditional wall defect detection methods, such as manual visual inspection and the knocking (tapping) method, are simple to perform but suffer from low efficiency, poor accuracy, and strong subjectivity, and so struggle to meet the needs of modern building maintenance. As a result, advanced NDT techniques are evolving from mere defect identification toward a comprehensive assessment of the nature, number, location, and size of defects, as well as their impact on the performance of the component under test [4]. Although NDT technology has made significant progress, interpreting the final damage detection results still requires professional judgment, which makes rapid detection difficult.

In this context, the great potential of deep learning in automatic image analysis and computer vision tasks provides new ideas for building condition inspection, allowing building conditions to be assessed more efficiently and accurately. Deep learning detection models can be classified by the number of stages in the detection process, by whether they rely on anchor boxes, and by the labeling scheme: single-stage models (e.g., YOLO and SSD) versus two-stage models (e.g., Faster R-CNN and Mask R-CNN); anchor-based models (e.g., Faster R-CNN) versus anchor-free models (e.g., CornerNet); and region proposal-based models (e.g., Faster R-CNN) versus keypoint-based models (e.g., CenterNet). These classifications offer complementary perspectives on the application of deep learning to building inspection. In this paper, we therefore examine, through a literature review, the application of deep learning to non-destructive testing of building conditions, with the aim of providing smarter and more efficient solutions for regular building maintenance.

This paper reviews the application progress of deep learning in the field of building non-destructive testing (NDT). Section 2 describes the methods used in this review. Section 3 discusses the combination of deep learning with image-based NDT technologies, such as infrared thermography, ground-penetrating radar, and ultrasonic detection, and the integration of multiple inspection methods to improve inspection accuracy. Section 4 presents future optimization directions for NDT images, aiming to address the issues of data complexity and scarcity. Finally, Section 5 summarizes the application progress and development trends of deep learning in NDT.

2. Methods

The reporting process adhered to the PRISMA-ScR checklist, which is provided in the Supplementary Materials.

2.1. Review Question

Our research revolves around the following key questions: What are the strengths and limitations of NDT techniques in construction? What is the current status of deep learning applications in NDT, and what are the main challenges, future trends, and optimization directions?

2.2. Eligibility Criteria

This study includes publications from the last decade, with a focus on the last five years of the literature to reflect the latest developments in NDT and deep learning in the construction field. The most recent search was conducted on 18 February 2025.

2.3. Exclusion Criteria

The exclusion criteria were as follows: literature not relevant to the research areas of NDT and deep learning; literature published more than a decade ago, unless it is considered classic literature or grounded theory; literature from non-academic journals, conference proceedings, or non-peer-reviewed preprints such as arXiv; and literature that lacks full-text access or duplicates other content.

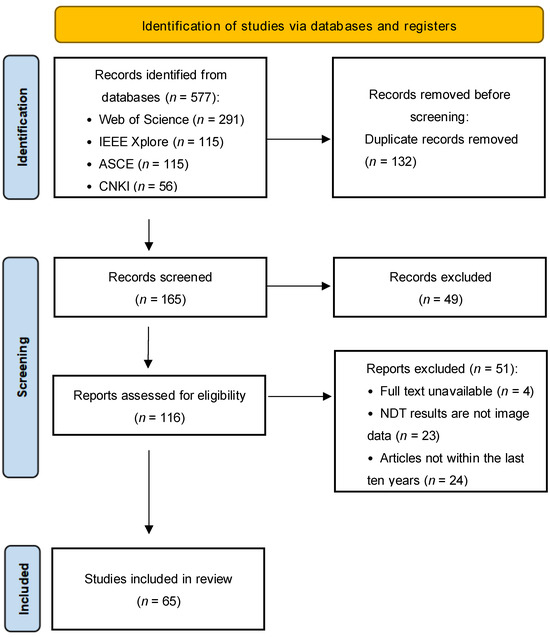

2.4. Search Strategy

We systematically searched four databases, namely, Web of Science, IEEE Xplore, ASCE, and CNKI, using keywords such as “deep learning”, “nondestructive testing”, “building inspection”, “concrete deterioration”, and “image processing”. Initially, we obtained 547 articles. After eliminating duplicates, early publications, and irrelevant literature, the remaining records were screened and their full texts evaluated to determine the studies included in the systematic review. The flow chart of the PRISMA method is shown in Figure 1.
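The screening logic described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Record` fields, the `is_classic` flag, and the title-based duplicate check are hypothetical simplifications, not the procedure actually used for this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # All fields are hypothetical; a real export from a database has many more.
    title: str
    year: int
    peer_reviewed: bool
    has_full_text: bool
    on_topic: bool
    is_classic: bool = False  # assumed flag for the "classic literature" exception


def screen(records, search_year=2025, window_years=10):
    """Drop duplicates, then apply the exclusion criteria to each record."""
    seen_titles = set()
    kept = []
    for r in records:
        key = " ".join(r.title.lower().split())  # normalise case and whitespace
        if key in seen_titles:
            continue  # duplicate of an earlier record
        seen_titles.add(key)
        too_old = r.year < search_year - window_years and not r.is_classic
        if too_old or not r.on_topic or not r.peer_reviewed or not r.has_full_text:
            continue
        kept.append(r)
    return kept
```

In practice, duplicate detection would normally rely on identifiers such as DOIs rather than titles; the title key is used here only to keep the sketch self-contained.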

Figure 1.

PRISMA flowchart.

2.5. Data Extraction and Data Synthesis

In the data extraction stage, we collected key information from the literature, including publication year, authors, research objectives, research methodology, main findings, and impacts. We also recorded the types of deep learning models, input/output data, and their main results. In the data synthesis stage, a thematic synthesis approach was used to organize the data into categories such as infrared thermography, ground-penetrating radar, ultrasonic detection technology, synthesis of multiple NDT methods, and deep learning-assisted inspection.

3. Discussion

3.1. Deep Learning-Assisted Infrared Thermography Technology

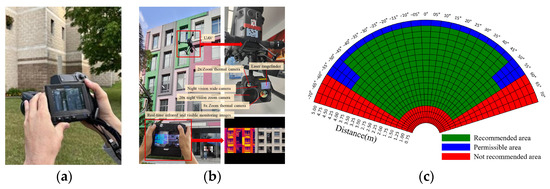

Infrared thermography (IRT) is a non-contact detection method; it can quickly scan a large area, capture the thermal radiation of the object [5], and convert it into a visualized thermal image to achieve rapid detection of surface and internal material defects, including heat loss defects, water infiltration of building envelopes, as well as internal defects such as voids, cracks, delamination, and so on [6,7,8,9]. The device is shown in Figure 2a [10]. The technology is divided into two categories, passive and active, according to the heat source: passive IRT naturally captures the thermal radiation emitted by the object itself, and is suitable for surface defect detection and thermal efficiency assessment, while active IRT excites deep thermal waves with the help of an external heat source and is specialized in the detection of internal defects. At the application level, IRT technology can be divided into qualitative and quantitative modes. Qualitative IRT focuses on intuitive temperature distribution analysis and the interpretation of pixel values in thermal images, and is often used for the detection of defective areas; quantitative IRT delves deeper into the subtle changes in temperature values and material properties to achieve the quantitative assessment [11].
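As a minimal sketch of the qualitative mode described above, the following example flags pixels whose temperature deviates from the scene median by more than a fixed contrast. It assumes the thermogram is already available as a grid of temperatures in degrees Celsius; the function name `flag_thermal_anomalies` and the 1 °C threshold are illustrative assumptions, not values taken from the cited studies.

```python
from statistics import median


def flag_thermal_anomalies(thermogram, delta_t=1.0):
    """Return a binary mask with 1 where |T - median(T)| exceeds delta_t.

    thermogram: list of rows, each a list of temperatures in degrees Celsius.
    delta_t: assumed temperature contrast threshold (illustrative only).
    """
    flat = [t for row in thermogram for t in row]
    reference = median(flat)  # robust estimate of the sound-area temperature
    return [[1 if abs(t - reference) > delta_t else 0 for t in row]
            for row in thermogram]


scene = [
    [20.0, 20.1, 20.0],
    [20.0, 22.5, 20.1],
    [19.9, 20.0, 20.0],
]
mask = flag_thermal_anomalies(scene)
```

Quantitative IRT would go further than such a mask, for example by relating the temperature contrast and its evolution over time to defect depth or material properties.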

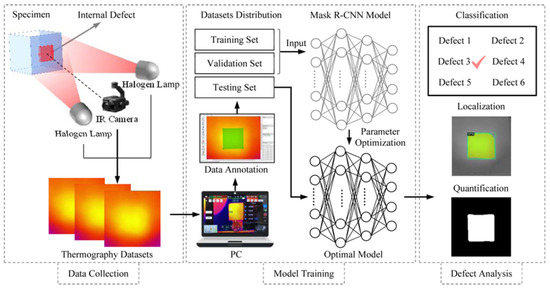

However, the accuracy and efficiency of infrared thermography are susceptible to interference from environmental factors such as atmospheric conditions and solar radiation. To address this challenge, the aerial infrared thermography (AIRT) method [12] was proposed, which mounts the data acquisition equipment on a UAV and thereby solves the monitoring problem in complex or inaccessible locations (such as under bridges and behind high-rise buildings), as shown in Figure 2b [13]. In addition, by optimizing the flight plan and data acquisition strategy and flexibly adjusting the camera angle, flight distance, and trajectory, data can be acquired quickly and efficiently, and the adverse impact of environmental factors on data acquisition is reduced. Ma et al. [14] analyzed the impact of camera angle, distance, and illumination on the detection of exterior wall tile detachment and provided suggestions on the flight area and time of the UAV, as shown in Figure 2c, thereby improving data quality. In thermal image processing, Deng et al. [15] combined IRT with the Mask R-CNN algorithm in an intelligent inspection system to automatically classify internal defects in concrete structures, as illustrated in Figure 3. Cheng et al. [16] proposed a deep learning model based on an encoder–decoder architecture to achieve pixel-level segmentation of delaminated regions in thermal images. Ali et al. [10] demonstrated the effectiveness of the YOLOv7 algorithm in improving the automation and accuracy of heat loss detection on building facades. Currently, the fusion of IRT and deep learning algorithms mostly focuses on qualitative analysis, although a lightweight internal damage segmentation network (IDSNet) has been proposed for real-time region segmentation of thermal images of concrete slabs to quantify internal damage such as delamination, cracks, voids, and honeycombing [17].

Figure 2.

(a) Handheld infrared thermography equipment [10]; (b) UAV thermal imaging [13]; and (c) UAV flight area proposal map [14]. Reproduced with permission from Ref. [10]. Copyright 2024 Elsevier. Reproduced with permission from Ref. [13]. Copyright 2023 Elsevier. Reproduced with permission from Ref. [14]. Copyright 2024 Elsevier.

Figure 3.

Schematic diagram of IRT combined with Mask R-CNN algorithm for detecting internal defects in concrete structures [15]. Reproduced with permission from Ref. [15]. Copyright 2023 Elsevier.

3.2. Deep Learning-Assisted Ground-Penetrating Radar Technology

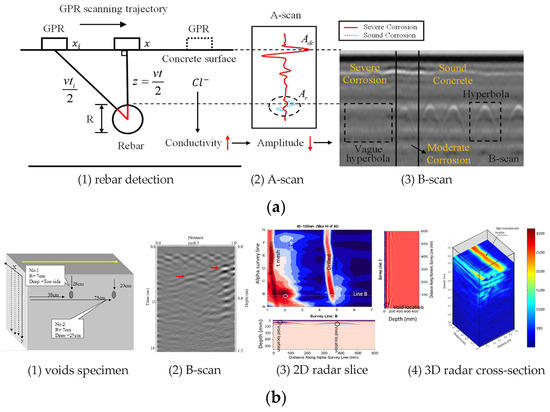

Ground-penetrating radar (GPR) technology has demonstrated unique advantages in many key engineering fields, such as pavement defect detection, bridge quality assessment, railway monitoring, and tunnel lining inspection [18,19,20]. The technology generates detailed images of subsurface structures through the propagation and reflection of high-frequency electromagnetic waves in the medium, providing intuitive information about the structure’s interior. In a GPR image, the vertices of the scattering hyperbolas are key indicators that mark the location of target objects, which greatly helps engineers judge the location of defects or objects inside the structure. In the inspection of concrete structures, GPR not only accurately locates reinforcement bars and evaluates corrosion conditions [21,22], but also effectively reveals cracks, leaks, grouting defects, voids, and other internal defects [23,24], while assisting in the measurement of material delamination and concrete cover thickness. The basic principle and images of GPR inspection are shown in Figure 4 [22,25]. To meet different detection depth requirements, high-frequency antennas, with their high resolution, are the preferred choice for detecting small objects (such as defects and reinforcement bars) in concrete, road, and bridge surface structures. Low-frequency antennas have lower resolution, but they can penetrate deeper into the ground and provide valuable information for deep structure detection [26].
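The relationship between travel time, depth, and the hyperbolic signature mentioned above can be illustrated with a short sketch. It assumes a homogeneous, low-loss, non-magnetic medium, so that the wave velocity is the vacuum speed of light divided by the square root of the relative permittivity; the function names and the permittivity value of 9 are illustrative assumptions rather than values from the cited studies.

```python
import math

C_M_PER_NS = 0.299792458  # speed of light in vacuum, in metres per nanosecond


def wave_velocity(rel_permittivity):
    """Wave velocity (m/ns) in a low-loss, non-magnetic medium."""
    return C_M_PER_NS / math.sqrt(rel_permittivity)


def depth_from_twt(twt_ns, rel_permittivity):
    """Reflector depth (m) from the two-way travel time at the hyperbola vertex."""
    return wave_velocity(rel_permittivity) * twt_ns / 2.0


def hyperbola_twt(x_m, x0_m, depth_m, rel_permittivity):
    """Two-way travel time (ns) to a point target at (x0_m, depth_m)
    when the antenna is at horizontal position x_m."""
    v = wave_velocity(rel_permittivity)
    return 2.0 * math.hypot(depth_m, x_m - x0_m) / v


# Illustrative values only: a target 0.10 m deep in a medium with permittivity 9.
vertex_time = hyperbola_twt(0.50, 0.50, 0.10, 9.0)
recovered_depth = depth_from_twt(vertex_time, 9.0)
```

The travel time is shortest when the antenna is directly above the target, which is why the hyperbola vertex marks the target position. The sketch also shows why the assumed dielectric constant matters: an incorrect permittivity scales every estimated depth by the same factor.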

Figure 4.

GPR detection principle and image: (a) steel corrosion detection [22] and (b) test results of hole samples in concrete test blocks and various manifestations of GPR [25]. Reproduced with permission from Ref. [22]. Copyright 2023 ASCE. Reproduced with permission from Ref. [25]. Copyright 2024 King Saud University.

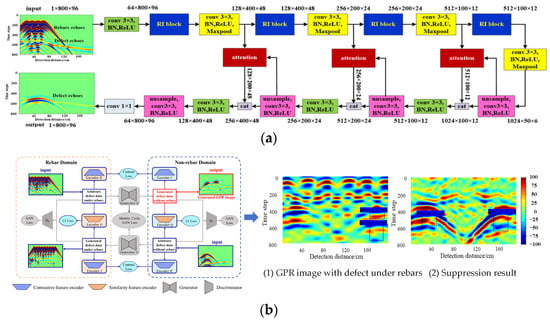

With the advancement of image processing technology, researchers have been exploring ways to improve the accuracy of GPR data analysis. Dinh et al. [27] effectively reduced false anomalies by combining traditional processing techniques, such as migration, with an automated CNN-based algorithm, but the algorithm requires a large amount of training data and does not apply to all types of bridge overlays. Subsequently, Liu et al. [28] introduced transfer learning to optimize SSD model training, which alleviated the dependence on large amounts of training data. In quantitative rebar size detection, however, it is difficult to achieve the required accuracy by assessing rebar size directly from GPR images. For this reason, Chang et al. [29] constructed an electromagnetic physical model that accounts for the rebar radius and achieved quantitative detection of the rebar radius with an error within 7%. Many factors can affect GPR detection results; for example, the thickness of the overlay, the moisture content of the structure, and the rebar spacing all influence the GPR signal. Notably, the strong reflectivity of metallic materials causes the reinforcement layer in concrete to shield the GPR signal, masking the echoes of damage beneath the reinforcement and making damage signals difficult to identify accurately. This shielding also complicates the detection of grouting compactness in steel pipes, making it challenging to assess the integrity of such structures using GPR alone. To address rebar interference in defect detection, Wang et al. [30] designed a deep neural network integrating a spatial attention module and a residual inception block, as shown in Figure 5a, to strengthen the network’s ability to identify weak defect signals and accurately reconstruct multiple defect reflections. With this design, the rebar signals in the GPR image were effectively filtered out and the echoes of defects under the rebar layer were enhanced, which improved the recognition accuracy from 0.208 to 0.850. They subsequently proposed an unsupervised rebar clutter suppression model based on CycleGAN that combines a contrast feature encoder and a similarity feature encoder to address the scarcity of images from actual projects, as shown in Figure 5b, which effectively reduces false and missed detections of defects [31].

Figure 5.

The conversion of the ground-penetrating radar (GPR) image of the defect under the rebar into a network architecture diagram containing only the defect image: (a) the overall architecture of supervised deep neural networks [30] and (b) the unsupervised reinforcement clutter suppression model based on the CycleGAN framework and real GPR data identification results [31]. Reproduced with permission from Ref. [30]. Copyright 2021 IEEE. Copyright 2021 Elsevier. Reproduced with permission from Ref. [31]. Copyright 2023 Elsevier.

3.3. Deep Learning-Assisted Ultrasonic Detection Technology

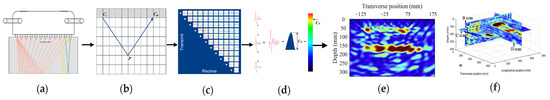

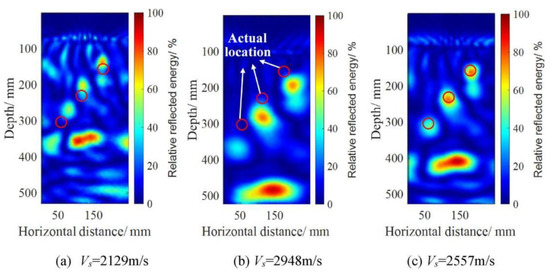

Ultrasonic inspection analyzes the propagation characteristics of ultrasonic waves in a medium, such as reflection, refraction, and scattering, and is widely used for thickness measurement, delamination detection, and the inspection of concrete members, tunnel linings, under-pavement voids, and reinforcing steel [32,33,34]. Combined with advanced imaging techniques such as the synthetic aperture focusing technique (SAFT) (shown in Figure 6 [35,36]), ultrasonic shear-wave tomography (USWT) [33], and the total focusing method (TFM) [37], ultrasonic inspection can acquire high-resolution image datasets, which greatly facilitates the accurate identification of defects. During ultrasonic imaging, parameter settings affect imaging accuracy; among them, the wave velocity is crucial for the depth accuracy of internal defect localization [36]. Figure 7 [38] shows the imaging results at different wave velocities.
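The coherent summation behind SAFT can be sketched as a delay-and-sum loop. The example below is a simplified illustration, not the processing chain of the cited devices: it assumes pulse-echo acquisition (each transducer position both transmits and receives), ideal spike-like echoes, and a single known wave velocity, whereas the array equipment in Figure 6 records signals between pairs of transducers. All function names and numerical values are assumptions chosen for illustration.

```python
import math


def saft_image(a_scans, sensor_x, fs_hz, velocity, grid_x, grid_z):
    """Delay-and-sum reconstruction for pulse-echo A-scans.

    For each pixel (x, z), every A-scan is sampled at the round-trip
    travel time from its sensor to the pixel, and the samples are summed.
    """
    image = []
    for z in grid_z:
        row = []
        for x in grid_x:
            total = 0.0
            for xs, trace in zip(sensor_x, a_scans):
                delay = 2.0 * math.hypot(x - xs, z) / velocity
                idx = round(delay * fs_hz)
                if 0 <= idx < len(trace):
                    total += trace[idx]
            row.append(abs(total))
        image.append(row)
    return image


def simulate_point_reflector(sensor_x, fs_hz, velocity, x0, z0, n_samples):
    """Ideal A-scans: a unit spike at the round-trip time to (x0, z0)."""
    a_scans = []
    for xs in sensor_x:
        trace = [0.0] * n_samples
        idx = round(2.0 * math.hypot(x0 - xs, z0) / velocity * fs_hz)
        trace[idx] = 1.0
        a_scans.append(trace)
    return a_scans


# Illustrative setup: 11 sensor positions, 1 MHz sampling, 2500 m/s velocity.
sensor_x = [i * 0.03 for i in range(11)]
grid_x = [i * 0.01 for i in range(31)]
grid_z = [0.02 + i * 0.01 for i in range(19)]
x0, z0 = grid_x[15], grid_z[8]  # reflector placed on a grid node
scans = simulate_point_reflector(sensor_x, 1e6, 2500.0, x0, z0, 400)
image = saft_image(scans, sensor_x, 1e6, 2500.0, grid_x, grid_z)
```

Because each delay is computed from the assumed velocity, reconstructing with a velocity that differs from the true one places the echoes at the wrong depth and weakens the focus, which is consistent with the sensitivity to wave velocity noted above.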

Figure 6.

Underlying operating process of array ultrasound: (a) array ultrasound signal emission and reception [35]; (b) time domain signal acquisition [35]; (c) 66 unrelated A-scans [35]; (d) coherent summation and color assignment [35]; (e) single B-scan; and (f) panoramic B-scan, C-scan, and D-scan [36]. Reproduced with permission from Ref. [35]. Copyright 2024 Elsevier. Reproduced with permission from Ref. [36]. Copyright 2018 Elsevier.

Figure 7.

Ultrasound imaging at different wave speeds, where the red circle indicates the actual location of the defect [38]. Reproduced with permission from Ref. [38]. Copyright 2023 Elsevier.

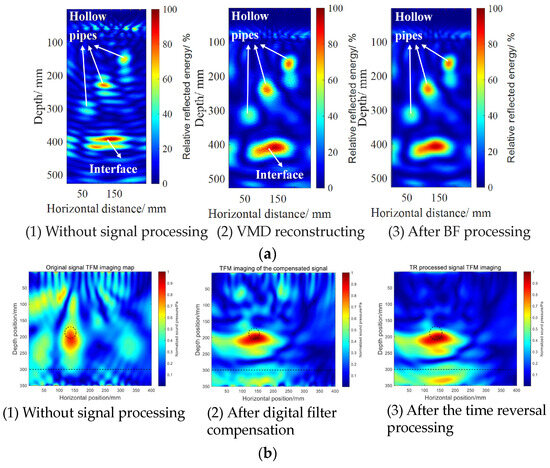

Although the combination of ultrasound imaging and deep learning models has been studied less than thermography and GPR images for the automatic identification of internal concrete defects, significant results have been achieved. Słoński et al. [39] made the first attempt to process ultrasound images with Convolutional Neural Networks (CNNs), using a pre-trained VGG-16 model to classify a single type of defect in a simulated PVC pipe. Kuchipudi et al. [40] went further by optimizing defect detection and segmentation in ultrasonic images using a region-based CNN model combined with the RoIAlign technique, identifying a wide range of defects in concrete, including rebar debonding, delamination, and cracking, with a best mean average precision (mAP) of up to 0.98. Yang et al. [41] used a deep neural network with an encoder-decoder architecture, combined with a feature fusion strategy and residual module optimization, to achieve pixel-level non-destructive detection of cracks as narrow as 1 mm inside reinforced concrete structures and to display the crack location and distribution globally through the alignment technique, with a crack length quantification error of only 6.22%. In addition, Wu et al. [38] focused on improving image resolution and the recognition of small target defects by integrating variational mode decomposition and bilateral filtering and introducing an improved YOLOv5s-CBAM network model, as illustrated in Figure 8a, which achieved good results in laboratory tests. However, the model does not achieve high detection accuracy for tunnel lining scenes.
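Two quantities underlie the metrics quoted above. Detection metrics such as mAP count a predicted box as correct when its intersection over union (IoU) with a ground-truth box exceeds a threshold, and a quantification error is typically a relative error against a reference measurement. The sketch below shows both; the function names and the numbers in the usage lines are illustrative assumptions and are not data from the cited studies.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def relative_error_percent(measured, reference):
    """Absolute relative error of a measurement, in percent."""
    return abs(measured - reference) / abs(reference) * 100.0


# Illustrative values only.
overlap = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
length_error = relative_error_percent(94.0, 100.0)
```

Mean average precision then averages, over defect classes, the area under each class's precision-recall curve obtained with such an IoU criterion.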

Figure 8.

Ultrasound image pre-processing: (a) SAFT imaging before and after processing [38] and (b) total focusing method imaging before and after processing, and the dashed lines in the figure represent the locations of the defects and bottoms [37]. Reproduced with permission from Ref. [38]. Copyright 2023 Elsevier. Reproduced with permission from Ref. [37]. Copyright 2023 Elsevier.

Ultrasonic testing still has many limitations in the identification of internal defects of concrete structures. First of all, the detection equipment has high requirements for surface smoothness and is subject to the adjustment of equipment parameters and the resolution of imaging technology. Secondly, there is insufficient research on the automatic detection of various defect characteristics in concrete structures by imaging. Currently, most of the related research work mainly focuses on simulated detection under laboratory conditions, while relatively few studies are conducted in real application scenarios, resulting in limited model generalization capability and a lack of necessary field dataset support for training optimization. Although existing studies have made some progress in simulated laboratory environments, how to effectively translate these results into models and data suitable for real-world applications is still an urgent problem to be solved.

3.4. Integration of Multiple Inspection Methods

The diversity and complexity of NDT techniques are reflected in their inability to single-handedly address the challenges of all types of defects, with each technique possessing unique strengths and limitations. It is these characteristics that have led to the integration of multi-technology systems as an important strategy to ensure the success and accuracy of inspection results.

In integrated applications, different NDT techniques complement one another because they differ in detection depth. Lagüela et al. [42] achieved comprehensive localization from road surface geometry to internal defects through the combined application of terrestrial laser scanning (TLS), GPR, and IRT. Similarly, the joint application of IRT and GPR in building moisture detection provides a reliable basis for moisture tracking and depth assessment through the dual analysis of surface moisture movement and internal water accumulation points [43]. In addition, integrating data from different NDT techniques enables mutual validation and complementarity: IRT helps to confirm whether scattering and attenuation in GPR signals are caused by delamination or moisture, thus reducing misjudgments, while the depth information from GPR provides three-dimensional spatial localization of the temperature anomalies observed by IRT, enabling more accurate identification and assessment of internal corrosion and damage areas [44]. For different inspection objects, each technology also shows unique value. In concrete masonry wall monitoring, for example, non-contact IRT, passive acoustic emission (AE), and active ultrasonic testing (UT) work in concert to comprehensively capture structural damage information through a cross-validation mechanism [45]. For concrete-filled steel tubes, in response to the possible bias of the ultrasonic method in detecting debonding (void) defects, Chen et al. [46] proposed an improved technique combined with IRT, which identified debonding and internal defects in concrete-filled steel tube columns more accurately. The value of integration was further demonstrated by Gucunski et al. [47], who combined five NDE techniques (including impact echo and ground-penetrating radar) for the long-term monitoring of bridge decks and not only confirmed corrosion as the main cause of deck deterioration but also demonstrated the high reproducibility and inter-correlation of these techniques.
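The mutual validation between techniques described above can be pictured as a cell-by-cell comparison of anomaly maps. The following sketch is a deliberately simple decision-level fusion, assuming that the IRT and GPR results have already been co-registered onto the same grid and reduced to binary masks; the function name and the label strings are hypothetical, and the cited studies use their own, more elaborate procedures.

```python
def fuse_anomaly_maps(irt_mask, gpr_mask):
    """Label each grid cell according to which techniques flag it.

    Both inputs are lists of rows of 0/1 values on the same grid.
    """
    labels = {
        (1, 1): "confirmed",  # both techniques agree on an anomaly
        (1, 0): "irt_only",   # surface or thermal indication only
        (0, 1): "gpr_only",   # subsurface indication only
        (0, 0): "sound",
    }
    return [
        [labels[(i, g)] for i, g in zip(irt_row, gpr_row)]
        for irt_row, gpr_row in zip(irt_mask, gpr_mask)
    ]


irt = [[0, 1, 1], [0, 0, 0]]
gpr = [[0, 1, 0], [1, 0, 0]]
fused = fuse_anomaly_maps(irt, gpr)
```

Cells flagged by only one technique are the natural candidates for follow-up, for example to decide whether a GPR anomaly reflects moisture or delamination.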

With the continuous enrichment of NDT tools and methods, more possibilities for multi-technology system integration have opened up. Building information modeling (BIM) is a key tool in the construction industry; a BIM model details the geometry, material properties, internal spatial layout, and semantic information of a building [48]. The integration of BIM models with sensing devices and drone technology is driving the digital transformation of the construction industry. Data collected by drones are used to identify building defects and generate accurate 3D models, while the BIM model provides critical information to the drone to assist in planning flight paths [49], creating a two-way interaction. Unlike the static representations of BIM, digital twins (DT) enable real-time data interaction between virtual models (built with modeling, simulation, computation, and analysis tools) and actual physical objects, such as buildings, by integrating technologies such as laser scanning, sensors, and the Internet of Things [50]. The need to visualize project data in real time has driven the widespread use of DT in the construction industry [51,52]. In the operation and maintenance phase of the building lifecycle, Yiğit et al. [53] used drone photogrammetry and structure from motion (SfM) algorithms to create high-precision 3D digital twins and combined them with deep learning techniques to automatically detect cracks in cultural heritage buildings. To address the high computing resource and technological requirements of large-scale and real-time data processing, Pantoja-Rosero et al. [54] proposed an end-to-end automated process for generating lightweight damage-augmented digital twins (DADT). The method uses multi-view images and artificial intelligence technology to map damage information onto 3D models, significantly improving the efficiency of modeling and data processing and making it more suitable for real-time applications and large-scale deployments. However, the current lack of unified modeling and implementation standards increases the complexity of data integration, technology implementation, and application [55]. Despite significant advances in hybrid inspection systems, cost, environmental suitability, and technological complexity all need to be considered in the future to expand the application of NDT technologies [2].

4. Future Trends and Possible Research Directions

4.1. Deep Learning Background

Deep learning (DL), an important branch of artificial intelligence, has revolutionized the field of image processing since AlexNet’s victory in the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) in 2012 [56]. Deep learning creates a hierarchical representation of input information (e.g., images) through a multilayer network that is automatically adapted during training to learn and extract discriminative features for efficient and accurate object detection and localization in images [57]. In contrast, traditional image processing relies on manually extracted features and machine learning techniques, which not only require domain expertise but also struggle to cope with the high variability and complexity inherent in visual data [58]. While these methods perform reasonably well in specific tasks, they are limited in accuracy and cumbersome to apply, whereas deep learning-based object detection algorithms (e.g., for defect recognition) have demonstrated much higher efficiency and have driven progress in the field of image processing.

Innovations in network architecture are crucial for coping with requirements such as complex datasets. Convolutional Neural Networks (CNNs), nonlinear mapping models trained by supervised learning, have found a wide range of applications in fields such as non-destructive inspection since their introduction by LeCun [27]. As network depth increases, the residual network (ResNet) [59] effectively mitigates the vanishing gradient problem by introducing residual connections, which supports the training of deeper networks and significantly improves model performance. In addition, the densely connected convolutional network (DenseNet) [60] further enhances performance through cross-layer feature reuse. Currently, deep learning object detection models fall mainly into two categories: candidate region methods (e.g., R-CNN, Fast R-CNN, and Faster R-CNN) first select potential target regions and then use CNNs to extract features from and classify them; regression methods (e.g., SSD and the YOLO series) use a single end-to-end CNN to directly predict target bounding boxes and categories, which significantly improves detection speed. The YOLO family, as the mainstream of single-stage object detection algorithms, has been widely used in industry and has evolved continuously from YOLOv1 to YOLOv11. To operate efficiently on resource-constrained devices, lightweight network architectures (e.g., MobileNet [61,62,63] and ShuffleNet [64,65]) significantly reduce the computation and parameter counts of models by introducing techniques such as depthwise separable convolution and group convolution, enabling deep learning to be applied in more practical scenarios. In addition, extensions such as the attention mechanism provide new solutions for deep learning performance optimization. The attention mechanism directs the model to focus on important features by assigning different weights to different information, thus optimizing feature extraction and representation and improving model performance [66]. It covers a variety of types, such as channel attention, spatial attention, self-attention, and combinations of these, which improve the accuracy of the model in handling complex tasks [67].
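The parameter savings from depthwise separable convolution mentioned above can be made concrete with a short count. A standard convolution with a k × k kernel needs k·k·C_in·C_out weights, whereas the depthwise separable version needs k·k·C_in weights for the depthwise step plus C_in·C_out for the pointwise 1 × 1 step. The sketch below ignores bias terms, and the layer sizes in the usage lines are illustrative assumptions.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k convolution followed by a
    pointwise 1 x 1 convolution (bias terms ignored)."""
    return k * k * c_in + c_in * c_out


# Illustrative layer: 3 x 3 kernel, 64 input channels, 128 output channels.
standard = standard_conv_params(3, 64, 128)
separable = depthwise_separable_params(3, 64, 128)
ratio = separable / standard  # equals 1/c_out + 1/k**2
```

For this layer the separable form uses roughly 12% of the weights of the standard convolution, which illustrates why such layers suit resource-constrained inspection devices.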

Non-destructive testing relies on more complex data types, such as thermograms, radargrams, and ultrasound images. These images reveal key information such as the temperature distribution, internal structure, and material properties of an object, but they are usually more complex than RGB images: they are often accompanied by noise and artifacts, are limited in resolution, and come in small sample sizes that require precise annotation and pre-processing by professionals. In addition, because detection objects and purposes differ, a specific sensor usually has to be selected, and even the same sensor needs its parameters (such as dielectric constant and frequency) adjusted for different detection objects. All of these factors increase the difficulty of processing by deep learning models. To further advance the application of deep learning in building inspection, data from multiple sensors need to be integrated to improve the completeness and accuracy of inspections; at the same time, data standardization and sharing platforms should be improved and the accumulation of high-quality datasets promoted, so as to adapt to resource-constrained detection environments.

4.2. Data Enhancement

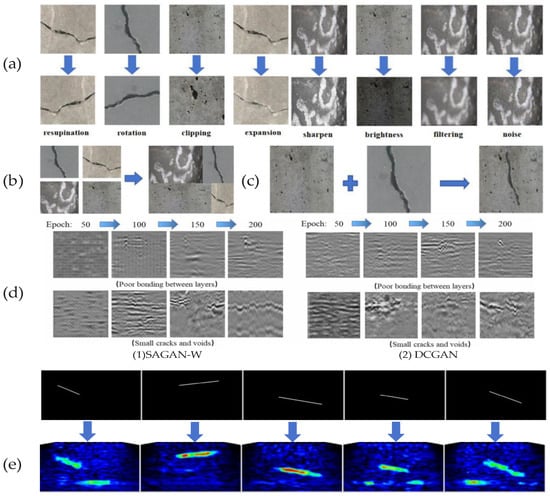

To overcome the difficulties of data processing in NDT, brightness and contrast adjustment, perspective transformation, flipping, cropping, scaling, and translation are used as the basic means of supervised data augmentation (data enhancement) [68], as shown in Figure 9a [69]. These basic image processing methods can improve the generalization ability and accuracy of a model in object detection tasks to a certain extent, but they are limited in how much they expand data diversity. For this reason, YOLOv5 adopts the mosaic and mix-up augmentation techniques, as shown in Figure 9b,c [69], which effectively enrich the background environment of defect detection and enhance the model’s ability to deal with complex scenes (e.g., small, occluded, and blurred targets) [70].
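Two of the operations named above can be sketched briefly: a horizontal flip that also updates the bounding boxes, and mix-up, which blends two images pixel by pixel with a weight λ. The sketch assumes single-channel images stored as lists of rows and boxes given as (x1, y1, x2, y2) in pixel-edge coordinates; the function names are illustrative and do not correspond to the YOLOv5 implementation.

```python
def horizontal_flip(image, boxes):
    """Mirror an image left to right and move its boxes accordingly."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


def mixup(image_a, image_b, lam):
    """Blend two equally sized images: lam * a + (1 - lam) * b."""
    return [
        [lam * pa + (1.0 - lam) * pb for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]


flipped, moved = horizontal_flip([[1, 2, 3, 4]], [(0, 0, 1, 1)])
blended = mixup([[0.0, 10.0]], [[10.0, 0.0]], 0.25)
```

In detection tasks the annotations of both source images are usually kept for the blended image, and λ is commonly drawn from a Beta distribution rather than fixed as in this example.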

Figure 9.

Data enhancement technology: (a) single-sample data enhancement [69]; (b) mix-up data enhancement [69]; (c) mosaic data enhancement [69]; (d) radar images generated by SAGAN-W and DCGAN networks [71]; and (e) supervised generative adversarial DNN network generates crack ultrasound images [35]. Reproduced with permission from Ref. [69]. Copyright 2024 Institute of Chemical Technology. Reproduced with permission from Ref. [71]. Copyright 2024 Elsevier. Reproduced with permission from Ref. [35]. Copyright 2024 Elsevier.

Meanwhile, the rise of Generative Adversarial Networks (GANs) has opened new options for data enhancement. GANs generate realistic synthetic data through adversarial training of a generator against a discriminator. Among them, the Deep Convolutional Generative Adversarial Network (DCGAN), a GAN variant, has been combined with a variational autoencoder (VAE) and the Adam optimizer to generate realistic asphalt pavement crack images, alleviating the constraint that sample scarcity places on model training [71]. To deal with the mode collapse problem of DCGAN and the limitation of convolutional GANs in generating complex geometric patterns, Liu et al. [72] introduced a self-attention mechanism in the Self-Attention Generative Adversarial Network-W (SAGAN-W), which generated GPR images with clear hyperbolic curves and could simulate the true backscattering characteristics of defects. In addition, Yang et al. [35] developed a Conditional Generative Adversarial Network (CGAN) variant for generating ultrasound B-scan images; it was trained on real B-scan images paired with their corresponding ground-truth images, which improved the generalization ability of the model.
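The adversarial training idea can be made concrete with the standard GAN losses. The sketch below computes the discriminator loss and the commonly used non-saturating generator loss from discriminator outputs; the probability values are made-up inputs for illustration, and no network from the cited studies is reproduced here.

```python
import numpy as np


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: real samples labelled 1, generated samples labelled 0."""
    d_real = np.clip(d_real, eps, 1.0 - eps)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(-(np.log(d_real).mean() + np.log(1.0 - d_fake).mean()))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: reward fakes that the discriminator scores as real."""
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(-np.log(d_fake).mean())


# Hypothetical discriminator outputs (probability that an image is real).
d_real = np.array([0.9, 0.8, 0.95])
d_fake_early = np.array([0.1, 0.2, 0.05])  # generated images are easily detected
d_fake_late = np.array([0.5, 0.45, 0.55])  # generated images nearly fool the discriminator

loss_g_early = generator_loss(d_fake_early)
loss_g_late = generator_loss(d_fake_late)
loss_d_early = discriminator_loss(d_real, d_fake_early)
loss_d_late = discriminator_loss(d_real, d_fake_late)
```

As the generated images become harder to tell apart from real ones, the generator loss falls and the discriminator loss rises, which is the competition that drives both networks.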

In image super-resolution, the Super-Resolution Generative Adversarial Network (SRGAN) significantly improves the quality of image reconstruction: it raises the resolution while preserving detailed features such as edges and textures [73]. To address the low resolution and weak features of infrared images, the Regional Super-Resolution Generative Adversarial Network (RSRGAN) fuses a regional context network with an improved SRGAN, which improves the recognition rate of small infrared targets while reducing the computational cost [74]. The Lightweight Thermal Image Super-Resolution (LTSR) model further improves detection efficiency through multi-scale feature extraction and latent representation learning [75]. Pozzer et al. [76] applied Very Deep Super-Resolution (VDSR) together with histogram equalization (HE) to fused images, original visible light images, and thermal images. This combination enhanced image resolution and contrast, enabling more accurate capture of details such as exposed rebar, peeling areas, and boundaries. In GPR image processing, an unsupervised Generative Adversarial Network model based on CycleGAN automatically converts cluttered GPR images into clutter-free images without relying on paired training data, which addresses the scarcity of datasets [77]. Finally, in ultrasound image synthesis, a GAN framework synthesizes high-resolution ultrasound images through auxiliary sketch guidance, a progressive training strategy, and a feature loss function, providing richer and more accurate image resources for non-destructive testing [78].
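Of the techniques above, histogram equalization is simple enough to show directly. The following sketch implements global HE for an 8-bit grayscale image; it covers only the contrast step, not VDSR or any GAN-based super-resolution, and the synthetic low-contrast input is an assumption used in place of a real thermal image.

```python
import numpy as np


def equalize_histogram(img):
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied grey level
    total = img.size
    if total == cdf_min:  # constant image: nothing to stretch
        return img.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]  # apply the look-up table to every pixel


# Synthetic low-contrast image: grey levels squeezed into [100, 139].
rng = np.random.default_rng(0)
low_contrast = rng.integers(100, 140, size=(32, 32), dtype=np.uint8)
equalized = equalize_histogram(low_contrast)
```

The mapping is monotonic, so the ordering of grey levels is preserved while the occupied range is stretched to the full 0 to 255 scale.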

4.3. Model Adaptation

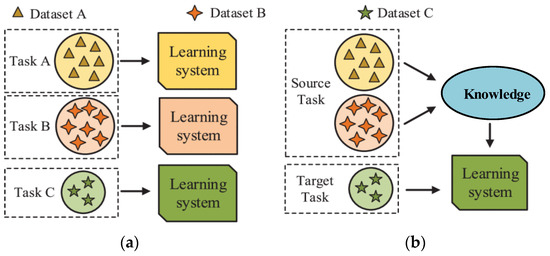

4.3.1. Transfer Learning

Transfer learning (TL) has shown significant advantages in addressing insufficient data and changing scenarios, especially in object detection. By reusing models and knowledge accumulated in related fields or previous tasks, it can greatly reduce the workload of data collection and labeling, avoid the overfitting caused by small sample sizes, and significantly improve the performance and robustness of target detection [79]. Figure 10 illustrates the difference between traditional machine learning and TL when handling multiple tasks [80].

Figure 10. The difference between traditional machine learning and TL techniques for multiple tasks [80]: (a) traditional machine learning and (b) transfer learning. Reproduced with permission from Ref. [80]. Copyright 2023 Elsevier.

In practice, commonly used CNN models such as AlexNet, U-Net, and VGG-16, pre-trained on large-scale datasets, can be applied to new problems through transfer learning [81]. Fine-tuning is a common transfer learning method that is suitable when the source domain is similar to the target domain. For example, an SSD model pre-trained on the PASCAL VOC2007 dataset and fine-tuned on a newly acquired GPR dataset reached an average accuracy of 90.9% for rebar detection [28]. However, fine-tuning may be less applicable to more severe domain shift, such as variations in visible-light data caused by differences in illumination conditions and image appearance. To overcome this problem, Lyu et al. [82] proposed an unsupervised transfer learning framework that constructs intermediate domains to simulate the properties of the target domain and combines them with an illumination-aware label fusion strategy; this improves the generalization ability of the model and achieves automatic adaptation to the target domain without additional manual annotation. It is worth noting that the success of transfer learning depends largely on the similarity between the source and target domains, as well as on the knowledge distillation and alignment strategies used during transfer. Therefore, in concrete crack detection and other specific application scenarios, researchers need to choose the most suitable model and method according to the data characteristics. For example, a comparison of AlexNet, VGG16, VGG19, and ResNet-50 in terms of validation accuracy, testing accuracy, and training time found that ResNet-50 maintained high accuracy with the shortest training time and was the most suitable for concrete crack image classification [83].
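The simplest form of this idea, keeping a pre-trained backbone frozen and training only a new task-specific head, can be sketched as follows. This is a deliberately reduced NumPy illustration: a fixed random projection stands in for a real pre-trained network such as ResNet-50, and the data are synthetic, so all names and values are assumptions rather than a reproduction of any cited experiment.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pre-trained backbone (assumption): a fixed projection + ReLU.
W_BACKBONE = rng.normal(size=(16, 8)) / np.sqrt(16)


def extract_features(x):
    """Frozen feature extractor: W_BACKBONE is never updated."""
    return np.maximum(x @ W_BACKBONE, 0.0)


def log_loss(features, labels, w, b):
    """Mean binary cross-entropy of the head on the given features."""
    probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
    probs = np.clip(probs, 1e-12, 1.0 - 1e-12)
    return float(-(labels * np.log(probs) + (1 - labels) * np.log(1 - probs)).mean())


def train_head(features, labels, lr=0.2, epochs=500):
    """Train only the new task-specific head with plain gradient descent."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(epochs):
        probs = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        error = probs - labels
        w -= lr * features.T @ error / len(labels)
        b -= lr * error.mean()
    return w, b


# Small synthetic target-domain set (hypothetical stand-in for labelled NDT patches).
x_target = rng.normal(size=(40, 16))
feats = extract_features(x_target)
scores = feats @ rng.normal(size=8)
y_target = (scores > np.median(scores)).astype(float)

backbone_before = W_BACKBONE.copy()
loss_before = log_loss(feats, y_target, np.zeros(8), 0.0)
w_head, b_head = train_head(feats, y_target)
loss_after = log_loss(feats, y_target, w_head, b_head)
```

Only 9 parameters are trained here, which is why this strategy suits small target datasets; full fine-tuning would additionally update the backbone weights, usually with a smaller learning rate.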

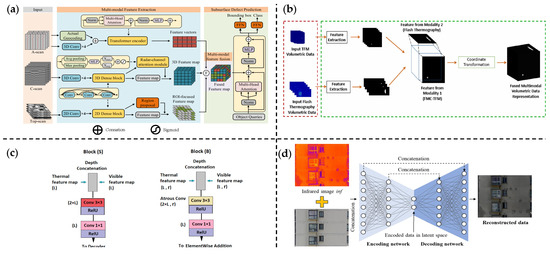

4.3.2. Multimodal Data Fusion Technology

Multimodal data fusion technology overcomes the limitations of processing each data source in isolation: by extracting visual attributes from the data of different sensors, it builds a more comprehensive set of information features [84]. Currently, deep learning-based fusion methods mainly build on frameworks such as autoencoders, Convolutional Neural Networks (CNNs), and Generative Adversarial Networks (GANs). M2FNet, a multimodal fusion network, combines the local feature extraction capability of CNNs with the global representation capability of the Transformer in a dual-network architecture. It extracts features from the raw signal data and from B-scan and top-scan GPR image data and fuses them through a cross-attention mechanism, which significantly improves the ability to learn from GPR data [80], as shown in Figure 11a. Meanwhile, for the comprehensive detection of internal and surface defects in metal, the multi-modal automatic defect recognition (M-ADR) system fuses ultrasonic and pulsed thermography (PT) data via coordinate transformation to improve defect identification and characterization, as shown in Figure 11b; its detection accuracy reaches 91.46%, far exceeding traditional models and single-mode techniques [85]. For the fusion of infrared and visible images, Shojaiee and Baleghi [86] proposed the EFASPP U-Net deep network, consisting of two independent encoders (for visible and thermal images, respectively) and a decoder, which combines standard convolutional layers with atrous (dilated) convolutional layers to achieve feature-level fusion and avoid losing information about small targets. Figure 11c shows the block that combines the visible and thermal feature maps. In addition, Wang et al. [13] used GAN-based Infrared–Visible Image Fusion (IVIF), relying on a ResNet generator (as shown in Figure 11d) and a PatchGAN discriminator to integrate thermal imaging and visual information, and built a deep neural network for efficient instance segmentation of building facade defects (cracks, spalling, etc.) in IVIF images.

Figure 11. Multimodal data fusion technology: (a) architecture of M2FNet model integrating multiple forms of GPR images [80]; (b) coordinate transformation technique for fusing full-focus ultrasound images with pulsed thermography (PT) video frame data [85]; (c) schematic representation of multi-encoder blocks merging visible and thermal feature maps [86]; and (d) structure of generator network for visible and thermal images [13]. Reproduced with permission from Ref. [80]. Copyright 2023 IEEE. Reproduced with permission from Ref. [85]. Copyright 2024 Elsevier. Reproduced with permission from Ref. [86]. Copyright 2023 Elsevier. Reproduced with permission from Ref. [13]. Copyright 2024 Elsevier.
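Cross-attention, the fusion mechanism mentioned for M2FNet, can be illustrated with a single-head NumPy sketch in which the features of one modality query the features of another. This is not the M2FNet architecture: the token counts, feature size, random projection matrices, and the final concatenation step are assumptions chosen only to show the data flow.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_feats, context_feats, w_q, w_k, w_v):
    """Let tokens of one modality attend to tokens of another modality."""
    q = query_feats @ w_q
    k = context_feats @ w_k
    v = context_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product similarity
    weights = softmax(scores, axis=-1)       # each query row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model = 8
bscan_tokens = rng.normal(size=(6, d_model))    # hypothetical B-scan image features
signal_tokens = rng.normal(size=(10, d_model))  # hypothetical raw-signal features
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

attended, attn = cross_attention(bscan_tokens, signal_tokens, w_q, w_k, w_v)
fused = np.concatenate([bscan_tokens, attended], axis=-1)  # simple concatenation fusion
```

Each row of `attn` shows how strongly one image token draws on each raw-signal token, so the fused representation carries information from both modalities.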

5. Conclusions and Outlook

This paper reviews the application of deep learning algorithms in non-destructive building inspection and surveys recent advances in image-based non-destructive testing (NDT) techniques, especially infrared thermography, ground-penetrating radar, and ultrasonic inspection. Each of these techniques has its strengths and limitations, so the integrated application of multiple inspection methods has become an important way to improve inspection accuracy. In addition, precise adjustment of the parameters of NDT sensors is essential to ensure accurate data acquisition. For precast concrete members with a known defect distribution, NDT images can be verified by cross-referencing them with the known defects. However, in real-world scenarios where the damage is unknown, such as in building walls, NDT is an indirect means and often needs to be combined with traditional testing to provide more comprehensive information. At the same time, distinguishing damage types in NDT images often relies on professional experience, which poses a challenge to deep learning models in damage matching and recognition and requires the models to have higher accuracy and generalization ability.

As deep learning and NDT technology continue to develop, their integration is showing new trends. On the one hand, data enhancement methods focus on image generation and super-resolution techniques based on Generative Adversarial Networks (GANs), aiming to improve the diversity, resolution, and feature retention of NDT image data, to alleviate sample scarcity and the labeling burden, and to provide richer and more accurate image resources for deep learning models. On the other hand, the development of deep learning models focuses on the optimization of transfer learning techniques and the innovative application of multimodal data fusion techniques. Transfer learning reduces the dependence on datasets from the new domain and improves the adaptability and generalization ability of the model. Although some progress has been made for visible light images, its application to infrared thermal imaging, radar 2D imaging, and ultrasound imaging needs to be further strengthened. In addition, further work is required on multimodal data fusion, innovative network architectures, and fusion strategies, so that data from different sensors are fully used and information features are extracted and fused more accurately, in order to improve the performance and robustness of tasks such as target detection and to cope with complex and changing scenes.

However, this study has certain limitations. It does not delve deeply into the theoretical aspects of deep learning techniques, nor does it provide a comprehensive discussion on the specific deep learning models suitable for different NDT methods and their optimization strategies. Future research should focus on extending the application of deep learning to more NDT methods to improve the quantitative accuracy of defect sizes, ensure intuitive and accurate inspection results, and provide reliable data support for building structural health assessment and maintenance.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/electronics14061124/s1, Table S1: PRISMA-ScR checklist. Reference [87] was cited in Supplementary Materials.

Author Contributions

Methodology, Z.Y., F.Q. and J.D.; writing—original draft preparation, Y.Y. and X.Z.; writing—review and editing, H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Scientific Research Projects of BBMG Corporation (KYJC018) in 2023.

Data Availability Statement

Not applicable.

Acknowledgments

The authors appreciate the financial support from BBMG Corporation. Any opinions, findings, and conclusions expressed in this paper are those of the authors and do not necessarily represent the view of any organization.

Conflicts of Interest

Authors (Xiuli Zhang, Zeming Yu, Fugui Qiao, and Jianneng Du) were employed by the company Beijing Building Materials Testing Academy Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The authors declare that this study received funding from Beijing Building Materials Testing Academy Co., Ltd. The funder had the following involvement with the study: supervision. The funder was not involved in the study design, data collection, analysis, interpretation of data, writing of this article or decision to submit it for publication.

References

1. Hussein, R.; Etete, B.; Mahdi, H.; Al-Shukri, H. Detection and Delineation of Cracks and Voids in Concrete Structures Using the Ground Penetrating Radar Technique. J. Appl. Geophys. 2024, 226, 105379.

2. Abdelkhalek, S.; Zayed, T. Comprehensive Inspection System for Concrete Bridge Deck Application: Current Situation and Future Needs. J. Perform. Constr. Facil. 2020, 34, 03120001.

3. Wang, J.; Ueda, T.; Wang, P.; Li, Z.; Li, Y. Building Damage Inspection Method Using UAV-Based Data Acquisition and Deep Learning-Based Crack Detection. J. Civ. Struct. Health Monit. 2024, 15, 151–171.

4. Zheng, Y.; Wang, S.; Zhang, P.; Xu, T.; Zhuo, J. Application of Nondestructive Testing Technology in Quality Evaluation of Plain Concrete and RC Structures in Bridge Engineering: A Review. Buildings 2022, 12, 843.

5. Yang, X.; Huang, R.; Meng, Y.; Liang, J.; Rong, H.; Liu, Y.; Tan, S.; He, X.; Feng, Y. Overview of the Application of Ground-Penetrating Radar, Laser, Infrared Thermal Imaging, and Ultrasonic in Nondestructive Testing of Road Surface. Measurement 2024, 224, 113927.

6. Huang, J.; Zhang, Y.; Zhang, Y.; Zhao, M. Application of non-destructive testing technique for detecting surface detachment in cultural heritage buildings. J. Shanghai Univ. (Nat. Sci.) 2022, 28, 656–667. (In Chinese)

7. Pan, P.; Zhang, R.; Zhang, Y.; Li, H. Detecting Internal Defects in FRP-Reinforced Concrete Structures through the Integration of Infrared Thermography and Deep Learning. Materials 2024, 17, 3350.

8. Yang, J.; Wang, W.; Lin, G.; Li, Q.; Sun, Y.; Sun, Y. Infrared Thermal Imaging-Based Crack Detection Using Deep Learning. IEEE Access 2019, 7, 182060–182077.

9. Ficapal, A.; Mutis, I. Framework for the Detection, Diagnosis, and Evaluation of Thermal Bridges Using Infrared Thermography and Unmanned Aerial Vehicles. Buildings 2019, 9, 179.

10. Waqas, A.; Araji, M.T. Machine Learning-Aided Thermography for Autonomous Heat Loss Detection in Buildings. Energy Convers. Manag. 2024, 304, 118243.

11. Garrido, I.; Lagüela, S.; Sfarra, S.; Madruga, F.J.; Arias, P. Automatic Detection of Moistures in Different Construction Materials from Thermographic Images. J. Therm. Anal. Calorim. 2019, 138, 1649–1668.

12. Kulkarni, N.N.; Raisi, K.; Valente, N.A.; Benoit, J.; Yu, T.; Sabato, A. Deep Learning Augmented Infrared Thermography for Unmanned Aerial Vehicles Structural Health Monitoring of Roadways. Autom. Constr. 2023, 148, 104784.

13. Wang, P.; Xiao, J.; Qiang, X.; Xiao, R.; Liu, Y.; Sun, C.; Hu, J.; Liu, S. An Automatic Building Façade Deterioration Detection System Using Infrared-Visible Image Fusion and Deep Learning. J. Build. Eng. 2024, 95, 110122.

14. Ma, L.; Xiong, B.; Kong, Q.; Lu, X. Impact of Multiple Factors on the Use of an UAV-Mounted Infrared Thermography Method for Detection of Debonding in Facade Tiles. J. Build. Eng. 2024, 96, 110592.

15. Deng, L.; Zuo, H.; Wang, W.; Xiang, C.; Chu, H. Internal Defect Detection of Structures Based on Infrared Thermography and Deep Learning. KSCE J. Civ. Eng. 2023, 27, 1136–1149.

16. Cheng, C.; Shang, Z.; Shen, Z. Automatic Delamination Segmentation for Bridge Deck Based on Encoder-Decoder Deep Learning through UAV-Based Thermography. NDT E Int. 2020, 116, 102341.

17. Ali, R.; Cha, Y.-J. Attention-Based Generative Adversarial Network with Internal Damage Segmentation Using Thermography. Autom. Constr. 2022, 141, 104412.

18. Kaur, P.; Dana, K.J.; Romero, F.A.; Gucunski, N. Automated GPR Rebar Analysis for Robotic Bridge Deck Evaluation. IEEE Trans. Cybern. 2016, 46, 2265–2276.

19. Xu, X.; Lei, Y.; Yang, F. Railway Subgrade Defect Automatic Recognition Method Based on Improved Faster R-CNN. Sci. Program. 2018, 2018, 4832972.

20. Yue, Y.; Liu, H.; Lin, C.; Meng, X.; Liu, C.; Zhang, X.; Cui, J.; Du, Y. Automatic Recognition of Defects behind Railway Tunnel Linings in GPR Images Using Transfer Learning. Measurement 2024, 224, 113903.

21. Sossa, V.; Pérez-Gracia, V.; González-Drigo, R.; Rasol, A.M. Lab Non Destructive Test to Analyze the Effect of Corrosion on Ground Penetrating Radar Scans. Remote Sens. 2019, 11, 2814.

22. Zhang, Y.-C.; Du, Y.-L.; Yi, T.-H.; Zhang, S.-H. Automatic Rebar Picking for Corrosion Assessment of RC Bridge Decks with Ground-Penetrating Radar Data. J. Perform. Constr. Facil. 2024, 38, 04023069.

23. Ismail, M.A.; Abas, A.A.; Arifin, M.H.; Ismail, M.N.; Othman, N.A.; Setu, A.; Ahmad, M.R.; Shah, M.K.; Amin, S.; Sarah, T. Integrity Inspection of Main Access Tunnel Using Ground Penetrating Radar. IOP Conf. Ser. Mater. Sci. Eng. 2017, 271, 012088.

24. Kilic, G.; Eren, L. Neural Network Based Inspection of Voids and Karst Conduits in Hydro–Electric Power Station Tunnels Using GPR. J. Appl. Geophys. 2018, 151, 194–204.

25. Almalki, M.A.; Almutairi, K.F. Inspection of Reinforced Concrete Structures Using Ground Penetrating Radar: Experimental Approach. J. King Saud. Univ. Sci. 2024, 36, 103140.

26. Negri, S.; Aiello, M.A. High-Resolution GPR Survey for Masonry Wall Diagnostics. J. Build. Eng. 2021, 33, 101817.

27. Dinh, K.; Gucunski, N.; Duong, T.H. An Algorithm for Automatic Localization and Detection of Rebars from GPR Data of Concrete Bridge Decks. Autom. Constr. 2018, 89, 292–298.

28. Liu, H.; Lin, C.; Cui, J.; Fan, L.; Xie, X.; Spencer, B.F. Detection and Localization of Rebar in Concrete by Deep Learning Using Ground Penetrating Radar. Autom. Constr. 2020, 118, 103279.

29. Chang, C.W.; Lin, C.H.; Lien, H.S. Measurement Radius of Reinforcing Steel Bar in Concrete Using Digital Image GPR. Constr. Build. Mater. 2009, 23, 1057–1063.

30. Wang, J.; Chen, K.; Liu, H.; Zhang, J.; Kang, W.; Li, S.; Jiang, P.; Sui, Q.; Wang, Z. Deep Learning-Based Rebar Clutters Removal and Defect Echoes Enhancement in GPR Images. IEEE Access 2021, 9, 87207–87218.

31. Wang, Z.; Wang, J.; Chen, K.; Li, Z.; Xu, J.; Li, Y.; Sui, Q. Unsupervised Learning Method for Rebar Signal Suppression and Defect Signal Reconstruction and Detection in Ground Penetrating Radar Images. Measurement 2023, 211, 112652.

32. Chen, R.; Tran, K.T.; La, H.M.; Rawlinson, T.; Dinh, K. Detection of Delamination and Rebar Debonding in Concrete Structures with Ultrasonic SH-Waveform Tomography. Autom. Constr. 2022, 133, 104004.

33. Choi, P.; Kim, D.-H.; Lee, B.-H.; Won, M.C. Application of Ultrasonic Shear-Wave Tomography to Identify Horizontal Crack or Delamination in Concrete Pavement and Bridge. Constr. Build. Mater. 2016, 121, 81–91.

34. Wang, Q.; Chen, D.; Zhu, K.; Zhai, Z.; Xu, J.; Wu, L.; Hu, D.; Xu, W.; Huang, H. Evaluation Residual Compressive Strength of Tunnel Lining Concrete Structure after Fire Damage Based on Ultrasonic Pulse Velocity and Shear-Wave Tomography. Processes 2022, 10, 560.

35. Yang, H.; Shu, J.; Li, S.; Duan, Y. Ultrasonic Array Tomography-Oriented Subsurface Crack Recognition and Cross-Section Image Reconstruction of Reinforced Concrete Structure Using Deep Neural Networks. J. Build. Eng. 2024, 82, 108219.

36. Lin, S.; Shams, S.; Choi, H.; Azari, H. Ultrasonic Imaging of Multi-Layer Concrete Structures. NDT E Int. 2018, 98, 101–109.

37. Ge, L.; Li, Q.; Wang, Z.; Li, Q.; Lu, C.; Dong, D.; Wang, H. High-Resolution Ultrasonic Imaging Technology for the Damage of Concrete Structures Based on Total Focusing Method. Comput. Electr. Eng. 2023, 105, 108526.

38. Wu, Y.; Wang, Y.; Li, D.; Zhang, J. Two-Step Detection of Concrete Internal Condition Using Array Ultrasound and Deep Learning. NDT E Int. 2023, 139, 102945.

39. Słoński, M.; Schabowicz, K.; Krawczyk, E. Detection of Flaws in Concrete Using Ultrasonic Tomography and Convolutional Neural Networks. Materials 2020, 13, 1557.

40. Kuchipudi, S.T.; Ghosh, D. Automated Detection and Segmentation of Internal Defects in Reinforced Concrete Using Deep Learning on Ultrasonic Images. Constr. Build. Mater. 2024, 411, 134491.

41. Yang, H.; Li, S.; Shu, J.; Xu, C.; Ning, Y. Internal crack recognition of reinforce concrete structure based on array ultrasound and feature fusion neural network. J. Build. Struct. 2024, 45, 89–99. (In Chinese)

42. Lagüela, S.; Solla, M.; Puente, I.; Prego, F.J. Joint Use of GPR, IRT and TLS Techniques for the Integral Damage Detection in Paving. Constr. Build. Mater. 2018, 174, 749–760.

43. Garrido, I.; Solla, M.; Lagüela, S.; Fernández, N. IRT and GPR Techniques for Moisture Detection and Characterisation in Buildings. Sensors 2020, 20, 6421.

44. Solla, M.; Lagüela, S.; Fernández, N.; Garrido, I. Assessing Rebar Corrosion through the Combination of Nondestructive GPR and IRT Methodologies. Remote Sens. 2019, 11, 1705.

45. Khan, F.; Rajaram, S.; Vanniamparambil, P.A.; Bolhassani, M.; Hamid, A.; Kontsos, A.; Bartoli, I. Multi-Sensing NDT for Damage Assessment of Concrete Masonry Walls. Struct. Control Health Monit. 2015, 22, 449–462.

46. Chen, J.; Chen, X.; Zhao, H.; Ji, H.; Chen, R.; Yao, K.; Zhao, R. Experimental research and application of non-destructive detecting techniques for concrete-filled steel tubes based on infrared thermal imaging and ultrasonic method. J. Build. Struct. 2021, 42, 444–453.

47. Gucunski, N.; Pailes, B.; Kim, J.; Azari, H.; Dinh, K. Capture and Quantification of Deterioration Progression in Concrete Bridge Decks through Periodical NDE Surveys. J. Infrastruct. Syst. 2017, 23, B4016005.

48. Chew, M.Y.L.; Gan, V.J.L. Long-Standing Themes and Future Prospects for the Inspection and Maintenance of Façade Falling Objects from Tall Buildings. Sensors 2022, 22, 6070.

49. Huang, X.; Liu, Y.; Huang, L.; Stikbakke, S.; Onstein, E. BIM-Supported Drone Path Planning for Building Exterior Surface Inspection. Comput. Ind. 2023, 153, 104019.

50. Hosamo, H.H.; Nielsen, H.K.; Alnmr, A.N.; Svennevig, P.R.; Svidt, K. A Review of the Digital Twin Technology for Fault Detection in Buildings. Front. Built Environ. 2022, 8, 1013196.

51. Su, S.; Zhong, R.Y.; Jiang, Y.; Song, J.; Fu, Y.; Cao, H. Digital Twin and Its Potential Applications in Construction Industry: State-of-Art Review and a Conceptual Framework. Adv. Eng. Inform. 2023, 57, 102030.

52. Drobnyi, V.; Li, S.; Brilakis, I. Connectivity Detection for Automatic Construction of Building Geometric Digital Twins. Autom. Constr. 2024, 159, 105281.

53. Yiğit, A.Y.; Uysal, M. Automatic Crack Detection and Structural Inspection of Cultural Heritage Buildings Using UAV Photogrammetry and Digital Twin Technology. J. Build. Eng. 2024, 94, 109952.

54. Pantoja-Rosero, B.G.; Achanta, R.; Beyer, K. Damage-Augmented Digital Twins towards the Automated Inspection of Buildings. Autom. Constr. 2023, 150, 104842.

55. Hodavand, F.; Ramaji, I.J.; Sadeghi, N. Digital Twin for Fault Detection and Diagnosis of Building Operations: A Systematic Review. Buildings 2023, 13, 1426.

56. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

57. Kaur, J.; Singh, W. A Systematic Review of Object Detection from Images Using Deep Learning. Multimed. Tools Appl. 2024, 83, 12253–12338.

58. Trigka, M.; Dritsas, E. A Comprehensive Survey of Deep Learning Approaches in Image Processing. Sensors 2025, 25, 531.

59. Liang, J. Image Classification Based on RESNET. J. Phys. Conf. Ser. 2020, 1634, 012110.

60. Yu, D.; Yang, J.; Zhang, Y.; Yu, S. Additive DenseNet: Dense Connections Based on Simple Addition Operations. J. Intell. Fuzzy Syst. 2021, 40, 5015–5025.

61. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

62. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 4510–4520.

63. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.-C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1314–1324.

64. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 6848–6856.

65. Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

66. Ghaffarian, S.; Valente, J.; van der Voort, M.; Tekinerdogan, B. Effect of Attention Mechanism in Deep Learning-Based Remote Sensing Image Processing: A Systematic Literature Review. Remote Sens. 2021, 13, 2965.

67. Yang, C.; Gao, Z.; Yang, R.; Hao, Y.; Wang, W. Survey of Lightweight Object Detection Model Methods. Mech. Electr. Eng. Technol. 2024, 1–19. (In Chinese)

68. Han, J.; Kim, J.; Kim, S.; Wang, S. Effectiveness of Image Augmentation Techniques on Detection of Building Characteristics from Street View Images Using Deep Learning. J. Constr. Eng. Manag. 2024, 150, 04024129.

69. Zhang, J.; Xu, Y.; Lu, D. Intelligent Detection of Concrete Apparent Defects Based on a Deep Learning—Literature Review, Knowledge Gaps and Future Developments. Ceram. Silik. 2024, 68, 252–266.

70. Wang, J.; Liu, M.; Su, Y.; Yao, J.; Du, Y.; Zhao, M.; Lu, D. Small Target Detection Algorithm Based on Attention Mechanism and Data Augmentation. Signal Image Video Process. 2023, 18, 3837–3853.

71. Pei, L.; Sun, Z.; Xiao, L.; Li, W.; Sun, J.; Zhang, H. Virtual Generation of Pavement Crack Images Based on Improved Deep Convolutional Generative Adversarial Network. Eng. Appl. Artif. Intell. 2021, 104, 104376.

72. Liu, C.; Yao, Y.; Li, J.; Qian, J.; Liu, L. Research on Lightweight GPR Road Surface Disease Image Recognition and Data Expansion Algorithm Based on YOLO and GAN. Case Stud. Constr. Mater. 2024, 20, e02779.

73. Yuan, B.; Sun, Z.; Pei, L.; Li, W.; Ding, M.; Hao, X. Super-Resolution Reconstruction Method of Pavement Crack Images Based on an Improved Generative Adversarial Network. Sensors 2022, 22, 9092.

74. Ren, K.; Gao, Y.; Wan, M.; Gu, G.; Chen, Q. Infrared Small Target Detection via Region Super Resolution Generative Adversarial Network. Appl. Intell. 2022, 52, 11725–11737.

75. Sang, Y.; Liu, T.; Liu, Y.; Ma, T.; Wang, S.; Zhang, X.; Sun, J. A Lightweight Network with Latent Representations for UAV Thermal Image Super-Resolution. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5005211.

76. Pozzer, S.; De Souza, M.P.V.; Hena, B.; Hesam, S.; Rezayiye, R.K.; Rezazadeh Azar, E.; Lopez, F.; Maldague, X. Effect of Different Imaging Modalities on the Performance of a CNN: An Experimental Study on Damage Segmentation in Infrared, Visible, and Fused Images of Concrete Structures. NDT E Int. 2022, 132, 102709.

77. Hou, F.; Fang, M.; Xianghuan Luo, T.; Fan, X.; Guo, Y. Dual-Task GPR Method: Improved Generative Adversarial Clutter Suppression Network and Adaptive Target Localization Algorithm in GPR Image. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5108313.

78. Liang, J.; Yang, X.; Huang, Y.; Li, H.; He, S.; Hu, X.; Chen, Z.; Xue, W.; Cheng, J.; Ni, D. Sketch Guided and Progressive Growing GAN for Realistic and Editable Ultrasound Image Synthesis. Med. Image Anal. 2022, 79, 102461.

79. Sayed, A.N.; Himeur, Y.; Bensaali, F. Deep and Transfer Learning for Building Occupancy Detection: A Review and Comparative Analysis. Eng. Appl. Artif. Intell. 2022, 115, 105254.

80. Li, N.; Wu, R.; Li, H.; Wang, H.; Gui, Z.; Song, D. M2FNet: Multimodal Fusion Network for Airport Runway Subsurface Defect Detection Using GPR Data. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5108816.

81. Katsigiannis, S.; Seyedzadeh, S.; Agapiou, A.; Ramzan, N. Deep Learning for Crack Detection on Masonry Façades Using Limited Data and Transfer Learning. J. Build. Eng. 2023, 76, 107105.

82. Lyu, C.; Heyer, P.; Goossens, B.; Philips, W. An Unsupervised Transfer Learning Framework for Visible-Thermal Pedestrian Detection. Sensors 2022, 22, 4416.

83. Paramanandham, N.; Koppad, D.; Anbalagan, S. Vision Based Crack Detection in Concrete Structures Using Cutting-Edge Deep Learning Techniques. Trait. Signal 2022, 39, 485–492.

84. Bayoudh, K.; Knani, R.; Hamdaoui, F.; Mtibaa, A. A Survey on Deep Multimodal Learning for Computer Vision: Advances, Trends, Applications, and Datasets. Vis. Comput. 2022, 38, 2939–2970.

85. Sudharsan, P.L.; Gantala, T.; Balasubramaniam, K. Multi Modal Data Fusion of PAUT with Thermography Assisted by Automatic Defect Recognition System (M-ADR) for NDE Applications. NDT E Int. 2024, 143, 103062.

86. Shojaiee, F.; Baleghi, Y. EFASPP U-Net for Semantic Segmentation of Night Traffic Scenes Using Fusion of Visible and Thermal Images. Eng. Appl. Artif. Intell. 2023, 117, 105627.

87. Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).