MSF-Net: A Data-Driven Multimodal Transformer for Intelligent Behavior Recognition and Financial Risk Reasoning in Virtual Live-Streaming

Abstract

1. Introduction

- We construct, to the best of our knowledge, the first standardized multimodal financial security dataset tailored for virtual live-streaming, integrating synchronized visual, audio, textual, and transactional streams, thereby providing a reproducible benchmark for subsequent research on virtual human risk analysis.

- We design a cross-signal feature alignment network based on a multimodal Transformer, which performs unified temporal modeling over virtual human behaviors and transaction sequences, enabling the capture of subtle and long-range behavior–finance anomalies that cannot be represented by single-modality or transaction-only models.

- We propose a fake review detection and anti-fraud fusion mechanism that jointly leverages semantic content, affective dynamics, and transactional frequency patterns, allowing MSF-Net to simultaneously address fake reviews, abnormal tipping, and illicit payment behaviors within a single coherent framework.

- We implement MSF-Net and conduct comprehensive evaluations in controlled virtual live-streaming environments and on an external multimodal benchmark, demonstrating promising accuracy, real-time capability, and interpretability relative to representative financial risk detection baselines (in the main performance comparison, an F1-score of 0.928 and an AUC of 0.956 at 60.7 FPS, versus 0.882 and 0.919 for the strongest baseline, FinBERT).
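The cross-signal alignment described in the second contribution rests on cross-modal attention, in which tokens from one stream act as queries over tokens from another. The NumPy sketch below is a minimal illustration under stated assumptions, not the authors' implementation: transaction-window embeddings attend over visual-behavior embeddings, the 8 heads follow the module configuration table, and the function name `cross_modal_attention`, the model width of 64, the sequence lengths, and the random projection weights are all hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(query_seq, context_seq, w_q, w_k, w_v, w_o, num_heads=8):
    """Multi-head attention where query_seq (T_q, d) attends over context_seq (T_k, d).

    Hypothetical sketch: weights are plain (d, d) matrices; no masking or dropout.
    """
    t_q, d = query_seq.shape
    t_k = context_seq.shape[0]
    assert d % num_heads == 0, "model width must be divisible by num_heads"
    head_dim = d // num_heads
    # Project, then split into heads: (num_heads, T, head_dim).
    q = (query_seq @ w_q).reshape(t_q, num_heads, head_dim).transpose(1, 0, 2)
    k = (context_seq @ w_k).reshape(t_k, num_heads, head_dim).transpose(1, 0, 2)
    v = (context_seq @ w_v).reshape(t_k, num_heads, head_dim).transpose(1, 0, 2)
    # Scaled dot-product scores: (num_heads, T_q, T_k).
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)
    # Weighted sum of context values, then merge heads back to (T_q, d).
    out = (weights @ v).transpose(1, 0, 2).reshape(t_q, d)
    return out @ w_o, weights

# Hypothetical dimensions and random weights, for illustration only.
rng = np.random.default_rng(0)
d_model = 64
txn_tokens = rng.normal(size=(10, d_model))   # e.g. 10 transaction-window embeddings
vis_tokens = rng.normal(size=(25, d_model))   # e.g. 25 visual-behavior embeddings
w_q, w_k, w_v, w_o = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4))
fused, attn = cross_modal_attention(txn_tokens, vis_tokens, w_q, w_k, w_v, w_o)
print(fused.shape, attn.shape)  # (10, 64) (8, 10, 25)
```

Each row of `attn` sums to one, so every transaction window receives a convex combination of projected visual features. A full layer would add residual connections, layer normalization, and a feed-forward block (FFN dim 2048 in the configuration table).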

2. Related Work

2.1. Virtual Human Live Streaming and Multimodal Analysis

2.2. Sentiment Recognition and Cross-Modal Feature Fusion

2.3. Financial Risk Detection and Deep Security Learning

3. Materials and Methods

3.1. Data Collection

3.2. Data Preprocessing and Augmentation

3.2.1. Image Augmentation

3.2.2. Audio Augmentation

3.2.3. Time-Series Data Augmentation

3.3. Proposed Method

3.3.1. Overall Architecture

3.3.2. Multimodal Alignment Transformer

3.3.3. Fake Review Detection Module

3.3.4. Multi-Signal Fusion Decision Module

4. Results and Discussion

4.1. Experimental Configuration

4.1.1. Hardware and Software Platform

4.1.2. Baseline Models and Evaluation Metrics

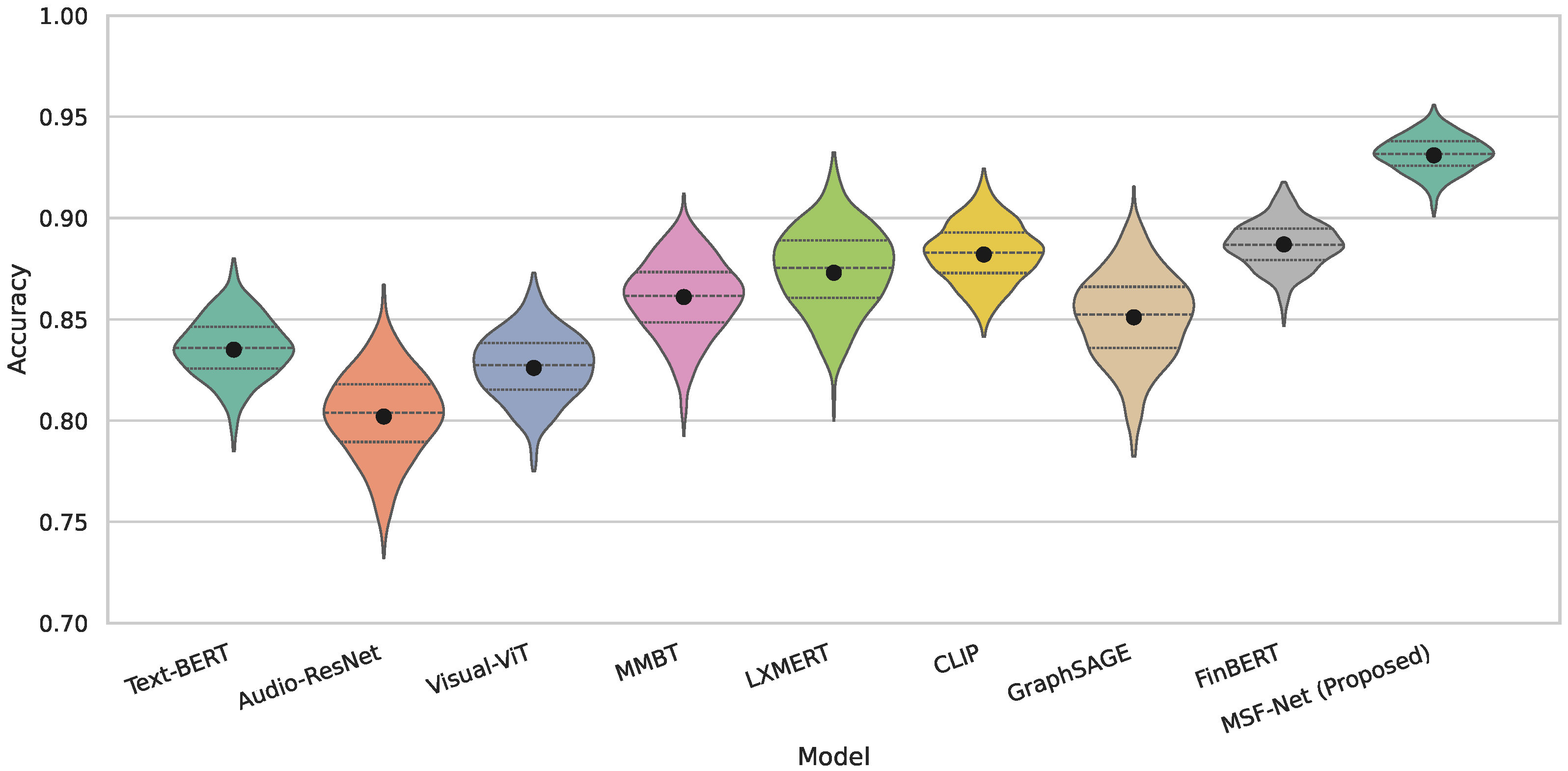

4.2. Performance Comparison

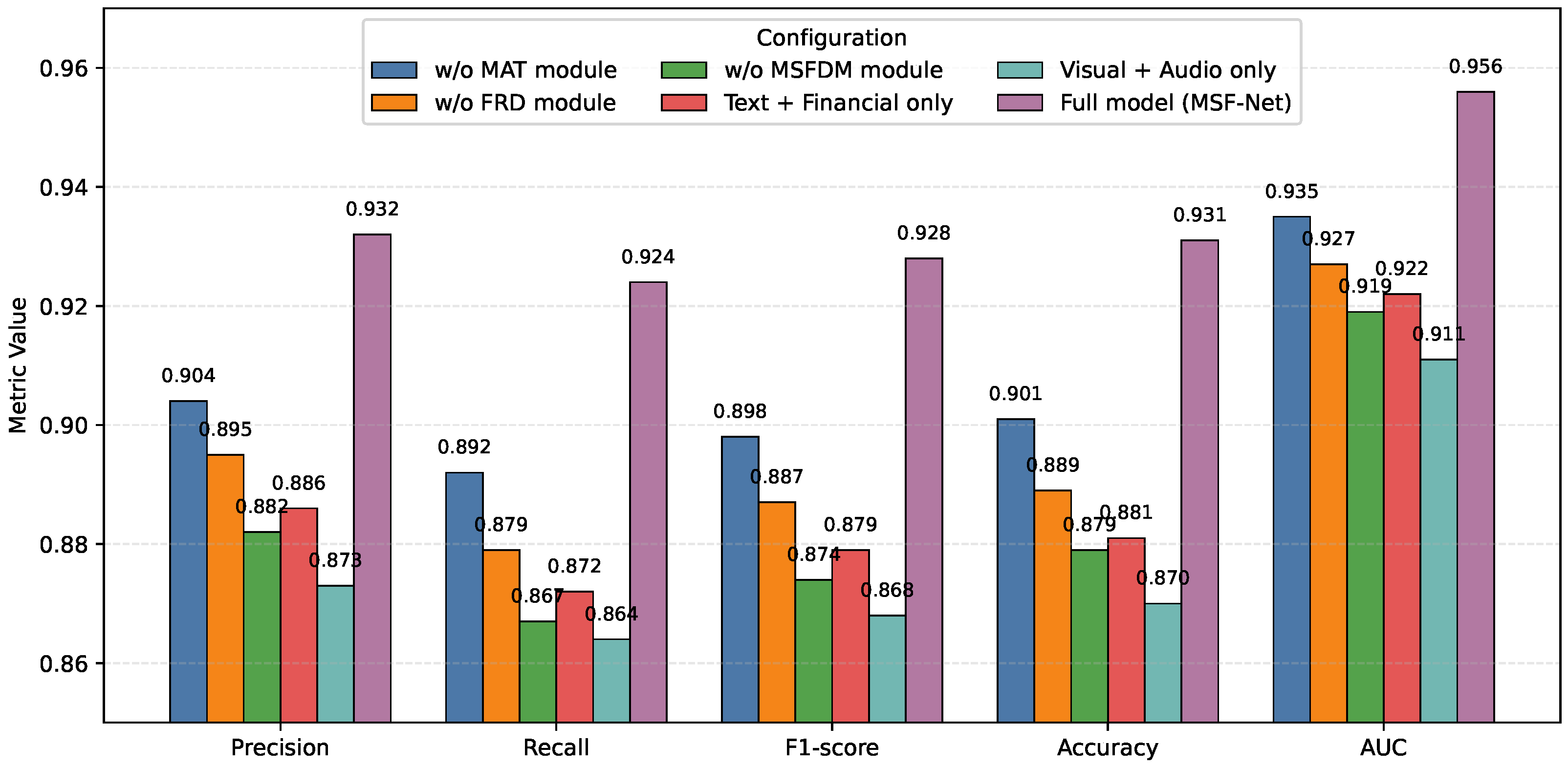

4.3. Ablation Study

4.4. Interpretability Analysis and Visualization

4.5. Practical Deployment and Case Analysis

4.6. Differences from Real Data and Implications for Generalization

4.7. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Data | Data Source | Scale | Main Features |
|---|---|---|---|
| Visual | Virtual human video stream (Unity + FaceRig) | 1.2 TB (200 sessions) | Facial expressions, motion, and posture sequences |
| Audio | Anchor voice recordings (44.1 kHz stereo) | 360 GB (480 h) | Mel-spectrogram, pitch contour, and energy envelope |
| Text | Real-time comments and barrage API logs | 680k entries | Comment semantics, sentiment tags, and user interactions |
| Financial | Platform payment and gifting simulation | 450k records | Transaction amount, timestamp, payment method, and anomaly labels |
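Joint temporal modeling presupposes that the streams in the table above share a time axis. One simple way to achieve this, sketched below under stated assumptions, is to bucket timestamped comments and transactions into fixed windows and aggregate per-window counts and amounts. The 5-second window, the `Event` record, its field names, and the function `bucket_events` are hypothetical and are not taken from the dataset specification.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Event:
    timestamp: float      # seconds since session start (hypothetical convention)
    kind: str             # "comment" or "transaction"
    amount: float = 0.0   # payment amount for transactions, 0 for comments

def bucket_events(events, window_s=5.0):
    """Aggregate events into fixed, non-overlapping windows keyed by window index."""
    windows = defaultdict(lambda: {"comments": 0, "transactions": 0, "amount": 0.0})
    for ev in events:
        idx = int(ev.timestamp // window_s)  # which window this event falls into
        w = windows[idx]
        if ev.kind == "comment":
            w["comments"] += 1
        elif ev.kind == "transaction":
            w["transactions"] += 1
            w["amount"] += ev.amount
    # Return windows in chronological order.
    return dict(sorted(windows.items()))

events = [
    Event(0.4, "comment"),
    Event(1.2, "transaction", 9.9),
    Event(3.8, "transaction", 0.1),
    Event(7.5, "comment"),
    Event(12.0, "transaction", 520.0),
]
for idx, stats in bucket_events(events).items():
    print(idx, stats)
```

The same window index can then be used to pool visual and audio frame features, so that every stream contributes one token per window to the downstream Transformer.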

| Module | Operation | Input Shape | Output Shape/Hyperparameters |
|---|---|---|---|
| MAT | Visual patch embedding | | Patch size 16, stride 16 |
| | Audio temporal encoder | Mel-spec | 1D-Transformer, depth 2 |
| | Text encoder (BERT) | | Hidden dim |
| | Cross-modal attention | | 8 heads, FFN dim 2048 |
| | Mamba block | | State dim 256 |
| FRD | Text conv encoder | | Kernels |
| | Affective temporal conv | | Kernels |
| | Cross-dimensional fusion | | |
| | Contrastive projection | | Temperature |
| MSFDM | Input projection | All | |
| | Cross-signal attention | Query | 8 heads |
| | Fusion layers (4 ×) | | FFN dim 2048 |
| | Decision heads | | Risk: ; Class: |
| Final Output | Risk score + behavior class | | |
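The FRD row of the configuration table lists a contrastive projection with a temperature hyperparameter. A widely used loss of this type is InfoNCE; the sketch below shows it in NumPy, assuming that each comment's semantic embedding and its affective embedding form a positive pair while the other in-batch pairs act as negatives. The pairing scheme, the function name `info_nce`, the batch shapes, and the temperature of 0.07 are assumptions for illustration rather than the paper's settings.

```python
import numpy as np

def info_nce(anchors, positives, temperature=0.07):
    """InfoNCE loss where anchors[i] and positives[i] form the only positive pair.

    anchors, positives: (N, d) arrays. Rows are L2-normalized so the dot
    product is cosine similarity; other rows in the batch act as negatives.
    """
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    logits = a @ p.T / temperature                # (N, N) similarity matrix
    logits -= logits.max(axis=1, keepdims=True)   # stabilize the log-sum-exp
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (matching index) as the target class.
    return -np.mean(np.diag(log_probs))

# Hypothetical embeddings for illustration only.
rng = np.random.default_rng(42)
semantic = rng.normal(size=(16, 32))                             # semantic-view embeddings
affective_aligned = semantic + 0.05 * rng.normal(size=(16, 32))  # views that agree
affective_random = rng.normal(size=(16, 32))                     # views that do not
print(info_nce(semantic, affective_aligned), info_nce(semantic, affective_random))
```

The aligned views give a loss close to zero and the unrelated views a much larger one; lowering the temperature sharpens the softmax and penalizes hard negatives more strongly.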

| Model | Precision | Recall | F1-Score | Accuracy | AUC | FPS |
|---|---|---|---|---|---|---|
| Text-BERT | 0.842 | 0.816 | 0.829 | 0.835 | 0.871 | 45.3 |
| Audio-ResNet | 0.794 | 0.781 | 0.787 | 0.802 | 0.846 | 52.6 |
| Visual-ViT | 0.821 | 0.807 | 0.814 | 0.826 | 0.859 | 49.1 |
| MMBT | 0.865 | 0.849 | 0.857 | 0.861 | 0.893 | 41.8 |
| LXMERT | 0.872 | 0.864 | 0.868 | 0.873 | 0.902 | 38.7 |
| CLIP | 0.881 | 0.873 | 0.877 | 0.882 | 0.913 | 40.4 |
| GraphSAGE | 0.853 | 0.841 | 0.847 | 0.851 | 0.887 | 56.2 |
| FinBERT | 0.889 | 0.875 | 0.882 | 0.887 | 0.919 | 50.9 |
| MSF-Net (Proposed) | 0.932 | 0.924 | 0.928 | 0.931 | 0.956 | 60.7 |
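Because the F1-score is the harmonic mean of precision and recall, the F1 column can be recomputed from the first two columns as a consistency check. The short sketch below does this for three rows of the table above and computes MSF-Net's absolute F1 and AUC gains over FinBERT, the strongest baseline listed; the function name `f1_score` is illustrative, and only values from the table are used.

```python
def f1_score(precision, recall):
    # Harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)

rows = {  # model: (precision, recall, reported F1, AUC), copied from the table above
    "Text-BERT": (0.842, 0.816, 0.829, 0.871),
    "FinBERT": (0.889, 0.875, 0.882, 0.919),
    "MSF-Net": (0.932, 0.924, 0.928, 0.956),
}
for name, (p, r, f1_reported, _) in rows.items():
    # The recomputed F1 should match the reported value to three decimals.
    print(name, round(f1_score(p, r), 3), f1_reported)

gain_f1 = rows["MSF-Net"][2] - rows["FinBERT"][2]
gain_auc = rows["MSF-Net"][3] - rows["FinBERT"][3]
print(f"F1 gain: {gain_f1:+.3f}, AUC gain: {gain_auc:+.3f}")
```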

| Model | Precision | Recall | F1-Score | Accuracy | AUC | FPS |
|---|---|---|---|---|---|---|
| Text-BERT | 0.728 | 0.670 | 0.698 | 0.712 | 0.743 | 120.3 |
| Audio-ResNet | 0.651 | 0.594 | 0.621 | 0.634 | 0.671 | 128.5 |
| Visual-ViT | 0.675 | 0.618 | 0.645 | 0.657 | 0.692 | 115.2 |
| MMBT | 0.741 | 0.683 | 0.711 | 0.724 | 0.758 | 82.7 |
| LXMERT | 0.758 | 0.700 | 0.728 | 0.736 | 0.773 | 78.4 |
| CLIP | 0.769 | 0.711 | 0.739 | 0.748 | 0.781 | 80.1 |
| GraphSAGE | 0.701 | 0.655 | 0.677 | 0.689 | 0.732 | 110.4 |
| FinBERT | 0.742 | 0.684 | 0.712 | 0.725 | 0.752 | 118.7 |
| MSF-Net | 0.793 | 0.735 | 0.763 | 0.772 | 0.812 | 84.6 |
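If the table above is read as the external-benchmark evaluation mentioned in the contributions (it carries no caption here, so this pairing is an assumption), the change in F1 between the two comparison tables can be quantified per model. The sketch below uses only the reported F1 values; the helper name `relative_drop` is illustrative.

```python
# Reported F1 scores: (first comparison table, second comparison table).
# Treating these as the controlled and external settings is an assumption.
f1_pairs = {
    "Text-BERT": (0.829, 0.698),
    "CLIP": (0.877, 0.739),
    "FinBERT": (0.882, 0.712),
    "MSF-Net": (0.928, 0.763),
}

def relative_drop(before, after):
    """Fractional decrease from `before` to `after`."""
    return (before - after) / before

for model, (controlled, external) in f1_pairs.items():
    print(f"{model:10s} abs drop {controlled - external:.3f}  "
          f"rel drop {relative_drop(controlled, external):.1%}")
```

MSF-Net keeps the highest F1 in both tables, while its absolute drop (0.165) lies within the range of the baselines shown (0.131 to 0.170), which is worth bearing in mind when interpreting generalization.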

| Configuration | Precision | Recall | F1-Score | Accuracy | AUC | Latency (ms) |
|---|---|---|---|---|---|---|
| w/o MAT module | 0.904 | 0.892 | 0.898 | 0.901 | 0.935 | 28.3 |
| w/o FRD module | 0.895 | 0.879 | 0.887 | 0.889 | 0.927 | 26.5 |
| w/o MSFDM module | 0.882 | 0.867 | 0.874 | 0.879 | 0.919 | 24.7 |
| Text + Financial only | 0.886 | 0.872 | 0.879 | 0.881 | 0.922 | 22.4 |
| Visual + Audio only | 0.873 | 0.864 | 0.868 | 0.870 | 0.911 | 23.1 |
| Full model (MSF-Net) | 0.932 | 0.924 | 0.928 | 0.931 | 0.956 | 21.3 |
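The ablation results can be summarized by the F1 each variant loses relative to the full model, and the latency column can be converted into a sequential throughput figure (1000 ms divided by per-sample latency). That conversion assumes one sample is processed at a time with no batching or pipelining, which the table does not state, so these figures need not match FPS values reported elsewhere. The helper name `sequential_fps` is illustrative.

```python
ablation = {  # configuration: (F1, latency in ms), copied from the ablation table
    "w/o MAT module": (0.898, 28.3),
    "w/o FRD module": (0.887, 26.5),
    "w/o MSFDM module": (0.874, 24.7),
    "Text + Financial only": (0.879, 22.4),
    "Visual + Audio only": (0.868, 23.1),
    "Full model (MSF-Net)": (0.928, 21.3),
}
full_f1, _ = ablation["Full model (MSF-Net)"]

def sequential_fps(latency_ms):
    # Samples per second if each sample is processed one after another.
    return 1000.0 / latency_ms

# List configurations from the largest F1 loss to the smallest.
for config, (f1, latency) in sorted(ablation.items(), key=lambda kv: kv[1][0]):
    print(f"{config:22s} dF1 {f1 - full_f1:+.3f}  ~{sequential_fps(latency):.1f} samples/s")
```

Among the single-module ablations, removing MSFDM costs the most F1 (0.054), while the visual-plus-audio-only variant shows the largest drop overall (0.060).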

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Song, Y.; Zhang, L.; Zhang, R.; Zhan, H.; Dai, M.; Hu, X.; Chen, R.; Li, M. MSF-Net: A Data-Driven Multimodal Transformer for Intelligent Behavior Recognition and Financial Risk Reasoning in Virtual Live-Streaming. Electronics 2025, 14, 4769. https://doi.org/10.3390/electronics14234769

Song Y, Zhang L, Zhang R, Zhan H, Dai M, Hu X, Chen R, Li M. MSF-Net: A Data-Driven Multimodal Transformer for Intelligent Behavior Recognition and Financial Risk Reasoning in Virtual Live-Streaming. Electronics. 2025; 14(23):4769. https://doi.org/10.3390/electronics14234769

Chicago/Turabian Style: Song, Yang, Liman Zhang, Ruoyun Zhang, Haoyuan Zhan, Mingyuan Dai, Xinyi Hu, Ranran Chen, and Manzhou Li. 2025. "MSF-Net: A Data-Driven Multimodal Transformer for Intelligent Behavior Recognition and Financial Risk Reasoning in Virtual Live-Streaming" Electronics 14, no. 23: 4769. https://doi.org/10.3390/electronics14234769

APA Style: Song, Y., Zhang, L., Zhang, R., Zhan, H., Dai, M., Hu, X., Chen, R., & Li, M. (2025). MSF-Net: A Data-Driven Multimodal Transformer for Intelligent Behavior Recognition and Financial Risk Reasoning in Virtual Live-Streaming. Electronics, 14(23), 4769. https://doi.org/10.3390/electronics14234769