1. Introduction

Rate of penetration (ROP) is an important parameter for evaluating the efficiency of the drilling process. The prediction of the ROP provides critical guidance for drilling operations. By adjusting control parameters to maximize ROP, drilling efficiency can be enhanced and overall costs reduced [1]. ROP is influenced by numerous drilling parameters and formation characteristics.

Existing methods for ROP prediction modeling primarily include mechanistic modeling, data-driven approaches, and hybrid modeling techniques. Among these, mechanistic models are grounded in classical drilling mechanics and encompass analytical, semi-analytical, and mechanistic approaches [2]. However, these methods have difficulty accurately establishing complex nonlinear relationships between parameters and therefore have limited predictive performance [3].

Classical mechanistic ROP models are grounded in drilling mechanics and bit–rock interaction physics. Early work such as Maurer’s perfect-cleaning theory derives a roller-cone drilling-rate equation from crater formation mechanics under the ideal assumption that cuttings are fully removed between tooth impacts [4]. Teale’s mechanical specific energy formalizes the energy per unit volume required to excavate rock, providing a mechanistic link between bit load, torque, and formation strength, and a diagnostic for drilling efficiency [5]. Semi-empirical composite formulations, most notably Bourgoyne and Young’s multiplicative eight-function model, integrate depth, differential pressure, weight on bit (WOB), rotary speed (RPM), bit wear, hydraulics, and jet impact to predict ROP using offset-well calibration constants [6]. For roller-cone bits, Warren’s model emphasizes the cuttings generation-removal balance and relates ROP to WOB, RPM, bit size, and unconfined compressive strength (UCS) under effective hole cleaning [7]. For drag bits, Detournay and Defourny’s phenomenological model formalizes rate-independent interface laws and frictional contact to describe bit–rock interaction response [8]. In practice, these mechanistic approaches face notable challenges: steady-state and near-perfect cleaning assumptions are frequently violated in field operations [4]; parameter identifiability is hampered by noisy measurements, latent formation properties (UCS, anisotropy, heterogeneity), and normalization choices [5]; the need for site-specific calibration limits generalization across lithologies, bit designs, and depths [6]; and strong nonlinear coupling among hydraulics, cuttings transport, progressive bit wear, and drillstring dynamics is difficult to capture within compact analytical forms [7]. These limitations often lead to degraded predictive accuracy in deep or directional wells and under time-varying conditions, motivating complementary data-driven and hybrid methods that retain physical interpretability while modeling complex, nonstationary behavior.

Data-driven and hybrid approaches have demonstrated significant success across diverse domains. Examples include HHOA-optimized deep neural networks for textual information extraction from composite document images [9], novel IoT-based deep learning methods for breast cancer detection [10], and hybrid machine learning models for improving stock market prediction accuracy through efficient strategy optimization [11]. Given this proven efficacy across varied applications, the application of such hybrid methodologies to ROP prediction holds considerable promise.

In research on data-driven and hybrid models, some researchers use machine learning methods such as artificial neural networks (ANN) [12] and Support Vector Regression (SVR) [13] to predict ROP. These methods rely on a single data-driven approach, so the precision of the prediction model is limited. By integrating multiple methods into a hybrid prediction model, better prediction accuracy can be achieved. For example, combining Convolutional Neural Networks (CNN) with Least Squares Support Vector Machines (LSSVMs) can improve the generalization ability of ROP prediction [14]. The multi-factor collaborative random forest regression model [15] outperforms ANN and support vector machines (SVMs) in terms of ROP prediction accuracy and interpretability, but its performance decreases with increasing well depth. By using a hybrid bat algorithm to optimize parameters and combining a restricted Boltzmann machine with a back propagation neural network, online prediction of ROP can be achieved [16], but the universality of this method in different geological environments has not been discussed.

Some researchers treat ROP prediction as a time-series prediction problem and employ time-series methods to predict ROP along the depth sequence. One study applied a bidirectional Gated Recurrent Unit (GRU) to handle temporal and non-temporal features. With segmented training and sliding-window updates, it reduced Mean Absolute Percentage Error (MAPE) to 5.42% in real-time prediction for horizontal wells in Northwest China [17]. Another study embedded the Bingham rheological equation into a BiLSTM-SA model. Hyperparameters were optimized with an improved dung beetle algorithm, achieving a Root Mean Square Error (RMSE) of 0.065 m/h and a Coefficient of Determination (R²) of 0.963 across wells in the Dagang Oilfield [18]. Some researchers use only the ROP series itself as input and perform one- to two-step-ahead prediction between adjacent geothermal wells using GRU, maintaining MAPE within 3% [19]. However, these studies do not discuss how to simultaneously leverage local high-frequency features and ultra-long sequence dependencies, nor do they analyze phase lag and redundant compression issues associated with deep networks.

Consequently, fusion models based on Informer have been adopted. For instance, PCA–Informer reduces the original 12-dimensional input to five principal components in the Taipei block and achieves an additional 11.8% reduction in RMSE relative to the standard Informer [20]; GRU–Informer combines GRU’s short-term memory with Informer’s sparse attention and attains R² above 0.96 in real-time prediction for shale gas wells in southern Sichuan [21]. Nonetheless, most studies retain Informer’s distilling and normalization modules, which may weaken abrupt-change signals. They also lack unified evaluation across multiple horizons and do not analyze error-degradation or phase-lag patterns.

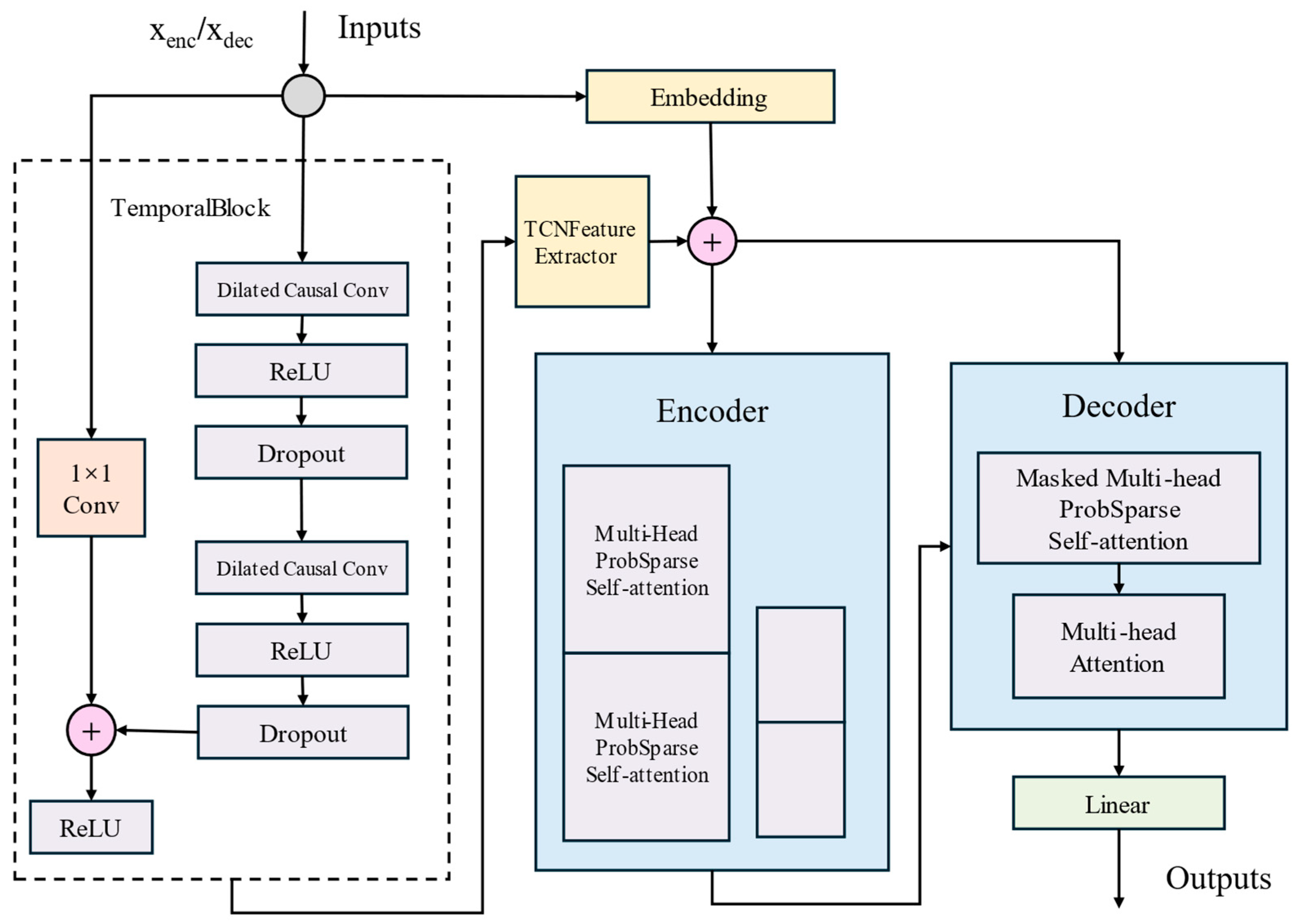

In this study, ROP prediction over depth sequences is treated as a time-series task. The main contributions are summarized as follows:

- (1) To improve engineering data quality and model stability, a preprocessing pipeline is constructed. It removes duplicate records by depth-based deduplication, applies quantile filtering within a sliding window followed by secondary outlier removal with Isolation Forest, resamples the features and labels of each well at 0.05 m intervals using K-Nearest Neighbors (KNN) regression, and standardizes each well separately while concatenating the standardized label as an auxiliary feature. Training employs a sliding-window regime with generative decoding that uses a last-frame copy placeholder, cold-start smoothing to mitigate early volatility, and Optuna’s Bayesian search to jointly optimize the architecture and training hyperparameters of Informer and the Temporal Convolutional Network (TCN). Together, these steps provide cleaner, more learnable inputs for Informer and TCN–Informer. Model performance across different combinations of input and prediction lengths is evaluated using Mean Absolute Error (MAE), RMSE, and R².

- (2) For depth-sequence prediction, Informer is used to capture ultra-long-range dependencies and yields a stable long-sequence baseline. On the dataset, ProbSparse attention and the generative decoder markedly improve inference efficiency for long sequences; however, overshoot and phase lag remain evident in segments with high-frequency perturbations and abrupt changes.

- (3) To address Informer’s fluctuations in local prediction quality, a TCN is integrated to form TCN–Informer, enhancing the short-term prior via causal dilated convolutions, while phase distortion and redundant compression are reduced by removing weight normalization in the TCN and the distilling layer in Informer. Under a unified multi-horizon evaluation, relative to Informer, the hybrid exhibits slower degradation across horizons, faster responses in abrupt segments, and smoother predictions with smaller residuals in near-steady segments.
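The preprocessing chain named in contribution (1) can be illustrated with a minimal sketch that uses only the Python standard library. It covers depth-based deduplication, KNN-style resampling onto a 0.05 m grid, and per-well standardization; the sliding-window quantile filter and the Isolation Forest step are left out. The function names, the choice of `k`, and the unweighted neighbor average are assumptions made for illustration and are not the settings used in this study.

```python
import math

def dedupe_by_depth(depths, values):
    """Keep the first record seen at each measured depth, sorted by depth."""
    seen = {}
    for d, v in zip(depths, values):
        seen.setdefault(d, v)
    ordered = sorted(seen.items())
    return [d for d, _ in ordered], [v for _, v in ordered]

def knn_resample(depths, values, step=0.05, k=3):
    """Resample onto a regular depth grid by averaging the k nearest samples.

    Assumes `depths` is sorted. The brute-force neighbor search keeps the
    sketch short; it is not meant for large wells.
    """
    n_steps = int(round((depths[-1] - depths[0]) / step))
    grid = [depths[0] + i * step for i in range(n_steps + 1)]
    resampled = []
    for g in grid:
        nearest = sorted(range(len(depths)), key=lambda j: abs(depths[j] - g))[:k]
        resampled.append(sum(values[j] for j in nearest) / len(nearest))
    return grid, resampled

def standardize(values):
    """Per-well z-score standardization (population standard deviation)."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    std = std if std > 0 else 1.0
    return [(v - mean) / std for v in values]

# Toy well: one duplicated depth (100.1 m) carrying an inconsistent ROP value.
depths = [100.0, 100.1, 100.1, 100.2, 100.3]
rop = [10.0, 12.0, 99.0, 14.0, 16.0]

d, v = dedupe_by_depth(depths, rop)
grid, resampled = knn_resample(d, v, step=0.05, k=2)
z = standardize(resampled)
print(len(d), len(grid))
```

Keeping the first record at a duplicated depth is only one possible policy; averaging the duplicates would be an equally plausible reading of "depth-based deduplication".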

2. Related Work

2.1. Informer

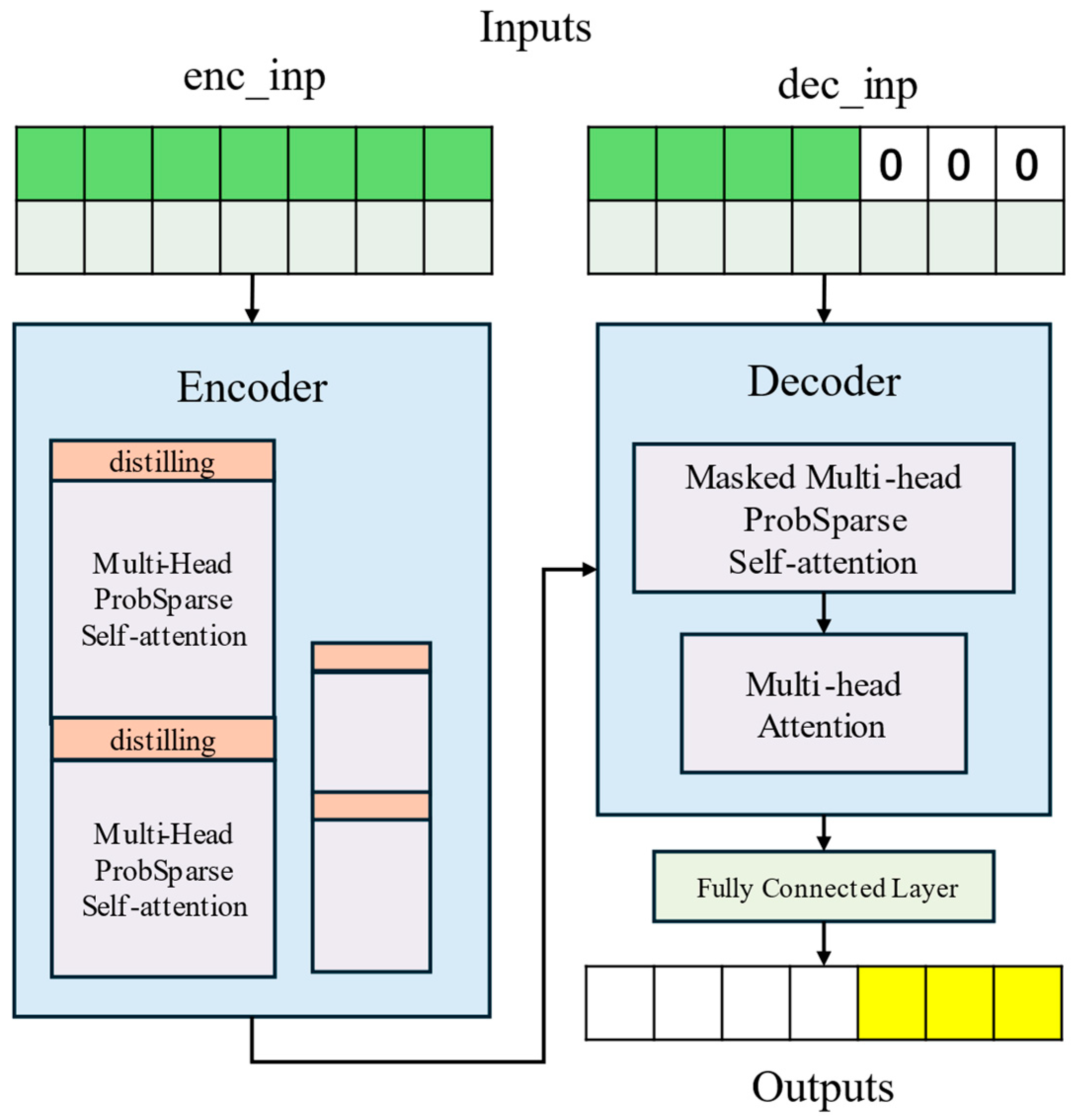

Informer is an efficient Transformer model designed for long-sequence time-series prediction, as illustrated in Figure 1. It aims to overcome the quadratic time complexity and high memory consumption of conventional Transformers when handling long sequences, as well as inherent limitations of the encoder–decoder architecture, thereby enhancing predictive capability and efficiency for long-sequence inputs and outputs.

The self-attention mechanism of the basic Transformer relies on standard dot-product operations, and this yields time and memory complexities that grow quadratically with sequence length. As multiple layers are stacked, memory usage further accumulates, making it difficult to process long inputs; meanwhile, the decoder’s autoregressive generation of predictions step by step causes a substantial slowdown in inference for long-horizon prediction. To break through these limitations, Informer introduces three key innovations [22].

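First, the ProbSparse self-attention mechanism exploits the sparsity inherent in attention distributions to achieve efficient dependency alignment. Using a query sparsity metric to identify critical queries, it allows each key to attend only to a subset of dominant queries, thereby reducing per-layer time complexity and memory footprint while maintaining dependency alignment performance comparable to standard self-attention. The ProbSparse self-attention is defined as:

$$\mathcal{A}(Q, K, V) = \mathrm{Softmax}\left(\frac{\bar{Q}K^{\top}}{\sqrt{d}}\right)V$$

where $\bar{Q}$ is a sparse matrix of the same size as $Q$ that contains only the top-$u$ queries under the sparsity metric, and $d$ is the input dimension.

The sparsity metric is computed as the difference between the log-sum-exp and the arithmetic mean of a query’s scores over all keys; the formula is:

$$M(q_i, K) = \ln \sum_{j=1}^{L_K} \exp\left(\frac{q_i k_j^{\top}}{\sqrt{d}}\right) - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{q_i k_j^{\top}}{\sqrt{d}}$$

where $q_i$ is the $i$-th query, $k_j$ is the $j$-th key, and $L_K$ is the number of keys.

Furthermore, the computation is simplified via a maximum-mean approximation, which avoids evaluating the log-sum-exp term and its numerical stability issues; the corresponding approximation is:

$$\bar{M}(q_i, K) = \max_{j}\left\{\frac{q_i k_j^{\top}}{\sqrt{d}}\right\} - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{q_i k_j^{\top}}{\sqrt{d}}$$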
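To make the query-selection step concrete, the following sketch computes both the log-sum-exp sparsity metric and its maximum-mean approximation for a handful of toy queries, then keeps the top-u queries. It is a simplified illustration: it scores every query against every key, whereas Informer additionally samples a subset of keys, and the function names are hypothetical.

```python
import math

def sparsity_scores(queries, keys):
    """Return (exact metric, max-mean approximation) for each query."""
    d = len(queries[0])
    exact, approx = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        mean = sum(scores) / len(scores)
        peak = max(scores)
        # Log-sum-exp evaluated relative to the peak score for stability.
        lse = peak + math.log(sum(math.exp(s - peak) for s in scores))
        exact.append(lse - mean)
        approx.append(peak - mean)
    return exact, approx

def top_u_queries(queries, keys, u):
    """Indices of the u queries with the largest max-mean score."""
    _, approx = sparsity_scores(queries, keys)
    order = sorted(range(len(queries)), key=lambda i: approx[i], reverse=True)
    return order[:u]

# Toy data: query 0 scores all keys equally (uniform attention, hence "lazy"),
# query 1 strongly prefers one key, query 2 is nearly uniform.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
queries = [[0.0, 0.0], [4.0, 0.0], [0.1, 0.1]]

exact, approx = sparsity_scores(queries, keys)
active = top_u_queries(queries, keys, u=1)
print(active)
```

For any query the approximation is bounded by the exact metric from above and by the exact metric minus ln(L_K) from below, which is why ranking by the cheaper score tends to select the same dominant queries.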

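Second, the self-attention distilling operation progressively filters dominant attention features layer by layer, effectively reducing the memory (space) complexity of stacked layers. After each stacked layer, this operation applies a one-dimensional convolution, ELU activation, and max pooling to halve the temporal dimension of the input sequence; its formulation is:

$$X_{j+1}^{t} = \mathrm{MaxPool}\left(\mathrm{ELU}\left(\mathrm{Conv1d}\left(\left[X_{j}^{t}\right]_{AB}\right)\right)\right)$$

where $X_j^t$ is the input to the $j$-th layer and $[\cdot]_{AB}$ denotes the attention block.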
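The halving effect of the distilling step can be checked with a small single-channel sketch. It chains a three-tap convolution, ELU, and max pooling with kernel 3, stride 2, and padding 1. The fixed smoothing weights and the zero padding in the convolution are assumptions chosen for readability; a trained model learns its kernels and operates on many channels.

```python
import math

def conv1d_same(x, w):
    """Single-channel 1-D convolution with zero padding so the length is kept."""
    pad = len(w) // 2
    xp = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(w[j] * xp[i + j] for j in range(len(w))) for i in range(len(x))]

def elu(v, alpha=1.0):
    """Exponential linear unit."""
    return v if v > 0 else alpha * (math.exp(v) - 1.0)

def max_pool(x, kernel=3, stride=2, padding=1):
    """Max pooling; padding uses -inf so padded cells never win the max."""
    xp = [-math.inf] * padding + list(x) + [-math.inf] * padding
    n_out = (len(xp) - kernel) // stride + 1
    return [max(xp[i * stride : i * stride + kernel]) for i in range(n_out)]

def distill(x, w=(0.25, 0.5, 0.25)):
    """Conv1d -> ELU -> MaxPool, halving the temporal length."""
    return max_pool([elu(v) for v in conv1d_same(x, w)])

sequence = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
once = distill(sequence)
twice = distill(once)
print(len(sequence), len(once), len(twice))
```

Each application halves the sequence, so stacking several distilling layers shrinks the encoder memory geometrically. This compression is also the step that the present study removes in TCN–Informer to avoid weakening abrupt-change signals.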

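This design lowers the overall memory complexity and, by constructing stacked replicas with progressively halved input lengths and concatenating their outputs, enhances the model’s ability to handle ultra-long input sequences. Finally, the generative decoder replaces the step-by-step inference of the conventional encoder–decoder architecture with a single forward pass that completes long-horizon prediction. Its input is formed by concatenating a start token with placeholders for the target sequence; the formulation is:

$$X_{de}^{t} = \mathrm{Concat}\left(X_{token}^{t}, X_{0}^{t}\right) \in \mathbb{R}^{(L_{token}+L_{y})\times d_{model}}$$

where $X_{token}^{t}$ is the start token of length $L_{token}$, $X_{0}^{t}$ is the placeholder for the target sequence of length $L_{y}$, and $d_{model}$ is the model dimension.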

By leveraging masked multi-head attention to circumvent the autoregressive constraint, the model can directly produce the complete long-horizon prediction during both training and inference, substantially improving inference speed for long sequences.

Empirical results show that Informer significantly outperforms existing methods such as ARIMA, Prophet, LSTM, and DeepAR on multiple large-scale datasets (e.g., electricity transformer temperature, electricity load, and meteorological data). Its prediction error increases more slowly with longer prediction horizons, and it demonstrates clear advantages in both inference speed and memory efficiency, validating its effectiveness and practicality for long-sequence time-series prediction [22]. These cross-domain results indicate that Informer has general advantages in scenarios characterized by long sequences, nonstationarity, and sparse critical events. For ROP prediction, this implies that the model can robustly capture a small number of key turning points over long well sections while maintaining scalable inference efficiency.

2.2. TCN

TCN is a general convolutional architecture for sequence prediction, designed to integrate best practices from modern convolutional networks into a concise and efficient starting point for sequence modeling. TCN is constructed within the broader Convolutional Neural Network (CNN) paradigm. CNNs exploit local connectivity and weight sharing to learn hierarchical features via convolutional kernels (1D for sequences, 2D/3D for images and volumes). Convolution in TCN is constructed to adapt to sequence data, preserving causality and long-range dependencies while retaining the efficiency of CNNs. It should be noted that TCN is not an entirely new architecture but rather a descriptive term for a family of architectures. Its core characteristics rest on two key principles: (1) the network adopts causal convolutions to prevent “leakage” of future information, meaning that the output at each time step depends only on the current and past inputs; and (2) it can accept sequences of arbitrary length and map them to output sequences of the same length, similar to the Recurrent Neural Network (RNN).

In sequence modeling, the model must predict outputs based on the historical portion of the input. Specifically, given an input sequence $x_0, \ldots, x_T$ and the corresponding output sequence $y_0, \ldots, y_T$, each $y_t$ is allowed to depend only on $x_0, \ldots, x_t$ and must not involve any “future” inputs $x_{t+1}, \ldots, x_T$. This causal constraint is fundamental to sequence modeling. TCN enforces this constraint via causal convolutions, which can be summarized as “1-D Fully Convolutional Network (FCN) combined with causal convolution”: the 1-D FCN ensures that each hidden layer has the same length as the input layer by adding zero padding of length (kernel size − 1); the causal convolution guarantees that the output at time $t$ is computed by convolving only with elements at time $t$ and earlier in the previous layer, thereby completely avoiding contamination from future information.
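The causal constraint can be verified directly on a toy example. The sketch below implements a single-channel causal convolution with left zero padding of length (kernel size − 1) and then checks that changing a later input leaves all earlier outputs untouched. The function name and the weights are illustrative assumptions.

```python
def causal_conv1d(x, weights):
    """y[t] = sum_i weights[i] * x[t - i], with inputs before t = 0 taken as zero."""
    k = len(weights)
    padded = [0.0] * (k - 1) + list(x)  # left zero padding of length k - 1
    return [
        sum(weights[i] * padded[t + (k - 1) - i] for i in range(k))
        for t in range(len(x))
    ]

x = [1.0, 2.0, 3.0, 4.0]
y = causal_conv1d(x, [0.5, 0.5])

# Perturb only the last input: outputs at earlier steps must not change.
x_future_changed = [1.0, 2.0, 3.0, 100.0]
y_changed = causal_conv1d(x_future_changed, [0.5, 0.5])

print(y)
print(y_changed[:3] == y[:3])
```

Padding only on the left is what keeps the output the same length as the input while preventing any output from seeing a later sample.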

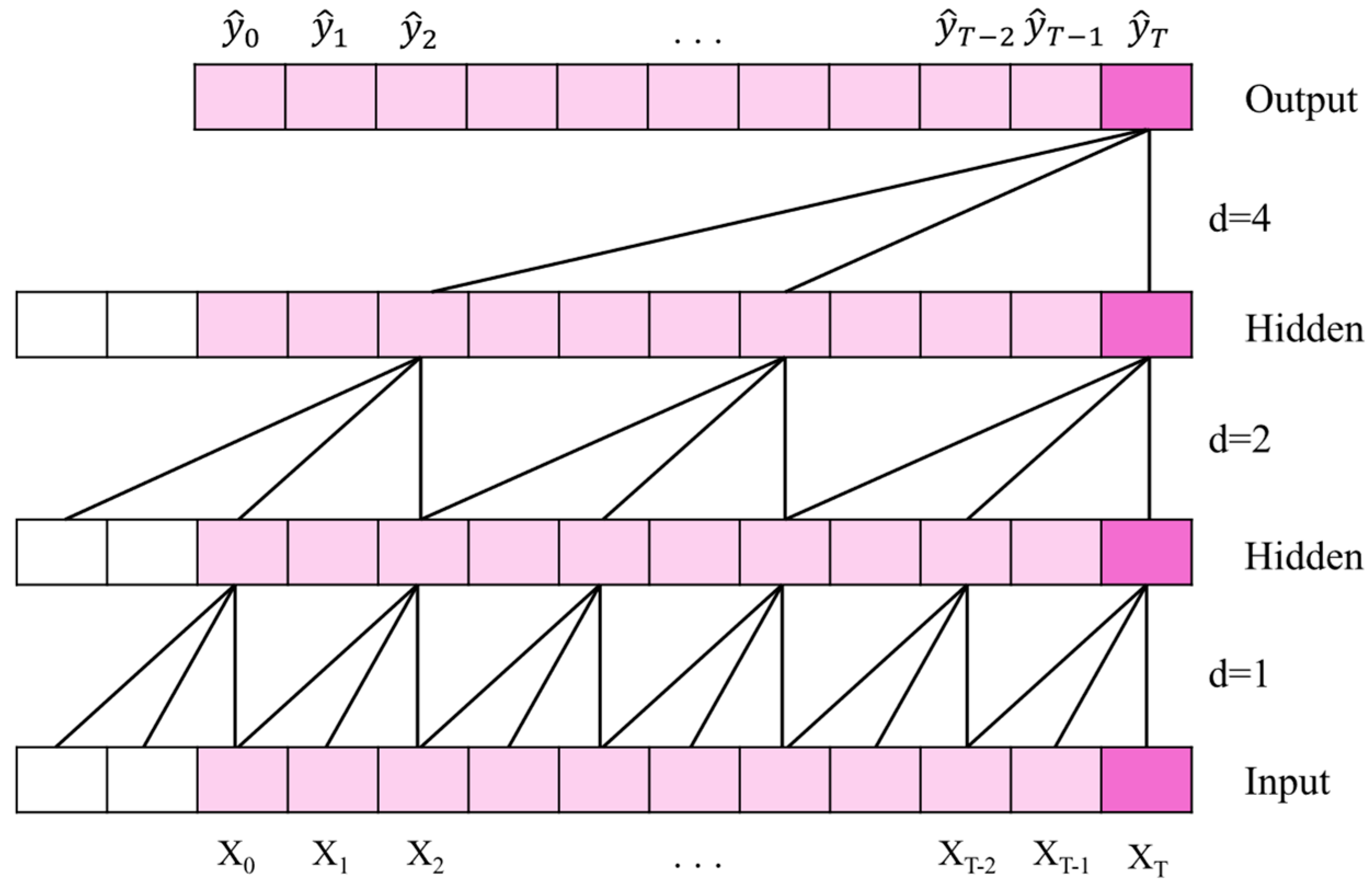

A basic causal convolution has a clear limitation: its effective history length grows linearly with network depth, which is inadequate for tasks requiring long-range historical context. To address this issue, TCN introduces dilated convolutions [23]. As illustrated in Figure 2, by inserting a fixed stride (the dilation factor) between kernel elements, the receptive field grows exponentially. For a 1-D input sequence $x$ and a filter $f$, the computation of a dilated convolution at element $s$ can be expressed as:

$$F(s) = (x *_{d} f)(s) = \sum_{i=0}^{k-1} f(i)\cdot x_{s - d\cdot i}$$

where $d$ is the dilation factor and $k$ is the kernel size, while the term $s - d\cdot i$ reflects the backward traversal over historical inputs. In practice, the dilation factor typically increases exponentially with depth (e.g., $d = 2^{l}$ at layer $l$), enabling deep networks to cover extremely long histories while ensuring that every input position within the receptive field is captured by the corresponding convolutional kernel.
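The exponential growth of the receptive field can be confirmed with a short experiment. The sketch below implements a single-channel dilated causal convolution, stacks three layers with dilations 1, 2, and 4, and feeds in a unit impulse so that the number of nonzero outputs reveals how much history the stack covers. One convolution per layer and all-ones kernels are assumptions made to keep the example small.

```python
def dilated_causal_conv1d(x, weights, dilation):
    """F(s) = sum_i weights[i] * x[s - dilation * i]; indices below 0 count as zero."""
    out = []
    for s in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            j = s - dilation * i
            if j >= 0:
                acc += w * x[j]
        out.append(acc)
    return out

def receptive_field(kernel_size, num_layers):
    """History covered by stacked layers with dilation 2**l, one convolution per layer."""
    return 1 + (kernel_size - 1) * sum(2 ** l for l in range(num_layers))

# Unit impulse at position 0; every later position it influences is nonzero.
signal = [1.0] + [0.0] * 9
for layer in range(3):
    signal = dilated_causal_conv1d(signal, [1.0, 1.0], dilation=2 ** layer)

covered = sum(1 for v in signal if v != 0.0)
print(covered, receptive_field(kernel_size=2, num_layers=3))
```

With kernel size 2, three layers already cover 8 steps, and each extra layer roughly doubles that span. A residual block that contains two convolutions per level would enlarge the (k − 1) term accordingly.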

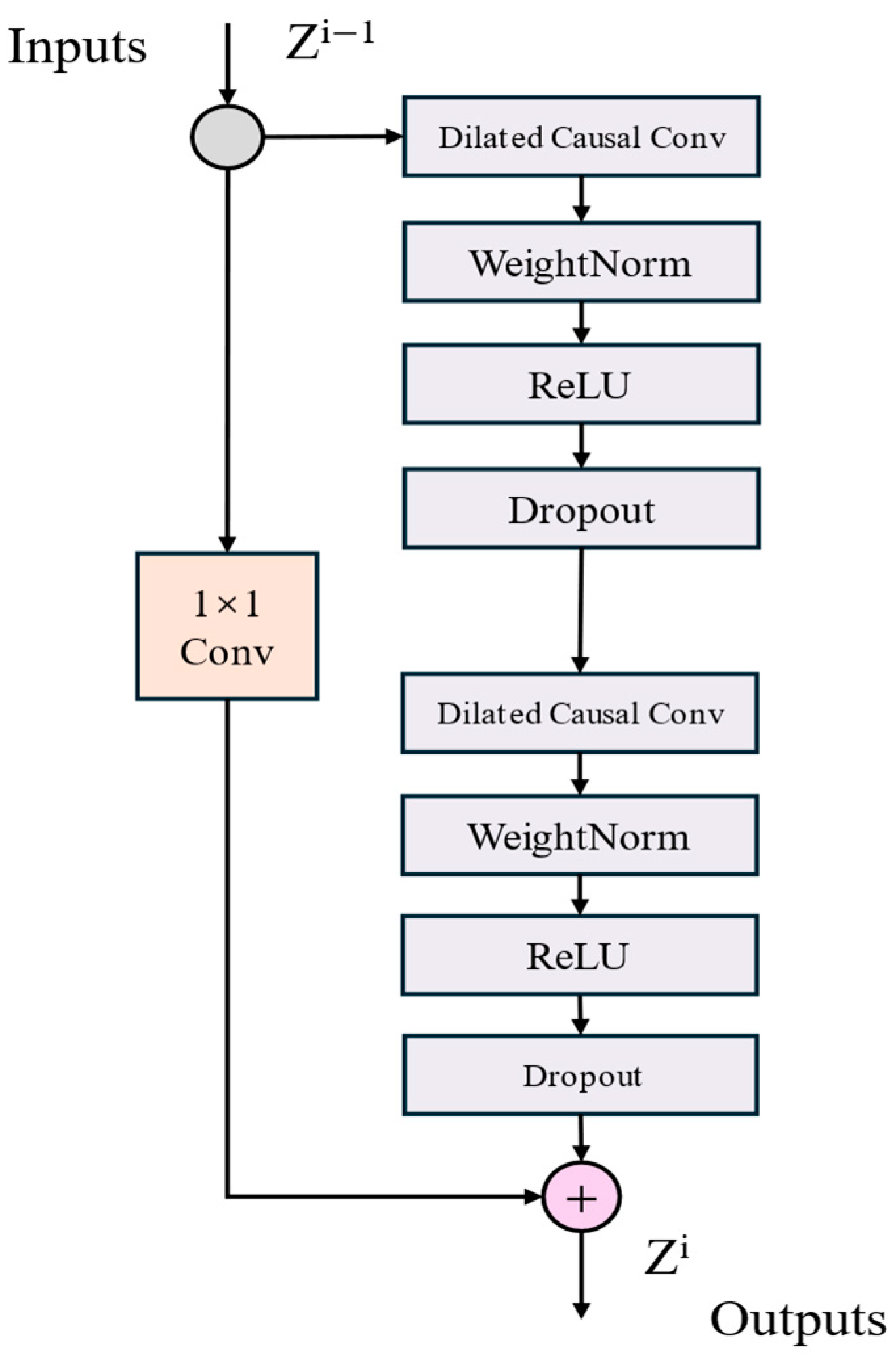

To stabilize the training of deep TCN and improve performance, residual connections are incorporated into the architecture [23]. As shown in Figure 3, each residual block contains two layers of dilated causal convolutions with ReLU activations in between. Weight normalization is applied to the convolutional kernels to accelerate training, and spatial dropout is added after each dilated convolution for regularization (randomly dropping entire channels during training). The computation of a residual block can be expressed as:

$$o = \mathrm{Activation}\left(x + \mathcal{F}(x)\right)$$

where $x$ is the input to the block and $\mathcal{F}$ is a sequence of transformations. When the input and output dimensions differ, a 1 × 1 convolution is used to match dimensions and enable elementwise addition. This design allows each layer to learn a correction to the identity mapping rather than a full transformation, substantially enhancing the training stability of deep networks.
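A single-channel sketch of the residual computation is given below. The transformation branch consists of two dilated causal convolutions with a ReLU in between, and its output is added to the block input before a final ReLU. Weight normalization and dropout are omitted, the weights are arbitrary, and all names are hypothetical, so the sketch shows the data flow only.

```python
def relu(v):
    return v if v > 0.0 else 0.0

def dilated_causal_conv1d(x, weights, dilation):
    """Single-channel dilated causal convolution; indices below 0 count as zero."""
    return [
        sum(w * x[s - dilation * i] for i, w in enumerate(weights) if s - dilation * i >= 0)
        for s in range(len(x))
    ]

def residual_block(x, w1, w2, dilation):
    """o = ReLU(x + F(x)), where F is conv -> ReLU -> conv."""
    hidden = [relu(v) for v in dilated_causal_conv1d(x, w1, dilation)]
    branch = dilated_causal_conv1d(hidden, w2, dilation)
    return [relu(a + b) for a, b in zip(x, branch)]

x = [1.0, 2.0, 3.0]

# With all-zero kernels the branch contributes nothing, so the block
# reduces to the activation of the identity path.
identity_like = residual_block([1.0, -2.0, 3.0], [0.0, 0.0], [0.0, 0.0], dilation=1)

# With nonzero kernels the branch adds a correction on top of the input.
corrected = residual_block(x, [1.0, 0.0], [0.5, 0.5], dilation=1)
print(identity_like, corrected)
```

The zero-kernel case illustrates why residual blocks are easy to train: the block starts close to an identity mapping and only has to learn the correction.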

TCN offers several advantages for sequence modeling. In terms of parallelism, convolutions share kernels across positions, so an entire long input sequence can be processed in parallel rather than step by step as in RNNs, which markedly improves training and evaluation efficiency. The receptive field can be flexibly controlled by adjusting the kernel size, dilation factor, or network depth, facilitating adaptation to different domains. The backpropagation path is decoupled from the temporal direction, mitigating the gradient explosion and vanishing issues common in RNNs. Memory demand during training is relatively low, since kernels are shared within layers and backpropagation depends primarily on network depth; by contrast, gated mechanisms in RNNs often lead to substantially higher memory usage. Moreover, similar to RNNs, TCN can process input sequences of arbitrary length via sliding 1-D convolutional kernels, making it a practical replacement across diverse sequential data.

TCN also has limitations. During evaluation, RNN can generate predictions by maintaining only the hidden state and the current input, whereas TCN must retain the portion of the input sequence within the effective history (i.e., the receptive field), which can increase memory consumption. When transferring from domains with modest memory requirements to those demanding long-range temporal dependencies, an insufficient receptive field in the original TCN may degrade performance.

Overall, by combining causal convolutions, dilated convolutions, and residual connections, TCN demonstrates the potential to surpass traditional recurrent architectures (e.g., LSTM, GRU) in sequence modeling, providing a concise and efficient alternative. In the hybrid model, TCN focuses on short- to mid-term controllable operations and local dynamics, yielding a clean and robust local prior; on this basis, Informer captures sparse yet critical long-range dependencies. Together, they complement each other and support accurate multi-length, long-span ROP prediction [24, 25].

4. Results

4.1. Data Processing

The drilling data used in this study come from the University of Stavanger Rate of Penetration (USROP) dataset constructed by Tunkiel et al. [

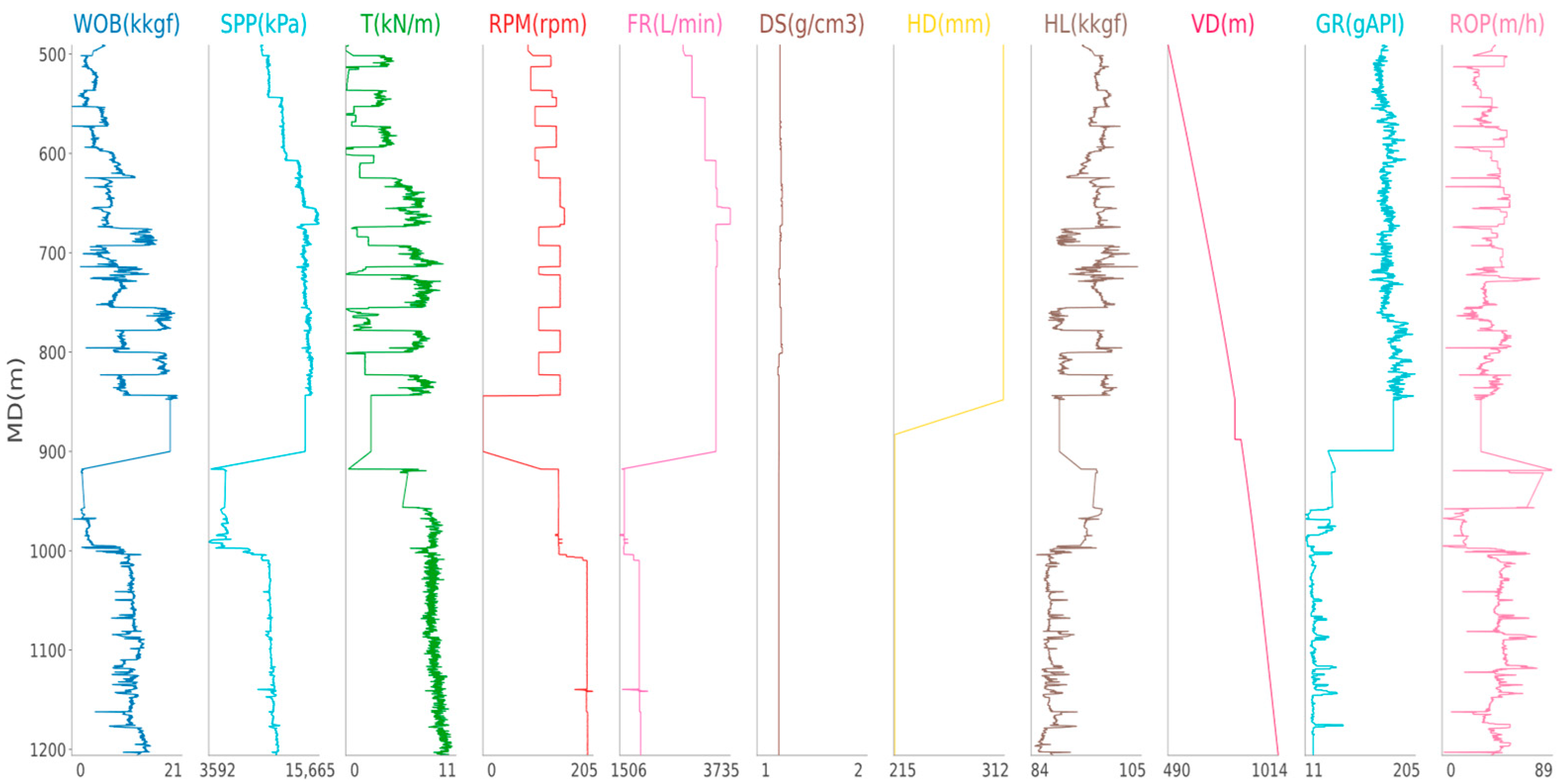

27]. The dataset comprises nearly 200,000 sample records from seven wells and covers 12 common drilling attributes, including measured depth (MD), weight on bit (WOB), standpipe pressure (SPP), surface torque (T), rotary speed (RPM), mud flow rate (FR), mud density (DS), hole diameter (HD), hookload (HL), vertical depth (VD), the USROP gamma value (GR), and ROP. To evaluate the performance of TCN–Informer, this study selected three files from the USROP dataset: USROP_A 0 N-NA_F-9_Ad, USROP_A 2 N-SH_F-14d, and USROP_A 4 N-SH_F-15Sd, hereafter referred to as Well #1, Well #2, and Well #3. To visually illustrate parameter types, lengths, and variations, this paper selects Well #1 for detailed parameter presentation. Data formats for other wells follow the same pattern as Well #1.

Table 1 summarizes Well #1’s basic information, including the unit, minimum value, maximum value, and average value for each parameter.

Figure 5 displays how each drilling parameter (WOB, SPP, T, RPM, etc.) varies with depth, revealing the nonlinear relationships between parameters during the drilling process. After preprocessing the features and labels for each well, the processed data were used for model training and evaluation.

4.2. Evaluation Metrics

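This study adopts MAE, RMSE, 95th MAE, 95th RMSE, and R² as the core metrics to evaluate predictive performance.

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $n$ is the number of samples, $y_i$ is the ground-truth value of the i-th sample, $\hat{y}_i$ is the model’s prediction for the i-th sample, and $\bar{y}$ is the mean of the ground-truth values across all samples.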

MAE measures the average magnitude of the absolute differences between predictions and ground truth, with a range of [0, +∞). Compared with RMSE, MAE is less sensitive to outliers. A smaller MAE indicates higher predictive accuracy. RMSE quantifies the typical magnitude of the differences between predictions and ground truth, with a range of [0, +∞). RMSE is the square root of MSE and is more sensitive to larger errors. A smaller RMSE indicates higher predictive accuracy. R² assesses, from a statistical perspective, the extent to which the model explains the variability of the target variable, with a range of (−∞, 1]. Values of R² closer to 1 indicate that the model explains a greater proportion of the variance in the target variable and achieves a better overall fit between predictions and observations.
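The three overall metrics can be written in a few lines of plain Python. The sketch below follows the standard definitions, with illustrative function names and toy values, and includes a deliberately poor prediction to show that R² can fall below zero.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

y_true = [1.0, 2.0, 3.0, 4.0]
good = [1.5, 2.0, 2.5, 4.0]   # small errors
bad = [4.0, 3.0, 2.0, 1.0]    # trend reversed

print(mae(y_true, good), rmse(y_true, good), r2(y_true, good))
print(r2(y_true, bad))
```

On the toy data the RMSE of the good prediction exceeds its MAE because squaring weights the two nonzero errors more heavily, and the reversed prediction yields a negative R², consistent with the (−∞, 1] range stated above.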

The 95th MAE and 95th RMSE are, respectively, the mean absolute error and root mean square error computed only at fast jump points, i.e., samples whose rate of change exceeds the fast jump threshold ($\tau$). They are calculated with the same formulas as MAE and RMSE once the indices of the fast jump points have been identified. These two metrics prevent long intervals of near-constant (saturated) values from masking the underestimation that occurs at rapid jumps, and therefore evaluate each model’s predictive performance specifically at rapid jump points.

$$r_i = \left|\frac{y_i - y_{i-1}}{d_i - d_{i-1}}\right|$$

$$\tau = P_{95}\left(\{r_i\}\right)$$

$$\mathcal{J} = \left\{\, i : r_i > \tau \,\right\}$$

where $y_i$ is the ground-truth value of the i-th sample, $d_i$ is the depth for the i-th sample, $r_i$ is the rate of change in the i-th sample, $\tau$ is the fast jump threshold, $P_{95}$ is the 95th percentile, and $\mathcal{J}$ is the set of indices of fast jump points.
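One way to compute the fast-jump metrics is sketched below. The sketch assumes that the rate of change is the absolute backward difference of ROP with respect to depth and that the 95th percentile is obtained by linear interpolation; both choices, as well as the function names, are assumptions and may differ from the exact procedure used in this study.

```python
import math

def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in [0, 100])."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def fast_jump_indices(y_true, depth, q=95.0):
    """Indices whose absolute rate of change exceeds the q-th percentile threshold."""
    idx = list(range(1, len(y_true)))
    rates = [abs((y_true[i] - y_true[i - 1]) / (depth[i] - depth[i - 1])) for i in idx]
    tau = percentile(rates, q)
    return [i for i, r in zip(idx, rates) if r > tau], tau

def jump_metrics(y_true, y_pred, depth, q=95.0):
    """MAE and RMSE restricted to the fast jump points."""
    jumps, _ = fast_jump_indices(y_true, depth, q)
    if not jumps:
        raise ValueError("no sample exceeds the fast jump threshold")
    errors = [y_true[i] - y_pred[i] for i in jumps]
    jump_mae = sum(abs(e) for e in errors) / len(errors)
    jump_rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return jump_mae, jump_rmse

# Toy series on a 0.05 m grid: flat ROP with one step change at index 10.
depth = [0.05 * i for i in range(21)]
y_true = [10.0] * 10 + [20.0] * 11
y_pred = [y_true[0]] + y_true[:-1]  # a predictor that lags by one sample

jumps, tau = fast_jump_indices(y_true, depth)
jump_mae, jump_rmse = jump_metrics(y_true, y_pred, depth)
overall_mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
print(jumps, jump_mae, overall_mae)
```

The lagging predictor looks accurate on average because 20 of its 21 predictions are exact, yet its error at the single jump point is as large as the jump itself. This is the masking effect that the two fast-jump metrics are designed to expose.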

4.3. Parameter Settings and Hyperparameter Search

Parameter tuning plays a critical role in optimizing model performance, as demonstrated by related studies. In quantum differential evolution algorithms for constrained capacitated vehicle routing problems, hyperparameter calibration directly influences convergence speed and routing optimization quality [28]. In business process management, parameter configuration affects resource allocation efficiency and workload balancing effectiveness [29]. Consequently, systematic parameter setting and hyperparameter search are indispensable for balancing model complexity, training stability, and predictive accuracy.

To balance modeling efficiency with the ability to capture sequence dependencies, this study configures the encoder input length, the decoder’s known-segment length, and the prediction length as specified in Table 2. This study concatenates the target variable with the raw features to strengthen the sequential signal. Training uses mini-batches and a chunked schedule, with multiple iterations per chunk and early stopping to limit overfitting. This study adopts Adam with weight decay, and selects the learning rate via automated hyperparameter search. To mitigate early-stage instability, this study enables a cold start over the first few windows and applies exponential moving-average smoothing to early predictions.
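The cold-start smoothing can be pictured as an exponential moving average (EMA) applied only during a warm-up phase. The sketch below is a guess at the general shape of such a step: the smoothing factor `alpha`, the warm-up length, and the decision to smooth individual predictions rather than whole windows are all hypothetical and are not taken from this study.

```python
def smooth_cold_start(preds, warmup, alpha=0.5):
    """Apply an EMA to the first `warmup` predictions; pass later ones through unchanged."""
    smoothed = []
    ema = None
    for i, p in enumerate(preds):
        if i < warmup:
            ema = p if ema is None else alpha * p + (1.0 - alpha) * ema
            smoothed.append(ema)
        else:
            smoothed.append(p)
    return smoothed

# Early predictions oscillate strongly before the model settles.
raw = [10.0, 20.0, 0.0, 8.0, 9.0]
stable = smooth_cold_start(raw, warmup=3, alpha=0.5)
print(stable)
```

A smaller `alpha` damps early oscillations more strongly but also delays the response to a genuine change, so the warm-up length should stay short.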

Hyperparameter search follows Optuna’s Bayesian optimization and uses MSE as the validation loss function. During hyperparameter tuning, the constructed sequence dataset is randomly split into training (90%) and validation (10%) subsets. No separate test set is utilized, and model selection is based on validation loss. The search space covers, for Informer, the model dimension, number of attention heads, numbers of encoder and decoder layers, feed-forward dimension, and dropout; and for TCN, the depth (number of levels) and kernel size. This study runs a bounded number of trials to strike a practical balance between efficiency and effectiveness.

4.4. Sliding Window

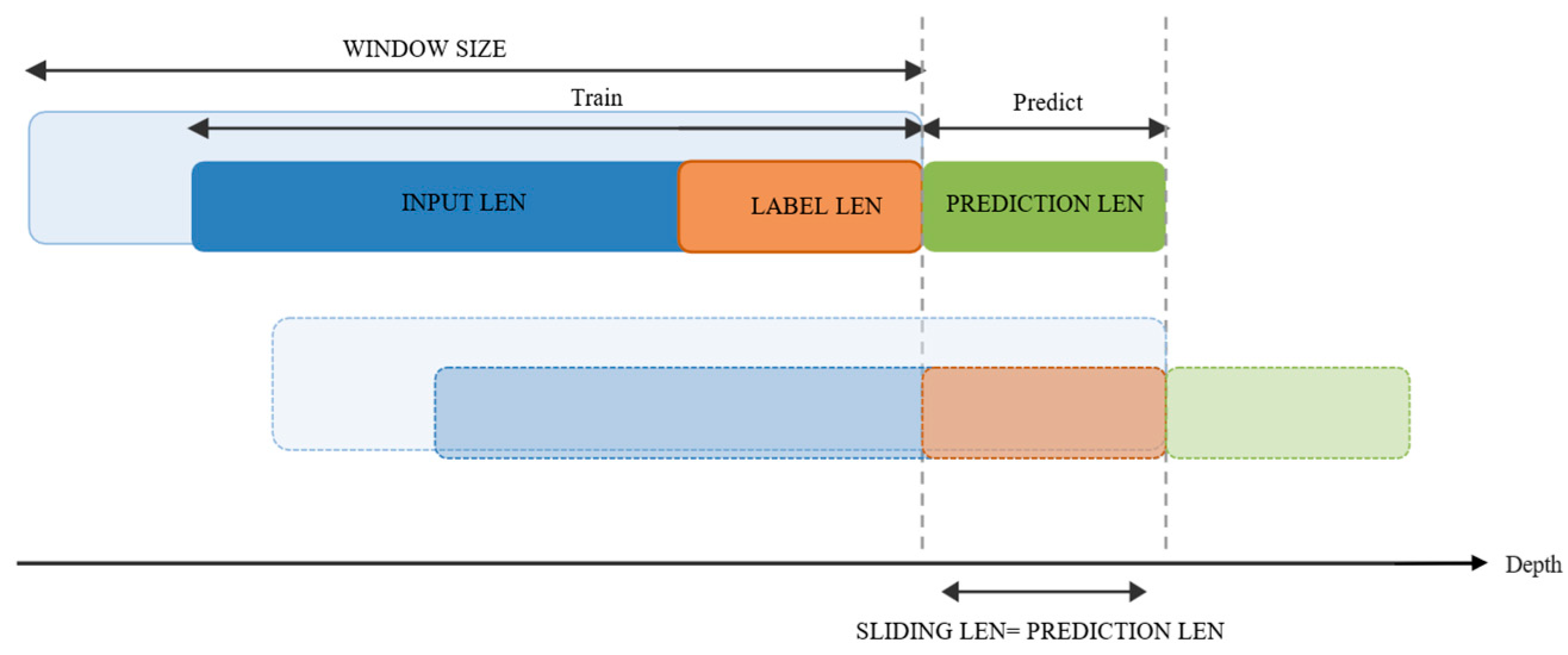

The sliding window is the core mechanism for constructing series samples and enabling continual learning. As shown in Figure 6, during training this study samples the current window to form the encoder input, the decoder’s known segment, and the prediction labels. During inference, the window advances by the prediction length (equal to the sliding length) and incorporates newly observed ground-truth values into subsequent windows.

This study sets the window size as an integer multiple of the input length to preserve short-term fluctuations and mid-term trends. To avoid distribution shift from uninformed padding, the decoder’s future segment uses a last-frame copy strategy to populate placeholder inputs. This design reduces memory footprint and computational load by limiting window length, while it expands the receptive field through multi-layer causal dilated convolutions in the TCN module to capture longer-period dependencies. Together, rolling prediction and cold-start smoothing improve adaptation to distribution drift and suppress early prediction volatility.
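A minimal sketch of the window construction is shown below for a single-feature series. Each window yields the encoder input, a decoder input made of the known segment followed by last-frame copies, and the prediction labels, and the window then advances by the prediction length. The names `seq_len`, `label_len`, and `pred_len` and the value `label_len=6` are assumptions; only the (input 12, predict 3) pairing comes from the settings evaluated in this study.

```python
def make_window(series, start, seq_len, label_len, pred_len):
    """Return (encoder_input, decoder_input, target) for the window at `start`."""
    if not 1 <= label_len <= seq_len:
        raise ValueError("label_len must be between 1 and seq_len")
    enc = series[start : start + seq_len]
    known = enc[-label_len:]
    # Last-frame copy: repeat the final known value instead of padding with zeros.
    placeholder = [known[-1]] * pred_len
    dec = known + placeholder
    target = series[start + seq_len : start + seq_len + pred_len]
    return enc, dec, target

def rolling_windows(series, seq_len, label_len, pred_len):
    """Advance by pred_len so each new window absorbs the newly observed values."""
    start = 0
    while start + seq_len + pred_len <= len(series):
        yield make_window(series, start, seq_len, label_len, pred_len)
        start += pred_len

series = [float(i) for i in range(20)]
windows = list(rolling_windows(series, seq_len=12, label_len=6, pred_len=3))
enc, dec, target = windows[0]
print(len(windows), dec, target)
```

Filling the future segment with the last observed value keeps the decoder input on the same scale as the known segment, which is the motivation given above for avoiding uninformed padding.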

4.5. TCN–Informer vs. Informer ROP Prediction Comparison

This study feeds the processed features and labels into two models: TCN–Informer and the baseline Informer. A sliding window is used to perform continual ROP prediction.

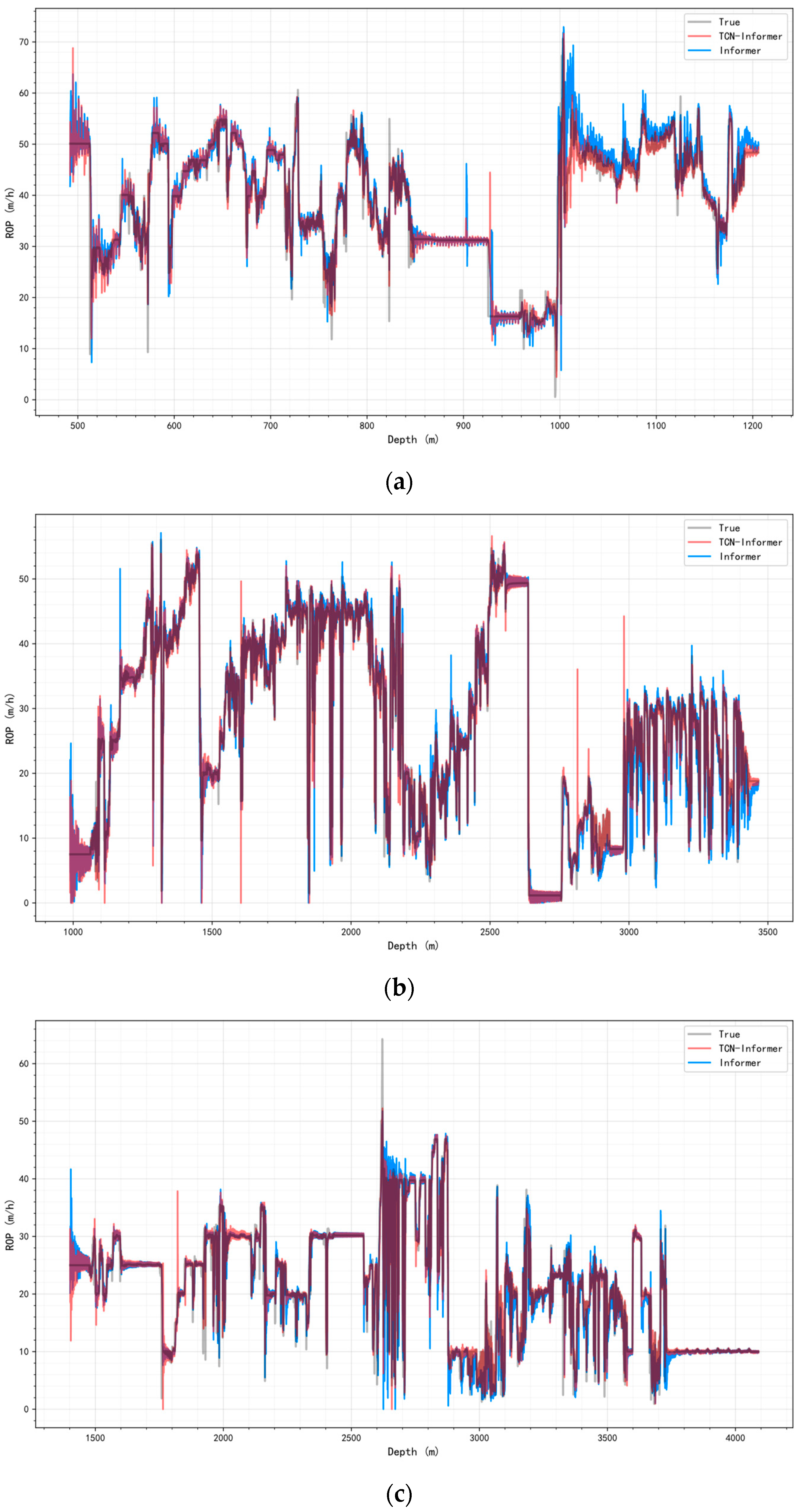

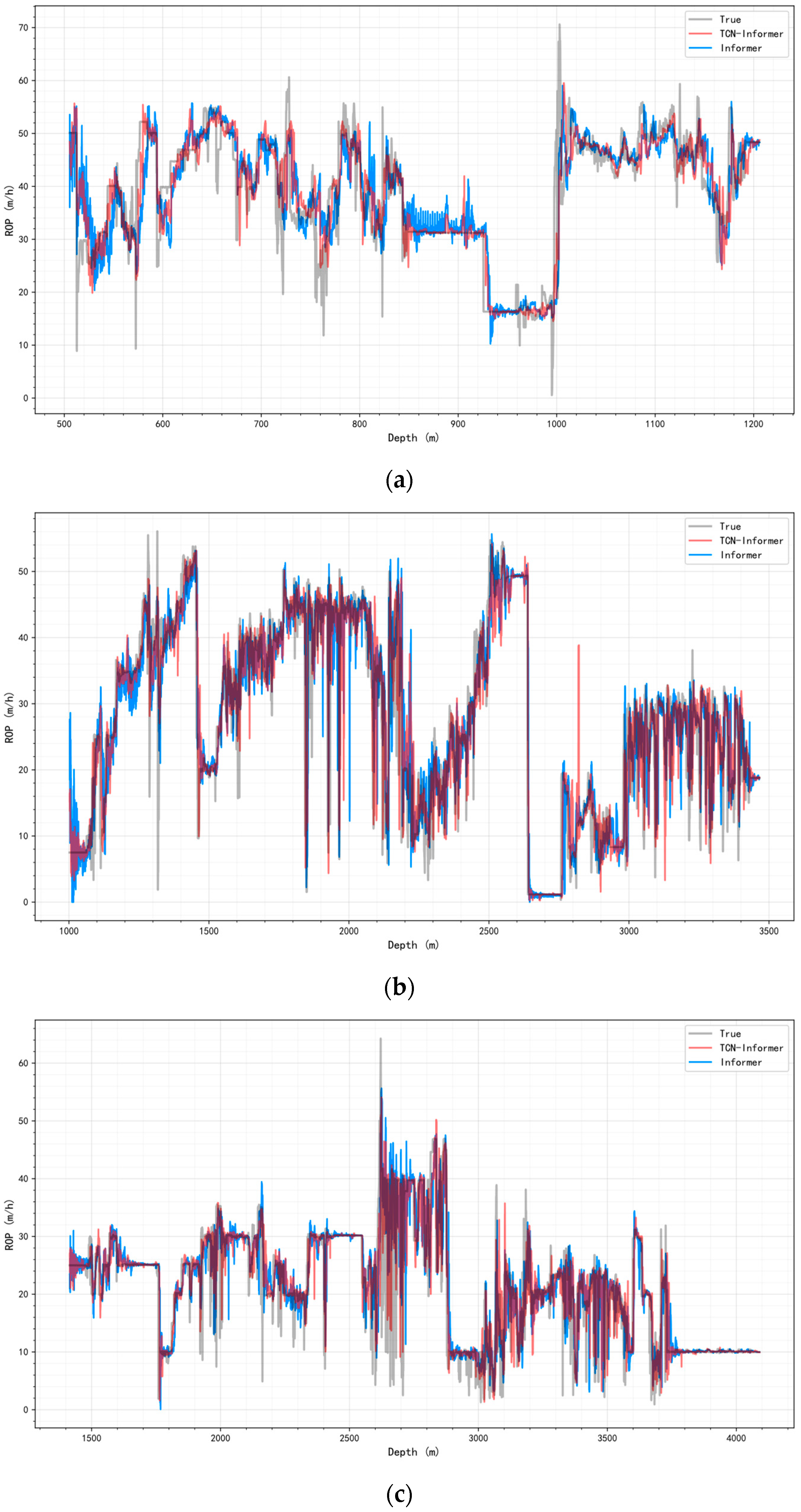

Figure 7 shows a short-sequence setting (input 12, predict 3), and Figure 8 shows a long-sequence setting (input 192, predict 48). Both figures demonstrate the ROP prediction performance of TCN–Informer and Informer. TCN–Informer achieves prediction results closer to actual ROP under both short-sequence and long-sequence settings, exhibiting superior long-term fitting and local noise suppression capabilities.

Across sequence settings, segment lengths, and well conditions, TCN–Informer generally performs better and more consistently. For Well #1, in intervals with dense high-frequency perturbations and local spikes (500–520 m and 985–1005 m), TCN–Informer responds faster to abrupt changes. It shows smaller phase lag at turning points and produces peak-trough amplitudes and arrival times closer to the ground truth. In near-steady or slowly varying intervals (approximately 1005–1180 m), its predictions are smoother, with less overshoot, smaller residual fluctuations, and slower error accumulation as the prediction horizon extends.

This study observes the same pattern in Well #2 and Well #3. In segments with high-frequency perturbations and local spikes (1950–2150 m in Well #2; 2950–3120 m in Well #3), TCN–Informer suppresses noise-induced oscillations and limits over-tracking. In near-steady segments (2600–2700 m in Well #2; 3800–4100 m in Well #3), it maintains trend continuity and stable amplitudes.

These results align with the model design. The TCN branch, through causal dilated convolutions, rapidly captures short-period mechanical vibrations and local inertia, providing a clean short-term prior for the Informer. The Informer, using sparse attention, focuses on distant key dependencies, enabling accurate alignment at abrupt boundaries and over long ranges. As a result, across wells with varying segment lengths and operating conditions (Well #1, Well #2, and Well #3), TCN–Informer effectively fuses short- and long-term information. Compared with the basic Informer, it produces smaller residuals and weaker phase lag, demonstrating strong adaptability to diverse well types and conditions.

4.6. Ablation Study on TCN and Informer Components

To analyze the independent contributions of TCN and Informer components to overall performance, this study separately employed TCN and Informer as standalone models for ROP prediction under consistent experimental conditions, obtaining their evaluation metrics as shown in Table 3.

This ablation study reveals the distinct and complementary contributions of TCN and Informer. In both the long-sequence and short-sequence settings, Informer alone consistently achieves lower MAE and RMSE than TCN while maintaining higher R² values, reflecting its superior modeling capability for long-range dependencies. Conversely, TCN alone achieves lower 95th MAE and 95th RMSE than Informer across all sequence settings, with optimal performance across all metrics in the short-sequence setting (input 12, predict 3), indicating its superiority in capturing local high-frequency dynamics. TCN–Informer achieves optimal performance in the long-sequence settings (input 192, predict 48 and input 96, predict 24), reducing MAE, RMSE, 95th MAE, and 95th RMSE while attaining the highest R². In the short-sequence settings (input 48, predict 12 and input 24, predict 6), it also delivers the best MAE, RMSE, and R² values. Overall, Informer outperforms TCN on metrics in the long-sequence setting, while TCN outperforms Informer on metrics in the short-sequence setting and at fast jump points. This validates a complementary mechanism: TCN’s causal convolutions provide clear short-term prior information, while Informer’s sparse attention coordinates long-range dependencies, thereby enhancing model accuracy.

4.7. TCN–Informer vs. Other Sequence Models ROP Prediction Comparison

To compare TCN–Informer with other sequence models for ROP prediction, this study evaluates TCN–Informer, Informer, LSTM, GRU, and Transformer on Well #1, Well #2, and Well #3, and reports the metrics MAE, RMSE, 95th MAE, 95th RMSE, and R² for ROP. This study also analyzes representative segments from Well #1. The results are summarized in Table 4.

The comparative experiments span multiple settings, from short input with short prediction to long input with long prediction.

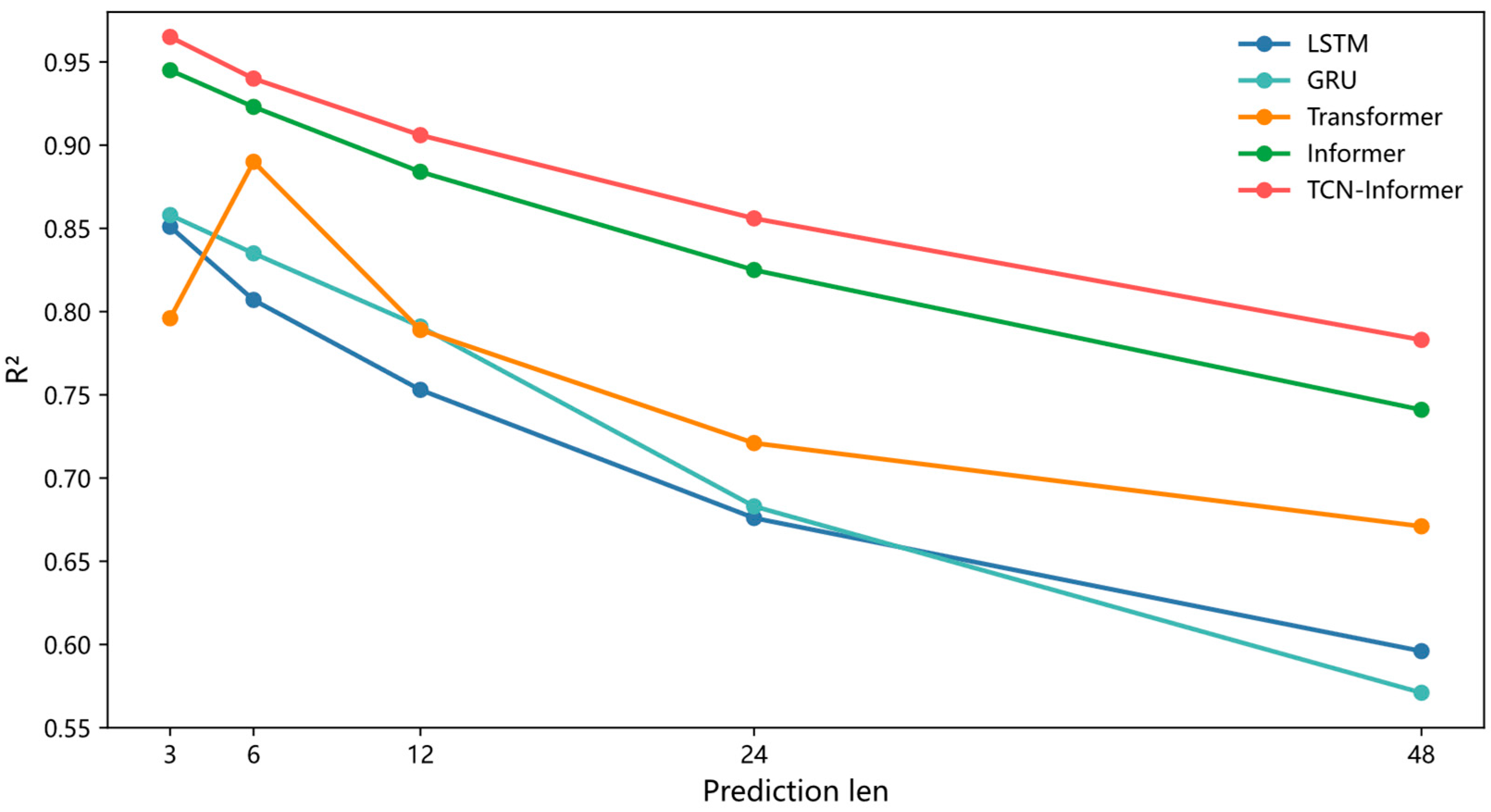

Table 4 and Figure 9 show a clear trend: in most settings, TCN–Informer achieves lower MAE and RMSE and higher R². Its performance also degrades more slowly as the prediction length increases.

Representative scenarios confirm this pattern. With the short-sequence setting (input 12, predict 3), TCN–Informer reduces MAE and RMSE by 24.3% and 18.5% relative to Informer, with R² increasing by 2.1%. Relative to Transformer, MAE and RMSE decrease by 62% and 58.7%, with R² increasing by 21.2%. Relative to GRU, the decreases are 52% and 50%, with R² increasing by 12.5%. Relative to LSTM, the decreases are 61.3% and 57.1%, with R² increasing by 18.4%.

With the long-sequence setting (input 192, predict 48), TCN–Informer reduces MAE and RMSE by 11.8% and 8.4% relative to Informer, with R² increasing by 5.7%. Relative to Transformer, MAE and RMSE decrease by 25.3% and 18.4%, with R² increasing by 16.7%. Relative to GRU, the decreases are 40.7% and 28.8%, with R² increasing by 37.1%. Relative to LSTM, the decreases are 38.5% and 26.5%, with R² increasing by 31.4%.

In addition, Table 4 reports MAE and RMSE at fast jump points. These values are consistently higher than the overall MAE and RMSE, confirming that jump points are the hardest regime. Even under this regime, TCN–Informer attains the smallest errors across models. In the short-sequence setting (input 12, predict 3), its 95th MAE and 95th RMSE are 3.335 and 5.211, improving over Informer (4.125 and 6.145) and remaining below Transformer, GRU, and LSTM. The superiority carries over to the long-sequence setting (input 192, predict 48), where TCN–Informer continues to show smaller errors at fast jump points, indicating better suppression of phase lag and residual fluctuations near abrupt changes. Quantitatively, these outcomes demonstrate that coupling a TCN branch with sparse attention provides a stronger local prior and more reliable alignment of distant dependencies, delivering more accurate and stable predictions at fast jump points than Informer, Transformer, GRU, and LSTM.
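The fast-jump figures quoted for the short-sequence setting can be turned into relative reductions with the usual formula, (baseline − proposed) / baseline. The short sketch below applies it to the four values stated above; the helper name is illustrative, and the resulting percentages are derived here by arithmetic rather than reported in Table 4.

```python
def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers an error metric relative to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline

# 95th MAE and 95th RMSE, input 12 / predict 3: Informer vs. TCN-Informer.
jump_mae_gain = relative_reduction(4.125, 3.335)
jump_rmse_gain = relative_reduction(6.145, 5.211)

print(round(jump_mae_gain, 1), round(jump_rmse_gain, 1))  # prints 19.2 15.2
```

Under this calculation the hybrid lowers the error at fast jump points by roughly 19% (95th MAE) and 15% (95th RMSE) relative to Informer in this setting.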

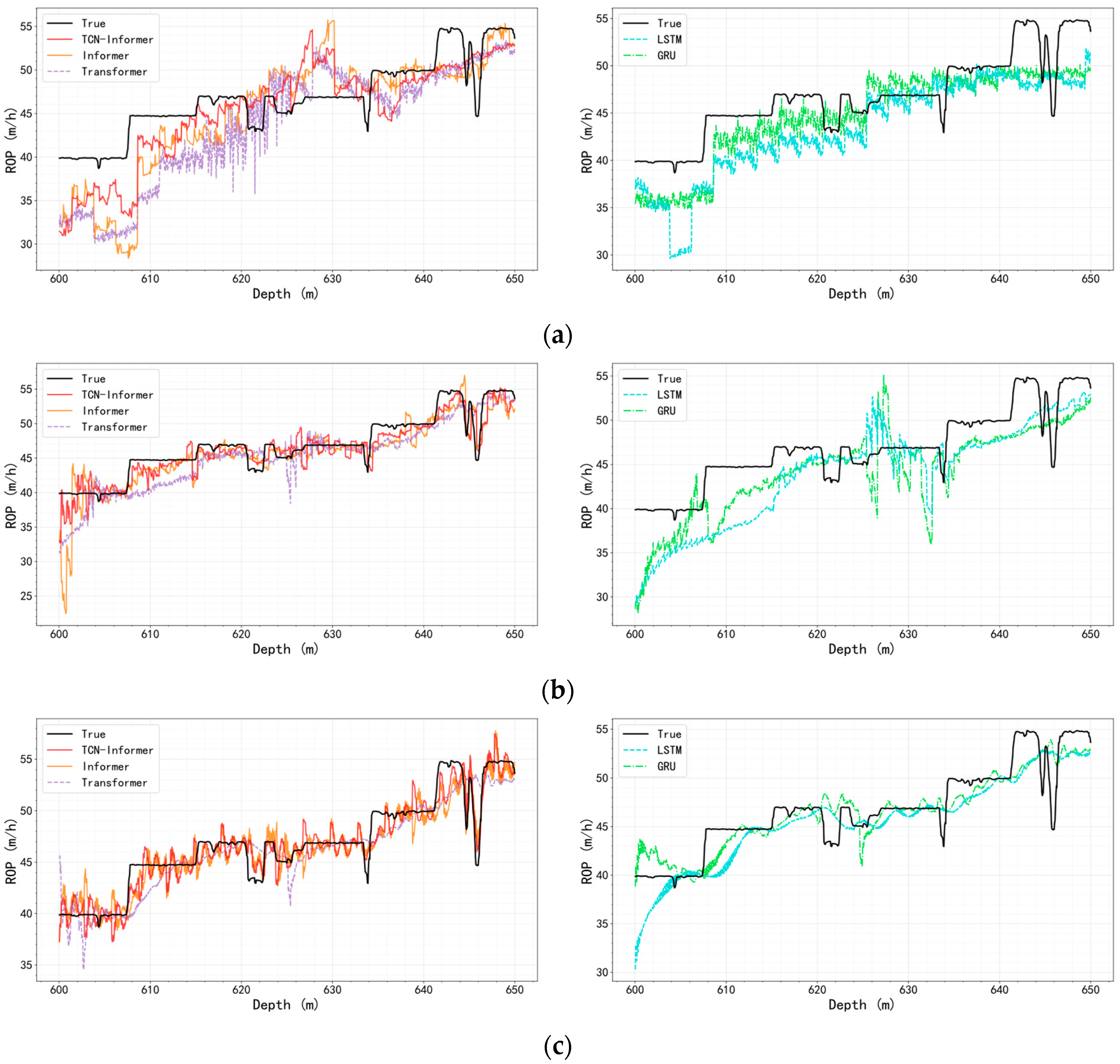

The visualized curves in Figure 10 reinforce these quantitative results. Under varying input and prediction lengths, the TCN–Informer model delivers smaller residuals, shorter phase lags, and smoother long-horizon extrapolations compared to other models. Under the short-sequence setting, LSTM and GRU, limited by effective memory, often show phase lag and amplitude compression. Transformer and Informer capture part of the long-range dependencies but are more sensitive to local high-frequency disturbances and noise, leading to overshoot near turning points. In contrast, TCN–Informer uses causal dilated convolutions to extract short-period components and local inertia, while sparse attention aligns distant key dependencies. This yields more accurate arrival times and amplitudes at abrupt changes.

As input and prediction lengths grow, LSTM and GRU exhibit stronger error accumulation and oscillations. Transformer and Informer frequently show lag and oscillation near abrupt changes. TCN–Informer produces smaller residual fluctuations and phase deviations, smoother long-horizon extrapolation, and stronger trend consistency.

In summary, across different combinations of input and prediction window lengths, TCN–Informer consistently delivers smaller residuals, weaker phase lag, and more stable multi-length prediction performance. These results highlight a complementary mechanism: the TCN branch provides a clean short-term prior, and the Informer, via sparse attention, efficiently aligns distant critical evidence.

5. Conclusions

5.1. Summary

This study addresses the challenges of low prediction accuracy, high computational complexity, and poor adaptability to complex well conditions in traditional models for ROP prediction in drilling engineering. The core issue lies in the inability of single models to simultaneously capture local short-term fluctuations and long-range dependencies in drilling data, which is characterized by strong nonstationarity, sparsity of key events, and susceptibility to noise interference.

To solve this problem, a hybrid TCN–Informer model is proposed that integrates the advantages of TCN and Informer. TCN uses causal dilated convolutions and residual connections to extract local features of the drilling sequence, which compensates for the standalone Informer’s weakness in capturing short-term mechanical vibrations and local inertia. Informer uses ProbSparse self-attention and a generative decoder to reduce the time and space complexity of long-sequence processing, which addresses the traditional Transformer’s inefficiency in handling long drilling sequences. Additionally, the model structure is simplified by removing the distilling layer from Informer and WeightNorm from TCN, and the input embedding method is adjusted to enhance feature representation.
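To make the ProbSparse idea concrete, the following is a minimal sketch of its query-selection step, assuming the max-minus-mean sparsity measurement from the original Informer formulation. For clarity it scores each query against all keys, whereas Informer estimates the measurement on a random subset of keys to keep the cost low. The function names and the sampling factor `c` are illustrative and are not taken from this paper's implementation.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def sparsity_scores(queries: Sequence[Vector], keys: Sequence[Vector]) -> List[float]:
    """Max-minus-mean of scaled dot products for each query (higher = more 'active')."""
    d = len(keys[0])
    scores = []
    for q in queries:
        s = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        scores.append(max(s) - sum(s) / len(s))
    return scores


def select_active_queries(
    queries: Sequence[Vector], keys: Sequence[Vector], c: float = 1.0
) -> List[int]:
    """Return indices of the top-u queries, with u = ceil(c * ln(L_Q)) (assumed rule)."""
    n_queries = len(queries)
    u = min(n_queries, max(1, math.ceil(c * math.log(n_queries))))
    m = sparsity_scores(queries, keys)
    ranked = sorted(range(n_queries), key=lambda i: m[i], reverse=True)
    return sorted(ranked[:u])


# Toy example: query 1 is strongly peaked, queries 0 and 3 attend uniformly.
queries = [[0.0, 0.0], [4.0, 0.0], [0.1, 0.1], [0.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
active = select_active_queries(queries, keys)
print(active)
```

Only the selected queries receive full attention. In the Informer design, the remaining queries fall back to a cheap default (the mean of the values in the encoder), which is where the savings on long sequences come from.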

For data preprocessing, a four-step strategy is adopted: data deduplication, sliding-window quantile outlier detection, isolation forest secondary outlier detection, and KNN resampling to address data quality issues such as repetition, anomalies, and uneven distribution. The experiment uses the USROP dataset, selecting Well #1, Well #2, and Well #3 for validation. Evaluation indicators include MAE, RMSE, and R2, with sliding-window training and Bayesian hyperparameter search based on Optuna to optimize model parameters.
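As an illustration of the second preprocessing step, the sketch below flags outliers with a sliding-window quantile rule. The window length, the quartile levels, and the Tukey-style multiplier `k` are assumptions chosen for the example; the settings actually used in the study are not restated in this section, and the function names are hypothetical.

```python
from typing import List, Sequence


def quantile(values: Sequence[float], q: float) -> float:
    """Linear-interpolation quantile of a non-empty sequence, with 0 <= q <= 1."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def flag_outliers(
    series: Sequence[float],
    window: int = 11,
    lower_q: float = 0.25,
    upper_q: float = 0.75,
    k: float = 1.5,
) -> List[bool]:
    """Flag points lying outside the local quantile band widened by k times its spread."""
    half = window // 2
    flags = []
    for i, value in enumerate(series):
        # Centered window, truncated at the series boundaries.
        segment = series[max(0, i - half): i + half + 1]
        low, high = quantile(segment, lower_q), quantile(segment, upper_q)
        spread = high - low
        flags.append(value < low - k * spread or value > high + k * spread)
    return flags


# Toy ROP trace with one spike at index 5.
rop = [10, 11, 9, 10, 12, 100, 10, 9, 11, 10, 10]
flags = flag_outliers(rop)
print([i for i, is_outlier in enumerate(flags) if is_outlier])
```

Using local rather than global quantiles lets the band follow slow changes in ROP with depth, so a value that is normal in one formation is not flagged simply because it is unusual for the well as a whole.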

Experimental results show that the TCN–Informer model outperforms the single-model baselines (Informer, LSTM, GRU, and Transformer) under various input and prediction length combinations. Under the short-sequence setting (input 12, predict 3), the proposed model reduces MAE and RMSE by 24.3% and 18.5%, respectively, and increases R2 by 2.1% compared to the Informer model. Under the long-sequence setting (input 192, predict 48), the reductions are smaller, 11.8% in MAE and 8.4% in RMSE, but the improvement in R2 is larger at 5.7%. The model also responds better to sudden ROP changes and predicts more stably in steady-state intervals, supporting its effectiveness and adaptability for ROP prediction.
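The input and prediction lengths quoted above describe how each training sample is cut from the drilling sequence. A minimal sketch of such sliding-window sample construction is given below, assuming a univariate series and a stride of one step; the function name `make_windows` and the `stride` parameter are illustrative and not taken from the paper.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float], input_len: int, pred_len: int, stride: int = 1
) -> List[Tuple[List[float], List[float]]]:
    """Split a series into (input window, prediction target) pairs."""
    total = input_len + pred_len
    samples = []
    # The range is empty when the series is shorter than one full sample.
    for start in range(0, len(series) - total + 1, stride):
        x = list(series[start:start + input_len])
        y = list(series[start + input_len:start + total])
        samples.append((x, y))
    return samples


# Toy series of 20 steps with the short-sequence setting (input 12, predict 3).
rop = list(range(20))
samples = make_windows(rop, input_len=12, pred_len=3)
print(len(samples))   # 6
print(samples[0][1])  # [12, 13, 14]
```

The long-sequence setting corresponds to `input_len=192` and `pred_len=48`, which requires at least 240 consecutive samples per window.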

5.2. Limitations

Despite the satisfactory performance of the TCN–Informer model in ROP prediction, this study has several limitations. First, the data preprocessing stage focuses on outlier removal and resampling but lacks in-depth handling of missing data. In actual drilling operations, sensor failures or data transmission interruptions often cause large-scale missing data, which may affect the reliability of the model’s inputs and the stability of its predictions. Second, the model is validated only on the USROP dataset, in which the drilling conditions (such as formation type, drilling equipment, and operating parameters) are relatively limited. It has not been verified on datasets from other regions, complex formation environments (e.g., high-temperature and high-pressure formations), or special drilling technologies (e.g., horizontal well drilling), which may restrict its generalization ability in more diverse practical scenarios. Third, hyperparameter optimization relies on Optuna’s Bayesian search with a limited number of trials and a fixed patience, which may not fully explore the hyperparameter space, leaving room for improvement in optimization efficiency and accuracy.

5.3. Future Directions

Future research can proceed in three directions. First, the model’s validation scope should be expanded. This involves collecting drilling data from different regions, formation types, and drilling technologies to build a more diverse and comprehensive dataset, and then verifying the model’s adaptability and stability in complex and variable field environments to improve its generalization ability. Specifically, multi-source data can be integrated by standardizing and coordinating the inputs through unified modalities and ontologies, or by applying feature-level fusion with temporal alignment or multi-view learning. This approach enables robust cross-modal learning while preserving modality-specific information. Second, the hyperparameter optimization strategy should be improved. For instance, multi-objective optimization algorithms (e.g., NSGA-II) could be incorporated to balance model accuracy and computational efficiency. Alternatively, adaptive hyperparameter adjustment mechanisms could be adopted to reduce reliance on manual tuning. Third, the integration of domain knowledge (e.g., drilling mechanics principles, formation lithology characteristics) into the model design should be explored, such as adding domain-guided attention mechanisms or feature engineering modules, to further improve the model’s physical interpretability and prediction accuracy in complex drilling scenarios. Specifically, physics-based constraints or loss terms derived from drilling mechanics can be imposed, covering the relationships among WOB, torque, ROP, and bit–rock interaction. Alternatively, hybrid models can be developed by combining data-driven predictors with reduced-order or differentiable physical simulators to regularize training and ensure physically consistent outputs.