1. Introduction

Embedded systems have become an essential foundation of modern society, supporting applications ranging from consumer electronics to automotive and industrial control [1]. As embedded systems continue to evolve, their computational demands have grown substantially due to the increasing complexity of real-world tasks. To address these challenges, multi-core architectures have been widely adopted, offering the potential to boost overall performance through parallel execution [2]. Recent processors for the embedded domain often feature four or more cores, and some platforms even use many-core CPUs with over a dozen cores [3,4].

However, the actual performance of tasks in a multi-core system is not determined by the hardware specification alone. It is also influenced by the entire software stack, including the operating system, device drivers, and middleware. Furthermore, most embedded systems include jobs with timing constraints, such as real-time control loops. The performance metrics used in application design for such systems are therefore not limited to average throughput; they must also encompass timing-related factors such as execution delay and deadline satisfaction.

Traditionally, embedded systems, especially those for hard real-time tasks, are built upon MCUs (microcontroller units) with lightweight RTOS (real-time operating system) kernels. To guide system development and support application design, evaluating platform characteristics with comprehensive benchmarking tools is an effective and necessary approach [5]. For RTOS-based multi-core environments, some studies have proposed benchmark methods and tools that examine various factors (e.g., inter-core resource contention, kernel locking mechanisms) and reveal useful insights for performance analysis and bottleneck identification [4,6].

Besides these RTOS-based systems, Linux has also become a major option in embedded environments equipped with multi-core SoCs (systems on a chip) to achieve the high performance and rich functionality required by more complex applications [7]. While Linux was not originally designed for real-time systems, its community is actively adopting capabilities that improve real-time support. For instance, the latest long-term kernel (6.12 as of writing) has fully integrated the PREEMPT_RT real-time patchset and refined its scheduling mechanisms to deliver lower latency and better determinism. However, existing benchmark tools for Linux mainly focus on evaluating overall throughput or computational efficiency rather than real-time performance metrics, leaving timing-related characteristics largely unexplored [8,9]. Technically, it is also possible to port the RTOS-oriented benchmark tools mentioned above to Linux, but they primarily employ fixed stress-test scenarios for worst-case assessment, which cannot accurately reflect the performance of realistic application workloads on complex embedded systems. Some tools such as MiBench [10] and TACLeBench [11] are usually categorized as realistic benchmarks for embedded systems because they derive workloads from the computation kernels and algorithms of real-world applications. However, these programs are implemented as hard-coded static tasks, so they cannot evaluate performance under varying numbers of active cores, timing behaviors, resource accesses, and contention.

In this paper, to fill the gaps left by existing approaches, we propose a new multi-core benchmark framework for Linux, which supports performance characteristics analysis with diversified tasks generated to simulate realistic workloads of computing and contention in embedded systems. The main contributions of our work are summarized as follows:

Benchmark methodology: The proposed methodology integrates a resource contention model into the task-set generation process. This bridges the gap between theoretical models and executable benchmark programs and enables performance evaluation with various workloads created from controllable parameters.

Open-source framework: Building on this methodology, we design and implement a portable, open-source benchmark framework that automatically generates, compiles, and executes task sets on Linux-based platforms. It can also systematically iterate through parameter grids to produce highly diversified scenarios for quantitatively analyzing the impact of the related factors. The source files are available for reproducibility and extensibility (see “Data Availability Statement” for the repository).

Empirical case study: Using the proposed framework, we perform an experimental study on a 16-core embedded platform to compare Linux kernels with different versions and preemption modes. The evaluation results not only demonstrate the effectiveness of our method for multi-core embedded systems, but also provide new insights into the real-time performance characteristics of Linux-based platforms.

The remainder of this paper is organized as follows. In Section 2, we review mainstream methodologies for performance evaluation in embedded systems and highlight their limitations in the context of multi-core evaluation. In Section 3, we present the proposed benchmarking methodology in detail, including the design of a contention-oriented task-set model, the pipeline of task-set generation, and the implementation of the benchmarking framework. In Section 4, we describe the experiments that evaluate the performance characteristics of three Linux configurations and comparatively analyze the results to show the usefulness of our benchmark method. Finally, Section 5 concludes the paper and outlines directions for future work.

3. Materials and Methods

The primary goal of our methodology is to design benchmark workloads that resemble realistic tasks in embedded systems and thereby enable systematic analysis of multi-core performance characteristics. To achieve this goal, we first construct models that capture the common features of tasks in multi-core environments, considering both resource contention and computation behaviors. Based on these models, we design an algorithm that synthesizes executable task sets according to user-specified parameters, enabling the generation of various benchmark scenarios. Finally, we implement a portable and extensible benchmark framework that integrates the generator with automated compilation, execution, and data collection, so that the user can evaluate and analyze embedded systems under different environment settings with little effort.

3.1. Multi-Core Resource Contention Model

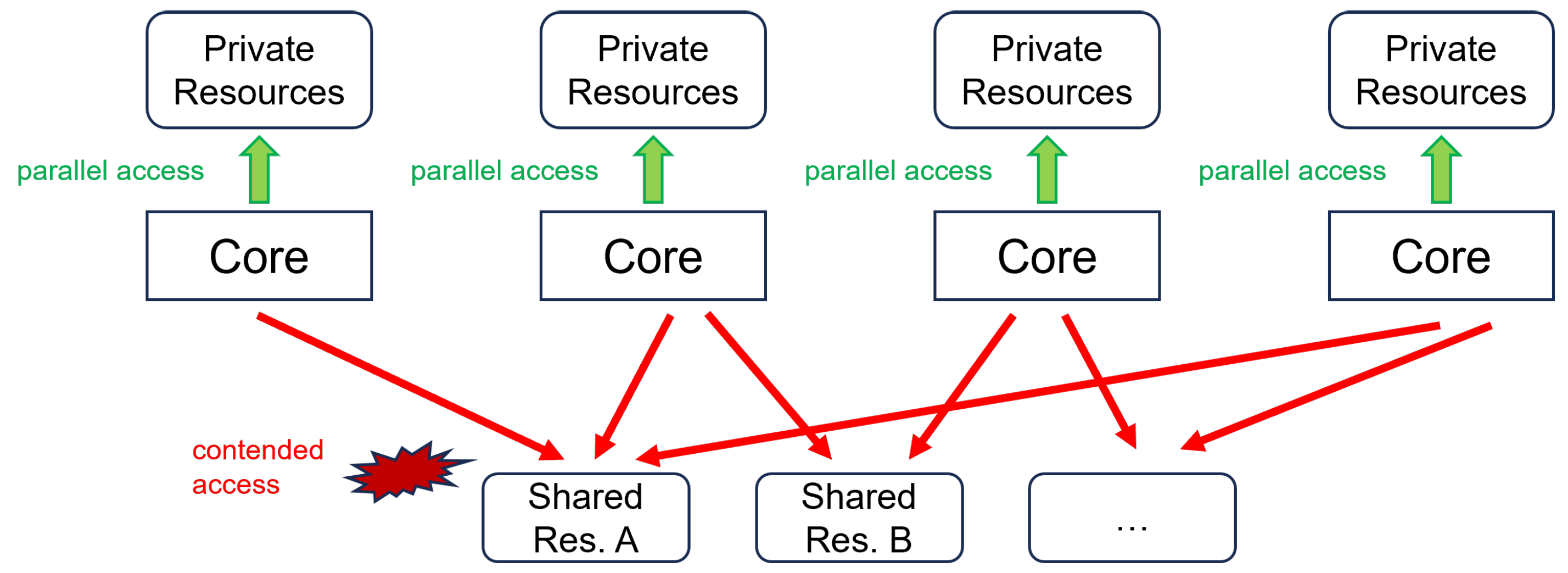

We first describe the resource contention model in multi-core systems. As illustrated in Figure 1, multiple tasks running on different cores may attempt to access the same shared resource concurrently. Such concurrent access inevitably introduces contention, which in turn may cause waiting and potential task delays. Most of the contended resources are kernel objects managed at the OS level. Especially in control-flow-oriented embedded systems, synchronization and communication primitives such as semaphores and data queues are heavily used and thereby become major sources of inter-core contention. It is noteworthy that many hardware components, such as caches and the memory bus, are shared implicitly and should also be considered contended resources.

In theory, the delay caused by multi-core contention can be bounded by the worst-case scenario in which multiple tasks accessing the same resource serialize their entries into the critical section. In practice, however, the observed delays often far exceed this theoretical expectation, particularly as the number of cores increases. Several factors contribute to this phenomenon, including contention in multi-level cache hierarchies, operating-system overheads such as context switching and scheduling, and contention on various system locks.

On the other hand, modern operating systems, including certain real-time operating systems, are increasingly adopting fine-grained locking designs. This trend changes the impact of resource access compared to traditional embedded systems that relied on a single global lock. With a global lock, any resource access may cause contention across all tasks in the system. By contrast, fine-grained locking introduces separate locks for each core or even for individual resources. While this design introduces additional overhead due to lock management, it significantly improves system parallelism. Experience from mature systems such as Linux has shown that fine-grained locking is beneficial for real-time performance, which has motivated several RTOSs to explore similar approaches. Differences in lock design introduce an additional dimension into the resource contention model. In addition to the number of cores and the frequency of resource accesses, one must also consider whether a task accesses private resources, i.e., those confined to its own core, or global resources shared across cores. As the contrast between global and fine-grained locking makes clear, these two types of access behave very differently in terms of contention. We therefore regard this distinction as a key factor in evaluating modern embedded systems that are evolving toward fine-grained locking.

We identify three primary factors that influence multi-core contention in embedded systems: the number of cores, the proportion of resource accesses within parallel tasks, and the type of resource being accessed. In this work, we refer to accesses to private resources as parallel access, and to accesses to global resources as contended access.

3.2. Task-Set Model

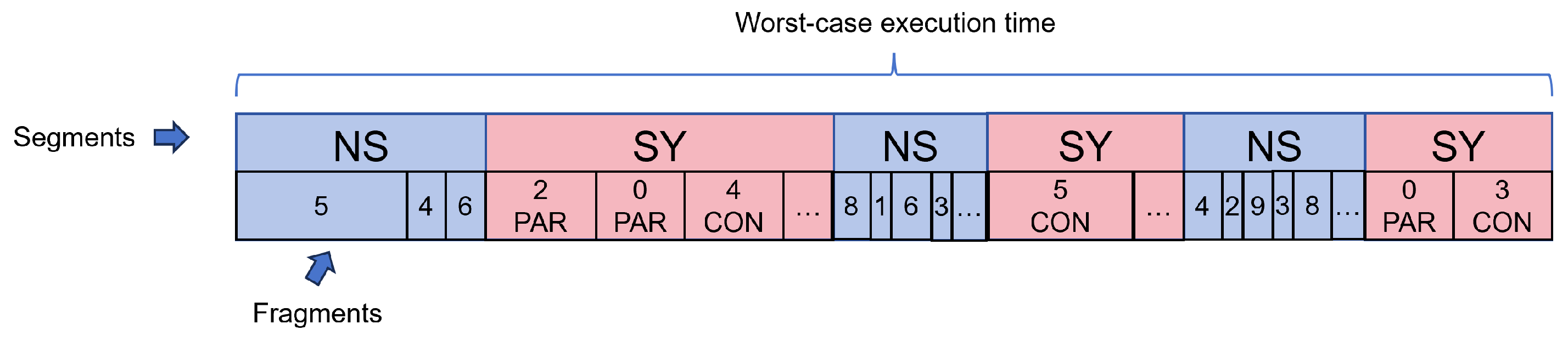

Building on the above contention model, we now integrate these factors into a formalized task-set model for multi-core evaluation. In real embedded systems, tasks typically consist of kernel API calls for synchronization and communication, which explicitly introduce system-level contention, as well as conventional code, such as the computation-intensive routines commonly found in control-flow programs. While the latter usually does not directly cause OS-level contention, it may still induce hardware-level contention by accessing shared components such as caches and memory buses. For clarity of modeling, we therefore abstract each task as a combination of NS (non-synchronization) segments and SY (synchronization) segments, where the NS segments represent typical computation workloads and the SY segments explicitly capture OS-level resource accesses.

It is important to note that not all synchronization segments necessarily lead to resource contention. For example, certain synchronization operations may only access resources that are private to a specific core, thus avoiding inter-core interference. Therefore, to distinguish between different synchronization segments, we explicitly integrate the resource contention model into the task-set model. Specifically, each synchronization segment for a type of kernel resource is associated with two alternative API implementations. The CON (contended-access) version enforces access to a shared resource, thereby maximizing contention among tasks. By contrast, the PAR (parallel-access) version directs tasks to private resources confined to their own cores, enabling concurrent execution under fine-grained locking schemes. This distinction allows the model to reproduce a broader range of multi-core execution scenarios.
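The CON/PAR distinction can be illustrated with a minimal sketch. It assumes a semaphore as the kernel resource and uses Python's `threading.Semaphore` as a stand-in; the names `NUM_CORES`, `select_semaphore`, and `sy_fragment` are hypothetical and do not come from the framework, whose fragments are C code.

```python
import threading

NUM_CORES = 4  # hypothetical core count for this illustration

# One semaphore shared by every core (CON) and one private semaphore per core (PAR).
global_sem = threading.Semaphore(1)
private_sems = [threading.Semaphore(1) for _ in range(NUM_CORES)]


def select_semaphore(mode: str, core_id: int) -> threading.Semaphore:
    """Return the semaphore an SY fragment should use for the given access mode."""
    if mode == "CON":
        return global_sem             # every core contends for the same object
    if mode == "PAR":
        return private_sems[core_id]  # each core touches only its own object
    raise ValueError(f"unknown access mode: {mode!r}")


def sy_fragment(mode: str, core_id: int) -> None:
    """A semaphore fragment: acquire and release the selected resource."""
    with select_semaphore(mode, core_id):
        pass  # the critical section would go here
```

Both variants execute the same operation; only the binding of the resource changes, which is what lets a single fragment be instantiated in either mode.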

Considering the need to generate executable code for real-system evaluation, we further refine each segment into smaller units, referred to as fragments. A fragment represents a complete and self-contained operation. For non-synchronization segments, a fragment may correspond to a function implementing a mathematical computation or algorithmic routine. For synchronization segments, a fragment corresponds to a specific API invocation, such as a semaphore operation or a message queue access. Each segment is constructed by filling its allocated execution slot with an appropriate collection of fragments. Following related work, we also include the common parameters required for task sets in embedded system analysis. Specifically, each task is associated with a worst-case execution time (WCET), a period, and a relative deadline.

Incorporating these elements, we formalize the task-set and resource models as follows. A task set is denoted as

Each task

is characterized by the tuple

with the parameters defined as

Each segment

is

with the parameters defined as

For synchronization segments (

), the access mode and resource class are constrained by

The resource set is

with the parameters defined as
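As a rough illustration of the model described in this section, the task, segment, and resource attributes can be captured in a small data structure. This is a sketch only: the class and field names (`Segment`, `Task`, `length_us`, and so on) are assumptions chosen for readability and are not the paper's notation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Segment:
    kind: str                             # "NS" or "SY"
    length_us: float                      # execution-time budget of the segment
    access_mode: Optional[str] = None     # "CON" or "PAR"; SY segments only
    resource_class: Optional[str] = None  # e.g. "semaphore"; SY segments only

    def __post_init__(self) -> None:
        if self.kind not in ("NS", "SY"):
            raise ValueError("kind must be 'NS' or 'SY'")
        if self.kind == "SY":
            if self.access_mode not in ("CON", "PAR") or self.resource_class is None:
                raise ValueError("SY segments need an access mode and a resource class")
        elif self.access_mode is not None or self.resource_class is not None:
            raise ValueError("NS segments carry no access mode or resource class")


@dataclass
class Task:
    wcet_us: float
    period_us: float
    deadline_us: float
    segments: List[Segment] = field(default_factory=list)

    def segments_fill_wcet(self, tol: float = 1e-6) -> bool:
        """Check that the segment budgets add up to the task's WCET."""
        return abs(sum(s.length_us for s in self.segments) - self.wcet_us) <= tol
```

The validation in `Segment` mirrors the constraint that an access mode and a resource class are only meaningful for synchronization segments.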

3.3. Task-Set Generator

The task-set model captures both the characteristics of realistic embedded tasks and the multi-core contention model. In this section, we design a code generator that produces executable C programs from this model. The workflow comprises four stages:

(1) User input

The generator is configured by the following user inputs:

Cores and tasks: the number of active cores M and the total number of tasks N.

Segment cap per task: an upper bound on the number of segments per task; the segment count of every task must not exceed this bound.

WCET bounds: lower and upper bounds for sampling task WCETs from a user-specified distribution whose support is the interval between the two bounds.

Synchronization share: controls the proportion of synchronization (SY) within each task as an expected share of total segment time.

Access-mode mix within SY: controls the ratio between contended (global-resource) and parallel (core-private) APIs inside SY segments. We interpret this parameter as the expected fraction instantiated as contended (CON), with the remainder instantiated as parallel (PAR).

Remark. The synchronization share and the access-mode mix jointly shape OS-level contention: the former sets how much of a task is synchronization-driven, while the latter sets the CON:PAR mix within those SY portions.

(2) Task-set synthesis

Unlike utilization-driven generators (e.g., UUniFast and related optimizers), we currently focus on contention effects rather than schedulability frontiers. For each task, we independently sample its worst-case execution time (WCET) from the user-specified distribution and partition it into segments with randomized lengths that sum to the WCET. The number of segments respects the per-task segment cap, and the type of each segment (NS or SY) is constrained by the synchronization share. We then fix the timing parameters, i.e., the period and relative deadline, as a fixed multiple of the WCET.

This “ten-times” heuristic, common in control-oriented embedded workloads where the nominal period substantially exceeds single-core compute time, standardizes load and suppresses utilization-induced schedulability pressure. As a result, observed differences are primarily attributable to inter-core resource contention (e.g., access modes and resource classes) rather than to the utilization distribution itself. In contention-heavy regimes, additional blocking, cache/memory interference, and lock serialization often dominate the baseline compute time and may push response times beyond the nominal budget. For completeness, the generator also provides an optional utilization-driven mode that allocates per-task utilizations in a UUniFast-style manner; this mode is included for users who require it, although it is not yet evaluated in this study.
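The synthesis step can be sketched as follows. This is a minimal illustration under stated assumptions, not the generator's actual code: a uniform distribution stands in for the user-specified one, segment types are drawn independently with the synchronization share as probability, and the relative deadline is assumed equal to the period. The function name `synthesize_task` and its parameters are hypothetical.

```python
import random
from typing import Dict


def synthesize_task(
    rng: random.Random,
    wcet_min: float,
    wcet_max: float,
    max_segments: int,
    sync_share: float,
    period_factor: float = 10.0,
) -> Dict[str, object]:
    """Sample one task: WCET, segment lengths and types, period, deadline."""
    # Assumption: a uniform distribution stands in for the user-specified one.
    wcet = rng.uniform(wcet_min, wcet_max)

    # Pick a segment count within the cap, then cut [0, wcet] at random points
    # so that the segment lengths sum to the WCET.
    count = rng.randint(1, max_segments)
    cuts = sorted(rng.uniform(0.0, wcet) for _ in range(count - 1))
    bounds = [0.0] + cuts + [wcet]
    lengths = [bounds[i + 1] - bounds[i] for i in range(count)]

    # Each segment is SY with probability sync_share, so the expected share
    # of SY time equals sync_share.
    kinds = ["SY" if rng.random() < sync_share else "NS" for _ in range(count)]

    # "Ten-times" heuristic; deadline == period is an assumption of this sketch.
    period = period_factor * wcet
    return {"wcet": wcet, "lengths": lengths, "kinds": kinds,
            "period": period, "deadline": period}
```

Because types are drawn independently of lengths, the synchronization share holds only in expectation; an individual task may deviate from it, especially when it has few segments.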

(3) Segment realization using fragments

To instantiate the model as executable task sets, the generator fills each segment created in the previous step with a list of concrete fragments drawn from one of the following two disjoint pools, according to the segment type. (i) Non-synchronization (NS) pool. We source compute kernels from TACLeBench [11] that emphasize arithmetic and algorithmic routines while avoiding data-dependent I/O and OS interactions. To support accurate time matching, we select compute kernels spanning multiple execution scales (via problem-size parameters). Each compute kernel is wrapped into a side-effect-free function with a fixed interface and no external I/O, enabling standardized inlining into NS segments. (ii) Synchronization (SY) pool. Building on the analysis of common resource-access APIs in real-time embedded systems [4], we select some of the most representative synchronization/communication primitives (e.g., semaphores, mutexes, message queues) available in Linux. For each primitive, we provide two implementations: a parallel variant bound to core-local (private) resources and a contended variant bound to globally shared resources.

Table 2 summarizes the current NS and SY fragment pools that we implemented to demonstrate the generator. The generator includes a microbenchmark to calibrate these fragments. When running on a new system, every candidate fragment in the pools is automatically measured under single-core, interference-free conditions to obtain its baseline execution time. The measurement results are stored as a per-platform calibration map and subsequently used to match segment budgets.
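The idea of a per-platform calibration map can be sketched as below. This is only an illustration in Python: the real fragments are C functions measured on the target, and the helper name `calibrate` and the use of `time.perf_counter_ns` are assumptions of this sketch rather than the framework's implementation.

```python
import time
from statistics import mean
from typing import Callable, Dict


def calibrate(
    fragments: Dict[str, Callable[[], object]],
    iterations: int = 1000,
) -> Dict[str, float]:
    """Return a map from fragment name to its mean execution time in nanoseconds."""
    calibration: Dict[str, float] = {}
    for name, fragment in fragments.items():
        samples = []
        for _ in range(iterations):
            start = time.perf_counter_ns()
            fragment()
            samples.append(time.perf_counter_ns() - start)
        calibration[name] = mean(samples)
    return calibration
```

A call such as `calibrate({"sum_small": lambda: sum(range(100))})` yields a one-entry map whose value depends on the machine it runs on.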

For segment realization, the generator fills each segment’s time budget with a homogeneous set of fragments of the appropriate type. We employ a randomized packing heuristic: fragments are sampled from a size-stratified catalog to promote diversity, and selection proceeds until the cumulative measured cost matches the target duration within a small tolerance. Within SY segments, the fragment version (parallel vs. contended) is selected according to the user-specified access-mode mix, which constrains the PAR:CON ratio, and the corresponding resource binding (private vs. global) follows from this choice. This pipeline yields the source code for each segment, with concrete fragments filled in according to the user parameters. Figure 2 shows an example of an executable task with segments generated and fragments filled.
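A simplified version of such a randomized packing heuristic is sketched below. It assumes a flat calibration map instead of a size-stratified catalog and picks uniformly among the fragments that still fit; the function name `pack_segment` and the 5% default tolerance are hypothetical.

```python
import random
from typing import Dict, List


def pack_segment(
    rng: random.Random,
    budget: float,
    costs: Dict[str, float],
    tolerance: float = 0.05,
) -> List[str]:
    """Pick fragments at random until the budget is met within the tolerance."""
    if any(cost <= 0 for cost in costs.values()):
        raise ValueError("fragment costs must be positive")
    chosen: List[str] = []
    remaining = budget
    while remaining > tolerance * budget:
        # Only fragments that do not overshoot the remaining budget are eligible.
        fitting = [name for name, cost in costs.items() if cost <= remaining]
        if not fitting:
            break  # nothing fits: leave the residual unfilled
        name = rng.choice(fitting)
        chosen.append(name)
        remaining -= costs[name]
    return chosen
```

The packing never exceeds the budget, so any mismatch is an underfill bounded by the larger of the tolerance and the cost of the smallest fragment.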

(4) Executable benchmark program generation

The synthesized task-set artifacts from the above pipeline are then passed to a Python 3 script that renders a C project template with the Jinja2 engine. The template provides the minimal runtime skeleton required by the target system to compile and build the benchmark program into an executable binary file.
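The rendering step can be illustrated with a small sketch. Note that it uses Python's standard `string.Template` as a stand-in for Jinja2 so that it runs without third-party packages; the template text, the function name `render_task`, and the fragment names are all hypothetical.

```python
from string import Template
from typing import Iterable

# A tiny C template: the task body calls its fragments in order.
C_TEMPLATE = Template("""\
/* Generated task body: fragments are called in order. */
static void task_${task_id}(void)
{
${calls}
}
""")


def render_task(task_id: int, fragment_calls: Iterable[str]) -> str:
    """Render the C source of one task from its ordered list of fragment names."""
    calls = "\n".join(f"    {name}();" for name in fragment_calls)
    return C_TEMPLATE.substitute(task_id=task_id, calls=calls)
```

For example, `render_task(0, ["frag_a", "frag_b"])` returns a C function named `task_0` whose body calls `frag_a()` and then `frag_b()`.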

3.4. Benchmark Framework

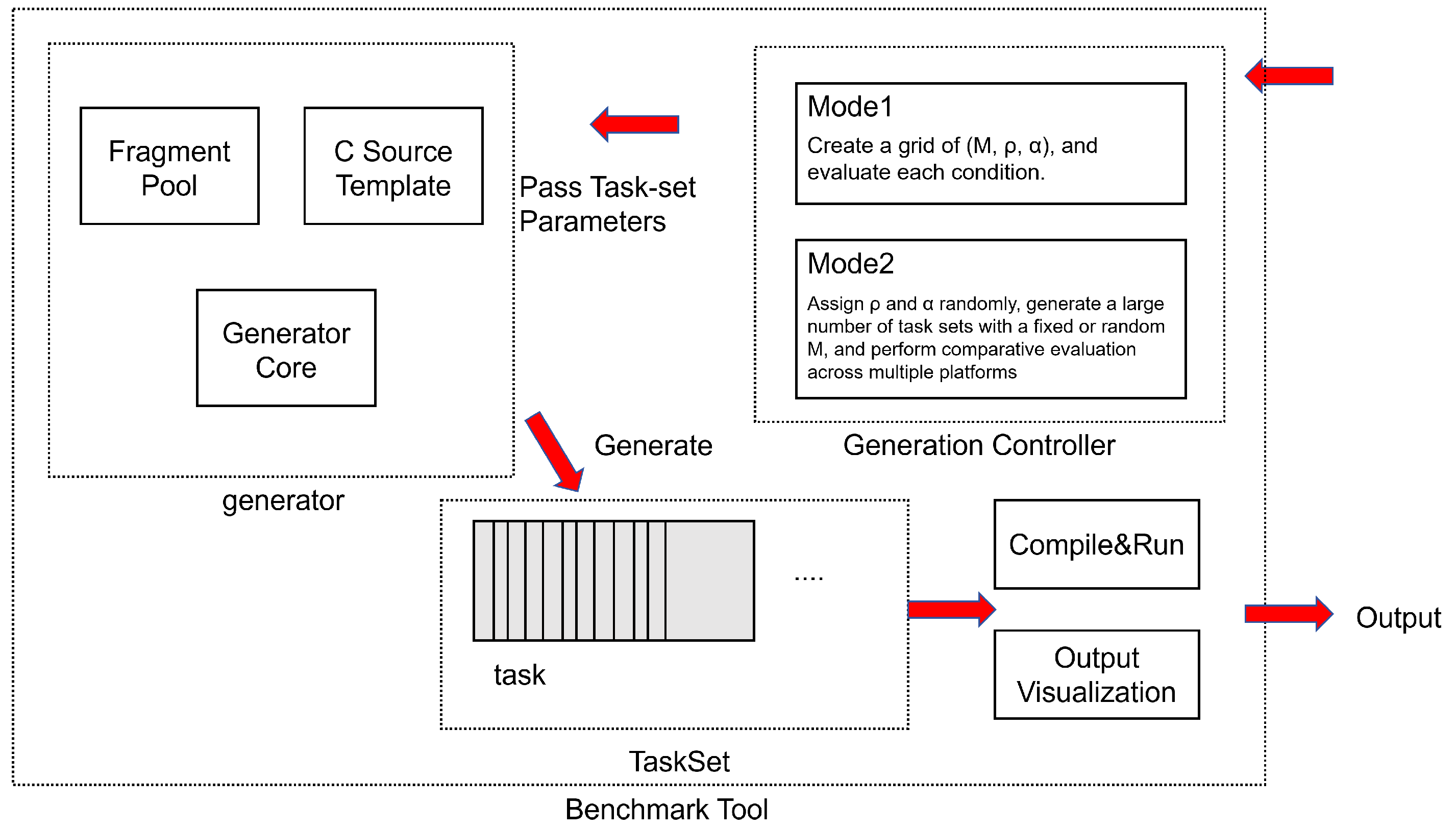

In this section, we present the benchmark framework, which leverages the task-set generator above to evaluate the system across the parameter space and provide a comprehensive system-level perspective. With the generator, we can instantiate task sets for any specified contention configuration, namely, the number of active cores M, the synchronization share within tasks, and the access-mode mix within synchronization. Our framework can iterate over the full grid to measure the evaluation metrics explained below for real-time performance analysis.

Figure 3 presents the overall architecture of the benchmark framework. The workflow begins by collecting user inputs, including the chosen evaluation mode and the number of active cores M. Two modes are currently supported.

Mode 1: systematic profiling. We profile task-set behavior over the two-dimensional parameter space spanned by the synchronization share and the contended-access ratio. Given user-specified grid resolutions for the two parameters, the tool instantiates a predetermined number of task sets at every grid point and aggregates performance metrics per point. Python drivers invoke the task-set generator to instantiate concrete programs and use build-and-run scripts to compile and execute them. The tool calculates and visualizes global summaries and preserves per-run artifacts for inspection and reproduction.

Mode 2: randomized stress testing. This mode includes two variants: (2a) all parameters fully randomized per task set; and (2b) fixed M with randomized synchronization share and contended-access ratio. As in Mode 1, the pipeline is implemented in Python and integrates code generation, compilation, and execution, with metrics and raw outputs retained as separate files.
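The grid traversal of Mode 1 can be sketched as follows. The sketch assumes that both parameters range over [0, 1] with the end points included, which the text does not state explicitly; the helper names `grid_axis` and `mode1_runs` are hypothetical.

```python
from itertools import product
from typing import Iterator, List, Tuple


def grid_axis(resolution: float) -> List[float]:
    """Evenly spaced values from 0.0 to 1.0 inclusive (assumed range)."""
    steps = round(1.0 / resolution)
    return [round(i * resolution, 10) for i in range(steps + 1)]


def mode1_runs(
    sync_resolution: float,
    con_resolution: float,
    sets_per_point: int,
) -> Iterator[Tuple[float, float, int]]:
    """Yield (sync_share, con_ratio, set_index) for every task set to generate."""
    axes = product(grid_axis(sync_resolution), grid_axis(con_resolution))
    for sync_share, con_ratio in axes:
        for set_index in range(sets_per_point):
            yield sync_share, con_ratio, set_index
```

Each yielded tuple corresponds to one generate, build, and run cycle; the per-point metrics are then aggregated over the `sets_per_point` task sets that share a grid point.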

Performance metrics: Because the task-set design adopts the ten-times timing heuristic, we complement the standard deadline-miss rate with a delay ratio that relates the measured execution time of a task to its target WCET; a value of 1.0 means that the task ran exactly as long as its WCET.

In addition, we define the deadline miss ratio at each grid point g by aggregating all jobs across all task-set runs instantiated at g and then taking the fraction of those jobs that miss their deadlines. Here, the jobs of a run s form the multiset of all jobs released during that run. A “job” denotes one instance of a periodic task over a period (one release–deadline pair).
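The two metrics can be computed as in the sketch below. It assumes that the delay ratio of a job is its measured response time divided by the WCET of its task, which is consistent with how the ratio is interpreted in the results but is not a formula taken from the text; the `Job` tuple layout and function names are hypothetical.

```python
from typing import List, Tuple

# (measured response time, target WCET, relative deadline), all in the same unit
Job = Tuple[float, float, float]


def mean_delay_ratio(jobs: List[Job]) -> float:
    """Average of response_time / wcet over the given jobs (assumed definition)."""
    return sum(response / wcet for response, wcet, _ in jobs) / len(jobs)


def deadline_miss_ratio(runs: List[List[Job]]) -> float:
    """Fraction of missed jobs, pooled over all runs at one grid point."""
    total = sum(len(run) for run in runs)
    missed = sum(1 for run in runs for response, _, deadline in run
                 if response > deadline)
    return missed / total if total else 0.0
```

Pooling jobs before dividing weights every job equally, so a run that releases more jobs contributes more to the ratio than a short run.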

The variation of the delay ratio and deadline miss ratio over the grid yields the system’s multi-core performance signature. In Mode 2, we report (i) the mean delay ratio and deadline miss ratio across all randomized task sets and (ii) their per-task-set distributions, which together summarize system capability under randomized workloads. The tool records all statistics and raw outputs (e.g., summaries, per-run logs) in standalone files.

The entire design and implementation of the benchmark framework are based on hardware-independent abstractions of the proposed models, which ensures a high degree of portability across platforms. The benchmark tool itself is fully implemented in Python: given user-specified parameters, it generates the required contention grid points defined by the synchronization share and the access-mode mix, invokes the task-set generator to produce the corresponding number of task sets, and subsequently compiles and executes them to collect the measurement data. This allows the user to easily reproduce the benchmark in different environments for comparative analysis.

For Mode 1, the only required inputs are the grid resolution, the number of active cores to be tested, and the number of task sets to instantiate per grid point. For Mode 2, the user only specifies whether the number of active cores is fixed and the total number of task sets to be generated. The benchmark then creates a task-set directory, records execution results for each run, and aggregates global metrics. The summarized data files (grid-based metrics for Mode 1 and overall task-set statistics for Mode 2) are stored as CSV files in the working directory. In addition, the framework provides visualization utilities: 3D surface plots are used to illustrate multicore performance signatures, while histograms depict the distribution of metrics across task sets.

In this way, we have constructed a benchmark framework grounded in a task-set model that integrates both realistic embedded-task characteristics and a multi-core contention model. Mode 1 of our framework mainly enables performance characterization of a specific platform, by profiling the overall behavior of a target system under varied contention scenarios. Mode 2, in contrast, randomly generates many task sets under constrained conditions, which can be used together with Mode 1 to facilitate cross-platform comparisons and highlight performance differences among these platforms. We believe that this framework not only fills the gap in multi-core embedded evaluation by explicitly accounting for inter-core contention and examining the performance characteristics of Linux, but also provides useful support for future research and development aimed at understanding and improving embedded systems.

4. Results

4.1. Evaluation Experiments

To validate the design of the proposed benchmark methodology and investigate the open question of how multi-core capabilities differ across Linux configurations, we conduct comparative experiments on the same hardware under multiple kernel settings. The environment is summarized in Table 3.

The LX2160 is a 64-bit Armv8-based embedded SoC from NXP that integrates 16 Cortex-A72 cores with a maximum clock frequency of 2.2 GHz. Its shared cache hierarchy and high core count make it a representative platform for evaluating the impact of Linux kernel design under various contention scenarios.

Because the internal lock design and synchronization mechanisms of Linux are very complex, characterizing multi-core behavior across different kernel configurations is central to understanding and optimizing Linux-based platforms. In this study, we first measure Linux 5.15 PREEMPT (i.e., the Low-Latency Desktop preemption model) as the baseline; it is the official kernel of the LX2160 SDK and the final longterm version of the 5.x series. We then compare the baseline with Linux 6.12 PREEMPT, the latest longterm kernel, to assess the impact of kernel evolution from 5.x to 6.x on multi-core performance. In addition, Linux 6.12 PREEMPT_RT (i.e., the Fully Preemptible Kernel) is evaluated to examine the practical effectiveness of the real-time patches.

To ensure consistency across all experiments, the LX2160 platform is fixed to performance mode at 2 GHz, and all code is compiled with the -O2 optimization level. Task priorities and scheduling policy are uniformly set to SCHED_FIFO. Tasks within each task set are bound to cores 0–15 in a round-robin manner according to their generation order using the affinity function. Other aspects, such as timing measurement and code warm-up, are also standardized. In this implementation, we deliberately focus on reproducing contention scenarios so configurations related to IRQ handling and similar kernel parameters are retained at their default settings. This choice is close to typical deployment environments, thereby improving the practical relevance of our evaluation.
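The round-robin core binding and scheduling setup can be sketched with the Linux scheduling calls exposed by Python's `os` module. The generated benchmark programs themselves are C code, so this is only an illustration; the function names and the priority value 50 are hypothetical, and `pin_and_set_fifo` requires Linux and sufficient privileges to run.

```python
import os
from typing import List


def round_robin_cores(num_tasks: int, num_cores: int) -> List[int]:
    """Assign tasks to cores 0..num_cores-1 in generation order."""
    return [index % num_cores for index in range(num_tasks)]


def pin_and_set_fifo(core_id: int, priority: int = 50) -> None:
    """Bind the calling process to one core and switch it to SCHED_FIFO.

    Linux only; setting a real-time policy normally needs elevated privileges.
    The priority value is a placeholder, not a value taken from the experiments.
    """
    os.sched_setaffinity(0, {core_id})
    os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(priority))
```

For instance, `round_robin_cores(5, 4)` maps five tasks to cores 0, 1, 2, 3, and 0 again.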

We use the microbenchmarks in the generator to measure the baseline execution time of all fragments. To eliminate hardware-related interference and avoid over-optimistic values, we explicitly clear caches, TLBs, and branch predictors after each execution. The results for each fragment (average values calculated from 1000 iterations) are summarized in Table 4.

After establishing the baseline, our experiments employ both Mode 1 and Mode 2 provided by the benchmark framework as follows.

Mode 1: We generate 16 three-dimensional plots, each corresponding to a different number of active cores, showing how the delay ratio and deadline miss ratio vary across grid points defined by the synchronization share and the contended-access ratio. The grid resolution is set to 0.1, and 10 task sets are generated per grid point to ensure that randomness does not bias the results. Each task set is forced to execute for one second before termination, guaranteeing that every task runs at least 200 times and thereby providing sufficient diversity in contention.

Mode 2: We evaluate task-set distributions under randomized conditions. In Mode 2b (fixed M), 3000 task sets are generated for core counts of 4, 8, 12, and 16, allowing us to examine the distributions of delay ratios and deadline miss ratios under these configurations. In Mode 2a (fully randomized), 5000 task sets are generated with all variables randomized. Histograms are then used to characterize the distributions of delay ratios and deadline miss ratios in both cases.

After task sets are generated by the framework, we use the identical benchmark programs to evaluate Linux 5.15 PREEMPT, Linux 6.12 PREEMPT and Linux 6.12 PREEMPT_RT to ensure a fair cross-version comparison.

4.2. Linux 5.15 Performance Characteristics

In this section, we first present the results of systematic profiling (i.e., Mode 1) measured under Linux 5.15 PREEMPT to show how real-time performance is affected by the task sets generated from the contention parameters.

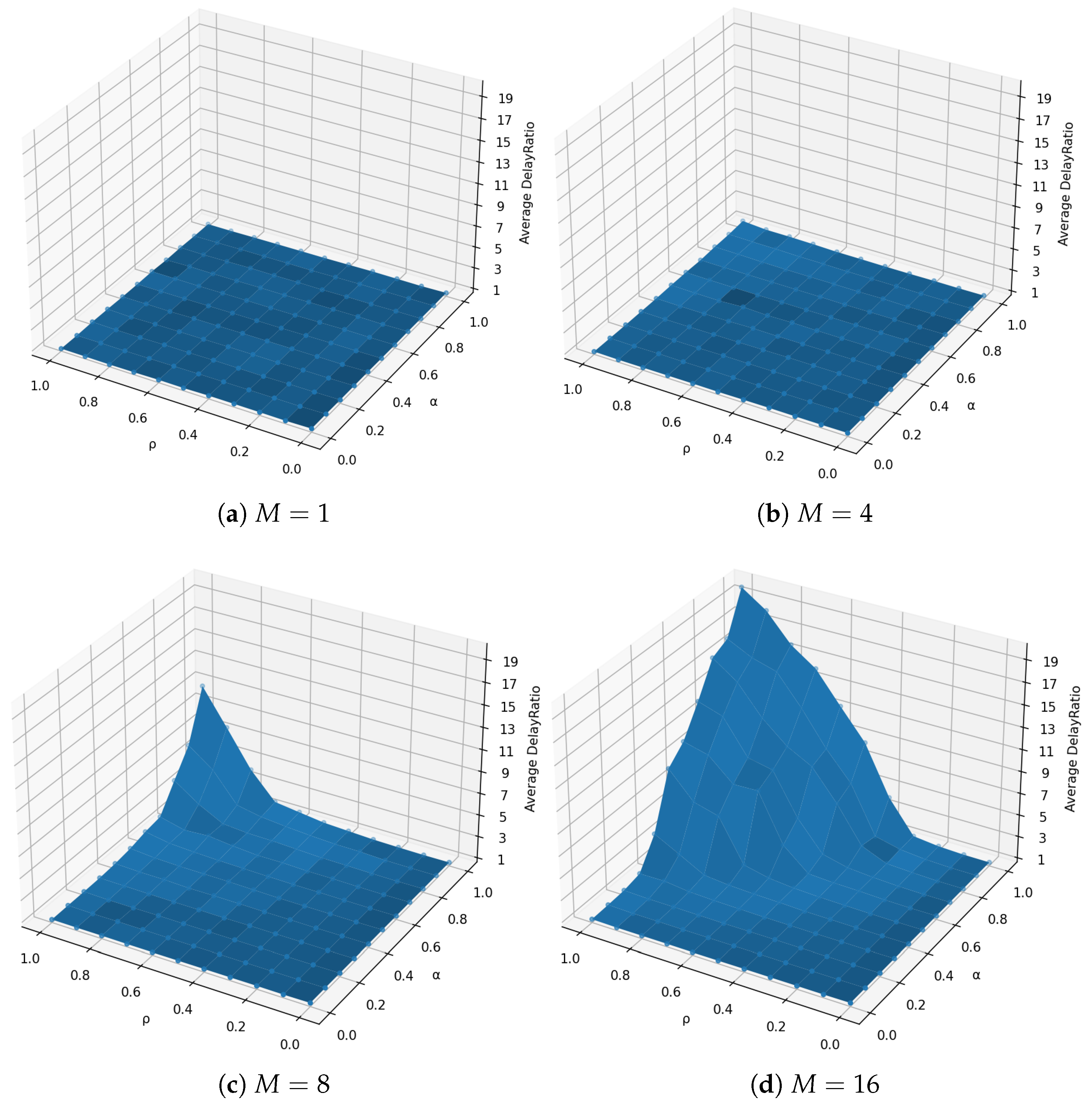

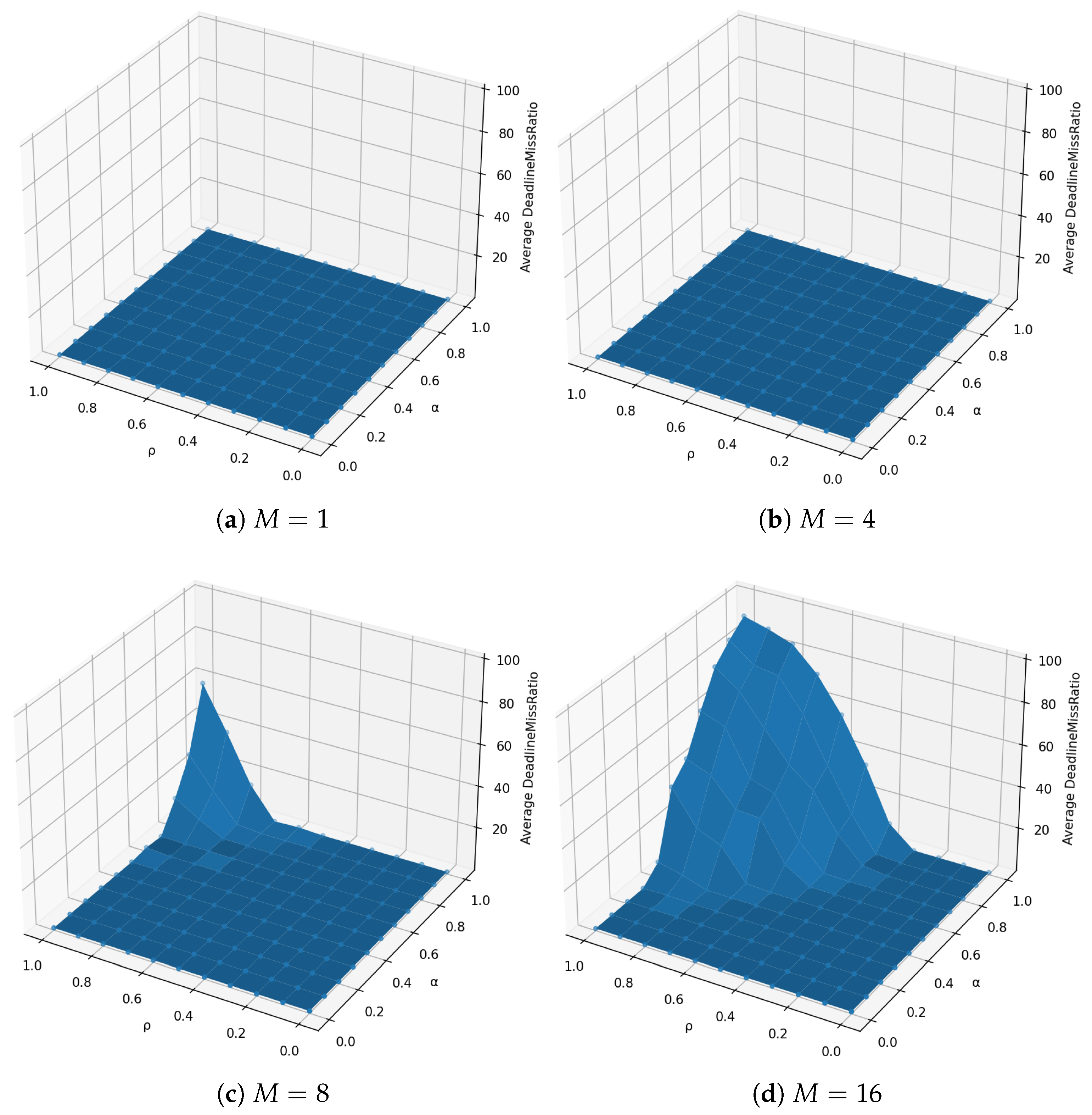

Figure 4 compares the average delay ratio under different values of M (active cores) using three-dimensional plots. The observations are as follows.

Observation 1: The benchmark framework can correctly generate tasks from the specified model parameters. In the single-core configuration (M = 1), no inter-core contention is expected, so the overall timing behavior should correspond purely to the generated fragments. The workloads exhibit an average delay ratio close to 1.0, which means that the execution times of the task sets are consistent with the target WCET parameter. Although it is hardly noticeable in the figure, some task sets actually have a delay ratio of around 0.9 (i.e., 10% faster than the WCET). This is expected, since the WCETs of the baseline fragments are measured in a cold state, whereas the real tasks can benefit from cache hits.

Observation 2: Linux shows excellent scalability when the number of active cores is small. In the quad-core configuration (M = 4), the average delay ratio remains close to the single-core baseline, which indicates that the performance of the target system scales efficiently with minimal overhead. The maximum delay ratio does not exceed 1.2, even under the most heavily contended condition. This result suggests that the current fine-grained locking design of the Linux kernel can effectively mitigate the impact of inter-core contention, at least for the many budget embedded systems with four or fewer cores, which may give Linux an advantage over RTOSs that use coarse-grained locks.

Observation 3: Performance begins to suffer from heavy synchronization workloads as the number of cores increases further. In the 8-core configuration (M = 8), the delay is still negligible in most cases. However, unlike in the quad-core setting, the ratio rises sharply to about 10 under synchronization-intensive conditions. This indicates that, even though fine-grained locking is employed, other factors (e.g., context switching, memory bus bandwidth, cache coherency) start to dominate the delay in execution time when tasks on multiple cores frequently invoke system calls for synchronization. Even so, the overall scalability remains acceptable, since many systems do not require a large amount of synchronization.

Observation 4: Linux encounters severe performance bottlenecks in many-core environments. In the 16-core configuration (M = 16), significant delays emerge even under moderate contention levels. This suggests that inter-core interference, shared resource contention, and OS-level synchronization overhead collectively limit scalability beyond a certain point. The system’s efficiency thus declines rapidly in the many-core regime, revealing inherent bottlenecks in the operating system and hardware components.

In addition to the delays, the average deadline miss ratio is also a key metric for evaluating real-time embedded systems. It is shown in Figure 5 for comparison.

Observation 5: Techniques for mitigating cross-core interference are important for supporting real-time systems. The trend is very similar to that of the average delay ratio: deadline misses begin to appear under extremely contended scenarios with 8 cores. However, in high-end embedded systems with many cores, most cores are typically used for computing-intensive tasks, while the share of critical tasks with firm deadlines is relatively small. It is therefore common practice to ensure real-time performance by statically dedicating a few cores to those critical tasks. If we allocate up to 4 cores to them to minimize deadline misses, the computing-intensive workloads can still run on at least 12 cores of our target system, which is already enough to cause performance degradation according to Observations 3 and 4. Under those circumstances, techniques that prevent such degradation from interfering with the critical tasks become key to meeting deadline requirements, as also noted in some recent studies [16].

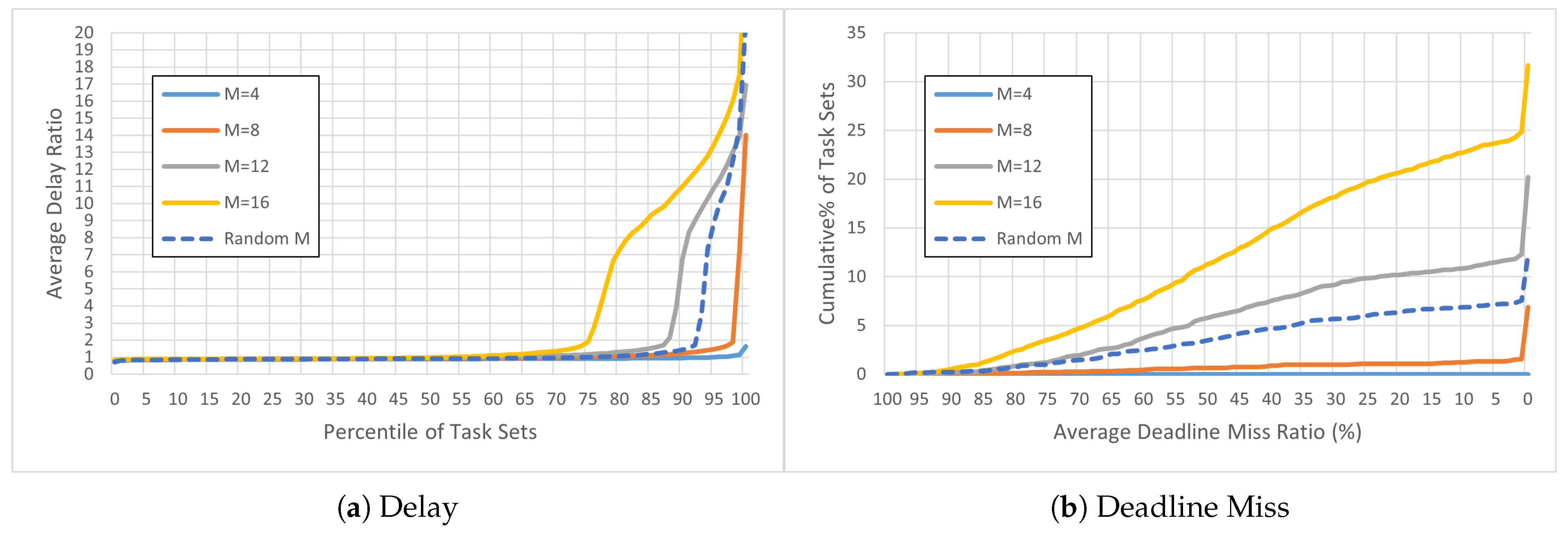

Figure 6 summarizes the evaluation results measured in Mode 2 (i.e., randomly picking parameters to generate task sets), by plotting the distributions of delay ratio and deadline miss ratio. For delay ratio, we show the percentile to emphasize how the tendency changes. For deadline miss ratio, the cumulative values are used since they are important to the design of real-time systems.
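The two summaries used for Mode 2 can be computed as in the sketch below. It assumes the nearest-rank definition of a percentile and a simple "fraction at or below a threshold" for the cumulative values; the text does not specify which definitions the tool uses, and the function names are hypothetical.

```python
import math
from typing import List


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100 (assumed definition)."""
    ordered = sorted(values)
    rank = math.ceil(p / 100.0 * len(ordered))
    return ordered[max(rank, 1) - 1]


def cumulative_fraction(values: List[float], threshold: float) -> float:
    """Fraction of task sets whose metric is at or below the threshold."""
    return sum(1 for value in values if value <= threshold) / len(values)
```

With per-task-set delay ratios as input, `percentile` gives the tail behavior, while `cumulative_fraction` applied to deadline miss ratios shows how many task sets stay within a given miss budget.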

Observation 6: The benchmark framework provides an intuitive way to visualize the overall performance characteristics of the target system. While Mode 1 supports detailed analysis by producing 3D plots for each core count, it has two main limitations: (1) in real applications, it is rare for all tasks in a task set to share the same synchronization share and access-mode mix, and (2) it is hard to summarize the overall performance from many individual graphs. Mode 2, on the other hand, enables large-scale randomized experiments across various parameters and can present the results in a single figure. It not only provides a comprehensive understanding of the current system under diverse workload conditions, but is also useful for comparing overall performance trends on different platforms.

4.3. Comparison of Different Linux Configurations

In this section, we evaluate the target system under three kernel configurations: Linux 5.15 PREEMPT, Linux 6.12 PREEMPT and Linux 6.12 PREEMPT_RT. The purpose is to examine the practical changes in multi-core performance caused by version upgrades and preemption modes, which, to the best of our knowledge, have rarely been explored in prior studies.

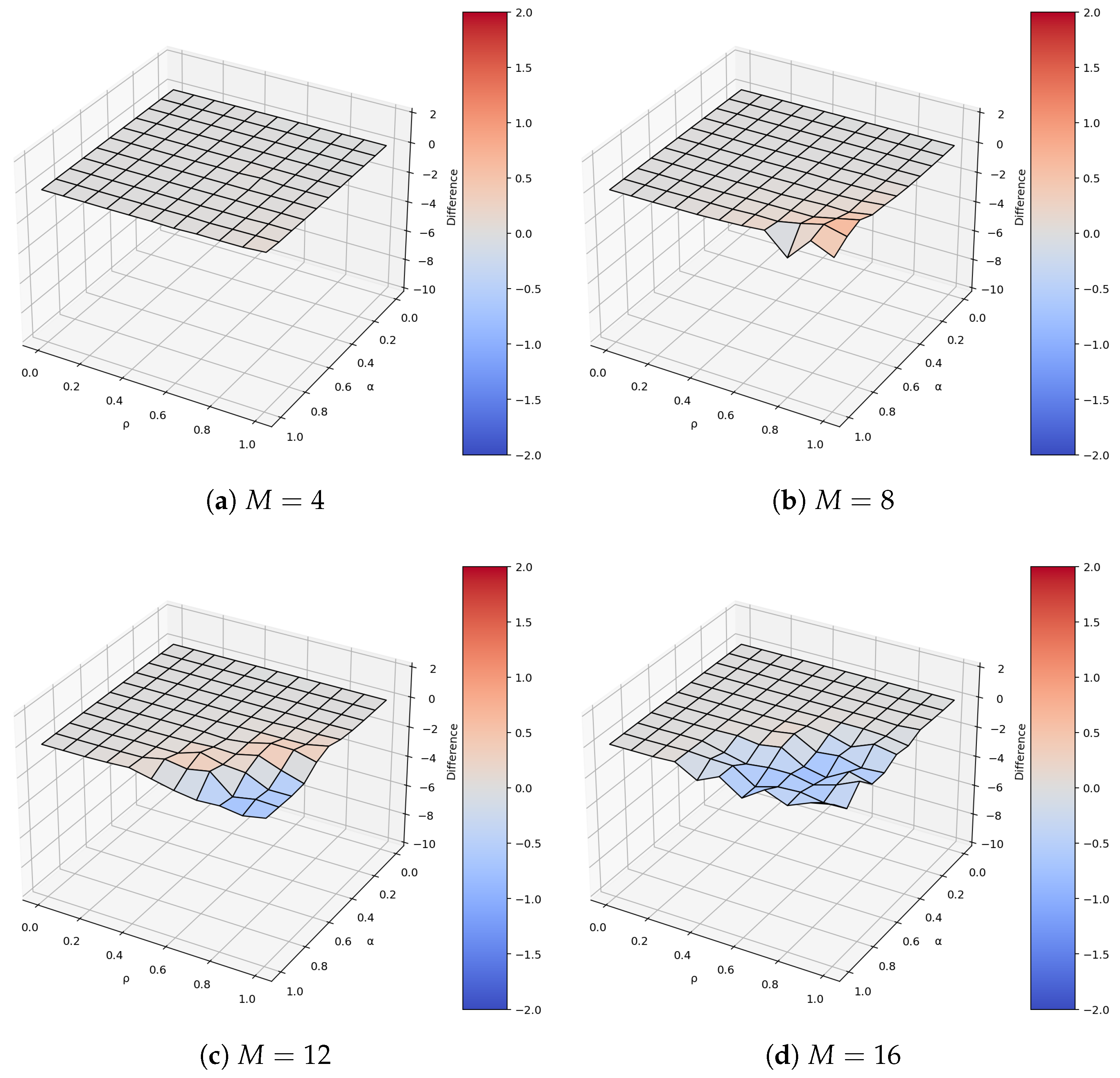

We first compare Linux 5.15 PREEMPT with Linux 6.12 PREEMPT to examine the impact of the version upgrade on multi-core performance, using heatmaps to capture the differences across grid nodes, as shown in Figure 7.
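The per-node comparison behind such a difference heatmap can be sketched as follows. This is a minimal example under stated assumptions: the grid axes, the use of a relative (rather than absolute) difference, and all sample values are hypothetical and are not taken from the paper's tooling or results.

```python
def relative_difference(baseline, candidate):
    """Per-node (candidate - baseline) / baseline for two equally shaped grids."""
    if len(baseline) != len(candidate):
        raise ValueError("grids must have the same number of rows")
    result = []
    for row_b, row_c in zip(baseline, candidate):
        if len(row_b) != len(row_c):
            raise ValueError("grid rows must have the same length")
        result.append([(c - b) / b for b, c in zip(row_b, row_c)])
    return result


# Hypothetical average delay ratios on a small grid of parameter nodes
# (rows and columns stand for two swept parameters); not measured data.
kernel_a = [[1.0, 1.2, 2.0],
            [1.1, 1.6, 4.0]]
kernel_b = [[1.0, 1.3, 1.8],
            [1.1, 1.7, 3.0]]

diff = relative_difference(kernel_a, kernel_b)
for row in diff:
    # Negative values mean kernel_b shows a lower delay ratio at that node.
    print("  ".join(f"{value:+.2%}" for value in row))
```

Each cell of the resulting grid corresponds to one heatmap node, so regions where one kernel consistently wins or loses stand out at a glance.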

Observation 7: Linux 6.12 shows a minor improvement over Linux 5.15 in many-core scenarios. When the number of cores is relatively small (), contention remains limited and the two kernels exhibit nearly identical behavior, with Linux 6.12 PREEMPT performing slightly worse. This minor degradation may come from the increased overhead associated with the more complex design of the newer kernel. As the core count increases, however, Linux 6.12 PREEMPT shows a modest advantage, suggesting that its multi-core mechanisms are more effective.

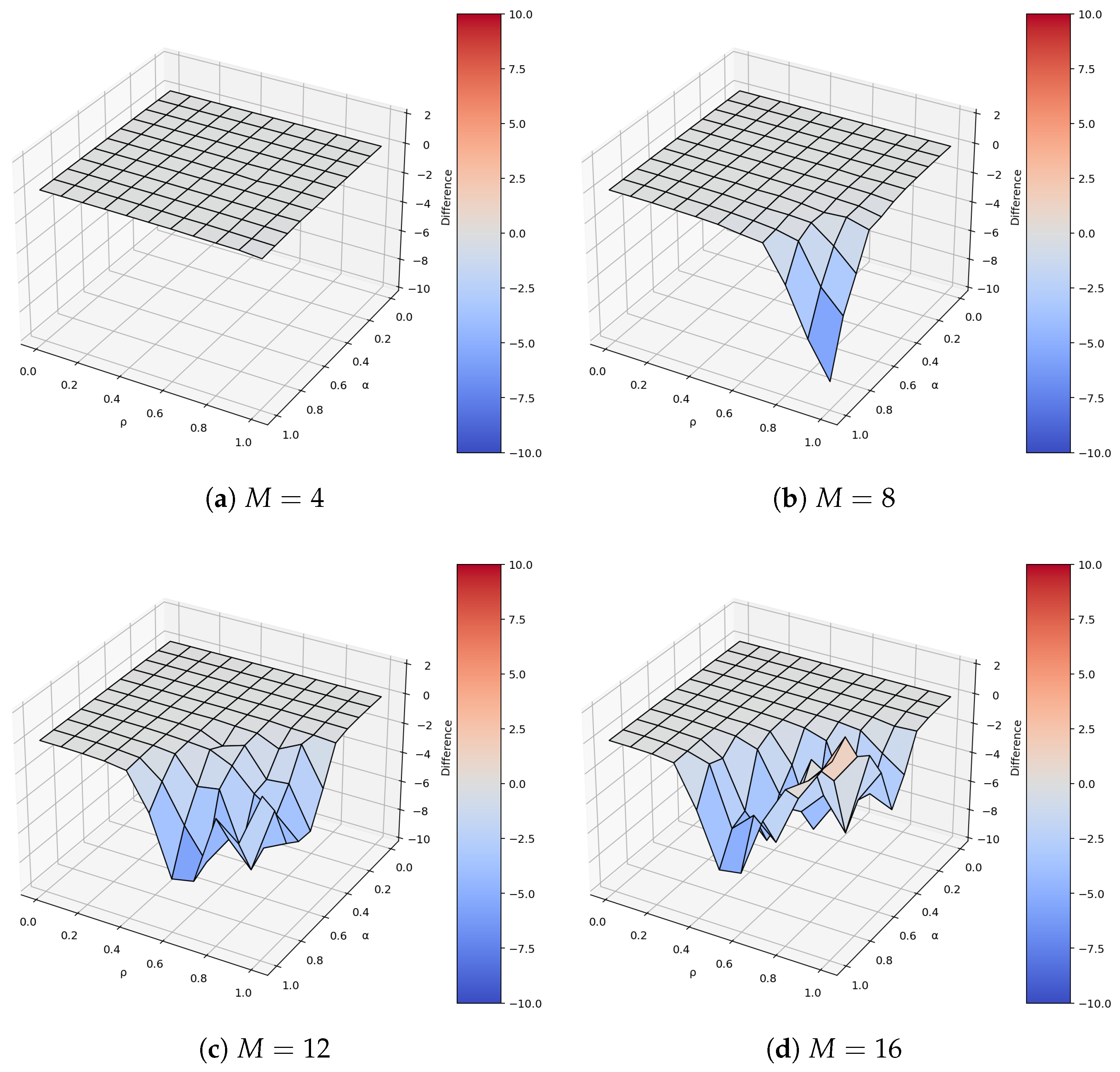

We then compare Linux 6.12 PREEMPT with Linux 6.12 PREEMPT_RT to quantify the effect of the popular real-time patches; the results are shown in Figure 8.

Observation 8: The PREEMPT_RT kernel remarkably outperforms PREEMPT under contention. Linux 6.12 PREEMPT_RT beats Linux 6.12 PREEMPT across most contention scenarios, demonstrating substantially lower delays. However, in the extreme 16-core case, where contention intensity reaches its maximum, PREEMPT_RT performs slightly worse than PREEMPT. This degradation is likely due to the overhead introduced by the real-time patches becoming dominant once contention is fully saturated. Nevertheless, taken as a whole, PREEMPT_RT exhibits significantly better performance in multi-core contention scenarios and provides clear advantages over its non-RT counterpart.

Finally, we use Mode 2 to generate 5000 task sets in a fully randomized manner, and employ these identical task sets to compare the overall performance of these three configurations.

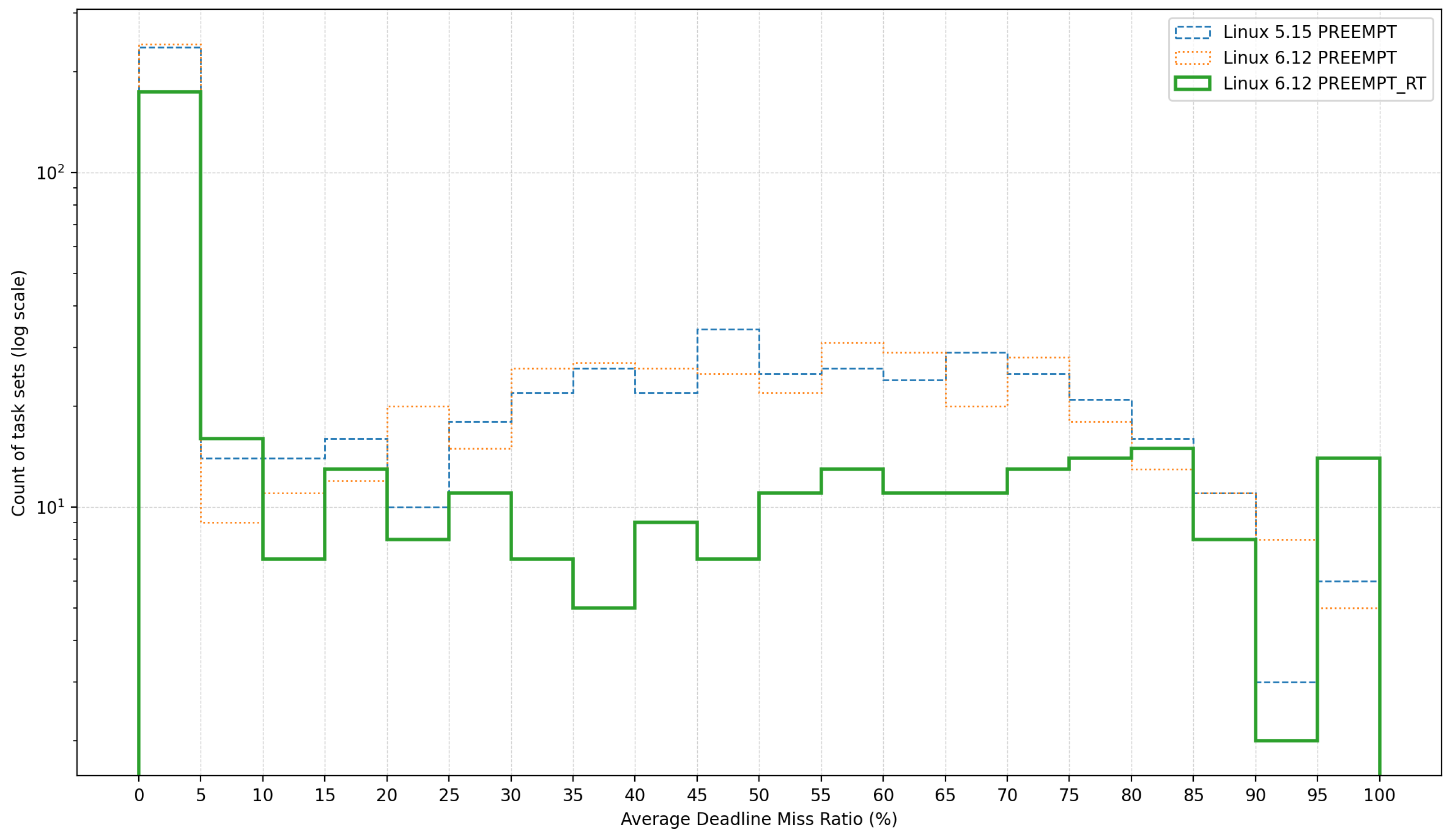

Figure 9 presents the distributions of the deadline miss ratio, with zero values (i.e., task executions that did not miss their deadlines) excluded.

Observation 9: The PREEMPT_RT kernel effectively reduces overall deadline misses in most cases. Across the 5000 generated task sets, we observe that in the vast majority of cases, specifically where the ratio is below 95%, the chance of a deadline miss is noticeably lower with PREEMPT_RT than with the other two configurations. This provides further evidence of the superior performance of PREEMPT_RT under non-extreme conditions. However, in the extreme tail of the distribution, where the ratio exceeds 95%, PREEMPT_RT exhibits a higher frequency of deadline misses. This finding aligns with the earlier observations, indicating that under saturated contention the intrinsic overhead of the real-time patches may dominate, leading to increased delays.

4.4. Additional Experiments

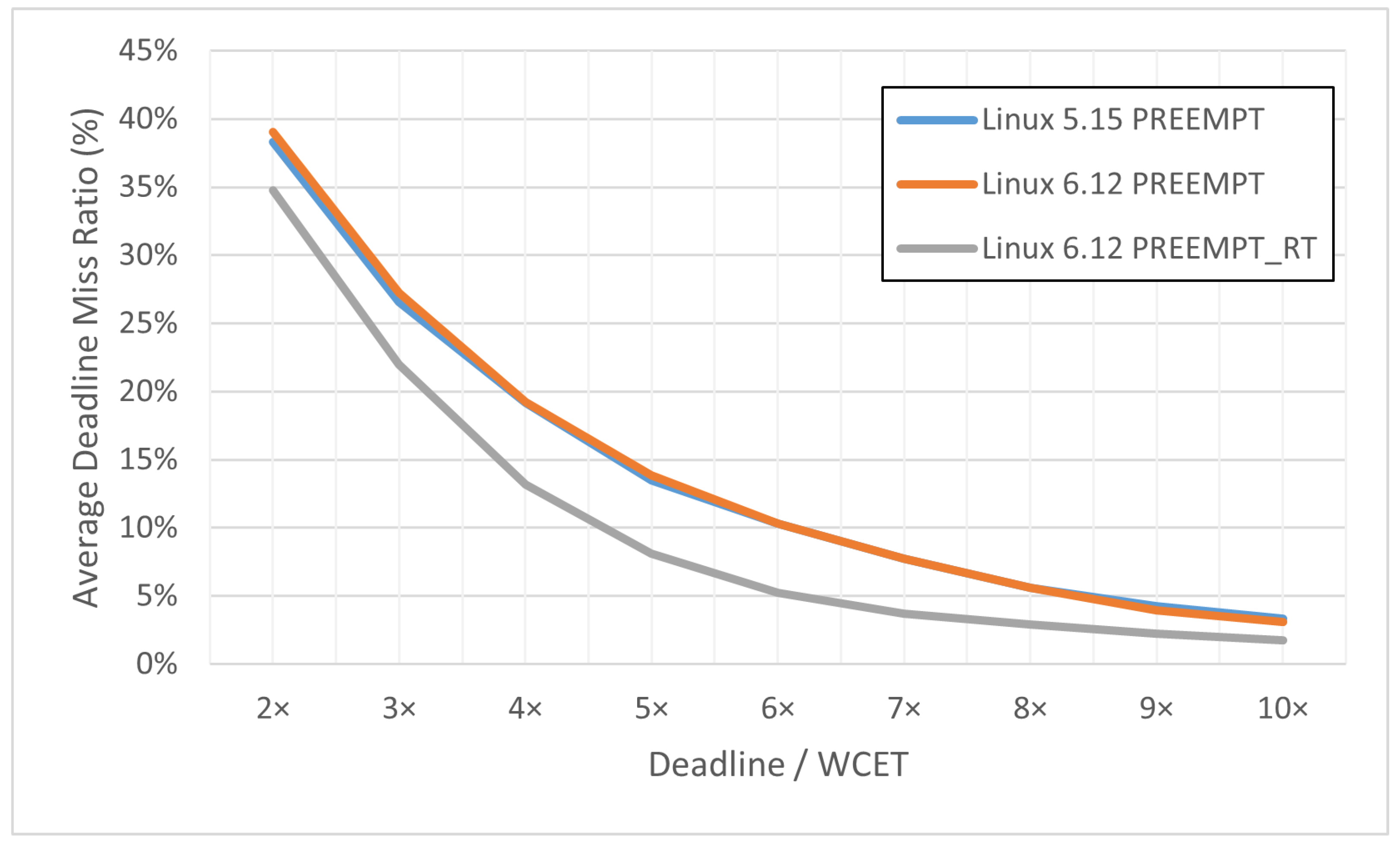

As described in Section 3.3, the default deadline parameter is set to ten times the WCET (i.e., 10% utilization). For reference, we also compared the deadline miss ratio of the three kernel configurations using deadline parameters from 2× to 10× under Mode 2b, with all 16 cores active and 5000 task sets generated for each parameter level. According to the results summarized in Figure 10, all configurations show a significant increase in deadline miss ratio as the utilization level rises. When the deadline parameter is 2× WCET (i.e., 50% utilization), even PREEMPT_RT misses 35% of the deadlines across all jobs. This indicates that, at least in our 16-core environment, current Linux has difficulty achieving reasonable real-time performance if all cores run tasks with relatively tight deadline requirements. Meanwhile, under the 10× deadline parameter setting, all three configurations show a miss rate below 4%, which should be acceptable for many soft real-time use cases. Therefore, we believe 10× is a good default value for generating useful task sets to evaluate systems with a large number of cores.
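The relation between the deadline parameter and the utilization level quoted above can be made explicit with a short sketch. It assumes that utilization is computed as WCET divided by the deadline, so a multiplier of k gives 1/k; the function name and the sample WCET value are hypothetical.

```python
def utilization(wcet, deadline_multiplier):
    """Per-task utilization when the deadline is deadline_multiplier x WCET."""
    if wcet <= 0 or deadline_multiplier <= 0:
        raise ValueError("wcet and deadline_multiplier must be positive")
    deadline = deadline_multiplier * wcet
    return wcet / deadline


# Hypothetical WCET in microseconds; the result depends only on the multiplier.
wcet_us = 500.0
for k in range(2, 11):
    print(f"deadline = {k}x WCET -> utilization = {utilization(wcet_us, k):.1%}")
```

Under this assumption, sweeping the multiplier from 2× to 10× corresponds to lowering the utilization from 50% to 10%.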

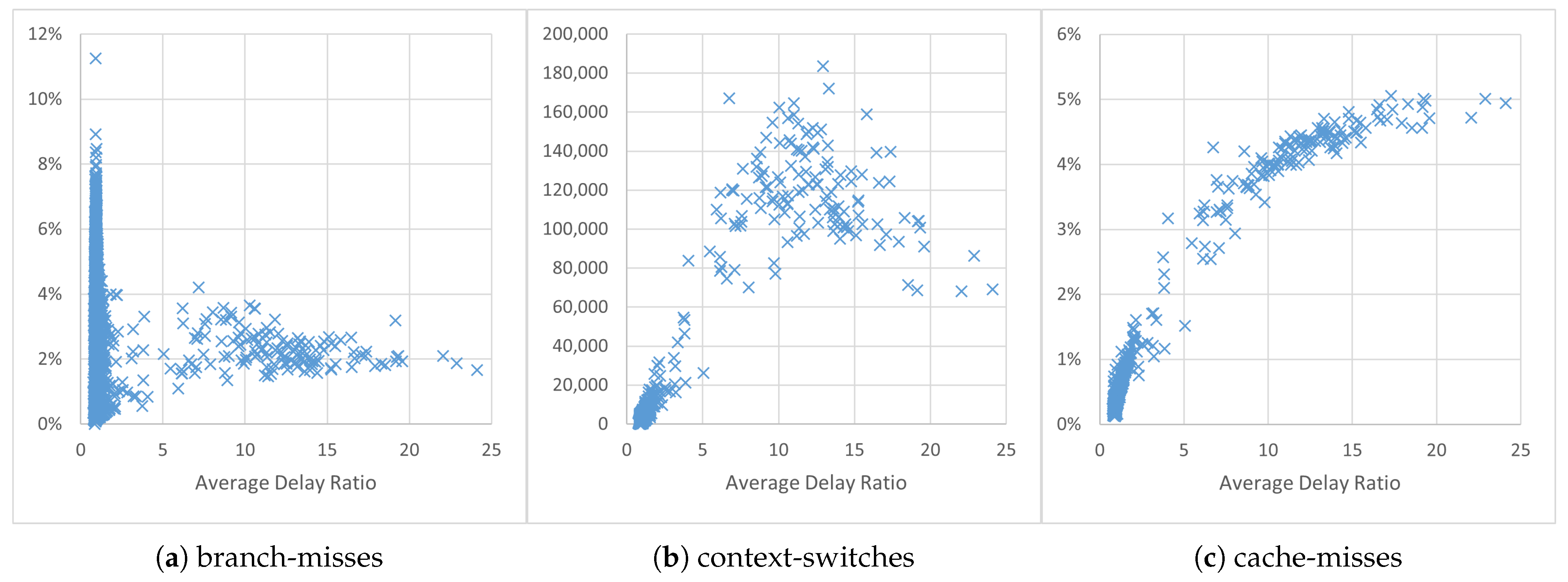

The purpose of our benchmark tool is to generate task sets that can trigger performance bottlenecks due to various hardware and software factors; it does not itself integrate functionality for analyzing such factors. However, users can always profile these task sets with well-established tools such as the perf command for detailed analysis. To demonstrate this approach, we used perf to record software events and hardware performance counters for over 2000 task sets.

Figure 11 plots three events (branch-misses, context-switches and cache-misses), each showing a different correlation pattern with the delay ratio. The results indicate that branch-misses events have almost no impact on performance, while cache-misses events show a clear logarithmic-like correlation. Context-switches events can introduce a moderate amount of delay but are less relevant to task sets with very high delay. This experiment confirms that our benchmark tool can easily be combined with existing profilers to analyze the factors causing performance bottlenecks.
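One simple way to check for a logarithmic-like relation of this kind is to compare the Pearson correlation of the delay ratio against the raw event counts with its correlation against the log-transformed counts. The sketch below does this on made-up numbers; the helper function, the choice of Pearson correlation, and the sample data are assumptions for illustration and are not the analysis procedure or data used in the paper.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical per-task-set samples; not values recorded with perf.
cache_misses = [1e3, 1e4, 1e5, 1e6, 1e7]
delay_ratio = [1.0, 1.5, 2.0, 2.5, 3.0]

linear_r = pearson(cache_misses, delay_ratio)
log_r = pearson([math.log10(c) for c in cache_misses], delay_ratio)

# A much stronger correlation after the log transform suggests that the
# delay ratio grows roughly with the logarithm of the event count.
print(f"r (raw counts) = {linear_r:.3f}")
print(f"r (log10 counts) = {log_r:.3f}")
```

An event with almost no impact, as reported for branch-misses, would instead yield a coefficient close to zero under both views.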

5. Discussion

This study has proposed a benchmark methodology and framework for embedded systems that integrates realistic task-set modeling with explicit multi-core contention representation. Unlike conventional benchmark suites, which mainly provide fixed sequential or application-level workloads, our approach provides a controllable means to reproduce and analyze inter-core contention behaviors under realistic scenarios, and is especially suitable for soft real-time systems such as compute-dominated IoT devices with mixed-criticality and core-shielding designs. By embedding OS-level synchronization primitives into parameterized task sets, the framework captures the combined effects of computation and contention, bridging the gap between theoretical models and executable performance evaluation workloads.

Through experiments on the 16-core NXP LX2160 SoC, we have applied this benchmark to assess the scalability and real-time characteristics of three representative Linux configurations (Linux 5.15 PREEMPT, Linux 6.12 PREEMPT and Linux 6.12 PREEMPT_RT). The results revealed several key insights. First, the benchmark reliably created task sets whose performance trends were consistent with the designed parameters, showing high reproducibility of the random generator. Second, Linux exhibited strong scalability up to moderate core counts, but experienced increasing performance degradation in heavy synchronization scenarios, particularly in many-core configurations. Finally, PREEMPT_RT remarkably reduced delays and deadline misses compared to the non-RT kernel configurations, confirming its effectiveness in mitigating interference and contention, though at the cost of slight overhead under saturated workloads.

An additional strength of the framework lies in its portability and extensibility. Because the task model itself is platform-agnostic by design, it can be easily migrated to other environments by substituting the OS-specific fragments and the system template. This flexibility enables not only cross-version Linux comparisons but also potential extensions to RTOS-based platforms. On a POSIX-like RTOS, the framework should run after simply replacing the Linux-dependent service calls in the fragments with equivalent ones. For RTOSs with other API specifications, the interfaces for task creation, multi-core scheduler parameters, resource allocation and timer control must also be implemented. Such cross-OS evaluations can provide more valuable insights into the impact of factors like locking granularity, scheduling policies and heterogeneous architectures on real-time performance.

Nevertheless, several limitations remain in this work. The current task-set model focuses on OS-level contention and does not directly incorporate detailed micro-architectural factors such as cache coherence, bus arbitration, or memory controller latency. Moreover, while the evaluation across Linux versions has uncovered performance bottlenecks, root cause analysis (e.g., whether they stem from kernel design or hardware limits) is still required to address them. Finally, the number and diversity of benchmark fragments should be expanded to further enhance the generality of the evaluation results.

Future work will address these limitations in the following directions. We first plan to use performance counters to analyze the relation between benchmark workloads and hardware events, and to refine the task-set model and generator to integrate these hardware factors. With more performance metrics added to the framework, we can further improve the analysis and visualization tools to support rapid identification of the root causes of performance issues. By collecting and characterizing trace data from industrial use cases, we can expand the fragment pools with more representative workloads. To enhance the usefulness of our framework, we will also port it to RTOS-based platforms, since they are preferred over Linux in many energy-efficient and mission-critical embedded systems.