1. Introduction

The rapid advancement of Artificial Intelligence (AI) is transforming scientific disciplines. Large Language Models (LLMs) and their multimodal counterparts (LMMs) have demonstrated remarkable capabilities in fields like natural sciences and medicine [1,2]. While the humanities, including archaeology, have begun exploring these tools, applications often remain exploratory and fragmented, lacking a cohesive strategic vision for deep integration [3,4].

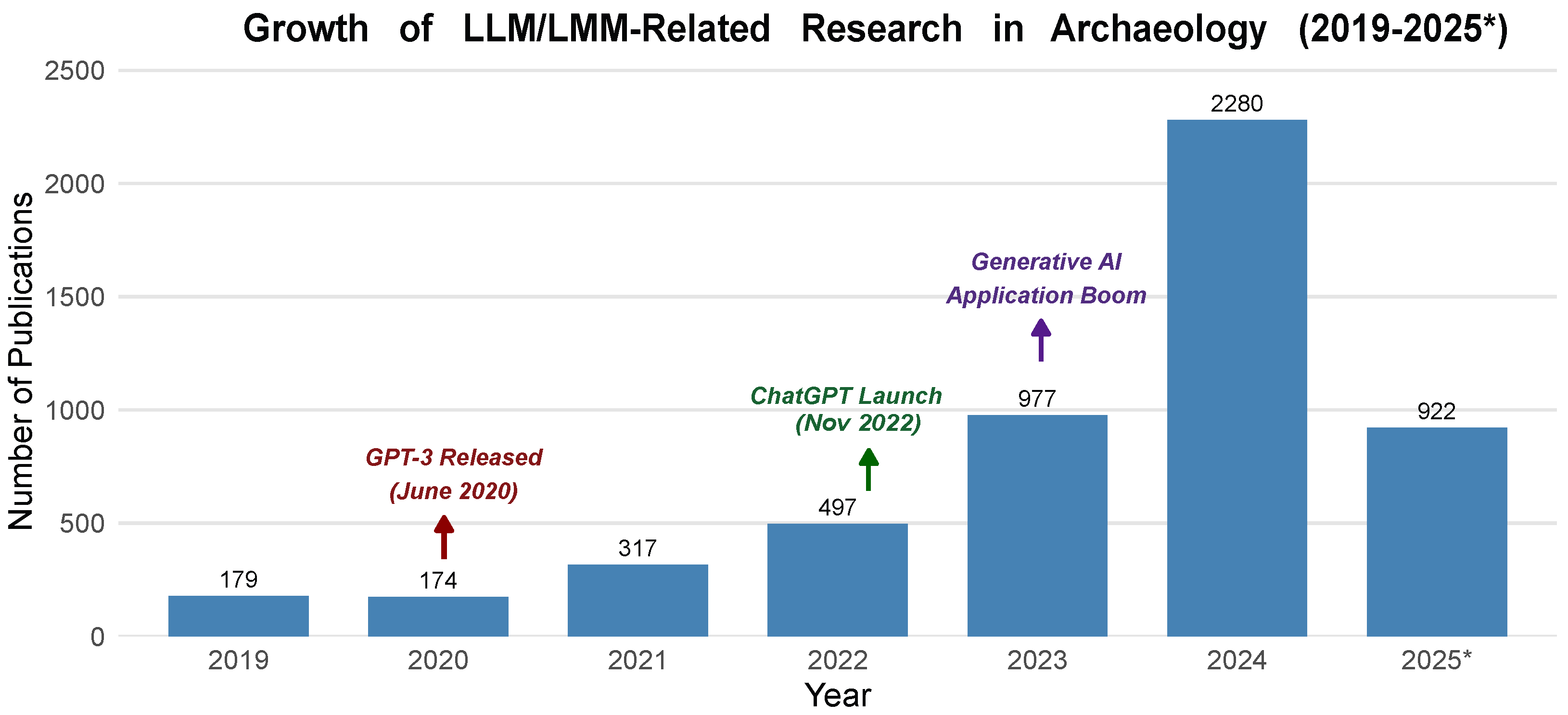

The growing academic interest in this intersection is evident in the publication landscape (Figure 1). Foundational developments like the Transformer architecture [5] and influential pre-trained models such as BERT [6] in 2018 paved the way for initial explorations from 2019. The release of powerful LLMs like GPT-3 in 2020 further catalyzed research. However, the public launch of ChatGPT in late 2022 triggered a significant surge in research applying LLMs, LMMs, and generative AI to archaeological questions, a trend accelerating through 2023 and 2024.

To address this rapid escalation, this paper systematically reviews recent (2023+) LLM/LMM applications in archaeology, critically analyses the unique challenges and opportunities, and examines technical approaches that make these technologies increasingly accessible to the archaeological community. Unlike earlier AI applications in archaeology that primarily utilized conventional machine learning approaches (e.g., CNNs for image classification), this review focuses specifically on Transformer-based language and multimodal models, clarifying their distinct capabilities, limitations, and appropriate use cases for cultural heritage applications.

2. Review Methodology

This comprehensive review of LLM/LMM applications in archaeology employed a systematic literature search strategy to ensure broad coverage across the rapidly expanding field. Our search encompassed multiple academic databases including Google Scholar, Web of Science, Scopus, and arXiv, combined with discipline-specific repositories such as the Digital Archaeological Record (tDAR) and Archaeology Data Service (ADS). Search strategies integrated archaeological terminology with AI-specific keywords using Boolean operators: (archaeology OR archaeological OR heritage OR palaeography) AND (“large language model” OR LLM OR “transformer model” OR GPT OR BERT OR “multimodal model” OR LMM OR “generative AI”). The results were supplemented through cross-referencing of bibliographies from highly cited review articles and manual searches for seminal papers on specific archaeological LLM/LMM applications identified during the review process.
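To make the Boolean structure of the search string concrete, the following minimal sketch applies the same (domain term) AND (AI term) logic to a title or abstract. The function names and the optional plural "s" are assumptions made for illustration; each database applies its own query syntax and stemming rules, so this is not a reproduction of the actual searches.

```python
import re

# Term groups copied from the search string quoted above.
DOMAIN_TERMS = ["archaeology", "archaeological", "heritage", "palaeography"]
AI_TERMS = [
    "large language model", "LLM", "transformer model", "GPT", "BERT",
    "multimodal model", "LMM", "generative AI",
]


def _contains_any(text: str, terms: list[str]) -> bool:
    """True if any term occurs as a whole word or phrase (case-insensitive).

    An optional trailing "s" is allowed so that plurals such as "LLMs" match;
    this is an illustrative assumption, not part of the original query.
    """
    for term in terms:
        pattern = r"\b" + re.escape(term) + r"s?\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            return True
    return False


def matches_query(text: str) -> bool:
    """Apply (domain term) AND (AI term) to a title or abstract."""
    return _contains_any(text, DOMAIN_TERMS) and _contains_any(text, AI_TERMS)
```

A record is kept for manual screening only when both term groups are represented; the inclusion criteria below would still have to be checked by a human reader.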

The study inclusion criteria specified that reviewed research must (1) employ Transformer-based language models or multimodal models (with traditional machine learning approaches discussed primarily for comparative context); (2) address archaeological research questions, heritage conservation applications, or cultural heritage challenges; (3) be published or preprinted between 2019 and 2025, with an emphasis on 2023+ applications reflecting the ChatGPT-driven surge in deployments; (4) provide sufficient methodological detail to permit the assessment of applicability, limitations, and archaeological relevance.

Rather than organizing applications by AI technique, we structured our analysis around archaeological data types and research questions (textual analysis, bioarchaeological data, material culture, spatial analysis, knowledge management), reflecting how archaeologists encounter and deploy these technologies. This thematic organization enables readers to identify relevant applications for their specific research domains. We recognize that this review is not exhaustive—the field is expanding rapidly—but rather represents a strategically comprehensive snapshot of demonstrated applications as of mid-2025, with particular attention to 2023–2024 publications where the most active development has occurred.

3. LLM/LMM Technologies for Archaeological Applications: Capabilities, Adaptation Methods, and Recent Advances

3.1. Transformer-Based Models: Architecture and Core Capabilities

Contemporary Large Language Models (LLMs) and Multimodal Models (LMMs) are built upon the Transformer architecture, introduced by Vaswani et al. in 2017 [5]. This architecture employs self-attention mechanisms that compute pairwise interactions between all elements in an input sequence simultaneously, enabling the superior capture of long-range dependencies and complex contextual relationships compared with earlier neural networks. For archaeology, these capabilities are particularly valuable for integrating heterogeneous evidence types, understanding lengthy excavation reports, and connecting dispersed references across historical texts [7].
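As a compact illustration of the self-attention computation described above, the standard scaled dot-product form can be written as follows. Here X is the n × d matrix of input token representations, W_Q, W_K, and W_V are learned projection matrices, and d_k is the dimension of the key vectors; the symbols are introduced here for illustration and do not appear elsewhere in this review.

```latex
\[
Q = XW_Q,\quad K = XW_K,\quad V = XW_V,\qquad
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V
\]
```

The n × n matrix QK^T holds one score for every pair of positions, which is what allows a token in one part of a long excavation report to attend directly to a token in another.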

These models acquire language understanding and reasoning capabilities through pre-training on massive text corpora, developing rich representations of linguistic structure and world knowledge. For archaeology, their evolution from text-only models like BERT and GPT-3 to contemporary multimodal variants capable of processing text, images, and 3D data offers significant potential for integrating heterogeneous archaeological evidence. The key capabilities for archaeological applications include the following:

Deep Language Understanding and Long-Context Processing: LLMs excel at contextual understanding, ambiguity resolution, and processing extensive text sequences [8], crucial for analysing lengthy archaeological documents, excavation reports, and historical texts. Recent advances like LongRoPE [9] have extended context windows beyond 2 million tokens, enabling the processing of entire excavation archives in a single query.

Knowledge Extraction, Reasoning, and Generation: These models can automatically extract structured information (entities, relations, events) from unstructured archaeological texts, aiding knowledge graph or database construction. Their embedded knowledge and reasoning abilities can supplement incomplete information, generate hypotheses, draft preliminary reports, or summarise extensive literature [10,11], potentially accelerating research cycles.

Multimodal Information Fusion: LMMs can simultaneously process and correlate information from disparate sources like textual descriptions, artifact images, and 3D site models, which is invaluable for archaeology where interpretations often rely on integrating heterogeneous evidence [12,13,14].

Cross-Lingual Processing and Few-Shot Learning: Multilingual support aids in processing the diverse global archaeological literature and facilitating cross-cultural comparative studies [15]. Strong few-shot/zero-shot learning capabilities allow rapid adaptation to new tasks or specific archaeological contexts where labelled data is inherently scarce [16,17].

Interactive Research and Intelligent Agents: Natural language dialogue support enables intuitive exploratory data analysis and human–computer collaboration, lowering technical barriers for non-computational archaeologists [4,18]. LLMs can also power intelligent agents for automating complex, multi-step archaeological research tasks.

3.2. Domain Adaptation Through Fine-Tuning: Methods and Archaeological Applications

While pre-trained Large Language Models possess broad general knowledge spanning diverse topics, languages, and domains, acquired through training on massive web-scale corpora [6,7], they often lack the highly specialized domain knowledge, technical terminology, and discipline-specific reasoning patterns required for effective deployment in specialized scientific applications like archaeology. Archaeological discourse employs extensive specialized vocabularies (typological categories, stratigraphic terminology, chronological periods, cultural designations) that may be under-represented or incorrectly represented in general web text [10], and archaeological reasoning requires understanding specialized methodological frameworks (stratigraphic analysis, seriation, spatial patterning, formation processes) that differ substantially from common-sense reasoning patterns [3]. Moreover, pre-trained models may harbor factual errors, outdated information, or cultural biases problematic for archaeological interpretation [19]. Consequently, domain adaptation through various fine-tuning strategies has become essential for tailoring general-purpose LLMs to function effectively within cultural heritage contexts [20,21], enabling them to understand archaeological terminology correctly, reason appropriately about archaeological problems, and generate outputs aligned with disciplinary standards and expert expectations. This section systematically reviews key fine-tuning methodological approaches developed in the broader machine learning community and critically examines their archaeological relevance, practical applicability, and demonstrated effectiveness based on recent archaeological implementations.

3.2.1. Supervised Fine-Tuning and Reinforcement Learning from Human Feedback

Model performance is typically enhanced through Supervised Fine-Tuning (SFT), where models are trained on domain-specific labeled datasets, and Reinforcement Learning from Human Feedback (RLHF) [22], which aligns model outputs with human preferences and expert judgment. For archaeology, SFT on curated corpora of excavation reports, artifact descriptions, and the scholarly literature can significantly improve terminology recognition and contextual understanding [21].

3.2.2. Parameter-Efficient Fine-Tuning (PEFT) and Low-Rank Adaptation (LoRA)

The full fine-tuning of large models requires substantial computational resources, creating barriers for many archaeological institutions. Parameter-Efficient Fine-Tuning (PEFT) techniques address this by updating only a small subset of model parameters, dramatically reducing the computational costs and memory requirements [20].

Low-Rank Adaptation (LoRA) [23] is a particularly influential PEFT method that freezes pre-trained model weights and injects trainable rank decomposition matrices into each layer of the Transformer architecture. This approach has proven highly effective for domain adaptation: Cullhed et al. [21] demonstrated that instruct-tuning a LLaMA model with LoRA achieved state-of-the-art performance in Greek text restoration (22.5% Character Error Rate), geographical attribution (75.0% accuracy), and dating (median error of 1 year), even handling scriptio continua effectively. Recent developments include ALoRA [24], which dynamically allocates ranks across layers for improved efficiency.
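The low-rank idea can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the implementation used in any of the cited studies: the class name `LoRALinear`, the layer width of 64, the rank of 4, and the scaling factor alpha / rank are choices made here for the example. The frozen weight W is left untouched, and only the two small matrices A and B would be trained.

```python
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, w, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(w), len(w[0])
        self.w = w  # frozen pre-trained weights, never updated
        # A starts with small random values and B with zeros, so the
        # adapted layer initially reproduces the frozen layer exactly.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.w, x)
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * u for h, u in zip(base, update)]

    def trainable_params(self):
        """Number of entries in A and B, the only parameters that would be trained."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


# Illustrative sizes only: a 64 x 64 identity matrix stands in for a real layer.
d = 64
w = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
layer = LoRALinear(w, rank=4)
x = [1.0] * d
```

With these sizes the frozen matrix has 64 × 64 = 4096 entries, while A and B together have 4 × 64 + 64 × 4 = 512, which is where the savings in memory and compute come from.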

3.2.3. Small Language Models and Local Deployment

Contrary to assumptions that LLM deployment requires extensive computational infrastructure, recent developments in Small Language Models (SLMs) have made local execution feasible even on consumer hardware. Phi-3-mini [25], a 3.8 billion parameter model trained on 3.3 trillion tokens, rivals much larger models (achieving 69% on MMLU benchmarks) while being deployable on smartphones. This democratizes access to LLM capabilities for archaeological fieldwork and institutions with limited resources [25].
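A rough back-of-the-envelope calculation shows why a model of this size can fit on consumer devices. The sketch below estimates memory for the weights alone from the 3.8 billion parameter count quoted above; the bit widths (16, 8, 4) are illustrative assumptions about numerical precision, and the estimate ignores activations, the key-value cache, and runtime overhead.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


N_PARAMS = 3.8e9  # parameter count quoted above for Phi-3-mini

# Bit widths are illustrative assumptions, not figures from the cited work.
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

Under these assumptions the weights occupy roughly 7.6 GB at 16 bits and about 1.9 GB at 4 bits, which is within reach of a recent laptop or phone.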

The domain-adaptive pre-training of such models on archaeological corpora, combined with PEFT techniques, enables the development of specialized “ArchaeoLMs” (Archaeological Language Models) that can run locally, addressing both computational cost and data sovereignty concerns [26].

3.3. Retrieval-Augmented Generation and Knowledge Integration

A fundamental challenge for LLMs in archaeology is “hallucination”, that is, generating plausible but false information [7,10]. This is particularly problematic for evidence-based disciplines. Several approaches address this limitation:

3.3.1. Retrieval-Augmented Generation (RAG)

RAG techniques [27] enhance LLM outputs by retrieving relevant information from external knowledge bases before generation. For archaeological applications, RAG enables models to ground their responses in curated databases, excavation archives, or the scholarly literature, significantly reducing hallucinations [28,29]. The approach typically involves (1) encoding queries and documents into vector embeddings, (2) retrieving the top K most relevant documents, and (3) conditioning the LLM generation on both the query and the retrieved context.
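The three steps above can be sketched with a toy retriever. This is a minimal illustration under stated assumptions: a bag-of-words count vector stands in for a neural embedding model, the archive snippets are invented for the example, and the function names (`embed`, `retrieve`, `build_prompt`) are hypothetical. The final prompt would be passed to whichever LLM is in use; that call is deliberately left out.

```python
import math
import re
from collections import Counter


def embed(text):
    """Step 1 (toy version): bag-of-words counts standing in for a neural encoder."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(
        sum(c * c for c in v.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=2):
    """Step 2: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Step 3: condition generation on both the query and the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical archive snippets, invented for illustration only.
ARCHIVE = [
    "Trench 4 yielded incised pottery sherds from a sealed pit deposit.",
    "Radiocarbon samples from the hearth in Trench 2 are pending.",
    "The survey team mapped field boundaries north of the site.",
]
prompt = build_prompt("Which trench yielded incised pottery?", ARCHIVE, k=1)
```

Because the model is instructed to answer from the retrieved passage rather than from memory, an expert can check the answer against the cited archive entry.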

3.3.2. Retrieval-Augmented Fine-Tuning (RAFT)

RAFT [30], introduced in 2024, represents a significant advancement by combining retrieval and fine-tuning. Unlike RAG alone, RAFT trains models to distinguish between relevant and distractor documents during fine-tuning, teaching them to cite specific passages and ignore irrelevant information. Applied to domain-specific archaeological contexts, RAFT has shown improvements of up to 35% over base models in specialized question-answering tasks [30].
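To show what distinguishing relevant from distractor documents means at the data level, the following sketch assembles a single fine-tuning record. It is an illustration under stated assumptions rather than the procedure of [30]: the function name, the record fields, the number of distractors, and the probability `p_oracle` of including the relevant passage are all hypothetical choices made for the example.

```python
import random


def make_raft_example(question, oracle, distractors, answer, rng,
                      n_distractors=2, p_oracle=0.8):
    """Build one fine-tuning record mixing the relevant passage with distractors.

    With probability p_oracle the relevant ("oracle") passage is included;
    otherwise the context holds distractors only. Both n_distractors and
    p_oracle are illustrative defaults, not values taken from the text.
    """
    context = rng.sample(distractors, n_distractors)
    has_oracle = rng.random() < p_oracle
    if has_oracle:
        context.append(oracle)
    rng.shuffle(context)  # avoid leaking the oracle's position
    return {
        "question": question,
        "context": context,
        "answer": answer,
        "has_oracle": has_oracle,
    }


# Hypothetical passages, invented for illustration only.
ORACLE = "Context 1032 is a pit fill containing Bronze Age pottery."
DISTRACTORS = [
    "Context 2001 is a modern drainage cut.",
    "The site archive is held by the regional museum.",
    "Context 1500 is a plough soil with mixed finds.",
]
example = make_raft_example(
    "Which context contained Bronze Age pottery?",
    ORACLE,
    DISTRACTORS,
    "Context 1032, as stated in: 'Context 1032 is a pit fill containing Bronze Age pottery.'",
    random.Random(0),
)
```

Training on many such records, with the answer quoting the supporting passage, is what encourages the model to ground its output in the relevant document and disregard the rest.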

3.3.3. Knowledge Graph Integration

Integrating structured knowledge graphs (KGs) with LLMs offers complementary benefits: KGs provide factual grounding and explicit relationships between archaeological entities (sites, cultures, artifacts), while LLMs offer natural language interfaces and reasoning capabilities. The LPKG framework [29] demonstrates how KG schemas can generate structured training data to enhance LLM query planning and execution, which are crucial for complex archaeological reasoning tasks.

3.3.4. Chain-of-Thought (CoT) Prompting

CoT prompting [31] guides models to articulate intermediate reasoning steps, improving performance on complex multi-step problems. For archaeological interpretation, CoT can help models generate multiple plausible hypotheses, rank them based on available evidence, and suggest analogies, though such outputs require rigorous expert validation.

3.4. Technical Challenges Specific to LLMs in Archaeological Contexts

It is crucial to distinguish between the challenges generic to all machine learning approaches and those specific to Transformer-based LLMs in archaeological applications:

LLM-Specific Challenges include:

Generic ML/AI Challenges (not unique to LLMs) include:

Data quality and bias: Biases in training data (e.g., Eurocentric, colonial perspectives) affect all ML approaches [3,19].

Interpretability: Black box decision-making is a concern across deep learning architectures [32,33].

Small sample learning: Few-shot learning challenges apply to both LLMs and traditional ML approaches in data-scarce archaeological contexts [16].

Understanding these distinctions is essential for selecting appropriate AI tools for specific archaeological tasks: CNNs may be preferable for pottery classification, while LLMs excel at textual synthesis and knowledge extraction.

3.5. Archaeology as a Proving Ground for Next-Generation AI

Archaeology, studying the human past through material remains, deals with data of unparalleled complexity. Sources are extraordinarily diverse, encompassing texts (field notes, reports, ancient inscriptions), 2D images (photographs, drawings, remote sensing), 3D models (scans of sites, artifacts), geospatial information, temporal sequences, structured databases, and scientific analytical data (aDNA, isotopes) [34]. These data are inherently characterized by extreme heterogeneity, multimodality, fragmentation, sparsity, noise, incompleteness, and a profound dependence on spatio-temporal and cultural context for interpretation [35,36].

These characteristics position archaeology as an invaluable “proving ground” for next-generation AI. Successfully tackling archaeology’s data challenges will not only revolutionize “AI for Archaeology” but also drive significant advancements in AI technology itself (“Archaeology for AI”). The robustness, few-shot learning capabilities, multimodal fusion techniques, and knowledge-enhanced reasoning of responsible AI frameworks developed and validated within archaeological contexts can offer crucial real-world test scenarios applicable to other humanities disciplines and complex data domains.

Two open benchmarks illustrate how archaeological problems push the AI frontier. (i) TimeTravel [37] offers 10,250 expert-verified image–text pairs spanning 266 cultures and is now a standard leaderboard for evaluating historical reasoning in LMMs. (ii) The Vesuvius Challenge [38,39] publishes micro-CT volumes of carbonised papyri; its 2023 Kaggle competition drew over 2500 teams, with the winning solutions featured in Nature as breakthroughs in long-context 3D vision. Such demanding tasks serve as crucial proving grounds for few-shot multimodal learning and robust pattern recognition under extreme noise.

However, inherent challenges, namely hallucinations, embedded biases, a lack of causal understanding, poor interpretability, and (formerly) high computational costs, are particularly acute in archaeological applications. Archaeological scenarios, demanding evidential rigor and contextual nuance, expose these issues and drive AI research towards more robust and responsible systems. Recent advances in efficient models (Phi-3-mini), PEFT techniques (LoRA), and knowledge-grounding methods (RAFT) are beginning to address these barriers [23,25,30].

4. Current Applications of LLMs and LMMs in Archaeology: Progress and Examples (2023+)

Recent (2023+) applications of Large Language and Multimodal Models in archaeology are rapidly emerging. Though often exploratory and reflecting still-fragmented integration, these efforts highlight immense potential. This section reviews such applications, categorized by primary archaeological focus.

4.1. Intelligent Analysis and Processing of Archaeological Texts and Linguistic Data

Archaeology’s profound reliance on diverse textual records—ranging from fragmentary ancient inscriptions carved in stone or impressed in clay to voluminous modern excavation reports, field notes, and the scholarly literature—presents natural and fertile ground for LLM application. The sheer volume and linguistic diversity of this textual corpus, encompassing hundreds of ancient scripts (cuneiform, hieroglyphs, Linear B, Oracle Bone Script) and dozens of modern languages, has historically created significant barriers to comprehensive analysis and synthesis. Current explorations of LLM applications in this domain focus primarily on automated pattern identification in historical texts, computational approaches to ancient script decipherment and restoration, and machine translation systems for low-resource ancient languages. These applications demonstrate how LLM capabilities in natural language understanding, sequence modeling, and pattern recognition can be adapted to address long-standing challenges in archaeological text analysis, though substantial technical and methodological hurdles remain.

In ancient document analysis and historical text mining, LLMs can automatically extract complex entity–relationship networks from extensive historical corpora, identifying persons, places, events, and their interconnections across thousands of documents [40]. When these extracted knowledge structures are visualized through integrated knowledge graphs and Geographic Information Systems (GIS), they can reveal previously obscured underlying social networks, trade connections, political alliances, and spatial patterns of cultural interaction that would be exceedingly difficult to detect through the traditional close reading of individual texts. This capability is particularly valuable for synthesizing information from dispersed historical sources and facilitating comparative studies across regions and time periods. For damaged, fragmentary, or illegible ancient texts (a ubiquitous condition in archaeological contexts due to taphonomic processes, deliberate destruction, or simple material degradation), AI-assisted computational decipherment approaches offer promising new avenues for recovering previously inaccessible information. The Vesuvius Challenge (detailed in Section 3.5) provides a striking demonstration of this potential, where machine learning models successfully identified text within carbonized papyri from Herculaneum by analyzing subtle variations in micro-CT scan data invisible to human observers [41]. Such breakthroughs suggest that LLMs and computer vision models, when appropriately combined and adapted, may enable the recovery of textual information from archaeological materials previously considered permanently illegible, fundamentally expanding the boundaries of accessible ancient knowledge.

Addressing ancient scripts and languages necessitates specialized AI adaptation. Table 1 summarizes the key performance metrics from recent domain-adapted archaeological LLM applications, demonstrating substantial improvements over baseline approaches.

The Ithaca system [44] employed probabilistic models for restoring Greek inscriptions with confidence scores. A significant 2024 advancement by Cullhed et al. [21] used LoRA-based instruct-tuning of LLaMA, achieving state-of-the-art performance even when handling scriptio continua. Similar approaches aid Oracle Bone Character detection [45,46] and historical document transcription [47].

Progress in machine translation for low-resource ancient languages remains nascent but notable. The “Babylonian Engine” [43] achieved near-novice translator proficiency for Akkadian cuneiform (BLEU 36–37) using a neural machine translation (NMT) model trained on the ORACC corpus (Table 2), though limitations in ambiguity handling and the requirement for transliterated input indicate ongoing challenges.

While Cullhed et al.’s 22.5% Character Error Rate (CER) for Greek text restoration represents substantial progress over traditional OCR methods (typically 30–40% CER on damaged texts), this error rate remains problematic for unsupervised scholarly use. Even single-character errors in critical editions can fundamentally alter the historical interpretation. Similarly, the Babylonian Engine’s BLEU score of 36–37, while approaching novice translator proficiency, falls well short of professional standards (typically >50 BLEU for publication-ready translation). These systems function effectively as “research assistants” that reduce human labor, but not as “autonomous scholars” capable of independent knowledge production. This pattern exemplifies a broader reality: current LLM/LMM applications in text analysis excel at labor-intensive preprocessing and pattern identification, but require mandatory expert validation before the results enter the scholarly record. Additionally, most achievements depend on specialized fine-tuning using domain-specific corpora; general-purpose LLMs show limited effectiveness without adaptation, highlighting the necessity for sustained “ArchaeoLM” development efforts.
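For readers unfamiliar with the metric, Character Error Rate is conventionally computed as the edit distance between a system's output and a reference text, divided by the length of the reference. The sketch below implements that common definition; the exact evaluation protocol behind the figures quoted above (for example, whether only restored characters are scored) is not specified here and may differ, and the Greek strings are invented for illustration.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character Error Rate: edit distance divided by reference length."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


# Illustrative strings only: one substituted letter in an eight-letter word.
reference = "ΑΘΗΝΑΙΩΝ"
restored = "ΑΘΗΝΑΙΟΝ"
error_rate = cer(reference, restored)  # 1 / 8 = 0.125
```

At a CER of 22.5%, roughly one restored character in every four or five differs from the reference, which is why expert review remains necessary before a restoration enters a critical edition.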

For complex content analysis, researchers have explored integrating LMMs with knowledge graphs or RAG techniques [27] to track script evolution [48] or compare writing systems [49]. However, data sparsity in ancient corpora and training data biases [50] remain persistent challenges.

4.2. Intelligent Processing of Environmental and Bioarchaeological Data

Environmental and bioarchaeology generate vast, specialized datasets crucial for understanding past human–environment interactions, including ancient DNA (aDNA) sequences, paleoproteomics, stable isotopes, pollen assemblages, and faunal/floral remains. While direct LLM applications are still emerging in this domain, initial explorations demonstrate significant potential for addressing analytical bottlenecks and interpretive challenges.

In ancient DNA research, Boury et al. [26] pioneered the adaptation of Transformer-based language models for paleogenomic data. Recognizing that DNA sequences can be treated analogously to linguistic texts (with nucleotide “tokens” forming sequence “sentences”), they fine-tuned DNABERT, a BERT variant pre-trained on modern genomic data, to simulate characteristic ancient DNA damage patterns. These patterns, including cytosine-to-thymine (C-to-T) deamination at fragment termini and depurination-induced fragmentation, are diagnostic signatures of authentic ancient samples but pose substantial challenges for standard bioinformatics tools originally designed for high-quality modern DNA. Their adapted model achieved approximately 74% consistency with established damage simulation tools like mapDamage, offering novel computational approaches for paleogenomic experimental design, authenticity assessment, and the optimization of laboratory protocols. This work exemplifies how specialized “ArchaeoLMs” (Archaeological Language Models) can be developed by leveraging successful domain-specific adaptations of general-purpose models (a summary of which is provided in Table 3), potentially integrating insights from broader bioinformatics LLM applications in protein structure prediction and genomic variant analysis [51,52].
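The analogy between DNA and text can be made concrete with a toy example. The sketch below splits a sequence into overlapping k-mers that play the role of words, and applies a deliberately simplified C-to-T damage model in which the substitution probability decays with distance from the 5' end. Everything here is an assumption for illustration: the function names, the choice of k, and the parameters `p_end` and `decay` are hypothetical, only one fragment end is modelled, and the code is unrelated to the actual implementations of DNABERT or mapDamage.

```python
import random


def kmer_tokens(seq: str, k: int = 6) -> list[str]:
    """Split a DNA sequence into overlapping k-mers (the "words" of the sentence)."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def simulate_deamination(seq: str, rng: random.Random,
                         p_end: float = 0.3, decay: float = 0.5) -> str:
    """Toy C-to-T damage: substitution probability decays with distance from the 5' end.

    p_end and decay are illustrative values, not fitted parameters.
    """
    out = []
    for pos, base in enumerate(seq):
        p = p_end * (decay ** pos)
        out.append("T" if base == "C" and rng.random() < p else base)
    return "".join(out)


# Hypothetical fragment, invented for illustration only.
fragment = "CCGATTACAGGC"
damaged = simulate_deamination(fragment, random.Random(42))
tokens = kmer_tokens(damaged, k=3)
```

A language model trained on such token sequences sees damage as a shift in which "words" appear near fragment ends, which is the kind of positional pattern the fine-tuning described above aims to capture.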

For paleoenvironmental reconstruction, the integration of LLMs with traditional analytical frameworks shows promise in addressing interpretive complexity and facilitating synthesis across multiple proxy data streams. Wang et al. [31] demonstrated that GPT-4, when augmented with Chain-of-Thought (CoT) prompting and Retrieval-Augmented Generation (RAG) and drawing upon the published paleoenvironmental literature, could assist in interpreting sedimentary records by generating multiple plausible paleoenvironmental scenarios and ranking them based on available proxy evidence (pollen, charcoal, geochemistry). While such AI-generated interpretations require rigorous expert validation and critical assessment, the approach offers potential benefits including a reduction in confirmation bias in expert interpretation, the facilitation of the systematic consideration of alternative environmental scenarios, and the more efficient integration of interdisciplinary knowledge from geology, botany, climatology, and anthropology [53].

The DNABERT adaptation achieving 74% consistency with mapDamage is encouraging, but it is critical to note that both tools operate on probabilistic models of damage patterns. The remaining 26% disagreement reflects genuine scientific uncertainty rather than simple measurement error. LLM-based approaches excel at accelerating data processing but should be viewed as complementary to, not replacements for, established paleogenomic tools. Similarly, while CoT-augmented GPT-4 can generate plausible paleoenvironmental narratives, a fundamental challenge remains: LLMs have no inherent understanding of sedimentological or paleoclimatic principles. Their outputs reflect pattern-matching in training data, not genuine causal reasoning about past environments. This is not necessarily problematic—expert archaeologists similarly rely on learned patterns to make rapid interpretations—but it demands transparency about the limitations and explicit human validation before such interpretations become part of the research record.

Beyond analytical support, LLMs demonstrate potential for automating labour-intensive data extraction tasks that currently represent major bottlenecks in synthesizing archaeological and environmental information. Decades of archaeological and zooarchaeological research have generated vast bodies of knowledge locked in lengthy technical reports and grey literature with inconsistent formatting and terminology. LLM-powered natural language processing systems could automatically extract and structure key information (such as taxonomic identifications, environmental indicators, taphonomic observations, and chronometric dates) from such unstructured historical records, thereby facilitating large-scale meta-analyses, enabling the construction of comprehensive regional databases, and making historical data discoverable and reusable [54,55]. However, key challenges persist, including handling highly specialized and inconsistent taxonomic and ecological terminology, the critical need for domain adaptation using curated archaeological and environmental corpora [51], and developing robust methods for quantifying and communicating uncertainty in AI-generated interpretations to ensure appropriate epistemic caution in scientific inference.

4.3. Multimodal Analysis of Material Culture and Archaeological Remains

The study of material culture, encompassing artifacts, ecofacts, architecture, and archaeological features, is fundamentally and irreducibly multimodal in character [56,57]. The archaeological interpretation of material remains necessarily integrates diverse information streams: detailed textual descriptions documenting morphology, technology, and context; high-resolution visual data including photographs, technical drawings, and 3D laser scans or photogrammetric models; spatial information recording provenience, associations, and distribution patterns; and increasingly, scientific analytical data from techniques such as X-ray fluorescence (XRF), stable isotope analysis, residue analysis, and petrographic thin-section microscopy [34]. This inherent multimodality, while enriching interpretive possibilities, has historically created significant challenges for computational analysis, as traditional machine learning approaches struggle to effectively integrate such heterogeneous data types [17]. While earlier AI applications in archaeology frequently employed Convolutional Neural Networks (CNNs) for image-specific tasks like artifact classification [17], the emergence of Large Multimodal Models (LMMs) capable of jointly processing and reasoning across text, images, and structured data presents particularly promising opportunities for advancing material culture studies [12], potentially enabling more holistic, integrated analytical approaches that mirror the multimodal reasoning archaeologists themselves employ.

Large Language Models, even in their text-only forms, significantly aid archaeological database construction and knowledge extraction from legacy literature. LLMs can automatically extract structured artifact attribute information, including morphological characteristics (shape, size, proportions), manufacturing techniques (forming methods, surface treatments, decorative techniques), material composition, stylistic features, functional interpretations, and provenience data, from unstructured or semi-structured textual sources such as excavation reports, catalogue entries, and scholarly publications [42,55]. This automated extraction capability dramatically accelerates the preparation of large-scale typological datasets required for quantitative analyses, moving beyond the labour-intensive manual coding that has traditionally limited the scale of comparative studies. Moreover, LLM-based extraction approaches can capture richer semantic information compared with traditional machine learning approaches that rely on manually engineered numerical feature vectors, as LLMs can understand the contextual nuances and implicit relationships expressed in natural language descriptions [58].

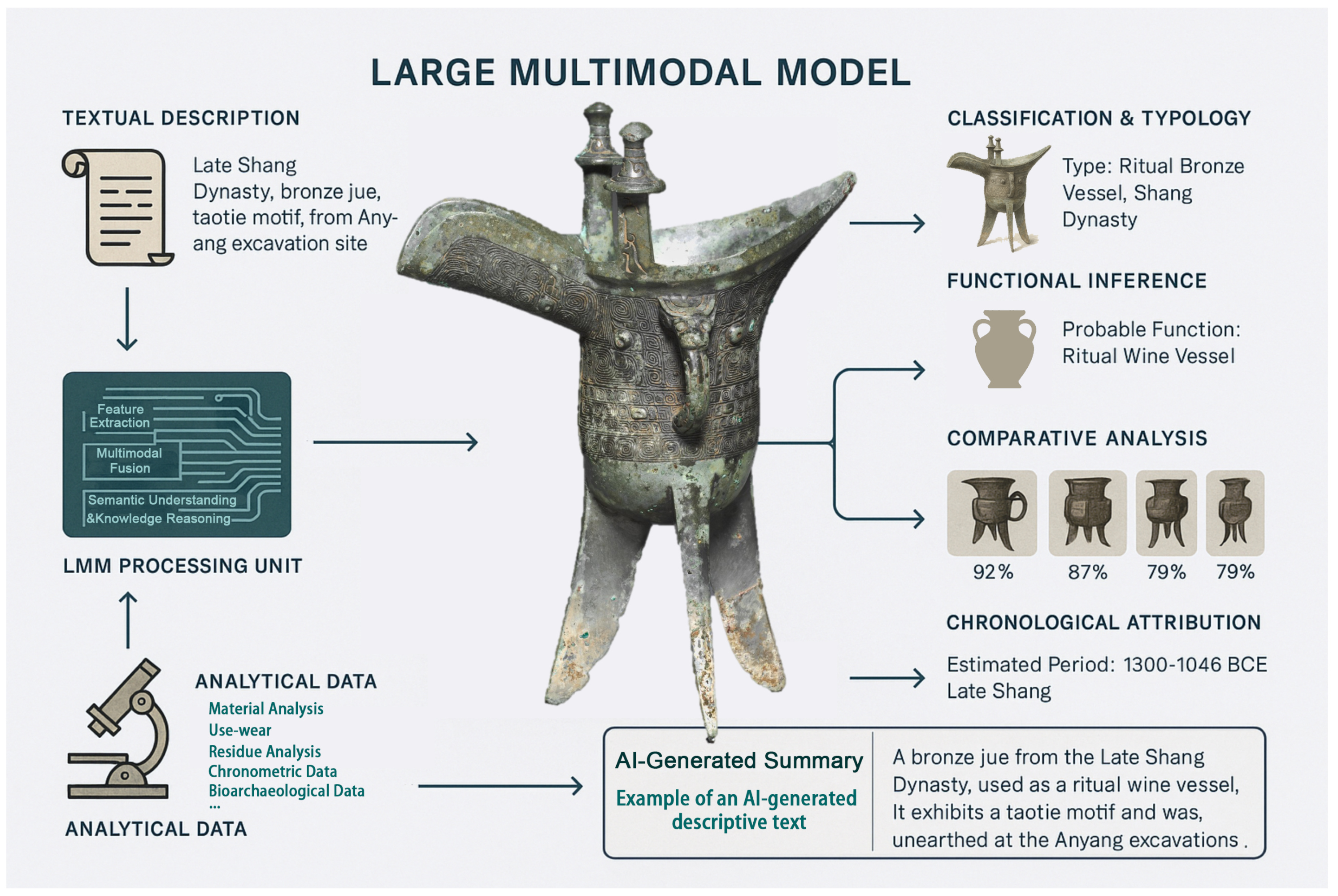

Figure 2 conceptually illustrates how LMMs integrate multiple information modalities (visual data from artifact photographs and 3D scans, textual descriptions from catalogues and reports, and structured scientific analytical data) to generate comprehensive archaeological insights spanning typological classification, functional inference, comparative analysis with parallels from other sites, chronological attribution, and the automated generation of synthetic summaries suitable for publication or database entry.

Significant recent progress in practical multimodal artifact analysis demonstrates the maturation of LMM applications in archaeology. Pang et al. [

42] developed an sophisticated end-to-end visual language processing pipeline that combines object detection algorithms (to identify and segment individual artifacts within catalogue page scans), Multimodal Large Language Models (MLLMs) for joint image–text understanding, and graph matching algorithms to establish correspondences between visual artifact representations and their associated textual catalogue descriptions. Applied to processing historical scanned ceramic catalogues—a common but labour-intensive digitization task—their system achieved a mean Average Precision (mAP) of 35.4% in automatically linking artifact photographs to corresponding descriptive text entries, demonstrating the feasibility of semi-automated catalogue digitization. The ArchaeCLIP project [

59,

60] takes a different approach by fine-tuning OpenAI’s CLIP (Contrastive Language-Image Pre-training) model specifically on large-scale archaeological artifact image datasets paired with metadata descriptions. This domain adaptation enables semantically meaningful, content-based artifact retrieval using flexible natural language queries such as “find all pottery sherds exhibiting incised geometric decoration patterns” or “locate bronze artifacts with evidence of ritual use,” moving beyond traditional keyword-based database searches to support more intuitive, exploratory research workflows [

61]. The TimeTravel benchmark [

37], comprising 10,250 expert-verified image–text pairs spanning 266 archaeological cultures, represents a crucial infrastructure development for systematically evaluating and comparing LMM performance on historically and archaeologically relevant tasks, providing standardized metrics to guide iterative model improvement and ensure archaeological domain relevance rather than merely optimizing for generic computer vision benchmarks.
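The retrieval step behind CLIP-style search can be illustrated with a minimal sketch. Once images and text queries share an embedding space, retrieval reduces to ranking artifacts by cosine similarity to the query vector. The three-dimensional vectors and artifact identifiers below are hypothetical placeholders, not ArchaeCLIP outputs; a real system would obtain embeddings from the fine-tuned image and text encoders.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_artifacts(
    query_vec: List[float],
    artifact_vecs: Dict[str, List[float]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Return the top_k artifact ids with the highest similarity to the query."""
    scored = [
        (artifact_id, cosine_similarity(query_vec, vec))
        for artifact_id, vec in artifact_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings; axis 0 loosely stands for "incised decoration".
ARTIFACT_EMBEDDINGS = {
    "sherd_incised": [0.9, 0.1, 0.0],
    "bronze_vessel": [0.1, 0.9, 0.2],
    "sherd_plain": [0.6, 0.0, 0.4],
}
QUERY_EMBEDDING = [1.0, 0.0, 0.0]  # stand-in for an encoded text query

RANKING = rank_artifacts(QUERY_EMBEDDING, ARTIFACT_EMBEDDINGS)
```

The ranking is only as good as the embedding space, which is why the quality and representativeness of the fine-tuning data matter so much for this kind of search.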

Beyond 2D image analysis, archaeological applications increasingly leverage AI assistance for processing complex 3D data derived from laser scanning, photogrammetry, and computed tomography. Advanced techniques are being applied to the virtual restoration and digital reassembly of fragmented artifacts—exemplified by recent work on computationally reconstructing severely fragmented Sanxingdui bronze ritual vessels [

62]—enabling the non-destructive study and hypothetical reconstruction of objects too fragile for physical manipulation. LMMs with enhanced 3D spatial understanding capabilities, such as MM-Spatial [

14], offer potential for more sophisticated analyses of three-dimensional artifact morphology, including the automated recognition of manufacturing traces (tool marks, forming techniques), the computational reconstruction of original manufacturing sequences (chaîne opératoire), and intelligent assistance with time-consuming tasks like ceramic sherd reassembly or the identification of refitting lithic fragments. However, translating these promising technical capabilities into reliable archaeological practice faces substantial challenges. Foremost among these is the acute need for large-scale, systematically annotated, and ethically sourced multimodal training datasets that adequately represent archaeological diversity while respecting cultural sensitivities and intellectual property rights. Additionally, archaeological applications must contend with poor-quality legacy data (faded photographs, inconsistent documentation standards, incomplete provenience information) that differs dramatically from the high-quality, standardized datasets used in computer vision research. Ensuring that AI-generated classifications and interpretations maintain archaeological relevance and theoretical validity—rather than merely achieving high scores on technical metrics—requires sustained collaboration between archaeologists and AI developers. Finally, the effective integration of LMM capabilities into existing archaeological workflows and database infrastructures remains a significant practical barrier requiring attention to usability, interoperability, and institutional capacity building.

Pang et al.’s 35.4% mAP for ceramic catalogue linking represents meaningful progress, yet this metric requires careful interpretation. Mean Average Precision measures technical matching performance but does not capture whether matches are archaeologically meaningful. A system operating at roughly 35% mAP might conflate vessels from different chronological periods or cultural contexts. The ArchaeCLIP approach is conceptually more robust, since semantic embedding enables flexible queries, but it depends critically on training data quality and representativeness. If training data is biased toward well-photographed museum collections, the system will likely fail on fragmentary field specimens or undersea recoveries. TimeTravel is valuable as a benchmark, yet its 266-culture coverage substantially under-represents non-Western archaeology and less-studied regions. More fundamentally, these systems excel at pattern recognition within visual domains but falter when archaeological interpretation demands the integration of contextual, temporal, and theoretical knowledge that extends beyond image–text associations.
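To make the metric concrete, the following sketch computes mean Average Precision in its common ranked-retrieval form, where each query (for example, an artifact image) returns a ranked list of candidates flagged as correct or incorrect. This formulation is an assumption for illustration; the exact evaluation protocol used by Pang et al. is not described here.

```python
from typing import List


def average_precision(ranked_relevance: List[bool]) -> float:
    """Average of precision@k taken at each rank k where a correct match appears."""
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(queries: List[List[bool]]) -> float:
    """Mean of the per-query average precision values."""
    if not queries:
        return 0.0
    return sum(average_precision(q) for q in queries) / len(queries)
```

Note that the score only reflects where correct matches fall in each ranking. It says nothing about whether an incorrect match pairs vessels from neighbouring types or from entirely different periods, which is the archaeological concern raised above.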

4.4. Intelligent Augmentation of Field Archaeology and Spatial Analysis

Field archaeology generates vast heterogeneous data daily—handwritten notes, sketches, photographs, 3D photogrammetric scans, geospatial coordinates, drone imagery, and stratigraphic records—creating significant challenges for real-time processing, integration, and analysis. LLM and LMM technologies offer promising avenues for streamlining data capture, enhancing on-site interpretation, and facilitating complex spatial reasoning that traditionally required post-excavation analysis. For structured field record processing, LLMs can automatically extract and structure key information including feature descriptions, stratigraphic relationships, artifact provenience, Harris matrix relationships, and contextual associations from unstructured textual records [

10], significantly aiding subsequent data integration and archaeological database population [

63]. The Arkas 2.0 project [

64] exemplifies practical implementation by utilizing low-code development platforms augmented with LLM-generated code snippets (powered by ChatGPT-3.5) to accelerate archaeological database construction. This approach enabled archaeologists with limited programming expertise to rapidly develop customized data management systems by describing the desired functionalities in natural language, with the LLM automatically generating corresponding database schemas, data validation rules, and query interfaces. Such low-code/LLM synergy effectively lowers the technical barriers to adopting digital archaeological methods, democratizing access to sophisticated data management capabilities that were previously restricted to institutions with dedicated technical staff or substantial computational resources.
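Once stratigraphic relationships have been extracted from field records, they can be checked and ordered mechanically. The sketch below is a minimal illustration, not the method of any cited project: it treats each extracted "lies above" statement as an edge, derives one valid deposition order with a topological sort, and reports contradictory records as an error. The context numbers and the function name `deposition_order` are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, Iterable, List, Set, Tuple


def deposition_order(above_pairs: Iterable[Tuple[str, str]]) -> List[str]:
    """Order contexts from earliest to latest deposition.

    Each pair (upper, lower) states that `upper` lies above `lower`,
    so `lower` must have been deposited first.
    """
    graph: Dict[str, Set[str]] = {}
    for upper, lower in above_pairs:
        graph.setdefault(upper, set()).add(lower)  # lower precedes upper
        graph.setdefault(lower, set())
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as exc:
        # exc.args[1] holds the contexts that form the contradictory loop
        raise ValueError(f"Contradictory stratigraphic relations: {exc.args[1]}") from exc


# Hypothetical extracted relations: context 101 above 102, 102 above 103.
ORDER = deposition_order([("101", "102"), ("102", "103")])
```

A cycle in the extracted relations usually signals a transcription or extraction error rather than a real deposit, so surfacing it for expert review is more useful than silently dropping an edge.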

LLM integration with Geographic Information Systems (GIS) represents a particularly promising frontier for spatial archaeological analysis [

13]. Harvard’s Spatial Data Lab [

65] has pioneered training LLMs as intelligent spatial reasoning agents capable of understanding complex natural language queries, such as “identify all Late Bronze Age settlement sites located within 5 km of perennial water sources on south-facing slopes with elevations between 800 and 1200 m,” and autonomously translating these multi-criterion requests into executable multi-step GIS analytical workflows. This involves decomposing the query into discrete spatial operations (proximity analysis, slope aspect calculation, elevation filtering, temporal attribute selection), executing these operations sequentially in an appropriate GIS software environment (e.g., ArcGIS 10.8, QGIS 3.x, or other standard platforms), and synthesizing the results into interpretable archaeological narratives [

65]. Such natural language GIS interfaces dramatically reduce the technical learning curve traditionally required for spatial archaeological analysis [

66], potentially enabling field archaeologists to conduct sophisticated landscape analyses on-site using conversational queries rather than mastering complex GIS command syntaxes or graphical interface workflows. Multimodal data fusion in field contexts is increasingly vital for achieving a holistic, real-time understanding of excavated contexts [

56]. Vision–language models like GPT-4V can provide detailed textual descriptions, preliminary stratigraphic interpretations, and feature identification directly from field photographs of trench profiles, section drawings, or exposed architectural features [

67]. More specialized 3D-focused LMMs like MM-Spatial [

14], which integrate spatial understanding with visual processing, can comprehend complex three-dimensional spatial relationships between archaeological features, perform accurate 3D object localization within site coordinate systems, and assist in reconstructing site formation processes—capabilities valuable for analyzing intricate site structures, intra-site artifact spatial distributions, and depositional sequences [

34].
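The query decomposition described above for natural language GIS interfaces can be sketched as a sequence of simple filters. This is a simplified illustration under stated assumptions: the `Site` fields are hypothetical precomputed attributes (a real workflow would derive distance to water and slope aspect from vector and raster layers inside the GIS), and "south-facing" is taken here to mean an aspect between 135 and 225 degrees.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Site:
    name: str
    period: str
    water_distance_km: float  # assumed precomputed by a proximity analysis
    aspect_deg: float         # assumed precomputed from a terrain model
    elevation_m: float


Criterion = Callable[[Site], bool]


def build_plan() -> List[Criterion]:
    """One filter per operation that the example query decomposes into."""
    return [
        lambda s: s.period == "Late Bronze Age",       # temporal attribute selection
        lambda s: s.water_distance_km <= 5.0,          # proximity analysis
        lambda s: 135.0 <= s.aspect_deg <= 225.0,      # slope aspect (assumed range)
        lambda s: 800.0 <= s.elevation_m <= 1200.0,    # elevation filtering
    ]


def run_plan(sites: Iterable[Site], plan: List[Criterion]) -> List[Site]:
    """Apply each criterion in turn, narrowing the candidate set step by step."""
    selected = list(sites)
    for criterion in plan:
        selected = [s for s in selected if criterion(s)]
    return selected


# Hypothetical records for illustration only.
SITES = [
    Site("Site A", "Late Bronze Age", 2.1, 180.0, 950.0),
    Site("Site B", "Late Bronze Age", 7.4, 170.0, 900.0),
    Site("Site C", "Iron Age", 1.0, 190.0, 1000.0),
    Site("Site D", "Late Bronze Age", 3.0, 20.0, 1100.0),
]
MATCHES = run_plan(SITES, build_plan())
```

Keeping the plan as an explicit list of steps means the intermediate results can be inspected, which supports the kind of transparency that archaeological interpretation requires.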

However, it is crucial to acknowledge that current field applications of LLMs/LMMs remain largely early-stage and experimental [

4], functioning primarily as “Digital Co-pilots” that provide basic assistance rather than autonomous analytical agents [

13]. These systems excel at routine data processing tasks (transcription, basic classification, query translation) but struggle with interpretive nuance, contextual reasoning, and handling the ambiguity inherent in archaeological evidence [

3]. The persistent emphasis on mandatory human expert supervision in all published field applications reflects archaeology’s rigorous demands for evidential transparency, interpretive accountability, and the critical role of tacit expert knowledge in evaluating stratigraphic integrity, artifact associations, and formation processes [

68,

69].

The Harvard Spatial Data Lab’s natural language GIS interface represents genuine innovation in accessibility, yet implementation details reveal important limitations. The system functions essentially as an “LLM-to-SQL translator,” converting natural language queries into structured database operations. It succeeds when queries align with data structure and database schema, but fails when queries require archaeological expertise beyond data manipulation (e.g., “identify all settlements that represent colonial period disruption to settlement hierarchies”). Similarly, GPT-4V’s capability to describe field photographs is impressive but operationally limited: the model can identify broad depositional patterns but may miss small but diagnostically crucial features (microstratification, subtle color changes, inclusion patterns). More fundamentally, the emphasis on “co-pilot” models appropriately reflects archaeology’s epistemology: field interpretation is inseparable from embodied observation, tacit knowledge accumulated through experience, and disciplinary judgment that cannot be fully automated. The challenge ahead lies not in replacing expert judgment but in creating systems that genuinely extend cognitive capabilities while maintaining transparency about limitations.

The fundamental challenge lies in advancing beyond simple task automation to genuinely augmenting archaeologists’ analytical capabilities—enabling new forms of spatial reasoning, pattern recognition across scales, and the integration of disparate evidence types that would be cognitively infeasible for human analysts alone [

34], while maintaining appropriate epistemic humility and transparency about AI systems’ limitations and uncertainties [

33].

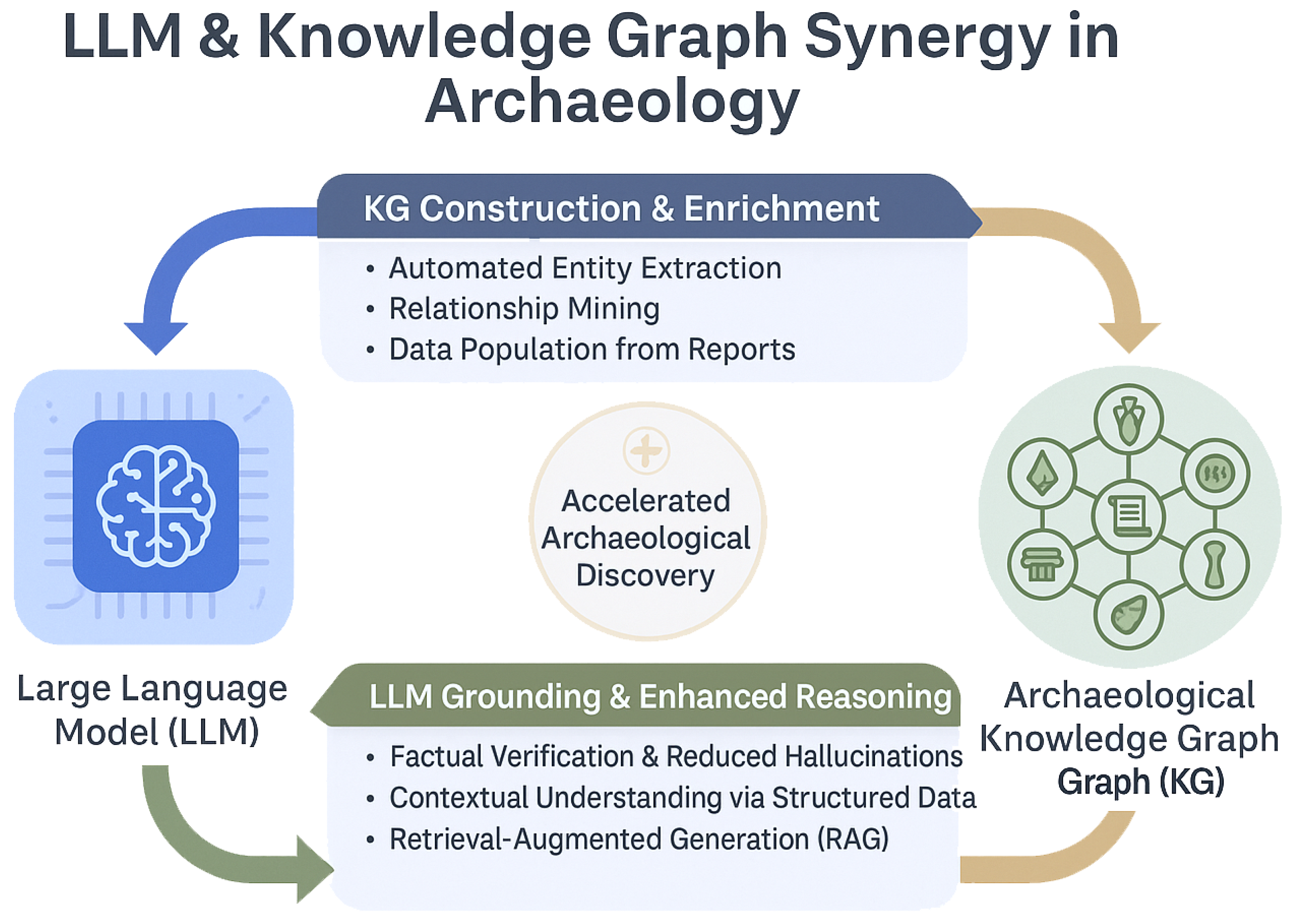

4.5. Archaeological Knowledge Management and Intelligent Reasoning Systems

Archaeological knowledge—accumulated through centuries of systematic fieldwork, excavation, analysis, and scholarly synthesis—is extraordinarily vast in scale, intellectually complex in content, deeply interdisciplinary in character, and problematically dispersed across heterogeneous repositories including the published literature, unpublished excavation reports, museum collections databases, institutional archives, and individual researchers’ private records [

63,

70]. This distributed knowledge landscape creates profound challenges for comprehensive synthesis, comparative analysis, and efficient information retrieval, particularly for cross-regional or diachronic studies requiring the integration of evidence from diverse sources [

4]. The synergistic integration of Large Language Models with structured knowledge graphs (KGs) offers promising technological pathways for systematically organizing this fragmented knowledge, facilitating intuitive navigation through complex information networks, and enabling sophisticated reasoning over distributed archaeological evidence (

Figure 3) [

27,

29]. KGs provide explicit, machine-readable representations of entities (archaeological sites, artifacts, cultures, individuals) and their relationships (temporal succession, spatial proximity, cultural affiliation, typological similarity), while LLMs offer natural language interfaces for querying these structures and reasoning capabilities for inferring implicit connections or identifying inconsistencies across disparate sources [

28].
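As a minimal sketch of the entity-relationship representation described above, a knowledge graph can be modelled as a list of subject-predicate-object triples with a simple pattern-matching query. The entity names and predicates below are hypothetical placeholders, and production systems would typically use a dedicated graph database or RDF store instead of an in-memory list.

```python
from typing import Iterable, List, NamedTuple, Optional


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


def match(
    triples: Iterable[Triple],
    subject: Optional[str] = None,
    predicate: Optional[str] = None,
    obj: Optional[str] = None,
) -> List[Triple]:
    """Return triples matching every field that is not None (None acts as a wildcard)."""
    return [
        t
        for t in triples
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    ]


# Hypothetical graph: sites, a find, and a temporal relation between cultures.
KG = [
    Triple("Site A", "affiliated_with", "Culture X"),
    Triple("Site B", "affiliated_with", "Culture X"),
    Triple("Culture X", "precedes", "Culture Y"),
    Triple("Vessel 17", "found_at", "Site A"),
]


def sites_of_culture(triples: Iterable[Triple], culture: str) -> List[str]:
    """All subjects linked to the given culture by 'affiliated_with', sorted by name."""
    return sorted(t.subject for t in match(triples, predicate="affiliated_with", obj=culture))
```

In an LLM-KG system, the language model's role would be to translate a natural language question into a call such as `sites_of_culture(KG, "Culture X")`, while the graph supplies the answer.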

In KG construction, LLM NLP capabilities accelerate the automatic extraction of entities and relationships from the literature and reports. Wang et al. [

71] utilized BERT and a graph attention network (GAT) to construct a KG for Tang Dynasty metalwork. Graham [

28] used GPT-3 to automatically construct a KG detailing illicit antiquities trade networks from academic articles, achieving an accuracy comparable to manual annotation.

Conversely, KGs enhance LLM performance. Integrating KGs via RAG [

27,

29] provides factual grounding in curated information, reducing hallucinations [

28]. The LPKG framework [

29] leverages KG schemas to generate structured training data, enhancing LLM query planning abilities.
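The grounding idea behind KG-based RAG can be sketched in a few lines: retrieve the facts relevant to a question, then place them in the prompt so that the model answers from curated information. This sketch is an illustration only and does not reproduce the LPKG framework or any cited system. The retrieval rule (simple entity-name matching), the facts, and the prompt wording are all assumptions, and no model is actually called.

```python
from typing import List, Tuple

Fact = Tuple[str, str, str]  # (subject, predicate, object)


def retrieve_facts(question: str, facts: List[Fact], limit: int = 5) -> List[Fact]:
    """Keep facts whose subject or object is mentioned verbatim in the question."""
    q = question.lower()
    hits = [f for f in facts if f[0].lower() in q or f[2].lower() in q]
    return hits[:limit]


def build_grounded_prompt(question: str, facts: List[Fact]) -> str:
    """Assemble a prompt that restricts the model to the retrieved facts."""
    lines = [f"- {s} {p.replace('_', ' ')} {o}" for s, p, o in facts]
    context = "\n".join(lines) if lines else "- (no matching facts found)"
    return (
        "Answer using only the facts listed. If they are insufficient, say so.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical curated facts.
FACTS = [
    ("Site A", "affiliated_with", "Culture X"),
    ("Site B", "affiliated_with", "Culture Y"),
]
QUESTION = "Which culture does Site A belong to?"
PROMPT = build_grounded_prompt(QUESTION, retrieve_facts(QUESTION, FACTS))
```

The explicit instruction to admit insufficiency is one common design choice for reducing hallucination, though it lowers the risk without removing it.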

For complex reasoning, LLM-KG synergy supports multi-source integration and hidden pattern identification. Graham [

28] applied graph embedding to uncover structural properties in antiquities trade networks. Graph exploration algorithms with LLM natural language interfaces aid in inferring missing information and identifying contradictions [

72].

LLM-assisted hypothesis generation shows potential but faces challenges. A primary concern is that LLM reasoning may resemble pattern “recitation” rather than true logical understanding [

73,

74]. Emerging frameworks incorporate RAG for grounding [

75], multi-agent systems for diverse perspectives [

76], and algorithms like HypoGeniC [

77] for interpretable hypothesis generation. Core challenges include ensuring factual accuracy, overcoming training data biases, establishing testability criteria, and avoiding research contamination [

78]. Human oversight remains indispensable [

4].

4.6. Research Assistance and Human–Computer Collaboration

Large Language Models and Multimodal Models are progressively evolving from narrowly focused, task-specific computational tools into more integrated “intelligent research partners” capable of assisting across multiple stages of the archaeological research lifecycle, potentially fundamentally reshaping traditional research workflows and fostering entirely new modes of human–computer collaborative interaction. This transformation reflects a broader trend in scientific computing toward AI systems that do not merely automate routine tasks but actively participate in knowledge discovery, hypothesis formation, and interpretive reasoning, albeit always under human oversight and critical evaluation.

In the early stages of research design and literature review, LLMs provide valuable assistance for brainstorming research questions, systematically exploring alternative methodological approaches, identifying relevant theoretical frameworks, and formulating coherent research plans [

7]. Their capacity to rapidly synthesize and summarize vast bodies of existing research on specific topics—identifying key trends, ongoing debates, methodological controversies, and significant knowledge gaps across the multilingual scholarly literature spanning decades—can dramatically accelerate the traditionally time-consuming process of comprehensive literature review [

66]. This capability is particularly valuable in archaeology, where the relevant literature may be published in dozens of languages, scattered across hundreds of regional journals with limited international visibility, and include substantial quantities of grey literature (excavation reports, museum catalogues, conference proceedings) that lack systematic indexing. For technical and analytical support during active research phases, LLMs assist archaeologists in interpreting complex data patterns, evaluating alternative hypotheses against available evidence, and designing appropriate statistical tests or computational analyses [

77]. Crucially, they significantly lower the technical barriers to adopting sophisticated computational methods by providing interactive assistance with code writing, debugging, and optimization. Sciuto et al. [

18] documented their experience “pair-programming” with ChatGPT (GPT-4) to rapidly prototype and develop S.A.D.A., a specialized archaeological anomaly detection tool for identifying unusual patterns in excavation data, exemplifying the potential for the “AI-driven democratization” of advanced analytical methods previously accessible only to archaeologists with substantial programming expertise or access to dedicated computational specialists. In the final stages of results dissemination and public engagement, LLMs assist with drafting preliminary versions of excavation reports, research papers, and grant proposals [

66,

79], though these require careful human review to ensure accuracy, appropriate interpretation, and disciplinary conventions. For public outreach and heritage education, LLMs help transform specialized archaeological knowledge into accessible narratives tailored to diverse public audiences with varying levels of background knowledge [

13], potentially enhancing the public’s understanding and appreciation of archaeological research. The experimental “Johannes” project [

80] represents a particularly innovative application: researchers fed detailed archaeological data about the medieval site of Miranduolo into ChatGPT to create an AI-powered conversational agent that could respond to questions while role-playing as a 12th-century peasant soldier who had lived at the site, exploring the possibilities for computational hermeneutics and immersive historical narratives as novel forms of heritage interpretation and education. Beyond individual site interpretation, LMMs demonstrate potential for large-scale archaeological survey: Sakai et al. [

81] employed AI-accelerated geoglyph detection in the Nazca region of Peru, nearly doubling the number of known figurative geoglyphs and shedding new light on their spatial distribution and potential purposes.

Realizing deeper human–AI collaboration requires effective Human-in-the-Loop (HITL) models [

3,

4]. Such iterative workflows position AI in auxiliary roles with final judgment resting with archaeologists [

82]. Projects like ArchAIDE [

83,

84] and Diviner [

46] exemplify effective human–AI collaboration, demonstrating how archaeologists can define tasks and queries, AI can generate initial classifications and hypotheses, and experts can review, validate, and provide feedback for iterative model refinement—emphasizing that essential expert oversight positions AI as augmentation rather than replacement.
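One simple way to organize such a review loop is confidence-based triage, sketched below under stated assumptions. The `Prediction` structure, the 0.9 threshold, and the example labels are hypothetical and are not drawn from ArchAIDE or Diviner. Note that even high-confidence outputs stay provisional until an expert signs off.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Prediction:
    artifact_id: str
    label: str
    confidence: float  # model-reported score in [0, 1]


def triage(
    predictions: Iterable[Prediction],
    review_threshold: float = 0.9,  # hypothetical cut-off, to be tuned per project
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into provisional results and a priority review queue.

    Items below the threshold are queued with the least certain first.
    """
    provisional: List[Prediction] = []
    needs_review: List[Prediction] = []
    for p in predictions:
        if p.confidence >= review_threshold:
            provisional.append(p)
        else:
            needs_review.append(p)
    needs_review.sort(key=lambda p: p.confidence)
    return provisional, needs_review


# Hypothetical model outputs for three sherds.
PREDICTIONS = [
    Prediction("sherd_001", "type_a", 0.97),
    Prediction("sherd_002", "type_b", 0.62),
    Prediction("sherd_003", "type_a", 0.41),
]
PROVISIONAL, REVIEW_QUEUE = triage(PREDICTIONS)
```

Expert corrections collected from the review queue can then be fed back as new training examples, which is the iterative refinement step described above.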

LLM-assisted knowledge graph construction demonstrates genuine utility, but this utility is narrowly bounded. Graham’s application of GPT-3 achieved an accuracy “comparable to manual annotation,” a meaningful benchmark. However, “comparable accuracy” is not identical accuracy, and even small deviations in knowledge graph structure have cascading effects in downstream reasoning systems. More fundamentally, knowledge graphs capture explicit facts and relationships but struggle with tacit, context-dependent knowledge that experienced archaeologists invoke unconsciously. Similarly, research assistance tools excel at literature synthesis and code generation but reflect inherent limitations: generated hypotheses emerge from pattern-matching in training data, not a genuine understanding of archaeological problems. The Johannes role-playing experiment is intellectually interesting but operationally risky—presenting AI-generated first-person narratives risks obscuring the distinction between speculative fiction and historical reconstruction. These tools are most appropriately framed as “intellectual amplifiers” that extend human capacity for information processing and routine task automation, not as “thinking partners” that share disciplinary expertise. The distinction matters profoundly for institutional accountability and public trust in archaeological knowledge.

5. Challenges and Future Directions

Despite their growing potential, the widespread responsible application of LLMs/LMMs in archaeology faces multifaceted challenges. These encompass data characteristics, knowledge integration, technological limitations, and ethical considerations. Critically, these challenges position archaeology as a demanding domain for advancing AI.

5.1. Data Challenges: Archaeological Specificity Tests LMM Capabilities

Archaeological data’s unique characteristics severely test current LMMs. LMMs must fuse diverse data streams—including ancient texts, 2D images, 3D models, geospatial data, scientific analytical data, and field records—while navigating inherent challenges including fragmentation, heterogeneity, sparsity, context dependency, and bias.

Foremost are inherent fragmentation, incompleteness, and context dependency [

4,

36,

70]. Archaeological information, derived from material traces surviving complex taphonomic processes, results in datasets with systematic omissions and profound uncertainty. Unlike controlled experimental sciences where data can be replicated and validated through repeated observations, archaeological evidence is fundamentally non-replicable—excavation is destructive, and once a context is removed, it cannot be recreated. This epistemological reality means archaeological datasets inherently contain systematic gaps resulting from preservation biases (organic materials rarely survive, humble domestic structures erode more rapidly than monumental architecture), recovery biases (reflecting historical research priorities and excavation methodologies), and publication biases (spectacular finds are disproportionately reported). LMMs trained on such biased datasets may inadvertently learn and perpetuate these distortions, potentially reinforcing existing archaeological narratives rather than enabling critical re-evaluation. This “messiness” pushes AI research towards developing robust uncertainty quantification frameworks, probabilistic reasoning approaches, and the explicit modeling of data provenance and reliability—capabilities valuable not only for archaeology but for any domain dealing with incomplete, observational data under inherent constraints.

Poor data quality, standardization, and accessibility pose significant practical hurdles [

63,

85]. Despite decades of archaeological digitization efforts, vast quantities of knowledge remain locked in analogue formats (paper reports, photographic negatives, hand-drawn site plans) or exist only in poorly structured digital formats (unsearchable PDFs, proprietary database systems, isolated spreadsheets) with inconsistent terminologies, variable documentation standards, and limited metadata. This heterogeneity severely complicates the large-scale data aggregation efforts required for training robust LMMs. Furthermore, substantial portions of archaeological data remain inaccessible due to institutional barriers (museum collections databases with restricted access), linguistic barriers (reports published only in regional languages), and economic barriers (paywalled journal articles, expensive excavation monographs). Addressing these accessibility challenges requires coordinated efforts to develop shared data standards, invest in systematic digitization and metadata enrichment programs, promote open-access publishing, and establish data-sharing infrastructures that balance legitimate concerns about site protection and intellectual property with the scientific imperative for data transparency and reusability [

70].

Multimodal heterogeneity is a defining characteristic of archaeological evidence. Archaeological arguments integrate multiple information types including textual descriptions, 2D images, 3D models, GIS spatial data, and scientific analyses [

56,

57]. However, these diverse data often lack standardized linkages or common representation formats. This demands advanced LMM capabilities in crossmodal alignment and fusion.

Data scarcity and imbalance are pervasive challenges in archaeological AI. High-quality, large-scale, annotated datasets—especially multimodal or domain-specific ones—remain scarce [

17]. This stands in sharp contrast to domains like computer vision with massive benchmarks (e.g., ImageNet [

86]). This scarcity necessitates AI advancements in few-shot learning, transfer learning approaches, and bias mitigation strategies.

Inherent biases in training data require constant vigilance [

35]. Archaeological records inevitably carry their creators’ historical, social, and theoretical biases including colonial perspectives, gender biases, and Eurocentric frameworks. LLMs/LMMs can learn and potentially amplify these embedded biases [

3,

4,

19]. This makes archaeology a critical domain for developing and testing AI fairness tools.

Addressing these challenges requires coordinated efforts including investing in open, standardized, FAIR-compliant datasets [

70], improving data governance frameworks, and developing AI models robust to archaeological uncertainty (e.g., through few-shot learning approaches [

16]).

5.2. Knowledge Integration and Reasoning Challenges

Effectively integrating archaeology’s profound, nuanced, and often tacit specialized knowledge into LLMs/LMMs is a formidable task.

Archaeological knowledge complexity extends beyond specialized terminology and encompasses distinctive reasoning methodologies that differ fundamentally from common-sense reasoning or patterns prevalent in LLM training corpora [

3,

4,

68,

69]. Stratigraphic reasoning, for instance, requires an understanding of the Law of Superposition and complex formation processes including intrusions, truncations, and post-depositional disturbances. Seriation demands grasping temporal patterns in artifact style frequencies based on assumptions about gradual cultural change. The concept of chaîne opératoire involves reconstructing entire operational sequences of artifact manufacture from fragmentary material traces. These specialized analytical frameworks, developed through decades of disciplinary practice and often incorporating significant tacit knowledge that experienced archaeologists internalize through fieldwork training, are rarely explicitly documented in a textual form suitable for LLM learning. Consequently, general-purpose LLMs trained predominantly on web text and the general literature often lack this specialized depth, struggling not only with professional archaeological terminology (which could potentially be addressed through targeted fine-tuning) but more fundamentally with the contextual reasoning patterns, methodological assumptions, and interpretive logic that structure archaeological inference [

3,

10]. This gap between general AI capabilities and domain-specific archaeological expertise highlights the critical need for developing specialized “ArchaeoLMs” through systematic domain adaptation using carefully curated archaeological corpora, expert-validated training datasets, and potentially novel training objectives that explicitly model archaeological reasoning patterns.

The technical limitations of current LLM architectures constrain their reliable application in evidence-based archaeological research. “Hallucinations”—the generation of plausible-sounding but factually incorrect information—represent a fundamental flaw that is particularly problematic for disciplines demanding rigorous evidential standards [

7,

10]. In archaeological contexts, hallucinated information might include non-existent archaeological sites, fabricated artifact types, invented cultural sequences, or spurious bibliographic references, all presented with the same confident tone as factually accurate statements. While technical mitigation strategies including Retrieval-Augmented Generation (RAG), Retrieval-Augmented Fine-Tuning (RAFT) and knowledge graph integration offer promising approaches for grounding LLM outputs in curated factual knowledge [

27,

29,

30], these techniques reduce but do not eliminate hallucination risks. The “black box” nature of deep neural networks poses additional challenges for archaeological applications [

32,

33]. Archaeology, as a historical science making claims about past human societies based on fragmentary material evidence, has long-established norms demanding the explicit justification of interpretive inferences, the transparent documentation of reasoning chains, and a clear distinction between observational data and interpretive conclusions. The opacity of LLM decision-making processes—where outputs emerge from millions of learned parameters through mechanisms that are not fully understood even by their developers—conflicts with these disciplinary expectations for evidential transparency and interpretive accountability, creating barriers to archaeologists’ acceptance and the appropriate use of AI-generated insights.

Limitations in genuine reasoning persist; LLM reasoning can resemble pattern “recitation” over true logical understanding [

19,

73,

74]. Faced with novel problems or multi-step abductive reasoning, models may falter.

Overcoming these challenges requires deep archaeologist–AI researcher collaboration on domain-adaptive training, curated corpora, “ArchaeoLMs,” KG-LLM fusion, and robust human–AI collaborative frameworks. Rigorous, discipline-specific reliability verification and benchmarks are essential.

5.3. Ethical Considerations and Resource Barriers

Ethical and resource challenges pose significant barriers to responsible LLM/LMM deployment in archaeology. Cultural sensitivity demands particular attention: archaeological materials often hold profound significance for Indigenous and descendant communities, whose interpretative rights and traditional knowledge systems must be respected in AI system design [

3,

87,

88]. Data sovereignty issues are especially acute when training data includes culturally sensitive information or materials subject to repatriation claims [

4]. Moreover, security protocols must prevent AI-facilitated looting through the inadvertent disclosure of site locations or artifact values. Preventing AI misuse for fabricating archaeological evidence or generating false historical narratives requires robust verification systems and the clear documentation of AI involvement in knowledge production.

Resource constraints have historically limited AI accessibility, though recent technical advances—including PEFT methods [

23], efficient models like Phi-3-mini deployable on consumer hardware [

25], and low-code development platforms [

64]—are democratizing access. Nonetheless, substantial resource inequalities persist globally, necessitating deliberate efforts to ensure equitable AI benefits across archaeological communities regardless of institutional resources or geographic location.
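The resource savings from PEFT can be illustrated with LoRA, one widely used PEFT method. In its standard formulation, a frozen pretrained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ is adapted by adding a trainable low-rank product, so only the two small factors are updated. The layer dimensions and rank in the worked example are illustrative assumptions, not values taken from any cited archaeological model.

```latex
W' = W_0 + \frac{\alpha}{r} B A,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)

\frac{\text{trainable parameters}}{\text{full parameters}} = \frac{r\,(d + k)}{d\,k}
\quad\Rightarrow\quad
\frac{8 \cdot (4096 + 4096)}{4096 \cdot 4096} = \frac{65{,}536}{16{,}777{,}216} \approx 0.39\%
```

For a single 4096 by 4096 layer with rank 8, fewer than half a percent of the weights are trained, which is what makes fine-tuning feasible on modest hardware.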

6. Future Research Directions

While current LLM/LMM applications in archaeology demonstrate significant promise, realizing their full transformative potential requires coordinated research efforts addressing key challenges across technical, methodological, infrastructural, and ethical dimensions. Drawing on the analysis presented in the preceding sections, we identify the following critical priorities for advancing responsible, effective, and equitable AI integration in archaeological practice:

Data Infrastructure and Model Development: The archaeological community must prioritize building open, FAIR-compliant (Findable, Accessible, Interoperable, Reusable) multimodal datasets that adequately represent global archaeological diversity while respecting cultural sensitivities and intellectual property rights [63,70]. This requires sustained investment in systematic data curation, standardized metadata schemas, quality control protocols, and data-sharing platforms. Simultaneously, developing domain-adapted “ArchaeoLMs” (Archaeological Language Models) via cost-effective Parameter-Efficient Fine-Tuning (PEFT) techniques such as LoRA remains fundamental [20,23]. These specialized models should be trained on carefully curated archaeological corpora encompassing excavation reports, scholarly literature, and technical databases, with explicit attention to capturing disciplinary reasoning patterns and methodological frameworks. Critically, the community needs to establish shared benchmarks with archaeologically meaningful evaluation metrics (covering not only task accuracy but also interpretability, uncertainty quantification, bias detection, and robustness to noisy or incomplete data) to enable rigorous, comparable assessment of AI system performance [37]. Such benchmarks should be developed collaboratively by archaeologists and AI researchers to ensure they reflect genuine archaeological priorities rather than purely technical considerations.
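To make the cost argument for LoRA concrete, the following minimal sketch counts trainable parameters for a single weight matrix under full fine-tuning and under a LoRA update of the form W + BA, where B is d_out × r and A is r × d_in. The layer size (4096 × 4096) and rank (8) are illustrative assumptions, not values taken from the reviewed studies, and parameter count is only one component of overall training cost.

```python
def lora_param_counts(d_in: int, d_out: int, rank: int) -> tuple[int, int]:
    """Return (full, lora) trainable-parameter counts for one weight matrix.

    full: every entry of the d_out x d_in matrix is updated.
    lora: only the low-rank factors B (d_out x rank) and A (rank x d_in)
          are trained, while the original matrix stays frozen.
    """
    if min(d_in, d_out, rank) <= 0:
        raise ValueError("dimensions and rank must be positive")
    full = d_in * d_out
    lora = rank * (d_in + d_out)
    return full, lora


def trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Share of the matrix's parameters that LoRA actually trains."""
    full, lora = lora_param_counts(d_in, d_out, rank)
    return lora / full


if __name__ == "__main__":
    # Hypothetical layer size and rank, chosen only for illustration.
    full, lora = lora_param_counts(4096, 4096, 8)
    print(f"full fine-tuning: {full:,} trainable parameters")
    print(f"LoRA (r=8):       {lora:,} trainable parameters")
    print(f"trainable share:  {trainable_fraction(4096, 4096, 8):.4%}")
```

Under these assumptions the low-rank update trains well under 1% of the matrix's parameters. Actual savings in memory and compute depend on which layers are adapted, the optimizer, and the hardware.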

Technical Integration and Methodological Innovation: Several priority areas demand focused research attention to translate technical capabilities into practical archaeological tools. Advanced multimodal fusion architectures capable of jointly processing and reasoning across text, images, 3D models, and structured data represent crucial infrastructure for material culture analysis [42,67]. Deeper integration of knowledge graphs with LLMs through frameworks like LPKG and RAFT offers pathways for enhanced reasoning, factual grounding, and reduced hallucinations [29,30]. Developing GIS-LMM integrated systems for spatial archaeological analysis, building on pioneering efforts like Harvard’s natural language GIS interfaces, could democratize sophisticated landscape analysis capabilities [65]. Crucially, effective Human-in-the-Loop (HITL) collaborative frameworks must be designed to appropriately balance AI assistance with essential expert oversight, ensuring that AI systems genuinely augment rather than replace archaeological expertise [82,83]. These frameworks should explicitly model the complementary strengths of human archaeologists (contextual understanding, tacit knowledge, interpretive creativity, ethical judgment) and AI systems (pattern recognition, information synthesis, computational scalability), creating synergistic partnerships that exceed the capabilities of either alone.
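One simple way to picture the HITL balance described above is confidence-based triage, in which low-confidence model outputs are routed to an expert while the rest are provisionally accepted. The sketch below is illustrative only: the `Prediction` record, the `triage` function, the example labels, and the 0.8 threshold are all hypothetical and are not drawn from any of the reviewed systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    item_id: str       # hypothetical identifier of an artifact or text fragment
    label: str         # the model's proposed classification or reading
    confidence: float  # model-reported score in [0, 1]


def triage(predictions, review_threshold=0.8):
    """Split predictions into (provisionally_accepted, needs_expert_review).

    Anything scoring below `review_threshold` goes to a human expert.
    The threshold is an assumed value that would need to be tuned per task.
    """
    accepted, review = [], []
    for p in predictions:
        if p.confidence < review_threshold:
            review.append(p)
        else:
            accepted.append(p)
    return accepted, review


if __name__ == "__main__":
    batch = [
        Prediction("sherd-001", "amphora rim", 0.95),
        Prediction("sherd-002", "cooking pot", 0.55),
        Prediction("sherd-003", "amphora handle", 0.80),
    ]
    accepted, review = triage(batch)
    print("provisionally accepted:", [p.item_id for p in accepted])
    print("sent to expert review: ", [p.item_id for p in review])
```

A threshold rule of this kind only helps if the model's confidence scores are reasonably calibrated. In practice, provisionally accepted items would still be marked as AI-generated and sampled for expert audit.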

Large-Scale Benchmark Development: While TimeTravel and the Vesuvius Challenge represent significant progress, the archaeological community needs expanded, specialized benchmarks addressing under-represented domains. Priority initiatives should include the following: (1) a “Multi-Script Paleographic Challenge” evaluating text restoration across 10+ ancient writing systems with region-appropriate difficulty levels; (2) a “Global Artifact Typology Benchmark” providing standardized, ethically sourced datasets with expert consensus labels spanning diverse cultural areas including under-represented non-Western traditions; (3) a “Multilingual Excavation Report Corpus” enabling the evaluation of knowledge extraction and translation across diverse languages and report formats. These benchmarks should embed interpretability and uncertainty quantification metrics alongside accuracy measures, and include interdisciplinary evaluation involving both AI researchers and disciplinary archaeologists to ensure metrics reflect genuine archaeological relevance.
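As an illustration of pairing an accuracy measure with an uncertainty measure, the sketch below computes character error rate (CER) as Levenshtein edit distance divided by reference length, and expected calibration error (ECE) with equal-width confidence bins. Both are standard definitions, but the function names, bin count, and toy inputs are assumptions made for this example and do not reflect the scoring code of any existing benchmark.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(hypothesis) + 1))
    for i, r in enumerate(reference, 1):
        curr = [i]
        for j, h in enumerate(hypothesis, 1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """CER = edit distance / number of characters in the reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return edit_distance(reference, hypothesis) / len(reference)


def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted mean gap between accuracy and confidence across bins."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and of equal length")
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 joins the top bin
        bins[idx].append((c, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(accuracy - mean_conf)
    return ece


if __name__ == "__main__":
    # Toy strings and scores, for illustration only.
    print(character_error_rate("kitten", "sitting"))  # 3 edits / 6 chars = 0.5
    print(expected_calibration_error([0.9, 0.9, 0.6, 0.6],
                                     [True, True, True, False]))
```

Reporting the two together shows whether a model that restores text accurately also knows when it is likely to be wrong, which matters when outputs feed into scholarly interpretation.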

Responsible AI Principles and Ethical Frameworks: Archaeological AI applications demand exceptional attention to ethical considerations given the cultural significance and political sensitivity of archaeological heritage [3,4,89]. Community-developed ethical guidelines must address domain-specific challenges including data sovereignty (particularly for Indigenous and descendant communities), cultural sensitivity in AI-generated interpretations, mitigation of historical biases embedded in training data, prevention of AI misuse for heritage destruction or looting, and equitable benefit-sharing from AI-enabled archaeological knowledge. Implementation requires concrete mechanisms: mandatory ethics review protocols for archaeological AI projects, diverse stakeholder participation in AI system design, transparent documentation of data sources and model limitations, and accountable governance structures. Furthermore, fostering equitable access necessitates open sharing of AI tools, datasets, and trained models (where culturally appropriate), the development of multilingual interfaces and documentation, and investment in cross-disciplinary training programs that build AI literacy among archaeologists and archaeological domain knowledge among AI researchers, particularly in regions with limited computational infrastructure.

Community-Driven ArchaeoLM Development Initiatives: Rather than relying primarily on Western AI companies’ models, the international archaeological community should establish collaborative, open-source initiatives to develop shared “ArchaeoLMs” through community contribution. Models like Phi-3-mini (3.8B parameters) are small enough for distributed training and inference on consumer hardware. Initiatives could include the following: (1) a shared, rigorously curated and ethically sourced archaeological corpus of 10B+ tokens (excavation reports, published monographs, grey literature); (2) periodic collaborative fine-tuning events where researchers contribute domain expertise and labeled data; (3) open-access model checkpoints released under appropriate licensing; (4) region-specific variants addressing linguistic and cultural diversity (e.g., Arabic-language archaeology, Chinese-language archaeology, Indigenous language considerations). Success requires deliberate attention to preventing knowledge concentration in wealthy institutions and ensuring that benefits are distributed across the global archaeological community.

7. Conclusions

Large Language and Multimodal Models are demonstrably transforming archaeological practice. This review systematically examined 89 post-2023 applications, documenting concrete achievements: ancient Greek text restoration at 22.5% CER (LoRA-tuned LLaMA), automated knowledge graph construction matching manual quality (GPT-3 + domain adaptation), and multimodal artifact digitization at 35.4% mAP. These demonstrate that domain-adapted LLMs/LMMs address previously intractable bottlenecks.

This review clarifies how resource-constrained institutions can adopt LLM technologies. Parameter-Efficient Fine-Tuning (e.g., LoRA) reduces costs by 90–99% compared with full fine-tuning. Small Language Models (Phi-3-mini: 3.8B parameters) achieve competitive performance on consumer hardware. Retrieval-Augmented Generation (RAG/RAFT) provides factual grounding without massive datasets. We distinguished LLM-specific challenges (hallucination, context limits) from generic ML issues, providing guidance for appropriate AI tool selection.

Archaeology’s data characteristics—multimodal heterogeneity, sparsity, uncertainty, context dependency—position it as an invaluable testing domain. Open benchmarks (TimeTravel: 10,250 expert-verified pairs; Vesuvius Challenge: 2500+ competing teams) demonstrate how archaeological problems drive genuine AI innovation in multimodal reasoning, few-shot learning, and knowledge grounding.

However, substantial challenges remain unresolved. Current applications function largely as isolated experimental tools. Data infrastructure limitations and proprietary barriers restrict large-scale deployment. Ethical frameworks for Indigenous data sovereignty require concrete implementation. Most critically, archaeological AI research is concentrated in well-resourced Western institutions, which risks deepening knowledge inequality.

Realizing the transformative potential requires three coordinated priorities: (1) expanding open, FAIR-compliant, culturally respectful multimodal datasets with archaeologically meaningful benchmarks across under-represented regions and writing systems; (2) advancing technical integration through GIS-LMM systems, KG-LLM fusion, and robust Human-in-the-Loop (HITL) frameworks with explicit attention to how AI systems complement rather than replace archaeological expertise; (3) implementing concrete ethical mechanisms with mandatory review protocols, diverse stakeholder participation, and equitable resource distribution across the global archaeological community.

Success demands sustained collaboration between domain-expert archaeologists and AI researchers, ensuring that technologies genuinely augment archaeological reasoning while maintaining disciplinary standards of evidential rigor, interpretive transparency, and cultural responsibility. The international archaeological community must move beyond the reactive adoption of external AI systems toward the deliberate, collaborative development of shared “ArchaeoLMs” and ethical governance frameworks that embed archaeological values from inception. Archaeology’s unique epistemic demands—dealing with fragmentary evidence, multiple interpretative possibilities, and profound responsibilities to descendant communities—offer crucial lessons for developing more robust, interpretable, and ethically grounded AI systems applicable far beyond the discipline itself.