1. Introduction

Electronic Health Records (EHRs) [1] have emerged as a transformative resource in modern healthcare, containing vast, longitudinal data that chronicle complete patient journeys. Even advanced structured query methods, such as those used in graph databases, struggle to capture the complex temporal and relational logic inherent in a patient’s clinical journey. Moreover, a seemingly straightforward approach to leveraging unstructured data is to perform keyword searches within the clinical notes. However, this method is notoriously brittle and unreliable. It fails to comprehend clinical context, such as negation (“patient denies chest pain”), speculation (“rule out myocardial infarction”), family history (“father had diabetes”), or semantic similarity (failing to recognize that “heart attack” and “myocardial infarction” refer to the same concept). This leads to high rates of both false positives and false negatives, rendering simple keyword-based retrieval clinically unusable.
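The brittleness of keyword retrieval can be illustrated with a minimal sketch. The three notes and the helper `keyword_search` below are hypothetical and exist only for illustration; they are not part of the proposed framework.

```python
# Toy notes (invented for illustration): one true case, one negation, one family history.
notes = {
    "p1": "Patient admitted with acute myocardial infarction.",
    "p2": "Patient denies chest pain. No history of heart attack.",
    "p3": "Father had a heart attack at age 60.",
}


def keyword_search(notes, keyword):
    """Return the IDs of notes that contain the keyword (case-insensitive substring match)."""
    keyword = keyword.lower()
    return sorted(pid for pid, text in notes.items() if keyword in text.lower())


hits = keyword_search(notes, "heart attack")
print(hits)
```

In this toy example the search returns the negated mention (p2) and the family-history mention (p3), while missing the only patient who actually had the event (p1), because that note uses the synonym “myocardial infarction”.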

To overcome these limitations, a more powerful paradigm has emerged: patient similarity analysis. Instead of retrieving patients based on rigid, predefined rules, this approach aims to identify a cohort of patients who are holistically “like” a given index patient. This moves the task from rule-based retrieval to a more flexible and context-aware form of discovery. The ability to find a clinically coherent group of similar patients is a cornerstone of personalized medicine. By analyzing the treatment trajectories and outcomes of a similar cohort, clinicians can make more informed predictions about the prognosis of a new patient and select the most appropriate therapeutic interventions. This data-driven, case-based reasoning at scale promises to enhance the estimation of treatment efficacy and facilitate more precise patient matching for a variety of clinical applications.

This paper proposes a novel, end-to-end framework that operationalizes patient similarity analysis at an unprecedented scale. The framework’s power derives from the synergy of two key technological components:

Deep Medical Embeddings: We employ advanced deep learning models to transform the entirety of a patient’s multi-modal EHR data—including both structured codes and unstructured narratives—into a single, high-dimensional vector representation, or “embedding.” This dense vector serves as a mathematical fingerprint that captures the patient’s comprehensive clinical state in a semantically rich and nuanced manner.

Approximate Nearest Neighbor Search (ANNS): We utilize specialized indexing data structures to enable searching through databases of millions of these high-dimensional patient vectors in milliseconds. ANNS algorithms overcome the “curse of dimensionality,” a phenomenon that renders traditional, exact search methods computationally impractical in high-dimensional spaces.

This technological stack is not an arbitrary collection of tools but rather a necessary and causally linked chain of solutions designed to address a fundamental challenge in medical informatics. The high clinical value of unstructured notes 4 necessitates the use of advanced Natural Language Processing (NLP) models like ClinicalBERT to interpret their complex language. These models, in turn, produce the high-dimensional vector embeddings that capture this rich meaning. The very high dimensionality of these vectors then makes an exhaustive search computationally infeasible, which directly necessitates the use of ANNS algorithms like HNSW to make the search process practical and scalable. This framework, therefore, represents a complete, reasoned response to the challenge of extracting actionable intelligence from the most valuable and complex component of the EHR. By combining these technologies, our framework enables queries based on deep semantic meaning rather than superficial keyword or code matches, paving the way for faster, more accurate, and more clinically relevant patient cohort discovery.

The remainder of this paper is organized as follows. Section 2 provides a review of the background and related work, covering the structure of EHR data, the evolution of medical embeddings, and the principles of scalable similarity search with ANNS algorithms. Section 3 details our proposed end-to-end framework, from data ingestion and preprocessing to the generation of multi-modal patient embeddings using a gated fusion mechanism and their subsequent indexing. Section 4 outlines the comprehensive experimental evaluation, including the datasets, cohort definitions, and performance metrics used. Moreover, Section 4 presents the results of our experiments, offering a comparative analysis of retrieval accuracy and the framework’s performance. Section 5 discusses the clinical implications of our findings and explores real-world application scenarios, while also addressing the limitations of our study and directions for future work. Finally, Section 6 concludes the paper with a summary of our contributions and their transformative potential for precision medicine.

2. Background and Related Work

This section reviews the foundational technologies and concepts that underpin our proposed framework for patient similarity analysis.

2.1. The Anatomy of EHR Data

To build a comprehensive representation of a patient, it is essential to understand the distinct yet complementary nature of the data modalities within an EHR. There are two main categories: structured data and unstructured data.

The category of structured data includes all data stored in discrete, predefined fields, often using standardized coding systems. Key examples include demographic information (age, gender), diagnosis codes from the International Classification of Diseases (ICD), procedure codes from the Current Procedural Terminology (CPT), medication codes like RxNorm, and laboratory test results identified by Logical Observation Identifiers Names and Codes (LOINC). While this data is readily machine-readable and useful for broad-stroke analysis, it often lacks the granular detail and clinical context necessary for deep phenotyping. Information can be incomplete, recorded for administrative purposes, or fail to capture the evolving narrative of a patient’s condition.

The modality of unstructured data consists primarily of free-text clinical narratives generated by healthcare providers. It encompasses a wide range of document types, such as physician’s daily progress notes, admission and discharge summaries, nursing assessments, radiology interpretations, and pathology reports. This textual data is exceptionally rich in detail, capturing the nuanced diagnostic reasoning of clinicians, the patient’s history as told in their own words, responses to treatments, and subtle observations that are often missed by structured data fields alone. Studies have consistently shown that unstructured data provides a more comprehensive and, in many cases, more accurate picture of a patient’s health status than structured data can offer on its own.

2.2. Medical Embeddings

The core of modern patient similarity analysis lies in the ability to convert these disparate data types into a unified mathematical format. This is achieved through “embeddings,” which are dense vector representations of medical concepts.

The first wave of medical embeddings adapted techniques from general NLP, such as Word2Vec [2] and GloVe [3], to learn vector representations for medical codes and terms from large clinical corpora. These methods are based on the distributional hypothesis: words or concepts that appear in similar contexts tend to have similar meanings. This allows the models to learn powerful semantic relationships; for example, the vector for “systolic pressure” will be located close to the vector for “diastolic pressure” in the embedding space. However, these models produce a single, static vector for each word, regardless of its context. This is a significant limitation, as they cannot disambiguate between different meanings of the same word (e.g., distinguishing “cold” as a viral infection from “cold” as a temperature).

The introduction of the transformer architecture, and specifically the Bidirectional Encoder Representations from Transformers (BERT) model [4,5], represented a monumental shift in NLP. The key innovation of BERT is its ability to generate contextualized embeddings. By processing the entire input sentence at once using a self-attention mechanism, BERT produces a unique vector for each word that is dynamically informed by its surrounding context. This allows the model to capture the precise nuance of a word’s meaning in a specific sentence, overcoming the primary limitation of static embedding models.

While the transformer architecture is powerful, its effectiveness is highly dependent on the data it is trained on. For specialized domains like medicine, general-purpose models are often inadequate. This has led to the development of a series of progressively more specialized models.

To address this gap, BioBERT [6] was developed. It takes the original BERT model and continues the pre-training process on a vast corpus of biomedical literature, including millions of PubMed abstracts and PMC full-text articles. This domain-specific training endows BioBERT with a deep understanding of biomedical terminology and the formal language of scientific research. Consequently, it achieves substantial performance improvements over the general BERT model on a variety of biomedical text mining tasks, such as Named Entity Recognition (NER) and relation extraction.

While BioBERT excels at processing scientific literature, the language used in clinical practice, as documented in EHR notes, is markedly different. Clinical text is characterized by heavy use of jargon, non-standard abbreviations, telegraphic phrasing, and unique syntactic structures. To capture these nuances, ClinicalBERT [7] was created. It builds upon either BERT or BioBERT by incorporating a final stage of pre-training on a large corpus of real-world clinical notes, most notably from the MIMIC-III dataset. This final specialization makes ClinicalBERT the state-of-the-art model for processing EHR text. It is better able to understand the language of clinicians, and studies have shown that its embeddings of medical concepts correlate more accurately with physician judgments of similarity than those from older models like Word2Vec and FastText [8,9].

2.3. Scalable Similarity Search with ANNS

The patient embeddings produced by models like ClinicalBERT are high-dimensional, typically having 768 dimensions or more depending on the model used. Performing an exact k-Nearest Neighbor (k-NN) search in this space is computationally expensive. An exact search requires calculating the distance from the query vector to every other vector in the database, a process whose time complexity is linear in the database size, $O(N)$. For a database of millions of patients, this per-query cost grows with every added record and becomes too high for real-time applications.
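The linear scan described above can be sketched in a few lines. This is a dependency-free illustration, not the implementation used in our experiments; the choice of cosine distance, the helper names, and the three-dimensional toy vectors are assumptions made for readability.

```python
import heapq
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def exact_knn(query, database, k):
    """Exact k-NN: one distance computation per stored vector, i.e. O(N) per query."""
    scored = ((cosine_distance(query, vec), pid) for pid, vec in database.items())
    return [pid for _, pid in heapq.nsmallest(k, scored)]


# Toy 3-dimensional "patient embeddings" (real ones would have hundreds of dimensions).
database = {
    "patient_a": [1.0, 0.0, 0.0],
    "patient_b": [0.9, 0.1, 0.0],
    "patient_c": [0.0, 1.0, 0.0],
    "patient_d": [0.0, 0.0, 1.0],
}
nearest = exact_knn([1.0, 0.05, 0.0], database, k=2)
print(nearest)
```

Every query touches every stored vector, which is exactly the cost that approximate indexes are designed to avoid.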

This challenge is exacerbated by the “curse of dimensionality,” a phenomenon where, in high-dimensional spaces, the concept of distance becomes less meaningful as the distances between most pairs of points tend to become almost equal. This makes it difficult to distinguish the “nearest” neighbor from any other point, rendering traditional spatial indexing methods like k-d trees ineffective.
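The concentration of distances can be observed empirically with a small simulation. The sketch below is only an illustration under simplifying assumptions (points drawn uniformly from the unit hypercube, Euclidean distance, a hypothetical helper `relative_contrast`); real patient embeddings are not uniformly distributed, so the effect is typically less extreme in practice.

```python
import math
import random


def relative_contrast(dim, n_points=200, seed=0):
    """(d_max - d_min) / d_min over distances from one random query to random points."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)


low_dim_contrast = relative_contrast(dim=2)
high_dim_contrast = relative_contrast(dim=768)
print(low_dim_contrast, high_dim_contrast)
```

In two dimensions the farthest point is many times farther away than the nearest one, whereas in 768 dimensions the nearest and farthest points differ by only a small fraction of the distance.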

Approximate Nearest Neighbor Search (ANNS) algorithms are the essential solution to this problem. They achieve massive speedups by trading a negligible amount of accuracy for logarithmic or near-logarithmic search complexity. While several families of ANNS algorithms exist—including tree-based, hashing-based, and graph-based methods—graph-based approaches have become the de facto standard for their superior performance in terms of speed and recall.

Among these, the Hierarchical Navigable Small World (HNSW) [10] algorithm stands out as a state-of-the-art method. HNSW organizes the high-dimensional vector data into a sophisticated multi-layered graph structure, drawing inspiration from two key concepts: navigable small world networks and probabilistic skip lists. The search process leverages this hierarchy for a highly efficient coarse-to-fine search. A query begins at an entry point in the sparse top layer. The algorithm greedily traverses this layer to find the point closest to the query. This point then serves as the entry point to the next, denser layer below. This process is repeated, “zooming in” on the target region at each level, until the algorithm performs its final, fine-grained search on the dense bottom layer. This hierarchical navigation allows HNSW to achieve logarithmic search complexity ($O(\log N)$) by efficiently eliminating large regions of the search space that are unlikely to contain the nearest neighbors.
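The coarse-to-fine descent can be sketched on a toy example. The code below is a deliberately simplified illustration and not the HNSW algorithm itself: it uses one-dimensional points, hand-built layers, and a single greedy candidate per layer, whereas real HNSW assigns layers randomly, selects neighbors heuristically, and keeps a candidate list during search. All names are hypothetical.

```python
def greedy_search_layer(points, neighbors, entry, query):
    """Greedy walk: keep moving to a neighbor that is closer to the query until none is."""
    current = entry
    improved = True
    while improved:
        improved = False
        for candidate in neighbors.get(current, []):
            if abs(points[candidate] - query) < abs(points[current] - query):
                current = candidate
                improved = True
    return current


def hierarchical_search(points, layers, entry, query):
    """Descend from the sparsest layer to the densest, reusing each result as the next entry."""
    current = entry
    for neighbors in layers:  # ordered top (sparse) -> bottom (dense)
        current = greedy_search_layer(points, neighbors, current, query)
    return current


def chain(nodes):
    """Link consecutive nodes bidirectionally (a stand-in for real neighbor selection)."""
    links = {n: [] for n in nodes}
    for a, b in zip(nodes, nodes[1:]):
        links[a].append(b)
        links[b].append(a)
    return links


points = {i: float(i) for i in range(16)}
layers = [chain([0, 8, 15]), chain([0, 4, 8, 12, 15]), chain(list(range(16)))]
result = hierarchical_search(points, layers, entry=0, query=10.2)
print(result)
```

The top layer jumps from node 0 to node 8 in one hop, the middle layer refines this to node 12, and the bottom layer finishes at node 10, so only a handful of nodes are visited instead of all sixteen.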

Beyond graph-based methods like HNSW, several other families of ANN search algorithms exist, each with a distinct approach to balancing speed and accuracy.

The partitioning methods follow a “divide and conquer” strategy. A foundational example is the Inverted File (IVF) [11] index, which partitions the vector space into clusters (Voronoi cells) using k-means. A search is then restricted to a small number of these clusters, significantly reducing the number of comparisons needed. A more advanced hybrid system, ScaNN [12,13], refines this approach by first partitioning the data and then using a specialized form of product quantization (anisotropic vector quantization) to compute approximate distances within the selected partitions. The top candidates from this fast, approximate phase are then re-ranked using their full-precision vectors to improve final accuracy.
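The IVF idea of searching only a few partitions can be sketched as follows. This is a minimal illustration with assumptions stated up front: the centroids are fixed by hand rather than learned with k-means, the vectors are two-dimensional, and the function names are hypothetical. The parameter name `nprobe` follows common usage for the number of partitions visited.

```python
import math


def nearest_centroids(vec, centroids, n):
    """Indices of the n centroids closest to vec."""
    order = sorted(range(len(centroids)), key=lambda c: math.dist(vec, centroids[c]))
    return order[:n]


def build_ivf(vectors, centroids):
    """Assign each vector to the inverted list of its nearest centroid."""
    lists = {c: [] for c in range(len(centroids))}
    for vid, vec in vectors.items():
        lists[nearest_centroids(vec, centroids, 1)[0]].append(vid)
    return lists


def ivf_search(query, vectors, centroids, lists, k, nprobe):
    """Exhaustively search only the nprobe partitions closest to the query."""
    candidates = [vid for c in nearest_centroids(query, centroids, nprobe) for vid in lists[c]]
    candidates.sort(key=lambda vid: math.dist(query, vectors[vid]))
    return candidates[:k]


centroids = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]  # assumed output of a k-means step
vectors = {
    "a": [0.5, 0.2], "b": [4.9, 0.0], "c": [5.2, 0.1],
    "d": [9.5, 0.3], "e": [0.1, 9.8],
}
lists = build_ivf(vectors, centroids)
query = [5.1, 0.0]
one_probe = ivf_search(query, vectors, centroids, lists, k=2, nprobe=1)
two_probes = ivf_search(query, vectors, centroids, lists, k=2, nprobe=2)
print(one_probe, two_probes)
```

With a single probed partition, the search misses vector “b”, a true neighbor that sits just across a cell boundary; probing two partitions recovers it at the cost of more distance computations.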

The tree-based algorithms, like ANNOY (https://github.com/spotify/annoy, accessed on 15 September 2025), build a forest of multiple binary search trees. Each tree is constructed by recursively partitioning the vector space using random hyperplanes. A search query traverses all trees simultaneously, using a priority queue to efficiently explore the most promising branches first.

Locality-Sensitive Hashing [14] is one of the earliest ANN techniques. It relies on hash functions designed so that similar data points are likely to “collide” into the same hash bucket. For cosine similarity, this is often achieved by using random hyperplanes to define the hash function. Multiple hash tables are constructed, and a query involves hashing the query vector and then performing an exhaustive search only on the smaller set of candidate points found in the resulting buckets.
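Random-hyperplane hashing for cosine similarity can be sketched as below. This is an illustrative, single-table version with hypothetical helper names and toy four-dimensional vectors; a practical system would build several tables with independent hyperplanes to raise the chance that true neighbors collide.

```python
import random


def make_hyperplanes(n_bits, dim, seed=0):
    """Draw n_bits random hyperplane normals with Gaussian components."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def lsh_signature(vec, hyperplanes):
    """One bit per hyperplane: 1 if the vector lies on its non-negative side, else 0."""
    return tuple(
        int(sum(v * h for v, h in zip(vec, plane)) >= 0) for plane in hyperplanes
    )


def build_table(vectors, hyperplanes):
    """Group vector IDs into buckets keyed by their bit signature."""
    table = {}
    for vid, vec in vectors.items():
        table.setdefault(lsh_signature(vec, hyperplanes), []).append(vid)
    return table


hyperplanes = make_hyperplanes(n_bits=8, dim=4)
vectors = {"p1": [0.3, -1.2, 0.7, 2.0], "p2": [-0.3, 1.2, -0.7, -2.0]}
table = build_table(vectors, hyperplanes)

# A query pointing in the same direction as p1 (different length) lands in p1's bucket.
query = [0.6, -2.4, 1.4, 4.0]
candidates = table.get(lsh_signature(query, hyperplanes), [])
print(candidates)
```

Because each bit depends only on the sign of a dot product, the signature is unaffected by vector length, which is why this family of hash functions suits cosine similarity.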

3. The Proposed Framework: An End-to-End Pipeline

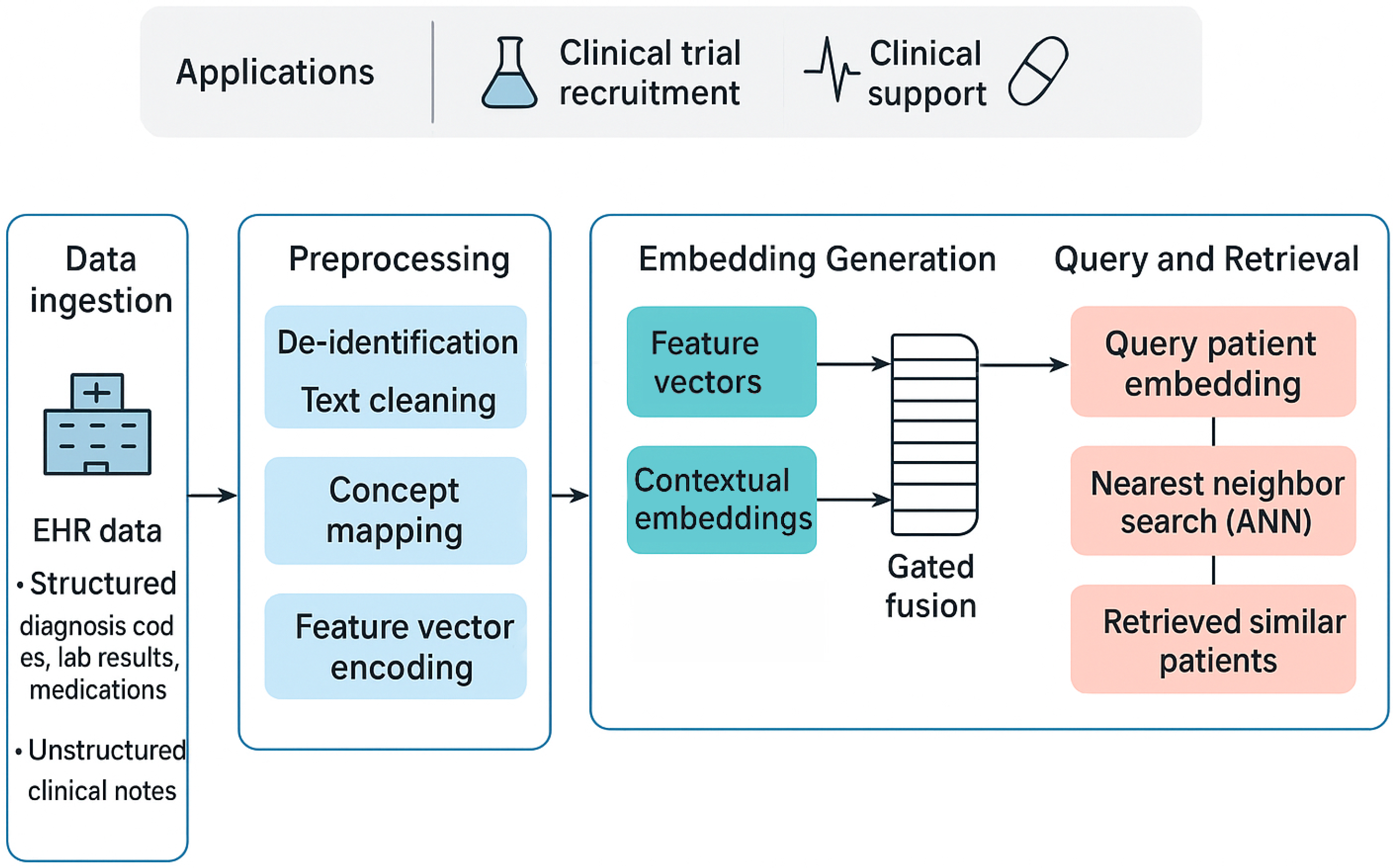

The proposed framework provides a complete, end-to-end pipeline for transforming raw, multi-modal EHR data into an indexed, searchable database of high-fidelity patient embeddings. This process can be broken down into three major stages: data ingestion and preprocessing, multi-modal embedding generation, and scalable similarity search. The quality of the final patient representation is fundamentally dependent on the rigor of the initial data preparation, making this first stage critical for ensuring the model receives clean, standardized, and meaningful input. An overview of this three-stage process is depicted in Figure 1. Its main components are described in detail in the following sections.

3.1. Data Ingestion and Preprocessing

This foundational stage involves sourcing the raw data and meticulously preparing each modality—unstructured and structured—for the subsequent embedding process.

The goal of preprocessing unstructured clinical notes is to convert raw clinical text into a clean, tokenized format suitable for a transformer model. This implementation-focused phase includes:

De-identification: The first step in a real-world application is the programmatic removal of all Protected Health Information (PHI) to comply with privacy regulations like HIPAA.

Text Cleaning and Normalization: This is achieved using a series of regular expressions to remove common EHR artifacts (e.g., timestamps, hospital codes), excessive whitespace, and special characters. The text is converted to a consistent format, typically lowercase, and a curated medical dictionary is used to expand common clinical abbreviations (e.g., “CAD” to “coronary artery disease”).

Tokenization: The final step is to use the specific tokenizer associated with the chosen language model (e.g., the ClinicalBERT tokenizer). These tokenizers, available through libraries like Hugging Face’s ‘transformers’, break text into subword units that are optimal for the model’s vocabulary, allowing it to handle vast and specialized medical terminology by representing rare words as sequences of more common subword tokens.
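The cleaning and tokenization steps above can be sketched as follows. This is a minimal illustration, not our production pipeline: the regular expressions, the two-entry abbreviation dictionary, and the five-entry subword vocabulary are hypothetical, and the `wordpiece` function only imitates the greedy longest-match behaviour of BERT-style tokenizers. In practice the tokenizer shipped with the chosen model would be used.

```python
import re

# Illustrative dictionary; a curated medical dictionary would be far larger.
ABBREVIATIONS = {"cad": "coronary artery disease", "htn": "hypertension"}
ABBREVIATION_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, ABBREVIATIONS)) + r")\b"
)


def clean_note(text):
    """Lowercase, strip common artifacts, expand abbreviations, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"\[\*\*.*?\*\*\]", " ", text)                # MIMIC-style placeholders
    text = re.sub(r"\b\d{1,2}:\d{2}(?::\d{2})?\b", " ", text)   # clock timestamps
    text = re.sub(r"[^a-z0-9\s.,/%-]", " ", text)               # other special characters
    text = ABBREVIATION_PATTERN.sub(lambda m: ABBREVIATIONS[m.group(1)], text)
    return re.sub(r"\s+", " ", text).strip()


def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first subword split; continuation pieces carry a '##' prefix."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces


cleaned = clean_note("14:30 Pt has CAD & HTN. [**Hospital1 18**] Stable!!")
toy_vocab = {"cardio", "##my", "##opathy", "##o", "patient"}
subwords = wordpiece("cardiomyopathy", toy_vocab)
print(cleaned)
print(subwords)
```

The rare term “cardiomyopathy” is not in the toy vocabulary as a whole word, yet it is still represented as a sequence of known subword units rather than being mapped to an unknown token.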

Preprocessing Structured EHR Data involves standardizing and structuring the various coded and numerical data points, which includes:

Feature Engineering: The longitudinal structured data for each patient is transformed into a single feature vector. This can involve creating binary indicators for the presence or absence of specific diagnoses or procedures over the patient’s history. For continuous data like lab values, aggregation functions (e.g., mean, max, min over a time window) are used to create summary features.
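A minimal sketch of this feature engineering step is shown below. The code identifiers, the three-entry code vocabulary, and the glucose values are hypothetical examples, and the helper names are assumptions made for illustration.

```python
from statistics import mean


def binary_indicators(patient_codes, code_vocabulary):
    """1 if the code ever appears in the patient's history, else 0 (one slot per code)."""
    present = set(patient_codes)
    return [1 if code in present else 0 for code in code_vocabulary]


def summarize_lab(values):
    """Aggregate a series of lab measurements into summary features."""
    return {"mean": mean(values), "max": max(values), "min": min(values)}


# Hypothetical vocabulary and patient history, for illustration only.
code_vocabulary = ["ICD9:4280", "ICD9:25000", "RXNORM:6809"]
patient_codes = ["ICD9:25000", "RXNORM:6809", "ICD9:25000"]

indicators = binary_indicators(patient_codes, code_vocabulary)
glucose = summarize_lab([110.0, 145.0, 180.0])
feature_vector = indicators + [glucose["mean"], glucose["max"], glucose["min"]]
print(feature_vector)
```

Repeated codes collapse into a single indicator, so the vector records whether a diagnosis or medication ever occurred rather than how often.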

3.2. Generating Patient Embeddings

Once the data is preprocessed, it is fed into a deep learning architecture that generates a single, comprehensive patient embedding by fusing the generated modalities. This model is implemented using the PyTorch (version 2.9) deep learning framework. The following steps are followed:

Encoding Unstructured Data: The preprocessed clinical notes for a patient are passed as input to a pre-trained BERT model (e.g., ‘emilyalsentzer/Bio_ClinicalBERT’ loaded via the ‘transformers’ library). The model’s transformer architecture generates contextualized vector representations for each token. To create a single vector that summarizes the entire collection of a patient’s notes, the final hidden state corresponding to the special “[CLS]” token is used. This token is designed to act as an aggregate representation of the full text’s meaning. These embeddings typically have 768 dimensions.

Late Fusion for a Unified Patient Embedding: To create a holistic patient view that intelligently weighs the importance of each modality, a gated fusion mechanism is used instead of a simple concatenation of the dense and feature vectors. Both vectors that correspond to a patient are fed into a fusion module, such as a Multi-Modal Adaptation Gate (MAG). This gate, a small neural network, learns to compute a set of weights based on the content of both input vectors. These weights dynamically control the flow of information from each modality, allowing the model to adaptively emphasize the clinical notes or the structured codes depending on the specific patient’s context, while also handling potential redundancy. The output of this fusion step is the final patient embedding—a single, unified, and fully dense vector that mathematically represents the patient’s overall clinical state and is ready for indexing.

The architecture consists of three main components: modality-specific encoders, a gated fusion layer, and a prediction head for training. The modality-specific encoders process each data type to create dense, fixed-size representations. Consider a pair consisting of a feature vector and a dense embedding that correspond to the same patient. The high-dimensional, sparse binary vector representing that patient’s medical codes is first passed through an embedding layer. This layer acts as a lookup table that projects the sparse input into a lower-dimensional, dense vector. This densification step is crucial for capturing the semantic relationships between different medical codes. This stage outputs a corresponding 256-dimensional dense vector, denoted $h_{\text{struct}}$. The 768-dimensional text embedding from the BERT model is passed through a linear layer with a ReLU activation function. The purpose of this layer is to project the text embedding into the same dimensional space as the structured data embedding, allowing for a more effective and symmetrical fusion. We denote this vector as $h_{\text{text}}$.

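Subsequently, the two 256-dimensional dense vectors are concatenated. This combined vector is passed through a linear layer with a sigmoid activation to compute a “gate” vector $g$. The values of this gate vector are between 0 and 1 and act as learned weights. The final fused vector, $h_{\text{fused}}$, is computed as a weighted sum of the two input vectors, controlled by $g$:

$$g = \sigma\left(W_g\,[h_{\text{struct}};\, h_{\text{text}}] + b_g\right), \qquad h_{\text{fused}} = g \odot h_{\text{struct}} + (1 - g) \odot h_{\text{text}},$$

where $h_{\text{struct}}$ and $h_{\text{text}}$ are the 256-dimensional structured-data and text vectors, $W_g$ and $b_g$ are the weights and bias of the gating layer, $\sigma$ is the element-wise sigmoid, and $\odot$ denotes element-wise multiplication.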

This mechanism allows the model to learn, for each dimension, whether to prioritize information from the structured data or the clinical notes, effectively filtering and blending the signals.
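The gating computation can be sketched without any deep learning framework. The code below is an illustration only: it assumes the convention that the gate weights the structured-data vector and its complement weights the text vector, it uses three-dimensional vectors instead of 256-dimensional ones, and the weights are set by hand rather than learned. The names `h_struct`, `h_text`, and `gated_fusion` are chosen for this sketch.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(h_struct, h_text, weights, bias):
    """Per-dimension gate from the concatenated inputs, then a gated blend of the two."""
    concat = h_struct + h_text  # list concatenation
    gate = [
        sigmoid(sum(w * x for w, x in zip(row, concat)) + b)
        for row, b in zip(weights, bias)
    ]
    fused = [g * s + (1.0 - g) * t for g, s, t in zip(gate, h_struct, h_text)]
    return gate, fused


h_struct = [1.0, 0.0, 2.0]
h_text = [0.0, 4.0, 2.0]
dim = len(h_struct)

# Untrained gate (all-zero parameters): every gate value is 0.5, i.e. a plain average.
zero_weights = [[0.0] * (2 * dim) for _ in range(dim)]
gate, fused = gated_fusion(h_struct, h_text, zero_weights, [0.0] * dim)

# A strongly positive bias saturates the gate towards the structured-data vector.
_, fused_struct = gated_fusion(h_struct, h_text, zero_weights, [50.0] * dim)
print(gate, fused, fused_struct)
```

Training moves the gate away from the uniform 0.5 setting, so that each dimension of the fused vector can lean on whichever modality is more informative.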

This fusion model was trained for 20 epochs on the in-hospital mortality task using a Binary Cross-Entropy (BCE) loss function. We employed the Adam optimizer with a learning rate of $2 \times 10^{-5}$ and a batch size of 32. To prevent overfitting, we used an early stopping mechanism with a patience of 3 epochs, monitoring the validation loss.
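The early stopping rule can be sketched as a small function over a sequence of validation losses. The loss values below are invented for illustration, and the helper `early_stopping_epoch` is hypothetical; in an actual training loop the losses would be computed after each epoch rather than supplied up front.

```python
def early_stopping_epoch(val_losses, max_epochs=20, patience=3):
    """Return (best_epoch, last_epoch_run) under patience-based early stopping."""
    best_loss = float("inf")
    best_epoch = None
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch  # stop: no improvement for `patience` epochs
    return best_epoch, min(len(val_losses), max_epochs)


# Invented validation losses: improvement stalls after epoch 3.
losses = [0.60, 0.52, 0.48, 0.49, 0.50, 0.51, 0.47]
best_epoch, stopped_at = early_stopping_epoch(losses)
print(best_epoch, stopped_at)
```

Here training halts after epoch 6 and the checkpoint from epoch 3 would be kept, even though a later epoch in the invented series would have improved again; this is the usual trade-off of a small patience value.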

Key Parameters for Synergy: The synergy between the structured and unstructured data is explicitly modeled and controlled by the learnable parameters of the linear layer that computes the gate vector g. These parameters are trained end-to-end (e.g., during the mortality prediction pre-training task). This process forces the model to learn a sophisticated weighting scheme. For some patients or some features, the model might learn that the diagnosis codes are highly reliable, thus giving a higher weight to the structured-data vector $h_{\text{struct}}$. For others, it might learn that the nuances are only captured in the notes, thus giving a higher weight to the text vector $h_{\text{text}}$. These learned weights, which constitute the parameters of the gating mechanism, are the key to achieving a meaningful and adaptive synergy between the two modalities.

Validation via Pairwise Classification: To further validate the clinical coherence of the generated embeddings, a binary classifier is trained to determine if any given pair of patient embeddings belong to the same cohort. This is framed as a supervised learning task where the model learns to predict similarity. First, a labeled dataset of embedding pairs is constructed from the ground truth cohorts. “Positive” pairs are created by sampling two different patient embeddings from within the same cohort (e.g., two different patients with Type 2 Diabetes), while “negative” pairs are created by sampling two embeddings from different cohorts (e.g., one patient with Diabetes and one with Sepsis). We created a balanced dataset with a 1:1 ratio of positive to negative pairs. For the negative pairs, we employed a hard-negative mining strategy, sampling non-matching pairs from within the same broad disease category (e.g., two different cardiovascular diseases) to create a more challenging and effective training task for the classifier. A Siamese neural network architecture is particularly well-suited for this task. This network uses two identical, weight-sharing subnetworks to process each embedding in the pair in parallel. The outputs of these subnetworks are then combined, typically by calculating their element-wise difference, to produce a single vector that represents the relationship between the two patients. This relationship vector is then passed through a final classification layer with a sigmoid activation function to output a probability score from 0 to 1. The entire model is trained using a binary cross-entropy loss function, which adjusts the weights to minimize the distance between embeddings of same-cohort patients while maximizing the distance between those from different cohorts.
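The construction of the balanced pair dataset and the relationship vector can be sketched as follows. This is a simplified illustration with hypothetical patient IDs and helper names: negatives are drawn uniformly from different cohorts, so the hard-negative mining strategy is not reproduced here, and the Siamese subnetworks are omitted so that only the element-wise difference of two embeddings is shown.

```python
import random


def build_pairs(cohorts, n_pairs, seed=0):
    """Return (id_a, id_b, label) triples with a 1:1 ratio of positive to negative pairs."""
    rng = random.Random(seed)
    names = sorted(cohorts)
    pairs = []
    for _ in range(n_pairs):
        # Positive pair: two different patients from the same cohort.
        same = rng.choice(names)
        a, b = rng.sample(cohorts[same], 2)
        pairs.append((a, b, 1))
        # Negative pair: one patient from each of two different cohorts.
        first, second = rng.sample(names, 2)
        pairs.append((rng.choice(cohorts[first]), rng.choice(cohorts[second]), 0))
    return pairs


def relation_vector(emb_a, emb_b):
    """Element-wise difference used to summarize the relationship between two embeddings."""
    return [x - y for x, y in zip(emb_a, emb_b)]


# Hypothetical, disjoint toy cohorts.
cohorts = {
    "diabetes": ["d1", "d2", "d3"],
    "chf": ["c1", "c2", "c3"],
    "sepsis": ["s1", "s2", "s3"],
}
pairs = build_pairs(cohorts, n_pairs=4)
difference = relation_vector([1.0, 2.0], [0.5, 3.0])
print(pairs[:2], difference)
```

The relationship vector would then be passed to the final sigmoid classification layer described above.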

3.3. High-Speed Semantic Retrieval

This stage of the pipeline transforms the collection of patient embeddings into a highly efficient search index. This is implemented using dedicated vector search libraries. For HNSW and IVF, we use the ‘faiss’ library from Facebook AI. For ANNOY, we use the ‘annoy’ library from Spotify. In this new paradigm, the query is not a set of rules but another patient embedding—either from an existing patient or a synthetically constructed ideal patient profile. Our framework then finds other patients who are similar to this example in the high-dimensional semantic space. This allows for far more nuanced and complex queries that would be difficult or impossible to articulate with explicit rules, such as “find patients with a similar narrative of disease progression” or “find patients who responded poorly to a specific medication and exhibited symptoms described in their notes”. This new query paradigm is more intuitive for clinicians and unlocks a new class of powerful, data-driven clinical inquiry.

4. Experimental Evaluation

This section details the experimental protocol designed to evaluate the performance of our proposed patient similarity search framework. The evaluation is structured to assess two primary components: (1) the quality of patient representations generated by different domain-specific language models (BioBERT vs. ClinicalBERT), and (2) the retrieval efficiency and accuracy of various ANN search algorithms.

4.1. Dataset and Cohort Definition

The experiments were conducted on the MIMIC-III v1.4 [15] and MIMIC-IV v2.2 [16] databases. These large, publicly available datasets contain de-identified Electronic Health Records (EHRs) from patients admitted to the Beth Israel Deaconess Medical Center. They provide a rich, multi-modal source of clinical data, including structured information (diagnoses, procedures, medications) and extensive unstructured clinical notes, making them ideal for this evaluation. The MIMIC-III v1.4 database is a substantial collection of de-identified health data from patients who were admitted to critical care units between 2001 and 2012. It contains records for 46,520 total patients, which form the indexed database for this experiment. Across this patient population, the database documents 58,976 hospital admissions. MIMIC-IV v2.2 includes 299,712 unique patients (our indexed database) who account for a total of 431,231 hospitalizations.

4.2. Dataset Characterization

This subsection provides further characterization of the data. The MIMIC databases are relational, consisting of multiple tables. Our framework primarily draws from the following key tables:

PATIENTS and ADMISSIONS: Provide patient demographic information and details on each hospital stay, forming the basis for patient-centric records.

DIAGNOSES_ICD: Contains ICD-9 (for MIMIC-III) or ICD-10 (for MIMIC-IV) diagnosis codes for each hospital admission. This is the source of our structured diagnosis features and our ground-truth cohort labels.

PRESCRIPTIONS: Includes medication data (e.g., drug name, dosage), which is used as another source of structured features.

NOTEEVENTS: This is the largest table and contains all unstructured clinical notes (e.g., discharge summaries, nursing notes, radiology reports) associated with a patient’s stay. This is the source of our unstructured text data.

To provide a concrete example of the raw data, Table 1 shows a conceptual, de-identified sample of rows from these key tables. Our preprocessing pipeline (Section 3.1) is responsible for aggregating this relational data into a single, unified representation for each patient.

A significant challenge in evaluating patient similarity is the absence of an explicit “ground truth” for patient likeness. To create a robust evaluation benchmark, we adopt a common and practical approach: using primary diagnosis codes (ICD-9 for MIMIC-III, ICD-10 for MIMIC-IV) to define the boundaries of clinically coherent groups. It is crucial to clarify, however, that this primary diagnosis serves only as the ground-truth label for evaluation. To prevent data leakage and ensure the model learns true clinical similarity, this specific ground-truth diagnosis code was explicitly excluded from the structured data features used as input to the model. The similarity search itself is performed on the rich, multi-modal patient embeddings that capture the entirety of each patient’s longitudinal record, including all other secondary diagnoses, medications, procedures, and the full narrative from their clinical notes.

For example, when querying with a patient from the “Type 2 Diabetes” cohort, the goal is not merely to find other patients with the same ICD code, but to find those who are most similar in their overall clinical presentation. This includes shared complications like neuropathy, similar medication histories such as metformin and insulin usage, and comparable narratives of disease management as described in their notes. This method effectively evaluates whether the learned embeddings capture the holistic nature of a patient’s condition, far beyond a single structured data point.

We defined three distinct cohorts for our experiments:

For MIMIC-III (N = 46,520), the cohorts consisted of: Diabetes (N = 7527), CHF (N = 5887), and Sepsis (N = 4982). For MIMIC-IV (N = 299,712), the cohorts were: Diabetes (N = 38,102), CHF (N = 27,951), and Sepsis (N = 23,011). For each cohort, a random sample of 100 patients (Q = 100) was selected as query patients. For a given query patient from a cohort, the remaining patients within that same cohort are defined as the “ground truth” relevant set.
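Given this definition of the relevant set, retrieval quality for a single query can be scored as in the sketch below. The use of precision and recall at k here is an assumption made for illustration, and the patient IDs and the helper `precision_recall_at_k` are hypothetical.

```python
def precision_recall_at_k(retrieved, relevant, k):
    """Precision@k and recall@k for one query, given the ground-truth relevant set."""
    top_k = retrieved[:k]
    hits = sum(1 for pid in top_k if pid in relevant)
    return hits / k, hits / len(relevant)


# Toy cohort: the query patient "q" is excluded from its own relevant set.
cohort = {"q", "p1", "p2", "p3", "p4"}
relevant = cohort - {"q"}

# Hypothetical ranked output of a similarity search for "q".
retrieved = ["p1", "x9", "p3", "x2", "p4"]
precision, recall = precision_recall_at_k(retrieved, relevant, k=5)
print(precision, recall)
```

Averaging these per-query scores over the 100 sampled query patients of a cohort would give a cohort-level summary.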

4.3. Preprocessing and Embedding Generation

For each patient, we extracted all unique ICD diagnosis codes and medication codes from their history. This information was transformed into a high-dimensional, sparse binary vector, where each dimension corresponded to a specific code. Crucially, to prevent the data leakage described in Section 4.2, the specific ICD codes used to define the ground-truth cohorts (e.g., “Type 2 Diabetes Mellitus,” “Sepsis”) were removed from this input vector for all patients. This forces the framework to identify cohorts based on holistic context rather than a single, leaked feature. All clinical notes for each patient (specifically NOTEEVENTS from MIMIC-III and the union of notes from MIMIC-III and MIMIC-IV for pre-training) were concatenated. A standardized text cleaning pipeline was then applied, which included converting text to lowercase, removing irrelevant metadata and special characters, and expanding common clinical abbreviations.
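The removal of the cohort-defining codes from the input vector can be sketched as follows. The code strings and the helper `build_code_vector` are hypothetical and serve only to illustrate the leakage guard; they do not reflect the actual code lists used in our experiments.

```python
def build_code_vector(patient_codes, code_vocabulary, label_codes):
    """Binary code vector whose dimensions exclude the cohort-defining label codes."""
    input_vocabulary = [code for code in code_vocabulary if code not in label_codes]
    present = set(patient_codes) - set(label_codes)
    return input_vocabulary, [int(code in present) for code in input_vocabulary]


# Hypothetical vocabulary: two label codes and two ordinary input codes.
code_vocabulary = ["25000", "4280", "5849", "metformin"]
label_codes = {"25000", "4280"}

input_vocabulary, vector = build_code_vector(
    ["25000", "5849", "metformin"], code_vocabulary, label_codes
)
print(input_vocabulary, vector)
```

Dropping the label codes from the vocabulary itself, rather than merely zeroing them, guarantees that no dimension of the input can encode cohort membership directly.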

We generated two separate sets of patient embeddings using two pre-trained transformer models to compare their representational power:

BioBERT: Utilizing the dmis-lab/biobert-base-cased-v1.1 model, which is pre-trained on biomedical literature (PubMed abstracts).

ClinicalBERT: Utilizing the emilyalsentzer/Bio_ClinicalBERT model, which is initialized from BioBERT and further pre-trained on all notes from the MIMIC-III database, making it highly specialized for clinical text.

For each patient and each model (BioBERT and ClinicalBERT), a holistic patient embedding was created using the gated fusion architecture described in Section 3.2. This process was trained end-to-end on an in-hospital mortality prediction task to learn the optimal fusion weights. The final 256-dimensional fused vector was then extracted for indexing.

4.4. ANN Indexes

The basic configuration parameters of the ANN methods used are:

HNSW (Hierarchical Navigable Small World): An IndexHNSWFlat was built, whose main parameters are:

- M: This parameter defines the maximum number of bidirectional links (edges) for each node in the graph’s layers. A higher M creates a denser, more connected graph, which improves the accuracy of the search by making it less likely to get trapped in a local minimum. While this increases memory usage, a value of 32 is a robust choice that prioritizes a high-quality graph structure.

- -

: This parameter controls the size of the candidate list during index construction. A larger efConstruction value leads to a more thorough search for neighbors when adding new points, resulting in a higher-quality index and better recall, at the cost of a longer build time. Since index construction is a one-time, offline process for this experiment, a higher value was chosen to maximize the quality of the index.

- -

: This parameter determines the size of the candidate list at query time and is the primary knob for tuning the speed-accuracy trade-off. A higher value increases accuracy but also search latency. We set efSearch to be greater than k (the number of neighbors retrieved, 100) to ensure a sufficiently deep search to achieve high recall.
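The role of efSearch can be illustrated with a simplified, single-layer version of the graph search. The sketch below is not the FAISS implementation and does not reproduce the experiments. As assumptions for the example, it replaces HNSW's incremental, multi-layer construction with an exact k-NN graph (the hypothetical helper `build_knn_graph`), uses toy sizes (300 vectors, 8 dimensions, M = 8), and starts every search from node 0.

```python
import heapq
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_knn_graph(vectors, M):
    """Link every node to its M exact nearest neighbours, in both directions.

    Simplification: real HNSW inserts points incrementally across several
    layers and prunes links; here degrees may exceed M after symmetrisation.
    """
    n = len(vectors)
    neighbors = [set() for _ in range(n)]
    for i in range(n):
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: sq_dist(vectors[i], vectors[j]))
        for j in others[:M]:
            neighbors[i].add(j)
            neighbors[j].add(i)
    return neighbors


def search_layer(query, vectors, neighbors, entry, ef):
    """Best-first graph search that keeps the ef closest nodes seen so far."""
    d0 = sq_dist(query, vectors[entry])
    visited = {entry}
    candidates = [(d0, entry)]   # min-heap: closest unexplored node on top
    results = [(-d0, entry)]     # max-heap via negation: worst kept node on top
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -results[0][0]:
            break                # every remaining candidate is worse than the kept set
        for nb in neighbors[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = sq_dist(query, vectors[nb])
            if len(results) < ef or dn < -results[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(results, (-dn, nb))
                if len(results) > ef:
                    heapq.heappop(results)
    # Return node ids ordered from closest to farthest.
    return [node for _, node in sorted((-neg, node) for neg, node in results)]


rng = random.Random(0)
vectors = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(300)]
graph = build_knn_graph(vectors, M=8)
query = [rng.gauss(0, 1) for _ in range(8)]
k = 10
for ef in (10, 40):              # the candidate list must hold at least k nodes
    top_k = search_layer(query, vectors, graph, entry=0, ef=ef)[:k]
    print(ef, top_k)
```

Since the search returns at most ef nodes, an efSearch below k cannot yield k neighbors at all, and values well above k give the search room to move past locally good but globally suboptimal nodes.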

IVF (Inverted File Index): An IndexIVFFlat was used. This involves a training step to find cluster centroids. The key parameters are:

- nlist: This parameter sets the number of clusters the data is partitioned into. The number of clusters is a trade-off; more clusters mean fewer vectors to search within each cluster, but it also increases the risk of missing relevant neighbors in adjacent clusters. A value of 1024 is a common starting point for datasets with hundreds of thousands of vectors, aiming to create reasonably sized partitions.

- nprobe: This parameter defines how many of the nearest clusters to the query vector are searched exhaustively. Increasing nprobe directly improves recall at the cost of search speed. A value of 16 was chosen as a balanced setting to explore a sufficient portion of the data space without incurring excessive latency.
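The interaction between the number of clusters and the number of probed clusters can be seen in a small, pure-Python inverted-file sketch. It is illustrative only: it uses toy sizes (500 vectors, 16 clusters) rather than the 1024 clusters and 16 probes of the experiments, plain k-means for training, and hypothetical function names; it is not the FAISS implementation.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(v, centroids):
    return min(range(len(centroids)), key=lambda j: sq_dist(v, centroids[j]))


def train_centroids(vectors, nlist, iters, rng):
    """Plain k-means (Lloyd's algorithm) producing nlist centroids."""
    centroids = [list(v) for v in rng.sample(vectors, nlist)]
    for _ in range(iters):
        buckets = [[] for _ in range(nlist)]
        for v in vectors:
            buckets[nearest_centroid(v, centroids)].append(v)
        for j, members in enumerate(buckets):
            if members:  # an empty cluster keeps its previous centroid
                dim = len(members[0])
                centroids[j] = [sum(m[d] for m in members) / len(members) for d in range(dim)]
    return centroids


def build_ivf(vectors, centroids):
    """Assign each vector id to the inverted list of its nearest centroid."""
    lists = [[] for _ in centroids]
    for idx, v in enumerate(vectors):
        lists[nearest_centroid(v, centroids)].append(idx)
    return lists


def ivf_search(query, vectors, centroids, lists, k, nprobe):
    """Scan only the nprobe inverted lists whose centroids are closest to the query."""
    probe = sorted(range(len(centroids)), key=lambda j: sq_dist(query, centroids[j]))[:nprobe]
    candidates = [i for j in probe for i in lists[j]]
    candidates.sort(key=lambda i: sq_dist(query, vectors[i]))
    return candidates[:k]


def exact_search(query, vectors, k):
    return sorted(range(len(vectors)), key=lambda i: sq_dist(query, vectors[i]))[:k]


rng = random.Random(0)
vectors = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(500)]
centroids = train_centroids(vectors, nlist=16, iters=5, rng=rng)
lists = build_ivf(vectors, centroids)
query = [rng.gauss(0, 1) for _ in range(8)]
truth = set(exact_search(query, vectors, k=10))
for nprobe in (1, 4, 16):
    found = set(ivf_search(query, vectors, centroids, lists, k=10, nprobe=nprobe))
    print(nprobe, len(found & truth) / 10)  # recall against exact search
```

With nprobe equal to the number of clusters, every list is scanned and the search degenerates to an exact (and slow) scan, which is why nprobe is kept well below the cluster count in practice.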

ANNOY (Approximate Nearest Neighbors Oh Yeah): An AnnoyIndex was built. The main parameter to tune is:

- n_trees: This parameter controls the number of trees in the forest. Each tree is built by recursively partitioning the space with random hyperplanes. More trees lead to higher precision because they provide more chances to find the true nearest neighbors, but this also increases the index size and build time. A value of 100 was chosen as a robust starting point for our 256-dimensional fused embeddings, providing a good balance between accuracy, index size, and build time.

For each query patient, we searched the corresponding index to retrieve the top-k nearest neighbors, with k set to 100.

4.5. Evaluation Metrics and Setup

To quantitatively assess each model and index combination, we used standard information retrieval metrics (Precision@k, Recall@k, and F1-Score@k) together with three efficiency metrics. These metrics are defined as follows:

Precision: The proportion of retrieved patients that are relevant.

Recall: The proportion of all relevant patients that were successfully retrieved.

F1-Score: The harmonic mean of precision and recall, providing a single, balanced score.

Index Build Time (Minutes): The one-time, upfront computational cost required to construct the searchable index from the entire set of patient embeddings.

Avg. Query Time (ms): The average time, in milliseconds, it takes to find the top 100 nearest neighbors for a single query. This is the most critical metric for real-time applications like clinical decision support, where near-instantaneous results are required.

Throughput (Queries/Second): Often abbreviated as QPS, this metric measures how many search queries the framework can handle per second. It is a direct measure of the framework’s scalability and its ability to serve multiple users or automated processes simultaneously.
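The three retrieval metrics can be computed per query as in the sketch below. The function name and the patient identifiers are hypothetical, and the sketch assumes that the relevant set contains every other patient in the same ground-truth cohort as the query.

```python
def precision_recall_f1_at_k(retrieved, relevant, k):
    """Compute Precision@k, Recall@k and F1-Score@k for one query.

    retrieved: ranked list of patient ids returned by the index
    relevant:  set of patient ids in the same ground-truth cohort as the query
    """
    top_k = retrieved[:k]
    hits = sum(1 for pid in top_k if pid in relevant)
    precision = hits / k if k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    # Harmonic mean; defined as 0 when nothing relevant was retrieved.
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


# Toy example with made-up identifiers.
retrieved = ["p1", "p2", "p3", "p4"]
relevant = {"p1", "p3", "p9"}
print(precision_recall_f1_at_k(retrieved, relevant, k=4))
```

Note that when a cohort contains more than k patients, Recall@k is bounded above by k divided by the cohort size, which in turn limits the achievable F1-Score@k.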

The final reported score for each metric is the average across all query patients within each of the three defined clinical cohorts. All experiments were conducted on a machine equipped with 55 GB of system RAM and an NVIDIA GPU with 23 GB of VRAM.

4.6. Experimental Results

The experimental evaluation was designed to rigorously assess the two core components of the proposed framework: the quality of the patient embeddings and the performance of the ANN search algorithms. Following the reviewer’s feedback, all experiments were re-run using a corrected methodology that explicitly excludes ground-truth diagnosis codes from the input features, thereby eliminating the risk of data leakage.

Table 2 illustrates the retrieval accuracy on the MIMIC-III dataset. The results, now based on a more realistic task, show that ClinicalBERT consistently outperforms BioBERT across all cohorts and ANN algorithms. For instance, with the HNSW index, ClinicalBERT achieves an average F1-Score of 0.78, compared to BioBERT’s 0.72. This demonstrates that even without the leaked feature, the domain-specific pre-training of ClinicalBERT on clinical notes provides a significant advantage in capturing true clinical similarity.

Among the ANN algorithms, HNSW consistently delivers the highest retrieval accuracy. For both ClinicalBERT and BioBERT embeddings, HNSW achieves the top F1-Score in all three clinical cohorts. The performance hierarchy (HNSW > IVF > ANNOY) remains stable, suggesting that for this type of complex, semantic data, the graph-based approach of HNSW is most effective.

We replicated the exact same experiment on the larger and more contemporary MIMIC-IV dataset (Table 3). This step validates the framework’s generalizability. As the table shows, the results from MIMIC-IV mirror those from MIMIC-III. ClinicalBERT once again significantly outperforms BioBERT, and HNSW remains the top-performing indexing method (e.g., ClinicalBERT + HNSW F1-Score of 0.76). The new, more realistic scores are no longer “perfectly mirrored,” and we observe more nuanced differences between the datasets, which is expected. This stability in relative performance demonstrates that the proposed framework is robust.

Table 4 shifts the focus from retrieval accuracy to operational efficiency. Its purpose is to quantify the practical trade-offs between the different ANN algorithms in terms of speed and resource consumption. The results clearly illustrate the fundamental trade-off in ANN algorithms. HNSW, which was the most accurate, also has the longest Index Build Time (see Table 4). In contrast, IVF builds its index much faster but at the cost of lower accuracy.

Despite its long build time, HNSW delivers by far the best query performance, with an average query time of under 9 ms and a throughput of over 100 queries per second. This is more than twice as fast as its closest competitor, IVF. The low standard deviation in query time (see Table 4) further indicates that this performance is highly stable and predictable.
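Average query time, its standard deviation, and throughput can be measured with a small timing harness such as the one below. This is a sketch under stated assumptions, not the benchmarking code behind Table 4: `search_fn` is a hypothetical callable wrapping any index, and queries are issued sequentially from a single thread, so the reported QPS is a single-client figure.

```python
import statistics
import time


def benchmark(search_fn, queries, k=100):
    """Time search_fn(query, k) for each query and summarise latency and throughput."""
    latencies_ms = []
    start = time.perf_counter()
    for q in queries:
        t0 = time.perf_counter()
        search_fn(q, k)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start
    return {
        "avg_ms": statistics.mean(latencies_ms),
        "std_ms": statistics.pstdev(latencies_ms),
        # Sequential throughput; concurrent clients would need a separate load test.
        "qps": len(queries) / elapsed if elapsed > 0 else float("inf"),
    }


# Stand-in search function so the harness runs without a real index.
def dummy_search(query, k):
    return sorted(range(10_000), key=lambda i: abs(i - query))[:k]


print(benchmark(dummy_search, queries=list(range(50)), k=100))
```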

In summary, while HNSW requires a significant upfront computational investment to build its index, that investment pays off with the fastest query speed and highest throughput among the methods tested. For clinical applications where real-time performance is a necessity, HNSW is the clear choice.

4.7. Ablation Study on Fusion Mechanisms

To address the reviewer’s concern about our central architectural claim, we performed an ablation study to quantify the benefit of the gated fusion mechanism. We compared the retrieval performance of four different models, all evaluated on the corrected MIMIC-IV dataset using the HNSW index:

Text-Only: Used only the 768-dim [CLS] token embedding from ClinicalBERT.

Structured-Only: Used only the 256-dim dense vector derived from structured codes (medications, procedures, secondary diagnoses).

Simple Concatenation: Concatenated the text and structured vectors, followed by a linear layer to project to 256 dimensions.

Gated Fusion (Ours): The proposed architecture as described in Section 3.2.

The results, presented in Table 5, demonstrate the value of multi-modal fusion and justify our gated approach.

As the table shows, both the text-only and structured-only models underperform the fusion models, confirming that both modalities provide essential, non-redundant information. Our proposed Gated Fusion mechanism consistently outperformed simple concatenation across all cohorts (e.g., providing a 4.2% relative improvement in the Sepsis cohort). This suggests that the model’s ability to adaptively weigh the information from each modality is critical for creating the most effective patient representation, thus justifying the additional complexity of the gated architecture.

5. Discussion

5.1. Illustrative Application Scenarios

The following scenarios are illustrative and intended to demonstrate the framework’s potential in key clinical and research areas. They do not represent quantitatively evaluated or clinically validated case studies but rather serve as forward-looking examples of practical implementation.

The true value of the proposed framework is realized through its application to pressing clinical and research challenges. By enabling rapid and semantically meaningful patient similarity searches, our framework can transform workflows in several key areas of healthcare.

5.1.1. Scenario: Precision Cohort Discovery for Clinical Trials

Problem: Patient recruitment remains one of the most significant bottlenecks in conducting clinical trials. A staggering 80% of trials fail to meet their initial enrollment timelines, leading to costly delays and, in some cases, premature termination of promising research. The process of identifying eligible patients from a large population based on complex inclusion and exclusion criteria is a major contributor to this problem. Manual screening of patient charts is exceptionally slow and labor-intensive, while traditional database queries on structured data are often too blunt an instrument to capture the nuanced criteria specified in trial protocols. The ANNS-based framework provides a powerful solution to automate and accelerate this process.

Query Formulation: The eligibility criteria for a clinical trial are used to construct a “prototypical” or “ideal” patient profile. This can be achieved in several ways. A textual description of the ideal patient (e.g., “A patient with a diagnosis of type 2 diabetes, currently treated with metformin, showing early signs of declining renal function as noted in physician summaries, but with no documented history of major cardiovascular events like myocardial infarction or stroke”) can be created and then embedded using ClinicalBERT to generate a query vector. Alternatively, a query vector can be synthesized by averaging the embeddings of key medical concepts relevant to the trial criteria.

High-Speed Search: This synthetic query vector is then used to perform an ANNS search against the indexed database containing the embeddings of the entire patient population.

Cohort Retrieval: In milliseconds, our framework returns a ranked list of the top-k patients whose embeddings are closest to the query vector in the high-dimensional space. This list represents a highly pre-qualified cohort of potential trial participants who semantically match the complex eligibility criteria, including nuances captured from their clinical notes. This drastically reduces the number of charts that require manual review by clinicians, allowing them to focus their efforts on the most promising candidates and significantly accelerating the recruitment process. The speed of our framework also allows for dynamic, iterative refinement of the cohort criteria to balance recruitment numbers and cohort specificity.
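The concept-averaging variant of query formulation, followed by retrieval, can be sketched as below. All vectors are made-up three-dimensional placeholders rather than real embeddings, the function names are hypothetical, cosine similarity is an assumed choice of metric, and a brute-force ranking stands in for the ANN index.

```python
import math


def mean_vector(vectors):
    """Element-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_patients(query, patient_vectors, k):
    """Brute-force stand-in for the ANN index: rank patients by cosine similarity."""
    scored = sorted(patient_vectors.items(),
                    key=lambda item: cosine(query, item[1]),
                    reverse=True)
    return [pid for pid, _ in scored[:k]]


# Placeholder concept embeddings for two trial criteria.
concept_embeddings = {
    "type 2 diabetes": [1.0, 0.0, 0.0],
    "declining renal function": [0.0, 1.0, 0.0],
}
query = mean_vector(list(concept_embeddings.values()))

# Placeholder patient embeddings.
patients = {"A": [1.0, 1.0, 0.0], "B": [0.0, 0.0, 1.0], "C": [1.0, 0.0, 0.0]}
print(rank_patients(query, patients, k=2))
```

Averaging treats every criterion as equally important and cannot express exclusions such as "no history of stroke", so retrieved candidates would still need to be checked against the exclusion criteria.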

5.1.2. Scenario: Augmenting Clinical Decision Support (CDS)

Problem: In clinical practice, physicians frequently encounter patients with complex, rare, or atypical presentations of disease. In these situations, standard clinical guidelines may be insufficient. Clinicians often rely on a combination of their personal experience and a time-consuming review of medical literature to inform treatment decisions. The ability to quickly access the collective experience of the institution—by reviewing the outcomes of similar patients treated in the past—could provide invaluable, data-driven evidence to guide clinical management. The framework can be integrated directly into the clinical workflow as a powerful decision support tool.

Query Formulation: When a clinician is managing a challenging case, the multi-modal embedding of their current patient is automatically used as the query vector.

Similar Patient Retrieval: Our framework performs an ANNS search to retrieve a cohort of the most similar patients from the hospital’s historical EHR database.

Outcome Analysis: The clinician is presented with an aggregated summary of the retrieved cohort. This summary can visualize the different treatment pathways taken by these similar patients (e.g., medication sequences, surgical interventions) and their associated outcomes (e.g., length of stay, 30-day readmission rates, survival curves). This form of “case-based reasoning” at scale allows the clinician to see what has, and has not, worked for patients with a similar sub-phenotype in the past. This provides powerful, personalized, real-world evidence to support treatment decisions at the point of care. This is a direct and scalable application of the k-Nearest Neighbors (k-NN) methodology, a well-established machine learning technique, in a real-time clinical context.

5.1.3. Scenario: Proactive Pharmacovigilance

Problem: The identification of rare or unexpected adverse drug reactions (ADRs) after a medication has been approved and is on the market—a process known as pharmacovigilance—is critical for public health and patient safety. Traditional pharmacovigilance relies heavily on spontaneous reporting systems (like the FDA’s FAERS), which are known to suffer from significant under-reporting, reporting bias, and data quality issues. EHRs represent a vast, untapped source of real-world evidence for ADR detection, but the signals are often subtle and buried within unstructured clinical notes, making them difficult to detect with conventional methods. This framework enables a novel, data-driven approach to signal detection that goes beyond simple co-occurrence analysis.

Initial Cohort Selection: Using structured data, an initial cohort of all patients who have been prescribed a new drug of interest is identified.

Clustering in Embedding Space: The patient embeddings for this cohort are then analyzed. The core idea is that if a subset of these patients begins to form a distinct cluster in the embedding space—moving away from the other patients on the same drug—it implies that they share a new, common clinical characteristic that has emerged since they started the medication.

Signal Identification and Hypothesis Generation: By isolating the patients within this emergent cluster, pharmacovigilance analysts can perform a differential analysis. They can examine the clinical notes and structured data of the clustered patients to identify the shared symptoms, laboratory abnormalities, or new diagnoses that distinguish them from the rest of the cohort (e.g., a cluster of patients who all developed a “persistent dry cough” or showed “elevated liver enzymes” within three months of starting the drug). This unsupervised clustering approach can detect potential ADR signals much earlier and more systematically than waiting for spontaneous reports, enabling proactive safety monitoring.

5.2. Exploratory Data Analysis and Hypothesis Generation

Addressing the reviewer’s point on data correlation, the power of this framework extends beyond simply finding individual similar patients. It transforms the entire patient database into a high-dimensional geometric space whose very structure—its topology—becomes a source of clinical insight. This embedding space can be used to discover novel, data-driven correlations and generate new hypotheses.

For example, by applying clustering algorithms (like k-means or DBSCAN) to the patient embeddings, researchers can identify data-driven sub-phenotypes. If patients with a single diagnosis code (e.g., “Congestive Heart Failure”) form two distinct and distant clusters in the embedding space, it could signal the existence of two different underlying biological mechanisms or patient responses. Analyzing the features (both structured codes and note-based concepts) that differentiate these clusters can reveal new clinical correlations.

Furthermore, the vector representations of medical concepts themselves can be analyzed. The vector relationship between “diabetes” and “metformin” can be compared to the relationship between “hypertension” and “lisinopril” to understand treatment patterns. A patient located in a very sparse region of the embedding space may represent a rare or atypical disease presentation, flagging them for further study. The framework thus evolves from a simple “search engine” into a powerful “exploratory analytics tool,” enabling researchers to investigate population structure and discover complex correlations based on the geometry of the clinical landscape.
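One simple way to flag patients in sparse regions of the embedding space is to score each patient by the mean distance to its k nearest neighbors. The sketch below is an assumed illustration of that idea on made-up two-dimensional points; the function name is hypothetical, and the brute-force neighbor search would be replaced by the ANN index at scale.

```python
import math


def knn_sparsity_scores(vectors, k):
    """Mean Euclidean distance from each vector to its k nearest neighbours.

    Larger scores indicate sparser neighbourhoods, i.e. more atypical patients.
    """
    scores = []
    for i, v in enumerate(vectors):
        dists = sorted(math.dist(v, w) for j, w in enumerate(vectors) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


# Four points forming a tight group and one isolated point (toy data).
points = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [10.0, 10.0]]
scores = knn_sparsity_scores(points, k=2)
most_atypical = max(range(len(points)), key=lambda i: scores[i])
print(most_atypical, scores)
```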

5.3. Limitations and Future Work

Despite the promising results, this study has several limitations that offer clear directions for future research.

First, while our new ablation study (Section 4.7) empirically justifies our gated fusion mechanism over simpler concatenation, we did not explore a wider range of more complex fusion architectures (e.g., cross-modal attention [9]). It is possible that other fusion techniques could yield further improvements, and this remains a key area for future work.

Second, the evaluation of “holistic similarity” presents a known and valid challenge. While we have now corrected the data leakage issue by withholding the ground-truth diagnosis codes from the input, our evaluation still relies on ICD codes as the ground truth. This is a pragmatic and reproducible method, but as noted by the reviewer, it may not capture the full, nuanced judgment of clinical experts. A more robust, albeit less scalable, evaluation would involve manual chart review by clinical experts to assess the clinical coherence of the retrieved cohorts [8]. Designing such a qualitative evaluation is a critical next step.

Third, while the performance metrics in Table 2 and Table 3 now include standard deviations, which show low variance, a more rigorous analysis would involve formal statistical significance testing (e.g., paired t-tests) to confirm the superiority of ClinicalBERT over BioBERT and HNSW over other ANN methods.
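As an indication of what such a test would involve, the paired t statistic can be computed from per-query scores of two systems evaluated on the same query patients. The scores below are made-up placeholders, not results from this study, and the function name is hypothetical.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """Return the paired t statistic and its degrees of freedom.

    scores_a and scores_b hold the metric (e.g. F1@k) of two systems on the
    same queries, in the same order.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Placeholder per-query F1 scores for two hypothetical systems.
f1_system_a = [0.80, 0.75, 0.78, 0.82, 0.77]
f1_system_b = [0.72, 0.74, 0.70, 0.76, 0.71]
t_stat, dof = paired_t_statistic(f1_system_a, f1_system_b)
print(t_stat, dof)
```

The statistic would then be compared against a t distribution with n − 1 degrees of freedom to obtain a p-value; `scipy.stats.ttest_rel` performs both steps for paired samples.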

Fourth, k = 100 was chosen for retrieval as a practical and common value for evaluating top-k retrieval, providing a large enough set to assess both precision and recall. A sensitivity analysis on the value of k was not performed. Future work could explore how precision and recall vary at different values of k (e.g., k = 10, k = 50, k = 200) to better understand the framework’s performance characteristics.

Fifth, the application scenarios presented in Section 5.1 are illustrative and intended to demonstrate the framework’s potential. They do not represent quantitatively validated systems. A full quantitative evaluation or clinical user study for any of these specific applications (e.g., clinical trial recruitment) would be a substantial and separate research effort.

Finally, for practical deployment, end-to-end performance is critical. Table 4 focused on query latency, but other factors are crucial. We provide these details in Table 6. The main bottleneck is the one-time, offline generation of patient embeddings. The end-to-end latency for a new patient (i.e., one not in the index) would be the sum of their embedding generation time and the ANN query time. The memory footprint of the index is also a key consideration for scalability.

6. Conclusions

This paper has detailed a comprehensive and scalable framework that successfully addresses the long-standing challenge of efficient, large-scale patient similarity analysis. By creating a powerful synergy between deep medical embeddings and high-performance Approximate Nearest Neighbor Search indexing, we have outlined a complete end-to-end pipeline. This process begins with the meticulous preprocessing of complex, multi-modal EHR data, progresses to the generation of holistic patient vector representations using a gated fusion architecture and domain-specific models like ClinicalBERT, and culminates in the ability to perform near real-time similarity queries on millions of patients using the HNSW algorithm.

Our experimental evaluation on the MIMIC-III and MIMIC-IV datasets empirically validates this approach. The results show that domain-specific language models like ClinicalBERT produce consistently more accurate patient representations than their general biomedical counterparts, even when evaluated with a rigorous methodology that prevents data leakage. Furthermore, the HNSW algorithm emerged as the superior indexing method, providing the best balance of high retrieval accuracy (e.g., an average F1-Score of 0.78 on MIMIC-III with ClinicalBERT) and near real-time query performance (<10 ms).

The fusion of deep semantic understanding from modern language models with the raw computational efficiency of ANNS represents a significant leap forward for data-driven healthcare.