Abstract

By leveraging retrieval-augmented generation (RAG) technology in human–computer interaction applications, a large language model was deployed on local hardware to construct a root cause query model for equipment failures on virtual power plant intelligent operation and maintenance platforms. This enhances the efficiency of maintenance personnel in retrieving troubleshooting solutions from vast technical documentation. First, the virtual power plant architecture was established, clarifying the functions of each layer and defining the flow of information and commands between them. Subsequently, the time-based workflow of its core intelligent operation and maintenance platform, together with the corresponding functional modules and sub-functions, was analyzed. Then a root cause query model for equipment failures was developed on the local hardware platform, and a knowledge base for equipment failure root causes was constructed. During deployment, two large models were selected for performance comparison. The comparative experiments showed that the effect of RAG varied across models, so whether to employ RAG should be decided carefully according to the model, the hardware, and the deployment environment.

1. Introduction

With growing global concern over energy shortage and environmental protection, distributed renewable energy has experienced rapid development. However, its characteristics of small capacity and scattered distribution have also led to challenges such as difficult grid integration, management difficulties, and operational and maintenance issues [1]. To advance energy structure transformation, on 9 February 2025, the National Development and Reform Commission and the National Energy Administration issued Document No. 136, explicitly promoting the participation of renewable energy grid-connected electricity in market transactions [2]. However, due to the limited scale and high volatility of various renewable energy generation sources, their direct integration into the grid or participation in the market could significantly impact system operational stability. In this context, the concept of virtual power plants (VPP) emerged. At its core, this approach integrates distributed generation, energy storage, and controllable loads through advanced information and communication technologies and software systems, forming a unified dispatchable virtual power entity [1,3]. This approach not only enhances resource aggregation efficiency and grid stability but also provides a viable pathway for renewable energy to participate in electricity spot markets and ancillary services markets at scale and with high efficiency.

With the continuous advancement of large language model (LLM) technology, characteristics such as multimodal learning, intelligent reasoning, and powerful generative capabilities have enabled widespread application across the power industry. In 2024, the State Grid Corporation of China unveiled China’s first hundred-billion-level multimodal industry model, the “Bright Power Large Model,” which has been deployed in renewable energy operations and maintenance, power grid situation analysis, and equipment diagnostics [4,5]. In 2023, China Southern Power Grid unveiled its self-developed and controllable LLM “MegaWatt.” Integrating general knowledge with specialized knowledge bases, it spans multiple business domains and scenarios, significantly boosting operational efficiency [6]. Huawei Cloud’s Pangu LLM adopts a three-tier “foundation, industry, scenario” architecture, which achieved a leap from general capabilities to specialized applications through professional knowledge training and scenario adaptation [7]. These cases demonstrate that LLMs have significant application potential in the power sector. However, because VPPs are a novel business model, there have been relatively few instances of their integration with LLMs. In February 2025, the State Power Investment Corporation (SPIC) Energy Science and Technology Research Institute integrated DeepSeek into its VPP platform. By consolidating policy data, load characteristics, and transaction decision-making experience, the platform provides real-time policy analysis and transaction strategy support [8]. However, since its current functionality remains limited to policy interpretation, market analysis, and strategy formulation, the scope of application needs to be expanded further.

In the operation and maintenance of VPPs, root cause analysis of equipment failures is a critical component. The diverse protocols and inconsistent log formats of distributed devices connected to VPPs often trigger alarm storms when failures occur. Traditional manual troubleshooting is inefficient, severely influencing maintenance response times. To address these challenges, the core concept of “machine-assisted human decision-making” within Cognitive Computing-based Hybrid Human–Machine Intelligence (CC-HHMI) can be leveraged for rapid equipment fault diagnosis. This concept falls under the HHMI branch within the Human–Computer Interaction (HCI) discipline, representing a key direction in China’s next-generation AI strategy [9]. HHMI is further categorized into two types: hybrid augmented intelligence based on human action or human-in-the-loop, and hybrid augmented intelligence based on human cognition. In short, the former involves direct human intervention in AI control or decision loops, while the latter entails humans indirectly influencing AI systems through their cognition or experience, thereby enhancing AI reasoning and learning capabilities to improve performance [10]. Retrieval-Augmented Generation (RAG) technology exemplifies a typical application of the latter approach. RAG technology can be understood as a natural language processing technique that integrates information retrieval, text augmentation, and text generation. It combines the capabilities of traditional information retrieval systems with generative LLMs, enabling LLMs to generate more accurate and contextually relevant text without requiring additional training. Its application involves three steps: data preparation and knowledge base construction, retrieval module design, and generation module design. First, collect diverse data relevant to the question-answering system from sources such as documents, web pages, and databases. 
Clean the collected data by removing noise, duplicates, and irrelevant information to ensure quality and accuracy, then use it to construct the knowledge base. During knowledge base construction, text is segmented into smaller chunks. A text embedding model converts these chunks into vectors, which are then stored in a vector database. When a user inputs a query, the same text embedding model converts the question into a vector. The system then calculates similarity scores between the query vector and the vectors in the database to retrieve the knowledge base chunks most similar to the query. The retrieved results are ranked by similarity score, and the most relevant chunks are selected as input for subsequent generation. During generation, the retrieved chunks are merged with the original question to form richer contextual information, from which a large language model generates the response. The model thus produces accurate and useful answers by leveraging the retrieved information rather than through additional training [11,12,13]. Therefore, by integrating a structured knowledge base of virtual power plants that combines expert experience and historical case studies, a reliable external knowledge source can be provided for LLMs. This significantly enhances the accuracy of fault diagnosis and the reliability of decision-making, shortens troubleshooting time, and assists operation and maintenance personnel in decision-making. Through continuous incorporation of human cognitive feedback and contextual knowledge, a system built on this model can iterate and improve, progressively meeting the complex operational and maintenance demands of VPP devices.
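
The retrieval and generation steps described above can be illustrated with a minimal sketch. It is not the system used in this work: the bag-of-words `embed` function is only a stand-in for a real text embedding model, the in-memory list replaces the vector database, and the three knowledge chunks are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: term counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(u[t] * v[t] for t in u if t in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve(query, chunks, top_k=2):
    """Score every chunk against the query and keep the top_k most similar."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_prompt(query, retrieved):
    """Merge the retrieved chunks with the original question."""
    context = "\n".join(f"- {chunk}" for _, chunk in retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical knowledge chunks, invented for this example.
chunks = [
    "Gearbox oil temperature too high: check the oil cooler and the oil level.",
    "Electric boiler fails to heat: inspect the heating element and the contactor.",
    "Central air conditioning low pressure alarm: check for refrigerant leakage.",
]
query = "Why is the gearbox oil temperature high?"
top = retrieve(query, chunks, top_k=1)
prompt = build_prompt(query, top)
```

The resulting `prompt` string is what would be passed to the LLM; the model itself is left out of the sketch.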

Based on the above, we established a local LLM integrated with the equipment operation and maintenance knowledge base of the VPP. This model will be deployed within the intelligent operation and maintenance platform of the VPP to enable root cause analysis of equipment failures. The article structure is as follows: Section 2 introduces the VPP intelligent operation and maintenance platform and its corresponding functions; Section 3 elaborates on the LLM, related knowledge bases, and interactive platform content; Section 4 conducts simulation experiments on the technical content; and Section 5 presents the conclusion and outlook.

2. Virtual Power Plant Intelligent Operation and Maintenance Platform

2.1. Virtual Power Plant Architecture

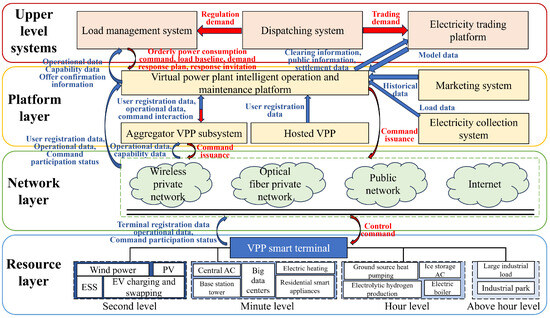

Based on the principle of layered design, the architecture of a VPP typically comprises four layers from bottom to top: the resource layer, network layer, platform layer, and upper-level systems. This integrated architecture achieves unified operations for resource aggregation, coordinated control, market transactions, and grid dispatch through top-down command flows and bottom-up data flows. The schematic diagram of the architecture is shown in Figure 1 [14].

Figure 1.

Virtual Power Plant System Architecture.

The resource layer serves as the physical foundation, connecting and managing distributed resources such as wind power, central air conditioning (AC) systems, electric boilers, and large industrial loads through the smart terminals of the VPP. Based on their response characteristics, these resources are categorized into four types: second level, minute level, hour level, and above hour level. This classification supports the multi-timescale precise control capabilities and market service functions of the VPP.

Through the smart terminals of the VPP, operational data and command participation status from the various resources are transmitted to the network layer. Serving as the information transmission channel, the network layer employs multiple communication technologies such as fiber-optic private networks, wireless private networks, public networks, and the Internet to ensure reliable, efficient, and secure data and command transmission between upper and lower layers.

Information is transmitted through the network layer’s data channels to the platform layer, the core component of the VPP. Acting as the central hub, this layer facilitates data and command exchanges with lower-level resources and upper-level systems via the VPP intelligent operation and maintenance platform, integrated with aggregator VPP subsystems or hosted VPP. Co-located at this layer are the marketing system and the electricity collection system. The marketing system serves as the entry point for resource owners to join the VPP, handling user registration, qualification review, electronic agreement signing, revenue settlement, user incentives, and interaction. The electricity collection system processes and aggregates the massive raw data uploaded from the resource layer while receiving overall control commands from the platform layer, decomposing them, and distributing them to each terminal device.

The upper-level system layer, serving as both the source of commands and the destination for data, integrates three core modules: the load management system, the dispatch system, and the electricity trading platform. These modules respectively handle command issuance and data reception, demand conversion and information integration, and transaction execution and information feedback. The load management system generates regulatory commands based on grid requirements, the dispatch system converts regulatory needs into trading demands, and the electricity trading platform completes market clearing while providing financial feedback. The first two systems verify execution outcomes through data exchange, forming a “Command-Market-Dispatch” coordination framework. This approach not only safeguards fundamental grid security requirements but also optimizes resource allocation efficiency through market-based mechanisms.

2.2. Virtual Power Plant Intelligent Operation and Maintenance Platform Architecture

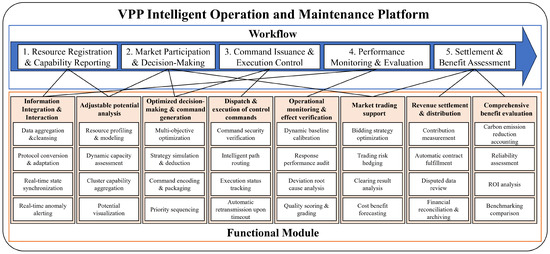

As shown in Figure 1, the most critical component within a VPP is its intelligent operation and maintenance platform. Serving as the central hub for information and command exchange, this platform functions as the brain of the VPP. The detailed functional architecture of the VPP intelligent operation and maintenance platform is illustrated in Figure 2 [15].

Figure 2.

Functional Architecture of the Virtual Power Plant Intelligent Operation and Maintenance Platform.

The workflow defines the execution sequence for business operations within the VPP intelligent operation and maintenance platform. Resource registration and capacity reporting constitute the access and initialization phase for aggregating resources within the VPP. After receiving user registration data from the marketing system, the platform must also collect operational data, including actual power output and status, via the consumption measurement system or aggregator subsystem. After the resource capacity data are aggregated, both capacity and operational data are reported to upper-level systems such as the dispatch system, indicating the platform’s available service capabilities.

Market participation and decision-making refers to the stage where the platform formulates participation strategies and internal decisions after receiving market signals or regulatory requirements from higher authorities. Upon receiving demand response plans or response invitations from the load management system, as well as clearing information, public data, and model data from the electricity trading platform, the platform performs optimization calculations based on market information and its own resource capabilities to generate decision proposals for market participation or regulatory response.

In command issuance and execution control, the platform converts decision-making plans into specific, executable control commands and issues them through the network layer to resource endpoints for execution. First, the platform generates concrete control commands based on the plan. These commands are then transmitted via the command issuance interface through the network layer to the VPP smart terminal, aggregator VPP subsystem, or hosted VPP. The platform interacts with lower-level systems to ensure commands are correctly received and understood.

In performance monitoring and evaluation, after issuing instructions, the platform monitors the actual execution of resources and compares it against expected outcomes to form a closed-loop feedback system. The platform collects participation status and operational data through the electricity collection system to verify correct instruction execution. It then compares actual execution results with load baselines provided by the load management system to assess peak shaving and valley filling effectiveness, analyze execution deviations, and generate effect evaluation reports.

Settlement and benefit assessment must be based on performance evaluation reports and market clearing results to complete the settlement and allocation of economic benefits, thereby achieving a commercial closed-loop. Upon receiving settlement data from the electricity trading platform, the platform can determine the final profits of the market transaction or response. Using user registration data from the marketing system and command participation records from the electricity collection system, the platform calculates each user’s contribution level. Profit distribution is then achieved according to contractual terms.

Below the workflow, the corresponding functional modules are listed. After organizing and categorizing the platform’s functions, eight functional types were identified. Each platform business utilizes different types of functional modules, with each business typically incorporating 2–3 functions. These functions collectively support the workflow of the VPP Intelligent Operation and Maintenance Platform, ensuring the smooth execution of all platform functions. Overall, the platform relies on information provided by the marketing system and electricity collection system. Its workflow achieves a complete closed-loop process of “data-decision-control-verification-value,” demonstrating its core value as the intelligent hub within the VPP.

3. Root Cause Query Model for Equipment Failure on Virtual Power Plant Intelligent Operation and Maintenance Platform

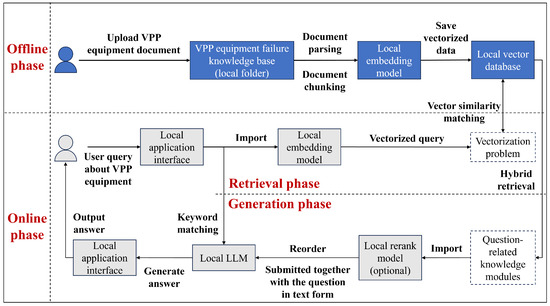

The root cause query model for equipment failures on the VPP intelligent operation and maintenance platform is built in an offline phase and an online phase. During the offline phase, we upload the compiled equipment failure materials to the web server, which stores them in a data repository so that documents can later be added or deleted. The local embedding model then parses and chunks the uploaded data, generating semantically coherent text fragments. These fragments are vectorized into high-dimensional vector representations and stored in a vector database. This process transforms unstructured raw documents into a structured knowledge base that can be retrieved efficiently. During the online phase, after the user poses a question, the server initiates retrieval and generation. In the retrieval phase, the web server receives the question and performs keyword matching and vector similarity matching simultaneously. For the latter, the server converts the user’s query into a vector via the local embedding model and matches it against the vector database to retrieve the most semantically relevant knowledge fragments. During the generation phase, the knowledge chunks relevant to the question are fed into the Rerank model for reordering, which keeps the chunks most similar to the question. Feeding knowledge chunks into the Rerank model is not mandatory; in practical applications, whether to deploy it should be decided based on the quality of generated answers and time constraints. Finally, the filtered knowledge chunks are input in textual form, together with the original question, to the local LLM. Enhanced by this content, the LLM can generate more accurate and reliable responses to return to the user [16,17].
Overall, the model forms a complete closed-loop system from knowledge injection to intelligent question-answering, demonstrating an interactive process where humans leverage external knowledge bases to influence artificial intelligence systems, enhance their reasoning and learning capabilities, and thereby reciprocally impact human decision-making. The technical workflow of the fault root cause model within the VPP intelligent operation and maintenance platform is illustrated in Figure 3.

Figure 3.

Technical Process for Device Failure Query Model for the Virtual Power Plant Smart Operation and Maintenance Platform.
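
The online phase, with simultaneous keyword and vector matching followed by an optional rerank step, can be sketched as follows. How the two match scores are combined is not specified in this work, so the weighted sum with `alpha` is an assumption, as are the bag-of-words `vector_score` (a stand-in for the local embedding model), the `rerank` callable (a stand-in for the Rerank model), and the invented fault code in the sample chunks.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_score(query, chunk):
    """Fraction of distinct query terms that literally appear in the chunk."""
    q_terms, c_terms = set(tokenize(query)), set(tokenize(chunk))
    return len(q_terms & c_terms) / len(q_terms) if q_terms else 0.0


def vector_score(query, chunk):
    """Cosine similarity of term-count vectors (stand-in for real embeddings)."""
    u, v = Counter(tokenize(query)), Counter(tokenize(chunk))
    dot = sum(u[t] * v[t] for t in u if t in v)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(
        sum(c * c for c in v.values())
    )
    return dot / norm if norm else 0.0


def hybrid_retrieve(query, chunks, top_k=3, alpha=0.5, rerank=None):
    """Fuse both scores (assumed weighted sum), keep top_k, optionally rerank."""

    def fused(chunk):
        return alpha * keyword_score(query, chunk) + (1 - alpha) * vector_score(
            query, chunk
        )

    candidates = sorted(chunks, key=fused, reverse=True)[:top_k]
    if rerank is not None:  # the rerank step is optional
        candidates = sorted(candidates, key=lambda c: rerank(query, c), reverse=True)
    return candidates


# Hypothetical chunks; "E101" is an invented fault code.
chunks = [
    "E101 inverter overvoltage fault: check the DC bus voltage.",
    "Routine inspection schedule for the inverter cabinet.",
    "E101 is logged when the DC bus voltage exceeds the limit.",
]
query = "E101 overvoltage fault"
plain = hybrid_retrieve(query, chunks, top_k=2)
reranked = hybrid_retrieve(
    query, chunks, top_k=2, rerank=lambda q, c: float("logged" in c)
)
```

Leaving `rerank` as `None` skips the reordering entirely, so answer quality can be traded against latency as described above.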

As shown in Figure 1, within the resource layer of the VPP system, equipment is categorized into four classes based on response characteristics: second level, minute level, hour level, and above hour level. Since resources above the hour level do not correspond to specific equipment types, we selected one representative device from each of the second-level, minute-level, and hour-level categories when constructing the knowledge base: a wind turbine for the second level, a central air conditioning unit for the minute level, and an electric boiler for the hour level. We compiled relevant information on their associated failures, causes, and solutions to establish a root cause database for equipment failures. The documentation for these three categories of equipment comprises technical guidelines, standards, textbooks, notes, design manuals, instruction manuals, technical procedures, maintenance manuals, papers, product specifications, and user manuals. All materials have been processed for identification purposes. Because the database is large, Table 1 illustrates the fault database content using the steps of a wind turbine maintenance plan as an example.

Table 1.

Example of Fault Database Content.

To facilitate troubleshooting by operations personnel, we opted to deploy the model on portable laptops. During deployment, to validate which model delivers higher-quality locally generated answers, better retrieval enhancement effects, and faster speeds, we simultaneously deployed LLMs of varying scales locally for knowledge base vectorization and content generation. First, based on the content of the operational equipment and fault database, a document containing questions and standard answers was established to standardize model inputs. This document includes information such as question serial numbers, questions, reference answers, key points, and question types. Subsequently, model selection was conducted, the environment was set up, and the model was deployed locally. When outputting, the model must not only provide the aforementioned input information but also generate the model’s answer, processing time, test mode, model name, and timestamp information. Following model output, metrics were designed to evaluate the generated answers. Given this paper’s focus on integrating information retrieval with LLMs in specialized domains, emphasis was placed on assessing the LLM’s generative performance rather than traditional evaluation methods. The selected metrics are described as follows:

(1) Faithfulness. This metric measures the factual consistency between the generated answer and the given context, calculated by evaluating both the answer and the retrieved context. The value is scaled to the [0, 1] interval, where a higher value indicates better factual consistency. An answer is considered factually consistent if all statements it makes can be inferred from the given context. To compute this metric, a set of statements within the generated answer must first be identified. These statements are then cross-checked individually against the context to determine whether they can be inferred from it. The calculation formula is shown in Equation (1).

$$F = \frac{|I|}{|S|}, \qquad I = \{\, s \in S : s \text{ can be inferred from } C \,\} \tag{1}$$

Here, $F$ denotes the factual consistency score; $S$ represents the set of all statements identified in the generated answer; $C$ denotes the given context; and $I$ denotes the statements within the generated answer that can be inferred from the given context.
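
As a minimal sketch of the faithfulness computation, the score is the fraction of answer statements that are supported by the context. Deciding whether a statement can be inferred is normally delegated to a judge model; the `naive_support` word-matching check below is only a crude placeholder for that step, and the sample context and statements are invented.

```python
from typing import Callable, Sequence


def faithfulness(
    statements: Sequence[str],
    context: str,
    is_supported: Callable[[str, str], bool],
) -> float:
    """Share of statements judged inferable from the context (0.0 if none given)."""
    if not statements:
        return 0.0
    supported = sum(1 for s in statements if is_supported(s, context))
    return supported / len(statements)


def naive_support(statement: str, context: str) -> bool:
    """Placeholder judge: every word of the statement occurs in the context."""
    ctx = context.lower()
    return all(word in ctx for word in statement.lower().split())


# Invented example data.
context = "the oil cooler is blocked and the oil level is low"
statements = [
    "the oil cooler is blocked",
    "the oil level is low",
    "the bearing is damaged",
]
score = faithfulness(statements, context, naive_support)  # 2 of 3 supported
```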

(2) Answer Relevance. This metric evaluates the degree to which generated answers align with the given question. Incomplete answers, those containing redundant information, or irrelevant responses receive lower relevance scores, while higher scores indicate greater alignment between the answer and the question. The metric is defined as the average cosine similarity between the original question and a series of artificially generated questions derived from the answer, as calculated in Equation (2).

$$AR = \frac{1}{N}\sum_{i=1}^{N} \frac{E_{g_i} \cdot E_{o}}{\lVert E_{g_i} \rVert \, \lVert E_{o} \rVert} \tag{2}$$

Here, $E_{g_i}$ denotes the vector embedding of the $i$-th generated question; $E_{o}$ denotes the vector embedding of the original question; and $N$ denotes the number of generated questions.
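
A small sketch of this average-cosine-similarity computation is given below. The two-dimensional vectors are made-up stand-ins for real embeddings, and the function names are illustrative rather than taken from any evaluation library.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two dense vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def answer_relevance(
    original_vec: Sequence[float], generated_vecs: Sequence[Sequence[float]]
) -> float:
    """Mean cosine similarity between the original and the generated questions."""
    if not generated_vecs:
        return 0.0
    return sum(cosine(g, original_vec) for g in generated_vecs) / len(generated_vecs)


# Toy vectors: similarities are 1, 0 and 1/sqrt(2).
original = [1.0, 0.0]
generated = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
relevance = answer_relevance(original, generated)
```

Applying the same `cosine` helper to the embeddings of a generated answer and its reference answer gives a single-pair similarity of the kind used for answer semantic similarity.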

(3) Context Precision. This metric measures whether all contexts relevant to the ground truth are ranked in prominent positions. Ideally, all relevant information chunks should appear near the top of the ranking. The metric is computed from the query, ground truth, and context, yielding values in [0, 1], where higher scores indicate greater precision. Let $T$ denote the total number of relevant information chunks in the top $K$ results. The context precision score is then defined as shown in Equation (3).

$$CP@K = \frac{\sum_{k=1}^{K} \left( P@k \times v_k \right)}{T} \tag{3}$$

Here, $K$ denotes the total number of information blocks in the context; $v_k$ represents the relevance indicator at ranking position $k$, with values of 1 or 0 indicating whether the information block at that position is relevant. Furthermore, using the confusion matrix, all prediction results can be categorized into four classes: $TP$ (true positive), where the prediction matches the actual category; $FP$ (false positive), where the prediction does not match the actual category; and so on. The calculation of the precision at position $k$, $P@k$, is shown in Equation (4).

$$P@k = \frac{TP@k}{TP@k + FP@k} \tag{4}$$
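
The context precision calculation can be sketched from a ranked list of 0/1 relevance flags: precision at each rank is accumulated only where the chunk at that rank is relevant, then divided by the number of relevant chunks. The function name and the sample rankings are illustrative assumptions.

```python
from typing import Sequence


def context_precision(relevance: Sequence[int]) -> float:
    """relevance[k-1] is 1 if the chunk at rank k is relevant, else 0."""
    total_relevant = sum(relevance)
    if total_relevant == 0:
        return 0.0
    hits = 0
    weighted = 0.0
    for k, flag in enumerate(relevance, start=1):
        hits += flag                  # true positives among the top k
        weighted += (hits / k) * flag  # precision at k, counted only if relevant
    return weighted / total_relevant


early = context_precision([1, 0, 1])  # relevant chunks at ranks 1 and 3
late = context_precision([0, 0, 1])   # the only relevant chunk is ranked last
```

With top-3 retrieval, a relevant chunk ranked first contributes a precision of 1, whereas the same chunk ranked third contributes only 1/3, which is why `early` exceeds `late`.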

(4) Answer Semantic Similarity. This metric evaluates user experience by measuring the semantic similarity between generated answers and benchmark facts. The assessment is based on benchmark facts and answers, yielding values within the range [0, 1]. A higher answer semantic similarity score indicates greater consistency between generated answers and benchmark facts, as shown in Equation (5).

$$AS = \frac{E_{a} \cdot E_{r}}{\lVert E_{a} \rVert \, \lVert E_{r} \rVert} \tag{5}$$

Here, $E_{a}$ is the vector embedding of the generated answer, and $E_{r}$ is the vector embedding of the reference fact.

(5) Comprehensive Score. This metric evaluates the model’s comprehensive performance and is calculated as shown in Equation (6):

$$CS = a \cdot F + b \cdot AR + c \cdot CP + d \cdot AS \tag{6}$$

Here, $F$, $AR$, $CP$, and $AS$ denote the faithfulness, answer relevance, context precision, and answer semantic similarity scores, and $a$, $b$, $c$, and $d$ are their respective weight coefficients, with values of 0.25, 0.25, 0.2, and 0.3 to reflect the relative importance of each metric.
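
As a worked example of the weighted combination, the sketch below applies the weights 0.25, 0.25, 0.2, and 0.3 to four metric values. The input scores are invented for illustration and are not results from this study.

```python
# Weights stated in the text for the four metrics.
WEIGHTS = {
    "faithfulness": 0.25,
    "answer_relevance": 0.25,
    "context_precision": 0.20,
    "answer_semantic_similarity": 0.30,
}


def comprehensive_score(metrics: dict) -> float:
    """Weighted sum of the four metric scores, each expected in [0, 1]."""
    return sum(WEIGHTS[name] * metrics[name] for name in WEIGHTS)


# Invented metric values for one answer.
example = {
    "faithfulness": 0.8,
    "answer_relevance": 0.9,
    "context_precision": 0.5,
    "answer_semantic_similarity": 0.7,
}
total = comprehensive_score(example)  # 0.2 + 0.225 + 0.1 + 0.21
```

Because the weights sum to 1, the comprehensive score stays in [0, 1] whenever the individual metrics do.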

4. Results and Analysis

To build the failure root cause database, the failure analysis materials for each device were converted into text-recognizable PDF format and organized by category. The database contains 25 documents totaling 1898 pages: 8 on wind turbines, 8 on central air conditioning units, and 9 on electric boilers. The large models were deployed on a Windows 11 system. The hardware environment comprised one Nvidia RTX3060 Laptop GPU with a total of 14 GB of video memory and 15.7 GB of RAM, with Nvidia driver version 581.42 and CUDA version 12.5. To simplify local deployment of the LLMs, Ollama was used to deploy the DeepSeek-R1-Distill-Qwen-1.5B and DeepSeek-R1-Distill-Qwen-7B models for comparative validation. Quantization and precision were not specified explicitly; Ollama’s internal defaults were used, which correspond to the Q4_K_M quantization scheme. Three embedding models were tested, EmbeddingGemma, mxbai-embed-large-v1, and BGE-M3, and BGE-M3 was chosen for its performance. It is based on the BERT architecture with a context window size of 8192, an embedding length of 1024, and F16 quantization. The vector database uses FAISS, with the chunk size set to 1000, the chunk overlap to 100, and the retrieval top k to 3. Python 3.9.13, Langchain 0.3.27, faiss-cpu 1.12.0, and transformers 4.57.1 were used.
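
To make the chunk size and chunk overlap settings concrete, the sketch below splits text into fixed-size character windows that share an overlap with their neighbor. It is a simplified stand-in written in plain Python; the splitter actually used with Langchain is not specified here and may also respect sentence or paragraph boundaries.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 100) -> list:
    """Split text into windows of chunk_size characters overlapping by chunk_overlap."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
        start += chunk_size - chunk_overlap
    return chunks


# A synthetic 2500-character document yields windows starting at 0, 900 and 1800.
document = "".join(str(i % 10) for i in range(2500))
pieces = split_text(document)
```

The overlap means that a sentence cut at a chunk boundary still appears intact in one of the two neighboring chunks, at the cost of storing some text twice.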

Because the evaluation is score-based, a standardized dataset of questions and answers must first be established. For each selected device, 18 common operational issues were chosen, totaling 54 questions. The question formats are deliberately non-standardized: beyond conventional questions, the dataset includes ambiguous phrasing, colloquial expressions, multiple-choice items, operational detail inquiries, and declarative statements. Each question is paired with a unique, unambiguous standard answer to calculate faithfulness scores. The dataset is stored as JSON files built from high-quality operational data for the three types of equipment, with DeepSeek-V3.1 employed as an auxiliary tool to ensure file content consistency. Its tags are <id, question, reference_answer, key_facts, question_type>, representing the question serial number, question text, reference answer, key points, and question type, respectively. Taking question Q001 as an example, usage of the query model is illustrated in Table 2.
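
A dataset file with these five tags could be loaded and checked as in the following sketch. The field names come from the tag list above; the placeholder values, the assumption that `key_facts` is a list, and the `load_questions` helper are illustrative and do not reproduce the actual dataset.

```python
import json

REQUIRED_FIELDS = ("id", "question", "reference_answer", "key_facts", "question_type")


def load_questions(raw_json: str) -> list:
    """Parse a JSON array of question records and check the required tags."""
    records = json.loads(raw_json)
    seen_ids = set()
    for record in records:
        missing = [field for field in REQUIRED_FIELDS if field not in record]
        if missing:
            raise ValueError(f"record {record.get('id', '?')} is missing: {missing}")
        if record["id"] in seen_ids:
            raise ValueError(f"duplicate question id: {record['id']}")
        seen_ids.add(record["id"])
    return records


# Placeholder record; the real question text and answers are not reproduced here.
sample = json.dumps([
    {
        "id": "Q001",
        "question": "<question text>",
        "reference_answer": "<standard answer>",
        "key_facts": ["<key point 1>", "<key point 2>"],
        "question_type": "<question type>",
    }
])
questions = load_questions(sample)
```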

Table 2.

Query model usage example.

Using question Q001 as an example, Table 3 shows examples of correct and incorrect answers provided by the model, where correct_answer denotes the correct answer and incorrect_answer denotes the incorrect answer.

Table 3.

Model Responses: Correct and Incorrect Answer Examples.

The incorrect examples were generated by DeepSeek-R1-Distill-Qwen-1.5B without RAG. Randomness during generation was initially suspected, but repeated testing consistently failed to produce correct answers and instead yielded increasingly detailed erroneous responses. A likely reason is that the model itself misunderstands this knowledge and erroneously associates similar fault codes, leading to semantic confusion.

After running the large models and submitting batch queries, two sets of question-answer pairs were obtained: one without RAG technology and one with RAG technology. For the answers, we conducted metric evaluations on the obtained responses to each question. Simultaneously, we established a simple retrieval-based question-answering system. This system employs a TF-IDF and cosine similarity architecture, returning the top 3 most relevant documents as the foundation for answers. It serves as the baseline model for comparison. The average values of each metric and the average end-to-end runtime for each model, with and without the knowledge base, are shown in Table 4. The model names in the table are represented in simplified form as DeepSeek-R1-1.5B and DeepSeek-R1-7B.
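
A retrieval-only baseline of this kind can be sketched in plain Python as follows. The smoothed IDF formula, the tokenizer, and the four sample documents are assumptions made for illustration; the baseline used in the experiments may differ in these details.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Return one TF-IDF vector per document plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF (an assumed variant) keeps every weight positive.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}
    vectors = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(u, v):
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(
        sum(w * w for w in v.values())
    )
    return dot / norm if norm else 0.0


def top_documents(query, docs, k=3):
    """Rank documents by TF-IDF cosine similarity and return the best k."""
    vectors, idf = build_index(docs)
    q_vec = {t: tf * idf[t] for t, tf in Counter(tokenize(query)).items() if t in idf}
    order = sorted(range(len(docs)), key=lambda i: cosine(q_vec, vectors[i]), reverse=True)
    return [docs[i] for i in order[:k]]


# Invented sample documents.
docs = [
    "wind turbine gearbox oil temperature alarm",
    "electric boiler water level sensor fault",
    "central air conditioning compressor overload",
    "wind turbine pitch system communication fault",
]
results = top_documents("boiler water level", docs, k=3)
```

Such a baseline returns documents rather than generated answers, which explains both its short runtime and its weaker scores on metrics that assess answer content.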

Table 4.

Model Performance Metrics Scores.

Based on the comprehensive scores generated by the model for each question, a quality grading system for question responses has been established, as shown in Table 5.

Table 5.

Quality Grading Table.

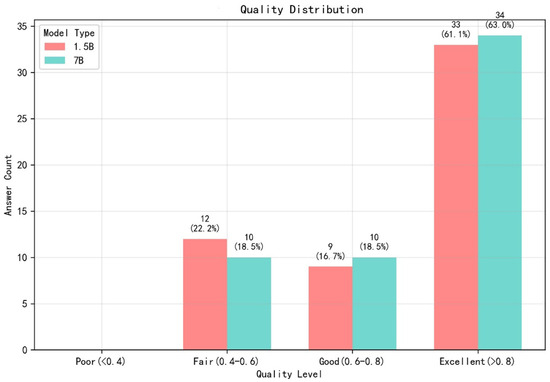

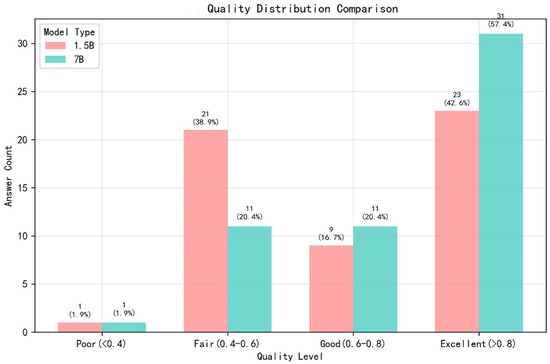

The quality distribution comparison between the two models without RAG is shown in Figure 4. The quality distribution comparison between the two models with RAG is shown in Figure 5.

Figure 4.

Comparison of Quality Distributions Between Two Models Without RAG.

Figure 5.

Comparison of Quality Distributions Between Two Models with RAG.

Based on the data in Table 4, without RAG the DeepSeek-R1-Distill-Qwen-1.5B and DeepSeek-R1-Distill-Qwen-7B models exhibit the expected scale effect. The DeepSeek-R1-Distill-Qwen-7B model holds an extremely marginal advantage in the comprehensive score, outperforming the DeepSeek-R1-Distill-Qwen-1.5B model by 1.07%. This indicates that increasing the number of parameters does enhance foundational capabilities, though the improvement is limited. The two models show negligible differences in Faithfulness, Answer Relevance, and Answer Semantic Similarity. The only marginal advantage lies in Context Precision, where the DeepSeek-R1-Distill-Qwen-7B model slightly outperforms the DeepSeek-R1-Distill-Qwen-1.5B model. Regarding average processing time, the DeepSeek-R1-Distill-Qwen-1.5B model completed tasks nearly six times faster than the DeepSeek-R1-Distill-Qwen-7B model. As shown in Figure 4, most responses from both models fall into the “high-quality” category. However, the DeepSeek-R1-Distill-Qwen-7B model produced a slightly higher proportion of high-quality and good answers and fewer average-quality responses, indicating more stable answer quality than the DeepSeek-R1-Distill-Qwen-1.5B model. In summary, without RAG the DeepSeek-R1-Distill-Qwen-7B model holds only a marginal advantage over the DeepSeek-R1-Distill-Qwen-1.5B model in response quality, yet requires significantly more processing time. In scenarios that prioritize efficiency and where RAG cannot be applied, the DeepSeek-R1-Distill-Qwen-1.5B model is therefore the markedly better choice.

Under RAG conditions, both models experienced score declines, but the DeepSeek-R1-Distill-Qwen-1.5B model showed a significantly larger drop than the DeepSeek-R1-Distill-Qwen-7B model, giving the latter a substantial advantage. In Faithfulness, the DeepSeek-R1-Distill-Qwen-1.5B model declined substantially, while the DeepSeek-R1-Distill-Qwen-7B model decreased only slightly. This indicates that the DeepSeek-R1-Distill-Qwen-1.5B model encountered severe “cognitive confusion” when processing the external knowledge supplied by RAG, which reduced the faithfulness of its responses rather than improving it. Changes in the other metrics were relatively minor. After the RAG retrieval step was introduced, the end-to-end processing time of both models also increased significantly. As shown in Figure 5, the quality distribution of the DeepSeek-R1-Distill-Qwen-1.5B model’s responses deteriorated severely, while that of the DeepSeek-R1-Distill-Qwen-7B model remained relatively stable. However, both models produced “poor” responses, suggesting that RAG may introduce errors or noise. In short, the DeepSeek-R1-Distill-Qwen-1.5B model failed to benefit from RAG and instead suffered a significant decline in Faithfulness and overall response quality, whereas the DeepSeek-R1-Distill-Qwen-7B model demonstrated greater adaptability and robustness, making it the superior choice in RAG scenarios. Both models outperformed the baseline model on all metrics regardless of RAG usage, though their end-to-end processing times were substantially longer than the baseline model’s.

From the perspective of computational resource consumption, deploying quantized models is the preferred low-cost approach for large models under the existing hardware constraints. Because the NVIDIA RTX 3060 Laptop GPU used here is a consumer-grade graphics card, its limited VRAM restricts the use of large-parameter models and narrows the set of viable model options. Both the DeepSeek-R1-Distill-Qwen-1.5B and DeepSeek-R1-Distill-Qwen-7B models deployed via Ollama use the Q4_K_M quantization strategy. Regarding hardware utilization, GPU utilization approaches 100% while DeepSeek-R1-Distill-Qwen-1.5B is running, whereas DeepSeek-R1-Distill-Qwen-7B runs with GPU utilization near 70% and CPU utilization around 30%. Because the DeepSeek-R1-Distill-Qwen-1.5B model has fewer parameters, model weights and intermediate variables are read from VRAM quickly. This keeps the GPU’s compute units continuously supplied with data and busy, yielding near-100% utilization; in this case, the GPU’s computational capacity is the bottleneck for model execution. The DeepSeek-R1-Distill-Qwen-7B model has a larger parameter count. Although it can also be loaded into VRAM, the volume of data that must move between VRAM and the GPU compute units increases significantly. The GPU must wait for data delivery, resulting in a utilization rate of only around 70%. Furthermore, the data exchange process requires CPU involvement for scheduling and management, hence the rise in CPU utilization.
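The VRAM constraint discussed above can be illustrated with a back-of-the-envelope estimate. The following is a minimal sketch, not the procedure used in this study: the average of roughly 4.85 bits per weight for Q4_K_M, the 6 GiB VRAM figure, and the 1 GiB overhead allowance for the KV cache and runtime buffers are all assumptions introduced for illustration, and the function names are hypothetical.

```python
"""Rough estimate of the VRAM needed for quantized model weights (illustrative only)."""

# Assumed values, not taken from the paper:
BITS_PER_WEIGHT_Q4_K_M = 4.85  # approximate average for Q4_K_M; varies by model
VRAM_GIB = 6.0                 # assumed VRAM of the laptop GPU


def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Return the approximate size of the model weights in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


def fits_in_vram(n_params: float, bits_per_weight: float,
                 vram_gib: float, overhead_gib: float = 1.0) -> bool:
    """Check whether the weights plus a fixed overhead budget fit in VRAM.

    overhead_gib is a hypothetical allowance for the KV cache, activations,
    and runtime buffers; the real overhead depends on context length.
    """
    return weight_memory_gib(n_params, bits_per_weight) + overhead_gib <= vram_gib


if __name__ == "__main__":
    for name, n_params in [("1.5B", 1.5e9), ("7B", 7.0e9)]:
        size = weight_memory_gib(n_params, BITS_PER_WEIGHT_Q4_K_M)
        ok = fits_in_vram(n_params, BITS_PER_WEIGHT_Q4_K_M, VRAM_GIB)
        print(f"{name}: ~{size:.2f} GiB of weights, fits in {VRAM_GIB} GiB: {ok}")
```

Under these assumptions, both quantized models fit, while an unquantized 16-bit 7B model would not, which is consistent with the preference for quantized deployment described above.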

System scalability is primarily influenced by hardware constraints. Regarding vertical scaling, the knowledge base used here contains approximately 1900 pages. Comparing the processing time of both models with and without RAG shows that enabling RAG increased the average processing time over all queries by about 20% for both models, with a corresponding decrease in processing efficiency. Horizontal scaling is primarily constrained by the thermal limits of the hardware, which leaves little room for modification. Future improvements could differentiate the roles of the deployed models: the DeepSeek-R1-Distill-Qwen-1.5B model would handle real-time queries, while the DeepSeek-R1-Distill-Qwen-7B model would manage complex diagnostics. Implementing pipeline parallelism would further optimize resource utilization.

From a cost-benefit perspective, practical deployment can be achieved on the existing hardware without additional investment. The DeepSeek-R1-Distill-Qwen-1.5B quantized model should be deployed first to validate core functionality, after which the DeepSeek-R1-Distill-Qwen-7B model can be introduced gradually to handle complex scenarios. Key optimization efforts should focus on memory mapping and thermal management to ensure stable service delivery within the constrained resources of mobile platforms.

In summary, the DeepSeek-R1-Distill-Qwen-1.5B model should be prioritized for scenarios without RAG to leverage its speed advantage. If a knowledge base is required, significant effort must be invested in optimizing RAG retrieval accuracy to preserve the model’s overall performance. The DeepSeek-R1-Distill-Qwen-7B model is better suited to scenarios requiring RAG, as it outperforms the DeepSeek-R1-Distill-Qwen-1.5B model in stability and other aspects. However, since RAG lowered the scores of both models, the next step should focus on enhancing RAG retrieval quality and speed, with the aim of improving answer accuracy while minimizing time overhead for both models.

Based on current results, significant optimization potential exists not only for RAG but also for the models themselves. As an optimization strategy, distillation can be employed: a training dataset is constructed with RAG contexts and questions as inputs and high-quality answers generated by the DeepSeek-R1-Distill-Qwen-7B model as outputs, and the DeepSeek-R1-Distill-Qwen-1.5B model is fine-tuned on it. This may yield a customized DeepSeek-R1-Distill-Qwen-1.5B model capable of both rapid response and effective integration of external knowledge. Regarding resource consumption, model performance is severely constrained by GPU memory. Without adding new hardware, the embedding model could be run on the CPU to free GPU memory for the large model and its KV cache. To further enhance performance, a hybrid approach is recommended. By default, the DeepSeek-R1-Distill-Qwen-1.5B model is used without RAG, subject to upgrade rules: when the DeepSeek-R1-Distill-Qwen-1.5B model’s response contains uncertainty indicators, the user explicitly requests knowledge base references, or the question is overly complex, model routing switches directly to the DeepSeek-R1-Distill-Qwen-7B model with RAG. This approach handles the vast majority of simple queries efficiently while still addressing complex problems when they arise.
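The upgrade rules of the hybrid approach can be sketched as a small routing function. This is an illustrative sketch only, not an implementation from this study: the marker phrases, the word-count threshold used as a proxy for question complexity, and the names `Route`, `needs_upgrade`, and `route` are all hypothetical choices that would need tuning on real queries.

```python
from dataclasses import dataclass
from typing import Optional

SMALL_MODEL = "DeepSeek-R1-Distill-Qwen-1.5B"
LARGE_MODEL = "DeepSeek-R1-Distill-Qwen-7B"

# Hypothetical heuristics; naive substring matching, for illustration only.
UNCERTAINTY_MARKERS = ("not sure", "uncertain", "cannot determine", "may be")
KB_REQUEST_MARKERS = ("knowledge base", "manual", "cite", "reference")
MAX_SIMPLE_QUESTION_WORDS = 40  # assumed proxy for "overly complex"


@dataclass(frozen=True)
class Route:
    model: str
    use_rag: bool
    reason: str


def needs_upgrade(question: str, draft_answer: str) -> Optional[str]:
    """Return the reason for escalating, or None if the draft answer can be kept."""
    q = question.lower()
    a = draft_answer.lower()
    if any(marker in q for marker in KB_REQUEST_MARKERS):
        return "user requested knowledge base references"
    if len(question.split()) > MAX_SIMPLE_QUESTION_WORDS:
        return "question is overly complex"
    if any(marker in a for marker in UNCERTAINTY_MARKERS):
        return "draft answer contains uncertainty indicators"
    return None


def route(question: str, draft_answer: str) -> Route:
    """Default to the small model without RAG; escalate to the large model with RAG."""
    reason = needs_upgrade(question, draft_answer)
    if reason is None:
        return Route(SMALL_MODEL, use_rag=False, reason="default")
    return Route(LARGE_MODEL, use_rag=True, reason=reason)
```

In this sketch, the draft answer from the small model is inspected before any escalation, so simple queries never incur the retrieval or large-model latency.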

In real-world environments, due to the complexity of on-site conditions, fault identification robots have already been deployed in practical settings. For mobile deployment, the DeepSeek-R1-Distill-Qwen-1.5B model is more suitable for field applications, offering significant advantages in response speed and resource efficiency. Combined with RAG technology, it can meet the rapid diagnostic needs of most routine operational scenarios. However, its accuracy is somewhat limited, so human judgment is still required in practice. Meanwhile, the DeepSeek-R1-Distill-Qwen-7B model demonstrates superior performance in complex fault diagnosis, but its longer response times introduce latency, potentially extending maintenance durations. Therefore, within the VPP’s intelligent operations and maintenance platform, these two models can be integrated to form a RAG knowledge question-answering system for equipment troubleshooting. When VPP operations engineers detect equipment failures on-site via the operations robot, the DeepSeek-R1-Distill-Qwen-1.5B small model deployed locally on mobile terminals can rapidly identify the root causes and solutions corresponding to the fault symptoms, enabling swift resolution. If a fault alert is detected through the monitoring system, entering the fault symptoms into the model interface allows the system running the DeepSeek-R1-Distill-Qwen-7B model to quickly integrate information from the local knowledge base and provide precise troubleshooting steps, potential causes, and historical case studies. Its core advantage lies in its ability to understand semantics, generate summaries, and integrate document information, which significantly reduces fault response times compared with traditional manual lookups or keyword searches. Future challenges involve building a comprehensive intelligent operations system. This system could adopt a layered deployment architecture, deeply integrating the analytical capabilities of two or more models while thoroughly addressing critical issues such as real-time performance, system integration, and reliability.

Additionally, a limitation of this study is that the model currently employed cannot address resource optimization problems requiring real-time data input and complex numerical calculations. At present, it functions solely as a knowledge retrieval and decision-support tool, rather than an autonomous optimization decision system. In the operation and maintenance of VPPs, resource optimization problems remain a key focus and challenge. Examples include how to schedule maintenance plans to achieve the lowest cost or shortest duration while ensuring power supply, or how to formulate participation strategies in scenarios with multiple market participants. To address these challenges, equipping LLMs with the capability to invoke external tools is a viable solution. Under this paradigm, the LLM interacts with tools through APIs and integrates the tools’ outputs into its responses. This method significantly enhances the LLM’s performance in specialized domains [18]. Building on this concept, invoking optimization solvers via large language models presents a viable approach for solving VPP resource optimization problems. Domestic tools already exist for addressing power plant scheduling optimization [19]. Therefore, we contend that the LLM+Tools approach to solving optimization decision problems in virtual power plants represents a clear future research direction and a feasible implementation path.
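The LLM+Tools paradigm can be sketched as follows, under stated assumptions. The JSON tool-call format, the tool registry, and the function `schedule_maintenance` are hypothetical and do not correspond to any particular vendor’s function-calling schema; the brute-force search merely stands in for a real optimization solver. The sketch picks the cheapest maintenance window in which taking a unit offline never drops the reserve margin below the unit’s capacity.

```python
import json
from typing import Any, Callable, Dict, List


def schedule_maintenance(outage_cost: List[float], reserve_margin_mw: List[float],
                         unit_capacity_mw: float, duration_hours: int) -> Dict[str, Any]:
    """Brute-force stand-in for a solver: find the cheapest feasible outage window."""
    best = None
    for start in range(len(outage_cost) - duration_hours + 1):
        window = range(start, start + duration_hours)
        # Feasibility: the remaining margin must cover the unit taken offline.
        if any(reserve_margin_mw[h] < unit_capacity_mw for h in window):
            continue
        cost = sum(outage_cost[h] for h in window)
        if best is None or cost < best["total_cost"]:
            best = {"start_hour": start, "total_cost": cost}
    if best is None:
        return {"start_hour": None, "total_cost": None}
    return best


# Hypothetical registry of tools that the LLM is allowed to invoke.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "schedule_maintenance": schedule_maintenance,
}


def dispatch_tool_call(tool_call_json: str) -> str:
    """Execute a tool call emitted by the LLM and return a JSON result for its reply."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**call["arguments"])
    return json.dumps({"tool": name, "result": result})
```

In use, the LLM would emit a string such as `{"name": "schedule_maintenance", "arguments": {...}}`, the platform would pass it to `dispatch_tool_call`, and the returned JSON would be fed back to the LLM so that the numerical result is computed by the tool rather than generated as text.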

5. Conclusions

This paper leverages retrieval-augmented technology from human–computer interaction to construct a root cause query model for equipment failures within a virtual power plant intelligent operation and maintenance platform, based on a locally established large language model. First, it clarifies the virtual power plant’s architectural framework comprising the “resource layer, network layer, platform layer, and upper-level systems,” detailing the functions of each layer within the virtual power plant and delineating the direction of information and command flows between them. Next, the virtual power plant intelligent operation and maintenance platform within the platform layer is further elaborated. The platform’s time-based workflow and corresponding functional modules are analyzed. The platform comprises eight functional modules, each subdivided into four specialized functions. These functions collaborate within the workflow to achieve a logical closed loop of “data-decision-control-verification-value.” A root cause analysis model for equipment failures was then developed on the local hardware platform for the virtual power plant intelligent operation and maintenance platform, alongside the construction of a local knowledge base for equipment failure root causes. During local model deployment, two large models and a baseline model were selected for performance comparison. Through comparative experiments, the DeepSeek-R1-Distill-Qwen-1.5B model was found to be faster and more accurate without RAG, making it suitable for environments requiring rapid response. In contrast, the DeepSeek-R1-Distill-Qwen-7B model offers greater stability and, when combined with RAG, can generate higher-quality answers, providing more detailed references for operations tasks. The next phase of work involves selecting additional models for comparison and continuously updating the knowledge base to refine the establishment of the virtual power plant’s intelligent operation and maintenance platform.

Author Contributions

Conceptualization, Z.L. and Y.Z.; methodology, Z.L. and Y.Z.; software, Y.Z., L.N. and G.D.; validation, X.Z. and L.N.; formal analysis, Y.Z. and X.Z.; investigation, X.Z., G.D. and R.S.; resources, G.D. and R.S.; data curation, X.Z. and L.N.; writing—original draft preparation, Z.L. and Y.Z.; writing—review and editing, Z.L., X.Z. and L.N.; visualization, L.N. and G.D.; supervision, Z.L.; project administration, Y.Z. and R.S.; funding acquisition, Z.L. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Anshan Power Supply Company of Liaoning State Grid Electric Power Co., Ltd., under the project “Research on Intelligent Operation and Maintenance Technology of Virtual Power Plants Based on Large Language Models” (Project No. 2025YF-13).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Acknowledgments

The authors would like to thank the reviewers for their valuable comments and suggestions.

Conflicts of Interest

Authors Zhengping Li, Xiangbo Zhu, Lei Nie and Guanming Ding were employed by the company State Grid Anshan Power Supply Company, Anshan, China. Author Yufan Zhao was enrolled at University of Science and Technology Beijing, Beijing, China. Author Ruizhuo Song was employed by University of Science and Technology Beijing, Beijing, China. The authors declare no conflicts of interest.

References

1. Wei, Z.; Yu, S.; Sun, G.; Sun, Y.; Yuan, Y.; Wang, D. Concept and development of virtual power plant. Autom. Electr. Power Syst. 2013, 37, 1–9.

2. National Development and Reform Commission of the People’s Republic of China. Notice on Deepening Market-Based Reform of Feed-In Tariffs for Renewable Energy Promoting High-Quality Development of Renewable Energy. Available online: https://www.ndrc.gov.cn/xxgk/zcfb/tz/202502/t20250209_1396066.html (accessed on 8 September 2025).

3. Shimon, A.; Carayannis, E.G.; Preston, A. The Virtual Utility: Some Introductory Thoughts on Accounting, Learning and the Valuation of Radical Innovation; Springer: Boston, MA, USA, 1997; pp. 71–96.

4. China Energy News. State Grid Unveils China’s First Multi-Billion-Level Multimodal Large Model for the Power Industry. Available online: https://paper.people.com.cn/zgnyb/pc/content/202412/23/content_30048825.html (accessed on 9 September 2025).

5. State Grid Electric Power Research Institute: Nari Group Corporation. Advancing the Construction of New Power Systems: Scientific Achievements 4: Bright Power Large Model. Available online: http://www.sgepri.sgcc.com.cn/html/sgepri/gb/xwzx/zbdt/20250609/901344202506091041000002.shtml (accessed on 9 September 2025).

6. China Southern Power Grid. Make MegaWatts Bigger and Better. Available online: https://www.csg.cn/xwzx/2024/gsyw/202408/t20240820_342231.html (accessed on 9 September 2025).

7. Huawei Cloud. PanguLargeModels. Available online: https://www.huaweicloud.com/product/pangu.html (accessed on 9 September 2025).

8. National Nuclear Safety Administration of the People’s Republic of China. State Power Investment Corporation Energy Science and Technology Research Institute’s Virtual Power Plant Platform Integrates DeepSeek Local Large Model. Available online: https://nnsa.mee.gov.cn/ztzl/haqshmhsh/haqrdmyyt/202502/202502/t20250228_1103074.html (accessed on 17 September 2025).

9. The State Council of the People’s Republic of China. New Generation Artificial Intelligence Development Plan. Available online: https://www.gov.cn/zhengce/content/2017-07/20/content_5211996.htm?ivk_sa=1024320u (accessed on 24 September 2025).

10. Li, P.; Huang, W.; Liang, L.; Dai, Z.; Cao, S.; Che, L.; Tu, C. Application and prospect of hybrid human-machine decision intelligence in the dispatch and control of next-era power systems. Proc. CSEE 2024, 44, 6347–6367.

11. Hou, R.; Zhang, Y.; Ou, Q.; Li, S.; He, Y.; Wang, H.; Zhou, Z. Recommendation method of power knowledge retrieval based on graph neural network. Electronics 2023, 12, 3922.

12. Chen, Z.; Mi, W.; Lin, J.; Wang, H.; Wang, H.; Dong, G. Discussion on intelligence assistant scheme of dispatching and control operation in power grid. Autom. Electr. Power Syst. 2019, 43, 173–178+186.

13. Sun, H.; Huang, T.; Guo, Q.; Zhang, B.; Guo, W.; Liu, W.; Xu, T.; Xu, T. Automatic operator for decision-making in dispatch: Research and applications. Power Syst. Technol. 2020, 44, 1–8.

14. Power Sector Roundtable. Research on Technological System and Business Model for Virtual Power Plants Towards Carbon Peaking and Neutrality Goals. Available online: http://www.nrdc.cn/Public/uploads/2023-11-20/655b21ecbf17d.pdf (accessed on 9 September 2025).

15. Zhong, Y.; Ji, L.; Li, J.; Zuo, J.; Wang, Z.; Wu, S. System framework and comprehensive functions of intelligent operation management and control platform for virtual power plant. Power Gener. Technol. 2023, 44, 656–666.

16. Chen, J.; Sheng, S.; Lin, J.; Li, Y.; Peng, Y.; Xu, C. Zero sample power grid equipment ontology defect grade identification method based on knowledge enhanced large language model. High Technol. Lett. 2025, 35, 429–439.

17. Li, S.; Lu, Y.; Chen, X.; Wang, N.; Zhu, D. Implementation of construction engineering management knowledge question-answering model based on retrieval-augmented generation. Sci. Technol. Eng. 2025, 25, 10840–10849.

18. Qu, C.; Dai, S.; Wei, X.; Cai, H.; Wang, S.; Yin, D.; Xu, J.; Wen, J. Tool learning with large language models: A survey. Front. Comput. Sci. 2025, 19, 198343.

19. Zhang, M.; Yin, W.; Wang, M.; Shen, Y.; Xiang, P.; Wu, Y.; Zhao, L.; Pan, J.; Jiang, H.; Huang, K. MindOpt tuner: Boost the performance of numerical software by automatic parameter tuning. arXiv 2023, arXiv:2307.08085.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).