1. Introduction

Within the theoretical framework of the information society, the deep integration of the internet and mobile communication technologies has propelled the comprehensive digitization of information dissemination and transmission. Empirical studies indicate that digital images, as quintessential multi-dimensional data carriers, now achieve three-dimensional spatial expansion in transmission channels, enhancing information representation efficiency by 42 percent compared with traditional models while maintaining a data fidelity rate of 98.2 percent [1]. This technological empowerment, analyzed through Lessig’s digital rights management framework, manifests as a double-edged sword: it democratizes information accessibility by eliminating geographical and temporal constraints, yet simultaneously introduces systemic security risks [2]. Such duality underscores the urgent need for adaptive regulatory frameworks to reconcile innovation incentives with intellectual property safeguards in hyperconnected ecosystems [3].

To mitigate the malicious exploitation of digital images and safeguard their intellectual property rights, digital watermarking technology has been established as an effective copyright protection mechanism. By embedding imperceptible identifiers into digital content through information embedding principles, this technology enables robust traceability authentication for unauthorized usage [4,5]. Empirical studies demonstrate that such imperceptible markers achieve an 87.6 percent success rate in copyright tracking across diverse application scenarios. Digital watermarks can be broadly categorized into spatial-domain watermarks and frequency-domain watermarks. Spatial-domain approaches, while computationally simpler, exhibit inferior robustness compared with frequency-domain methods [6,7]. For instance, Deep Shikha Chopra et al. proposed a spatial-domain technique where the least significant bits (LSBs) of pixel values are directly replaced with watermark data. Another widely adopted spatial-domain method is Local Binary Pattern (LBP) [8,9]. However, such methods exhibit critical vulnerabilities in maintaining watermark integrity under adversarial conditions.

Frequency-domain watermarking demonstrates superior attack resistance and imperceptibility compared with spatial-domain approaches [10], as its energy is stochastically distributed across spectral components and tightly integrated with image texture features, making precise localization and removal by adversaries highly challenging [11]. The human visual system’s lower sensitivity to spectral noise further ensures covert watermark preservation. For instance, Bao et al. [12] embedded watermarks by transforming the U component of the YUV color space into the Radon domain, performing 2D-DCT on selected blocks, and modifying fixed mid-frequency coefficients. Wang et al. [13] enhanced imperceptibility through periodic watermark encryption and sub-block mapping mechanisms. Kumari et al. [14] optimized embedding positions via DWT-SVD decomposition combined with a tunicate swarm optimization algorithm (TSA), significantly improving robustness against geometric distortions. Woo et al. [15] mitigated rounding errors in DWT using intelligent optimization techniques, achieving higher imperceptibility. While Zhang et al. [16] leveraged the uniqueness of the DFT’s DC component for blind extraction in the spatial domain, DFT-based methods remain vulnerable to coordinate-level attacks in vector map applications due to localized sensitivity. To address this, Qu et al. [17] proposed a hybrid DFT-SVD framework that embeds watermarks into invariant feature sequences derived from geometric transformations, thereby resisting affine attacks. These hybrid strategies underscore the necessity of integrating multiple frequency-domain transformations to balance robustness and adaptability in complex application scenarios [18].

Frequency-domain algorithms typically process grayscale images directly. For color images, conventional approaches decompose them into three separate channels (e.g., R, G, B) for independent watermark embedding, which inevitably disrupts inter-channel correlations and compromises color integrity [19]. To preserve these intrinsic relationships, quaternion-based methodologies emerge as an optimal solution [20]. By representing color images as pure quaternion matrices, where the three imaginary components encode the red, green, and blue channels, quaternion frameworks enable holistic processing of color data, preserving both chromatic coherence and spatial-textural interdependencies [21]. This unified representation not only retains color fidelity but also enhances watermark robustness by leveraging the algebraic properties of quaternions, such as rotational invariance and multi-channel synchronization [22], which are critical for resisting geometric distortions and channel-specific attacks. Bas et al. [23] proposed a method integrating the quaternion Fourier transform (QFT) with quantization index modulation, optimizing embedding parameters through performance analysis across diverse color image filtering processes while leveraging quaternion algebra to preserve holistic chromatic features. Wang et al. [24] developed a quaternion polar harmonic transform (QPHT)-based framework that extracts stable structural feature points from color images, generating geometrically invariant regions for watermark embedding in frequency-domain coefficients correlated with color components, thereby maintaining chromatic integrity under affine transformations. Yan et al. [25] introduced a quaternion-based image hashing technique that eliminates geometric distortion effects through quaternion spectral transformations, exhibiting exceptional resilience against common signal processing attacks. Chen et al. [26] designed a QSVD-based algorithm incorporating key-dependent coefficient selection and cross-correlation optimization, achieving high-fidelity real-time embedding with minimal perceptual distortion. Zhang et al. [27] implemented quaternion Householder transformations for color image watermarking, demonstrating robustness against compression and noise injection. Gong et al. [28] enhanced geometric attack resistance by combining quaternion fractional orthogonal Fourier–Mellin moments (QFrOOFMM) with least squares support vector regression (LS-SVR) for distortion parameter estimation, enabling precise watermark recovery. Ouyang et al. [29] further integrated improved uniform log-polar mapping (IULPM) with the quaternion discrete Fourier transform (QDFT), significantly boosting resistance to scaling and rotation attacks.

This study proposes a robust watermarking algorithm based on the quaternion Gyrator transform (QGT) and neighborhood coefficient statistical features, addressing existing challenges by reducing computational complexity while enhancing security through visual capacity value optimization and watermark scrambling. The primary contributions are as follows:

(1) A watermark embedding framework built on the QGT. The neighborhood coefficients determine the final watermark value, the authentication watermark is embedded in the real component of the quaternion coefficients, and the synchronization watermark is then embedded using the IULPM method to complete the embedding. This design increases security and robustness against geometric attacks. In addition, the DQ embedding sequence both maintains visual fidelity and enhances the security of the watermark.

(2) An adaptive embedding strategy that prioritizes sub-blocks with higher visual capacity values to optimize imperceptibility, coupled with a support vector machine (SVM)-based extraction mechanism. The SVM models nonlinear relationships between watermark values, spatial positions, and neighborhood coefficients, enabling accurate watermark recovery under adversarial conditions. Experimental validation confirms superior resistance to compression, noise, and geometric distortions compared with conventional spatial-domain and frequency-domain methods.

The rest of the paper is organized as follows.

Section 2 briefly reviews the techniques used in the proposed scheme.

Section 3 gives a detailed description of the proposed image watermarking scheme.

Section 4 presents the experimental results and compares the performance of the proposed scheme with state-of-the-art methods reported in the literature.

Section 5 summarizes the paper and suggests some directions for future work.

2. Background

This section introduces the related techniques, including Arnold scrambling, the Gyrator transform, quaternions and their related theory, and the non-uniformity of the visual capacity of image blocks.

2.1. Scrambling of Image Watermarking

Digital watermarking technology is usually inseparable from encryption technology [30]. In digital watermarking, the watermark information is generally encrypted to ensure its security. Under normal circumstances, simple operations suffice for encrypting the watermark. However, if the watermark information is relatively complex, more sophisticated encryption techniques are needed. For example, Gao et al. [31] used a combination of chaotic mapping and neural networks to encrypt color images, which not only provides excellent security but also reduces the time complexity. Since the watermark in this paper is only a binary image and does not require complex operations, we use only the Arnold transform to encrypt the watermark.

The Arnold scrambling transformation, also known as cat mapping, is widely adopted as a pre-processing technique in information hiding [32] to enhance watermark security by converting meaningful watermark images into noise-like patterns prior to embedding. This method scrambles pixel positions through iterative coordinate transformations governed by the formula

$$\begin{bmatrix} x' \\ y' \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} \bmod P$$

where $x, y \in \{0, 1, \ldots, P-1\}$, $P$ is the size of the binary watermark image to be processed, $(x, y)^T$ is the pixel position of the watermark image before scrambling, written as a matrix of two rows and one column, and $(x', y')^T$ is the position of the pixel of the watermark image after scrambling.

The Arnold scrambling transformation relocates pixel coordinates from $(x, y)$ to $(x', y')$ in digital watermarking, achieving information concealment through iterative position permutations. The number of scrambling iterations ($K$) serves as a private key to enhance cryptographic security by obfuscating spatial correlations. For watermark recovery, the inverse Arnold transformation is defined as

$$\begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 2 & -1 \\ -1 & 1 \end{bmatrix} \begin{bmatrix} x' \\ y' \end{bmatrix} \bmod P$$
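As a minimal sketch of the scrambling and recovery steps described in this subsection, the following Python code applies the standard Arnold cat map, $(x, y) \mapsto (x + y,\; x + 2y) \bmod P$, and its inverse to a small square image stored as nested lists. The function names, the 8 × 8 test image, and the key value `K = 5` are illustrative assumptions and are not taken from the paper.

```python
def arnold_scramble(img, iterations):
    """Scramble a square P x P image with the standard Arnold cat map."""
    p = len(img)
    for _ in range(iterations):
        out = [[0] * p for _ in range(p)]
        for x in range(p):
            for y in range(p):
                # Forward map: (x', y') = (x + y, x + 2y) mod P
                out[(x + y) % p][(x + 2 * y) % p] = img[x][y]
        img = out
    return img


def arnold_unscramble(img, iterations):
    """Undo arnold_scramble by applying the inverse map the same number of times."""
    p = len(img)
    for _ in range(iterations):
        out = [[0] * p for _ in range(p)]
        for xs in range(p):
            for ys in range(p):
                # Inverse map: (x, y) = (2x' - y', -x' + y') mod P
                out[(2 * xs - ys) % p][(ys - xs) % p] = img[xs][ys]
        img = out
    return img


# Hypothetical 8 x 8 "watermark" with unique pixel values, and an assumed key K = 5.
P = 8
K = 5
watermark = [[x * P + y for y in range(P)] for x in range(P)]
scrambled = arnold_scramble(watermark, K)
recovered = arnold_unscramble(scrambled, K)
```

Because the map is a permutation of pixel positions, the recovered image matches the original exactly when the same iteration count is used for both directions, which is why the iteration count can act as a key.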

2.2. Introduction to Gyrator Transformations

Rodrigo and his team proposed a first-order optical system model based on a cylindrical lens array in 2006. The system rotates a two-dimensional image by adjusting the optical path parameters, while retaining the characteristics of the separable fractional-order Fourier transform. The core innovation lies in extending the order of the traditional Fourier transform into an angle variable, forming a mathematical framework with the rotation angle as an independent control parameter; it is therefore called the Gyrator transform. The main idea of the calculation is as follows. Suppose there is a grayscale image $f(x, y)$ and the rotation angle is $\alpha$; the Gyrator transform is then

$$G^{\alpha}[f](u, v) = \frac{1}{|\sin\alpha|} \iint f(x, y) \exp\left( i 2\pi \frac{(xy + uv)\cos\alpha - (xv + yu)}{\sin\alpha} \right) dx\, dy$$

According to Euler’s formula, the above transformation can be rewritten as follows:

$$G^{\alpha}[f](u, v) = \frac{1}{|\sin\alpha|} \iint f(x, y) \left[ \cos(2\pi\varphi) + i \sin(2\pi\varphi) \right] dx\, dy, \qquad \varphi = \frac{(xy + uv)\cos\alpha - (xv + yu)}{\sin\alpha}$$

The inverse of the Gyrator transform is obtained by negating the rotation angle: applying the transform with a rotation angle of $-\alpha$ to the transformed result reconstructs the original image $f(x, y)$. As a linear operation, this transform was first used in optical information processing and has been progressively extended to the domain of image encryption [33]. Its primary advantage lies in the freely adjustable rotation angle $\alpha$, which serves as the key parameter, akin to the order parameter of the fractional-order Fourier transform. Without the correct angle parameter, an unauthorized decryptor cannot recover the original image through conventional reverse operations, which significantly enhances the security of the encryption system. In comparison with the traditional Fourier transform, the Gyrator transform facilitates hybrid analysis in the space-frequency domain through optical rotation, offering distinct advantages in image feature extraction and information hiding. It has emerged as one of the leading tools in optical image processing, with substantial potential for algorithm optimization and multi-dimensional extensions, such as applications in the quaternion domain.

2.3. Quaternion Correlation Theory

Quaternions, a class of hypercomplex numbers, were proposed by Hamilton in 1843 as an extension of the complex numbers, to address the limitation that complex numbers can describe two-dimensional but not three-dimensional space. The three imaginary parts of a quaternion are related to one another, and the three channels of an RGB color image are likewise closely related in structure, so the channels map naturally onto the quaternion components. Quaternions can therefore serve as a data structure for expressing color images, and researchers have introduced them into various fields of color image processing, replacing traditional image processing schemes with quaternion counterparts such as the quaternion Fourier transform, quaternion singular value decomposition, quaternion wavelet transform, and quaternion Gyrator transform. The following introduces the basic arithmetic rules of quaternions and the quaternion Gyrator transform, which is closely related to this paper.

2.3.1. Quaternion Base Operations

Quaternions, as an extension of real and complex numbers, consist of one real part and three imaginary parts [34,35,36]. The mathematical expression is

$$q = a + bi + cj + dk$$

where $a$, $b$, $c$, and $d$ are real numbers; $i$, $j$, and $k$ are the imaginary units of the quaternion; and the imaginary units satisfy the following relationship:

$$i^2 = j^2 = k^2 = ijk = -1, \quad ij = -ji = k, \quad jk = -kj = i, \quad ki = -ik = j$$

The component $a$ is the real part of the quaternion $q$, and $bi + cj + dk$ is the imaginary part of the quaternion $q$. A pure quaternion is a quaternion whose real part is zero, i.e., $a = 0$. When the imaginary part is 0, the quaternion reduces to a real number.

The conjugate of the quaternion $q$ is $\bar{q} = a - bi - cj - dk$. The modulus of the quaternion $q$ is

$$|q| = \sqrt{a^2 + b^2 + c^2 + d^2}$$

The modulus of a unit pure quaternion is 1.

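Basic operations with quaternions: Let two quaternions be $q_1 = a_1 + b_1 i + c_1 j + d_1 k$ and $q_2 = a_2 + b_2 i + c_2 j + d_2 k$, respectively.

Addition of quaternions is performed on the corresponding parts, two quaternions are equal when their corresponding parts are equal, and multiplication of quaternions is given by

$$\begin{aligned} q_1 q_2 = {} & (a_1 a_2 - b_1 b_2 - c_1 c_2 - d_1 d_2) + (a_1 b_2 + b_1 a_2 + c_1 d_2 - d_1 c_2)\, i \\ & + (a_1 c_2 - b_1 d_2 + c_1 a_2 + d_1 b_2)\, j + (a_1 d_2 + b_1 c_2 - c_1 b_2 + d_1 a_2)\, k \end{aligned}$$

Addition of quaternions is associative and commutative, but multiplication of quaternions is not commutative. When the real part of a quaternion is 0, the quaternion is said to be a pure quaternion. Each pixel of a color image $f(x, y)$ is represented by a pure quaternion as

$$f(x, y) = f_R(x, y)\, i + f_G(x, y)\, j + f_B(x, y)\, k$$

where $f_R(x, y)$, $f_G(x, y)$, and $f_B(x, y)$ denote the red, green, and blue components of an image pixel, respectively, and $i$, $j$, and $k$ are the imaginary units of the quaternion.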
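The quaternion rules of this subsection can be checked numerically. The sketch below stores a quaternion as a 4-tuple `(a, b, c, d)` for $a + bi + cj + dk$ and implements the Hamilton product, the conjugate, the modulus, and the pure-quaternion encoding of an RGB pixel. The tuple representation and the function names are illustrative choices, not part of the paper.

```python
import math


def qmul(p, q):
    """Hamilton product of two quaternions given as (a, b, c, d) tuples."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,  # real part
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,  # i component
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,  # j component
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,  # k component
    )


def qconj(q):
    """Conjugate: negate the three imaginary components."""
    a, b, c, d = q
    return (a, -b, -c, -d)


def qnorm(q):
    """Modulus: square root of the sum of squared components."""
    return math.sqrt(sum(v * v for v in q))


def pixel_to_quaternion(r, g, b):
    """Encode an RGB pixel as a pure quaternion r*i + g*j + b*k."""
    return (0, r, g, b)


I = (0, 1, 0, 0)
J = (0, 0, 1, 0)
K = (0, 0, 0, 1)

ij = qmul(I, J)   # equals K
ji = qmul(J, I)   # equals -K, showing that multiplication is not commutative
pixel = pixel_to_quaternion(3, 4, 12)
```

The products `ij` and `ji` differ in sign, which is the non-commutativity that motivates separate left and right forms of quaternion transforms.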

2.3.2. Quaternion Gyrator Transformations

Because multiplication of quaternions is not commutative, the quaternion Gyrator transform, like the quaternion Fourier transform, is defined in two forms: the left quaternion Gyrator transform and the right quaternion Gyrator transform. For a color image, the left quaternion Gyrator transform with rotation angle $\alpha$ is

$$G_L^{\alpha}(u, v) = \iint K_{\alpha}^{\mu}(x, y, u, v)\, f(x, y)\, dx\, dy$$

The right quaternion Gyrator transform is

$$G_R^{\alpha}(u, v) = \iint f(x, y)\, K_{\alpha}^{\mu}(x, y, u, v)\, dx\, dy$$

where $f(x, y)$ is a color image represented by a pure quaternion matrix:

$$f(x, y) = f_R(x, y)\, i + f_G(x, y)\, j + f_B(x, y)\, k$$

The subscripts R, G, and B denote the three color channels, and $K_{\alpha}^{\mu}(x, y, u, v)$ is the kernel function of the transformation [37], which is expressed as follows:

$$K_{\alpha}^{\mu}(x, y, u, v) = \frac{1}{|\sin\alpha|} \exp\left( 2\pi\mu \frac{(xy + uv)\cos\alpha - (xv + yu)}{\sin\alpha} \right)$$

where $\mu$ is a unit pure quaternion, i.e., $\mu^2 = -1$, and $|\mu| = 1$. The variable $\alpha$ represents the angle of rotation of the image. To reconstruct the original signal from the transformed signal, the inverse QGT corresponds to the QGT at rotation angle $-\alpha$.

From the conjugate property of the quaternion product, it follows that the left quaternion Gyrator transform and the right quaternion Gyrator transform can be converted to each other as follows:

2.4. The Non-Uniformity of Visual Capacity

The transparency of digital watermarking pertains to the perceptual invariance between the original host image and the watermarked image as assessed by the human visual system (HVS), ensuring that embedded watermarks remain imperceptible. Visual thresholds demonstrate spatial heterogeneity; areas with significant background variations or complex textures inherently exhibit higher thresholds due to visual masking effects, where rapid luminance gradients or intricate patterns diminish the HVS’s sensitivity to localized perturbations. By leveraging the HVS’s insensitivity to subtle spatial distortions while maintaining acute detection of granular artifacts, watermark embedding must comply with sub-threshold intensity criteria to prevent perceptibility. This requires adaptive watermark embedding, where local visual capacity—measured through gradient magnitude, edge density, or texture complexity—dictates region-specific embedding strengths. By focusing on high-capacity areas (e.g., textured regions with elevated masking thresholds) for more robust watermark insertion while safeguarding low-capacity zones (e.g., smooth backgrounds), the balance between imperceptibility and robustness is systematically optimized.

where

.

i and

j refer to the pixel positions of the sub-blocks to be calculated at present. The carrier image is a square matrix of size

, partitioned into

sub-blocks. Let

denote a sub-block of the carrier image,

represent the mean value of that sub-block, and

be the weighted correction factor (0.6–0.7) [

38]. And the range of

itself does not affect the performance but only plays a balancing role.

Unlike grayscale images, the carrier image is a quaternion-based color image, where the parameters within the numerator and denominator brackets in Equation (16) are quaternions. The operator $|\cdot|$ calculates the modulus of the quaternion parameters, ensuring algebraic consistency in the computation of sub-block statistical features while preserving inter-channel correlations inherent to color representations.

4. Experimentation

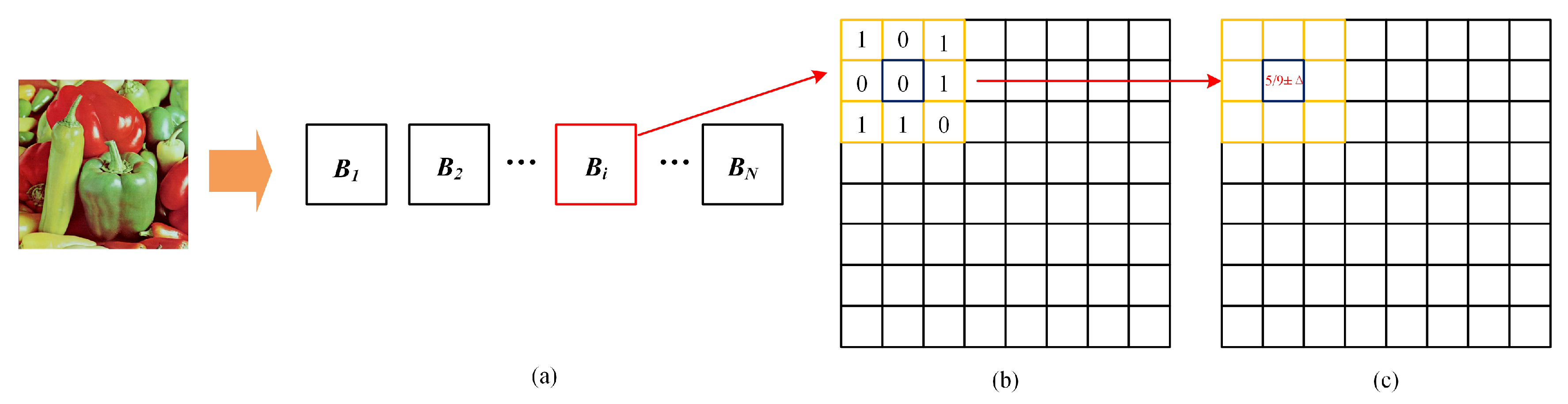

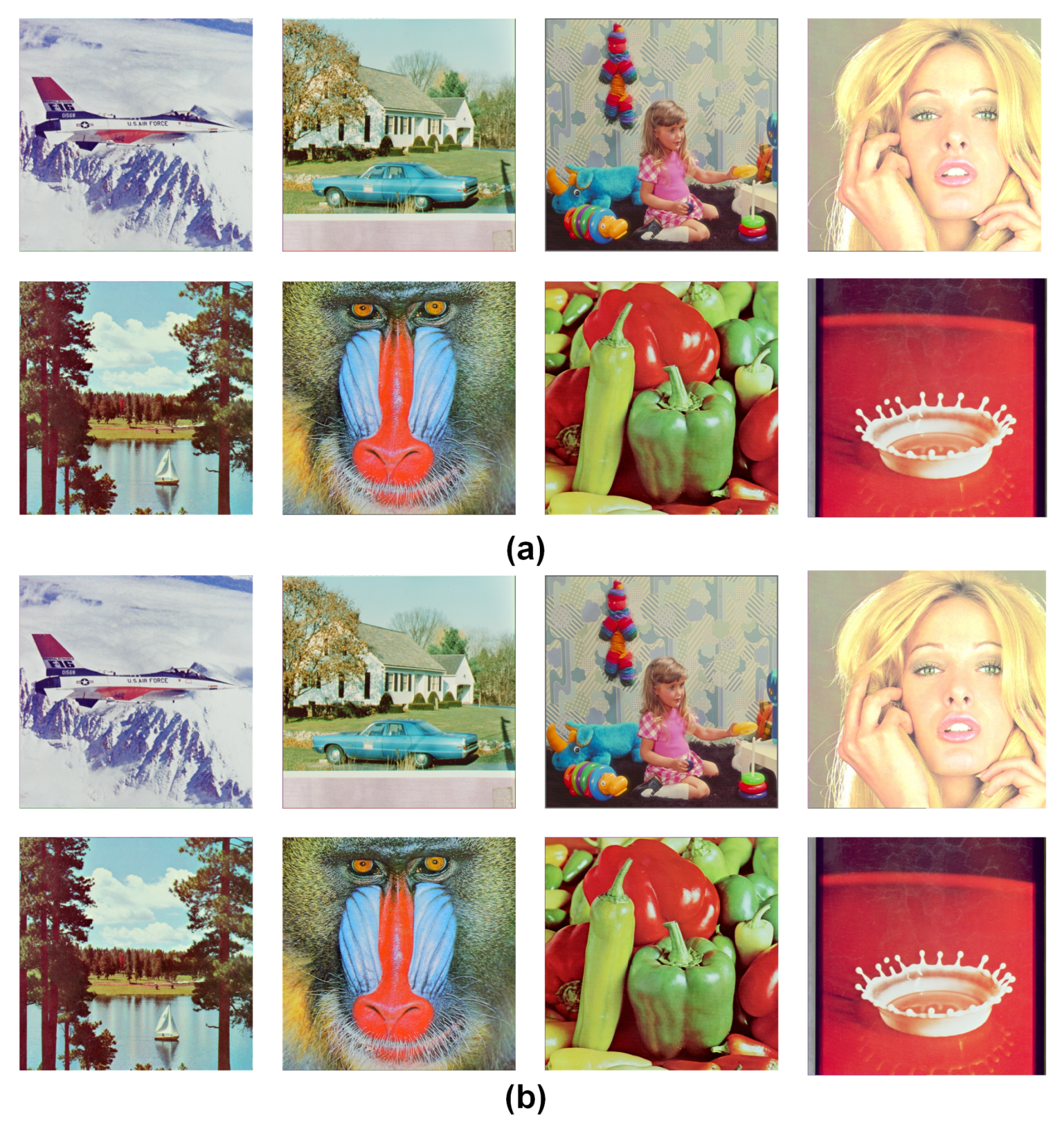

The performance of a watermarking algorithm is typically evaluated in terms of both robustness and imperceptibility. To assess these two properties for the proposed algorithm, the following experiments use color digital images of size 512 × 512 as host images, as depicted in Figure 4a–h. The host images are standard color images sourced from the CVG-UGR and USC-SIPI databases. The watermark image (Figure 4i) is a binary digital image measuring 64 × 64, and a series of simulated attacks is conducted after the watermark is embedded. To ensure a more accurate and objective evaluation of the algorithm’s performance, the experimental results are compared with several related watermarking techniques. All experimental results were obtained using MATLAB R2023b on a PC with the following specifications: an Intel(R) Xeon(R) CPU E5-1650 v2 @ 3.50 GHz, 32 GB RAM, and Windows 10 Professional Edition.

4.1. Assessment of Indicators

In digital watermarking, image quality assessment is central to validating the effectiveness of a method. The assessment must cover two core performance requirements at the same time: the covertness (i.e., imperceptibility) of the watermark information and the algorithm’s anti-interference ability (i.e., robustness). To quantitatively assess the imperceptibility of the proposed approach, this study adopts two widely recognized indices: Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). In addition, the Normalized Correlation (NC) and the Bit Error Rate (BER) are used to assess the robustness of the approach.

PSNR: PSNR measures the pixel-level difference between the original image and the distorted image and is a widely used tool for objectively assessing the quality of a watermarked image. The higher the PSNR value, the higher the quality of the watermarked image and the better the imperceptibility of the watermarking algorithm. The PSNR of a color digital image is as follows:

$$\mathrm{PSNR} = 10 \lg \frac{3MN \cdot 255^2}{\sum_{j=1}^{3} \sum_{x=1}^{M} \sum_{y=1}^{N} \left[ H(x, y, j) - H^{*}(x, y, j) \right]^2} \tag{19}$$

where $\lg$ is the base-ten logarithm, $j$ indicates the $j$th color layer of the image, $(x, y)$ indicates the pixel location, $M \times N$ is the image size, and $H(x, y, j)$ and $H^{*}(x, y, j)$ are the pixels at position $(x, y)$ in the $j$th layer of the carrier image and the watermarked image, respectively.

The PSNR value can be calculated according to Equation (19). In measuring the imperceptibility of the watermark, the higher the PSNR value, the better the quality of the watermarked image and the lower the visibility of the watermark. Generally, a PSNR higher than 30 dB indicates that the watermarked image is visually indistinguishable from the original image.

SSIM: Like PSNR, SSIM measures image similarity, but it is a more recent metric designed according to the characteristics of the human visual system. The SSIM value lies between 0 and 1 and effectively reflects the perceived quality of the image. The higher the SSIM value, the better the quality of the watermarked image. The SSIM calculation formula is shown in Equation (20).

$$\mathrm{SSIM}(H, H^{*}) = \frac{(2\mu_{H}\mu_{H^{*}} + C_1)(2\sigma_{HH^{*}} + C_2)}{(\mu_{H}^2 + \mu_{H^{*}}^2 + C_1)(\sigma_{H}^2 + \sigma_{H^{*}}^2 + C_2)} \tag{20}$$

where $H$ represents the carrier image, $H^{*}$ represents the watermarked image, $\mu_{H}$ and $\mu_{H^{*}}$ denote the means of $H$ and $H^{*}$, $\sigma_{H}$ and $\sigma_{H^{*}}$ represent the standard deviations of $H$ and $H^{*}$, respectively, $\sigma_{HH^{*}}$ represents the covariance of $H$ and $H^{*}$, and $C_1$ and $C_2$ are two small constants that stabilize the division.

In addition to the metrics mentioned above, the normalized correlation (NC) [39] and the bit error rate (BER) [40] are often used to evaluate a method’s robustness against various attacks. Both compare the original watermark with the extracted watermark. The NC measures the similarity between the original watermark image and its recovered version and lies in the range [0, 1]. The NC is 1 when the two images are identical, decreases as the difference between them grows, and a value of zero means that the two images are completely uncorrelated.

NC: Normalized correlation is an objective criterion for assessing the robustness of watermarking algorithms; the larger the NC value, the greater the similarity between the two images. The NC is commonly calculated as

$$\mathrm{NC} = \frac{\sum_{i}\sum_{j} W(i, j)\, W'(i, j)}{\sqrt{\sum_{i}\sum_{j} W(i, j)^2}\, \sqrt{\sum_{i}\sum_{j} W'(i, j)^2}} \tag{21}$$

where $W$ is the original watermark and $W'$ is the extracted watermark.
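The evaluation indices of this subsection can be illustrated with a short, dependency-free sketch. It assumes 8-bit images (peak value 255) stored as nested lists indexed `[row][column][channel]`, binary watermarks stored as nested lists of 0/1 values, the mean-squared-error form of PSNR pooled over the three color channels, the symmetric normalized form of NC, and BER as the fraction of mismatched bits. These forms and all function names are assumptions made for illustration; the paper’s exact definitions may differ.

```python
import math


def psnr_color(host, marked, peak=255.0):
    """PSNR in dB over all pixels and channels (assumes equal-sized images)."""
    squared_error = 0.0
    count = 0
    for row_h, row_m in zip(host, marked):
        for px_h, px_m in zip(row_h, row_m):
            for h, m in zip(px_h, px_m):
                squared_error += (h - m) ** 2
                count += 1
    if squared_error == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 * count / squared_error)


def nc(w, w_rec):
    """Normalized correlation between two binary watermarks."""
    a = [v for row in w for v in row]
    b = [v for row in w_rec for v in row]
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
    return num / den if den else 0.0


def ber(w, w_rec):
    """Bit error rate: fraction of watermark bits that differ."""
    a = [v for row in w for v in row]
    b = [v for row in w_rec for v in row]
    return sum(x != y for x, y in zip(a, b)) / len(a)


# Tiny hypothetical example: a 2 x 2 color image shifted by one gray level,
# and a 2 x 2 watermark with one flipped bit.
host = [[[100, 100, 100]] * 2 for _ in range(2)]
marked = [[[101, 101, 101]] * 2 for _ in range(2)]
wm = [[1, 0], [0, 1]]
wm_rec = [[1, 1], [0, 1]]

psnr_value = psnr_color(host, marked)
nc_value = nc(wm, wm_rec)
ber_value = ber(wm, wm_rec)
```

With a uniform error of one gray level the mean squared error is 1, so the PSNR reduces to $10 \lg 255^2$, which gives a quick sanity check on the implementation.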

4.2. Parameter Selection

The watermark embedding intensity is always a trade-off, as it directly affects both the quality of the image and the robustness of the watermark. To identify the embedding intensity, we analyze the performance of the watermarking algorithm by computing the average PSNR and NC values on a set of standard color test images of size 512 × 512. The image is partitioned into blocks of size 8 × 8, the authentication watermark is of size 64 × 64, and Select as the embedding position of the watermark. As a result, the host image is segmented into 4096 sub-blocks in total, and the SVM training set likewise contains 4096 samples.
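The block count quoted above follows directly from the image and block sizes. The sketch below enumerates the top-left corners of non-overlapping blocks; the function name `block_origins` is a hypothetical helper introduced only for this illustration.

```python
def block_origins(image_size, block_size):
    """Top-left (row, col) corners of non-overlapping square blocks."""
    if image_size % block_size != 0:
        raise ValueError("image size must be a multiple of the block size")
    steps = range(0, image_size, block_size)
    return [(r, c) for r in steps for c in steps]


origins = block_origins(512, 8)
blocks_per_side = 512 // 8      # 64 blocks along each dimension
total_blocks = len(origins)     # 64 * 64 = 4096 blocks
watermark_bits = 64 * 64        # one bit per block for a 64 x 64 watermark
```

The number of blocks equals the number of bits in a 64 × 64 binary watermark, which is consistent with one training sample per sub-block.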

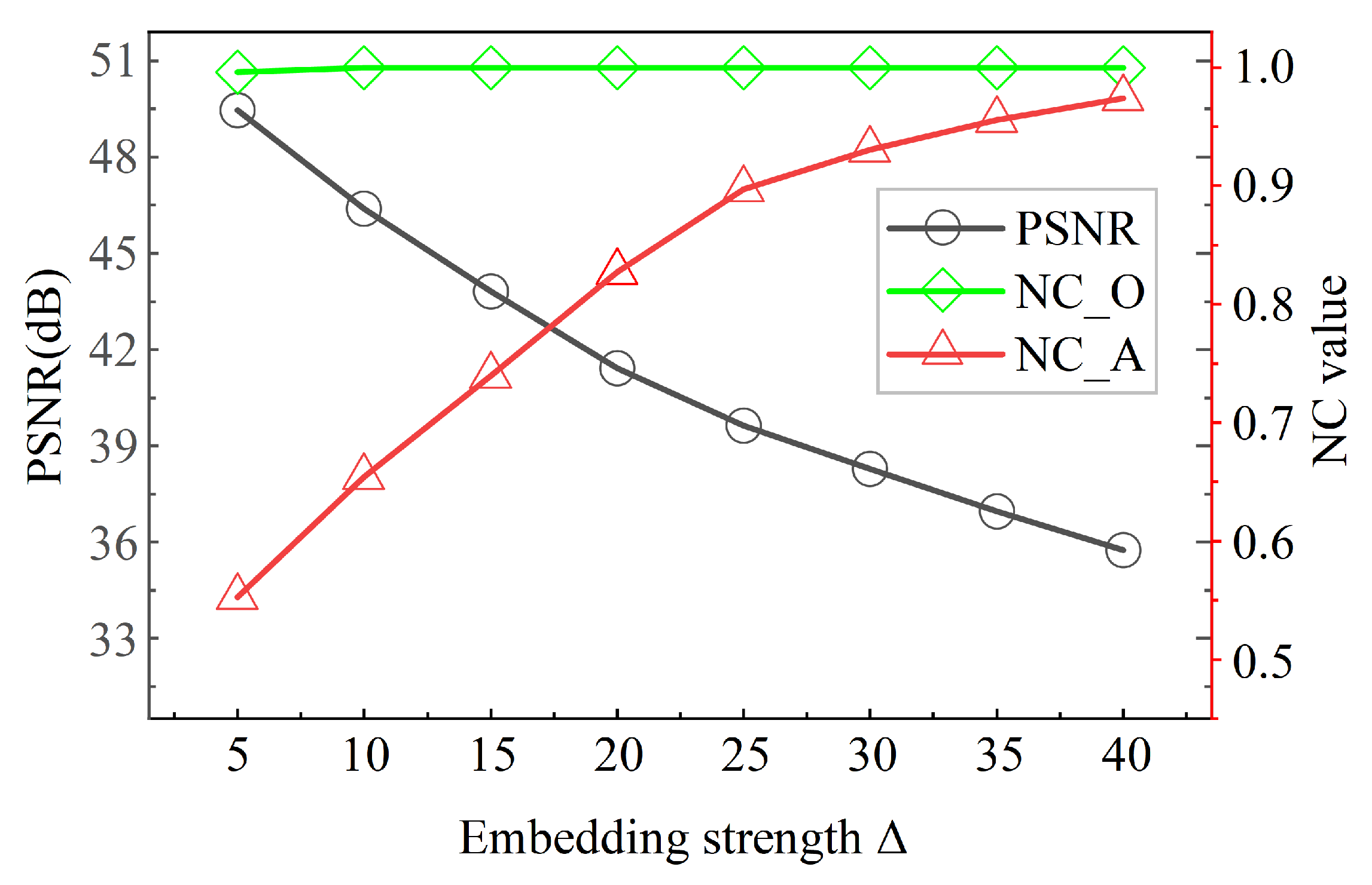

Figure 5 shows the variation of the mean PSNR and NC values with different embedding strengths, where NC_O denotes the NC of the extracted watermark when the image is not attacked, and NC_A denotes the NC of the extracted watermark when the image is attacked.

From these results, we can conclude that a larger embedding intensity makes the watermark more robust but lowers the PSNR of the host image, whereas a smaller embedding intensity yields higher image quality but makes the watermark easier to remove. It is generally accepted that a PSNR value greater than 35 dB and less than 48 dB indicates that the image is visually pleasing [41]. To balance the transparency and robustness of the watermarked image, the embedding intensity was set to 23 in the simulations, so that the PSNR value of the host image after embedding the watermark is about 40 dB.

The Support Vector Machine (SVM) [42] is a supervised learning model mainly used for classification and regression analysis. It can balance model complexity against generalization ability when only limited samples are available, which helps prevent overfitting. The choice of parameters during SVM training has an important influence on the performance of the model. A systematic parameter search combined with cross-validation can effectively balance model complexity and generalization ability, making the SVM applicable to tasks such as classification and regression. For these reasons, the SVM is well suited to the proposed method. The key parameter configurations include setting the kernel type to linear, fixing the regularization parameter (c value) to 1, defining the kernel parameter as 1, disabling the normalization parameter (normalization = 0), and using sequential minimal optimization (SMO) as the solver.

4.3. Analysis of Imperceptibility

To ensure the covert characteristics of digital watermarking, this study employs a dual index system comprising PSNR and SSIM to quantitatively assess the imperceptibility of watermarking. The experimental design is structured as follows: within the eight groups of original carrier images depicted in Figure 4, single authentication watermarks and combinations of authentication and synchronous dual watermarks are embedded using the algorithm outlined in Section 3. Subsequently, the visual difference parameters are calculated based on the normalized Formulas (19) and (20).

The quantitative data presented in Table 1 indicate that the mean PSNR value reaches 41.19 ± 0.36 dB when embedding the authentication watermark and 40.61 ± 0.51 dB when embedding the dual watermarks. The SSIM metrics for both groups stabilize within the 0.99 ± 0.01 range. These data demonstrate that (1) the superposition of two watermarks results in only 0.58 dB of PSNR attenuation, evidencing the multilayer watermarking architecture’s excellent steganographic compatibility, and (2) the standard deviations among the test samples do not exceed 0.51 dB, confirming the algorithm’s robust adaptability across varying image features. Additionally, visual evaluation results, such as the comparison in Figure 6, further substantiate that watermark embedding does not induce noticeable texture distortion or color shift, meeting the visual quality criteria for imperceptible watermarks.

Table 1. PSNR and SSIM results of watermarked images obtained using the method of this paper.

Here, “Auth” means embedding only the authentication watermark, while “Dual” means embedding one authentication watermark and one synchronization watermark.

In particular, although the introduction of the synchronization watermark slightly lowers the PSNR values, the increase in the standard deviation is limited to 0.15 dB, indicating that the redundant information integration strategy of the geometric correction module effectively balances functionality expansion against visual fidelity. This feature enables the method to maintain the covert transmission of watermark information under spatial attacks such as rotation and cropping.

4.4. Robustness Analysis Against Attacks

In digital watermarking, robustness refers to the algorithm’s ability to maintain the recognizability and integrity of the watermark information after the carrier image has been subjected to malicious attacks such as geometric deformation, filtering, compression, and degradation. By quantifying the tolerance threshold of the watermarking system to attacks, this index becomes a core parameter for evaluating the security level of an algorithm. In this study, the attack resistance of the watermarking algorithm is quantitatively evaluated by the NC. First, the watermarked images are subjected to various attacks. Next, the watermarks are extracted from each attacked watermarked image using the extraction and decryption techniques explained in detail in the previous section. Finally, the NCs between the original and extracted watermarks are calculated using Equation (21). The following subsections provide detailed results on robustness.

4.4.1. No Attack

In this simulation, the watermarked images are not attacked. Table 2 lists the NC values and BERs. From Table 2, it can be seen that for all the tested color images, the proposed method achieves an NC value of 1 and a BER value of 0. This shows that the proposed method recovers the watermark without any loss, regardless of which test color image is used.

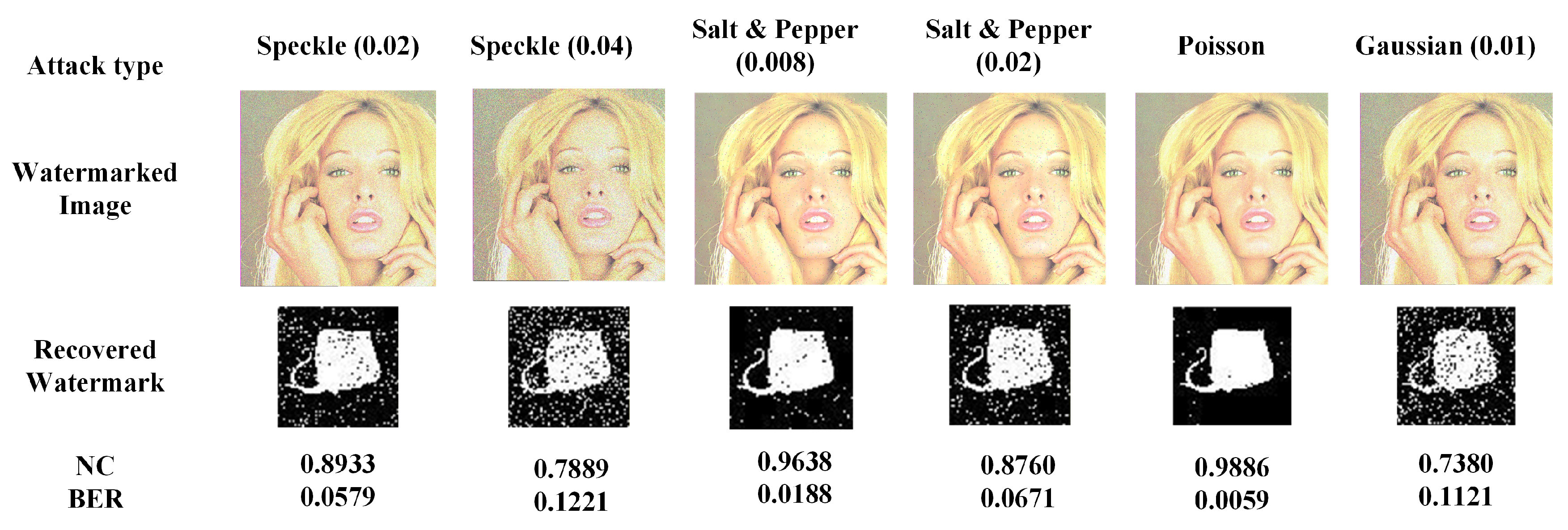

4.4.2. Noise Attack

In this simulation, a variety of noises, such as speckle noise, salt-and-pepper noise, and Gaussian noise, are added to the watermarked image. The NC results are listed in Table 3. In addition, Figure 7 illustrates some visual results of recovering the watermark from the noisy watermarked image. From Table 3, it can be seen that in most cases, the NC value is close to 1. This indicates that the proposed method is highly resilient to the studied noise attacks.

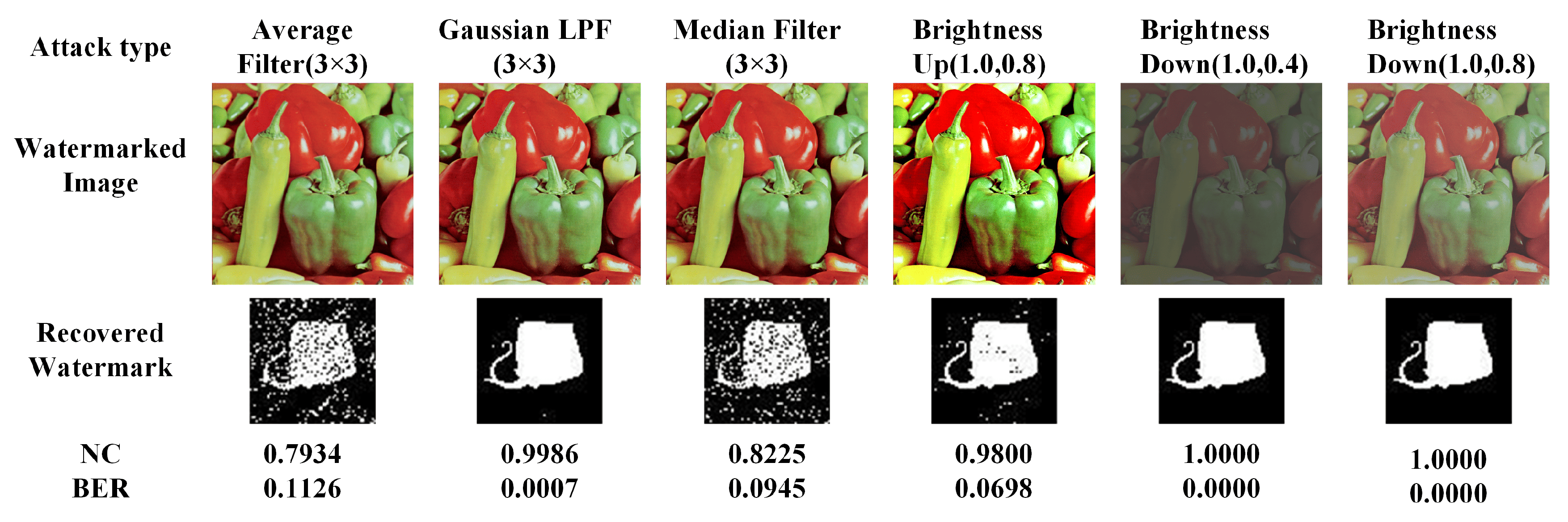

4.4.3. Filtering and Brightness Attack

In this simulation, the watermarked image is attacked by a variety of filters, such as the median filter, averaging filter, and Gaussian low-pass filter (LPF), and by luminance attacks that either increase or decrease the luminance. Table 4 shows the NC values. Moreover, Figure 8 shows some visual results of the watermark extracted from the filtered watermarked image.

As can be seen from Table 4, the NC value of the watermark recovered under the luminance decrease attack is 1, which indicates that the algorithm in this paper is highly resistant to the luminance decrease attack. The NC values for the luminance increase attack are generally above 0.8, with a few lower exceptions. Against the filtering attacks, the NC values are around 0.8 overall, with individual values reaching 1, which shows that the watermark has good resistance to filtering attacks.

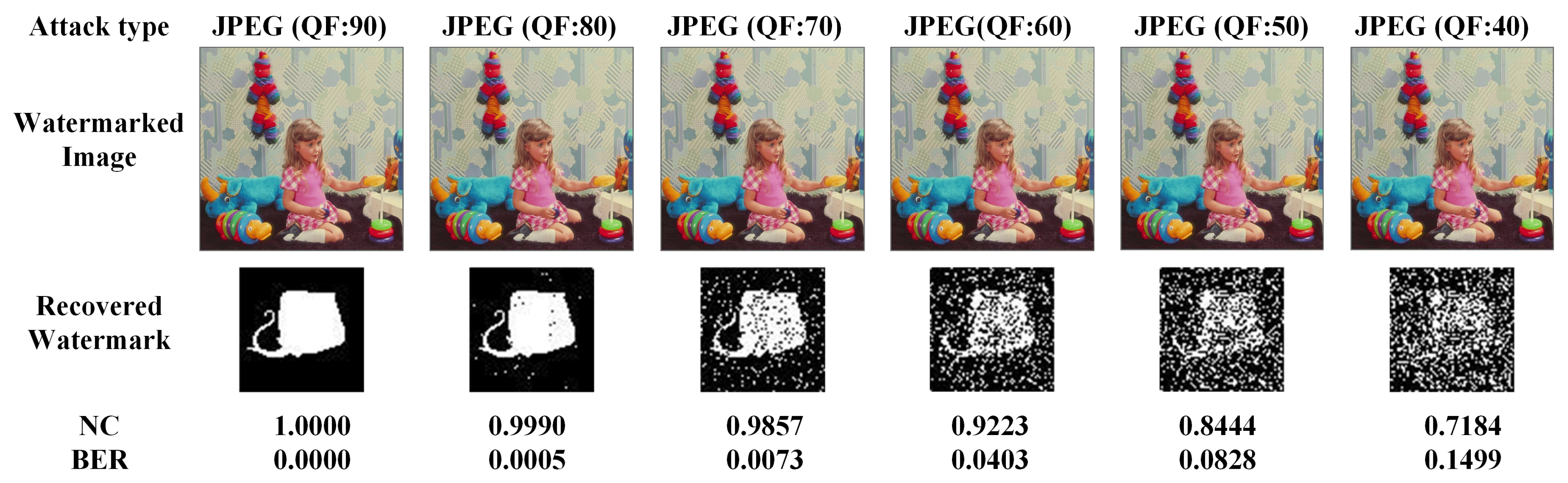

4.4.4. JPEG Compression Attack

JPEG compression reduces the size of an image without appreciably degrading its perceived visual quality. It is a common attack on watermarked images, because images are routinely compressed for more efficient delivery. In this simulation, the watermarked images are compressed using JPEG with a variable quality factor (QF). The NC values are listed in Table 5. Furthermore, Figure 9 displays some visual results of recovering the watermark from compressed watermarked images.

It can be seen from Table 5 that when the QF varies between 60 and 90, the NC is equal or close to 1. This indicates that the recovered watermarks are exactly or almost the same as the original watermarks. When the QF is set to 40 or 50, the NC values of some of the recovered watermarks deviate from 1, and the deviation increases as the QF is lowered. Nevertheless, the NC value remains above 0.64 at a QF of 40, indicating that the present method has a certain degree of resistance to JPEG compression.

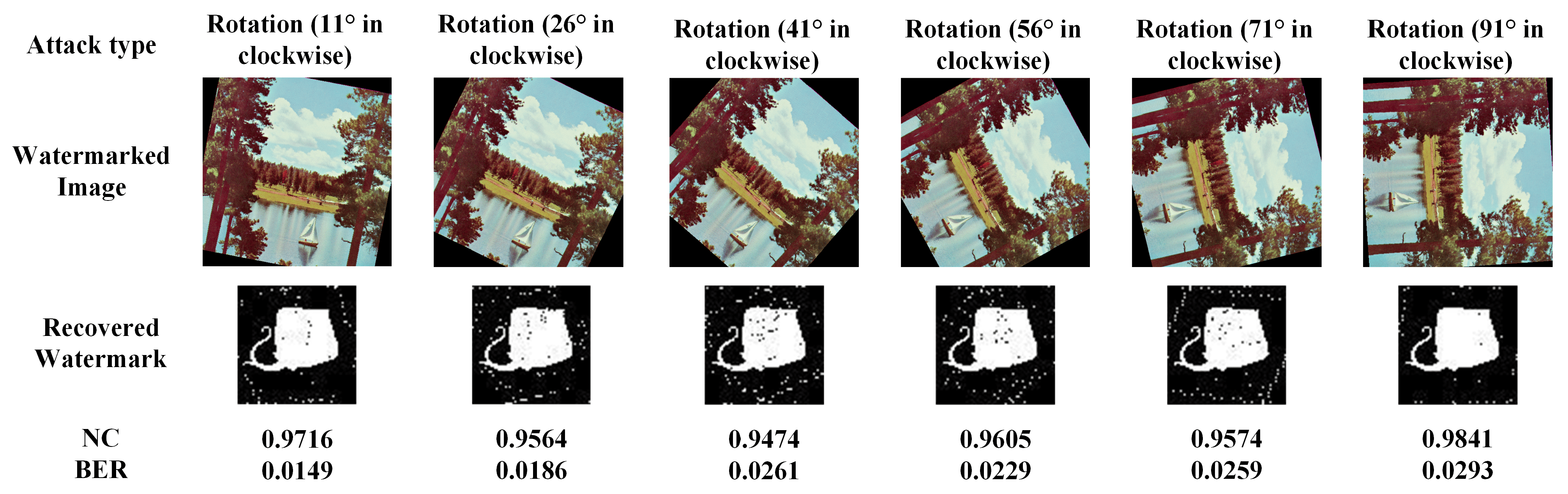

4.4.5. Rotation Attacks

In this experiment, the watermarked image is subjected to a rotation attack: it is rotated clockwise by 11°, 26°, 41°, 56°, 71°, and 91°. The NC values are listed in Table 6. Furthermore, Figure 10 shows some visual results of recovering the watermark from the attacked watermarked image.

As a typical form of geometric attack, image rotation not only changes the absolute position of pixels but also causes relative changes in pixel values. This twofold damage breaks the synchronization between watermark embedding and extraction, which poses a serious challenge to traditional watermarking algorithms. In the experimental design, we systematically analyze the relationship between watermark detection accuracy and rotation angle by rotating the watermarked image by different angles. As can be seen from Table 6, the NC values under rotation attacks are very close to 1. This indicates that the proposed method has excellent robustness to rotation attacks (Figure 10).
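The loss of synchronization described above can be illustrated with a small coordinate calculation. The sketch below is an assumption-laden example, not part of the proposed algorithm: it assumes a 512 × 512 image, 8 × 8 blocks, rotation about the image centre, and image coordinates with the y axis pointing downward. The helper names `rotate_clockwise` and `block_index` are hypothetical.

```python
import math

def rotate_clockwise(x, y, angle_deg, size=512):
    """Rotate position (x, y) clockwise about the image centre.

    Image coordinates are assumed: x grows to the right, y grows downward.
    """
    c = (size - 1) / 2.0
    t = math.radians(angle_deg)
    dx, dy = x - c, y - c
    return (c + dx * math.cos(t) - dy * math.sin(t),
            c + dx * math.sin(t) + dy * math.cos(t))

def block_index(x, y, block=8):
    """Row and column of the block that contains position (x, y)."""
    return int(y // block), int(x // block)

# A pixel of the watermarked image before and after an 11 degree rotation.
x, y = 100, 100
xr, yr = rotate_clockwise(x, y, 11)

print("block before:", block_index(x, y))
print("block after: ", block_index(xr, yr))
print("target position:", round(xr, 2), round(yr, 2))
```

The pixel lands in a different block, so an extractor that reads blocks at their original positions no longer sees the embedded coefficients. The target position is also fractional, so the rotated image must be resampled by interpolation, which is the source of the changes in pixel values mentioned above.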

4.4.6. Other Attacks

In this experiment, the watermarked image is subjected to contrast, scaling, sharpening, and histogram equalization attacks. The contrast attack covers both contrast enhancement (value 1.2) and contrast reduction (value 0.8), the scaling attack uses factors of 0.8 and 2.0, and the sharpening attack uses a value of 2. Table 7 lists the NC values. In addition, Figure 11 shows some visual results of recovering the watermark from the attacked watermarked image.

As shown in Table 7, the NC values under these attacks are generally around 0.9, which indicates that the proposed method has excellent robustness to them. In particular, the NC value of 1 under the contrast attack indicates that the algorithm is highly resistant to it.

4.5. Capacity and Timing Analysis

In digital watermarking, imperceptibility and robustness have traditionally been considered the primary evaluation criteria, whereas the embedding capacity of a watermarking algorithm, that is, the amount of information that can be carried per unit of image, has typically been regarded as a secondary consideration. However, with the increasing prevalence of color images in multimedia applications, the data dimension (e.g., the three RGB channels) has expanded significantly compared with grayscale or binary images. This shift has stimulated research into large-capacity color watermarking technology, making it a focal point of interest.

The information-carrying capacity in digital watermarking is typically quantified by the maximum number of embeddable bits per pixel, which indicates the upper limit of watermark information that can be accommodated by the carrier image while maintaining visual quality. The mathematical definition of this index is given in Equation (22) and is expressed in bits per pixel (bpp). This equation relates the maximum number of embeddable bits to the total pixel count of the host image, which forms the core calculation logic of this metric.

To gauge the embedding capacity of the proposed watermarking method, its maximum embedding capacity is calculated. In this paper, a 512 × 512 color host image is partitioned into 8 × 8 blocks, and 1 bit of binary watermark data is embedded in the real part of each sub-block. The comparison results are given in Table 8.
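As a worked example of the bits-per-pixel ratio described above, the following sketch computes the capacity for the stated configuration. It assumes that each 8 × 8 block carries exactly one bit and that the pixel count is taken as 512 × 512 (one quaternion-valued pixel per position); the function name `embedding_capacity_bpp` is hypothetical, and the value reported in Table 8 may be computed under different conventions.

```python
def embedding_capacity_bpp(height, width, block_size, bits_per_block=1):
    """Return (total embeddable bits, capacity in bits per pixel).

    Assumes non-overlapping square blocks and a fixed number of bits per block.
    """
    blocks = (height // block_size) * (width // block_size)
    total_bits = blocks * bits_per_block
    return total_bits, total_bits / (height * width)

bits, bpp = embedding_capacity_bpp(512, 512, 8)
print(bits, bpp)  # 4096 0.015625
```

Under these assumptions the host image holds 64 × 64 = 4096 blocks, so the capacity is 4096 bits, or 1/64 bpp.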

The computational efficiency of digital watermarking algorithms constitutes a critical evaluation criterion, particularly in 5G-enabled environments where real-time processing demands necessitate optimized execution speed.

It can be seen from the data listed in Table 9 that the watermark embedding and watermark extraction of the proposed algorithm take a relatively long time to execute.

Since the time complexity of the quaternion Gyrator transformation itself for processing images is , when processing the host image and performing block operations, it requires an execution of the quaternion Gyrator transformation at the order of . Therefore, the overall time complexity of the proposed algorithm is of the order of .

Among the compared methods, the time complexity of the methods of Mohammed et al., Wang et al., and Mehraj et al. is , and the time complexity of the methods of Ubhi et al. and Gul et al. is .

The overall complexity of each process of the proposed method is as follows: Block QGT overall complexity is , the statistical characteristic of computing time complexity is , and the overall complexity of the SVM prediction is .

4.6. Security Analysis

From Section 3.2, it can be seen that the parameters required by the watermarking algorithm include the watermark scrambling key, the sequence DQ, and the QGT rotation angle. In addition, embedding one bit in the real part requires the embedding position to be determined, so the watermark cannot be extracted successfully from a wrong position. Therefore, the security of the proposed method is assessed by assigning different sets of values to the required keys under the hypotheses below. Here, we assume that the embedding positions are all correct in the experiments.

First, we assume that the scrambling key, the DQ sequence, and the rotation angle used are all incorrect. Second, we assume that only the scrambling key is correct and the remaining two keys are incorrect. Third, we assume that exactly one of the keys is incorrect and the other two are correct. Finally, we assume that all three keys are correct.

For each experiment, we used the watermark image decryption process detailed in Section 3.2. Table 10 lists the outcomes of the eight experiments carried out. As can be seen from Table 10, when any of the keys is incorrect, the recovered watermark is a noise-like image. When several keys are incorrect, the recovered watermark is degraded even further. The recovered watermark is identical to the original watermark when the correct keys are used. Based on these results, it can be concluded that the proposed scheme is highly secure.
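One way to read the eight experiments is as every correct/incorrect combination of the three keys, since 2³ = 8. This reading is an assumption, because the individual trials are only listed in Table 10. The sketch below enumerates those combinations; the names `KEYS`, `key_trials`, and `extraction_should_succeed` are hypothetical and do not come from the paper.

```python
from itertools import product

# The three secret parameters named in this section (labels are illustrative).
KEYS = ("scrambling_key", "dq_sequence", "rotation_angle")

def key_trials():
    """Enumerate every correct (True) / incorrect (False) assignment of the keys."""
    return [dict(zip(KEYS, flags))
            for flags in product((False, True), repeat=len(KEYS))]

def extraction_should_succeed(trial):
    """The watermark is expected to be recovered only if all keys are correct."""
    return all(trial.values())

trials = key_trials()
for trial in trials:
    wrong = [name for name, ok in trial.items() if not ok]
    outcome = "watermark recovered" if extraction_should_succeed(trial) else "noise-like output"
    print(f"incorrect keys: {wrong or 'none'} -> {outcome}")
```

Only one of the eight combinations has every key correct, which matches the observation that any incorrect key yields a noise-like result.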

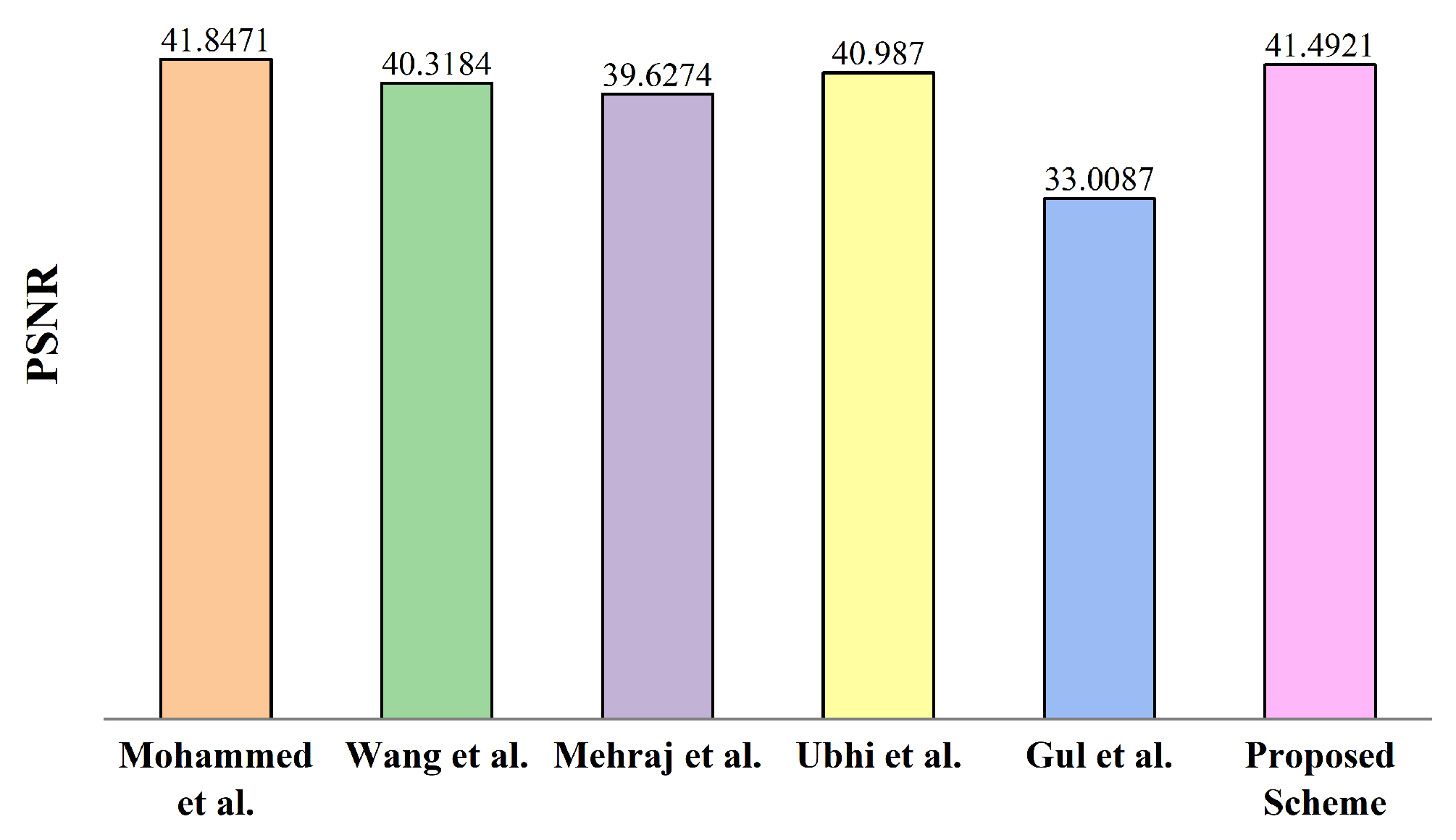

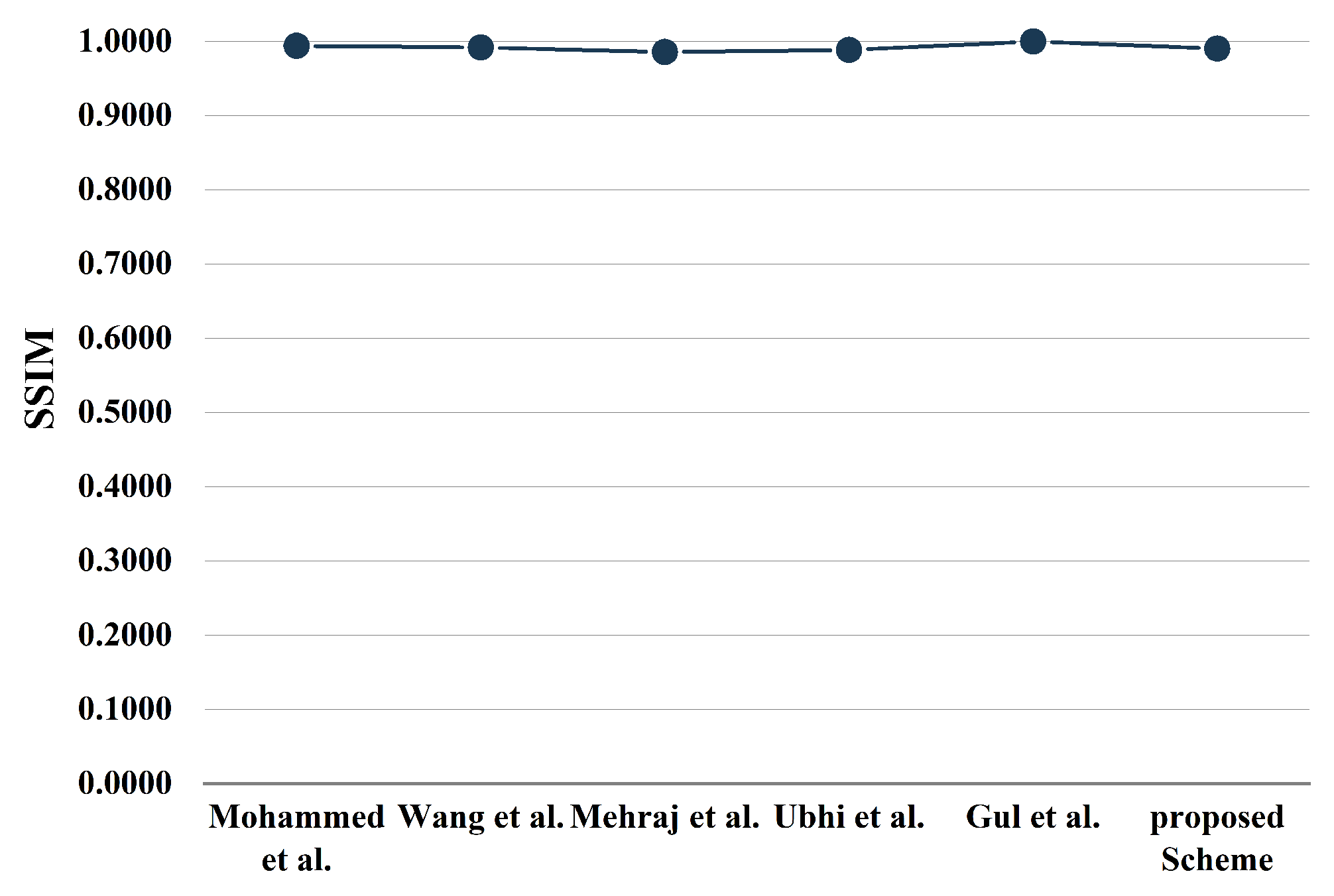

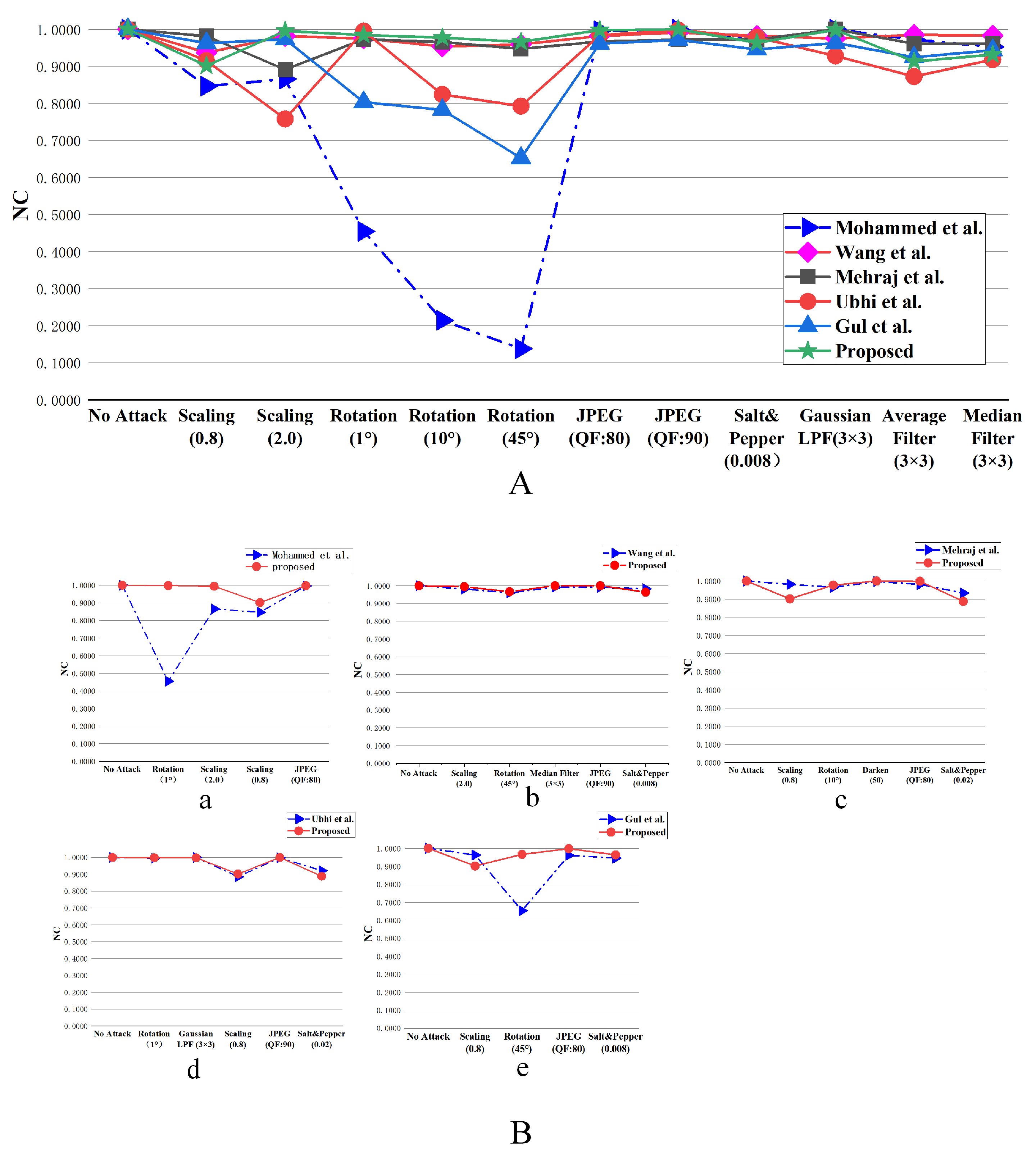

4.7. Comparison with State-of-the-Art Approaches

This section presents a set of experiments comparing the proposed method with recent image watermarking approaches in terms of imperceptibility and robustness. The imperceptibility comparison is shown in Figure 12 and Figure 13, and the robustness comparison is shown in Figure 14.

In Figure 14, part (A) compares all methods in a single plot, and part (B) gives detailed comparison plots. The compared methods are referenced in the following order: Mohammed et al. [43]; Wang et al. [13]; Gul et al. [45]; Mehraj et al. [40]; and Ubhi et al. [44].

In this paper, an equivalent binary image is used as the digital watermark embedded into the host image, and the embedding results are shown in Figure 12 and Figure 13. Excluding the method of Gul et al. [45], the PSNR values of the remaining algorithms reach around 40 dB, and the PSNR of the proposed method is 41.4921 dB, which is higher than most of the algorithms in the referenced literature and shows good invisibility. The SSIM values of all the compared methods and of the proposed method are greater than 0.96. Moreover, the visual results in Figure 6 show that the watermark embedded in the host image is not perceptible, which indicates that the proposed method performs reasonably well in terms of imperceptibility. In other words, the proposed watermarking algorithm possesses good invisibility compared with the schemes in the literature.
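For reference, the PSNR figures quoted above follow the standard definition, 10·log10(peak² / MSE). The sketch below is a minimal pure-Python illustration on toy data, assuming 8-bit pixel values (peak 255) and images flattened to lists. The function name `psnr` and the sample values are hypothetical, and the sketch does not reproduce the paper's measurements.

```python
import math

def psnr(original, distorted, peak=255):
    """PSNR in dB between two equally sized images given as flat lists of pixel values."""
    if len(original) != len(distorted):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(original, distorted)) / len(original)
    # Identical images have zero error, so the PSNR is unbounded.
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)

# Toy 8 x 8 host block and a copy in which every pixel is shifted by 2 grey levels.
host = [100] * 64
marked = [p + 2 for p in host]

print(round(psnr(host, marked), 2))
```

A uniform change of 2 grey levels already gives a PSNR slightly above 42 dB, so values around 40 dB correspond to embedding distortions of only a few grey levels on average.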

As can be seen in part (A) of Figure 14, the proposed method is more robust than the methods of Mohammed et al. [43] and Gul et al. [45] in most cases, as shown in panels a and f in part (B) of Figure 14. Under the rotation attack, the proposed method shows better robustness than the methods of Mohammed et al. [43], Wang et al. [13], and Gul et al. [45]. Under the scaling attack, the methods of Mehraj et al. [40] and Gul et al. [45] perform better than ours, although the proposed method still performs better than the methods of Mohammed et al. [43] and Wang et al. [13]. Moreover, under the JPEG compression attack, our method is slightly better than the methods of Mohammed et al. [43], Wang et al. [13], Mehraj et al. [40], and Gul et al. [45]. The proposed method also outperforms the methods of Mohammed et al. [43] and Gul et al. [45] under the salt-and-pepper noise attack. Although it is not as good as the method of Wang et al. [13] under the median filtering attack or the method of Ubhi et al. [44] under the Gaussian filtering attack, its NC values under these attacks remain high, at 0.9317 and 0.9981, which indicates that the robustness of the proposed method in this respect is still satisfactory.

Based on the empirical comparisons and the preceding discussion, it can be concluded that the proposed method achieves both satisfactory imperceptibility and strong robustness compared with prior methods.

In the experimental section of this paper, the digital watermarking algorithm demonstrates limited resistance to adversarial attacks, particularly when the watermarked image is modified by small perturbations, which may result in the loss or destruction of watermark information [46]. This observation suggests that current quaternion-based digital watermarking methods have certain limitations in robustness. To enhance the algorithm's resilience against such attacks, future research should consider improving the watermark embedding strategy, increasing redundancy, or integrating deep learning techniques such as Generative Adversarial Networks (GANs) [47]. Furthermore, it will be essential to evaluate and optimize the performance of watermarking algorithms under various adversarial attacks to enhance their practical applicability.

5. Conclusions and Future Prospects

In this paper, a robust watermarking algorithm based on QGT and neighborhood coefficient statistical features is proposed. The QGT and neighborhood statistical features are combined to enhance the robustness of the watermark; the image is segmented into non-overlapping sub-blocks; the authentication watermark is embedded in each sub-block; and the visual capacity sequence is used to guide the embedding priority. The IULPM method is used to embed the geometric watermark, and finally, both watermarks are embedded into the host image to enhance its resistance to attacks. The watermarks are extracted using a support vector machine, which stores the mapping relationships established when the watermarks are embedded, so that the watermark bits can be extracted accurately. The proposed algorithm, based on the dual watermark embedding mechanism and the statistical feature fusion strategy, achieves a joint optimization of robustness and security for digital copyright protection. In terms of security, the adopted three-level key system, comprising the master key, feature key, and verification key, ensures that unauthorized users cannot obtain the complete watermark. Experimental data show that the algorithm has significant resistance to attacks such as JPEG compression and median filtering. This multi-dimensional protection mechanism provides a reliable technical path for digital content copyright certification.

For further research, we intend to investigate other properties of QGT and apply them to watermarking algorithms. For example, the amplitude of the QGT coefficients could also be used for embedding, which may increase the embedding capacity of watermark information to a greater extent. Since QGT itself requires many multiplications, improving execution efficiency is one of the future research directions. In addition, we intend to test the feasibility of using this method for tamper detection with semi-fragile watermarking in order to understand its advantages and properties. For example, we will explore fusing deep learning techniques with watermarking and tamper localization models to obtain more robust performance. The combination of support vector machines and deep learning is another of our future research directions. Future development in this field will be closely tied to deep learning, and support vector machines can be integrated naturally with deep learning models. Therefore, multi-kernel or ensemble support vector machines combined with deep learning will also be considered as a research direction.