3.2. Experimental Results and Comparative Analysis

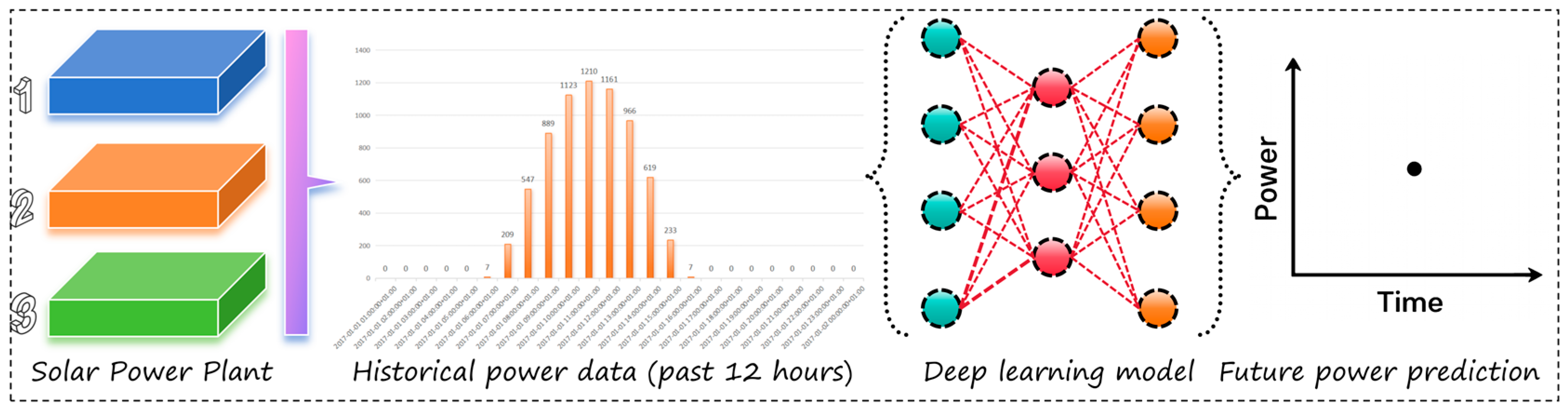

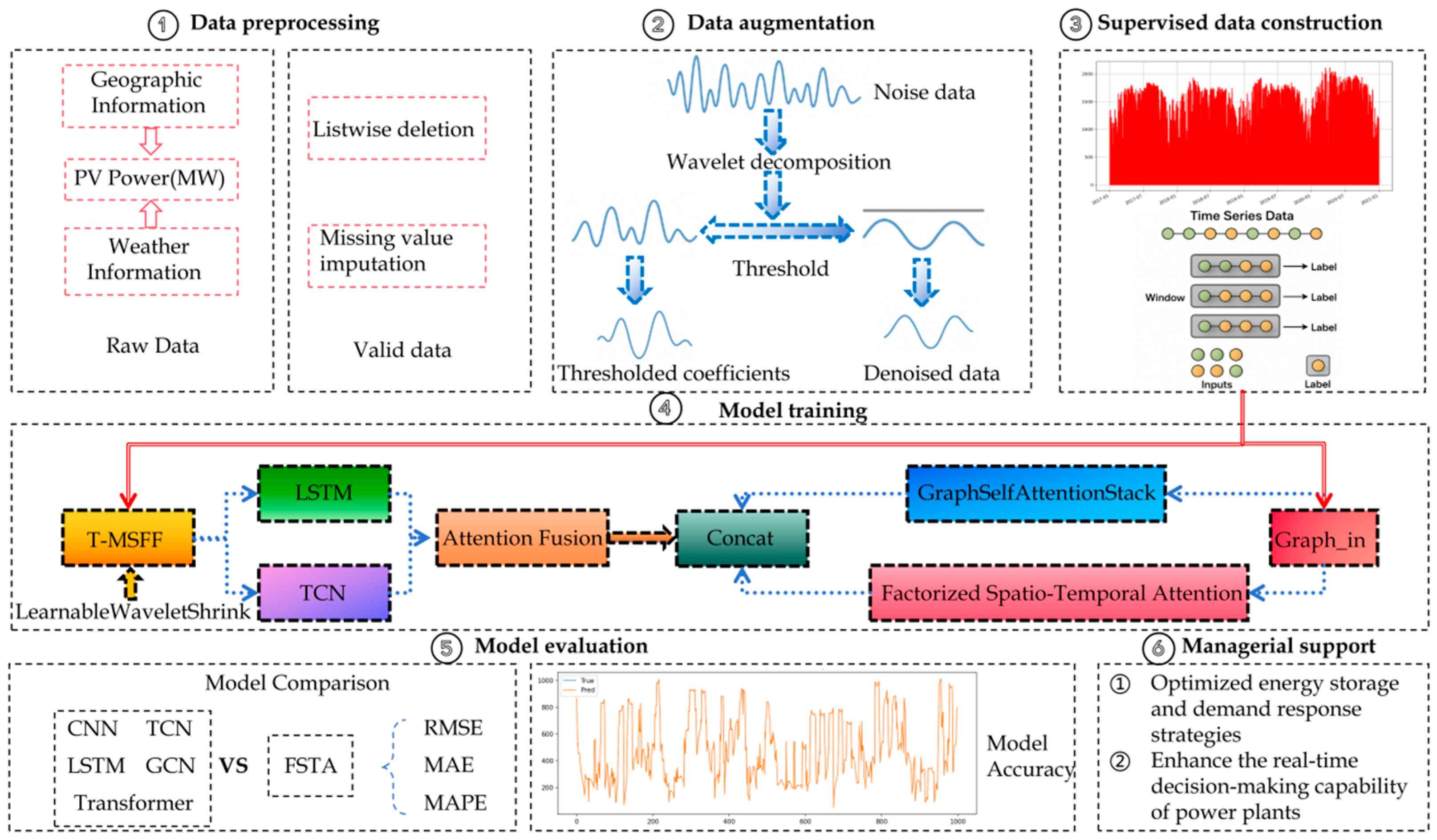

In this study, the TCN–LSTM architecture is designated as the baseline, and a comprehensive suite of modular ablation experiments is orchestrated within the proposed WGL framework, enabling a systematic dissection of each component’s functional contribution to the overall predictive efficacy. All experiments are conducted on a three-year corpus of historical PV generation data, employing a 12-h look-back window and a 1-h forecasting horizon to emulate ultra-short-term operational conditions. Through the progressive incorporation of key WGL modules atop the baseline, the study conducts a granular investigation into each component’s role in capturing temporal dependencies and delineating spatial feature interactions.
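The windowing described above can be sketched as follows. This is a minimal illustration rather than the authors' preprocessing code: it assumes one sample per hour (so the 12-h look-back spans 12 steps and the 1-h horizon is 1 step), and the function name `make_windows` and the synthetic series are hypothetical.

```python
import numpy as np

def make_windows(series, look_back=12, horizon=1):
    """Slice a 1-D series into (input window, target) pairs.

    Assumes one sample per hour, so look_back=12 covers 12 h and
    horizon=1 targets the value 1 h after the window ends.
    """
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - look_back - horizon + 1
    if n_samples <= 0:
        raise ValueError("series is too short for the requested window")
    # Each row of X holds `look_back` consecutive observations
    X = np.stack([series[i:i + look_back] for i in range(n_samples)])
    # Each target lies `horizon` steps after the last value of its window
    first_target = look_back + horizon - 1
    y = series[first_target:first_target + n_samples]
    return X, y

# Synthetic stand-in for PV output (not real data): 0, 1, 2, ..., 47
demo = np.arange(48, dtype=float)
X, y = make_windows(demo, look_back=12, horizon=1)
```

Changing `horizon` to 2 or 3 yields the targets for longer forecasting horizons under the same hourly-sampling assumption.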

As evidenced by the quantitative results in

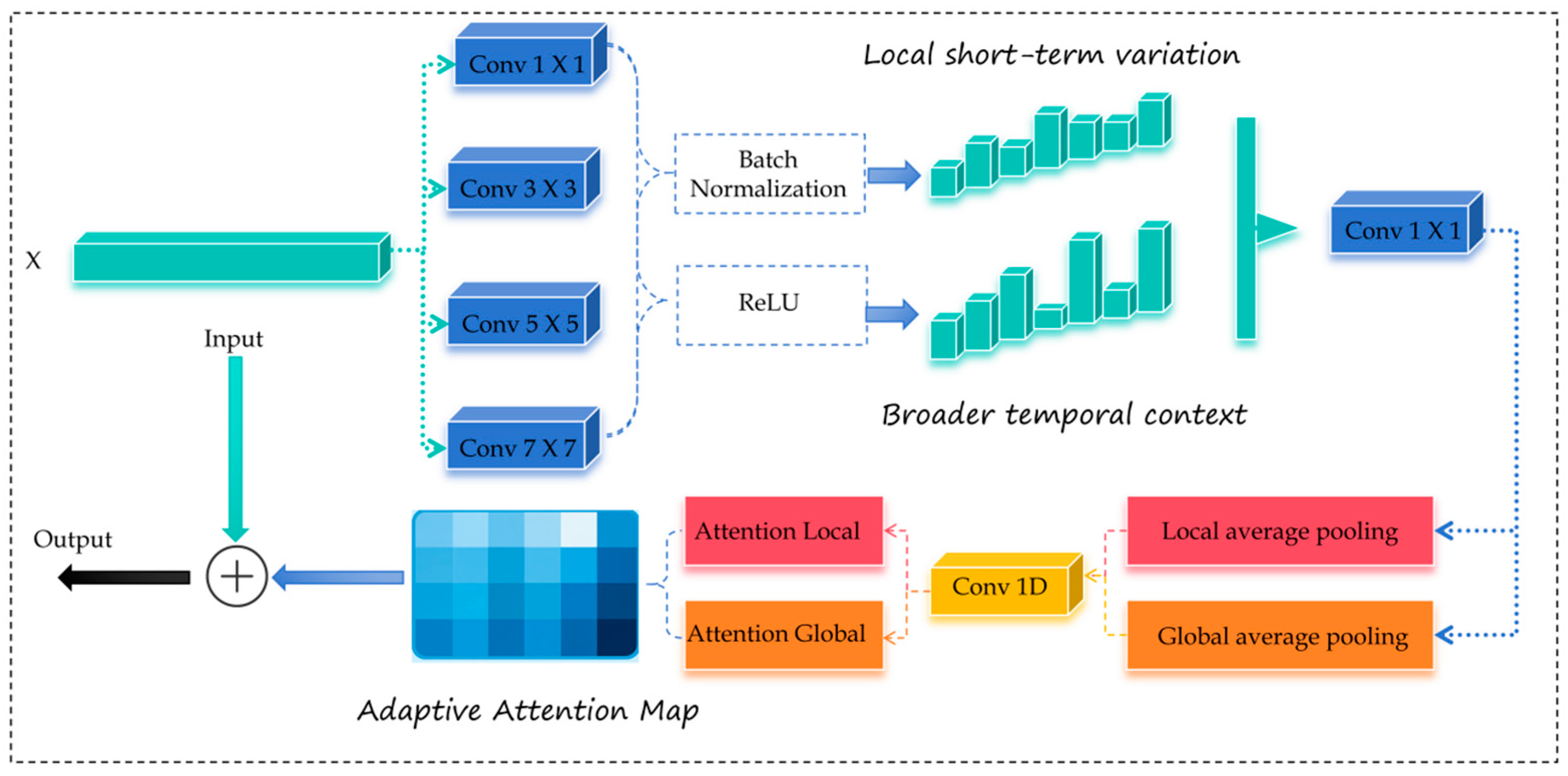

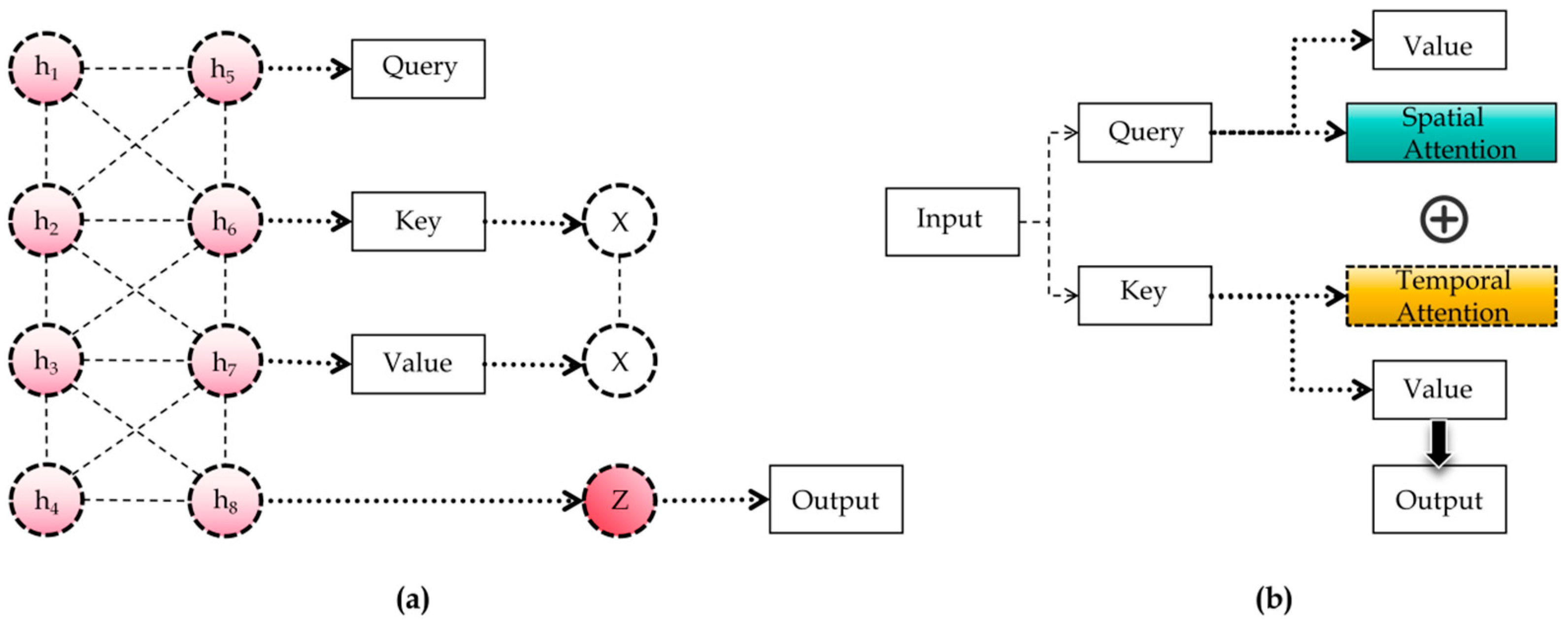

Table 4, the ablation experiments conducted at a 1 h forecasting horizon reveal that the baseline model attains an RMSE of 0.80, an MAE of 0.50, and a MAPE of 1.39. Upon incorporating the attention-oriented modules (Attention Fusion, GSA, and FSTA), the error metrics decline to 0.60, 0.36, and 1.01, respectively. Building on these results, the proposed WGL framework achieves further reductions—RMSE = 0.57, MAE = 0.33, MAPE = 0.91—corresponding to relative improvements of 28.8%, 34.0%, and 34.5% over the baseline, and additional decreases of 5.0%, 8.3%, and 9.9% relative to the attention-only configuration. From a mechanistic perspective, LWS performs adaptive denoising via trainable shrinkage embedded in a wavelet decomposition–reconstruction framework, thereby enhancing the robustness of time–frequency-localized features. Attention Fusion introduces a differentiable attention mechanism that adaptively balances the respective contributions of LSTM and TCN across sample and feature dimensions, effectively integrating long-term dependencies with local temporal patterns. GSA integrates prior adjacency as a bias term while jointly learning an adaptive adjacency matrix, achieving a synergistic balance between geometric priors and task-specific relations. Finally, FSTA factorizes comprehensive spatio-temporal attention into temporal and node-level subspaces, markedly reducing computational and memory complexity while maintaining fine-grained cross-time and cross-node interactions. Overall, the WGL framework coherently integrates denoising and recalibration (LWS), adaptive fusion (Attention Fusion), and structure-aware spatio-temporal attention (GSA + FSTA) within a unified architecture, thereby mitigating systematic bias, suppressing extreme residuals, and delivering stable and cumulative performance gains.
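The relative improvements quoted for Table 4 follow directly from the reported error values, as the short check below illustrates. The helper name `relative_reduction` is hypothetical; the numbers are the RMSE, MAE, and MAPE values stated in the text for the 1 h horizon.

```python
def relative_reduction(reference, candidate):
    """Percentage by which the candidate error is lower than the reference error."""
    return 100.0 * (reference - candidate) / reference

# (RMSE, MAE, MAPE) values quoted in the text for the 1 h horizon
baseline = (0.80, 0.50, 1.39)
attention_only = (0.60, 0.36, 1.01)
wgl = (0.57, 0.33, 0.91)

# Reductions of WGL relative to the baseline and to the attention-only variant
vs_baseline = [relative_reduction(b, w) for b, w in zip(baseline, wgl)]
vs_attention = [relative_reduction(a, w) for a, w in zip(attention_only, wgl)]

for name, vb, va in zip(("RMSE", "MAE", "MAPE"), vs_baseline, vs_attention):
    print(f"{name}: {vb:.1f}% vs baseline, {va:.1f}% vs attention-only")
```

Up to rounding, the computed values agree with the percentages reported in the paragraph above.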

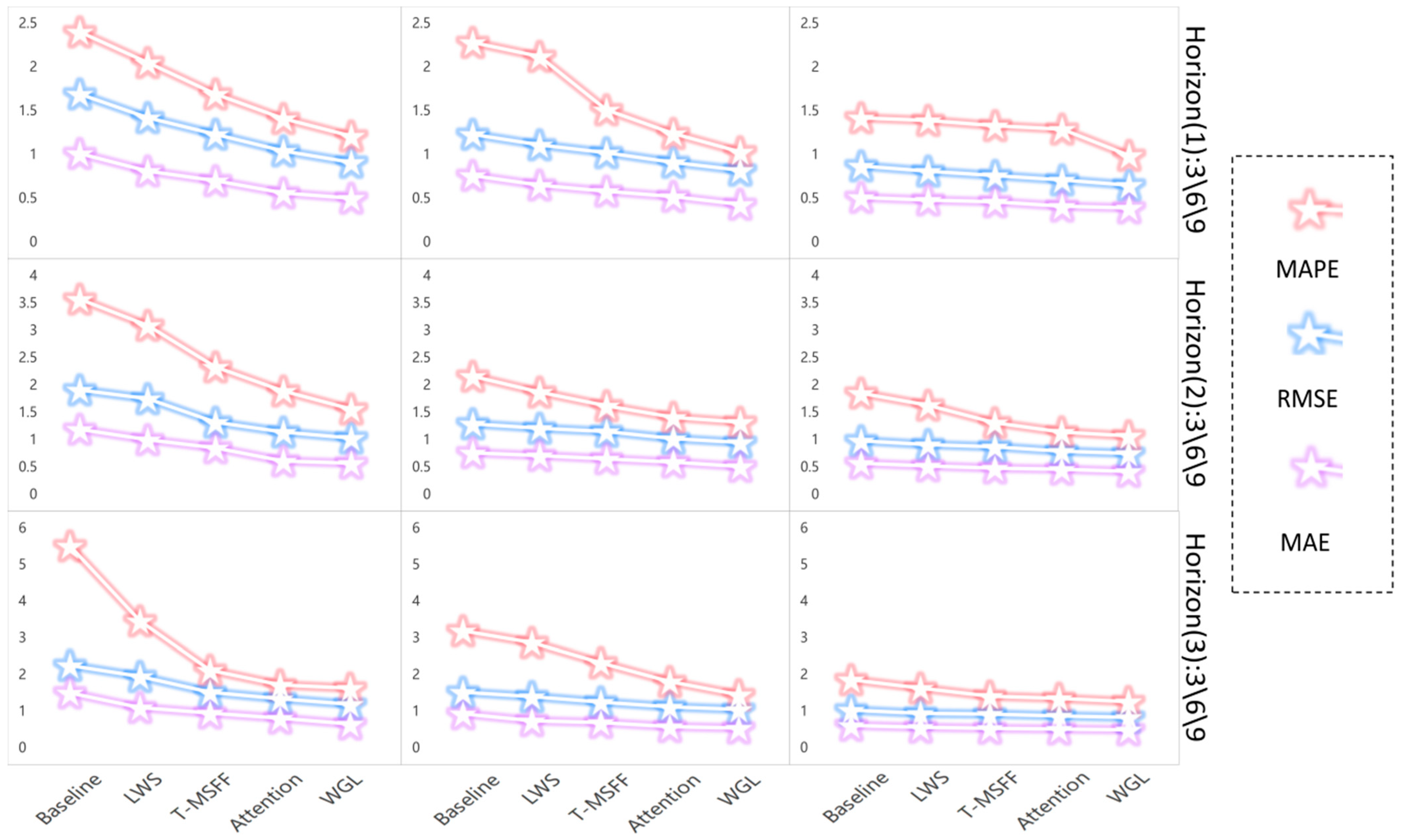

As illustrated by the comparative analyses presented in Figure 8, three evaluation metrics (MAPE, RMSE, and MAE) are used to assess the performance of five representative models (Baseline, LWS, T-MSFF, Attention, and WGL) across three experimental scenarios and forecasting horizons of 3, 6, and 9 h. The results reveal a consistent decline in prediction errors as model complexity increases, with WGL attaining the lowest MAPE, RMSE, and MAE across all scenarios and horizons. Notably, under more challenging conditions, characterized by higher baseline errors and extended forecasting horizons, WGL achieves markedly greater error reductions, indicating that the proposed framework not only enhances point-estimate accuracy but also mitigates extreme deviations and noise perturbations.
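For reference, the three error metrics can be computed as in the sketch below. It uses the standard textbook definitions; the section does not state whether MAPE is reported in percent or how near-zero (night-time) targets are handled, so expressing MAPE in percent and masking targets with magnitude at or below `eps` are assumptions made here for illustration.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.

    Assumption: samples whose true value is (near) zero are skipped,
    since the percentage error is undefined there.
    """
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    mask = np.abs(y_true) > eps
    if not mask.any():
        raise ValueError("all targets are zero; MAPE is undefined")
    return float(100.0 * np.mean(np.abs((y_true[mask] - y_pred[mask]) / y_true[mask])))

# Small made-up example (not data from the study)
y_true = [2.0, 4.0, 5.0]
y_pred = [1.0, 4.0, 7.0]
scores = (rmse(y_true, y_pred), mae(y_true, y_pred), mape(y_true, y_pred))
```

RMSE penalizes large deviations more strongly than MAE, which is why the two are usually reported together with a relative measure such as MAPE.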

For benchmarking purposes, the proposed WGL framework is evaluated against several representative deep learning architectures, including GCN, CNN, LSTM, TCN, Transformer [29], CNN–LSTM [30], and Transformer–LSTM [31]. All comparative models are trained on a three-year corpus of historical PV generation data, using a 12-h look-back window as input and forecasting targets of 1, 2, and 3 h ahead.

As evidenced by the quantitative results presented in Table 5, under an identical input sequence length of 12 h, the proposed WGL model consistently delivers superior predictive performance across all forecasting horizons. At a forecasting horizon of 3 h, WGL attains RMSE = 0.65, MAE = 0.39, and MAPE = 1.21, corresponding to reductions of approximately 33%, 35%, and 28% relative to CNN–LSTM (RMSE/MAE/MAPE = 0.97/0.60/1.69). Furthermore, when compared with single-architecture baselines such as LSTM, TCN, and Transformer (e.g., for a 1 h horizon: LSTM = 1.21/0.73/2.65; TCN = 1.47/0.85/2.33), WGL exhibits even more pronounced advantages. Collectively, these results demonstrate that under a unified input configuration, WGL consistently yields lower forecasting errors across all short-term horizons, underscoring its enhanced capacity to capture complex temporal dependencies and nonlinear dynamics while maintaining stability and generalization in hour-level photovoltaic power forecasting.

The results in Table 6 show significant differences among the models in terms of parameter count, computational cost, and latency. Although CNN is the lightest model, it has the highest latency, indicating lower parallel efficiency. TCN and LSTM achieve reduced latency, while CNN–LSTM maintains a reasonable delay despite increased computation. The Transformer-based models exhibit relatively low latency even with larger parameter sizes, demonstrating strong parallel performance. Overall, WGL performs best with the lowest latency (6.15), suggesting a more efficient architectural design.
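Inference latency of the kind compared in Table 6 is commonly estimated by timing repeated forward passes after a few untimed warm-up calls. The sketch below shows one such procedure under stated assumptions: the section does not describe the measurement protocol or the unit of the reported latency, so the helper `measure_latency_ms`, the millisecond unit, the median aggregation, and the stand-in `toy_model` are all hypothetical.

```python
import statistics
import time

def measure_latency_ms(predict, sample, warmup=5, repeats=50):
    """Median wall-clock time of predict(sample), in milliseconds."""
    for _ in range(warmup):
        predict(sample)  # warm-up calls are not timed
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)

def toy_model(window):
    # Hypothetical stand-in for a trained forecaster: mean of the input window
    return sum(window) / len(window)

# One 12-step input window, matching the 12-h look-back under hourly sampling
latency = measure_latency_ms(toy_model, [0.1] * 12)
```

Using the median rather than the mean reduces the influence of occasional slow runs caused by other processes on the machine.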

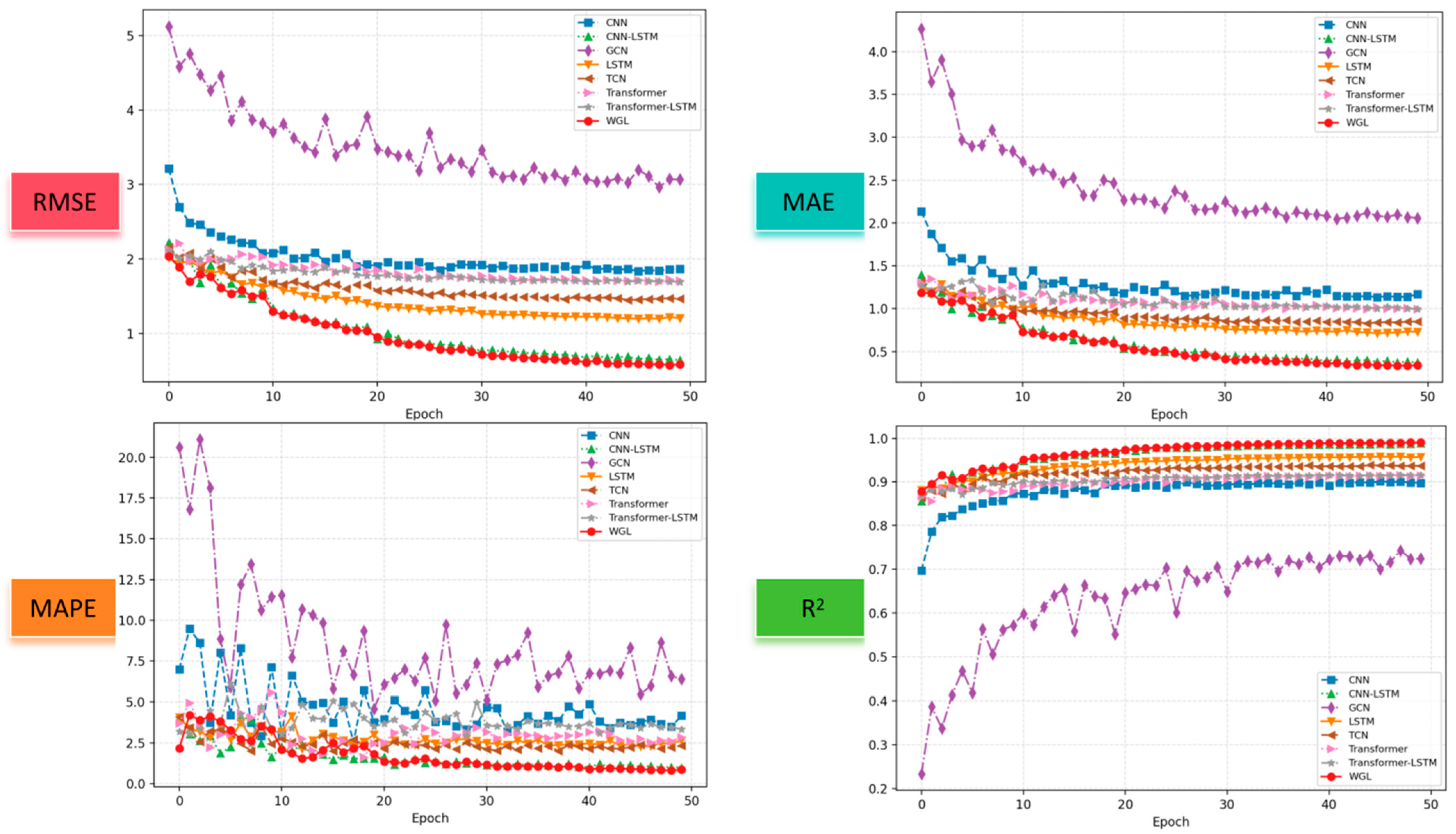

Figure 9 presents four subplots illustrating the evolution of RMSE, MAE, MAPE, and R² across training epochs for different models. Most models begin with comparatively high initial errors that progressively decline and eventually stabilize; nevertheless, their convergence rates, terminal error magnitudes, and training stability differ substantially. In particular, the WGL model (red curve) consistently surpasses all counterparts across every evaluation metric, exhibiting the fastest convergence, the smoothest training trajectory (with minimal oscillations), the lowest terminal RMSE, MAE, and MAPE, and the highest and most stable R² values. By contrast, several alternative models exhibit noticeable oscillations during early-stage training and slower convergence, with certain architectures showing pronounced MAPE fluctuations, an indication of heightened sensitivity to outliers and limited-sample variability.
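The coefficient of determination R² tracked in Figure 9 can be computed as in the following sketch, which uses the standard definition (one minus the ratio of residual to total sum of squares). The function name `r_squared` and the example values are illustrative only.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # unexplained variation
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total variation
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined for a constant target")
    return float(1.0 - ss_res / ss_tot)

# Made-up values (not data from the study)
y_true = [1.0, 2.0, 3.0, 4.0]
close_fit = r_squared(y_true, [1.1, 1.9, 3.2, 3.8])
mean_only = r_squared(y_true, [2.5, 2.5, 2.5, 2.5])
```

A value near 1 indicates that the predictions explain almost all of the variance in the target, whereas always predicting the mean gives a value of 0.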

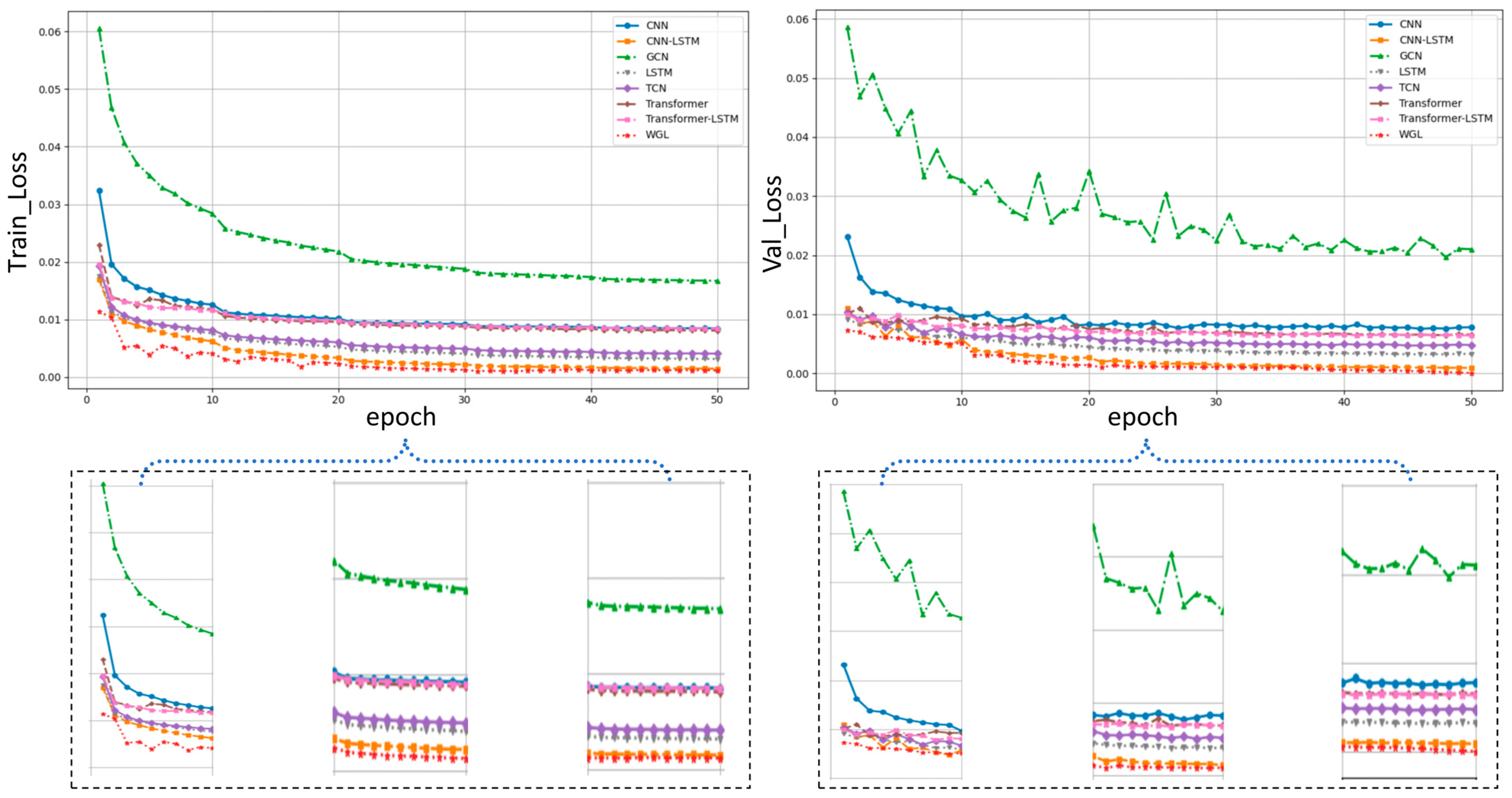

The convergence behavior illustrated in Figure 10 highlights that the proposed WGL model consistently outperforms all comparative baselines (CNN, LSTM, TCN, Transformer, and their hybrid variants) in convergence speed, loss attenuation, and training–validation coherence. The WGL model rapidly converges toward a low-loss regime during the initial training phase and remains stable thereafter, ultimately attaining the lowest and most consistent validation loss among all evaluated models. Collectively, these observations indicate that WGL exhibits superior representational capacity, strong generalization, and marked resilience to noise perturbations.

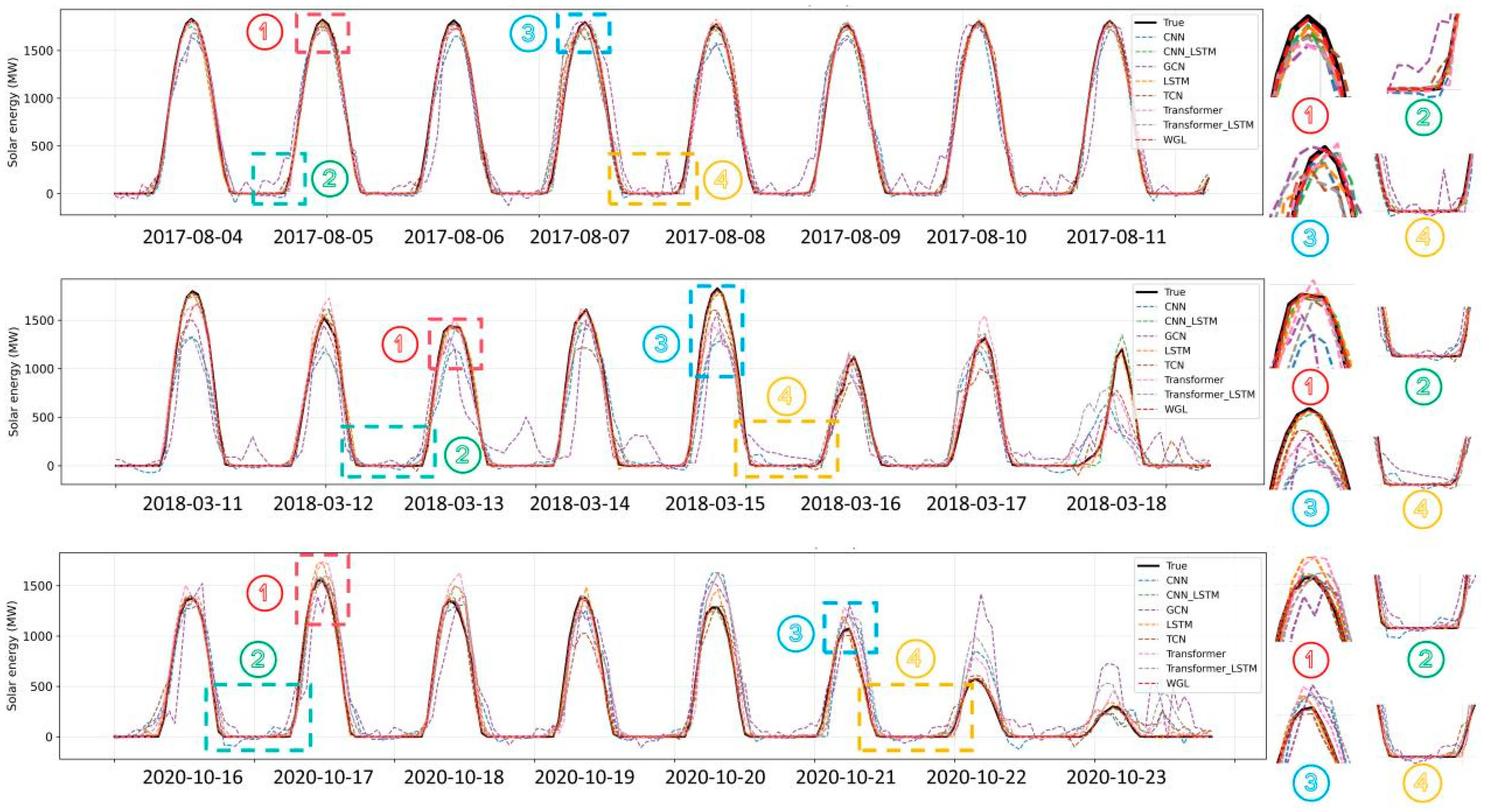

An inspection of Figure 11 reveals that the proposed WGL model more faithfully reproduces the true intraday photovoltaic power trajectories across diverse dates and meteorological conditions. In particular, WGL mitigates overshooting and peak clipping around midday, closely reconstructing peak amplitudes; it exhibits reduced phase lag during the steep ascent and descent transitions at dawn and dusk; it responds promptly to short-term irradiance-induced power dips while preserving their shape without over-smoothing; and it sustains a stable baseline throughout low-power tail segments with negligible deviation. These empirical observations suggest that WGL balances the capture of local transient dynamics against the preservation of the overarching diurnal periodic structure. Relative to conventional baselines (CNN, RNN, TCN, and Transformer), the WGL framework yields markedly lower systematic errors and enhanced robustness with respect to amplitude deviation, phase lag, and transient responsiveness, thereby underscoring its superior short-term predictive accuracy and cross-scenario generalization.
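The phase lag discussed above is assessed visually in Figure 11. One way such a lag could be quantified, offered here only as an illustrative sketch and not as a procedure used in the study, is to find the shift that maximizes the cross-correlation between predicted and true curves. The function `estimate_lag_steps` and the synthetic bell-shaped curves are hypothetical.

```python
import numpy as np

def estimate_lag_steps(y_true, y_pred):
    """Shift (in time steps) that best aligns y_pred with y_true.

    A positive result means the prediction trails the true curve.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    if y_true.ndim != 1 or y_true.shape != y_pred.shape:
        raise ValueError("inputs must be 1-D arrays of equal length")
    # Full cross-correlation; output index i corresponds to lag i - (N - 1)
    xcorr = np.correlate(y_pred, y_true, mode="full")
    return int(np.argmax(xcorr)) - (len(y_true) - 1)

# Synthetic bell-shaped daily curves (not real PV data), one sample per hour
hours = np.arange(24)
true_curve = np.exp(-0.5 * ((hours - 12) / 3.0) ** 2)  # peak at noon
late_curve = np.exp(-0.5 * ((hours - 14) / 3.0) ** 2)  # peak two hours later
lag = estimate_lag_steps(true_curve, late_curve)
```

The same curves can also be compared at their maxima to obtain a simple amplitude deviation, which complements the lag estimate.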

Figure 12 presents comparative results indicating that the proposed WGL framework substantially surpasses all competing baselines across multiple evaluation criteria—namely RMSE, MAE, and MAPE—under various forecasting horizons. WGL not only attains the lowest absolute errors in terms of RMSE and MAE but also delivers distinctly superior performance in relative error (MAPE). Furthermore, as the forecasting horizon extends, WGL exhibits a markedly slower increase in error magnitude, reflecting its enhanced stability and robustness across short-, medium-, and long-term forecasting horizons.

From Table 7, it can be observed that the overall errors of most models decrease as the input length increases, indicating that a longer historical window contributes positively to short-term forecasting performance. Among the baseline models, CNN–LSTM consistently outperforms single-architecture models such as LSTM, TCN, and Transformer, while GCN exhibits the weakest performance in this task. Compared with all baselines, WGL achieves the best results across all four input lengths and all three evaluation metrics, with its relative advantage becoming more pronounced at longer input lengths. Specifically, compared with the strong baseline CNN–LSTM, WGL reduces RMSE, MAE, and MAPE by up to approximately 46%, 51%, and 40%, respectively. These results demonstrate that WGL can more effectively leverage long-term temporal information and model key patterns that affect one-step forecasting accuracy.