1. Introduction

In the current digital era, the security of transmitted information is of paramount importance. With the rapid advancement of digital technologies, the risk of data breaches and unauthorized access by malicious actors has increased significantly [1]. Consequently, robust methods are required to ensure the confidentiality and integrity of sensitive data during transmission. Steganography, the art and science of hiding secret messages within an ordinary-looking cover medium such as an image, audio, or text file, has emerged as a key technology for covert communication [2,3]. Unlike cryptography, which encrypts the content of a message, steganography aims to conceal the very existence of the message, making it an attractive tool for enhancing information security [4].

However, traditional steganography techniques, such as the Least Significant Bit (LSB) method [5,6], often exhibit vulnerabilities. They can be detected by modern steganalysis tools, particularly those powered by Artificial Intelligence (AI) and deep learning [7]. Furthermore, the rise of adversarial attacks poses a significant threat to even more advanced, AI-based steganography methods [8,9]. These attacks can introduce small, often imperceptible perturbations to the cover medium, causing the hidden information to be lost or incorrectly extracted, thereby compromising the entire security framework. This highlights a critical research gap: the need for a steganography method that is not only effective at hiding data but also robust against sophisticated adversarial attacks [10].
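To make the fragility of LSB embedding concrete, the following minimal sketch embeds a bit string into the least significant bits of a few 8-bit grayscale values and then applies a perturbation of magnitude 1 to every pixel. The function names `embed_lsb` and `extract_lsb` and the sample pixel values are illustrative assumptions, not part of the proposed framework.

```python
def embed_lsb(pixels, bits):
    """Write one message bit into the least significant bit of each pixel."""
    if len(bits) > len(pixels):
        raise ValueError("message is longer than the cover")
    stego = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the message bit.
        stego[i] = (stego[i] & ~1) | bit
    return stego


def extract_lsb(pixels, n_bits):
    """Read the first n_bits least significant bits back out."""
    return [p & 1 for p in pixels[:n_bits]]


# Illustrative 8-bit grayscale values and message (assumed, not from the paper).
cover = [154, 33, 200, 77, 18, 255, 0, 91]
message = [1, 0, 1, 1, 0, 0, 1, 0]

stego = embed_lsb(cover, message)
recovered = extract_lsb(stego, len(message))
max_change = max(abs(a - b) for a, b in zip(cover, stego))

# A perturbation of magnitude 1 per pixel (here: flipping every LSB)
# is visually negligible but inverts every recovered bit.
attacked = [p ^ 1 for p in stego]
recovered_after_attack = extract_lsb(attacked, len(message))
```

In this toy case the embedding changes each pixel by at most one intensity level, yet an equally small perturbation destroys the message entirely, which is the kind of weakness that motivates more robust embedding schemes.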

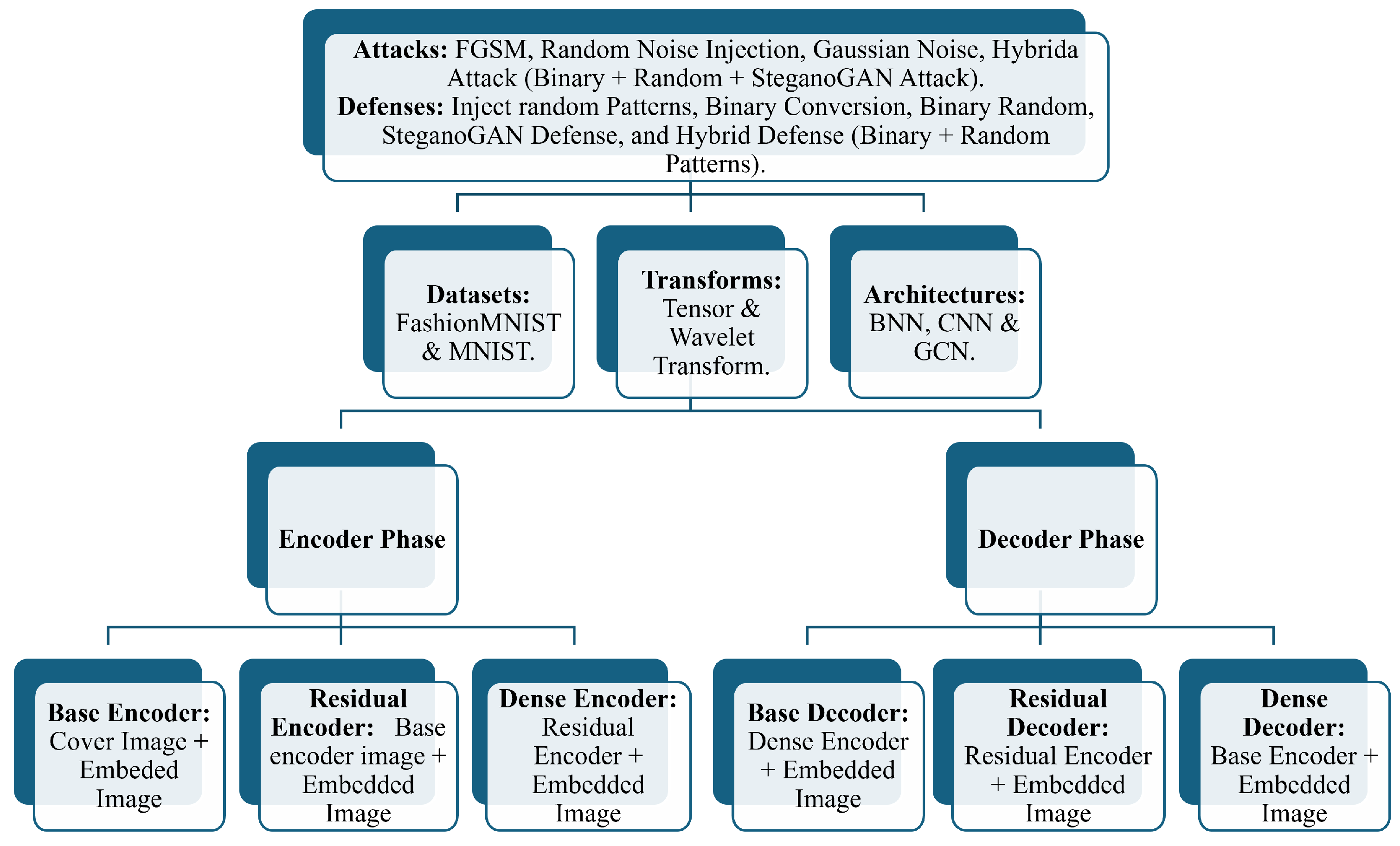

To address these challenges, this paper proposes a novel AI-based steganography framework that enhances the security and robustness of hidden messages in digital images. Our approach integrates the analytical power of Wavelet Transforms (WT) with various deep learning architectures, including Convolutional Neural Networks (CNNs), Bayesian Neural Networks (BNNs), and Graph Convolutional Networks (GCNs). The core of our method lies in a multi-stage embedding process, which includes a primary encoder, a residual encoder, and a dense encoder. This layered approach is designed to increase the complexity for potential attackers and improve the resilience of the hidden data. Recent deep-learning frameworks such as SteganoGAN, HiDDeN, and U-Net-based models achieve high embedding capacity but remain vulnerable to gradient-based adversarial attacks. Unlike these single-stage approaches, the proposed multi-stage wavelet-integrated framework focuses on enhancing robustness and reversibility, forming a complementary direction to existing GAN- or U-Net-based designs.

This work addresses two primary gaps in current AI-based steganography methods: (1) insufficient robustness to gradient-based perturbations that can easily reveal or destroy hidden information, and (2) the absence of probabilistic modeling to estimate uncertainty and improve resilience. Our multi-stage Bayesian–Wavelet framework is designed to close these gaps by integrating frequency-domain embedding with probabilistic feature learning.

The main contributions of this work are threefold:

We design and implement a novel, multi-layered steganography framework that progressively embeds secret images, enhancing the overall security and capacity.

We systematically investigate the integration of Wavelet Transforms with different deep learning models to improve the robustness of the steganographic system against detection and adversarial manipulations.

We conduct a comprehensive experimental evaluation on the MNIST [11] and FashionMNIST [12] datasets, testing our method against a variety of adversarial attacks (e.g., FGSM, RNI) and defense mechanisms to demonstrate its superior performance and resilience compared to baseline models.

The remainder of this paper is organized as follows: Section 2 reviews related work. Section 3 details the proposed methods, including the framework architecture and the underlying models. Section 4 presents the experimental setup and analyzes the results. Finally, Section 5 concludes the paper and discusses potential directions for future research.

4. Experimental Results

This section presents a comprehensive evaluation of our proposed AI-based steganography framework. We begin by detailing the experimental setup, followed by a systematic analysis of the performance of different model architectures, the effectiveness of our multi-stage framework, and its robustness against various adversarial attacks and defenses.

4.1. Experimental Setup

Datasets: We used two standard benchmark datasets: MNIST [11] and FashionMNIST [12]. The MNIST dataset consists of 60,000 training and 10,000 testing grayscale images of handwritten digits (28 × 28 pixels) across 10 classes. The FashionMNIST dataset contains grayscale images of 10 fashion product categories of the same size and training/testing split, offering a more complex classification task. These grayscale datasets were selected to provide a controlled evaluation environment that isolates the effects of the embedding and adversarial processes without the confounding factors introduced by color channels.

Implementation Details: All experiments were conducted on Google Colab using NVIDIA Tesla T4 GPU instances with 16 GB of memory. The models were implemented in Python 3.6 and PyTorch 1.2.0, along with supporting libraries such as torch-geometric 1.3.2 and PyWavelets 1.1.1. All models were trained for 10 epochs with a batch size of 256 using the Adam optimizer and a learning rate of . Early stopping with a patience of 20 epochs was employed to prevent overfitting. Unless otherwise stated, the random seed was fixed to 42 for all runs to ensure reproducibility. Each experiment was repeated three times, and the average performance and standard deviation are reported in the corresponding tables.

Evaluation Metrics: The primary metric for evaluation is classification accuracy. For robustness analysis, we measure the accuracy of the models on adversarially perturbed images, both with and without defense mechanisms.

In addition to classification accuracy, we have further included quantitative image-quality metrics, including Peak Signal-to-Noise Ratio (PSNR), Structural Similarity (SSIM), and Mean Squared Error (MSE), to assess the fidelity and imperceptibility of the embedded images. While ROC and AUC are commonly used in binary detection tasks, the chosen metrics are more directly related to the performance of steganographic embedding and reconstruction.
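For reference, MSE and PSNR can be computed as in the minimal NumPy sketch below. It assumes 8-bit images (peak value 255) and uses a randomly generated 28 × 28 array as a stand-in cover image; the helper names `mse` and `psnr` are illustrative. SSIM is omitted because it requires windowed local statistics and is usually taken from an image-processing library.

```python
import numpy as np


def mse(reference, test):
    """Mean squared error between two images of the same shape."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    return float(np.mean((reference - test) ** 2))


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, test)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / err))


# Stand-in cover image (assumed data, not taken from MNIST).
rng = np.random.default_rng(42)
cover = rng.integers(0, 256, size=(28, 28)).astype(np.uint8)

# Flipping every least significant bit changes each pixel by exactly 1.
stego = cover ^ 1

stego_mse = mse(cover, stego)    # 1.0
stego_psnr = psnr(cover, stego)  # 20 * log10(255), about 48.13 dB
```

A lower MSE and a higher PSNR both indicate that the stego image is closer to the cover image.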

4.2. Baseline Performance of Backbone Architecture

Before evaluating the steganography framework, we first established the baseline performance of the different deep learning architectures (CNNs, BNN, GCN) on the original, “clean” image classification task. This allows us to understand the inherent capabilities and limitations of each model.

The results are summarized in

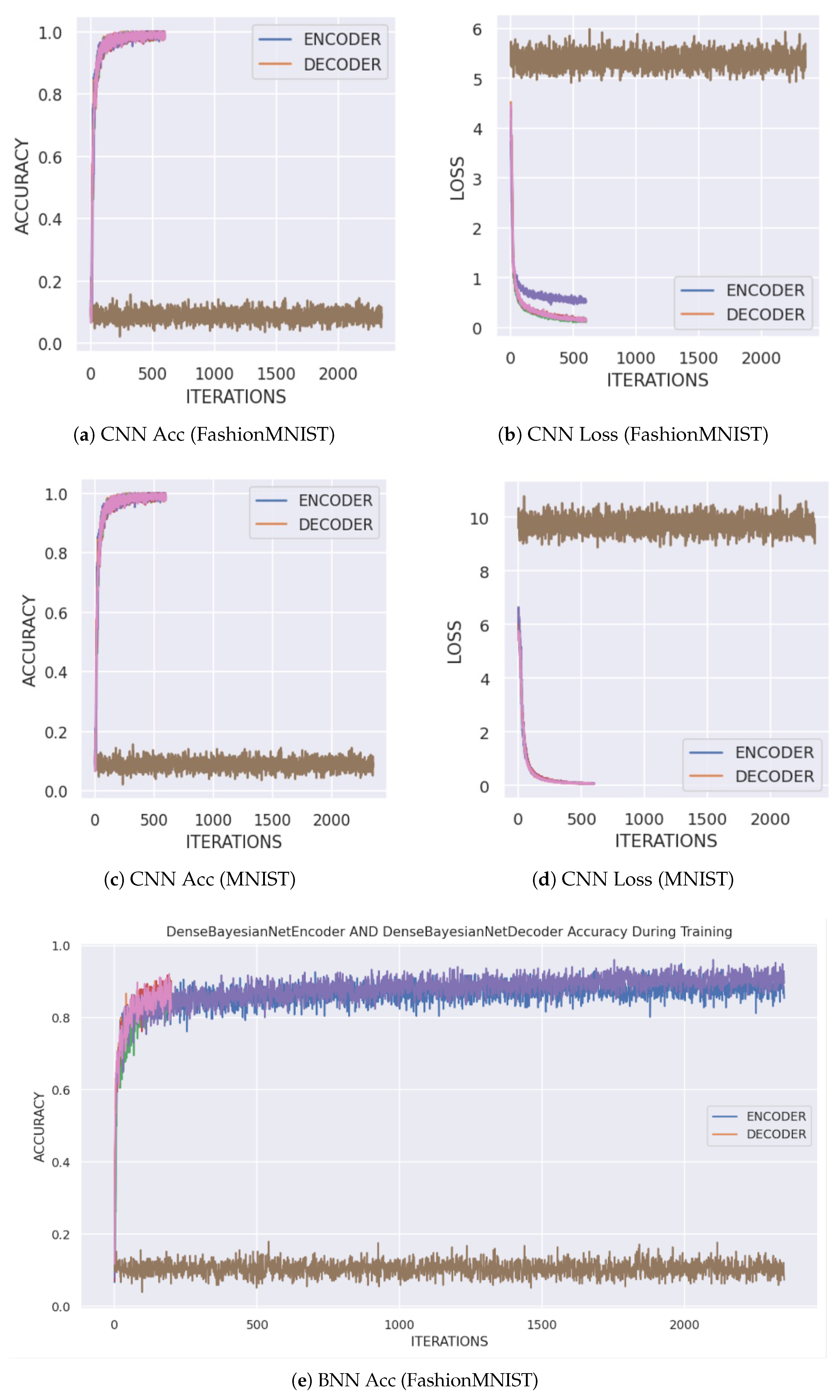

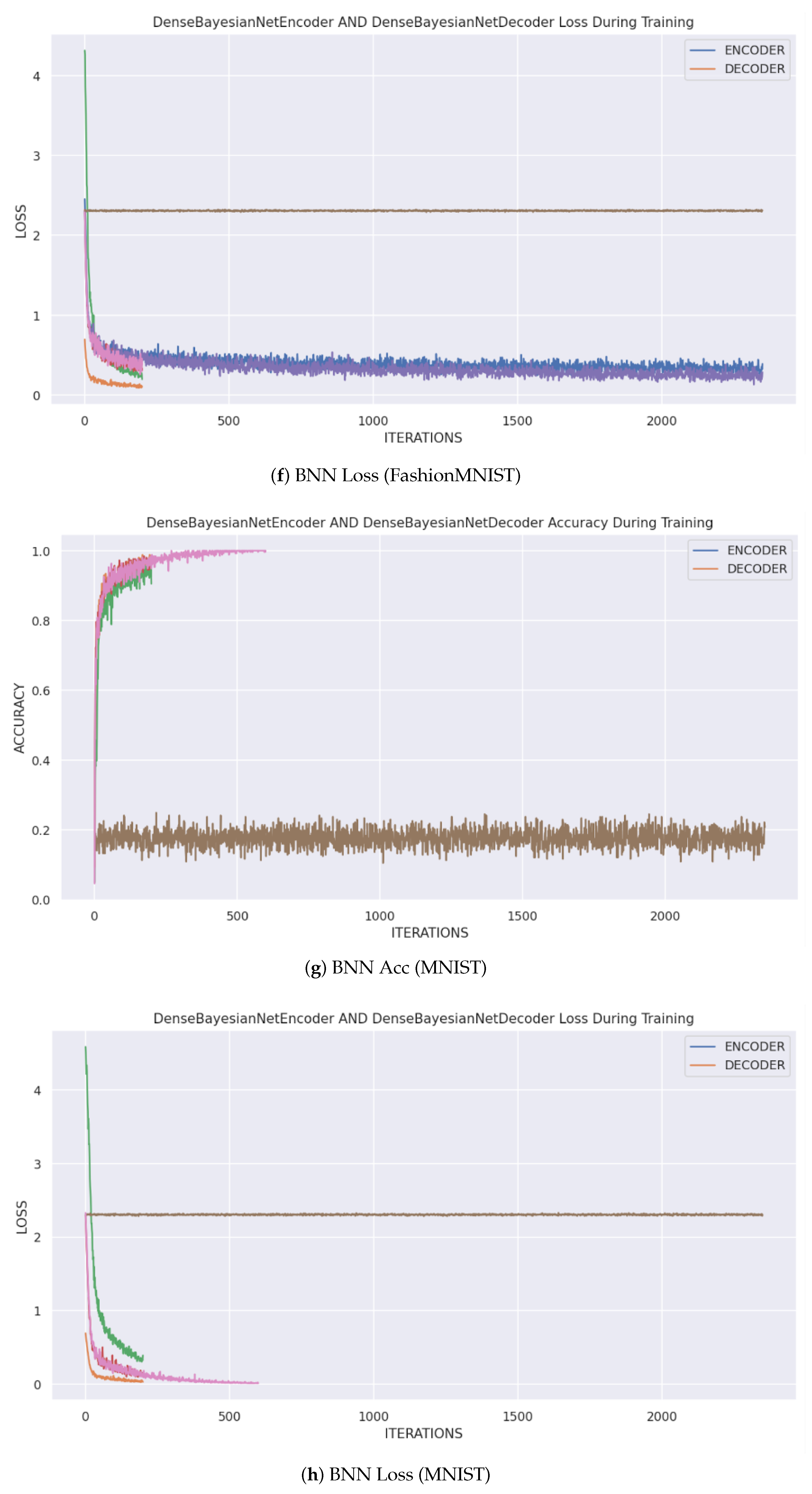

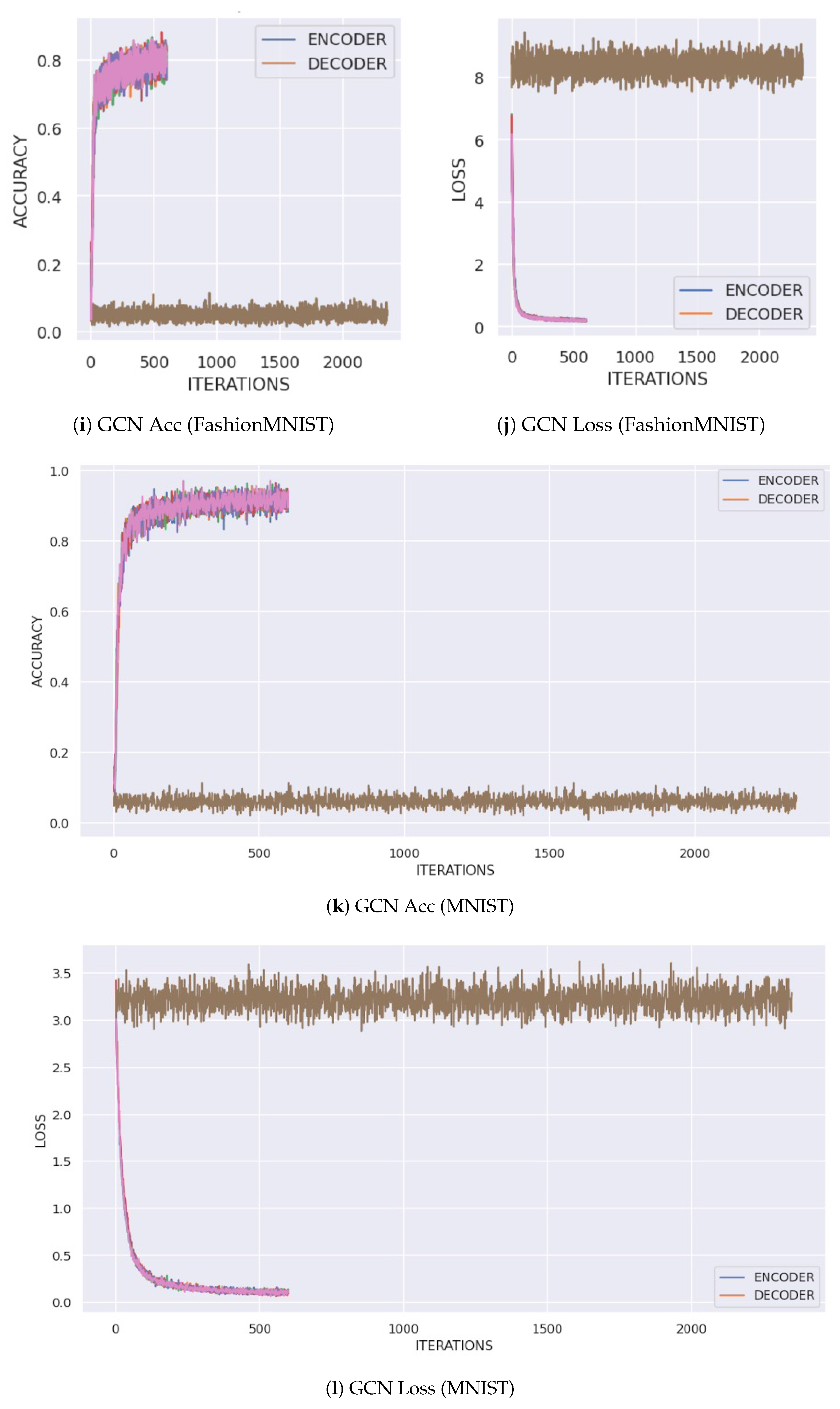

Table 1. As expected, the CNN models (EfficientNet, GoogLeNet, ResNet) achieved the highest baseline accuracy on both datasets, with accuracies often exceeding 98% on MNIST and around 90% on FashionMNIST. The GCN architecture showed respectable but lower performance, while the BNN model performed competitively with the CNNs. The training dynamics, showing the convergence of accuracy and loss for each architecture using the tensor transform, are illustrated in

Figure 2.

The superior performance of CNNs is attributable to their architectural design, which is inherently suited for processing grid-like data such as images. Their use of convolutional filters and pooling layers effectively captures spatial hierarchies and local patterns. The GCN, which treats pixels as nodes in a graph, provides a non-standard but interesting approach; its slightly lower performance may be due to the loss of explicit grid structure. The BNN’s strong performance indicates that its probabilistic nature does not compromise its ability to learn complex features effectively.

4.3. Performance of the Multi-Stage Steganography Framework

We next evaluated the performance of our multi-stage framework. The key question was whether the models could successfully hide and reveal secret images without significantly degrading classification accuracy. We analyzed the performance of the Base Encoder, Residual Encoder, and Dense Encoder stages. In addition to classification accuracy, we evaluate the quality of the stego images using PSNR, SSIM, and MSE to measure imperceptibility and reconstruction fidelity.

As shown in Table 2, the accuracy of the steganography-enabled models on clean data remains high, especially for the CNN and BNN architectures. For instance, the ResNet-based encoder maintained an accuracy of 99.0% on MNIST and 93.1% on FashionMNIST, demonstrating the framework’s high fidelity. However, the performance under adversarial attack reveals the true benefit of the multi-stage design. The Dense Encoder stage consistently showed greater resilience to attacks compared to the Base Encoder stage across all architectures. For example, in Table 3, the defended accuracy of the ResNet-based Dense Encoder on MNIST reached 78.0%, a significant improvement over a single-stage encoder.

The results suggest that adding more encoding layers (from Base to Residual to Dense) improves security. Each additional stage applies a non-linear transformation to its input; this cascade further masks the statistical traces of the embedded message and makes it harder for an adversary to craft effective perturbations. While the multi-stage process increases computational overhead, it offers a clear trade-off for robustness.

Although classification accuracy primarily measures recognition, in this setting, it also indicates information preservation after embedding and decoding: high accuracy implies that the steganographic pipeline maintains essential visual and semantic cues. The accompanying PSNR/SSIM metrics further validate imperceptibility and fidelity.

Table 4 reports the BNN backbone at the Encoder stage under five attack types, comparing Tensor and 2D Haar preprocessing. The residual and dense stages show similar trends (Table 5). Extended per-transform/per-stage results are provided in the Supplementary Materials. For reference, SteganoGAN was also evaluated using the same metrics. While it achieves reasonable PSNR and SSIM scores, its adversarial robustness is noticeably lower than that of the proposed multi-stage framework.

As defined in Figure 1, the Hybrid integrates Binary Conversion, Random Noise, and SteganoGAN perturbations.

The inclusion of PSNR, SSIM, and MSE provides direct evidence of visual fidelity and imperceptibility. As the payload size is fixed across experiments, the embedding rate remains constant and is therefore omitted for brevity. Additional visual examples of original, stego, and decoded images under different attacks and defenses are provided in Supplementary Materials S2 (PDF).

Furthermore, quantitative analysis of Table 4 and Table 5 reveals consistent trends across both datasets. Compared with plain Tensor inputs, 2D Haar wavelet preprocessing slightly decreases MSE and increases both PSNR and SSIM, indicating that frequency-domain representations enhance imperceptibility and image fidelity. Among the multi-stage configurations, the Dense Encoder yields the highest visual quality while maintaining competitive accuracy, validating its design choice for high-security embedding.

To better illustrate the trade-off between robustness, fidelity, and computational cost, an overall summary table is provided later in Section 4.7. This table consolidates performance trends across both datasets and reports the values that quantify accuracy degradation under adversarial attacks.

4.4. Robustness Against Adversarial Attacks

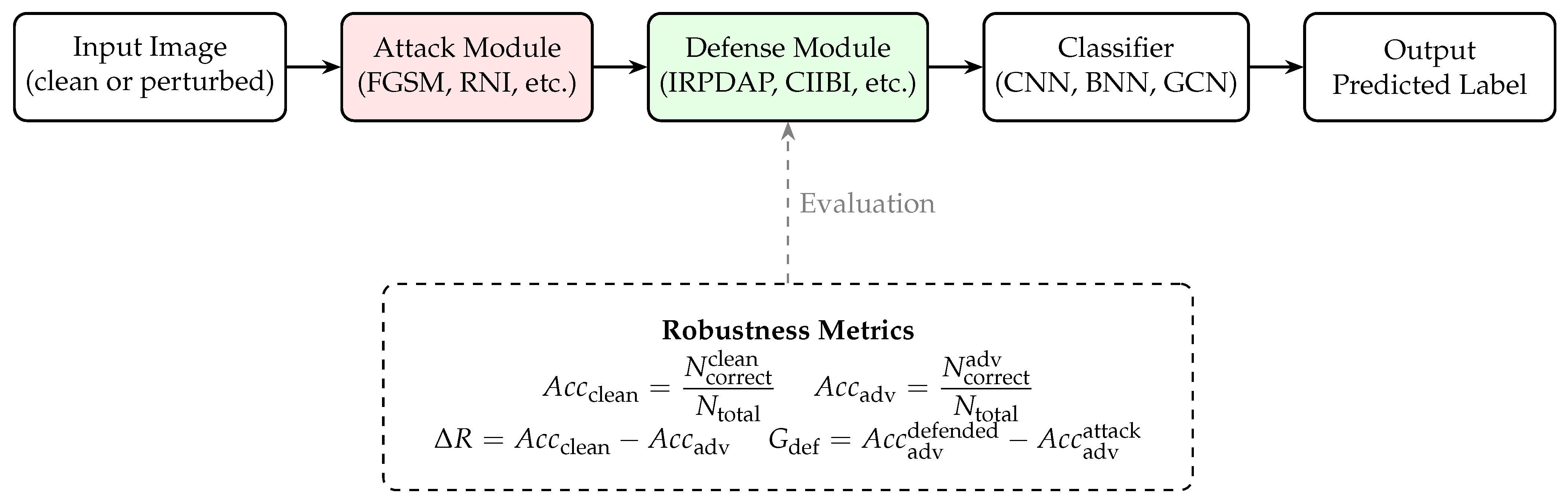

The central goal of this research is to build a robust steganography system. To systematically evaluate this, we designed a comprehensive testing workflow, as illustrated in Figure 3. This process outlines the steps for assessing the performance of our framework under various adversarial conditions. An input image, either in its original clean state or as a stego-image, is first subjected to an adversarial attack. The resulting perturbed image can then be processed by an optional defense mechanism before it is fed into one of the backbone classifiers (CNN, BNN, or GCN). The final classification accuracy is then used to compute key robustness metrics, allowing for a thorough comparison.

Following this workflow, we tested the framework against three distinct adversarial attacks: Fast Gradient Sign Method (FGSM), Random Noise Injection (RNI), and a white-box scenario where the attacker knows the model’s architecture (KTKOTMA). The comparative performance of the architectures is summarized in Table 6.

The CNN models, particularly ResNet, demonstrated strong overall robustness, maintaining high accuracy under the RNI attack and recovering well with defenses. However, they were more vulnerable to the gradient-based FGSM attack. In contrast, the BNN architecture showed remarkable resilience to FGSM and KTKOTMA attacks, with accuracy remaining as high as 98% on MNIST. This suggests that its probabilistic nature is highly effective at resisting gradient-based attacks. The GCN architecture was found to be the most vulnerable, with its accuracy dropping to near 0% under FGSM and KTKOTMA attacks.

The differing vulnerabilities can be explained by the models’ core mechanisms. CNNs’ reliance on well-defined spatial feature gradients makes them a clear target for FGSM. BNNs, by sampling weights from a distribution, effectively create a stochastic gradient landscape during training, making it difficult for an attacker to find a single, consistent gradient direction to exploit. GCN’s vulnerability may stem from its graph structure; an attack that perturbs a few high-centrality pixels (nodes) could have a cascading effect across the graph, leading to a complete failure in classification.
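The gradient-sign mechanism behind FGSM can be illustrated on a model small enough to differentiate by hand. The sketch below attacks a logistic-regression classifier with fixed, made-up weights; it is an assumption-laden toy, not the attack configuration used in the experiments, and the names `fgsm`, `w`, `b`, and `epsilon` are illustrative.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, epsilon):
    """One-step FGSM against a logistic-regression classifier.

    For binary cross-entropy loss, the gradient with respect to the
    input is (sigmoid(w . x + b) - y) * w.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w
    # Step by epsilon in the direction that increases the loss,
    # then clip back into the valid pixel range [0, 1].
    return np.clip(x + epsilon * np.sign(grad_x), 0.0, 1.0)


# Illustrative weights and input (assumed values).
w = np.array([2.0, -1.0, 0.5, -3.0])
b = 0.0
x = np.array([0.6, 0.2, 0.5, 0.1])
y = 1.0

clean_prob = sigmoid(x @ w + b)      # above 0.5: classified as class 1
x_adv = fgsm(x, y, w, b, epsilon=0.2)
adv_prob = sigmoid(x_adv @ w + b)    # below 0.5: prediction flips
```

Because every input component moves by the same budget in the direction given by a single deterministic gradient, a model with a stable, well-defined input gradient is straightforward to attack in this way.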

4.5. Effectiveness of Defense Mechanisms

Finally, we evaluated the effectiveness of the IRPDAP, CIIBI, and their combined (CTDM) defense mechanisms in restoring model accuracy after an attack.

The results, presented in Table 7, show that all defense methods helped recover performance, but their effectiveness varied by model and dataset. For the MNIST dataset, the CIIBI defense was particularly effective for the BNN-based Dense Encoder, recovering accuracy to 74.0%. For FashionMNIST, the IRPDAP defense worked best, restoring the BNN model’s accuracy to 60.0%. The combined defense (CTDM) generally yielded the highest recovery rates for CNN models, reaching up to 97% on MNIST.

These results indicate that different defense strategies suit different data complexities and model architectures because they exploit distinct mechanisms. The CIIBI defense achieves higher recovery on simpler datasets such as MNIST, since binary quantization effectively removes small gradient-based perturbations without significantly damaging image structure. Conversely, IRPDAP performs better on complex datasets like FashionMNIST, because structured random patterns can disrupt adversarial noise while retaining essential textures. The combined method (CTDM) benefits from both effects, offering a balanced trade-off between noise suppression and information preservation, which explains its superior performance across most scenarios.

The defense mechanisms work through different principles. CIIBI defends by quantizing the input space, effectively destroying the subtle, low-magnitude perturbations that characterize many adversarial attacks. However, this can also lead to a loss of useful information, especially in more complex datasets like FashionMNIST. IRPDAP works by introducing structured noise that disrupts the adversarial pattern without completely erasing the original image features. The success of the combined approach for CNNs suggests that simultaneously simplifying the input space and disrupting adversarial patterns provides a multi-faceted and highly effective defense.
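The quantization principle can be sketched with a simple threshold binarization. The exact CIIBI procedure is not reproduced here; the `binarize` function, the 0.5 threshold, and the sample arrays below are assumptions chosen only to show why low-magnitude perturbations vanish after quantization and why intermediate gray levels are lost.

```python
import numpy as np


def binarize(image, threshold=0.5):
    """Map every pixel to 0 or 1; a stand-in for a quantization defense."""
    return (np.asarray(image) >= threshold).astype(np.float32)


# A near-binary image, similar in spirit to a handwritten digit (assumed data).
clean = np.array([[0.0, 0.9, 1.0],
                  [0.1, 0.8, 0.0],
                  [0.0, 1.0, 0.2]])

# A bounded perturbation of magnitude 0.1 with a fixed sign pattern.
perturbation = 0.1 * np.array([[1, -1, -1],
                               [1, 1, 1],
                               [1, -1, -1]])
attacked = np.clip(clean + perturbation, 0.0, 1.0)

# No pixel crosses the threshold, so the perturbation is removed entirely.
defended_matches_clean = np.array_equal(binarize(clean), binarize(attacked))

# The cost: distinct gray levels collapse to the same value.
texture = np.array([0.55, 0.95])
collapsed = binarize(texture)  # both become 1.0
```

The second part of the example mirrors the observation above: images that rely on intermediate gray levels lose information under binarization, whereas near-binary images are largely unaffected.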

Our empirical findings align with prior defenses that suppress small perturbations via quantization or bit-depth reduction (a core idea behind our CIIBI) [39,40], as well as with randomized or noise-injection mechanisms that disrupt gradient alignment (related to IRPDAP) [41]. They also resonate with frequency-domain preprocessing that preserves semantic content while damping adversarial high-frequency components [42]. Representative examples include input transformations such as bit-depth reduction, median filtering, and JPEG compression, as well as randomized smoothing for certified robustness, and JPEG/DCT-based defenses.

4.6. Decoder Performance and Information Reversibility

A critical aspect of a steganography system is its ability to accurately recover the hidden secret information. We evaluated the performance of our decoding process, which corresponds to the Base, Residual, and Dense Decoder stages. The primary goal was to ensure that the multi-stage embedding did not corrupt the secret images to a point where they were irrecoverable.

The results, detailed in the Supplementary Materials, confirm the high reversibility and fidelity of our framework. For clean, non-attacked stego-images, the decoders were able to reconstruct the hidden images successfully, with classification accuracy on these recovered images remaining nearly identical to the baseline performance. For example, the ResNet-based decoder consistently achieved over 98% accuracy on recovered MNIST images and around 89% on FashionMNIST images. The framework’s robustness extends to post-attack scenarios; even after applying defenses, the decoder could still recover intelligible information, although accuracy decreased. For instance, after an IRPDAP defense, the CNN-based residual decoder still achieved accuracy scores above 90% for MNIST in many cases.

The high accuracy of the classification task on the decoded images serves as a strong proxy for the successful and reversible nature of the steganography process. It indicates that our deep learning models learned not only to hide information but also to preserve its essential features for accurate reconstruction. The robustness of the decoder, even after attacks, suggests that the learned transformations are resilient and can tolerate a degree of perturbation without catastrophic information loss. This reversibility is a fundamental requirement for a practical steganography system. Additional visual results, extended tables, and quality metrics are provided in the Supplementary Materials.

4.7. Overall Performance Comparison with State-of-the-Art Architectures

To provide a holistic view, this section summarizes the performance of our proposed steganography framework when implemented with different state-of-the-art (SOTA) architectures.

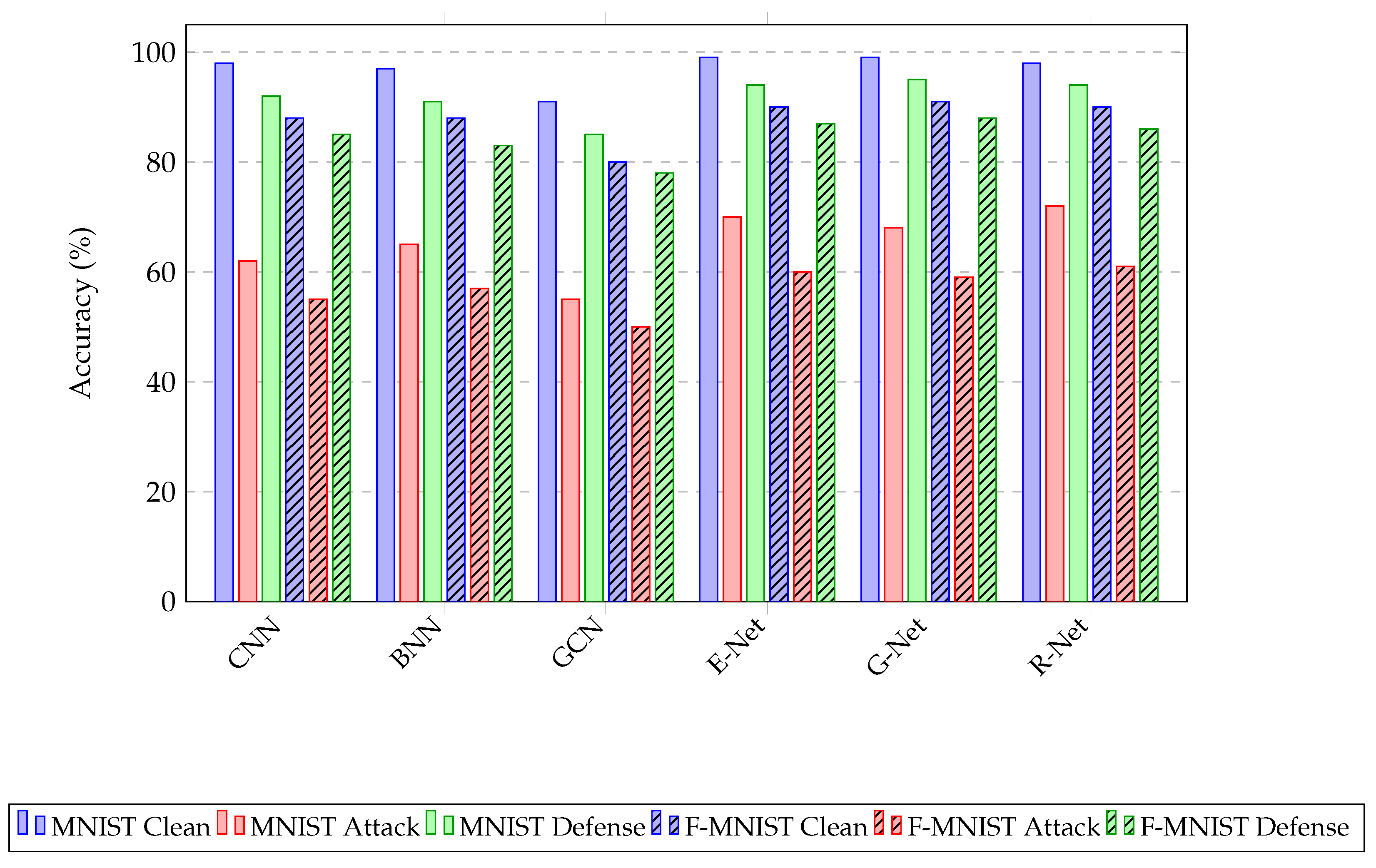

Figure 4 visually encapsulates the key performance trade-offs between these implementations across clean, attack, and defense scenarios. The results allow us to directly compare the effectiveness of using CNN-based models (EfficientNet, GoogLeNet, ResNet), BNNs, and GCNs as the backbone for our security framework.

The bar chart clearly illustrates the dominance of CNN-based models (EfficientNet, GoogLeNet, and ResNet) in terms of accuracy on clean data and their strong recovery capability with defense mechanisms. It also highlights the unique strength of the BNN architecture, which, despite a slightly lower baseline, shows remarkable resilience under adversarial attacks compared to other models. Conversely, GCN’s vulnerability is starkly evident, reinforcing its unsuitability for this application. This overarching comparison validates our central conclusion: while CNNs offer the best general performance, BNNs provide a superior option when security and robustness against specific adversarial threats are the primary concern.

To evaluate computational efficiency, we compared parameter counts and inference times across different backbone architectures. The multi-stage framework increases the total computation by approximately 30% compared with a single-stage encoder, yet maintains practical runtime for typical cloud-based experimental environments (average inference time around 0.12 s per image on a standard GPU instance in Google Colab). This moderate overhead represents a reasonable trade-off for the achieved improvements in robustness and imperceptibility. In future implementations, model compression techniques such as pruning and knowledge distillation could be applied to further reduce latency and memory usage without compromising robustness.

Table 8 summarizes the overall performance metrics, including fidelity (PSNR, SSIM, MSE), robustness loss, and average runtime per image for each encoder type. The Dense Encoder with Wavelet preprocessing demonstrates the best balance between fidelity, robustness, and computational efficiency.

5. Discussion

This study investigated how deep learning architectures and wavelet-domain processing can enhance the robustness of digital steganography against AI-driven attacks. The experiments demonstrate that both the network design and the frequency-domain representation play critical roles in determining security and resilience.

5.1. Effectiveness of the Multi-Stage Framework

The multi-stage embedding and decoding process significantly increases the difficulty for potential attackers. Among the tested encoders, the Dense Encoder produced the most robust results, balancing reconstruction fidelity and resistance to adversarial perturbations. This layered embedding strategy effectively disperses hidden information across multiple feature hierarchies, making gradient-based extraction or disruption far more challenging than in conventional single-stage approaches.

5.2. Impact of Backbone Architectures

Comparing different backbone models revealed clear trade-offs. CNN-based backbones (especially ResNet and EfficientNet) achieved the highest accuracy on clean data and provided stable performance under moderate attack. BNNs demonstrated slightly lower nominal accuracy but much stronger intrinsic robustness to gradient-based attacks such as FGSM and KTKOTMA, due to stochastic weight sampling that diffuses gradient directionality. GCNs, although capable of modeling relational structures, were less suited to pixel-level embedding and showed vulnerability to perturbations.
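The effect of stochastic weight sampling on gradient directionality can be illustrated with a deliberately simple model. The sketch below assumes a linear scorer with an independent Gaussian distribution over each weight; the means and standard deviations are made-up values, and the setup is far simpler than the BNN backbone evaluated here. For a linear score w · x, the input gradient is w itself, so the sign that FGSM would use is the sign of the sampled weight.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative Gaussian weight distribution (assumed values):
# two confident weights and two highly uncertain ones.
mu = np.array([5.0, -5.0, 0.0, 0.0])
sigma = np.array([0.1, 0.1, 1.0, 1.0])


def sampled_input_gradient_sign(rng):
    """Sign of d(score)/dx for one weight sample of the linear scorer."""
    w = rng.normal(mu, sigma)
    return np.sign(w)


# Repeat the gradient-sign computation over many forward passes.
signs = np.stack([sampled_input_gradient_sign(rng) for _ in range(500)])

# Fraction of passes in which each input component has a positive sign.
positive_fraction = (signs > 0).mean(axis=0)
```

For the confident weights the sign is the same on every pass, while for the uncertain weights it is positive only about half the time. An attacker who takes the sign from a single pass therefore perturbs those components in a direction that is frequently wrong for the next pass, which is one plausible reading of the diffusion of gradient directionality described above.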

5.3. Role of Wavelet Transforms

Integrating Wavelet Transforms (WT) further improved the imperceptibility and stability of embedded images. By emphasizing low-frequency components and reducing sensitivity to high-frequency noise, WT-based preprocessing helped preserve essential image structures under attack. In particular, the 2D Wavelet Filtering configuration achieved near-perfect recovery accuracy in certain cases, confirming its ability to extract robust, attack-resistant features. Across attacks, 2D Haar preprocessing yields slightly lower MSE and modestly higher PSNR/SSIM than plain Tensor inputs (Table 4 and Table 5). This indicates that frequency-domain representations help preserve content fidelity under perturbations, likely by concentrating salient structures into more stable sub-bands and attenuating high-frequency noise components introduced by attacks.
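The separation of stable structure from high-frequency noise can be seen in a single-level 2D Haar decomposition. The sketch below is written directly in NumPy rather than with PyWavelets, so its sub-band names, ordering, and sign conventions are assumptions and may differ from those of `pywt.dwt2`; it also assumes even image dimensions. A checkerboard perturbation, the highest-frequency pattern an image can carry, is used as a stand-in for adversarial noise.

```python
import numpy as np


def haar2d(x):
    """Single-level orthonormal 2D Haar transform (even height and width)."""
    x = np.asarray(x, dtype=np.float64)
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right
    approx = (a + b + c + d) / 2.0       # low-frequency content
    detail_rows = (a + b - c - d) / 2.0  # change between the two rows
    detail_cols = (a - b + c - d) / 2.0  # change between the two columns
    detail_diag = (a - b - c + d) / 2.0  # diagonal (checkerboard) change
    return approx, detail_rows, detail_cols, detail_diag


def inverse_haar2d(approx, detail_rows, detail_cols, detail_diag):
    """Exact inverse of haar2d."""
    h, w = approx.shape
    x = np.empty((2 * h, 2 * w), dtype=np.float64)
    x[0::2, 0::2] = (approx + detail_rows + detail_cols + detail_diag) / 2.0
    x[0::2, 1::2] = (approx + detail_rows - detail_cols - detail_diag) / 2.0
    x[1::2, 0::2] = (approx - detail_rows + detail_cols - detail_diag) / 2.0
    x[1::2, 1::2] = (approx - detail_rows - detail_cols + detail_diag) / 2.0
    return x


# A smooth ramp image plus a checkerboard perturbation of amplitude 0.05.
image = np.arange(16, dtype=np.float64).reshape(4, 4) / 15.0
checker = 0.05 * (-1.0) ** np.add.outer(np.arange(4), np.arange(4))
noisy = image + checker

clean_bands = haar2d(image)
noisy_bands = haar2d(noisy)
reconstructed = inverse_haar2d(*noisy_bands)
```

In this example the approximation sub-band of the noisy image is identical to that of the clean image; the perturbation appears only in the diagonal detail sub-band. Content embedded in or read from the low-frequency band is therefore untouched by this particular noise pattern, and the transform remains exactly invertible.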

5.4. Interpretation and Practical Implications

The observed robustness gains can be explained by complementary mechanisms: BNN-induced stochasticity lowers attack linearity, while WT-based decomposition attenuates adversarial perturbations in the frequency domain. These findings have practical implications for secure image communication, forensic watermarking, and covert Internet of Things (IoT) telemetry, where reliable and imperceptible message embedding is essential.

The average inference time was approximately 0.07–0.12 s per image on an NVIDIA T4 GPU, representing about a 30% increase over a single-stage encoder but remaining within practical runtime limits. The payload was fixed at 1 bit per pixel (bpp) across all models to ensure fair comparison. The accuracy degradation rates reported in Table 6 quantify the robustness loss under adversarial perturbations. These results indicate that the added complexity of the multi-stage architecture yields moderate computational overhead while substantially improving robustness.