Quantum-Inspired Cross-Attention Alignment for Turkish Scientific Abstractive Summarization

Abstract

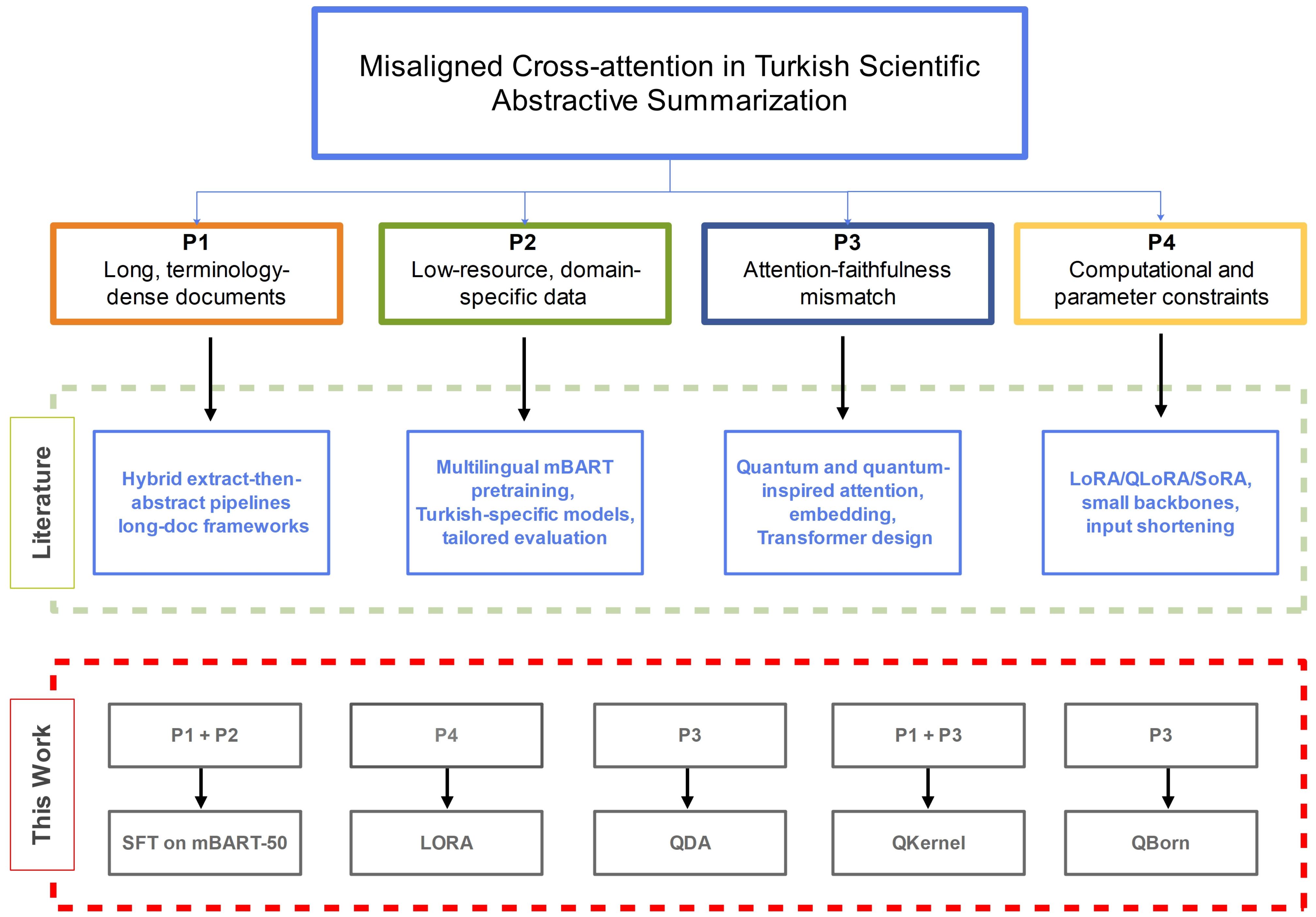

1. Introduction

2. Background

3. Materials and Methods

3.1. Dataset Construction

3.1.1. Corpus Compilation and Preprocessing

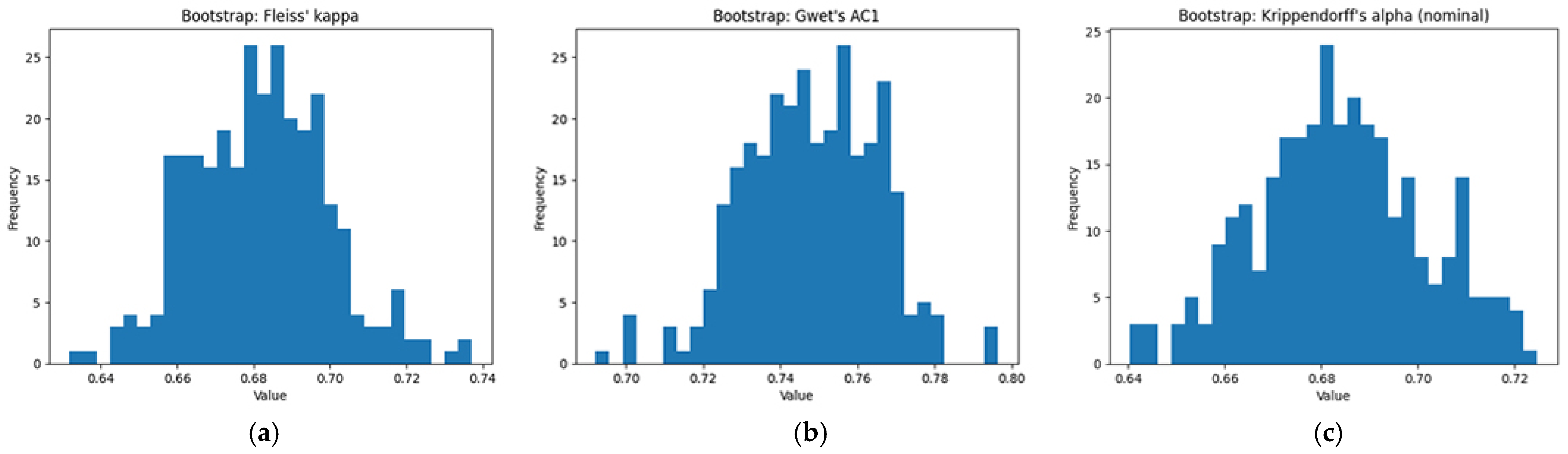

3.1.2. Reliability Metrics and Rationale

3.2. Text Preprocessing and Structuring

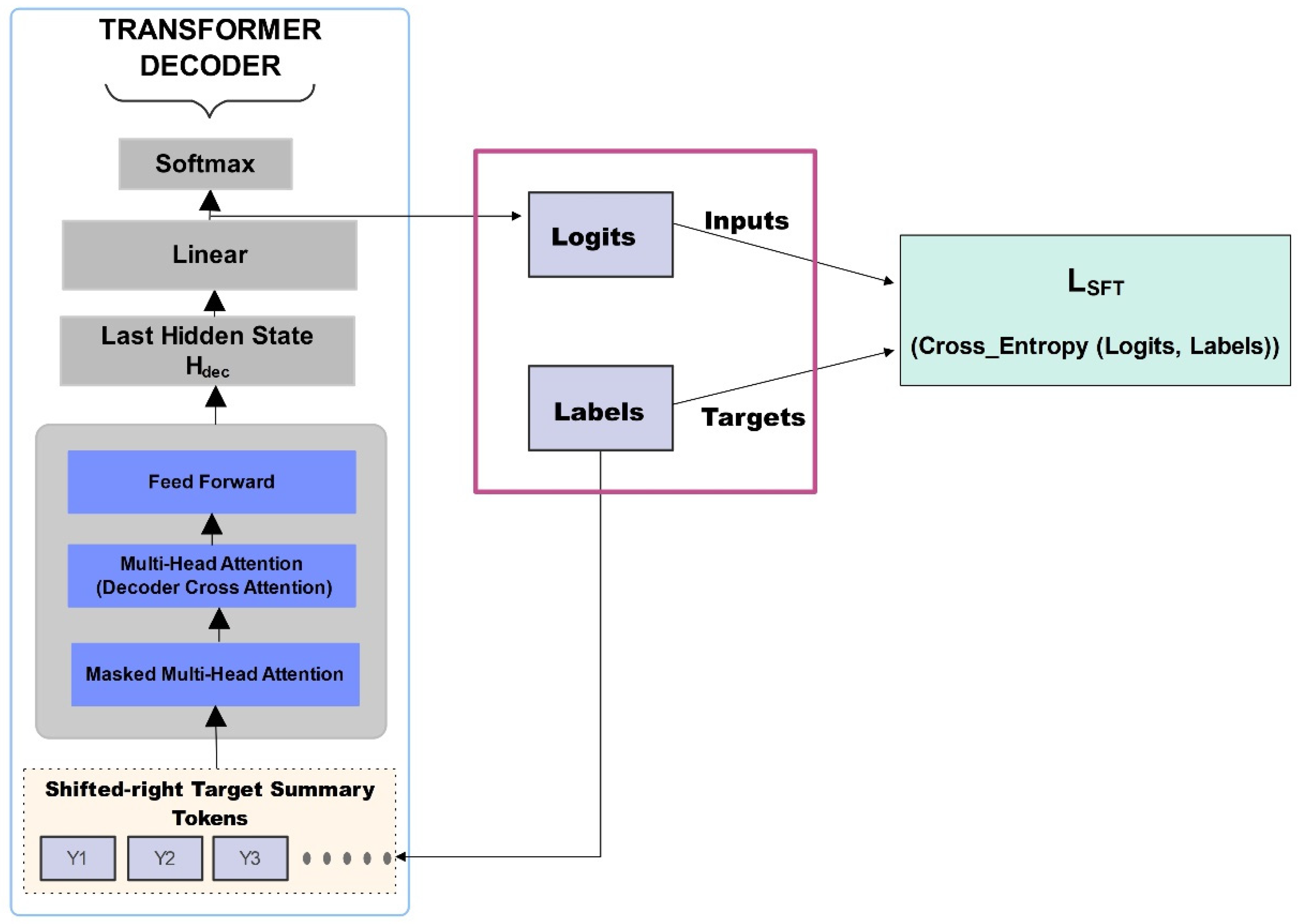

3.3. Base Model (SFT) and Parameter-Efficient Fine-Tuning (LoRA)

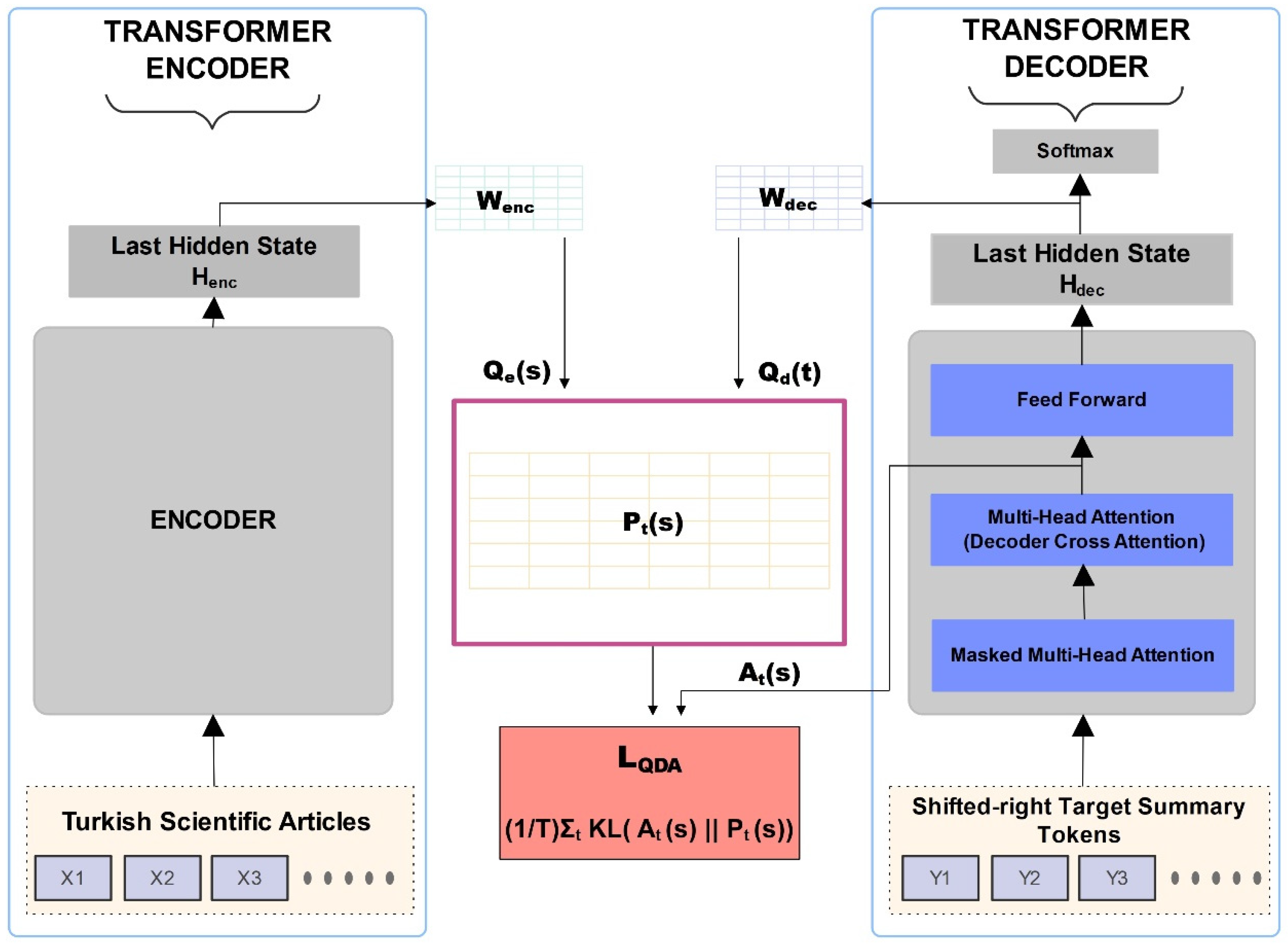

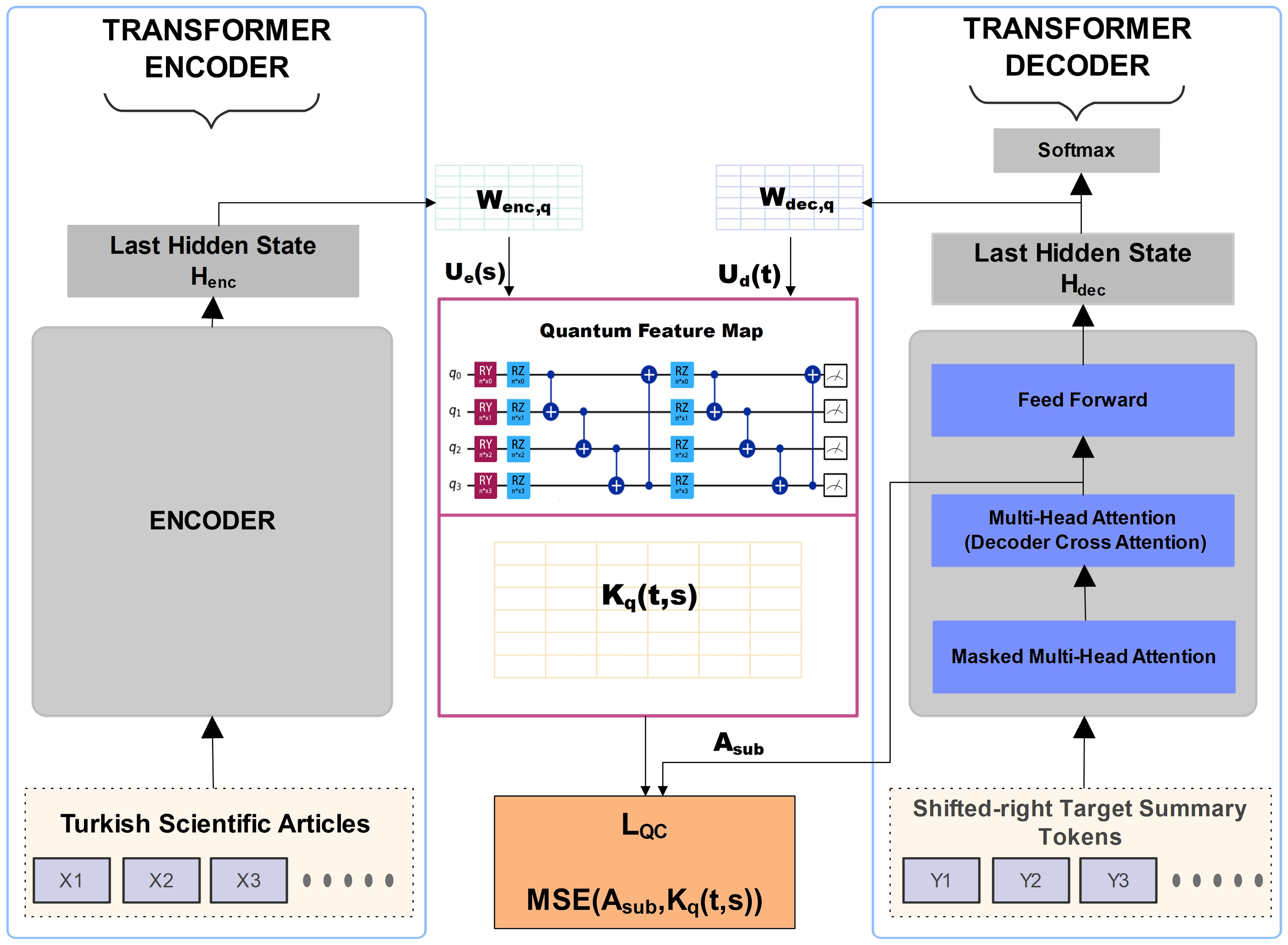

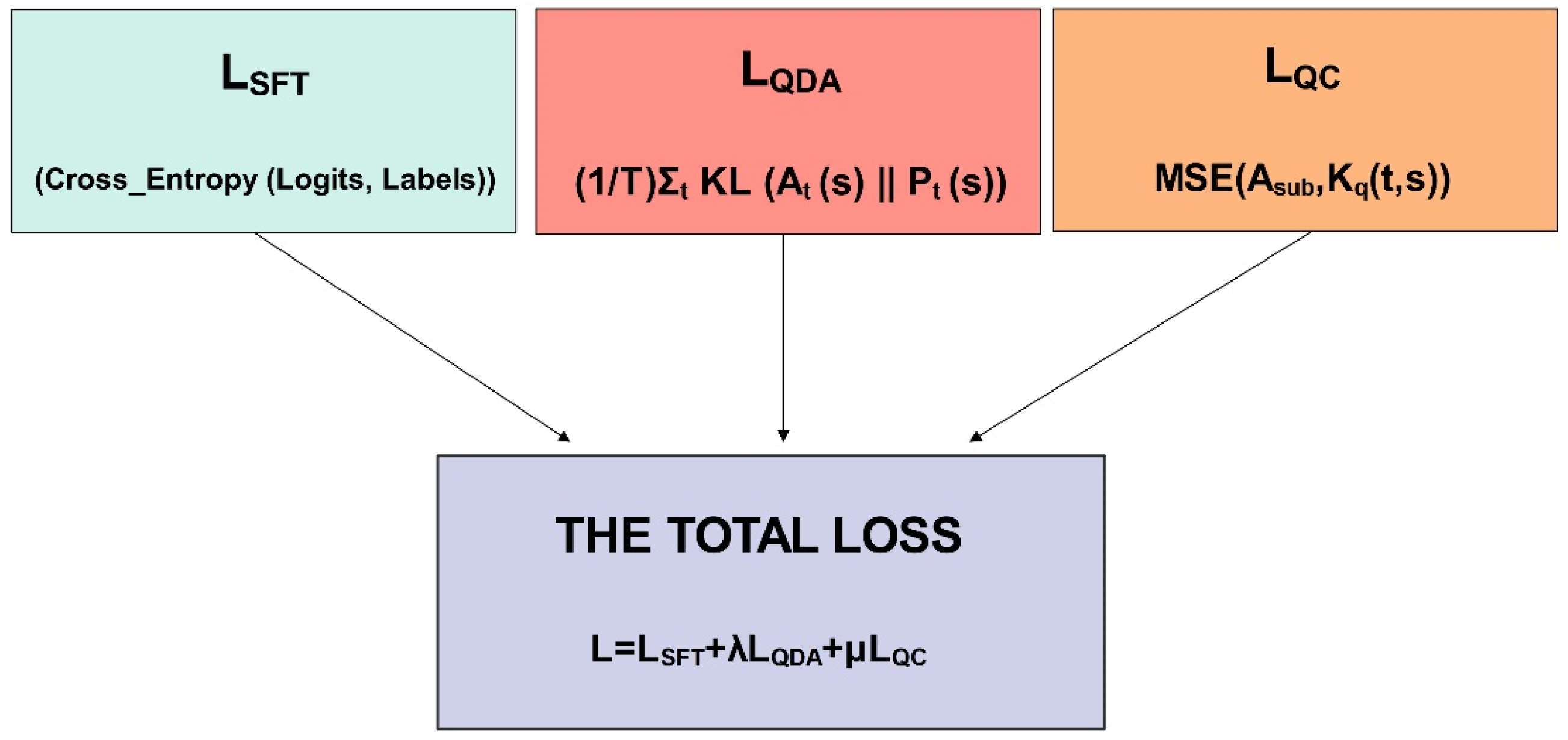

3.4. QDA (Quantum Distribution Alignment)

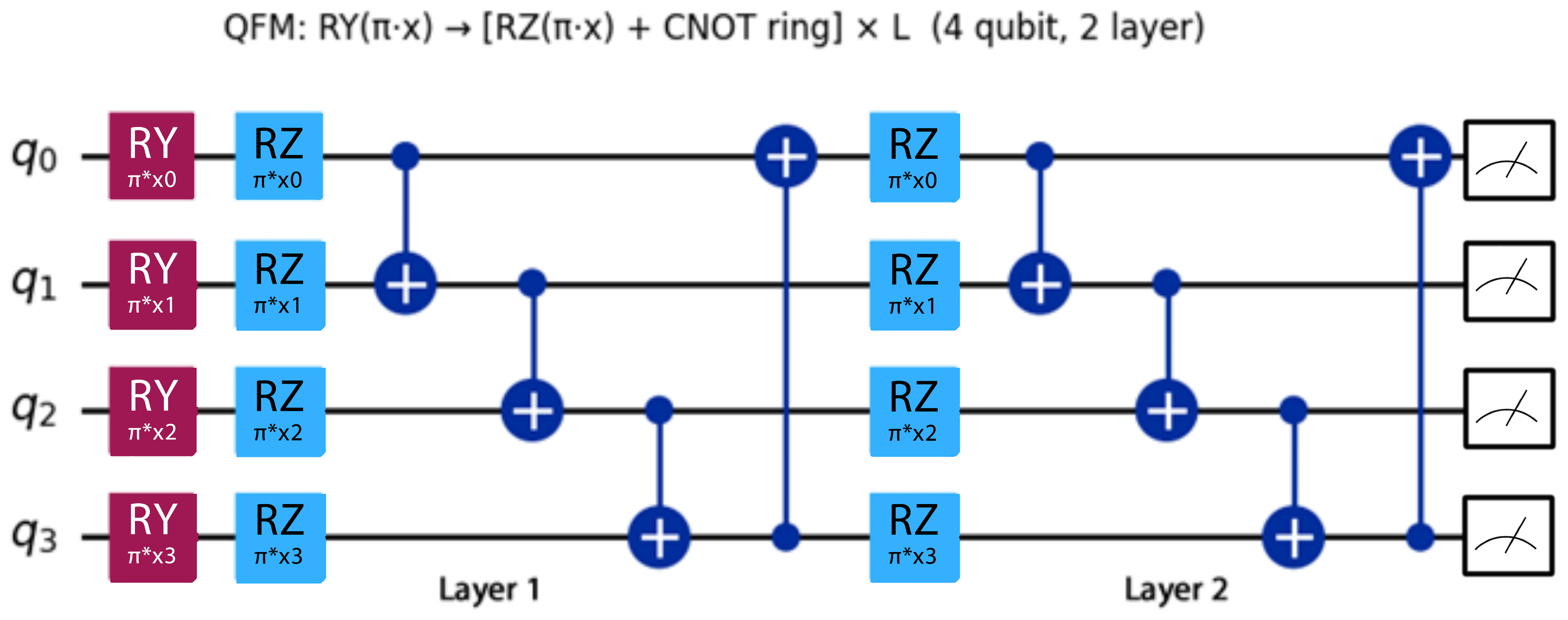

3.5. QKernel and QBorn Instantiations

3.5.1. Parameter-Free Kernel (QKernel)

3.5.2. QBorn (Born-Rule-Based Alignment)

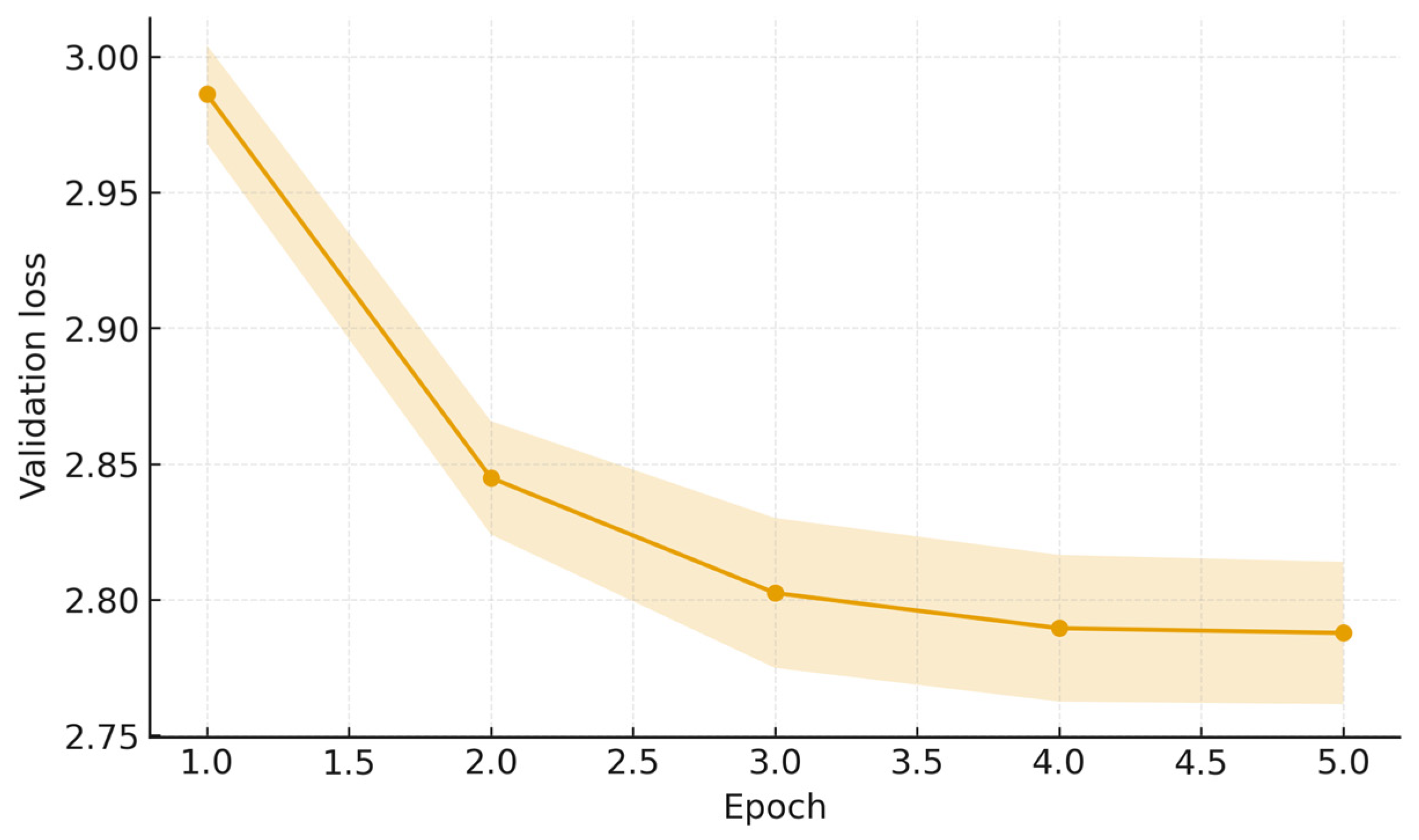

3.6. Training Configuration

3.7. Evaluation Protocol

3.8. Software Infrastructure and Libraries

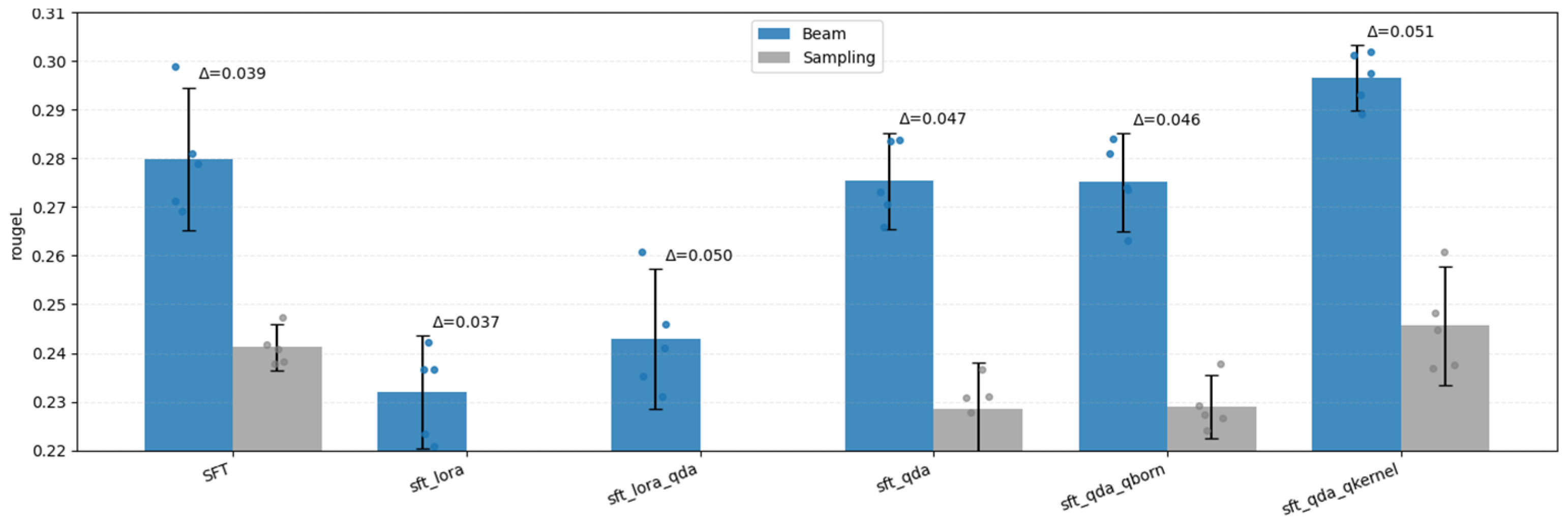

4. Results

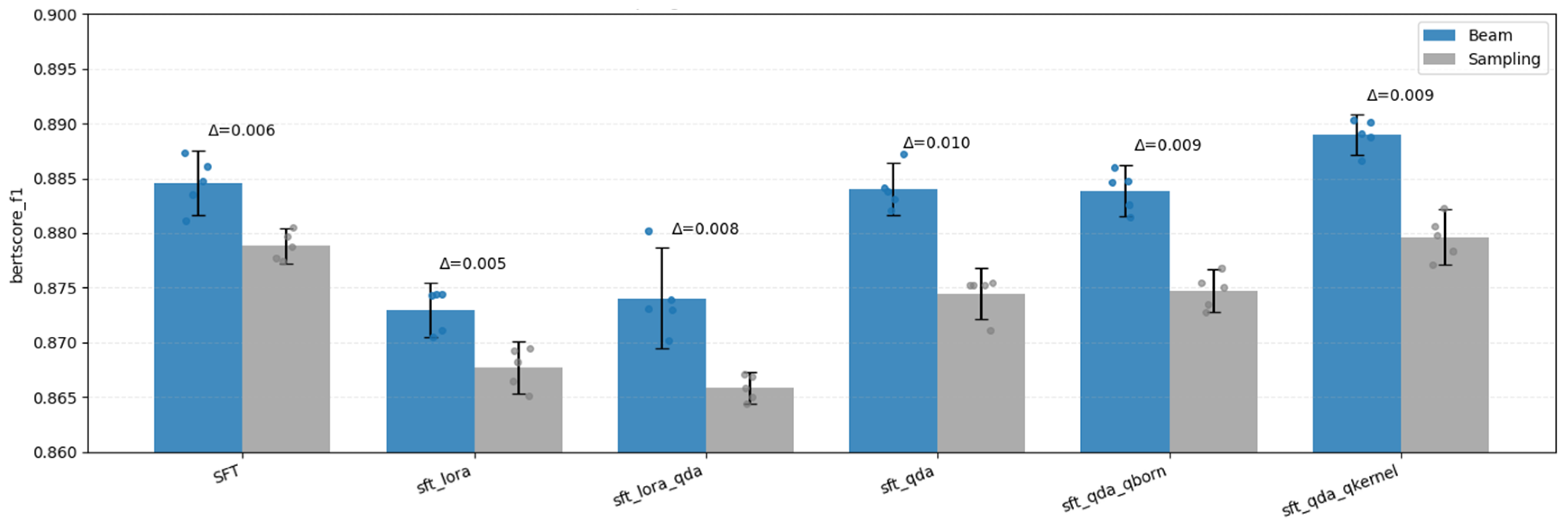

4.1. Decoding Mode Comparison: Beam vs. Sampling

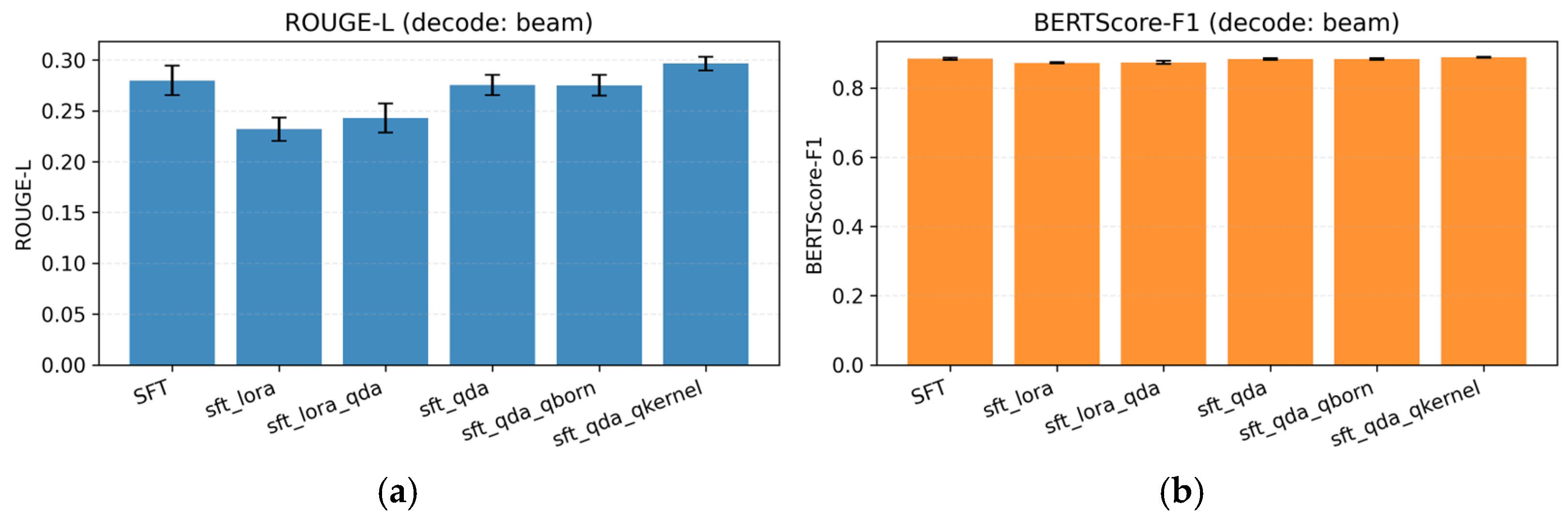

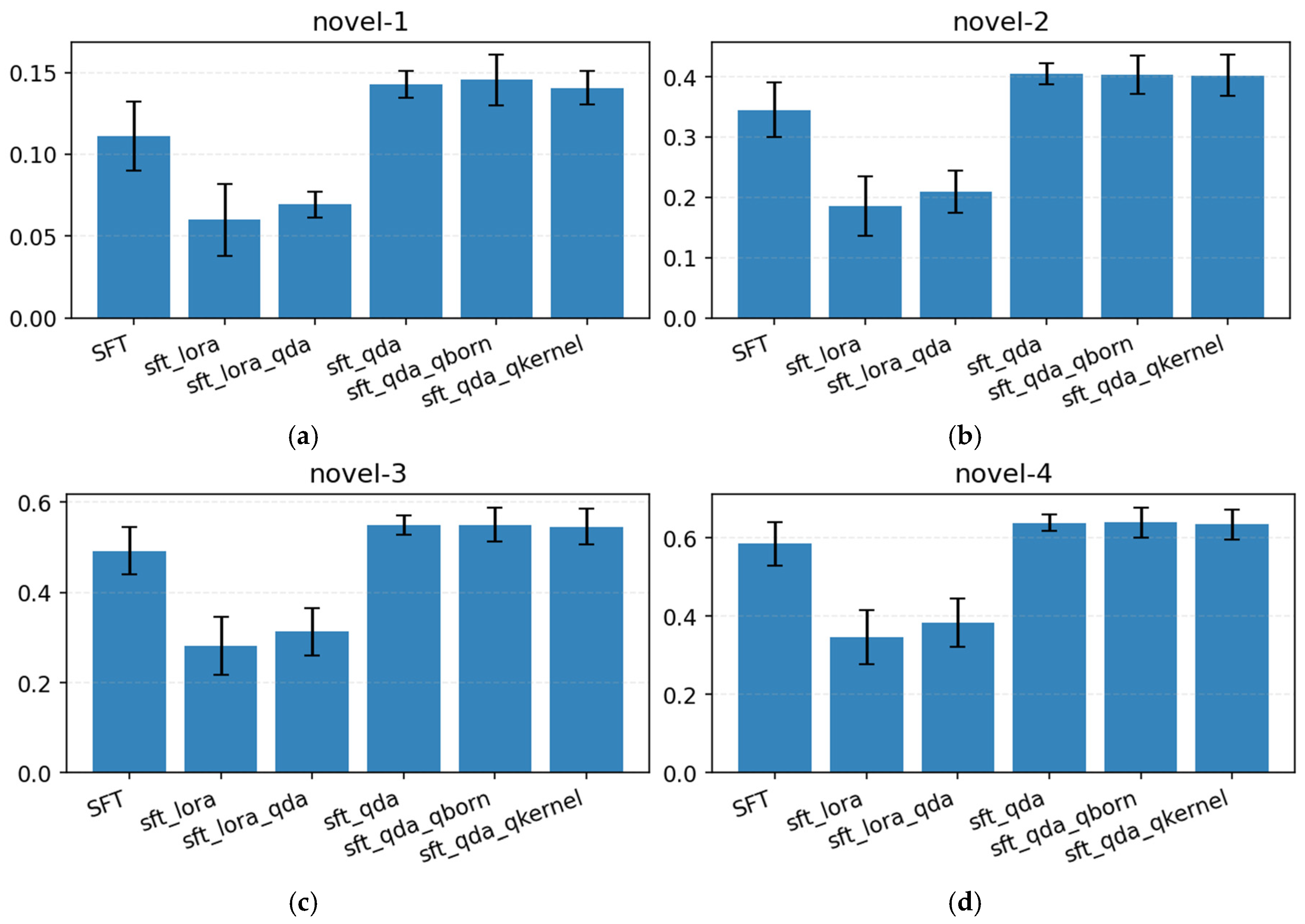

4.2. Architectural Comparison

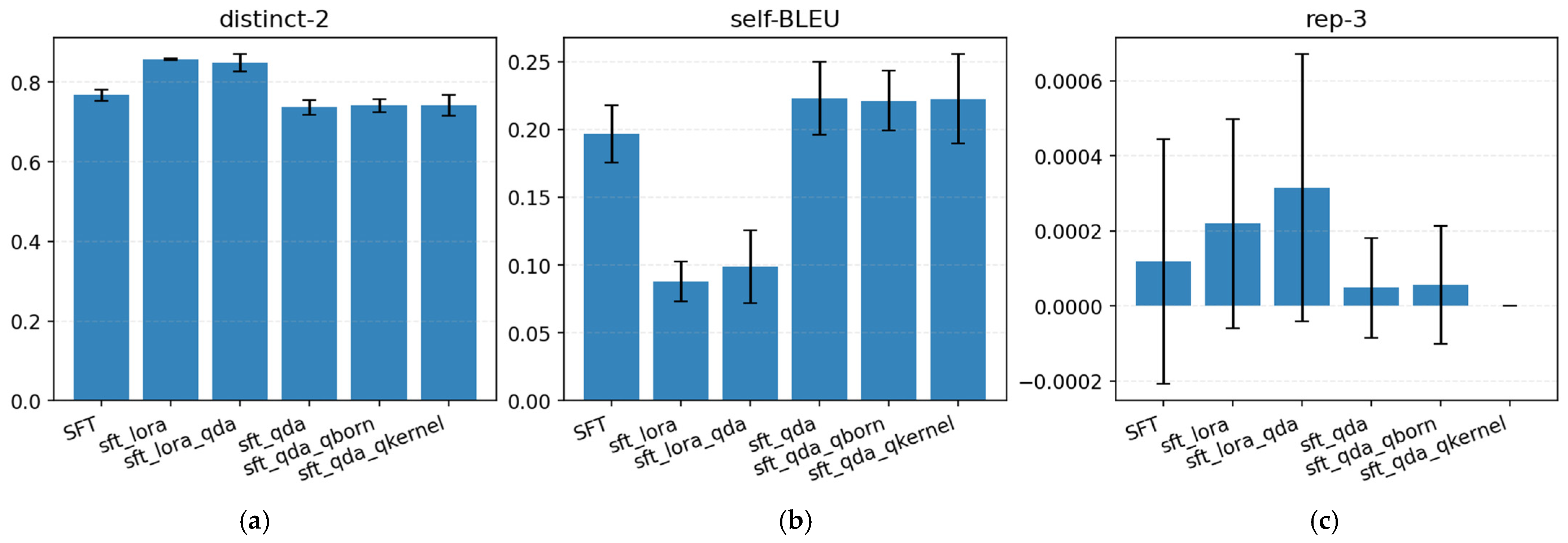

4.3. Diversity and Copying Dynamics

4.4. Qualitative Analysis (Case Studies)

5. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Experiment | Seed | rouge1 | rouge2 | rougeL | rougeLsum | bertscore_f1 | rep3 |
|---|---|---|---|---|---|---|---|
| SFT | 11 | 0.45470 | 0.24790 | 0.28110 | 0.28150 | 0.88470 | 0.00000 |
| SFT | 22 | 0.42437 | 0.23371 | 0.26915 | 0.26849 | 0.88351 | 0.00000 |
| SFT | 33 | 0.43063 | 0.22553 | 0.27128 | 0.27101 | 0.88116 | 0.00000 |
| SFT | 42 | 0.46370 | 0.26116 | 0.29903 | 0.30031 | 0.88737 | 0.00000 |
| SFT | 55 | 0.44806 | 0.24154 | 0.27893 | 0.27898 | 0.88606 | 0.00000 |
| sft_lora | 11 | 0.40097 | 0.18482 | 0.22083 | 0.22092 | 0.87107 | 0.00000 |
| sft_lora | 22 | 0.39071 | 0.18205 | 0.22343 | 0.22289 | 0.87044 | 0.00000 |
| sft_lora | 33 | 0.41953 | 0.20714 | 0.24230 | 0.24384 | 0.87445 | 0.00000 |
| sft_lora | 42 | 0.41787 | 0.19892 | 0.23671 | 0.23743 | 0.87429 | 0.00000 |
| sft_lora | 55 | 0.41047 | 0.20186 | 0.23659 | 0.23744 | 0.87441 | 0.00000 |
| sft_lora_qda | 11 | 0.41225 | 0.20152 | 0.24111 | 0.23980 | 0.87387 | 0.00000 |
| sft_lora_qda | 22 | 0.44061 | 0.22746 | 0.26087 | 0.26143 | 0.88017 | 0.00000 |
| sft_lora_qda | 33 | 0.41809 | 0.21219 | 0.24603 | 0.24657 | 0.87294 | 0.00000 |
| sft_lora_qda | 42 | 0.40920 | 0.19685 | 0.23515 | 0.23620 | 0.87305 | 0.00050 |
| sft_lora_qda | 55 | 0.39746 | 0.18867 | 0.23112 | 0.23142 | 0.87021 | 0.00000 |
| sft_qda | 11 | 0.43894 | 0.23487 | 0.27321 | 0.27381 | 0.88415 | 0.00000 |
| sft_qda | 22 | 0.44798 | 0.24621 | 0.28377 | 0.28402 | 0.88718 | 0.00000 |
| sft_qda | 33 | 0.44623 | 0.24460 | 0.28360 | 0.28443 | 0.88309 | 0.00000 |
| sft_qda | 42 | 0.43899 | 0.23201 | 0.26601 | 0.26581 | 0.88377 | 0.00000 |
| sft_qda | 55 | 0.44096 | 0.23549 | 0.27057 | 0.27113 | 0.88207 | 0.00000 |
| sft_qda_qborn | 11 | 0.44012 | 0.24080 | 0.28100 | 0.28049 | 0.88464 | 0.00000 |
| sft_qda_qborn | 22 | 0.45147 | 0.24611 | 0.28402 | 0.28324 | 0.88600 | 0.00000 |
| sft_qda_qborn | 33 | 0.42849 | 0.23306 | 0.27348 | 0.27414 | 0.88139 | 0.00000 |
| sft_qda_qborn | 42 | 0.43889 | 0.22878 | 0.26311 | 0.26331 | 0.88253 | 0.00000 |
| sft_qda_qborn | 55 | 0.44562 | 0.24041 | 0.27400 | 0.27557 | 0.88478 | 0.00000 |
| sft_qda_qkernel | 11 | 0.46550 | 0.26007 | 0.30129 | 0.30166 | 0.89036 | 0.00000 |
| sft_qda_qkernel | 22 | 0.47182 | 0.27327 | 0.30192 | 0.30070 | 0.89008 | 0.00000 |
| sft_qda_qkernel | 33 | 0.45922 | 0.26918 | 0.29745 | 0.29608 | 0.88875 | 0.00000 |
| sft_qda_qkernel | 42 | 0.46591 | 0.26459 | 0.28907 | 0.28885 | 0.88903 | 0.00000 |
| sft_qda_qkernel | 55 | 0.45425 | 0.25885 | 0.29311 | 0.29262 | 0.88662 | 0.00000 |

| Experiment | Seed | rouge1 | rouge2 | rougeL | rougeLsum | bertscore_f1 | rep3 |
|---|---|---|---|---|---|---|---|
| SFT | 11 | 0.3975 | 0.1907 | 0.2385 | 0.2385 | 0.8788 | 0.0000 |
| SFT | 22 | 0.3928 | 0.1768 | 0.2292 | 0.2291 | 0.8775 | 0.0000 |
| SFT | 33 | 0.3923 | 0.1840 | 0.2417 | 0.2416 | 0.8778 | 0.0000 |
| SFT | 42 | 0.4013 | 0.1893 | 0.2435 | 0.2440 | 0.8805 | 0.0000 |
| SFT | 55 | 0.3983 | 0.1872 | 0.2534 | 0.2541 | 0.8797 | 0.0000 |
| sft_lora | 11 | 0.3339 | 0.1188 | 0.1557 | 0.1556 | 0.8665 | 0.0000 |
| sft_lora | 22 | 0.3376 | 0.1157 | 0.1418 | 0.1417 | 0.8682 | 0.0000 |
| sft_lora | 33 | 0.3343 | 0.1080 | 0.1662 | 0.1661 | 0.8651 | 0.0000 |
| sft_lora | 42 | 0.3389 | 0.1122 | 0.1716 | 0.1717 | 0.8695 | 0.0000 |
| sft_lora | 55 | 0.3377 | 0.1134 | 0.1697 | 0.1696 | 0.8693 | 0.0000 |
| sft_lora_qda | 11 | 0.3409 | 0.1238 | 0.1481 | 0.1476 | 0.8669 | 0.0000 |
| sft_lora_qda | 22 | 0.3495 | 0.1190 | 0.1462 | 0.1457 | 0.8671 | 0.0000 |
| sft_lora_qda | 33 | 0.3408 | 0.1155 | 0.1516 | 0.1517 | 0.8650 | 0.0000 |
| sft_lora_qda | 42 | 0.3388 | 0.1082 | 0.1420 | 0.1413 | 0.8659 | 0.0000 |
| sft_lora_qda | 55 | 0.3399 | 0.1118 | 0.1414 | 0.1412 | 0.8693 | 0.0000 |
| sft_qda | 11 | 0.3973 | 0.1889 | 0.2312 | 0.2317 | 0.8755 | 0.0002 |
| sft_qda | 22 | 0.4043 | 0.1789 | 0.2278 | 0.2278 | 0.8752 | 0.0000 |
| sft_qda | 33 | 0.4013 | 0.1875 | 0.2367 | 0.2363 | 0.8752 | 0.0000 |
| sft_qda | 42 | 0.4052 | 0.1805 | 0.2309 | 0.2309 | 0.8752 | 0.0000 |
| sft_qda | 55 | 0.3938 | 0.1751 | 0.2158 | 0.2157 | 0.8711 | 0.0000 |
| sft_qda_qborn | 11 | 0.3944 | 0.1861 | 0.2302 | 0.2301 | 0.8768 | 0.0000 |
| sft_qda_qborn | 22 | 0.4000 | 0.1769 | 0.2222 | 0.2221 | 0.8728 | 0.0000 |
| sft_qda_qborn | 33 | 0.3998 | 0.1842 | 0.2397 | 0.2398 | 0.8754 | 0.0000 |
| sft_qda_qborn | 42 | 0.4026 | 0.1830 | 0.2323 | 0.2322 | 0.8754 | 0.0000 |
| sft_qda_qborn | 55 | 0.3952 | 0.1772 | 0.2208 | 0.2209 | 0.8735 | 0.0000 |
| sft_qda_qkernel | 11 | 0.4052 | 0.1988 | 0.2411 | 0.2410 | 0.8807 | 0.0000 |
| sft_qda_qkernel | 22 | 0.4084 | 0.1975 | 0.2416 | 0.2416 | 0.8823 | 0.0000 |
| sft_qda_qkernel | 33 | 0.4052 | 0.2005 | 0.2356 | 0.2354 | 0.8783 | 0.0000 |
| sft_qda_qkernel | 42 | 0.4100 | 0.1969 | 0.2420 | 0.2420 | 0.8798 | 0.0000 |
| sft_qda_qkernel | 55 | 0.4018 | 0.1947 | 0.2244 | 0.2243 | 0.8771 | 0.0000 |

| Model | Seed | rougeL_beam | rougeL_sampling | diff_beam_minus_sampling |
|---|---|---|---|---|
| SFT | 11 | 0.281114 | 0.247312 | 0.033802 |
| SFT | 22 | 0.269150 | 0.237728 | 0.031422 |
| SFT | 33 | 0.271282 | 0.241761 | 0.029521 |
| SFT | 42 | 0.299029 | 0.238312 | 0.060717 |
| SFT | 55 | 0.278926 | 0.240884 | 0.038043 |
| sft_lora | 11 | 0.220833 | 0.190810 | 0.030023 |
| sft_lora | 22 | 0.223430 | 0.198674 | 0.024756 |
| sft_lora | 33 | 0.242301 | 0.188698 | 0.053603 |
| sft_lora | 42 | 0.236713 | 0.199374 | 0.037339 |
| sft_lora | 55 | 0.236592 | 0.197476 | 0.039117 |
| sft_lora_qda | 11 | 0.241106 | 0.188188 | 0.052918 |
| sft_lora_qda | 22 | 0.260874 | 0.189185 | 0.071688 |
| sft_lora_qda | 33 | 0.246025 | 0.196171 | 0.049855 |
| sft_lora_qda | 42 | 0.235151 | 0.192879 | 0.042271 |
| sft_lora_qda | 55 | 0.231121 | 0.197768 | 0.033353 |
| sft_qda | 11 | 0.273206 | 0.231169 | 0.042037 |
| sft_qda | 22 | 0.283773 | 0.227820 | 0.055953 |
| sft_qda | 33 | 0.283602 | 0.236706 | 0.046896 |
| sft_qda | 42 | 0.266006 | 0.230907 | 0.035099 |
| sft_qda | 55 | 0.270571 | 0.215776 | 0.054795 |
| sft_qda_qborn | 11 | 0.281005 | 0.237839 | 0.043166 |
| sft_qda_qborn | 22 | 0.284021 | 0.227428 | 0.056593 |
| sft_qda_qborn | 33 | 0.273483 | 0.226725 | 0.046758 |
| sft_qda_qborn | 42 | 0.263108 | 0.229182 | 0.033925 |
| sft_qda_qborn | 55 | 0.274005 | 0.223998 | 0.050007 |
| sft_qda_qkernel | 11 | 0.301291 | 0.248191 | 0.053100 |
| sft_qda_qkernel | 22 | 0.301920 | 0.260835 | 0.041085 |
| sft_qda_qkernel | 33 | 0.297455 | 0.237539 | 0.059916 |
| sft_qda_qkernel | 42 | 0.289065 | 0.244677 | 0.044388 |
| sft_qda_qkernel | 55 | 0.293109 | 0.236792 | 0.056317 |

| Model | beam_mean | sampling_mean | beam_minus_sampling_mean | beam_minus_sampling_ci_low | beam_minus_sampling_ci_high | paired_t_p_greater |
|---|---|---|---|---|---|---|
| SFT | 0.2799 | 0.2412 | 0.0387 | 0.0311 | 0.0500 | 0.0012 |
| sft_lora | 0.2320 | 0.1950 | 0.0370 | 0.0287 | 0.0460 | 0.0008 |
| sft_lora_qda | 0.2429 | 0.1928 | 0.0500 | 0.0402 | 0.0621 | 0.0007 |
| sft_qda | 0.2754 | 0.2285 | 0.0470 | 0.0402 | 0.0537 | 0.0001 |
| sft_qda_qborn | 0.2751 | 0.2290 | 0.0461 | 0.0391 | 0.0526 | 0.0001 |
| sft_qda_qkernel | 0.2966 | 0.2456 | 0.0510 | 0.0448 | 0.0571 | 0.0001 |
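
The summary row for SFT can be recomputed from the per-seed beam and sampling ROUGE-L values listed earlier in this appendix. The sketch below is a minimal illustration, assuming (from the column name `paired_t_p_greater`) that the test is a seed-paired, one-sided t-test; the helper name `paired_summary` is hypothetical, and the p-value lookup is left out because it needs a t-distribution CDF that the Python standard library does not provide.

```python
import math
import statistics

# Per-seed ROUGE-L for SFT (seeds 11, 22, 33, 42, 55), copied from the
# per-seed beam/sampling table in this appendix.
beam = [0.281114, 0.269150, 0.271282, 0.299029, 0.278926]
sampling = [0.247312, 0.237728, 0.241761, 0.238312, 0.240884]


def paired_summary(a, b):
    """Return (mean difference, paired t statistic) for seed-matched scores."""
    diffs = [x - y for x, y in zip(a, b)]
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference uses the sample standard deviation.
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff, mean_diff / std_err


mean_diff, t_stat = paired_summary(beam, sampling)
print(f"mean diff = {mean_diff:.4f}, t = {t_stat:.2f}, df = {len(beam) - 1}")
```

The mean difference rounds to 0.0387, which agrees with the `beam_minus_sampling_mean` entry for SFT. With only five seeds the test has four degrees of freedom, so the reported p-values rest on a small sample.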

| Model | ROUGE-1 | ROUGE-2 | ROUGE-L | ROUGE-Lsum | BERTScore-F1 | rep3 |
|---|---|---|---|---|---|---|
| SFT | 0.4443 | 0.2420 | 0.2799 | 0.2801 | 0.8846 | 0.0000 |
| sft_lora | 0.4079 | 0.1950 | 0.2320 | 0.2325 | 0.8729 | 0.0000 |
| sft_lora_qda | 0.4155 | 0.2053 | 0.2429 | 0.2431 | 0.8740 | 0.0001 |
| sft_qda | 0.4426 | 0.2386 | 0.2754 | 0.2758 | 0.8841 | 0.0000 |
| sft_qda_qborn | 0.4409 | 0.2378 | 0.2751 | 0.2753 | 0.8839 | 0.0000 |
| sft_qda_qkernel | 0.4633 | 0.2652 | 0.2966 | 0.2960 | 0.8890 | 0.0000 |

| model_A | model_B | mean_diff | ci_low | ci_high | p_dir | q_dir | cliffs_delta | Winner | Significant |
|---|---|---|---|---|---|---|---|---|---|
| sft_lora | sft_qda_qkernel | −0.064594 | −0.075004 | −0.054306 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora_qda | sft_qda_qkernel | −0.053713 | −0.059652 | −0.046950 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora | sft_qda | −0.043458 | −0.053476 | −0.033569 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora | sft_qda_qborn | −0.043151 | −0.055788 | −0.030513 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora_qda | sft_qda | −0.032576 | −0.037357 | −0.027426 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora_qda | sft_qda_qborn | −0.032269 | −0.039202 | −0.025834 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_qda_qborn | sft_qda_qkernel | −0.021444 | −0.024190 | −0.018858 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_qda | sft_qda_qkernel | −0.021136 | −0.025092 | −0.016699 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| SFT | sft_qda_qkernel | −0.016668 | −0.027732 | −0.002093 | 0.010235 | 0.013957 | −0.6 (large) | B > A | True |
| sft_lora | sft_lora_qda | −0.010881 | −0.026208 | 0.002069 | 0.061080 | 0.076350 | −0.2 (small) | B > A | False |
| sft_qda | sft_qda_qborn | 0.000307 | −0.004779 | 0.005965 | 0.461473 | 0.461473 | −0.2 (small) | A > B | False |
| SFT | sft_qda | 0.004469 | −0.009567 | 0.019020 | 0.339928 | 0.364209 | 0.2 (small) | A > B | False |
| SFT | sft_qda_qborn | 0.004776 | −0.007916 | 0.021597 | 0.280444 | 0.323589 | 0.2 (small) | A > B | False |
| SFT | sft_lora_qda | 0.037045 | 0.019578 | 0.052939 | 0.000005 | 0.000007 | 1.0 (large) | A > B | True |
| SFT | sft_lora | 0.047927 | 0.037670 | 0.058183 | 0.000005 | 0.000007 | 1.0 (large) | A > B | True |
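
The `mean_diff` and `cliffs_delta` columns can be checked against the per-seed ROUGE-L values in the first table of this appendix, whose `rougeL` column matches `rougeL_beam`. The sketch below is an inference from the reported numbers, not a statement of the procedure actually used: the `cliffs_delta` entries appear consistent with a seed-paired dominance score (seeds where A beats B minus seeds where B beats A, divided by the number of seeds). The standard all-pairs Cliff's delta is a different computation and may give different values. The function names are hypothetical.

```python
# Per-seed beam ROUGE-L (seeds 11, 22, 33, 42, 55), copied from the first
# appendix table.
rouge_l = {
    "SFT": [0.28110, 0.26915, 0.27128, 0.29903, 0.27893],
    "sft_lora": [0.22083, 0.22343, 0.24230, 0.23671, 0.23659],
    "sft_lora_qda": [0.24111, 0.26087, 0.24603, 0.23515, 0.23112],
    "sft_qda": [0.27321, 0.28377, 0.28360, 0.26601, 0.27057],
    "sft_qda_qkernel": [0.30129, 0.30192, 0.29745, 0.28907, 0.29311],
}


def mean_diff(a, b):
    """Mean of the seed-matched differences a - b."""
    return sum(x - y for x, y in zip(a, b)) / len(a)


def paired_dominance(a, b):
    """(# seeds with a > b minus # seeds with a < b) / number of seeds."""
    wins = sum(x > y for x, y in zip(a, b))
    losses = sum(x < y for x, y in zip(a, b))
    return (wins - losses) / len(a)


pairs = [("SFT", "sft_qda_qkernel"), ("sft_lora", "sft_lora_qda"), ("SFT", "sft_qda")]
for model_a, model_b in pairs:
    a, b = rouge_l[model_a], rouge_l[model_b]
    print(model_a, model_b, round(mean_diff(a, b), 4), paired_dominance(a, b))
```

For these three pairs the dominance scores come out as −0.6, −0.2 and 0.2, matching the table. Because each score is built from only five seed-level comparisons, it can take just a handful of distinct values, which is worth keeping in mind when reading the "large" and "small" labels.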

| model_A | model_B | mean_diff | ci_low | ci_high | p_dir | q_dir | cliffs_delta | Winner | Significant |
|---|---|---|---|---|---|---|---|---|---|
| sft_lora | sft_qda_qkernel | −0.0160 | −0.0185 | −0.0135 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora_qda | sft_qda_qkernel | −0.0149 | −0.0163 | −0.0123 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora | sft_qda | −0.0111 | −0.0141 | −0.0084 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora | sft_qda_qborn | −0.0109 | −0.0137 | −0.0081 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora_qda | sft_qda | −0.0100 | −0.0112 | −0.0083 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora_qda | sft_qda_qborn | −0.0098 | −0.0125 | −0.0073 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_qda_qborn | sft_qda_qkernel | −0.0051 | −0.0066 | −0.0033 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_qda | sft_qda_qkernel | −0.0049 | −0.0057 | −0.0038 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| SFT | sft_qda_qkernel | −0.0043 | −0.0067 | −0.0019 | 0.000005 | 0.000007 | −1.0 (large) | B > A | True |
| sft_lora | sft_lora_qda | −0.0011 | −0.0055 | 0.0025 | 0.330067 | 0.370464 | 0.2 (small) | B > A | False |
| sft_qda | sft_qda_qborn | 0.0001 | −0.0013 | 0.0014 | 0.374533 | 0.374533 | 0.2 (small) | A > B | False |
| SFT | sft_qda | 0.0005 | −0.0021 | 0.0031 | 0.345767 | 0.370464 | 0.2 (small) | A > B | False |
| SFT | sft_qda_qborn | 0.0007 | −0.0012 | 0.0029 | 0.279267 | 0.349083 | 0.2 (small) | A > B | False |
| SFT | sft_lora_qda | 0.0105 | 0.0065 | 0.0142 | 0.000005 | 0.000007 | 1.0 (large) | A > B | True |
| SFT | sft_lora | 0.0116 | 0.0091 | 0.0133 | 0.000005 | 0.000007 | 1.0 (large) | A > B | True |
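
The `q_dir` column in the table above appears consistent with a Benjamini-Hochberg false-discovery-rate adjustment applied to the 15 `p_dir` values. This is inferred from the numbers rather than stated in the surviving text, so the sketch below should be read as one plausible reconstruction; the function name `benjamini_hochberg` is hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return BH-adjusted q-values in the same order as the input p-values."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    q_values = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so that q-values stay monotone:
    # each q is the minimum of p * m / rank over this rank and all higher ranks.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        q_values[idx] = running_min
    return q_values


# p_dir column of the table above, in row order (15 pairwise comparisons).
p_dir = [0.000005] * 9 + [0.330067, 0.374533, 0.345767, 0.279267, 0.000005, 0.000005]
q_dir = benjamini_hochberg(p_dir)
for p, q in zip(p_dir, q_dir):
    print(f"p = {p:.6f} -> q = {q:.6f}")
```

The running minimum explains why two rows with different p-values (0.330067 and 0.345767) share nearly the same q-value in the table: the larger p-value at the next rank yields the smaller adjusted value, and the step-up rule carries it down. Small differences in the last digit are expected because the reported p-values are already rounded.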


- Bethard, S. We need to talk about random seeds. arXiv 2022, arXiv:2210.13393. [Google Scholar] [CrossRef]

- Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101. [Google Scholar] [CrossRef]

- Keskar, N.S.; Mudigere, D.; Nocedal, J.; Smelyanskiy, M.; Tang, P.T.P. On large-batch training for deep learning: Generalization gap and sharp minima. arXiv 2016, arXiv:1609.04836. [Google Scholar] [CrossRef]

- Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the 30th International Conference on Machine Learning (ICML 2013), Atlanta, GA, USA, 16–21 June 2013; pp. 1310–1318. [Google Scholar] [CrossRef]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. JMLR 2020, 21, 1–67. [Google Scholar] [CrossRef]

- Narang, S.; Diamos, G.; Elsen, E.; Micikevicius, P.; Alben, J.; Garcia, D.; Ginsburg, B.; Houston, M.; Kuchaiev, O.; Venkatesh, G.; et al. Mixed precision training. arXiv 2017, arXiv:1710.03740. [Google Scholar] [CrossRef]

- Koehn, P.; Knowles, R. Six Challenges for Neural Machine Translation. In Proceedings of the First Workshop on Neural Machine Translation, Vancouver, Canada, 30 July–4 August 2017; pp. 28–39. [Google Scholar] [CrossRef]

- Tu, Z.; Liu, Y.; Lu, Z.; Liu, X.; Li, H. Modeling coverage for neural machine translation. In Proceedings of the Association for Computational Linguistics (ACL), Berlin, Germany, 7–12 August 2016. [Google Scholar] [CrossRef]

- Wu, Y.; Schuster, M.; Chen, Z.; Le, Q.V.; Norouzi, M.; Macherey, W.; Krikun, M.; Cao, Y.; Gao, Q.; Macherey, K.; et al. Google’s NMT system: Bridging the gap between human and machine translation. arXiv 2016, arXiv:1609.08144. [Google Scholar] [CrossRef]

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. In Proceedings of the 8th International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020. [Google Scholar] [CrossRef]

- Cumming, G. The New Statistics: Why and How. Psychol. Sci. 2014, 25, 7–29. [Google Scholar] [CrossRef]

- Wasserstein, R.L.; Lazar, N.A. The ASA’s Statement on p-Values: Context, Process, and Purpose. Am. Stat. 2016, 70, 129–133. [Google Scholar] [CrossRef]

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Volume 32. [Google Scholar] [CrossRef]

- Bergholm, V.; Izaac, J.; Schuld, M.; Gogolin, C.; Ahmed, S.; Ajith, V.; Alam, M.S.; Alonso-Linaje, G.; AkashNarayanan, B.; Asadi, A.; et al. PennyLane: Automatic differentiation of hybrid quantum-classical computations. arXiv 2018, arXiv:1811.04968. [Google Scholar] [CrossRef]

- Kottmann, J.S.; Alperin-Lea, S.; Tamayo-Mendoza, T.; Cervera-Lierta, A.; Lavigne, C.; Yen, T.-C.; Verteletskyi, V.; Schleich, P.; Anand, A.; Degroote, M.; et al. Tequila: A platform for rapid development of quantum algorithms. Quantum Sci. Technol. 2021, 6, 024009. [Google Scholar] [CrossRef]

- Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362. [Google Scholar] [CrossRef] [PubMed]

- Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit; O’Reilly: Springfield, MO, USA, 2009. [Google Scholar]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318. [Google Scholar]

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019, arXiv:1908.10084. [Google Scholar] [CrossRef]

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nat. Methods 2020, 17, 261–272. [Google Scholar] [CrossRef] [PubMed]

| Model (A) | Comparator (B) | Δ = A − B | 95% CI | Cliff’s δ | q_dir (FDR) |
|---|---|---|---|---|---|
| sft_qda_qkernel | sft_lora | 0.0646 | [0.0543, 0.0750] | 1.0 (large) | 0.000007 |
| sft_qda_qkernel | sft_lora_qda | 0.0537 | [0.0470, 0.0597] | 1.0 (large) | 0.000007 |
| sft_qda_qkernel | sft_qda_qborn | 0.0214 | [0.0189, 0.0242] | 1.0 (large) | 0.000007 |
| sft_qda_qkernel | sft_qda | 0.0211 | [0.0167, 0.0251] | 1.0 (large) | 0.000007 |
| sft_qda_qkernel | SFT | 0.0167 | [0.0021, 0.0277] | 0.6 (large) | 0.013957 |
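To make the effect-size and interval columns easier to read, the following minimal sketch shows how a Cliff’s δ and a confidence interval for a mean difference can be computed from per-seed scores. It is an illustration under stated assumptions, not the authors’ analysis code: the scores are hypothetical, the function names `cliffs_delta` and `bootstrap_ci` are introduced here, and the percentile bootstrap is assumed because the table does not state which interval method was used.

```python
import random
from statistics import mean


def cliffs_delta(a, b):
    """Cliff's delta: P(a > b) - P(a < b), estimated over all cross pairs."""
    greater = sum(x > y for x in a for y in b)
    less = sum(x < y for x in a for y in b)
    return (greater - less) / (len(a) * len(b))


def bootstrap_ci(a, b, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for mean(a) - mean(b).

    Each group is resampled independently with replacement
    (an assumption; the source does not specify the procedure).
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        resampled_a = [rng.choice(a) for _ in a]
        resampled_b = [rng.choice(b) for _ in b]
        diffs.append(mean(resampled_a) - mean(resampled_b))
    diffs.sort()
    lower = diffs[int((alpha / 2) * n_boot)]
    upper = diffs[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical per-seed scores for two systems (NOT the paper's data).
scores_a = [0.31, 0.30, 0.32, 0.31, 0.33]
scores_b = [0.25, 0.24, 0.26, 0.25, 0.27]

delta = cliffs_delta(scores_a, scores_b)
ci_low, ci_high = bootstrap_ci(scores_a, scores_b)
print(f"Cliff's delta = {delta:.1f}")
print(f"mean difference = {mean(scores_a) - mean(scores_b):.4f}")
print(f"95% CI = [{ci_low:.4f}, {ci_high:.4f}]")
```

A δ of 1.0 means that every run of system A scored above every run of system B, which is why several rows above share the same value despite different mean differences Δ.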

| Paper ID | Gold Summary | Best Model: sft + qda + qkernel (Beam = 4, no_repeat_ngram_size = 3, length_penalty = 0.9, Seed = 22) | Evaluation |
|---|---|---|---|
| 635 | bu çalışma ilköğretim matematik öğretmenliği programı öğrencilerinin koordinat düzleminde birim çemberi kullanarak tanjant ve kotanjant fonksiyonları nın grafiklerinin çiziminde sayı doğrusu kullanımlarını araştıran betimsel bir çalış madır. çalışmada nicel veri toplama yöntemlerinin yanında gözlem ve görüşme gibi nitel veri toplama yöntemleri de kullanılabilen tarama modeli kullanılmıştır. çalışma türkiye nin doğu anadolu bölgesinin nüfusça orta ölçekli bir ilinde yapılmış tır. çalışma grubunu ilköğretim matematik öğretmenliği programına kayıtlı 56 birinci sınıf öğrencisi oluşturmaktadır. çalışmaya katılan öğrencilere on bir adet beşli likert tipi ve altı adet açık uçlu soru sorulmuş ve veriler analiz edilerek elde edilen bulgular sunulmuştur. (This study is a descriptive investigation exploring the use of the number line by prospective primary school mathematics teachers in drawing the graphs of tangent and cotangent functions using the unit circle on a coordinate plane. The study employed a survey research design, utilizing both quantitative data collection methods and qualitative methods such as observation and interviews. The research was conducted in a medium-sized province in the Eastern Anatolia region of Turkey. The study group consisted of 56 first-year students enrolled in the primary school mathematics teaching program. The students participating in the study were administered a questionnaire comprising eleven 5-point Likert-type scale questions and six open-ended questions. The findings obtained from the analysis of the collected data are presented.) | bu çalışmanın amacı ilköğretim matematik öğretmenliği programında yer alan tanjant ve kotanjant fonksiyonlarının grafik çizimi ve doğru sayı doğrusu uygulamaları ile ilgili öğrencilerin algılarını ortaya çıkarmaktır. araştırmada nitel veri toplama yöntemlerinden gözlem ve görüşme yöntemleri kullanılmıştır. araştırmanın çalışma grubunu 2010–2011 eğitim öğretim yılı bahar yarıyılında doğu anadolu bölgesinin orta ölçekli bir ilinde yer alan bir eğitim fakültesinde öğrenim görmekte olan altı birinci sınıf öğrencisi oluşturmaktadır. verilerin toplanmasında bilgi algılama ölçeği ve bilgi testi kullanılmış olup verilerin analizinde betimsel analiz yöntemi kullanılmıştır. (The primary objective of this study is to reveal the students’ perceptions regarding the graphing of tangent and cotangent functions and the correct number line applications, which are part of the primary school mathematics teaching program curriculum. Observation and interview methods, which are qualitative data collection techniques, were employed in the research. The study group consisted of 6 first-year students who were enrolled in a Faculty of Education in a medium-sized province in the Eastern Anatolia region during the Spring semester of the 2010–2011 academic year. A Knowledge Perception Scale and a knowledge test were used for data collection, and the descriptive analysis method was utilized for the analysis of the data.) | The model output correctly captures the study type (“descriptive/survey”), the context (a faculty of education in Eastern Anatolia), and the data-collection instruments, establishing strong topical alignment. However, it diverges from the gold summary by reporting the sample size as 56 instead of 6 and introduces numerical specifics (e.g., “11 Likert + 6 open-ended items”) that create factual inconsistencies. Overall: high coverage/fluency, but a numeric error and unnecessary detail. |
| 485 | bu araştırmada sınıf öğretmeni adaylarına uygulanan stem proje tabanlı öğrenme etkinlikleri sonunda öğretmen adaylarının ürettikleri matematik projeleri incelenmiştir. gerçekleştirilen çalışmada sınıf eğitimi birinci sınıf programında yer alan temel matematik ii dersi kapsamında katı cisimlerin alan ve hacimleri trigonometri ve koordinat sistemi konularını içeren 4 farklı stem etkinliği yürütülmüştür. stem etkinlikleri bina modelleme teodolit tasarımı simülasyon tasarımı ve oyun tasarımı şeklinde olup bu etkinliklerin her birinin sonunda sınıf öğretmeni adaylarına bir proje olmak üzere toplam 4 proje ödevi verilmiştir. araştırmaya sınıf eğitimi birinci sınıfta öğrenim gören 23 öğretmen adayı katılmıştır (This research examined the mathematics projects produced by prospective primary school teachers following the implementation of STEM project-based learning activities. The study involved conducting four distinct STEM activities within the scope of the Basic Mathematics II course, a component of the first-year primary education curriculum. These activities covered the topics of area and volume of solids, trigonometry, and the coordinate system. The STEM activities were designed as building modeling, theodolite design, simulation design, and game design. Following each of these activities, prospective primary school teachers were assigned a total of four project assignments, one project after each activity. Twenty-three prospective teachers enrolled in the first year of the primary education program participated in the research.) | bu çalışmada sınıf eğitimi birinci sınıfta yer alan temel matematik ii dersinde dört stem etkinliği yapılmış ve etkinlikler sonunda öğretmen adaylarına proje ödevleri verilmiştir. bu etkinliklerin incelenmesinde öğretmen adaylarının stem projesi hazırlama beceri düzeyleri betimsel istatistiklerle değerlendirilmiştir. araştırma 2016–2017 eğitim öğretim yılı bahar döneminde orta karadeniz bölgesindeki bir üniversitenin eğitim fakültesinde gerçekleştirilmiştir. araştırmanın veri toplama aracı olarak çalışma grubunu oluşturan 23 öğretmen adayı oluşturmuştur. (In this study, four STEM activities were conducted within the scope of the Basic Mathematics II course, which is taught in the first year of the primary education program, and project assignments were given to prospective teachers upon the completion of these activities. To evaluate these activities, the prospective teachers’ skill levels in preparing STEM projects were assessed using descriptive statistics. The research was carried out at a Faculty of Education at a university located in the Central Black Sea region during the Spring semester of the 2016–2017 academic year. The study group, consisting of 23 prospective teachers, also constituted the data collection instrument for the research.) | The model correctly conveys the four STEM activities, the assignment of project tasks, and the sample of 23 preservice teachers; by explicitly naming the thematic topics (solid geometry/volumes, trigonometry, coordinate system), it enhances content coverage and maintains high fidelity to the gold summary. Overall: good fluency and rich content, with no substantive errors beyond minor verbosity. |
| 142 | bu araştırma öğrencilerin matematik korkusunun nedenlerini belirlemeye yönelik bir ölçek geliştirmek amacıyla yapılmıştır. çalışmanın örneklemini 2018–2019 öğretim yılında uşak il genelinde ilkokul ortaokul ve lise kademesinde öğrenim gören 2580 öğrenci oluşturmuştur. ölçek geliştirme aşamaları kapsamında alan yazın taranmıştır. ölçekle ilgili madde havuzunun oluşturulmasından sonra uzman görüşüne başvurulmuştur. pilot çalışma yapı geçerliliği ve güvenirliğinin tespiti çalışmaları yapılmıştır. açımlayıcı faktör analizi sonucunda her biri beş sorudan oluşan öğretmenden kaynaklı matematik korkusu çevreden kaynaklı matematik korkusu matematiğin yapısından kaynaklı matematik korkusu öğrencinin kendi kişisel özelliklerinden kaynaklı matematik korkusu boyutları oluşmuştur (This research was conducted with the aim of developing a scale to determine the causes of students’ mathematics anxiety. The study’s sample comprised 2580 students attending primary, middle, and high schools throughout the province of Uşak during the 2018–2019 academic year. Within the scope of the scale development process, an extensive literature review was performed. Following the creation of the initial item pool for the scale, expert opinion was sought. Subsequently, pilot studies and examinations for construct validity and reliability were conducted. The results of the Exploratory Factor Analysis (EFA) yielded four dimensions, each consisting of five items: Mathematics Anxiety Caused by the Teacher, Mathematics Anxiety Caused by the Environment, Mathematics Anxiety Caused by the Nature of Mathematics, and Mathematics Anxiety Caused by Student’s Own Personal Characteristics.) | bu çalışmanın amacı ortaokul öğrencilerinin matematik korkusunun nedenlerini belirlemek için bir ölçeğin geliştirilmesidir. araştırmada betimsel tarama modeli kullanılmıştır. araştırmanın örneklemini 2018–2019 öğretim yılında uşak il genelinde ilkokul ve liselerde öğrenim gören 300 öğrenci oluşturmuştur. araştırmaya katılan öğrencilerden matematik korkusu nedenlerini ortaya koymak amacıyla bir ölçek geliştirilmeye çalışılmıştır. madde havuzu oluşturmak için ilgili alanyazın taranması ve uzman görüşleri alınmıştır. çalışmada veri toplama aracı olarak matematikten kaynaklı korku ölçeği ve matematik dersine yönelik akıl yürütme araçlarından biri olan matematiğe yönelik tutum ölçekleri kullanılmıştır (The purpose of this study is the development of a scale to determine the causes of mathematics anxiety of middle school students. A descriptive survey model was used in the research. The sample of the research was constituted by 300 students who were studying in primary schools and high schools across the province of Uşak in the 2018–2019 academic year. An effort was made to develop a scale from the students who participated in the research in order to reveal the causes of mathematics anxiety. The related literature review and expert opinions were taken for creating the item pool. In the study, the Fear Caused by Mathematics Scale and attitude scales towards mathematics, which is one of the reasoning tools related to the mathematics course, were used as data collection instruments.) | The model correctly states the objective of “developing a mathematics anxiety scale” and concisely summarizes the methodological steps (literature review, expert judgment, pilot study, EFA), even recovering the four-factor structure. However, it diverges from the gold summary by inflating the sample size to 2580 (gold: 300 students) and broadening the grade levels. Overall: high content coverage but weakened fidelity due to numeric and population-scope errors. In general, while coverage and fluency are strong, the numerical specifics call for cautious normalization. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Altay, G.; Küçüksille, E.U. Quantum-Inspired Cross-Attention Alignment for Turkish Scientific Abstractive Summarization. Electronics 2025, 14, 4474. https://doi.org/10.3390/electronics14224474