4.1. Experimental Set-Up

We implement our framework in PyTorch 1.11.0 and run it on a single NVIDIA GeForce RTX 4090 GPU. The batch size is 1, and all test images are resized to 256 × 256. For the PEC module, we set the exposure control coefficient to 0.4, the number of shrinkage built-in blocks to 3, and the iteration steps to [3, 3, 3]. For the diffusion model, we use 100 sampling steps, set travel_length to 1, and use linear betas ranging from 0.0001 to 0.02. We adopt the Adam optimizer [37] with a learning rate of 1 × 10⁻². Additionally, we set the brightness level to 0.6.
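As a rough illustration of the diffusion settings above, the following sketch builds a linear beta schedule between 0.0001 and 0.02 and the cumulative products used by DDPM-style samplers. The schedule length `num_train_steps=1000` and the evenly spaced 100-step sub-sequence are assumptions (the text states only the beta range and the number of sampling steps), and all function names are hypothetical.

```python
def linear_betas(beta_start=1e-4, beta_end=0.02, num_train_steps=1000):
    """Linearly spaced noise variances; num_train_steps=1000 is an assumed value."""
    step = (beta_end - beta_start) / (num_train_steps - 1)
    return [beta_start + i * step for i in range(num_train_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s), as in DDPM."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def sampling_timesteps(num_train_steps=1000, num_sampling_steps=100):
    """Evenly spaced timesteps from noisiest to cleanest (an assumed respacing)."""
    stride = num_train_steps // num_sampling_steps
    return list(range(num_train_steps - 1, -1, -stride))


betas = linear_betas()
alpha_bars = cumulative_alphas(betas)
timesteps = sampling_timesteps()
```

The sampler would then visit only the timesteps in `timesteps`, reading the matching entries of `alpha_bars`.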

We evaluate our method on the widely used LOL-v1 [14], LOL-v2 [38], and LSRW [39] datasets. Image quality is assessed with the Peak Signal-to-Noise Ratio (PSNR), the Structural Similarity Index (SSIM) [17], and the Learned Perceptual Image Patch Similarity (LPIPS) [40]. In addition, we report two no-reference perceptual metrics, the Natural Image Quality Evaluator (NIQE) [41] and the Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) [42], to measure visual quality.

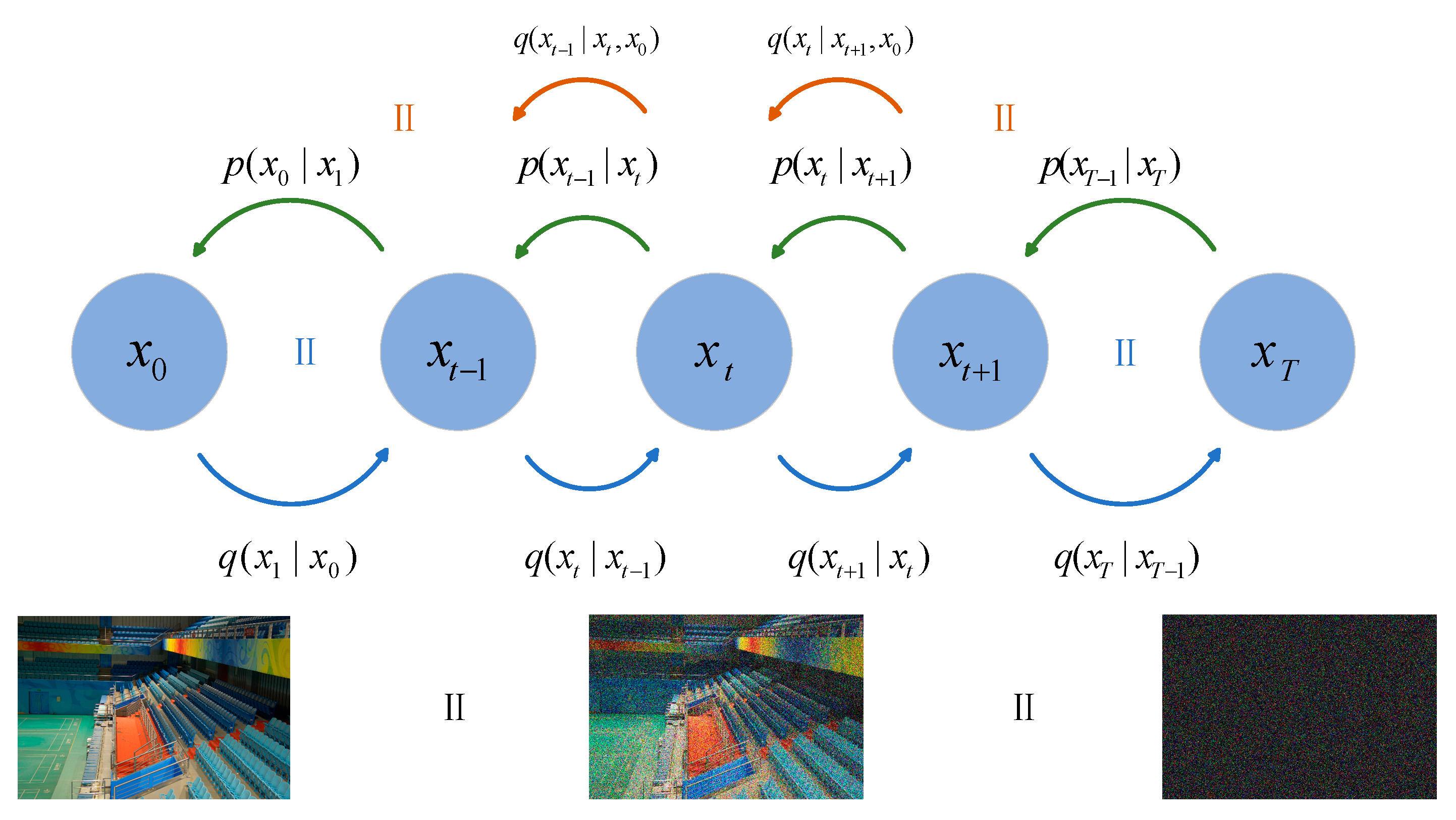

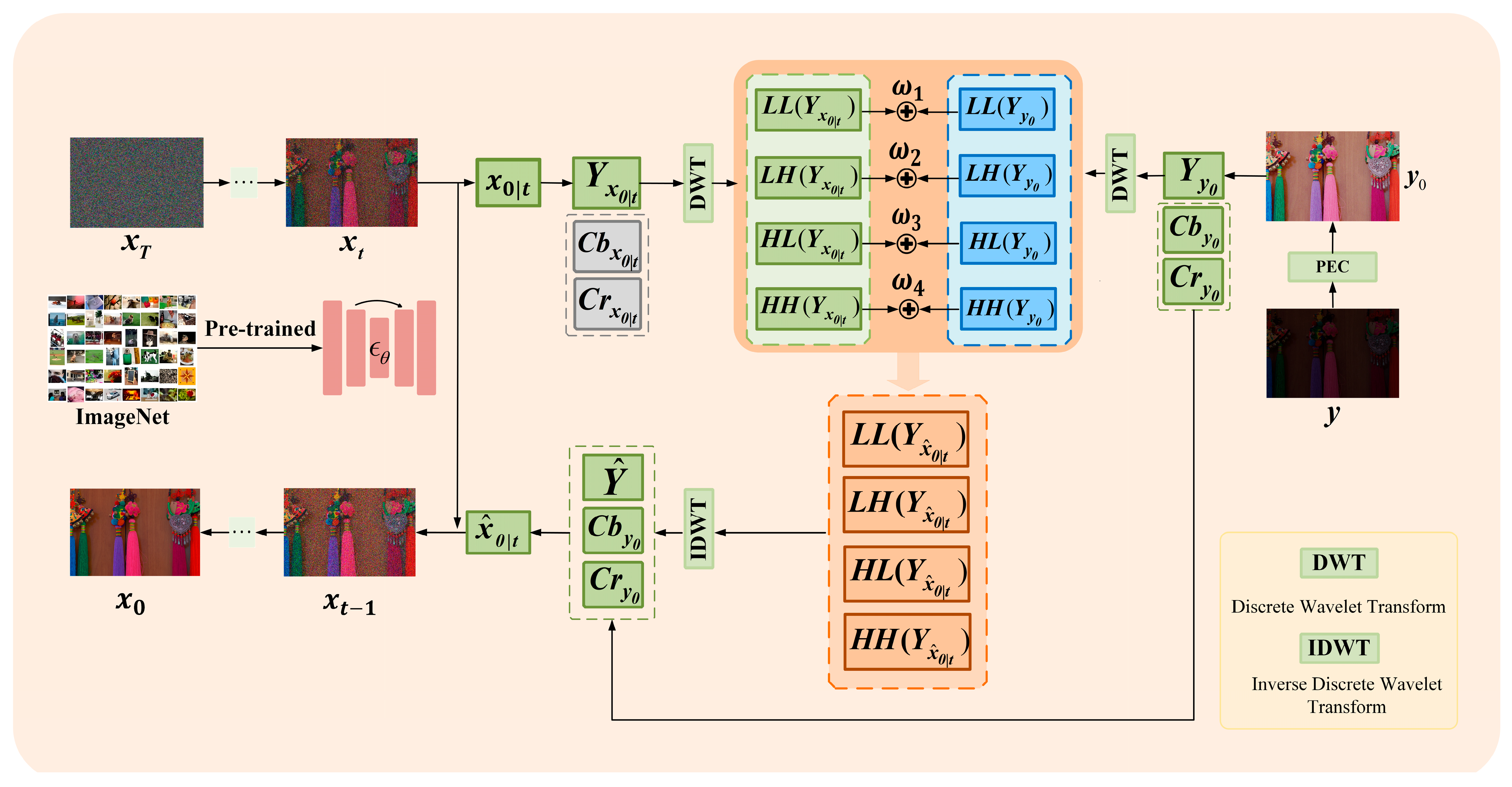

4.2. Qualitative Comparison

To thoroughly evaluate our model, we compare it with several unsupervised low-light image enhancement methods: Zero-DCE [19], EnlightenGAN [18], RUAS [20], SCI [6], the low-light enhancement branch of FourierDiff [32] (denoted as FourierDiff_LLIE), and JWFPGD [34]. All methods are evaluated on the paired LOL-v1, LOL-v2, and LSRW datasets using their officially released implementations. Among these methods, Zero-DCE [19], EnlightenGAN [18], RUAS [20], and SCI [6] are classic representative methods that many subsequent studies adopt as baselines, whereas FourierDiff_LLIE [32] and JWFPGD [34] are recent methods proposed in 2024–2025. The LSRW dataset contains images captured by Nikon cameras and Huawei smartphones: the LSRW_Nikon subset consists of 3150 pairs of training images and 20 pairs of test images, while the LSRW_Huawei subset comprises 2450 pairs of images, 30 of which are allocated for testing. The results are shown in Figure 3, Figure 4, Figure 5 and Figure 6.

As illustrated in Figure 3, the images processed by Zero-DCE [19] and SCI [6] remain dark overall and still contain noise that impairs image quality. EnlightenGAN [18] restores brightness better than these two methods, but significant noise remains in its output, the colors deviate from the ground truth, and the blue lines within the boxed regions are partially blurred. RUAS [20] and FourierDiff_LLIE [32] achieve relatively good overall brightness restoration. However, noise of varying degrees persists, and regions that are already bright in the input are over-enhanced, which obscures some details. JWFPGD [34] restores a normal illumination level but falls short in detail restoration: the object edges in the region of interest show obvious block artifacts. Our approach restores natural illumination while preserving high perceptual quality and spatial smoothness and, unlike the competing methods, introduces virtually no visible noise or artifacts.

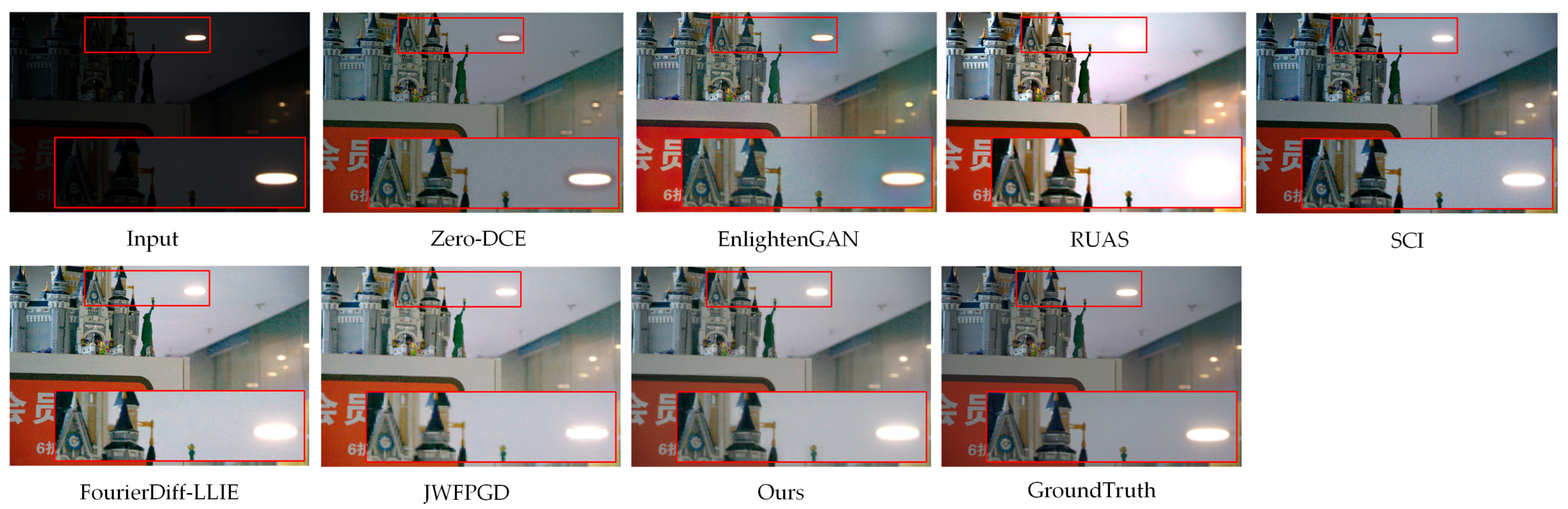

Figure 4 shows that images restored by Zero-DCE [19] and EnlightenGAN [18] exhibit unexpected black artifacts near the light sources, which degrade the visual result. Images processed by RUAS [20] and FourierDiff_LLIE [32] both contain noise of varying degrees. In addition, the light sources are nearly invisible in the RUAS result, and in the FourierDiff_LLIE [32] result the edges of the light source are blurred, which reduces its contrast with the surroundings. The image enhanced by SCI [6] also shows significant noise. The image processed by JWFPGD [34] still exhibits obvious block artifacts, which seriously degrade its visual quality. In contrast, our method not only restores natural illumination and accurate color balance but also preserves the integrity and radiance of the original light sources in the scene.

Figure 5 presents qualitative comparisons between our method and the competing approaches on the LSRW_Huawei dataset. The regions of interest are highlighted with red bounding boxes, and magnified views of these patches are displayed in the bottom-right corners of the corresponding images. Although Zero-DCE [19], EnlightenGAN [18], and RUAS [20] restore normal illumination, their overall colors still deviate significantly from the ground truth. RUAS [20] and SCI [6] also suffer from detail loss: the cloud edges present in the input image are barely visible after processing. FourierDiff_LLIE [32] and JWFPGD [34] fail to fully restore normal illumination, and the overall brightness of their outputs differs significantly from that of the ground truth. Our method restores normal illumination without noticeably altering image color or losing detail.

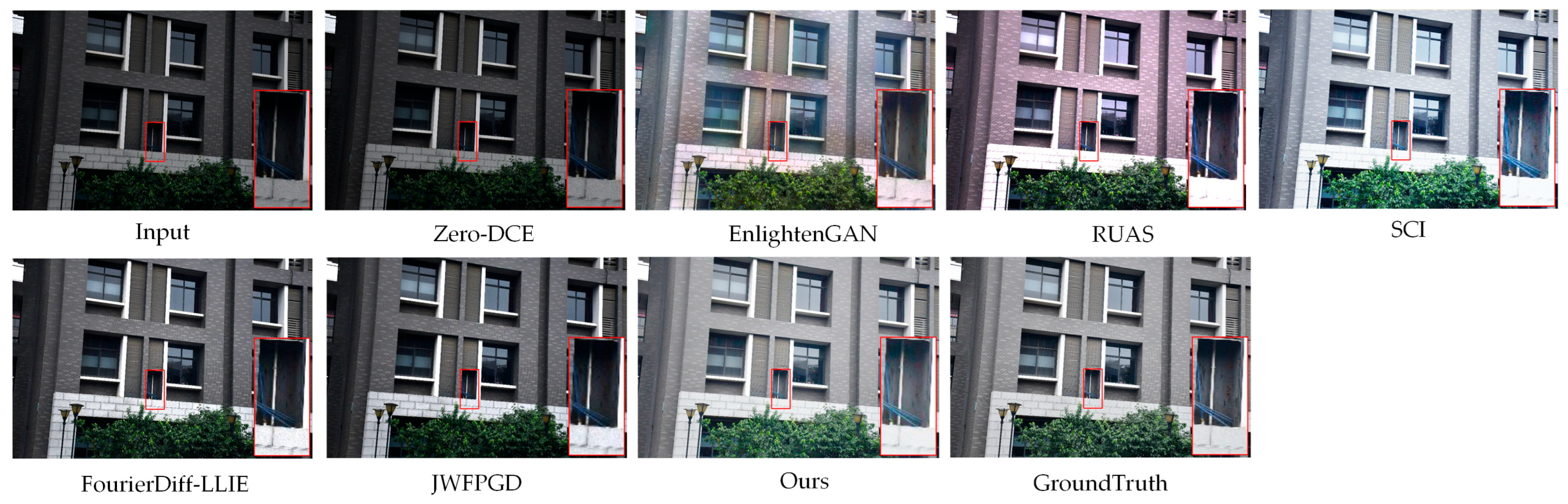

As shown in Figure 6, Zero-DCE [19], FourierDiff_LLIE [32], and JWFPGD [34] fail to fully restore normal illumination, and the overall brightness of their outputs still differs significantly from the ground truth, although all three preserve the details of the original image well. The images processed by EnlightenGAN [18] and RUAS [20] show a significant deviation in overall color from the ground truth. The RUAS [20] result also suffers from severe detail loss: the brick gaps in the region of interest are barely visible. The SCI [6] result is over-exposed compared with the ground truth and also loses substantial detail. By contrast, our method restores normal illumination while preserving image details well.

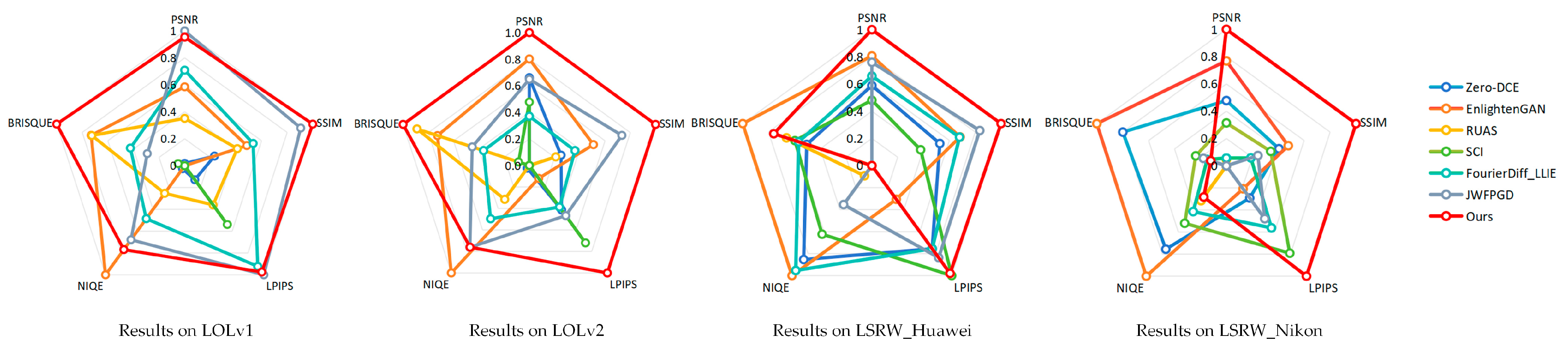

4.3. Quantitative Comparison

We quantitatively compare our method with several unsupervised low-light image enhancement methods using PSNR, SSIM [17], LPIPS [40], NIQE [41], and BRISQUE [42]. The results are shown in Table 1, Table 2, Table 3 and Table 4. To illustrate the performance differences between our method and the compared methods, we plot radar charts based on the data in Table 1, Table 2, Table 3 and Table 4, as shown in Figure 7. Because the metrics have different numerical scales and opposite directions of improvement (higher is better for PSNR and SSIM, whereas lower is better for LPIPS, NIQE, and BRISQUE), we normalize the data in the tables so that, in the radar charts, a value closer to 1 indicates better performance on that metric.
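One way to realize such a normalization is a min-max rescaling per metric, with the direction flipped for metrics where lower is better. This is only a sketch under that assumption: the exact normalization used for the radar charts is not specified here, and the function name and the numbers below are placeholders rather than values from the tables.

```python
def normalize_for_radar(scores, higher_is_better):
    """Min-max normalize one metric across methods so that 1 is best and 0 is worst."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:  # every method ties on this metric
        return {name: 1.0 for name in scores}
    normalized = {}
    for name, value in scores.items():
        scaled = (value - lo) / (hi - lo)
        # Flip lower-is-better metrics (e.g. LPIPS, NIQE, BRISQUE) so 1 is still best.
        normalized[name] = scaled if higher_is_better else 1.0 - scaled
    return normalized


# Placeholder numbers for illustration only; they are not results from the tables.
psnr_scores = {"method_a": 15.0, "method_b": 20.0, "method_c": 17.5}
lpips_scores = {"method_a": 0.40, "method_b": 0.20, "method_c": 0.30}

psnr_axis = normalize_for_radar(psnr_scores, higher_is_better=True)
lpips_axis = normalize_for_radar(lpips_scores, higher_is_better=False)
```

With this convention, the method with the largest polygon area on the radar chart is the one that is closest to the best score on the most metrics.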

PSNR [17] is a classic metric for measuring the pixel-level error between enhanced images and reference images. On the LOL-v2, LSRW_Huawei, and LSRW_Nikon datasets, the PSNR of our method exceeds that of all compared methods, and on the LOL-v1 dataset it is second-best. This indicates that our method controls pixel-level error well: on three of the four datasets, the enhanced images have the smallest pixel difference from the reference images and the highest pixel-level restoration accuracy.
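For reference, PSNR follows directly from the mean squared error between the two images. The sketch below uses the standard definition, PSNR = 10 log10(MAX² / MSE), on flattened pixel lists. The function name and the assumption that pixels are scaled to [0, 1] are illustrative choices, not details of the evaluation code used in this paper.

```python
import math


def psnr(enhanced, reference, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB for two equally sized, flattened images."""
    if not enhanced or len(enhanced) != len(reference):
        raise ValueError("images must be non-empty and have the same size")
    mse = sum((e - r) ** 2 for e, r in zip(enhanced, reference)) / len(enhanced)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A uniform error of 0.1 on [0, 1] images gives an MSE of 0.01 and therefore a PSNR of 20 dB.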

SSIM [17] measures the similarity between enhanced images and reference images from the perspective of human visual perception, along three dimensions: luminance, contrast, and structure. Our method outperforms the compared methods on all datasets, highlighting its advantages in structure preservation and visual consistency. This demonstrates that our method not only enhances the brightness of low-light images but also preserves structural features, making the enhanced images visually closer to real-world scenes.
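To make the three SSIM components concrete, the following simplified sketch computes a single global SSIM value from image-wide means, variances, and covariance. Standard SSIM implementations instead average the index over local (typically Gaussian-weighted) windows, so this global variant, the function name, and the [0, 1] pixel range are assumptions made for illustration only.

```python
def ssim_global(x, y, max_value=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over two flattened images (no local windows)."""
    if not x or len(x) != len(y):
        raise ValueError("images must be non-empty and have the same size")
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    # Population variances and covariance over the whole image.
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1, c2 = (k1 * max_value) ** 2, (k2 * max_value) ** 2  # stabilizing constants
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance * contrast_structure
```

The index equals 1 only when the two images are identical; a pure brightness shift lowers the luminance term while leaving the contrast and structure term unchanged.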

LPIPS [40] quantifies the perceptual difference between enhanced images and reference images by approximating the perceptual mechanism of the human visual system. A lower value indicates greater perceptual similarity between the two images. On the LOL-v1 and LSRW_Huawei datasets, our method achieves the second-best LPIPS, while on the LOL-v2 and LSRW_Nikon datasets it achieves the best. This suggests that images enhanced by our method look natural and rarely exhibit incongruities such as color distortion or discontinuous brightness contrast, thus achieving excellent perceptual quality.

NIQE [41] evaluates image quality by measuring how far the statistical characteristics of an image deviate from those of natural images. On all datasets, our method performs favorably on the NIQE metric. This implies that, in application scenarios without reference images, the results of our method still maintain high naturalness, with statistical characteristics close to those of real natural images.

BRISQUE [42] focuses on spatial-domain quality and evaluates images by extracting natural scene statistics features. On the LOL-v1 and LOL-v2 datasets, our method achieves the best BRISQUE score; on the LSRW_Huawei and LSRW_Nikon datasets, it performs favorably on the same metric. This indicates that the results of our method have excellent spatial-domain quality, with high definition and little residual noise.

Taken together, the metrics show that our method delivers comprehensive and balanced low-light image enhancement performance.

4.4. Ablation Study

We conduct ablation studies to evaluate (i) the necessity of priming dark inputs with Practical Exposure Correction (PEC) [35] prior to diffusion sampling; (ii) the effectiveness of converting images from the RGB space to the YCbCr space for subsequent processing; (iii) the effectiveness of decomposing the images via DWT and subsequently fusing the corresponding sub-bands with learnable weights; and (iv) the effectiveness of the two introduced loss functions (the exposure loss and the detail loss). Specifically, we compare the full pipeline against five ablated variants: (i) w/o PEC, which omits the conservative exposure pre-correction; (ii) w/o YCbCr, which processes images directly in the RGB space instead of converting them to the YCbCr space first; (iii) w/o DWT, which replaces wavelet-based fusion with a naïve pixel-wise weighted summation; and (iv, v) two single-loss variants, each of which removes one of the two loss functions and uses only the other. All configurations are evaluated on four datasets: LOL-v1, LOL-v2, LSRW_Huawei, and LSRW_Nikon. The results are shown in Figure 8 and Table 5, Table 6, Table 7 and Table 8.
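For readers unfamiliar with the color-space conversion that the w/o YCbCr variant removes, the sketch below converts a single pixel between RGB and YCbCr. The full-range BT.601 (JPEG/JFIF) coefficients and the [0, 1] value range are assumptions, since the exact YCbCr variant is not stated here; the function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 conversion for values in [0, 1] (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 0.5 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 0.5 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, up to rounding of the coefficients."""
    r = y + 1.402 * (cr - 0.5)
    g = y - 0.344136 * (cb - 0.5) - 0.714136 * (cr - 0.5)
    b = y + 1.772 * (cb - 0.5)
    return r, g, b
```

The appeal of this space for enhancement is that luminance is isolated in the Y channel, so brightness can be adjusted without directly perturbing the chroma channels Cb and Cr.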

The results of the ablation studies demonstrate that each core component in our proposed method contributes to the final performance. A detailed analysis is as follows: (1) Removing the PEC component leads to a significant performance degradation, which confirms the necessity of performing robust exposure pre-correction on low-light input images prior to diffusion sampling. (2) The contribution of YCbCr space conversion is relatively moderate. After removing this component, the model’s performance on some perceptual metrics is similar to that of the full pipeline, but the full pipeline remains superior or comparable on most key metrics. (3) Removing the wavelet decomposition and DWT sub-band weighted fusion module results in significant degradation in PSNR and SSIM across all datasets. This supports the benefit of multi-sub-band fusion in the frequency domain for preserving image details and structural information. (4) The ablation results of the loss functions show that the exposure loss function is crucial for regulating image exposure and contrast and is central to maintaining high PSNR and SSIM; the detail loss function further improves image structural similarity. When the two functions work together, the model achieves the best balance between objective metrics and perceptual quality.
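The difference between sub-band fusion and pixel-wise summation can be sketched with a single-level 2D Haar transform. This is a minimal illustration under several assumptions: the Haar wavelet, a single decomposition level, two generic input images, and fixed scalar weights standing in for the learnable ones are all illustrative choices, and the function names are hypothetical.

```python
BANDS = ("LL", "LH", "HL", "HH")


def haar_dwt2(image):
    """Single-level 2D Haar transform of an even-sized grayscale image (list of rows)."""
    h, w = len(image), len(image[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    bands = {k: [[0.0] * (w // 2) for _ in range(h // 2)] for k in BANDS}
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = image[i][j], image[i][j + 1]
            c, d = image[i + 1][j], image[i + 1][j + 1]
            r, s = i // 2, j // 2
            bands["LL"][r][s] = (a + b + c + d) / 2  # local average (approximation)
            bands["LH"][r][s] = (a - b + c - d) / 2  # difference between columns
            bands["HL"][r][s] = (a + b - c - d) / 2  # difference between rows
            bands["HH"][r][s] = (a - b - c + d) / 2  # diagonal difference
    return bands


def haar_idwt2(bands):
    """Exact inverse of haar_dwt2."""
    h2, w2 = len(bands["LL"]), len(bands["LL"][0])
    image = [[0.0] * (2 * w2) for _ in range(2 * h2)]
    for r in range(h2):
        for s in range(w2):
            ll, lh, hl, hh = (bands[k][r][s] for k in BANDS)
            image[2 * r][2 * s] = (ll + lh + hl + hh) / 2
            image[2 * r][2 * s + 1] = (ll - lh + hl - hh) / 2
            image[2 * r + 1][2 * s] = (ll + lh - hl - hh) / 2
            image[2 * r + 1][2 * s + 1] = (ll - lh - hl + hh) / 2
    return image


def fuse_subbands(image_a, image_b, weights):
    """Blend each sub-band as w * A + (1 - w) * B, then reconstruct the image."""
    bands_a, bands_b = haar_dwt2(image_a), haar_dwt2(image_b)
    fused = {}
    for k in BANDS:
        w = weights[k]  # fixed scalar here; a learnable parameter in a trained model
        fused[k] = [
            [w * pa + (1 - w) * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(bands_a[k], bands_b[k])
        ]
    return haar_idwt2(fused)
```

Because the transform is linear, giving all four sub-bands the same weight reduces to a pixel-wise weighted sum; per-band weights add the freedom to, for example, take the low-frequency band mostly from one image and the detail bands mostly from the other.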

In conclusion, the ablation studies systematically verify the effectiveness of each core design choice in the proposed method.

4.5. Effect on Object Detection

To demonstrate the practical value of our method, we conducted experiments on a downstream task, object detection. Specifically, we employed the official Ultralytics YOLO11 model pre-trained on the COCO dataset. The results are shown in Figure 9. The first row presents the object detection results on the original low-light images, while the remaining rows show the object detection results on images processed by Zero-DCE [19], EnlightenGAN [18], RUAS [20], SCI [6], FourierDiff_LLIE [32], JWFPGD [34], and our proposed method.

The results indicate that, although YOLO11 improves significantly on earlier YOLO versions, it still suffers from missed and false detections in low-light environments. After low-light enhancement, the overall brightness of the images improves significantly, and the detection accuracy of YOLO11 increases accordingly. Among the compared enhancement methods, ours not only enhances the images well but also stands out in helping YOLO11 improve detection accuracy.

Deep learning-based object detectors such as YOLO extract low-level and high-level features from the input image in a stepwise manner and accomplish detection through multi-scale feature fusion and anchor box matching. The inherent defects of low-light images interfere with this process in three ways. First, insufficient brightness causes the low-level features of objects to be obscured by noise and low illumination, so the detector cannot capture enough discriminative features, which leads to missed detections. Second, the pervasive signal-dependent noise in low-light images may be mistaken for spurious features or may directly mask the real features of objects, resulting in false or missed detections. Third, the contrast imbalance and color distortion often associated with low-light images create significant discrepancies between the brightness distribution and color features of objects and those of the normal-light images used for YOLO’s pre-training. This makes it difficult for the detector to match the pre-trained category features and to accurately distinguish object–background boundaries. In contrast, our method enhances image brightness while achieving color fidelity and detail restoration, which mitigates these interferences and effectively improves object detection accuracy.