This paper presents a novel hybrid OCR system that combines YOLOv7 for text detection and TrOCR for text recognition. The integration of these two models addresses significant challenges in port operations, particularly in detecting and recognizing codes under varying conditions and orientations.

Experimental results demonstrate that the proposed system compares favorably with existing models in both accuracy and robustness. It successfully recognizes text in scenarios where traditional OCR systems struggle, while maintaining accuracy and inference speed. The implementation of this hybrid OCR system represents a significant step towards automating and optimizing port operations. By improving the reliability and speed of container code recognition, it helps streamline workflows and enables better resource allocation, leading to improved operational effectiveness in port settings.

4.1. Limitations

Although the proposed system shows promising results, several limitations were identified during the study.

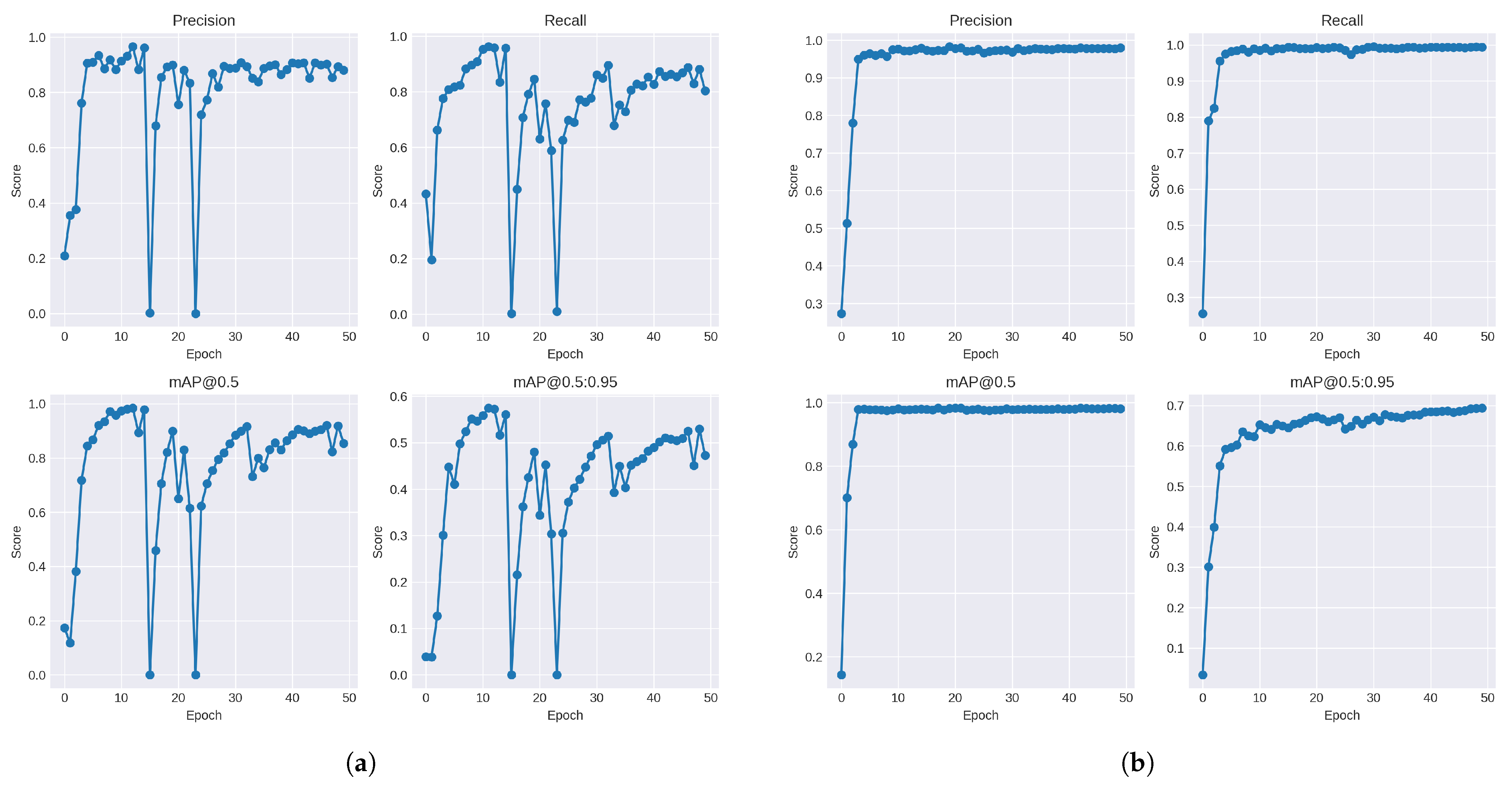

First, because the datasets used for training and validation were limited in size and collected within a limited time frame, they may not represent the variability encountered in real-world port environments. This may affect the model’s generalizability. Second, although YOLOv7 proved effective for text detection, newer YOLO architectures with improved capabilities, such as small-object detection, may offer better performance under certain port conditions. Therefore, ongoing evaluation and comparison of emerging models is necessary to ensure that the most effective solution is deployed for text detection in port environments.

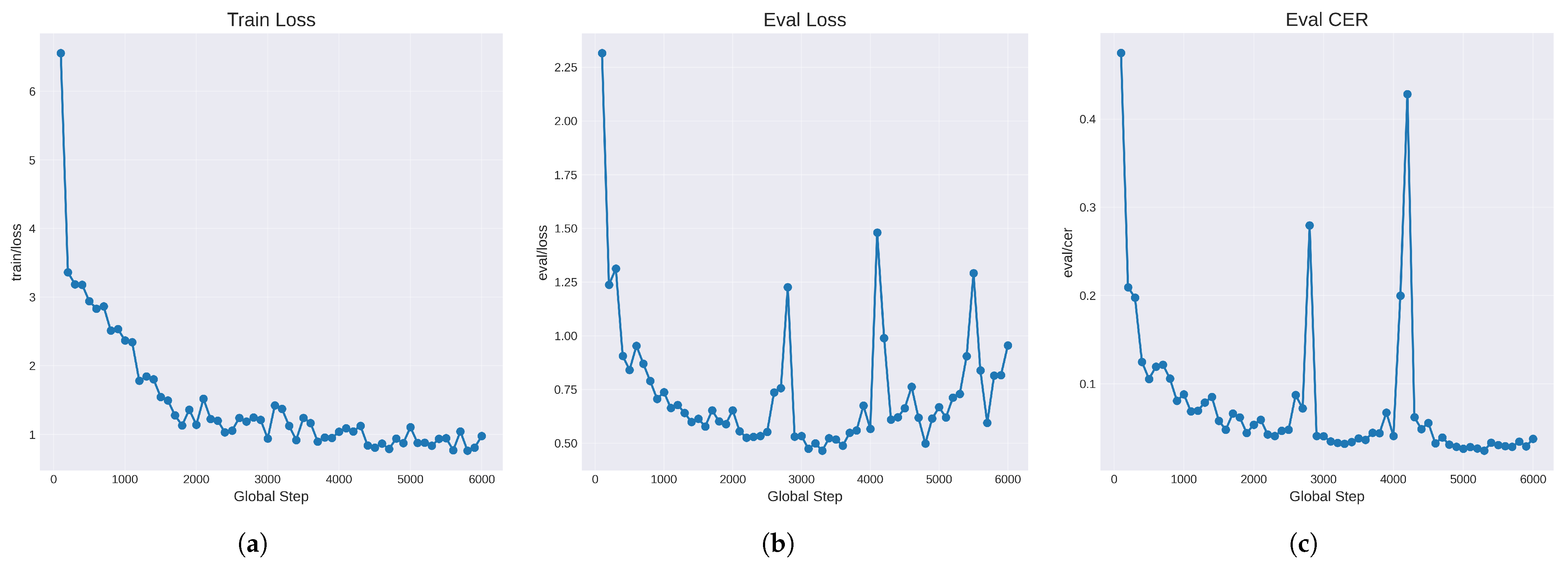

Third, although TrOCR demonstrated strong performance, it was fine-tuned for this specific task and may not generalize well to other text recognition tasks without further adaptation. Additionally, although the computational requirements for deploying this hybrid system for testing were manageable, scaling the implementation for real-time port operations may require further optimization to ensure efficiency and responsiveness.

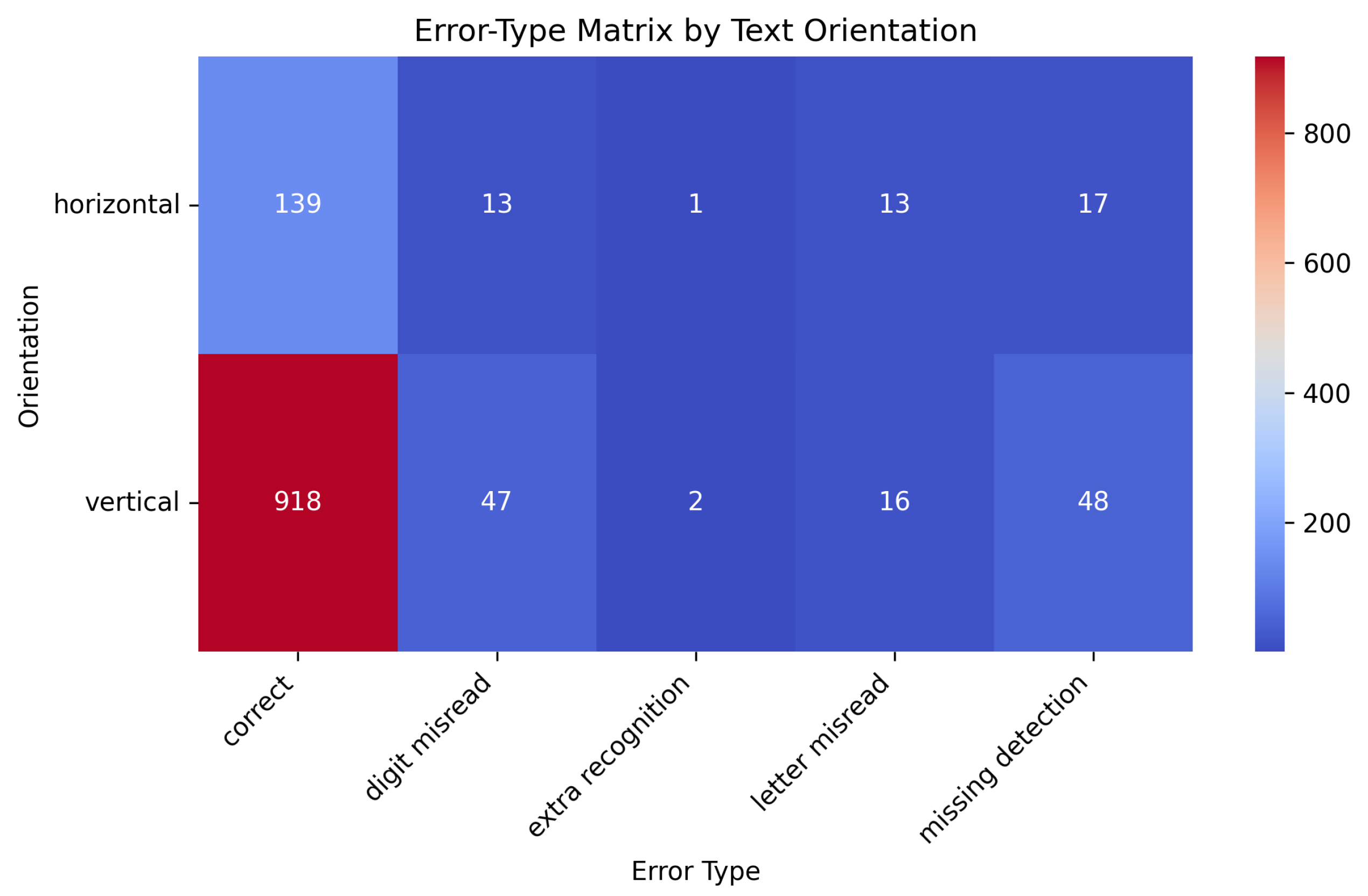

Fourth, the statistical analysis presented in this work is limited to reporting standard OCR metrics. The use of more advanced statistical methods, such as confidence intervals or hypothesis testing, would provide stronger evidence of model robustness and a clearer understanding of performance variability across different conditions.

Finally, the post-processing step, while crucial for ensuring the reliability of the recognized codes in practical applications, was not implemented during the evaluation phase. This does not affect the intrinsic performance of the detection and recognition models, but adding and tuning this step could improve overall system performance and lead to more robust results in practical applications.

4.2. Future Work

Following the experimental results reported in Section 3.4, a prototype version of the system is already being tested at the Port of Sines in collaboration with an industrial partner. Although operational results from this deployment are not yet available, future work will focus on reporting performance data from this real-world application, including images captured under challenging and diverse operational conditions. This will provide valuable insights into the system’s effectiveness in operational settings and help identify areas for further refinement and optimization.

A major focus of future research will be enhancing the system’s robustness and adaptability. To this end, expanding the dataset to encompass a broader range of environmental and operational conditions will strengthen the models’ generalization capabilities. Improving inference speed also remains a priority, as the current throughput is below real-time operational demands. Techniques such as model pruning, quantization, or exploring lightweight architectures could help achieve higher speeds while maintaining accuracy.

Another important direction is the comprehensive evaluation of the current system and its components. Ablation studies will be conducted to analyze the performance variation between the fine-tuned YOLOv7 models and provide insight into the superior performance of the unfrozen configuration. Additionally, statistical analysis for the evaluated models will be expanded to include more rigorous techniques for both the detection and recognition models, such as k-fold cross-validation or hypothesis testing. This will offer stronger evidence of model robustness and will expand the understanding of performance variability across different conditions.

Given the significance of the results presented, further statistical validation with paired statistical tests, such as the paired t-test, will provide stronger evidence of model robustness, and the calculation of confidence intervals will expand the understanding of performance variability. Constructing error matrices that capture common recognition errors and their likely causes, such as occlusions, surface wear, and character misclassifications, will assist in identifying systematic weaknesses and areas for improvement.
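As an illustration of the paired analysis described above, the following minimal sketch computes a paired t statistic and a percentile bootstrap confidence interval for the mean difference between two models evaluated on the same images. It is not part of the reported evaluation: the function names, the choice of a percentile bootstrap, and the per-image scores are assumptions made for illustration only.

```python
import math
import random
import statistics


def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for paired per-image scores."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all paired differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def bootstrap_ci_mean_diff(scores_a, scores_b, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean paired difference."""
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    # Resample the paired differences with replacement and record each mean.
    means = sorted(
        statistics.mean(rng.choices(diffs, k=len(diffs)))
        for _ in range(n_resamples)
    )
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


if __name__ == "__main__":
    # Hypothetical per-image character accuracies for two recognizers.
    model_a = [0.98, 0.91, 1.00, 0.87, 0.95, 0.93]
    model_b = [0.95, 0.90, 0.97, 0.80, 0.96, 0.88]
    print(paired_t_statistic(model_a, model_b))
    print(bootstrap_ci_mean_diff(model_a, model_b))
```

The t statistic would then be compared against a Student t distribution with the returned degrees of freedom to obtain a p-value. The bootstrap interval avoids a normality assumption on the differences, which may be useful when per-image scores are strongly skewed.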

To enable this level of analysis, a more detailed dataset with a systematic, condition-aware collection of images, annotated with specific imaging conditions, such as lighting variations, occlusions from cargo or equipment, motion blur, and surface wear, would be beneficial. With these detailed annotations, insights into the recognition behavior across different scenarios could be obtained, enabling adaptive training strategies and error correction mechanisms tailored to the operational challenges in port environments.
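A condition-aware breakdown of this kind could be sketched as follows. The `Sample` structure, the tag names, and the example predictions are hypothetical and only indicate how per-condition annotations might be used to report exact-match accuracy for each imaging condition.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Sample:
    """One evaluated image: reference code, recognized code, condition tags."""
    ground_truth: str
    prediction: str
    conditions: set = field(default_factory=set)


def accuracy_by_condition(samples):
    """Exact-match accuracy per condition tag.

    A sample with several tags is counted once under each of them;
    samples without tags are grouped under "untagged".
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for sample in samples:
        for tag in sample.conditions or {"untagged"}:
            totals[tag] += 1
            hits[tag] += int(sample.prediction == sample.ground_truth)
    return {tag: hits[tag] / totals[tag] for tag in totals}


if __name__ == "__main__":
    # Hypothetical annotations and predictions, for illustration only.
    samples = [
        Sample("CSQU3054383", "CSQU3054383", {"low_light"}),
        Sample("CSQU3054383", "CSQU3O54383", {"low_light", "motion_blur"}),
        Sample("CSQU3054383", "CSQU3054383", {"motion_blur"}),
        Sample("CSQU3054383", "CSQU3054383"),
    ]
    for tag, acc in sorted(accuracy_by_condition(samples).items()):
        print(f"{tag}: {acc:.2f}")
```

Because a sample contributes to every tag it carries, the per-condition counts overlap and should not be summed to recover the overall accuracy.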

Similarly, the post-processing module can be refined into a more advanced validation system that not only verifies the recognized codes but also corrects recognition errors. This can be achieved by cross-referencing with a database of known container codes, employing template matching techniques, or incorporating contextual information from port logistics systems. Such refinements could enable automatic correction of check-digit mismatches and improve overall recognition reliability in real-world applications.
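The check-digit verification mentioned above can be sketched as follows, assuming the container codes follow the ISO 6346 format (four letters, six digits, and one check digit). This is a minimal illustration rather than the post-processing module of the proposed system, and the function names are hypothetical.

```python
import string


def _letter_values():
    """Map A-Z to 10..38, skipping multiples of 11 (11, 22, 33)."""
    values, value = {}, 10
    for letter in string.ascii_uppercase:
        if value % 11 == 0:
            value += 1
        values[letter] = value
        value += 1
    return values


LETTER_VALUES = _letter_values()


def iso6346_check_digit(first_ten):
    """Check digit for the first ten characters of a well-formed code."""
    total = 0
    for position, char in enumerate(first_ten):
        value = LETTER_VALUES[char] if char in LETTER_VALUES else int(char)
        total += value * (2 ** position)  # weights 1, 2, 4, ..., 512
    return total % 11 % 10  # a remainder of 10 maps to check digit 0


def is_valid_container_code(code):
    """Return True if the code is well formed and its check digit matches."""
    code = code.replace(" ", "").upper()
    if len(code) != 11:
        return False
    if not all(c in LETTER_VALUES for c in code[:4]):
        return False
    if not all(c in string.digits for c in code[4:]):
        return False
    return iso6346_check_digit(code[:10]) == int(code[10])


if __name__ == "__main__":
    print(is_valid_container_code("CSQU3054383"))  # True
    print(is_valid_container_code("CSQU3054384"))  # False: wrong check digit
```

A correction step could build on this by generating candidate codes from common OCR confusions and keeping only those that pass the check, although a single check digit cannot by itself identify which character was misread.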

In addition, future research should explore the integration of this OCR system with other port management systems, such as inventory tracking and logistics planning. This integration could provide a more comprehensive solution for port operations, leveraging the strengths of the OCR system to enhance overall efficiency and effectiveness.

In conclusion, the proposed hybrid OCR system offers an effective and practical solution to the challenge of container code recognition in port operations. With continued development and real-world deployment, it has the potential to become a critical component in the modernization and automation of logistics infrastructure.