4.5.1. Comparative Experiments

To validate the effectiveness of the proposed teacher model for distillation training of the student model, we conducted distillation experiments with different distillation temperature values, using the same dataset and experimental setup. The experimental results are presented in Table 4, where detection time is included as an additional evaluation metric. We set six distillation temperature values ranging from 10 to 60, keeping all other experimental conditions identical, including the dataset, training rounds, and optimizer. We systematically tested the core performance metrics of the student model at each temperature. When the temperature does not exceed 40, the model AP gradually increases with increasing temperature. This is because moderately increasing the temperature allows the teacher model to convey richer soft label information, helping the student model better learn the subtle features of corroded metal fittings. However, when the temperature exceeds 40, the AP begins to decline significantly. This is because excessively high temperatures dilute the effective feature signal, making it difficult for the student model to distinguish between metal fittings and background noise, and can even lead to overfitting the soft labels.

Analysis of the experimental results shows that the average precision increases with the distillation temperature up to a value of 40 and decreases as the temperature rises further. The rising part of the trend indicates the positive impact of the teacher model on training the student model. However, once the distillation temperature exceeds this threshold, the effectiveness of the teacher model diminishes. Our analysis suggests that this is because the student model, being smaller in size, is unable to fully capture all the knowledge imparted by the teacher model. Therefore, it is essential to set an appropriate distillation temperature value to enhance the efficacy of knowledge distillation.
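The softening effect of the distillation temperature can be illustrated with a minimal sketch. It assumes the usual temperature-scaled softmax; the logits below are hypothetical values chosen for illustration and are not taken from the experiments, and the function names are ours.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Return softmax(logits / temperature) as a list of probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; a higher value means a flatter distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

# Hypothetical teacher logits for three classes (illustrative only).
teacher_logits = [8.0, 2.0, -1.0]

for t in (1, 10, 40, 60):
    probs = softmax_with_temperature(teacher_logits, t)
    print(t, [round(p, 3) for p in probs], round(entropy(probs), 3))
```

As the temperature grows, the soft labels move toward a uniform distribution. This is consistent with the observation that a moderate temperature exposes inter-class information, whereas a very high temperature washes out the signal that separates the classes.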

Basic IoU methods rely solely on target overlap, which can easily misclassify overlapping adjacent hardware as the same target in occluded scenarios, resulting in low recall and precision. GIoU and DIoU, while incorporating minimum bounding box enclosed area and center point distance optimization, respectively, fail to fully account for the loss of target edge information caused by occlusion: GIoU’s focus on enclosing area ignores fine-grained shape differences, and DIoU’s center distance term cannot distinguish between occluded and non-occluded targets with similar center positions but distorted shapes. This limits their adaptability to densely occluded scenarios. DIoU-NMS improves performance by optimizing the overlapping box filtering logic, but still struggles to accurately distinguish target boundaries in occluded areas due to the lack of explicit shape constraints.

Considering the common object occlusion problem in corrosion hardware inspection scenarios, the EIoU-NMS proposed in this paper addresses these limitations through its dual-term optimization. To further clarify its mechanism in handling occlusions, the formula is:

where IoU represents the intersection over union of the predicted box and the ground truth box, the second term quantifies the normalized center distance between the two boxes, and the third term measures the aspect ratio mismatch between them. The two weight coefficients balance the contributions of positional and shape features. The center distance term ensures that even partially occluded targets, whose centers may deviate slightly from the ground truth, are not prematurely suppressed. Meanwhile, the aspect ratio term effectively captures the residual edge information of hardware under occlusion. For example, when a bolt head is partially occluded, its remaining visible edges preserve the intrinsic aspect ratio, which EIoU-NMS leverages to distinguish it from adjacent nuts. This enables accurate definition of the target’s actual range, reducing misjudgment and missed detection caused by occlusion.
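The three-term criterion described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the exact formulation of this paper: the names `alpha` and `beta` for the two weight coefficients, the use of the squared enclosing-box diagonal to normalize the center distance, and the arctangent-based aspect ratio term (borrowed from the CIoU formulation) are all assumptions. Boxes are given as (x1, y1, x2, y2) with positive width and height.

```python
import math

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def overlap_score(a, b, alpha=1.0, beta=1.0):
    """IoU minus weighted center-distance and aspect-ratio penalties (assumed form)."""
    # Squared distance between box centers.
    d2 = ((a[0] + a[2]) / 2 - (b[0] + b[2]) / 2) ** 2 \
       + ((a[1] + a[3]) / 2 - (b[1] + b[3]) / 2) ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    c2 = (max(a[2], b[2]) - min(a[0], b[0])) ** 2 \
       + (max(a[3], b[3]) - min(a[1], b[1])) ** 2
    center_term = d2 / c2 if c2 > 0 else 0.0
    # Aspect-ratio mismatch (assumption: CIoU-style arctangent difference).
    ratio_a = math.atan((a[2] - a[0]) / (a[3] - a[1]))
    ratio_b = math.atan((b[2] - b[0]) / (b[3] - b[1]))
    shape_term = (4 / math.pi ** 2) * (ratio_a - ratio_b) ** 2
    return iou(a, b) - alpha * center_term - beta * shape_term

def nms(boxes, scores, threshold=0.5, alpha=1.0, beta=1.0):
    """Greedy NMS: keep a box unless it overlaps an already kept box too strongly."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(overlap_score(boxes[i], boxes[k], alpha, beta) <= threshold for k in keep):
            keep.append(i)
    return keep

# Hypothetical boxes: a near-duplicate of the first box, and a flatter box
# with a different aspect ratio that lies inside the first one.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (0, 0, 10, 5.2)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores, alpha=0.0, beta=0.0))  # plain IoU criterion
print(nms(boxes, scores, alpha=1.0, beta=1.0))  # with both penalty terms
```

With both weights set to zero the criterion reduces to plain IoU-based suppression, so the two calls show how the additional terms can retain a box whose shape differs from that of a higher-scoring neighbor.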

To further verify the performance of the proposed EIoU-NMS in the corroded fitting detection task, we additionally compared it with mainstream IoU-series methods, namely IoU, GIoU, DIoU, and DIoU-NMS. All experiments were conducted on the same lightweight model framework and test set. The core evaluation metric was the AP value. The specific comparison results are shown in Table 5.

Considering the common object occlusion problem in corrosion hardware inspection scenarios, the data in Table 5 clearly demonstrate the performance advantages of our proposed EIoU-NMS. Basic IoU methods rely solely on target overlap, which can easily misclassify overlapping adjacent hardware as the same target in occluded scenarios, resulting in low recall and precision. GIoU and DIoU, while incorporating minimum bounding box enclosed area and center point distance optimization, respectively, fail to fully account for the loss of target edge information caused by occlusion, limiting their adaptability to densely occluded scenarios. DIoU-NMS improves performance by optimizing the overlapping box filtering logic, but still struggles to accurately distinguish target boundaries in occluded areas. The EIoU-NMS proposed in this paper incorporates edge distance features into its design, which can effectively capture the residual edge information of hardware under occlusion, accurately define the actual range of the target, and reduce the misjudgment and missed detection caused by occlusion. Therefore, it significantly outperforms the other IoU-series methods on the three core indicators, with a Recall of 90.08%, a Precision of 89.38%, and an AP of 95.82%, making it more suitable for the occlusion scenarios encountered in corrosion hardware detection.

The distillation loss weight is used to balance the detection loss and the distillation loss in Equations (11)–(13). To verify the impact of this weight on model performance, we fixed the distillation temperature at 40, set the weight to 0.2, 0.4, 0.6, 0.8, 1.0, 1.2, and 1.4 in turn, and evaluated the model’s Recall, Precision, and AP on the same test set. The experimental results are shown in Table 6.

The experimental results show that, while the distillation loss weight is below its optimal value, the model’s Recall, Precision, and AP all gradually improve as the weight increases. The reason is that when the distillation loss weight is insufficient, the student model cannot fully absorb the guidance knowledge of the teacher model, resulting in limited performance. At the optimal weight, all three indicators reach their best values. At this point, the detection loss and the distillation loss achieve the best balance, which avoids the insufficient performance caused by too little distillation knowledge and also prevents excessive distillation knowledge from interfering with the student model’s autonomous learning ability. When the weight is increased beyond this value, the indicators show a downward trend, because an excessively high distillation loss weight makes the student model overly dependent on the teacher model output and causes it to ignore the supervision information of the true labels, resulting in a decrease in generalization capability.
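The role of the distillation loss weight can be illustrated with a short sketch. It assumes one common form of the combined objective, namely the detection loss plus the weighted, temperature-scaled Kullback–Leibler divergence between teacher and student soft labels; the exact form of Equations (11)–(13) may differ, and the function names, logits, and detection loss value below are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax with max-subtraction for stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with q > 0 everywhere."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def total_loss(detection_loss, teacher_logits, student_logits, weight, temperature=40.0):
    """Detection loss plus weighted distillation loss (assumed combination)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # The temperature**2 factor is a common convention that keeps gradient
    # magnitudes comparable across temperatures; it is an assumption here.
    distill = temperature ** 2 * kl_divergence(p_teacher, p_student)
    return detection_loss + weight * distill

# Hypothetical values, for illustration only.
teacher = [8.0, 2.0, -1.0]
student = [5.0, 3.0, 0.0]
for w in (0.2, 0.4, 0.6, 0.8, 1.0, 1.2, 1.4):
    print(w, round(total_loss(1.5, teacher, student, w), 4))
```

A small weight leaves the objective dominated by the detection loss, while a large weight lets the teacher's soft labels dominate, which matches the trade-off discussed above.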

In UAV-based intelligent inspection of power transmission lines, a lightweight image object detection model is required. To further validate the effectiveness of the proposed method, we set the distillation temperature value to 40 and conducted comparative experiments on the dataset provided in our paper. Existing adaptive distillation frameworks [34] can indeed perform detection quite well, but for the task in this paper, i.e., recognizing small rusted metal fittings that are often occluded in the UAV field of view, this technique inevitably brings additional computational cost, which is a critical concern for UAV deployment. We therefore introduce a representative object detection method [35] for comparison. This method was designed for blur-aware object detection: it first generates a synthetic blurred dataset and then employs a teacher-student network with shared parameters to conduct self-guided knowledge distillation. We consider this method applicable to the metal-fitting detection problem.

The experimental results are presented in Table 7 and Figure 17. The results demonstrate that knowledge distillation training significantly enhances the model’s performance, with an average precision improvement of 5.85%, a recall improvement of 6.94%, and an accuracy improvement of 3.83%. Moreover, the model size remains the same as that of the student model, only one-fourth the size of the teacher model, resulting in faster detection. This validates the effectiveness of the proposed method.

Somewhat unexpectedly, as shown in Table 7, the proposed method still achieves significant improvements in recall and precision over the self-guided knowledge distillation method [35], while nearly doubling the detection speed. Meanwhile, the model size is reduced from 32.1 MB to 10.5 MB. This comparison demonstrates that, through the synergistic optimization of lightweight network design and a high-precision knowledge selection mechanism, the proposed method not only enhances robustness and accuracy in detecting objects within blurred images, but also substantially improves inference efficiency and deployability, making it better suited for resource-constrained edge computing scenarios. Through knowledge distillation training, the knowledge from the teacher model is transferred to the student model, improving the performance of the student model while maintaining its size. As a result, a robust lightweight image object detection model is obtained.

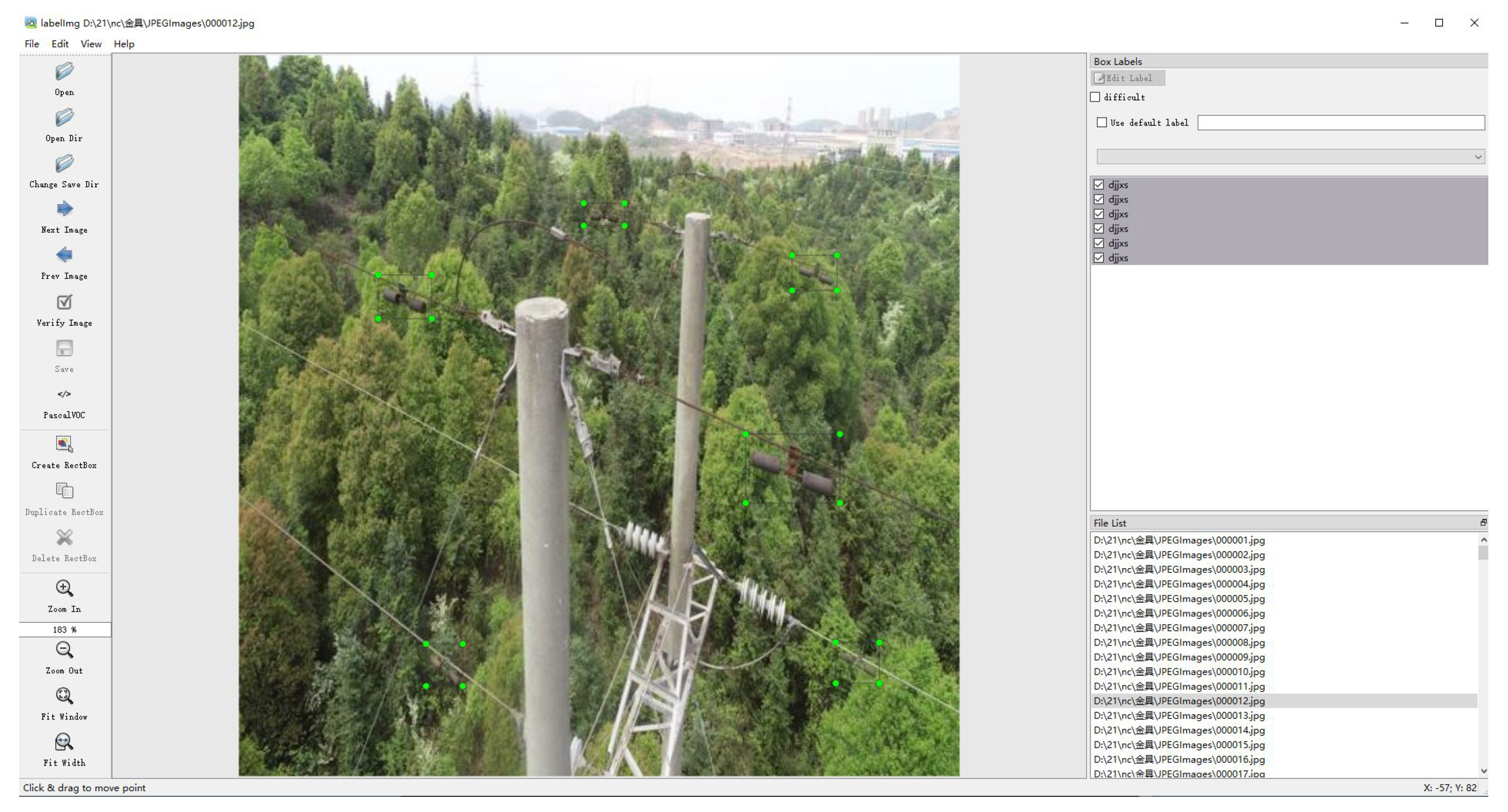

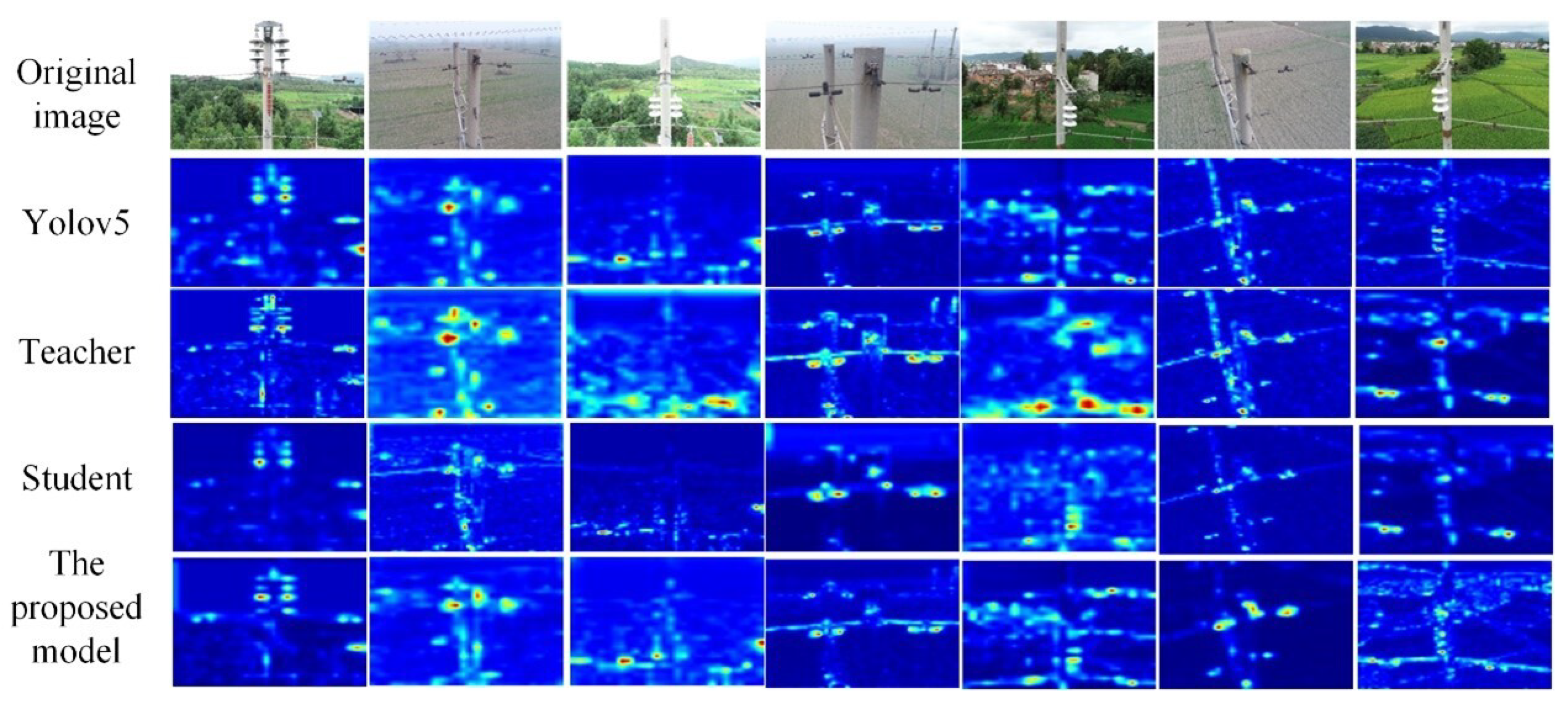

4.5.2. Visualized Results

To visually analyze the impact of the teacher model on the student model during distillation training, heatmaps are shown in Figure 18. The heatmaps indicate that knowledge distillation training enhances the performance of the student model through knowledge transfer from the teacher model, resulting in an increased contribution to object detection.

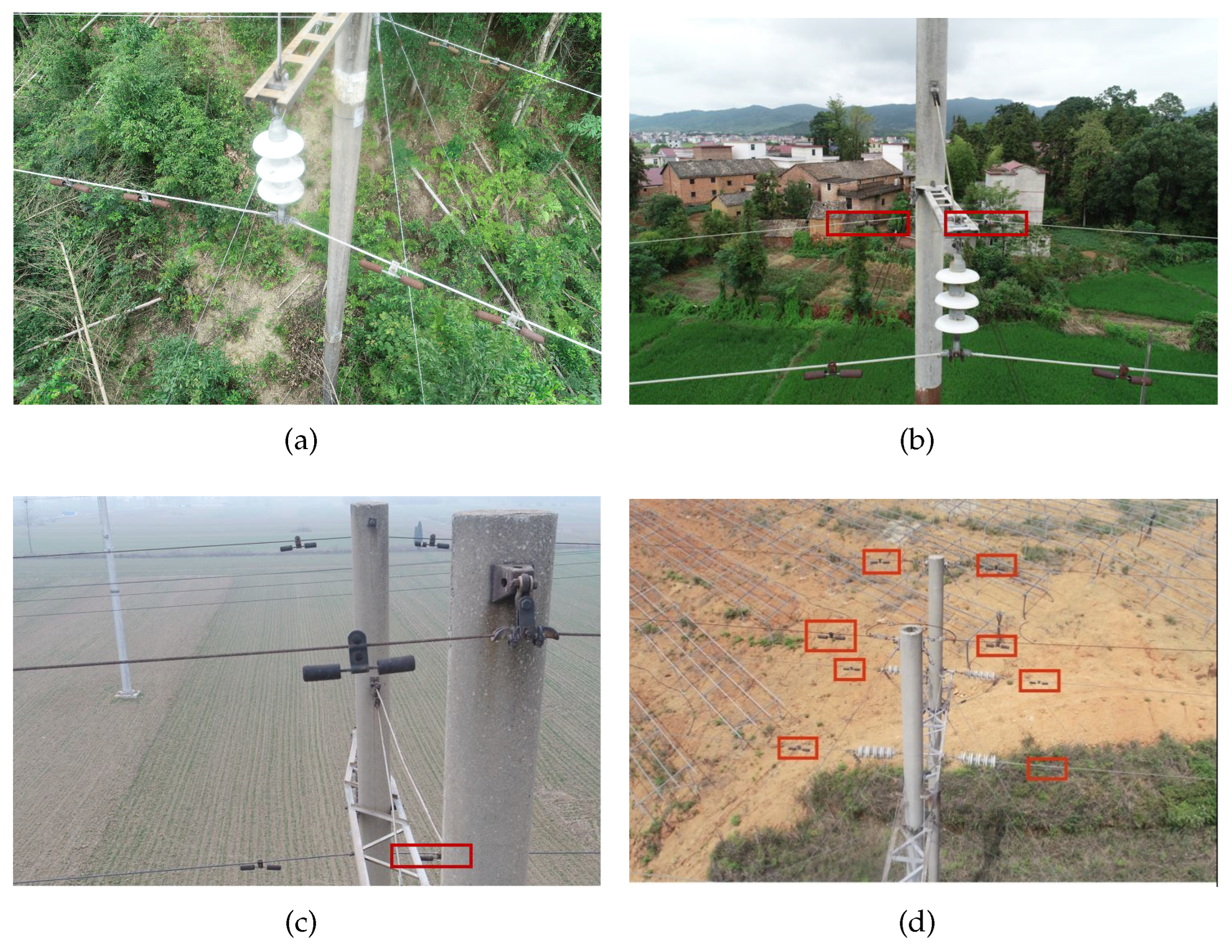

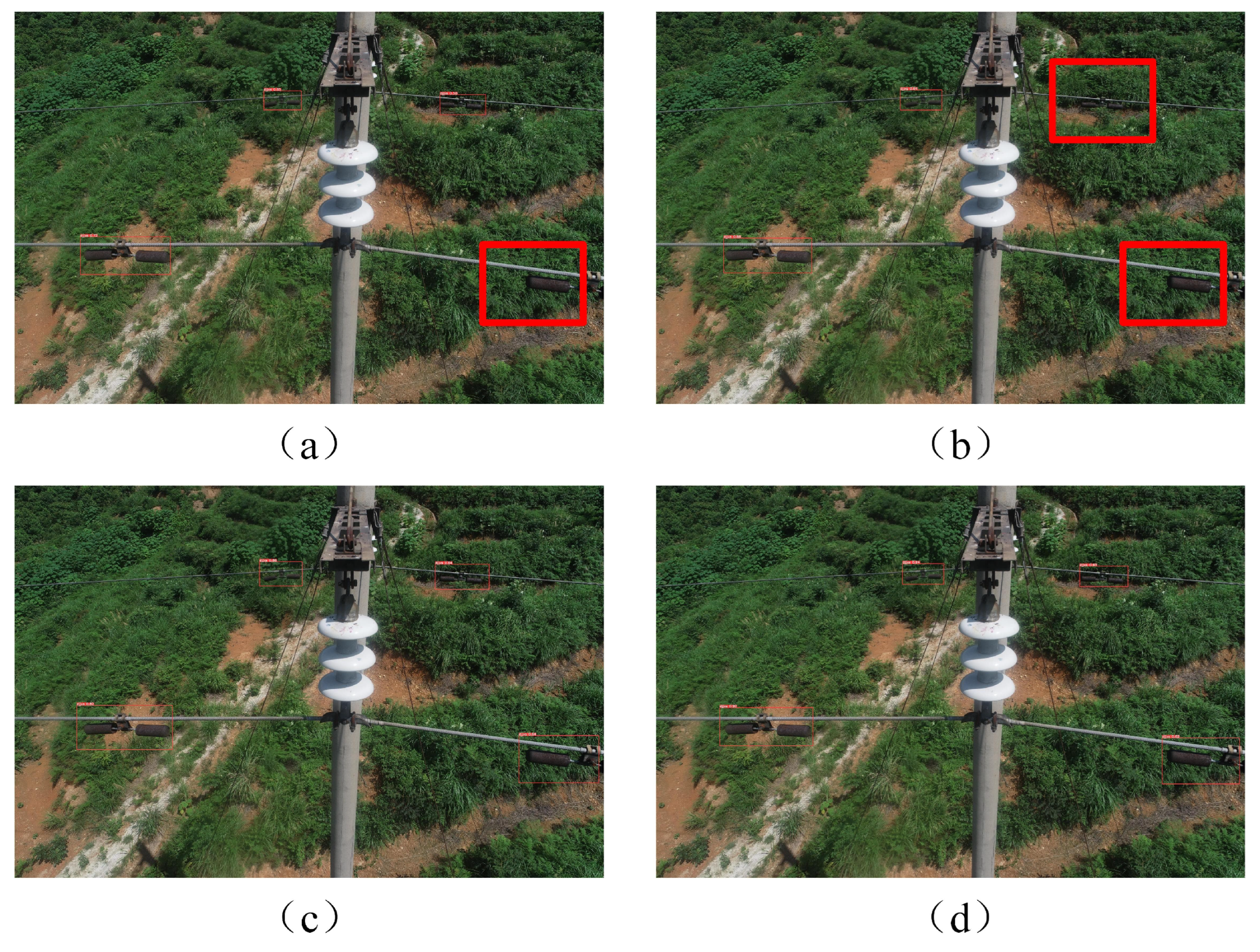

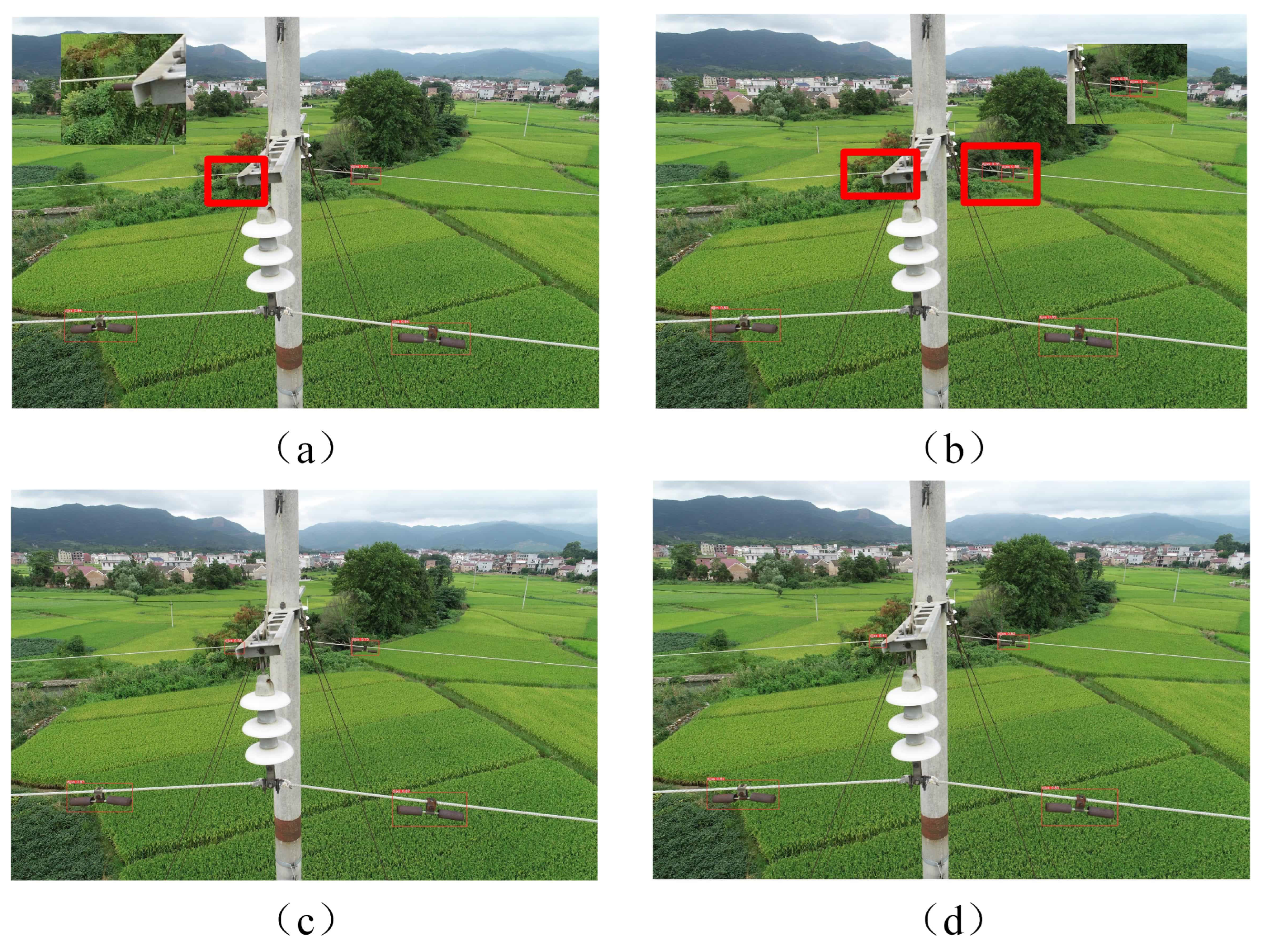

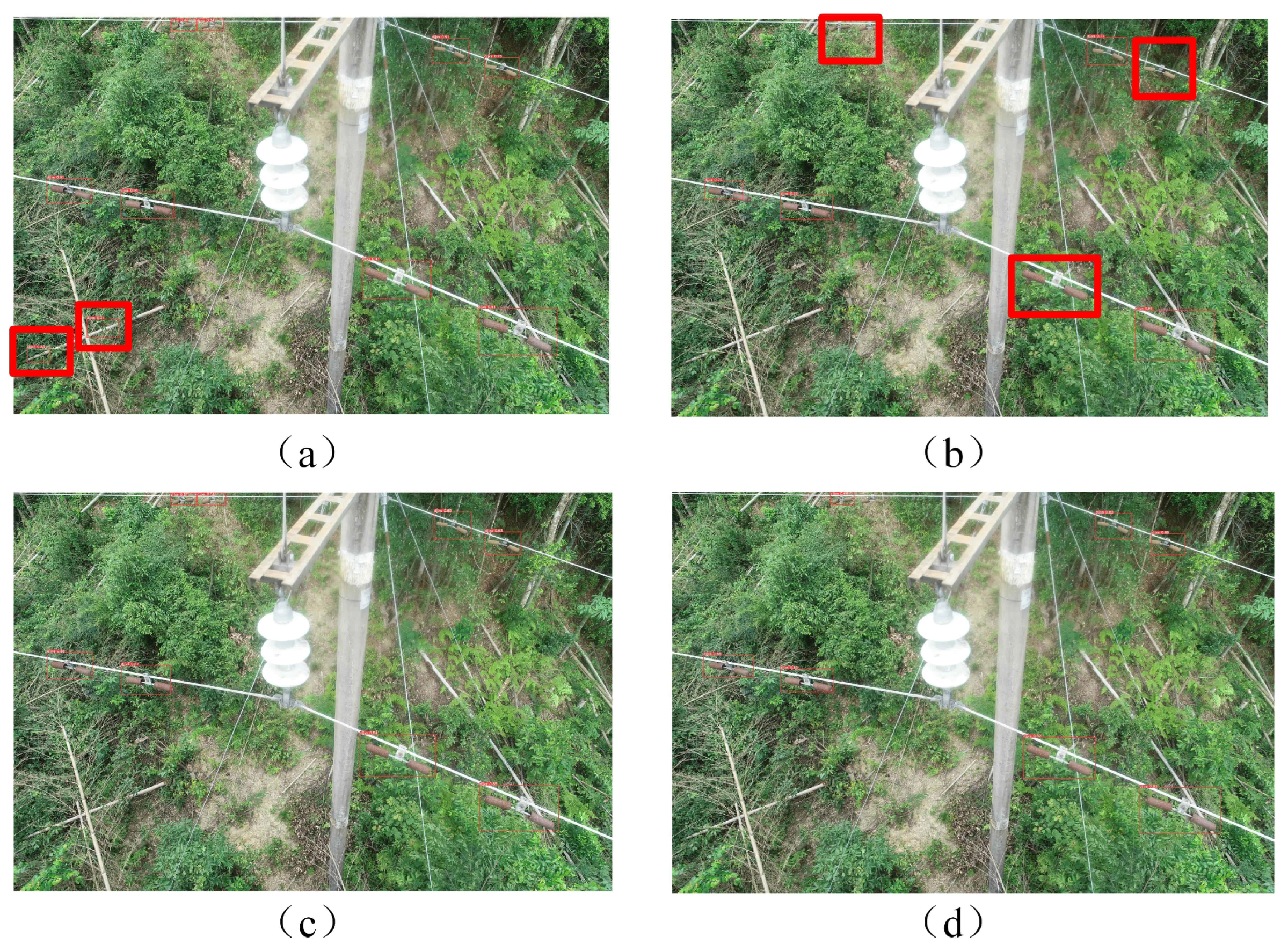

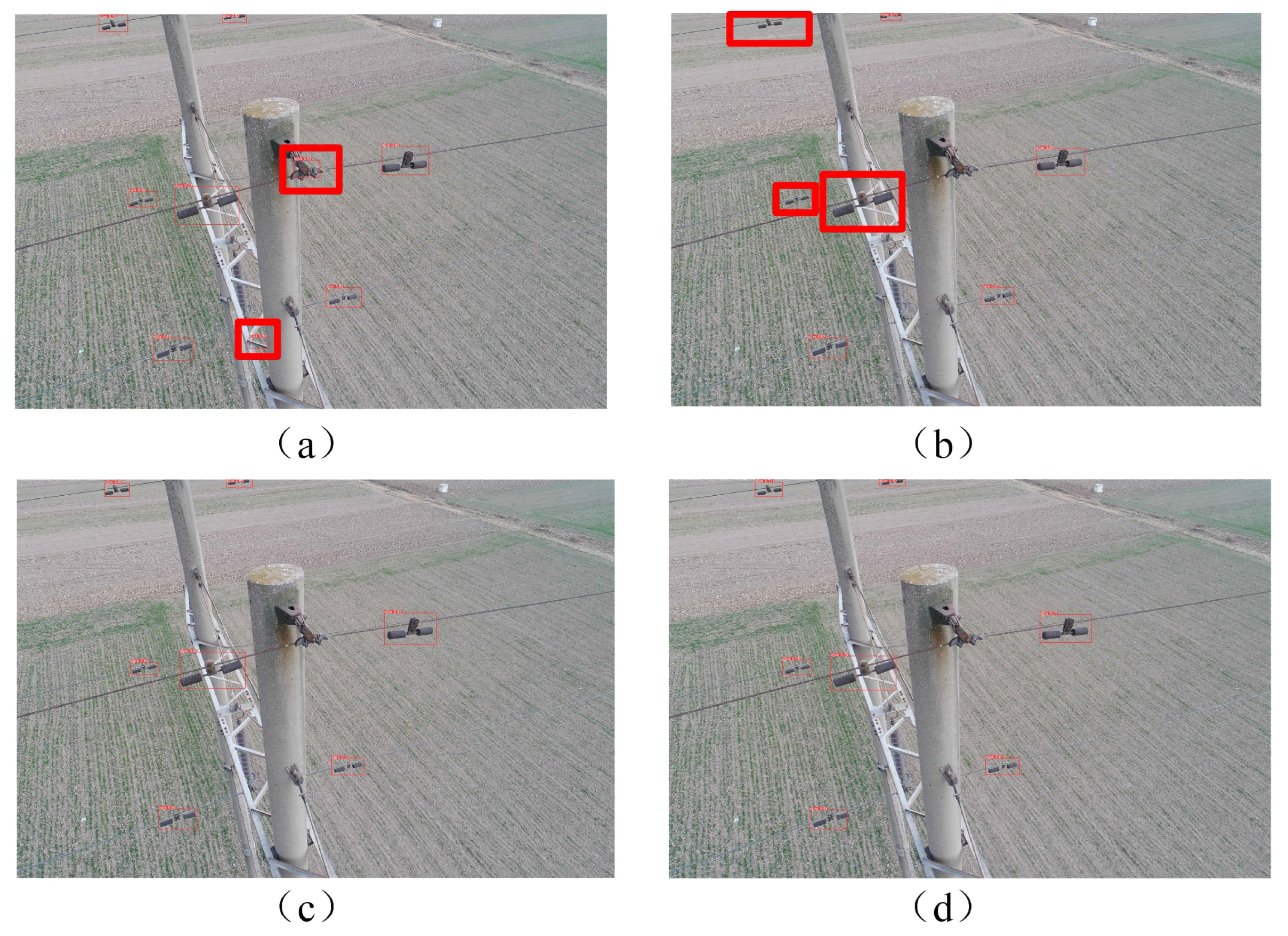

The images acquired from the UAV are processed by the image object detection models, and the corresponding results are illustrated in Figure 19, Figure 20, Figure 21 and Figure 22. The subfigures (a–d) correspond to the detection results of YOLOv5, the student model, the teacher model and the proposed approach, respectively. The detection results show that the teacher model achieves a higher detection accuracy, whereas the student model exhibits false detections and missed detections. With knowledge distillation training, our method reduces the occurrences of false detections and missed detections. As shown in Figure 19, in the detection results of the student model the rusted fitting target blends into the background because of their similar color and the target’s less prominent features, which makes it difficult to detect. Consequently, there are instances of missed detections. However, our proposed method successfully detects the previously missed corroded fittings.

Figure 20 shows a scenario in which the corroded fittings are partially obscured during the UAV inspection process because of the angle of image capture. Consequently, the student model misses some of these targets and localizes the bounding boxes inaccurately. However, the proposed method not only detects these previously missed rusted fittings but also improves the accuracy of the bounding box localization, thereby enabling more precise detection.

Figure 21 presents an image characterized by a complex background environment and substantial interference, resulting in missed detections of the target in the results of the student model. Furthermore, the YOLOv5 image detection model also exhibits instances of false detections. Nevertheless, our method effectively detects the previously missed corroded fittings in the image.

Figure 22 shows a scenario where the corroded fittings intersect with the iron frame, creating a complex structure that poses challenges for the student model’s detection. As a consequence, there are instances of missed detections, including small target objects. Nonetheless, our proposed method effectively detects the previously missed corroded fittings in the image. The detection results validate the efficacy of the proposed method in enhancing the performance of the student image object detection model. It effectively improves both the accuracy and speed of the image object detection model while maintaining robustness and lightweight characteristics.

To test whether the false positive rate (FPR) and false negative rate (FNR) differ significantly between the proposed model and the classical YOLOv5 model, this study employs a statistical testing workflow. First, both models were independently trained for 20 epochs on the same target dataset. After each training session, five full-scale tests were performed on the test set, resulting in data sets of 100 samples for both FPR and FNR. During the statistical analysis, normality was assessed using the Shapiro–Wilk test, and quantile–quantile (Q-Q) plots were drawn to visually verify the Gaussian distribution characteristics of the data. If the data satisfied the normality assumption (p > 0.05), a two-tailed paired-sample t-test was performed at a significance level of α = 0.05 to determine mean differences. If the data did not meet normality, logarithmic or square root transformations were applied before retesting. The results of the paired-sample t-test comparing the YOLOv5 model and the proposed method are presented in Figure 23, Table 8 and Table 9.

As shown in Figure 23, the data points lie approximately along the straight line, supporting the normality assumption. From Table 8 and Table 9, YOLOv5 has a mean FPR of 0.162, while the proposed method shows a lower FPR of 0.085. In terms of FNR, YOLOv5 records 0.125, while the proposed method achieves a lower FNR of 0.072. Normality of the FPR and FNR paired differences was also checked with the Shapiro–Wilk test. The paired t-test p values for FPR and FNR are 0.0184 and 0.0196, respectively. Both are less than the significance level of 0.05, indicating statistically significant differences in both FPR and FNR between the two models. Thus, the proposed method demonstrates superior performance in reducing both false positives and false negatives compared to YOLOv5.
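The paired-sample t statistic used in this workflow can be computed as in the following minimal sketch. The per-run FPR values are hypothetical and only illustrate the calculation; they are not the measurements reported in Table 8 and Table 9, and the function name is ours.

```python
import math
import statistics

def paired_t_statistic(x, y):
    """Return (t, degrees_of_freedom) for a paired-sample t-test of x against y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("all paired differences are identical")
    t = mean_d / (sd_d / math.sqrt(len(diffs)))
    return t, len(diffs) - 1

# Hypothetical per-run FPR values for a baseline and a proposed model.
baseline_fpr = [0.16, 0.17, 0.15, 0.18, 0.16, 0.17]
proposed_fpr = [0.09, 0.08, 0.09, 0.10, 0.08, 0.07]

t, df = paired_t_statistic(baseline_fpr, proposed_fpr)
print(round(t, 3), df)
```

The statistic is compared against the two-tailed critical value of the t distribution with the returned degrees of freedom. In practice, a library routine such as SciPy's `scipy.stats.ttest_rel` returns the p value directly, and `scipy.stats.shapiro` can be used for the normality check on the paired differences.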

4.5.3. Computational Cost Evaluation

The teacher model in the proposed method mainly consists of convolution operations and a pixel-level attention mechanism. The computational complexity of a standard convolution operation can be approximately represented by Equation (18). After introducing the pixel attention mechanism, the overall complexity can be expressed by Equation (19) [36].

where the two channel terms denote the numbers of input and output channels, respectively, K is the size of the convolution kernel, H and W are the height and width of the input feature map, and N is the number of heads of the attention mechanism. Although the pixel-level attention mechanism enhances the model’s ability to extract features, it significantly increases the computational cost, which is not conducive to deployment on edge devices. For this reason, we employ a knowledge distillation method so that the deployed student model remains lightweight.

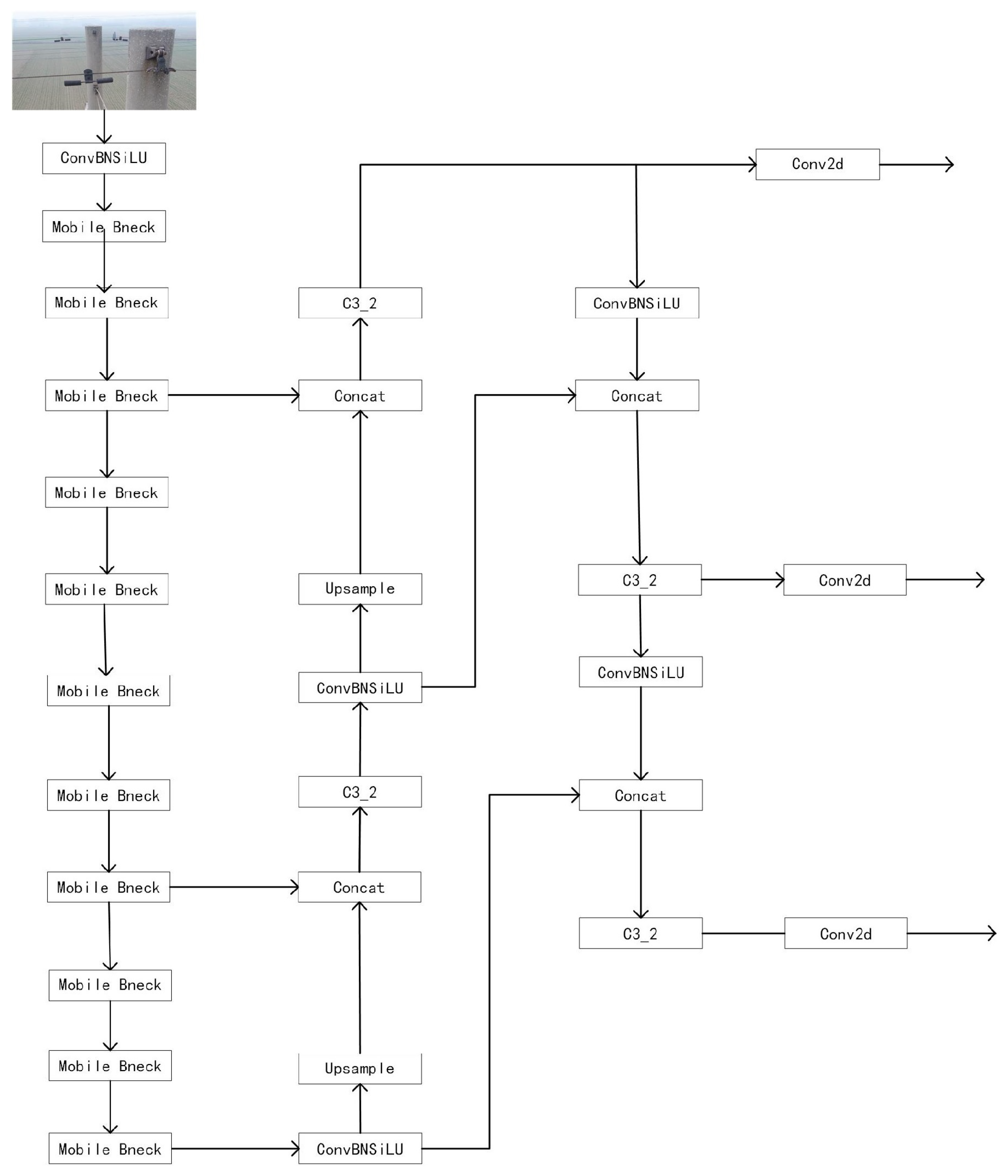

In the student model, MobileNetV3 is used to replace the feature extraction part of YOLOv5. Compared with the original feature extractor, MobileNetV3 significantly reduces the number of parameters through depthwise separable convolutions. The computational complexity of the depthwise separable convolutions can be approximately represented by Equation (20).

From Equations (19) and (20), it can be seen that the depthwise separable convolution substantially reduces the complexity relative to the standard convolution.
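The size of this reduction can be checked with the standard operation counts for the two layer types. The sketch below is illustrative and assumes stride 1 with an output feature map of the same height and width as the input; the argument names `c_in` and `c_out` and the layer dimensions are hypothetical, and the counts are multiply-accumulate operations rather than the exact expressions of the equations referenced above.

```python
def standard_conv_macs(c_in, c_out, k, h, w):
    """Multiply-accumulates of a standard k x k convolution (stride 1, same size)."""
    return k * k * c_in * c_out * h * w

def depthwise_separable_macs(c_in, c_out, k, h, w):
    """Multiply-accumulates of a depthwise k x k plus a pointwise 1 x 1 convolution."""
    depthwise = k * k * c_in * h * w   # one k x k filter per input channel
    pointwise = c_in * c_out * h * w   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise

# Hypothetical layer dimensions, for illustration only.
c_in, c_out, k, h, w = 64, 128, 3, 80, 80
standard = standard_conv_macs(c_in, c_out, k, h, w)
separable = depthwise_separable_macs(c_in, c_out, k, h, w)
print(standard, separable, round(separable / standard, 4))
```

Under these assumptions the cost ratio equals 1/c_out + 1/k², so for a 3 × 3 kernel and a large number of output channels the separable layer needs only slightly more than one-ninth of the operations of the standard layer.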

The empirical evaluation is conducted on our actual UAV platform. Due to commercial confidentiality, we do not disclose the specific hardware configuration here. Since only the student model is deployed on the platform, the cost evaluation is mainly carried out for the student model. Here we compare the proposed method with the classical YOLOv5 that uses CSPDarknet53 as the student model. The YOLOv5s model with CSPDarknet53 has a memory footprint of approximately 14.5 MB, while the proposed method with MobileNetV3 reduces the memory footprint to 2.7 MB. The number of parameters is reduced by over 80%. Therefore, the lightweight characteristics of MobileNetV3 make it highly suitable for deployment on resource-constrained edge devices. Its low parameter count and FLOPs enable the model to run efficiently on low-power devices while maintaining high detection accuracy.