Abstract

This paper proposes a novel dual-branch Faster R-CNN model, termed D-faster R-CNN, for breast ultrasound image detection. It adds a new parallel backbone, Pyramid Vision Transformer (PVT), to the original ResNet50 backbone, forming a dual-branch feature extraction structure of ResNet50 and PVT. To enhance the extracted features on the PVT and ResNet50 branches, a simple feature pyramid network and an asymptotic feature pyramid network are used, respectively, and the enhanced features are merged for the subsequent region proposal and RoI Align. The proposed model is validated on a publicly available breast ultrasound image dataset (BUSI). The experimental results show that compared with the baseline models, the proposed D-faster R-CNN with dual-feature extraction backbone can effectively improve tumor detection performance, and the average precision is significantly improved.

1. Introduction

In recent years, the detection rate of breast cancer has increased year by year [1,2], and early diagnosis and treatment can effectively reduce the mortality rate of patients with malignant tumors [3]. Ultrasound is increasingly favored in the medical field due to its cost effectiveness and radiation-free nature, which makes it widely used in cancer screening, especially breast cancer [4]. Accurately identifying the locations and numbers of masses in ultrasound images is critical for diagnosis but challenging due to limited datasets, imaging artifacts, low signal-to-noise ratio, and limited fields of view [5].

With the significant progress of deep learning in computer vision, deep learning-based target detection methods are now widely used in the medical field [6,7,8]. Deep learning detection models can be broadly classified into single-stage and two-stage models [9,10]. Single-stage models, such as the YOLO [11] and SSD [12] families, use regression-based techniques with simple structures and high computational efficiency. Two-stage models, typified by the R-CNN family [13,14], first generate convolutional feature maps to identify regions of interest (RoIs) and then perform object classification.

In medical image detection tasks for diagnostic purposes, accuracy is more important than speed. Therefore, we use Faster R-CNN [15], which has been reported in the literature to achieve state-of-the-art accuracy, as the base model. The R-CNN family generally uses a convolutional neural network (CNN) as the backbone to extract features from the input image; commonly used backbones are MobileNet [16], VGG16 [17], and ResNet [18], which are typically pre-trained on large-scale datasets such as ImageNet [19] to enhance their feature extraction capability. As an excellent convolutional backbone, ResNet has become almost a standard component of the R-CNN family. However, ResNet shares the limited receptive field of CNNs and is therefore better at extracting local features. Recently, following the great success of the transformer in natural language processing, this attention-based technique has gradually been applied to visual tasks. The Vision Transformer (ViT) [20] was first applied to image classification and was then extended to image segmentation and detection tasks. The Pyramid Vision Transformer (PVT) [21] introduces a pyramid structure into ViT to obtain CNN-like multi-scale features and thus better cope with complex visual tasks. Compared to ResNet, PVT, which combines multi-scale features with attention, is better suited to global or long-range feature extraction.

In this work, to leverage the complementary advantages of CNN and transformer architectures, we design a dual-branch feature extraction network within the Faster R-CNN framework, which extracts multi-scale image features using ResNet and PVT, respectively, and enhances these features by using different feature pyramid networks so that the merged features are more conducive to subsequent region proposal and bounding-box classification and regression. The main contributions of this work are as follows: (1) Combining two feature extraction backbones, ResNet50 [18] and PVT, for image detection and experimentally verifying their complementarity in the ultrasound image detection task. (2) Designing different feature pyramid networks on the ResNet50 and PVT branches for cross-scale fusion and enhancement of multi-scale features on both branches, and experimentally verifying the effectiveness of the combinations of backbones and feature pyramid networks. The full source code for the proposed model is publicly available at https://github.com/coolxuzz/D-faster-R-CNN/tree/master (accessed on 1 October 2025).

2. Methods

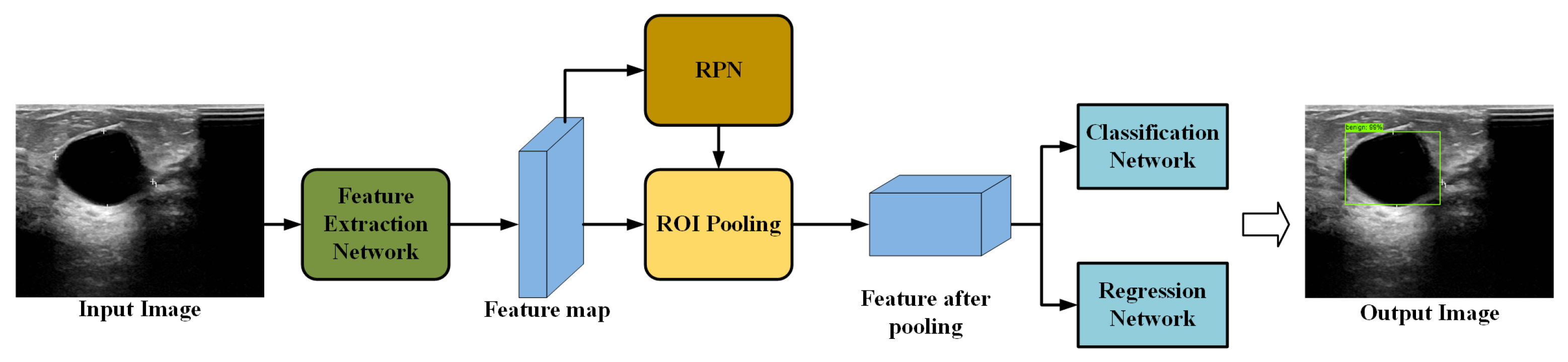

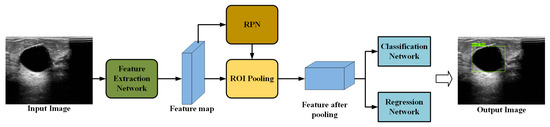

Faster R-CNN, which evolved from R-CNN and Fast R-CNN [22], has become one of the main methods for target detection. Figure 1 shows the standard Faster R-CNN framework, which consists of four main components: feature extraction network, region proposal network (RPN), RoI Align, and classification and regression networks.

Figure 1. Structure of the standard Faster R-CNN framework.

Feature extraction networks are typically built based on a CNN, such as VGG or ResNet, which encodes the input image into feature maps that retain both spatial and high-level semantic information by cascading convolutional and pooling layers. The encoded features are then used for the RPN and RoI Align. The RPN generates target region proposals through operations such as sliding windows, anchor classification and regression, and non-maximum suppression (NMS) [23]. RoI Align maps the candidate regions generated by the RPN onto multi-scale feature maps, employing bilinear interpolation to convert RoIs of varying sizes into fixed-size feature maps for subsequent classification and regression. The classification layer maps each candidate region to one of the predefined object categories, and the regression layer refines the bounding-box coordinates to better fit the object. Finally, the predicted boxes are mapped back to the original image to obtain the detection results.
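The bilinear sampling step that RoI Align relies on can be illustrated with a minimal sketch. It is a simplified, single-channel version in plain Python and rests on several assumptions that are not stated above: one sampling point per output bin (real implementations usually average several), RoI coordinates already expressed in feature-map units, and the hypothetical helper names `bilinear_sample` and `roi_align`.

```python
import math


def bilinear_sample(feature, y, x):
    """Bilinearly interpolate a 2-D map (list of rows) at a fractional (y, x)."""
    h, w = len(feature), len(feature[0])
    # Clamp the sampling point to the valid index range of the map.
    y = min(max(y, 0.0), h - 1)
    x = min(max(x, 0.0), w - 1)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feature[y0][x0] * (1 - dx) + feature[y0][x1] * dx
    bottom = feature[y1][x0] * (1 - dx) + feature[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(feature, roi, out_size):
    """Pool an RoI (y1, x1, y2, x2) to an out_size x out_size grid.

    Simplification (assumption): a single sample at the centre of each bin.
    """
    y1, x1, y2, x2 = roi
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    return [
        [
            bilinear_sample(feature, y1 + (i + 0.5) * bin_h, x1 + (j + 0.5) * bin_w)
            for j in range(out_size)
        ]
        for i in range(out_size)
    ]


# Toy 4x4 map whose value at (row, col) is 4 * row + col.
grid = [[float(4 * i + j) for j in range(4)] for i in range(4)]
pooled = roi_align(grid, (0.0, 0.0, 2.0, 2.0), out_size=2)
```

Because the sampling points fall between pixel centres, RoIs of any size are mapped to the same fixed output size without rounding their coordinates to the pixel grid.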

2.1. Dual-Branch Feature Extraction Network

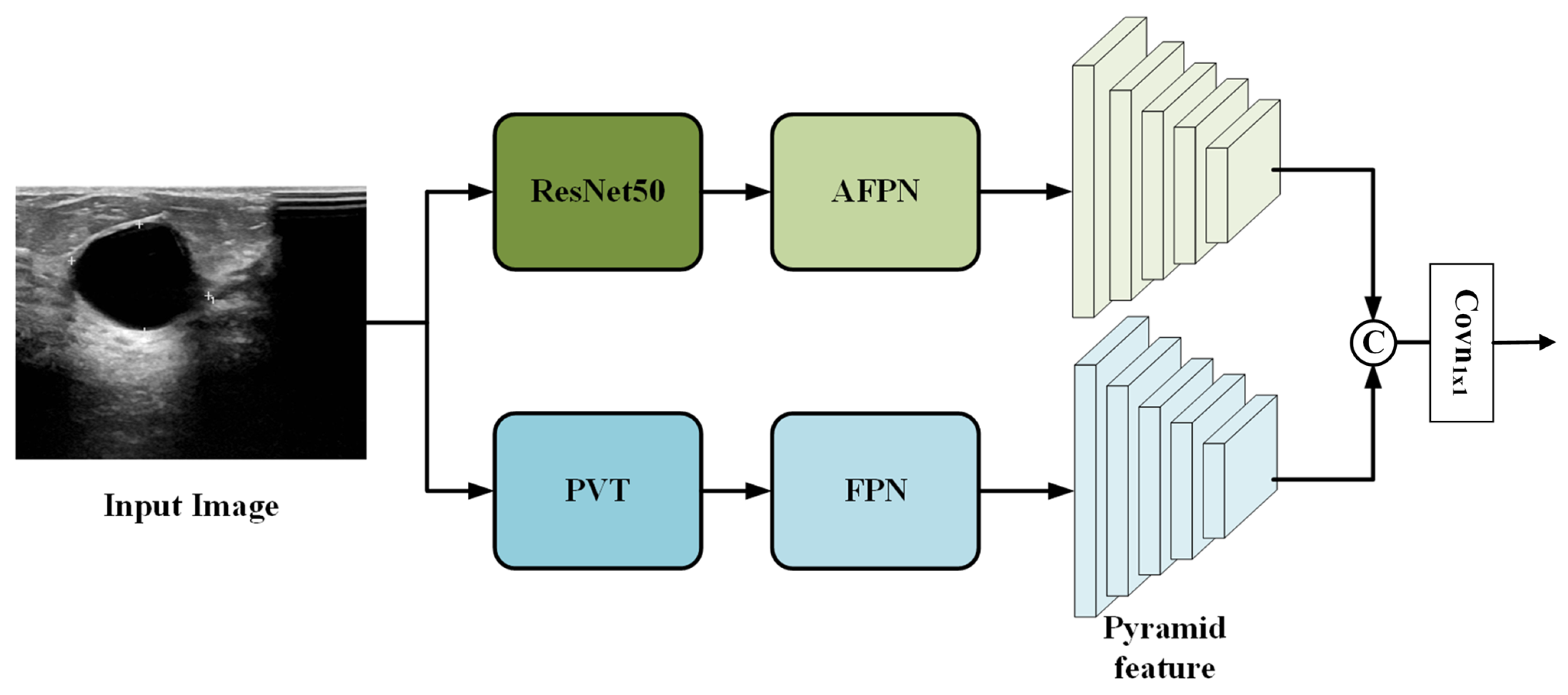

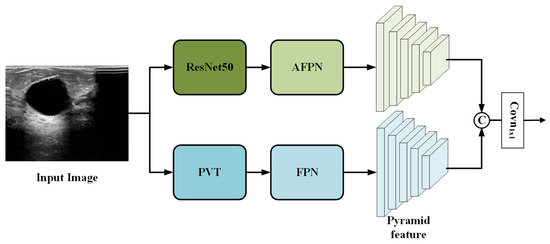

To fully leverage the complementary characteristics of convolutional and transformer-based architectures, we extend the standard Faster R-CNN by designing a parallel dual-branch feature extraction network. As shown in Figure 2, the proposed architecture consists of two independent branches: a ResNet50 branch and a PVT branch, which process the input image in parallel.

Figure 2. Overview of the proposed dual-branch feature extraction network.

The overall workflow is as follows. The input image is simultaneously fed into both branches. In the ResNet50 branch, the convolutional backbone extracts multi-scale feature maps, which are then enhanced by an asymptotic feature pyramid network (AFPN) [24] to facilitate better cross-scale fusion. In the PVT branch, the transformer backbone captures global contextual information and generates its own set of multi-scale features, which are refined by a standard feature pyramid network (FPN) [25].

The two resulting feature pyramids from the ResNet50+AFPN branch and the PVT+FPN branch are then fused via channel-wise concatenation followed by a convolution (see Section 2.1.3 for details). The final fused feature pyramid is passed to the subsequent RPN and detection head, which operate identically to the classic Faster R-CNN framework.

2.1.1. ResNet50 Branch

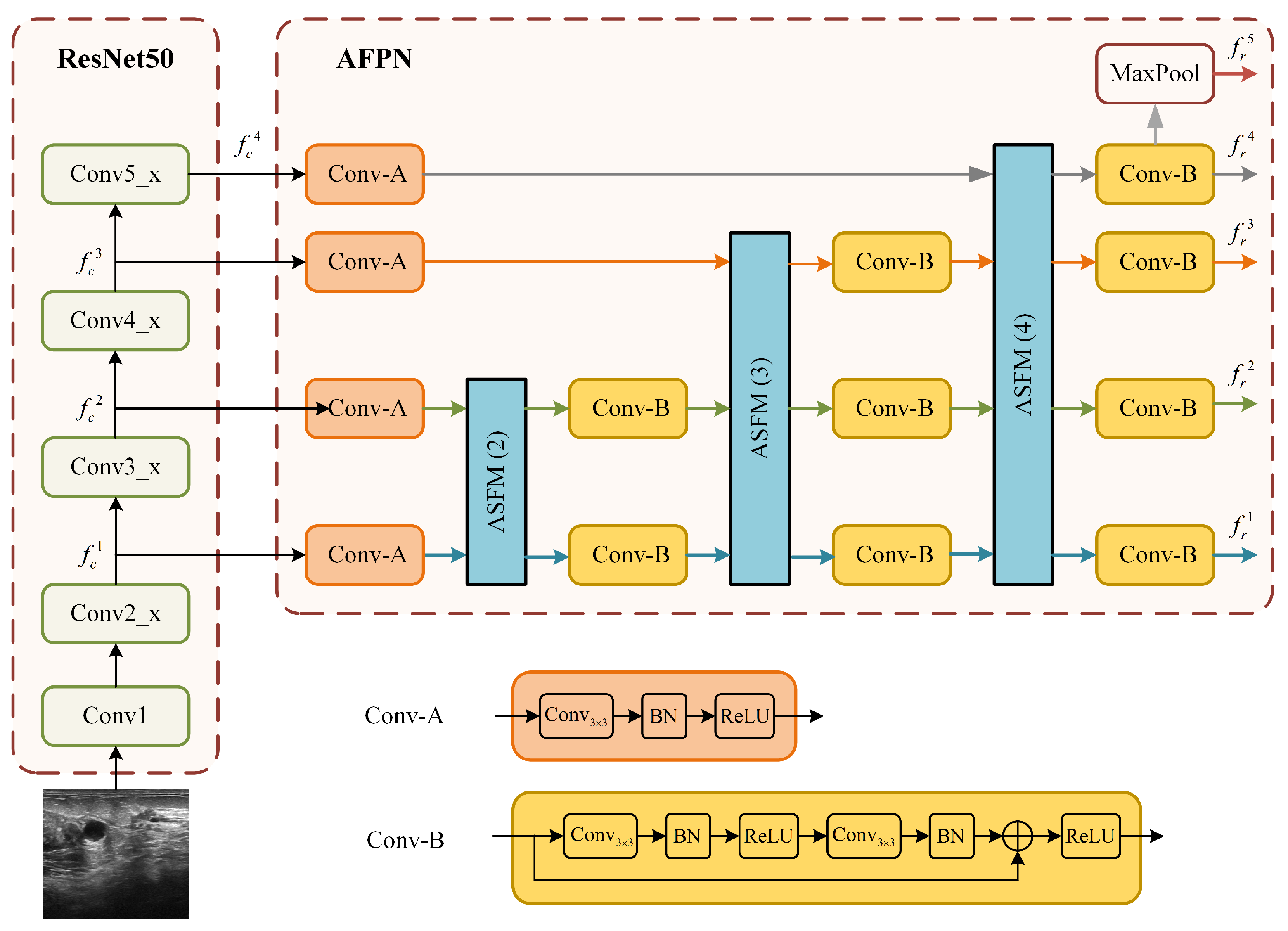

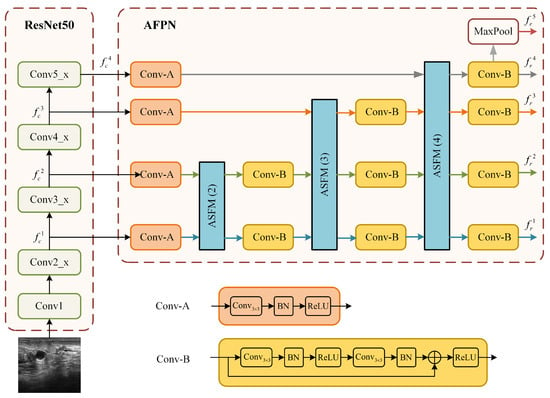

The structure of the ResNet50 branch, which integrates the ResNet50 backbone with the AFPN, is illustrated in Figure 3. This branch is designed to address the challenge of large tumor scale variation in ultrasound images by constructing a powerful multi-scale feature representation. Relying solely on single-scale features or on the native multi-scale features from ResNet50 is often insufficient for this task.

Figure 3. Detailed structure of the ResNet50+AFPN branch.

The AFPN module mitigates the problems of information loss and degradation prevalent in standard feature pyramids by employing an adaptive spatial fusion operation to gradually fuse low-level and high-level features. As shown in Figure 3, the input image is first processed by the ResNet50 backbone to produce a set of multi-scale feature maps. Let $\{F^{R}_{1}, F^{R}_{2}, F^{R}_{3}, F^{R}_{4}\}$ denote these four feature maps, with strides of 4, 8, 16, and 32 relative to the input image $X$:

$$\{F^{R}_{1}, F^{R}_{2}, F^{R}_{3}, F^{R}_{4}\} = \mathrm{ResNet50}(X).$$

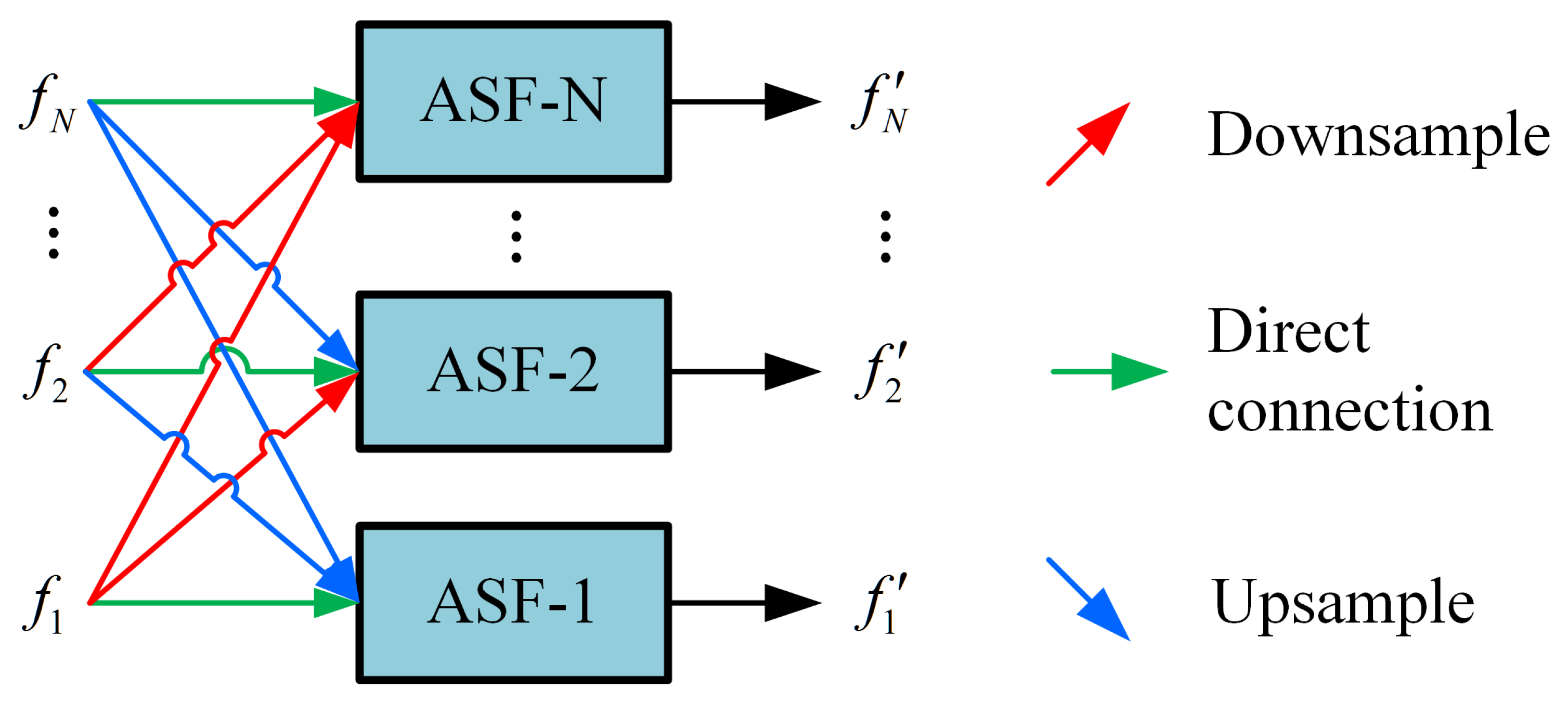

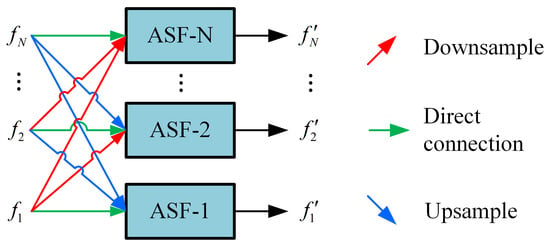

These feature maps are then processed by the AFPN. The AFPN first uses two specific convolution blocks (denoted as Conv-A and Conv-B in Figure 3) to prepare the features. The core of the AFPN is composed of several adaptive spatial fusion modules (ASFM). The architecture of a general N-scale ASFM is detailed in Figure 4. Each ASFM contains a number of adaptive spatial fusion (ASF) blocks [26] equal to the number of input feature scales, N.

Figure 4. Structure of the adaptive spatial fusion module (ASFM) for N-scale features, denoted as ASFM(N).

The ASFM is designed to adaptively fuse feature maps from different scales, enhancing the response in salient regions while preserving crucial multi-scale context. Within each ASF block, input features from different scales are first resized to a common spatial size. The channel-aligned features (all set to 256 channels) then undergo global average pooling to generate a global response vector for each scale, guiding the fusion process. Each ASF block ultimately outputs a feature map at its target scale by integrating inputs through direct connections, downsampling, or upsampling. The output of the AFPN is an enhanced set of multi-scale feature maps, denoted $\{P^{R}_{1}, P^{R}_{2}, P^{R}_{3}, P^{R}_{4}\}$.

Finally, a fifth feature level $P^{R}_{5}$ is generated from the top-most feature map $P^{R}_{4}$ by applying a max pooling operation [27]:

$$P^{R}_{5} = \mathrm{MaxPool}(P^{R}_{4}).$$
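The core idea of adaptive spatial fusion in the sense of [26] can be illustrated with a short sketch: at each spatial location, the resized inputs are blended with weights that are softmax-normalized across scales, so the weights are non-negative and sum to one. This is a simplified, single-channel illustration in plain Python and not the authors' ASF block. The per-location logits are passed in directly as a hypothetical input, whereas in a trained module they would be produced by learned layers, and the global-pooling guidance described above is left out.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_spatial_fusion(features, logits):
    """Fuse same-size 2-D maps, one per scale, with per-location softmax weights.

    features, logits: lists (one entry per scale) of 2-D maps with equal shape.
    The logits are a hypothetical stand-in for learned weight predictions.
    """
    h, w = len(features[0]), len(features[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            weights = softmax([scale_logits[i][j] for scale_logits in logits])
            fused[i][j] = sum(wt * f[i][j] for wt, f in zip(weights, features))
    return fused


# Two scales, already resized to a common 1x2 grid.
features = [[[2.0, 2.0]], [[4.0, 4.0]]]
logits = [[[0.0, 100.0]], [[0.0, 0.0]]]
fused = adaptive_spatial_fusion(features, logits)
```

With equal logits the two scales are averaged; when one logit dominates, the output follows the corresponding scale almost exactly.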

2.1.2. PVT Branch

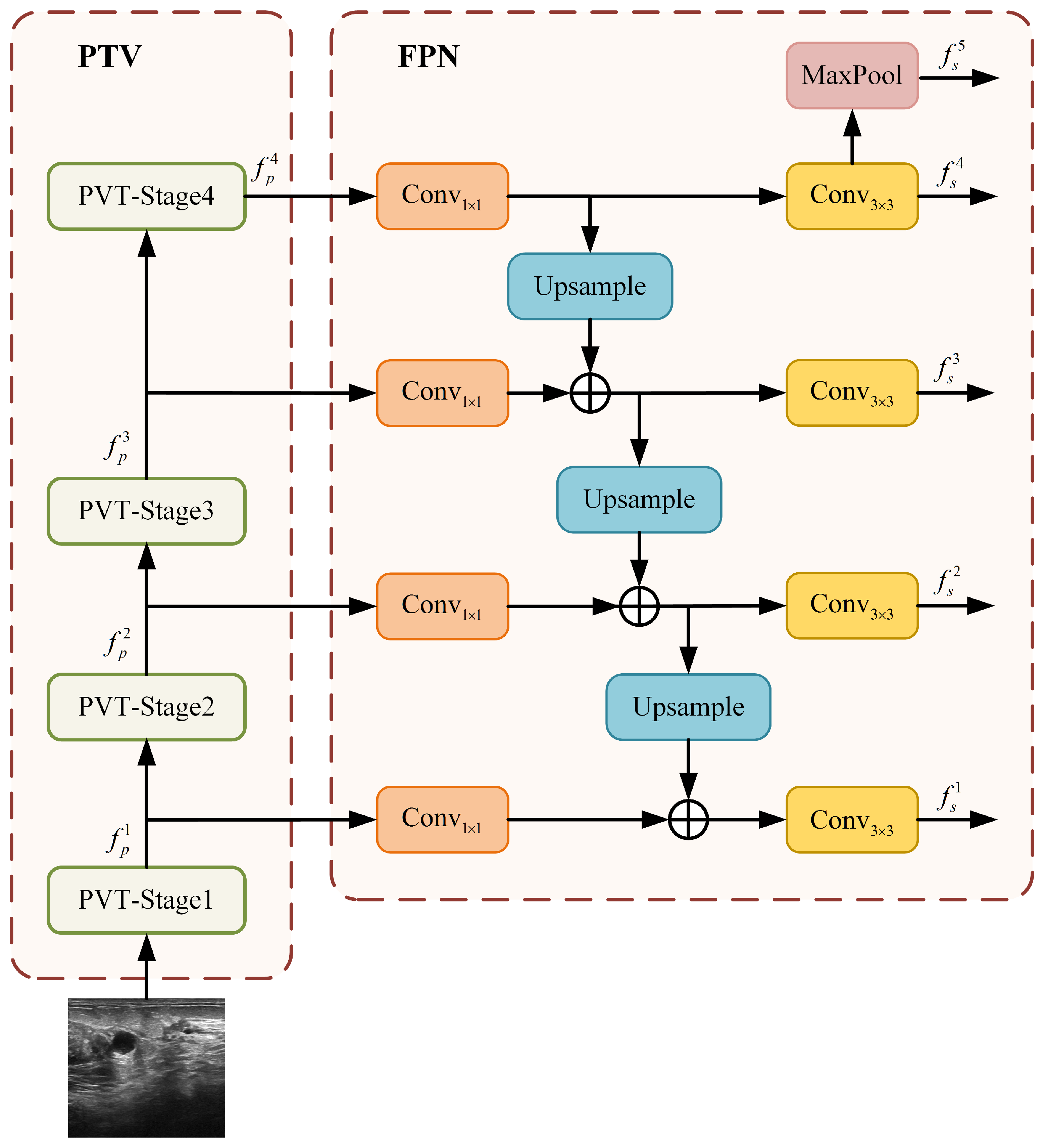

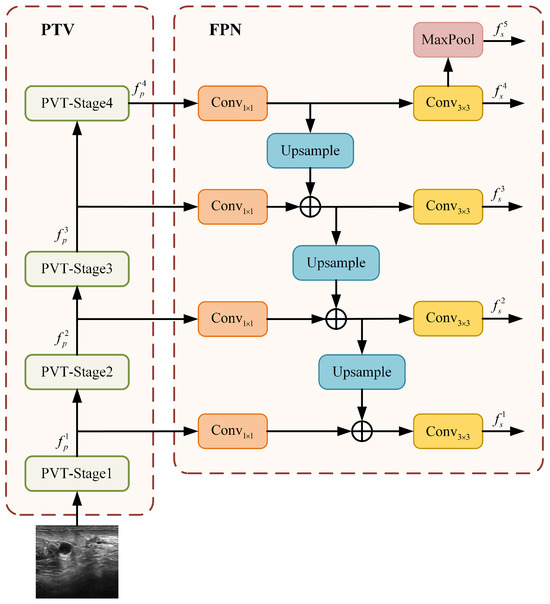

To complement the local feature extraction of ResNet50 and to imbue the network with robust global and long-range dependency modeling capabilities, we introduce the PVT branch. The overall structure of this branch, which combines a PVT backbone with a standard FPN [25], is depicted in Figure 5.

Figure 5. Architecture of the PVT+FPN branch.

Similar to the ResNet50 branch, the PVT backbone produces a four-scale hierarchy of feature maps. Let $\{F^{T}_{1}, F^{T}_{2}, F^{T}_{3}, F^{T}_{4}\}$ represent these feature maps, with strides of 4, 8, 16, and 32 relative to the input image $X$:

$$\{F^{T}_{1}, F^{T}_{2}, F^{T}_{3}, F^{T}_{4}\} = \mathrm{PVT}(X).$$

These feature maps are subsequently enhanced by the FPN to build a strong multi-scale representation. As illustrated in Figure 5, the FPN operates in a top-down manner. It starts by projecting the deepest, most semantically rich feature maps from the PVT stages onto a uniform channel dimension of 256 using lateral convolutions. Higher-level features are then progressively upsampled and fused with the corresponding lower-level features via element-wise addition. Each fused feature map is further refined with a convolution to reduce aliasing effects and to generate the final set of enhanced features:

$$\{P^{T}_{1}, P^{T}_{2}, P^{T}_{3}, P^{T}_{4}\} = \mathrm{FPN}(\{F^{T}_{1}, F^{T}_{2}, F^{T}_{3}, F^{T}_{4}\}).$$

This design is motivated by PVT’s inherent strength in building deep semantic representations. A simple FPN is sufficient to effectively assemble these features into a coherent pyramid, leveraging the global context at all scales.

Finally, a fifth feature level is obtained by applying a max pooling operation to the top-most feature map, $P^{T}_{4}$:

$$P^{T}_{5} = \mathrm{MaxPool}(P^{T}_{4}).$$

The resulting five-scale feature pyramid ($\{P^{T}_{1}, \dots, P^{T}_{5}\}$) from the PVT+FPN branch provides rich, globally aware feature maps for the subsequent fusion stage.
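The top-down pathway and the extra pooled level can be sketched on toy single-channel maps. The sketch is illustrative only and makes assumptions that the text does not fix: nearest-neighbour upsampling by a factor of 2, a 2x2 pooling window with stride 2 for the fifth level, and inputs that have already passed through the lateral projections. The smoothing convolution is omitted, and all function names are hypothetical.

```python
def upsample_nearest_2x(fmap):
    """Double the spatial size of a 2-D map by repeating each value."""
    out = []
    for row in fmap:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add_maps(a, b):
    """Element-wise sum of two equally sized 2-D maps."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def fpn_top_down(laterals):
    """Fuse maps ordered fine-to-coarse, each half the size of the previous one."""
    outputs = [None] * len(laterals)
    outputs[-1] = laterals[-1]  # The coarsest level is passed through unchanged.
    for level in range(len(laterals) - 2, -1, -1):
        upsampled = upsample_nearest_2x(outputs[level + 1])
        outputs[level] = add_maps(laterals[level], upsampled)
    return outputs


def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2 (window size is an assumption)."""
    h, w = len(fmap) // 2, len(fmap[0]) // 2
    return [
        [
            max(fmap[2 * i][2 * j], fmap[2 * i][2 * j + 1],
                fmap[2 * i + 1][2 * j], fmap[2 * i + 1][2 * j + 1])
            for j in range(w)
        ]
        for i in range(h)
    ]


fine = [[1.0] * 4 for _ in range(4)]        # stands in for a finer level
coarse = [[10.0, 20.0], [30.0, 40.0]]       # stands in for the coarsest level
fused = fpn_top_down([fine, coarse])
extra_level = max_pool_2x2(fused[-1])       # additional, coarser level
```

Semantic information flows from coarse to fine through the repeated upsample-and-add step, while the pooled level extends the pyramid by one coarser scale at negligible cost.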

2.1.3. Feature Fusion

To effectively integrate the complementary features extracted by the ResNet50 and PVT backbones, a feature fusion operation is applied to the enhanced feature pyramids from both branches. The feature maps from both branches are first concatenated along the channel dimension at each level $i$,

$$\tilde{P}_{i} = \mathrm{Concat}(P^{R}_{i}, P^{T}_{i}), \quad i = 1, \dots, 5,$$

where $P^{R}_{i}$ and $P^{T}_{i}$ are the level-$i$ outputs of the ResNet50+AFPN and PVT+FPN branches, respectively. This concatenation preserves the complete feature representations from both the CNN and transformer architectures, maintaining local detail information from ResNet50 and global contextual information from PVT.

Subsequently, the concatenated feature map is passed through a convolutional layer. This operation is critical for channel dimensionality reduction, projecting the concatenated features back to a standard channel width to meet the input requirements of the subsequent RPN and detection head, while simultaneously enabling the model to learn an optimal linear combination of the features from both branches, thereby effectively integrating the cross-branch information through a learned weighting mechanism.

We initially explored more complex fusion strategies, including various attention mechanisms, such as ECA, CPAM, and FFA, to potentially enhance cross-branch complementarity. However, experimental results indicate that these attention-based fusion strategies did not yield performance improvements and in some cases even led to slight degradation. We posit that the concatenation operation followed by a convolution provides a sufficiently expressive representation, while the added complexity of attention modules may increase the risk of overfitting on this limited-scale medical dataset. Consequently, the final model adopts the structurally simpler, more stable, and empirically superior concatenation-based fusion scheme.
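The concatenation-based fusion can be illustrated with a small sketch in plain Python. It assumes a 1x1 (pointwise) kernel, which is consistent with the "linear combination" description but is an assumption here because the kernel size is not stated; the weights below are arbitrary illustrative values rather than learned ones, and the helper names are hypothetical.

```python
def concat_channels(feat_a, feat_b):
    """Concatenate two maps stored as [channel][row][col] along the channel axis."""
    same_height = len(feat_a[0]) == len(feat_b[0])
    same_width = len(feat_a[0][0]) == len(feat_b[0][0])
    if not (same_height and same_width):
        raise ValueError("spatial sizes must match before concatenation")
    return feat_a + feat_b


def pointwise_conv(feat, weight, bias):
    """1x1 convolution: every output channel is a per-pixel linear
    combination of the input channels (weight has shape [out][in])."""
    h, w = len(feat[0]), len(feat[0][0])
    out = []
    for w_row, b in zip(weight, bias):
        out.append([
            [b + sum(wc * feat[c][i][j] for c, wc in enumerate(w_row))
             for j in range(w)]
            for i in range(h)
        ])
    return out


# Two channels per branch on a 1x2 grid (toy sizes; the model uses 256 per branch).
feat_cnn = [[[1.0, 2.0]], [[3.0, 4.0]]]
feat_transformer = [[[5.0, 6.0]], [[7.0, 8.0]]]
stacked = concat_channels(feat_cnn, feat_transformer)        # 4 channels

# Project 4 channels back to 2; these weights simply average matching channels.
weight = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
fused = pointwise_conv(stacked, weight, bias=[0.0, 0.0])
```

In training, the weight matrix would be learned, which lets the network decide per output channel how much to draw from each branch.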

2.2. Loss Function

The proposed D-faster R-CNN model is trained end-to-end using the classical two-stage joint training strategy. In the first stage, the RPN generates candidate regions that may contain objects, performing binary classification to distinguish the foreground (object) from the background. In the second stage, these candidate regions are refined through RoI Align, object category classification, and bounding-box regression.

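The RPN loss consists of a classification loss and a bounding-box regression loss:

$$L_{\mathrm{RPN}} = \frac{1}{N_{\mathrm{cls}}} \sum_{i} L_{\mathrm{cls}}(p_i, p_i^{*}) + \lambda \frac{1}{N_{\mathrm{reg}}} \sum_{i} p_i^{*} L_{\mathrm{reg}}(t_i, t_i^{*}),$$

where $p_i$ is the predicted probability of the $i$-th anchor being the foreground, $p_i^{*}$ is the corresponding ground-truth label (1 for foreground, 0 for background), and $t_i$, $t_i^{*}$ denote the parameterized coordinates of the predicted box and the ground-truth box, respectively. Here, $L_{\mathrm{cls}}$ is the binary cross-entropy loss, $L_{\mathrm{reg}}$ is the smooth L1 loss, $N_{\mathrm{cls}}$ is the mini-batch size (set to 256) for normalizing the classification loss, $N_{\mathrm{reg}}$ is the number of anchor locations (approximately equal to the image size) for normalizing the regression loss, and $\lambda$ is a balancing weight set to 3.

The detection loss for the second stage is defined as

$$L_{\mathrm{det}} = \frac{1}{N} \sum_{i} L_{\mathrm{cls}}(c_i, c_i^{*}) + \lambda \frac{1}{N} \sum_{i} \mathbb{1}[c_i^{*} \neq \text{background}] \, L_{\mathrm{reg}}(t_i, t_i^{*}),$$

where $N$ is the number of sampled RoI proposals (set to 512) used to normalize both loss terms. $c_i$ and $c_i^{*}$ are the predicted and ground-truth class labels for each proposal, respectively, and $\mathbb{1}[c_i^{*} \neq \text{background}]$ is an indicator function that equals 1 if the proposal is not the background (i.e., belongs to a foreground class) and 0 otherwise. The regression loss is only activated for foreground proposals. The balancing weight $\lambda$ is set to 1 in this stage.

The total loss is the sum of both stages:

$$L = L_{\mathrm{RPN}} + L_{\mathrm{det}}.$$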
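The first-stage loss can be written out numerically as a minimal sketch. It follows the standard Faster R-CNN formulation [15], in which the regression term is counted only for foreground anchors; the smooth L1 transition point `beta = 1.0` and the function names are assumptions made for illustration, and the normalizers are passed in explicitly rather than derived from an image.

```python
import math


def binary_cross_entropy(p, p_star, eps=1e-12):
    """Binary cross-entropy for one anchor (p_star is 0 or 1)."""
    p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
    return -(p_star * math.log(p) + (1 - p_star) * math.log(1.0 - p))


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 loss summed over box coordinates (beta is an assumption)."""
    total = 0.0
    for a, b in zip(pred, target):
        d = abs(a - b)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


def rpn_loss(probs, labels, pred_boxes, gt_boxes, n_cls, n_reg, lam):
    """Classification term plus lam-weighted regression term.

    The regression loss is multiplied by the label, so background anchors
    (label 0) contribute nothing to it.
    """
    cls_term = sum(binary_cross_entropy(p, y) for p, y in zip(probs, labels)) / n_cls
    reg_term = sum(
        y * smooth_l1(t, t_star)
        for y, t, t_star in zip(labels, pred_boxes, gt_boxes)
    ) / n_reg
    return cls_term + lam * reg_term


# Toy example with two anchors: one foreground, one background.
loss = rpn_loss(
    probs=[0.5, 0.5],
    labels=[1, 0],
    pred_boxes=[[2.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]],
    gt_boxes=[[0.0, 0.0, 0.0, 0.0], [5.0, 5.0, 5.0, 5.0]],
    n_cls=2,
    n_reg=2,
    lam=3.0,
)
```

The second-stage loss has the same structure, with a multi-class cross-entropy in place of the binary one and the foreground indicator in place of the anchor label.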

3. Experimental Evaluation

3.1. Dataset and Experimental Setting

The proposed D-faster R-CNN is validated on the breast ultrasound image (BUSI) dataset provided by Al-Dhabyani et al. [28], which contains 780 images from 600 female patients, including 437 benign images, 210 malignant images, and 133 normal images. As the dataset does not include patient identity or linkage metadata, a patient-wise data split is not feasible. Therefore, we adopt the common practice of an image-level split, randomly allocating 85% of the abnormal (benign and malignant) samples for training and the remaining 15% for testing.

This approach is justified by the nature of breast ultrasound imaging. Images from the same patient are typically acquired from different views and breast quadrants, capturing distinct lesions or anatomical contexts. This results in substantial visual and feature variability among images of the same individual. Furthermore, based on consultations with clinical radiologists, determining patient identity solely from breast ultrasound image content is generally challenging, as visual characteristics lack the consistency found in volumetric modalities like CT or MRI. Collectively, these factors suggest a low risk of data leakage and performance inflation in this context.
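For concreteness, the image-level split can be sketched as follows. The benign and malignant counts are taken from the dataset description; the seed, the round-to-nearest rule, and the helper name `split_indices` are assumptions, so the exact image counts used in the experiments may differ slightly.

```python
import random


def split_indices(n_samples, train_fraction, seed):
    """Shuffle sample indices reproducibly and cut them into train and test."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(n_samples * train_fraction)
    return indices[:n_train], indices[n_train:]


n_abnormal = 437 + 210  # benign + malignant images
train_idx, test_idx = split_indices(n_abnormal, train_fraction=0.85, seed=0)
```

Under these assumptions, the 647 abnormal images are divided into 550 training and 97 test images, with no index appearing in both sets.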

The dataset provides pixel-level segmentation masks for each image. We generated ground-truth bounding boxes by extracting the minimal external rectangle that fully encloses the lesion area in each mask. This process is straightforward and reliable since all lesions in the dataset are spatially isolated and confined to a single contiguous region per image, requiring no additional rules for handling overlapping lesions or multiple disconnected regions.
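Deriving a box from a mask amounts to finding the extreme rows and columns that contain lesion pixels. The sketch below assumes the mask is a 2-D list of 0/1 values and returns inclusive pixel indices in (x_min, y_min, x_max, y_max) order; both the representation and the helper name `mask_to_bbox` are illustrative choices.

```python
def mask_to_bbox(mask):
    """Return the tightest (x_min, y_min, x_max, y_max) box around nonzero
    pixels of a 2-D mask, or None if the mask is empty."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return (cols[0], rows[0], cols[-1], rows[-1])


mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
bbox = mask_to_bbox(mask)
```

Because each mask contains a single contiguous lesion, one pass over the rows and columns is sufficient and no connected-component analysis is needed.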

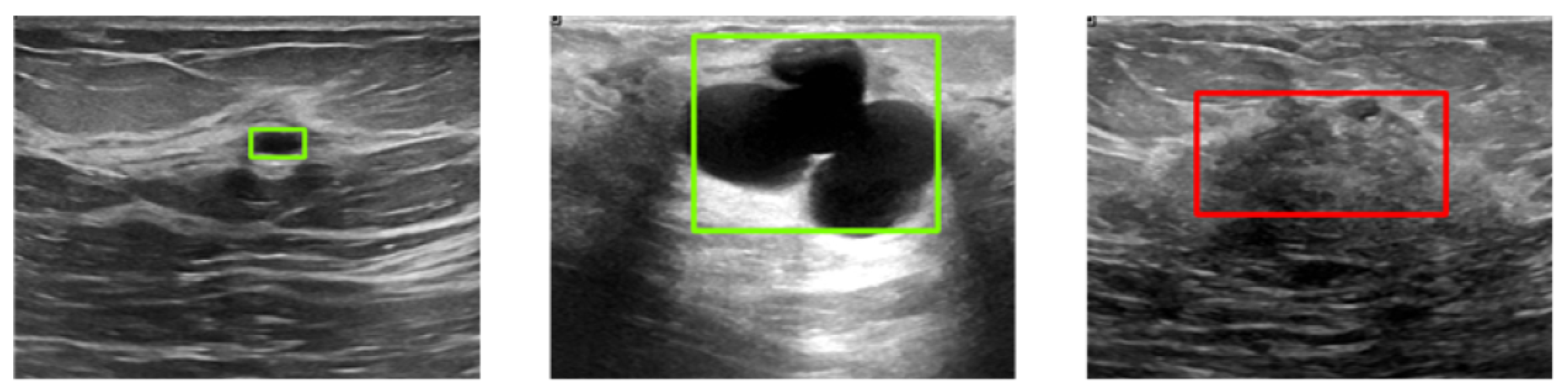

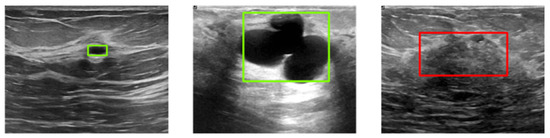

Figure 6 illustrates three example images with ground-truth annotations. Malignant tumors generally exhibit more irregular shapes and are more likely to be confused with normal tissue compared to benign tumors.

Figure 6. Sample images from the BUSI dataset with ground-truth bounding boxes. Benign and malignant tumors are annotated with green and red boxes, respectively.

All experiments were conducted on a system equipped with an RTX 4090D GPU using PyTorch 1.11.0 and Python 3.8. The model was trained for 30 epochs with a batch size of 4, using the Adam optimizer [29] with an initial learning rate of 0.001, which was reduced by 10% every 5 epochs. Non-maximum suppression (NMS) thresholds were set to 0.8 for the region proposal network (RPN) and 0.5 for the second-stage detection.

To improve robustness and generalization, we applied standard data augmentation techniques during training, including random horizontal and vertical flipping, random cropping, Gaussian noise injection, and minor affine transformations. Input images were normalized to zero mean and unit variance using statistics computed from the training set. Class imbalance between foreground and background proposals was mitigated by the RPN’s built-in mini-batch sampling strategy. Both ResNet50 and PVT backbones were pre-trained on ImageNet and fine-tuned end-to-end without freezing any layers.
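The step-wise learning-rate decay from the training setup can be sketched as a small function. The default `gamma = 0.9` takes the phrase "reduced by 10%" literally; if a tenfold reduction was intended instead, `gamma = 0.1` would apply. Whether the first decay happens exactly at epoch 5 (counting from 0) is also an assumption.

```python
def step_lr(initial_lr, epoch, step_size=5, gamma=0.9):
    """Learning rate at a given 0-indexed epoch under step decay."""
    return initial_lr * gamma ** (epoch // step_size)


# Learning rate at the start of each 5-epoch block over 30 epochs.
schedule = [step_lr(0.001, epoch) for epoch in range(0, 30, 5)]
```

Under the literal reading, the rate falls from 0.001 to roughly 0.00059 by the final block, so the choice between the two readings changes the late-training step size by several orders of magnitude.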

3.2. Experimental Results

This study aims to advance the Faster R-CNN framework; therefore, our experimental design emphasizes comparisons with its representative variants to fairly evaluate architectural improvements. All baseline models were independently implemented and trained from scratch under the same experimental setup and hyper-parameters to ensure a fair and reproducible comparison.

Our evaluation encompasses standard Faster R-CNN baselines, including the classic version [15] with a ResNet50 [18] backbone (denoted as ResNet50) and its FPN variant (ResNet50+FPN) [25]. To analyze the impact of different backbone and feature pyramid combinations, we also evaluate variants such as ResNet50 with the AFPN (ResNet50+AFPN), PVT with FPN (PVT+FPN) [21], and PVT with AFPN (PVT+AFPN). Furthermore, to position our approach within the broader landscape of contemporary detection methods, we include the Detection Transformer (DETR) [30] as a representative of modern end-to-end transformer-based detectors.

In addition, we conduct ablation experiments on component combinations within the proposed dual-branch structure. The following sections present the results and analyses.

3.2.1. Comparison with the Baseline Models

For quantitative evaluation, we adopt the COCO evaluation toolkit, a widely recognized benchmark for object detection. We report the standard COCO metrics: AP, AP50, and AP75. Here, AP is the primary metric, computed as the mean average precision (mAP) averaged over 10 Intersection-over-Union (IoU) thresholds from 0.5 to 0.95 in steps of 0.05. AP50 and AP75 are the mAP at the specific IoU thresholds of 0.5 and 0.75, respectively. These metrics collectively reflect the model’s performance under varying localization strictness, with AP providing the most comprehensive assessment.

To complement these metrics with clinical relevance, we also report image-based sensitivity (abbreviated as Sens.), defined as the proportion of abnormal images in which at least one lesion is correctly localized (IoU ≥ 0.5), regardless of classification outcome. This measures the model’s ability to identify tumor-present cases at the image level.
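The IoU criterion underlying both the COCO metrics and the image-based sensitivity can be made concrete with a short sketch. Boxes are assumed to be (x1, y1, x2, y2) tuples in continuous coordinates, and the function names are illustrative; the full AP computation is left to the COCO toolkit.

```python
def iou(box_a, box_b):
    """Intersection-over-Union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten thresholds over which AP is averaged: 0.50, 0.55, ..., 0.95.
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]


def image_sensitivity(images, iou_threshold=0.5):
    """Fraction of abnormal images with at least one lesion localized.

    images: list of (gt_boxes, pred_boxes) pairs, one pair per abnormal image.
    Predicted class labels are ignored, matching the definition above.
    """
    hits = 0
    for gt_boxes, pred_boxes in images:
        if any(iou(g, p) >= iou_threshold for g in gt_boxes for p in pred_boxes):
            hits += 1
    return hits / len(images)


images = [
    ([(0, 0, 10, 10)], [(1, 1, 11, 11)]),    # overlapping prediction: hit
    ([(0, 0, 10, 10)], [(20, 20, 30, 30)]),  # disjoint prediction: miss
    ([(0, 0, 10, 10)], []),                  # no prediction: miss
]
sensitivity = image_sensitivity(images)
```

In this toy example only the first image counts as detected, so the sensitivity is one third.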

Table 1 summarizes the performance comparison. As described above, the baseline models include standard and enhanced variants of Faster R-CNN as well as the transformer-based DETR. The results show that our proposed D-faster R-CNN achieves the best performance across all metrics (38.4% AP, 66.1% AP50, 40.0% AP75, and 77.8% image-based sensitivity), consistently surpassing both CNN-based and transformer-based baselines. The notably higher sensitivity confirms the model’s strength in identifying images with tumors, while the superior AP values reflect accurate localization and classification. Together, these results validate the effectiveness of the dual-backbone design and feature fusion strategy.

Table 1. Average precision metrics of various models.

To evaluate the source of performance gains, we analyze the model complexity. The standard ResNet50 baseline contains 60.8M parameters and requires 40.2G FLOPs, while our dual-branch model utilizes 108.7M parameters and 87.7G FLOPs. Although our model exhibits higher computational cost, the performance improvement cannot be attributed solely to the increase in scale. Notably, our model achieves a gain of 3.9 AP points over the ResNet50+AFPN model (34.5% AP), which already represents a strong and enhanced baseline. This discernible improvement, attained with only an approximately 1.5× increase in model complexity, underscores that the performance gain is primarily attributable to the effective architectural design of our dual-branch fusion mechanism rather than mere model scaling.
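The reported figures can be turned into explicit ratios with a few lines of arithmetic. The sketch uses only the numbers quoted above and compares against the plain ResNet50 baseline, because the parameter count and FLOPs of ResNet50+AFPN are not given in this section; the variable names are illustrative.

```python
baseline = {"params_m": 60.8, "gflops": 40.2}   # ResNet50 baseline
proposed = {"params_m": 108.7, "gflops": 87.7}  # dual-branch model

param_ratio = proposed["params_m"] / baseline["params_m"]  # roughly 1.79
flop_ratio = proposed["gflops"] / baseline["gflops"]       # roughly 2.18

# AP gain over ResNet50+AFPN, in percentage points.
ap_gain = 38.4 - 34.5
```

Measured against the ResNet50 baseline, the dual-branch model therefore has about 1.8 times the parameters and about 2.2 times the FLOPs.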

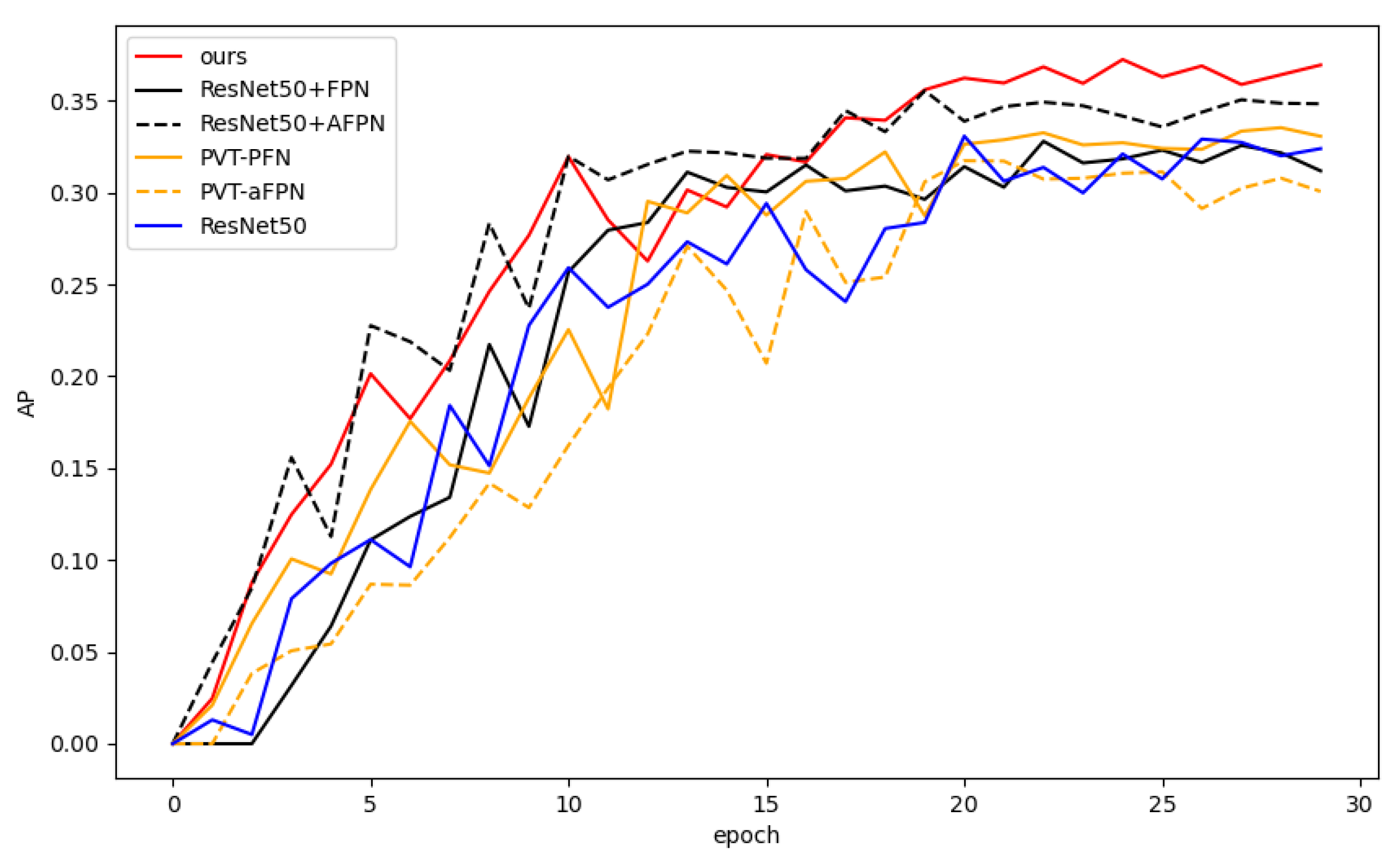

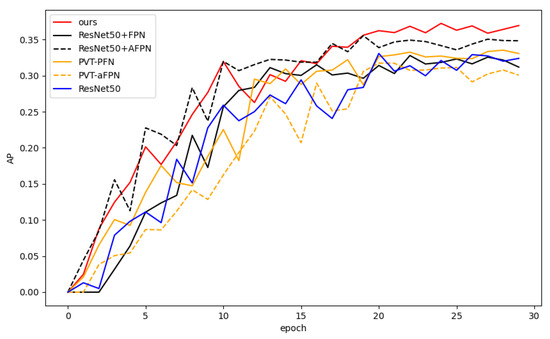

Figure 7 shows the AP curve (on the test set) for each model with respect to the training epoch. Our model (red curve) performs consistently during training and significantly outperforms the comparison models after about 20 epochs.

Figure 7. Average precision (AP) curves of all models with respect to training epoch.

3.2.2. Ablation Analysis

Since the performance of PVT is closely related to the network depth, an ablation study was conducted on the scale of the PVT branch. Four scales of PVT (Tiny, Small, Medium, and Large) were investigated, corresponding to different numbers of transformer encoder layers in each stage (as detailed in [21]). As shown in Table 2, the detection performance improves significantly as the network scale increases, with the Large-scale PVT (3, 8, 27, and 3 encoder layers in the four stages) achieving the best overall accuracy.

Table 2. Ablation study on the PVT for four scales.

In addition, the effect on performance of using different feature pyramid networks in each branch is also investigated. As shown in Table 3, the combination of PVT+FPN & ResNet50+AFPN has the best performance, justifying the proposed dual-branch structure.

Table 3. Ablation study on different combinations of feature pyramid networks in each branch.

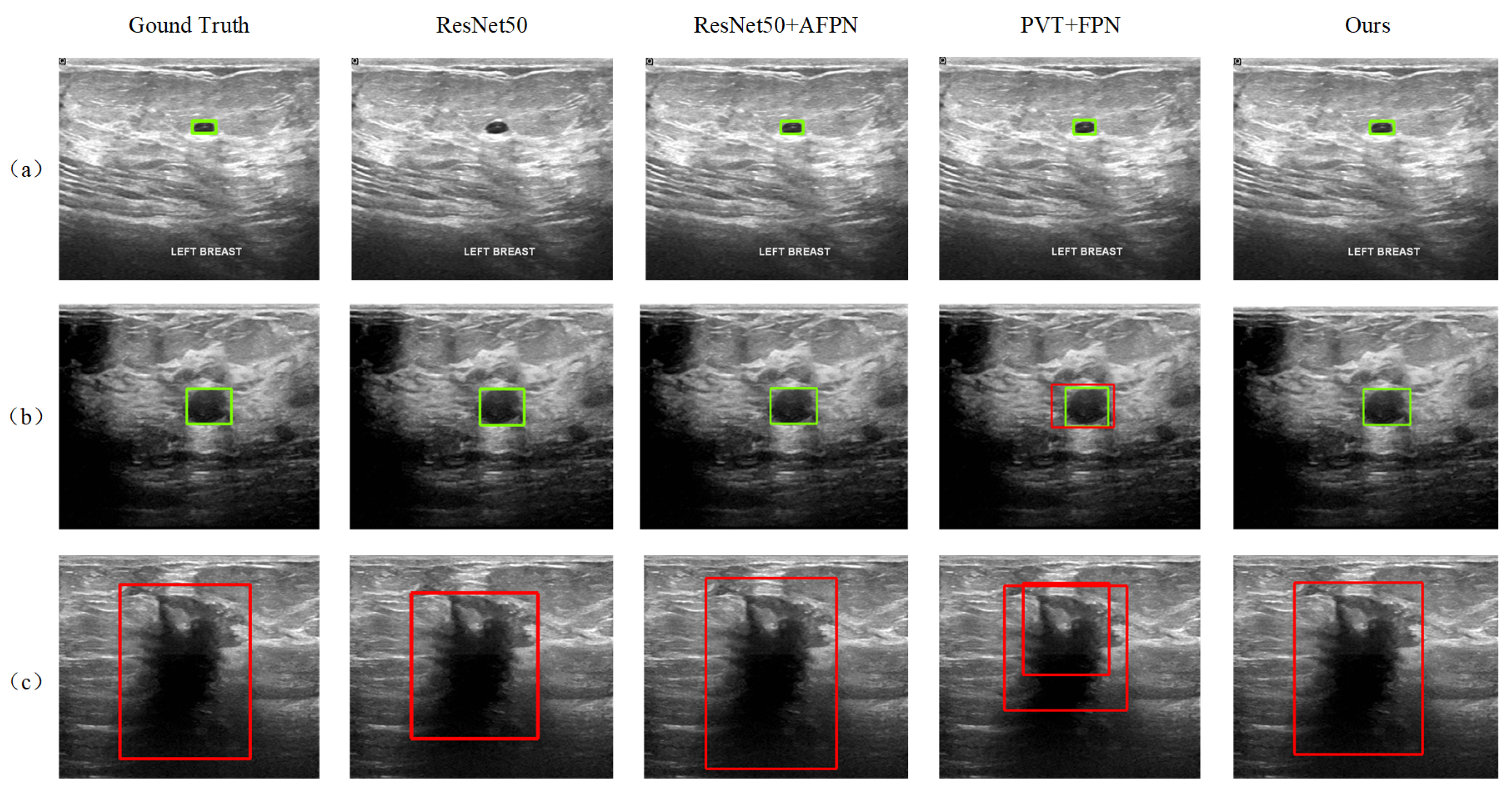

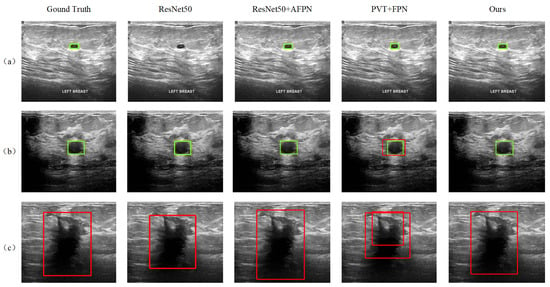

3.2.3. Visualization of Detection Results

In addition to the quantitative AP-based evaluation, the detection results of the proposed method are visualized and qualitatively analyzed. The visualizations indicate that our model is better at detecting tumors of different scales and types. Figure 8 shows the results for some sample images, where samples (a) and (b) contain benign tumors of markedly different scales, marked by green boxes, while sample (c) contains a malignant tumor, marked by a red box. The detection results of the three reference models that performed better in Table 1 (ResNet50, ResNet50+AFPN, and PVT+FPN) are also given in the figure. The ResNet50 model fails to detect the small benign tumor in sample (a). In sample (b), although all models successfully detected the benign tumor, the PVT+FPN model additionally detected a malignant tumor in almost the same (slightly larger) location, resulting in a contradictory result. The introduction of AFPN into ResNet50 effectively improves the detection performance, and the ResNet50+AFPN model achieves correct results in all three samples (see the third column). In contrast, the DETR model performs less effectively in detecting large malignant tumors, often producing overly broad bounding regions that reduce localization precision when compared with our method. Compared to ResNet50+AFPN, our model demonstrates more accurate tumor localization.

Figure 8. Tumor detection results for three samples in the test set: sample (a) contains a small benign tumor, sample (b) contains a medium-sized benign tumor, and sample (c) contains a malignant tumor.

4. Conclusions

In this paper, we propose an improved Faster R-CNN model for ultrasound image detection, called D-faster R-CNN. The proposed model adds an additional feature extraction branch to the Faster R-CNN framework to form a two-branch feature extraction architecture of ResNet50 and PVT. Feature pyramid networks are used to enhance the extracted features at different scales. This two-branch architecture can fully exploit the complementary nature of the two backbone networks, PVT and ResNet50, in feature extraction, i.e., PVT is good at capturing global features, while ResNet50 is good at extracting local detail features. The introduction of feature pyramid networks effectively improves the model’s ability to detect targets at different scales. The comprehensive experiments on the publicly available dataset demonstrate the effectiveness and superiority of the proposed approach.

Despite the promising results, this study has certain limitations that point to valuable future research directions. The evaluation, while comprehensive, could be further strengthened by incorporating additional statistical measures, such as reporting standard deviations across multiple runs, to enhance robustness. Furthermore, a more in-depth clinical performance analysis using metrics like the FROC curve would be beneficial for assessing the model’s behavior in low false-positive regimes, which is critical for clinical translation.

Moving forward, we plan to investigate semi-supervised, weakly supervised, and few-shot learning extensions, and to integrate emerging vision-language models to better exploit scarce or weak labels and complementary modalities [31].

Author Contributions

Conceptualization, B.Y.; methodology, B.Y. and C.L. (Chenxu Liu); software, C.L. (Chenxu Liu); validation, C.L. (Chenxu Liu); resources, L.Z.; data curation, C.L. (Chenxu Liu) and L.Z.; writing—original draft preparation, C.L. (Chenxu Liu); writing—review and editing, B.Y., C.L. (Chao Liu) and W.Z.; supervision, B.Y., C.L. (Chao Liu) and W.Z.; funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the Pole Universitaire d’Innovation de Montpellier, University of Montpellier under the “Companies and Campus” project INTELLISHARE, and by Sichuan Science and Technology Program (2023YFH0004, 2023YFSY0026).

Data Availability Statement

The dataset analyzed in this study is publicly available. The Breast Ultrasound Images (BUSI) dataset was provided by Al-Dhabyani et al. and can be accessed through https://scholar.cu.edu.eg/?q=afahmy/pages/dataset (accessed on 1 October 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Giaquinto, A.N.; Sung, H.; Miller, K.D.; Kramer, J.L.; Newman, L.A.; Minihan, A.; Jemal, A.; Siegel, R.L. Breast cancer statistics, 2022. CA A Cancer J. Clin. 2022, 72, 524–541.

2. Arnold, M.; Morgan, E.; Rumgay, H.; Mafra, A.; Singh, D.; Laversanne, M.; Vignat, J.; Gralow, J.R.; Cardoso, F.; Siesling, S.; et al. Current and future burden of breast cancer: Global statistics for 2020 and 2040. Breast 2022, 66, 15–23.

3. Milosevic, M.; Jankovic, D.; Milenkovic, A.; Stojanov, D. Early diagnosis and detection of breast cancer. Technol. Health Care 2018, 26, 729–759.

4. Shung, K.K. Diagnostic ultrasound: Past, present, and future. J. Med. Biol. Eng. 2011, 31, 371–374.

5. Roll, S.C.; Selhorst, L.; Evans, K.D. Contribution of positioning to work-related musculoskeletal discomfort in diagnostic medical sonographers. Work 2014, 47, 253–260.

6. Xu, X.; Wang, C.; Guo, J.; Yang, L.; Bai, H.; Li, W.; Yi, Z. DeepLN: A framework for automatic lung nodule detection using multi-resolution CT screening images. Knowl.-Based Syst. 2020, 189, 105128.

7. Esteva, A.; Chou, K.; Yeung, S.; Naik, N.; Madani, A.; Mottaghi, A.; Liu, Y.; Topol, E.; Dean, J.; Socher, R. Deep learning-enabled medical computer vision. NPJ Digit. Med. 2021, 4, 5.

8. Lin, X.; Zhou, X.; Tong, T.; Nie, X.; Wang, L.; Zheng, H.; Li, J.; Xue, E.; Chen, S.; Zheng, M.; et al. A super-resolution guided network for improving automated thyroid nodule segmentation. Comput. Methods Programs Biomed. 2022, 227, 107186.

9. Jiao, L.; Zhang, F.; Liu, F.; Yang, S.; Li, L.; Feng, Z.; Qu, R. A survey of deep learning-based object detection. IEEE Access 2019, 7, 128837–128868.

10. Xiao, Y.; Tian, Z.; Yu, J.; Zhang, Y.; Liu, S.; Du, S.; Lan, X. A review of object detection based on deep learning. Multimed. Tools Appl. 2020, 79, 23729–23791.

11. Redmon, J. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

12. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Cham, Switzerland, 2016; pp. 21–37.

13. Xu, J.; Ren, H.; Cai, S.; Zhang, X. An improved faster R-CNN algorithm for assisted detection of lung nodules. Comput. Biol. Med. 2023, 153, 106470.

14. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

15. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

16. Sinha, D.; El-Sharkawy, M. Thin MobileNet: An enhanced MobileNet architecture. In Proceedings of the 2019 IEEE 10th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 10–12 October 2019; pp. 0280–0285.

17. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

18. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

19. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

20. Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

21. Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578.

22. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

23. Neubeck, A.; Van Gool, L. Efficient non-maximum suppression. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 3, pp. 850–855.

24. Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic feature pyramid network for object detection. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Oahu, HI, USA, 1–4 October 2023; pp. 2184–2189.

25. Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

26. Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

27. Zhang, R. Making convolutional networks shift-invariant again. In International Conference on Machine Learning; MLR Press: Cambridge, MA, USA, 2019; pp. 7324–7334.

28. Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

29. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

30. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2020; pp. 213–229.

31. Chen, J.; Duan, H.; Zhang, X.; Gao, B.; Grau, V.; Han, J. From Gaze to Insight: Bridging Human Visual Attention and Vision-Language Model Explanation for Weakly-Supervised Medical Image Segmentation. IEEE Trans. Med. Imaging, 2025; Early Access.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).