SMAD: Semi-Supervised Android Malware Detection via Consistency on Fine-Grained Spatial Representations

Abstract

1. Introduction

- 1.

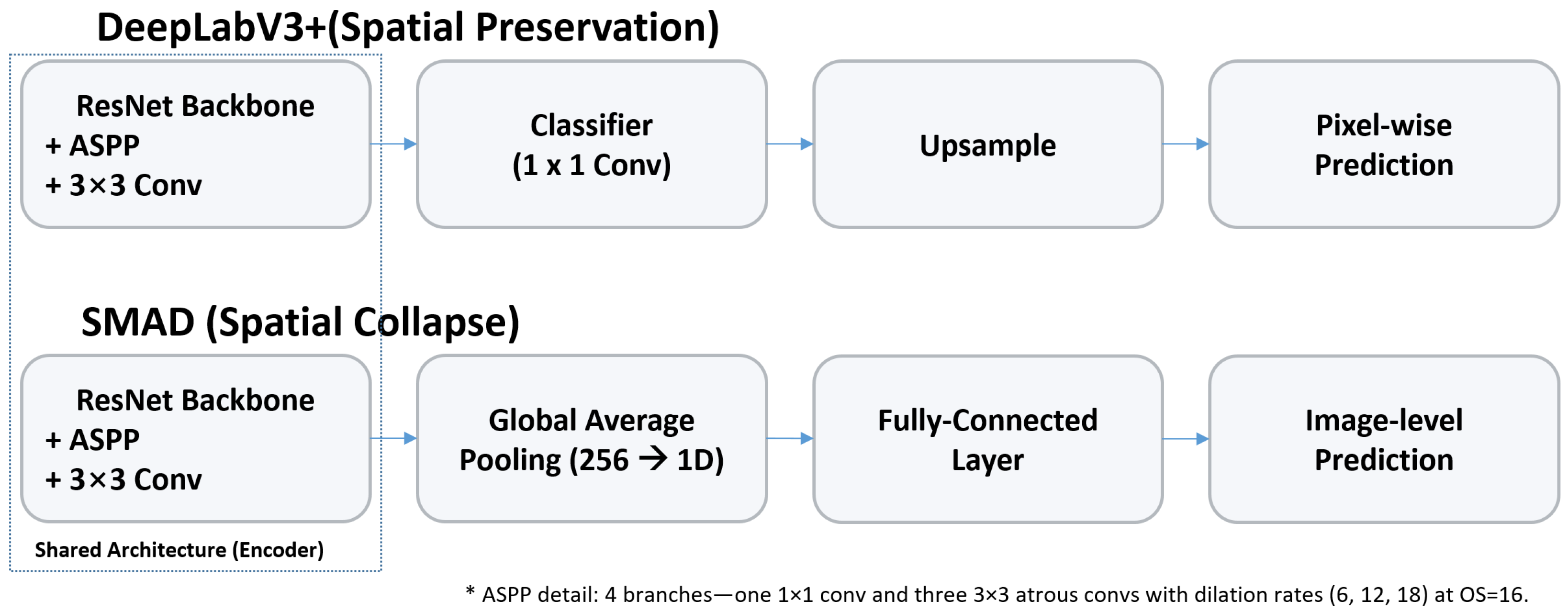

- A semi-supervised detector with segmentation-derived pixel-level features. We introduce SMAD, which couples dual-branch consistency with a segmentation-oriented backbone that extracts dense, pixel-level, multi-scale representations from APK imagery via an Atrous Spatial Pyramid Pooling (ASPP) module. These features combined with agreement between parallel branches provide higher-SNR unsupervised targets—improving early calibration, training stability, and label efficiency under label scarcity.

- 2.

- Improved robustness to unknown behaviors. By enforcing agreement between two branches processing the same APK image, SMAD exhibits enhanced generalization to previously unseen malware families without additional expert labels.

- 3.

- Controlled and balanced evaluation. We conduct extensive experiments and report accuracy, macro-precision, macro-recall, and macro-F1 with mean ± std over three runs; compare against FixMatch under the same schedule; and perform a backbone ablation (dense encoder vs. WideResNet) to isolate encoder effects.
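The ASPP module named in the first contribution can be illustrated with a toy, dependency-free sketch. It assumes a single-channel 2D input stored as nested lists, one shared 3×3 kernel for every rate, and rates (1, 2, 4); these choices and the names `atrous_conv2d` and `aspp` are illustrative assumptions, not SMAD's configuration. In DeepLab-style ASPP each branch has its own learned weights (usually alongside a 1×1 convolution branch), and the concatenated maps are fused by a 1×1 convolution; those parts are omitted here.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def atrous_conv2d(x: Matrix, kernel: Matrix, rate: int) -> Matrix:
    """Atrous (dilated) 2D convolution, stride 1, zero 'same' padding.

    Kernel taps are spaced `rate` pixels apart, so a 3x3 kernel at rate r
    covers a (2r + 1) x (2r + 1) window with the same 9 weights. As in most
    deep-learning libraries, this is a cross-correlation (no kernel flip).
    """
    h, w = len(x), len(x[0])
    k = len(kernel)
    half = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r = i + (u - half) * rate
                    c = j + (v - half) * rate
                    if 0 <= r < h and 0 <= c < w:  # outside pixels count as 0
                        acc += kernel[u][v] * x[r][c]
            out[i][j] = acc
    return out


def aspp(x: Matrix, kernel: Matrix, rates: Sequence[int] = (1, 2, 4)) -> List[Matrix]:
    """Toy ASPP: parallel atrous branches at several rates plus an
    image-level pooling branch, stacked as channels of equal H x W size."""
    branches = [atrous_conv2d(x, kernel, r) for r in rates]
    h, w = len(x), len(x[0])
    # Image-level branch: global average, broadcast back to every pixel.
    mean = sum(sum(row) for row in x) / (h * w)
    branches.append([[mean] * w for _ in range(h)])
    return branches
```

Every returned map in this toy keeps the input's H × W size, so each pixel receives a feature vector that mixes local (rate 1) and wider (rates 2 and 4) context, which is the dense, per-pixel kind of representation the contribution attributes to a segmentation-oriented encoder.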
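The second contribution relies on agreement between two branches that see the same APK image. The exact consistency objective is not given in this section, so the sketch below assumes one common form: confidence-masked cross pseudo-labeling, in which each branch's confident hard prediction (top-class probability of at least `tau`, assumed here to be 0.95) serves as the cross-entropy target for the other branch. The function names and threshold are hypothetical; in a real training loop the teacher-side probabilities would also be detached (stop-gradient), which plain Python does not need to model.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # shift by the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_branch_consistency(
    logits_a: Sequence[Sequence[float]],
    logits_b: Sequence[Sequence[float]],
    tau: float = 0.95,
) -> float:
    """Hypothetical dual-branch agreement loss on an unlabeled batch.

    For each sample, a branch whose top-class probability is >= tau acts as
    teacher: its argmax becomes a hard pseudo-label, and the other branch pays
    cross-entropy against it. This is applied in both directions, and the sum
    is divided by 2 * batch size, so masked (unconfident) cases add 0.
    """
    if len(logits_a) != len(logits_b):
        raise ValueError("both branches must score the same batch")
    if not logits_a:
        return 0.0
    total = 0.0
    for za, zb in zip(logits_a, logits_b):
        pa, pb = softmax(za), softmax(zb)
        for teacher, student in ((pa, pb), (pb, pa)):
            conf = max(teacher)
            if conf >= tau:
                pseudo = teacher.index(conf)
                # Clamp to avoid log(0) when the student is extremely wrong.
                total -= math.log(max(student[pseudo], 1e-12))
    return total / (2 * len(logits_a))
```

Dividing by the full batch size rather than by the number of confident samples mirrors FixMatch's masked unsupervised loss, so the term grows as more predictions pass the threshold; agreeing confident branches yield a near-zero loss, while confident disagreement is penalized heavily.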

2. Related Work

2.1. Motivation and Theoretical Foundations

2.2. SSL for Malware and Encrypted-Traffic Detection

2.3. Advanced SSL Architectures and Emerging Directions

3. Semi-Supervised Android Malware Detection

3.1. Architecture

3.2. Training Objectives

4. Experiments

4.1. Setup

4.2. Compared Schemes

5. Results

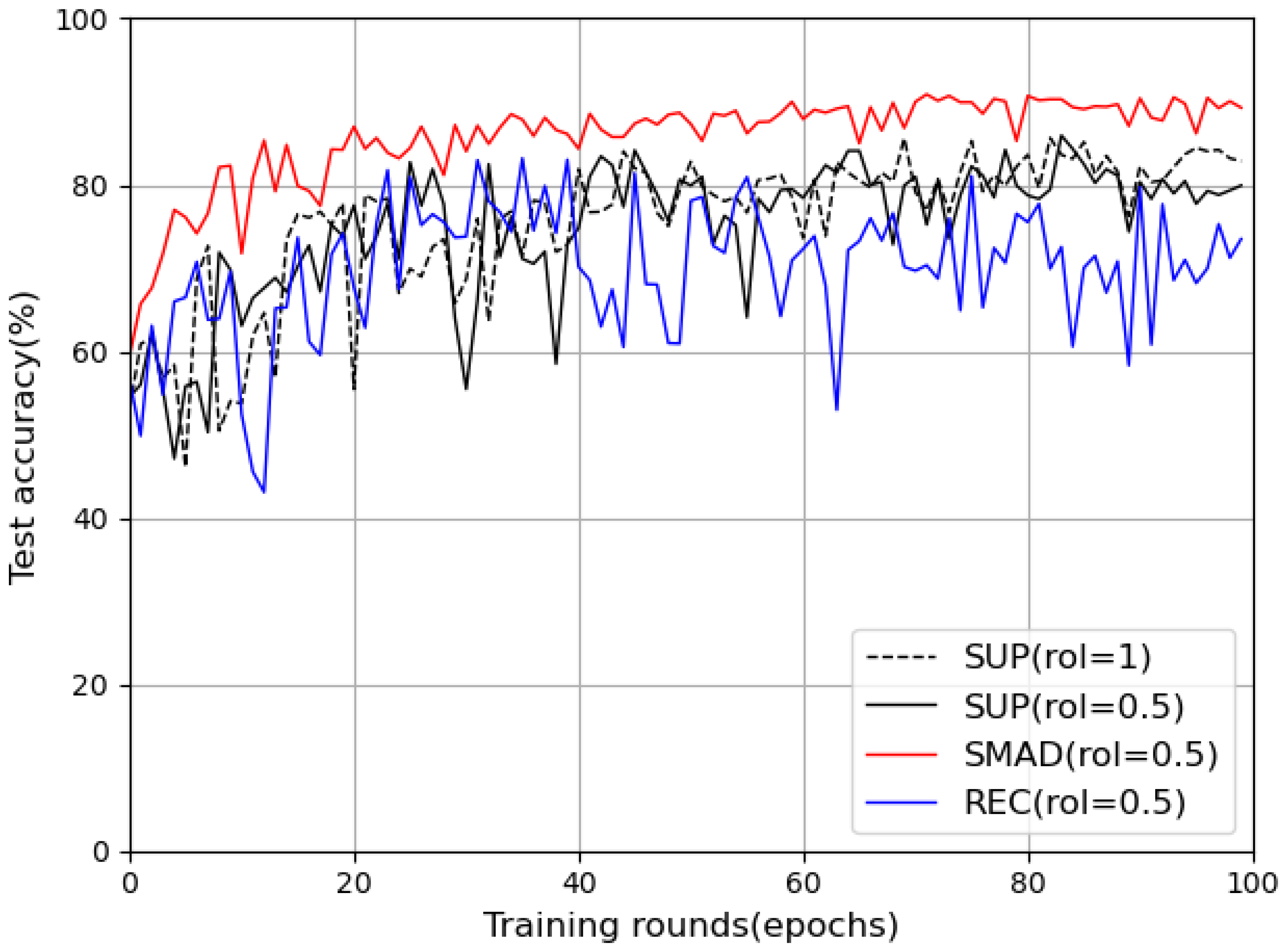

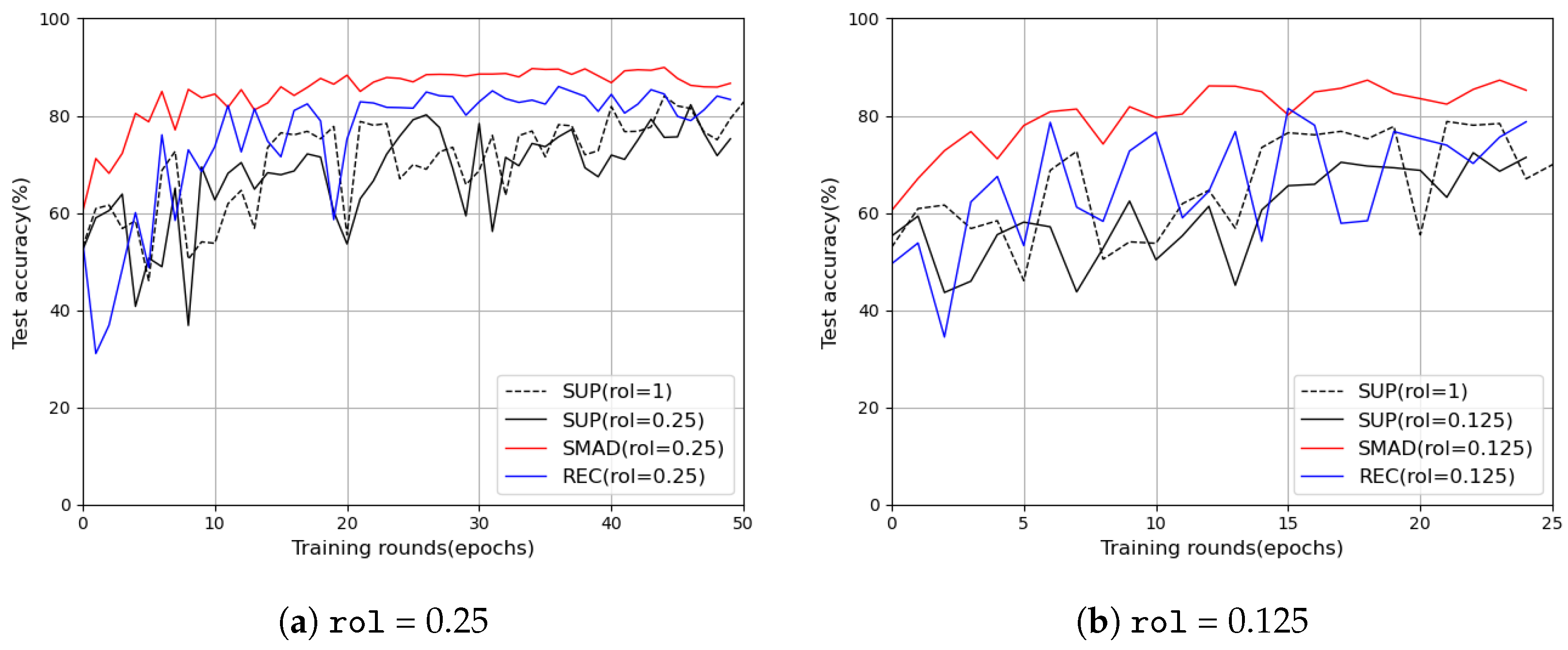

5.1. Overall Performance Across Label Ratios

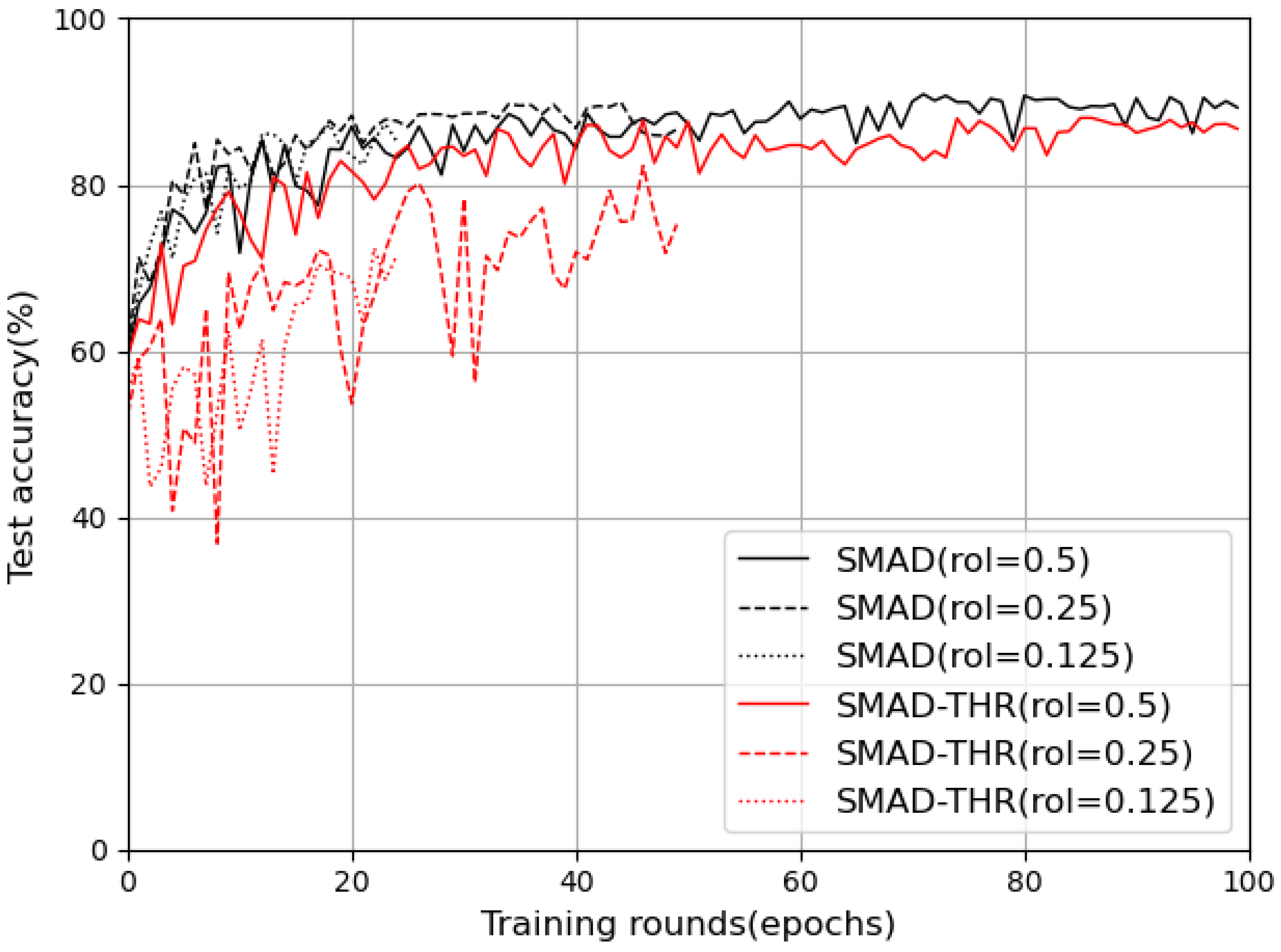

5.2. Ablation Study

5.3. Summary and Concluding Remarks

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method (label ratio) | Accuracy | Macro-Precision | Macro-Recall | Macro-F1 |
|---|---|---|---|---|
| SMAD (0.5) | 91.2% ± 0.5% | 89.9% ± 0.6% | 90.7% ± 0.6% | 90.3% ± 0.5% |
| SMAD (0.25) | 90.3% ± 0.4% | 88.7% ± 0.7% | 89.7% ± 0.6% | 89.1% ± 0.5% |
| SMAD (0.125) | 89.4% ± 0.6% | 87.2% ± 0.8% | 88.7% ± 0.5% | 87.8% ± 0.7% |
| FixMatch (0.5) | 88.0% ± 1.4% | 86.5% ± 0.9% | 85.9% ± 1.8% | 86.1% ± 1.4% |
| FixMatch (0.25) | 85.8% ± 1.1% | 84.4% ± 0.9% | 83.6% ± 0.9% | 83.8% ± 0.9% |
| FixMatch (0.125) | 81.1% ± 0.7% | 79.6% ± 1.1% | 78.2% ± 1.2% | 78.6% ± 0.9% |

| Method (label ratio) | Accuracy | Macro-Precision | Macro-Recall | Macro-F1 |
|---|---|---|---|---|
| SMAD (0.5) | 91.0% ± 0.8% | 89.2% ± 1.1% | 90.2% ± 1.1% | 89.6% ± 1.1% |
| SMAD (0.25) | 90.3% ± 0.4% | 88.7% ± 0.7% | 89.7% ± 0.6% | 89.1% ± 0.5% |
| SMAD (0.125) | 89.4% ± 0.6% | 87.2% ± 0.8% | 88.7% ± 0.5% | 87.8% ± 0.7% |
| (0.5) | 89.6% ± 0.3% | 88.0% ± 0.5% | 88.6% ± 0.4% | 88.3% ± 0.5% |
| (0.25) | 87.8% ± 0.5% | 86.4% ± 0.9% | 85.1% ± 0.8% | 85.6% ± 0.8% |
| (0.125) | 84.0% ± 1.2% | 82.9% ± 0.5% | 80.5% ± 2.3% | 81.0% ± 2.0% |
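The tables report accuracy together with precision, recall, and F1, which the contribution list describes as macro-averaged and given as mean ± std over three runs. The sketch below shows one way such numbers could be computed. It assumes macro-F1 is the unweighted mean of per-class F1 scores (the convention of scikit-learn's `average='macro'`) and that "std" is the sample standard deviation; the source states neither choice, and the helper names `macro_metrics` and `mean_std` are hypothetical.

```python
import statistics
from typing import Dict, List, Sequence, Tuple


def macro_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 for one run."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    pairs = list(zip(y_true, y_pred))
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        # Undefined ratios (0/0) are treated as 0.0.
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Macro averaging: every class counts equally, regardless of its size.
    return {
        "accuracy": accuracy,
        "precision": statistics.mean(precisions),
        "recall": statistics.mean(recalls),
        "f1": statistics.mean(f1s),
    }


def mean_std(runs: List[Dict[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Aggregate per-run metrics into (mean, sample std) per metric."""
    return {
        key: (
            statistics.mean([r[key] for r in runs]),
            statistics.stdev([r[key] for r in runs]),  # needs >= 2 runs
        )
        for key in runs[0]
    }
```

Because macro averaging weights each class equally, a detector that neglects a small malware family is penalized even when overall accuracy stays high, which is why the macro scores sit slightly below accuracy in both tables.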

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, S.; Han, S. SMAD: Semi-Supervised Android Malware Detection via Consistency on Fine-Grained Spatial Representations. Electronics 2025, 14, 4246. https://doi.org/10.3390/electronics14214246

Lee S, Han S. SMAD: Semi-Supervised Android Malware Detection via Consistency on Fine-Grained Spatial Representations. Electronics. 2025; 14(21):4246. https://doi.org/10.3390/electronics14214246

Chicago/Turabian Style: Lee, Suchul, and Seokmin Han. 2025. "SMAD: Semi-Supervised Android Malware Detection via Consistency on Fine-Grained Spatial Representations" Electronics 14, no. 21: 4246. https://doi.org/10.3390/electronics14214246

APA Style: Lee, S., & Han, S. (2025). SMAD: Semi-Supervised Android Malware Detection via Consistency on Fine-Grained Spatial Representations. Electronics, 14(21), 4246. https://doi.org/10.3390/electronics14214246