From 2D-Patch to 3D-Camouflage: A Review of Physical Adversarial Attack in Object Detection

Abstract

1. Introduction

1.1. Literature Selection

- (1) Physical deployment. The attack strategies and methods covered in this review must be implementable in a physical environment.

- (2) Victim tasks. This review focuses on physical adversarial attacks against object detection models, including related tasks such as person detection, vehicle detection, and traffic sign detection.

- (3) Conceptual cohesiveness. Articles were selected based on their relevance and cohesive contribution to the field of physical adversarial attacks.

| Survey | 3D Adversarial Camouflage | Physical Deployment | Transferability | Perceptibility | Year |
|---|---|---|---|---|---|
| Wei et al. [19] | × | × | × | × | 2023 |
| Wang et al. [20] | × | × | × | × | 2023 |
| Guesmi et al. [21] | × | × | ✓ | ✓ | 2023 |
| Wei et al. [22] | × | ✓ | × | ✓ | 2024 |
| Nguyen et al. [23] | × | × | × | × | 2024 |
| Zhang et al. [24] | × | ✓ | ✓ | | 2024 |
| Ours | ✓ | ✓ | ✓ | ✓ | 2025 |

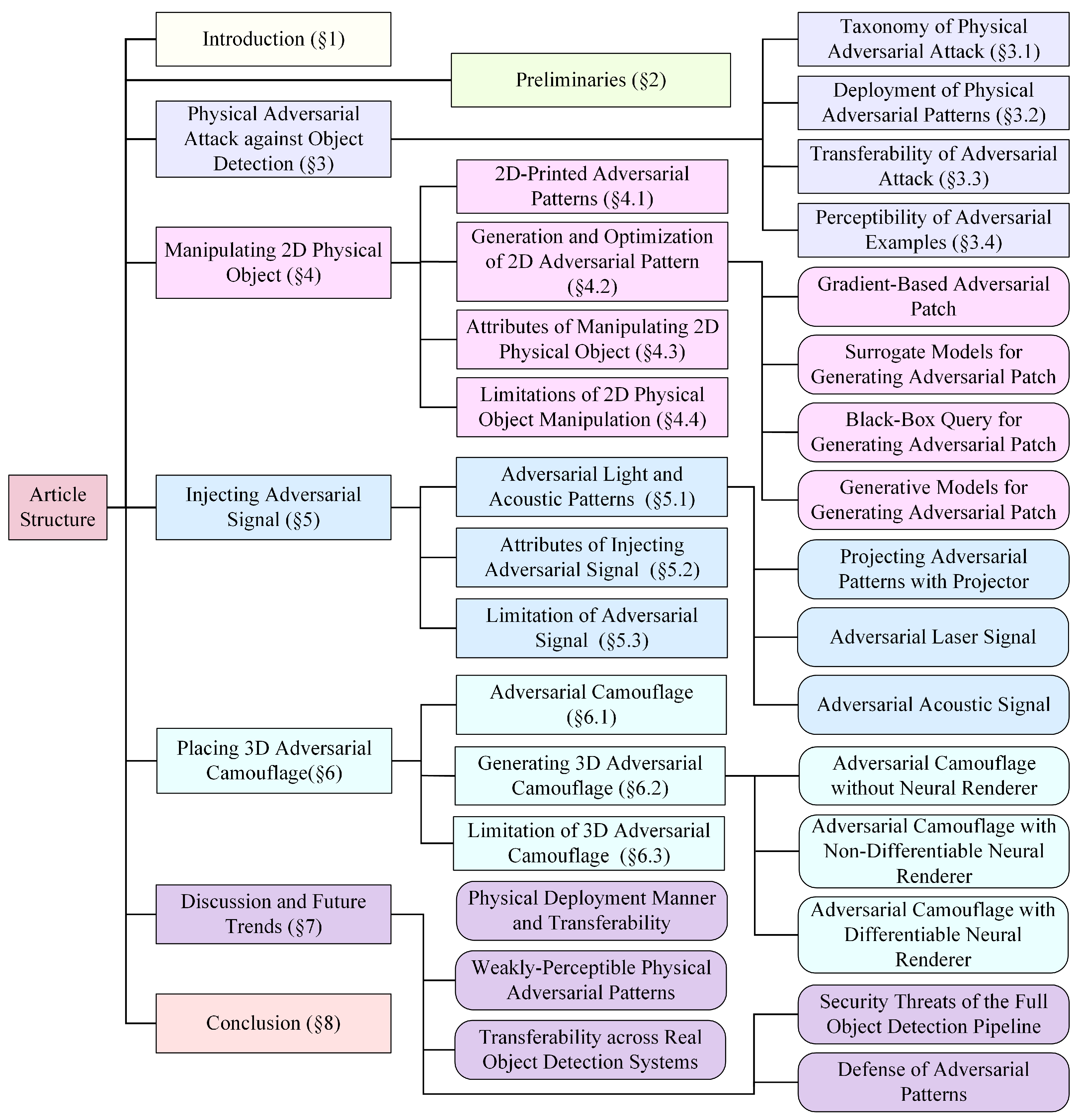

1.2. Our Contributions

- This review identifies, explores, and evaluates physical adversarial attacks targeting object detection systems, including pedestrian and vehicle detection.

- We propose a novel taxonomy for physical adversarial attacks against object detection, categorizing them into three classes based on the generation and deployment of adversarial patterns: manipulating 2D physical objects, injecting adversarial signals, and placing 3D adversarial camouflage. These categories are systematically compared on nine key attributes.

- The transferability of adversarial attacks and the perceptibility of adversarial examples are further analyzed and evaluated. Transferability is classified into two levels: cross-model and cross-modal. Perceptibility is divided into four levels: visible, natural, covert, and imperceptible.

- We discuss the challenges of adversarial attacks against object detection models, propose potential improvements, and suggest future research directions. Our goal is to inspire further work that emphasizes security threats affecting the entire object detection pipeline.

2. Preliminaries

2.1. Adversarial Examples

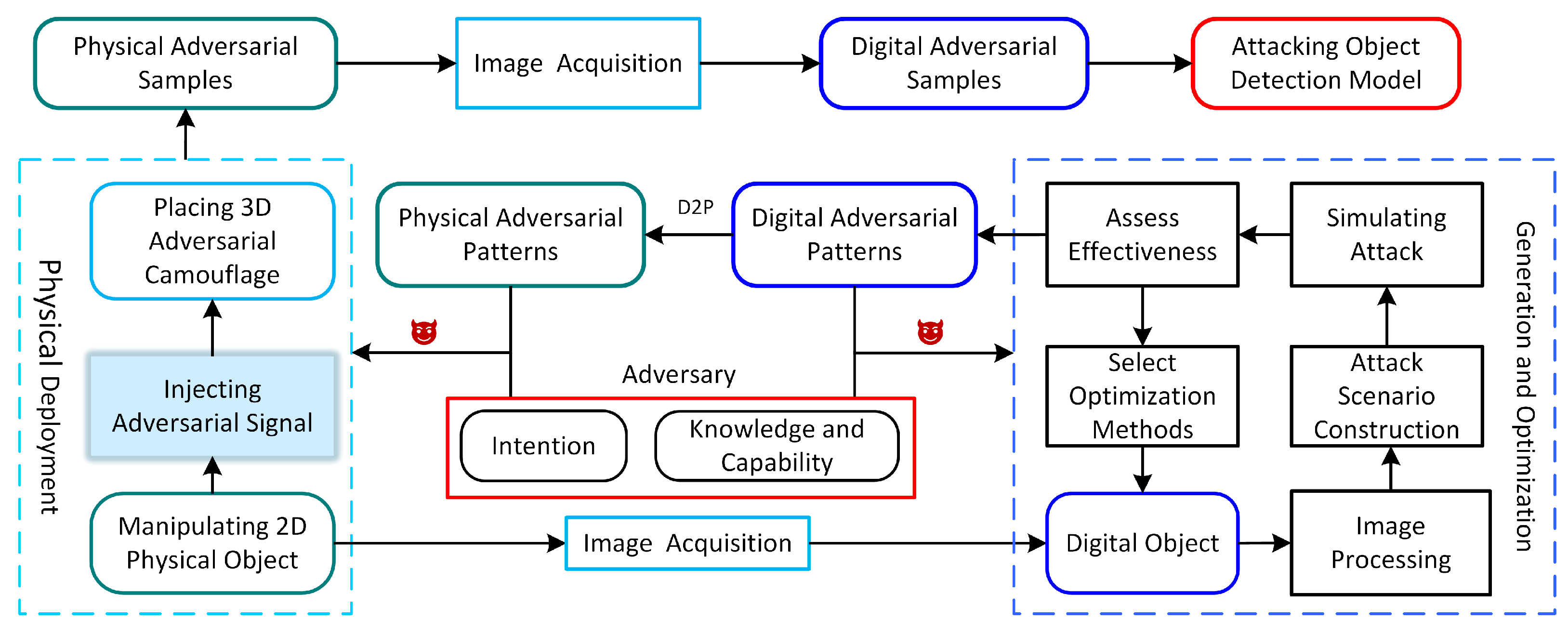

2.2. Adversarial Attack Environment

2.2.1. Digital Adversarial Attack

2.2.2. Physical Adversarial Attack

2.3. Adversary’s Intention

2.4. Adversary’s Knowledge and Capabilities

- (1) White-box

- (2) Black-box

- (3) Gray-box

2.5. Victim Model Architecture

- (1) CNN-based model

- (2) Transformer-based model

- (3) Real-world systems

3. Physical Adversarial Attacks Against Object Detection

3.1. Taxonomy of Physical Adversarial Attacks

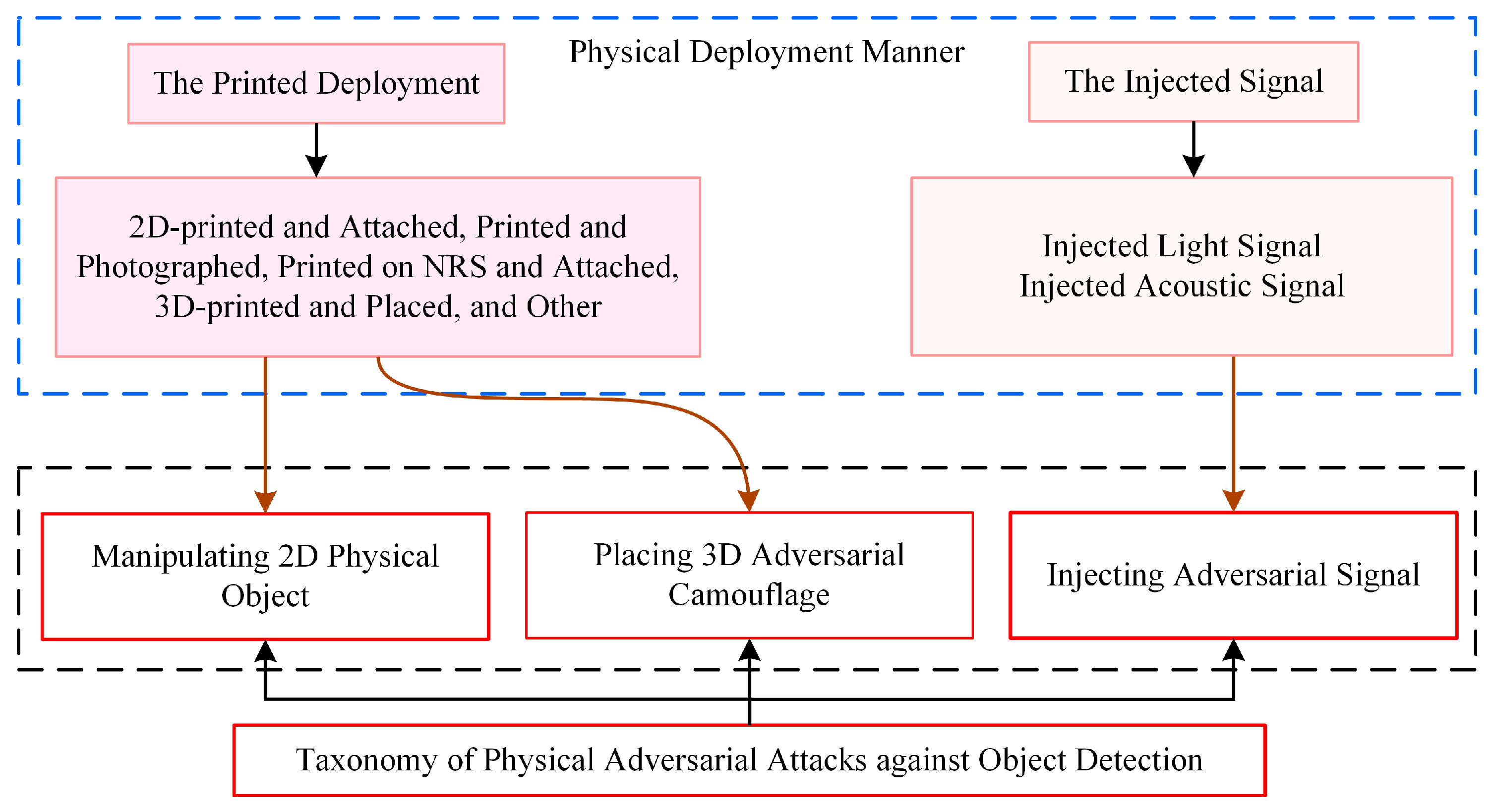

3.2. Deployment of Physical Adversarial Patterns

- (1) Printed deployment prints adversarial patterns or examples in 2D or 3D and places them on or around the target surface. It can be further divided into four categories (printed and attached, printed and photographed, printed on a non-rigid surface and attached, and 3D-printed and placed), which are mainly used to deploy the adversarial patches, stickers, and camouflage that manipulate physical objects.

- (2) Injected-signal deployment introduces an external signal source (light or acoustic signals) that projects adversarial patterns onto the target surface or acts directly on image perception devices. It can be divided into two types: injected light signals and injected acoustic signals.

| Type | Physical Deployment Manner | Representative Work |
|---|---|---|
| Printed Deployment | Printed and Attached | [11,15,38,57,62,71,72,73,74] |
| | Printed and Photographed | [75,76] |
| | Printed on Non-Rigid Surface (NRS) | [49,77,78,79,80,81,82,83,84,85,86] |
| | 3D-Printed and Placed | [39,53,55,69,87] |
| | Other-Printed and Deployed | [16,67,68,70] |
| Injected Signal | Light Signal | [16,63,64,65,88,89,90,91] |
| | Acoustic Signal | [42,59,92] |

3.3. Transferability of Adversarial Attack

3.3.1. Cross-Model Transferability

3.3.2. Cross-Modal Transferability

3.4. Perceptibility of Adversarial Examples

- (1) Visible. Physical adversarial patterns are visible to the human observer, capturable by image perception devices, and clearly distinguishable from the target in the digital representation. Adversarial patterns in this category, such as patches or stickers, differ noticeably from normal examples and are easily spotted by human observers. We use the term visible to denote this category.

- (2) Natural. Physical adversarial patterns are visible to the human observer and capturable by image perception devices, but hard to distinguish from the target in the digital representation. This category concerns whether the adversarial pattern appears to be a naturally occurring object or phenomenon in the target environment. For example, adversarial patterns that use media such as natural lighting, projectors, shadows, and camouflage are classified as natural. We use the term natural to denote this category.

- (3) Covert. Physical adversarial patterns are hard to observe with the naked eye, are captured as anomalies by image perception devices, and are distinguishable from the original images in the digital domain. Covert adversarial examples are visually imperceptible to humans, or appear similar to the original examples, yet they can be physically perceived by the targeted device or system. Adversarial examples that use media such as thermal insulation materials, infrared light, or lasers are classified as covert. The term covert emphasizes that such adversarial examples are not easily detected by human observers.

- (4) Imperceptible. Physical adversarial patterns are invisible to the naked eye, hard to capture with image perception devices, and hard to distinguish from the original images in the digital domain. Adversarial examples in this category include ultrasound-based attacks on camera sensors. The term imperceptible highlights that the differences between digital adversarial examples and their benign counterparts are difficult to measure.
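The four levels above can be read as combinations of three yes/no properties: whether a human notices the pattern, whether the image perception device captures it, and whether it is distinguishable in the digital domain. The minimal Python sketch below encodes that reading. The class, field, and function names are hypothetical illustrations rather than definitions from any surveyed work, and combinations that match none of the four levels are rejected instead of guessed.

```python
from dataclasses import dataclass
from enum import Enum


class Perceptibility(Enum):
    VISIBLE = "visible"
    NATURAL = "natural"
    COVERT = "covert"
    IMPERCEPTIBLE = "imperceptible"


@dataclass(frozen=True)
class PatternObservation:
    """Hypothetical yes/no judgements about one physical adversarial pattern."""
    seen_by_human: bool              # noticeable to the naked eye
    captured_by_device: bool         # recorded by the camera or other image sensor
    distinguishable_digitally: bool  # separable from the benign target/image once captured


# (seen_by_human, captured_by_device, distinguishable_digitally) -> level,
# following the four definitions of the perceptibility taxonomy.
_LEVELS = {
    (True, True, True): Perceptibility.VISIBLE,
    (True, True, False): Perceptibility.NATURAL,
    (False, True, True): Perceptibility.COVERT,
    (False, False, False): Perceptibility.IMPERCEPTIBLE,
}


def classify(obs: PatternObservation) -> Perceptibility:
    """Return the perceptibility level, or raise if the combination is undefined."""
    key = (obs.seen_by_human, obs.captured_by_device, obs.distinguishable_digitally)
    if key not in _LEVELS:
        raise ValueError(f"{key} does not match any of the four perceptibility levels")
    return _LEVELS[key]


# Example: a printed patch vs. an ultrasound attack on a camera sensor.
print(classify(PatternObservation(True, True, True)).value)     # visible
print(classify(PatternObservation(False, False, False)).value)  # imperceptible
```

Using a lookup table rather than nested conditions keeps the mapping auditable against the four definitions and makes any undefined combination fail loudly.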

4. Manipulating 2D Physical Objects

4.1. 2D-Printed Adversarial Patterns

- (1) Patch and sticker

- (2) Image and cloth patch

| AP | Method | Akc | VMa | VT | AInt | Venue |
|---|---|---|---|---|---|---|
| Patch | extended-RP2 [103] | WB | CNN | OD | UT | 2018 WOOT |
| Patch | Nested-AE [38] | WB | CNN | OD | UT | 2019 CCS |
| Patch | OBJ-CLS [72] | WB | CNN | PD | UT | 2019 CVPRW |
| Patch | AP-PA [15] | WB, BB | CNN, TF | AD | UT | 2022 TGRS |
| Patch | T-SEA [73] | WB, BB | CNN | OD, PD | UT, TA | 2023 CVPR |
| Patch | SysAdv [11] | WB | CNN | OD, PD | UT | 2023 ICCV |
| Patch | CBA [74] | WB, BB | CNN, TF | OD, AD | UT | 2023 TGRS |
| Patch | PatchGen [57] | BB | CNN, TF | OD, AD, IC | UT | 2025 TIFS |
| Cloth-P | NAP [77] | WB | CNN | OD, PD | UT | 2021 ICCV |
| Cloth-P | LAP [78] | WB | CNN | OD, PD | UT | 2021 MM |
| Cloth-P | DAP [79] | WB | CNN | OD, PD | UT | 2024 CVPR |
| Cloth-P | DOEPatch [80] | WB, BB | CNN | OD | UT | 2024 TIFS |
| Sticker | TLD [62] | BB | RS | LD | UT | 2021 USENIX |
| Image-P | ShapeShifter [75] | WB | CNN | TSD | UT, TA | 2019 ECML PKDD |
| Image-P | LPAttack [76] | WB | CNN, RS | OD | UT | 2020 AAAI |

4.2. Generation and Optimization of 2D Adversarial Patterns

4.2.1. Generation and Optimization Methods

4.2.2. Gradient-Based Adversarial Patch

4.2.3. Surrogate Models for Generating Adversarial Patches

4.2.4. Black-Box Query for Generating Adversarial Patches

4.2.5. Generative Models for Generating Adversarial Patches

4.3. Attributes of Manipulating 2D Physical Objects

- (1) Adversarial Pattern (AP).

- (2) Adversary’s Knowledge and Capabilities (Akc).

- (3) Victim Model Architecture (VMa).

- (4) Victim Tasks (VT).

- (5) Adversary’s Intention (AInt).

- (1) Generation and Optimization Methods (Gen).

- (2) Physical Deployment Manner (PDM).

- (3) Adversarial Attack’s Transferability.

- (4) Adversarial Example’s Perceptibility.

4.4. Limitations of 2D Physical Object Manipulation

- (1) Printed-deployed adversarial patterns (such as patches and stickers) rely on elaborate digital-to-physical adaptation techniques, such as the non-printability score, expectation over transformation, and total variation, to cope with printing noise, but these techniques cannot account for noise from dynamic physical environments. In terms of generation methodology, these approaches rely primarily on gradient-based optimization (e.g., AP-PA [15], DAP [79]) and surrogate-model methods (e.g., PatchGen [57]) to simulate physical conditions during training. However, such simulation cannot adequately model complex environmental variables such as lighting changes and viewing angles, which leads to performance degradation in real-world scenarios. Furthermore, although these methods effectively constrain perturbations to localized areas, they lack mechanisms for adapting the optimization to dynamic physical conditions.

- (2) Printed-deployed adversarial patterns (patches, stickers) differ markedly in appearance from the target’s background, which makes them highly perceptible and easy to spot with the naked eye. This visual distinctiveness stems from the generation process: gradient-based methods often produce high-frequency patterns that clash with natural textures. Although generative models have been used to create more natural-looking patterns (such as NAP [77]), these patterns still do not blend seamlessly into the environment. The fundamental challenge is the inherent trade-off between attack success and visual stealth: most optimization algorithms must balance a high attack success rate against the visual naturalness of the pattern.

- (3) Adversarial patterns that resemble natural objects tend to have weaker attack effects. A possible explanation is that the target model has already been exposed to similar natural objects during training and has therefore become more robust to them. The semantic consistency of natural-object-like patterns may lead the model to treat them as familiar content, making them less effective than other patterns.

- (4) Distributed adversarial patch attacks are harder for defenses to detect and less perceptible, but they are relatively difficult to deploy. They are typically generated by gradient-based optimization over multiple spatially distributed locations, which requires careful coordination of how the perturbation is distributed. While generative models could produce coherent distributed patterns, maintaining precise spatial relationships between the components remains a practical deployment challenge. Because this type of attack depends heavily on the precise spatial configuration of multiple components, it is susceptible to environmental factors and to the deployment method. Furthermore, attackers must balance distribution density against attack effectiveness, which often requires specialized optimization strategies distinct from traditional patch generation methods.
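The three digital-to-physical techniques named in limitation (1) can be made concrete with a short NumPy sketch. It assumes an RGB patch with values in [0, 1]; the printer palette, the contrast/brightness/noise ranges standing in for physical variation, the toy attack loss, and the loss weights are all illustrative assumptions rather than settings from any surveyed method. The non-printability score follows the commonly used product-of-distances form. The sketch only evaluates the combined objective; an actual attack would minimize it by back-propagating through a detector with an automatic-differentiation framework, which is omitted here.

```python
import numpy as np


def total_variation(patch: np.ndarray) -> float:
    """Total variation of an H x W x C patch: summed absolute differences between
    vertically and horizontally adjacent pixels. Smaller values mean smoother
    patches, which tend to survive printing and camera blur better."""
    dy = np.abs(np.diff(patch, axis=0)).sum()
    dx = np.abs(np.diff(patch, axis=1)).sum()
    return float(dx + dy)


def non_printability_score(patch: np.ndarray, printable: np.ndarray) -> float:
    """For each pixel, the product of its distances to every colour in the K x C
    `printable` palette, summed over pixels. A pixel that exactly matches any
    printable colour contributes zero."""
    pixels = patch.reshape(-1, patch.shape[-1])                             # (N, C)
    dists = np.linalg.norm(pixels[:, None, :] - printable[None], axis=-1)  # (N, K)
    return float(dists.prod(axis=1).sum())


def random_physical_transform(patch: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical stand-in for physical variation: random contrast, brightness
    shift and sensor noise, clipped back to the valid [0, 1] range."""
    contrast = rng.uniform(0.8, 1.2)
    brightness = rng.uniform(-0.1, 0.1)
    noise = rng.normal(0.0, 0.02, size=patch.shape)
    return np.clip(contrast * patch + brightness + noise, 0.0, 1.0)


def eot_loss(patch, attack_loss, rng, n_samples=16) -> float:
    """Expectation over transformation: average the attack loss over randomly
    transformed copies of the patch instead of the clean digital patch."""
    samples = [attack_loss(random_physical_transform(patch, rng)) for _ in range(n_samples)]
    return float(np.mean(samples))


def patch_objective(patch, attack_loss, printable, rng, w_nps=0.01, w_tv=2.5) -> float:
    """Combined objective to minimise (weights are illustrative, not prescribed)."""
    return (eot_loss(patch, attack_loss, rng)
            + w_nps * non_printability_score(patch, printable)
            + w_tv * total_variation(patch))


def toy_loss(x: np.ndarray) -> float:
    # Hypothetical stand-in for a detector's objectness score on the patched image.
    return float(x.mean())


rng = np.random.default_rng(0)
palette = rng.uniform(0.0, 1.0, size=(30, 3))   # hypothetical printer palette
patch = rng.uniform(0.0, 1.0, size=(16, 16, 3))
print(patch_objective(patch, toy_loss, palette, rng))
```

The TV and NPS terms push the patch toward smooth, printable colours, while the EOT term rewards patches whose attack effect holds across the sampled transformations; as limitation (1) notes, the sampled transformations can only approximate real environmental variation.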

5. Injecting Adversarial Signals

5.1. Adversarial Light and Acoustic Patterns

5.1.1. Projecting Adversarial Patterns with Projectors

5.1.2. Adversarial Laser Signal

5.1.3. Adversarial Acoustic Signals

5.2. Attributes of Injecting Adversarial Signal

5.3. Limitations of Adversarial Signals

- (1) Injecting adversarial signals requires precise devices that reproduce specific optical or acoustic conditions so that digital attacks translate effectively into the physical world. The signal sources of such attacks fall into two types: energy radiation, such as the blue lasers used in the rolling-shutter attack [89] and the infrared laser LED employed in ICSL [65], and field interference, such as intentional electromagnetic interference (IEMI) in GlitchHiker [108] and the acoustic signals in PG attacks [59]. Their physical deployment is directly linked to the stealth and feasibility of the attacks. For instance, lasers require precise calibration of the incident angle, acoustic signals must match the sensor’s resonant frequency, and electromagnetic interference relies on near-field coupling effects. Because such attacks do not rely on physical attachments, they offer greater deployment flexibility than patch-based attacks; however, their effectiveness is highly sensitive to even minor environmental deviations.

- (2) Projector-based adversarial patterns work much like adversarial patches: both introduce additional patterns that are captured by the camera. However, projected attacks (e.g., Phantom Attack [64], Vanishing Attack [90]) face distinct deployment challenges, including calibrating for the surface geometry and compensating for ambient light. A key reason DNN models are vulnerable to such attacks is the lack of training data showing targets under specific optical conditions, such as dynamic projections or strong ambient light. In contrast, laser injection methods such as L-HAWK [16] avoid some environmental interference by targeting the sensor directly, but face deployment complexities related to synchronized triggering and optical calibration.
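The ambient-light compensation mentioned in (2) can be illustrated with a deliberately simplified radiometric model in which the camera records reflectance × (ambient + projected light), with every quantity normalized to [0, 1] and already aligned to camera pixels (that is, the surface-geometry calibration is assumed to be done). This linear model and the function names are assumptions for illustration, not the model used by Phantom Attack [64] or Vanishing Attack [90]. Inverting the model shows why strong ambient light is a problem: where ambient light alone already makes a pixel brighter than the target, no non-negative projector output can reach it.

```python
import numpy as np


def projector_input(target, reflectance, ambient, max_output=1.0, eps=1e-6):
    """Projector light needed for the camera to record `target`.

    Assumed model (per pixel and channel): captured = reflectance * (ambient + projected).
    Returns the projector image clipped to [0, max_output] and a boolean mask of the
    pixels whose target is physically reachable without clipping.
    """
    required = target / np.maximum(reflectance, eps) - ambient
    reachable = (required >= 0.0) & (required <= max_output)
    return np.clip(required, 0.0, max_output), reachable


def simulate_capture(projected, reflectance, ambient):
    """Forward model: what the camera records for a given projector image."""
    return reflectance * (ambient + projected)


# Two pixels on a grey surface: one in shade, one under bright ambient light.
reflectance = np.array([0.5, 0.5])
ambient = np.array([0.2, 0.8])
target = np.array([0.3, 0.3])
projected, ok = projector_input(target, reflectance, ambient)
print(ok)  # [ True False]: the brightly lit pixel cannot be darkened by adding light
print(simulate_capture(projected, reflectance, ambient))
```

Real projector-camera systems are nonlinear and have inter-channel crosstalk, so practical compensation is usually calibrated from captured test patterns; the linear model only makes the ambient-light constraint explicit.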

6. Placing 3D Adversarial Camouflage

6.1. Adversarial Camouflage

6.2. Generating 3D Adversarial Camouflage

6.2.1. Adversarial Camouflage Without Neural Renderers

6.2.2. Adversarial Camouflage with Non-Differentiable Neural Renderers

6.2.3. Adversarial Camouflage with Differentiable Neural Renderers

6.3. Limitations of 3D Adversarial Camouflage

7. Discussion and Future Trends

7.1. Physical Deployment Manner and Transferability

7.2. Weakly Perceptible Physical Adversarial Patterns

7.3. Transferability Across Real Object Detection Systems

7.4. Security Threats of Full Object Detection Pipeline

7.5. Defense of Adversarial Patterns

- (1) Adversarial Training. This method is applied at the model training stage by proactively introducing adversarial perturbations into the training data [112]. This enhances the model’s inherent robustness and makes it less sensitive to adversarial patterns.

- (2) Input Transformation. This defense is deployed at the input stage, before the image reaches the target model, and filters, purifies, or otherwise transforms the input. For example, SpectralDefense [124] transforms the input image from the spatial domain to the frequency (Fourier) domain to eliminate adversarial perturbations. The objective is to disrupt or neutralize adversarial patterns before they can compromise the model’s inference.

- (3) Adversarial Purging. This defense operates at the testing/inference stage. The target model is fine-tuned in advance so that it can identify and detect adversarial patches and perturbations, as in DetectorGuard [132], PatchGuard [133], PatchCleanser [134], PatchZero [137], PAD [139], and PatchCURE [140]. During inference, the enhanced model recognizes these threats and applies cleansing techniques that significantly reduce, or even eliminate, the effectiveness of adversarial attacks.

- (4) Multi-modal Fusion. This strategy operates at the final output stage by aggregating and reconciling the outputs of multiple data modalities [143] or independent models to reach a consensus decision. Since adversarial patterns are typically effective against only a single modality or model, fusion mechanisms such as majority voting or consistency verification can mitigate the impact of unilateral attacks. A typical example is fusing the results of infrared and visible-light modalities in object detection to defend against adversarial light signal injection attacks.
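As a generic illustration of the frequency-domain input transformation described in (2), the NumPy sketch below moves a grayscale image into the Fourier domain, zeroes every spatial frequency above a cutoff, and transforms back. It is not SpectralDefense [124]’s actual procedure; the function name and cutoff value are assumptions. Because high-frequency structure is removed regardless of its origin, such a filter also blurs legitimate detail, so the cutoff trades robustness against clean accuracy.

```python
import numpy as np


def lowpass_filter(image: np.ndarray, cutoff: float = 0.125) -> np.ndarray:
    """Keep only spatial frequencies whose magnitude is at most `cutoff`
    (in cycles per pixel; the Nyquist limit is 0.5) for an H x W grayscale image."""
    spectrum = np.fft.fft2(image)
    fy = np.fft.fftfreq(image.shape[0])[:, None]  # vertical frequency of each spectrum row
    fx = np.fft.fftfreq(image.shape[1])[None, :]  # horizontal frequency of each spectrum column
    keep = np.hypot(fx, fy) <= cutoff             # circular mask, symmetric in +f and -f
    # The symmetric mask keeps the result real up to rounding; drop the tiny imaginary part.
    return np.real(np.fft.ifft2(spectrum * keep))


# A flat grey image with a high-frequency checkerboard "perturbation" added.
rows, cols = np.indices((8, 8))
clean = np.full((8, 8), 0.5)
perturbed = clean + 0.25 * (-1.0) ** (rows + cols)
print(np.abs(lowpass_filter(perturbed) - clean).max())  # near zero: checkerboard removed
```

The checkerboard sits at the highest representable frequency, so it is removed entirely; a physical patch, by contrast, also carries low-frequency content that this kind of filter leaves intact, which is one reason input transformation is usually combined with other defenses.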

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning | Abbreviation | Meaning |
|---|---|---|---|
| OD | Object Detection | PD | Person Detection |
| VD | Vehicle Detection | AD | Aerial Detection |
| LD | Lane Detection | TSD | Traffic Sign Detection |
| IC | Image Classification | FR | Face Recognition |
| OT | Object Tracking | TSR | Traffic Sign Recognition |
| RS | Real Systems | | |
| BB | Black-box | GyB | Gray-box |
| WB | White-box | | |
| UT | Untargeted Attack | TA | Targeted Attack |
| BQ | Black-Box Query | GM | Generative Model |
| GB | Gradient-based | SM | Surrogate Model |
| PDM | Physical Deployment Manner | PAA | Physical Adversarial Attack |
| Per | Perceptibility | Tra | Transferability |
| LAP | Adversarial Light Pattern | AAR | Adversarial Acoustic Pattern |
| VT | Victim Tasks | VMa | Victim Model Architecture |
| AInt | Adversary’s Intention | | |
| CNN | CNN-based backbone architecture | | |
| TF | Transformer-based backbone architecture | | |
| Akc | Adversary’s knowledge and capabilities | | |
| Gen | Generation and optimization method | | |
| DNR | Differentiable Neural Renderer | | |
| NNR | Non-differentiable Neural Renderer | | |
| AC (w/) | Adversarial Camouflage with 3D-Rendering | | |
| AC (w/o) | Adversarial Camouflage without 3D-Rendering | | |
| ✓ | means covered | × | means not covered |
| — | means not mentioned | | |

References

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

- Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A Review of Convolutional Neural Networks in Computer Vision. Artif. Intell. Rev. 2024, 57, 99.

- Kobayashi, R.; Nomoto, K.; Tanaka, Y.; Tsuruoka, G.; Mori, T. WIP: Shadow Hack: Adversarial Shadow Attack against LiDAR Object Detection. In Proceedings of the Symposium on Vehicle Security & Privacy, San Diego, CA, USA, 26 February 2024.

- Zhou, M.; Zhou, W.; Huang, J.; Yang, J.; Du, M.; Li, Q. Stealthy and Effective Physical Adversarial Attacks in Autonomous Driving. IEEE Trans. Inf. Forensics Secur. 2024, 19, 6795–6809.

- Ullah, F.U.M.; Obaidat, M.S.; Ullah, A.; Muhammad, K.; Hijji, M.; Baik, S.W. A Comprehensive Review on Vision-Based Violence Detection in Surveillance Videos. ACM Comput. Surv. 2023, 55, 1–44.

- Nguyen, K.N.T.; Zhang, W.; Lu, K.; Wu, Y.H.; Zheng, X.; Li Tan, H.; Zhen, L. A Survey and Evaluation of Adversarial Attacks in Object Detection. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 15706–15722.

- Wang, N.; Xie, S.; Sato, T.; Luo, Y.; Xu, K.; Chen, Q.A. Revisiting Physical-World Adversarial Attack on Traffic Sign Recognition: A Commercial Systems Perspective. In Proceedings of the Network and Distributed System Security (NDSS) Symposium 2025, San Diego, CA, USA, 24–28 February 2025.

- Li, Y.; Xie, B.; Guo, S.; Yang, Y.; Xiao, B. A Survey of Robustness and Safety of 2D and 3D Deep Learning Models Against Adversarial Attacks. arXiv 2023, arXiv:2310.00633.

- Wu, B.; Zhu, Z.; Liu, L.; Liu, Q.; He, Z.; Lyu, S. Attacks in Adversarial Machine Learning: A Systematic Survey from the Life-Cycle Perspective. arXiv 2024, arXiv:2302.09457.

- Jia, W.; Lu, Z.; Zhang, H.; Liu, Z.; Wang, J.; Qu, G. Fooling the Eyes of Autonomous Vehicles: Robust Physical Adversarial Examples Against Traffic Sign Recognition Systems. In Proceedings of the 2022 Network and Distributed System Security Symposium, San Diego, CA, USA, 24–28 April 2022.

- Wang, N.; Luo, Y.; Sato, T.; Xu, K.; Chen, Q.A. Does Physical Adversarial Example Really Matter to Autonomous Driving? Towards System-Level Effect of Adversarial Object Evasion Attack. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4389–4400.

- Sato, T.; Bhupathiraju, S.H.V.; Clifford, M.; Sugawara, T.; Chen, Q.A.; Rampazzi, S. Invisible Reflections: Leveraging Infrared Laser Reflections to Target Traffic Sign Perception. In Proceedings of the 2024 Network and Distributed System Security Symposium, San Diego, CA, USA, 29 February–1 March 2024.

- Guo, D.; Wu, Y.; Dai, Y.; Zhou, P.; Lou, X.; Tan, R. Invisible Optical Adversarial Stripes on Traffic Sign against Autonomous Vehicles. In Proceedings of the 22nd Annual International Conference on Mobile Systems, Applications and Services, Tokyo, Japan, 3–7 June 2024; pp. 534–546.

- Duan, R.; Ma, X.; Wang, Y.; Bailey, J.; Qin, A.K.; Yang, Y. Adversarial Camouflage: Hiding Physical-World Attacks With Natural Styles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 997–1005.

- Lian, J.; Mei, S.; Zhang, S.; Ma, M. Benchmarking Adversarial Patch against Aerial Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5634616.

- Liu, T.; Liu, Y.; Ma, Z.; Yang, T.; Liu, X.; Li, T.; Ma, J. L-HAWK: A Controllable Physical Adversarial Patch against a Long-Distance Target. In Proceedings of the 2025 Network and Distributed System Security Symposium, San Diego, CA, USA, 24–28 February 2025.

- Biton, D.; Shams, J.; Koda, S.; Shabtai, A.; Elovici, Y.; Nassi, B. Towards an End-to-End (E2E) Adversarial Learning and Application in the Physical World. arXiv 2025, arXiv:2501.08258.

- Badjie, B.; Cecílio, J.; Casimiro, A. Adversarial Attacks and Countermeasures on Image Classification-Based Deep Learning Models in Autonomous Driving Systems: A Systematic Review. ACM Comput. Surv. 2024, 57, 1–52.

- Wei, X.; Pu, B.; Lu, J.; Wu, B. Visually Adversarial Attacks and Defenses in the Physical World: A Survey. arXiv 2023, arXiv:2211.01671.

- Wang, D.; Yao, W.; Jiang, T.; Tang, G.; Chen, X. A Survey on Physical Adversarial Attack in Computer Vision. arXiv 2023, arXiv:2209.14262.

- Guesmi, A.; Hanif, M.A.; Ouni, B.; Shafique, M. Physical Adversarial Attacks for Camera-Based Smart Systems: Current Trends, Categorization, Applications, Research Challenges, and Future Outlook. IEEE Access 2023, 11, 109617–109668.

- Wei, H.; Tang, H.; Jia, X.; Wang, Z.; Yu, H.; Li, Z.; Satoh, S.; Gool, L.V.; Wang, Z. Physical Adversarial Attack Meets Computer Vision: A Decade Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9797–9817.

- Nguyen, K.; Fernando, T.; Fookes, C.; Sridharan, S. Physical Adversarial Attacks for Surveillance: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 17036–17056.

- Zhang, C.; Xu, X.; Wu, J.; Liu, Z.; Zhou, L. Adversarial Attacks of Vision Tasks in the Past 10 Years: A Survey. arXiv 2024, arXiv:2410.23687.

- Byun, J.; Cho, S.; Kwon, M.J.; Kim, H.S.; Kim, C. Improving the Transferability of Targeted Adversarial Examples through Object-Based Diverse Input. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 15223–15232.

- Ren, Y.; Zhao, Z.; Lin, C.; Yang, B.; Zhou, L.; Liu, Z.; Shen, C. Improving Integrated Gradient-Based Transferable Adversarial Examples by Refining the Integration Path. arXiv 2024, arXiv:2412.18844.

- Wei, X.; Ruan, S.; Dong, Y.; Su, H.; Cao, X. Distributionally Location-Aware Transferable Adversarial Patches for Facial Images. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2849–2864.

- Zhou, Z.; Li, B.; Song, Y.; Yu, Z.; Hu, S.; Wan, W.; Zhang, L.Y.; Yao, D.; Jin, H. NumbOD: A Spatial-Frequency Fusion Attack against Object Detectors. In Proceedings of the 39th Annual AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025.

- Li, C.; Jiang, T.; Wang, H.; Yao, W.; Wang, D. Optimizing Latent Variables in Integrating Transfer and Query Based Attack Framework. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 161–171.

- Song, Y.; Zhou, Z.; Li, M.; Wang, X.; Zhang, H.; Deng, M.; Wan, W.; Hu, S.; Zhang, L.Y. PB-UAP: Hybrid Universal Adversarial Attack for Image Segmentation. In Proceedings of the 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

- Sarkar, S.; Babu, A.R.; Mousavi, S.; Gundecha, V.; Ghorbanpour, S.; Naug, A.; Gutierrez, R.L.; Guillen, A. Reinforcement Learning Platform for Adversarial Black-Box Attacks with Custom Distortion Filters. Proc. AAAI Conf. Artif. Intell. 2025, 39, 27628–27635.

- Chen, J.; Chen, H.; Chen, K.; Zhang, Y.; Zou, Z.; Shi, Z. Diffusion Models for Imperceptible and Transferable Adversarial Attack. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 961–977.

- He, C.; Ma, X.; Zhu, B.B.; Zeng, Y.; Hu, H.; Bai, X.; Jin, H.; Zhang, D. DorPatch: Distributed and Occlusion-Robust Adversarial Patch to Evade Certifiable Defenses. In Proceedings of the 2024 Network and Distributed System Security Symposium, San Diego, CA, USA, 26 February–1 March 2024.

- Wei, X.; Guo, Y.; Yu, J. Adversarial Sticker: A Stealthy Attack Method in the Physical World. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 2711–2725.

- Li, Y.; Tan, W.; Wang, T.; Liang, X.; Pan, Q. Flexible Physical Camouflage Generation Based on a Differential Approach. arXiv 2024, arXiv:2402.13575.

- Zhang, Q.; Guo, Q.; Gao, R.; Juefei-Xu, F.; Yu, H.; Feng, W. Adversarial Relighting Against Face Recognition. IEEE Trans. Inf. Forensics Secur. 2024, 19, 9145–9157.

- Wang, Y.; Liu, Z.; Luo, B.; Hui, R.; Li, F. The Invisible Polyjuice Potion: An Effective Physical Adversarial Attack against Face Recognition. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; pp. 3346–3360.

- Zhao, Y.; Zhu, H.; Liang, R.; Shen, Q.; Zhang, S.; Chen, K. Seeing Isn’t Believing: Practical Adversarial Attack Against Object Detectors. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 1989–2004.

- Duan, Y.; Chen, J.; Zhou, X.; Zou, J.; He, Z.; Zhang, J.; Zhang, W.; Pan, Z. Learning Coated Adversarial Camouflages for Object Detectors. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 23–29 July 2022; pp. 891–897.

- Yang, X.; Liu, C.; Xu, L.; Wang, Y.; Dong, Y.; Chen, N.; Su, H.; Zhu, J. Towards Effective Adversarial Textured 3D Meshes on Physical Face Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 4119–4128.

- Wan, J.; Fu, J.; Wang, L.; Yang, Z. BounceAttack: A Query-Efficient Decision-Based Adversarial Attack by Bouncing into the Wild. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 1270–1286.

- Zhu, W.; Ji, X.; Cheng, Y.; Zhang, S.; Xu, W. TPatch: A Triggered Physical Adversarial Patch. In Proceedings of the 32nd USENIX Conference on Security Symposium, Anaheim, CA, USA, 9–11 August 2023; pp. 661–678.

- Hu, C.; Shi, W.; Jiang, T.; Yao, W.; Tian, L.; Chen, X.; Zhou, J.; Li, W. Adversarial Infrared Blocks: A Multi-View Black-Box Attack to Thermal Infrared Detectors in Physical World. Neural Netw. 2024, 175, 106310.

- Hu, C.; Shi, W.; Yao, W.; Jiang, T.; Tian, L.; Chen, X.; Li, W. Adversarial Infrared Curves: An Attack on Infrared Pedestrian Detectors in the Physical World. Neural Netw. 2024, 178, 106459.

- Yang, C.; Kortylewski, A.; Xie, C.; Cao, Y.; Yuille, A. PatchAttack: A Black-Box Texture-Based Attack with Reinforcement Learning. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Volume 12371, pp. 681–698.

- Doan, B.G.; Xue, M.; Ma, S.; Abbasnejad, E.; Ranasinghe, C.D. TnT Attacks! Universal Naturalistic Adversarial Patches Against Deep Neural Network Systems. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3816–3830.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards Large-Scale Small Object Detection: Survey and Benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Xu, K.; Zhang, G.; Liu, S.; Fan, Q.; Sun, M.; Chen, H.; Chen, P.Y.; Wang, Y.; Lin, X. Adversarial T-Shirt! Evading Person Detectors in a Physical World. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Volume 12350, pp. 665–681.

- Zhu, X.; Li, X.; Li, J.; Wang, Z.; Hu, X. Fooling Thermal Infrared Pedestrian Detectors in Real World Using Small Bulbs. Proc. AAAI Conf. Artif. Intell. 2021, 35, 3616–3624.

- Wei, X.; Yu, J.; Huang, Y. Physically Adversarial Infrared Patches with Learnable Shapes and Locations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12334–12342.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110.

- Suryanto, N.; Kim, Y.; Larasati, H.T.; Kang, H.; Le, T.T.H.; Hong, Y.; Yang, H.; Oh, S.Y.; Kim, H. ACTIVE: Towards Highly Transferable 3D Physical Camouflage for Universal and Robust Vehicle Evasion. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4282–4291.

- Wang, R.; Guo, Y.; Wang, Y. AGS: Affordable and Generalizable Substitute Training for Transferable Adversarial Attack. Proc. AAAI Conf. Artif. Intell. 2024, 38, 5553–5562.

- Huang, Y.; Dong, Y.; Ruan, S.; Yang, X.; Su, H.; Wei, X. Towards Transferable Targeted 3D Adversarial Attack in the Physical World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

- Chen, J.; Feng, Z.; Zeng, R.; Pu, Y.; Zhou, C.; Jiang, Y.; Gan, Y.; Li, J.; Ji, S. Enhancing Adversarial Transferability with Adversarial Weight Tuning. Proc. AAAI Conf. Artif. Intell. 2025, 39, 2061–2069.

- Li, K.; Wang, D.; Zhu, W.; Li, S.; Wang, Q.; Gao, X. Physical Adversarial Patch Attack for Optical Fine-Grained Aircraft Recognition. IEEE Trans. Inf. Forensics Secur. 2024, 20, 436–448.

- Cao, Y.; Wang, N.; Xiao, C.; Yang, D.; Fang, J.; Yang, R.; Chen, Q.A.; Liu, M.; Li, B. Invisible for Both Camera and LiDAR: Security of Multi-Sensor Fusion Based Perception in Autonomous Driving Under Physical-World Attacks. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021; pp. 176–194.

- Ji, X.; Cheng, Y.; Zhang, Y.; Wang, K.; Yan, C.; Xu, W.; Fu, K. Poltergeist: Acoustic Adversarial Machine Learning against Cameras and Computer Vision. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021; pp. 160–175.

- Chen, Y.; Xu, Z.; Yin, Z.; Ji, X. Rolling Colors: Adversarial Laser Exploits against Traffic Light Recognition. In Proceedings of the 31st USENIX Conference on Security Symposium, Boston, MA, USA, 10–12 August 2022; pp. 1957–1974. [Google Scholar]

- Song, R.; Ozmen, M.O.; Kim, H.; Muller, R.; Celik, Z.B.; Bianchi, A. Discovering Adversarial Driving Maneuvers against Autonomous Vehicles. In Proceedings of the 32nd USENIX Conference on Security Symposium, Anaheim, CA, USA, 9–11 August 2023; pp. 2957–2974. [Google Scholar]

- Jing, P.; Tang, Q.; Du, Y. Too Good to Be Safe: Tricking Lane Detection in Autonomous Driving with Crafted Perturbations. In Proceedings of the 30th USENIX Conference on Security Symposium, Vancouver, BC, Canada, 11–13 August 2021; pp. 3237–3254. [Google Scholar]

- Muller, R.; Song, R.; Wang, C. Investigating Physical Latency Attacks against Camera-Based Perception. In Proceedings of the 2025 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 12–15 May 2025; pp. 4588–4605. [Google Scholar] [CrossRef]

- Nassi, B.; Mirsky, Y.; Nassi, D.; Ben-Netanel, R.; Drokin, O.; Elovici, Y. Phantom of the ADAS: Securing Advanced Driver-Assistance Systems from Split-Second Phantom Attacks. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 9–13 November 2020; pp. 293–308. [Google Scholar] [CrossRef]

- Wang, W.; Yao, Y.; Liu, X.; Li, X.; Hao, P.; Zhu, T. I Can See the Light: Attacks on Autonomous Vehicles Using Invisible Lights. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 15–19 November 2021; pp. 1930–1944. [Google Scholar] [CrossRef]

- Zhang, Y.; Foroosh, H.; David, P.; Gong, B. Camou: Learning a Vehicle Camouflage for Physical Adversarial Attack on Object Detectors in the Wild. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019. [Google Scholar]

- Wang, J.; Liu, A.; Yin, Z.; Liu, S.; Tang, S.; Liu, X. Dual Attention Suppression Attack: Generate Adversarial Camouflage in Physical World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 8561–8570. [Google Scholar] [CrossRef]

- Wang, D.; Jiang, T.; Sun, J.; Zhou, W.; Gong, Z.; Zhang, X.; Yao, W.; Chen, X. FCA: Learning a 3D Full-Coverage Vehicle Camouflage for Multi-View Physical Adversarial Attack. Proc. AAAI Conf. Artif. Intell. 2022, 36, 2414–2422. [Google Scholar] [CrossRef]

- Suryanto, N.; Kim, Y.; Kang, H.; Larasati, H.T.; Yun, Y.; Le, T.T.H.; Yang, H.; Oh, S.Y.; Kim, H. DTA: Physical Camouflage Attacks Using Differentiable Transformation Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 15284–15293. [Google Scholar] [CrossRef]

- Zhou, J.; Lyu, L.; He, D.; Li, Y. RAUCA: A Novel Physical Adversarial Attack on Vehicle Detectors via Robust and Accurate Camouflage Generation. In Proceedings of the 41st International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024; Volume 235, pp. 62076–62087. [Google Scholar] [CrossRef]

- Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Xiao, C.; Prakash, A.; Kohno, T.; Song, D. Robust Physical-World Attacks on Deep Learning Visual Classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1625–1634. [Google Scholar] [CrossRef]

- Thys, S.; Ranst, W.V.; Goedeme, T. Fooling Automated Surveillance Cameras: Adversarial Patches to Attack Person Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 49–55. [Google Scholar] [CrossRef]

- Huang, H.; Chen, Z.; Chen, H.; Wang, Y.; Zhang, K. T-SEA: Transfer-Based Self-Ensemble Attack on Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 20514–20523. [Google Scholar] [CrossRef]

- Lian, J.; Wang, X.; Su, Y.; Ma, M.; Mei, S. CBA: Contextual Background Attack against Optical Aerial Detection in the Physical World. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5606616. [Google Scholar] [CrossRef]

- Chen, S.T.; Cornelius, C.; Martin, J.; Chau, D.H. ShapeShifter: Robust Physical Adversarial Attack on Faster R-CNN Object Detector. In Proceedings of the Machine Learning and Knowledge Discovery in Databases, Dublin, Ireland, 10–14 September 2019; Volume 11051, pp. 52–68. [Google Scholar] [CrossRef]

- Yang, K.; Tsai, T.; Yu, H.; Ho, T.Y.; Jin, Y. Beyond Digital Domain: Fooling Deep Learning Based Recognition System in Physical World. Proc. AAAI Conf. Artif. Intell. 2020, 34, 1088–1095. [Google Scholar] [CrossRef]

- Hu, Y.C.T.; Chen, J.C.; Kung, B.H.; Hua, K.L.; Tan, D.S. Naturalistic Physical Adversarial Patch for Object Detectors. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 7828–7837. [Google Scholar] [CrossRef]

- Tan, J.; Ji, N.; Xie, H.; Xiang, X. Legitimate Adversarial Patches: Evading Human Eyes and Detection Models in the Physical World. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021; pp. 5307–5315. [Google Scholar] [CrossRef]

- Guesmi, A.; Ding, R.; Hanif, M.A.; Alouani, I.; Shafique, M. DAP: A Dynamic Adversarial Patch for Evading Person Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 24595–24604. [Google Scholar]

- Tan, W.; Li, Y.; Zhao, C.; Liu, Z.; Pan, Q. DOEPatch: Dynamically Optimized Ensemble Model for Adversarial Patches Generation. IEEE Trans. Inf. Forensics Secur. 2024, 19, 9039–9054. [Google Scholar] [CrossRef]

- Huang, L.; Gao, C.; Zhou, Y.; Xie, C.; Yuille, A.L.; Zou, C.; Liu, N. Universal Physical Camouflage Attacks on Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 717–726. [Google Scholar] [CrossRef]

- Wu, Z.; Lim, S.N.; Davis, L.S.; Goldstein, T. Making an Invisibility Cloak: Real World Adversarial Attacks on Object Detectors. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Volume 12349, pp. 1–17. [Google Scholar] [CrossRef]

- Hu, Z.; Huang, S.; Zhu, X.; Sun, F.; Zhang, B.; Hu, X. Adversarial Texture for Fooling Person Detectors in the Physical World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13297–13306. [Google Scholar] [CrossRef]

- Hu, Z.; Chu, W.; Zhu, X.; Zhang, H.; Zhang, B.; Hu, X. Physically Realizable Natural-Looking Clothing Textures Evade Person Detectors via 3D Modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 16975–16984. [Google Scholar] [CrossRef]

- Sun, J.; Yao, W.; Jiang, T.; Wang, D.; Chen, X. Differential Evolution Based Dual Adversarial Camouflage: Fooling Human Eyes and Object Detectors. Neural Netw. 2023, 163, 256–271. [Google Scholar] [CrossRef]

- Wang, J.; Li, F.; He, L. A Unified Framework for Adversarial Patch Attacks against Visual 3D Object Detection in Autonomous Driving. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4949–4962. [Google Scholar] [CrossRef]

- Xiao, C.; Yang, D.; Li, B.; Deng, J.; Liu, M. MeshAdv: Adversarial Meshes for Visual Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6891–6900. [Google Scholar] [CrossRef]

- Lovisotto, G.; Turner, H.; Sluganovic, I.; Strohmeier, M.; Martinovic, I. SLAP: Improving Physical Adversarial Examples with Short-Lived Adversarial Perturbations. In Proceedings of the 30th USENIX Conference on Security Symposium, Vancouver, BC, Canada, 11–13 August 2021; pp. 1865–1882. [Google Scholar]

- Köhler, S.; Lovisotto, G.; Birnbach, S.; Baker, R.; Martinovic, I. They See Me Rollin’: Inherent Vulnerability of the Rolling Shutter in CMOS Image Sensors. In Proceedings of the 37th Annual Computer Security Applications Conference, Virtual, 6–10 December 2021; pp. 399–413. [Google Scholar] [CrossRef]

- Wen, H.; Chang, S.; Zhou, L. Light Projection-Based Physical-World Vanishing Attack against Car Detection. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Wen, H.; Chang, S.; Zhou, L.; Liu, W.; Zhu, H. OptiCloak: Blinding Vision-Based Autonomous Driving Systems Through Adversarial Optical Projection. IEEE Internet Things J. 2024, 11, 28931–28944. [Google Scholar] [CrossRef]

- Cheng, Y.; Ji, X.; Zhu, W.; Zhang, S.; Fu, K.; Xu, W. Adversarial Computer Vision via Acoustic Manipulation of Camera Sensors. IEEE Trans. Dependable Secur. Comput. 2024, 21, 3734–3750. [Google Scholar] [CrossRef]

- Khan, A.; Rauf, Z.; Sohail, A.; Khan, A.R.; Asif, H.; Asif, A.; Farooq, U. A Survey of the Vision Transformers and Their CNN-Transformer Based Variants. Artif. Intell. Rev. 2023, 56, 2917–2970. [Google Scholar] [CrossRef]

- Papa, L.; Russo, P.; Amerini, I.; Zhou, L. A Survey on Efficient Vision Transformers: Algorithms, Techniques, and Performance Benchmarking. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 7682–7700. [Google Scholar] [CrossRef] [PubMed]

- Lu, Y.; Jia, Y.; Wang, J.; Li, B.; Chai, W.; Carin, L.; Velipasalar, S. Enhancing Cross-Task Black-Box Transferability of Adversarial Examples with Dispersion Reduction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 937–946. [Google Scholar] [CrossRef]

- Zhao, Z.; Zhang, H.; Li, R.; Sicre, R.; Amsaleg, L.; Backes, M.; Li, Q.; Shen, C. Revisiting Transferable Adversarial Image Examples: Attack Categorization, Evaluation Guidelines, and New Insights. arXiv 2023, arXiv:2310.11850. [Google Scholar] [CrossRef]

- Fu, J.; Chen, Z.; Jiang, K.; Guo, H.; Wang, J.; Gao, S.; Zhang, W. Improving Adversarial Transferability of Vision-Language Pre-Training Models through Collaborative Multimodal Interaction. arXiv 2024, arXiv:2403.10883. [Google Scholar] [CrossRef]

- Kim, T.; Lee, H.J.; Ro, Y.M. MAP: Multispectral Adversarial Patch to Attack Person Detection. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 4853–4857. [Google Scholar] [CrossRef]

- Wei, X.; Huang, Y.; Sun, Y.; Yu, J. Unified Adversarial Patch for Cross-Modal Attacks in the Physical World. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4422–4431. [Google Scholar] [CrossRef]

- Zhang, T.; Jha, R. Adversarial Illusions in Multi-Modal Embeddings. In Proceedings of the 33rd USENIX Security Symposium, Philadelphia, PA, USA, 14–16 August 2024; pp. 3009–3025. [Google Scholar]

- Williams, P.N.; Li, K. CamoPatch: An Evolutionary Strategy for Generating Camouflaged Adversarial Patches. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 67269–67283. [Google Scholar]

- Liu, F.; Zhang, C.; Zhang, H. Towards Transferable Unrestricted Adversarial Examples with Minimum Changes. In Proceedings of the IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), Raleigh, NC, USA, 8–10 February 2023; pp. 327–338. [Google Scholar] [CrossRef]

- Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Tramer, F.; Prakash, A.; Kohno, T.; Song, D. Physical Adversarial Examples for Object Detectors. In Proceedings of the 12th USENIX Workshop on Offensive Technologies, Baltimore, MD, USA, 13–14 August 2018. [Google Scholar]

- Xia, H.; Zhang, R.; Kang, Z.; Jiang, S.; Xu, S. Enhance Stealthiness and Transferability of Adversarial Attacks with Class Activation Mapping Ensemble Attack. In Proceedings of the 2024 Network and Distributed System Security Symposium, San Diego, CA, USA, 26 February–1 March 2024. [Google Scholar] [CrossRef]

- Williams, P.N.; Li, K. Black-Box Sparse Adversarial Attack via Multi-Objective Optimisation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 12291–12301. [Google Scholar] [CrossRef]

- Brown, T.B.; Mané, D.; Roy, A.; Abadi, M.; Gilmer, J. Adversarial Patch. In Proceedings of the Advances in Neural Information Processing Systems Workshop, Montreal, QC, Canada, 3–8 December 2018. [Google Scholar] [CrossRef]

- Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial Examples in the Physical World. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017. [Google Scholar] [CrossRef]

- Jiang, Q.; Ji, X.; Yan, C.; Xie, Z.; Lou, H.; Xu, W. GlitchHiker: Uncovering Vulnerabilities of Image Signal Transmission with IEMI. In Proceedings of the 32nd USENIX Conference on Security Symposium, Anaheim, CA, USA, 9–11 August 2023; pp. 7249–7266. [Google Scholar]

- Lin, G.; Niu, M.; Zhu, Q.; Yin, Z.; Li, Z.; He, S.; Zheng, Y. Adversarial Attacks on Event-Based Pedestrian Detectors: A Physical Approach. In Proceedings of the 39th Annual AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025. [Google Scholar] [CrossRef]

- Athalye, A.; Engstrom, L.; Ilyas, A.; Kwok, K. Synthesizing Robust Adversarial Examples. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 284–293. [Google Scholar]

- Xie, T.; Han, H.; Shan, S.; Chen, X. Natural Adversarial Mask for Face Identity Protection in Physical World. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 2089–2106. [Google Scholar] [CrossRef]

- Addepalli, S.; Jain, S.; Babu, R.V. Efficient and Effective Augmentation Strategy for Adversarial Training. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 1488–1501. [Google Scholar]

- Dong, Y.; Ruan, S.; Su, H.; Kang, C.; Wei, X.; Zhu, J. ViewFool: Evaluating the Robustness of Visual Recognition to Adversarial Viewpoints. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 36789–36803. [Google Scholar] [CrossRef]

- Wei, Z.; Wang, Y.; Guo, Y.; Wang, Y. CFA: Class-Wise Calibrated Fair Adversarial Training. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 8193–8201. [Google Scholar] [CrossRef]

- Li, L.; Spratling, M. Data Augmentation Alone Can Improve Adversarial Training. In Proceedings of the International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023. [Google Scholar] [CrossRef]

- Xu, Y.; Sun, Y.; Goldblum, M.; Goldstein, T.; Huang, F. Exploring and Exploiting Decision Boundary Dynamics for Adversarial Robustness. In Proceedings of the International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023. [Google Scholar] [CrossRef]

- Latorre, F.; Krawczuk, I.; Dadi, L.; Pethick, T.; Cevher, V. Finding Actual Descent Directions for Adversarial Training. In Proceedings of the International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023. [Google Scholar]

- Yuan, X.; Zhang, Z.; Wang, X.; Wu, L. Semantic-Aware Adversarial Training for Reliable Deep Hashing Retrieval. IEEE Trans. Inf. Forensics Secur. 2023, 18, 4681–4694. [Google Scholar] [CrossRef]

- Ruan, S.; Dong, Y.; Su, H.; Peng, J.; Chen, N.; Wei, X. Towards Viewpoint-Invariant Visual Recognition via Adversarial Training. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4686–4696. [Google Scholar] [CrossRef]

- Li, Q.; Shen, C.; Hu, Q.; Lin, C.; Ji, X.; Qi, S. Towards Gradient-Based Saliency Consensus Training for Adversarial Robustness. IEEE Trans. Dependable Secur. Comput. 2024, 21, 530–541. [Google Scholar] [CrossRef]

- Liu, Y.; Yang, C.; Li, D.; Ding, J.; Jiang, T. Defense against Adversarial Attacks on No-Reference Image Quality Models with Gradient Norm Regularization. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 25554–25563. [Google Scholar] [CrossRef]

- Bai, Y.; Ma, Z.; Chen, Y.; Deng, J.; Pang, S.; Liu, Y.; Xu, W. Alchemy: Data-Free Adversarial Training. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; pp. 3808–3822. [Google Scholar] [CrossRef]

- He, C.; Zhu, B.B.; Ma, X.; Jin, H.; Hu, S. Feature-Indistinguishable Attack to Circumvent Trapdoor-Enabled Defense. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 15–19 November 2021; pp. 3159–3176. [Google Scholar] [CrossRef]

- Harder, P.; Pfreundt, F.J.; Keuper, M.; Keuper, J. SpectralDefense: Detecting Adversarial Attacks on CNNs in the Fourier Domain. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8. [Google Scholar] [CrossRef]

- Nie, W.; Guo, B.; Huang, Y.; Xiao, C.; Vahdat, A.; Anandkumar, A. Diffusion Models for Adversarial Purification. arXiv 2022, arXiv:2205.07460. [Google Scholar] [CrossRef]

- Shi, X.; Peng, Y.; Chen, Q.; Keenan, T.; Thavikulwat, A.T.; Lee, S.; Tang, Y.; Chew, E.Y.; Summers, R.M.; Lu, Z. Robust Convolutional Neural Networks against Adversarial Attacks on Medical Images. Pattern Recognit. 2022, 132, 108923. [Google Scholar] [CrossRef]

- Zhu, H.; Zhang, S.; Chen, K. AI-Guardian: Defeating Adversarial Attacks Using Backdoors. In Proceedings of the 2023 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 21–25 May 2023; pp. 701–718. [Google Scholar] [CrossRef]

- Wang, Y.; Li, X.; Yang, L.; Ma, J.; Li, H. ADDITION: Detecting Adversarial Examples with Image-Dependent Noise Reduction. IEEE Trans. Dependable Secur. Comput. 2024, 21, 1139–1154. [Google Scholar] [CrossRef]

- Wu, S.; Wang, J.; Zhao, J.; Wang, Y.; Liu, X. NAPGuard: Towards Detecting Naturalistic Adversarial Patches. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 24367–24376. [Google Scholar] [CrossRef]

- Diallo, A.F.; Patras, P. Sabre: Cutting through Adversarial Noise with Adaptive Spectral Filtering and Input Reconstruction. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 2901–2919. [Google Scholar] [CrossRef]

- Zhou, Z.; Li, M.; Liu, W.; Hu, S.; Zhang, Y.; Wan, W.; Xue, L.; Zhang, L.Y.; Yao, D.; Jin, H. Securely Fine-Tuning Pre-Trained Encoders against Adversarial Examples. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 3015–3033. [Google Scholar] [CrossRef]

- Xiang, C.; Mittal, P. DetectorGuard: Provably Securing Object Detectors against Localized Patch Hiding Attacks. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 15–19 November 2021; pp. 3177–3196. [Google Scholar] [CrossRef]

- Xiang, C.; Sehwag, V.; Mittal, P. PatchGuard: A Provably Robust Defense against Adversarial Patches via Small Receptive Fields and Masking. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Online, 11–13 August 2021. [Google Scholar]

- Xiang, C.; Mahloujifar, S.; Mittal, P. PatchCleanser: Certifiably Robust Defense against Adversarial Patches for Any Image Classifier. In Proceedings of the 31st USENIX Security Symposium (USENIX Security), Boston, MA, USA, 10–12 August 2022; pp. 2065–2082. [Google Scholar]

- Liu, J.; Levine, A.; Lau, C.P.; Chellappa, R.; Feizi, S. Segment and Complete: Defending Object Detectors against Adversarial Patch Attacks with Robust Patch Detection. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 14953–14962. [Google Scholar] [CrossRef]

- Cai, J.; Chen, S.; Li, H.; Xia, B.; Mao, Z.; Yuan, W. HARP: Let Object Detector Undergo Hyperplasia to Counter Adversarial Patches. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 2673–2683. [Google Scholar] [CrossRef]

- Xu, K.; Xiao, Y.; Zheng, Z.; Cai, K.; Nevatia, R. PatchZero: Defending against Adversarial Patch Attacks by Detecting and Zeroing the Patch. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 4621–4630. [Google Scholar] [CrossRef]

- Lin, Z.; Zhao, Y.; Chen, K.; He, J. I Don’t Know You, but I Can Catch You: Real-Time Defense against Diverse Adversarial Patches for Object Detectors. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; pp. 3823–3837. [Google Scholar] [CrossRef]

- Jing, L.; Wang, R.; Ren, W.; Dong, X.; Zou, C. PAD: Patch-Agnostic Defense against Adversarial Patch Attacks. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 24472–24481. [Google Scholar] [CrossRef]

- Xiang, C.; Wu, T.; Dai, S.; Mittal, P. PatchCURE: Improving Certifiable Robustness Model Utility and Computation Efficiency of Adversarial Patch Defense. In Proceedings of the 33rd USENIX Security Symposium (USENIX Security 24), Philadelphia, PA, USA, 14–16 August 2024; pp. 3675–3692. [Google Scholar]

- Feng, J.; Li, J.; Miao, C.; Huang, J.; You, W.; Shi, W.; Liang, B. Fight Fire with Fire: Combating Adversarial Patch Attacks Using Pattern-Randomized Defensive Patches. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 12–15 May 2025; pp. 2133–2151. [Google Scholar] [CrossRef]

- Wei, X.; Kang, C.; Dong, Y.; Wang, Z.; Ruan, S.; Chen, Y.; Su, H. Real-World Adversarial Defense against Patch Attacks Based on Diffusion Model. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 1–17. [Google Scholar] [CrossRef]

- Yu, Z.; Li, A.; Wen, R.; Chen, Y.; Zhang, N. PhySense: Defending Physically Realizable Attacks for Autonomous Systems via Consistency Reasoning. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, Salt Lake City, UT, USA, 14–18 October 2024; pp. 3853–3867. [Google Scholar] [CrossRef]

- Kobayashi, R.; Mori, T. Invisible but Detected: Physical Adversarial Shadow Attack and Defense on LiDAR Object Detection. In Proceedings of the 34th USENIX Security Symposium (USENIX Security 25), Seattle, WA, USA, 13–15 August 2025; pp. 7369–7386. [Google Scholar]

| AP | Method | Gen | Physical Deployment | Transferability | Perceptibility |
|---|---|---|---|---|---|
| Patch | extended-RP2 [103] | GB | Printed, placed | - | Visible |
| Patch | Nested-AE [38] | GB | Printed, placed | Same-bone | Visible |
| Patch | OBJ-CLS [72] | GB | Printed, placed | - | Visible |
| Patch | AP-PA [15] | GB, SM | Printed, placed (on, around) | Diverse-bone | Visible |
| Patch | T-SEA [73] | SM | Displayed on iPad | Same-bone | Visible |
| Patch | SysAdv [11] | GB | Printed, placed | Same-bone | Visible |
| Patch | CBA [74] | GB, SM | Printed, placed (around) | Diverse-bone | Visible |
| Patch | PatchGen [57] | SM | Printed, placed | Diverse-bone | Visible |
| Cloth-P | NAP [77] | GM | Printed, worn | Same-bone | Natural |
| Cloth-P | LAP [78] | GB | Printed, worn | - | Natural |
| Cloth-P | DAP [79] | GB | Printed, worn | Same-bone | Natural |
| Cloth-P | DOEPatch [80] | SM | Printed, worn | Same-bone | Visible |
| Sticker | TLD [62] | BQ | Printed, placed | - | Covert |
| Image-P | ShapeShifter [75] | GB | Printed, photographed | - | Visible |
| Image-P | LPAttack [76] | GB | Printed, photographed | Same-bone | Visible |
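Many of the printed patches above are optimized by gradient descent over a distribution of physical transformations, in the spirit of Expectation over Transformation (EOT) [110]. The sketch below illustrates only that principle and is not the procedure of any listed method: the "detector" is a hypothetical logistic score over four patch pixels, the physical variation is assumed to be a random lighting gain and offset, and the patch is kept in the printable range [0, 1] by projection after every step.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def toy_detector_score(pixels: List[float], weights: List[float], bias: float) -> float:
    """Hypothetical stand-in for a detector's person/objectness confidence."""
    z = sum(w * x for w, x in zip(weights, pixels)) + bias
    return sigmoid(z)


def sample_transform(rng: random.Random) -> Tuple[float, float]:
    """Assumed physical variation: a lighting change modeled as gain and offset."""
    return rng.uniform(0.8, 1.2), rng.uniform(-0.1, 0.1)


def expected_score(patch, weights, bias, trials=500, seed=1) -> float:
    """Monte Carlo estimate of the detector score over sampled transformations."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        gain, offset = sample_transform(rng)
        total += toy_detector_score([gain * p + offset for p in patch], weights, bias)
    return total / trials


def eot_patch_attack(weights, bias, steps=200, lr=0.5, samples=16, seed=0) -> List[float]:
    """Projected gradient descent on the expected score (EOT-style vanishing attack)."""
    rng = random.Random(seed)
    patch = [0.5] * len(weights)  # start from mid-grey
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for _ in range(samples):
            gain, offset = sample_transform(rng)
            s = toy_detector_score([gain * p + offset for p in patch], weights, bias)
            # Chain rule through the transform: d s / d patch_i = s * (1 - s) * w_i * gain
            for i, w in enumerate(weights):
                grad[i] += s * (1.0 - s) * w * gain / samples
        # Descend on the averaged gradient, then project back onto printable [0, 1].
        patch = [min(1.0, max(0.0, p - lr * g)) for p, g in zip(patch, grad)]
    return patch


if __name__ == "__main__":
    weights, bias = [1.0, -2.0, 0.5, 3.0], 0.0  # hypothetical toy detector
    adv = eot_patch_attack(weights, bias)
    print("patch:", adv)
    print("score before:", round(expected_score([0.5] * 4, weights, bias), 3))
    print("score after:", round(expected_score(adv, weights, bias), 3))
```

Averaging the gradient over sampled transformations, rather than using a single view, is what lets the optimized pattern keep suppressing the score when lighting changes; real attacks add further terms (for example printability or smoothness penalties) that this toy omits.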

| AP | Method | Akc | VMa | VT | AInt | Venue |
|---|---|---|---|---|---|---|
| ALP | Phantom Attack [64] | BB | RS | PD, TSR | UT | 2020 CCS |
| ALP | SLAP [88] | WB, BB | CNN | OD, TSR | UT, TA | 2021 USENIX |
| AAP | PG attack [59] | BB | CNN | OD | UT, TA | 2021 S&P |
| ALP | Rolling Shutter [89] | BB | CNN | OD | UT | 2021 ACSAC |
| ALP | ICSL [65] | BB | RS | OD, TSD | UT | 2021 CCS |
| ALP | Vanishing Attack [90] | WB, BB | CNN | VD | UT | 2023 ICASSP |
| AAP | TPatch [42] | WB, BB | CNN | OD, IC | TA | 2023 USENIX |
| AEP | GlitchHiker [108] | GrB | CNN | OD, FR | UT | 2023 USENIX |
| ALP | OptiCloak [91] | WB, BB | CNN | VD | UT, TA | 2024 IoTJ |
| AAP | Cheng et al. [92] | BB | CNN | OD, LD | UT, TA | 2024 TDSC |
| ALP | L-HAWK [16] | WB | CNN | OD, IC | TA | 2025 NDSS |
| ALP | DETSTORM [63] | WB | CNN, Other | OD, OT | UT | 2025 S&P |

| Method | Gen | Signal Source | Physical Deployment | Transferability | Perceptibility |
|---|---|---|---|---|---|
| Phantom Attack [64] | — | Projector or screen | Projected or displayed | — | Natural |
| SLAP [88] | GB, SM | Projector (RGB) | Projected short-lived light | Same-bone | Visible |
| PG attack [59] | BQ | Acoustic signals | Injected into the inertial sensors | Same-bone | Imperceptible |
| Rolling Shutter [89] | BQ | Blue laser signal | Injected into rolling shutter | Same-bone | Covert |
| ICSL [65] | BQ | Infrared laser LED | Deployed and injected into camera | Diverse-bone | Covert |
| Vanishing Attack [90] | GB, SM | Projector | Projected | Same-bone | Visible |
| TPatch [42] | GB, SM | Acoustic signals, patch | Injected, affixed patch | Same-bone | Covert |
| GlitchHiker [108] | BQ | Intentional electromagnetic interference (IEMI) | Injected signal | Same-bone | Covert |
| OptiCloak [91] | GB, BQ | Projector | Projected | Same-bone | Visible |
| Cheng et al. [92] | BQ | Acoustic signals | Injected into the inertial sensors | Same-bone | Imperceptible |
| L-HAWK [16] | GB | Laser signal, patch | Injected, affixed patch | Same-bone | Covert |
| DETSTORM [63] | GB | Projector | Projected into camera perception pipeline | Diverse-bone | Covert |
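The rolling-shutter entry above exploits the fact that a CMOS rolling shutter exposes image rows one after another, so a light source modulated faster than the frame rate is recorded as horizontal stripes rather than as a uniform glow. The simplified model below sketches that mechanism only; it is not the implementation of Rolling Shutter [89]. It assumes an idealized square-wave laser, a constant per-row readout delay, and no optics or sensor noise, and all timing values (in microseconds) are hypothetical.

```python
from typing import List


def laser_on(t: float, period: float, duty: float = 0.5) -> bool:
    """Idealized square-wave laser: on for the first `duty` fraction of each period."""
    return (t % period) < duty * period


def rolling_shutter_rows(n_rows: int, t_row: float, t_exp: float,
                         period: float, duty: float = 0.5, steps: int = 200) -> List[float]:
    """Fraction of each row's exposure window during which the laser is on.

    Row i starts exposing at i * t_row (sequential readout) and integrates light
    for t_exp; the window is approximated by `steps` midpoint samples.
    """
    rows = []
    dt = t_exp / steps
    for i in range(n_rows):
        start = i * t_row
        lit = sum(laser_on(start + (k + 0.5) * dt, period, duty) for k in range(steps))
        rows.append(lit / steps)
    return rows


if __name__ == "__main__":
    # Short exposure (20 us) vs. a 200 us laser period: stripes repeat every 200 / 10 = 20 rows.
    short = rolling_shutter_rows(n_rows=40, t_row=10.0, t_exp=20.0, period=200.0)
    # Exposure equal to one full period: every row averages to the duty cycle, so no stripes.
    long = rolling_shutter_rows(n_rows=40, t_row=10.0, t_exp=200.0, period=200.0)
    print([round(r, 2) for r in short])
    print([round(r, 2) for r in long])
```

In this model the stripe pitch in rows is the modulation period divided by the row readout time, and the stripes wash out once the exposure approaches the modulation period, which suggests why such injections depend on short exposure settings.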

| AP | Method | Akc | VMa | VT | AInt | Venue |
|---|---|---|---|---|---|---|
| AC (w/o) | UPC [81] | WB | CNN | OD | UT | 2020 CVPR |
| AC (w/o) | Invisibility Cloak [82] | WB | CNN | PD | UT | 2020 ECCV |
| AC (w/o) | AdvT-shirt [49] | WB | CNN | PD | UT | 2020 ECCV |
| AC (w/o) | TC-EGA [83] | WB | CNN | PD | UT | 2022 CVPR |
| AC (w/) | MeshAdv [87] | WB | CNN | OD, IC | TA | 2019 CVPR |
| AC (w/) | CAMOU [66] | BB | CNN | VD | UT | 2019 ICLR |
| AC (w/) | DAS [67] | BB | CNN | OD, IC | UT | 2021 CVPR |
| AC (w/) | CAC [39] | WB | CNN | VD | TA | 2022 IJCAI |
| AC (w/) | FCA [68] | BB | CNN | VD | UT | 2022 AAAI |
| AC (w/) | DTA [69] | WB | CNN | VD | UT | 2022 CVPR |
| AC (w/) | AdvCaT [84] | WB | CNN, TF | PD | UT | 2023 CVPR |
| AC (w/) | ACTIVE [53] | WB | CNN, TF | VD, IS | UT | 2023 ICCV |
| AC (w/) | DAC [85] | BB | CNN | PD | UT | 2023 NN |
| AC (w/) | TT3D [55] | BB | CNN, TF | OD, IC, ICap | TA | 2024 CVPR |
| AC (w/) | FPA [35] | WB | CNN, TF | OD, VD | UT | 2024 arXiv |
| AC (w/) | RAUCA [70] | BB | CNN, TF | VD | UT | 2024 ICML |
| AC (w/) | Lin et al. [109] | WB | TF | PD | UT | 2025 AAAI |
| AC (w/) | Wang et al. [86] | WB | CNN, TF | OD (3D) | UT | 2025 TCSVT |

| Method | Gen | Renderer | Physical Deployment | Transferability | Perceptibility |
|---|---|---|---|---|---|
| UPC [81] | GB | — | Printed, attached | Same-bone | Visible |
| Invisibility Cloak [82] | GB | — | Printed, worn | Same-bone | Visible |
| AdvT-shirt [49] | GB | — | Printed, worn | Same-bone | Visible |
| TC-EGA [83] | GB, GM | — | Printed, tailored | Same-bone | Visible |
| MeshAdv [87] | GB | NNR | 3D-printed, placed | Same-bone | Visible |
| CAMOU [66] | SM | NNR | Painted on target | Same-bone | Visible |
| DAS [67] | SM | NNR | Printed, attached to a toy car | Same-bone | Visible |
| CAC [39] | GB | NNR | 3D-printed car model | Same-bone | Visible |
| FCA [68] | SM | NNR | Printed, attached to a toy car | Same-bone | Visible |
| DTA [69] | GB | DNR, NNR | 3D-printed car model | Same-bone | Visible |
| AdvCaT [84] | GB | DNR | Printed, tailored | Diverse-bone | Natural |
| ACTIVE [53] | GB | DNR | 3D-printed car model | Diverse-bone | Visible |
| DAC [85] | SM | NNR | Printed, worn | Same-bone | Natural |
| TT3D [55] | SM | DNR, NNR | 3D-printed, placed | Diverse-bone | Natural |
| FPA [35] | GB | DNR, NNR | Printed, attached to a toy car | Diverse-bone | Visible |
| RAUCA [70] | SM | DNR | Printed, attached to a toy car | Diverse-bone | Visible |
| Lin et al. [109] | GB | DNR | Printed, assembled | - | Natural |
| Wang et al. [86] | GB | DNR | Printed, attached | Diverse-bone | Visible |

| Transferability | Physical Deployment | Method |
|---|---|---|
| Same-bone | Printed Deployment | [11,38,39,49,67,68,69,76,77,79,80,81,82,83,85,87] |
| Same-bone | Injected Signal | [16,42,59,88,89,90,91,92,108] |
| Diverse-bone | Printed Deployment | [15,35,53,55,57,70,74,84,86] |
| Diverse-bone | Injected Signal | [63,65] |

| Pipeline Stage | Defense Strategy | Defense Objective | Cost and Limitations | Defense Method |
|---|---|---|---|---|
| Model Training | Adversarial Training | Enhancing model’s intrinsic robustness | Poor defense against novel or unseen adversarial patterns | [112,113,114,115,116,117,118,119,120,121,122] |
| Data Input | Input Transformation | Neutralizing adversarial features in input | Potential damage to valid image information, degrading primary task performance | [123,124,125,126,127,128,129,130,131] |
| Model Inference | Adversarial Purging | Locating and cleansing adversarial patterns | Ineffective against subtle or natural adversarial patterns | [132,133,134,135,136,137,138,139,140,141,142] |
| Result Output | Multi-Modal Fusion | Achieving output consensus from multiple modalities or models | High computational overhead and poor real-time performance | [143,144] |
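The "output consensus" objective in the last row can be illustrated with a simple cross-check between two detectors. The sketch below is a generic IoU-matching rule, not the method of [143] or [144]. It assumes that camera and LiDAR detections have already been projected into a shared image frame as (x1, y1, x2, y2) boxes, and the 0.5 IoU threshold is a hypothetical choice. Detections seen by only one modality are reported separately, since a camera-only object may indicate an injected "phantom" and a LiDAR-only object may indicate a camera-side vanishing attack.

```python
from typing import List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in an assumed shared image frame


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def cross_modal_consensus(camera: List[Box], lidar: List[Box], thr: float = 0.5):
    """Greedily match camera boxes to LiDAR boxes; split results by agreement."""
    agreed: List[Box] = []
    camera_only: List[Box] = []
    matched: Set[int] = set()
    for c in camera:
        best_j, best = -1, 0.0
        for j, l in enumerate(lidar):
            if j in matched:
                continue
            v = iou(c, l)
            if v > best:
                best_j, best = j, v
        if best_j >= 0 and best >= thr:
            matched.add(best_j)
            agreed.append(c)
        else:
            camera_only.append(c)  # possible phantom / injected object
    lidar_only = [l for j, l in enumerate(lidar) if j not in matched]  # possibly hidden from camera
    return agreed, camera_only, lidar_only


if __name__ == "__main__":
    cam = [(0.0, 0.0, 10.0, 10.0)]
    lid = [(1.0, 1.0, 10.0, 10.0), (20.0, 20.0, 30.0, 30.0)]
    print(cross_modal_consensus(cam, lid))
```

Running both detectors is the source of the overhead noted in the table, and a consensus rule of this kind does not help when an attack deceives both modalities at once, as in Cao et al. [58].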

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, G.; Cao, M.; Zhang, Y.; Xu, S.; Cao, Y. From 2D-Patch to 3D-Camouflage: A Review of Physical Adversarial Attack in Object Detection. Electronics 2025, 14, 4236. https://doi.org/10.3390/electronics14214236
