Abstract

Deep neural networks have demonstrated remarkable performance in object detection tasks; however, they remain highly susceptible to adversarial attacks. Previous surveys in computer vision have provided considerable coverage of physical adversarial attacks, yet the aspect of systematically categorizing and evaluating their physical deployment methods for object detection has not received commensurate focus. To address this gap, we categorize physical adversarial attacks into three primary classes based on the physical deployment of adversarial patterns: manipulating 2D physical objects, injecting adversarial signals, and placing 3D adversarial camouflage. These categories are further analyzed and compared across nine key attributes. Furthermore, we elucidate the relationship between physical adversarial attacks against object detection models and two critical properties: transferability and perceptibility. Our findings indicate that while attacks involving the manipulation of 2D physical objects are relatively straightforward to deploy, their adversarial patterns are often perceptible to human observers. Similarly, 3D adversarial camouflage tends to lack stealthiness, whereas adversarial signal injection offers stronger imperceptibility. However, all three attack types exhibit limited transferability across different models and modalities. Finally, we discuss current challenges and propose actionable directions for future research, aiming to foster the development of more robust object detection systems.

1. Introduction

In recent years, Deep Neural Networks (DNNs) have been extensively utilized in object detection tasks [1,2]. These tasks often serve as the foundation in fields such as autonomous driving [3,4] and video surveillance [5]. Existing research has highlighted the vulnerability of DNNs [6,7]. Adversarial examples pose a significant security threat to object detection models, and this threat continues to be a key area of research [8,9].

For example, in autonomous driving systems, object detectors are employed to recognize traffic signs in order to assist with safety and security [10,11]. By pasting a physical adversarial patch on a traffic sign (such as a stop sign), adversaries can cause the object detector to misrecognize or ignore the sign once it is captured by the onboard camera, which may lead to vehicle collisions and traffic rule violations [12,13].

In physical adversarial attacks against object detection models, adversaries need to place physical adversarial patterns in the physical environment so that the image perception device can capture the target carrying adversarial patterns, turning it into a digital adversarial example that serves as the input for the victim model [14,15,16]. In real environments, physical adversarial patterns are subject to various physical constraints, such as shape, size, color, lighting, distance, viewing angle, and limitations of image perception devices. Deploying physical adversarial patterns is therefore more challenging than adding adversarial perturbations in digital adversarial attacks [4,17]. Motivated by these challenges, this work offers a thorough summary of physical adversarial attacks in object detection and clarifies the relationship between physical adversarial attacks and their transferability and perceptibility.

1.1. Literature Selection

This review employs a systematic literature review methodology [18] to evaluate the state-of-the-art academic and industrial research on physical adversarial attacks against object detection, including pedestrian and vehicle detection, as well as models for infrared detection. In this review, the following keywords were used as search strings: physical adversarial attack, object detection, person detection, vehicle detection.

Our literature search was conducted up to July 2025, encompassing various scholarly search engines and platforms, including the ACM Digital Library (https://dl.acm.org/, accessed on 15 July 2025), IEEE Xplore (https://ieeexplore.ieee.org/Xplore/home.jsp, accessed on 12 July 2025), Elsevier (https://www.elsevier.com/en-in, accessed on 12 July 2025), Springer (https://www.springer.com/gp, accessed on 12 July 2025), Web of Science (https://webofscience.clarivate.cn/wos/woscc/basic-search, accessed on 12 July 2025), Scopus (https://www.scopus.com/pages/home?display=basic#basic, accessed on 12 July 2025), and Google Scholar (https://scholar.google.com/, accessed on 12 July 2025). We drew mainly on top journals and conferences, such as CCS, S&P, USENIX, NDSS, AAAI, CVPR, ICCV, ICML, TIFS, TDSC, TGRS, TPAMI, IJCV, TIP, NeurIPS, IoT, and ICLR. The selection criteria were as follows:

- (1) Physical deployment. The attack strategies and methods in this review had to be implementable in a physical environment.

- (2) Victim tasks. This review focuses on physical adversarial attacks against object detection models, including related tasks such as person detection, vehicle detection, and traffic sign detection.

- (3) Conceptual cohesiveness. Articles were selected based on their relevance and cohesive contribution to the field of physical adversarial attacks.

We employed the OR operator to incorporate synonymous terms for core concepts, such as “physical adversarial attack” OR “physical adversarial example” OR “adversarial patch” OR “adversarial camouflage”. We then applied the AND operator to combine different conceptual groups and refine the search results, for example, (“physical adversarial attack” OR “physical adversarial example”) AND (“object detection” OR “vehicle detection”).
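The Boolean search strategy described above can be sketched in a few lines of Python. This is only an illustration of how OR-groups and AND-combinations compose; the helper names `or_group` and `build_query` are hypothetical, and the exact query syntax accepted by each database may differ.

```python
def or_group(terms):
    """Join synonymous terms with OR and wrap the group in parentheses."""
    quoted = [f'"{term}"' for term in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*concept_groups):
    """Combine several OR-groups with AND to narrow the search."""
    return " AND ".join(or_group(group) for group in concept_groups)


# Synonyms for the attack concept and for the victim task (taken from the text).
attack_terms = ["physical adversarial attack", "physical adversarial example"]
task_terms = ["object detection", "vehicle detection"]

query = build_query(attack_terms, task_terms)
print(query)
```

Adding further synonyms, such as "adversarial patch" or "adversarial camouflage", only requires extending the corresponding list.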

This systematic categorization and organization help identify strengths and weaknesses, recurring trends, and research gaps in physical adversarial attacks against object detection, thereby achieving a comprehensive understanding of existing work. Subsequently, the top 200 articles were read individually to analyze their scope and contributions.

Although the domain of physical adversarial attacks has received considerable attention in computer vision surveys, the aspect of systematically categorizing and evaluating their physical deployment methods for object detection has not received commensurate focus. To address this gap, this review systematically applies and evaluates the taxonomy of physical deployment methods, transferability, and perceptibility to organize and assess the progress in generating 2D adversarial patterns and 3D adversarial camouflage for object detection.

Wei et al. [19] focused on physical adversarial attacks, organizing the current physical attacks from attack tasks, attack forms, and attack methods. Wang et al. [20] mainly reviewed physical adversarial attacks in image recognition and object detection tasks, covering some physical adversarial attacks in object tracking and semantic segmentation. Guesmi et al. [21] explored physical adversarial attacks for various camera-based computer vision tasks. Wei et al. [22] introduced the adversarial medium to summarize physical adversarial attacks in computer vision and proposed six evaluation metrics. Nguyen et al. [23] specifically studied physical adversarial attacks for four surveillance tasks. Additionally, Zhang et al. [24] conducted a comparative analysis of datasets and victim models in digital adversarial attacks. A comparative analysis of existing physical adversarial attack review literature is shown in Table 1. Our work systematically reviews the development of physical adversarial attacks in object detection, from 2D patches to 3D adversarial camouflages, and assesses key properties such as transferability and perceptibility, filling important gaps in current research.

Table 1.

A comparative analysis of several existing surveys about physical adversarial attacks.


| Survey | 3D Adversarial Camouflage | Physical Deployment | Transferability | Perceptibility | Year |
|---|---|---|---|---|---|
| Wei et al. [19] | × | × | × | × | 2023 |
| Wang et al. [20] | × | × | × | × | 2023 |
| Guesmi et al. [21] | × | × | ✓ | ✓ | 2023 |
| Wei et al. [22] | × | ✓ | × | ✓ | 2024 |
| Nguyen et al. [23] | × | × | × | × | 2024 |
| Zhang et al. [24] | × | ✓ | ✓ | 2024 | |
| Ours | ✓ | ✓ | ✓ | ✓ | 2025 |

1.2. Our Contributions

Under the various physical constraints of the real world, how can we establish a systematic framework to categorize, evaluate, and understand the physical deployment of adversarial patterns (both 2D and 3D) against object detection systems, and what are the key trade-offs and relationships among transferability, perceptibility, and the physical deployment manner of such attacks? Our work aims to fill this gap by introducing a novel taxonomy of physical adversarial attacks from 2D adversarial patterns to 3D adversarial camouflage, and we propose a graded evaluation framework for transferability and perceptibility of adversarial attacks. This review offers the following contributions:

- This review identifies, explores, and evaluates physical adversarial attacks targeting object detection systems, including pedestrian and vehicle detection.

- We propose a novel taxonomy for physical adversarial attacks against object detection, categorizing them into three classes based on the generation and deployment of adversarial patterns: manipulating 2D physical objects, injecting adversarial signals, and placing 3D adversarial camouflage. These categories are systematically compared on nine key attributes.

- The transferability of adversarial attacks and the perceptibility of adversarial examples are further analyzed and evaluated. Transferability is classified into two levels: cross-model and cross-modal. Perceptibility is divided into four levels: visible, natural, stealthy, and imperceptible.

- We discuss the challenges of adversarial attacks against object detection models, propose potential improvements, and suggest future research directions. Our goal is to inspire further work that emphasizes security threats affecting the entire object detection pipeline.

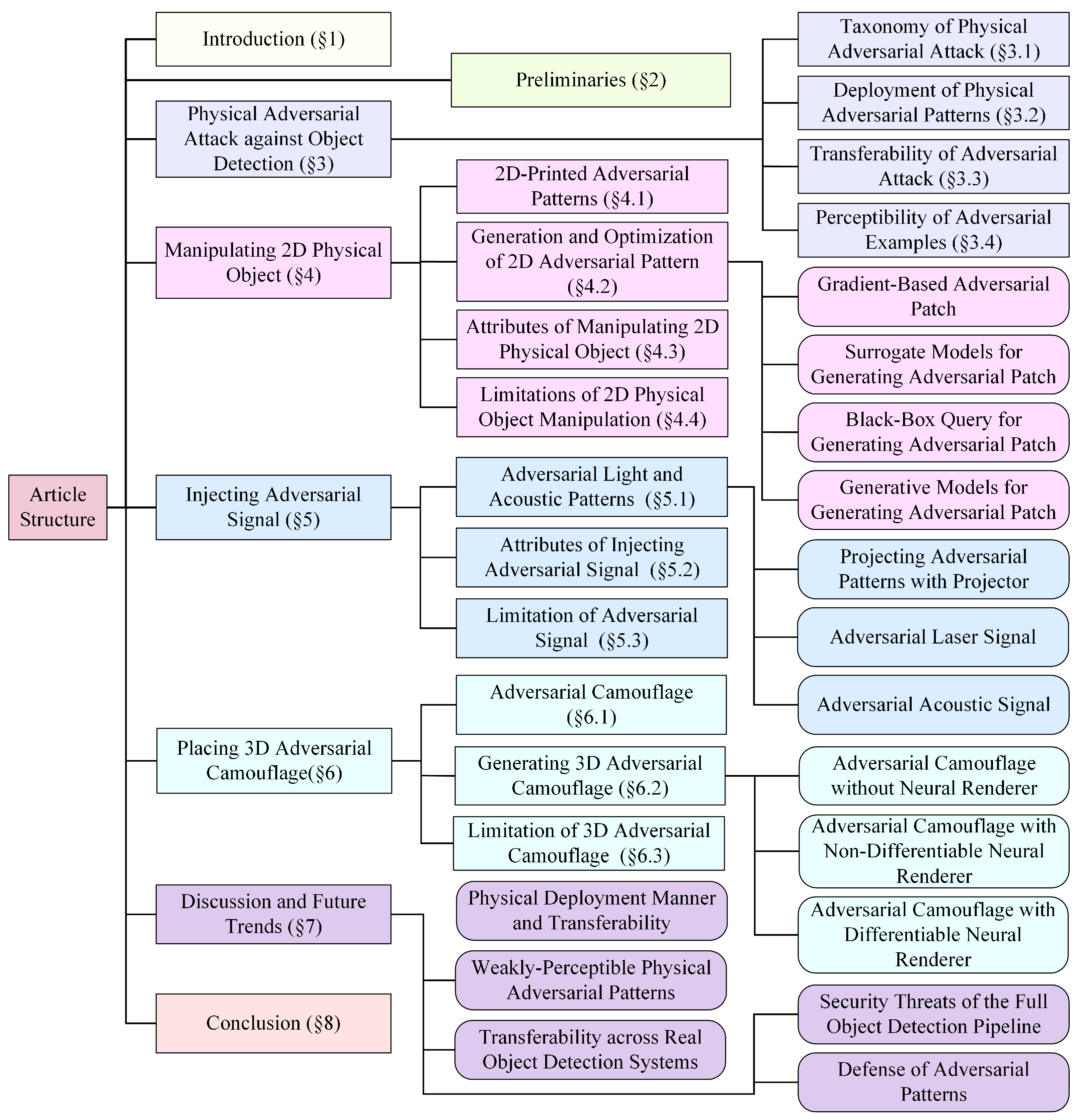

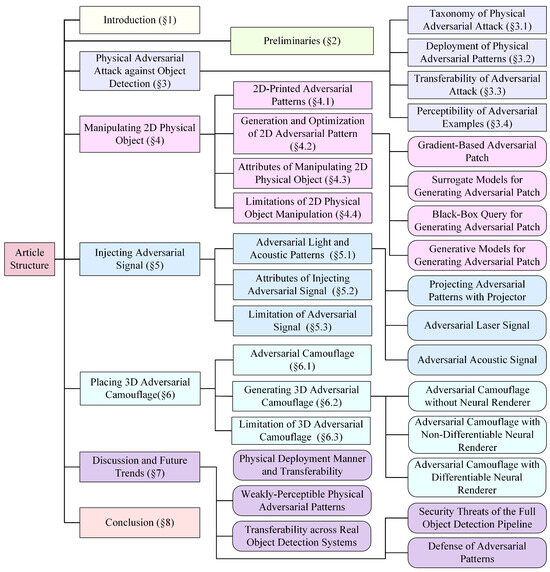

This review is organized as follows: Section 1 introduces the scope of the reviewed literature and the academic databases used for the literature search. Section 2 presents a number of preliminaries. Section 3 delineates the taxonomy of physical adversarial attacks against object detection (Section 3.1), the deployment of physical adversarial patterns (Section 3.2), the transferability (Section 3.3) of adversarial attacks, and the perceptibility of adversarial examples (Section 3.4). Section 4, Section 5, and Section 6 introduce three types of attack in turn: manipulating 2D physical objects, injecting adversarial signals, and placing 3D adversarial camouflage. Section 7 offers detailed discussion of the trade-offs between transferability, perceptibility, and deployment manners, along with their limitations and future trends. Finally, Section 8 presents the conclusion. The entire structure of this article is shown in Figure 1.

Figure 1.

Article structure. Physical adversarial attacks against object detection (Section 3) are divided into three categories: manipulating 2D physical objects (Section 4), injecting adversarial signals (Section 5), and placing 3D adversarial camouflage (Section 6). For each category, we systematically explain the adversarial patterns, detail the generation and optimization methods, evaluate its nine key attributes, and discuss specific limitations.

2. Preliminaries

We first formally define adversarial examples and then elucidate the threat model along four dimensions: adversarial attack environment, adversary’s intention, adversary’s knowledge and capabilities, and victim tasks and model architecture.

2.1. Adversarial Examples

Adversarial examples refer to carefully crafted modifications or perturbations applied to input data with the intention of misleading or causing errors in DNN-based models. Adversarial perturbations are often designed to be imperceptible to human observers and can cause significant errors in the decision-making process of the model.

In computer vision, given a DNN-based model $f_\theta$ characterized by a parameter set $\theta$, we formulate the DNN-based model as follows:

$$\hat{y} = f_\theta(x),$$

where for any input data $x$, the well-trained model is able to predict a value $\hat{y}$ that closely approximates the corresponding ground truth $y$.

The addition of small, imperceptible, and carefully crafted perturbations or noise $\delta$ to an image can result in the model producing incorrect predictions, $f_\theta(x + \delta) \neq y$. Note that the attacker’s modification is limited to the input data. The input example $x$, following the addition of minimal perturbations that can be learned by an adversarial algorithm, is denoted as the adversarial example $x'$, which can be indistinguishable from the original input $x$ and lead to $f_\theta(x') \neq y$. Mathematically, the joint representation is expressed as follows:

$$x' = x + \delta, \quad f_\theta(x') \neq y, \quad \|\delta\|_p \leq \epsilon,$$

where $\epsilon$ bounds the $\ell_p$ norm of the perturbation so that $x'$ stays close to $x$.

Implementing an adversarial attack first requires generating an appropriate adversarial example $x'$.

Generating an adversarial example is the cornerstone of the adversarial attack, and its quality directly impacts the attack’s effectiveness and success rate [25]. To ensure that $x'$ can effectively induce incorrect behavior in the target model and be invisible to human observers, adversaries must take into account the environment, the intention, their knowledge, capabilities, and the victim model.
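As a concrete illustration of the definition above, the following minimal sketch perturbs the input of a toy linear classifier so that its prediction flips while every input coordinate changes by at most a small budget. The weights, input, and budget `epsilon` are hypothetical values chosen for illustration, and the sign-based step is a standard FGSM-style construction, not a method proposed in this review.

```python
import numpy as np

# Toy linear classifier (hypothetical weights): class 1 if w.x + b > 0, else class 0.
w = np.array([1.0, -2.0, 0.5, 1.5])
b = 0.0


def predict(x):
    """Return the predicted class label (0 or 1) for input x."""
    return int(w @ x + b > 0)


x = np.array([0.2, -0.1, 0.3, 0.1])  # clean input, score w.x = 0.7
y = predict(x)                        # clean prediction, treated as ground truth

epsilon = 0.2  # per-coordinate perturbation budget (L-infinity bound)

# For a linear score the gradient with respect to x is w, so stepping each
# coordinate by epsilon against the current decision changes the score by
# epsilon * ||w||_1 in the adversary's favour.
direction = -np.sign(w) if y == 1 else np.sign(w)
delta = epsilon * direction
x_adv = x + delta

print("clean prediction:", predict(x))
print("adversarial prediction:", predict(x_adv))
print("max |delta|:", np.max(np.abs(delta)))
```

Real detectors are highly nonlinear, so practical attacks iterate such gradient steps rather than relying on a single one.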

2.2. Adversarial Attack Environment

Based on the deployment scenarios of the adversarial examples, existing adversarial attacks can be categorized as digital adversarial attacks and physical adversarial attacks.

2.2.1. Digital Adversarial Attack

In digital adversarial attacks, adversaries can directly manipulate digital images [26,27]. By making precise pixel modifications to digital images to add adversarial perturbations, digital adversarial examples are generated to serve as direct input to the model, misleading the target model to make incorrect predictions. Researchers have proposed numerous methods for generating adversarial perturbations or adversarial patterns in digital adversarial attacks [28,29].

In the digital domain, adversaries can directly manipulate pixel-level input images to implant carefully crafted perturbations; hence, digital adversarial attacks can exhibit a high success rate [30,31,32]. However, due to the impact of dynamic physical conditions (such as varying shooting angles and distances) and optical imaging [10], it is challenging to perfectly transfer digital adversarial perturbations to the physical environment.

2.2.2. Physical Adversarial Attack

Physical adversarial attacks involve manipulating objects in the physical world [21]. Adversaries can place or attach adversarial patterns to targets in the physical environment, causing image perception devices (e.g., cameras) to capture adversarial images.

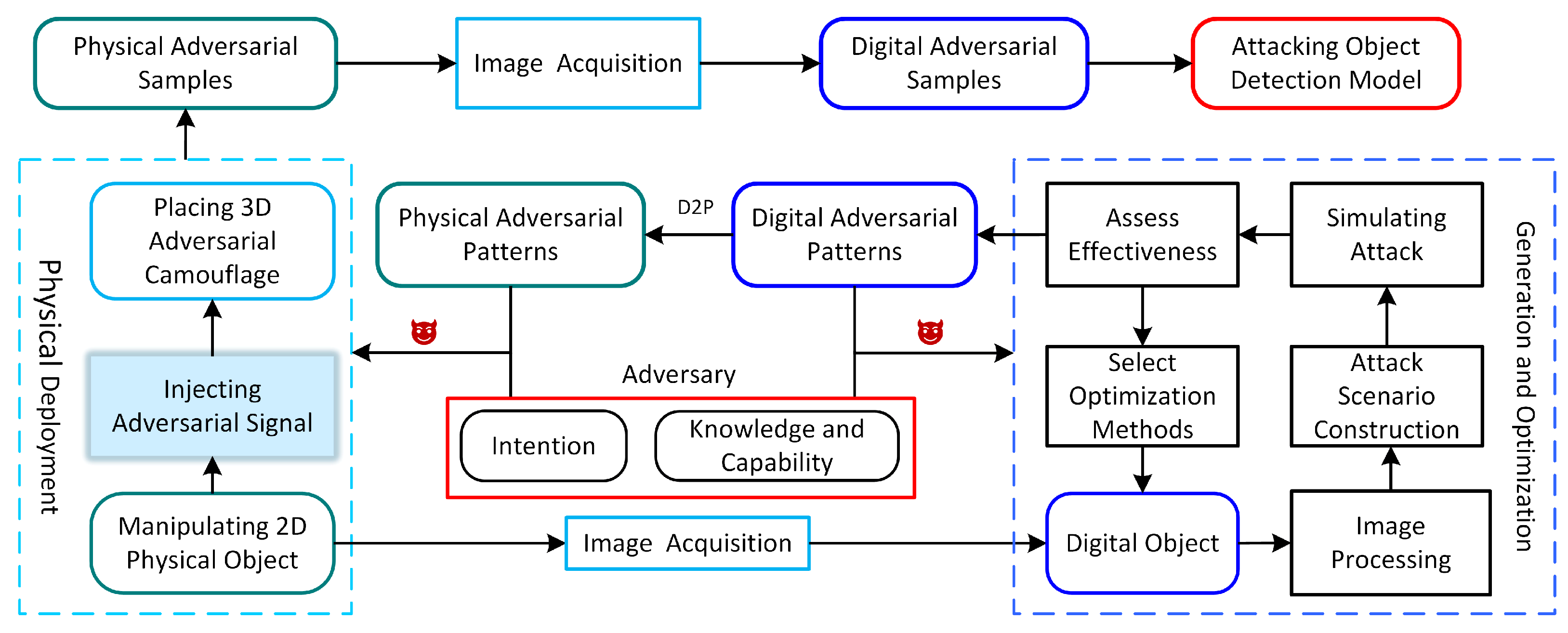

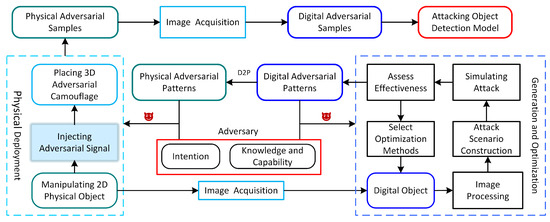

Figure 2 shows a schematic diagram of physical adversarial attacks: light from the physical object first enters the image perception device, which converts the light signals into electrical signals; these are then processed into a digital image and fed into the victim model. The schematic can be conceptualized as a three-layer structure, from bottom to top. The first layer represents the adversary’s background knowledge, encompassing the adversary’s intentions, knowledge, and capabilities, as well as potential attack methodologies. The second layer involves the generation of adversarial patterns, covering both physical and digital adversarial attacks.

Figure 2.

A schematic diagram of physical adversarial attacks, including the manipulation of physical adversarial patterns to generate physical adversarial examples and the generation and optimization of digital adversarial patterns. The diagram makes it easy to compare existing contributions and to see what is missing when building effective physical or digital adversarial attacks.

Following the generation process of digital adversarial patterns, the digital attack consists of six key steps: digital object manipulation, digital image processing, attack scenario construction, attack simulation, effectiveness assessment, and selection of optimization methods. The objective is to generate optimized digital adversarial patterns.

Adversaries may employ substitute models or generative model-based attack methods (Section 4.2), leveraging background knowledge about the target model to select appropriate substitute or generative models, simulate attack scenarios, and then use the generated adversarial patterns to conduct simulated attacks. They can quantify the attack effectiveness and further refine the adversarial patterns. The ultimate goal is to produce adversarial patterns that can be transferred to unknown target models or systems in black-box settings.

The left side of the second layer illustrates the deployment of physical adversarial patterns, derived from their digital counterparts, which can be deployed in the physical world by manipulating 2D physical objects (Section 4), injecting adversarial signals (Section 5), and placing 3D adversarial camouflage (Section 6). These patterns, when embedded in a physical medium and combined with the target, form physical adversarial examples.

The third layer pertains to the generation and transformation of adversarial examples. The physical adversarial examples generated in the second layer must be converted into digital adversarial examples through image acquisition, which are then input into the object detection model to execute the attack.
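The conversion from a physical adversarial example to a digital one can be sketched as a toy simulation. The code below is a deliberately simplified illustration: the function names, the uniform gray scene, and the gain-plus-Gaussian-noise camera model are all hypothetical assumptions, whereas real image acquisition also involves optics, viewing geometry, and the camera's image signal processing.

```python
import numpy as np


def paste_patch(scene, patch, top, left):
    """Attach a 2D adversarial pattern to the scene (physical adversarial example)."""
    out = scene.copy()
    h, w = patch.shape[:2]
    out[top:top + h, left:left + w] = patch
    return out


def simulate_capture(radiance, gain=0.9, noise_std=0.01, rng=None):
    """Toy camera model: scale brightness, add sensor noise, quantize to 8 bits."""
    rng = np.random.default_rng(0) if rng is None else rng
    noisy = gain * radiance + rng.normal(0.0, noise_std, size=radiance.shape)
    return np.clip(np.round(noisy * 255.0), 0, 255).astype(np.uint8)


# Scene and pattern with intensities in [0, 1]; the pattern here is random,
# standing in for an optimized adversarial pattern.
scene = np.full((64, 64, 3), 0.5)
patch = np.random.default_rng(1).random((16, 16, 3))

physical_example = paste_patch(scene, patch, top=24, left=24)
digital_example = simulate_capture(physical_example)  # input to the detector

print(digital_example.shape, digital_example.dtype)
```

Because the captured pixel values differ from the designed ones, a pattern that works in the digital domain is not guaranteed to survive this step unchanged.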

A substantial amount of research has been conducted exploring adversarial attacks against various DNN models or tasks, resulting in the creation of various forms of physical adversarial patterns, such as adversarial patch [33], adversarial sticker [34], adversarial camouflage [35], adversarial light projection [36], and adversarial perturbations against cameras using ultrasonic waves or laser signals [16,37].

In complex real-world environments, small perturbations may go unnoticed or be challenging to capture accurately by the camera. In addition, physical adversarial attacks also involve issues concerning the physical deployment of adversarial perturbations. Physical adversarial patterns must be transformed into deployable physical forms through printing, attaching materials, and light projection, then captured by image perception devices to mislead object detection models. Adversarial attacks in the physical environment present substantial threats that raise serious concerns, particularly in safety-critical applications such as autonomous driving and video surveillance. Given that object detection is a core component of these systems, this work focuses on the security threats of physical adversarial examples targeting object detection.

2.3. Adversary’s Intention

Adversarial examples aim to maliciously mislead the model’s output with the objective of undermining the model’s integrity [8]. Based on incorrect output of the victim model, the adversary’s intention can be categorized as an untargeted attack or a targeted attack.

Untargeted attacks are designed to mislead the target model into producing any incorrect prediction, applicable in scenarios where the adversary only needs the model to make an error without concern for the specific category of error. In object detection tasks, untargeted attacks can cause the target to be hidden (Hiding Attack) [4], or they can cause the model to misidentify objects as something else entirely (Appearing Attack) [38].

Targeted attacks, on the other hand, aim to induce the target model to output a specific incorrect prediction for a given example; these are used in scenarios requiring precise control over the model’s erroneous behavior. A targeted attack on an object detection model can cause the adversarial example to be recognized as a specific object [39].

Targeted and untargeted attacks can be easily interchanged by modifying the objective function [24]. Typically, the attack success rate of targeted attacks is lower than that of untargeted attacks [40,41].
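The interchangeability of the two objectives can be seen in a small sketch. It assumes a classification-style head that outputs class logits and uses cross-entropy as the loss; both are illustrative assumptions, and the function name `attack_objective` is hypothetical. Object detectors add localization and objectness terms that are omitted here.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()


def cross_entropy(logits, label):
    """Cross-entropy loss of the prediction with respect to a class label."""
    return -np.log(softmax(logits)[label])


def attack_objective(logits, true_label, target_label=None):
    """Scalar that the adversary minimizes.

    Untargeted: negative loss on the true label (push away from it).
    Targeted: loss on the chosen target label (pull towards it).
    """
    if target_label is None:
        return -cross_entropy(logits, true_label)
    return cross_entropy(logits, target_label)


clean = np.array([2.0, 0.5, -1.0])         # model favours class 0 (true label)
wrong_any = np.array([0.5, 2.0, -1.0])     # model favours class 1
wrong_target = np.array([0.5, -1.0, 2.0])  # model favours class 2

# Untargeted: any wrong class is equally good for the adversary.
print(attack_objective(clean, 0), attack_objective(wrong_any, 0),
      attack_objective(wrong_target, 0))
# Targeted at class 2: only the third output is rewarded.
print(attack_objective(wrong_any, 0, 2), attack_objective(wrong_target, 0, 2))
```

Switching between the two settings only changes which label enters the loss and the sign of the objective, while the optimization procedure stays the same.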

2.4. Adversary’s Knowledge and Capabilities

Based on the level of access to information about the target model and training data, adversaries’ knowledge and capabilities can be categorized into three attack scenarios, ranging from weak to strong: black-box, gray-box, and white-box.

- (1) White-box

In a white-box scenario, it is assumed that the adversary has full access to the target model, including all parameters, gradients, architectural information, and even substitute data used for training the target model. Therefore, the adversary can meticulously craft adversarial examples by acquiring complete knowledge of the target model [42].

- (2) Black-box

In a black-box scenario, the adversary has no knowledge of the target model’s parameters, architecture, or training data, and it can only obtain the model’s outputs, such as confidence scores and predicted class labels, by submitting query samples to the target model [43,44]. Additionally, the adversary may be subject to query limitations and may be unaware of the state-of-the-art (SOTA) defense mechanisms deployed in the target model [45].

- (3) Gray-box

In a gray-box scenario, the adversary’s knowledge and capabilities fall between those of white-box and black-box settings. It is assumed that the adversary has partial knowledge of the target model, such as its architecture, but lacks access to the model’s parameters and gradient information [46].

In real-world physical environments, it is difficult for adversaries to gain knowledge of the target model, but they can utilize substitute models from white-box scenarios to produce optimized, transferable adversarial examples for deployment in black-box physical environments.
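The substitute-model strategy can be illustrated with two toy linear classifiers. In this sketch the adversary sees only the substitute's weights, crafts the perturbation in a white-box manner on it, and then checks whether the result also fools a victim whose weights are hidden. All weights, the input, and the budget are hypothetical values; transfer succeeds here because the two models are similar, which is not guaranteed in practice.

```python
import numpy as np

# Victim model: weights are unknown to the adversary (black-box).
_victim_w = np.array([1.0, -2.0, 0.5, 1.5])


def victim_predict(x):
    """Black-box interface: only the predicted label is returned."""
    return int(_victim_w @ x > 0)


# Substitute model: fully known to the adversary (white-box), similar but not
# identical to the victim (the third weight even has the opposite sign).
substitute_w = np.array([0.8, -1.5, -0.3, 1.0])


def substitute_predict(x):
    return int(substitute_w @ x > 0)


def craft_on_substitute(x, epsilon):
    """Sign-based step computed from the substitute's weights only."""
    label = substitute_predict(x)
    direction = -np.sign(substitute_w) if label == 1 else np.sign(substitute_w)
    return x + epsilon * direction


x = np.array([0.2, -0.1, 0.3, 0.1])
x_adv = craft_on_substitute(x, epsilon=0.2)

print("victim on clean input:", victim_predict(x))
print("victim on transferred example:", victim_predict(x_adv))
```

The mismatched third weight makes the perturbation slightly less effective on the victim than on the substitute, which mirrors the loss of attack strength commonly observed when adversarial examples are transferred.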

2.5. Victim Model Architecture

In the domain of object detection, neural network models are primarily categorized into two-stage detectors and one-stage detectors. According to the architecture of the model, they can be further classified into CNN-based models and Transformer-based models [47].

- (1) CNN-based model

To date, various deep neural network (DNN) models based on convolutional neural network (CNN) architectures have been most widely applied in object detection tasks [2,48]. A wealth of research has been conducted on adversarial attacks against CNN-based model architectures [2,47,49,50,51], including both digital and physical adversarial attacks.

- (2) Transformer-based model

In recent years, vision models based on Transformer architectures have also been widely applied across multiple vision tasks [52]. Adversarial attacks against Transformer-based models have also garnered attention [53,54,55,56,57]. Almost all of the tasks handled by the CNN-based models introduced above can also be implemented with Transformer models, and they face the same adversarial attack threats under the Transformer architecture.

- (3) Real-world systems

Previous studies have simulated and tested adversarial attacks against tasks such as object detection, object tracking, and traffic sign recognition in open-source autonomous driving platforms or real vehicle autonomous driving systems, such as Apollo [58,59,60], Autoware [3,58,61], Autopilot [62], openpilot [61], DataSpeed [63], Tesla Model X and Mobileye 630 [64], and Tesla Model 3 [65].

3. Physical Adversarial Attacks Against Object Detection

3.1. Taxonomy of Physical Adversarial Attacks

Physical adversarial examples for object detection tasks are created by adding specific physical adversarial patterns to real-world objects, which causes object detection models to produce errors during detection. These examples are converted into digital adversarial examples through image acquisition. Alternatively, digital adversarial patterns can be applied directly to digital images to obtain digital adversarial examples. We focus on physical adversarial examples.

The role of adversarial patterns in generating physical adversarial examples can be categorized into three main scenarios: (1) the adversarial pattern is applied to the target object, such as traffic signs, pedestrians, or vehicles on the road in object detection tasks; (2) the adversarial pattern is introduced into the signal processing, such as altering various lighting conditions in the physical environment; (3) the adversarial pattern is placed on the image perception device, such as the camera lens and the sensors.

Based on where physical adversarial patterns are deployed, physical adversarial attacks against object detection could include the following types:

(1) Manipulating 2D Physical Objects; (2) Injecting Adversarial Signals; (3) Placing 3D Adversarial Camouflage.

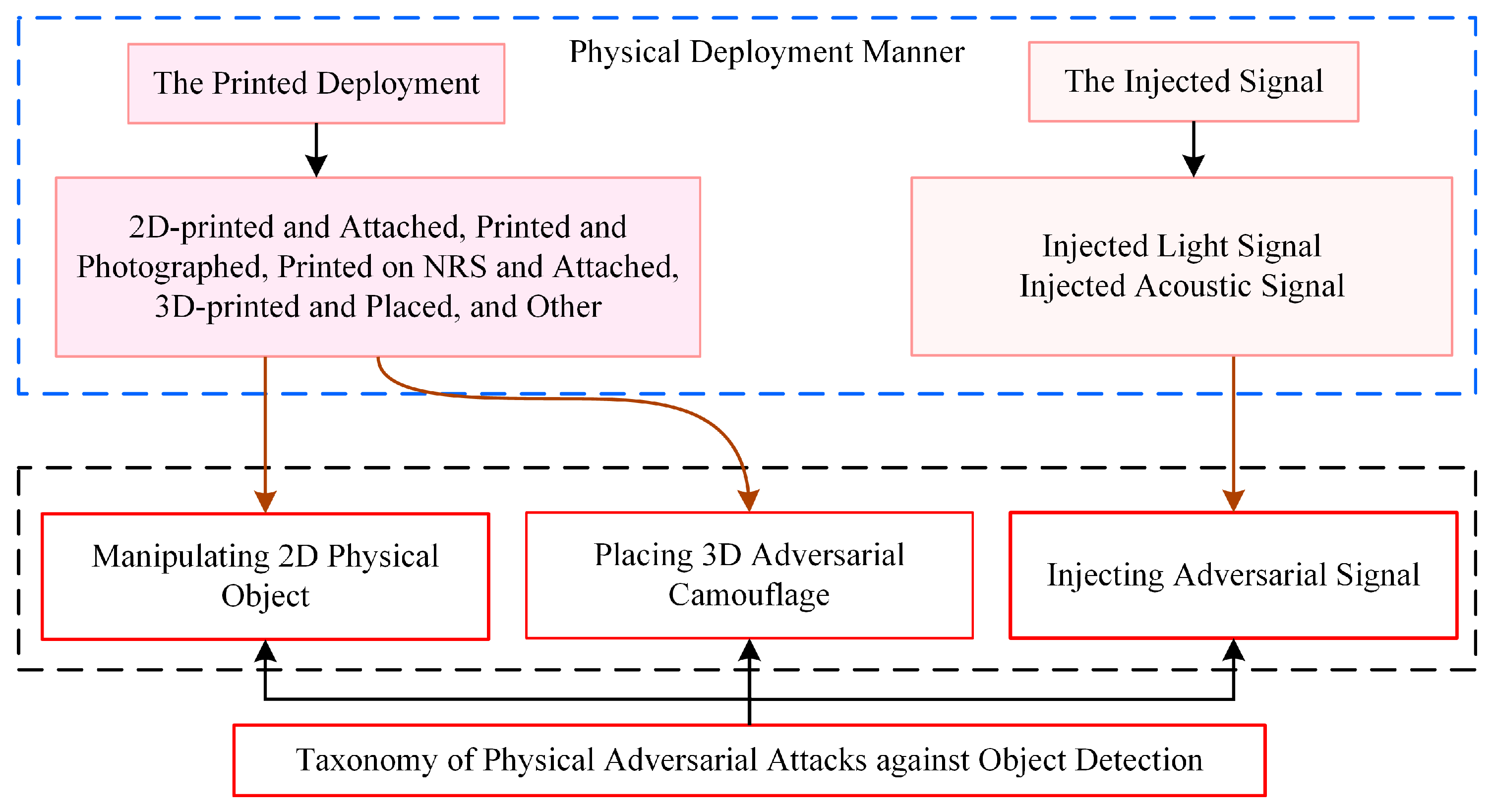

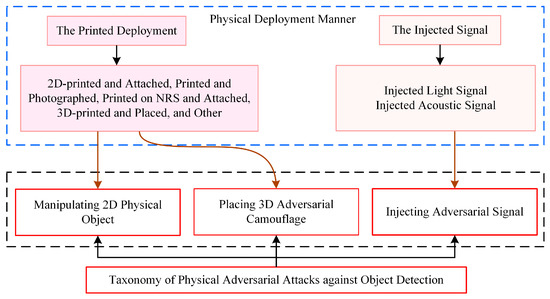

Adversaries employ diverse physical adversarial patterns and media via physical deployment to generate physical adversarial examples against object detection models. Figure 3 presents a taxonomy that organizes physical adversarial attacks on object detection into three categories.

Figure 3.

The taxonomy of physical adversarial attacks against object detection: (1) Manipulating 2D physical objects; (2) Injecting adversarial signals; (3) Placing 3D adversarial camouflage. The blue dashed boxes represent the physical deployment methods of adversarial patterns; the black dashed boxes represent the types of physical adversarial attacks; the brown solid arrows indicate the correspondence between the deployment manners and the physical attacks.

3.2. Deployment of Physical Adversarial Patterns

Based on the deployment of physical adversarial patterns in real environments, we categorize the deployment manners of 2D and 3D physical adversarial patterns into two types, printed deployment and injected signal, as shown in Table 2.

- (1) Printed deployment involves printing adversarial patterns or examples in 2D or 3D and placing them on or around the target surface. This can be further classified into four categories: printed and attached, printed and photographed, printed on non-rigid surface and attached, and 3D-printed and placed, which are primarily used for the deployment of adversarial patches, stickers, and camouflage for physical object manipulation.

- (2) Injected signal involves introducing an external signal source (light or sound signals) to project adversarial patterns onto the target surface or image perception devices. This can be categorized into two types: injected light signal and injected acoustic signal.

Additionally, the simulated scene deployment of adversarial patterns is utilized in autonomous driving and object detection (vehicle detection) simulated scenarios to verify the effectiveness of adversarial patterns [35,53,66,67,68,69,70].

Table 2.

List of physical adversarial pattern deployments and corresponding attacks.


| Type | Physical Deployment Manner | Representative Work |
|---|---|---|
| Printed Deployment | Printed and Attached | [11,15,38,57,62,71,72,73,74] |
| Printed Deployment | Printed and Photographed | [75,76] |
| Printed Deployment | Printed on Non-Rigid Surface (NRS) | [49,77,78,79,80,81,82,83,84,85,86] |
| Printed Deployment | 3D-Printed and Placed | [39,53,55,69,87] |
| Printed Deployment | Other-Printed and Deployed | [16,67,68,70] |
| Injected Signal | Light Signal | [16,63,64,65,88,89,90,91] |
| Injected Signal | Acoustic Signal | [42,59,92] |

3.3. Transferability of Adversarial Attack

The transferability of adversarial attacks is evaluated based on the ability of adversarial patterns, which are optimized and generated on source datasets and models, to attack new datasets and models. We consider the transferability of adversarial attacks as the ability of these patterns to transfer across datasets, models, or tasks.

According to the adversary’s intent, the transferability of adversarial attacks is related to the victim model and data modality. Thus, the transferability of adversarial patterns can also be measured from two aspects: victim model and data modality. We categorize the transferability of physical adversarial patterns into two levels from weak to strong: cross-model and cross-modal.

3.3.1. Cross-Model Transferability

The cross-model transferability (CMT) of adversarial patterns refers to the ability of adversarial patterns to transfer across multiple models and tasks. Currently, DNN model architectures in computer vision can be divided into three categories: CNN-based architectures, Transformer-based architectures, and other architectures. For example, models such as VGG, ResNet, DenseNet, GoogleNet, Xception, Inception, EfficientNet, YOLO, Faster R-CNN, and SSD are CNN-based architectures [47,48]. Models like the ViT family, DeiT family, DETR, Twin DETR, Deformable DETR, and Swin DETR are Transformer-based architectures [93,94]. Based on the victim model’s backbone architecture, we categorize the cross-model transferability of adversarial patterns into two types: cross same-bone model transferability and cross diverse-bone model transferability.

Cross same-bone model transferability (CSMT) refers to the scenario where the victim models share the same backbone architecture, and the types of datasets are the same or diverse (one or multiple tasks). Adversarial patterns can be transferred across different models of the same-bone architecture or between data samples within different types of datasets. For example, adversarial patterns can transfer from image classification tasks (ImageNet, WideResNet50) to face recognition (PubFig, VGG-Face2 model), scene classification (CIFAR-10, 6 Conv + 2 Dense CNN model), and traffic sign recognition (GTSRB, 7 Conv + 2 Dense CNN model) tasks against the CNN-based architectures of victim models [46].

Cross diverse-bone model transferability (CDMT) refers to the scenario where the victim models have different backbone architectures and the types of datasets are the same or diverse (one or multiple tasks). Adversarial patterns can be transferred between models of diverse-bone architectures or between data samples within datasets of different types. For example, adversarial patterns can be transferred between CNN-based models and Transformer-based models [55].

Cross-task transferability of adversarial patterns [95] can be considered a special case of cross-model transferability of adversarial patterns targeting diverse dataset types. It involves transferring from image classification to object detection tasks [16,57]. Prior research indicates that the CSMT of adversarial patterns is stronger than CDMT [69], and it is more difficult to transfer from CNN-based models to Transformer-based models [96].
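The two cross-model categories can be expressed as a simple rule on backbone families, paired with a success-rate measure for comparing source and victim models. The sketch below is illustrative only: the backbone mapping lists a few of the models named above, the helper names are hypothetical, and the success-rate definition (fraction of objects detected on clean inputs that are missed under attack) is one common convention for hiding attacks assumed here, not a metric prescribed by this review.

```python
# Backbone family of a few detectors and classifiers named in the text.
BACKBONE_FAMILY = {
    "YOLO": "cnn",
    "Faster R-CNN": "cnn",
    "SSD": "cnn",
    "ResNet": "cnn",
    "ViT": "transformer",
    "DETR": "transformer",
    "Deformable DETR": "transformer",
}


def cross_model_type(source_model, victim_model):
    """Return 'CSMT' for same-backbone transfer and 'CDMT' for diverse-backbone transfer."""
    same_family = BACKBONE_FAMILY[source_model] == BACKBONE_FAMILY[victim_model]
    return "CSMT" if same_family else "CDMT"


def attack_success_rate(clean_detected, adv_detected):
    """Fraction of objects found on clean inputs that are missed under attack."""
    total = sum(clean_detected)
    hidden = sum(c and not a for c, a in zip(clean_detected, adv_detected))
    return hidden / total if total else 0.0


print(cross_model_type("YOLO", "SSD"))    # same backbone family
print(cross_model_type("YOLO", "DETR"))   # different backbone families

# Hypothetical per-object detection outcomes on a victim model.
clean = [True, True, True, False]
attacked = [False, True, False, False]
print(attack_success_rate(clean, attacked))
```

Reporting the success rate separately for each source and victim pair makes the gap between CSMT and CDMT directly comparable.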

3.3.2. Cross-Modal Transferability

The cross-modal transferability (CM) of adversarial patterns refers to the ability of adversarial patterns to transfer across different data modalities and multimodal tasks. Currently, the CM of adversarial patterns is an emerging research focus [55,97]. Based on the modalities of datasets, we categorize CM into two types: cross diverse data-modal transferability and cross multimodal-task transferability.

Cross diverse data-modal transferability (CDDM) refers to the scenario where the victim models have the same or different backbone architectures and the types of victim datasets are diverse, while all are single-modal. Adversarial patterns can take effect on two or more single-modal datasets simultaneously, causing the model to produce incorrect outputs across different modalities. For example, adversarial 3D-printed objects can simultaneously attack LiDAR and camera perception sources in autonomous driving [58]. The cropped warming and cooling paste can simultaneously attack target detection tasks in the image visible light modality and the infrared modality [98,99].

Cross multimodal-task transferability (CMTT) refers to the scenario in which the victim models have the same or different backbone architectures and the victim datasets are multimodal (covering one or more multimodal tasks). Adversarial patterns are transferable across multiple multimodal tasks or between different modalities within a multimodal dataset. For example, Zhang and Jha [100] proposed adversarial illusions, which perturb an input of one modality (e.g., an image or a sound) to make its embedding close to another target modality (e.g., (image, text) or (audio, text)), to mislead image generation, text generation, zero-shot classification, and audio retrieval caption.

3.4. Perceptibility of Adversarial Examples

To provide a qualitative measure of perceptibility, we integrate the characteristics of physical adversarial attacks and decompose perceptibility into three complementary perspectives: the human observer, the image perception device, and the digital representation. This yields a four-level scale ranging from most to least perceptible: visible, natural, covert, and imperceptible.

- (1) Visible. Physical adversarial patterns are visible to the human observer, capturable by image perception devices, and clearly distinguishable from the target in digital representation. Adversarial patterns in this category, such as patches or stickers, have noticeable visual differences from normal examples and are easily detected by human observers. We use the term visible to denote this category.

- (2) Natural. Physical adversarial patterns are visible to the human observer, capturable by image perception devices, but hard to distinguish from the target in digital representation. Natural adversarial examples emphasize whether the adversarial patterns appear as a naturally occurring object. For example, adversarial patterns that use mediums such as natural lighting, projectors, shadows, and camouflage are classified as natural. This category emphasizes the natural appearance of adversarial patterns in relation to the target environment. We use the term natural to denote this category.

- (3) Covert. Physical adversarial patterns are hard to observe with the naked eye, can be captured by image perception devices with anomalies, and are distinguishable from original images in the digital domain. Covert adversarial examples are visually imperceptible or similar to the original examples for humans, but they can be physically perceived by the targeted device or system. Adversarial examples, such as those using media like thermal insulation materials, infrared light, or lasers, are classified as covert. This category emphasizes that such adversarial examples are not easily detected from the perspective of human observers.

- (4) Imperceptible. Physical adversarial patterns are invisible to the naked eye, hard to capture using image perception devices, and hard to distinguish from original images in the digital domain. Adversarial examples in this category include ultrasound-based attacks on camera sensors. The term imperceptible highlights that the differences between digital adversarial examples and their benign counterparts are challenging to measure.
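The four-level scale can be read as a lookup over the three perspectives. The following is a minimal sketch of that reading, not a formal definition: the Boolean encoding (where "hard to observe", "hard to capture", and "hard to distinguish" are all treated as `False`) and the names `Level` and `perceptibility` are our own illustrative assumptions.

```python
from enum import Enum


class Level(Enum):
    VISIBLE = 1
    NATURAL = 2
    COVERT = 3
    IMPERCEPTIBLE = 4


# Key: (visible to human observer,
#       captured by image perception device,
#       distinguishable in digital representation).
# Hypothetical Boolean simplification of the qualitative descriptions above.
_SCALE = {
    (True, True, True): Level.VISIBLE,
    (True, True, False): Level.NATURAL,
    (False, True, True): Level.COVERT,
    (False, False, False): Level.IMPERCEPTIBLE,
}


def perceptibility(human_visible, device_captured, digitally_distinguishable):
    """Return the perceptibility level, or None if the scale does not cover the combination."""
    return _SCALE.get((human_visible, device_captured, digitally_distinguishable))
```

Under this encoding, a printed patch maps to `Level.VISIBLE`, whereas an infrared pattern that only the camera registers maps to `Level.COVERT`. Combinations that the scale does not describe return `None` rather than being forced into a level.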

4. Manipulating 2D Physical Objects

Adversaries can introduce adversarial patterns by manipulating physical objects to cause object detection models to produce incorrect outputs. Typically, adversaries place the crafted adversarial patterns on the target surface or around the target. Depending on the physical deployment method of the adversarial patterns, a 2D physical object can be manipulated by placing printed adversarial patterns, such as patches, stickers, image patches, and cloth patches, thus generating physical adversarial examples. Furthermore, as shown in Table 3, we have organized a comprehensive list of adversarial methods for physical object manipulation, sorted by the category of adversarial pattern and chronological order.

4.1. 2D-Printed Adversarial Patterns

Placing adversarial patterns on target surfaces or around a target is a common and practical approach in physical adversarial attacks. To generate physically realized adversarial examples, adversaries strategically place printed adversarial patterns onto the surfaces of physical objects. When adversarial examples are captured by image perception devices, the target image carrying the adversarial pattern is fed into object detection models, thereby enabling the execution of the attack.

- (1) Patch and sticker

The printed adversarial patterns mainly include two types: patch and sticker. The digitally crafted adversarial patches or stickers are printed on paper (or printed on the physical surfaces of clothing or objects), then attached to an object’s surface or placed around it.

Adversarial patches typically confine perturbations to a small, localized area without imposing any intensity constraints. Their deployment usually only requires simple printing and pasting onto target surfaces, making this method both simple and commonly used for various attack tasks. The generation methods for adversarial stickers and patches are similar, and they perform equally in terms of attack effectiveness. Patches are usually regular in shape (e.g., circular), while stickers are irregular and can adapt to non-rigid surfaces (e.g., cars, traffic signs, and persons).

- (2) Image and cloth patch

An image patch is holistically printed or fabricated: the entire adversarial example carrying the adversarial pattern is printed or produced as a single object. A cloth patch is printed on non-rigid surfaces such as clothing. Such adversarial patterns are also essentially patches but differ in their generation methods and physical deployment.

Generally, both patches and stickers have distinct visual features that differ from the target surface and are visible to human observers. Adversaries can enhance stealthiness by making sticker patterns resemble natural textures or images, by reducing patch size, by applying norm constraints (ℓ2, ℓ∞, or general ℓp), by adjusting transparency, or by deploying the pattern in dispersed pieces [33,101,102]. However, these measures may compromise the effectiveness and robustness of the attack.

Table 3.

List of attributes of manipulating printed 2D adversarial patterns (patches and stickers). They are arranged according to adversarial pattern and chronological order.

| AP | Method | Akc | VMa | VT | AInt | Venue |
|---|---|---|---|---|---|---|
| Patch | Extended-RP2 [103] | WB | CNN | OD | UT | 2018 WOOT |
| Patch | Nested-AE [38] | WB | CNN | OD | UT | 2019 CCS |
| Patch | OBJ-CLS [72] | WB | CNN | PD | UT | 2019 CVPRW |
| Patch | AP-PA [15] | WB, BB | CNN, TF | AD | UT | 2022 TGRS |
| Patch | T-SEA [73] | WB, BB | CNN | OD, PD | UT, TA | 2023 CVPR |
| Patch | SysAdv [11] | WB | CNN | OD, PD | UT | 2023 ICCV |
| Patch | CBA [74] | WB, BB | CNN, TF | OD, AD | UT | 2023 TGRS |
| Patch | PatchGen [57] | BB | CNN, TF | OD, AD, IC | UT | 2025 TIFS |
| Cloth-P | NAP [77] | WB | CNN | OD, PD | UT | 2021 ICCV |
| Cloth-P | LAP [78] | WB | CNN | OD, PD | UT | 2021 MM |
| Cloth-P | DAP [79] | WB | CNN | OD, PD | UT | 2024 CVPR |
| Cloth-P | DOEPatch [80] | WB, BB | CNN | OD | UT | 2024 TIFS |
| Sticker | TLD [62] | BB | RS | LD | UT | 2021 USENIX |
| Image-P | ShapeShifter [75] | WB | CNN | TSD | UT, TA | 2019 ECML PKDD |
| Image-P | LPAttack [76] | WB | CNN, RS | OD | UT | 2020 AAAI |

4.2. Generation and Optimization of 2D Adversarial Patterns

4.2.1. Generation and Optimization Methods

Based on the generation and optimization methods of adversarial patterns, physical adversarial attacks can be categorized into gradient-based methods, surrogate model methods, black-box query methods, and generative model methods.

Gradient-based attack methods utilize the gradient information of the target model to guide the generation of adversarial examples [38]. By computing the gradient of the loss function with respect to the input data, these methods identify which pixel changes in the input data can maximize the model’s loss, making it one of the earliest and most widely studied methods of adversarial attacks.
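As a minimal sketch of this idea, the example below applies sign-gradient steps to a small patch region of a flattened input. The "detector" is a toy logistic score with a hand-derived gradient, and the names `objectness` and `patch_attack`, the step size, and the iteration count are illustrative assumptions rather than any surveyed method. Lowering the objectness score corresponds to raising the detection loss in a hiding attack.

```python
import math


def objectness(x, w, b):
    """Toy differentiable 'detector': sigmoid of a linear score (an assumption)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def _sign(v):
    return 1.0 if v > 0 else (-1.0 if v < 0 else 0.0)


def patch_attack(x, w, b, patch_idx, step=0.1, iters=20):
    """Lower the objectness score by perturbing only the pixels in patch_idx."""
    x = list(x)
    for _ in range(iters):
        p = objectness(x, w, b)
        for i in patch_idx:
            # Analytic gradient of the sigmoid score: d p / d x_i = p (1 - p) w_i.
            grad = p * (1.0 - p) * w[i]
            # Sign-gradient descent on the score, clipped to the valid pixel range.
            x[i] = min(1.0, max(0.0, x[i] - step * _sign(grad)))
    return x
```

Pixels outside `patch_idx` are never modified, which mirrors how a patch confines the perturbation to a localized area while leaving its intensity unconstrained within the valid range.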

Surrogate model attack methods (also called substitute model attacks) utilize known models (substitute models or ensemble models) and datasets to generate adversarial examples that are then transferred to attack an unknown target model. Several techniques have been proposed to enhance the transferability of adversarial examples [104].

Black-box query attacks query the target model repeatedly and can be further categorized into score-based attacks [105] and decision-based attacks [41] according to model feedback. Adversaries can obtain only the predicted labels or confidence scores of the victim classifier, and they must perform the attack within a limited number of queries and under given perturbation constraints. The adversary’s focus lies in reducing the number of queries by optimizing the size of the adversarial space and refining the query direction and gradient in the adversarial space.
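A score-based query attack can be sketched as a simple random search under a query budget. This is only an illustration under stated assumptions: `query` stands for any black-box function returning a confidence score, and the function name, Gaussian proposal, and default budget are hypothetical choices, not the procedure of a specific cited work.

```python
import random


def score_based_attack(query, x, patch_idx, budget=200, sigma=0.2, seed=0):
    """Random search that keeps a candidate only if the returned score drops.

    query: black-box callable returning a confidence score (no gradients used).
    Returns the best input found, its score, and the number of queries spent.
    """
    rng = random.Random(seed)
    best = list(x)
    best_score = query(best)
    queries = 1
    while queries < budget:
        cand = list(best)
        for i in patch_idx:
            # Perturb only the patch pixels and keep them in the valid range.
            cand[i] = min(1.0, max(0.0, cand[i] + rng.gauss(0.0, sigma)))
        score = query(cand)
        queries += 1
        if score < best_score:
            best, best_score = cand, score
    return best, best_score, queries
```

Every call to `query` counts against the budget, so the quality of the proposal distribution directly determines how many queries are needed, which is the quantity the methods above try to reduce.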

Generative model attack methods utilize generative models (such as generative adversarial networks, diffusion models, etc.) to learn the data distribution and perturb the input or latent space to create adversarial examples [32].

4.2.2. Gradient-Based Adversarial Patch

The adversarial patch printed and affixed to the target surface represents one of the first adversarial patterns used for physical adversarial attacks [106]. Adv-Examples [107] constructs the first adversarial examples in the physical world. Adversaries print the generated adversarial examples on paper, then capture the printed images with a camera and feed the captured adversarial examples into the subsequent image model.

For example, Extended-RP2 [103] improves the RP2 [71] gradient-based attack to achieve a disappearance attack that causes physical objects to be ignored by detectors, and it also introduces a creation attack wherein innocuous physical stickers fool a model into detecting nonexistent objects. Nested-AE [38] generates two adversarial examples for long and short distances, combined in a nested fashion to deceive multi-scale object detectors. OBJ-CLS [72] generates adversarial patches to hide a person from a person detector. The adversarial patches, which were printed on small cardboard plates, were held by individuals in front of their bodies and oriented towards surveillance cameras. SysAdv [11] crafts adversarial patches by considering the system-level nature of the autonomous driving system.

For image patches, LPAttack [76] attacks license plate detection by manufacturing real metal license plates with carefully crafted perturbations against object detection models, yet the approach only targeted North American customized vehicle license plates, and perturbations to other license plates with fixed patterns are easily detected by the human observer.

For cloth patches, LAP [78] generates adversarial patches from cartoon images to evade object detection models via the two-stage patch training process. The patches look natural and are hard to distinguish from general T-shirts. DAP [79] generates dynamic adversarial patches against person detection by accounting for non-rigid deformations caused by changes in a person’s pose.

The generation of gradient-based adversarial patches typically occurs under a white-box scenario, where adversaries employ a physical deployment method involving printing and affixing to the target surface. The cloth patch is printed on fabric, while the image patch is printed in its entirety and placed in the real world. These patches are visible to observers, and they exhibit primarily cross same-bone model transferability [11,38,79,80].

4.2.3. Surrogate Models for Generating Adversarial Patches

Existing research on generating adversarial patches based on substitute models generally employs CNN-based or Transformer-based architectures as substitute models [57,74,80]. Adversaries, with knowledge of the substitute models, design multiple loss terms to optimize the loss function of the DNN model, such as TV loss, non-printability loss (NPS), and adversarial detection loss for object detection tasks, and they use Expectation over Transformation (EOT) to compensate for digital-to-physical transformation. They also jointly optimize the size, position, shape, and transparency of the patches to maintain attack performance in the physical world.
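To make the role of the auxiliary loss terms concrete, the sketch below computes a total variation term and a non-printability term for a small grayscale patch and combines them with a detection loss. It is a simplified illustration: the anisotropic (absolute-difference) form of TV, the grayscale printable set, and the weights `lam_tv` and `lam_nps` are assumptions chosen for brevity, not values from the cited works.

```python
def tv_loss(patch):
    """Total variation: sum of absolute differences between neighboring pixels."""
    h, w = len(patch), len(patch[0])
    total = 0.0
    for i in range(h):
        for j in range(w):
            if i + 1 < h:
                total += abs(patch[i][j] - patch[i + 1][j])
            if j + 1 < w:
                total += abs(patch[i][j] - patch[i][j + 1])
    return total


def nps_loss(patch, printable):
    """Non-printability score: distance of each pixel to its nearest printable value."""
    return sum(min(abs(p - c) for c in printable) for row in patch for p in row)


def total_loss(det_loss, patch, printable, lam_tv=0.1, lam_nps=0.1):
    """Weighted sum of the detection loss and the two printability regularizers."""
    return det_loss + lam_tv * tv_loss(patch) + lam_nps * nps_loss(patch, printable)
```

A smooth patch made only of printable values contributes nothing beyond `det_loss`, whereas a noisy patch is penalized, which is why these terms push the optimizer toward patterns that survive printing.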

For example, AP-PA [15] generates an adversarial patch that can be printed and then placed on or outside aerial targets. The work of the same authors, CBA [74], continues AP-PA, misdirecting target detection by placing adversarial patches in the background region of the target (the airplane in the aerial image). T-SEA [73] proposes a transfer-based self-ensemble attack on object detection systems. PatchGen [57] uses YOLOv3 + Faster R-CNN, YOLOv3 + DETR, and Faster R-CNN + DETR as known white-box models, based on the ensemble model idea, to generate adversarial patches on the DOTA dataset and then transfer them to attack multiple target models (YOLOv3, YOLOv5, Faster R-CNN, DETR, and Deformable DETR) in aircraft detection tasks.

Additionally, clothing patches generated based on substitute models are used to attack object detection models. DOEPatch [80] uses an optimized ensemble model to dynamically adjust the weight parameters of the target models, and it provides an explanatory analysis of the adversarial patch attacks using energy-based analysis and Grad-CAM visualization.

Adversarial patches generated based on substitute models generally exhibit better transferability across same-bone models [80], but they exhibit weaker transferability across diverse-bone models [57,74]. In practical scenarios, because adversaries seldom possess comprehensive knowledge of the target system or model, generating adversarial patterns based on substitute models for black-box attacks has proven to be an effective attack strategy.

4.2.4. Black-Box Query for Generating Adversarial Patches

In adversarial patch and adversarial sticker attacks based on black-box query, adversaries leverage the decision (classification) information or confidence scores from the target model, employ optimization methods such as differential evolution, genetic algorithms, particle swarm optimization, or Bayesian optimization to determine the search direction and magnitude of adversarial perturbations, and ultimately generate adversarial samples that effectively mislead the target model.

For example, TLD [62] conducts a comprehensive study on the security of lane detection modules in real vehicles. Through a meticulous reverse engineering process, the adversarial examples are generated by adding perturbations to the camera images based on physical coordinates that are obtained by accessing the lane detection system’s hardware. These perturbations are designed to be imperceptible to human vision while simultaneously causing the Tesla Autopilot lane detection module to generate a fake lane output. The natural appearance of stickers maintains stealthiness and reduces the need for complex transformations.

4.2.5. Generative Models for Generating Adversarial Patches

In addition to the aforementioned attack methods, existing research has also utilized various generative adversarial networks to generate transferable adversarial patches. In the context of adversarial attacks, GANs are trained on additional (clean) data, using a pre-trained image classifier as the discriminator for image classification tasks. Unlike traditional GAN training, the GAN only updates the generator while keeping the discriminator unchanged. The training objective of the generator is to cause the discriminator to misclassify the generated images (adversarial examples). For example, NAP [77] leverages the learned image manifold of pre-trained generative adversarial networks (BigGAN and StyleGAN2) to generate natural-looking adversarial clothing patches.

4.3. Attributes of Manipulating 2D Physical Objects

We have organized a comprehensive list of adversarial attack methods targeting physical object manipulation, categorized by types of adversarial patterns and arranged chronologically. Each method is analyzed and characterized according to its key attributes. The attributes of methods employing 2D printed adversarial patterns are summarized in Table 3.

- (1) Adversarial Pattern (AP).

- (2) Adversary’s Knowledge and Capabilities (Akc).

- (3) Victim Model Architecture (VMa).

- (4) Victim Tasks (VT).

- (5) Adversary’s Intention (AInt).

Furthermore, for each adversarial attack targeting object detection tasks, we present the generation and optimization methods (Gen) of the corresponding adversarial patterns, along with their physical deployment manners. We analyze and summarize the transferability of each method across different datasets or models. Additionally, we evaluate the perceptibility of each adversarial pattern to both human vision and image perception devices using a graded perceptibility level. The specific details are presented in Table 4.

Table 4.

The adversarial attacks of manipulating printed 2D adversarial patterns: generation and optimization, deployment manners, transferability, and perceptibility.

- (1) Generation and Optimization Methods (Gen).

- (2) Physical Deployment Manner (PDM).

- (3) Adversarial Attack’s Transferability.

- (4) Adversarial Example’s Perceptibility.

4.4. Limitations of 2D Physical Object Manipulation

Manipulating physical objects is the most common way to deploy adversarial patterns, but it also has several limitations:

- (1) Various printed-deployed adversarial patterns (such as patches and stickers) rely on complex digital-to-physical transition techniques (such as non-printability score, expectation over transformation, and total variation) to handle printing noise, yet they cannot address noise from dynamic physical environments. From the perspective of generation methodology, these approaches primarily rely on gradient-based optimization (e.g., AP-PA [15], DAP [79]) and surrogate model methods (e.g., PatchGen [57]) to simulate physical conditions during training. However, such simulation remains inadequate for modeling complex environmental variables like lighting changes and viewing angles, leading to performance degradation in real-world scenarios. Furthermore, while these methods effectively constrain perturbations to localized areas, they lack adaptive optimization mechanisms for dynamic physical conditions.

- (2) Printed-deployed adversarial patterns (patches, stickers) differ visibly from the background environment of the target, and this strong perceptibility makes them easy to discover with the naked eye. This visual distinctiveness stems from their generation process, where gradient-based methods often produce high-frequency patterns that conflict with natural textures. Although generative models have been employed to create more natural-looking patterns (such as NAP [77]), they still struggle to achieve seamless environmental integration. The fundamental challenge is the inherent trade-off between attack success and visual stealth, as most optimization algorithms must balance the pursuit of high success rates against preserving the visual naturalness of the pattern.

- (3) Adversarial patterns that resemble natural objects tend to have weaker attack effects. A possible explanation is that the target model has already seen similar natural objects during training and has therefore gained better robustness to them. The semantic consistency of natural-object-like patterns may engage what the model has learned about those objects, making such patterns less effective than other patterns.

- (4) Distributed adversarial patch attacks increase the difficulty of defense detection, with weaker perceptibility, but are relatively challenging to deploy. Their generation typically employs gradient-based optimization across multiple spatially distributed locations, requiring careful coordination of perturbation distribution. While generative models offer potential for creating coherent distributed patterns, they face practical deployment challenges in maintaining precise spatial relationships. This type of attack is highly dependent on the precise spatial configuration of multiple components during deployment, making it susceptible to environmental factors and deployment methods. Furthermore, attackers must balance distribution density with attack effectiveness, which often requires specialized optimization strategies distinct from traditional patch generation methods.

5. Injecting Adversarial Signals

In general, artificial lights reflected from physical objects or ultrasound signals received by camera image sensors are the main physical signals that affect the image output. The adversary projects adversarial patterns to generate adversarial perturbations by manipulating external light sources or acoustic signals to implement physical-world adversarial attacks against object detection models.

5.1. Adversarial Light and Acoustic Patterns

The adversary uses adversarial light or acoustic signals as attack media and does not directly modify the appearance of the object’s surface. Instead, adversarial examples are generated by optimizing various parameters of the projected light or acoustic signals, such as position, shape, color, intensity, wavelength, distance, angle, and projection pattern. These adversarial perturbations generated using visible light or optical devices are common in physical environments and are often selectively ignored by people, thus providing a certain level of stealthiness.

5.1.1. Projecting Adversarial Patterns with Projectors

Various projectors are used to project adversarial patterns onto objects. Image-capturing devices capture the light superimposed with the target object and the adversarial pattern, then transmit the example with the adversarial pattern to the DNN model. SLAP [88] generates short-lived adversarial perturbations using a projector to shine specific light patterns on stop signs. Vanishing Attack [90] utilizes a drone with a portable projector to project the adversarial light pattern on the rear windshield of a vehicle to obstruct the object detector (OD) in autonomous driving systems. In the follow-up work OptiCloak [91], the same research team further optimized the adversarial light pattern through random focal color filtering (RFCF) and gradient-free optimization via the ZO-AdaMM algorithm, enhancing the attack effectiveness of adversarial samples under long-distance and low-light conditions. DETSTORM [63] investigates physical latency attacks against camera-based perception pipelines in the autonomous driving system, using projector perturbations to create a large number of adversarial objects and causing perception delays in dynamic physical environments.

5.1.2. Adversarial Laser Signal

Some studies also use lasers or infrared light as the attack medium. For example, the rolling shutter attack [89] is demonstrated against object detection tasks by irradiating a blue laser onto seven different CMOS cameras, ranging from cheap IoT cameras to semi-professional surveillance cameras, which highlights the wide applicability of the attack. ICSL [65] generates adversarial examples using invisible infrared LEDs, which are deployed in various ways to attack autonomous vehicles’ cameras, effectively causing errors in their environment perception and SLAM processes.

There have also been works that exploit the perceptual differences between human eyes and cameras for varying frequency signals to attack the rolling shutter of autonomous vehicles’ cameras. L-HAWK [16] presents a novel physical adversarial patch activated by laser signals at long distances against mainstream object detectors and image classifiers. In normal circumstances, L-HAWK remains harmless but can manipulate the driving decision of a targeted AV via specific image distortion caused by laser signal injection towards the camera.

Additionally, GlitchHiker [108] investigates different adversarial light patterns in the camera image signal transmission phase, inducing controlled glitch images by injecting intentional electromagnetic interference (IEMI) into the image transmission lines. The effectiveness of the glitch injection is dependent on the strength of the coupled signal in the victim camera system.

5.1.3. Adversarial Acoustic Signals

Adversaries can inject resonant ultrasonic signals into the camera’s inertial sensors, causing the image stabilization system to overcompensate and inducing image distortion, which in turn produces erroneous outputs. For example, the Poltergeist attack (PG attack) [59] injects acoustic signals into the camera’s inertial sensors (via frequency scanning to find the acoustic resonance frequency of the target sensors’ MEMS gyroscopes) to interfere with the camera’s image stabilization system, which results in blurring of the output image, even if the camera is stable. Cheng et al. [92] used the same approach as the PG attack and additionally validated it on two lane detectors. TPatch [42] builds on the PG attack [59], designing a physical adversarial patch triggered by acoustic signals that target the inertial sensor of the camera. TPatch remains benign under normal circumstances, but when the selected acoustic signals are injected as a trigger into the inertial sensor of the camera, it causes specific image blurring distortion and generates adversarial images that launch hiding, creating, or altering attacks.

5.2. Attributes of Injecting Adversarial Signal

In physical adversarial attacks that inject adversarial light and acoustic signals to attack object detection, signal sources primarily include projectors, laser signals, and ultrasonic signals. Projectors are used to project adversarial patterns onto the surface of target objects, while laser signals are emitted to illuminate the sensors of image perception devices (such as the camera’s rolling shutter or image processing pipeline). Ultrasonic signals are mainly employed to interfere with the inertial sensors of cameras. In Table 5, we summarize the adversarial light and acoustic signals, along with the attribute list of the corresponding attack methods.

Table 5.

The injection of adversarial light and acoustic signal, and the attribute list of adversarial attack methods.

Furthermore, for each adversarial attack utilizing adversarial light and acoustic signals, we also summarize the generation and optimization methods of the adversarial patterns, categorizing them into three types of signal sources while briefly describing the physical deployment approaches. We analyze and summarize the transferability of each method across different datasets or models. Additionally, we evaluate the perceptibility of each adversarial pattern to both human vision and perception devices. The specific details are presented in Table 6.

Table 6.

Adversarial light and acoustic signal attacks: generation and optimization, signal source, deployment manners, transferability, and perceptibility.

5.3. Limitations of Adversarial Signals

For adversarial patterns involving artificial or natural light sources, the lack of target image training data in specific optical condition scenarios may be a primary reason for the weak robustness of DNN models against such attacks.

- (1) Injecting adversarial signals requires precise devices to reproduce specific optical or acoustic conditions for effective translation of digital attacks into the physical world. The signal sources of such attacks can be categorized into energy radiation types, such as the blue lasers in the rolling shutter attack [89] and the infrared LEDs employed in ICSL [65], and field interference types, such as intentional electromagnetic interference (IEMI) in GlitchHiker [108] and the acoustic signals in PG attacks [59]. Their physical deployment is directly linked to the stealth and feasibility of the attacks. For instance, lasers require precise calibration of the incident angle, acoustic signals must match the sensor’s resonant frequency, and electromagnetic interference relies on near-field coupling effects. Since such attacks do not rely on physical attachments, they offer greater deployment flexibility than patch-based attacks; however, their effectiveness is highly sensitive to even minor environmental deviations.

- (2) Projector-based adversarial patterns share mechanisms with adversarial patches by introducing additional patterns captured by cameras. However, projected attacks (e.g., Phantom Attack [64], Vanishing Attack [90]) face unique deployment challenges, including calibration for surface geometry and compensation for ambient light. A key reason for DNN models’ vulnerability to such attacks is the lack of training data representing targets under specific optical conditions, such as dynamic projections or strong ambient light. In contrast, laser injection methods like L-HAWK [16] bypass some environmental interference by directly targeting sensors but face deployment complexities related to synchronization triggering and optical calibration.

6. Placing 3D Adversarial Camouflage

6.1. Adversarial Camouflage

Early adversarial camouflage methods targeted 2D rigid or non-rigid surfaces, generating adversarial textures that were printed and attached to the target surface [14,49,81,82] to attack the target model. The 3D adversarial camouflage attack requires placing the adversarial pattern across the entire surface of the target, which may be a non-rigid structure. Therefore, 3D adversarial camouflage attacks involve two key aspects: texture generation methods and rendering techniques applied to the target surface. The literature on placing 3D adversarial camouflage is listed in Table 7.

Table 7.

Attribute list of placing 3D adversarial camouflage and corresponding adversarial attack methods.

There are two existing methods for generating 3D adversarial camouflage using neural renderers. The first, known as the world-aligned method [53,66,69], optimizes 2D texture patterns and maps them repeatedly onto the target via texture overlay. The second, known as the UV map method [35,67,68,70,84], optimizes 3D textures that cover the entire surface of the target in the form of UV mapping. The world-aligned method cannot ensure that the texture is mapped onto the target in the same way during both generation and evaluation, leading to discrepancies in the adversarial camouflage effects. The UV map methods are unable to render complex environmental features such as lighting and weather, which can diminish the effectiveness of adversarial camouflage attacks in physical deployment.

6.2. Generating 3D Adversarial Camouflage

Based on whether neural rendering tools are used in the adversarial camouflage generation process, we categorize 3D adversarial camouflage into two types: adversarial camouflage with 3D-rendering, and adversarial camouflage without 3D-rendering.

Adversarial camouflage with 3D-rendering employs neural renderers to generate adversarial textures or patterns. Among these, the 3D neural renderers include two categories: differentiable neural renderers (DNRs) and non-differentiable neural renderers (NNRs). PyTorch3D 0.7.6, Nvdiffrast 0.3.3, Mitsuba 2.0, and Mitsuba 3.0 are differentiable neural renderers, and MeshLab 1.3.2.0, Blender 4.5, Unity 1.6.0, and Unreal Engine 5.6 are non-differentiable neural renderers. The generation and optimization methods, the neural renderer, and the deployment manner of placing 3D adversarial camouflage are shown in Table 8.

Table 8.

Placing 3D adversarial camouflage: generation and optimization, neural renderer, deployment manner, transferability, and perceptibility.

3D differentiable rendering techniques address non-rigid deformations and the need for extensive labeled data, ensuring that adversarial information is preserved when mapped onto the 3D object. This enables practical deployment of the 3D adversarial camouflage in a sticker mode while maintaining the necessary adversarial properties and robustness.

6.2.1. Adversarial Camouflage Without Neural Renderers

Early adversarial camouflage methods generated adversarial samples by repeatedly pasting adversarial patterns onto target surfaces without using neural renderers, as demonstrated by [14,49,81,82,83].

For example, UPC [81] generates adversarial patterns that are optimized through a combination of semantic constraints and a joint-attack strategy targeting the region proposal network (RPN), classifier, and regressor of the detector. AdvCam [14] generates stealthy adversarial examples by combining neural style transfer with adversarial attack techniques. Adv-Tshirt [49] was the first non-rigid adversarial patch for clothing, targeting person detection systems with adversarial T-shirts. Its adversarial patterns are optimized using a thin plate spline (TPS) transformation to model the non-rigid deformations of the T-shirt, combined with a min–max optimization framework in the ensemble loss function to enhance robustness. Invisibility Cloak [82] concurrently uses a similar method to generate adversarial patterns for clothing and has been verified on more models and datasets.

6.2.2. Adversarial Camouflage with Non-Differentiable Neural Renderers

Previous works employed non-differentiable neural renderers in adversarial camouflage attacks to generate adversarial textures or patterns through search-based or iterative optimization techniques. While effective, these methods typically require more computational resources and time than approaches using differentiable neural renderers. Under the Expectation Over Transformation (EOT) framework, Athalye et al. [110] created the first 3D physical-world adversarial objects, addressing the challenge that adversarial examples often fail to remain adversarial under real-world image transformations.
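The core idea of EOT, optimizing the expected objective over a distribution of transformations rather than a single view, can be sketched as a Monte Carlo average. The sketch below is a toy under stated assumptions: the linear `detector_score`, the brightness-plus-noise transformation, and all parameter values are hypothetical stand-ins, not the setup used in [110].

```python
import random


def detector_score(pixels, weights):
    """Hypothetical stand-in for a detector's confidence (a linear score)."""
    return sum(p * w for p, w in zip(pixels, weights))


def random_transform(pixels, rng):
    """Sample one transformation: brightness scaling plus small sensor noise.

    The ranges are assumptions chosen only to make the example concrete.
    """
    scale = rng.uniform(0.7, 1.3)
    return [min(1.0, max(0.0, scale * p + rng.gauss(0.0, 0.02))) for p in pixels]


def eot_score(pixels, weights, n_samples=200, seed=0):
    """Monte Carlo estimate of the expected score over sampled transformations."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        total += detector_score(random_transform(pixels, rng), weights)
    return total / n_samples


# Toy pattern and weights (illustrative values, not from any dataset).
estimate = eot_score([0.5, 0.5], [1.0, 1.0])
```

An attack built on this idea would minimize `eot_score` instead of the score of a single untransformed image, so that the pattern stays effective across the sampled conditions.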

For example, CAMOU [66] employs a simulation environment (Unreal Engine) that enables the creation of realistic 3D vehicle models and diverse environmental conditions, and uses it to learn camouflage patterns that reduce the detectability of vehicles by object detectors. DAS [67] proposes a dual attention suppression attack based on the observation that different models share similar attention patterns for the same object. The adversarial patterns are rendered with a neural mesh renderer (NMR). CAC [39] leverages Unity for rendering and employs a dense proposals attack strategy, significantly enhancing the transferability and robustness of adversarial examples. However, the method's applicability to Transformer-based models remains unexplored. FCA [68] generates robust adversarial texture patterns by utilizing the CARLA simulator to paint the texture on the entire vehicle surface.

6.2.3. Adversarial Camouflage with Differentiable Neural Renderers

Differentiable neural renderers bridge the gap between 3D models and 2D images by enabling gradient-based optimization of 3D model parameters directly from the pixel space.
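A minimal sketch can make the gradient path concrete. It assumes a toy "renderer" in which each rendered pixel is a texture value multiplied by a fixed shading factor, and a hypothetical linear detection score; real renderers such as PyTorch3D or Nvdiffrast are far more complex, and none of the names below come from those libraries.

```python
def render(texture, shading):
    """Toy differentiable renderer: pixel_k = texture_k * shading_k."""
    return [t * s for t, s in zip(texture, shading)]


def detection_score(image, weights):
    """Hypothetical linear detector score computed on the rendered image."""
    return sum(i * w for i, w in zip(image, weights))


def texture_gradient(shading, weights):
    """Chain rule: d(score)/d(texture_k) = weights_k * shading_k."""
    return [w * s for w, s in zip(weights, shading)]


def optimize_texture(texture, shading, weights, lr=0.1, steps=50):
    """Projected gradient descent on the texture, kept inside [0, 1]."""
    texture = list(texture)
    for _ in range(steps):
        grad = texture_gradient(shading, weights)
        texture = [min(1.0, max(0.0, t - lr * g)) for t, g in zip(texture, grad)]
    return texture


# Illustrative values only.
shading = [1.0, 0.8, 0.6]
weights = [0.9, -0.4, 0.2]
initial_texture = [0.5, 0.5, 0.5]

score_before = detection_score(render(initial_texture, shading), weights)
optimized_texture = optimize_texture(initial_texture, shading, weights)
score_after = detection_score(render(optimized_texture, shading), weights)
```

Because the rendering step is differentiable, the gradient of the score reaches the texture directly. With a non-differentiable renderer this gradient is unavailable, which is why the search-based methods of the previous subsection are needed.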

For example, ACTIVE [53] provides a differentiable texture rendering process, using a neural texture renderer (NTR) to preserve various physical characteristics of the rendered images. AT3D [40] develops adversarial textured 3D meshes that can effectively deceive commercial face recognition systems by optimizing adversarial meshes in the low-dimensional coefficient space of the 3D Morphable Model (3DMM). AdvCaT [84] uses a Voronoi diagram to parameterize the adversarial camouflage textures and the Gumbel–softmax trick to enable differentiable sampling of discrete colors, and then applies TopoProj and TPS to simulate the physical deformation and movement of clothes. RAUCA [70] utilizes a Neural Renderer Plus (NRP) that can project vehicle textures onto 3D models and render images that incorporate a variety of environmental characteristics, such as lighting and weather conditions, leading to more robust and realistic adversarial camouflage. Wang [86] proposed a unified adversarial attack framework that can generate physically printable adversarial patches with different attack targets, including instance-level hiding and scene-level creating.
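The Gumbel–softmax trick mentioned for AdvCaT [84] can be sketched in a few lines. This is a generic, standard-library illustration of the trick, not the AdvCaT implementation: the palette, logits, and temperature are assumed values, and the function names are hypothetical.

```python
import math
import random


def gumbel_softmax(logits, temperature, rng):
    """Return a soft one-hot sample over discrete choices.

    Gumbel noise is added to the logits and a temperature-scaled softmax
    is applied, which gives a relaxed sample that is differentiable with
    respect to the logits in an autodiff framework.
    """
    gumbels = []
    for _ in logits:
        u = max(rng.random(), 1e-12)  # guard against log(0)
        gumbels.append(-math.log(-math.log(u)))
    scores = [(l + g) / temperature for l, g in zip(logits, gumbels)]
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix_color(palette, probabilities):
    """Blend palette colors with the soft sample (weighted average per channel)."""
    return tuple(
        sum(p * color[c] for p, color in zip(probabilities, palette))
        for c in range(3)
    )


# Assumed camouflage palette (RGB in [0, 1]) and per-color logits.
palette = [(0.2, 0.3, 0.1), (0.5, 0.4, 0.3), (0.1, 0.1, 0.1)]
logits = [1.0, 0.5, -0.5]

rng = random.Random(0)
soft_sample = gumbel_softmax(logits, temperature=0.5, rng=rng)
pixel_color = mix_color(palette, soft_sample)
```

Lower temperatures push the sample toward a single palette color, while higher temperatures give smoother blends; the logits are the quantities an attack would optimize.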

Additionally, some studies have used both differentiable and non-differentiable neural renderers. For example, DTA [69] introduces the Differentiable Transformation Attack, which leverages a neural rendering model known as the Differentiable Transformation Network (DTN). The DTN is designed to learn the representation of various scene properties from a legacy photo-realistic renderer. TT3D [55] generates adversarial examples in a digital format and then converts them into physical objects using 3D printing techniques to achieve the targeted adversarial attack. The attack performs adversarial fine-tuning in the grid-based Neural Radiance Field (NeRF) space and conducts transferability tests across various renderers, including Nvdiffrast, MeshLab, and Blender.

6.3. Limitations of 3D Adversarial Camouflage

Physically, adversarial camouflage is typically deployed via 3D printing, or via 2D printing followed by attachment to the target object. Since this form of attack involves manipulating a large area of the target's surface, it is generally visible to human observers. However, 3D adversarial camouflage, particularly for pedestrian and vehicle detection, is increasingly evolving toward a more natural appearance.

The adoption of differentiable neural renderers has been instrumental in this evolution, as they allow gradients to flow from the 2D rendered output back to the 3D model and thus support gradient-based optimization of both texture and shape. This facilitates the generation of highly effective and natural-looking adversarial patterns that are fine-tuned to maximize attack success while minimizing perceptibility. Furthermore, placing 3D adversarial camouflage has demonstrated notable cross-model transferability. For example, existing work [35,40,53,55,70,84,86,111] exhibits transferability across diverse-bone models.

7. Discussion and Future Trends

In this section, we provide a detailed discussion of the trade-offs between transferability, perceptibility, and deployment manners, along with their limitations and future trends.

7.1. Physical Deployment Manner and Transferability

The transferability of physical adversarial attacks can be qualitatively assessed by the cross-dataset, cross-model, and cross-task capabilities of adversarial patterns. For object detection tasks, the reduction in mean average precision (mAP) serves as the primary evaluation metric instead of attack success rate (ASR) [16], quantifying the performance degradation when adversarial patterns are transferred from source datasets and models to new models or tasks.
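The mAP-based metric reduces to simple arithmetic, sketched below. The function names are hypothetical, and reporting the drop relative to the clean mAP is an assumption made here for comparability across models; the cited works may report absolute drops instead.

```python
def map_drop(clean_map, attacked_map):
    """Absolute reduction in mAP caused by the adversarial pattern."""
    return clean_map - attacked_map


def relative_map_drop(clean_map, attacked_map):
    """Reduction in mAP as a fraction of the clean mAP."""
    if clean_map <= 0:
        raise ValueError("clean_map must be positive")
    return (clean_map - attacked_map) / clean_map


# Arbitrary inputs for illustration only; these are not measured results.
example_absolute = map_drop(0.80, 0.20)
example_relative = relative_map_drop(0.80, 0.20)
```

Computing the same quantity on a model that was not used to generate the pattern gives a simple transferability indicator: the closer the drop on the unseen model is to the drop on the source model, the better the pattern transfers.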

We systematically summarize the transferability of adversarial attacks against object detection, focusing specifically on the transferability of cross same-bone models and cross diverse-bone models, along with their two corresponding physical deployment manners: the printed deployment and the injected signal. The transferability of these attacks and their corresponding physical deployment manners are presented in Table 9.

Table 9.

The transferability of adversarial physical attacks and corresponding physical deployment manner.

Adversarial patterns generally exhibit stronger transferability across models with similar architectures (referred to as CSMT) than across those with different architectures (referred to as CDMT). This performance gap primarily stems from discrepancies in model architectures, variations in training data distributions, and other contributing factors such as differences in feature representations and decision boundaries.

As shown in Table 9, and considering the publication years of the methods listed in Tables 3, 5, and 7, early adversarial attacks primarily demonstrated cross same-bone model transferability (particularly across CNN architectures). More recently, research demonstrating cross diverse-bone model transferability has also emerged. For instance, 3D adversarial camouflage methods [35,53,55,70,84,86] exhibit transferability across both CNN and Transformer architectures. Similarly, adversarial patches [15,57,74] also demonstrate transferability across different model architectures. From a practical deployment perspective, transferability is a critical metric for assessing the effectiveness of attacks against unknown real-world systems. Furthermore, compared to adversarial patches or large-area 3D adversarial camouflage, adversarial signals, whether acoustic or optical (such as laser or infrared), are less perceptible to human observers and offer superior visual stealth [63,92]. Additionally, their physical deployment is often more straightforward to implement. These advantages position adversarial signals as a promising vector for future attacks.

7.2. Weakly Perceptible Physical Adversarial Patterns

Adversarial patches that manipulate 2D physical targets cover a small area of the target surface, but their patterns are often unnatural and easily detectable by the naked eye. In real-world deployments, adversarial patterns must be readily captured by imaging sensors while remaining inconspicuous to human observers and maintaining a high attack success rate. Consequently, future work should prioritize making physical adversarial examples less perceptible.

Researchers may design weakly perceptible physical adversarial patterns that combine multi-source illumination with deformable materials (e.g., colored thin-film plastics) to occlude key regions of the target, thereby inducing hiding or appearing attacks against object detection models. Additionally, 3D-printed adversarial patterns constrained by semantic priors could be leveraged to deceive object detection systems.

7.3. Transferability Across Real Object Detection Systems

Real-world systems are typically black-box, meaning adversaries possess limited or no knowledge of the target system's internal workings. To attack successfully under such constraints, adversaries often resort to surrogate models or black-box queries to achieve transferable adversarial effects. Enhancing the black-box transferability of physical adversarial patterns is therefore a critical direction for future research.

In the surrogate-model approach, adversarial patterns generated under white-box conditions demonstrate stronger transferability when the surrogate model's architecture closely matches that of the target victim model. When the architecture of the target system is unknown, combining adversarial patterns generated from multiple surrogate models with diverse architectures may improve the feasibility and robustness of the attack.
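One common way to use several surrogates is to optimize a single pattern against their averaged score. This differs from merging separately generated patterns as described above and is shown only as a related, simplified illustration. The linear surrogates, the finite-difference gradient, and all values are assumptions.

```python
def make_linear_surrogate(weights):
    """Build a hypothetical surrogate detector with a linear score."""
    def score(pattern):
        return sum(p * w for p, w in zip(pattern, weights))
    return score


def ensemble_score(pattern, surrogates):
    """Mean detection score of the pattern over all surrogate models."""
    return sum(model(pattern) for model in surrogates) / len(surrogates)


def numerical_gradient(fn, pattern, eps=1e-4):
    """Central finite differences, used here to avoid any autodiff dependency."""
    grad = []
    for k in range(len(pattern)):
        up, down = list(pattern), list(pattern)
        up[k] += eps
        down[k] -= eps
        grad.append((fn(up) - fn(down)) / (2 * eps))
    return grad


def attack_ensemble(pattern, surrogates, lr=0.05, steps=100):
    """Projected gradient descent on the averaged surrogate score."""
    pattern = list(pattern)
    for _ in range(steps):
        grad = numerical_gradient(lambda p: ensemble_score(p, surrogates), pattern)
        pattern = [min(1.0, max(0.0, p - lr * g)) for p, g in zip(pattern, grad)]
    return pattern


# Two toy surrogates standing in for models with different architectures.
surrogates = [make_linear_surrogate([1.0, -0.5]), make_linear_surrogate([0.6, 0.2])]
start_pattern = [0.5, 0.5]
ensemble_pattern = attack_ensemble(start_pattern, surrogates)
```

In this toy case the averaged objective lowers the score of both surrogates at once, whereas a pattern tuned to one surrogate alone could leave the other largely unaffected.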

In the black-box query method, adversaries in real-world scenarios often face strict limitations on the number of queries they can make to the target system. Efficiently incorporating prior knowledge about the target system into the adversarial pattern optimization process can significantly enhance query efficiency. For example, object detection mechanisms used in autonomous driving systems from different manufacturers can be analyzed using pre-trained deep neural networks based on open-source autonomous driving architectures, modules, and algorithms. By leveraging such prior knowledge, adversaries can better guide the optimization of adversarial patterns, thereby improving both query efficiency and attack effectiveness against unknown real-world systems.
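A query-limited attack can be sketched as a random search that stops when the budget is exhausted. Everything here is hypothetical: the `BlackBox` wrapper, the linear victim score, and the step size are assumptions, and the `init` argument is where prior knowledge (for example, a pattern pre-optimized on an open-source surrogate) would enter.

```python
import random


class QueryBudgetExceeded(Exception):
    """Raised when the attacker tries to exceed the allowed number of queries."""


class BlackBox:
    """Hypothetical wrapper that exposes only scores and counts queries."""

    def __init__(self, score_fn, budget):
        self._score_fn = score_fn
        self.budget = budget
        self.used = 0

    def query(self, pattern):
        if self.used >= self.budget:
            raise QueryBudgetExceeded("query budget exhausted")
        self.used += 1
        return self._score_fn(pattern)


def random_search_attack(oracle, init, step=0.1, seed=0):
    """Greedy random search: keep a candidate only if it lowers the score."""
    rng = random.Random(seed)
    best = list(init)
    best_score = oracle.query(best)
    while oracle.used < oracle.budget:
        candidate = [min(1.0, max(0.0, p + rng.uniform(-step, step))) for p in best]
        score = oracle.query(candidate)
        if score < best_score:
            best, best_score = candidate, score
    return best, best_score


def victim_score(pattern):
    """Toy victim detector; in practice this is the unknown target system."""
    return sum(p * w for p, w in zip(pattern, [0.9, -0.4, 0.2]))


oracle = BlackBox(victim_score, budget=30)
initial_pattern = [0.5, 0.5, 0.5]
found_pattern, found_score = random_search_attack(oracle, initial_pattern)
```

A better starting point reduces the number of queries needed to reach a given score, which is the sense in which prior knowledge improves query efficiency.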

7.4. Security Threats of Full Object Detection Pipeline

The deployment of adversarial patterns against object detection models in real-world environments involves numerous influencing factors, such as ambient lighting, distance, perspective, target velocity, camera sensor characteristics, and weather conditions, all of which affect the attack’s performance.

For object detection modules in autonomous vehicles that operate at high speeds or rely on multi-sensor fusion for decision-making, the robustness of existing adversarial patterns remains insufficiently validated. Many studies still lack evaluation under highway-speed scenarios or testing on actual vehicle platforms, indicating a need for further verification. Other approaches use lasers to interfere with camera sensors, rolling shutters, or optical channels, thereby attacking the visual perception systems of autonomous vehicles. However, these efforts often focus on a single attack surface and lack comprehensive system-level adversarial evaluations of the entire object detection pipeline in autonomous driving. As a result, the overall systemic threat posed by such attacks remains poorly understood and requires further investigation.

7.5. Defense of Adversarial Patterns

Given the complexity of the physical world, we categorize defense strategies into four types according to the stage of the model pipeline at which they operate: model training, model input, model inference, and result output. These four defense strategies and their corresponding objectives are presented in Table 10.

Table 10.

The taxonomy of adversarial defense strategies: defense objectives and key limitations across the four pipeline stages.

- (1) Adversarial Training. This method is applied during the model training stage by proactively introducing adversarial perturbations into the training datasets [112]. This process enhances the model's inherent robustness, enabling it to ignore the interference of adversarial patterns.

- (2)