Abstract

Existing contrastive deep graph clustering methods typically employ fixed-threshold strategies when constructing positive and negative sample pairs, and fail to integrate graph structure information and clustering structure information effectively. This fixed-threshold, binary partitioning is overly rigid and limits the model’s use of potentially learnable samples. To address this problem, this paper proposes a contrastive deep graph clustering model with a progressive relaxation weighting strategy (DCPRES). By introducing the progressive relaxation weighting strategy (PRES), DCPRES dynamically allocates sample weights and builds a training curriculum that proceeds from easy to difficult samples. This mitigates the impact of pseudo-label noise and improves the quality of positive and negative sample pair construction. Building upon this, DCPRES designs two contrastive learning losses: an instance-level loss and a cluster-level loss, which focus on local node information and global cluster distribution characteristics, respectively, promoting more robust representation learning and clustering performance. Extensive experiments demonstrate that DCPRES outperforms existing methods on multiple public graph datasets and exhibits superior robustness and stability. For instance, on the CORA dataset, our model improves on the static approach of CCGC in NMI, ACC, ARI, and F1-score. DCPRES thus provides an efficient and stable solution for unsupervised graph clustering tasks.

1. Introduction

Graph data possess the advantage that their topological structure inherently provides natural self-supervision signals [1,2]. Consequently, this type of data holds significant application value in unsupervised learning and has greatly advanced the development of deep graph clustering (DGC) techniques. Deep graph clustering techniques aim to learn node representations and uncover latent cluster structures in an unsupervised setting. Classical methods such as ARGA [3] and DSCPS [4] typically guide clustering training based on reconstruction loss or generative modeling. However, such methods often struggle to capture the complex semantic relationships between nodes. Robustness in complex networks has also been emphasized in other domains, such as traffic network security [5], which further highlights the necessity of designing stable and resilient strategies in deep graph clustering.

Benefiting from the remarkable ability of contrastive learning [6] (CL) to learn supervision signals from unlabeled samples, contrastive deep graph clustering [1] (CDGC) has achieved an impressive performance. CDGC leverages augmented views of the graph structure and features to generate positive and negative sample pairs. By maximizing the similarity between positive pairs and minimizing the similarity with negative pairs, it guides the model to learn discriminative representations of augmented nodes, significantly enhancing the graph clustering performance. In CDGC, the selection of high-confidence positive and negative samples is crucial for improving the clustering performance. Early CDGC methods, such as MVGRL [7], primarily relied on manually designed data augmentation techniques (e.g., node feature perturbation, edge structure perturbation) to generate different views. While this approach can promote diversity in node representations, the inherent randomness of the augmentation often leads to semantic drift issues, consequently degrading the quality of the constructed positive and negative sample pairs for clustering. Yang et al. proposed CCGC [8], which utilizes high-confidence pseudo-labels to select reliable nodes for guiding the construction of positive and negative pairs. It employs dual encoders to generate different views, significantly mitigating the problems of semantic drift and pseudo-label noise. Liu et al. proposed HSAN [9], which, for the first time, introduced hard sample awareness into the CDGC framework. HSAN identifies hard positive and hard negative samples through the dual modeling of the feature and structural similarity. By dynamically weighting these hard samples during training, it enhances the model’s discriminative power on challenging samples and improves the clustering performance.

Although contrastive deep graph clustering has achieved promising performance, we point out two drawbacks of existing methods: (1) Existing methods overlook the joint role of graph structure information and clustering structure information when constructing negative pairs. Although CCGC [8] considers clustering structure information when selecting negative pairs and HSAN [9] considers graph structure information, no existing work integrates both kinds of information simultaneously. (2) When selecting positive and negative sample pairs based on the K-means clustering results, existing methods typically employ a fixed-threshold strategy [8,9] and focus more on samples distant from cluster centers, termed “hard samples”. However, this fixed-threshold, binary partitioning approach is overly rigid, limiting the model’s utilization of potentially learnable samples. An excessively large threshold introduces excessive noise within clusters, whereas an overly small threshold risks losing high-confidence samples.

To address these problems, this paper proposes a contrastive deep graph clustering model with a progressive relaxation weighting strategy (DCPRES). Concretely, when constructing different views, we embed both the sample attributes and the structure of the graph data. The embeddings from these two sources are then fused, yielding node representations for the different views that carry richer semantic information. When selecting positive samples, we adopt the progressive relaxation weighting strategy (PRES), which introduces two small threshold parameters to divide the area around each cluster center into three confidence regions: high, medium, and low. This avoids the shortcomings of using a single fixed threshold to distinguish positive and negative samples. Each sample is assigned to a confidence region based on its distance to the cluster center and weighted according to its confidence level, so that the model first learns features from high-quality samples. Inspired by self-paced learning, we progressively increase the threshold parameters, gradually introducing hard samples and guiding the model to learn from easy to difficult instances. This approach addresses the instability caused by semantic drift and pseudo-label noise, enhancing the model’s generalization and robustness. The main contributions of this paper are as follows:

- We introduce a novel contrastive deep graph clustering model featuring PRES. To improve feature representation, our model concurrently processes both sample attributes and graph structures and establishes a two-tiered contrastive learning framework at both the instance and cluster levels.

- The progressive relaxation weighting strategy implements an “easy-to-hard” learning curriculum. It guides the model to initially train on high-confidence samples to build a strong feature foundation, before gradually incorporating more challenging, lower-confidence samples. This approach enhances the model’s capacity for distinguishing between positive and negative pairs.

- Extensive experiments on six benchmark datasets validated the superiority of our proposed model, which set new state-of-the-art results across several key quantitative metrics against competing methods.

The remainder of this paper is organized as follows. We first review related work in contrastive deep graph clustering and self-paced learning. Next, we detail the proposed DCPRES framework, including its feature encoding process, the PRES, and the dual contrastive loss functions. Subsequently, we present extensive experimental results on six benchmark datasets to demonstrate the superiority of our method, followed by ablation studies and a visual analysis to validate the effectiveness of each component. Finally, we conclude the paper with a summary of our findings.

2. Related Work

2.1. Contrastive Deep Graph Clustering

Contrastive deep clustering is a method for unsupervised representation learning whose fundamental principle is to learn highly discriminative features by attracting positive pairs and repelling negative pairs within an embedding space. This method has seen broad applications in representation learning in various domains, including computer vision [10,11,12], natural language processing [13], and graph-structured data [14,15]. It is especially effective at uncovering latent semantic structures in data where labels are absent. Central to this is the info-noise contrastive estimation (InfoNCE) loss [16], a classic paradigm in contrastive learning. It drives the learning of discriminative features by maximizing the similarity between positive pairs while minimizing their similarity to a set of negative samples. For a given anchor sample $x$, its corresponding positive sample $x^{+}$ (typically generated by data augmentation), and a set of negative samples $\{x_j^{-}\}_{j=1}^{J}$, the InfoNCE loss is formulated as follows:

$$\mathcal{L}_{\mathrm{InfoNCE}} = -\log \frac{\exp\left(\mathrm{sim}(z, z^{+})/\tau\right)}{\exp\left(\mathrm{sim}(z, z^{+})/\tau\right) + \sum_{j=1}^{J} \exp\left(\mathrm{sim}(z, z_j^{-})/\tau\right)} \tag{1}$$

Here, $z$, $z^{+}$, and $z_j^{-}$ are the respective embedding vectors for the anchor $x$, the positive sample $x^{+}$, and the negative sample $x_j^{-}$. The term $\mathrm{sim}(\cdot,\cdot)$ represents a similarity metric (such as cosine similarity) and $\tau$ is a temperature parameter. The InfoNCE loss function considers a sample and its augmentation as a positive pair, while all other samples in the batch are treated as negative pairs. In complex graph structures, this design is prone to inaccurate positive–negative sample partitioning, thereby degrading the model performance.
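As a concrete illustration of Equation (1), the following minimal Python sketch computes the InfoNCE loss for a single anchor using cosine similarity. The function names `cosine` and `info_nce`, the toy vectors in the usage note, and the default temperature of 0.5 are illustrative assumptions rather than values taken from this paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, tau=0.5):
    """InfoNCE loss for one anchor, one positive, and a list of negatives.

    tau is the temperature; 0.5 is an arbitrary illustrative default.
    """
    pos = math.exp(cosine(anchor, positive) / tau)
    neg = sum(math.exp(cosine(anchor, n) / tau) for n in negatives)
    return -math.log(pos / (pos + neg))
```

For example, `info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])` is smaller than `info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])`, because in the first call the positive is aligned with the anchor and the negatives are not.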

To overcome this limitation, researchers have proposed several improved strategies to construct positive and negative pairs more effectively and increase the performance of contrastive learning. Prominent examples include DCHD [17], CCGC, and HSAN. These approaches typically select positive and negative pairs based on the K-means clustering results. They often focus on “hard samples”, those distant from cluster centers, and leverage confidence scores or neighborhood relationships to improve the precision of the selection process. However, these methods neglect the influence of the sample-to-center distance (i.e., the threshold) on the model. An overly large threshold introduces significant noise into the clusters, whereas a threshold that is too small results in the loss of high-confidence samples. Moreover, these approaches fail to fully leverage samples in the vicinity of cluster centers, which are high-confidence samples that could enable the model to learn essential features with greater ease.

To address this issue, we introduced a contrastive deep graph clustering model that incorporates a progressive relaxation weighting mechanism. Our approach utilizes two threshold parameters, which are relaxed as training progresses, to divide the space surrounding the cluster centers into three distinct zones: high-, medium-, and low-confidence. By assigning different weights to the samples based on their respective zones, we steer the model to prioritize learning from high-confidence samples in close proximity to the cluster centers. As training continues, these selection criteria are gradually loosened, establishing an “easy-to-hard” curriculum. This strategy significantly enhances both the robustness of the model and its overall clustering efficacy.

2.2. Semi-Supervised Clustering Based on Self-Paced Learning

Self-paced learning (SPL), initially introduced by Kumar et al. [18], was inspired by the cognitive principle that humans learn progressively, starting with simple concepts and gradually advancing to more complex ones. This approach introduces a self-paced parameter to dynamically manage the difficulty of samples during training, allowing the model to first master easier examples before tackling more challenging ones. This, in turn, enhances the robustness and generalization capacity of the model. In contrast to conventional methods that utilize the entire dataset from the outset, SPL prioritizes a sequential and phased approach to sample selection, which effectively mitigates the disruptive influence of noisy data. The efficacy and potential of SPL have been demonstrated from multiple angles in various applications, including semi-supervised image classification [19] (SPLSSE), deep subspace clustering [20] (DSC-AS), and multi-modal semi-supervised classification [21] (SSMFS). In the SPL framework, the learning pace is regulated by introducing a weight variable, $v_i$, for each sample, indicating its degree of inclusion in the training process. This joint learning over model parameters and sample weights is formulated as the following optimization problem [18]:

$$\min_{w,\; v \in [0,1]^{n}} \; \sum_{i=1}^{n} v_i \, \mathcal{L}\left(y_i, f(x_i; w)\right) + g(v; \lambda) \tag{2}$$

In Equation (2), $\mathcal{L}(y_i, f(x_i; w))$ denotes the training loss of the supervised model, while $f(\cdot\,; w)$ represents the model parameterized by $w$. The term $g(v; \lambda)$ is a self-paced regularizer that governs the allocation of sample weights ($v_i$) and steers the learning curriculum. Here, $\lambda$ is the self-paced parameter that controls the quantity and difficulty of the samples selected at each stage. Initially, a small $\lambda$ ensures that only a few high-confidence samples are used for training. As training advances, $\lambda$ is gradually increased, exposing the model to more complex and challenging samples. In conventional self-paced learning, the value of $\lambda$ imposes a hard-thresholding decision (i.e., weights of 0 or 1) on whether a sample is included in the training. In the context of unsupervised clustering, however, we argue that a soft-weighting scheme is more beneficial. Relaxing the confidence criteria allows the model to learn richer semantic representations and enhances its robustness. Therefore, inspired by self-paced learning, we treated a threshold on the sample-to-center distance as the self-paced parameter. We then relaxed this condition by introducing a second, larger threshold to create a soft-weighting interval. Samples with distances falling between the two thresholds were assigned a weight between 0 and 1. Both thresholds were progressively increased during training, empowering the model to more effectively select positive and negative samples.
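The hard-thresholding behavior of conventional self-paced learning can be sketched as follows. The sketch assumes the commonly used linear self-paced regularizer (the negative sum of the sample weights, scaled by the self-paced parameter), under which the optimal weight of a sample is 1 when its loss is below the self-paced parameter and 0 otherwise. The function name `hard_spl_weights` and the toy loss values are hypothetical.

```python
def hard_spl_weights(losses, lam):
    """Binary self-paced weights: include a sample only if its loss is below lam.

    Assumes the linear regularizer g(v; lam) = -lam * sum(v), for which the
    optimal weights have this closed form.
    """
    return [1.0 if loss < lam else 0.0 for loss in losses]


# Toy per-sample losses (hypothetical values).
losses = [0.1, 0.4, 0.9, 1.5]

# Raising the self-paced parameter admits progressively harder samples.
for lam in (0.5, 1.0, 2.0):
    print(lam, hard_spl_weights(losses, lam))
```

Each sample is either fully included or fully excluded, which is the binary decision that the soft-weighting interval described above is meant to relax.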

3. Method

3.1. Overview of the Framework

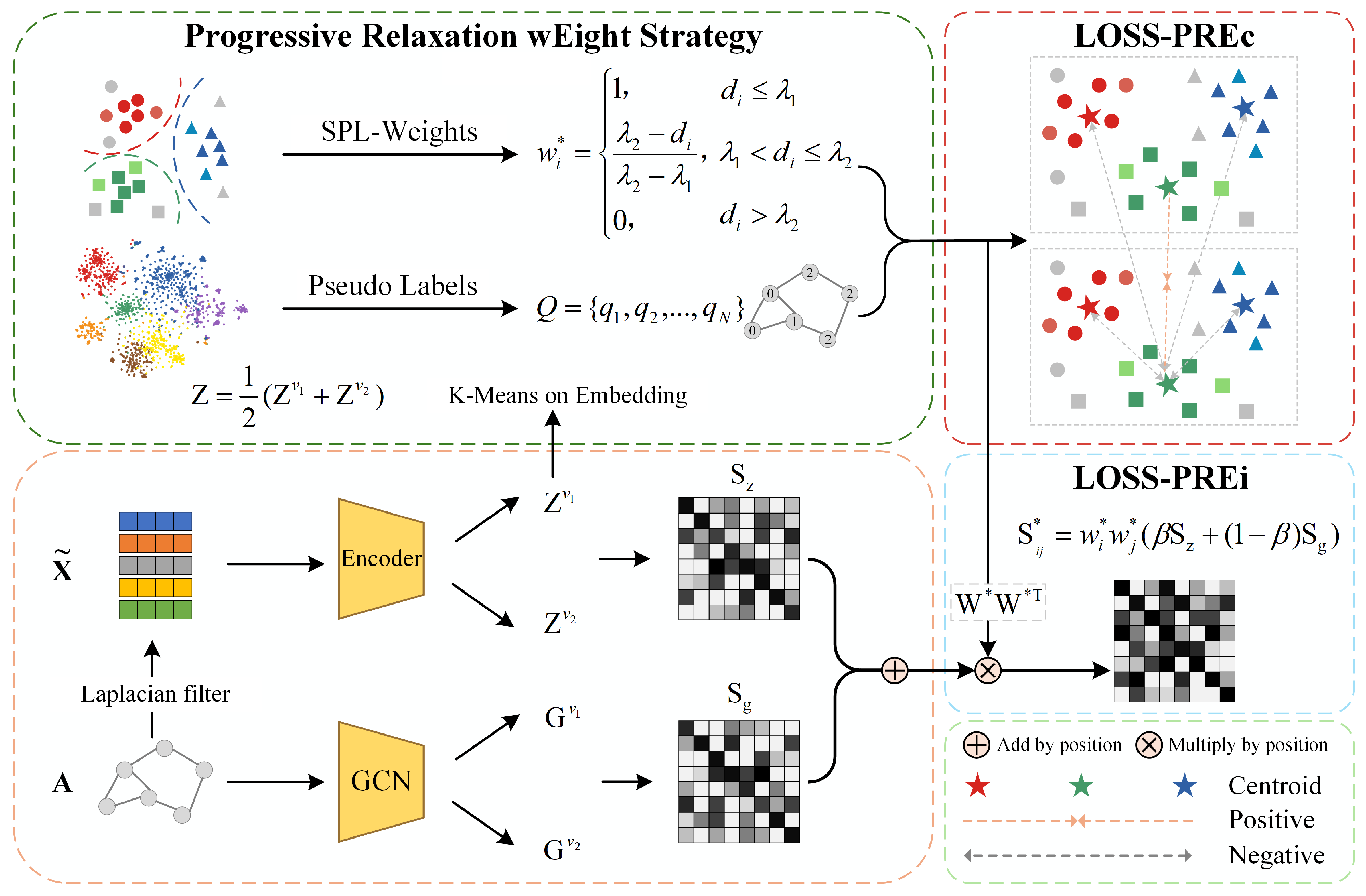

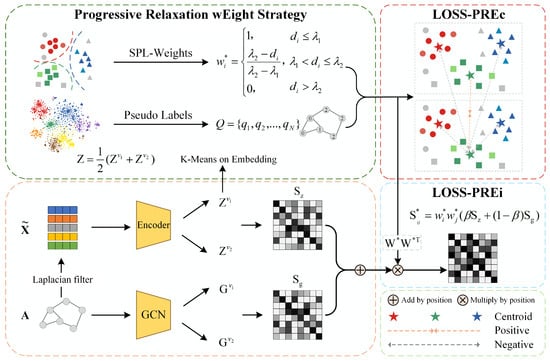

We propose DCPRES, whose architectural framework is depicted in Figure 1. Initially, the model employs a feature extraction module to generate embeddings that capture both the attribute and structural characteristics of the nodes. Subsequently, our PRES module dynamically allocates weights to samples, facilitating a curriculum where the model learns progressively from high-confidence to low-confidence examples. The architecture incorporates two key loss functions: an instance-level contrastive loss (LOSS-PREi) that captures local structural details via sample-to-sample comparisons, and a cluster-level contrastive loss (LOSS-PREc) guided by our weighting strategy that refines the global cluster distribution through cluster-to-cluster comparisons. Both losses, synergistic under the progressive relaxation mechanism, jointly elevate the overall clustering performance. Consequently, the model strikes an effective balance between local similarity and global consistency, and its robustness to noisy data is significantly improved.

Figure 1. Overall framework of the contrastive deep graph clustering model with a progressive relaxation weighting strategy (DCPRES). The diagram illustrates a dual-branch feature extraction from attributes ($X$) and structure ($A$) to generate embeddings and pseudo-labels. The PRES assigns dynamic sample weights using two thresholds to implement an “easy-to-hard” curriculum. This guides two contrastive losses: LOSS-PREi for instance-level learning and LOSS-PREc for cluster-level refinement, collectively improving the clustering performance and noise robustness.

3.2. Attribute and Structural Feature Encoding

In this section, we model the node data as an undirected graph. Let $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ define an undirected graph comprising $N$ nodes and $K$ classes. The set of nodes is denoted by $\mathcal{V} = \{v_1, v_2, \ldots, v_N\}$, and the set of $M$ undirected edges by $\mathcal{E}$, which captures the connectivity between nodes. Each node is characterized by both attribute and structural information. For ease of feature extraction, we adopted a matrix representation for the graph data. The node attributes are represented by a matrix $X \in \mathbb{R}^{N \times D}$, where each row $x_i$ corresponds to the $D$-dimensional feature vector of node $v_i$. The structure of the graph is encoded in an adjacency matrix $A \in \{0, 1\}^{N \times N}$, where $a_{ij} = 1$ signifies an edge between nodes $v_i$ and $v_j$, and $a_{ij} = 0$ indicates no direct connection.

In line with previous work [8,9], we preprocessed the adjacency matrix with a normalization step prior to feature extraction to enhance the representational capacity of both the graph structure and node features. The procedure is as follows: first, we introduced self-loops to the adjacency matrix $A$ by adding the identity matrix $I$, resulting in $\hat{A} = A + I$. This step is crucial for ensuring that a node’s own features are preserved during the information aggregation phase. Next, we constructed a symmetrically normalized graph Laplacian matrix, $\tilde{L}$, to stabilize the information propagation and mitigate issues arising from nonuniform node degree distributions. It was formulated as follows:

$$\tilde{L} = \hat{D}^{-1/2} \left(\hat{D} - \hat{A}\right) \hat{D}^{-1/2} = I - \hat{D}^{-1/2} \hat{A} \hat{D}^{-1/2} \tag{3}$$

Here, $\hat{D}$ is the degree matrix corresponding to $\hat{A}$, with each diagonal element $\hat{d}_{ii} = \sum_{j} \hat{a}_{ij}$ denoting the degree of node $v_i$. This preprocessing step lays a robust foundation for the subsequent extraction of features based on the graph structure. The final smoothed node attributes are given by Equation (4), where $t$ signifies the number of smoothing layers:

$$X_s = \left(I - \tilde{L}\right)^{t} X \tag{4}$$
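The preprocessing step can be sketched in plain Python as follows. The sketch assumes the Laplacian smoothing filter used in the cited prior work [8,9], in which the feature matrix is multiplied t times by the identity minus the symmetrically normalized Laplacian, which equals the symmetrically normalized adjacency matrix with self-loops. The function names and the default `t=2` are illustrative choices.

```python
import math


def matmul(a, b):
    """Multiply two dense matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2}, with D the degree matrix of A + I."""
    n = len(adj)
    # Add self-loops so each node keeps its own features during aggregation.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]  # always >= 1 thanks to the self-loop
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def smooth_features(adj, x, t=2):
    """Apply t rounds of smoothing: multiply x by (I - L_sym) t times."""
    prop = normalized_adjacency(adj)  # identical to I - L_sym
    for _ in range(t):
        x = matmul(prop, x)
    return x
```

On a two-node graph with a single edge, `smooth_features([[0, 1], [1, 0]], [[1.0], [3.0]], t=1)` averages the two features, giving 2.0 for both nodes.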

Given the preprocessed feature matrix and the original adjacency matrix A, we separately fed them into an attribute feature encoder (AE) and a structure feature GCN (SG). The attribute encoder maps node attributes into latent embeddings, while the structure encoder is implemented as a graph convolutional network (GCN) to capture structural dependencies. This process yields distinct representations for the node attributes and the graph structure, respectively:

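The GCN-based structure encoder updates node features by aggregating information from neighbors according to the normalized adjacency matrix. Its propagation rule follows the standard formulation:

$$H^{(l+1)} = \sigma\left(\hat{D}^{-1/2} \hat{A} \hat{D}^{-1/2} H^{(l)} W^{(l)}\right) \tag{6}$$

where $H^{(l)}$ is the node feature matrix at layer $l$, $W^{(l)}$ is the trainable weight matrix of that layer, and $\sigma(\cdot)$ is a non-linear activation function. After this encoding process, the two attribute encoders yield two attribute-view embeddings, and the two structure encoders produce two structure-view embeddings.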

3.3. Progressive Relaxation Weight Strategy

In the task of contrastive deep graph clustering, selecting high-confidence samples is paramount for improving the model stability and discriminative power. The conventional approach involves clustering the two view representations produced by a dual-view encoder and designating the top-ranked samples (those closest to their cluster centroids or with the highest assignment probability) as high-confidence pairs. Traditional methods often rely on a static thresholding policy: samples satisfying a specific distance criterion are marked as positive (weight of 1), while all others are disregarded (weight of 0). This rigid, binary partitioning, however, is overly restrictive and prevents the model from leveraging other potentially valuable samples.

To address this limitation, we introduced a progressive relaxation weighting mechanism for the dynamic selection of high-confidence samples to augment the supervisory signal. Within this relaxation weighting module, a sample’s weight is determined by its feature-space distance and the clustering outcome. Specifically, we began by fusing the outputs of the two attribute encoders to form a unified attribute representation, $Z$.

We applied K-means clustering to the complete set of fused representations $Z$. This process yielded a set of $K$ cluster centroids and a sample-to-centroid distance matrix. To quantify the clustering confidence for each sample, we then computed the Euclidean distance between the sample and its assigned cluster centroid:

$$d_i = \left\lVert z_i - c_{k_i} \right\rVert_2$$

Here, $z_i$ is the fused representation of sample $i$, and $c_{k_i}$ denotes the centroid of the cluster to which sample $i$ is assigned after K-means clustering. To improve the comparability of the distance metric and enhance the training stability, we normalized all sample distances $d_i$, scaling them to the $[0, 1]$ range. A smaller value of $d_i$ signifies that the sample is closer to its cluster centroid, and thus possesses a higher clustering confidence.
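A minimal sketch of this confidence measure is given below. It takes the embeddings, centroids, and cluster assignments as already computed (for instance by K-means) and assumes min-max scaling as the normalization, which is one plausible way to map distances onto the unit interval; the text does not state which normalization is used. The function names are hypothetical.

```python
import math


def centroid_distances(embeddings, centroids, assignments):
    """Euclidean distance from each sample to its assigned cluster centroid."""
    return [math.dist(z, centroids[k]) for z, k in zip(embeddings, assignments)]


def min_max_normalize(values):
    """Scale values to [0, 1]; min-max scaling is an assumption of this sketch."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All samples are equally far from their centroids.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]
```

With embeddings `[[0, 0], [3, 4], [10, 10]]`, centroids `[[0, 0], [10, 10]]`, and assignments `[0, 0, 1]`, the raw distances are 0, 5, and 0, so only the second sample receives a normalized distance of 1.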

For sample weight computation, we departed from traditional binary selection policies by proposing a three-stage sample-weighting mechanism based on a progressive relaxation strategy. This mechanism permits the model to initially focus exclusively on the most reliable samples, ensuring training stability. As training deepens, more challenging samples are progressively incorporated to improve the model’s discriminative power and generalization capabilities. Concurrently, by filtering out low-confidence samples, the disruptive influence of noise on the training process is significantly mitigated. Specifically, we introduced two threshold parameters, a lower threshold and an upper threshold (with the lower strictly smaller than the upper), to define confidence regions for the samples. The lower threshold functions like a conventional fixed threshold, identifying definitive positive samples. The upper threshold relaxes this stringent condition by allowing samples whose distances fall between the two thresholds to be assigned a soft confidence weight. This approach expands the pool of trainable data to include potentially valuable samples, thereby enhancing the model’s feature-learning capacity. According to the functions of the two thresholds, the dynamic formula for calculating sample weights was established as follows:

Accordingly, we classified samples into three distinct types: high-confidence (distance below the lower threshold), medium-confidence (distance between the two thresholds), and low-confidence (distance above the upper threshold). At the outset of training, both thresholds were initialized to very small values. This guaranteed that the initial training set consisted of high-confidence samples characterized by minimal clustering distances and the most dependable feature representations. To realize an “easy-to-hard” training curriculum, we progressively increased both thresholds as the training unfolded, thereby relaxing the conditions for sample selection. Specifically, during the t-th epoch of training, the two self-paced learning parameters were updated based on the following rule:

In Equation (10), the two step sizes govern the growth increments of the lower and upper thresholds per training epoch. The magnitudes of these increments directly dictate the pace at which the model incorporates more challenging samples into the training process. To accommodate the varying requirements of different training phases, different growth strategies can be employed for the two thresholds, enabling a more adaptable sample-filtering procedure.

In practice, both threshold parameters are initialized with small values. This design ensures that, at the beginning of training, the model focuses only on high-confidence samples located close to the cluster centers, thereby reducing the negative influence of noisy pseudo-labels. This choice was inspired by the principle of self-paced learning, where the model first learns from “easy” and reliable samples before gradually incorporating more challenging ones.

To ensure interpretability and stability, we adopted a linear growth strategy for both thresholds, which were steadily increased at each training epoch. For example, the per-epoch growth rate of one threshold is defined as the difference between 1.0 and its initial value, divided by T, where T represents the total number of training epochs. Accordingly, the value of that threshold at the t-th epoch is its initial value plus t times this growth rate. This formulation ensures that the threshold increases linearly from its initial value to a maximum of 1.0 by the end of training. A similar strategy was applied to the other threshold.
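The linear growth strategy can be sketched as follows. The function name `linear_threshold` and the initial value 0.1 in the usage note are hypothetical, since the initial values are not reproduced here; the total of 400 epochs is borrowed from the training setup in Section 4.2 purely for illustration.

```python
def linear_threshold(initial, epoch, total_epochs, final=1.0):
    """Threshold value at a given epoch under linear growth.

    The per-epoch step is (final - initial) / total_epochs, so the
    threshold equals `initial` at epoch 0 and `final` at the last epoch.
    """
    step = (final - initial) / total_epochs
    return initial + epoch * step
```

For instance, with an assumed initial value of 0.1 and 400 epochs, `linear_threshold(0.1, 200, 400)` lies halfway between 0.1 and 1.0, and `linear_threshold(0.1, 400, 400)` reaches 1.0 up to floating-point rounding.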

In addition, the effectiveness of this design was validated through a hyperparameter sensitivity analysis (see Section 4.6) across multiple datasets. The experiments demonstrated that, when both thresholds are initialized within a relatively small range, the model achieves a consistently robust clustering performance.

By leveraging the aforementioned PRES, a dedicated training weight is dynamically computed for each sample in every iteration, based on its normalized distance relative to the two thresholds. The collection of these weights forms the sample weight matrix, which acts as a dynamic measure of sample importance. This integrated sample selection and weighting mechanism enhances the model’s robustness and generalization capabilities throughout the training process.
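One way to realize the three confidence regions is sketched below. The paper's exact weighting formula is not reproduced here, so the linear decay used for the medium-confidence region is an assumption of this sketch, as are the function name `pres_weight` and the threshold values in the usage note.

```python
def pres_weight(distance, lower, upper):
    """Soft confidence weight for a normalized sample-to-centroid distance.

    High confidence (distance <= lower): weight 1.
    Medium confidence (lower < distance <= upper): weight decays linearly
    from 1 to 0 (the linear form is an assumption of this sketch).
    Low confidence (distance > upper): weight 0.
    """
    if not lower < upper:
        raise ValueError("lower threshold must be smaller than upper threshold")
    if distance <= lower:
        return 1.0
    if distance <= upper:
        return (upper - distance) / (upper - lower)
    return 0.0
```

With assumed thresholds of 0.1 and 0.3, a sample at distance 0.05 receives weight 1, a sample at 0.2 receives roughly 0.5, and a sample at 0.5 is excluded with weight 0. As both thresholds grow during training, more samples move from the excluded region into the weighted regions.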

3.4. Progressive Relaxation Weighted Instance-Level Contrastive Loss (LOSS-PREi)

Following the K-means clustering of the fused features $Z$, we derived an assignment probability matrix. This matrix details the probability of each sample’s assignment to every cluster center. For each individual sample, we then selected the cluster center corresponding to the maximum assignment probability to serve as its pseudo-label:

This process generated a set of pseudo-labels, which we used to construct positive and negative pairs. For any given sample from the first attribute view, its positive counterpart is a sample from the second attribute view that shares the identical pseudo-label. All samples with differing pseudo-labels are considered negative samples. An analogous protocol was employed for the two structural view representations. This construction protocol ensures that nodes with coherent features across views are treated as positive pairs, thereby effectively strengthening the alignment of cross-view representations.
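The pair-construction protocol can be sketched as follows. Given one pseudo-label per node, the sketch lists, for each anchor in the first view, the indices of second-view samples that count as positives (same pseudo-label, which includes the anchor's own counterpart) and as negatives (different pseudo-label). The function name `build_pairs` and the toy labels are hypothetical.

```python
def build_pairs(pseudo_labels):
    """Map each anchor index to its cross-view positive and negative indices."""
    n = len(pseudo_labels)
    positives, negatives = {}, {}
    for i in range(n):
        # Second-view samples sharing the anchor's pseudo-label are positives.
        positives[i] = [j for j in range(n) if pseudo_labels[j] == pseudo_labels[i]]
        # All second-view samples with a different pseudo-label are negatives.
        negatives[i] = [j for j in range(n) if pseudo_labels[j] != pseudo_labels[i]]
    return positives, negatives
```

For pseudo-labels `[0, 1, 0]`, anchor 0 has positives `[0, 2]` and negatives `[1]`, so each anchor typically has several positives rather than the single augmented counterpart used by plain InfoNCE.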

Building upon this, we incorporated the sample weight obtained from PRES, which is automatically modulated by the sample’s confidence within the semantic feature space. In the initial stages of training, the model focuses exclusively on high-confidence samples. As training proceeds, more challenging medium-confidence samples are progressively incorporated, while low-confidence noisy samples are either assigned a lower weight or discarded entirely. Inspired by the InfoNCE loss [16] (Equation (1)), we designed a weighted instance contrastive loss function to encourage aggregation among positive pairs and suppress similarity among negative samples, which is defined as follows:

Here, each sample has a positive set and a negative set determined by the pseudo-labels. The similarity function is the normalized cosine similarity, the loss uses an instance-level temperature parameter, and a learnable parameter weighs the contributions of the semantic and structural view similarities. The indices a and b denote the first and second views, respectively. This loss function, calculated for a sample in view a, integrates information from both attribute and structural cross-view perspectives. Compared to the original InfoNCE loss, which typically relies on a single positive per anchor, our instance-level loss leverages clustering results to generate a richer set of positive and negative pairs. This allows the model to exploit more informative supervisory signals, thereby optimizing the representation learning process. Furthermore, by incorporating the PRES, each sample is assigned a confidence-based weight that scales its contribution to the final loss. This dynamic weighting mechanism reduces the influence of noisy samples, enhances the robustness of the learned representations, and steers the model to learn increasingly discriminative representations through an “easy-to-hard” curriculum, ultimately leading to a more stable and effective clustering performance. The total instance-level contrastive loss, guided by our self-paced strategy, is formulated as follows:

3.5. Progressive Relaxation Weighted Cluster-Level Contrastive Loss (LOSS-PREc)

Beyond the instance-level contrastive loss operating on individual samples, we introduced a cluster-level contrastive loss to capture higher-order semantic consistency. This loss is designed to maximize the similarity between positive cluster views while minimizing it between negative pairs. Concretely, for each of the two attribute views, we aggregated node features into class-center representations according to their pseudo-labels. To enhance the robustness, we incorporated the sample confidence weights into the formulation of the cluster centroids. The centroid for the k-th class in each view is defined as follows:

In this context, the indicator function yields a value of 1 if a sample is a member of the k-th class, and 0 otherwise. To enforce consistency between centroids of the same class across different views, while simultaneously enlarging the separation between centroids of different classes, we designed the following self-paced clustering contrastive loss:

In this context, the similarity function is the normalized cosine similarity, and the clustering temperature parameter modulates the sensitivity of the contrastive loss distribution. The indices a and b correspond to the first and second views, respectively. This loss function designates the centroids of the same class k from the two different views as a positive pair. Conversely, all other class centroids are treated as negative samples. This cluster-level contrastive objective optimizes the clustering consistency between the two views. Therefore, the final loss for DCPRES is a combination of the instance-level and cluster-level losses. Additionally, the complete training procedure for the model is detailed in Algorithm 1.
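The confidence-weighted centroids that feed the cluster-level loss can be sketched as follows. The sketch normalizes each centroid by the sum of the weights of its member samples; this normalization, the function name `weighted_centroids`, and the toy inputs are assumptions, since the paper's centroid equation is not reproduced here.

```python
def weighted_centroids(embeddings, pseudo_labels, weights, num_clusters):
    """Confidence-weighted mean embedding per pseudo-label cluster."""
    dim = len(embeddings[0])
    sums = [[0.0] * dim for _ in range(num_clusters)]
    totals = [0.0] * num_clusters
    for z, k, w in zip(embeddings, pseudo_labels, weights):
        totals[k] += w
        for d in range(dim):
            sums[k][d] += w * z[d]
    # A cluster whose samples all have zero weight keeps a zero vector.
    return [[s / totals[k] for s in sums[k]] if totals[k] > 0 else sums[k]
            for k in range(num_clusters)]
```

With embeddings `[[0, 0], [2, 2], [10, 0]]`, pseudo-labels `[0, 0, 1]`, and weights `[1.0, 0.0, 0.5]`, the zero-weight second sample does not pull the first centroid away from `[0, 0]`, which illustrates how low-confidence samples are kept from distorting the cluster centers.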

Algorithm 1. DCPRES algorithm flow.

4. Experiment

This section begins by introducing the six publicly available datasets utilized in our experiments, accompanied by a brief description of each dataset’s key characteristics. We then provide a detailed exposition of our proposed method’s implementation, covering the experimental setup, the comparative baseline models, and the evaluation metrics employed. To conduct a thorough performance assessment, we performed extensive clustering experiments on these six datasets, benchmarking our approach against several state-of-the-art methods.

4.1. Dataset

Our experiments evaluate performance on six common graph datasets, which include citation networks, a co-purchase network, and air-traffic networks. The first category includes CORA and CITE, two classic citation network datasets where nodes signify papers and edges denote citation links. Each node is characterized by textual features and a class label, making these datasets standard benchmarks for node classification. The second category comprises AMAP, a co-purchase graph of Amazon photo products in which edges link products that are frequently bought together, and BAT, EAT, and UAT, which are air-traffic networks of Brazil, Europe, and the USA in which nodes correspond to airports and edges to flights between them. A statistical summary of these six datasets is provided in Table 1.

Table 1. Details of the graph datasets.

4.2. Experimental Environment and Hyperparameter Settings

In our experiments, we trained the model end-to-end for 400 iterations. We employed the Adam optimizer with momentum for gradient descent-based minimization of the loss function, configured with a learning rate of 1 × and a weight decay of 1 × . All the experiments were executed on a system running Ubuntu 22.04.2 LTS, powered by an Intel Core i9-10980XE CPU @ 3.00 GHz and an NVIDIA GeForce RTX 4080 GPU with 16 GB of VRAM for training acceleration. The implementation was built upon the PyTorch framework (v2.4.1), utilizing CUDA 12.4 and Python 3.10. To ensure a fair comparison, all the models were trained from scratch without any pre-trained weights and under identical hyperparameter settings. Furthermore, for the sake of reproducibility, we used a fixed random seed across all experiments to maintain the reliability and comparability of our results.

4.3. Comparative Experiments

To benchmark the performance of our proposed DCPRES model, we conducted a comprehensive experimental comparison against a range of state-of-the-art deep graph clustering methods, all evaluated under identical conditions and without utilizing any pre-trained weights. Our baseline models included deep graph clustering methods such as DAEGC [22] (2019), DyFSS [23] (2024), and DeSE [24] (2025), as well as cutting-edge contrastive deep graph clustering models like GDCL [25] (2021), DCHD [17] (2024), MAGI [26] (2024), CCGC [8] (2023), and HSAN [9] (2023). To thoroughly assess the clustering performance across various aspects, we used four widely recognized evaluation metrics: the accuracy (ACC), the normalized mutual information (NMI), the adjusted Rand index (ARI), and the F1-score [4,8,27,28]. The full results of this comparative study are presented in Table 2, with the top-performing metric highlighted in bold and the runner-up underlined.
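As a rough illustration of how three of the four metrics can be computed from ground-truth labels and predicted cluster assignments, the following is a minimal standard-library sketch. It is not the evaluation code used in the experiments. The function names are hypothetical, the brute-force label matching in `clustering_accuracy` is only practical for a small number of clusters (the Hungarian algorithm is the usual choice otherwise), and normalizing the NMI by the arithmetic mean of the two entropies is an assumption, since the normalization is not stated here. The F1-score is omitted for brevity; it is typically computed after the same cluster-to-class matching.

```python
import math
from collections import Counter
from itertools import permutations


def clustering_accuracy(y_true, y_pred):
    """ACC: best accuracy over all one-to-one mappings of clusters to classes."""
    classes = sorted(set(y_true))
    clusters = sorted(set(y_pred))
    k = max(len(classes), len(clusters))
    pair_counts = Counter(zip(y_pred, y_true))
    best = 0
    # perm[i] is the class index assigned to cluster i; indices beyond the
    # real classes act as dummy classes that match nothing.
    for perm in permutations(range(k), len(clusters)):
        matched = sum(
            pair_counts[(clusters[i], classes[c])]
            for i, c in enumerate(perm)
            if c < len(classes)
        )
        best = max(best, matched)
    return best / len(y_true)


def entropy(labels):
    """Shannon entropy (natural log) of a label sequence."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def normalized_mutual_information(y_true, y_pred):
    """NMI with arithmetic-mean normalization (an assumption of this sketch)."""
    n = len(y_true)
    count_true = Counter(y_true)
    count_pred = Counter(y_pred)
    joint = Counter(zip(y_true, y_pred))
    mi = sum(
        (c / n) * math.log(c * n / (count_true[t] * count_pred[p]))
        for (t, p), c in joint.items()
    )
    denom = (entropy(y_true) + entropy(y_pred)) / 2
    return mi / denom if denom > 0 else 1.0


def adjusted_rand_index(y_true, y_pred):
    """ARI computed from pair counts of the contingency table."""
    def pairs(x):
        return x * (x - 1) / 2

    n = len(y_true)
    sum_joint = sum(pairs(c) for c in Counter(zip(y_true, y_pred)).values())
    sum_true = sum(pairs(c) for c in Counter(y_true).values())
    sum_pred = sum(pairs(c) for c in Counter(y_pred).values())
    expected = sum_true * sum_pred / pairs(n)
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:
        return 1.0
    return (sum_joint - expected) / (max_index - expected)


if __name__ == "__main__":
    truth = [0, 0, 1, 1, 2, 2]
    predicted = [1, 1, 0, 2, 2, 2]  # cluster ids are arbitrary
    print("ACC:", clustering_accuracy(truth, predicted))
    print("NMI:", normalized_mutual_information(truth, predicted))
    print("ARI:", adjusted_rand_index(truth, predicted))
```

Because cluster identifiers are arbitrary, all three functions are invariant to relabeling the predicted clusters, which is why a prediction that merely permutes the class ids still scores 1.0.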

Table 2.

Index comparison of different graph clustering methods on six graph datasets.

As indicated by the results in Table 2, traditional deep graph clustering models, such as DAEGC and DyFSS, show effectiveness on select datasets, but they are fundamentally limited in their ability to capture latent semantic and structural inter-node relationships. These methods generally lack explicit supervisory signals and often rely heavily on specific pre-training routines, which restricts their capacity to fully leverage the underlying graph structures. In contrast, our proposed DCPRES model integrates a contrastive learning mechanism, significantly enhancing the discriminative power of node representations. By enabling the discovery of more informative positive and negative sample pairs, DCPRES sharpens the model’s ability to detect cluster boundaries, leading to an improved clustering performance.

One of the key features of DCPRES is its progressive relaxation strategy for sample selection, which follows the self-paced learning (SPL) paradigm. SPL provides an “easy-to-hard” curriculum in which the model first focuses on high-confidence samples and gradually admits harder, more uncertain samples as training progresses. This progressive approach is particularly effective at mitigating the impact of pseudo-label noise and stabilizing gradient updates, which is crucial for clustering in noisy settings. Specifically, training on easy-to-classify samples first gives DCPRES a stable optimization trajectory, while the later introduction of more complex samples allows the model to learn more discriminative features. Weighting samples dynamically by their confidence level further refines the contrastive learning objective, making the model more robust to noise and improving gradient stability.

Furthermore, we benchmarked DCPRES against leading contrastive deep graph clustering models, including GDCL, DCHD, CCGC, MAGI, and HSAN. While these models leverage contrastive learning to address the lack of supervision, they predominantly rely on static high-confidence sample selection, which can be suboptimal for both sample utilization and the representation robustness. DCPRES, in contrast, employs a dynamic, self-paced learning-based sample-scheduling strategy. This allows the model to begin by focusing on high-confidence samples to stabilize early-stage training, and then progressively include medium- and low-confidence samples, thereby adapting to harder examples. This “easy-to-hard” learning paradigm, facilitated by dynamically weighted contributions from each sample, improves both the robustness and flexibility of the contrastive loss function. The progressive relaxation strategy enables more effective use of the dataset by dynamically managing sample inclusion, mitigating early noise effects, and ultimately leading to better generalization.

For example, on the CORA dataset, DCPRES outperformed CCGC’s static approach by 4.73% for the NMI, 4.77% for the ACC, 7.03% for the ARI value, and 5.89% for the F1-score, demonstrating a superior performance and robustness in clustering. Additionally, DCPRES exhibited considerably less performance fluctuation across all metrics compared to the other models, which is reflected in the lower standard deviation. This indicates that the self-paced learning mechanism is effective at reducing the uncertainty associated with training and improving the stability of the final clustering outcomes.

4.4. Ablation Experiments

As shown in Table 3, we conducted six ablation experiments on the CORA, CITE, AMAP, and BAT datasets to evaluate the contributions of GCN-Encoder, LOSS-PREi, and LOSS-PREc. By comparing the first and third groups, as well as the second and fourth groups, we observed that removing GCN-Encoder consistently led to a decline in the performance across multiple metrics. This indicates that the structural information captured by GCN-Encoder provides essential global context that complements the final loss optimization, making it a critical component for performance improvement.

Table 3.

Ablation studies of DCPRES on the CORA, CITE, AMAP, and BAT datasets.

Furthermore, when either LOSS-PREi or LOSS-PREc was excluded from DCPRES, we observed a clear degradation in the results across all datasets. This demonstrates the importance of both the instance-level and cluster-level losses: they impose complementary constraints that jointly guide the representation space toward more discriminative and consistent clustering. Overall, the complete model, which integrates GCN-Encoder, LOSS-PREi, and LOSS-PREc, achieved the best results on nearly all metrics, confirming that the combination of structural encoding and dual-level loss design is both effective and necessary.

In addition, we note that the marginal contributions of each component varied across datasets. For example, removing GCN-Encoder had little effect on CORA’s NMI (+0.04), but significantly reduced the BAT NMI (−2.52). This difference reflects the varying balance of information sources: CORA node features are relatively strong, so structural encoding adds limited gain, while BAT relies more heavily on graph connectivity, making the GCN-Encoder indispensable. Similarly, the benefits of LOSS-PREi and LOSS-PREc depend on the dataset characteristics—LOSS-PREi is more effective when local neighborhoods are noisy, while LOSS-PREc contributes more when clusters are imbalanced or diverse. These results highlight that, although all components are crucial, their relative importance is dataset-dependent.

In Table 4, we compare three threshold-selection strategies—fixed, single, and DCPRES—on CORA and AMAP. Fixed applies a hard binary cut-off at 0.7; single sweeps a single threshold from 0.5 to 1.0 to derive soft weights; and DCPRES adopts a double-threshold progressive relaxation that partitions samples into high-/medium-/low-confidence bands and gradually relaxes the inclusion criteria during training. The results showed that DCPRES consistently delivered the best scores (NMI/ACC/ARI/F1), indicating that progressively widening the acceptance region with dual thresholds achieves a better trade-off between sample purity and coverage, suppresses early-stage pseudo-label noise, and incorporates medium-confidence samples at the right time, leading to more stable optimization and a stronger clustering performance.
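The difference between the three strategies can be sketched with a single weighting function, under loudly stated assumptions: the linear ramp in the medium-confidence band, the reading of the single-threshold variant as a ramp from 0.5 to 1.0, and the names `band_weight` and `STRATEGIES` are illustrative choices and are not taken from the DCPRES implementation. The dual thresholds 0.5 and 0.8 are the default values used in the sensitivity study, and the progressive relaxation of the thresholds over training is not modeled here.

```python
def band_weight(conf, lower, upper):
    """Weight in [0, 1] for a pseudo-labeled sample with confidence `conf`.

    Assumed form (not the paper's exact formula):
      conf >= upper          -> 1.0  (high-confidence band, fully trusted)
      conf <  lower          -> 0.0  (low-confidence band, ignored)
      lower <= conf < upper  -> linear ramp (medium band, partially used)
    """
    if conf >= upper:
        return 1.0
    if conf < lower:
        return 0.0
    return (conf - lower) / (upper - lower)


# (lower, upper) pairs; equal values reduce the ramp to a hard binary cut-off.
STRATEGIES = {
    "fixed": (0.7, 0.7),   # hard cut-off at 0.7
    "single": (0.5, 1.0),  # one soft threshold, ramp up to full confidence
    "dual": (0.5, 0.8),    # two thresholds, three confidence bands
}

if __name__ == "__main__":
    for conf in (0.40, 0.65, 0.75, 0.90):
        row = {
            name: round(band_weight(conf, lo, hi), 2)
            for name, (lo, hi) in STRATEGIES.items()
        }
        print(conf, row)
```

In this sketch, a sample with confidence 0.65 is discarded outright by the fixed cut-off but still contributes with a reduced weight under the two soft schemes, which is the coverage-versus-purity trade-off discussed above.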

Table 4.

Threshold setting analysis on CORA and AMAP. Fixed: hard binary cutoff; single: single-threshold soft weighting; DCPRES: double-threshold progressive relaxation, splitting samples into high-/medium-/low-confidence bands.

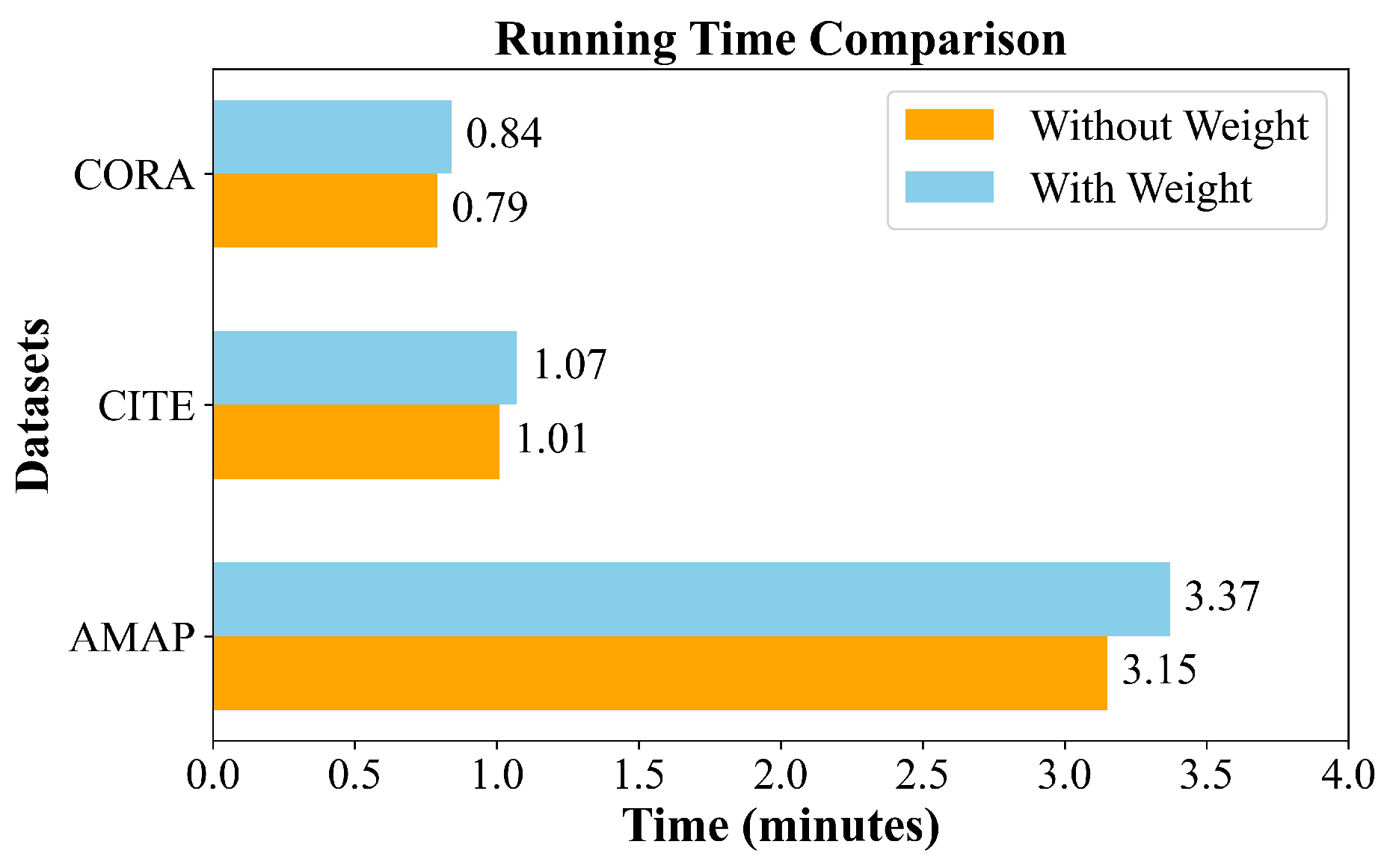

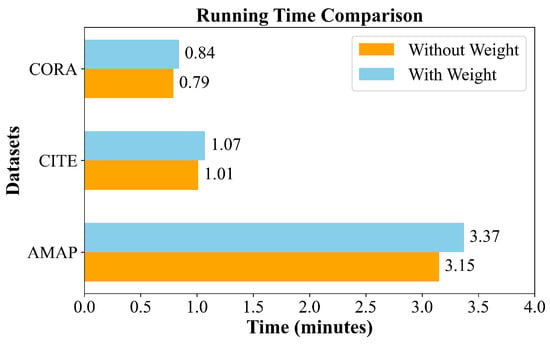

Figure 2 reports the additional time required to compute the sample weights once the progressive relaxation mechanism is incorporated. Each experiment ran for 400 training epochs, and the reported time is the average over 10 runs. Weight computation added 0.05 min, 0.06 min, and 0.22 min on the CORA, CITE, and AMAP datasets, respectively, which corresponds to an average increase of 6.42%. The overhead was small on the smaller datasets (CORA and CITE) and somewhat higher on the larger AMAP dataset, but it remains acceptable given the performance improvement. The overhead stays low because the weights are computed with matrix operations rather than element-by-element computation. The additional memory cost is also modest, since only a single weight matrix needs to be stored.

Figure 2.

Soft weight calculation time consumption analysis.

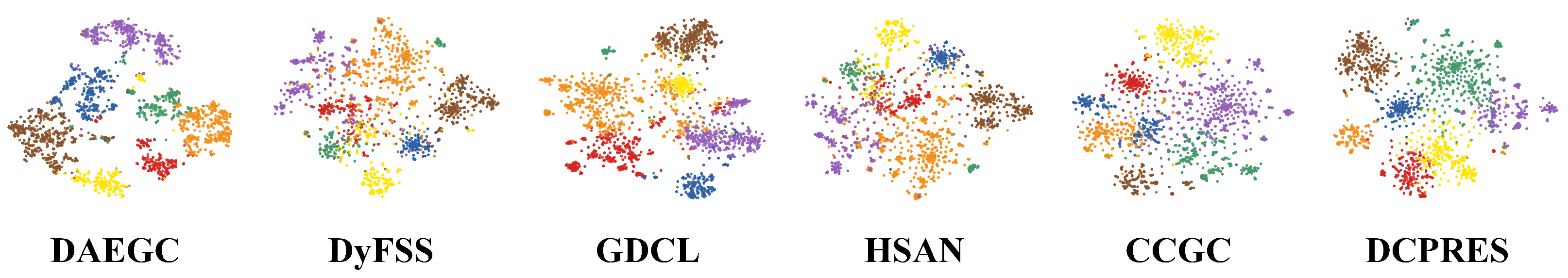

4.5. Visual Analytics

To provide a more intuitive illustration of the superiority of our proposed DCPRES model in capturing cluster structures, this section presents a visual analysis of the final node embeddings on the CORA dataset, as depicted in Figure 3. We employed the t-SNE [29] algorithm to project the high-dimensional representations into a 2D space, which allowed for a clear observation of the spatial distribution of nodes from different classes. The visualization revealed that, in comparison to other deep clustering methods, our DCPRES model carves out more distinct and semantically coherent clusters, indicating its enhanced discriminative power and ability to maintain semantic consistency.

Figure 3.

2D visualization of the compared models on the CORA dataset.

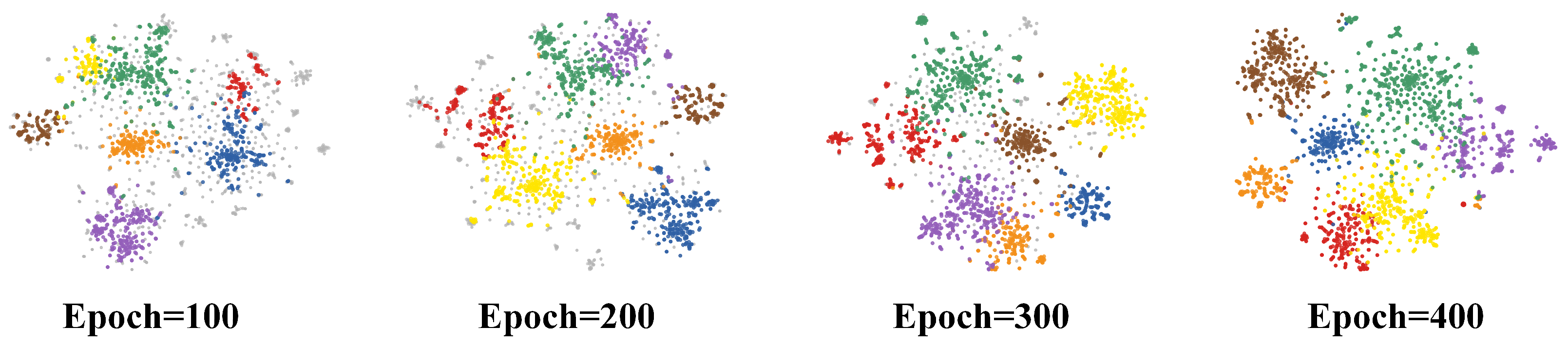

Next, we present a visualization of the clustering results from DCPRES on the CORA dataset in Figure 4. For this, we again employed the t-SNE method to project the node embeddings into a 2D space at four different training milestones: the 100th, 200th, 300th, and 400th epochs. In these plots, low-confidence samples that have not yet been incorporated into the training are colored gray. The remaining nodes, which are actively part of the training, are colored according to their assigned cluster (seven distinct colors for seven classes). The evolution of the distributions across these stages clearly shows that DCPRES initially leverages high-confidence samples for optimization. It then progressively incorporates samples that were previously difficult to classify, ultimately encompassing the entire dataset. This “easy-to-hard” guided learning process effectively showcases the dynamic and adaptive nature of our proposed sample selection strategy in DCPRES.
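The gray-versus-colored split in such plots can be mimicked with a small sketch. Everything here is an assumption made for illustration: the linear relaxation schedule, the start and end values, the function names, and the synthetic confidences, which are drawn once at random and held fixed, whereas in real training they would change as the embeddings improve. The sketch only shows how a relaxing inclusion threshold makes the set of active samples grow until it covers the whole dataset.

```python
import random


def inclusion_threshold(epoch, total_epochs=400, start=0.8, end=0.0):
    """Confidence required to join training, relaxed linearly over epochs.

    The linear form and the start/end values are assumptions of this sketch.
    """
    progress = min(max(epoch / total_epochs, 0.0), 1.0)
    return start + (end - start) * progress


def active_mask(confidences, epoch):
    """True for samples already used in training, False for 'gray' samples."""
    threshold = inclusion_threshold(epoch)
    return [c >= threshold for c in confidences]


# Synthetic confidences in [0, 1); a stand-in for real pseudo-label scores.
rng = random.Random(0)
confidences = [rng.random() for _ in range(1000)]

if __name__ == "__main__":
    for epoch in (100, 200, 300, 400):
        mask = active_mask(confidences, epoch)
        print(
            f"epoch {epoch}: threshold={inclusion_threshold(epoch):.2f}, "
            f"active={sum(mask)}, gray={len(mask) - sum(mask)}"
        )
```

When plotting, the mask would select which points receive their cluster color and which are drawn in gray.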

Figure 4.

2D visualization of the iterative process on the CORA dataset.

4.6. Hyperparameter Analysis

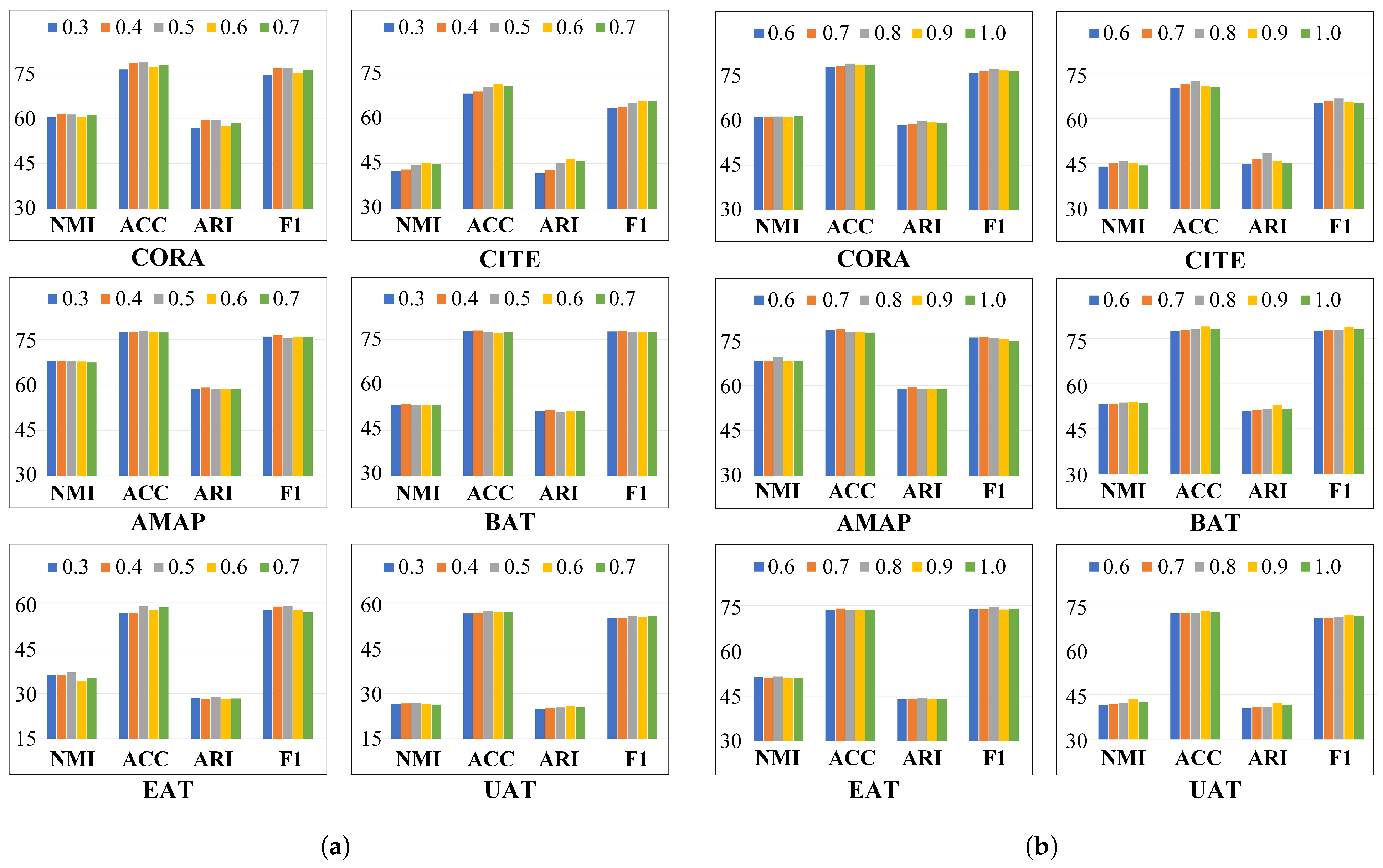

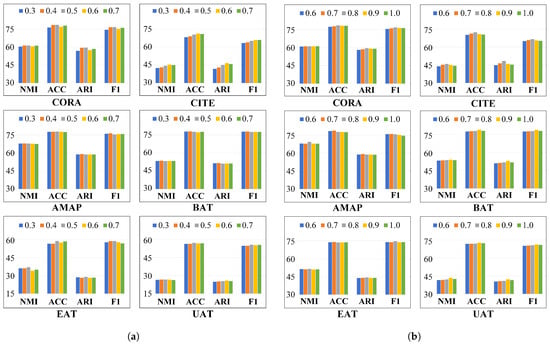

To further investigate the influence of the two critical threshold parameters of DCPRES, namely the lower and upper confidence thresholds, we conducted a hyperparameter sensitivity analysis. This study examined the performance trends under varying sample selection intensities. These parameters govern the confidence assignment for each sample, thereby dictating whether a pseudo-labeled sample is fully trusted, partially utilized, or entirely discarded. To systematically quantify the effects of these thresholds, we designed two sets of sensitivity experiments across six datasets, including CORA.

In the first experimental set, we fixed the upper threshold at 0.8 and varied the lower threshold from 0.3 to 0.7 in increments of 0.1. In the second set, the lower threshold was held constant at 0.5, while the upper threshold ranged from 0.6 to 1.0, also in increments of 0.1. For each experiment, we assessed the performance using four standard clustering metrics (ACC, NMI, ARI, and F1) and visualized the results as bar charts to illustrate the impact of parameter changes, as detailed in Figure 5a,b.
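The two one-at-a-time sweeps can be enumerated as follows. This is a minimal sketch: the keys `lower` and `upper` and the helper `frange` are hypothetical names introduced here, and the training and evaluation call that would consume each configuration is deliberately left out.

```python
def frange(start, stop, step):
    """Inclusive float range, rounded to avoid accumulated floating-point error."""
    n = round((stop - start) / step)
    return [round(start + i * step, 10) for i in range(n + 1)]


# Set 1: upper threshold fixed at 0.8, lower threshold varied from 0.3 to 0.7.
lower_sweep = [{"lower": v, "upper": 0.8} for v in frange(0.3, 0.7, 0.1)]

# Set 2: lower threshold fixed at 0.5, upper threshold varied from 0.6 to 1.0.
upper_sweep = [{"lower": 0.5, "upper": v} for v in frange(0.6, 1.0, 0.1)]

if __name__ == "__main__":
    for config in lower_sweep + upper_sweep:
        # A real study would train and evaluate the model once per config.
        print(config)
```

Each sweep yields five configurations per dataset, and every configuration keeps the lower threshold strictly below the upper one, so the medium-confidence band is never empty.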

Figure 5.

Hyperparameter sensitivity analysis of the DCPRES model. (a) Performance variation across four metrics with different values of the lower threshold, while the upper threshold is fixed at 0.8. (b) Performance variation with different values of the upper threshold, while the lower threshold is fixed at 0.5. Results are shown for the six datasets.

The results from the first experimental set reveal that the model generally attained a superior performance when the lower threshold was set to 0.5 or 0.6. This suggests that the lower threshold significantly influences the quality of pseudo-label filtering and the initial construction of cluster centroids. On the CITE dataset, for instance, a small lower threshold (e.g., 0.3) limited the number of high-confidence samples for initialization, resulting in less representative centroids and compromising the stability and accuracy of subsequent training. As the lower threshold increased, more high-confidence samples were included in the initialization, leading to an improved clustering performance. However, an excessively high lower threshold (e.g., 0.7) introduced noisy pseudo-labels, which diminished the purity of the initial centroids and adversely affected the final results.

In the second set of experiments, as the upper threshold increased, the model performance first improved and then declined. On the CORA dataset, for example, all four metrics peaked when the upper threshold was approximately 0.8. This indicates that a moderate value enables the model to effectively filter out highly uncertain pseudo-labels while retaining high-confidence ones, thus boosting the overall clustering performance. Conversely, as the upper threshold approaches the upper end of the tested range, the model effectively degenerates to a hard-thresholding approach, using only the highest-confidence samples and discarding potentially useful information, which results in a slight performance drop. While other datasets showed similar trends, the optimal value varied, reflecting the different data distributions and showing that the model's sensitivity to the upper threshold is dataset-dependent.

These two sets of sensitivity experiments confirm that the dual-threshold design (a lower and an upper confidence threshold) of our self-paced learning mechanism is crucial for effective pseudo-label filtering and performance enhancement in DCPRES. A well-chosen lower threshold is instrumental for constructing high-quality initial cluster centroids, while a judiciously set upper threshold improves the model’s adaptability to samples with varying confidence levels. Together, they validate the effectiveness and robustness of our self-paced sample selection strategy.

5. Conclusions

In this paper, we proposed DCPRES. By dynamically assigning weights to samples, this model enables the more effective construction of positive and negative pairs as well as more coherent cluster structures. Our framework integrates both the attribute and structural information of nodes, and employs dual contrastive learning modules—at the instance and cluster levels—guided by a self-paced strategy. This progressive learning process, moving from high-confidence to medium- and low-confidence samples, significantly improves the robustness and clustering performance. The experimental results on multiple public datasets demonstrate that DCPRES achieved superior results, exhibiting a strong stability and generalization.

Nevertheless, we acknowledge certain limitations of our work. In particular, selecting the optimal values of the hyperparameters (such as the two confidence thresholds) currently requires manual tuning. While this approach is manageable for small- and medium-scale datasets, it becomes time-consuming and computationally demanding for larger datasets, which limits the efficiency and scalability of the method. To address this issue, we plan to investigate parameter self-adjustment mechanisms in future research. For instance, adaptive search strategies or meta-learning techniques could allow the model to determine suitable parameter values dynamically during training, thereby reducing manual intervention and improving efficiency. Moreover, we intend to explore additional optimization strategies and regularization methods to further improve the generalization and practical applicability of the model.

Author Contributions

Conceptualization, X.Q.; methodology, X.Q., L.P., and C.Y.; software, L.P.; validation, X.Q., L.P., and Z.Q.; data curation, Z.Q.; writing—original draft preparation, X.Q.; writing—review and editing, X.Q.; visualization, L.P. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Science and Technology of the People’s Republic of China, under grant No. STI2030-Major Projects 2021ZD0201900, and the Key Project of Science and Technology of Guangxi (Grant No. AB25069247).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this study are openly available. A comprehensive list of these datasets and access methods can be found at https://github.com/yueliu1999/Awesome-Deep-Graph-Clustering, accessed on 1 September 2025.

Acknowledgments

The authors would like to express their gratitude to all the experts who provided valuable suggestions and support for this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, Y.; Xia, J.; Zhou, S.; Yang, X.; Liang, K.; Fan, C.; Zhuang, Y.; Li, S.Z.; Liu, X.; He, K. A survey of deep graph clustering: Taxonomy, challenge, application, and open resource. arXiv 2022, arXiv:2211.12875.

- Ren, Y.; Pu, J.; Yang, Z.; Xu, J.; Li, G.; Pu, X.; Yu, P.S.; He, L. Deep clustering: A comprehensive survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 5858–5878.

- Pan, S.; Hu, R.; Fung, S.; Long, G.; Jiang, J.; Zhang, C. Learning graph embedding with adversarial training methods. IEEE Trans. Cybern. 2019, 50, 2475–2487.

- Qin, X.; Yuan, C.; Jiang, J.; Chen, L. Deep semi-supervised clustering based on pairwise constraints and sample similarity. Pattern Recognit. Lett. 2024, 178, 1–6.

- Hong, S.; Yue, T.; You, Y.; Lv, Z.; Tang, X.; Hu, J.; Yin, H. A Resilience Recovery Method for Complex Traffic Network Security Based on Trend Forecasting. Int. J. Intell. Syst. 2025, 2025, 3715086.

- Jaiswal, A.; Babu, A.R.; Zadeh, M.Z.; Banerjee, D.; Makedon, F. A survey on contrastive self-supervised learning. Technologies 2020, 9, 2.

- Hassani, K.; Khasahmadi, A.H. Contrastive multi-view representation learning on graphs. In Proceedings of the International Conference on Machine Learning, Virtual Event, 13–18 July 2020.

- Yang, X.; Liu, Y.; Zhou, S.; Wang, S.; Tu, W.; Zheng, Q.; Liu, X.; Fang, L.; Zhu, E. Cluster-guided contrastive graph clustering network. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023.

- Liu, Y.; Yang, X.; Zhou, S.; Liu, X.; Wang, Z.; Liang, K.; Tu, W.; Li, L.; Duan, J.; Chen, C. Hard sample aware network for contrastive deep graph clustering. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023.

- Li, Y.; Hu, P.; Liu, Z.; Peng, D.; Zhou, J.T.; Peng, X. Contrastive clustering. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021.

- Dang, Z.; Deng, C.; Yang, X.; Wei, K.; Huang, H. Nearest neighbor matching for deep clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual Event, 19–25 June 2021.

- Huang, D.; Deng, X.; Chen, D.H.; Wen, Z.; Sun, W.; Wang, C.D.; Lai, J.H. Deep clustering with hybrid-grained contrastive and discriminative learning. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 9472–9483.

- Yong, Q.; Chen, C.; Zhou, X. Contrastive Learning Subspace for Text Clustering. arXiv 2024, arXiv:2408.14119.

- Chen, M.; Wang, B.; Li, X. Deep contrastive graph learning with clustering-oriented guidance. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024.

- Kulatilleke, G.K.; Portmann, M.; Chandra, S.S. SCGC: Self-supervised contrastive graph clustering. Neurocomputing 2025, 611, 128629.

- van der Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

- Zhang, X.; Xu, H.; Zhu, X.; Chen, Y. Deep contrastive clustering via hard positive sample debiased. Neurocomputing 2024, 570, 127147.

- Kumar, M.; Packer, B.; Koller, D. Self-paced learning for latent variable models. Adv. Neural Inf. Process. Syst. 2010, 23, 1189–1197.

- Gu, N.N.; Sun, X.N.; Liu, W.; Li, L. Semi-supervised classification method based on self-paced learning and sparse self-expression. Syst. Sci. Math. 2020, 40, 191–208.

- Jiang, Y.Y.; Tao, C.F.; Li, P. Deep subspace clustering algorithm with data augmentation and adaptive self-paced learning. Comput. Eng. 2023, 49, 96–103.

- Zhang, C.; Fan, W.; Wang, B.; Chen, C.; Li, H. Self-paced semi-supervised feature selection with application to multi-modal Alzheimer’s disease classification. Inf. Fusion 2024, 107, 102345.

- Wang, C.; Pan, S.; Hu, R.; Long, G.; Jiang, J.; Zhang, C. Attributed graph clustering: A deep attentional embedding approach. arXiv 2019, arXiv:1906.06532.

- Zhu, P.; Wang, Q.; Wang, Y.; Li, J.; Hu, Q. Every node is different: Dynamically fusing self-supervised tasks for attributed graph clustering. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 17184–17192.

- Zhang, J.; Peng, H.; Sun, L.; Wu, G.; Liu, C.; Yu, Z. Unsupervised graph clustering with deep structural entropy. In Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining, V.2, Toronto, ON, Canada, 3–7 August 2025; pp. 3752–3763.

- Zhao, H.; Yang, X.; Wang, Z.; Yang, E.; Deng, C. Graph debiased contrastive learning with joint representation clustering. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Montreal, QC, Canada, 21–26 August 2021.

- Liu, Y.; Li, J.; Chen, Y.; Wu, R.; Wang, E.; Zhou, J.; Chen, L. Revisiting modularity maximization for graph clustering: A contrastive learning perspective. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Barcelona, Spain, 25–29 August 2024; pp. 1968–1979.

- Wan, X.; Liu, J.; Liang, W.; Liu, X.; Wen, Y.; Zhu, E. Continual multi-view clustering. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022.

- Liu, Y.; Yang, X.; Zhou, S.; Liu, X.; Wang, S.; Liang, K.; Tu, W.; Li, L. Simple contrastive graph clustering. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 13789–13800.

- Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).