A Uniform Multi-Modal Feature Extraction and Adaptive Local–Global Feature Fusion Structure for RGB-X Marine Animal Segmentation

Abstract

1. Introduction

- We propose a novel feature learning architecture, the Uniform Multi-Modal Feature Extraction and Adaptive Local–Global Feature Fusion Structure, which improves marine animal segmentation by integrating RGB information with an auxiliary modality (X).

- In the decoder, we introduce the Adaptive Local–Global Feature Fusion (ALGFF) module, which combines the strengths of CNNs and Transformers. It performs multi-modal and multi-scale feature fusion in a simple, consistent, and dynamic way.

- Experimental results on three datasets demonstrate the efficiency and effectiveness of the proposed method, which achieves state-of-the-art performance.
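To make the idea of "dynamic" local–global fusion in the second contribution concrete, the following is a minimal sketch, not the authors' ALGFF module. It assumes two equal-length feature vectors (one from a local branch, one from a global branch) and a softmax gate over their pooled activations. The function names `softmax` and `adaptive_fuse` and the parameters `gate_w` and `gate_b` are hypothetical stand-ins for learned components.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_fuse(local_feat, global_feat, gate_w=(1.0, 1.0), gate_b=(0.0, 0.0)):
    """Fuse two feature vectors with input-dependent branch weights.

    gate_w and gate_b are hypothetical gate parameters; in a trained
    network they would be learned rather than fixed.
    """
    if len(local_feat) != len(global_feat):
        raise ValueError("both branches must have the same length")
    # Summarise each branch by its mean activation (global average pooling).
    pooled = [
        sum(local_feat) / len(local_feat),
        sum(global_feat) / len(global_feat),
    ]
    # One scalar score per branch, normalised so the two weights sum to 1.
    scores = [w * p + b for w, p, b in zip(gate_w, pooled, gate_b)]
    w_local, w_global = softmax(scores)
    fused = [w_local * l + w_global * g for l, g in zip(local_feat, global_feat)]
    return fused, (w_local, w_global)


if __name__ == "__main__":
    # Branches with the same mean activation receive equal weights.
    fused, weights = adaptive_fuse([1.0, 3.0], [3.0, 1.0])
    print(fused, weights)  # [2.0, 2.0] (0.5, 0.5)
```

Setting `gate_w=(0.0, 0.0)` makes the weights a fixed 0.5 each regardless of the input, which corresponds in spirit to an equal-weight fusion baseline; the gated version lets the weights vary with the features.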

2. Related Work

2.1. Marine Animal Segmentation

2.2. RGB+X Object Detection

3. Proposed Method

3.1. Parallel Feature Extraction

3.2. Adaptive Local–Global Feature Fusion

3.3. Progressive Prediction

4. Experiments

4.1. Datasets and Evaluation Metrics

4.2. Implementation Details

4.3. Comparison with the State of the Art

4.4. Ablation Studies

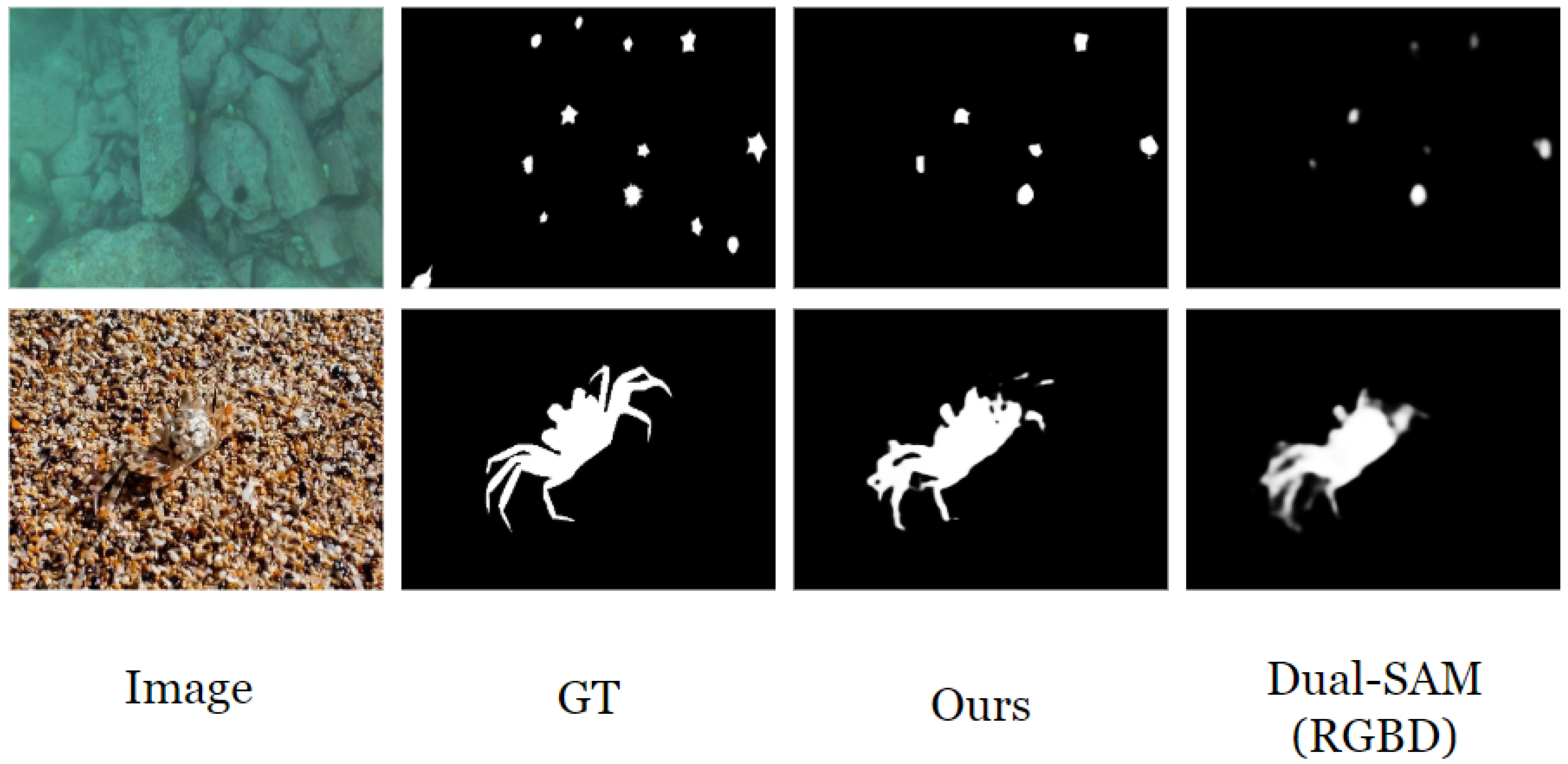

4.5. Failure Cases

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Method | Type | MAE | | | | Total Params | Trainable Params | FLOPs |
|---|---|---|---|---|---|---|---|---|---|
| RMAs | MAS-SAM | RGB | 0.0229 | 0.8652 | 0.8561 | 0.9442 | 98.41 M | 11.13 M | 141.03 G |
| | AFNet | RGB-D | 0.0263 | 0.8527 | 0.8366 | 0.9335 | 254.45 M | 254.45 M | 128.30 G |
| | iGAN | RGB-D | 0.0258 | 0.8589 | 0.8464 | 0.9325 | 87.32 M | 87.32 M | 47.72 G |
| | TFL-Net | RGB-D | 0.0243 | 0.8545 | 0.8437 | 0.9426 | 201.45 M | 201.32 M | 54.32 G |
| | TC-USOD | RGB-D | 0.0237 | 0.8448 | 0.8412 | 0.9230 | 125.96 M | 125.81 M | 29.64 G |
| | Dual-SAM | RGB-D | 0.0257 | 0.8614 | 0.8400 | 0.9360 | 159.95 M | 74.30 M | 325.68 G |
| | Dual-SAM | RGB-G | 0.0220 | 0.8609 | 0.8550 | 0.9424 | 159.95 M | 74.30 M | 325.68 G |
| | Ours | RGB-D | 0.0221 | 0.8769 | 0.8633 | 0.9464 | 98.59 M | 12.45 M | 252.11 G |
| Mask3K | MAS-SAM | RGB | 0.0258 | 0.8748 | 0.8829 | 0.9358 | 98.41 M | 11.13 M | 141.03 G |
| | AFNet | RGB-D | 0.0334 | 0.8426 | 0.8581 | 0.9089 | 254.45 M | 254.45 M | 128.30 G |
| | iGAN | RGB-D | 0.0288 | 0.8580 | 0.8654 | 0.9128 | 87.32 M | 87.32 M | 47.72 G |
| | TFL-Net | RGB-D | 0.0294 | 0.8501 | 0.8623 | 0.9174 | 201.45 M | 201.32 M | 54.32 G |
| | TC-USOD | RGB-D | 0.0343 | 0.8229 | 0.8470 | 0.9015 | 125.96 M | 125.81 M | 29.64 G |
| | Dual-SAM | RGB-D | 0.0306 | 0.8705 | 0.8703 | 0.9205 | 159.95 M | 74.30 M | 325.68 G |
| | Dual-SAM | RGB-G | 0.0252 | 0.8756 | 0.8821 | 0.9330 | 159.95 M | 74.30 M | 325.68 G |
| | Ours | RGB-D | 0.0263 | 0.8857 | 0.8863 | 0.9325 | 98.59 M | 12.45 M | 252.11 G |
| DeepFish | MAS-SAM | RGB | 0.0092 | 0.8646 | 0.8783 | 0.9538 | 98.41 M | 11.13 M | 141.03 G |
| | TFL-Net | RGB-O | 0.0070 | 0.8893 | 0.8923 | 0.9615 | 201.45 M | 201.32 M | 54.32 G |
| | TC-USOD | RGB-O | 0.0070 | 0.8835 | 0.8959 | 0.9546 | 125.96 M | 125.81 M | 29.64 G |
| | Dual-SAM | RGB-O | 0.0077 | 0.8783 | 0.8904 | 0.9618 | 159.95 M | 74.30 M | 325.68 G |
| | Dual-SAM | RGB-G | 0.0086 | 0.8558 | 0.8727 | 0.9523 | 159.95 M | 74.30 M | 325.68 G |
| | Ours | RGB-O | 0.0062 | 0.8964 | 0.9016 | 0.9730 | 98.59 M | 12.45 M | 252.11 G |
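The MAE column in the comparison above can be read with the following minimal sketch, which assumes the usual definition of mean absolute error for segmentation: the per-pixel absolute difference between a predicted map in [0, 1] and a binary ground-truth mask, averaged over all pixels. The function name `mean_absolute_error` and the toy 2×2 inputs are illustrative and are not taken from the paper's evaluation code.

```python
def mean_absolute_error(pred, gt):
    """Mean absolute error between two 2D maps given as nested lists.

    pred holds predicted foreground probabilities in [0, 1];
    gt holds the ground-truth mask (0 = background, 1 = foreground).
    """
    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        if len(pred_row) != len(gt_row):
            raise ValueError("prediction and mask rows differ in length")
        for p, g in zip(pred_row, gt_row):
            total += abs(p - g)
            count += 1
    if count == 0:
        raise ValueError("empty input")
    return total / count


if __name__ == "__main__":
    # Toy example: per-pixel errors are about 0.1, 0.1, 0.2 and 0.2.
    pred = [[0.9, 0.1], [0.2, 0.8]]
    gt = [[1, 0], [0, 1]]
    print(round(mean_absolute_error(pred, gt), 4))  # 0.15
```

Lower values are better for MAE, since a perfect prediction gives 0.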

| Method | MAS-SAM | AFNet | iGAN | TFL-Net | TC-USOD | Dual-SAM | Ours |
|---|---|---|---|---|---|---|---|
| FPS (it/s) | 15.17 | 18.65 | 33.13 | 19.58 | 31.81 | 9.91 | 11.03 |

| Method | MAE | | | | Total Params | Trainable Params | FLOPs |
|---|---|---|---|---|---|---|---|
| RGB | 0.0225 | 0.8752 | 0.8605 | 0.9439 | 95.00 M | 11.43 M | 138.99 G |
| RGB+depth (single) | 0.0235 | 0.8693 | 0.8592 | 0.9428 | 95.00 M | 11.51 M | 139.00 G |
| Ours | 0.0221 | 0.8769 | 0.8633 | 0.9464 | 98.59 M | 12.45 M | 252.11 G |

| Method | MAE | Total Params | Trainable Params | FLOPs | |||

|---|---|---|---|---|---|---|---|

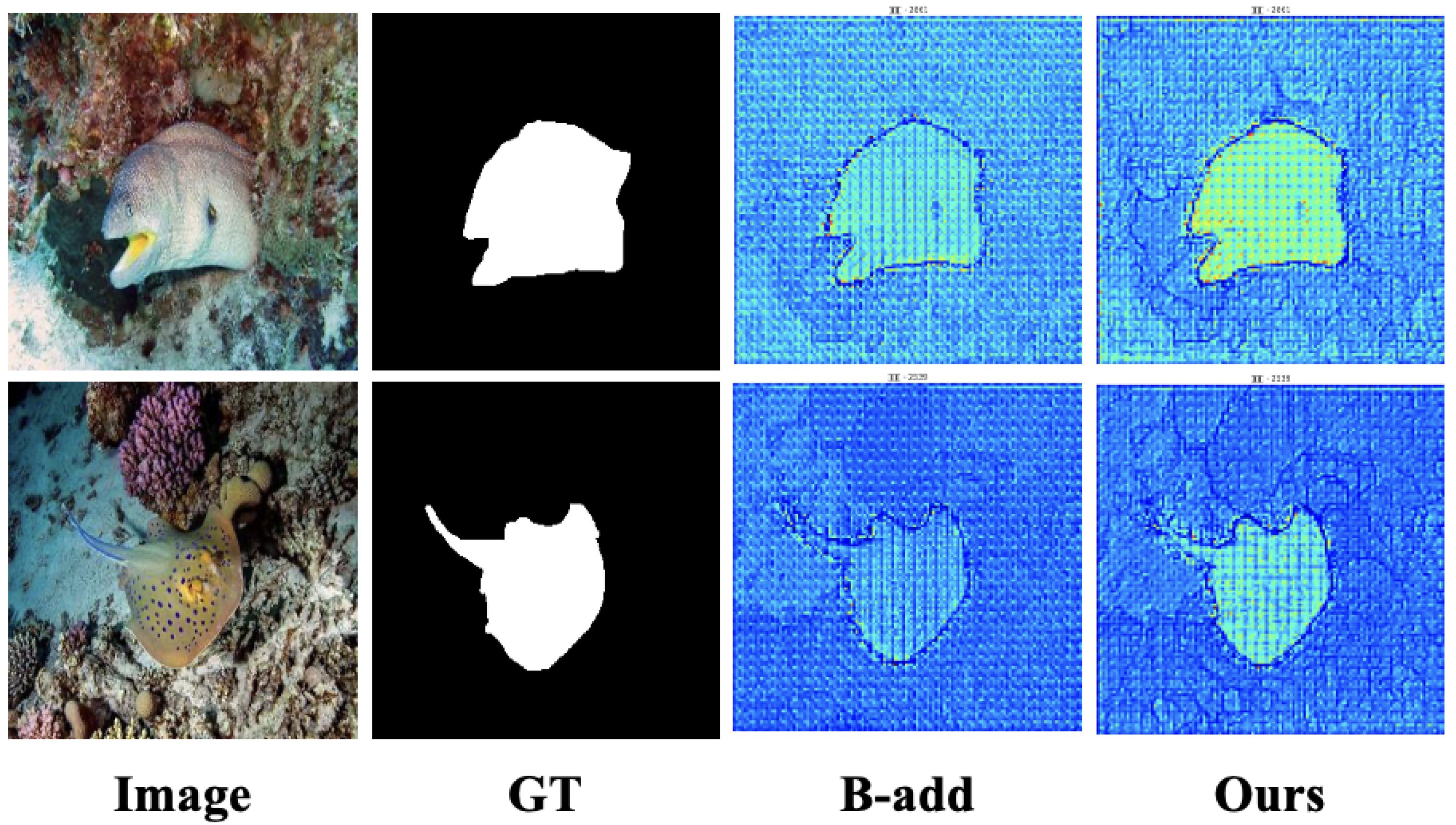

| B-add | 0.0225 | 0.8752 | 0.8578 | 0.9433 | 97.83 M | 11.69 M | 243.39 G |

| B-concat | 0.0222 | 0.8719 | 0.8608 | 0.9388 | 97.96 M | 11.74 M | 243.93 G |

| multi-scale | 0.0220 | 0.8724 | 0.8573 | 0.9457 | 98.31 M | 12.23 M | 248.78 G |

| FAM | 0.0228 | 0.8739 | 0.8584 | 0.9450 | 98.39 M | 12.40 M | 244.92 G |

| Ours | 0.0221 | 0.8769 | 0.8633 | 0.9464 | 98.59 M | 12.45 M | 252.11 G |

| Method | MAE | | | | Total Params | Trainable Params | FLOPs |
|---|---|---|---|---|---|---|---|
| B-add | 0.0225 | 0.8752 | 0.8578 | 0.9433 | 97.83 M | 11.69 M | 243.39 G |
| local-branch | 0.0222 | 0.8782 | 0.8607 | 0.9439 | 98.20 M | 12.16 M | 246.62 G |
| Equal-weight | 0.0224 | 0.8740 | 0.8602 | 0.9468 | 98.59 M | 12.44 M | 252.06 G |
| Ours | 0.0221 | 0.8769 | 0.8633 | 0.9464 | 98.59 M | 12.45 M | 252.11 G |

| Method | MAE | | | | Total Params | Trainable Params | FLOPs |
|---|---|---|---|---|---|---|---|
| B-add | 0.0073 | 0.8789 | 0.8906 | 0.9615 | 97.83 M | 11.69 M | 243.39 G |
| local-branch | 0.0064 | 0.8907 | 0.8974 | 0.9664 | 98.20 M | 12.16 M | 246.62 G |
| Equal-weight | 0.0069 | 0.8872 | 0.8957 | 0.9686 | 98.57 M | 12.44 M | 252.06 G |
| Ours | 0.0062 | 0.8964 | 0.9016 | 0.9730 | 98.59 M | 12.45 M | 252.11 G |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, Y.; Gao, Y.; Wang, Y.; Wang, Y.; Yu, H.; Lin, Y. A Uniform Multi-Modal Feature Extraction and Adaptive Local–Global Feature Fusion Structure for RGB-X Marine Animal Segmentation. Electronics 2025, 14, 3927. https://doi.org/10.3390/electronics14193927
