4.1. Data Acquisition and Preprocessing

To comprehensively evaluate the performance and generalization capabilities of the proposed multi-source, multi-domain ensemble learning sentiment analysis model, we selected five representative datasets covering a variety of typical scenarios, including food delivery, hotels, film and television, e-commerce, and medical reviews. These datasets vary significantly in scale, source, and text features, ensuring a diverse data source while highlighting the complexity and challenges of cross-domain sentiment analysis.

- (1) Waimai_10k Takeaway Review Dataset: This dataset contains real user reviews of food and beverage takeaway orders, with an original size of 11,987 reviews (4000 positive and 7987 negative). This study obtained the dataset from the ChineseNlpCorpus repository on GitHub (URL: https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/waimai_10k/waimai_10k.csv (accessed on 23 April 2025)). To improve data quality, a systematic cleaning process was performed, including the removal of invalid reviews, duplicate text, and redundant symbols. After processing, 11,604 valid reviews (3824 positive and 7780 negative) were retained, providing a reliable data foundation for the model’s sentiment recognition in the food and beverage consumption scenario.

- (2) ChnSentiCorp_htl_all Hotel Review Dataset: This dataset contains 7766 hotel user reviews (5322 positive and 2444 negative) and is a classic corpus in Chinese sentiment analysis research. The data, compiled by Professor Tan Songbo on the basis of Ctrip reviews, are available on ChineseNlpCorpus (URL: https://github.com/SophonPlus/ChineseNlpCorpus/blob/master/datasets/ChnSentiCorp_htl_all/ChnSentiCorp_htl_all.csv (accessed on 23 April 2025)). To ensure the validity of the experiment, we cleaned the raw data by removing HTML tags, redundant spaces, duplicate comments, and excessively short text. The resulting dataset contained 7758 high-quality reviews (5318 positive and 2440 negative), providing data support for validating the cross-domain sentiment model’s applicability to the travel and service industries.

- (3) Douban Movie Short Comments: This dataset is large in scale, with more than 2.12 million original comments covering a variety of semantic expressions and language styles. It is publicly released on the Kaggle community under the name “Douban Movie Short Comments” and can be retrieved and downloaded on the platform (URL: https://www.kaggle.com/datasets/utmhikari/doubanmovieshortcomments?select=DMSC.csv (accessed on 4 October 2025)). To keep the binary classification experiment balanced, this paper randomly selected reviews with ratings of 5 stars and 1 star as positive and negative samples, respectively, and after cleaning and screening obtained 20,000 high-quality short comments. This dataset is highly representative in terms of sentiment polarity and language differences, providing an ideal scenario for evaluating the generalization ability of the model on large-scale unstructured text.

- (4) Alibaba Cloud Tianchi Multi-Domain Comment Dataset (ALY_ds): This dataset was sourced from the Alibaba Cloud Tianchi competition platform. It encompasses four distinct product domains (mobile phones, cars, cameras, and notebooks) and is characterized by its cross-product and multi-context nature. The corpus for this study was acquired by querying the platform using the keywords “ALY_ds” and “Multi-Domain Comment Data,” followed by the integration of the corresponding datasets from these four domains. The dataset for each domain comprises two separate files: one containing the raw review texts and the other containing the corresponding sentiment labels. The complete dataset therefore consists of eight files (4 domains × 2 file types). The mapping between the file numbers (S1–S8) in the Supplementary Materials and the specific files is as follows: S1 (mobile_phone_sentence), S2 (mobile_phone_label), S3 (car_sentence), S4 (car_label), S5 (camera_sentence), S6 (camera_label), S7 (notebook_sentence), and S8 (notebook_label). To ensure data integrity, this study conducted systematic preprocessing, including text cleaning, deduplication, and invalid comment filtering. Ultimately, 6582 comments were retained. Their multi-domain attributes provide experimental support for exploring the performance of multi-source sentiment analysis models in domain transfer and feature fusion.

- (5) Chinese Medical Review Dataset (Lung_ds, self-constructed): This dataset was independently constructed in this study and aims to evaluate the performance of sentiment analysis models in the medical field, especially in scenarios related to lung diseases. Specifically, using keyword screening, the research team extracted 2796 Q&A records related to lung diseases from the CHIP2020 medical question-and-answer dataset (URL: https://ai-studio-online.bj.bcebos.com/v1/2e0a232cfa4b4ef6bfcbdd4d82f2e12bae914019263e4b71afdd5c48ed6105bb?responseContentDisposition=attachment%3Bfilename%3DRAG-%E7%B2%BE%E9%80%89%E5%8C%BB%E7%96%97%E9%97%AE%E7%AD%94_onecolumn_80k.csv (accessed on 5 October 2025)) and collected 2171 real user reviews from the respiratory medicine and thoracic surgery pages of the “Good Doctor Online” platform. After manual cleaning, deduplication, and invalid text removal, 3337 medical review texts were finally obtained. To cope with the large number of professional terms in medical texts, this paper uses the pause medical field word segmentation model for segmentation and combines it with a self-constructed medical term dictionary for vocabulary normalization. For sentiment annotation, multi-source dictionaries such as NTUSD, HowNet, and the Dalian University of Technology Sentiment Vocabulary Ontology were integrated, and sentiment polarity and intensity were combined for binary classification annotation, resulting in 2370 positive reviews and 967 negative reviews. The dataset was split into training and test sets in a 4:1 ratio to support rigorous and reproducible experiments.
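The cleaning steps listed for the datasets above (removing HTML tags, redundant whitespace and symbols, duplicate texts, and excessively short texts) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function name `clean_reviews`, the `min_len` threshold, and the regular expressions are all hypothetical choices.

```python
import re

# All patterns below are illustrative assumptions, not the paper's rules.
TAG_RE = re.compile(r"<[^>]+>")                      # crude HTML tag matcher
SPACE_RE = re.compile(r"\s+")                        # runs of whitespace
REPEAT_PUNCT_RE = re.compile(r"([!?。，！？~.,])\1+")  # repeated punctuation


def clean_reviews(rows, min_len=2):
    """Clean (text, label) pairs and drop duplicates and very short texts."""
    seen = set()
    cleaned = []
    for text, label in rows:
        text = TAG_RE.sub("", text)                  # strip HTML tags
        text = REPEAT_PUNCT_RE.sub(r"\1", text)      # collapse "！！！" to "！"
        text = SPACE_RE.sub(" ", text).strip()       # normalize whitespace
        if len(text) < min_len or text in seen:      # too short or duplicate
            continue
        seen.add(text)
        cleaned.append((text, label))
    return cleaned
```

Deduplication here is applied after normalization, so two reviews that differ only in markup or repeated punctuation count as the same text; whether the original study deduplicated before or after normalization is not stated.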

To ensure fair and reproducible experimental results, this study adopted a consistent partitioning and usage strategy across all datasets. Specifically, the five datasets (Waimai_10k, ChnSentiCorp_htl_all, Douban Movie Short Comments, ALY_ds, and Lung_ds) were all split in a training:test ratio of 8:2. A 10% portion of the training set was randomly allocated as a validation set for early stopping.
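The partitioning strategy described above (an 8:2 training:test split, with 10% of the training portion held out for validation) can be sketched with the standard library alone. The function name `split_dataset` and the use of a plain, non-stratified shuffle are assumptions; the text does not say whether the split was stratified by label.

```python
import random


def split_dataset(samples, test_ratio=0.2, val_ratio=0.1, seed=42):
    """Return (train, val, test) lists from a seeded random shuffle."""
    items = list(samples)
    rng = random.Random(seed)            # local RNG, so global state is untouched
    rng.shuffle(items)
    n_test = round(len(items) * test_ratio)
    test, train_full = items[:n_test], items[n_test:]
    n_val = round(len(train_full) * val_ratio)   # 10% of the training portion
    val, train = train_full[:n_val], train_full[n_val:]
    return train, val, test
```

With 1000 samples this yields 720 training, 80 validation, and 200 test samples, that is, 72%, 8%, and 20% of the full dataset.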

Given that this study focuses on Chinese corpora, the datasets used are all Chinese review texts. The goal is to delve into the feature distribution and model generalization performance of multi-source and multi-domain sentiment analysis in the Chinese context. It is worth noting that Chinese differs significantly from other languages (such as English and French) in terms of lexical structure, word segmentation, and emotional expression. Direct cross-language transfer often leads to semantic drift and feature mismatch. Therefore, this paper focuses on cross-domain generalization within the same language system, rather than cross-language adaptation. Although no direct cross-language experiments were conducted at this stage, the proposed ensemble learning framework has strong scalability.

To ensure the reproducibility and computational stability of the experimental results, this study completed all model training and evaluation on the AutoDL cloud server platform (URL: https://www.autodl.com/market/list (accessed on 18 January 2025)), which provides GPU-based deep learning environments. The software and hardware configurations used in the experiments are shown in Table 1.

All neural network modules (including RoBERTa-FC, BERT-BiGRU, TextCNN-Att, and the attention-based meta-learner), as well as the comparison models in Section 4.2.2 and the ablation experiments in Section 4.3, were implemented using the PyTorch 1.9.0 framework (URL: https://pytorch.org/, accessed on 18 January 2025). The models were constructed with the torch.nn and Transformers libraries (v4.30.2, URL: https://huggingface.co/transformers/, accessed on 18 January 2025) for parameter management and contextual feature encoding. The AdamW optimizer (implemented in torch.optim.AdamW), a native component of PyTorch, was employed for model optimization. Learning rate scheduling and gradient updates were managed using the mixed-precision training mechanism (torch.cuda.amp). The entire model training process was visualized and monitored via TensorBoard (v2.14.0, URL: https://www.tensorflow.org/tensorboard, accessed on 18 January 2025). To ensure consistency and comparability across experiments, all random processes use the same random seed (42), and the random number generator state is fixed.
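Fixing the random seed as described above is commonly done with a small helper such as the one below. This is a sketch, not the authors' code: the helper name `set_seed` is hypothetical, and the choice to also set the cuDNN determinism flags is an assumption about what "fixing the random number generator state" covers. NumPy and PyTorch are seeded only if they are installed.

```python
import os
import random


def set_seed(seed=42):
    """Seed every RNG available in the current environment."""
    random.seed(seed)
    # Only affects Python processes launched after this point.
    os.environ["PYTHONHASHSEED"] = str(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)            # silently ignored without CUDA
        torch.backends.cudnn.deterministic = True   # assumption: trade speed for determinism
        torch.backends.cudnn.benchmark = False
    except ImportError:
        pass
```

Calling `set_seed(42)` once at the start of each run makes data shuffling and weight initialization repeatable, although some GPU kernels can remain nondeterministic even with these settings.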

4.2. Experimental Results Analysis

4.2.1. Performance Comparison Between the Single-Base Classifier and the Ensemble Model

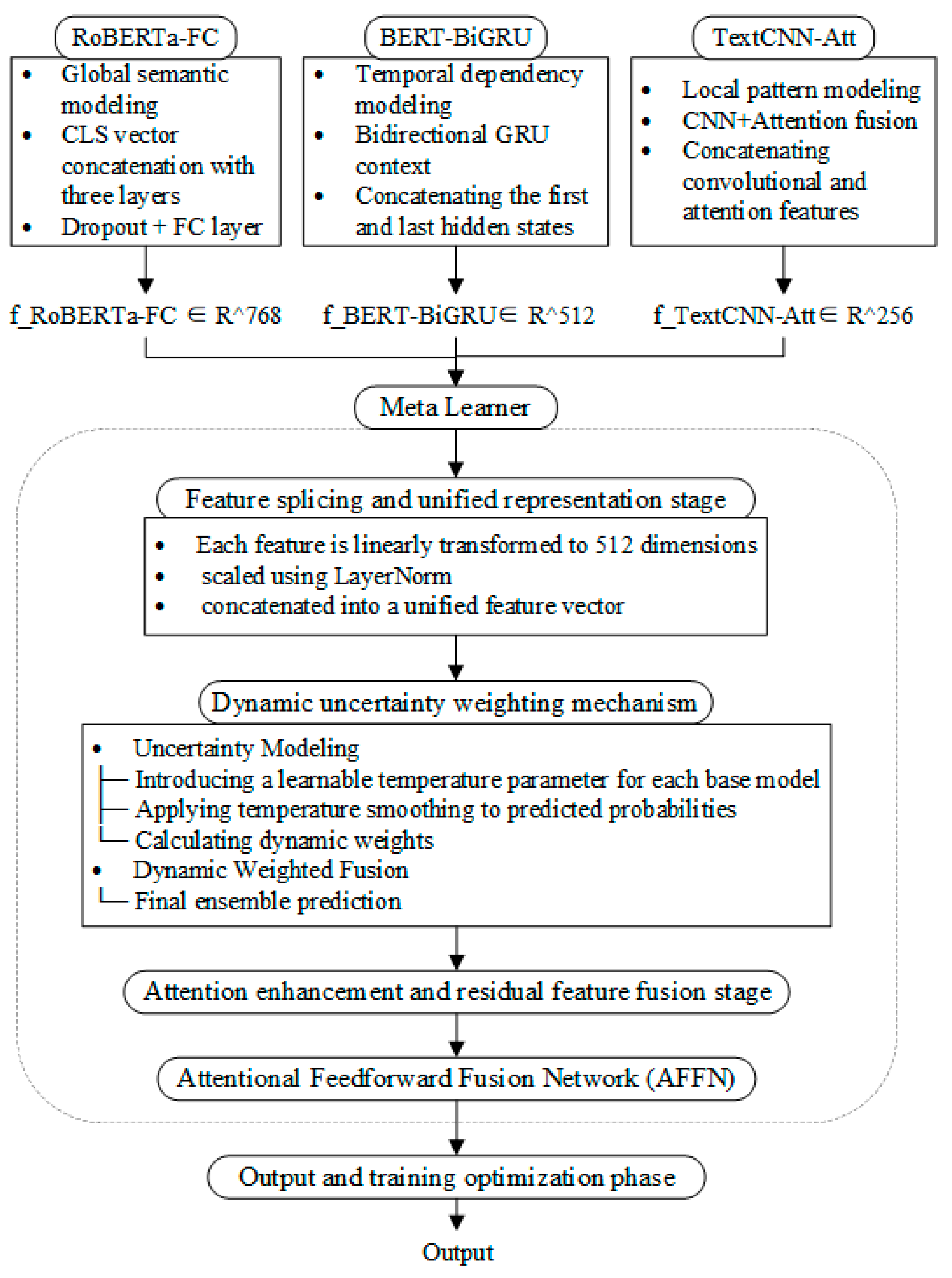

To validate the effectiveness of the proposed ensemble learning framework, we compared the performance of three base classifiers (RoBERTa-FC, BERT-BiGRU, and TextCNN-Att) with the ensemble model on five sentiment classification datasets from different sources: Waimai, Chn_hot, dmsc, AlY_ds, and Lung_ds. Table 2 shows the experimental results of each model on five metrics: Accuracy, Precision, Recall, F1 Score, and Macro-AUC. Overall, the ensemble model outperformed the single-base classifiers on all datasets, with a steady and significant improvement.
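For reference, the reported metrics can be computed for a binary task as sketched below. The function names are hypothetical, and two points are assumptions because this section does not specify them: Precision, Recall, and F1 are computed for the positive class rather than macro-averaged, and the AUC is the ordinary ROC AUC obtained from pairwise ranking of scores.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for the positive class (label 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise AUC is quadratic in the number of samples, which is acceptable for illustration; a rank-based implementation would be preferable on datasets of the sizes used here.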

On the Waimai dataset, the ensemble model achieved an Accuracy of 0.9259, an improvement of approximately 0.0112 over the best single model, RoBERTa-FC (0.9147). It also achieved an F1 Score improvement of 0.0148 and a Recall improvement of 0.0105. This demonstrates that in the relatively regular context of food delivery reviews, model fusion can better integrate the strengths of different classifiers and improve the overall recognition capabilities.

On the Chn_hot dataset, the ensemble model achieved an F1 Score of 0.9353, an improvement of 0.0278 over the best single model, BERT-BiGRU (0.9075). Furthermore, it achieved an Accuracy of 0.9111 and a Recall of 0.9379, both of which outperformed the single models. The integrated model demonstrates stronger robustness in discerning complex contexts by combining the global semantic modeling of RoBERTa-FC and the temporal feature capture of BiGRU.

On the dmsc dataset, the ensemble model achieved an Accuracy of 0.9071, significantly outperforming the single-model BERT-BiGRU (0.8706). Its F1 Score reached 0.9069, a 0.0283 improvement over RoBERTa-FC’s 0.8786. Its Macro-AUC also improved from 0.9417 for the best single-model BERT-BiGRU to 0.9724, demonstrating that the ensemble strategy can significantly enhance the model’s discrimination and generalization capabilities in multi-category and diverse corpus contexts.

On the AlY_ds dataset, the ensemble model achieved the best performance across all metrics, with an F1 Score of 0.9590, a 0.0292 improvement over the best single-model BERT-BiGRU (0.9298). Its Macro-AUC reached 0.9797, representing the largest improvement. The results show that in cross-domain data scenarios, the integrated model can effectively alleviate the overfitting and underfitting problems that may occur in the migration process of a single model.

The Lung_ds medical review dataset features sparse samples, imbalanced categories, and specialized terminology. While the performance of the individual models varied significantly (Macro-AUC ranged from 0.6169 to 0.8027), the ensemble model achieved a Macro-AUC of 0.9238, a 0.1211 improvement over the best single model, TextCNN-Att. Accuracy, Precision, and F1 Score improved by 0.1309, 0.1578, and 0.0702, respectively, demonstrating that model fusion can effectively improve the recognition of minority and difficult-to-classify samples in the medical context.

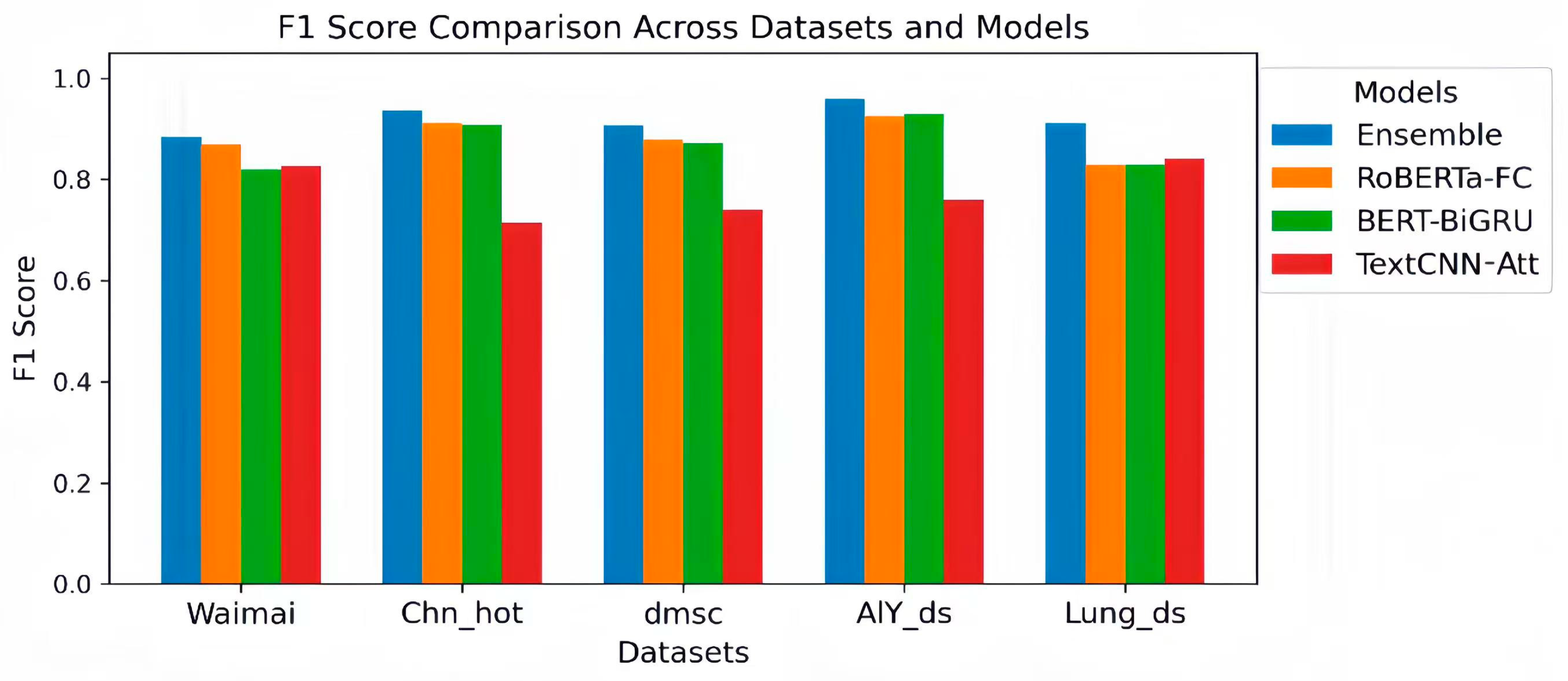

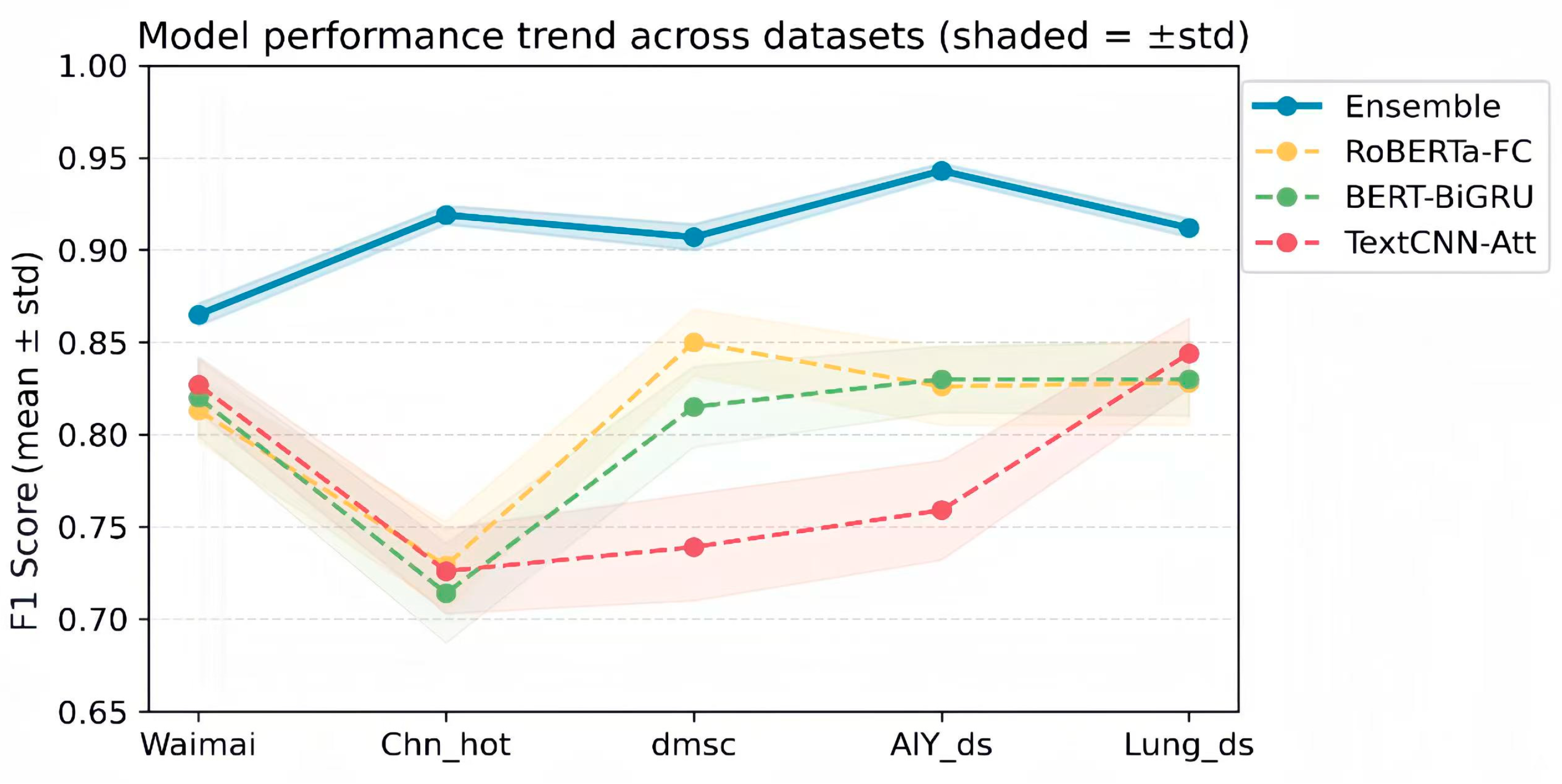

Figure 2 plots the F1 Score comparisons of the models on the five datasets. It is clear from Figure 2 that the ensemble model achieves higher F1 Scores than every single-base classifier on all five datasets. This result indicates that ensemble learning can integrate the strengths of different models, yielding enhanced stability and generalization in sentiment classification tasks.

Specifically, on the Waimai dataset, the ensemble achieved an F1 Score of 0.8844, an improvement of approximately 1.48 percentage points over the top-performing single model, RoBERTa-FC, which achieved a score of 0.8696. On the Chn_hot dataset, the ensemble achieved an F1 Score of 0.9353, 2.36 percentage points above RoBERTa-FC’s score of 0.9117 and far exceeding TextCNN-Att’s score of 0.7135, demonstrating the effectiveness of ensemble strategies in suppressing the negative effects of weak models. On the dmsc dataset, the ensemble achieved an F1 Score of 0.9069, surpassing both RoBERTa-FC and BERT-BiGRU, further demonstrating its robustness to diverse text distributions. On the AlY_ds dataset, the ensemble’s F1 Score reached 0.9590, significantly exceeding the top single-model BERT-BiGRU’s score of 0.9298. This demonstrates that even with complex datasets, ensemble models can leverage the complementary strengths of multiple models to improve the overall prediction accuracy. Even on Lung_ds, which has a smaller sample size and an uneven class distribution, the ensemble achieved an F1 Score of 0.9115, 7–8 percentage points above the individual models’ scores, which fluctuated between 0.8281 and 0.8413. This demonstrates that ensemble strategies can effectively mitigate the overfitting problem of individual models on small or imbalanced datasets.

Analysis of single models shows that RoBERTa-FC performs well on most datasets, especially on Waimai, Chn_hot, and dmsc, where its F1 Score approaches that of the ensemble. However, its performance on Lung_ds drops significantly, indicating its limited adaptability to small or noisy data. BERT-BiGRU slightly outperforms RoBERTa-FC in F1 Score on AlY_ds and Lung_ds, reflecting the potential of recurrent networks in handling sequential dependencies and the semantics of long texts. However, it falls slightly short on the Waimai dataset. TextCNN-Att’s performance is relatively unstable, with an F1 Score significantly lower than other models on most datasets, particularly on the Chn_hot dataset, where it only reaches 0.7135. However, it slightly outperforms RoBERTa-FC on Lung_ds, demonstrating that convolutional networks have some advantages in extracting local features but struggle to capture complex semantics and long-range dependencies. In summary, by fusing the predictions of multiple base learners, the ensemble model not only combines the strengths of each model but also effectively reduces the bias and instability of individual models, achieving robust performance across multiple datasets and text distributions. Its generally leading performance and robustness to extreme conditions give it a significant advantage in sentiment analysis tasks, making it particularly suitable for complex, noisy, or unevenly distributed data scenarios, providing a reliable and highly generalizable solution for practical applications.

4.2.2. Comparative Analysis of the Existing Integrated Learning Strategies

To validate the effectiveness of the proposed multi-model ensemble framework based on dynamic uncertainty weighting for multi-domain text sentiment classification, we selected five representative ensemble learning strategies for comparison (see Table 3). These strategies are: heterogeneous classifiers + fuzzy integral weighting, stacking-BERT multi-base model, voting ensemble of adaptive NB and optimized SVM, BERT-BiGRU perturbation + voting, and regression ensemble of heterogeneous classifiers. Comparison metrics include Accuracy, Precision, Recall, F1 Score, and Macro-AUC. All methods were evaluated on five datasets: Waimai, Chn_hot, dmsc, AlY_ds, and Lung_ds. These datasets cover a variety of scenarios, including food delivery, e-commerce/hotels, film reviews, and medical reviews, effectively demonstrating cross-domain generalization capabilities.

- (1) Heterogeneous integration model by Zhong et al. [26]: This method aims to improve sentence-level text sentiment classification and designs a multi-element integration framework based on heterogeneous classifiers. Its base classifiers fall into three categories: a bidirectional long short-term memory network (BiLSTM) based on the self-attention mechanism, a convolutional neural network (CNN) based on word embedding, and naive Bayes (NB) based on text information entropy. In the integration stage, this method uses a fuzzy integral algorithm to dynamically determine the weight of each classifier, thereby maximizing the complementarity and prediction effect between the different classifiers.

- (2) Stacking-BERT multi-base model [11]: In response to the limitations of traditional static word vectors (such as word2vec and GloVe) in semantic expression and the insufficient generalization ability of single deep models, this method proposes a multi-base model framework based on BERT. First, the BERT pre-trained model is used to extract deep feature representations of text. Then, a heterogeneous classifier is constructed by combining multiple neural networks such as TextCNN, DPCNN, TextRNN, and TextRCNN. Finally, the stacking method is used to fuse them, and a support vector machine (SVM) is used as the meta-classifier for training and prediction, thereby enhancing the adaptability and robustness of the model in different scenarios.

- (3) Voting ensemble method by Miao, P. et al. [27]: This method improves naive Bayes into an adaptive version and optimizes the parameters of the support vector machine to construct multiple classifiers with differentiated performance. Subsequently, the SVM is used as the core base classifier and combined with the bagging strategy to form an integrated component. Finally, the results of multiple classifiers are fused through a voting mechanism to improve the overall classification performance.

- (4) BERT-BiGRU ensemble model by You, L. et al. [28]: To overcome the problem that traditional sentiment recognition models rely only on shallow semantics and have insufficient generalization ability, this method uses the BERT pre-trained model to obtain the contextual semantic features of the comments, and then combines BiGRU to extract deep nonlinear features, thereby obtaining the optimal performance under a single model. In the integration stage, by perturbing the training results of multiple BERT series models and fusing them with a voting strategy, the deep features of different classifiers complement one another, thereby enhancing the stability and overall performance of the model.

- (5) Heterogeneous classifier ensemble method by Li, D. et al. [29]: This method focuses on integrating different feature extraction and classification methods, combining three mainstream models: a long short-term memory network (LSTM) based on word embedding, a convolutional neural network (CNN) based on word embedding, and logistic regression (LR) based on TF-IDF. Because traditional hard voting is simplistic and soft voting is difficult to apply directly to heterogeneous classifiers, this method proposes an ensemble strategy based on regression learning. By performing regression modeling on the outputs of multiple classifiers, the model’s ability to recognize sentiment polarity is effectively improved.
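Several of the comparison methods above rely on voting. The difference between hard voting (majority of predicted labels) and soft voting (average of predicted probabilities) can be illustrated with a minimal sketch; the function names and the 0.5 decision threshold are assumptions, and none of this reproduces the cited implementations.

```python
from collections import Counter


def hard_vote(predictions):
    """predictions: one label list per model; returns the majority label per sample."""
    return [Counter(sample).most_common(1)[0][0] for sample in zip(*predictions)]


def soft_vote(probabilities, threshold=0.5):
    """probabilities: one list of P(positive) per model; average, then threshold."""
    n_models = len(probabilities)
    return [int(sum(sample) / n_models >= threshold) for sample in zip(*probabilities)]
```

The two schemes can disagree: if three models output positive-class probabilities of 0.40, 0.45, and 0.80 for a sample, hard voting on the thresholded labels predicts negative, whereas soft voting predicts positive because the mean probability is 0.55.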

In terms of overall performance, the ensemble model demonstrated high robustness and generalization across the five datasets. Its strengths are evident not only in F1 Score but also in the balance of multiple metrics, including Accuracy, Precision, Recall, and Macro-AUC.

On the Waimai dataset, the ensemble achieved Accuracy, Precision, F1 Score, Recall, and Macro-AUC of 0.9147, 0.8777, 0.8696, 0.8616, and 0.9478, respectively. While its F1 Score was slightly lower than Comparison-Model 2 (0.8883), its Accuracy, Precision, and Macro-AUC were higher than those of most individual models, but slightly lower than Comparison-Model 4 (0.9088) and Macro-AUC (0.9671). This phenomenon suggests that a single model may have a stronger fit for local features, such as oversensitivity to certain categories of samples, causing these metrics to peak for specific models. However, the ensemble’s F1 Score and Recall are closer to the overall optimal, indicating that it balances predictions for all categories while suppressing single-model bias and improving overall balance and robustness.

On the Chn_hot dataset, the ensemble achieved an F1 Score of 0.9353, with both Precision (0.9327) and Recall (0.9379) exceeding all other comparison models, demonstrating its advantage in handling data with complex category distributions or imbalanced sentiment. Some comparison models scored slightly higher or lower in Accuracy or F1 Score. For example, Comparison-Model 2 achieved an Accuracy of 0.9188, but its F1 Score and Recall were inferior to those of the ensemble. This suggests that while individual models are strong in capturing local patterns, their overall prediction balance and generalization capabilities are insufficient. The ensemble model, through multi-model fusion, integrates the prediction results of each model, smooths local fluctuations, and achieves stable recognition of different sentiment categories.

On the dmsc dataset, the ensemble achieved an F1 Score of 0.9069 and an Accuracy of 0.9071, both slightly lower than those of Comparison-Model 2 (0.9086 and 0.9105, respectively). However, its Macro-AUC (0.9724) was significantly higher than that of the other models. This demonstrates that while the ensemble model does not pursue extreme values for individual metrics, by fusing the predictions of each model it reduces sensitivity to noisy samples or extreme features, achieving a robust improvement in the overall performance. The slightly lower local metrics are due to the weighting or averaging strategy used during fusion, which smooths out local peaks of individual models in exchange for better overall generalization and cross-category balance.

On the AlY_ds dataset, the ensemble leads across all metrics, achieving an F1 Score of 0.9590 and the highest Precision (0.9487), Recall (0.9694), and Macro-AUC (0.9797), demonstrating the effectiveness of multi-model complementarity on complex data. Even though some comparison models come close on individual metrics, such as Comparison-Model 2 with an F1 Score of 0.9338, their Recall and Macro-AUC are inferior to those of the ensemble. This suggests that individual models tend to overfit certain local features. By fusing the outputs of each model, the ensemble model balances predictions across categories, improving overall robustness and consistency and avoiding bias caused by local overfitting.

On the Lung_ds dataset, the ensemble achieved an F1 Score of 0.9115, which was higher than that of most of the compared models. Its Precision (0.9053) was slightly lower than that of Comparison-Model 4 (0.9167), while its Recall (0.9177) and Macro-AUC (0.9238) were among the highest. The ensemble’s Recall was slightly lower than that of Comparison-Model 3 (0.9367), which reflects that model’s aggressive discrimination of specific categories: while its local metrics showed high peaks, its overall prediction lacked balance. In contrast, the ensemble model, through multi-model probability weighting, achieved a more balanced performance across different categories, thereby improving the overall accuracy and robustness.

Overall, the advantage of ensemble models lies in their ability to steadily improve multiple metrics by fusing predictions from multiple models, mitigate the impact of outliers, and enhance adaptability to diverse datasets. Even when a few metrics were slightly lower than those of the individual models, their overall accuracy, precision, recall, F1 Score, Macro-AUC, and consistency of prediction output remained significantly better than those of the individual models. This fully demonstrates the reliability and high generalization capabilities of the ensemble strategies for multi-dataset, multi-metric tasks. Slightly lower local metrics are often the result of the ensemble model’s smoothing strategy, which sacrifices local peaks in individual models to achieve global prediction stability, balance, and consistency, thereby optimizing the overall performance.

4.2.3. Model Complexity and Computational Efficiency Analysis

While verifying the model’s performance, this paper further compared the computational resource consumption of different models to evaluate the feasibility and efficiency of the proposed dynamic weighted integration mechanism in engineering implementation. The comparison results are shown in Table 4.

The table shows significant differences in model size and computational load among the three base classifiers. TextCNN-Att, owing to its lightweight structure and small parameter footprint (approximately 72 MB), has the fastest training and inference speeds. BERT-BiGRU introduces recurrent layers, which slightly increases its computational load, but it remains strong in semantic modeling. RoBERTa-FC, a representative Transformer architecture, has the strongest contextual modeling capabilities but also carries a higher computational cost.

Compared to a single model, static weighted ensemble simultaneously activates multiple sub-models to achieve feature fusion, increasing training time to approximately 6.7 min per epoch and GPU usage to 7.8 GB. Dynamic weighted ensemble builds on this by introducing uncertainty-based adaptive weight allocation and an attention fusion module, resulting in a slight increase in training time (+6%) to 7.1 min, approximately 3% higher GPU usage, and approximately 10% higher inference latency. This additional overhead primarily stems from the dynamic weight calculation and attention mapping processes, but is relatively minor compared to the overall computational load of the Transformer backbone.
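One common way to realize uncertainty-based adaptive weighting is sketched below. It is an illustration only: this section does not give the paper's formula, so the use of predictive entropy as the uncertainty measure, the softmax over negative entropy, and the names `uncertainty_weights`, `fuse`, and `temperature` are all assumptions.

```python
import math


def entropy(probs):
    """Shannon entropy of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def uncertainty_weights(model_probs, temperature=1.0):
    """Softmax over negative entropy: confident models receive larger weights."""
    logits = [-entropy(p) / temperature for p in model_probs]
    peak = max(logits)                               # subtract max for stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(model_probs, temperature=1.0):
    """Per-sample weighted average of the base models' class probabilities."""
    weights = uncertainty_weights(model_probs, temperature)
    n_classes = len(model_probs[0])
    return [sum(w * p[c] for w, p in zip(weights, model_probs))
            for c in range(n_classes)]
```

Because the weights are recomputed for every sample, a base model that is unsure about a particular input contributes less to that decision; a lower `temperature` sharpens the weights toward the most confident model. The extra cost is a few scalar operations per sample, which is consistent with the small overhead reported above.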

In terms of performance, the dynamic ensemble model achieves an average F1 Score improvement of approximately 0.5–2% compared to the static approach, with no performance degradation across all datasets, demonstrating improved stability and generalization. This demonstrates that, while the dynamic ensemble mechanism slightly increases training cost, the performance gains and improved robustness it brings offer a more cost-effective solution in practical applications.

Overall, the proposed dynamic weighted ensemble model achieves a good balance between performance and resource consumption: the structural diversity of lightweight sub-models provides feature complementarity; the dynamic fusion mechanism enhances adaptability to cross-domain tasks; and the computational cost is manageable, with the overall number of parameters and computation time only slightly increasing compared to static ensemble.

4.3. Ablation Experiments

To further quantify the contributions of each base learner and ensemble strategy to the overall framework, this paper designed and conducted systematic ablation experiments. These experiments focused on three types of base learners: the global semantic view (RoBERTa-FC), the time series view (BERT-BiGRU), and the local feature view (TextCNN-Att). The independent impacts of residual connections, dynamic uncertainty weighting, and the attention fusion mechanism were also examined. Table 5 summarizes the F1 Scores for each dataset under different ablation conditions. Specifically, the experimental scheme includes:

- (1) Base learner ablation: remove RoBERTa-FC, BERT-BiGRU, and TextCNN-Att, respectively, to evaluate the independent contributions of global semantic, sequence, and local features to the ensemble performance;

- (2) Residual connection ablation: remove the residual path in the meta-learner to analyze its impact on gradient transfer and the stability of feature fusion;

- (3) Dynamic weighting ablation: remove dynamic uncertainty weighting and retain only feature concatenation to explore robustness under the interference of weak classifiers;

- (4) Attention fusion ablation: remove the Attention Fusion module and perform only simple concatenation to evaluate the applicability of the attention mechanism under different data complexities;

- (5) Combination view comparison: retain each pair of base learners in turn (such as RoBERTa-FC + TextCNN-Att) to analyze the complementarity between views and the impact of losing a single view;

- (6) Analysis of the independent contributions of dynamic weighting and attention: additional “Dynamic Weight Only” and “Attention Only” experiments further distinguish the independent contributions of the two mechanisms in ensemble decision-making.

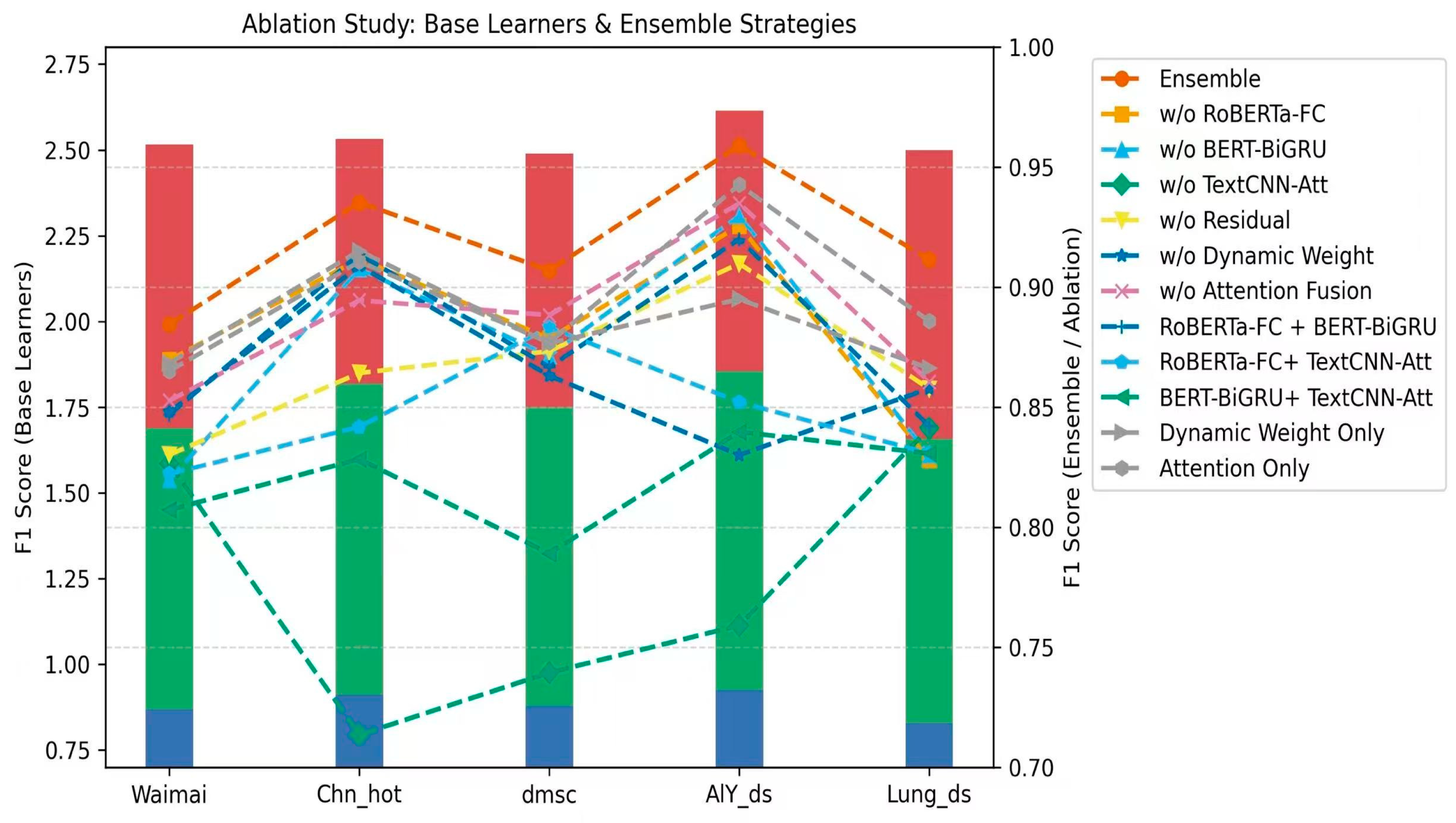

To intuitively present the impact of each ablation experiment, the data in Table 5 are visualized (as shown in Figure 3). The columns (blue, green, and red) in the figure represent the contributions of the three base learners (RoBERTa-FC, BERT-BiGRU, and TextCNN-Att). The height of each column represents the overall performance of the ensemble model. The broken lines correspond to different ablation experiment settings. Overall, the figure demonstrates the importance of each module to the final performance.

Combining the table and the graph, we can further analyze the contribution of each base learner and the ensemble strategy to the overall performance.

- (1) Contribution of base learners to ensemble performance: The ensemble model performs best on all datasets (e.g., F1 = 0.8844 on the Waimai dataset), indicating that multi-view feature fusion has a significant complementary effect. Removing RoBERTa-FC (global semantic view) results in the most significant performance drop (e.g., Chn_hot drops from 0.9353 to 0.9117, a decrease of 2.36 percentage points), indicating that it plays a key role in capturing context and global semantic dependencies and is the core source of ensemble performance improvement. Removing BERT-BiGRU (time series view) reduces the F1 Score on the Waimai dataset from 0.8844 to 0.8197 (a decrease of 6.47 percentage points), indicating that it has important value in context sequence modeling and sequence dependency capture and can make up for the shortcomings of static semantic features. The impact of TextCNN-Att (local feature view) varies significantly across datasets, with the largest drop on the Chn_hot dataset (a decrease of 22.18 percentage points), indicating that local convolutional features are particularly important for recognizing emotional segments and capturing high-frequency emotional words.

- (2) Analysis of inter-view complementarity: When only some base learners are retained, the overall performance decreases, further confirming the importance of multi-view fusion. When only RoBERTa-FC + BERT-BiGRU is retained, the average performance is relatively high (e.g., AlY_ds F1 Score = 0.9200), indicating that there is strong complementarity between semantic and temporal features. When only RoBERTa-FC + TextCNN-Att is retained, the performance decreases significantly (e.g., AlY_ds F1 Score = 0.8521), indicating that the model has difficulty capturing semantic transition information without temporal features. When only BERT-BiGRU + TextCNN-Att is retained, the overall performance is the lowest, showing that the lack of a global semantic view leads to a serious loss of contextual information and confirming the core role of the global semantic layer in sentiment modeling.

- (3) The role of residual connections: After removing the residual connections, the F1 Scores on all datasets decreased (e.g., AlY_ds dropped from 0.9590 to 0.9098), indicating that the residual mechanism effectively alleviates gradient vanishing and feature degradation during deep fusion and helps improve the stability and convergence of the model.

- (4)

The role of dynamic uncertainty weighting: After removing the dynamic weights, the performance on some datasets decreased significantly (e.g., the AlY_ds F1 Score dropped from 0.9590 to 0.8301, a decrease of 12.89 percentage points), indicating that this mechanism can adaptively adjust the influence of weak classifiers in the ensemble decision, thereby improving overall robustness. In particular, when multi-source heterogeneous features are noisy, dynamic weighting can effectively suppress the interference of local mismatches on the overall prediction.

- (5)

Applicability of Attention Fusion: After removing attention fusion, performance decreases only slightly (e.g., the AlY_ds F1 Score drops from 0.9590 to 0.9349, a decrease of 2.41 percentage points), indicating that the gain from attention weights is modest and that they may lead to local overfitting when the features are already sufficiently complementary. This result suggests that the fusion method should be selected flexibly according to data scale: the attention mechanism can bring benefits on large-scale and complex data, while simple concatenation may be more robust on small and medium-sized datasets.

- (6)

Independent effects of dynamic weight and attention mechanism: To further distinguish the effects of the two mechanisms, the “Dynamic Weight Only” and “Attention Only” experiments were added.

Dynamic Weight Only: Attention Fusion was removed, and only the temperature parameter was retained for dynamic weighting. The F1 Score remained high on the Waimai, Chn_hot, dmsc, and Lung_ds datasets (0.8684, 0.9151, 0.8765, and 0.8661, respectively), indicating that dynamic weighting can effectively adjust the weights of the base learners and suppress the interference of weak classifiers. However, the F1 Score on the AlY_ds dataset was 0.8950, clearly lower than that of Attention Only (0.9426), suggesting that dynamic weighting alone is not enough to fully exploit local complementary information on large-scale datasets with fully complementary features.

Attention Only: Dynamic weighting was removed, and only Attention Fusion was retained. The F1 Scores on the AlY_ds and Lung_ds datasets are 0.9426 and 0.8858, respectively, both exceeding those of Dynamic Weight Only (0.8950 and 0.8661). This demonstrates that the attention mechanism can enhance the local complementarity of feature fusion, improving the ability to model complex semantic structures or sentiment patterns in long texts. However, on the Waimai and Chn_hot datasets, the F1 Scores (0.8651 and 0.9106) are slightly lower than those of Dynamic Weight Only (0.8684 and 0.9151), and on dmsc the two variants are essentially tied (0.8769 vs. 0.8765). This suggests that attention alone is insufficient to ensure robustness when noise levels are high or weak classifiers interfere.

Taken together, these results show that dynamic weights are more critical for suppressing weak classifier interference and ensuring model robustness, while the attention mechanism primarily improves feature fusion accuracy and local complementarity. Using both mechanisms provides robustness as well as efficient feature weighting and yields the best overall performance.

- (7)

Analysis of the independent impact of multi-view feature deletion: To address the concern that “multi-view feature integration is the key, but there is a lack of comprehensive ablation research”, the experimental results clearly show the following:

Deleting the global semantic view (RoBERTa-FC): The model loses the ability to model long-range dependencies and global sentiment transfer, and the F1 drops significantly, especially in semantically complex datasets (such as Chn_hot, AlY_ds);

Deleting the time series view (BERT-BiGRU): The model’s performance in modeling emotional transitions and context consistency degrades, indicating that time-dependent features are an important supplement to semantic continuity;

Deleting the local view (TextCNN-Att): The model has difficulty capturing phrase-level clues such as emotional words and modifiers, and the ability to recognize emotional polarity is significantly reduced, especially in short texts or high-noise corpora.

In summary, the collaborative integration of multi-view features is the key to improving overall performance. The global semantic structure provided by RoBERTa-FC is the basic core, BERT-BiGRU supplements the contextual dynamic dependency of the time series, and TextCNN-Att strengthens the emotion recognition ability of local segments. The three complement each other under the action of dynamic weighting and residual fusion mechanism, making the model show strong robustness and generalization ability under various corpus and task conditions.
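The interaction between dynamic weighting and the temperature parameter discussed in items (4) and (6) can be illustrated with a minimal sketch. It assumes, purely for illustration, that each base learner's uncertainty is measured by the entropy of its predicted class distribution and that the weights are a temperature-scaled softmax over negative entropies. This section does not restate the exact weighting formula, so the function names (`entropy`, `dynamic_weights`, `fuse`) and the probability values are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (natural log) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def dynamic_weights(base_probs, temperature=1.0):
    """Assumed scheme: softmax over negative entropies, scaled by a temperature.

    Learners with confident (low-entropy) predictions receive larger weights.
    A smaller temperature sharpens the weight distribution.
    """
    scores = [-entropy(p) / temperature for p in base_probs]
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse(base_probs, temperature=1.0):
    """Weighted average of the base learners' class probabilities."""
    weights = dynamic_weights(base_probs, temperature)
    n_classes = len(base_probs[0])
    fused = [
        sum(w * p[c] for w, p in zip(weights, base_probs))
        for c in range(n_classes)
    ]
    return weights, fused

# Hypothetical outputs of three base learners for one review (positive, negative).
base_probs = [
    [0.95, 0.05],  # confident learner
    [0.60, 0.40],  # moderately uncertain learner
    [0.45, 0.55],  # nearly undecided learner that disagrees with the others
]
weights, fused = fuse(base_probs, temperature=0.5)
print("weights:", [round(w, 3) for w in weights])
print("fused:  ", [round(p, 3) for p in fused])
```

In this sketch the undecided learner receives the smallest weight, so its disagreement has little effect on the fused prediction, which mirrors the suppression of weak classifiers described above.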

4.4. Model Validation and Analysis

4.4.1. Statistical Significance Analysis

To verify the statistical significance of the performance improvement achieved by the proposed heterogeneous ensemble learning model on the multi-source, multi-domain sentiment classification task, we conducted paired t-tests and confidence interval (CI) analyses (see Table 6) on the experimental results in Table 2 and Table 3. Because the models on each dataset were independently trained and validated under the same conditions, this approach effectively controlled for the impact of inter-sample differences. The significance level was set at α = 0.05. Test metrics included the F1 Score and Macro-AUC, which comprehensively reflect the model’s overall classification ability and robustness to class imbalance.

Let the null hypothesis H_0 be: There is no significant difference in the performance of the two models on the given dataset.

The alternative hypothesis H_1 is: The ensemble model significantly outperforms the comparison model.

The test statistic is defined as follows:

$$t = \frac{\bar{d}}{s_d / \sqrt{n}}$$

where $\bar{d}$ is the mean of the performance differences between the two models on each dataset, $s_d$ is the standard deviation of the differences, and $n$ is the number of samples (i.e., the number of datasets). If p < 0.05, H_0 is rejected and the difference is considered statistically significant.

As shown in Table 4, the ensemble model achieved significant performance improvements over all base classifiers (p < 0.05), with F1 Score increases ranging from 3.7% to 14.3%, and none of the confidence intervals crossed zero, demonstrating robust and reliable performance. In particular, the TextCNN-Att-based model achieved the most significant improvement after ensembling (ΔF1 Score = 0.1435), demonstrating strong feature complementarity between this model and the other learners. These results confirm that the heterogeneous ensemble mechanism proposed in this paper can effectively combine the strengths of multiple models, significantly improving sentiment classification performance.

Table 7 shows that, with the exception of Comparison-Model 2, the performance differences between the other four comparison models and our method all reached statistically significant levels (p < 0.05). Among them, Comparison-Model 3 showed the largest gap (ΔF1 Score = 0.1190), demonstrating that our proposed weighted ensemble framework possesses stronger optimization capabilities in terms of model fusion mechanisms.

For Comparison-Model 2, the p-value is 0.3664, and the 95% confidence interval includes 0 ([−0.0088, 0.0279]), so the null hypothesis cannot be rejected at the α = 0.05 level. The standard deviation of the differences inferred from the t-statistic is approximately 0.0209, giving a paired effect size (Cohen’s d) of ≈0.46, which is considered a small-to-medium effect. Although the difference is not statistically significant, there is a slight positive trend (ΔF1 Score = +0.0095), suggesting that the ensemble model performs slightly better overall. This type of “small but consistent” improvement is in line with the robustness and generalization consistency of the model, and small improvements remain meaningful in high-performance tasks (F1 Score > 0.90).
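The quantities used in this subsection (paired t-statistic, 95% confidence interval, and paired Cohen's d) can be computed as in the following minimal sketch. The F1 Scores are hypothetical placeholders rather than values from the tables, and the helper name `paired_t_summary` is an assumption. The critical value 2.776 is the two-sided 5% point of the t-distribution with 4 degrees of freedom, which corresponds to n = 5 datasets.

```python
import math
import statistics

def paired_t_summary(scores_a, scores_b, t_crit):
    """Paired t-statistic, confidence interval, and Cohen's d for matched scores."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)      # sample standard deviation (n - 1)
    se = sd_d / math.sqrt(n)            # standard error of the mean difference
    return {
        "n": n,
        "mean_diff": mean_d,
        "t": mean_d / se,
        "ci": (mean_d - t_crit * se, mean_d + t_crit * se),
        "cohen_d": mean_d / sd_d,       # paired effect size, equal to t / sqrt(n)
    }

# Hypothetical F1 Scores on five datasets (not taken from the paper's tables).
ensemble_f1 = [0.88, 0.93, 0.91, 0.96, 0.91]
baseline_f1 = [0.86, 0.90, 0.90, 0.92, 0.89]

T_CRIT_DF4 = 2.776  # two-sided, alpha = 0.05, 4 degrees of freedom
summary = paired_t_summary(ensemble_f1, baseline_f1, T_CRIT_DF4)
print(f"mean difference = {summary['mean_diff']:.4f}")
print(f"t = {summary['t']:.3f}, Cohen's d = {summary['cohen_d']:.3f}")
print(f"95% CI = [{summary['ci'][0]:.4f}, {summary['ci'][1]:.4f}]")
```

An exact p-value additionally requires the t-distribution CDF, which the standard library does not provide; `scipy.stats.ttest_rel` is one common way to obtain it. If the confidence interval excludes zero, the two-sided test rejects H_0 at the same level.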

4.4.2. Stability Verification

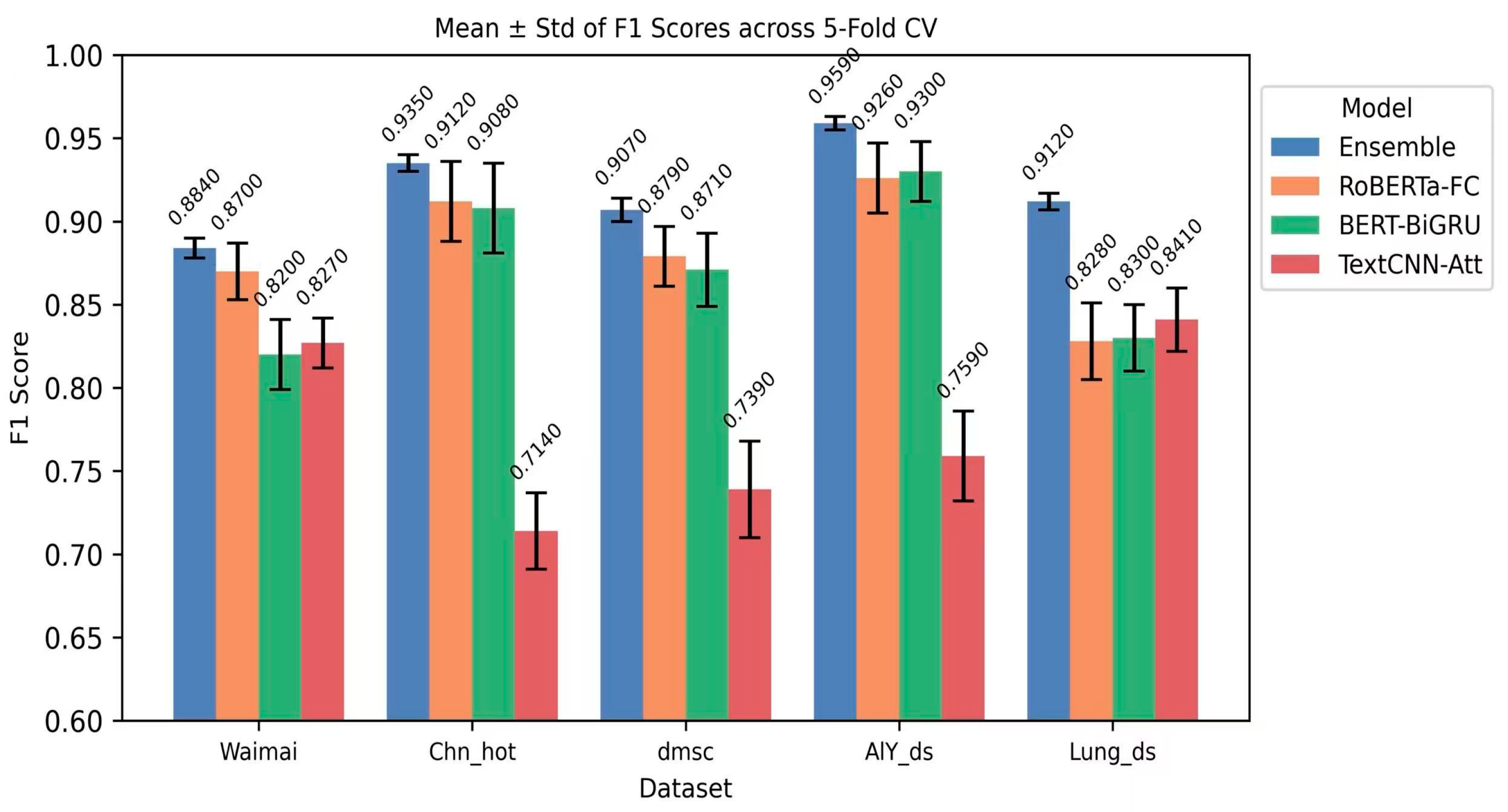

To further evaluate the robustness and generalization ability of the proposed dynamic uncertainty weighted ensemble model under different data partitions, this study conducted a stability analysis using 5-fold (K = 5) cross-validation on five datasets.

Table 8 shows the mean and within-fold standard deviation (mean ± std) of the F1 Score for different datasets and models under 5-fold cross-validation. Figure 4, corresponding to Table 4, presents the mean F1 Score and within-fold standard deviation (STD) of the four models on each dataset in the form of a grouped bar chart. The figure clearly shows that the ensemble’s mean bar is higher than that of most base learners on all datasets, and its error bars are shorter, indicating that the model significantly reduces the within-fold fluctuation under 5-fold cross-validation and is highly stable. Comparing the error bar lengths, the ensemble significantly reduced the within-fold uncertainty on all five datasets, validating the conclusion that “ensembling reduces within-fold fluctuation.” On the Waimai dataset, the mean of a single base model (TextCNN-Att) is close to that of the ensemble, but the ensemble’s mean is still slightly higher and its variance is smaller, demonstrating that the ensemble strategy can provide robust gains even in everyday scenarios with direct emotional expression and brief text. On the Chn_hot dataset, the mean of the ensemble is significantly higher than that of the three base models, and the error bars barely overlap, demonstrating that in complex, information-intensive long-text corpora with multiple emotional transitions, the ensemble mechanism can fully integrate the complementary features of the base models to achieve the largest performance improvement.

Figure 5 shows the performance trends of the models on different datasets as lines with overlaid shading, where the shading represents ±std. The ensemble’s line consistently stays at the top and has a narrow shaded band, indicating smoother and more stable performance across datasets. This not only supports the generalization advantage of ensemble methods for multi-source, multi-domain texts, but also reveals their robustness in the face of corpus differences. In particular, on the Chn_hot dataset, the vertical distance between the ensemble and the three base models is the largest, reinforcing the observation that ensembles yield the greatest benefits on complex, long texts. For short, everyday texts such as Waimai and dmsc, the lines are relatively close, indicating that the single base models already fit these texts well. However, the ensemble maintains its lead and has a smaller within-fold standard deviation, demonstrating that even with limited absolute improvement, its stability benefits are still significant.

Further comparison of Figure 4 and Figure 5 reveals that the benefits of ensembling are not only reflected in an improvement in the mean, but also in a significant reduction in variance: the average standard deviation of the ensemble is significantly lower than that of the three base models. This demonstrates that the ensemble approach, through dynamic uncertainty weighting, balances the bias between model outputs, resulting in consistently improved performance both within and across datasets. This stability is particularly important for practical industrial deployment and multi-round experimental validation, as it ensures the model’s repeatability and reliability under random data partitioning, while mitigating the risk of performance fluctuations caused by varying text feature distributions.

In summary, the K = 5 cross-validation results strongly demonstrate that the proposed dynamic uncertainty weighted ensemble model not only improves the average performance in multi-source, multi-domain sentiment analysis tasks but also significantly reduces within-fold fluctuations, achieving stable and universal performance improvements. Furthermore, the charts reveal the mechanism for the differential benefits across datasets: Ensembling yields the greatest gains on complex, long texts and cross-subdomain corpora, while maintaining stability on short, everyday texts, demonstrating the adaptability and robustness of the ensemble strategy across diverse corpora.
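The stability protocol described in this subsection can be sketched as follows: split the sample indices into K = 5 folds, evaluate once per fold, and report mean ± std over the fold scores. This is a minimal illustration under stated assumptions. It uses plain shuffled folds (whether the study stratified the folds by label is not stated here), the helper names are hypothetical, and the per-fold F1 Scores are placeholders rather than measured results.

```python
import random
import statistics

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) pairs for shuffled K-fold CV."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k disjoint, near-equal folds
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

def summarize(fold_scores):
    """Mean and sample standard deviation of the per-fold scores."""
    return statistics.mean(fold_scores), statistics.stdev(fold_scores)

splits = list(k_fold_indices(n_samples=23, k=5))
for fold_id, (train, validation) in enumerate(splits, start=1):
    print(f"fold {fold_id}: {len(train)} train / {len(validation)} validation")

# Placeholder per-fold F1 Scores for one model on one dataset.
fold_f1 = [0.91, 0.92, 0.90, 0.93, 0.91]
mean_f1, std_f1 = summarize(fold_f1)
print(f"F1 = {mean_f1:.4f} ± {std_f1:.4f}")
```

A shorter error bar in a chart such as Figure 4 corresponds to a smaller `std_f1` for that model and dataset.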

4.4.3. Analysis of the Model’s Cross-Domain Robustness and Comprehensive Advantages

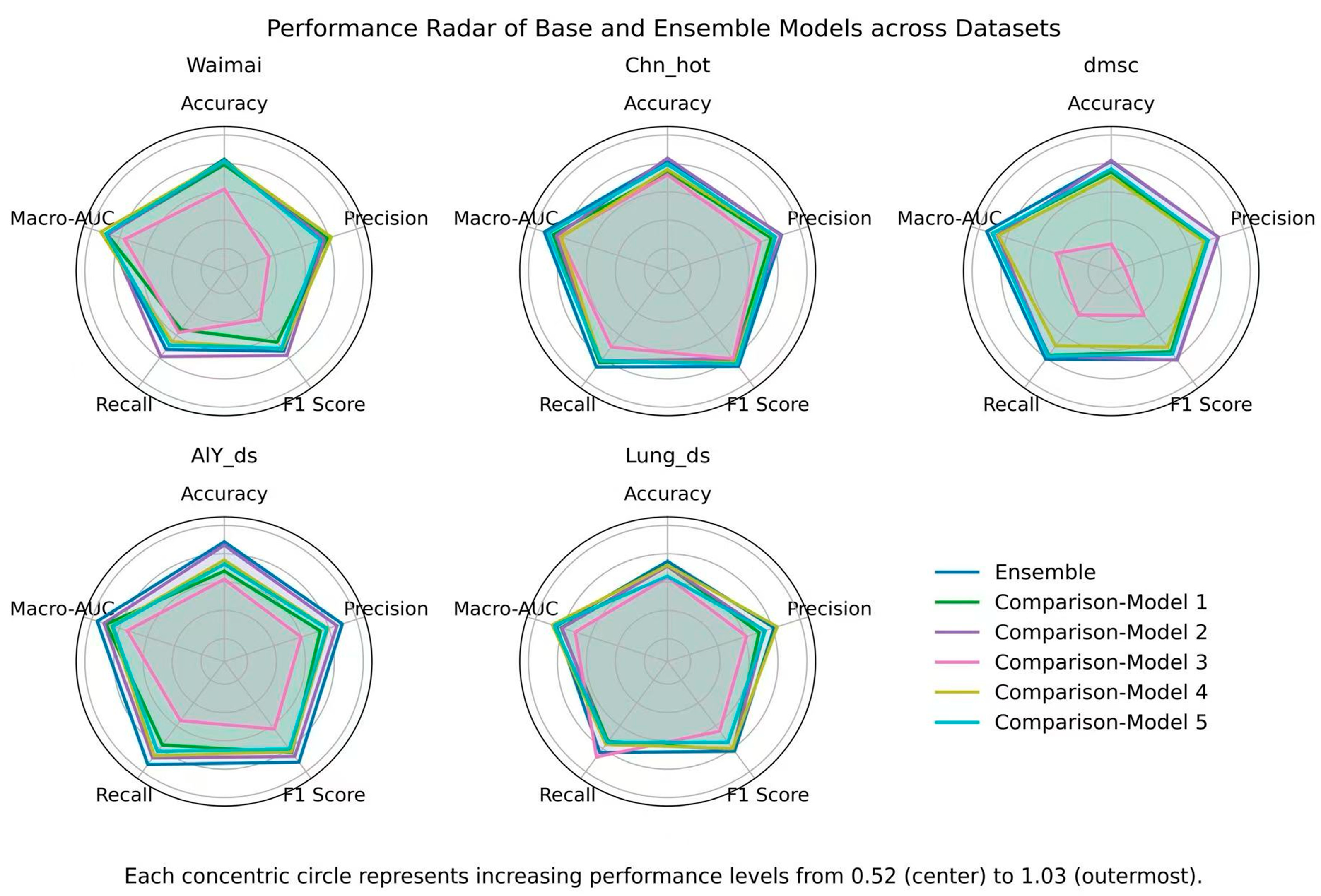

To comprehensively evaluate the adaptability and overall advantages of the proposed ensemble model on tasks in different domains, this study drew a radar chart based on five core indicators (Accuracy, Precision, F1 Score, Recall, and Macro-AUC) on five datasets (Waimai, Chn_hot, dmsc, AlY_ds, and Lung_ds) (as shown in Figure 6) and calculated the cross-dataset mean of each indicator (as shown in Table 9).

Statistical results show that the average performance of the ensemble model across the five datasets is Accuracy = 0.9097 ± 0.025, Precision = 0.9147 ± 0.027, Recall = 0.9183 ± 0.040, F1 Score = 0.9149 ± 0.033, and Macro-AUC = 0.9582 ± 0.023. The Macro-AUC remained in the 0.92–0.98 range on every dataset, reflecting the model’s stable discriminative ability across different decision thresholds. Precision and F1 Score remained above 0.90 on most datasets, and the mean Recall and F1 Score both stayed above 0.91, indicating reliable performance in balancing minority classes against overall classification. The ensemble also fluctuates less across domains than the comparison models: for example, the standard deviation of Accuracy for Comparison-Model 3 is as high as 0.096, whereas that of the ensemble model is only 0.025.
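As a worked check of the cross-dataset statistics, the sketch below recomputes mean ± std for three metrics from the per-dataset ensemble values quoted in the dataset-by-dataset discussion of this subsection. It assumes the reported spread is the sample standard deviation (n − 1 in the denominator). Accuracy and F1 Score are omitted because not all of their per-dataset values are quoted consistently in this section.

```python
import statistics

# Ensemble scores in the order Waimai, Chn_hot, dmsc, AlY_ds, Lung_ds,
# copied from the per-dataset discussion in this subsection.
ensemble = {
    "Precision": [0.8777, 0.9327, 0.9091, 0.9487, 0.9053],
    "Recall":    [0.8616, 0.9379, 0.9048, 0.9694, 0.9177],
    "Macro-AUC": [0.9478, 0.9672, 0.9724, 0.9797, 0.9238],
}

def mean_and_std(values):
    """Cross-dataset mean and sample standard deviation (assumed n - 1)."""
    return statistics.mean(values), statistics.stdev(values)

summary = {metric: mean_and_std(values) for metric, values in ensemble.items()}
for metric, (mean, std) in summary.items():
    print(f"{metric}: {mean:.4f} ± {std:.3f}")
```

Under this assumption, the rounded results agree with the reported Precision (0.9147 ± 0.027), Recall (0.9183 ± 0.040), and Macro-AUC (0.9582 ± 0.023).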

Combined with the radar chart in Figure 6, we can see that the ensemble model forms relatively full and stable coverage curves across all tasks, while the comparison models exhibit significant skewness: some models excel in Precision or Recall, but suffer from significant shortcomings in other metrics.

- (1)

In the Waimai dataset (general scenario), the ensemble model achieves the best Accuracy (0.9147) but not the best value on the other individual metrics: Comparison-Model 4 leads in Precision (0.9088) and Macro-AUC (0.9671), while Comparison-Model 2 leads in F1 Score (0.8883) and Recall (0.8930). This indicates that, in general review scenarios, individual comparison models often achieve advantages on specific metrics, but these advantages tend to be accompanied by declines on other metrics. For example, Comparison-Model 4 performs well in Precision and Macro-AUC, but its Recall (0.8277) is significantly lower than that of the ensemble model (0.8616), indicating a weakness in minority class recognition. Comparison-Model 2 leads in F1 Score and Recall, but its Accuracy and Macro-AUC are relatively low (0.9059 and 0.9396), limiting its overall discriminative ability. In contrast, the ensemble model’s distinguishing feature is not a single optimal metric but a relatively balanced performance across all metrics: Accuracy and Recall are at relatively good levels, Precision and F1 Score (0.8777 and 0.8696) are lower than those of the best model but remain within acceptable ranges, and Macro-AUC also remains high (0.9478). This coordination between metrics indicates that the ensemble strategy, by combining the discriminative strengths of different base learners, mitigates biases in individual models due to threshold selection or overfitting. Therefore, in general tasks, although a comparison model may outperform the ensemble model on some individual dimensions, the ensemble model, thanks to its balance across multiple metrics, demonstrates superior overall robustness.

- (2)

In the Chn_hot dataset (cross-domain review scenario), the ensemble model achieved the best results on multiple core metrics: Precision (0.9327), F1 Score (0.9353), Recall (0.9379), and Macro-AUC (0.9672), demonstrating strong overall discrimination and minority class recognition capabilities in cross-domain tasks. Its Accuracy (0.9111) was slightly lower than that of Comparison-Model 2 (0.9188), but the gap was small. Conversely, although Comparison-Model 2 achieved the best Accuracy, its F1 Score (0.9015) and Recall (0.9117) lagged significantly behind the ensemble model, suggesting that it struggles to achieve balanced classification performance. Overall, the ensemble model not only leads in Precision, F1 Score, Recall, and Macro-AUC but also maintains near-optimal Accuracy. This demonstrates that, in the cross-domain review task, the ensemble can effectively integrate the local strengths of different models to achieve a more representative overall lead.

- (3)

On the dmsc (cross-domain social text) dataset, Comparison-Model 2 slightly outperformed the ensemble model in Accuracy (0.9105 vs. 0.9071) and F1 Score (0.9086 vs. 0.9069), but the differences were only 0.34 and 0.17 percentage points, respectively, representing marginal advantages. By contrast, the ensemble model achieved the best results in Precision (0.9091), Recall (0.9048), and Macro-AUC (0.9724). Its lead in Macro-AUC was about 4 percentage points (0.9724 vs. 0.9323), demonstrating superior discriminative ability under varying thresholds and sample distributions. Furthermore, the ensemble model’s Recall clearly exceeded that of Comparison-Model 2 (0.9048 vs. 0.8900), demonstrating more comprehensive coverage of minority class samples. Overall, although an individual model had slight advantages in Accuracy and F1 Score, the ensemble model, with its higher Recall and substantially higher Macro-AUC, demonstrated more robust overall performance in cross-domain noisy environments.

- (4)

On the AlY_ds dataset (multi-subdomain e-commerce data), the ensemble model leads in Precision (0.9487), F1 Score (0.9590), Recall (0.9694), and Macro-AUC (0.9797), demonstrating strong discriminative ability and robustness in complex heterogeneous scenarios. In contrast, although Comparison-Model 2 achieves the best Accuracy (0.9299), its Precision (0.9291), Recall (0.9409), and Macro-AUC (0.9554) lag behind the ensemble model, indicating that its overall stability is insufficient. Notably, the ensemble model’s Recall of 0.9694 exceeds that of all the compared models, demonstrating superior coverage of minority classes and marginal samples. Furthermore, its Macro-AUC of 0.9797 clearly exceeds the best value among the comparison models (0.9554), demonstrating robust discrimination across various thresholds. Overall, although it does not achieve the best Accuracy, the ensemble model leads on the remaining metrics and achieves the best balance across diverse e-commerce sub-sectors, making it more suitable for practical applications in complex, multi-source environments.

- (5)

On the Lung_ds (medical text) dataset, the ensemble model achieved the best F1 Score (0.9115) together with a high Recall (0.9177), highlighting its stronger comprehensive recognition capabilities in complex medical semantic environments. In comparison, Comparison-Model 4 slightly outperformed the ensemble model in Precision (0.9167) and Macro-AUC (0.9379), but its Recall (0.8819) was significantly lower than that of the ensemble model, indicating insufficient coverage of minority class samples. Furthermore, although Comparison-Model 3 achieved the highest Recall (0.9367), its Precision and F1 Score were only 0.8056 and 0.8238, respectively, revealing a significant imbalance. The ensemble model did not lead in Accuracy (0.8734), Precision (0.9053), or Macro-AUC (0.9238), but it consistently maintained a high level, with Precision and Macro-AUC only 1–1.5 percentage points below the best results. Overall, through its combination of Recall and F1 Score, the ensemble model balanced the recognition of minority classes with overall performance, meeting the need for “more detection and fewer omissions” in medical text scenarios and demonstrating greater practical value and robustness.

In addition, to avoid bias from a single metric, this study used an equally weighted ranking method based on the five metrics of Accuracy, Precision, Recall, F1 Score, and Macro-AUC to calculate the average ranking of each model on each dataset. The ensemble model’s average ranking was 1.25 on both dmsc and AlY_ds (its best values), 1.75 on Chn_hot, 2.00 on Lung_ds, and 2.25 on Waimai; its overall average ranking across datasets was 1.70. This demonstrates that, regardless of the task scenario, the ensemble model stays near the top of the ranking (average rank no worse than 2.25), often directly leading or on par with the best model.
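The equally weighted ranking procedure can be sketched as below. The per-model scores and the model names `Model A` and `Model B` are hypothetical and only illustrate the mechanics. Rank 1 is assumed to be the highest value on a metric, and ties (absent from this toy data) would simply be broken by input order. The last lines recompute the overall average from the per-dataset rankings reported above.

```python
import statistics

def average_ranks(scores):
    """Average rank of each model over all metrics (rank 1 = highest value)."""
    models = list(scores)
    metrics = list(next(iter(scores.values())))
    totals = {model: 0.0 for model in models}
    for metric in metrics:
        ordered = sorted(models, key=lambda m: scores[m][metric], reverse=True)
        for position, model in enumerate(ordered, start=1):
            totals[model] += position
    return {model: totals[model] / len(metrics) for model in models}

# Hypothetical scores on one dataset (not taken from the paper's tables).
scores = {
    "Ensemble": {"Accuracy": 0.91, "Precision": 0.90, "Recall": 0.92,
                 "F1": 0.91, "Macro-AUC": 0.96},
    "Model A":  {"Accuracy": 0.92, "Precision": 0.91, "Recall": 0.88,
                 "F1": 0.89, "Macro-AUC": 0.94},
    "Model B":  {"Accuracy": 0.89, "Precision": 0.88, "Recall": 0.90,
                 "F1": 0.88, "Macro-AUC": 0.95},
}
ranks = average_ranks(scores)
print(ranks)

# Overall average of the ensemble's per-dataset rankings reported in the text.
reported = {"Waimai": 2.25, "Chn_hot": 1.75, "dmsc": 1.25,
            "AlY_ds": 1.25, "Lung_ds": 2.00}
overall = statistics.mean(reported.values())
print(f"overall average ranking = {overall:.2f}")
```

Averaging ranks rather than raw scores keeps a model that wins one metric by a large margin from dominating the comparison, which is the stated purpose of the equally weighted scheme.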

In summary, while some comparison models may have advantages in terms of individual metrics or specific datasets, they often struggle to achieve consistent performance globally. In contrast, the ensemble model, leveraging the complementary nature of multi-base learners and its uncertainty weighting mechanism, effectively mitigates the bias and overfitting risks of individual models. It demonstrates stronger transferability and noise resistance in cross-domain tasks, achieving overall superiority across multiple metrics in multiple sub-domain tasks. Therefore, the proposed ensemble strategy not only demonstrates outstanding global performance but also possesses cross-domain robustness and practical deployment value.