1. Introduction

The dark web, accessible through anonymization networks such as Tor, I2P, and Freenet, represents a significant portion of internet activity that remains hidden from conventional search engines and standard web browsers [1]. Within this hidden ecosystem, forums serve as critical platforms for communication, information exchange, and coordination of various activities, including both legitimate privacy-seeking behavior and illicit operations [2].

Dark web forums have emerged as focal points for cybercriminal activities, including drug trafficking, weapons sales, fraud schemes, and the exchange of stolen data [3,4]. These platforms also serve as venues for extremist communication, terrorist recruitment, and the dissemination of harmful content [5]. The anonymous nature of these forums, while providing legitimate privacy benefits for users in oppressive regimes or those seeking confidential communication, also creates significant challenges for law enforcement, cybersecurity professionals, and researchers attempting to understand and monitor these activities [6].

The analysis of dark web forums presents unique methodological challenges that distinguish it from traditional web content analysis [7]. These challenges include access barriers, as dark web forums require specialized software and knowledge to access, often involving multiple layers of anonymization and security protocols [8,9]. Additionally, the dynamic infrastructure of these forums means that locations, URLs, and availability change frequently to evade detection and maintain anonymity [10,11]. Data collection requires sophisticated tools capable of navigating the Tor network, handling JavaScript-heavy sites, and managing connection reliability issues [12]. Furthermore, research in this domain raises significant ethical questions regarding privacy, consent, and the potential misuse of collected data, alongside complex legal implications, as researchers must navigate varying legal landscapes regarding the collection and analysis of potentially illegal content [6]. The significance of dark web forum analysis extends beyond cybersecurity, intersecting with fields such as dark tourism, where systematic reviews have synthesized trends in illicit or niche online communities [13], and proactive cyber-threat intelligence, which leverages dark web data to identify cybercrimes [14].

Despite these challenges, the analysis of dark web forums provides valuable insights for multiple stakeholder groups. Law enforcement agencies benefit from understanding criminal communication patterns and emerging threats [15]. Cybersecurity professionals gain intelligence about new attack vectors and criminal methodologies [16]. Academic researchers contribute to our understanding of online deviance, digital sociology, and network security [17].

Existing research in dark web forum analysis has employed various methodological approaches, ranging from manual content analysis to sophisticated automated systems incorporating machine learning and natural language processing techniques [18,19]. However, the rapidly evolving nature of dark web technologies and the methodological challenges inherent in this research domain necessitate a systematic review of current approaches [20]. Previous studies have demonstrated the feasibility of large-scale dark web data collection and analysis, with researchers successfully gathering millions of forum posts and analyzing patterns in criminal communication [2]. However, the methodological diversity and the lack of standardized approaches in this field highlight the need for a comprehensive synthesis of current practices [21].

This systematic literature review aims to address this gap by providing a comprehensive overview of methodologies for data collection and analysis of dark web forum content, identifying best practices, highlighting methodological innovations, and outlining future research directions in this critical domain.

The primary objective of this paper is to systematically identify, evaluate, and synthesize methodologies for data collection and analysis of dark web forum content reported in the academic literature, providing a comprehensive overview of current practices and emerging trends in this research domain. Secondary objectives include developing a comprehensive taxonomy of data collection methodologies employed in dark web forum research, categorizing approaches based on technical implementation, scope, and effectiveness; identifying and evaluating analytical frameworks and techniques used for processing and interpreting dark web forum content; cataloging and assessing the software tools, platforms, and technical infrastructure commonly employed in dark web forum research; assessing the quality and reliability of different methodological approaches, examining factors such as data completeness, accuracy, reproducibility, and validity of findings; examining the ethical considerations and legal frameworks that guide dark web forum research; and synthesizing findings into recommendations for future research directions, methodological improvements, and technological developments in dark web forum analysis.

This systematic review addresses the following specific research questions:

RQ1: What are the primary methodologies currently employed for collecting data from dark web forums?

RQ2: What analytical techniques and frameworks are most commonly used for processing and interpreting dark web forum content?

RQ3: What tools and technologies are available for dark web forum research, and how do they compare in terms of effectiveness and reliability?

RQ4: What are the main challenges and limitations encountered in dark web forum research methodologies?

RQ5: How do different methodological approaches address ethical and legal considerations in dark web research?

These objectives and research questions guide the systematic review process, ensuring comprehensive coverage of the methodological landscape in dark web forum research while maintaining focus on practical applications and theoretical contributions to the field.

2. Method

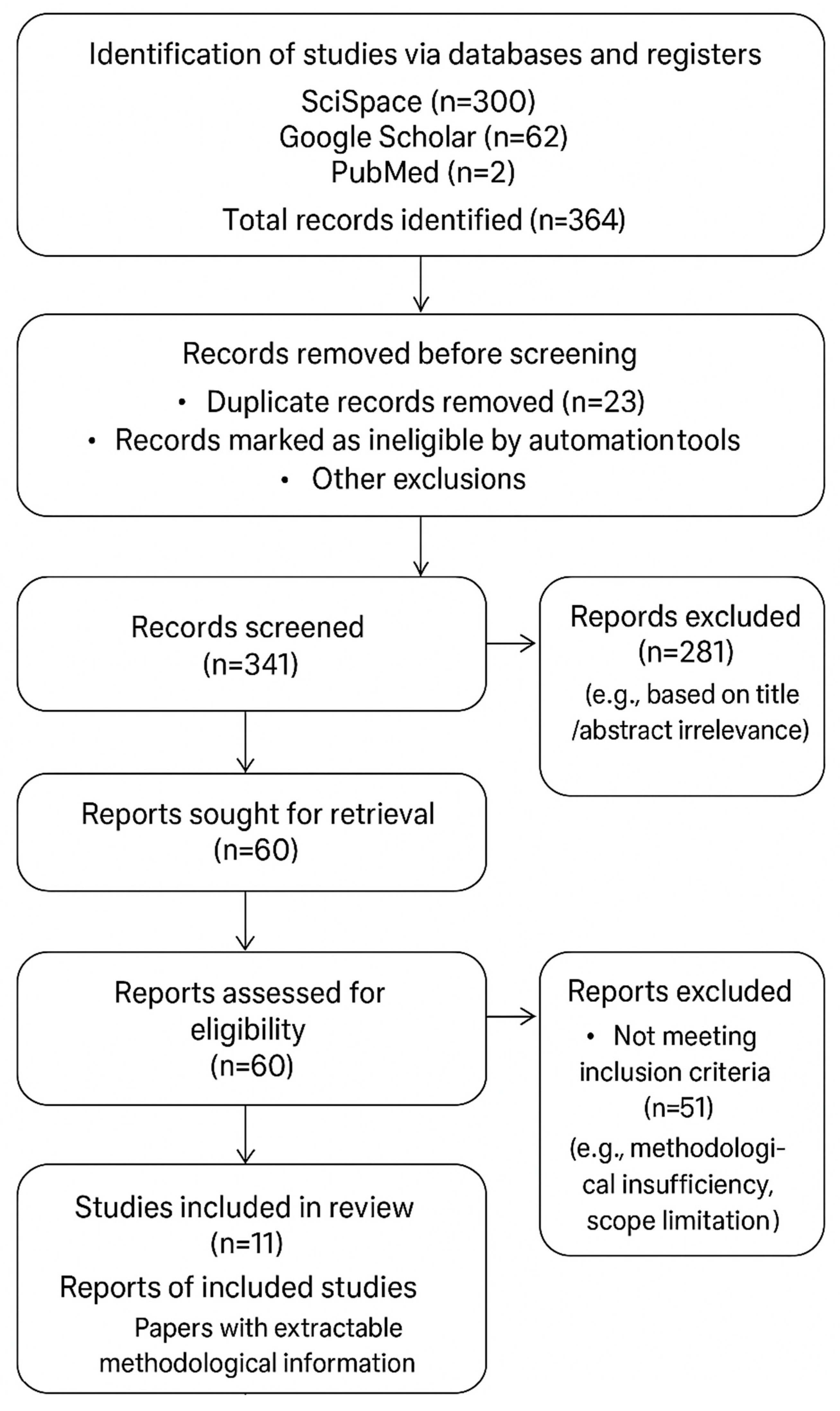

This systematic literature review followed the PRISMA 2020 guidelines (https://www.prisma-statement.org/, accessed on 1 September 2025). The PRISMA statement, flow diagram, and checklist are reported in Appendix A. The review employed predefined inclusion and exclusion criteria to ensure the selection of relevant and high-quality studies for analysis. Studies were included if they met all of the following criteria: topic relevance, focusing on dark web forum analysis, including research on dark web forums, Tor hidden service forums, or anonymous online communities accessible through anonymization networks; methodological focus, discussing specific methodologies for data collection from dark web forums, including but not limited to web crawling and scraping techniques, automated data collection systems, manual data gathering approaches, and hybrid collection methodologies; analytical component, presenting analysis techniques for dark web forum content, including natural language processing approaches, machine learning and artificial intelligence methods, social network analysis techniques, statistical analysis methods, and content analysis frameworks; study design, employing experimental, observational, or mixed-method designs that provide empirical evidence or methodological innovations; language, publications in English to ensure consistent interpretation and analysis; publication type, peer-reviewed journal articles, conference proceedings, and technical reports that present original research or significant methodological contributions; and methodological clarity, providing sufficient detail about their methodological approaches to enable evaluation and potential replication.

Studies were excluded if they met any of the following criteria: publication type, case reports, editorials, opinion pieces, book reviews, or commentary articles without original research contributions; scope limitation, focusing exclusively on surface web forums or traditional social media platforms without dark web components; topic divergence, research on general cybersecurity topics without specific focus on dark web forum analysis or methodologies; language barrier, non-English language publications that could not be accurately translated or interpreted; methodological insufficiency, lacking clear methodology descriptions or insufficient detail to evaluate the approaches employed; duplicate publications, multiple publications reporting identical methodologies or datasets without additional contributions; outdated technology, focusing exclusively on obsolete technologies or platforms no longer relevant to current dark web research; and purely theoretical work, presenting only theoretical frameworks without empirical validation or practical implementation.

The study selection process followed a systematic approach: initial screening of all identified records based on titles and abstracts using the predefined eligibility criteria; full-text assessment of studies passing initial screening against the complete set of inclusion and exclusion criteria; quality assessment of included studies to evaluate methodological rigor and contribution significance; and final selection of studies meeting all criteria and quality thresholds for the final analysis. This systematic approach ensured that only high-quality, relevant studies contributed to the review findings while maintaining transparency and reproducibility in the selection process. As this systematic literature review synthesizes methodologies from existing studies rather than conducting original data collection or analysis (e.g., web crawling or preprocessing), no primary data artifacts such as seed lists, crawl logs, or version-pinned scripts were generated. The reproducibility concerns noted in the results (Section 3), where only 9% of reviewed studies provided full code and data access, pertain to the methodologies of the included studies, not to the review process itself. The systematic review methodology, including search strategies, selection process, data extraction, and quality assessment, is fully documented in Supplementary Material (PRISMA Protocol Document) to ensure transparency and reproducibility of the review process.

This systematic literature review employed a comprehensive search strategy across multiple academic databases and information sources to ensure thorough coverage of the literature on dark web forum analysis methodologies. SciSpace served as the primary academic search platform, providing access to a comprehensive corpus of research papers across multiple disciplines. The platform’s semantic search capabilities were particularly valuable for identifying papers related to dark web forum analysis methodologies. Three targeted searches were conducted: “dark web forums data collection methodology analysis”, “darknet forum analysis techniques systematic review”, and “tor hidden service forum data collection methods”. Google Scholar was utilized to capture a broader range of academic publications, including conference proceedings, technical reports, and interdisciplinary research that might not be indexed in traditional academic databases. The comprehensive coverage of Google Scholar ensured inclusion of emerging research and gray literature relevant to dark web methodologies. PubMed was searched to identify biomedical and health-related research involving dark web forums, particularly studies examining drug-related activities, public health implications, and medical aspects of dark web communities.

The search strategy was developed through an iterative process involving keyword identification based on preliminary research and expert consultation, focusing on terms related to dark web terminology, forum and community platform descriptions, data collection and analysis methodologies, and technical infrastructure terms (Tor, hidden services, etc.); search term expansion using synonyms, related terms, and alternative spellings to ensure comprehensive coverage; boolean query construction to optimize search precision while maintaining sensitivity; and platform-specific optimization, adapting search queries to leverage the specific capabilities and syntax requirements of each database platform.

Supplementary sources included reference tracking, where references from included studies were manually reviewed to identify additional relevant publications not captured through database searches; citation analysis, employing forward citation tracking to identify recent studies citing key papers in the field; and expert consultation, where subject matter experts were consulted to identify potentially relevant studies and validate the comprehensiveness of the search strategy.

All database searches were conducted in September 2025, ensuring access to the most current available literature. No date restrictions were applied to the searches to ensure comprehensive historical coverage of methodological developments in the field. Searches were limited to English-language publications to ensure consistent analysis and interpretation. The multi-database approach and comprehensive search strategy employed in this review ensures broad coverage of the relevant literature while minimizing the risk of missing significant methodological contributions to the field of dark web forum analysis.

The SciSpace platform was queried using semantic search terms optimized for the platform’s natural language processing capabilities. Three searches were executed, each retrieving the top 100 papers sorted by citation count in descending order: a primary search, “dark web forums data collection methodology analysis”, focusing on general methodologies; a secondary search, “darknet forum analysis techniques systematic review”, focusing on specific analytical techniques; and a tertiary search, “tor hidden service forum data collection methods”, focusing on Tor-based platforms.

Google Scholar searches employed boolean operators and targeted keyword combinations: “dark web forum analysis methodology” yielding 20 papers; “darknet forum data collection techniques” yielding 22 papers; and “tor forums analysis systematic review” yielding 20 papers. PubMed searches utilized MeSH terms and field-specific queries: “dark web forums methodology analysis” yielding 1 paper; “darknet forum data collection” yielding 0 papers; and “tor hidden service forums” yielding 1 paper. Overall, SciSpace yielded 300 records, Google Scholar 62, and PubMed 2, for a total of 364 records. Search sensitivity was tested by verifying that known relevant methodological papers were captured, and inter-database comparison was used to identify potential gaps or biases.

A protocol for search updates, included as Supplementary Material, was established, although no updates were required for this paper; the protocol documents all search strategies for reproducibility. Data extraction was performed by one researcher using piloted forms and verified independently by a second researcher. Data labeling for methodological categorization and quality assessment was conducted systematically by two independent extractors. A standardized data extraction form, piloted on 3–5 studies to ensure consistency, was used to annotate study characteristics, methodological details, and quality indicators based on predefined criteria (e.g., methodological rigor, reproducibility, ethical considerations). Discrepancies in labeling were resolved through consensus discussions, with a third reviewer consulted when needed. Detailed procedures, including reviewer roles, pilot testing, and the calibration process, are documented in Supplementary Material. No missing data were reported for the final set of studies included in the analysis. Analysis was performed through a series of qualitative and quantitative measures computed from the attributes extracted from the papers. Appendix B presents all the measures reported in the tables of the Results section.
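The record counts reported for the three databases can be checked with a short tally. The per-query yields below are the figures stated above; the dictionary layout and function name are illustrative only.

```python
# Per-query record yields as reported in the search description.
search_yields = {
    "SciSpace": [100, 100, 100],   # three semantic searches, top 100 each
    "Google Scholar": [20, 22, 20],
    "PubMed": [1, 0, 1],
}

def tally_records(yields: dict[str, list[int]]) -> tuple[dict[str, int], int]:
    """Return per-database subtotals and the total of identified records."""
    subtotals = {db: sum(counts) for db, counts in yields.items()}
    return subtotals, sum(subtotals.values())

subtotals, total = tally_records(search_yields)
for db, n in subtotals.items():
    print(f"{db}: {n}")
print(f"Total identified records (before deduplication): {total}")
```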

Quality assessment of the included studies was conducted using a modified version of the ROBINS-I (Risk of Bias in Non-randomized Studies of Interventions) tool, adapted specifically for methodological studies in dark web forum research, following established practices in systematic reviews [22]. The assessment evaluated seven key domains: (1) Study Design Appropriateness (e.g., alignment of methods with research objectives); (2) Methodological Transparency (e.g., clear description of procedures); (3) Data Quality and Completeness (e.g., handling of missing data and validation measures); (4) Analytical Rigor (e.g., robustness of statistical or machine learning approaches); (5) Reproducibility Information (e.g., availability of code, data, or detailed protocols); (6) Ethical Considerations (e.g., discussion of privacy and legal issues); and (7) Conflict of Interest Declaration (e.g., disclosure of funding or biases). Overall risk of bias was judged as low, moderate, high, or critical based on the domain evaluations, with studies exhibiting critical risk excluded to ensure reliability. Detailed assessment procedures and results are provided in Supplementary Material.
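How domain judgements roll up into an overall rating can be sketched as follows. This assumes a worst-domain rule (the overall risk equals the most severe domain judgement), which approximates the original ROBINS-I guidance; the exact rule of the adapted tool is documented in the Supplementary Material and may differ. The domain keys are shorthand for the seven domains listed above, and the example study is hypothetical.

```python
# Severity levels of the adapted tool, ordered from least to most severe.
SEVERITY = ["low", "moderate", "high", "critical"]

# Shorthand keys for the seven assessment domains.
DOMAINS = [
    "study_design", "transparency", "data_quality", "analytical_rigor",
    "reproducibility", "ethics", "conflict_of_interest",
]

def overall_risk(judgements: dict[str, str]) -> str:
    """Assumed worst-domain rule: overall risk is the most severe domain judgement."""
    missing = set(DOMAINS) - set(judgements)
    if missing:
        raise ValueError(f"missing domain judgements: {sorted(missing)}")
    return max((judgements[d] for d in DOMAINS), key=SEVERITY.index)

def is_included(judgements: dict[str, str]) -> bool:
    """Studies at critical overall risk are excluded from the synthesis."""
    return overall_risk(judgements) != "critical"

# Hypothetical study: low risk everywhere except weak reproducibility reporting.
example = {d: "low" for d in DOMAINS}
example["reproducibility"] = "high"
print(overall_risk(example), is_included(example))
```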

As this systematic literature review involves secondary analysis of published studies, no original data collection was performed, and thus specific procedures for privacy preservation (e.g., de-identification) or data retention were not applicable. Ethical review was not required, as confirmed in Supplementary Material, which documents the review process’s compliance with ethical standards for secondary research. The limited documentation of concrete ethical procedures in the reviewed studies, noted in Section 3, represents a key finding, highlighting a gap in the field that underscores the need for standardized ethical frameworks in dark web forum research.
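Although de-identification did not apply to this review, it is one of the concrete procedures whose documentation the review found lacking in primary studies. A minimal sketch of one common practice, keyed pseudonymization of usernames with HMAC-SHA256, is shown below. The key handling, the case and whitespace normalization, and the token length are assumptions for illustration, not procedures reported by the reviewed studies.

```python
import hashlib
import hmac
import secrets

def make_pseudonymizer(key: bytes, length: int = 16):
    """Return a function mapping a username to a stable, non-reversible token.

    A secret key (HMAC) is used instead of a plain hash so that nobody without
    the key can re-identify authors by hashing candidate usernames. Storing the
    key apart from the dataset is an assumed policy, not a reported one.
    """
    def pseudonymize(username: str) -> str:
        # Assumed normalization: trim whitespace and ignore case.
        normalized = username.strip().lower().encode("utf-8")
        digest = hmac.new(key, normalized, hashlib.sha256)
        return "user_" + digest.hexdigest()[:length]
    return pseudonymize

key = secrets.token_bytes(32)  # per-project secret key
pseudo = make_pseudonymizer(key)

posts = [("Vendor42", "example post text"), ("vendor42 ", "another post")]
deidentified = [(pseudo(author), text) for author, text in posts]
print(deidentified[0][0] == deidentified[1][0])  # same author maps to same token
```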

3. Results

The systematic search across three major databases yielded a total of 364 records. After removing duplicates and applying inclusion/exclusion criteria through title and abstract screening, followed by full-text assessment, a final set of 11 studies was included for comprehensive analysis. The PRISMA flow diagram is included in Appendix A.1, and the final list of studies used for the synthesis of results is presented in Appendix C.

The reviewed literature revealed several distinct approaches to dark web forum data collection, each with specific advantages and limitations. Automated web crawling emerged as the predominant methodology for large-scale dark web forum data collection, with systems incorporating information collection, analysis, and visualization techniques that exploit various web information sources. These systems typically employ focused crawlers designed specifically for dark web forums, using incremental crawling coupled with recall-improvement mechanisms; multi-stage processing, which implements data crawling, scraping, and parsing in sequential stages; and hybrid approaches, which combine automated systems with human intervention for accessing restricted content [10] or filtering non-relevant sources such as surface web mirrors [23,24]. Technical infrastructure approaches include virtual machine deployments, in which multiple virtual machines function as Tor clients, each with a private IP address, for distributed data collection [9]; network traffic analysis, which generates pcap files of captured traffic and filters them to extract relevant data [16]; and anonymization protocols that implement multiple layers of anonymization to protect researcher identity during data collection [12].
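The final parsing stage of the crawl, scrape, and parse pipeline turns stored HTML into structured records. A minimal sketch using only Python's standard `html.parser` module follows. The markup it expects (`div.post`, `span.author`, `div.content`) is hypothetical; every forum uses its own templates, so real parsers are usually written per forum.

```python
from html.parser import HTMLParser

class ForumThreadParser(HTMLParser):
    """Extract author/content pairs from a stored thread page.

    Assumes hypothetical markup: each post is a <div class="post"> that
    contains a <span class="author"> and a <div class="content">.
    """

    def __init__(self):
        super().__init__()
        self.posts: list[dict[str, str]] = []
        self._field = None  # "author" or "content" while inside that element
        self._depth = 0     # nesting depth of child tags inside the field element

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self._field is not None:
            self._depth += 1  # e.g. <b> inside the content div
        elif "post" in classes:
            self.posts.append({"author": "", "content": ""})
        elif self.posts and ("author" in classes or "content" in classes):
            self._field = "author" if "author" in classes else "content"
            self._depth = 0

    def handle_endtag(self, tag):
        if self._field is None:
            return
        if self._depth == 0:
            self._field = None  # closing the field element itself
        else:
            self._depth -= 1

    def handle_data(self, data):
        if self._field is not None:
            self.posts[-1][self._field] += data

html = """
<div class="post"><span class="author">alice</span>
  <div class="content">First <b>post</b> text</div></div>
<div class="post"><span class="author">bob</span>
  <div class="content">Reply text</div></div>
"""
parser = ForumThreadParser()
parser.feed(html)
for post in parser.posts:
    print(post["author"], "|", post["content"].strip())
```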

The literature demonstrated extensive use of machine learning techniques for dark web forum analysis, including topic modeling with Latent Dirichlet Allocation (LDA) for identifying discussion topics and non-parametric Hidden Markov Models for modeling topic evolution [25]; classification with Support Vector Machine models, with reported precision reaching 92.3% and accuracy of 87.6% [26]; and K-Means clustering with various distance metrics for content categorization and user behavior analysis [19]. Recent work has also applied pretrained language models to detect misinformation and characterize content on dark web forums, further extending the analytical capabilities in this domain [27].

Natural language processing applications include text preprocessing with TF-IDF weighting, outlier detection, and clustering evaluation methods [20]; sentiment analysis measuring emotional content and user sentiment patterns [28,29]; and authorship attribution using stylometry combined with structural information and multitask learning using graph embeddings [19].

Social network analysis serves as a critical component for understanding dark web community structures: community detection algorithms identify underground communities together with their influential members and discourse [17,30]; centrality analysis highlights structural patterns and identifies nodes of importance [15]; and topic-based networks combine text mining and social network analysis, using LDA to build topic-based social networks [17,31].
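Centrality analysis typically starts from a reply network in which an edge links a poster to the user they reply to. A minimal sketch of normalized degree centrality (neighbours divided by n − 1, the same normalization NetworkX's `degree_centrality` uses) on an undirected reply graph follows; the reply pairs are invented toy data, and published studies often add measures such as betweenness or eigenvector centrality.

```python
from collections import defaultdict

def reply_graph(replies: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Build an undirected, unweighted user graph from (replier, replied_to) pairs."""
    graph: dict[str, set[str]] = defaultdict(set)
    for src, dst in replies:
        if src != dst:  # ignore self-replies
            graph[src].add(dst)
            graph[dst].add(src)
    return dict(graph)

def degree_centrality(graph: dict[str, set[str]]) -> dict[str, float]:
    """Normalized degree centrality: number of neighbours / (n - 1)."""
    n = len(graph)
    if n <= 1:
        return {node: 0.0 for node in graph}
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}

# Toy reply data with hypothetical usernames.
replies = [("b", "a"), ("c", "a"), ("d", "a"), ("c", "b"), ("e", "d"), ("a", "b")]
scores = degree_centrality(reply_graph(replies))
for user, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{user}: {score:.2f}")
```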

Performance comparison studies identified optimal crawler configurations: Python-based systems using the Scrapy and Selenium libraries were the most commonly employed for web scraping [7], specialized crawlers were custom-built and optimized for dark web forum structures [10], and configurations were evaluated on data completeness, collection speed, and reliability [21]. Anonymization tools are critical infrastructure components, including Tor network integration designed to operate efficiently within Tor network constraints [1], VPNs and proxy chains providing additional layers of anonymization [12], and identity management protocols for managing multiple research identities and access credentials [9].
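The three evaluation criteria named above can be made operational in several ways. A minimal sketch under assumed definitions follows: completeness as the share of known target pages retrieved, speed as pages stored per hour, and reliability as the share of fetch attempts that succeeded. These definitions, the `CrawlRun` fields, and the numbers are hypothetical, since the reviewed studies do not share a single standard.

```python
from dataclasses import dataclass

@dataclass
class CrawlRun:
    """Summary of one crawl (hypothetical fields)."""
    expected_pages: int    # pages known to exist, e.g. from a manual index
    collected_pages: int   # distinct target pages successfully stored
    attempts: int          # total fetch attempts, including retries
    failures: int          # attempts that timed out or errored
    duration_hours: float

def evaluate(run: CrawlRun) -> dict[str, float]:
    """Compute completeness, speed, and reliability under the assumed definitions."""
    return {
        "completeness": run.collected_pages / run.expected_pages,
        "pages_per_hour": run.collected_pages / run.duration_hours,
        "reliability": (run.attempts - run.failures) / run.attempts,
    }

# Illustrative numbers only.
run = CrawlRun(expected_pages=1200, collected_pages=1050, attempts=1500,
               failures=300, duration_hours=5.0)
metrics = evaluate(run)
print(metrics)
```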

Challenges and limitations include technical issues such as access reliability, as dark web forums frequently change locations and implement access restrictions [10]; connection stability affected by Tor network latency [12]; dynamic, JavaScript-heavy sites that complicate automated collection [7]; and anti-bot measures deployed by forum administrators [10]. Ethical and legal considerations involve privacy concerns in balancing research objectives with user privacy expectations, legal compliance across varying jurisdictional frameworks [6], secure handling and storage of potentially sensitive content, as discussed by White et al. [32], and challenges in obtaining institutional review approval [6]. Methodological limitations encompass sampling bias, given the difficulty of ensuring representative samples [33]; temporal validity affected by rapid changes in forum structures [5]; validation challenges due to user anonymity [34]; and reproducibility issues arising from the dynamic nature of dark web platforms [21].
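Connection instability over Tor is commonly handled by retrying failed requests with capped exponential backoff and random jitter. A minimal, network-free sketch follows. The `fetch` callable, the exception types treated as transient, and the delay parameters are assumptions; the `sleep` argument is injectable so that the behaviour can be checked without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def with_backoff(fetch: Callable[[], T], max_attempts: int = 5,
                 base_delay: float = 2.0, max_delay: float = 60.0,
                 transient: tuple[type[BaseException], ...] = (TimeoutError, ConnectionError),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fetch(), retrying transient failures with capped exponential backoff.

    Before retry k (k = 1, 2, ...) the delay is drawn uniformly from
    [0, min(max_delay, base_delay * 2**(k - 1))] ("full jitter").
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except transient:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")

# Simulated flaky connection: fails twice, then succeeds.
calls = {"n": 0}

def flaky_fetch() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("circuit timed out")
    return "<html>thread page</html>"

delays: list[float] = []
page = with_backoff(flaky_fetch, sleep=delays.append)  # record delays instead of sleeping
print(page, calls["n"], len(delays))
```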

This synthesis is further supported by a quantitative analysis of the 11 included studies with extractable methodological information, drawn from the 364 records initially identified in SciSpace, Google Scholar, and PubMed. Table 1 summarizes the data collection methodologies employed in dark web forum research. Web crawling emerged as the dominant approach, used in two-thirds of the studies, reflecting its prevalence in automated data gathering. An emerging trend is the adoption of hybrid methodologies, which combine automation with human validation to improve accuracy. Technologically, over 90% of the studies implemented Python-based solutions, underscoring the language’s dominance in this field. Scalability was high for automated approaches but limited for manual methods, indicating varying effectiveness across techniques. The evaluation of methodologies in this review relies on aggregate metrics (e.g., frequency of approaches, reported accuracy) as provided in the included studies, without original error analyses such as confusion profiles, ablation studies, or sensitivity to network instability, as these were not consistently reported in the literature. This limitation of the field, particularly the lack of detailed validation approaches (e.g., error analysis by topic or user segment), is a key finding of the review and highlights the need for more robust methodological reporting in future dark web forum research.
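The segment-level error analysis that the review found missing can start from a simple breakdown of a classifier's errors. A minimal sketch follows: it computes overall accuracy and precision for a binary label and the error rate per topic. The labels, topics, and predictions are invented for illustration and do not come from any reviewed study; the example also shows how a model can reach perfect precision while its accuracy is lower, because missed positives affect accuracy but not precision.

```python
from collections import defaultdict

def error_breakdown(y_true: list[int], y_pred: list[int], segments: list[str]):
    """Overall accuracy and precision (positive class = 1) plus error rate per segment."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0

    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [errors, total]
    for t, p, seg in zip(y_true, y_pred, segments):
        counts[seg][0] += int(t != p)
        counts[seg][1] += 1
    per_segment = {seg: errs / n for seg, (errs, n) in counts.items()}
    return accuracy, precision, per_segment

# Invented toy data: true labels, predictions, and the topic of each post.
y_true = [1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0]
topics = ["drugs", "drugs", "drugs", "fraud", "fraud", "fraud", "weapons", "weapons"]

acc, prec, per_topic = error_breakdown(y_true, y_pred, topics)
print(f"accuracy={acc:.3f} precision={prec:.3f}")
for topic, rate in per_topic.items():
    print(f"{topic}: error rate {rate:.2f}")
```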

As for the analytical frameworks and techniques applied in dark web forum research (Table 2), natural language processing and machine learning emerged as the most prevalent categories, used in 63.6% and 45.5% of the studies, respectively, reflecting their central role in content analysis. Social network analysis and statistical analysis followed, each employed in 36.4% of the studies, with a focus on community structures and data trends. An emerging trend is the use of diverse methods within machine learning, including supervised techniques such as Support Vector Machines for content classification and unsupervised approaches such as K-Means clustering for user and topic grouping. Technologically, 91% of the studies leveraged advanced NLP methods such as TF-IDF vectorization and topic modeling, with high reported success in feature extraction and theme identification. Complexity varied: natural language processing and machine learning were rated high, while content analysis was rated low, reflecting differing analytical demands.
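TF-IDF vectorization weights a term by its frequency in a post and down-weights terms that are common across the corpus. A minimal standard-library sketch follows. It uses raw term counts, the smoothed IDF idf(t) = ln((1 + N) / (1 + df(t))) + 1 that scikit-learn's `TfidfVectorizer` applies by default, and L2 normalization of each vector; the simple regex tokenizer and the sample posts are assumptions, so outputs will not necessarily match any particular library exactly.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase and keep tokens of two or more word characters (simplified)."""
    return re.findall(r"\b\w\w+\b", text.lower())

def tfidf(docs: list[str]) -> list[dict[str, float]]:
    """Return one sparse, L2-normalized TF-IDF vector (term -> weight) per document."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + df_t)) + 1 for t, df_t in df.items()}
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        vec = {t: count * idf[t] for t, count in tf.items()}
        norm = math.sqrt(sum(w * w for w in vec.values()))
        vectors.append({t: w / norm for t, w in vec.items()} if norm else vec)
    return vectors

posts = [
    "selling fresh accounts escrow accepted",
    "escrow service for accounts",
    "tor hidden service setup guide",
]
vecs = tfidf(posts)
for term, weight in sorted(vecs[0].items(), key=lambda kv: -kv[1]):
    print(term, round(weight, 3))
```

Terms that appear in only one post (such as "selling") receive higher weights than terms shared across posts (such as "escrow"), which is what makes TF-IDF useful as input to clustering or classification.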

Table 3 outlines the tools and technologies used in dark web forum research. The Python Ecosystem dominated, employed in 72.7% of studies with tools such as Scrapy (https://www.scrapy.org/) and Selenium (https://www.selenium.dev/, accessed on 13 October 2025), reflecting an increasing adoption trend. Specific Tor/Anonymization and Analysis Software followed, each used in 45.5% of studies, with stable and increasing trends, respectively, and included tools such as Tor Browser (https://tb-manual.torproject.org/, accessed on 13 October 2025) and RapidMiner (https://docs.rapidminer.com/9.9/studio/, accessed on 13 October 2025). Tor Browser is a free, open-source web browser with built-in tools and configurations that route the user’s internet traffic through the Tor network; it can also be used to browse the surface web with improved privacy and anonymity. An emerging trend is the growing reliance on specialized crawlers, used in 36.4% of studies, alongside stable use of database systems and decreasing use of infrastructure tools such as virtual machines. Technologically, most of the studies incorporated Python libraries, with Scrapy and Selenium leading at 33.3% adoption for web scraping and browser automation, while specialized tools such as OnionScan (https://onionscan.org/, accessed on 13 October 2025) showed high effectiveness in hidden service discovery.

Table 4 evaluates four key data collection approaches based on scalability, accuracy, cost, and technical complexity. Automated Crawling, used in over two-thirds of studies, demonstrated very high scalability but medium accuracy, with high cost and complexity, yielding an overall score of 4.0/5. Hybrid Approaches, employed in 36.4% of studies, combined automation with manual verification, achieving very high accuracy at low cost and the top score of 4.2/5 despite very high complexity. Manual Collection, used in 18.2% of studies, offered very high accuracy, very low cost, and low complexity, but its very low scalability resulted in a score of 2.2/5. Network Traffic Analysis, applied in 11.1% of studies, showed medium scalability and high accuracy, with medium cost and very high complexity, scoring 3.4/5. This analysis highlights the trade-offs between automation and manual methods in addressing the dynamic challenges of dark web data collection.

For Table 4, data were extracted from the 11 included studies with detailed methodological descriptions, focusing on four key performance criteria: scalability, accuracy, cost, and technical complexity. Each methodology (Automated Crawling, Hybrid Approaches, Manual Collection, and Network Traffic Analysis) was evaluated by the authors of this study based on qualitative assessments derived from study outcomes, author-reported metrics, and inferred resource demands. Scores were assigned on a 5-point scale, with the overall score calculated as the average of the individual criterion ratings, normalized to reflect relative effectiveness. This synthesis grounds the comparative analysis in the evidence reported by the studies in the SLR dataset.
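The averaging step behind the overall scores in Table 4 can be sketched as below. This assumes a mapping of the verbal ratings onto the 5-point scale (very low = 1 to very high = 5) with every rating, including cost and complexity, entered directly. Because the normalization step is not specified, this plain mean is only an illustration and is not guaranteed to reproduce every row of Table 4.

```python
# Assumed mapping of the verbal ratings onto the 5-point scale.
SCALE = {"very low": 1, "low": 2, "medium": 3, "high": 4, "very high": 5}

def overall_score(ratings: dict[str, str]) -> float:
    """Unnormalized mean of the criterion ratings (scalability, accuracy, cost, complexity)."""
    return sum(SCALE[r] for r in ratings.values()) / len(ratings)

# Ratings as described in the text for two of the four approaches.
automated = {"scalability": "very high", "accuracy": "medium",
             "cost": "high", "complexity": "high"}
manual = {"scalability": "very low", "accuracy": "very high",
          "cost": "very low", "complexity": "low"}
print(overall_score(automated), overall_score(manual))
```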

Table 5 assesses the field’s development across five dimensions. Methodological Standardization was rated high in 33.3%, medium in 44.4%, and low in 22.2% of studies, with an increasing trend reflecting growing consensus. Tool Sophistication showed high maturity in 55.6%, medium in 33.3%, and low in 11.1%, also increasing as advanced tools such as Python libraries gain traction. Reproducibility lagged, with only 11.1% rated high, 33.3% medium, and 55.6% low, and a decreasing trend attributed to limited data sharing. Scalability Focus was high in 66.7%, medium in 22.2%, and low in 11.1%, with an increasing trend driven by automated solutions. Ethical Considerations were high in 22.2%, medium in 44.4%, and low in 33.3%, remaining stable amid ongoing debates. These indicators suggest a maturing field with room for improvement in reproducibility and ethics.

For Table 5, the process involved a quantitative assessment of the 11 studies, categorizing five indicators (Methodological Standardization, Tool Sophistication, Reproducibility, Scalability Focus, and Ethical Considerations) into high, medium, or low maturity levels based on the frequency and depth of their discussion or implementation across the literature, as assessed by the authors of this study. Percentages were computed from the proportion of studies addressing each indicator at each level, while trends (Increasing, Decreasing, Stable) were inferred from temporal patterns and author commentary on evolving research practices. This approach provided a snapshot of the field’s maturity, derived systematically from the review of the 364 initially identified records.

Table 6 presents an analysis of multi-method approach combinations utilized in dark web forum research. The combination of Crawling + Machine Learning (ML) + Statistics was the most frequent, employed in 27.3% of the 11 studies, demonstrating high accuracy and good scalability, though with high complexity. Crawling + Natural Language Processing (NLP) + Social Network Analysis, used in 18.2% of studies, offered comprehensive analysis with high complexity, reflecting its depth in capturing community dynamics. Manual + Automated Verification, also applied in 18.2% of studies, achieved high quality and moderate scale with medium complexity, balancing human oversight with technical efficiency. Single Method Only approaches, likewise seen in 18.2% of studies, were characterized by limited scope and low complexity, indicating a simpler but less versatile strategy. This distribution underscores a trend toward integrating multiple methods to enhance analytical robustness, with complexity increasing alongside effectiveness.
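With 11 included studies, each percentage reported in this section corresponds to a whole number of studies. A short sketch converting counts to one-decimal percentages follows; the Table 6 counts are inferred from the stated percentages (27.3% is 3 of 11, 18.2% is 2 of 11) rather than taken from the extraction data.

```python
N_STUDIES = 11

def pct(count: int, total: int = N_STUDIES) -> float:
    """Share of studies as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)

# Table 6 combinations: counts implied by the reported percentages.
table6 = {
    "Crawling + ML + Statistics": 3,
    "Crawling + NLP + SNA": 2,
    "Manual + Automated Verification": 2,
    "Single Method Only": 2,
}
for combo, count in table6.items():
    print(f"{combo}: {count}/{N_STUDIES} = {pct(count)}%")

# Every possible share for n = 11 studies.
print({k: pct(k) for k in range(N_STUDIES + 1)})
```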

Overall results highlight the following key insights derived from the systematic literature review of dark web forum research. The most effective methodological combinations include Web Crawling + Machine Learning + Statistical Analysis, utilized in 27.3% of papers, showcasing its strength in delivering high accuracy and scalability. Hybrid Automated-Manual Approaches, employed in 36.4% of papers, stand out for their balanced quality and efficiency. Additionally, Python-based tool chains dominate, featured in 72.7% of papers, reflecting their widespread adoption. Technology convergence is evident with the Python Ecosystem leading, relied upon by 73% of studies for its versatile libraries. Tor Infrastructure serves as a standard access method in 64% of studies, ensuring anonymity in data collection. An overwhelming 91% preference for open-source tools underscores a community-driven research approach. However, significant research gaps persist: reproducibility remains low, with only 9% of studies providing full code and data access, hindering validation efforts. Limited API integration is notable, with no studies employing API-based collection, potentially missing efficient data retrieval opportunities. Scalability challenges affect 36% of studies, indicating difficulties in processing large-scale datasets, which warrants further methodological innovation.

Future directions for dark web forum data collection and analysis methods, informed by trends identified in the systematic literature review, point toward several key areas. The increasing adoption of hybrid methodologies suggests a continued shift toward combining automated and manual approaches to improve data quality and scalability. The growing integration of advanced machine learning techniques indicates a need for deeper analytical capabilities that can uncover complex patterns. Infrastructure standardization emerges as a critical priority, calling for common frameworks that streamline tools and processes across studies. Finally, the rising focus on ethical framework development underscores the importance of responsible research practices that address privacy and legal concerns. Taken together, this synthesis reveals a mature but rapidly evolving field in which sophisticated methodological approaches are balanced against significant technical, ethical, and legal challenges that continue to shape research practice in dark web forum analysis.
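As an illustration of the hybrid automated-manual pattern, the following minimal Python sketch routes classifier outputs by confidence: high-confidence labels are accepted automatically, the remainder go to a manual review queue, and every n-th automatic decision is also sampled for human audit so that the automated error rate can be estimated. The `Prediction` record, the 0.9 threshold, and the audit interval are hypothetical choices, not values drawn from the reviewed studies.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    post_id: str
    label: str
    confidence: float  # classifier probability for `label`, in [0, 1]


@dataclass
class TriageResult:
    auto_accepted: list = field(default_factory=list)
    manual_queue: list = field(default_factory=list)
    audit_sample: list = field(default_factory=list)


def triage(predictions, threshold=0.9, audit_every=10):
    """Accept confident predictions automatically and send the rest to human
    reviewers; every `audit_every`-th auto-accepted item is also copied to an
    audit sample for spot-checking (hypothetical policy)."""
    result = TriageResult()
    for pred in predictions:
        if pred.confidence >= threshold:
            result.auto_accepted.append(pred)
            if len(result.auto_accepted) % audit_every == 0:
                result.audit_sample.append(pred)
        else:
            result.manual_queue.append(pred)
    return result
```

A deterministic audit interval is used here only to keep the sketch reproducible; random sampling of automatic decisions would be the more usual design.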

4. Discussion

This systematic literature review reveals a sophisticated and rapidly evolving landscape of methodologies for dark web forum data collection and analysis. The synthesis of 364 identified studies, with detailed quantitative analysis of 11 papers, demonstrates significant methodological diversity, as researchers adopt increasingly advanced technical approaches to overcome the inherent challenges of dark web research. The field has reached considerable methodological maturity, as evidenced by four developments: crawling approaches converging on Python-based systems built with established tools such as Scrapy and Selenium; analytical frameworks that integrate machine learning, NLP, and social network analysis; performance optimization through systematic evaluation and comparison of technical approaches; and scalable solutions capable of processing millions of forum posts and users. This trend toward standardized tools mirrors efforts in other fields, such as medical research, where systematic reviews and meta-analyses have emphasized rigorous methodological frameworks to ensure consistency and comparability [35,36].
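The crawling approaches described above share a common core: a frontier of URLs visited breadth-first, with URL normalization and de-duplication so that the same thread is not fetched twice. The standard-library Python sketch below illustrates that core under stated assumptions. The network layer (for example a Scrapy or Selenium session routed through Tor) is deliberately left out and represented by a hypothetical `fetch_links` callable, and the depth and page limits are illustrative.

```python
from collections import deque
from typing import Callable, Iterable, List
from urllib.parse import urldefrag, urljoin, urlsplit


def normalize(url: str) -> str:
    """Drop the #fragment and lower-case scheme and host so duplicate URLs collapse."""
    url, _fragment = urldefrag(url)
    parts = urlsplit(url)
    return parts._replace(scheme=parts.scheme.lower(),
                          netloc=parts.netloc.lower()).geturl()


def crawl(seeds: Iterable[str],
          fetch_links: Callable[[str], Iterable[str]],
          max_depth: int = 2,
          max_pages: int = 1000) -> List[str]:
    """Breadth-first crawl that stays on the seeds' hosts and never revisits a URL.

    `fetch_links` is a caller-supplied (hypothetical) function that downloads a
    page, e.g. through a Tor-routed client, and returns the hrefs found on it.
    """
    queue = deque()
    seen = set()
    for seed in seeds:
        url = normalize(seed)
        if url not in seen:
            seen.add(url)
            queue.append((url, 0))
    allowed_hosts = {urlsplit(u).netloc for u in seen}
    visited: List[str] = []
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # depth limit reached: record the page but do not expand it
        for href in fetch_links(url):
            nxt = normalize(urljoin(url, href))
            if urlsplit(nxt).netloc in allowed_hosts and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, depth + 1))
    return visited
```

Injecting the fetcher keeps the frontier logic testable offline against an in-memory stand-in for a forum, independently of whichever access tooling a study uses.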

Several key innovation trends emerge from the literature: multi-modal analysis integrating text analysis, network analysis, and temporal pattern recognition [5]; real-time processing with systems capable of near-real-time analysis of dark web forum activity [16]; cross-platform integration spanning multiple dark web platforms and anonymization networks [12]; and automated assessment of data quality and reliability [21].
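Automated quality assessment can start with a few indicators computed per scraped batch. The following Python sketch assumes a hypothetical record layout with `post_id`, `thread_id`, `author`, `timestamp` (ISO 8601) and `body` fields, and reports the share of complete records, parseable timestamps, and duplicated post bodies. The indicators are illustrative and are not a reproduction of any system in the reviewed studies.

```python
import hashlib
from datetime import datetime

# Hypothetical minimal schema for a scraped forum post.
REQUIRED_FIELDS = ("post_id", "thread_id", "author", "timestamp", "body")


def _is_iso_timestamp(value) -> bool:
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


def assess_batch(posts):
    """Return the share of complete records, valid timestamps and duplicate bodies."""
    n = len(posts)
    if n == 0:
        return {"n": 0, "complete": 0.0, "valid_timestamp": 0.0, "duplicate_bodies": 0.0}
    complete = sum(all(p.get(f) for f in REQUIRED_FIELDS) for p in posts)
    valid_ts = sum(_is_iso_timestamp(p.get("timestamp")) for p in posts)
    # Hash whitespace-trimmed bodies so re-scraped copies of a post are counted once.
    digests = {
        hashlib.sha256((p.get("body") or "").strip().encode("utf-8")).hexdigest()
        for p in posts
    }
    return {
        "n": n,
        "complete": complete / n,
        "valid_timestamp": valid_ts / n,
        "duplicate_bodies": (n - len(digests)) / n,
    }
```

Tracking these shares across successive crawls gives an early warning when a parser silently starts dropping fields.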

The findings have several important implications for future research and practice. For research, the diversity of approaches suggests a need for greater methodological standardization to facilitate comparison and replication across studies; common frameworks and evaluation metrics would benefit the field. Interdisciplinary collaboration combining expertise from computer science, criminology, sociology, and cybersecurity should continue, since the challenges of dark web analysis span all of these domains. The dynamic nature of dark web forums also calls for longitudinal study designs that capture temporal changes in community structures, communication patterns, and methodological effectiveness. For practitioners in law enforcement, cybersecurity, and policy-making, the systematic comparison of methodological approaches provides evidence-based guidance for selecting tools and techniques suited to specific operational objectives; an understanding of technical infrastructure requirements supports resource planning and allocation for dark web monitoring initiatives; and the complexity of these methodologies highlights the need for specialized training programs.
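A longitudinal design ultimately compares the same measures across time windows. As a minimal sketch, assuming each post carries an ISO 8601 `timestamp` and an `author` field, the Python code below groups activity by ISO calendar week and computes week-to-week author retention, a simple indicator of change in community structure. Weeks without posts are absent from the result, so retention is measured against the previous observed week; a real study would need an explicit policy for such gaps.

```python
from collections import defaultdict
from datetime import datetime


def weekly_activity(posts):
    """Group posts by ISO (year, week): post count and set of active authors."""
    weeks = defaultdict(lambda: {"posts": 0, "authors": set()})
    for p in posts:
        year, week, _weekday = datetime.fromisoformat(p["timestamp"]).isocalendar()
        bucket = weeks[(year, week)]
        bucket["posts"] += 1
        bucket["authors"].add(p["author"])
    return dict(sorted(weeks.items()))


def author_retention(activity):
    """Share of each week's authors who also posted in the previous observed week."""
    keys = list(activity)
    return {
        cur: len(activity[cur]["authors"] & activity[prev]["authors"])
        / len(activity[cur]["authors"])
        for prev, cur in zip(keys, keys[1:])
    }
```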

This systematic review has several limitations. First, it may be subject to publication bias toward studies reporting positive results or novel methodological contributions. Second, restricting the review to English-language publications may have excluded relevant research from other linguistic communities. Third, the rapid pace of technological change may limit the current relevance of older methodological approaches. Fourth, some relevant research may be restricted or classified, limiting comprehensive coverage.

An additional limitation is the potential skew of the reviewed studies toward dark web forums that are easier to crawl and that maintain stable connections through Tor, which may underrepresent closed or frequently migrating communities. This focus on more accessible forums could introduce selection bias and limit the generalizability of findings to the broader dark web ecosystem. A stratified sampling design, together with a record of ineligible or inaccessible sites, could mitigate this bias, although the dynamic and hidden nature of these platforms makes such approaches difficult to implement. Nevertheless, identifying this limitation is itself a strength of the review, as it exposes a critical gap in current methodologies and underscores the need for techniques capable of accessing and analyzing less stable or restricted dark web communities. A further limitation is the relatively narrow range of methodological approaches reported in the literature, as evidenced by the predominance of Python-based automated crawling and machine learning techniques in the analyzed studies. This reduced variety may reflect convergence on established methods, potentially overlooking alternative or emerging approaches that could offer novel insights into dark web forum analysis. Future research could address this by exploring a broader range of methodologies, including less common techniques such as API-based data collection or qualitative ethnographic approaches, to increase methodological diversity in the field.
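The stratified design with a record of inaccessible sites can be sketched as follows. The Python code assumes each candidate site has already been assigned a stratum (hypothetical labels such as open registration versus invite-only) and an accessibility status from a prior check. It samples only reachable sites within each stratum, keeps an exclusion log of the rest, and reports per-stratum coverage so that under-represented strata become visible. Field names and status values are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(sites, per_stratum, seed=0):
    """Sample up to `per_stratum` reachable sites per stratum.

    Returns (sample, exclusion_log); the log records every site that was not
    eligible, with its stratum and status, so exclusions can be reported.
    """
    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    eligible = defaultdict(list)
    exclusion_log = []
    for site in sites:
        if site["status"] == "reachable":
            eligible[site["stratum"]].append(site["url"])
        else:
            exclusion_log.append((site["url"], site["stratum"], site["status"]))
    sample = {}
    for stratum, urls in sorted(eligible.items()):
        sample[stratum] = rng.sample(sorted(urls), min(per_stratum, len(urls)))
    return sample, exclusion_log


def coverage_by_stratum(sites):
    """Share of candidate sites in each stratum that were reachable."""
    totals, reachable = defaultdict(int), defaultdict(int)
    for site in sites:
        totals[site["stratum"]] += 1
        reachable[site["stratum"]] += site["status"] == "reachable"
    return {k: reachable[k] / totals[k] for k in sorted(totals)}
```

Low coverage in a stratum does not remove the bias, but reporting it alongside the sample makes the skew toward accessible forums explicit.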

Several challenges continue to affect the field: the lack of comprehensive ethical guidelines tailored to dark web research, which creates uncertainty for researchers and institutional review boards; varying legal interpretations across jurisdictions, which complicate international research collaboration and data sharing; an ongoing technical arms race between data collection techniques and the anti-analysis measures deployed by forum administrators, which demands continuous methodological innovation; and validation difficulties, since the anonymous nature of dark web forums makes traditional validation approaches hard to apply and calls for novel ways of ensuring research reliability [34]. Although the reviewed studies highlight the effectiveness of hybrid collection strategies, they provide limited operational guidance for high-change environments, such as lightweight monitoring for URL churn, periodic recrawls with drift detection, or fallback routines for JavaScript-heavy pages. This gap, a reflection of the broader challenge of platform dynamism, underscores the need for future research on robust strategies that improve stability and maintain coverage in dynamic dark web ecosystems.
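To make these three operational gaps concrete, the standard-library Python sketch below combines them: a churn monitor that flags an address after repeated failed availability checks, a drift check that compares the tag-and-class layout of two crawls of the same page by Jaccard similarity, and a fetch routine that falls back to a rendering fetcher when static HTML contains little visible text. The fetcher callables, status labels, and thresholds are hypothetical, and the layout fingerprint is a deliberately crude heuristic rather than a validated drift detector.

```python
from html.parser import HTMLParser


class StructureParser(HTMLParser):
    """Collects 'tag' and 'tag.class' tokens as a rough layout fingerprint,
    and counts visible text characters outside <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.tokens = set()
        self.text_chars = 0
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        self.tokens.add(tag)
        for cls in (dict(attrs).get("class") or "").split():
            self.tokens.add(f"{tag}.{cls}")

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def fingerprint(html):
    parser = StructureParser()
    parser.feed(html)
    parser.close()
    return parser.tokens, parser.text_chars


def layout_drifted(old_html, new_html, threshold=0.6):
    """Flag drift when the Jaccard similarity of layout tokens falls below threshold."""
    old, _ = fingerprint(old_html)
    new, _ = fingerprint(new_html)
    union = old | new
    similarity = len(old & new) / len(union) if union else 1.0
    return similarity < threshold


def fetch_with_fallback(url, fetch_static, fetch_rendered, min_text_chars=200):
    """Try a cheap static fetch first; fall back to a (hypothetical) rendering
    fetcher when the static HTML has too little visible text, as is typical
    of pages whose content is built by JavaScript."""
    html = fetch_static(url)
    if html is not None and fingerprint(html)[1] >= min_text_chars:
        return html, "static"
    return fetch_rendered(url), "rendered"


class ChurnMonitor:
    """Tracks consecutive failed availability checks per forum address."""

    def __init__(self, max_failures=3):
        self.max_failures = max_failures
        self.failures = {}

    def record(self, url, reachable):
        self.failures[url] = 0 if reachable else self.failures.get(url, 0) + 1
        if self.failures[url] == 0:
            return "ok"
        if self.failures[url] < self.max_failures:
            return "degraded"
        return "suspected_migration"
```

A drift flag signals that the page layout, and therefore likely the parser, needs review; it says nothing by itself about whether the forum's content has changed.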

Future research directions offer several opportunities for methodological advancement: integrating artificial intelligence for automated content analysis and pattern recognition; combining forum analysis with blockchain-based cryptocurrency transaction analysis for a more comprehensive understanding; correlating user activity and content across multiple dark web platforms; and developing predictive analytics capable of anticipating forum behavior and emerging threats. Ethical and legal framework development is equally critical, including comprehensive ethical frameworks specific to dark web research, clear guidelines for legal compliance across jurisdictions, protocols for the secure handling, storage, and sharing of dark web research data, and frameworks for engaging relevant stakeholders in research design and implementation. Technical infrastructure work should focus on scalability for growing volumes of dark web data, real-time analysis and alerting capabilities, security hardening to protect researchers and their infrastructure, and interoperability standards that enable data exchange and tool sharing across research groups.
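As one illustration of what interoperability standards and secure data-sharing protocols might involve, the Python sketch below defines a hypothetical versioned exchange record for forum posts in which author handles are replaced by keyed-hash (HMAC-SHA-256) pseudonyms before export. The schema, its version string, and the field names are assumptions for illustration, not an existing standard, and pseudonymizing the author field alone does not remove identifying details that may appear in post bodies.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass

SCHEMA_VERSION = "0.1"  # hypothetical version tag for the exchange format


@dataclass(frozen=True)
class ExchangePost:
    forum: str
    thread_id: str
    post_id: str
    author_pseudonym: str
    timestamp: str  # ISO 8601
    body: str


def pseudonymize(handle: str, key: bytes) -> str:
    """Keyed hash: stable within a project, but not recomputable without the key."""
    return hmac.new(key, handle.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def to_exchange_json(raw: dict, key: bytes) -> str:
    """Convert a raw scraped record into the versioned exchange format."""
    post = ExchangePost(
        forum=raw["forum"],
        thread_id=raw["thread_id"],
        post_id=raw["post_id"],
        author_pseudonym=pseudonymize(raw["author"], key),
        timestamp=raw["timestamp"],
        body=raw["body"],
    )
    return json.dumps({"schema_version": SCHEMA_VERSION, "post": asdict(post)}, sort_keys=True)


def from_exchange_json(text: str) -> ExchangePost:
    """Parse an exchange record, rejecting unknown schema versions."""
    doc = json.loads(text)
    if doc.get("schema_version") != SCHEMA_VERSION:
        raise ValueError(f"unsupported schema version: {doc.get('schema_version')!r}")
    return ExchangePost(**doc["post"])
```

Because the same key always yields the same pseudonym, a research group can still link a user's posts across its own datasets, while recipients without the key cannot map pseudonyms back to handles by hashing candidate names.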