The Role of Graph Neural Networks, Transformers, and Reinforcement Learning in Network Threat Detection: A Systematic Literature Review

Abstract

1. Introduction

1.1. Intrusion Detection Systems

1.1.1. Signature-Based IDS

1.1.2. Anomaly-Based IDS

1.1.3. Limitations of Existing AIDS

- Generating high false-positive rates: AIDS tends to produce more false positives than SIDS. This is a major challenge because it leads to model inefficiency, alert fatigue, and unnecessary human intervention, so it continues to require research attention.

- Inability to capture complex spatial relationships and inherent graph structures related to network threats: Traditional machine learning models treat network traffic as flat, sequential data, which makes it difficult to capture the complex relationships and interdependencies between network nodes and their communications. Such spatial, graph-structured information is crucial for identifying the unusual relational patterns associated with threats that can lead to network attacks, including insider threats [6].

- Limitations in handling long-term dependencies in network traffic: Subtle threats that develop over long periods are a significant challenge for current anomaly detection models, such as those based on RNNs, because of problems like vanishing gradients [7]. Yet identifying such threats is increasingly important in the current network security landscape.

- Adversarial robustness: Current threat detection methods often lack resilience against adversarial attacks. This makes them vulnerable to evasion techniques, in which sophisticated attackers craft malicious inputs to manipulate machine learning models [6].

- Scalability and high dimensionality of network traffic data: Further research is needed to process network data at large scale both effectively and promptly. Even a typical computer network generates high-throughput traffic, producing large volumes of data that current models struggle to analyze efficiently and creating performance bottlenecks. Achieving low latency at high network throughput therefore remains a challenge for traditional ML and DL models. In addition, the high dimensionality of network data leads to the curse of dimensionality: the effectiveness and generalizability of distance-based algorithms decline as the number of input dimensions grows, resulting in increased computational complexity, overfitting, spurious correlations, and sparse clusters [8].

- Covert channel detection: Traditional ML models often miss covert channel attacks, which use hidden communication paths and can result in data theft [9].

- Challenges of dynamic and evolving network environments: Real-world networks often have to be restructured into different topologies to meet organizational requirements, so their traffic patterns vary over time. However, traditional anomaly detection models usually assume that the structure of their network environment is static or slowly changing, which makes them less effective in the real world.

- Limited interpretability and transparency: Transparency is important when making cybersecurity decisions. However, many ML models, particularly deep neural networks, offer little transparency because they act as black boxes. This lack of transparency and interpretability can make it difficult for security analysts to understand the reasoning behind detected anomalies, especially previously unknown ones.
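The distance-concentration effect behind the curse of dimensionality can be made concrete with a short experiment. The minimal Python sketch below (standard library only) draws uniformly random points and measures the relative contrast between the farthest and nearest neighbor of a query point. The data are synthetic uniform vectors rather than network traffic features, and the function name `relative_contrast` is hypothetical; the sketch only illustrates that, as dimensionality grows, nearest and farthest neighbors become almost equally distant, which weakens distance-based anomaly detectors.

```python
import math
import random


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """(max_dist - min_dist) / min_dist from one random query point to
    n_points uniformly random points in the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)


# Relative contrast shrinks as the number of dimensions grows.
for dim in (2, 10, 100, 1000):
    print(f"dim={dim:5d}  relative contrast={relative_contrast(dim):.3f}")
```

On synthetic data like this, the contrast typically falls from a large value in two dimensions to a small fraction in a thousand dimensions, which is why feature selection or dimensionality reduction (e.g., PCA) is a common preprocessing response.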

1.2. GNNs

The Potential of GNNs for Network Threat Detection

- Modeling complex relationships and patterns: GNNs have the potential to capture the interdependencies and relationships in computer networks, including LANs, by representing network entities such as hosts or IP addresses as nodes and the relationships among them, such as communication flows or network sessions, as edges. This characteristic makes GNNs particularly useful for identifying network anomalies that span multiple coordinated entities and their complex interactions, which traditional ML models such as SVMs or clustering algorithms struggle to capture [11]. GNNs can also be employed to identify hidden network patterns, such as those produced by covert channels.

- Scalability: Computer networks produce large amounts of data, and GNNs can handle graph data at large scale, which makes them suitable for typical network anomaly detection tasks. With appropriate architectural or sampling strategies, GNNs can be scaled to accommodate additional data without a significant loss of performance, as demonstrated in other large-scale domains such as power system state estimation [12].

- Dynamic graph construction: Automatic dynamic graph construction techniques can address the challenge of dynamic and evolving network environments by updating the graph in real time as new data arrives [13]. This can make GNNs more robust than traditional static ML models in such environments.
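The graph view described above can be illustrated with a minimal sketch: hosts become nodes, observed flows become undirected edges, and one round of unweighted mean aggregation (in the style of the GraphSAGE mean aggregator, but without learned weights or a nonlinearity) lets each host's representation absorb information from its neighbors. The flow records, IP addresses, and two-element feature vectors below are hypothetical, and a real GNN would stack several such layers with trainable parameters.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical flow records: (source IP, destination IP).
FLOWS: List[Tuple[str, str]] = [
    ("10.0.0.1", "10.0.0.2"),
    ("10.0.0.1", "10.0.0.3"),
    ("10.0.0.2", "10.0.0.3"),
    ("10.0.0.4", "10.0.0.3"),
]

# Hypothetical per-host features: [outbound flow count, distinct ports contacted].
FEATURES: Dict[str, List[float]] = {
    "10.0.0.1": [2.0, 2.0],
    "10.0.0.2": [1.0, 1.0],
    "10.0.0.3": [0.0, 0.0],
    "10.0.0.4": [1.0, 40.0],  # unusually many distinct ports
}


def build_adjacency(flows: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Hosts -> nodes, flows -> undirected edges (repeated flows collapse)."""
    adj: Dict[str, Set[str]] = defaultdict(set)
    for src, dst in flows:
        adj[src].add(dst)
        adj[dst].add(src)
    return adj


def mean_aggregate(adj: Dict[str, Set[str]],
                   feats: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """One message-passing step without learned weights:
    h'(v) = concat(h(v), mean of h(u) over neighbors u of v)."""
    updated = {}
    for node, h in feats.items():
        neigh = adj.get(node, set())
        if neigh:
            agg = [sum(feats[u][i] for u in neigh) / len(neigh) for i in range(len(h))]
        else:
            agg = [0.0] * len(h)  # isolated host: nothing to aggregate
        updated[node] = h + agg
    return updated


adjacency = build_adjacency(FLOWS)
h1 = mean_aggregate(adjacency, FEATURES)
print(h1["10.0.0.3"])
```

After one step, host 10.0.0.3 carries a neighbor-averaged port count of about 14.3, inherited largely from 10.0.0.4, even though its own features are zero. This relational signal is the kind of information that flat, per-flow models do not see.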

1.3. Transformers

Potential of Transformers for Network Threat Detection

- Handling long sequential data: Transformers are designed to process sequences and capture long-range dependencies. Hence, they could be used to analyze network traffic for sophisticated, slowly evolving threats by detecting abnormal patterns that develop over extended periods [14].

- Attention mechanisms: Through its attention mechanisms, a Transformer can focus on the most relevant parts of its input sequence. This helps highlight important features that correspond to malicious behavior and can thus improve precision by reducing false positives.

- Parallel processing: Balancing detection speed against accuracy is critical in any anomaly detection mechanism. Achieving low latency in high-throughput environments is crucial for tasks such as network traffic analysis, and it is challenging for traditional sequential models, including RNNs, which must process a sequence one step at a time. Transformers, in contrast, can process entire sequences in parallel, which supports low-latency detection in network environments.

- Versatility in data handling: Transformers are also highly versatile and can handle different input modalities, enabling multi-faceted network threat analysis that can improve model robustness [15].

- Interpretability and transparency: The attention weights of a Transformer can be inspected to highlight the parts of the input it attended to most during threat detection. This can be used to interpret the Transformer’s decisions to some degree, improving overall transparency.
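The attention and interpretability points above can be illustrated with scaled dot-product self-attention, softmax(QK^T / sqrt(d)) V, as introduced by Vaswani et al. [14]. The sketch below is a simplification under stated assumptions: it uses the raw input vectors as queries, keys, and values (a single head with no learned projections), and the four event embeddings are hypothetical. The returned weight matrix is what analysts typically inspect when using attention for interpretation. Each query row is also computed independently of the others, which is why the computation can be parallelized across the sequence, unlike the step-by-step recurrence of an RNN.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention with Q = K = V = x.
    weights[i][j] is how strongly position i attends to position j."""
    d = len(x[0])
    weights, outputs = [], []
    for q in x:  # rows are independent of each other, hence parallelizable
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w[j] * x[j][c] for j in range(len(x))) for c in range(d)])
    return outputs, weights


# Hypothetical embeddings for four events in one traffic sequence.
sequence = [
    [0.1, 0.0, 0.2],
    [0.0, 0.1, 0.1],
    [3.0, 2.5, 3.5],  # an unusual event
    [0.2, 0.1, 0.0],
]
outputs, weights = self_attention(sequence)
most_attended = max(range(len(sequence)), key=lambda j: weights[0][j])
print("position 0 attends most to position", most_attended)
```

Here position 0 places most of its weight on the unusual third event. Attention weights are not guaranteed to reflect a model's full decision process, however, so attention-based interpretation is best treated as a partial explanation.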

1.4. Reinforcement Learning

Potential of Reinforcement Learning in Network Threat Detection

- Adaptive learning: RL enables an IDS to adapt to new and evolving threats by learning continuously through interaction with the network environment. This helps RL-enabled IDSs remain responsive to changing attack patterns.

- Optimization of detection strategies: By learning the optimal policies, RL can optimize the trade-offs between detection accuracy, false positives, and computational efficiency. This can significantly improve the overall performance and resilience of network threat detection systems [17].

- Decision making in real time: RL can improve real-time IDS decisions and responses based on the current state of the network [18].

- Selecting optimal hyperparameters of the base models: In hybrid setups, RL can be used to select optimal hyperparameters for the underlying ML and DL models.
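The trade-off between detection accuracy and false positives that RL is said to optimize can be sketched with the simplest RL setting, a stateless multi-armed bandit, rather than a full MDP. In this hypothetical example, each action is a candidate alert threshold on an anomaly score, and an epsilon-greedy agent learns which threshold maximizes the average reward in a synthetic environment. The traffic mix (20% malicious), the score distributions, and the reward values (a missed attack costs more than a false alarm) are all assumptions chosen for illustration.

```python
import random

THRESHOLDS = [0.2, 0.5, 0.8]  # hypothetical candidate alert thresholds (actions)

# Hypothetical rewards: a missed attack costs more than a false alarm.
REWARD_TP, REWARD_FN, REWARD_FP, REWARD_TN = 1.0, -2.0, -1.0, 0.0


def sample_event(rng: random.Random):
    """Synthetic environment: 20% malicious; score ranges overlap in [0.4, 0.6]."""
    malicious = rng.random() < 0.2
    score = rng.uniform(0.4, 1.0) if malicious else rng.uniform(0.0, 0.6)
    return malicious, score


def reward(malicious: bool, flagged: bool) -> float:
    if malicious:
        return REWARD_TP if flagged else REWARD_FN
    return REWARD_FP if flagged else REWARD_TN


def epsilon_greedy_threshold(steps: int = 20000, epsilon: float = 0.1, seed: int = 42):
    rng = random.Random(seed)
    q = [0.0] * len(THRESHOLDS)   # running mean reward per action
    counts = [0] * len(THRESHOLDS)
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.randrange(len(THRESHOLDS))                  # explore
        else:
            a = max(range(len(THRESHOLDS)), key=lambda i: q[i])  # exploit
        malicious, score = sample_event(rng)
        r = reward(malicious, score >= THRESHOLDS[a])
        counts[a] += 1
        q[a] += (r - q[a]) / counts[a]                          # incremental mean
    return q, counts


q, counts = epsilon_greedy_threshold()
best = THRESHOLDS[max(range(len(q)), key=lambda i: q[i])]
print("learned threshold:", best, "estimated rewards:", [round(v, 3) for v in q])
```

Under these assumptions the expected rewards of the three thresholds are approximately -0.33, -0.03, and -0.20, so the agent should settle on 0.5. Changing the reward values, for example making false alarms more expensive to reflect alert fatigue, shifts the learned operating point toward a higher threshold.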

1.5. Aims and Contributions of the SLR

1.5.1. Aims

- Explore how GNNs, Transformers, and RL algorithms have been employed, either standalone or in hybrid configurations, in the current network threat detection literature.

- Explore the effectiveness of these models and algorithms in network threat detection, especially in detecting sophisticated modern network threats.

- Identify key trends, strengths, limitations, and gaps in existing approaches.

1.5.2. Contributions

- A potentially novel conceptual framework for classifying network threats, inspired by the MITRE ATT&CK framework and the cyber kill chain, that clearly separates network threats from network attacks and maps such threats to their respective MITRE ATT&CK tactics.

- A domain-specific contextualization of network threat detection focused on conventional Ethernet and TCP/IP-based LANs.

- A structured and detailed synthesis of how GNNs, Transformers and RL algorithms are currently employed in conventional LAN-based network threat detection.

2. Literature Review

2.1. Traditional Machine Learning Methods for Network Threat Detection

2.2. Traditional Deep Learning Methods vs. GNNs and Transformers for Network Threat Detection

2.3. GNNs in Network Threat Detection

2.4. Transformers in Network Threat Detection

2.5. RL Algorithms in Network Threat Detection

2.6. Taxonomy of the Research Domain

2.7. Cost-Effectiveness Comparison Between Traditional ML and Deep Learning Models vs. GNNs, Transformers, and Reinforcement Learning Models

- Advanced models (GNNs, Transformers, RL) impose higher preprocessing, training, and operational costs than classic ML baselines, and

- Engineering mitigations (lite models, input encodings, model distillation, or hybrid supervision + RL) are being proposed to reduce these costs.

2.7.1. Data Collection and Preprocessing

- Traditional ML and DL baselines: Surveys of classical and ML-based IDS research note that many traditional ML and DL pipelines with engineered features operate on compact flow or summarized tabular features (e.g., NetFlow/summary statistics), which reduces preprocessing and storage overhead relative to heavy representation pipelines [19,20].

- Heterogeneous or nested graphs require additional preprocessing: Reviews of GNN methods emphasize that applying GNNs to network security requires an explicit graph construction step (hosts/sessions/processes → nodes; flows/relations → edges) and design choices for heterogeneous or nested graphs. These preprocessing steps incur extra engineering cost (feature-to-graph mapping, edge definition logic) compared to flow/tabular pipelines and homogeneous graphs [26,30,32]. The literature also discusses the need to maintain or update graph structures for streaming data, which adds ongoing processing overhead [30,34].

- Transformer tokenization/sequence handling increases preprocessing: Transformer-based NIDS research explicitly discusses the need to prepare sequential inputs (tokenization/embedding, padding/striding, or patching) for long flows or logs. This processing can increase memory usage and I/O when sequences are long, and authors have proposed input encodings and striding/patching to reduce this cost [36,38]. The FlowTransformer paper reports that the choice of input encoding and classification head affects model size and speed, and it demonstrates design choices that reduce model size and improve training/inference time [37].

- RL environment and trajectory generation cost: RL approaches to IDS typically require the definition of an environment (state, action, reward) and the generation of agent trajectories for training. Building faithful training environments or realistic simulators adds engineering and annotation costs not normally required for supervised classifiers [42,44].
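Much of the memory cost of long Transformer inputs comes from full self-attention, which computes one score per query-key pair and is therefore quadratic in sequence length. A minimal sketch of the windowing/striding idea mentioned above follows; the function names, window size, and stride are hypothetical, and this is a generic illustration rather than the specific input encoding of any cited framework.

```python
from typing import List


def make_windows(tokens: List[int], window: int, stride: int,
                 pad_token: int = 0) -> List[List[int]]:
    """Split a token sequence into fixed-length windows, padding the last one.
    stride == window gives non-overlapping windows, stride < window overlaps
    them, and stride > window would skip tokens between windows."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    windows = []
    start = 0
    while True:
        chunk = tokens[start:start + window]
        chunk = chunk + [pad_token] * (window - len(chunk))  # pad short tail
        windows.append(chunk)
        if start + window >= len(tokens):
            break
        start += stride
    return windows


def attention_scores(seq_len: int) -> int:
    """Query-key scores computed by one full self-attention head."""
    return seq_len * seq_len


flow_tokens = list(range(1, 4097))  # hypothetical 4,096-token flow/log sequence
full_cost = attention_scores(len(flow_tokens))
windows = make_windows(flow_tokens, window=512, stride=512)
windowed_cost = sum(attention_scores(len(w)) for w in windows)
print(len(windows), full_cost, windowed_cost)
```

Splitting a 4,096-token sequence into eight non-overlapping windows of 512 tokens cuts the number of attention scores per head from about 16.8 million to about 2.1 million, at the cost of losing attention across window boundaries; overlapping strides recover some of that context for additional compute.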

2.7.2. Training Compute and Sample Efficiency

- GNNs: These models have moderate to high compute demands, depending on their design. Methodological reviews of GNNs report that message passing and heterogeneous/hierarchical graph designs increase memory and compute requirements, and that temporal GNN variants and nested/hierarchical graphs further increase training complexity and memory usage [30,34]. These studies identify computational cost as a key practical challenge when scaling GNNs to high-throughput network data.

- Transformers: The reviewed Transformer literature notes that Transformer models, especially large pre-trained or long-sequence variants, incur higher GPU memory consumption and longer training times. NIDS Transformer frameworks (e.g., FlowTransformer) report measurable trade-offs between model size and runtime, and show experimentally that careful design choices, such as the input encoding and classification head, can reduce model size by more than 50% and improve training/inference time without loss of detection performance [36,37,38]. Thus, Transformers can be computationally heavy, but they are amenable to engineering optimizations.

- RL: Deep RL studies for IDS warn that RL training can be sample inefficient and require many episodes to converge. This leads to large cumulative compute (many interactions/episodes) compared to supervised training, and authors recommend sample-efficient RL approaches or hybrid supervised + RL designs for practicality [42,44,46].

2.7.3. Online Updating and Adaptivity

2.7.4. Operational Complexity and Maintainability

- Pipeline complexity: As noted above, the literature highlights that GNN and Transformer systems typically demand more complex data pipelines, such as graph processing frameworks for GNNs and long-sequence tokenization and large-model serving for Transformers, and thus incur higher operational and maintenance costs than classic ML pipelines [26,30,36].

- Safety & robustness costs: RL studies document adversarial concerns such as reward poisoning and exploitation during learning, which require additional safeguards, such as defenses and monitoring, when RL is deployed online [45].

2.8. Research Gap and the Significance of the SLR

3. Methodology

3.1. Research Questions

3.1.1. Primary Research Questions

- How are GNNs, Transformers, and RL used individually or in combination for network threat detection?

- How do the model architectures, datasets, evaluation metrics, and types of network threats addressed compare across studies involving GNNs, Transformers, and RL?

3.1.2. Secondary Research Questions

- What are the strengths, limitations, and gaps observed in current research utilizing GNNs, Transformers, and RL for network threat detection?

- What are the observable trends that integrate GNNs, Transformers, and/or RL for enhanced network threat detection?

3.2. Scope of the Study

3.2.1. Network Context

3.2.2. Threat Context

3.3. Threat Frameworks and Taxonomies

3.4. Methodological Framework for Threat Classification

3.4.1. Conceptual Framework

- MITRE ATT&CK offers a fine-grained categorization of adversarial tactics.

- Cyber Kill Chain provides temporal positioning within the attack lifecycle.

3.4.2. Context-Aware Definition of Threats and Attacks

- Threats denote capabilities, behaviors, or preparatory actions that have the potential to cause harm but have not yet materialized into a successful exploitation or system compromise, e.g., malware download, infiltration attempts, reconnaissance, or credential-testing activities.

- Attacks represent realized exploitation or harm, where the threat has transitioned into active execution against a target, achieving adversarial objectives or producing measurable system impact, e.g., DDoS flooding.

3.4.3. Threat/Attack Determination Rules

3.4.4. General Rules for Mapping (From Network Activity/Label → Classification)

- Treat attempted actions as Threats, and realized or successful actions as Attacks.

- Choose the earliest relevant stage when multiple stages apply.

- When the dataset or paper does not clarify success, default to Threat to align with the proactive detection perspective.

- Document all ambiguous cases in an ambiguity log for reviewer consensus.
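The general mapping rules can be expressed as a small decision function. The sketch below is hypothetical and deliberately partial: it encodes the seven Lockheed Martin Cyber Kill Chain stages in order, picks the earliest candidate stage, classifies a label as an Attack only when success is confirmed (defaulting to Threat otherwise), and flags cases for the ambiguity log. It does not assign MITRE ATT&CK tactic IDs or the Dual-context category, which still require reviewer judgment, and the example labels and candidate stages are illustrative rather than taken from the reviewed studies.

```python
from dataclasses import dataclass
from typing import List, Optional

# Lockheed Martin Cyber Kill Chain stages, earliest first.
KILL_CHAIN = [
    "Reconnaissance", "Weaponization", "Delivery", "Exploitation",
    "Installation", "Command and Control", "Actions on Objectives",
]


@dataclass
class LabelEvidence:
    label: str
    candidate_stages: List[str]  # every stage the label plausibly fits
    succeeded: Optional[bool]    # None when the dataset/paper does not say


@dataclass
class ClassifiedLabel:
    label: str
    stage: str
    classification: str          # "Threat" or "Attack" (Dual-context not modeled)
    ambiguous: bool              # True -> record in the ambiguity log


def map_label(ev: LabelEvidence) -> ClassifiedLabel:
    # Earliest relevant stage wins when several apply.
    stage = min(ev.candidate_stages, key=KILL_CHAIN.index)
    # Realized/successful -> Attack; attempted or unknown outcome -> Threat.
    classification = "Attack" if ev.succeeded is True else "Threat"
    # Multiple candidate stages or an unknown outcome -> flag for consensus.
    ambiguous = len(ev.candidate_stages) > 1 or ev.succeeded is None
    return ClassifiedLabel(ev.label, stage, classification, ambiguous)


# Hypothetical labels and candidate stages, for illustration only.
scan = map_label(LabelEvidence("portscan", ["Reconnaissance"], None))
ddos = map_label(LabelEvidence("ddos", ["Actions on Objectives"], True))
infil = map_label(LabelEvidence("infiltration", ["Exploitation", "Delivery"], None))
for m in (scan, ddos, infil):
    print(m)
```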

3.4.5. Step by Step Procedure for Manual Classification

- Extract labels: List every threat/attack label reported in the paper or dataset.

- Normalize terminology (optional): If necessary, convert labels to lowercase, remove punctuation, and harmonize synonyms (e.g., port scan → portscan) for consistency.

- Identify observed behavior: Examine dataset documentation or study description to infer practical meaning.

- Apply mapping rules: Assign each label the following:

  - MITRE ATT&CK Tactic and ID

  - Cyber Kill Chain Stage

  - Classification (Threat/Attack/Dual-context)

- Resolve ambiguity: If the label fits multiple categories, select the earliest stage and record the justification.

- Reviewer verification (optional): A second reviewer reclassifies a random 20–30% subset. Compute inter-rater reliability (Cohen’s κ) and resolve disagreements through discussion.

- Record results: Maintain a spreadsheet with fields such as Study ID, Dataset, Original Label, Mapped Tactic, Kill Chain Stage, Classification, Ambiguity Flag, Justification, Reviewer 1, Reviewer 2, and Final Decision.
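Two steps of the manual procedure, label normalization and the optional inter-rater check, are mechanical enough to sketch in code. In the hypothetical sketch below, `normalize_label` lowercases a label, replaces punctuation with spaces, and applies a small synonym table (only the port scan → portscan pair comes from the procedure above; the other entry is an assumption), while `cohens_kappa` computes κ = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and p_e is the agreement expected by chance from each reviewer's label frequencies.

```python
import re
from collections import Counter
from typing import Sequence

# Hypothetical synonym table; only "port scan" -> "portscan" comes from the procedure.
SYNONYMS = {"port scan": "portscan", "dos": "denial of service"}


def normalize_label(label: str) -> str:
    text = label.lower()
    text = re.sub(r"[^\w\s]", " ", text)      # punctuation -> space
    text = re.sub(r"\s+", " ", text).strip()   # collapse runs of whitespace
    return SYNONYMS.get(text, text)


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """kappa = (p_o - p_e) / (1 - p_e) for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / (n * n)
    if p_e == 1.0:
        return float("nan")  # undefined: both raters used one identical category
    return (p_o - p_e) / (1 - p_e)


# Hypothetical classifications of a ten-label verification subset.
reviewer_1 = ["Threat"] * 7 + ["Attack"] * 3
reviewer_2 = ["Threat"] * 8 + ["Attack"] * 2
print(normalize_label("Port-Scan"), round(cohens_kappa(reviewer_1, reviewer_2), 3))
```

For these hypothetical decisions the reviewers agree on 9 of 10 labels (p_o = 0.90), chance agreement is p_e = 0.62, and κ is about 0.74. An established implementation such as scikit-learn's `cohen_kappa_score` can be used instead.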

3.5. Research Design

3.5.1. Methodological Framework

3.5.2. Research Design Components

3.5.3. SLR Protocol and Registration

3.5.4. Eligibility Criteria

3.5.5. Critical Appraisal (Risk of Bias Assessment)

3.5.6. Information Sources and Search Strategy

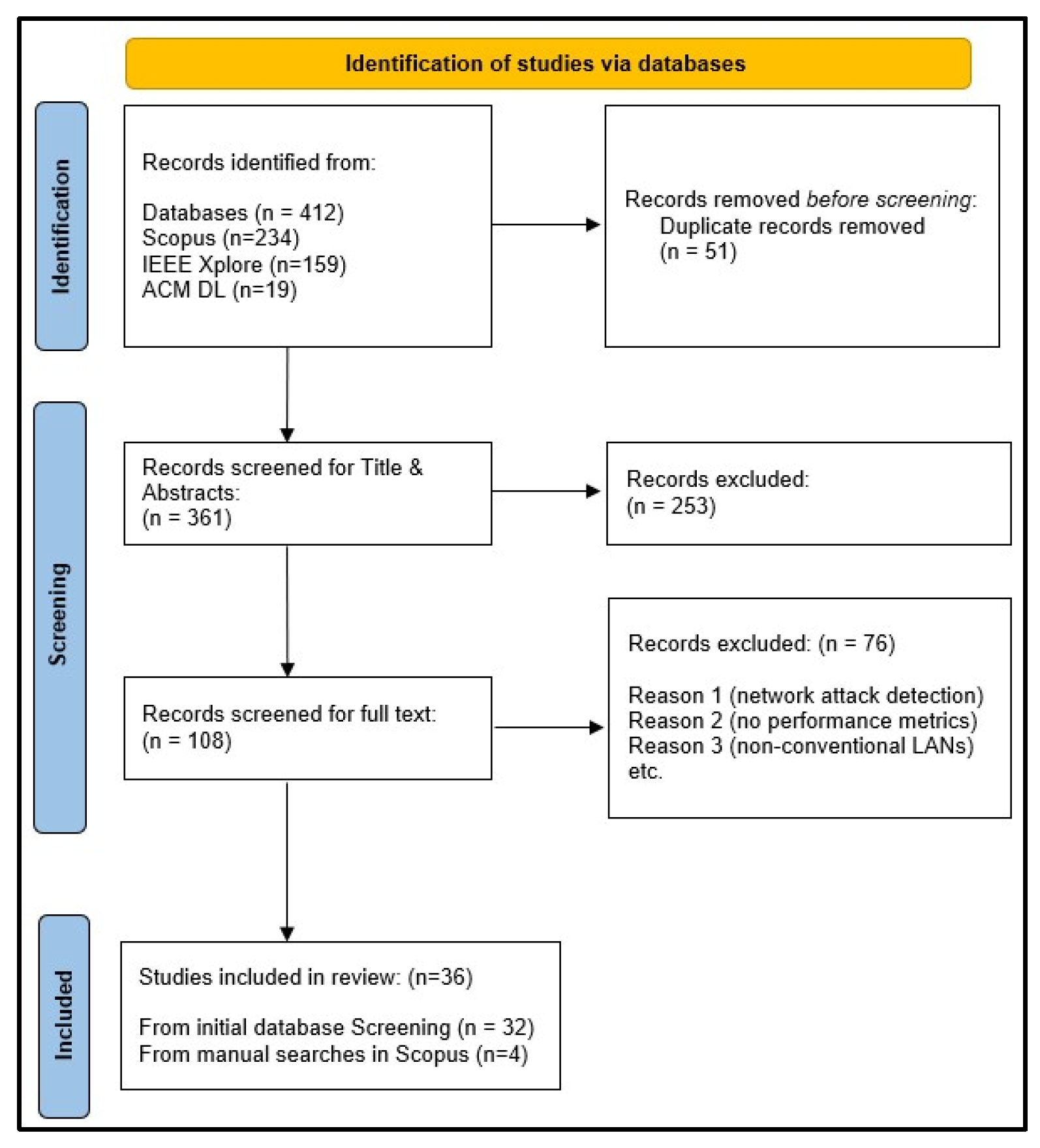

3.5.7. Screening and Study Selection

3.5.8. Data Extraction and Quality Assessment

3.5.9. Limitations of the Chosen Research Method

- LAN-focused scope: The current body of research, including this SLR, has predominantly focused on LAN-based environments using datasets such as UNSW-NB15, CICIDS2017, CSE-CIC-IDS2018, and LANL. However, modern infrastructures increasingly rely on cloud computing, IoT ecosystems, and other hybrid architectures, which limits the broader applicability of LAN-based threat detection research.

- Inability to conduct a meta-analysis: The extreme heterogeneity in the chosen performance metrics, datasets, and network threat types made a meta-analysis infeasible in this systematic literature review.

- Exclusion of non-peer-reviewed preprints: Non-peer-reviewed preprints were excluded as gray literature during searching and screening. However, much of the GNN and Transformer literature in the cybersecurity domain is recent and may not yet have completed peer review. By excluding gray literature, this SLR may therefore have missed high-quality research that is not yet peer-reviewed.

- Language bias: Due to resource constraints, only journal articles and conference papers written in English were included in the review. This may have introduced a regional or language-based bias. For example, high-quality Chinese-language papers exist within the scope of this SLR, but they could not be analyzed and synthesized because of a shortage of Chinese-fluent reviewers.

3.5.10. Consideration of Alternative Methods

4. Results

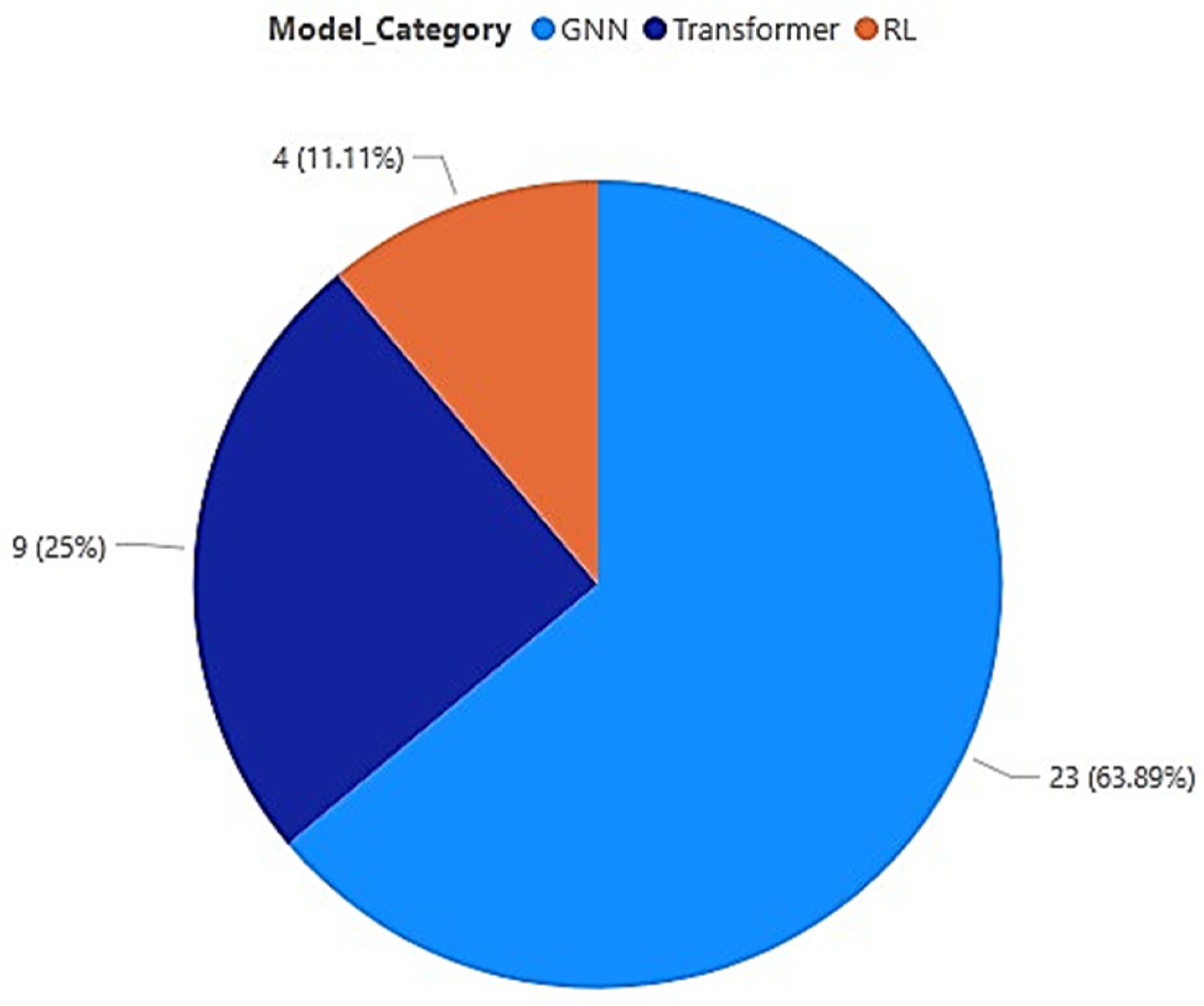

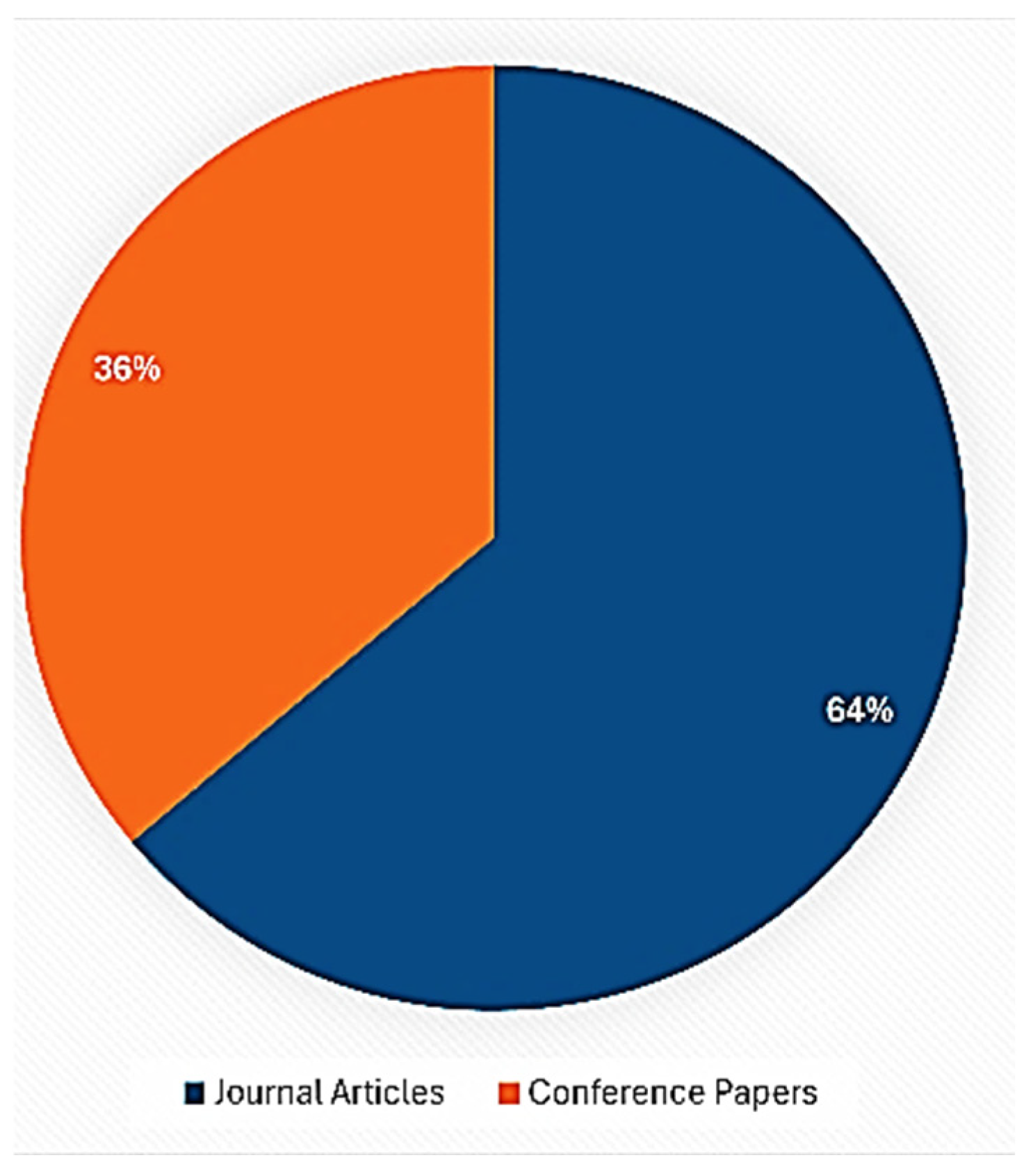

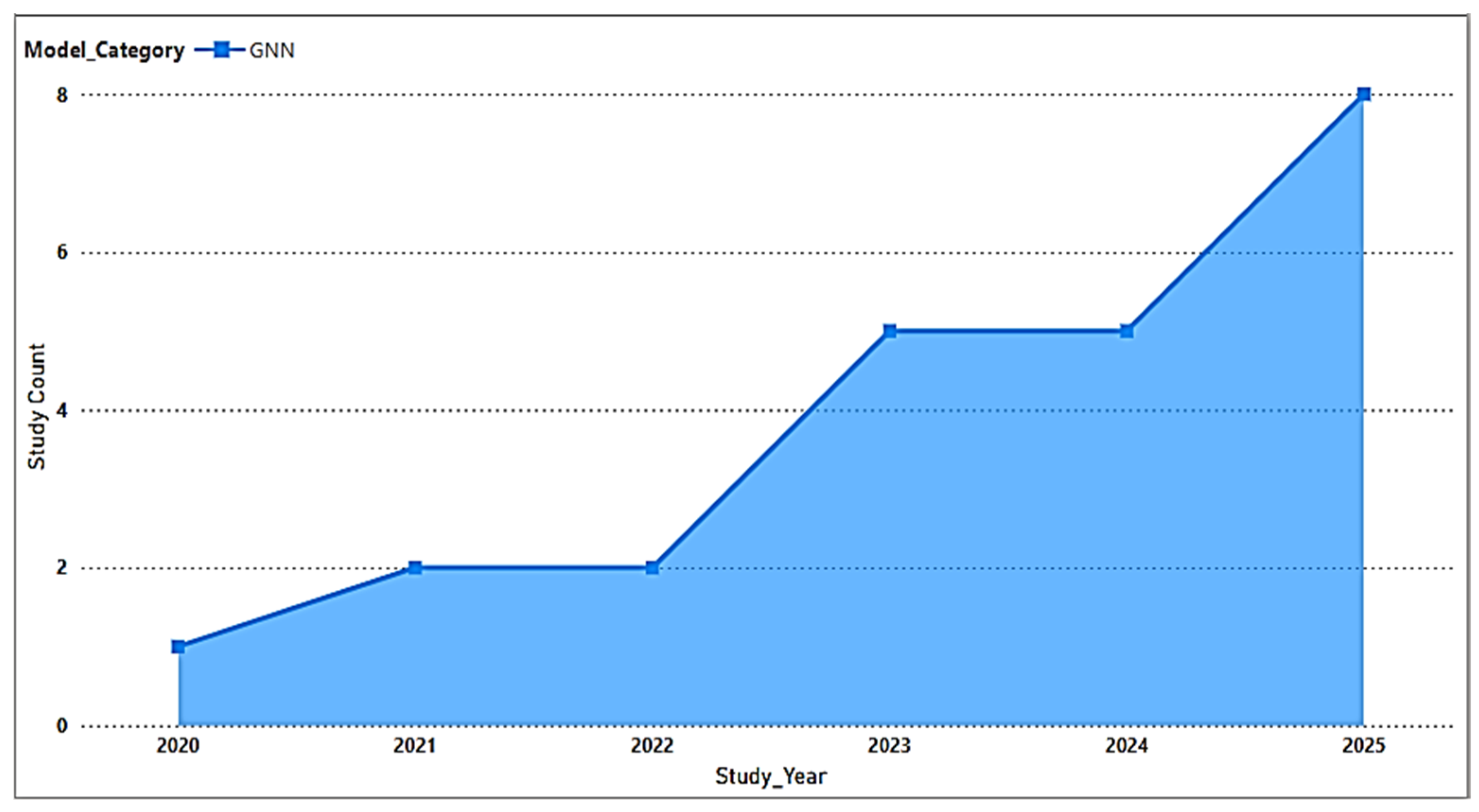

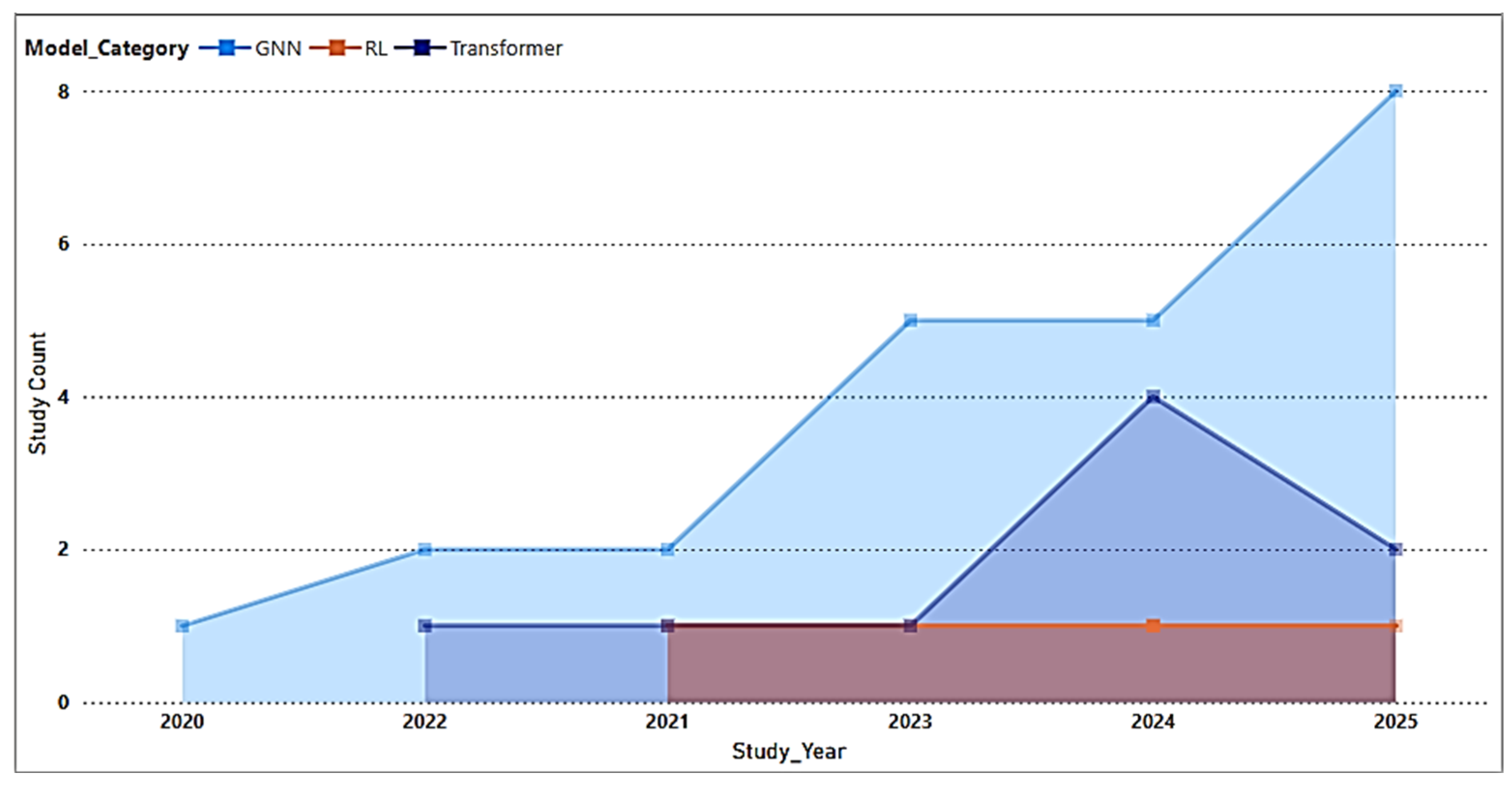

4.1. Characteristics of Included Studies

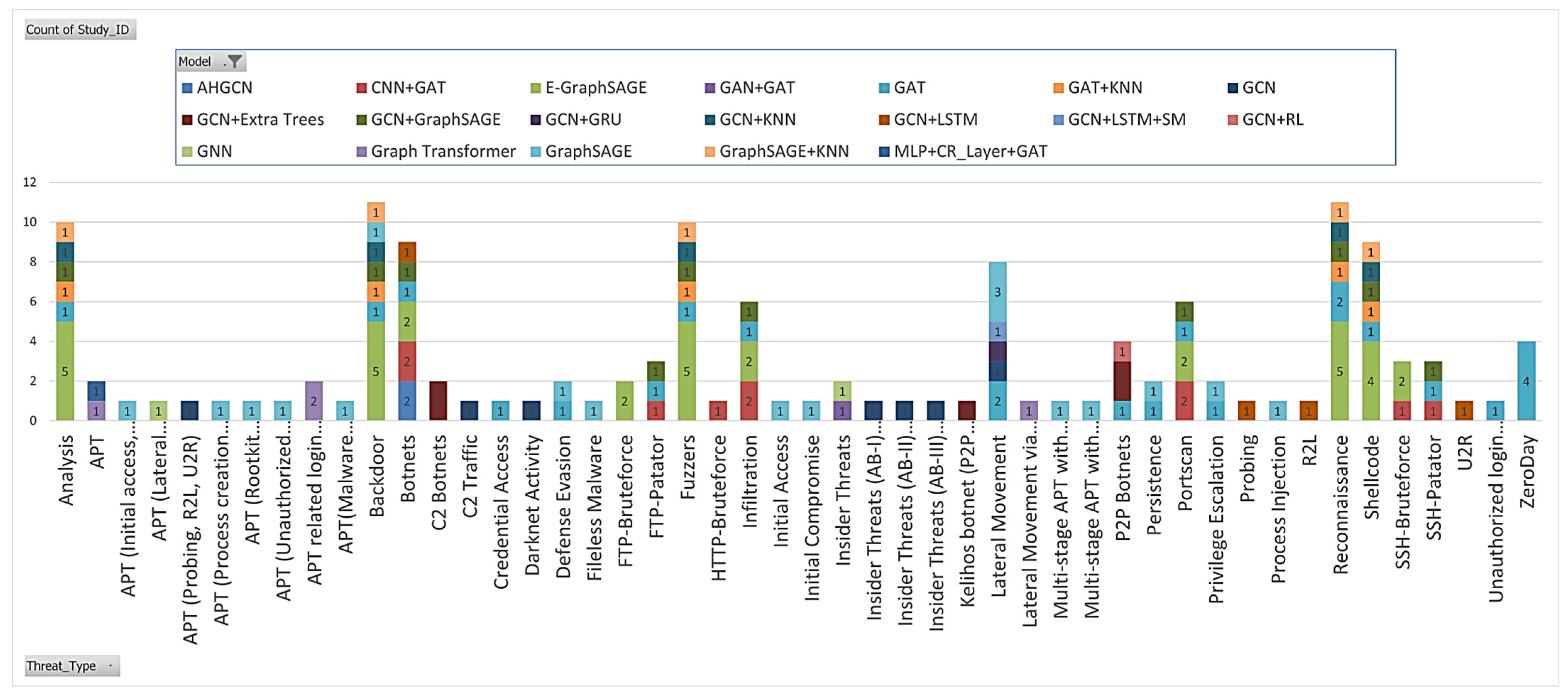

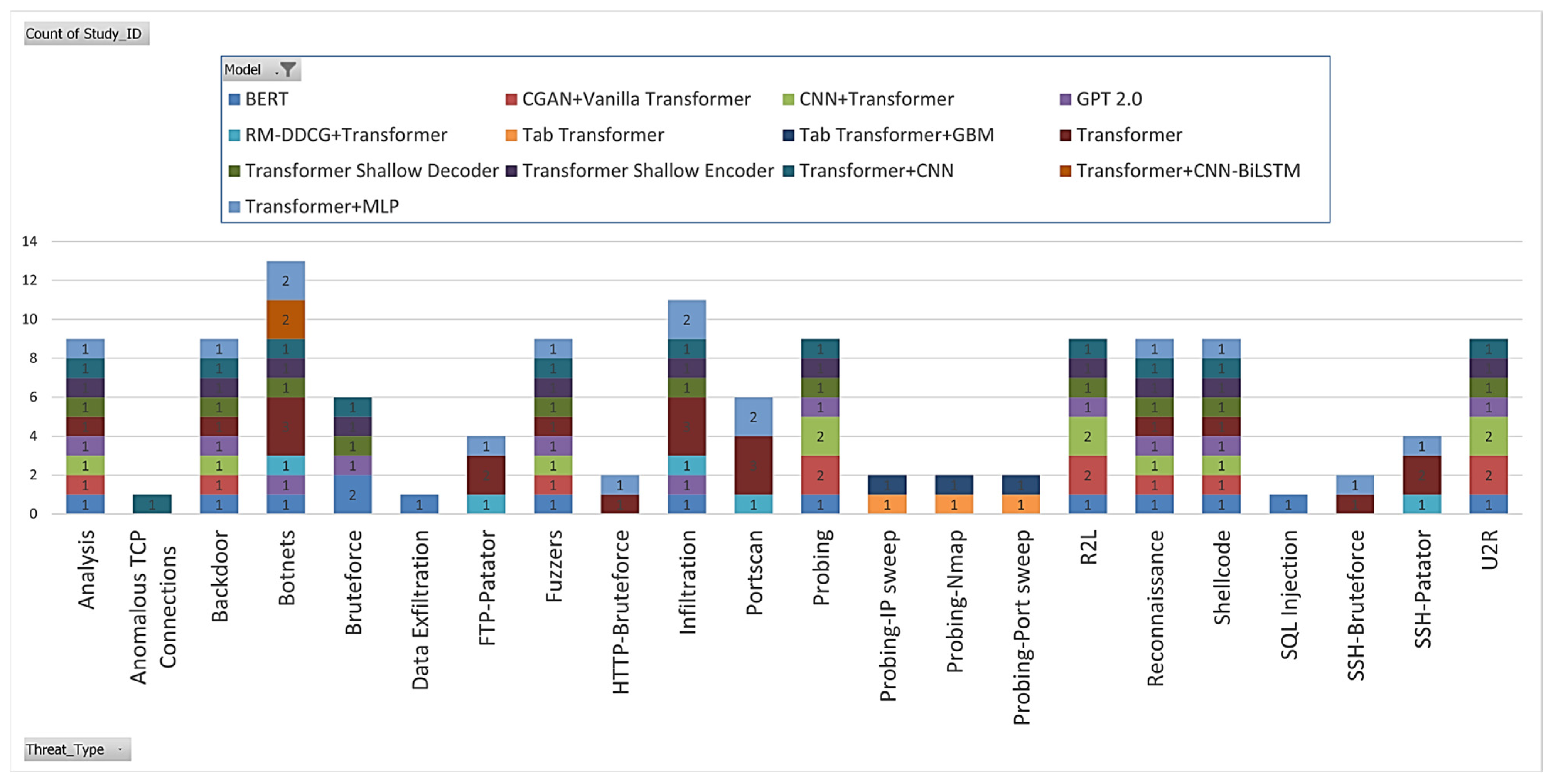

4.2. Threats Evaluated

4.3. Consistency of the Threat Classification Framework in Practical Applications

4.4. GNN-Based Studies

4.5. Transformer-Based Studies

4.6. RL-Based Studies

5. Analysis and Discussion

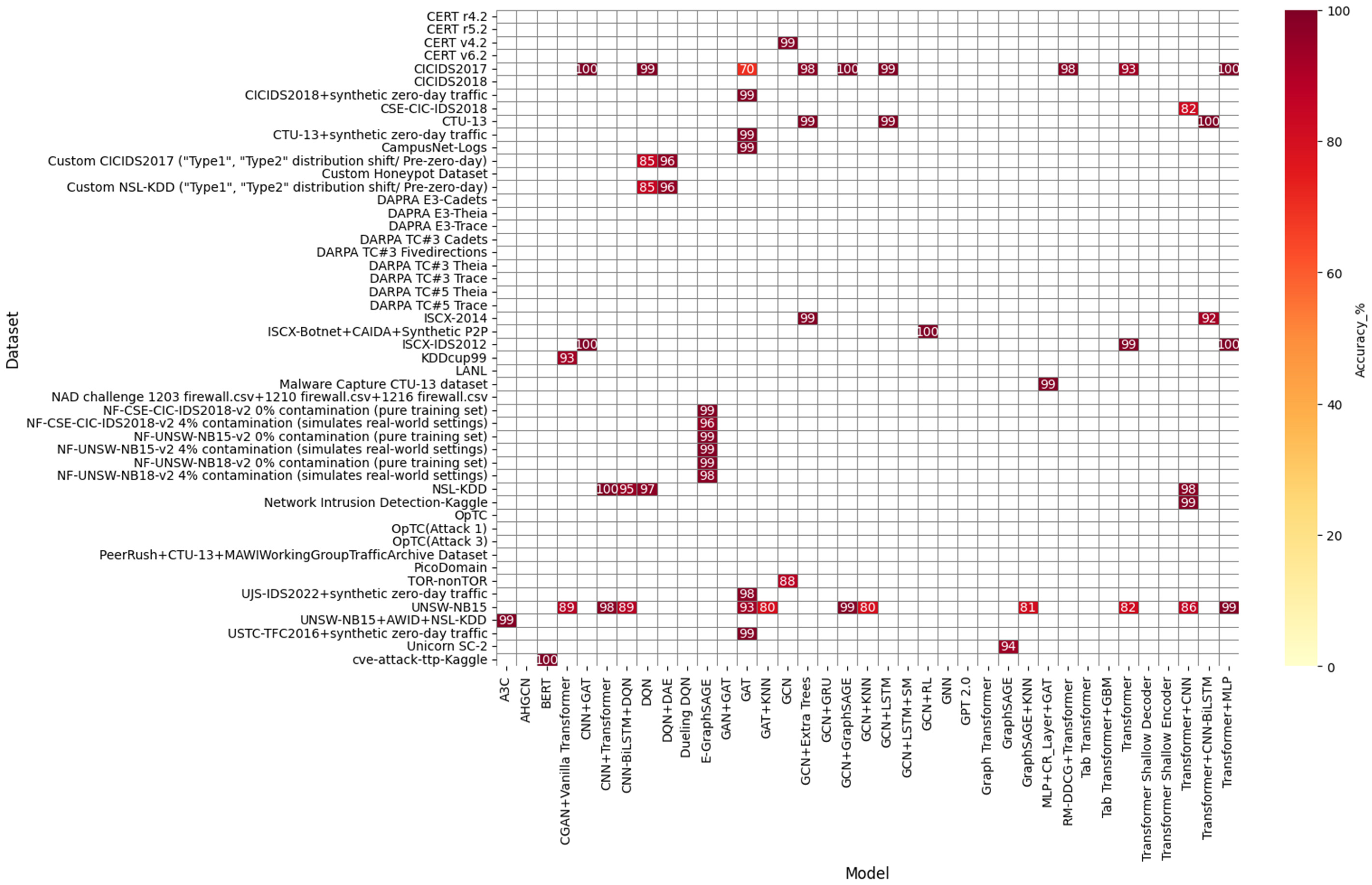

5.1. Model Performance Analysis

5.2. Performance of GNNs

5.2.1. Overall Success Rate of GNNs

5.2.2. GNN Top Performers

5.2.3. GNN Underperformers

5.3. Performance of Transformers

5.3.1. Overall Success Rate of Transformers

5.3.2. Transformer Top Performers

5.3.3. Transformer Moderate Performers

5.3.4. Transformer Underperformers

5.4. Performance of Reinforcement Learning Algorithms

5.5. Key Applications of GNNs

5.5.1. Malicious Traffic Detection

5.5.2. APT and Insider Threat Detection

5.5.3. Botnet Detection

5.5.4. Malicious Anomaly Detection in Dynamic Network Environments

5.6. Methodological and Architectural Trends of GNNs vs. Their Limitations

5.7. Strengths of GNNs

5.8. Key Applications of Transformers

5.8.1. Payload and Flow-Based Threat Detection

5.8.2. Log-Based Anomaly Detection

5.8.3. Zero-Day and Adaptive Threat Detection

5.9. Methodological and Architectural Trends of Transformers vs. Their Limitations

5.10. Strengths of Transformers

5.11. Key Applications of RL Algorithms

5.12. Strengths of Reinforcement Learning Algorithms

5.13. Challenges and Limitations of Reinforcement Learning Algorithms

5.14. Dataset Bias

1. CIC Family Datasets (CICIDS2017, CSE-CIC-IDS2018, CIC-DDoS2019)

   - Class imbalance: Certain attack types (e.g., Infiltration, Heartbleed, SQL Injection) have very few instances, while benign traffic dominates, which skews accuracy metrics.

   - Non-stationary data: Traffic was captured over separate days, creating temporal discontinuities that models may exploit as artificial patterns.

   - Controlled lab environment: All CIC datasets were generated using scripted attacks and synthetic normal traffic, lacking the diversity and background noise of real-world networks [86].

   - Redundant and correlated features: Flow-based features often overlap, introducing redundancy that can distort feature importance analysis.

   - Reproducibility gaps: Variations in preprocessing pipelines across studies (e.g., feature normalization, label consolidation) cause inconsistencies in reported performance.

2. UNSW-NB15

   - Synthetic traffic generation: Network data were produced using the IXIA PerfectStorm tool rather than captured from real enterprise networks [87].

   - Limited protocol diversity: Relatively few application-layer behaviors and uniform normal traffic can cause models to overfit to specific flow characteristics.

   - Feature correlation: Certain attributes are highly collinear, reducing their discriminative value for ML/DL models.

   - Short capture duration: Restricts the representation of long-term threat evolution and gradual attack stages, such as those of APTs.

3. Legacy KDD-Based Datasets (KDDCup’99, NSL-KDD)

   - Obsolete attack types that no longer reflect modern network threats.

   - Record duplication (notably in KDDCup’99) and feature redundancy, leading to inflated classification metrics.

   - Oversimplified feature space, unsuitable for evaluating complex deep models such as GNNs or Transformers.

4. CERT Insider Threat Datasets

   - Simulated organizational environments with scripted user behavior, lacking true behavioral diversity [88].

   - Limited organizational roles and time span, which restrict generalization to real multi-department enterprises.

   - Label uncertainty: Some insider behaviors are ambiguous or context-dependent, potentially introducing labeling bias.

5. Low Utilization of Other Specialized Datasets
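The effect of class imbalance on accuracy can be shown with a few lines of arithmetic. In this hypothetical example with 990 benign flows and 10 flows of a rare class (the counts are assumptions, loosely echoing rare CIC classes such as Infiltration), a degenerate model that never raises an alert scores 99% accuracy while detecting none of the attacks; per-class recall and balanced accuracy (the mean of per-class recalls) expose the failure.

```python
from collections import Counter
from typing import Dict, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_recall(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: hits[c] / totals[c] for c in totals}


def balanced_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)


# Hypothetical imbalanced test set and a model that never raises an alert.
y_true = ["benign"] * 990 + ["infiltration"] * 10
y_pred = ["benign"] * 1000

print(f"accuracy          = {accuracy(y_true, y_pred):.3f}")
print(f"per-class recall  = {per_class_recall(y_true, y_pred)}")
print(f"balanced accuracy = {balanced_accuracy(y_true, y_pred):.3f}")
```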

5.15. Cross-Model Comparison of GNNs, Transformers, and RL

6. Future Research Trends and Directions

6.1. Development of Hybrid GNN–Transformers

6.2. Reinforcement Learning and Transformer Synergy

6.3. Generative AI for Threat Simulation and Augmentation

6.4. Exploration of Agentic AI for Automated Threat Detection Pipelines

7. Conclusions & Recommendations

7.1. Conclusions

- A novel conceptual threat classification framework: A novel framework for classifying network threats and distinguishing them from network attacks, inspired by the MITRE ATT&CK tactics and the Lockheed Martin cyber kill chain, was developed. Together with the analysis and synthesis, this framework provided a deeper understanding of which cyber kill chain stages GNNs, Transformers, and RL models focus on and which early stages need more attention to prevent network attacks.

- Comparative synthesis: This review conducted a comprehensive comparative analysis and a qualitative synthesis, with quantitative elements, of the application of GNNs, Transformers, and RL algorithms in conventional network threat detection. The preliminary literature review showed that SLRs covering all three of these model families are rare or nonexistent; this SLR therefore addresses a significant gap in the current cybersecurity literature.

- Trend identification: Significant trends were identified and documented for each model type. For GNNs and Transformers, interest in hybrid architectures for network threat detection is increasing: some studies combined GNNs with traditional sequential models, and others integrated Transformer architectures with traditional spatial models to overcome their inherent architectural limitations.

- Limitations & challenges identification: The review identified and documented limitations and challenges in the current literature while highlighting the solutions presented by the complementary studies.

7.2. Recommendations

- Extended network threat detection SLRs for Cloud, IoT and flat network architectures: As organizations increasingly migrate operations to cloud environments, integrate with IoT networks, and adopt flat network architectures to simplify connectivity, reduce latency, and enhance scalability, future research should focus on systematic literature reviews and empirical studies addressing threat detection in these environments. Such reviews would support the adaptation and optimization of advanced threat detection frameworks for modern enterprise networks with minimal segmentation and distributed cloud resources.

- Explainability and interpretability: Many DL models, including GNNs and Transformers, are considered black boxes. Future research should therefore investigate techniques that make the detections of these models more transparent and interpretable. This would help cybersecurity experts and other authorities understand the reasoning behind model detections, which is crucial in privacy-critical scenarios such as insider threat detection.

- Utilizing lightweight Transformers: Despite their effectiveness in modeling temporal aspects, Transformers are often computationally intensive. Further research is needed to develop lightweight Transformers that still achieve acceptable performance in network threat detection tasks.

- Meta-Analysis and Standardized Reporting: Meta-analyses are recognized for their robust statistical insights. Therefore, it is recommended to conduct a meta-analysis on the applicability of GNNs, Transformers, and RL algorithms in network threat detection. However, to make it feasible, the research community must collectively adhere to more standardized reporting practices, including unified evaluation metrics (e.g., a clear indication of the type of multi-class F1-score used, macro or micro).
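The difference between micro- and macro-averaged F1, which the recommendation above asks authors to state explicitly, is easy to demonstrate. The hypothetical three-class example below misclassifies both instances of a rare class: micro-F1 pools all decisions and, for single-label multi-class data, equals accuracy, while macro-F1 averages the per-class scores and is pulled down by the missed class. The class counts are assumptions; scikit-learn's `f1_score` with `average="micro"` or `average="macro"` computes the same quantities.

```python
from collections import Counter
from typing import Sequence, Tuple


def f1_scores(y_true: Sequence[str], y_pred: Sequence[str]) -> Tuple[float, float]:
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative

    def f1(tp_: int, fp_: int, fn_: int) -> float:
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    per_class = [f1(tp[c], fp[c], fn[c]) for c in labels]
    macro = sum(per_class) / len(per_class)                          # unweighted class mean
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))  # pooled counts
    return micro, macro


# Hypothetical test set: both rare-class flows are predicted as benign.
y_true = ["benign"] * 90 + ["dos"] * 8 + ["infiltration"] * 2
y_pred = ["benign"] * 90 + ["dos"] * 8 + ["benign"] * 2
micro, macro = f1_scores(y_true, y_pred)
print(f"micro-F1 = {micro:.3f}, macro-F1 = {macro:.3f}")
```

Here micro-F1 is 0.98 while macro-F1 is about 0.66, so the two figures can describe the same model very differently.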

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |

|---|---|

| AIDS | Anomaly-Based Intrusion Detection System |

| APT | Advanced Persistent Threat |

| AUC | Area Under the Curve |

| BERT | Bidirectional Encoder Representations from Transformers |

| BiLSTM | Bidirectional Long Short-Term Memory |

| CASP | Critical Appraisal Skills Programme |

| CIC | Canadian Institute for Cybersecurity |

| CNN | Convolutional Neural Network |

| DDoS | Distributed Denial of Service |

| DL | Deep Learning |

| DNN | Deep Neural Network |

| DRL | Deep Reinforcement Learning |

| DQN | Deep Q-Network |

| FAR | False Alarm Rate |

| FN | False Negative |

| FPR | False-Positive Rate |

| FP | False Positive |

| Gen-AI | Generative Artificial Intelligence |

| GAN | Generative Adversarial Network |

| GAT | Graph Attention Network |

| GCN | Graph Convolutional Network |

| GNN | Graph Neural Network |

| GPT | Generative Pre-trained Transformer |

| GRU | Gated Recurrent Unit |

| IDS | Intrusion Detection System |

| IP | Internet Protocol |

| LAN | Local Area Network |

| LLM | Large Language Model |

| LSTM | Long Short-Term Memory |

| MAC | Media Access Control |

| MCC | Matthews Correlation Coefficient |

| MDP | Markov Decision Process |

| ML | Machine Learning |

| MLP | Multilayer Perceptron |

| NIDS | Network Intrusion Detection System |

| OSF | Open Science Framework |

| P2P | Peer-to-Peer |

| PCA | Principal Component Analysis |

| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |

| RL | Reinforcement Learning |

| RNN | Recurrent Neural Network |

| RoB | Risk of Bias |

| SDN | Software-Defined Network |

| SIDS | Signature-Based Intrusion Detection System |

| SLR | Systematic Literature Review |

| SMOTE | Synthetic Minority Over-sampling Technique |

| SOTA | State of the Art |

| SVM | Support Vector Machine |

| TCP/IP | Transmission Control Protocol/Internet Protocol |

| TNR | True-Negative Rate |

| TPR | True-Positive Rate |

| UAV | Unmanned Aerial Vehicle |

| VAE | Variational Autoencoder |

| VLAN | Virtual Local Area Network |

| ViT | Vision Transformer |

Appendix A

| Study | Data Quality | Model Transparency | Reproducibility | Methodology | External Validity | Overall RoB |

|---|---|---|---|---|---|---|

| S1 | Yes | Yes | No | Yes | Yes | Low |

| S2 | No | Yes | Unclear | Yes | No | High |

| S3 | Yes | Yes | Yes | Yes | Yes | Low |

| S4 | Yes | Yes | No | Yes | Unclear | Low |

| S5 | Yes | Yes | No | Yes | Yes | Low |

| S6 | Yes | Yes | Yes | Yes | Yes | Low |

| S7 | Unclear | Yes | No | No | No | High |

| S8 | Yes | Yes | No | Yes | No | Moderate |

| S9 | Yes | Yes | No | Yes | Unclear | Low |

| S10 | Yes | Yes | No | Yes | Yes | Low |

| S11 | Yes | Yes | No | Yes | No | Moderate |

| S12 | Yes | Yes | No | Yes | Unclear | Low |

| S13 | Yes | Yes | Yes | Unclear | Unclear | Moderate |

| S14 | Yes | Yes | Unclear | Yes | No | Moderate |

| S15 | Yes | Yes | No | Yes | No | Moderate |

| S16 | Unclear | Partial | No | Yes | No | High |

| S17 | Yes | Yes | No | Yes | Unclear | Low |

| S18 | Yes | Yes | No | Yes | Yes | Low |

| S19 | Yes | Yes | No | Yes | No | Moderate |

| S20 | Yes | Yes | No | Yes | Yes | Low |

| S21 | Yes | Yes | Yes | Yes | Yes | Low |

| S22 | Yes | Yes | No | Yes | Unclear | Low |

| S23 | Yes | Yes | No | Yes | No | Moderate |

| S24 | Yes | Yes | No | Yes | Unclear | Low |

| S25 | Yes | Partial | No | Yes | No | High |

| S26 | Yes | Yes | No | Yes | No | Moderate |

| S27 | Yes | Yes | No | Yes | Unclear | Low |

| S28 | Yes | Yes | No | Yes | Yes | Low |

| S29 | Yes | Yes | No | Yes | Yes | Low |

| S30 | Yes | Yes | Yes | Yes | Unclear | Low |

| S31 | Yes | Yes | No | Yes | Yes | Low |

| S32 | Yes | Partial | No | Yes | Unclear | High |

| S33 | Yes | Partial | No | Yes | No | High |

| S34 | Yes | No | No | Yes | No | High |

| S35 | Yes | Yes | No | Yes | No | Moderate |

| S36 | Yes | Yes | No | Yes | Unclear | Low |

References

- García-Teodoro, P.; Díaz-Verdejo, J.; Maciá-Fernández, G.; Vázquez, E. Anomaly-based network intrusion detection: Techniques, systems and challenges. Comput. Secur. 2009, 28, 18–28.

- Díaz-Verdejo, J.; Muñoz-Calle, J.; Estepa Alonso, A.; Estepa Alonso, R.; Madinabeitia, G. On the Detection Capabilities of Signature-Based Intrusion Detection Systems in the Context of Web Attacks. Appl. Sci. 2022, 12, 852.

- Ali, M.L.; Thakur, K.; Schmeelk, S.; Debello, J.; Dragos, D. Deep Learning vs. Machine Learning for Intrusion Detection in Computer Networks: A Comparative Study. Appl. Sci. 2025, 15, 1903.

- Newsome, J.; Karp, B.; Song, D. Polygraph: Automatically generating signatures for polymorphic worms. In Proceedings of the 2005 IEEE Symposium on Security and Privacy (S&P’05), Oakland, CA, USA, 8–11 May 2005; pp. 226–241.

- Samrin, R.; Vasumathi, D. Review on anomaly based network intrusion detection system. In Proceedings of the 2017 International Conference on Electrical, Electronics, Communication, Computer, and Optimization Techniques (ICEECCOT), Mysuru, India, 15–16 December 2017; pp. 141–147.

- Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20.

- Ramzan, M.; Shoaib, M.; Altaf, A.; Arshad, S.; Iqbal, F.; Castilla, Á.K.; Ashraf, I. Distributed Denial of Service Attack Detection in Network Traffic Using Deep Learning Algorithm. Sensors 2023, 23, 8642.

- Hu, Z.; Shukla, K.; Karniadakis, G.E.; Kawaguchi, K. Tackling the Curse of Dimensionality with Physics-Informed Neural Networks. Neural Netw. 2024, 176, 106369.

- Caviglione, L. Trends and Challenges in Network Covert Channels Countermeasures. Appl. Sci. 2021, 11, 1641.

- Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81.

- Pujol-Perich, D.; Suarez-Varela, J.; Cabellos-Aparicio, A.; Barlet-Ros, P. Unveiling the potential of Graph Neural Networks for robust Intrusion Detection. ACM Sigmetrics Perform. Eval. Rev. 2022, 49, 111–117.

12. Kundacina, O.; Gojic, G.; Cosovic, M.; Miskovic, D.; Vukobratovic, D. Scalability and Sample Efficiency Analysis of Graph Neural Networks for Power System State Estimation. arXiv 2023, arXiv:2303.00105.

13. Guan, M.; Iyer, A.P.; Kim, T. Dynagraph: Dynamic graph neural networks at scale. In Proceedings of the 5th ACM SIGMOD Joint International Workshop on Graph Data Management Experiences & Systems (GRADES) and Network Data Analytics (NDA), Philadelphia, PA, USA, 12 June 2022.

14. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

15. Xu, P.; Zhu, X.; Clifton, D.A. Multimodal Learning with Transformers: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12113–12132.

16. Qiang, W.; Zhongli, Z. Reinforcement learning model, algorithms and its application. In Proceedings of the 2011 International Conference on Mechatronic Science, Electric Engineering and Computer (MEC), Jilin, China, 19–22 August 2011; pp. 1143–1146.

17. Neufeld, E.A.; Bartocci, E.; Ciabattoni, A.; Governatori, G. Enforcing ethical goals over reinforcement-learning policies. Ethics Inf. Technol. 2022, 24, 43.

18. Shakya, A.K.; Pillai, G.; Chakrabarty, S. Reinforcement learning algorithms: A brief survey. Expert Syst. Appl. 2023, 231, 120495.

19. Khan, N.M.; Madhav, C.N.; Negi, A.; Thaseen, I.S. Analysis on Improving the Performance of Machine Learning Models Using Feature Selection Technique. In Proceedings of the International Conference on Intelligent Systems Design and Applications, Online, 12–15 December 2020; pp. 69–77.

20. Disha, R.A.; Waheed, S. Performance analysis of machine learning models for intrusion detection system using Gini Impurity-based Weighted Random Forest (GIWRF) feature selection technique. Cybersecurity 2022, 5, 1.

21. Lee, J.; Pak, J.; Lee, M. Network Intrusion Detection System using Feature Extraction based on Deep Sparse Autoencoder. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 21–23 October 2020; pp. 1282–1287.

22. Jing, D.; Chen, H.B. SVM Based Network Intrusion Detection for the UNSW-NB15 Dataset. In Proceedings of the 2019 IEEE 13th International Conference on ASIC (ASICON), Chongqing, China, 29 October–1 November 2019; pp. 1–4.

23. Zhang, C.; Jia, D.; Wang, L.; Wang, W.; Liu, F.; Yang, A. Comparative research on network intrusion detection methods based on machine learning. Comput. Secur. 2022, 121, 102861.

24. Dash, N.; Chakravarty, S.; Rath, A.K.; Giri, N.C.; AboRas, K.M.; Gowtham, N. An optimized LSTM-based deep learning model for anomaly network intrusion detection. Sci. Rep. 2025, 15, 1554.

25. Udurume, M.; Shakhov, V.; Koo, I. Comparative Analysis of Deep Convolutional Neural Network–Bidirectional Long Short-Term Memory and Machine Learning Methods in Intrusion Detection Systems. Appl. Sci. 2024, 14, 6967.

26. Tran, D.H.; Park, M. FN-GNN: A Novel Graph Embedding Approach for Enhancing Graph Neural Networks in Network Intrusion Detection Systems. Appl. Sci. 2024, 14, 6932.

27. Hu, G.; Sun, M.; Zhang, C. A High-Accuracy Advanced Persistent Threat Detection Model: Integrating Convolutional Neural Networks with Kepler-Optimized Bidirectional Gated Recurrent Units. Electronics 2025, 14, 1772.

28. Wu, Z.; Zhang, H.; Wang, P.; Sun, Z. RTIDS: A Robust Transformer-Based Approach for Intrusion Detection System. IEEE Access 2022, 10, 64375–64387.

29. Ding, J.; Qian, P.; Ma, J.; Wang, Z.; Lu, Y.; Xie, X. Detect Insider Threat with Associated Session Graph. Electronics 2024, 13, 4885.

30. Sun, Z.; Teixeira, A.M.H.; Toor, S. GNN-IDS: Graph Neural Network based Intrusion Detection System. In Proceedings of the 19th International Conference on Availability, Reliability and Security, Vienna, Austria, 30 July–2 August 2024; p. 14.

31. Jianping, W.; Guangqiu, Q.; Chunming, W.; Weiwei, J.; Jiahe, J. Federated learning for network attack detection using attention-based graph neural networks. Sci. Rep. 2024, 14, 19088.

32. Ayoub Mansour Bahar, A.; Soaid Ferrahi, K.; Messai, M.-L.; Seba, H.; Amrouche, K. CONTINUUM: Detecting APT Attacks through Spatial-Temporal Graph Neural Networks. arXiv 2025, arXiv:2501.02981.

33. Ji, Y.; Huang, H.H. NestedGNN: Detecting Malicious Network Activity with Nested Graph Neural Networks. In Proceedings of the ICC 2022–IEEE International Conference on Communications, Seoul, Republic of Korea, 16–20 May 2022; pp. 2694–2699.

34. Mohanraj, S.K.; Sivakrishna, A.M. Spatio-Temporal Graph Neural Networks for Enhanced Insider Threat Detection in Organizational Security. Authorea Prepr. 2024.

35. Zhong, M.; Lin, M.; Zhang, C.; Xu, Z. A survey on graph neural networks for intrusion detection systems: Methods, trends and challenges. Comput. Secur. 2024, 141, 103821.

36. Xi, C.; Wang, H.; Wang, X. A novel multi-scale network intrusion detection model with transformer. Sci. Rep. 2024, 14, 23239.

37. Manocchio, L.D.; Layeghy, S.; Portmann, M. FlowTransformer: A flexible python framework for flow-based network data analysis. Softw. Impacts 2024, 22, 100702.

38. Abdul Kareem, S.; Sachan, R.C.; Malviya, R.K. Neural Transformers for Zero-Day Threat Detection in Real-Time Cybersecurity Network Traffic Analysis. Int. J. Glob. Innov. Solut. (IJGIS) 2024, 14, 5–7.

39. Chen, J.; Zhou, H.; Mei, Y.; Adam, G.; Bastian, N.D.; Lan, T. Real-time Network Intrusion Detection via Decision Transformers. arXiv 2023, arXiv:2312.07696.

40. De Rose, L.; Andresini, G.; Appice, A.; Malerba, D. VINCENT: Cyber-threat detection through vision transformers and knowledge distillation. Comput. Secur. 2024, 144, 103926.

41. Kheddar, H. Transformers and large language models for efficient intrusion detection systems: A comprehensive survey. Inf. Fusion 2025, 124, 103347.

42. Oh, S.H.; Kim, J.; Nah, J.H.; Park, J. Employing Deep Reinforcement Learning to Cyber-Attack Simulation for Enhancing Cybersecurity. Electronics 2024, 13, 555.

43. Yang, W.; Acuto, A.; Zhou, Y.; Wojtczak, D. A Survey for Deep Reinforcement Learning Based Network Intrusion Detection. arXiv 2024, arXiv:2410.07612.

44. Ren, K.; Zeng, Y.; Cao, Z.; Zhang, Y. ID-RDRL: A deep reinforcement learning-based feature selection intrusion detection model. Sci. Rep. 2022, 12, 15370.

45. Merzouk, M.A.; Delas, J.; Neal, C.; Cuppens, F.; Boulahia-Cuppens, N.; Yaich, R. Evading Deep Reinforcement Learning-based Network Intrusion Detection with Adversarial Attacks. In Proceedings of the 17th International Conference on Availability, Reliability and Security, Vienna, Austria, 23–26 August 2022; p. 31.

46. Ren, K.; Zeng, Y.; Zhong, Y.; Sheng, B.; Zhang, Y. MAFSIDS: A reinforcement learning-based intrusion detection model for multi-agent feature selection networks. J. Big Data 2023, 10, 137.

47. Issa, M.M.; Aljanabi, M.; Muhialdeen, H.M. Systematic literature review on intrusion detection systems: Research trends, algorithms, methods, datasets, and limitations. J. Intell. Syst. 2024, 33, 20230248.

48. MITRE ATT&CK. Enterprise Tactics. Available online: https://attack.mitre.org/tactics/enterprise/ (accessed on 22 October 2025).

49. Hutchins, E.M.; Cloppert, M.J.; Amin, R.M. Intelligence-Driven Computer Network Defense Informed by Analysis of Adversary Campaigns and Intrusion Kill Chains. Available online: https://www.lockheedmartin.com/content/dam/lockheed-martin/rms/documents/cyber/LM-White-Paper-Intel-Driven-Defense.pdf (accessed on 22 October 2025).

50. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

51. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering. 2007. Available online: https://legacyfileshare.elsevier.com/promis_misc/525444systematicreviewsguide.pdf (accessed on 22 October 2025).

52. CASP UK. CASP Checklists. Available online: https://casp-uk.net/casp-tools-checklists/ (accessed on 22 October 2025).

53. Zhao, Z.; Li, Z.; Li, T.; Zhang, F. A Large-Scale P2P Botnet Detection Framework via Topology and Traffic Co-Verification. In Proceedings of the Annual IEEE Communications Society Conference on Sensor, Mesh and Ad Hoc Communications and Networks Workshops, Phoenix, AZ, USA, 2–4 December 2024.

54. Gonçalves, L.; Zanchettin, C. Detecting abnormal logins by discovering anomalous links via graph transformers. Comput. Secur. 2024, 144, 103944.

55. Gao, P.; Zhang, H.; Wang, M.; Yang, W.; Wei, X.; Lv, Z.; Ma, Z. Deep Temporal Graph Infomax for Imbalanced Insider Threat Detection. J. Comput. Inf. Syst. 2025, 65, 108–118.

56. Akpaku, E.; Chen, J.; Ahmed, M.; Agbenyegah, F.K.; Brown-Acquaye, W.L. RAGN: Detecting unknown malicious network traffic using a robust adaptive graph neural network. Comput. Netw. 2025, 262, 111184.

57. Cuong, N.H.; Do Xuan, C.; Long, V.T.; Dat, N.D.; Anh, T.Q. A Novel Approach for APT Detection Based on Ensemble Learning Model. Stat. Anal. Data Min. 2025, 18, e70005.

58. Ding, Q.; Li, J. AnoGLA: An efficient scheme to improve network anomaly detection. J. Inf. Secur. Appl. 2022, 66, 103149.

59. Han, X.; Zhang, M.; Yang, Z. TBA-GNN: A Traffic Behavior Analysis Model with Graph Neural Networks for Malicious Traffic Detection. In Proceedings of the Wireless Artificial Intelligent Computing Systems and Applications: 18th International Conference, WASA 2024, Qingdao, China, 21–23 June 2024; pp. 374–386.

60. King, I.J.; Huang, H.H. Euler: Detecting Network Lateral Movement via Scalable Temporal Link Prediction. ACM Trans. Priv. Secur. 2023, 26, 35.

61. Kisanga, P.; Woungang, I.; Traore, I.; Carvalho, G.H.S. Network Anomaly Detection Using a Graph Neural Network. In Proceedings of the 2023 International Conference on Computing, Networking and Communications, ICNC, Honolulu, HI, USA, 20–22 February 2023; pp. 61–65.

62. Li, C.; Li, F.; Yu, M.; Guo, Y.; Wen, Y.; Li, Z. Insider Threat Detection Using Generative Adversarial Graph Attention Networks. In Proceedings of the GLOBECOM 2022–2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022; pp. 2680–2685.

63. Li, Z.; Cheng, X.; Sun, L.; Zhang, J.; Chen, B. A Hierarchical Approach for Advanced Persistent Threat Detection with Attention-Based Graph Neural Networks. Secur. Commun. Netw. 2021, 2021, 9961342.

64. Meng, X.; Lang, B.; Yan, Y.; Liu, Y. Deeply fused flow and topology features for botnet detection based on a pretrained GCN. Comput. Commun. 2025, 233, 108084.

65. Rithuh Subhakkrith, S.; Kumar, Y.J.; Harish, R.; Dheeraj, B.V.; Vidhya, S. Graph-Based Approaches to Detect Network Anomalies and to Predict Attack Spread. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies, ICCCNT 2024, Mandi, India, 18–22 June 2024.

66. Ur Rehman, M.; Ahmadi, H.; Ul Hassan, W. Flash: A Comprehensive Approach to Intrusion Detection via Provenance Graph Representation Learning. In Proceedings of the IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 19–23 May 2024; pp. 3552–3570.

67. Wu, G.; Wang, X.; Lu, Q.; Zhang, H. Bot-DM: A dual-modal botnet detection method based on the combination of implicit semantic expression and graphical expression. Expert Syst. Appl. 2024, 248, 123384.

68. Wu, Y.; Hu, Y.; Wang, J.; Feng, M.; Dong, A.; Yang, Y. An active learning framework using deep Q-network for zero-day attack detection. Comput. Secur. 2024, 139, 103713.

69. Xu, X.; Zheng, X. Hybrid model for network anomaly detection with gradient boosting decision trees and tabtransformer. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 8538–8542.

70. Yang, Y.; Wang, L. LGANet: Local Graph Attention Network for Peer-to-Peer Botnet Detection. In Proceedings of the 2021 3rd International Conference on Advances in Computer Technology, Information Science and Communication, CTISC 2021, Shanghai, China, 23–25 April 2021; pp. 31–36.

71. Zhang, H.; Zhou, Y.; Xu, H.; Shi, J.; Lin, X.; Gao, Y. Graph neural network approach with spatial structure to anomaly detection of network data. J. Big Data 2025, 12, 105.

72. Zhang, L.; Shi, H.; Zhang, K.; Sun, H.; Zhang, W. GraphMal: A Network Malicious Traffic Identification Method Based on Graph Neural Network. In Proceedings of the 2023 International Conference on Networking and Network Applications, NaNA 2023, Qingdao, China, 18–21 August 2023; pp. 262–267.

73. Zhao, J.; Liu, X.; Yan, Q.; Li, B.; Shao, M.; Peng, H. Multi-attributed heterogeneous graph convolutional network for bot detection. Inf. Sci. 2020, 537, 380–393.

74. Zoubir, A.; Missaoui, B. Graph Neural Networks with scattering transform for network anomaly detection. Eng. Appl. Artif. Intell. 2025, 150, 110546.

75. Yang, Y.; Yao, C.; Yang, J.; Yin, K. A Network Security Situation Element Extraction Method Based on Conditional Generative Adversarial Network and Transformer. IEEE Access 2022, 10, 107416–107430.

76. Xu, L.; Zhao, Z.; Zhao, D.; Li, X.; Lu, X.; Yan, D. AJSAGE: A intrusion detection scheme based on Jump-Knowledge Connection To GraphSAGE. Comput. Secur. 2025, 150, 104263.

77. Düzgün, B.; Çayır, A.; Ünal, U.; Dağ, H. Network intrusion detection system by learning jointly from tabular and text-based features. Expert Syst. 2024, 41, e13518.

78. Cao, H. The Detection of Abnormal Behavior by Artificial Intelligence Algorithms under Network Security. IEEE Access 2024, 12, 118605–118617.

79. Du, W.; He, Y.; Li, G.; Yang, X.; Li, J.; Ren, G.; Zhou, K. HyperTTC: Hypergraph-Empowered Tactic-Specific Traffic Clustering for Atomized APT Detection. In Proceedings of the 2025 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 17–20 February 2025; pp. 318–322.

80. Manocchio, L.D.; Layeghy, S.; Lo, W.W.; Kulatilleke, G.K.; Sarhan, M.; Portmann, M. FlowTransformer: A transformer framework for flow-based network intrusion detection systems. Expert Syst. Appl. 2024, 241, 122564.

81. Santosh, R.P.; Chaudhari, T.; Godla, S.R.; Ramesh, J.V.N.; Muniyandy, E.; Smitha, K.A.; Baker El-Ebiary, Y.A. AI-Driven Transformer Frameworks for Real-Time Anomaly Detection in Network Systems. Int. J. Adv. Comput. Sci. Appl. 2025, 16, 1121–1130.

82. Akkepalli, S.; Sagar, K. Copula entropy regularization transformer with C2 variational autoencoder and fine-tuned hybrid DL model for network intrusion detection. Telemat. Inform. Rep. 2025, 17, 100182.

83. Malik, M.; Saini, K.S. Network Intrusion Detection System Using Reinforcement Learning Techniques. In Proceedings of the International Conference on Circuit Power and Computing Technologies, ICCPCT 2023, Kollam, India, 10–11 August 2023; pp. 1642–1649.

84. Muhati, E.; Rawat, D.B. Asynchronous Advantage Actor-Critic (A3C) Learning for Cognitive Network Security. In Proceedings of the 2021 3rd IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications, TPS-ISA 2021, Atlanta, GA, USA, 13–15 December 2021; pp. 106–113.

85. Luna, L.; Berkowitz, M.P.; Kandel, L.N.; Jansen-Sánchez, S. Dueling Deep Q-Learning for Intrusion Detection. In Proceedings of IEEE SoutheastCon 2025, Concord, NC, USA, 22–30 March 2025; pp. 1192–1197.

86. Sharafaldin, I.; Habibi Lashkari, A.; Ghorbani, A.A. Toward Generating a New Intrusion Detection Dataset and Intrusion Traffic Characterization. In Proceedings of the International Conference on Information Systems Security and Privacy, Funchal, Portugal, 22–24 January 2018.

87. Moustafa, N.; Slay, J. UNSW-NB15: A comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In Proceedings of the 2015 Military Communications and Information Systems Conference (MilCIS), Canberra, Australia, 10–12 November 2015; pp. 1–6.

88. Glasser, J.; Lindauer, B. Bridging the Gap: A Pragmatic Approach to Generating Insider Threat Data. In Proceedings of the 2013 IEEE Security and Privacy Workshops, San Francisco, CA, USA, 23–24 May 2013; pp. 98–104.

| Approach Type | Subcategory/Technique Group | Representative Models/Methods |
|---|---|---|
| Signature-Based Detection (SIDS) | Rule and Signature-Based Systems, Pattern Matching Databases | Signature rules (Snort, Suricata) |
| Anomaly-Based Detection (AIDS) | Traditional Machine Learning | Supervised: SVM, RF, DT, KNN; Unsupervised: K-Means, One-Class SVM, Isolation Forest; Semi-/Self-Supervised: Autoencoders, VAEs |
| | Deep Learning | Spatial: CNNs; Sequential: RNN, LSTM, GRU; Hybrid Spatial–Sequential: CNN–LSTM, CNN–GRU |
| | GNNs | Static: GCN, GAT, GraphSAGE; Spatio-Temporal: STGCN, E-GraphSAGE; Hierarchical: NestedGNN; Federated: FedGAT |
| | Transformers | Sequential: Vanilla, Temporal; Hybrid/Multiscale: IDS-MTran, FlowTransformer; Vision: ViT; Decision: RL-integrated Transformers |
| | Reinforcement Learning | Value-Based: Q-Learning, DQN; Policy-Based: Actor–Critic, PPO; Hybrid: DQN + MLP, Feature-Selection RL; Multi-Agent: MAFSIDS |

| Cost Dimension | Traditional ML/DL | GNNs | Transformers | Reinforcement Learning |
|---|---|---|---|---|
| Data collection/preprocessing | Low: Flow/tabular extraction with well-established pipelines [19,20]. | High: Graph construction (node/edge design), heterogeneous/nested graphs, and dynamic graph maintenance increase overhead [26,30,32,34]. | Moderate–High: Tokenization/embedding and long-sequence handling; input encoding choices affect model size and speed [36,37,38]. | High: Environment and trajectory design, reward engineering, and realistic simulator development contribute to cost [42,44]. |
| Training compute/sample efficiency | Low | Moderate–High: Message passing, heterogeneity, and temporal variants add cost [26,30,34]. | High: Large or long-sequence variants are costly to train, although engineering choices (lite/strided designs) can reduce cost [36,37,38]. | High: Sample-inefficient iterative training may require many episodes [42,44,46]. |
| Online updating & adaptivity | Low: Inexpensive to retrain, but limited adaptivity [19,20]. | Moderate–High: Supports temporal updates, but maintaining graphs online is operationally heavier [30,34]. | High: Large models are expensive to update; distillation and lite designs help [36,37,38]. | High: Naturally adaptive, but online updates require full environment execution, monitoring, and safety controls, so operational and computational costs are significant [45,46]. |
| Operational complexity | Low | High: Graph pipelines, indexing, and dynamic updates contribute to cost [26,30,34]. | Moderate–High: Model size and serving complexity contribute to cost [36,37,38]. | High: Environment management, monitoring, and safety precautions increase cost [45]. |

| MITRE ATT&CK Tactic ID | MITRE ATT&CK Tactic Name | Network-Detectable Behavior | Cyber Kill Chain Stage (Depending on Adversarial Technique) |
|---|---|---|---|
| TA0043 | Reconnaissance | Gathering information to plan a network attack. | Reconnaissance |
| TA0042 | Resource Development | Setting up network attack infrastructure. | Weaponization/Reconnaissance |
| TA0001 | Initial Access | Gaining initial access to the network. | Delivery/Exploitation |
| TA0002 | Execution | Adversaries run malicious code on local or remote systems to enable subsequent attack actions. | Exploitation/Installation |
| TA0003 | Persistence | Threat actors maintain hidden access within the network. | Persistence/Installation |
| TA0004 | Privilege Escalation | Attempts to gain unauthorized higher-level access. | Exploitation/Installation |
| TA0005 | Defense Evasion | Obfuscating threat presence to avoid detection by network scanners. | Actions on Objectives/Installation |
| TA0006 | Credential Access | Attempts to obtain credentials for internal network systems and resources. | Exploitation/Installation |
| TA0007 | Discovery | Probing internal network resources and services. | Reconnaissance/Exploitation |
| TA0008 | Lateral Movement | Unauthorized movement between systems within the network, e.g., using stolen credentials or exploiting vulnerabilities. | Lateral Movement |
| TA0009 | Collection | Aggregating and staging data on compromised hosts before exfiltration; direct network visibility is limited, but staging patterns may be detectable. | Exfiltration/Actions on Objectives |
| TA0011 | Command and Control | Communicating with compromised hosts through beaconing or covert channels. | Command & Control |
| TA0010 | Exfiltration | Unauthorized data access or attempts to move data out of the network. | Exfiltration/Actions on Objectives |
| TA0040 | Impact | Post-exfiltration or disruption activities such as service shutdown, data destruction, or ransomware deployment; beyond the proactive detection scope, but included for completeness. | Impact/Actions on Objectives |
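
When detector outputs need to be tagged consistently, the mapping above can be held as a simple lookup. The following Python sketch is illustrative only: the dictionary and function names are hypothetical, the stage strings are copied verbatim from the table, and reading a "/" in the Kill Chain column as a list of alternative stages is an assumption based on the column header ("Depending on Adversarial Technique").

```python
from __future__ import annotations

# Hypothetical lookup transcribed from the ATT&CK-to-Kill-Chain table above:
# tactic ID -> (tactic name, Kill Chain stage string exactly as in the table).
TACTICS: dict[str, tuple[str, str]] = {
    "TA0043": ("Reconnaissance", "Reconnaissance"),
    "TA0042": ("Resource Development", "Weaponization/Reconnaissance"),
    "TA0001": ("Initial Access", "Delivery/Exploitation"),
    "TA0002": ("Execution", "Exploitation/Installation"),
    "TA0003": ("Persistence", "Persistence/Installation"),
    "TA0004": ("Privilege Escalation", "Exploitation/Installation"),
    "TA0005": ("Defense Evasion", "Actions on Objectives/Installation"),
    "TA0006": ("Credential Access", "Exploitation/Installation"),
    "TA0007": ("Discovery", "Reconnaissance/Exploitation"),
    "TA0008": ("Lateral Movement", "Lateral Movement"),
    "TA0009": ("Collection", "Exfiltration/Actions on Objectives"),
    "TA0011": ("Command and Control", "Command & Control"),
    "TA0010": ("Exfiltration", "Exfiltration/Actions on Objectives"),
    "TA0040": ("Impact", "Impact/Actions on Objectives"),
}


def tactic_name(tactic_id: str) -> str:
    """Return the ATT&CK tactic name for an ID such as 'TA0008'."""
    return TACTICS[tactic_id.strip().upper()][0]


def kill_chain_stages(tactic_id: str) -> list[str]:
    """Split the table's stage string on '/' into candidate Kill Chain stages."""
    _, stages = TACTICS[tactic_id.strip().upper()]
    return [stage.strip() for stage in stages.split("/")]


def tactics_for_stage(stage: str) -> list[str]:
    """Reverse lookup: tactic IDs (in table order) listing `stage` as a candidate."""
    return [tid for tid in TACTICS if stage in kill_chain_stages(tid)]
```

For example, `kill_chain_stages("TA0001")` returns `["Delivery", "Exploitation"]`. A plain dictionary keeps the mapping auditable against the table; which candidate stage applies to a given alert still depends on the adversarial technique, as the table notes.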

| Condition | Classification | Rationale |
|---|---|---|
| Behavior indicates intent, capability, or preparatory action without direct harm. | Threat | Potential risk at a preparatory or reconnaissance stage. |
| Behavior achieves compromise, disruption, or data loss. | Attack | Realized exploitation or system impact. |
| Behavior may serve both as a standalone attack and as a precursor within a multi-stage campaign. | Dual-context (Threat ↔ Attack) | Treated as a threat in proactive detection and as an attack when harm is evident. |
| The dataset or study does not specify the outcome (success unclear). | Default to Threat | Aligns with a proactive early-warning perspective. |
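
The four conditions above amount to a small decision rule. The Python sketch below encodes one possible reading of it. The enum and function names are hypothetical, and two points are assumptions not stated in the table: the dual-context condition is checked first, and an unreported outcome is represented as `None`.

```python
from enum import Enum
from typing import Optional


class Label(str, Enum):
    THREAT = "Threat"
    ATTACK = "Attack"
    DUAL_CONTEXT = "Dual-context"
    DEFAULT_TO_THREAT = "Default to Threat"


def classify(harm_evident: Optional[bool], dual_role: bool = False) -> Label:
    """Assign a label following the condition table.

    harm_evident: True if the behavior achieved compromise, disruption, or data
                  loss; False if it is preparatory only; None if the dataset or
                  study does not report the outcome.
    dual_role:    True if the behavior can be both a standalone attack and a
                  precursor within a multi-stage campaign.
    """
    # Assumption: the dual-context condition takes precedence over the others.
    if dual_role:
        return Label.DUAL_CONTEXT
    if harm_evident is None:
        return Label.DEFAULT_TO_THREAT
    return Label.ATTACK if harm_evident else Label.THREAT


def resolve(label: Label, harm_evident: Optional[bool]) -> Label:
    """Collapse a label to Threat or Attack for proactive (early-warning) reporting."""
    if label is Label.DUAL_CONTEXT:
        # Dual-context: threat in proactive detection, attack only when harm is evident.
        return Label.ATTACK if harm_evident else Label.THREAT
    if label is Label.DEFAULT_TO_THREAT:
        return Label.THREAT
    return label
```

Separating `classify` from `resolve` keeps the four-way label that the review reports while still yielding the binary Threat/Attack view used in proactive detection.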

| Component | Description |
|---|---|
| Research Paradigm/Philosophy | This SLR adopts a post-positivist philosophy: it assumes that objective knowledge about the performance and usage of GNNs, Transformers, and RL algorithms can be extracted by systematically evaluating existing empirical studies. |
| Research Approach | Conclusions were drawn from patterns and insights observed in the existing literature rather than from deductive testing; the approach was therefore primarily inductive. |
| Research Methodology | An SLR following the PRISMA 2020 guidelines, with a protocol pre-registered on OSF. |
| Design Type/Purpose | The study is descriptive (it describes how GNNs, Transformers, and RL are used), comparative (it compares model performance), exploratory (it identifies trends and patterns), and evaluative (it assesses the strengths and weaknesses of the models and methodologies used). |
| Data Collection & Storing Method | Secondary data were collected through structured search queries in Scopus, IEEE Xplore, and the ACM Digital Library, followed by manual searches in Scopus and IEEE Xplore and by snowballing, to identify peer-reviewed journal articles and conference papers published between 2017 and 2025. The extracted data were stored in two tables, “metrics” and “study”, in a relational database; the two tables share a Study_ID key (S1, S2, …, SX). |
| Data Types | This SLR uses mixed data types, extracting both quantitative elements (accuracy, F1 score, AUC, etc.) and qualitative elements (integration methods, methodological limitations, etc.). |
| Analysis Strategy | The primary analysis techniques were descriptive statistics for the recorded performance metrics (accuracy, F1 score, AUC, etc.) and a light thematic synthesis for qualitative insights (common model limitations, trends, etc.). In addition, a risk-of-bias assessment was performed for each included study as part of the quality appraisal. |
| Ethical Considerations | Not applicable, as the study used publicly available secondary data in a non-privacy-critical domain. Ethical research conduct was nevertheless ensured through OSF registration and proper citation. |
| Validity & Reliability/Trustworthiness | Ensured through the PRISMA 2020 guidelines, the OSF pre-registered protocol, a risk-of-bias (ROB) checklist, and consistent screening and extraction criteria. |
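
The storage design and the descriptive-statistics step described above can be sketched with Python's built-in `sqlite3` and `statistics` modules. This is a minimal sketch, not the authors' actual database. Only the table names (`study`, `metrics`) and the shared Study_ID key come from the protocol. SQLite itself, every other column name, and the helper functions are assumptions for illustration.

```python
from __future__ import annotations

import sqlite3
import statistics

# Only the table names and the shared Study_ID key come from the protocol;
# all other columns are hypothetical placeholders.
SCHEMA = """
CREATE TABLE study (
    study_id TEXT PRIMARY KEY,   -- S1, S2, ..., shared with metrics
    title    TEXT NOT NULL,
    year     INTEGER
);
CREATE TABLE metrics (
    metric_id INTEGER PRIMARY KEY AUTOINCREMENT,
    study_id  TEXT NOT NULL REFERENCES study(study_id),
    dataset   TEXT,              -- benchmark the result was reported on
    metric    TEXT NOT NULL,     -- e.g. accuracy, f1, auc
    value     REAL               -- NULL when a study does not report the metric
);
"""


def build_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create the two-table store and enforce the Study_ID foreign key."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
    conn.executescript(SCHEMA)
    return conn


def studies_per_year(conn: sqlite3.Connection) -> list[tuple[int, int]]:
    """Count included studies by publication year."""
    return conn.execute(
        "SELECT year, COUNT(*) FROM study GROUP BY year ORDER BY year"
    ).fetchall()


def describe_metric(conn: sqlite3.Connection, metric: str) -> dict[str, float]:
    """Descriptive statistics over all reported (non-NULL) values of one metric."""
    values = [
        row[0]
        for row in conn.execute(
            "SELECT value FROM metrics WHERE metric = ? AND value IS NOT NULL",
            (metric,),
        )
    ]
    if not values:
        return {"n": 0}
    return {
        "n": len(values),
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        # The sample standard deviation needs at least two values.
        "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    conn = build_db()
    # Two rows taken from the included-studies table (S1 and S36).
    conn.executemany(
        "INSERT INTO study (study_id, title, year) VALUES (?, ?, ?)",
        [
            ("S1", "RAGN: Detecting unknown malicious network traffic using a "
                   "robust adaptive graph neural network", 2025),
            ("S36", "A novel multi-scale network intrusion detection model "
                    "with transformer", 2024),
        ],
    )
    print(studies_per_year(conn))
```

Enforcing the foreign key means a metrics row cannot reference a Study_ID that is missing from `study`, so both tables stay consistent as extraction proceeds. Filtering out NULL values prevents unreported metrics from biasing the descriptive statistics.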

| Study_ID | Title | Year |
|---|---|---|
| S1 [56] | RAGN: Detecting unknown malicious network traffic using a robust adaptive graph neural network | 2025 |
| S2 [57] | A Novel Approach for APT Detection Based on Ensemble Learning Model | 2025 |
| S3 [29] | Detect Insider Threat with Associated Session Graph | 2024 |
| S4 [58] | AnoGLA: An efficient scheme to improve network anomaly detection | 2022 |
| S5 [59] | TBA-GNN: A Traffic Behavior Analysis Model with Graph Neural Networks for Malicious Traffic Detection | 2025 |
| S6 [60] | Euler: Detecting Network Lateral Movement via Scalable Temporal Link Prediction | 2023 |
| S7 [61] | Network Anomaly Detection Using a Graph Neural Network | 2023 |
| S8 [62] | Insider Threat Detection Using Generative Adversarial Graph Attention Networks | 2022 |
| S9 [63] | A Hierarchical Approach for Advanced Persistent Threat Detection with Attention-Based Graph Neural Networks | 2021 |
| S10 [64] | Deeply fused flow and topology features for botnet detection based on a pretrained GCN | 2025 |
| S11 [65] | Graph-Based Approaches to Detect Network Anomalies and to Predict Attack Spread | 2023 |
| S12 [26] | FN-GNN: A Novel Graph Embedding Approach for Enhancing Graph Neural Networks in Network Intrusion Detection Systems | 2024 |
| S13 [66] | Flash: A Comprehensive Approach to Intrusion Detection via Provenance Graph Representation Learning | 2024 |
| S14 [67] | Bot-DM: A Dual-Modal Botnet Detection Method Based on the Combination of Implicit Semantic Expression and Graphical Expression | 2024 |
| S15 [68] | An active learning framework using deep Q-network for zero-day attack detection | 2024 |
| S16 [69] | Hybrid model for network anomaly detection with gradient boosting decision trees and tabtransformer | 2021 |
| S17 [70] | LGANet: Local Graph Attention Network for Peer-to-Peer Botnet Detection | 2021 |
| S18 [71] | Graph neural network approach with spatial structure to anomaly detection of network data | 2025 |
| S19 [72] | GraphMal: A Network Malicious Traffic Identification Method Based on Graph Neural Network | 2023 |
| S20 [73] | Multi-attributed heterogeneous graph convolutional network for bot detection | 2020 |
| S21 [53] | A Large-Scale P2P Botnet Detection Framework via Topology and Traffic Co-Verification | 2024 |
| S22 [74] | Graph Neural Networks with scattering transform for network anomaly detection | 2025 |
| S23 [75] | A Network Security Situation Element Extraction Method Based on Conditional Generative Adversarial Network and Transformer | 2022 |
| S24 [76] | AJSAGE: A intrusion detection scheme based on Jump-Knowledge Connection To GraphSAGE | 2025 |
| S25 [77] | Network intrusion detection system by learning jointly from tabular and text-based features | 2023 |
| S26 [78] | The Detection of Abnormal Behavior by Artificial Intelligence Algorithms Under Network Security | 2024 |
| S27 [79] | HyperTTC: Hypergraph-Empowered Tactic-Specific Traffic Clustering for Atomized APT Detection | 2025 |
| S28 [54] | Detecting abnormal logins by discovering anomalous links via graph transformers | 2024 |
| S29 [55] | Deep Temporal Graph Infomax for Imbalanced Insider Threat Detection | 2023 |
| S30 [80] | FlowTransformer: A Transformer Framework for Flow-Based Network Intrusion Detection Systems | 2024 |
| S31 [81] | AI-Driven Transformer Frameworks for Real-Time Anomaly Detection in Network Systems | 2025 |
| S32 [82] | Copula entropy regularization transformer with C2 variational autoencoder and fine-tuned hybrid DL model for network intrusion detection | 2025 |
| S33 [83] | Network Intrusion Detection System Using Reinforcement Learning Techniques | 2023 |
| S34 [84] | Asynchronous Advantage Actor-Critic (A3C) Learning for Cognitive Network Security | 2021 |
| S35 [85] | Dueling Deep Q-Learning for Intrusion Detection | 2025 |
| S36 [36] | A novel multi-scale network intrusion detection model with transformer | 2024 |

| Threat Type (Grouped) | MITRE ATT&CK Tactic ID(s) | MITRE ATT&CK Tactic Name(s) | Cyber Kill Chain Stage | Classification |
|---|---|---|---|---|
| Reconnaissance Group | ||||
| Reconnaissance | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Analysis | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Fuzzers | TA0043 | Reconnaissance | Reconnaissance | Threat |
| PortScan | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Probing | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Probing-IP sweep | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Probing-Nmap | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Probing-Port sweep | TA0043 | Reconnaissance | Reconnaissance | Threat |
| Darknet Activity | TA0043 | Reconnaissance | Reconnaissance | Default to Threat |
| Initial Access Group | ||||
| Initial Access | TA0001 | Initial Access | Delivery/Exploitation | Dual-context |
| Initial Compromise | TA0001 | Initial Access | Delivery/Exploitation | Dual-context |
| Infiltration | TA0001 | Initial Access | Delivery/Exploitation | Threat |
| Shellcode | TA0001 | Initial Access | Exploitation | Dual-context |
| R2L | TA0001 | Initial Access | Delivery/Exploitation | Dual-context |
| SQL Injection | TA0001 | Initial Access | Exploitation | Dual-context |
| Unauthorized access | TA0001 | Initial Access | Delivery/Exploitation | Dual-context |
| Zero-Day | TA0001 | Initial Access | Exploitation | Threat |
| Persistence Group | ||||
| Persistence | TA0003 | Persistence | Installation | Dual-context |
| Backdoor | TA0003 | Persistence | Installation | Dual-context |
| Privilege Escalation Group | ||||
| Privilege Escalation | TA0004 | Privilege Escalation | Installation/Exploitation | Dual-context |
| Process Injection | TA0004 | Privilege Escalation | Exploitation | Dual-context |
| Defense Evasion Group | ||||
| Defense Evasion | TA0005 | Defense Evasion | Installation/Exploitation | Dual-context |
| Fileless Malware | TA0005 | Defense Evasion | Installation/Exploitation | Default to Threat |
| Credential Access Group | ||||
| Credential Access | TA0006 | Credential Access | Credential Access | Dual-context |
| Bruteforce | TA0006 | Credential Access | Credential Access | Threat |
| FTP-Patator | TA0006 | Credential Access | Credential Access | Threat |
| FTP-Bruteforce | TA0006 | Credential Access | Credential Access | Threat |
| SSH-Patator | TA0006 | Credential Access | Credential Access | Threat |
| SSH-Bruteforce | TA0006 | Credential Access | Credential Access | Threat |
| HTTP-Bruteforce | TA0006 | Credential Access | Credential Access | Threat |
| Unauthorized login attempts | TA0006 | Credential Access | Credential Access | Threat |
| Discovery Group | ||||
| Anomalous TCP Connections | TA0007 | Discovery | Reconnaissance/Discovery | Default to Threat |
| Lateral Movement Group | ||||
| Lateral Movement | TA0008 | Lateral Movement | Lateral Movement | Dual-context |
| Lateral Movement via Anomalous Authentications | TA0008 | Lateral Movement | Lateral Movement | Dual-context |
| Command and Control Group | ||||
| Botnets | TA0011 | Command and Control | Command and Control | Threat |
| P2P Botnets | TA0011 | Command and Control | Command and Control | Threat |
| Kelihos botnet (P2P Botnets) | TA0011 | Command and Control | Command and Control | Threat |
| C2 Botnets | TA0011 | Command and Control | Command and Control | Threat |
| C2 Traffic | TA0011 | Command and Control | Command and Control | Threat |
| Others | ||||
| Impersonation | TA0005, TA0006 | Defense Evasion, Credential Access | Reconnaissance/Delivery/Credential Access | Dual-context |
| Data Exfiltration | TA0010 | Exfiltration | Exfiltration | Default to Threat |
| Multi-stage/APT-style threats | ||||
| APT | TA0001, TA0008 | Initial Access, Lateral Movement | Reconnaissance/Delivery | Threat |
| APT-related login activities | TA0006, TA0008 | Credential Access, Lateral Movement | Credential Access/Lateral Movement | Threat |
| APT (Initial access, Privilege escalation, Persistence, Stealth operations, Data theft) | TA0001, TA0004, TA0003, TA0005, TA0010 | Initial Access, Privilege Escalation, Persistence, Defense Evasion, Exfiltration | Reconnaissance → Exploitation → Installation | Threat |
| APT (Lateral Movement, C2 Traffic) | TA0008, TA0011 | Lateral Movement, Command and Control | Lateral Movement → Command and Control | Threat |
| APT (Probing, R2L, U2R) | TA0043, TA0001, TA0004 | Reconnaissance, Initial Access, Privilege Escalation | Reconnaissance/Delivery | Threat |
| APT (Process creation & manipulation, File access and modification, Inter-process communication (IPC) hijacking, Network connection hijacking) | TA0004, TA0010, TA0005, TA0008 | Privilege Escalation, Exfiltration, Defense Evasion, Lateral Movement | Installation/Exploitation/Lateral Movement | Threat |
| APT (Rootkit installation, Process injection, Kernel-level malware usage) | TA0003, TA0004 | Persistence, Privilege Escalation | Installation/Exploitation | Threat |
| APT (Unauthorized access, Malware implantation, Data tampering, Misuse of system resources) | TA0001, TA0003, TA0010, TA0005 | Initial Access, Persistence, Exfiltration, Defense Evasion | Delivery/Installation/Exploitation | Threat |
| APT (Malware download, Malicious payload execution, Remote code execution, System command injection) | TA0001, TA0005 | Initial Access, Defense Evasion | Delivery/Exploitation | Threat |
| Multi-stage APT with advanced evasion techniques | TA0001, TA0005, TA0008 | Initial Access, Defense Evasion, Lateral Movement | Delivery/Installation/Lateral Movement | Threat |
| Multi-stage APT with attack paths | TA0001, TA0008 | Initial Access, Lateral Movement | Delivery/Lateral Movement | Threat |
| Insider Threats | ||||
| Insider Threats | TA0006, TA0008, TA0010 | Credential Access, Lateral Movement, Exfiltration | Credential Access/Lateral Movement/Exfiltration | Threat |
| Insider Threats (AB-I: Data Exfiltration) | TA0010 | Exfiltration | Exfiltration | Default to Threat |
| Insider Threats (AB-II: Credential Theft) | TA0006 | Credential Access | Credential Access | Dual-context |
| Insider Threats (AB-III: Malicious Tunneling) | TA0011 | Command and Control | Command and Control | Threat |
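
The grouped taxonomy can also be read as a lookup from dataset labels to MITRE ATT&CK tactics. The following is a minimal sketch, not drawn from any reviewed study: `TaxonomyEntry`, `THREAT_TAXONOMY`, `lookup` and `labels_by_tactic` are hypothetical names, and only six rows of the table are transcribed for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TaxonomyEntry:
    tactic_ids: tuple[str, ...]  # MITRE ATT&CK tactic IDs
    kill_chain_stage: str        # Cyber Kill Chain stage as given in the table
    classification: str          # "Threat", "Dual-context" or "Default to Threat"


# Hypothetical excerpt: six rows transcribed from the taxonomy table,
# keyed by lower-cased dataset label.
THREAT_TAXONOMY: dict[str, TaxonomyEntry] = {
    "portscan": TaxonomyEntry(("TA0043",), "Reconnaissance", "Threat"),
    "sql injection": TaxonomyEntry(("TA0001",), "Exploitation", "Dual-context"),
    "backdoor": TaxonomyEntry(("TA0003",), "Installation", "Dual-context"),
    "ssh-patator": TaxonomyEntry(("TA0006",), "Credential Access", "Threat"),
    "data exfiltration": TaxonomyEntry(("TA0010",), "Exfiltration", "Default to Threat"),
    "impersonation": TaxonomyEntry(
        ("TA0005", "TA0006"), "Reconnaissance/Delivery/Credential Access", "Dual-context"
    ),
}


def lookup(label: str) -> TaxonomyEntry | None:
    """Case- and whitespace-insensitive lookup; returns None for unmapped labels."""
    return THREAT_TAXONOMY.get(label.strip().lower())


def labels_by_tactic() -> dict[str, list[str]]:
    """Invert the mapping: tactic ID -> sorted labels; multi-tactic rows appear under each ID."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for label, entry in THREAT_TAXONOMY.items():
        for tactic in entry.tactic_ids:
            grouped[tactic].append(label)
    return {tactic: sorted(labels) for tactic, labels in sorted(grouped.items())}
```

Keying on lower-cased labels absorbs spelling variants such as "PortScan" and "Portscan" that occur across datasets, and a multi-tactic row such as Impersonation is listed under every tactic it maps to.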

| Model/Hybrid Approach | Unique Study Count |
|---|---|
| GAT | 4 |
| GCN | 4 |
| GraphSAGE | 3 |
| E-GraphSAGE | 2 |
| GNN | 2 |
| AHGCN | 1 |
| CNN + GAT | 1 |
| GAN + GAT | 1 |
| GAT + KNN | 1 |
| GCN + Extra Trees | 1 |
| GCN + GraphSAGE | 1 |
| GCN + GRU | 1 |
| GCN + KNN | 1 |
| GCN + LSTM | 1 |
| GCN + LSTM + SM | 1 |
| GCN + RL | 1 |
| GraphSAGE + KNN | 1 |
| MLP + CR_Layer + GAT | 1 |
| Graph Transformer | 1 |

| Model/Hybrid Approach | Unique Study Count |
|---|---|
| Transformer | 2 |
| BERT | 2 |
| Transformer + MLP | 1 |
| Transformer + CNN | 1 |
| Transformer + CNN-BiLSTM | 1 |
| TabTransformer | 1 |
| TabTransformer + GBM | 1 |
| CGAN + Vanilla Transformer | 1 |
| RM-DDCG + Transformer | 1 |
| Transformer Shallow Encoder | 1 |
| Transformer Shallow Decoder | 1 |
| GPT-2 | 1 |
| CNN + Transformer | 1 |

| Model/Hybrid Approach | Unique Study Count |
|---|---|
| CNN-BiLSTM + DQN | 1 |
| DQN | 1 |
| DQN + DAE | 1 |
| A3C | 1 |
| Dueling DQN | 1 |
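
The "Unique Study Count" columns count studies rather than result rows: in the performance tables, S24 reports GraphSAGE on seven datasets yet contributes only one to the GraphSAGE count. A minimal sketch of that deduplication follows, assuming the results are available as hypothetical `(study_id, model)` pairs; `unique_study_counts` is an illustrative name, not part of the review's tooling.

```python
from __future__ import annotations

from collections import defaultdict


def unique_study_counts(results: list[tuple[str, str]]) -> dict[str, int]:
    """Count distinct study IDs per model/hybrid approach.

    `results` holds one (study_id, model) pair per reported result row, so a
    study that evaluates the same model on several datasets appears several
    times but is counted only once.
    """
    studies: dict[str, set[str]] = defaultdict(set)
    for study_id, model in results:
        studies[model].add(study_id)
    # Descending count, ties broken alphabetically by model name.
    ranked = sorted(studies.items(), key=lambda item: (-len(item[1]), item[0]))
    return {model: len(ids) for model, ids in ranked}
```

Fed the GraphSAGE rows of the performance tables (five from S13, seven from S24 and one from S6), it returns 3, the GraphSAGE count listed above; the six S22 rows plus the single S19 row likewise yield the E-GraphSAGE count of 2.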

| ID | Model | Dataset | Threat Type | Performance % |
|---|---|---|---|---|
| S1 | GAT | CICIDS2018 + synthetic zero-day traffic | Zero-Day | A: 99.12, P: 99.18, R: 99.09, F1: 99.13, FPR: 0.35 |
| S1 | GAT | CTU-13 + synthetic zero-day traffic | Zero-Day | A: 98.90, P: 98.85, R: 98.82, F1: 98.83, FPR: 0.31 |
| S1 | GAT | USTC-TFC2016 + synthetic zero-day traffic | Zero-Day | A: 99.48, P: 99.43, R: 99.40, F1: 99.42, FPR: 0.30 |
| S1 | GAT | UJS-IDS2022 + synthetic zero-day traffic | Zero-Day | A: 98.12, P: 98.18, R: 98.00, F1: 97.13, FPR: 0.25 |
| S2 | MLP + CR_Layer + GAT | Malware Capture CTU-13 | APT | A: 99, P: 100, R: 99, F1: 99 |
| S3 | GCN | CERT r4.2 | Insider Threats (AB-I: Data Exfiltration) | A: 98.67, P: 99.61, F1: 98.59, AUC: 98.66, TPR: 97.7, FPR: 0 |
| S3 | GCN | CERT r4.2 | Insider Threats (AB-II: Credential Theft) | A: 99.56, P: 99.54, F1: 99.53, AUC: 99.56, TPR: 99.56, FPR: 0 |
| S3 | GCN | CERT r4.2 | Insider Threats (AB-III: Malicious Tunneling) | A: 99.67, P: 99.14, F1: 99.13, AUC: 98.63, TPR: 99.15, FPR: 0 |
| S4 | GCN + LSTM | CICIDS2017 | Probing, U2R, R2L | A: 99.24, P: 98.50, R: 98.62, F1: 98.72 |
| S4 | GCN + LSTM | CTU-13 | Botnets | A: 99.31, P: 98.04, R: 99.53, F1: 99.47 |
| S5 | CNN + GAT | ISCX-IDS2012 | SSH-Bruteforce, HTTP-Bruteforce, Infiltration, Botnets, PortScan | A: 99.75, P: 99.78, R: 99.73, F1: 99.76 |
| S5 | CNN + GAT | CICIDS2017 | FTP-Patator, SSH-Patator, Botnets, PortScan, Infiltration | A: 99.97, P: 99.94, R: 99.97, F1: 99.96 |
| S6 | GCN + LSTM + SM | OpTC | Lateral Movement | P: 98.6, R: 94.9, AUC: 99.4, TPR: 94.9, FPR: 0.013 |
| S10 | GCN + Extra Trees | CTU-13 | C2 Botnets, P2P Botnets | A: 98.85, R: 92.90, F1: 91.33 |
| S10 | GCN + Extra Trees | ISCX-2014 | C2 Botnets, P2P Botnets | A: 99.10, R: 94.66, F1: 91.86 |
| S10 | GCN + Extra Trees | CICIDS2017 | Kelihos botnet (P2P Botnets) | A: 98.23, R: 93.77, F1: 95.38 |
| S12 | GCN + GraphSAGE | CICIDS2017 | FTP-Patator, SSH-Patator, Botnets, PortScan, Infiltration | A: 99.76, P: 99.26, R: 99.51, F1: 99.76 |
| S12 | GCN + GraphSAGE | UNSW-NB15 | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 98.65, P: 98.01, R: 98.97, F1: 98.65 |
| S13 | GraphSAGE | DARPA E3 Cadets | Initial Compromise, Persistence, Privilege Escalation | P: 94, R: 99, F1: 96, FPR: 0.072 |
| S13 | GraphSAGE | DARPA E3 Trace | Lateral Movement, Defense Evasion | P: 95, R: 99, F1: 97, FPR: 0.009 |
| S13 | GraphSAGE | DARPA E3 Theia | Process Injection, Fileless Malware | P: 92, R: 99, F1: 95, FPR: 0.017 |
| S13 | GraphSAGE | DARPA OpTC (Threat 1) | Initial Access, Lateral Movement | P: 91, R: 94, F1: 92, FPR: 0.0009 |
| S13 | GraphSAGE | DARPA OpTC (Threat 3) | Backdoor | P: 92, R: 92, F1: 92, FPR: 0.0002 |
| S17 | GAT | PeerRush + CTU-13 + MAWI Working Group Traffic Archive | P2P Botnets | P: 90.5, R: 100, F1: 95, FPR: 0.6 |
| S18 | GAT | UNSW-NB15 | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 93.17, R: 88.12, F1: 93.41 |
| S18 | GAT | CampusNet-Logs | Reconnaissance, Unauthorized login attempts, Persistence, Privilege Escalation, Defense Evasion, Credential Access, Lateral Movement | A: 98.62, R: 98.62, F1: 99.13 |
| S20 | AHGCN | Custom honeypot dataset | Botnets | P: 98.81, R: 97.65 |
| S20 | AHGCN | CTU-13 | Botnets | P: 98.24, R: 98.31 |
| S21 | GCN + RL | ISCX-Botnet + CAIDA + synthetic P2P | P2P Botnets | A: 99.78, P: 98.89, R: 98.92, F1: 98.91 |
| S22 | E-GraphSAGE | NF-UNSW-NB15-v2 (0% contamination) | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 98.74, Macro-F1: 92.72 |
| S22 | E-GraphSAGE | NF-UNSW-NB15-v2 (4% contamination) | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 98.74, Macro-F1: 92.85 |
| S22 | E-GraphSAGE | NF-CSE-CIC-IDS2018-v2 (0% contamination) | FTP-Bruteforce, SSH-Bruteforce, Infiltration, Botnets, PortScan | A: 98.54, Macro-F1: 98.06 |
| S22 | E-GraphSAGE | NF-CSE-CIC-IDS2018-v2 (4% contamination) | FTP-Bruteforce, SSH-Bruteforce, Infiltration, Botnets, PortScan | A: 96.36, Macro-F1: 95.28 |
| S22 | E-GraphSAGE | NF-UNSW-NB18-v2 (0% contamination) | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 98.54, Macro-F1: 96.36 |
| S22 | E-GraphSAGE | NF-UNSW-NB18-v2 (4% contamination) | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 98.06, Macro-F1: 95.28 |
| S24 | GraphSAGE | Unicorn SC-2 | APT (Malware download, Remote code execution, etc.) | A: 94, P: 93, R: 96, F1: 94, FPR: 0.072 |
| S24 | GraphSAGE | DARPA TC#3 Theia | APT (Unauthorized access, Malware implantation) | P: 89, R: 99, F1: 94, FPR: 0.009 |
| S24 | GraphSAGE | DARPA TC#3 Trace | APT (Rootkit installation, Process injection) | P: 76, R: 99, F1: 86, FPR: 0.017 |
| S24 | GraphSAGE | DARPA TC#3 Cadets | APT (Process manipulation, Network hijacking) | P: 79, R: 99, F1: 88, FPR: 0.0009 |
| S24 | GraphSAGE | DARPA TC#3 Fivedirections | APT (Initial access, Data theft) | P: 79, R: 89, F1: 84, FPR: 0.0002 |
| S24 | GraphSAGE | DARPA TC#5 Theia | Multi-stage APT with attack paths | P: 86, R: 76, F1: 80, FPR: 0.074 |
| S24 | GraphSAGE | DARPA TC#5 Trace | Multi-stage APT with advanced evasion | P: 87, R: 99, F1: 93, FPR: 0.065 |
| S28 | Graph Transformer | CERT r4.2 | APT-related login activities | F1: 92.44, AUC: 94.39, TPR: 92.53, FPR: 7.74 |
| S28 | Graph Transformer | CERT r5.2 | APT-related login activities | F1: 93.52, AUC: 95.35, TPR: 93.52, FPR: 6.81 |
| S28 | Graph Transformer | PicoDomain | APT, Lateral Movement via Anomalous Authentications | F1: 88.89, AUC: 75, TPR: 100, FPR: 25 |
| S29 | GNN | CERT r6.2 | Insider Threats (Espionage, Data theft, Policy violations) | P: 95.52, R: 96.79, F1: 95.58, AUC: 96.32 |
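
The Performance % column uses the abbreviations A (accuracy), P (precision), R (recall), TPR (true positive rate), F1 and FPR (false positive rate). As a minimal sketch, assuming the standard binary definitions with attacks as the positive class, these relate to confusion-matrix counts as shown below. `ConfusionCounts` and `detection_metrics` are hypothetical names, and individual studies may use multiclass or macro-averaged variants, so this illustrates the definitions rather than reconstructing any study's evaluation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # attack samples flagged as attacks
    fp: int  # benign samples flagged as attacks
    tn: int  # benign samples passed as benign
    fn: int  # attack samples missed


def _pct(num: float, den: float) -> float:
    """num/den expressed as a percentage; 0.0 when the denominator is zero."""
    return 100.0 * num / den if den else 0.0


def detection_metrics(c: ConfusionCounts) -> dict[str, float]:
    """A, P, R (= TPR), F1 and FPR in percent, for one binary confusion matrix."""
    precision = _pct(c.tp, c.tp + c.fp)
    recall = _pct(c.tp, c.tp + c.fn)  # recall and TPR are the same ratio
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "A": _pct(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "P": precision,
        "R": recall,
        "TPR": recall,
        "F1": f1,
        "FPR": _pct(c.fp, c.fp + c.tn),
    }
```

For example, 90 true positives, 10 false positives, 880 true negatives and 20 false negatives give A = 97.0, P = 90.0, R ≈ 81.8, F1 ≈ 85.7 and FPR ≈ 1.12. Because F1 is a harmonic mean, for a single binary confusion matrix it always lies between P and R; macro-averaged scores, such as the Macro-F1 reported for S22, need not satisfy this.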

| ID | Model | Dataset | Threat Type | Performance % |
|---|---|---|---|---|
| S6 | GCN | LANL | Lateral Movement | P: 0.66, AUC: 99.16, FPR: 0.47 |
| S6 | GCN + GRU | LANL | Lateral Movement | P: 0.54, R: 86.1, AUC: 99.12, TPR: 86.1, FPR: 0.5698 |
| S7 | GCN | TOR-nonTOR | C2 Traffic, Darknet Activity | A: 88 |
| S8 | GAN + GAT | CERT r4.2 | Insider Threats | F1: 84.9, AUC: 90 |
| S9 | GNN | LANL | APT (Lateral Movement, C2 Traffic) | F1: 83, AUC: 90 |
| S11 | GCN + KNN | UNSW-NB15 | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 80, F1: 81 |
| S11 | GAT + KNN | UNSW-NB15 | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 80, F1: 79 |
| S11 | GraphSAGE + KNN | UNSW-NB15 | Fuzzers, Analysis, Backdoor, Reconnaissance, Shellcode | A: 81, F1: 83 |
| S18 | GAT | CICIDS2017 | FTP-Patator, SSH-Patator, Botnets, PortScan, Infiltration | A: 69.82, F1: 83.66 |
| S19 | E-GraphSAGE | UNSW-NB15 | Reconnaissance | P: 92.37, F1: 84.17 |

| ID | Model | Dataset | Threat Type | Performance % |
|---|---|---|---|---|
| S6 | GraphSAGE | LANL | Lateral Movement | P: 0.01, FPR: 24.57 |