Abstract

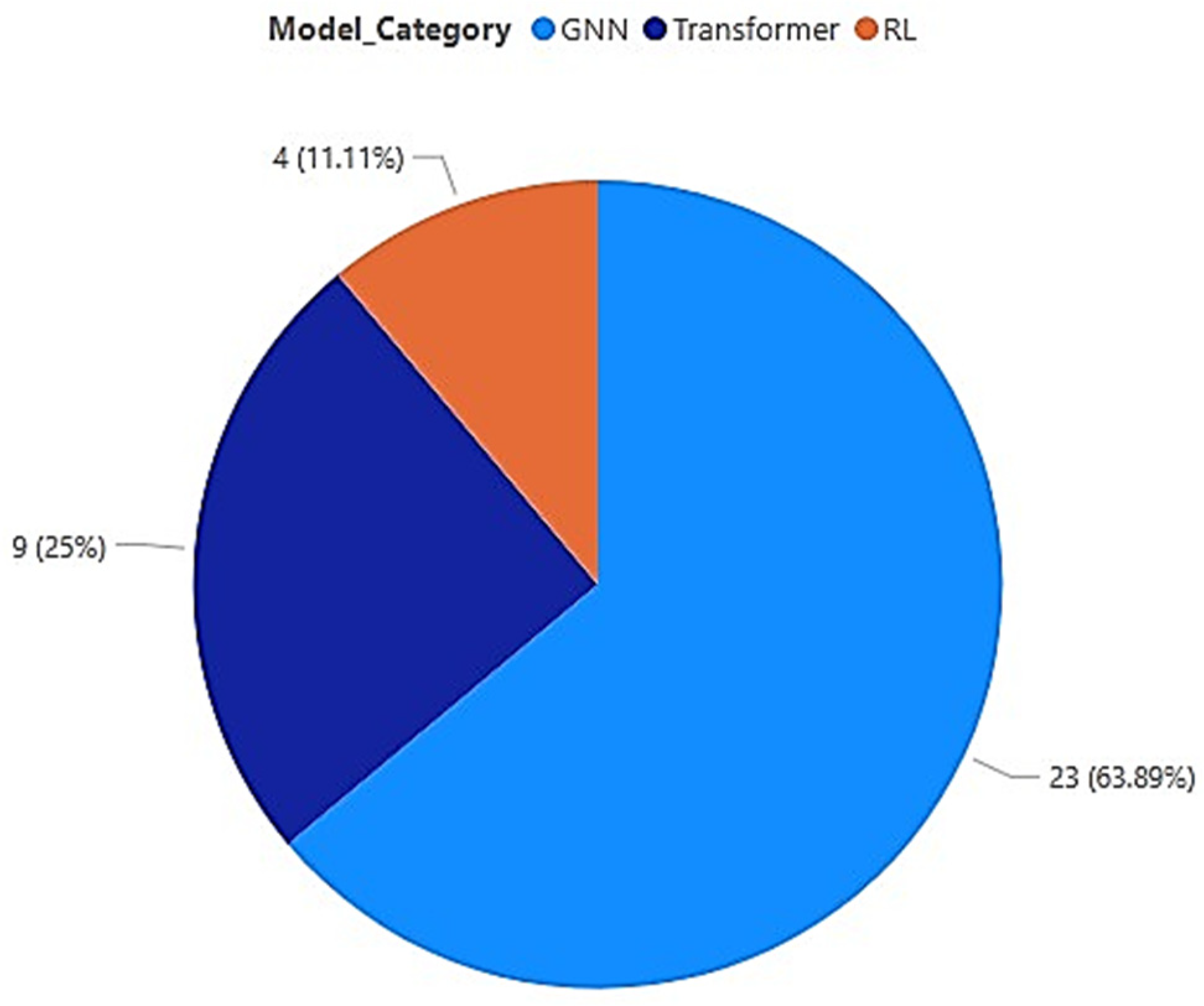

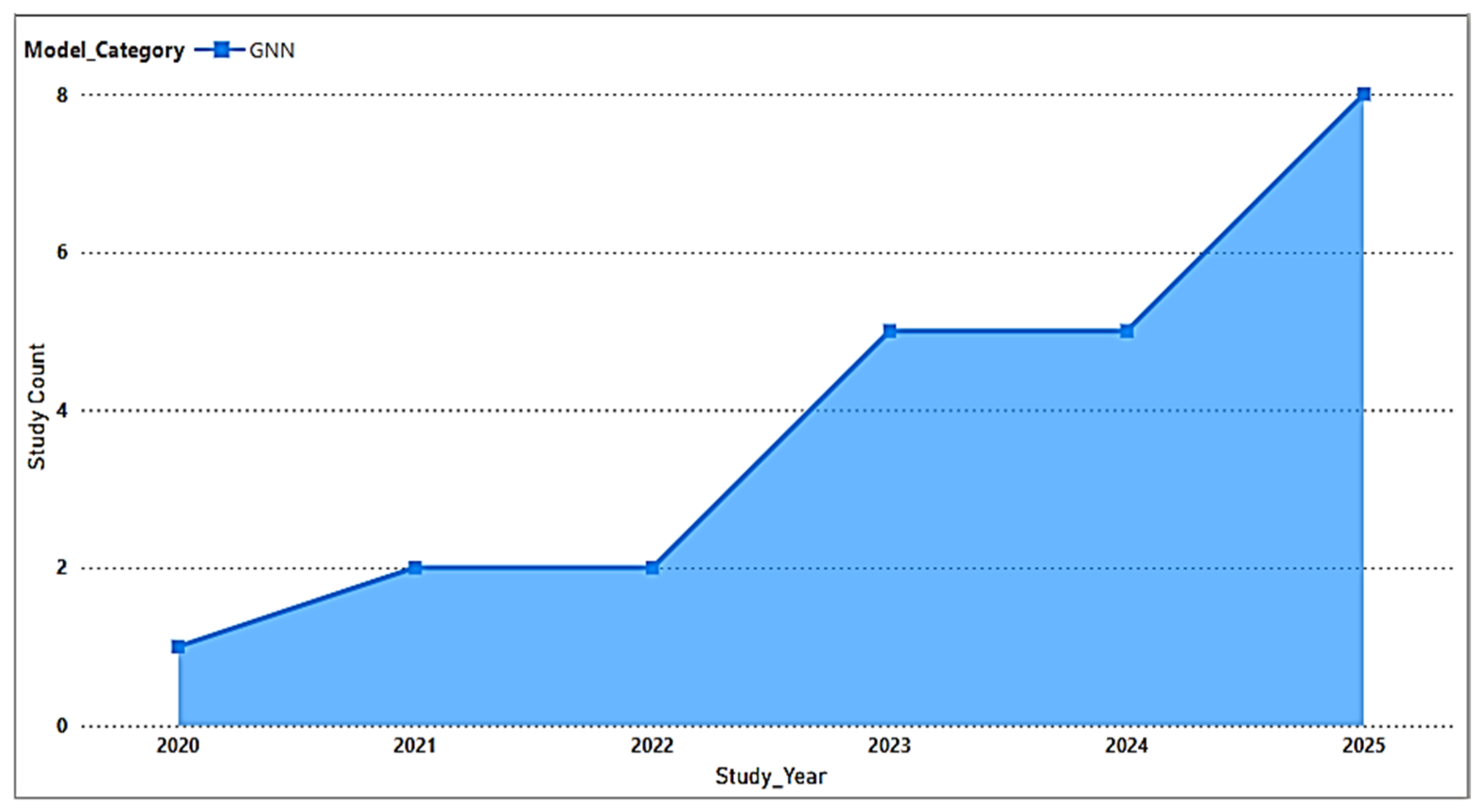

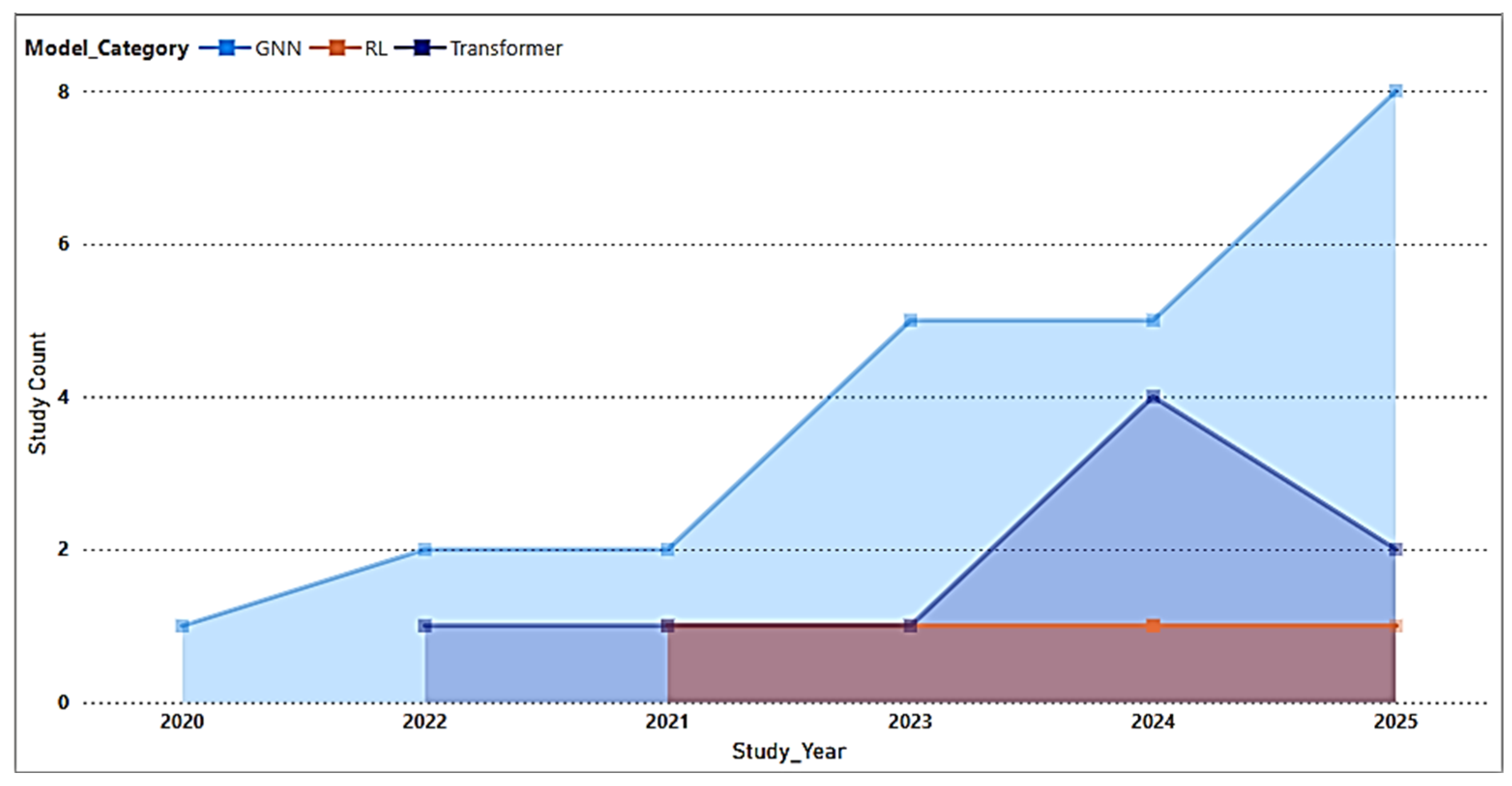

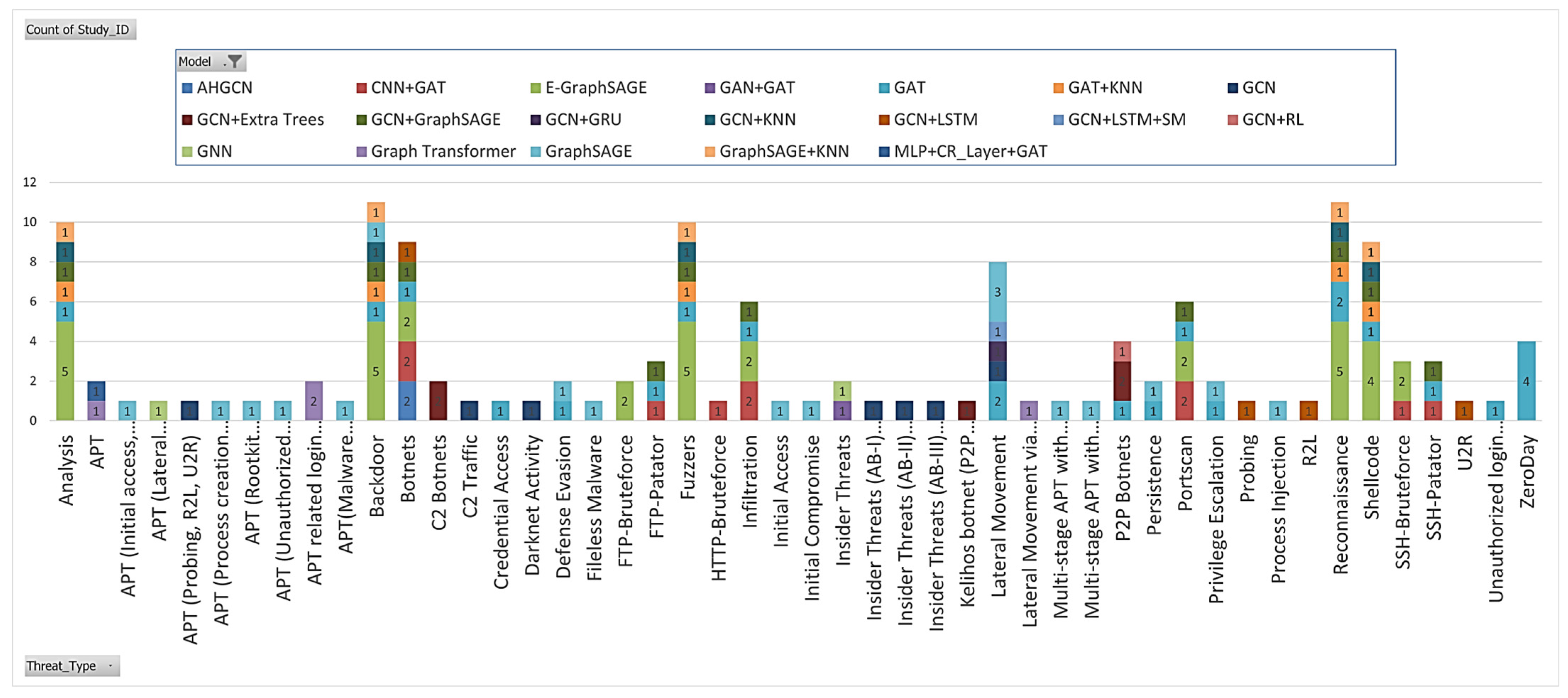

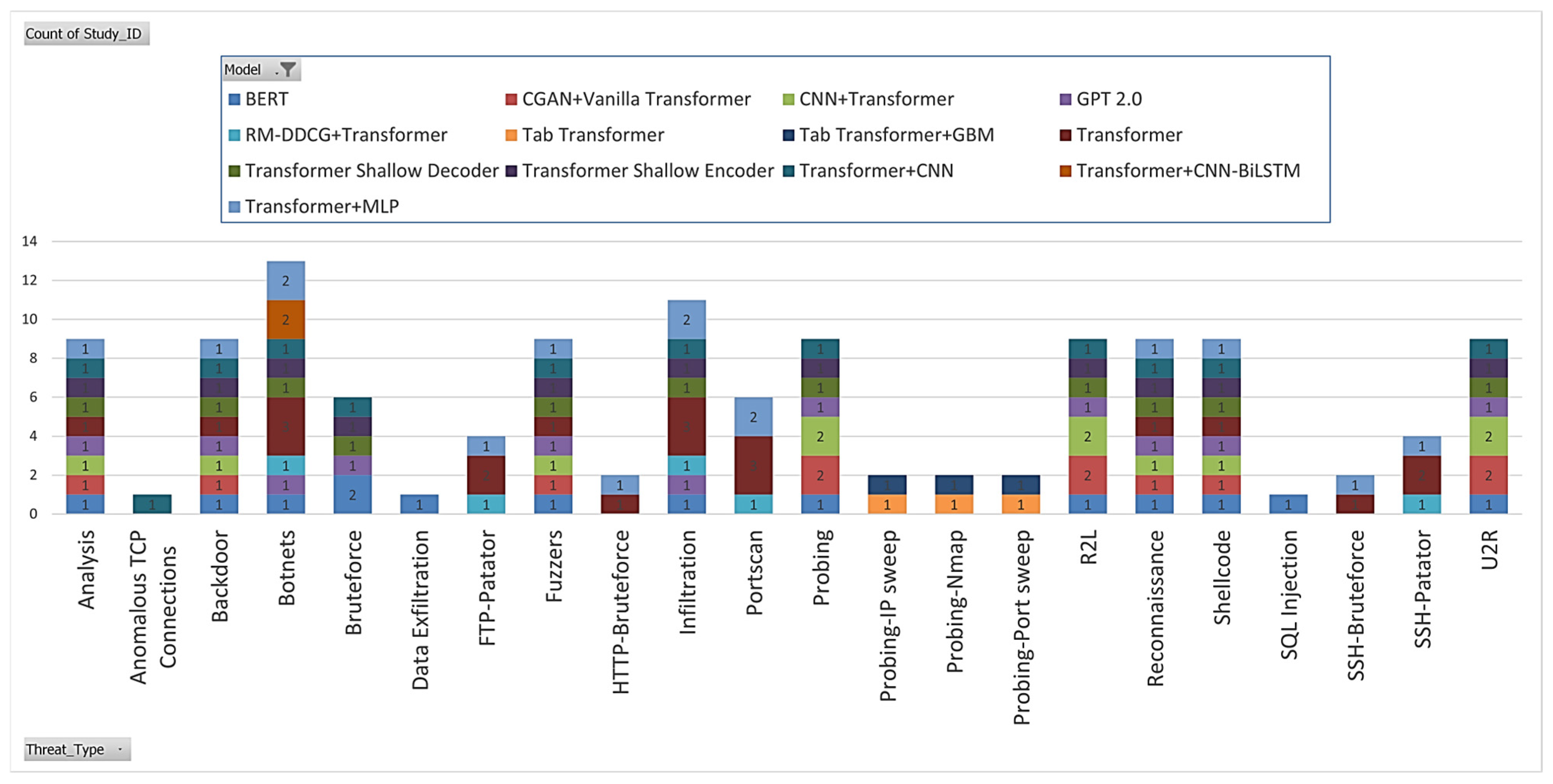

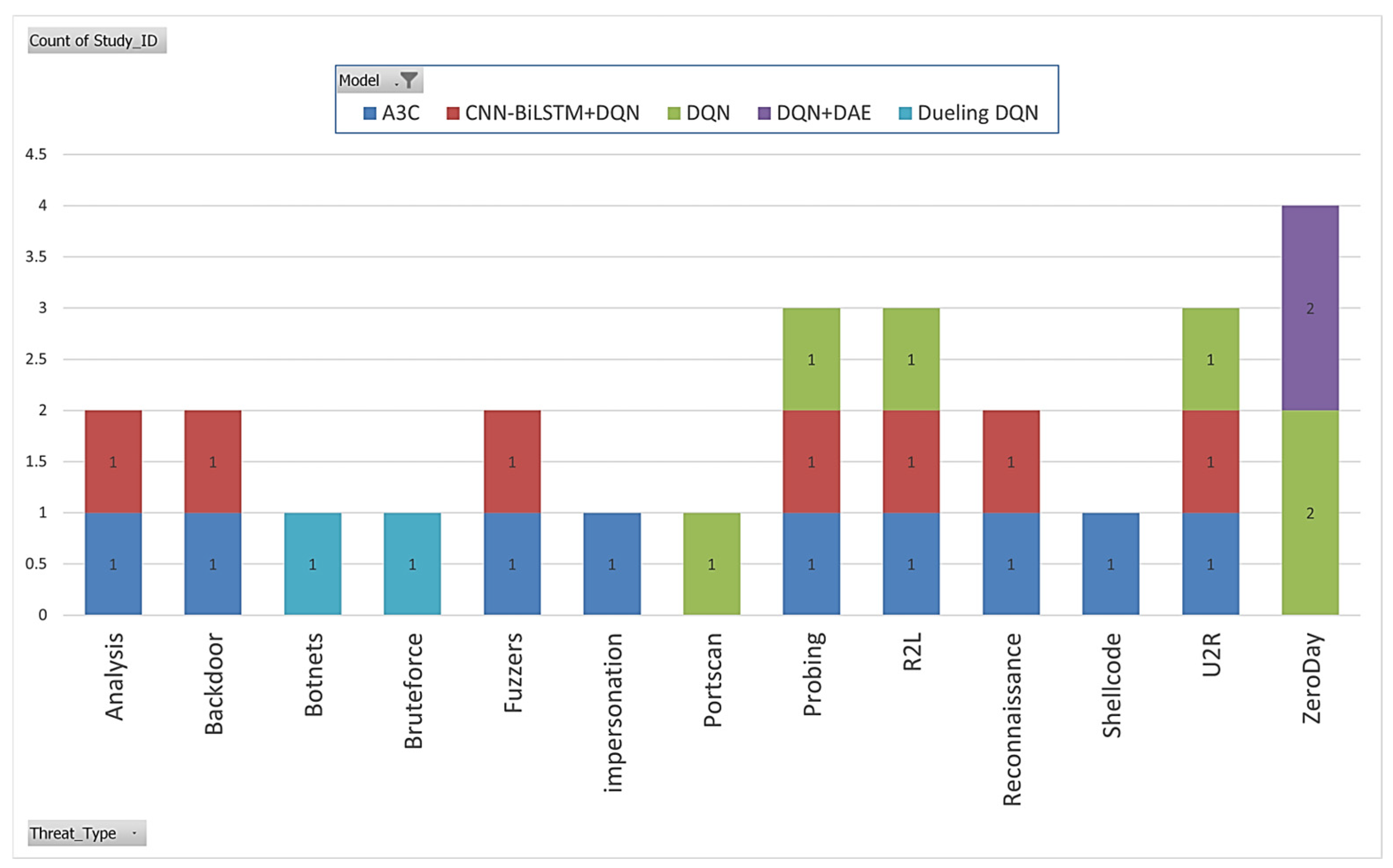

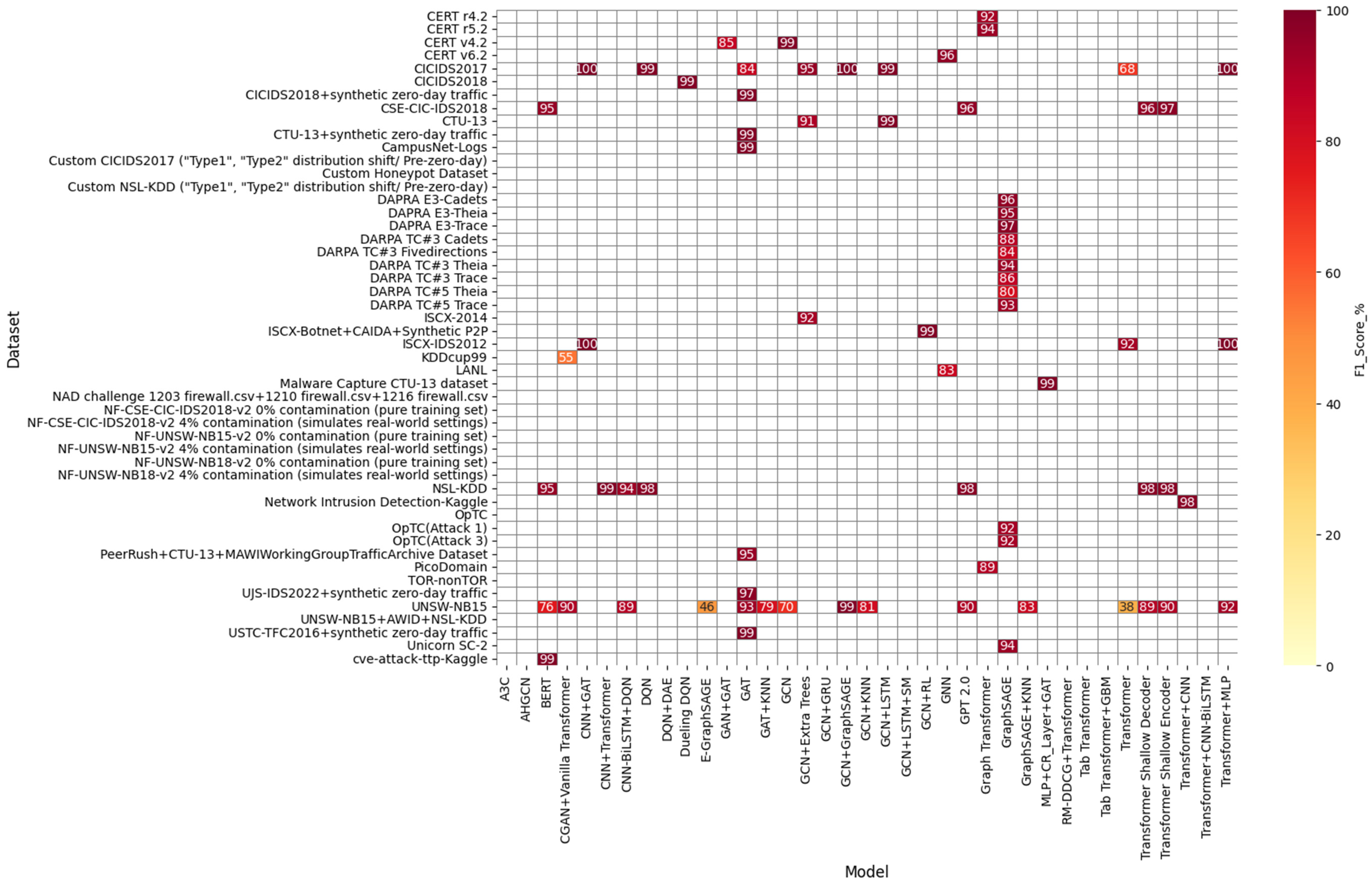

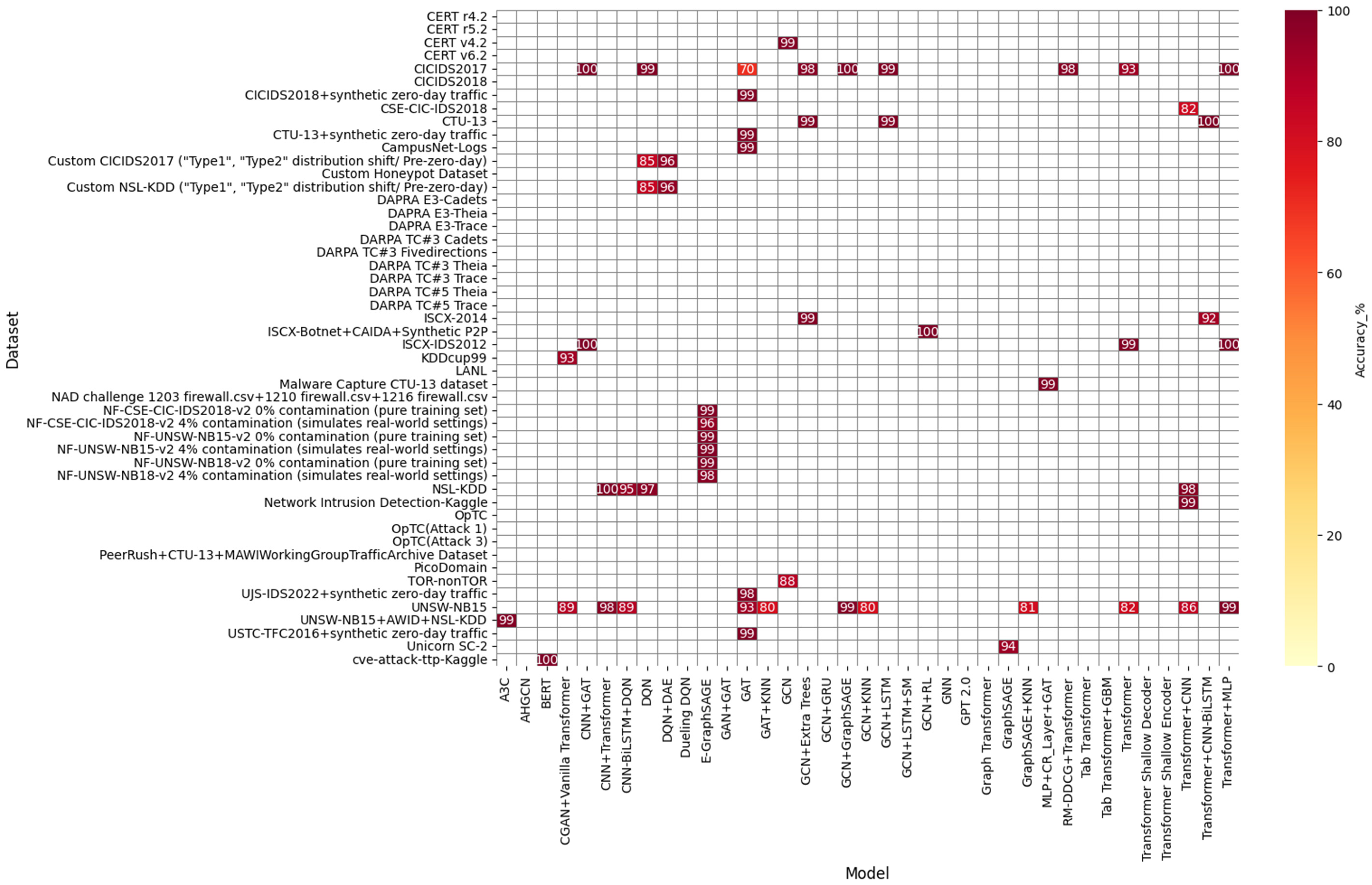

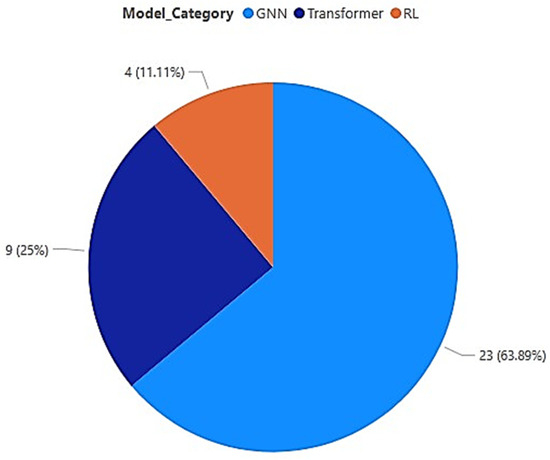

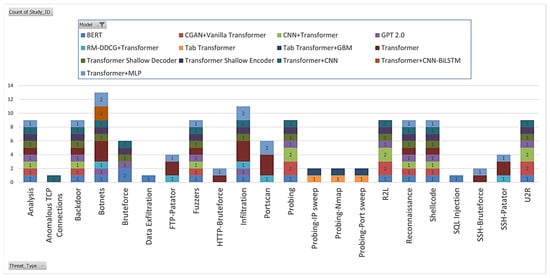

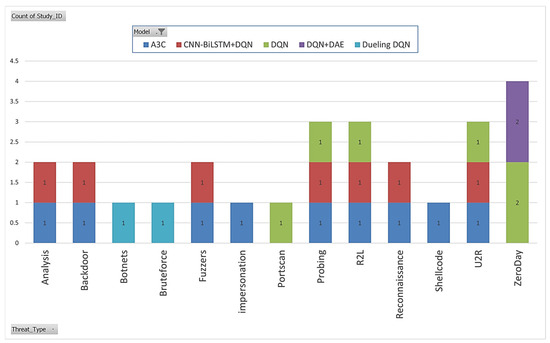

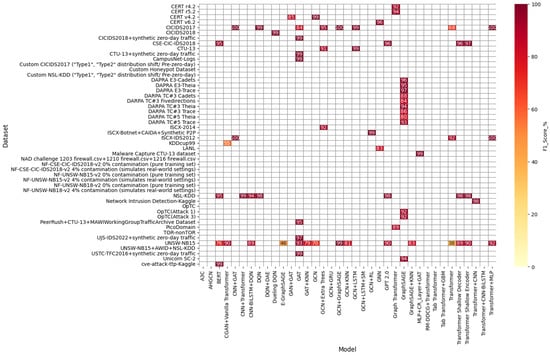

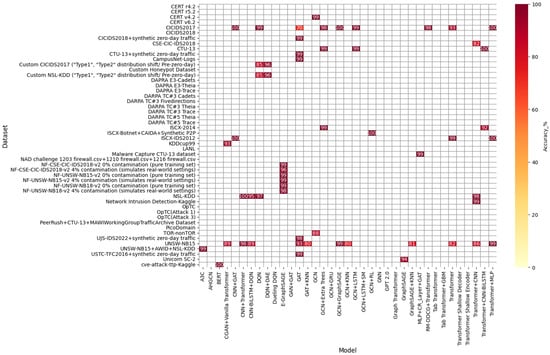

Traditional network threat detection based on signatures is becoming increasingly inadequate as network threats and attacks continue to grow in their novelty and sophistication. Such advanced network threats are better handled by anomaly detection based on Machine Learning (ML) models. However, conventional anomaly-based network threat detection with traditional ML and Deep Learning (DL) faces fundamental limitations. Graph Neural Networks (GNNs) and Transformers are recent deep learning models with innovative architectures, capable of addressing these challenges. Reinforcement learning (RL) can facilitate adaptive learning strategies for GNN- and Transformer-based Intrusion Detection Systems (IDS). However, no systematic literature review (SLR) has jointly analyzed and synthesized these three powerful modeling algorithms in network threat detection. To address this gap, this SLR analyzed 36 peer-reviewed studies published between 2017 and 2025, collectively identifying 56 distinct network threats via the proposed threat classification framework by systematically mapping them to Enterprise MITRE ATT&CK tactics and their corresponding Cyber Kill Chain stages. The reviewed literature consists of 23 GNN-based studies implementing 19 GNN model types, 9 Transformer-based studies implementing 13 Transformer architectures, and 4 RL-based studies with 5 different RL algorithms, evaluated across 50 distinct datasets, demonstrating their overall effectiveness in network threat detection.

1. Introduction

Despite the recent rise in popularity of cloud services, critical government, financial, and healthcare infrastructures still depend on Local Area Networks (LANs) for storing, processing, and sharing sensitive information. As the number of these networks employed by small- to large-scale organizations continues to grow, they become increasingly attractive targets for a wide array of network attacks. Meanwhile, as networking technologies advance, so do the volume and sophistication of the attacks. From basic reconnaissance and port scanning to zero-day exploits, insider threats, and advanced persistent threats (APTs), the variety of malicious threats and attack strategies continues to expand. Therefore, network threat detection and attack prevention have become important components of modern cybersecurity strategies, protecting sensitive data and digital assets and ensuring service continuity. Unlike conventional network attack detection, which heavily relies on recognizing known attack signatures during or after an attack, network threat detection aims primarily to identify early threat indicators through continuous monitoring and analysis of network-related activities before they escalate into full-scale attacks. These threat indicators include, but are not limited to, suspicious user and device behavior, such as unauthorized scanning, privilege escalations, unusual network patterns, and anomalies.

1.1. Intrusion Detection Systems

Today, Intrusion Detection Systems are considered one of the most effective defense mechanisms in protecting critical network infrastructures. According to their underlying detection mechanism, IDS can be categorized into two main categories: Signature-Based IDS (SIDS) and Anomaly-Based IDS (AIDS). SIDS rely on known attack signatures or rules, whereas AIDS flag deviations from normal network behavior by employing a wide range of machine learning and deep learning algorithms. A third IDS category, called hybrid IDS, combines both signature-based and anomaly-based techniques in its intrusion detection strategies. SIDS’ focus on attack detection is reactive as they require signatures of malicious activities that represent actual network attacks, whereas AIDS are fundamentally proactive in their strategy. AIDS’ main focus is on network threats and the threat stages of developing attacks, flagging threat-related deviations from normal network behavior, and actively detecting precursors to attacks.

1.1.1. Signature-Based IDS

Traditional Signature-based Intrusion Detection Systems are static systems primarily designed to detect known attack patterns by matching suspicious network activities against a database of known attack signatures, also known as fingerprints. SIDS employ straightforward logic, implemented via rule-based mechanisms such as simple if–else conditions or pattern-matching mechanisms like regular expressions [1]. Widely used SIDS such as Snort and Suricata are well known for their performance in detecting known attacks and for their low false-positive rates. However, by the time attack signatures are identified, the network is already under attack and has passed the threat development stage. Therefore, while effective and often operating in near real-time, rule/signature-based IDS are not proactive threat detectors. They inherently fail to detect zero-day and novel network exploits, such as polymorphic attacks [2,3]. SIDS’ inability to address developing attacks at their various threat stages makes them inadequate for more recent, sophisticated multistage network attack strategies, such as APT campaigns. Such campaigns demand careful analysis of network behavior over an extended period to identify potential pre-attack anomalies or threats before they manifest as real attacks. Moreover, traditional SIDS require continuous manual updates to their attack signature libraries, both to cover new attack types as they emerge and to remove outdated or inefficient signatures, which makes these databases difficult to maintain [2]. In addition, organizations that depend on SIDS remain vulnerable to new threats until they receive the corresponding signature updates. Furthermore, some studies revealed that threat actors can easily bypass SIDS by making slight changes to the payload, such as re-encoding, no-op padding, and creating polymorphic variants [4].
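The matching logic and the re-encoding evasion described above can be illustrated with a minimal sketch. The signature names, regular expressions, and payloads below are hypothetical and far simpler than the rule languages used by production systems; the sketch only shows why a fixed pattern misses a trivially re-encoded payload.

```python
import re

# Hypothetical signature database: name -> compiled regular expression.
SIGNATURES = {
    "sql_injection_union": re.compile(r"union\s+select", re.IGNORECASE),
    "path_traversal": re.compile(r"\.\./"),
}

def match_signatures(payload: str) -> list[str]:
    """Return the names of all signatures found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern.search(payload)]

original = "id=1 UNION SELECT password FROM users"
# Percent-encode every character so the literal keywords no longer appear.
re_encoded = "".join(f"%{ord(ch):02X}" for ch in original)

print(match_signatures(original))    # the known pattern is flagged
print(match_signatures(re_encoded))  # the re-encoded variant is not matched
```

A real deployment would normalize or decode payloads before matching, which narrows this particular gap but does not help against attacks for which no signature exists yet.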

1.1.2. Anomaly-Based IDS

As a result of the critical issues associated with SIDS, modern IDS are moving toward more proactive threat detection. This approach involves identifying and flagging early warning signs, suspicious network behaviors, or anomalies that may indicate upcoming network attacks. Therefore, modern IDS now focus more on AIDS qualities, where ML and DL models can be effectively used to detect network threats. These approaches are categorized into three main types based on their training methods: supervised, unsupervised, and self-supervised. In supervised training, ML and DL models learn from both normal and malicious data. Support Vector Machines (SVM), Decision Trees, and Random Forests are common supervised ML models, while DL models such as Feedforward Deep Neural Networks, Convolutional Neural Networks (CNNs), and sequential models like Recurrent Neural Networks (RNNs) or Long Short-Term Memory (LSTM) models can be trained using either supervised or unsupervised techniques. Unsupervised ML and DL models are especially important in AIDS because they excel at identifying data points that significantly deviate from normal network behavior as anomalies or threats. However, these methods often produce more false positives, which is a critical issue that needs to be addressed. Common unsupervised ML models include K-Means clustering, one-class SVM, and Isolation Forests, while autoencoders, variational autoencoders (VAEs), and Deep Belief Networks are used as unsupervised DL models. The third type, self-supervised learning, which is a subcategory of unsupervised learning, is increasingly employed in recent research, where models predict masked parts of their input data [5].
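As a minimal illustration of the unsupervised idea, the sketch below learns a baseline from unlabeled traffic assumed to be mostly normal and flags values that deviate strongly from it. A simple z-score rule stands in for the models named above (it is not K-Means, one-class SVM, or an Isolation Forest), and the byte counts and threshold are made-up illustrative values.

```python
from statistics import mean, stdev

def fit_baseline(values):
    """Summarize unlabeled traffic by its mean and sample standard deviation."""
    return mean(values), stdev(values)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    return abs(value - mu) / sigma > threshold

# Hypothetical bytes-per-flow observations collected during normal operation.
baseline_bytes = [510, 495, 502, 488, 515, 499, 505, 492]
baseline = fit_baseline(baseline_bytes)

print(is_anomalous(503, baseline))   # close to the baseline
print(is_anomalous(5000, baseline))  # far outside the baseline
```

The threshold controls the trade-off mentioned above: lowering it catches subtler deviations but raises the number of false positives.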

1.1.3. Limitations of Existing AIDS

While signature-based IDS inherently fail to identify attacks during their early threat stages and are therefore not suitable for detecting sophisticated, subtle, evolving, or zero-day attacks, the traditional ML- and DL-based anomaly detection models proposed as solutions also encounter significant limitations and challenges. These limitations and challenges include, but are not limited to:

- Generating high false-positive rates: AIDS tend to produce more false positives than SIDS. This presents a major challenge that leads to model inefficiency, alert fatigue, and unnecessary human interventions, thus requiring ongoing research contributions.

- Inability to capture complex spatial relationships and inherent graph structures related to network threats: Traditional machine learning models treat network traffic as flat, sequential data, making it difficult to accurately capture complex relationships and interdependencies between network nodes and their communications. Such spatial, graph-structured information is crucial for identifying unusual relational patterns associated with threats that can potentially lead to network attacks, including insider threats [6].

- Limitations in handling long-term dependencies in network traffic: Subtle threats that develop over a long period present a significant challenge to current anomaly detection models, such as those based on RNNs, due to problems like vanishing gradients [7]. However, identifying such threats is increasingly important in the current network security landscape.

- Adversarial robustness: Current threat detection methods often lack resilience against adversarial attacks. This makes them vulnerable to evasion techniques used by sophisticated network attacks to manipulate machine learning models with malicious data [6].

- Scalability and high dimensionality of network traffic data: Ongoing research is necessary to process network data at large scales effectively and promptly. Even the typical computer network generates network traffic with high throughput, resulting in large volumes of data that current models struggle to analyze efficiently, leading to performance bottlenecks. Therefore, achieving low latency with high network throughput remains a challenge for traditional ML and DL models. Additionally, the high dimensionality of network data leads to the curse of dimensionality, where the effectiveness and generalizability of distance-based algorithms decline as the input data dimensions increase, resulting in increased computational complexity, overfitting, spurious correlations, and sparse clusters [8].

- Covert channel detection: Traditional ML models often miss covert channel attacks that use hidden communication paths, resulting in data theft [9].

- Challenges of dynamic and evolving network environments: Real-world networks must often be restructured with different topologies to meet organizational requirements, resulting in variations in their traffic patterns over time. However, traditional anomaly detection models usually assume the conceptual structure of their network environment to be static or slowly changing, making them less effective in the real world.

- Limited interpretability and transparency: Transparency is important when making cybersecurity-based decisions. However, many ML models, particularly deep neural networks, cannot support transparency because they act as black boxes. This lack of transparency and interpretability can make it challenging for security analysts to understand the reasoning behind the detected anomalies, especially those that are previously unknown.
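The curse of dimensionality noted in the list above can be made concrete with a small experiment. The sketch below uses uniformly random points, which is an assumption made purely for illustration and not a model of real network traffic. It measures how much farther the farthest point is than the nearest one, relative to the nearest distance.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """(max distance - min distance) / min distance from a random query point."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)

for dim in (2, 10, 100, 1000):
    print(dim, round(relative_contrast(dim), 3))
```

As the dimension grows, the contrast shrinks: nearest and farthest neighbors become almost equally distant, which is why distance-based detectors lose discriminative power on high-dimensional inputs.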

1.2. GNNs

GNNs are a class of deep neural networks designed to handle graph-structured data, where entities and their interrelations are represented as nodes and edges in the graph. Unlike conventional neural networks, which operate on fixed-size input arrays where data is treated as independent, identically distributed samples, GNNs have the advantage of capturing complex interdependencies and interactions between their nodes through the process called message-passing. This allows GNNs to learn rich, hierarchical representations of graph-structured data, hence making them effective in tasks such as node classification, graph classification, and link prediction. Thus, GNNs are increasingly being employed in domains such as social network analysis and drug discovery, where problems can be accurately modeled as graphs [10].
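To make message-passing concrete, the following sketch performs rounds of plain mean aggregation on a three-node chain. It is a deliberately stripped-down assumption: real GNN layers add learnable weight matrices and nonlinearities, which are omitted here, and the node names and feature values are hypothetical.

```python
def message_passing_step(features, adjacency):
    """One round of mean aggregation over each node and its neighbours."""
    updated = {}
    for node, own in features.items():
        neighbourhood = [own] + [features[n] for n in adjacency[node]]
        updated[node] = [
            sum(vec[i] for vec in neighbourhood) / len(neighbourhood)
            for i in range(len(own))
        ]
    return updated

# Chain A - B - C; only A starts with a non-zero feature.
adjacency = {"A": ["B"], "B": ["A", "C"], "C": ["B"]}
features = {"A": [1.0], "B": [0.0], "C": [0.0]}

h1 = message_passing_step(features, adjacency)
h2 = message_passing_step(h1, adjacency)
print(h1["C"], h2["C"])  # C only receives A's signal after two rounds
```

Each round extends a node's view by one hop, so stacking k rounds lets a node's representation depend on its k-hop neighbourhood.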

The Potential of GNNs for Network Threat Detection

- Modeling complex relationships and patterns: GNNs have the potential to successfully capture the interdependencies and relationships in computer networks, including LANs, where nodes represent network entities such as hosts or IP addresses, and edges represent the relationships among the nodes, which can be communication flows, network sessions, etc. This unique characteristic of GNNs can be particularly useful for identifying network abnormalities that can span across multiple coordinated entities and their complex interactions, which traditional ML models, such as SVMs or clustering algorithms, struggle to achieve [11]. Furthermore, GNNs can be employed to identify hidden network patterns, such as the ones presented by covert channels.

- Scalability: Computer networks are known to produce a large amount of data. However, GNNs can handle graph data at large scales, making them suitable for typical network anomaly detection tasks. With appropriate architectural or sampling strategies, GNNs can be scaled to accommodate additional data without a significant loss of performance, demonstrating their inherent robustness qualities [12].

- Dynamic graph construction: Automatic dynamic graph construction techniques can be employed to address the challenge of dynamic and evolving network environments, allowing real-time updates to the graph as new data is received [13]. This improves the robustness of GNNs compared to traditional static ML models.
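The graph modeling and incremental construction described in the list above can be sketched as follows. The `FlowGraph` class, its fields, and the flow tuples are hypothetical names chosen for illustration; the reviewed studies use their own, typically richer, graph construction schemes.

```python
from collections import defaultdict

class FlowGraph:
    """Hosts as nodes, directed communication flows aggregated into edges."""

    def __init__(self):
        self.nodes = set()
        self.edges = defaultdict(lambda: {"flows": 0, "bytes": 0})

    def add_flow(self, src, dst, n_bytes):
        """Update the graph in place as each new flow record arrives."""
        self.nodes.update((src, dst))
        edge = self.edges[(src, dst)]
        edge["flows"] += 1
        edge["bytes"] += n_bytes

    def out_degree(self, host):
        """Number of distinct destinations contacted by a host."""
        return sum(1 for (src, _dst) in self.edges if src == host)

graph = FlowGraph()
flows = [
    ("10.0.0.5", "10.0.0.1", 1200),
    ("10.0.0.5", "10.0.0.2", 60),
    ("10.0.0.5", "10.0.0.3", 60),
    ("10.0.0.7", "10.0.0.1", 900),
    ("10.0.0.5", "10.0.0.1", 800),
]
for src, dst, n_bytes in flows:
    graph.add_flow(src, dst, n_bytes)

print(len(graph.nodes), len(graph.edges), graph.out_degree("10.0.0.5"))
```

Edge attributes such as flow counts and byte totals are the kind of information a GNN can then propagate between neighbouring hosts.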

1.3. Transformers

The Transformer architecture was introduced in the paper titled “Attention is All You Need” [14], and belongs to the family of sequential deep learning models. Transformers use a mechanism called “Self-attention” which enables them to measure the importance of different elements with respect to each other within long input data sequences. This allows the Transformer to capture long-range dependencies and relationships in the input data, such as long-form text prompts, efficiently. Therefore, Transformers are particularly effective in large datasets with complex patterns, due to their ability to handle long sequences in parallel and focus on relevant parts of the data through the self-attention mechanism [14].
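The core computation of self-attention can be sketched in a few lines. The version below is a simplification: it uses the input vectors directly as queries, keys, and values, whereas the original architecture applies learned linear projections and multiple attention heads. The toy sequence is hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(sequence):
    """Scaled dot-product attention with queries = keys = values = inputs."""
    d = len(sequence[0])
    outputs, weights = [], []
    for query in sequence:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in sequence
        ]
        row = softmax(scores)
        weights.append(row)
        outputs.append(
            [sum(w * value[i] for w, value in zip(row, sequence)) for i in range(d)]
        )
    return outputs, weights

# Positions 0 and 2 carry the same pattern; position 1 differs.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = self_attention(sequence)
print([round(w, 3) for w in weights[0]])
```

Position 0 attends more strongly to the similar position 2 than to its immediate neighbour, and every position is processed independently of the others, which is what allows the computation to run in parallel.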

In recent years, Transformers have given rise to Large Language Models (LLMs) and generative artificial intelligence (GenAI). However, despite the huge potential of the Transformers, they have not been largely explored in the cybersecurity literature. The aforementioned traits of Transformers are highly suitable for analyzing large network traffic data presented as network flows (e.g., source and destination IP addresses, ports) or sequences of network events (e.g., login attempts, file transfers) to detect sophisticated network threats.

Potential of Transformers for Network Threat Detection

- Handling long sequential data: Transformers are designed to process sequences of data and capture dependencies over long ranges. Hence, they can be potentially used in analyzing network traffic to identify sophisticated threats that evolve slowly by detecting abnormal patterns growing over extended periods [14].

- Attention mechanisms: By utilizing its attention mechanisms, the Transformer model can focus on relevant parts of its input sequence. This helps highlight important features that correspond to malicious behaviors and thus enhances the precision by reducing false positives.

- Parallel processing: Balancing real-time detection vs. accuracy is critical in any anomaly detection mechanism. Achieving low latency in high-throughput environments is crucial for supporting tasks such as network traffic analysis, which is challenging for traditional sequential models, including RNNs. However, Transformers can process entire sequences simultaneously, enabling low-latency detection in network environments.

- Versatility in data handling: Furthermore, Transformers are highly versatile and can be applied to handle different input modalities, enabling multi-faceted network threat analysis, which can improve model robustness [15].

- Interpretability and transparency: Attention weights in Transformers can be inspected to highlight the parts of the input data that the model attended to most during the threat detection process. This can be exploited to interpret the Transformer’s decisions to some degree, improving overall transparency.

1.4. Reinforcement Learning

Reinforcement Learning (RL) is a machine learning paradigm in which an RL agent learns to make decisions in its environment through a system of rewards and penalties. The agent receives a reward from the environment when its action is desirable and a penalty otherwise. RL can be a powerful learning tool for solving complex network-related issues where traditional, static systems may fail, as it improves system performance over time by continuously observing how the network behaves, exploring new actions, and updating its policies for long-term outcomes. Thus, RL has the potential to adaptively learn optimal policies for network threat detection, especially when networks are dynamic or when labeled data is limited. Moreover, RL algorithms can be jointly employed with other ML and DL models as core IDS components to support adaptive and proactive decision-making [16].
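The reward-and-penalty loop can be illustrated with a toy agent that learns when to raise an alert. This is a sketch under strong assumptions: the two states, two actions, and the reward function are hypothetical, and the update is a one-step value update (a contextual-bandit simplification of Q-learning with no next-state term), not any algorithm from the reviewed studies.

```python
import random

ACTIONS = ("ignore", "alert")
STATES = ("normal_traffic", "port_scan")

def reward(state, action):
    """+1 for the desirable action in a state, -1 (penalty) otherwise."""
    desirable = "alert" if state == "port_scan" else "ignore"
    return 1.0 if action == desirable else -1.0

def train(episodes=500, alpha=0.1, epsilon=0.1, seed=0):
    """Epsilon-greedy agent with a tabular one-step value update."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in STATES}
    for _ in range(episodes):
        state = rng.choice(STATES)
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
        q[state][action] += alpha * (reward(state, action) - q[state][action])
    return q

q_table = train()
policy = {s: max(ACTIONS, key=lambda a: q_table[s][a]) for s in STATES}
print(policy)
```

The agent is never told the correct action directly; it infers a policy from rewards alone, which is the property that makes RL attractive when labeled data is limited.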

Potential of Reinforcement Learning in Network Threat Detection

- Adaptive learning: RL enables IDS to adapt to new and evolving threats by learning continuously through interactions with the network environment. This helps RL-enabled IDS remain responsive to changing attack patterns.

- Optimization of detection strategies: By learning the optimal policies, RL can optimize the trade-offs between detection accuracy, false positives, and computational efficiency. This can significantly improve the overall performance and resilience of network threat detection systems [17].

- Decision making in real time: RL can improve real-time IDS decisions and responses based on the current state of the network [18].

- Tuning hyperparameters of the base models: RL can be used to select suitable hyperparameters for ML and DL models when deployed in hybrid setups.

1.5. Aims and Contributions of the SLR

1.5.1. Aims

This SLR aims to conduct a thorough examination of the role of GNNs, Transformers, and RL algorithms in network threat detection by systematically analyzing and synthesizing existing peer-reviewed literature. Thus, it seeks to:

- Explore how GNNs, Transformers and RL algorithms have been employed in current network threat detection literature, standalone or in hybrid manners.

- Explore the effectiveness of these models and algorithms in network threat detection, especially in detecting sophisticated modern network threats.

- Identify key trends, strengths, limitations, and gaps in existing approaches.

1.5.2. Contributions

- A potentially novel conceptual framework for classifying network threats, inspired by the MITRE ATT&CK model and the Cyber Kill Chain, that clearly separates network threats from network attacks and maps such network threats to their respective MITRE ATT&CK tactics.

- A domain-specific contextualization of network threat detection focused on conventional Ethernet and TCP/IP-based LANs.

- A structured and detailed synthesis of how GNNs, Transformers and RL algorithms are currently employed in conventional LAN-based network threat detection.

2. Literature Review

2.1. Traditional Machine Learning Methods for Network Threat Detection

Traditional ML classifiers have long been used in intrusion detection systems. ML algorithms like Random Forests, Decision Trees, K-Nearest Neighbors, Support Vector Machines, and XGBoost are commonly employed as network traffic classifiers. Although these ML models perform well on classic benchmarks, such as KDDCup’99 or NSL-KDD, they perform worse on more modern datasets, such as CICIDS2017/2018 and UNSW-NB15, often achieving accuracies between 70% and 90% [19]. Specifically, Ref. [19] reported that Random Forest (RF) obtained 74.87%, XGBoost 71.43%, Decision Tree (DT) 74.22%, and K-Nearest Neighbor (KNN) 71.10% accuracy on the UNSW-NB15 dataset, using a reduced set of 11 features selected by Random Forest feature importance. The study also stated that traditional ML models frequently struggled to generalize to unseen data. According to [20], traditional ML classifiers often struggled with complex network traffic and required dimensionality reduction, class balancing, and ensemble methods to remain competitive with DL models. Among these ML threat classifiers, tree-based models are popular for their interpretability and inference speed, and RF is less prone to overfitting than single-tree methods. Nevertheless, as explained above, they all struggle to generalize to complex modern network data. When combined with deep learning models, however, the resulting hybrids were shown to improve on RF-only performance. In the study in [21], an ensemble RF + Deep Sparse Autoencoder (DSAE-RF) achieved 99.83% accuracy on CICIDS2017. Overall, tree-based methods struggled to perform well without richer features or ensembles with deep learners, although they remained computationally cheap at inference when compared to more computationally intensive models like SVMs [21].

According to [20], SVMs have been widely used in network threat detection. However, they perform poorly when presented with very large or imbalanced feature sets and often require data to be preprocessed using feature scaling techniques or PCA to achieve better performance. Such preprocessing frequently results in computationally intensive pipelines, which makes the approach ill-suited to large-scale network traffic data. The study in [22] employed an SVM-based threat anomaly detection approach for both binary and multiclass classification tasks, evaluating it on the UNSW-NB15 dataset. Despite using a Radial Basis Function (RBF) kernel to map a low-dimensional space to a high-dimensional space, the study reported only moderate performance. A maximum accuracy of 85.99% was obtained for the binary classification, where the threat classes of UNSW-NB15 were merged into a single class, while the multiclass accuracy was lower, at only 75.77%. According to [23], Naive Bayes (NB)-based network threat classifiers generally train faster than other ML models, although their naive feature-independence assumption often results in moderate performance on rich and large network data. Ref. [23] also observed that NB usually generalizes better to previously unknown threats and attacks due to its nature of making fewer assumptions.

Overall, traditional ML network threat classifiers are computationally cheaper and more interpretable than their DL counterparts. However, their performance on modern network threats and modern network data often falls under moderate to underperformance categories, thus limiting their practical application in IDS. According to the literature, careful feature selection or combining with DL hybrids can elevate the performance; however, this results in increased computational power, detection latency, and added complexity [3]. Despite these model design advancements, sophisticated modern network threats, such as APTs, zero-day attacks, and subtle insider threats, can still bypass traditional ML models, thus justifying ongoing research into more advanced and robust deep learning architectures that can generalize to modern network threats.

2.2. Traditional Deep Learning Methods vs. GNNs and Transformers for Network Threat Detection

Common spatial DL models such as CNNs, sequential DL models like RNNs, including their gated versions such as LSTM and GRU, and feedforward Deep Neural Networks have been frequently applied to network threat and network attack detection. Furthermore, hybrids of spatial and sequential models have been used in IDSs [24]. According to [25], many DL models achieved high performance in old network datasets such as the KDD Cup 99. Hybrid CNN-BiLSTM models achieved 99.78% accuracy, 99.58% precision, 99.72% recall, and 99.73% F1-Score on NSL-KDD. In [3], CNN and LSTM models achieved an accuracy of 98% when trained and evaluated using the SMOTE-balanced CICIDS2017 dataset.
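Because several of the results above depend on SMOTE-balanced data, a brief sketch of the underlying idea may help: a synthetic minority sample is placed at a random point on the line segment between a minority sample and one of its nearest minority neighbours. The function name, parameters, and data below are hypothetical, and this simplified version is not a substitute for a tested library implementation.

```python
import math
import random

def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating towards near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the chosen sample (excluding itself).
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic

# Hypothetical two-feature minority-class samples.
minority = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.3], [1.1, 1.1]]
new_points = smote_like(minority, n_new=6)
print(len(new_points))
```

Since the synthetic points never leave the region spanned by existing minority samples, results on oversampled data are not directly comparable to results on the original class distribution, which matters when reading the figures reported in this section.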

In [26], a CNN-GRU and a CNN-Bi-LSTM achieved F1-scores of 92.05% and 90.08%, respectively, on the CICIDS2017 dataset in a binary classification task, whereas the proposed E-GraphSAGE model achieved an F1-score of 99.76%. The dataset was not class-balanced using any sampling method or synthetic record generation. Instead, a sub-sample was selected that preserved the original class imbalance, which, according to the study, reflects the real-world ratio of benign to malicious network flows. This sub-sample was then used to evaluate various DL architectures: XGBoost_LSTM, AT_LSTM, and CNN_GRU reported F1-scores of 89.96%, 90.36%, 92.20%, and 93.61%, respectively. The proposed E-GraphSAGE reported an F1-score of 98.65%, outperforming all DL-based approaches [26].

A Transformer architecture was evaluated on several network intrusion datasets against various network threat detection scenarios in the study in [26]. First, the model was tested on the UNSW-NB15 dataset in a class-wise classification problem, where it achieved high performance across the Analysis, Backdoor, Fuzzers, Reconnaissance, Shellcode, and benign classes, reporting accuracy ranging from 97.0% to 99.9% and recall ranging from 98.1% to 99.7%. When evaluated on the NSL-KDD dataset, the Transformer achieved exceptionally high performance in a class-wise classification problem, with accuracies ranging from 99.22% to 99.82% and recall values ranging from 98.39% to 99.38% across the Normal, Probe, U2R, and R2L classes. Furthermore, the model maintained high precision, ranging from 98.24% to 99.38%, and F1-scores from 98.56% to 99.30%, across all classes of the NSL-KDD dataset, confirming its strong generalization capabilities in various network threat detection scenarios. Additionally, the proposed Transformer was compared with multiple state-of-the-art (SOTA) baselines on an NSL-KDD binary classification task. The SOTA models reported accuracies of 91.86% (ResNet34), 97.64% (RNN), 98.71% (LSTM), and 98.79% (Vision Transformer), whereas the proposed Transformer surpassed all of them by a clear margin, achieving 99.25% accuracy, 99.07% precision, 99.02% recall, and 99.05% F1-score. On a UNSW-NB15 binary classification task, the SOTA models reported 91.00% accuracy for ResNet34, 94.2% for RNN, 95.0% for LSTM, and 95.9% for Vision Transformer, with recall values ranging from 90.1% to 96.2% across all the models. However, the proposed Transformer was not evaluated on the UNSW-NB15 dataset in a binary classification setting. As the paper stated, none of these performance evaluations employed any class-balancing technique on any of the datasets; instead, focal loss was used as the loss function.
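Focal loss addresses class imbalance through the loss function rather than through resampling: it scales the cross-entropy term by a factor that shrinks for well-classified examples. A minimal binary sketch follows. The defaults `gamma=2.0` and `alpha=0.25` are commonly used values and are an assumption here; the settings used in the cited study are not stated in this review.

```python
import math

def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p is the predicted probability of the positive class, y is the label (0 or 1).
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class weighting term
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

easy = focal_loss(0.9, 1)  # confident and correct: heavily down-weighted
hard = focal_loss(0.1, 1)  # confident and wrong: dominates the loss
print(easy < hard)
```

With `gamma=0` and `alpha=1` the expression reduces to ordinary cross-entropy for the positive class, so `gamma` can be read as the strength of the focus on hard, typically minority-class, examples.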

According to [27], during an APT evaluation performed on the CSE-CIC-IDS2018 dataset, a CNN achieved 93.01% accuracy, whereas the GCN achieved 95.96% accuracy. In the study in [28], the Transformer architecture was benchmarked against RNN/CNN models using the CICIDS2017 dataset, which was oversampled with SMOTE to address minority class issues. The class-wise evaluation of the proposed Transformer across different network threats showed near-perfect performance for the benign, PortScan, Patator-FTP, Patator-SSH, and Brute-Force classes, with accuracy, precision, recall, and F1-scores consistently in the 97–99% range. The Infiltration class was also detected at high levels, ranging from 95% to 99% across all reported metrics, and the Bot attack class was detected at around 92%. According to the paper, the most challenging class to detect was SQL Injection, with all performance metric values ranging from 47% to 60%. The authors continued the performance evaluation by comparing SOTA baselines with their proposed model, demonstrating that the Transformer architecture could outperform all the SOTA RNN and LSTM models in global accuracy across all classes. The Transformer further outperformed all the SOTA models in macro-averaged metrics, such as precision, recall, and F1-scores, with reported values of 99.35%, 98.98%, 98.83%, and 99.17%, respectively.

The CERT Insider Dataset (v4.2) was evaluated using several baseline DL and GCN models in the study in [29]. The authors employed 7 heuristic rules to build an Associated Session Graph (ASG) with session nodes, core/boundary nodes, and inter-session edges. The proposed session-graph GNN achieved near-perfect performance in all reported metrics when detecting insider threats in the AB-II (Abnormal Behavior-II) subset: 99.56% accuracy, 99.54% precision, 99.53% F1 score, 99.56% TPR, 0% FPR, and 99.56% AUC. It significantly outperformed traditional DL baselines such as CNNs and LSTM models, which reported moderate performance with TPRs ranging from 76% to 96% and FPRs between 3% and 14%, as well as a standard GCN, which reported 97.12% TPR and 3% FPR. This study demonstrates that when vanilla GNN architectures are enhanced with encoded relationships across sessions, they are highly capable of detecting insider threats compared to traditional sequence-based DL models.
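For reference, the metrics quoted throughout this section all derive from the four confusion-matrix counts. The sketch below uses made-up counts, not values from any reviewed study, and assumes every denominator is non-zero.

```python
def detection_metrics(tp, fp, tn, fn):
    """Standard detection metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)   # also called TPR or detection rate
    fpr = fp / (fp + tn)      # share of benign samples raised as alerts
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "fpr": fpr,
        "f1": f1,
    }

# Hypothetical imbalanced test set: 100 malicious and 900 benign samples.
metrics = detection_metrics(tp=90, fp=10, tn=890, fn=10)
print({name: round(value, 4) for name, value in metrics.items()})
```

On this imbalanced example accuracy reaches 98% while precision, recall, and F1 are each 90%, which illustrates why accuracy alone can overstate detector quality when benign traffic dominates.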

2.3. GNNs in Network Threat Detection

The authors of [30] noted that traditional ML-based IDS often fail to learn network interdependencies, and the resulting predictions usually contain a high number of false positives and false negatives. They further stated that traditional IDS often used only individual samples of network traffic and host logs to extract abnormal patterns and ignored the relationships between the associated network nodes. To address this issue, the authors proposed GNN-IDS, which can represent both static and dynamic network attributes by employing attack graphs and real-time measurements. According to the study, the proposed GNN-based IDS can learn network connectivity and measure the importance of neighboring network nodes and their aggregated features, producing more accurate and reliable predictions with fewer false detections. The solution was evaluated with two synthetic datasets, including one dataset generated from CICIDS2017 [30]. The proposed Graph Convolution Network with learnable Edge Weights (GCN-EW) version of the model achieved 86.84% Precision, 95.47% Recall, 90.53% F1-score, 98.94% AUC, and 3.63% FPR on the first synthetic dataset, and 94.14% Precision, 98.02% Recall, 95.97% F1-score, 99.65% AUC, and 1.40% FPR on the CICIDS2017-based synthetic dataset. The proposed GAT version achieved 86.61% Precision, 95.49% Recall, 90.39% F1-score, 98.87% AUC, and 3.73% FPR on the first synthetic dataset, and 94.74% Precision, 98.72% Recall, 96.62% F1-score, 99.72% AUC, and 1.27% FPR on the CICIDS2017-based synthetic dataset.

In [26], the authors suggest a novel preprocessing technique to standardize network flow data, thus improving GNN-based malicious network flow detection. In their approach, they construct graph nodes from network flow features and graph edges based on node IP relationships. Furthermore, the authors propose a hybrid GNN model combining Graph Convolution Networks (GCNs) and SAGEConv to enhance the detection process. The proposed hybrid model achieved high accuracies of 99.76% and 98.65% for the CIC-IDS2017 and UNSW-NB15 datasets, respectively [26].
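As a rough illustration of the graph construction idea described in [26] (flows as nodes, IP relationships as edges), the following sketch connects two flow nodes whenever they share an endpoint IP address. The field names (`src`, `dst`, `features`) and the shared-IP edge rule are assumptions made for this example; the exact construction used in the original study may differ.

```python
from collections import defaultdict
from itertools import combinations


def build_flow_graph(flows):
    """Return (node_features, edges) for a list of flow records.

    Each flow becomes one node. Two nodes are connected when their
    flows share at least one endpoint IP (an assumed edge rule).
    """
    node_features = [flow["features"] for flow in flows]

    # Index flows by every IP address they touch.
    flows_by_ip = defaultdict(set)
    for idx, flow in enumerate(flows):
        flows_by_ip[flow["src"]].add(idx)
        flows_by_ip[flow["dst"]].add(idx)

    # Connect every pair of flows that share an IP (undirected edges).
    edges = set()
    for members in flows_by_ip.values():
        for a, b in combinations(sorted(members), 2):
            edges.add((a, b))
    return node_features, sorted(edges)


# Hypothetical flow records used only for illustration.
flows = [
    {"src": "10.0.0.1", "dst": "10.0.0.2", "features": [0.2, 1.0]},
    {"src": "10.0.0.2", "dst": "10.0.0.3", "features": [0.9, 0.0]},
    {"src": "10.0.0.4", "dst": "10.0.0.5", "features": [0.1, 0.5]},
]
features, edges = build_flow_graph(flows)
```

The resulting node feature list and edge list are the two inputs that a GCN or GraphSAGE-style layer would typically consume.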

The following study focuses on a GAT within a federated learning framework to improve network attack detection while preserving data privacy. The authors use log density information to construct a graph representation of the network, allowing them to capture complex node relationships and network behavior over time, thus improving detection accuracy. The attention mechanism of the GAT employs fingerprint-weighted Jaccard similarity to calculate the attention scores. The proposed Federated Graph Attention Network (FedGAT) enables collaborative model training across distributed devices. Experimental validation conducted using the NSL-KDD dataset shows that FedGAT achieves exceptionally high accuracy levels, ranging from 99.983% to 99.998%, by effectively detecting cross-level and cross-department attacks while maintaining privacy [31].
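To make the idea of similarity-driven attention more concrete, the sketch below computes a generic weighted Jaccard similarity between non-negative feature vectors and normalizes the scores with a softmax. This is only an assumed, simplified stand-in: the fingerprint weighting and the exact attention formulation used in [31] are not reproduced here, and the example vectors are hypothetical.

```python
import math


def weighted_jaccard(x, y):
    """Generic weighted Jaccard similarity for non-negative vectors."""
    numerator = sum(min(a, b) for a, b in zip(x, y))
    denominator = sum(max(a, b) for a, b in zip(x, y))
    return numerator / denominator if denominator > 0 else 0.0


def attention_weights(center, neighbors):
    """Softmax-normalized similarity scores between a node and its neighbors."""
    scores = [weighted_jaccard(center, n) for n in neighbors]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical log-density style features for one node and two neighbors.
center = [3.0, 0.0, 1.0]
neighbors = [[3.0, 0.0, 1.0], [0.0, 2.0, 0.0]]
weights = attention_weights(center, neighbors)
```

In this toy case, the neighbor with an identical feature profile receives the larger attention weight.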

Various APT datasets (e.g., Cadets-Unicorn, Streamspot, Theia-E3) were evaluated in [32]. The proposed GNN, called “CONTINUUM,” achieved perfect detection on several of the datasets and near-perfect detection on most of the others. The prior methods used as benchmarks (e.g., Kairos-GNN, Threatrace) reported F1 scores in the range of 83–96%, and the proposed GNN surpassed all of them by modeling both time and graph structure. The study attributes this success to the inherently temporal dynamics of multi-stage APTs.

In the following paper, the authors explored the suitability of nested graph structures for enterprise-level networks, where the outer graph is constructed from host communications and the inner graphs are constructed from local activities within each host (e.g., event graphs). According to the paper, the proposed NestedGNN is the first GNN designed for nested graphs, and it consists of three types of layers: inner GNN layers, nested graph layers, and outer GNN layers [33].

The applicability of Spatio-Temporal Graph Convolutional Networks (STGCN) for insider threat detection using the psychometric features and user behavior logs of the CMU CERT 4.2 dataset is evaluated in [34]. First, the authors constructed a knowledge graph to model employee interactions within the network, where users formed the nodes and their communications or access events formed the edges in the graph. The authors compared three graph neural networks: a standard GCN, an STGCN, and a Capsule GNN. In the STGCN, graph convolution layers were incorporated to capture spatial information, while LSTM layers were incorporated to capture sequential patterns in user behavior over time. The STGCN achieved an accuracy of 94% and demonstrated robustness in detecting insider threats with minimal false positives by focusing on complex temporal anomalies and the subtle behavioral patterns associated with them. Moreover, the authors highlighted the importance and effectiveness of combining GNNs with layers or model architectures capable of modeling temporal patterns, particularly for insider threat detection [34].

Ref. [35] proposes a taxonomy for organizing the existing literature on GNNs for IDS. The study divides the analyzed literature into three main categories: Graph Construction, Network Design, and Model Deployment. Graph Construction focuses on converting raw network data into static or dynamic graph structures. Network Design concentrates on the architecture of the GNN model, such as GraphSAGE or Graph Attention Networks (GAT). Finally, Model Deployment addresses the real-world challenges of deploying GNNs in IDS, such as scalability issues, optimizing the required computations, and integration with other models for improved performance.

2.4. Transformers in Network Threat Detection

IDS-MTran proposes a novel multi-scale intrusion detection framework. The framework first utilizes multi-scale convolution operators, deployed as 1 × 1, 3 × 3, and 5 × 5 kernels, to extract diverse network traffic feature representations, followed by a Patching with Pooling (PwP) module to reduce noise and enhance feature interactions. Then, a Transformer with multiheaded attention and positional encoding is utilized in the framework to capture global dependencies across the scales. The Cross-Feature Enrichment (CFE) module in the framework integrates multiscale features via up/down sampling and distillation. During the training process, the focal loss was utilized to address the significant data imbalance of the malicious and benign samples across multiple network threats and attack categories. Thus, performance evaluations of IDS-MTran on the NSL-KDD, CIC-DDoS 2019, and UNSW-NB15 datasets show that the model is able to achieve over 99% accuracy in multiple network threat categories [36].
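The role of focal loss in handling class imbalance can be illustrated with a minimal binary version, $FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$. The sketch below is not the IDS-MTran implementation; the binary setting and the default values of `gamma` and `alpha` are assumptions chosen for illustration only.

```python
import math


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss for one sample.

    p: predicted probability of the positive (malicious) class.
    y: true label, 1 for malicious and 0 for benign.
    gamma, alpha: assumed illustrative hyperparameters.
    """
    p = min(max(p, 1e-7), 1.0 - 1e-7)  # clamp to avoid log(0)
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # (1 - p_t) ** gamma shrinks the loss of well-classified samples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = binary_focal_loss(0.95, 1)  # confidently correct prediction
hard = binary_focal_loss(0.10, 1)  # badly misclassified sample
```

Because easy samples contribute very little to the total loss, training is dominated by hard and rare samples, which is the property that makes focal loss attractive for imbalanced intrusion datasets.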

The “Flow Transformer” proposed by [37] is built on TensorFlow and provides a modular pipeline consisting of three components: dataset ingestion, preprocessing, and model construction. The first component supports various flow-based network traffic data formats (e.g., CICIDS Flowmeter, NetFlow V5), handling both categorical and numerical flow features such as protocols, port numbers, flow duration, and packet counts. The model’s binary classification evaluation on the CICIDS-2018 dataset demonstrated an accuracy level of 98% with a basic Transformer architecture consisting of 2 layers, 2 attention heads, and 128 features [37].

A neural Transformer-based model for detecting real-time zero-day threats in network traffic data is proposed in [38]. First, network flow features such as protocol type, packet size, source and destination IPs are embedded into the vector space to be processed by the Transformer module. Then the Transformer utilizes its self-attention mechanism to calculate the importance of different parts of network packet sequences, identifying complex dependencies and patterns resulting from threat-related anomalies. The authors reported that their model requires low computing resources, specifically 55% CPU and 40% GPU, to achieve a low latency of 25 ms, concluding its suitability for real-time applications. The model was evaluated using CICIDS2017 and a simulated network traffic dataset that included benign activities such as file transfers, normal browsing, video streaming, and various network threat types, including zero-day intrusions. Zero-day traffic was used for evaluating the model’s performance on previously unseen threats. The proposed model achieved 96% accuracy, 94% precision, 92% recall, and a 93% F1 score. Furthermore, the ROC-AUC score was 0.97, with precision and recall trade-offs indicating the model’s robustness in imbalanced datasets such as CICIDS2017 and the simulated dataset.

The Markov property assumes that the next state depends only on the current state. However, in the context of network security, threats that develop over time with a historical context cannot be accurately modeled under the Markov property, and thus, RL-based threat detection techniques cannot depend solely on the current network state. Ref. [39] tackles this issue by proposing a model called “Decision Transformer”. Unlike traditional RL methods, such as Q-learning or policy gradients, the proposed methodology treats the RL task as a supervised learning problem. Thus, the authors constructed an RL-Transformer architecture that uses past trajectories of rewards, detection decisions, and network packets to produce future detection actions. The proposed method also introduces a trade-off between timeliness and detection accuracy by using a reward function that rewards accurate and timely decisions and penalizes inaccurate and delayed ones. The authors also employed an autoencoder to compress packet payload features into embeddings so that packet sequences of arbitrary lengths can be processed more efficiently. Once trained on offline datasets such as UNSW-NB15, experimental results showed that the Decision Transformer achieved higher accuracy and lower latencies during the detection process across different sampling policies, including Expert, Medium, and Random, demonstrating its real-time applicability and robustness.
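A minimal sketch of two ingredients mentioned above is given below: a reward that trades accuracy against timeliness, and the return-to-go sequence that sequence-model formulations of RL commonly condition on. The function names, the linear delay penalty, and its coefficient are hypothetical and are not taken from [39].

```python
def detection_reward(correct, packets_seen, delay_penalty=0.1):
    """Assumed reward: +1 or -1 for the decision, minus a cost per packet waited."""
    base = 1.0 if correct else -1.0
    return base - delay_penalty * packets_seen


def returns_to_go(rewards):
    """Suffix sums of a reward sequence (undiscounted return-to-go)."""
    result = []
    running = 0.0
    for r in reversed(rewards):
        running += r
        result.append(running)
    return result[::-1]


# One hypothetical trajectory: wait for two packets, then decide correctly.
rewards = [0.0, 0.0, detection_reward(correct=True, packets_seen=3)]
rtg = returns_to_go(rewards)
```

In this kind of formulation, each time step of the training sequence pairs a return-to-go value with the observed state (for example, a packet embedding) and the action taken, so the model learns to predict actions in a supervised manner.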

Ref. [40] proposes a novel cyber threat detection approach through Vision Transformers (ViTs) and Knowledge Distillation (KD). The proposed method encodes input data features into color imagery representations, where each feature is mapped into a three-channel RGB pixel, followed by the extraction of explainable imagery signatures of cyber threats using a ViT teacher model. Here, the ViT's self-attention mechanisms are used to record relationships between imagery patches and generate explainable attention maps. These attention maps are then used to create imagery representations that are fed to a CNN student model during the KD process. The CNN student model is then trained to mimic the teacher’s output using a combined loss function made up of cross-entropy and Kullback–Leibler (KL) divergence. The authors evaluated their model on four benchmark cybersecurity datasets, namely CICMalDroid20, CICMalMem22, NSL-KDD, and UNSW-NB15, achieving overall accuracies of 87.2%, 82.6%, 82.9%, and 78.4%, respectively. Although the performance of the ViT-based approach is not state of the art, the study demonstrates the various ways that Transformer architectures can be employed in network threat detection.
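The combined distillation objective can be sketched as a weighted sum of a cross-entropy term on the true label and a KL divergence term between temperature-softened teacher and student distributions. The weighting `alpha`, the temperature, and the temperature-squared scaling follow a common knowledge distillation convention and are assumptions here, not details confirmed by [40].

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.5, temperature=2.0):
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher || student)."""
    # Hard-label term: cross-entropy of the student prediction.
    hard = -math.log(softmax(student_logits)[label])
    # Soft-label term: KL divergence between softened distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s)
             for t, s in zip(p_teacher, p_student) if t > 0)
    return alpha * hard + (1.0 - alpha) * temperature ** 2 * kl


# Hypothetical two-class logits (benign, malicious) for one sample.
matched = distillation_loss([2.0, 0.0], [2.0, 0.0], label=0)
mismatched = distillation_loss([2.0, 0.0], [0.0, 2.0], label=0)
```

When the student already reproduces the teacher's logits, the KL term vanishes and only the cross-entropy term remains, which is why `matched` is smaller than `mismatched`.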

A comprehensive survey of 118 papers, including 40 preprints published between 2017 and 2024, has been conducted by [41] to focus solely on Transformers and Large Language Models (LLMs) used in network intrusion detection tasks. The survey has identified key technologies used in these studies, such as attention based architectures for feature extraction, CNN/LSTM hybrid Transformers for combining spatial and sequential data analysis, GAN-Transformers for generating synthetic datasets to address the issue of imbalanced datasets, Vision Transformers (ViTs) for processing network traffic as 2D images, and LLMs like BERT and GPT to enhance contextual understanding and the predictions. The survey further provides several recommendations for future research, including the development of LLM agents with RL components, the use of multimodal inputs, and knowledge distillation.

2.5. RL Algorithms in Network Threat Detection

Ref. [42] proposes a Deep Reinforcement Learning (DRL) framework using three advanced RL techniques: Deep Q-Network (DQN), Actor–Critic, and Proximal Policy Optimization (PPO). The study further conducted a performance comparison against the traditional Q-Learning algorithm. Open port attacks, spoofing, keylogging, and finally credential theft were replicated and simulated in a controlled environment using the MITRE ATT&CK framework. The key findings showed that Actor–Critic outperformed the other RL algorithms by achieving the highest success rate of 0.78, the fewest iterations of 171, and the highest average reward of 4.8. Thus, the paper demonstrates that DRL algorithms, such as Actor–Critic, are highly effective in learning and detecting dynamic network attacks and potential threats [42].

Ref. [43] conducted a comprehensive review of the applicability of DRL in cybersecurity, focusing on threat detection and protection. The paper focuses on DRL in both Network Intrusion Detection Systems (NIDS) and Endpoint Detection and Response Systems (EDR). The key technologies used in the reviewed work can be categorized into DQN, Double DQN, Dueling DQN, and policy-based methods, such as Deep Deterministic Policy Gradient (DDPG) and Proximal Policy Optimization (PPO). The paper covers different applications of DRL in cybersecurity, such as anomalous and benign network traffic classification, botnet detection evasion, and adversarial attacks on malware classifiers [43].

ID-RDRL integrates a DRL model with a Multilayer Perceptron (MLP) to separate network threats from benign network traffic flow data. The first phase of the approach is a feature selection technique called Recursive Feature Elimination (RFE) combined with a Decision Tree, which filters out 80% of the redundant features in the CSE-CIC-IDS2018 dataset, thus significantly reducing the computational effort. The next phase is the Deep Q-Network classifier, which operates within the Markov Decision Process (MDP) framework. It begins with the Mini-Batch module, which processes the input dataset by treating features as states and labels as actions, creating tuples labeled as “state”, “action” and “next state”. These tuples are then fed into a Convolution Neural Network (CNN), followed by the MLP to extract feature information. Then the DQN utilizes an ε-greedy algorithm to select actions (i.e., classifying traffic as normal or attack) based on the current state to maximize the expected reward. The reward function provides a reward of 1 if the predicted action matches the actual label and 0 otherwise, with a low discount factor of 0.01 to focus on immediate rewards. The authors justify this reward strategy by the low correlation between the states in the dataset. Backpropagation through the DQN updates the Q-function, enabling the model to learn the optimal policy for the classification task. The experimental evaluation results of the study demonstrate that the proposed ID-RDRL can achieve 96.2% accuracy and an F1 score of 94.9% [44].
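The classification-as-RL formulation described above can be sketched with three small pieces: ε-greedy action selection, the 1/0 reward, and the one-step Q-learning target with the low discount factor of 0.01. This is a simplified illustration under the assumption of a standard DQN target; the function names are hypothetical and the neural network that would produce the Q-values is omitted.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def reward(action, label):
    """Reward of 1 when the predicted class matches the label, 0 otherwise."""
    return 1.0 if action == label else 0.0


def td_target(r, next_q_values, gamma=0.01):
    """One-step Q-learning target: r + gamma * max_a' Q(s', a')."""
    return r + gamma * max(next_q_values)


# Hypothetical Q-values for the actions (0 = normal, 1 = attack).
q_values = [0.1, 0.9]
action = epsilon_greedy(q_values, epsilon=0.0)  # purely greedy choice
target = td_target(reward(action, label=1), next_q_values=[0.5, 2.0])
```

With a discount factor this small, the target is dominated by the immediate reward, which matches the stated motivation that consecutive samples in the dataset are only weakly correlated.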

Ref. [45] explores the vulnerability of DRL to adversarial attacks by employing a state-of-the-art DRL IDS agent in an adversarial environment using a Deep Q Network for classification tasks. The model was trained using the NSL-KDD dataset and evaluated against two adversarial attack methods, namely Fast Gradient Sign Method (FGSM) and Basic Iterative Method (BIM), both in their targeted and untargeted forms. The results show that adversarial examples degraded the detection performance of the Deep RL agent. The study further reported that targeted adversarial attacks were more effective in influencing malicious traffic to be detected as benign.
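For intuition, the untargeted FGSM perturbation, $x_{adv} = x + \epsilon \cdot \mathrm{sign}(\nabla_x L)$, can be demonstrated on a toy logistic-regression detector. This is a deliberately simplified stand-in: [45] attacks a Deep Q-Network agent, whereas the model, weights, and input below are hypothetical values chosen so that the gradient can be written in closed form.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def attack_score(x, w, b):
    """Predicted probability that x is malicious under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(x, y, w, b, eps):
    """Untargeted FGSM step for binary cross-entropy on a logistic model.

    For this model, the gradient of the loss with respect to the
    input is (p - y) * w.
    """
    p = attack_score(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical detector weights and one malicious sample (label 1).
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1
x_adv = fgsm(x, y, w, b, eps=0.5)
```

The perturbed sample receives a lower malicious score than the original, which mirrors the kind of degradation in detection performance reported for the adversarial examples. BIM applies the same step iteratively with a smaller step size.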

The Multi-Agent Feature Selection Intrusion Detection System (MAFSIDS) is a network attack classification framework proposed by [46], which consists of two main modules: the feature self-selection algorithm and the Deep Q-Learning module. The feature self-selection module employs a multi-agent approach to reduce the 2^N feature selection space to N agent representations, and it removes 80% of the redundant features compared to the original dataset. Furthermore, a GCN is employed in the study to extract deeper features from the data to improve feature representation accuracy. The Deep Q-Learning module utilizes Mini-Batches to encode data, allowing RL to be applied in a supervised learning context. According to the evaluation results, the framework achieved accuracies of 96.8% and 99.1%, and F1-scores of 96.3% and 99.1% on the CSE-CIC-IDS2018 and NSL-KDD datasets, respectively.

2.6. Taxonomy of the Research Domain

A high-level summary of the research domain covered in this paper is presented in Table 1.

Table 1. Taxonomy of the research domain.

2.7. Cost-Effectiveness Comparison Between Traditional ML and Deep Learning Models vs. GNNs, Transformers, and Reinforcement Learning Models

Across the literature, there is no consistent reporting format for cost or resource usage. Several recent Transformer, GNN, and RL papers already present partial resource measurements or engineering fixes. However, the field lacks standardized, quantitative resource reporting across studies. Therefore, a definitive, numeric cost-effectiveness ranking is not yet possible from the available literature.

However, the literature cited in this manuscript provides qualitative evidence and some concrete evidence that:

- Advanced models (GNNs, Transformers, RL) impose higher preprocessing, training, and operational costs than classic ML baselines, and

- Engineering mitigations (lite models, input encodings, model distillation, or hybrid supervision + RL) are being proposed to reduce these costs.

2.7.1. Data Collection and Preprocessing

- Traditional ML and DL baselines: Surveys of classical and ML-based IDS research note that many traditional ML and DL pipelines with engineered features operate on compact flow or summarized tabular features (e.g., NetFlow/summary statistics), which reduces preprocessing and storage overhead relative to heavy representation pipelines [19,20].

- Heterogeneous or nested graphs require additional preprocessing: Reviews of GNN methods emphasize that applying GNNs to network security requires an explicit graph construction step (hosts/sessions/processes → nodes; flows/relations → edges) and design choices for heterogeneous or nested graphs; those preprocessing steps incur extra engineering cost (feature to graph mapping, edge definition logic) compared to flow/tabular pipelines and homogeneous graphs [26,30,32]. The literature also discusses the need to maintain or update graph structures for streaming data, which adds ongoing processing overhead [30,34].

- Transformer tokenization/sequence handling increases preprocessing: Transformer-based NIDS research explicitly discusses the need to prepare sequential inputs (tokenization/embedding, padding/striding, or patching) for long flows or logs. This processing can increase memory usage and I/O when sequences are long, and authors have proposed input encodings and striding/patching to reduce this cost [36,38]. The Flow Transformer paper explicitly reports that careful input encoding and head selection can affect model size and speed. It further demonstrates design choices that reduce model size and improve training/inference time [37].

- RL environment and trajectory generation cost: RL approaches to IDS typically require the definition of an environment (state, action, reward) and the generation of agent trajectories for training. Building faithful training environments or realistic simulators adds engineering and annotation costs not normally required for supervised classifiers [42,44].

2.7.2. Training Compute and Sample Efficiency

- Traditional ML: These models are generally inexpensive to train and to run in inference, which is reiterated in literature comparing ML baselines with deeper models [19,20].

- Conventional DL: Typical deep baselines require GPU acceleration but are often tractable for common IDS datasets. Comparative studies report DL baselines as a middle ground in terms of compute cost versus performance [3,25].

- GNNs: These models have moderate to high compute demands, depending on design. Methodological reviews of GNNs report that message passing and heterogeneous/hierarchical graph designs increase memory and compute demands. Temporal GNN variants and nested/hierarchical graphs further increase training complexity and memory usage [30,34]. These studies identify computational cost as a key practical challenge when scaling GNNs to high-throughput network data.

- Transformers: Reviewed Transformer literature notes that Transformer models, especially large pre-trained or long sequence variants, cause higher GPU memory consumption and longer training times. NIDS Transformer frameworks (e.g., Flow Transformer) report measurable model size and runtime tradeoffs, and show that careful design, such as input encoding and classification head choices, can reduce model size by >50% and improve training/inference time without loss of detection performance in experiments [36,37,38]. Thus, Transformers can be computationally heavy, yet they are open to engineering optimizations.

- RL: Deep RL studies for IDS warn that RL training can be sample inefficient and require many episodes to converge. This leads to large cumulative compute (many interactions/episodes) compared to supervised training, and authors recommend sample-efficient RL approaches or hybrid supervised + RL designs for practicality [42,44,46].

2.7.3. Online Updating and Adaptivity

- Traditional ML: Traditional ML retraining is typically fast. However, naïve retraining does not handle concept drift well. Literature recommends periodic retraining or incremental approaches while noting limitations for evolving threats [19,20].

- GNNs: Some temporal GNN designs support incremental graph updates. However, GNNs generally require recomputing node/edge representations when topology or relations change. Thus, the literature highlights the engineering cost of maintaining up-to-date graph pipelines in streaming settings [30,34].

- Transformers: Fine-tuning Transformers can support adaptation to new data. However, large models remain costly to update and the literature reports distilled, shallow, or strided variants as practical choices to reduce update overhead [36,38].

- RL: RL is naturally framed for continual adaptation (policy updates). However, the literature warns about stability and the risk of degraded behavior during continued online learning [45,46].

2.7.4. Operational Complexity and Maintainability

- Pipeline complexity: As mentioned above, existing literature highlights that GNN and Transformer systems typically demand more complex data pipelines, such as graph processing frameworks for GNNs and long sequence tokenization and large model serving for Transformers, and thus incur higher operational and maintenance costs than classic ML pipelines [26,30,36].

- Safety & robustness costs: RL studies document adversarial concerns such as reward poisoning and exploitation during learning, which require defenses and monitoring when RL is deployed online. These concerns highlight the need for additional safeguards [45].

Table 2 provides a high-level estimation and comparison of the costs associated with Traditional ML/DL models, GNNs, Transformers, and RL algorithms.

Table 2. High-level cost comparison of traditional ML/DL, GNNs, Transformers, and RL models.

2.8. Research Gap and the Significance of the SLR

The preliminary literature review has clearly demonstrated the potential of GNNs, Transformers, and RL in modern network threat detection tasks. Modern, sophisticated network threats can consist of multiple stages or slowly evolve and be subtle, presenting a huge challenge to conventional intrusion detection systems equipped with traditional ML and DL models. Hence, there is a need for ongoing research into GNNs, Transformer architectures, and RL algorithms, as well as their applications in the highly critical cybersecurity domain.

However, as the above literature review indicates, and despite recent research interest in the applicability of GNNs, Transformers, and RL for network threat detection, very few Systematic Literature Reviews (SLRs) cover the topic. Existing SLRs focus mainly on traditional ML and DL approaches. For example, a 2024 SLR on intrusion detection, which covers studies from 2018 to 2023, reports that the publications it reviewed treat traditional methods as the leading approaches to network threat detection [47]. That SLR covers models such as CNNs, SVMs, and decision trees, yet it completely overlooks the emerging GNN and Transformer models.

When a review of GNNs, Transformers, or RL algorithms does appear in the network threat detection literature, it normally covers only a single model type, and the preliminary literature search found few such recent surveys. For example, one study conducted a comprehensive literature review on Transformers and Large Language Models (LLMs) in intrusion detection systems [41]. Another study surveyed the use of GNNs in IDS [35]. Consequently, almost no systematic literature review, other type of literature review, or survey covers all three model types together, or even a combination of two.

However, a comprehensive and comparative analysis of these models is simply not feasible with standalone single-model reviews. Therefore, to conduct a comparative investigation into the strengths and limitations of GNNs, Transformers and RL in network threat detection tasks, to uncover their key trends and technical gaps in current research, a focused SLR is timely and well justified. An SLR is further justified by the need to consolidate knowledge, as the current body of research on network threats, conducted using the models in question, remains highly fragmented. Such an SLR will also provide insights into the best practices for effectively using these models in the cybersecurity domain.

3. Methodology

3.1. Research Questions

3.1.1. Primary Research Questions

- How are GNNs, Transformers, and RL used individually or in combination for network threat detection?

- How do different model architectures, datasets, evaluation metrics, and types of network threats addressed compare across studies involving GNNs, Transformers, and RL?

3.1.2. Secondary Research Questions

- What are the strengths, limitations, and gaps observed in current research utilizing GNNs, Transformers, and RL for network threat detection?

- What are the observable trends that integrate GNNs, Transformers, and/or RL for enhanced network threat detection?

3.2. Scope of the Study

3.2.1. Network Context

Modern network infrastructures are increasingly hybridized, spanning LAN, cloud, Internet of Things (IoT), and other networks. However, the network scope in this review is restricted to conventional Local Area Networks (LANs), including both wired and wireless nodes and their interconnections operating under conventional Ethernet and TCP/IP architecture. Therefore, studies related to software-defined networks (SDNs), cloud-native networks, IoT networks, mobile networks (4G/5G), mobile ad hoc networks (MANETs), etc., were excluded. Moreover, unrelated noise from papers related to non-computer networks, such as networks on chips, UAV-based networks, social networks and specialized forms of interconnected networks, was filtered out from the scholarly database search results.

This deliberate restriction to LAN-based environments is grounded in both methodological rigor and conceptual focus. From a methodological standpoint, LANs provide a controlled and reproducible environment that has been most widely adopted in empirical network threat detection studies. Most frequently used benchmark datasets in the literature, such as UNSW-NB15, CICIDS2017, CSE-CIC-IDS2018, and LANL are LAN-structured, capturing internal and boundary traffic typical of enterprise, academic, and government networks. On the other hand, cloud-based telemetry (e.g., network flows, access logs, security events from AWS, Azure, Google Cloud) is often proprietary, access-restricted, and often lacks standardized public benchmarks, limiting dataset comparability. LAN environments also provide a well-defined and stable graph topology that is consistent for analyzing spatial and temporal relationships in network traffic, which is essential when evaluating GNNs and Transformer-based architectures. Restricting the scope to LAN-oriented systems, therefore, facilitates comparability of performance metrics and threat taxonomies across analyzed studies, enabling a methodologically consistent synthesis while reducing heterogeneity.

From a conceptual standpoint, LAN-based detection represents the core operational layer of organizational cybersecurity, where spatial (topological) and temporal (traffic-evolution) anomalies often first manifest or, conversely, are first observed as propagated effects from compromised cloud or IoT environments. While cloud infrastructures also exhibit internal multi-stage threat propagations progressing through reconnaissance, privilege escalation, lateral movement, and exfiltration stages similar to those mapped in ATT&CK or kill chain frameworks, the LAN remains the most empirically accessible and structurally consistent domain for evaluating detection methodologies. This highlights the LAN’s role as both an origin and convergence point of threats within hybrid networking environments (e.g., LAN-Cloud hybrid infrastructures). Because this review investigates how GNNs, Transformers, and Reinforcement Learning capture spatial, temporal, and adaptive properties of network-detectable threats, a LAN-based context offers a consistent substrate for evaluating these models under comparable and interpretable conditions.

The exclusion of cloud, IoT, and other network environments therefore represents a deliberate methodological boundary rather than a limitation of perspective. These architectures operate differently from traditional LANs: cloud systems use multi-tenant and dynamically managed resources, IoT networks often have limited and intermittent connectivity, and SDN separates control and data planes. These differences, including the distinct attack vectors each environment faces, make them not directly comparable with LAN-based threat detection. Thus, including all different networking contexts in the same framework would compromise methodological consistency and interpretability of the results. Moreover, there are still a number of organizations, particularly small to medium-sized enterprises, which continue to rely on LAN-based infrastructures without cloud integration for reasons such as privacy concerns, making LAN-focused threat detection studies directly relevant to such organizations.

Nevertheless, the insights derived from this LAN-focused synthesis remain highly transferable to broader contexts. Many of the mechanisms identified, such as graph-based spatial reasoning, temporal attention, and adaptive reinforcement strategies, can inform future applications in cloud, IoT, mobile, and other network environments.

3.2.2. Threat Context

This review focuses on synthesizing studies that evaluate network-detectable threats, either through network traffic or host behavior. Therefore, non-network detectable threats operating solely at the Open System Interconnection (OSI) model’s physical layer, such as jamming and electromagnetic interference, were excluded from the review.

Furthermore, this review exclusively focuses on the detection of network threats and their associated behavior, not the direct detection of network attacks. Threats are generally recognized as the potential to harm. Therefore, network threats can be defined as malicious or unauthorized activities, entities, or behaviors that indicate the possibility of a future attack directed at a network and its resources, where actual harm or disruption is realized when exploited by the threat actors. Therefore, the motivation behind exclusively focusing on network threats is the importance of threat mitigation before actual damage can be inflicted, facilitated by the proactive detection of such threat entities, their activities, and the early stages of multi-stage attacks, such as APT attack campaigns.

However, the terms threat and attack are often used interchangeably in cybersecurity literature, which makes it extremely difficult to conduct an in-depth SLR in the strict sense of network threat detection. This SLR addresses this grey area by drawing a clear boundary between these two conceptually different yet often intermingled terms.

3.3. Threat Frameworks and Taxonomies

The MITRE ATT&CK [48] and the cyber kill chain developed by Lockheed Martin [49] provide a technical framework for threat modeling and detection at various stages, backed up by a classification of adversarial tactics and techniques. While these two frameworks provide well-established taxonomies of tactics and attack phases, they do not formally distinguish threats from attacks, nor do they classify threats into structured groups. To address this gap, this review proposes a novel threat classification framework that systematically categorizes network threats, maps them to MITRE ATT&CK tactics and corresponding Cyber Kill Chain stages, and distinguishes them from subsequent attacks. The framework is unique in its methodological, rule-based approach and its focus on early stage, network detectable adversarial behaviors. The classification and categorization of threats evaluated in the reviewed studies, according to this framework, are presented in Section 4.

3.4. Methodological Framework for Threat Classification

To operationalize the proposed classification framework, each threat type reported in the reviewed studies was systematically mapped to the Enterprise MITRE ATT&CK tactic categories and their corresponding Cyber Kill Chain stages. This dual mapping provides both a technical dimension (what the threat or attack is) and a chronological dimension (where it sits within the lifecycle of an adversarial campaign). The classification framework is explained below.

3.4.1. Conceptual Framework

By combining MITRE ATT&CK and the Cyber Kill Chain, the framework establishes a hybrid taxonomy that captures both technical detail and chronological sequence:

- MITRE ATT&CK offers fine-grained categorization of adversarial tactics.

- Cyber Kill Chain provides temporal positioning within the attack lifecycle.

Neither framework alone achieves this duality. MITRE ATT&CK lacks chronological context, whereas the Kill Chain lacks technical granularity. Their integration therefore delivers a unified, interpretable structure capable of explaining what a behavior represents, when it occurs, and, crucially, whether it has transitioned from potential to realized impact. This interpretive dimension is essential for early-stage network threat detection.

Each reported threat or attack label (e.g., PortScan, Infiltration, SQL Injection, Botnets) was then aligned with the most appropriate ATT&CK tactic and Kill Chain stage according to its primary function and observable behavior. When a threat overlapped multiple tactics, the most representative or earliest-stage tactic was chosen to maintain consistency with the proactive detection focus of this review. For example, SQL Injection was classified under Initial Access since it represents an entry vector, even though it may later contribute to lateral movement. Ambiguous or broad terms such as “malicious traffic” were refined by consulting the dataset documentation and experimental context.
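The earliest-stage tie-break described above can be illustrated with a minimal Python sketch. It is not the authors' tooling: the function name `earliest_tactic` is hypothetical, and using the left-to-right column order of the Enterprise ATT&CK matrix as the measure of "earliest" is an assumption of this sketch. The "most representative" alternative requires human judgment and is not modeled.

```python
# Enterprise ATT&CK tactics in the left-to-right order of the ATT&CK matrix.
# Treating this order as a proxy for "earliest" is an assumption of this sketch.
ATTACK_TACTICS = [
    ("TA0043", "Reconnaissance"),
    ("TA0042", "Resource Development"),
    ("TA0001", "Initial Access"),
    ("TA0002", "Execution"),
    ("TA0003", "Persistence"),
    ("TA0004", "Privilege Escalation"),
    ("TA0005", "Defense Evasion"),
    ("TA0006", "Credential Access"),
    ("TA0007", "Discovery"),
    ("TA0008", "Lateral Movement"),
    ("TA0009", "Collection"),
    ("TA0011", "Command and Control"),
    ("TA0010", "Exfiltration"),
    ("TA0040", "Impact"),
]
TACTIC_RANK = {name: rank for rank, (_, name) in enumerate(ATTACK_TACTICS)}
TACTIC_ID = {name: tactic_id for tactic_id, name in ATTACK_TACTICS}


def earliest_tactic(candidates):
    """Return (tactic_id, tactic_name) of the earliest candidate tactic."""
    if not candidates:
        raise ValueError("at least one candidate tactic is required")
    name = min(candidates, key=TACTIC_RANK.__getitem__)
    return TACTIC_ID[name], name


# SQL Injection overlaps Initial Access and Lateral Movement in the text above.
print(earliest_tactic(["Lateral Movement", "Initial Access"]))
# prints ('TA0001', 'Initial Access')
```

An unknown tactic name raises a `KeyError`, which is intentional here: a label that cannot be placed on the matrix should be resolved manually rather than silently ranked.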

To provide a clear operational reference within the proposed classification framework, Table 3 summarizes the 14 Enterprise MITRE ATT&CK tactics alongside their network detectable behavior and corresponding Cyber Kill Chain stages. The “Network Detectable Behavior” column contextualizes each ATT&CK tactic in network observable behaviors and indicators, such as unauthorized access, malware implantation, or port scanning, so that the technical definitions are grounded in observable events within network environments.

Table 3.

Mapping Enterprise MITRE ATT&CK tactics to Cyber Kill Chain stages with their network detectable behaviors.

3.4.2. Context-Aware Definition of Threats and Attacks

This review distinguishes between threats and attacks not as fixed categories but as different operational states within the adversarial lifecycle:

- Threats denote capabilities, behaviors, or preparatory actions that have the potential to cause harm but have not yet materialized into a successful exploitation or system compromise, e.g., malware download, infiltration attempts, reconnaissance, or credential-testing activities.

- Attacks represent realized exploitation or harm, where the threat has transitioned into active execution against a target, achieving adversarial objectives or producing measurable system impact, e.g., DDoS flooding.

Some actions (e.g., SQL Injection, Privilege Escalation, Malware Download) may act as both independent attacks and precursors within larger campaigns such as APTs. Hence, the framework treats threat and attack as context-dependent classifications determined by realization rather than by label alone.

3.4.3. Threat/Attack Determination Rules

Table 4 presents the rationale for distinguishing between four categories: “Threat”, “Attack”, “Dual-context”, and “Default to Threat”.

Table 4.

Threat/Attack determination rationale.

These rules ensure that cases such as fileless malware remain threats (since no harm has yet occurred in the relevant context), whereas post-exfiltration activities are out of scope (as the damage has already been done). In multi-stage APTs, the early stages (Reconnaissance → Initial Access → Persistence) are classified as threat phases, while the later stages (Exfiltration → Impact) are generally considered attack phases. Moreover, because a single APT campaign encompasses both threats and attacks, the campaign as a whole is classified as a “Threat” by default, which is consistent with the threat classification framework.

3.4.4. General Rules for Mapping (From Network Activity/Label → Classification)

- Treat attempted actions as Threats, and realized or successful actions as Attacks.

- Choose the earliest relevant stage when multiple stages apply.

- When the dataset or paper does not clarify success, default to Threat to align with the proactive detection perspective.

- Document all ambiguous cases in an ambiguity log for reviewer consensus.
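The general rules above can be sketched as a small decision function. This is a hedged illustration only: the names `Classification`, `classify`, and `ambiguity_log` are hypothetical, the `realized` flag stands in for whatever evidence of success a paper or dataset provides, and the "Dual-context" category of Table 4 is not modeled. The earliest-stage rule concerns tactic selection and is therefore outside this function.

```python
from enum import Enum
from typing import List, Optional


class Classification(Enum):
    THREAT = "Threat"
    ATTACK = "Attack"


# Labels whose success could not be determined, kept for reviewer consensus.
ambiguity_log: List[str] = []


def classify(label: str, realized: Optional[bool]) -> Classification:
    """Apply the general mapping rules to one label.

    realized=True  -> the source confirms a successful or realized action
    realized=False -> the action was only attempted
    realized=None  -> the source does not clarify success
    """
    if realized is None:
        # Default to Threat and record the case in the ambiguity log.
        ambiguity_log.append(label)
        return Classification.THREAT
    return Classification.ATTACK if realized else Classification.THREAT


print(classify("brute force", False).value)        # attempted only
print(classify("DDoS flooding", True).value)       # realized harm
print(classify("malicious traffic", None).value)   # unclear, defaults to Threat
print(ambiguity_log)
```

Keeping the default and the logging in one place means every "Default to Threat" decision leaves a trace that a second reviewer can audit.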

3.4.5. Step by Step Procedure for Manual Classification

- Extract labels: List every threat/attack label reported in the paper or dataset.

- Normalize terminology (optional): If necessary, convert labels to lowercase, remove punctuation, and harmonize synonyms (e.g., port scan → portscan) for consistency.

- Identify observed behavior: Examine dataset documentation or study description to infer practical meaning.

- Apply mapping rules. Assign:

  - MITRE ATT&CK Tactic and ID

  - Cyber Kill Chain Stage

  - Classification (Threat/Attack/Dual-context)

- Resolve ambiguity. If the label fits multiple categories, select the earliest stage and record justification.

- Reviewer verification (optional). A second reviewer reclassifies a random 20–30% subset of the labels. Compute inter-rater reliability (Cohen’s κ). Resolve disagreements through discussion.

- Record results. Maintain a spreadsheet with fields such as the following: Study ID|Dataset|Original Label|Mapped Tactic|Kill Chain Stage|Classification|Ambiguity Flag|Justification|Reviewer 1|Reviewer 2|Final Decision.
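Two of the steps above lend themselves to a short illustration: label normalization and the inter-rater check. The Python sketch below is an assumption-laden example, not the procedure's official tooling. The helper names (`normalize_label`, `cohens_kappa`), the synonym table, and the reviewer decisions are hypothetical. Cohen's κ is computed from its standard definition, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance.

```python
import string
from collections import Counter

# Synonym table; only the "port scan" example comes from the procedure above.
SYNONYMS = {"port scan": "portscan"}


def normalize_label(label: str) -> str:
    """Lowercase, strip ASCII punctuation, collapse spaces, harmonize synonyms."""
    cleaned = label.lower().translate(str.maketrans("", "", string.punctuation))
    cleaned = " ".join(cleaned.split())
    return SYNONYMS.get(cleaned, cleaned)


def cohens_kappa(rater1, rater2) -> float:
    """Cohen's kappa for two raters assigning one category per item."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("both raters must rate the same non-empty item list")
    n = len(rater1)
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n
    counts1, counts2 = Counter(rater1), Counter(rater2)
    p_e = sum(counts1[c] * counts2[c] for c in counts1.keys() | counts2.keys()) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used the same single category throughout
    return (p_o - p_e) / (1 - p_e)


print(normalize_label("Port Scan"))   # prints portscan
print(normalize_label("Port-Scan"))   # prints portscan

# Hypothetical decisions of two reviewers on a 10-label subset (T/A).
reviewer_1 = ["T", "T", "A", "T", "A", "T", "T", "A", "T", "T"]
reviewer_2 = ["T", "T", "A", "T", "T", "T", "T", "A", "T", "T"]
kappa = cohens_kappa(reviewer_1, reviewer_2)  # p_o = 0.90, p_e = 0.62
print(round(kappa, 2))  # prints 0.74
```

In this made-up example the reviewers agree on 9 of 10 labels, yet κ is noticeably lower than 0.9 because most of that agreement is expected by chance when one category dominates.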

3.5. Research Design

3.5.1. Methodological Framework

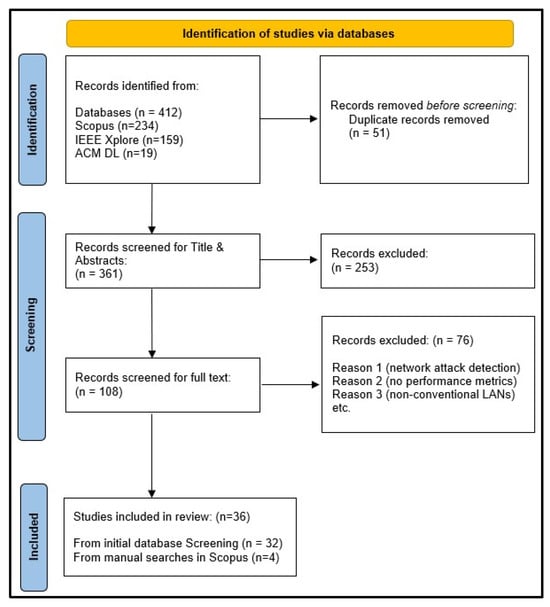

This research adopted a Systematic Literature Review framework, based on the concept that knowledge can be extracted from the existing literature using unbiased and transparent methods. The SLR was conducted according to the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) 2020 guidelines for systematic transparency and reproducibility. Thus, the SLR protocol was preregistered in the Open Science Framework (OSF) before the study screening process. Moreover, this SLR can be classified as secondary research that adopted a structured, comparative narrative synthesis approach, primarily qualitative in nature, supported by quantitative aggregative elements. The qualitative part of the SLR examined elements such as modeling preferences, integration methods, methodology, strengths, limitations, etc. Among the quantitative elements are frequencies of model evaluation instances, datasets, tested threat types, and the reported model performance metrics such as accuracy, F1-score, AUC, etc. Due to the extreme heterogeneity of main model types, different model combinations and methods, various datasets, and performance metrics of choice, a meta-analysis-based SLR was not feasible. However, research methods employed in this SLR are typically ideal for uncovering methodological trends and gaps in the literature, common model integration patterns, and drawing valuable insights, such as why certain approaches succeed or fail.

3.5.2. Research Design Components

The research design components are elaborated in Table 5.

Table 5.

Research design components of the SLR.

3.5.3. SLR Protocol and Registration

This systematic literature review followed the PRISMA 2020 methodology/checklist [50] (Supplementary Materials). The SLR protocol was registered in OSF at https://osf.io/zs5a9 (accessed on 22 October 2025) to ensure transparency and reproducibility and to reduce bias.

3.5.4. Eligibility Criteria

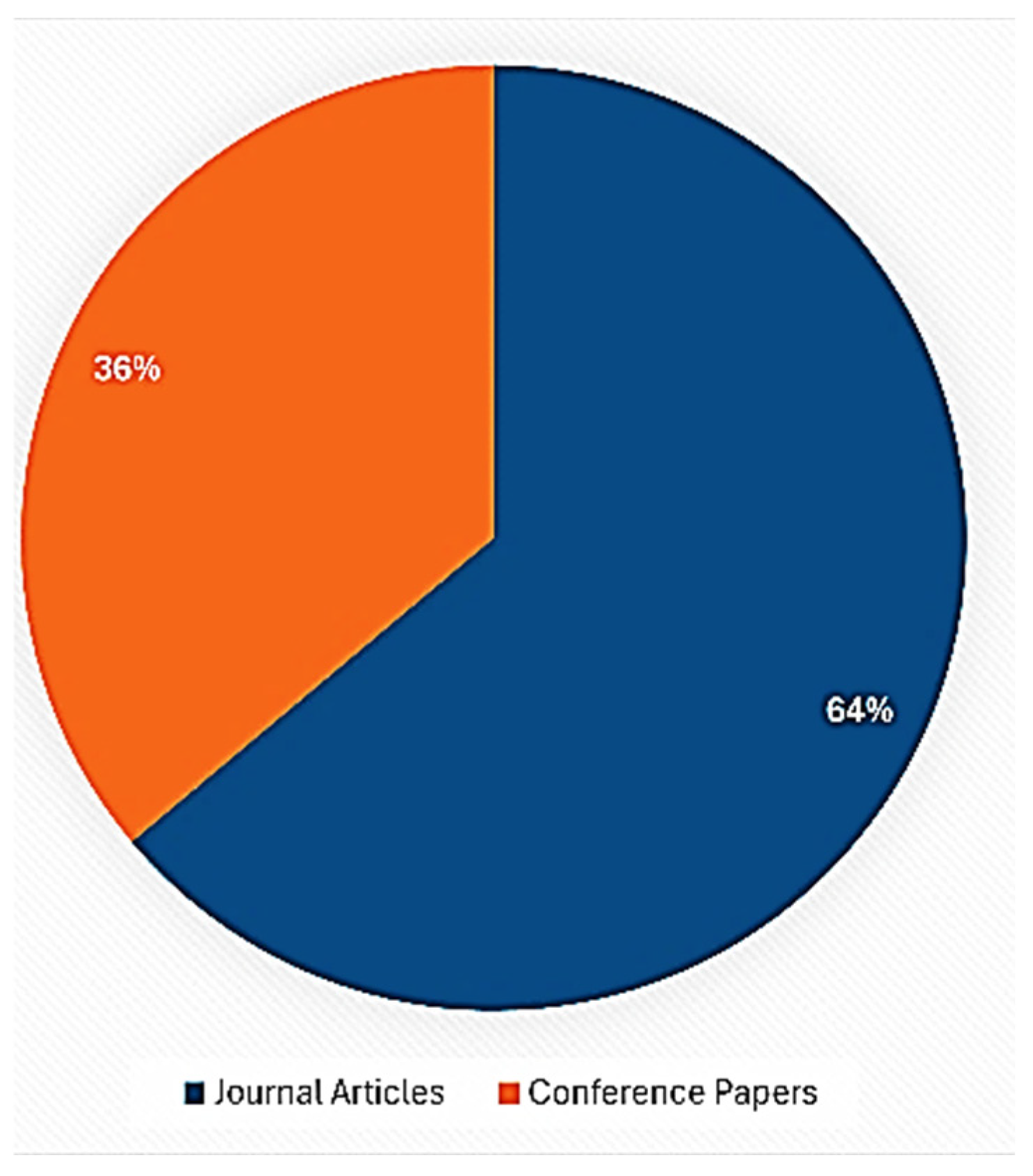

The collected studies consisted of peer-reviewed journal articles and conference papers. The inclusion and exclusion criteria for this research were defined to include only relevant, interpretable, recent, high-quality research.

Therefore, the selected studies were limited to those written in English and published since 2017. The starting year was chosen to coincide with the beginning of the Transformer era, as the Transformer architecture was first introduced in 2017 in the landmark paper “Attention Is All You Need” [14]. Only papers reporting quantitative performance metrics were eligible for this review; purely theoretical papers without experiments were not included. Grey literature, such as reviews, editorials, and white papers, was excluded. Papers not focused on computer network contexts, as well as non-transparent studies, were also excluded.

3.5.5. Critical Appraisal (Risk of Bias Assessment)

For quality assessment, a custom rubric was constructed. This custom risk-of-bias (RoB) checklist was inspired by [51], PRISMA 2020 [50], and the CASP principles [52], and was designed to meet the requirements of machine learning/AI-related SLRs. Each of the 36 included sources was assessed against these RoB criteria and documented. The list can be found in Appendix A.

3.5.6. Information Sources and Search Strategy

The comprehensive, structured search query used to find relevant studies in the chosen scholarly databases was derived from the aforementioned scope and the inclusion/exclusion criteria. Peer-reviewed journal articles and conference papers were searched and collected from three well-known scholarly databases, namely Scopus, IEEE Xplore, and ACM DL, and their titles and abstracts were screened.

Search query:

(“Graph Neural Networks” OR gnn* OR graphsage* OR gcn* OR gat OR gin OR “R-GCN” OR mpnn* OR “Graph Convolutional Network” OR “Message Passing Neural Network” OR “Graph Isomorphism Network” OR “Relational Graph Convolutional Network” OR “Graph Attention Network” OR “Dynamic Graph Convolution Network” OR transformer* OR “attention mechanism” OR “self-attention” OR “Vanilla Transformer” OR “Vanilla Transformers” OR “Vision Transformer” OR “Vision Transformers” OR “Temporal Transformer” OR “Temporal Transformers” OR “Graph Transformer” OR “Deep Reinforcement Learning” OR “Reinforcement Learning” OR rl OR q-learning OR “Deep Q-Learning” OR sarsa* OR drl* OR dqn OR “Policy Gradient” OR ppo OR actor-critic OR a2c OR sac)

AND

(“network threats” OR “network anomalies” OR “brute force” OR “network scanning” OR “protocol abuse” OR “malicious tunneling” OR “DNS tunneling” OR “traffic obfuscation” OR “reverse shell” OR botnet OR “port scanning” OR “insider threats” OR “insider threat” OR “advanced persistent threats” OR “zero day” OR “zero-day” OR “zero-day exploits” OR “data exfiltration” OR “privilege escalation” OR “host compromise” OR “lateral movement” OR “persistence” OR “credential access” OR “command and control” OR “c2 traffic” OR “defense evasion” OR “initial access” OR exploit OR reconnaissance OR “post-exploitation” OR beaconing OR “data staging” OR “encrypted C2 traffic” OR “dns spoofing” OR “ip spoofing” OR “tcp syn flood” OR “udp flood” OR ddos OR pivoting OR “exfiltration over DNS” OR “exfiltration over HTTPS” OR “malicious payload”)

AND

(“computer networks” OR “network threat detection” OR “network traffic” OR “network monitoring” OR “network layer” OR “network anomaly detection” OR “network intrusion” OR “network intrusion detection” OR “network flows” OR netflow OR “network communications” OR “flow-based” OR “network behavior” OR “IP address” OR “IP header” OR “routing anomaly” OR “packet header” OR tcp OR udp OR “connection patterns” OR “flow-based detection” OR ftp OR smtp OR “application layer protocol” OR “payload analysis” OR “MAC address” OR “Ethernet frame” OR VLAN OR “session management” OR “SSL/TLS handshake” OR TLS)

NOT

(5g OR 4g OR “network on chip” OR chip OR power OR grid OR “smart grid” OR “mobile networks” OR “internet of things” OR iot* OR cloud* OR “cloud security” OR endpoint* OR “radio access network” OR “physical layer attacks” OR “spectrum sensing” OR “physical layer security” OR rfid* OR jamming OR phishing OR malware OR “spam detection” OR “network attacks detection” OR “network attack detection” OR cryptocurrency OR blockchain OR “software vulnerability” OR patching OR “static code analysis” OR review OR survey OR slr OR “Literature Review” OR “Systematic Literature review” OR nlp OR vision OR “recommender systems” OR social OR human OR bio OR transportation OR health OR drug)
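The query above has the shape (models) AND (threats) AND (network context) NOT (exclusions). The following Python sketch shows how a query of that shape could be assembled from term lists; it is illustrative only. The function names are hypothetical, only a handful of terms from each group are used, and the operator syntax simply mirrors the query as printed here, since each database may expect its own syntax. As a simplification, only phrases containing spaces are quoted.

```python
def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes; leave single tokens bare."""
    return f'"{term}"' if " " in term else term


def or_group(terms) -> str:
    """Join terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def build_query(include_groups, exclude_terms) -> str:
    """AND together the inclusion groups, then append one NOT group."""
    query = " AND ".join(or_group(group) for group in include_groups)
    if exclude_terms:
        query += " NOT " + or_group(exclude_terms)
    return query


# Small subsets of the term groups listed in the search query above.
models = ["Graph Neural Networks", "gnn*", "transformer*", "Reinforcement Learning"]
threats = ["network threats", "botnet", "lateral movement"]
context = ["network traffic", "netflow", "tcp"]
excluded = ["smart grid", "iot*", "survey"]

print(build_query([models, threats, context], excluded))
```

Keeping the term groups as separate lists makes it easier to report exactly which terms were added or removed between protocol registration and the final search.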

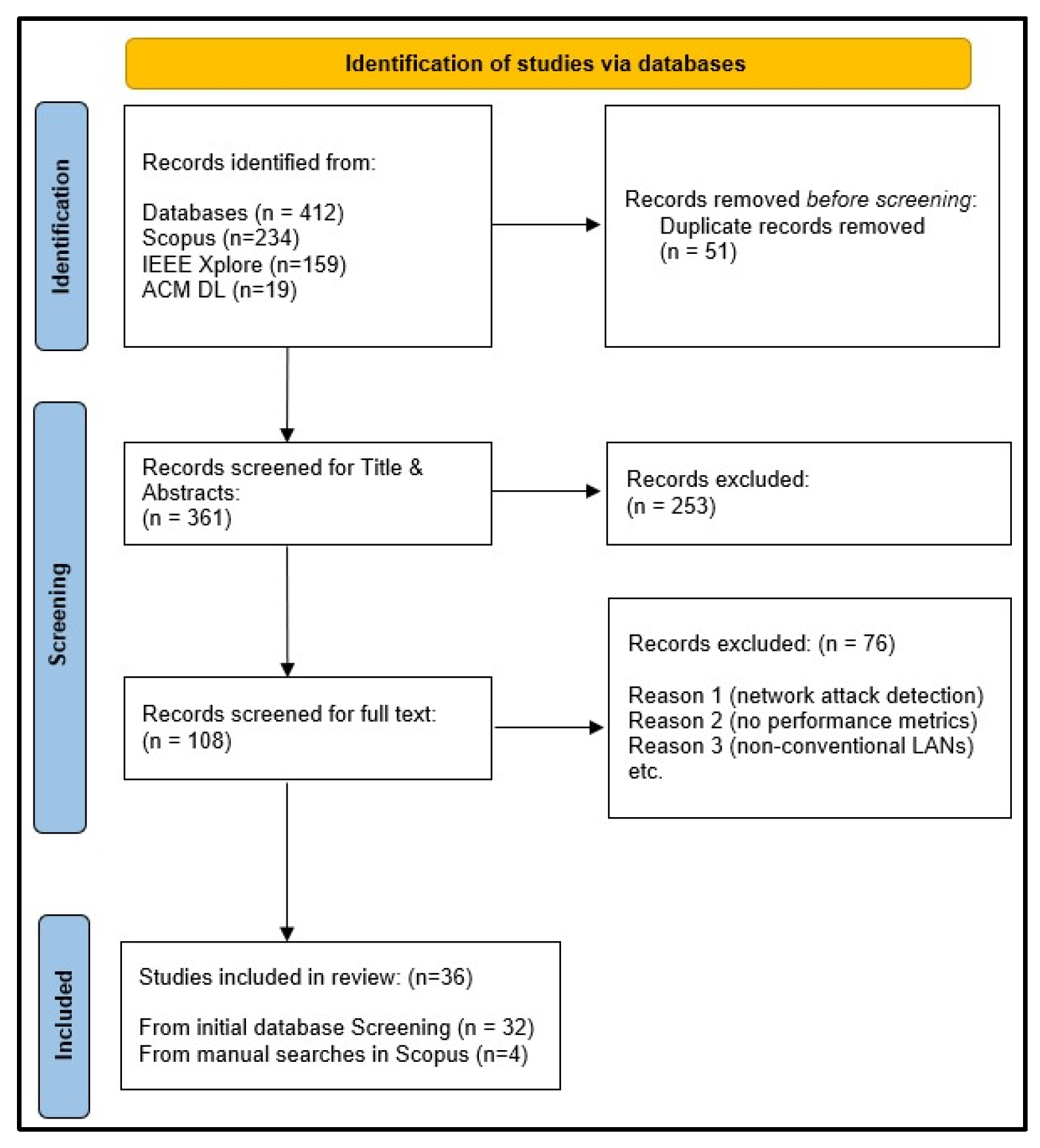

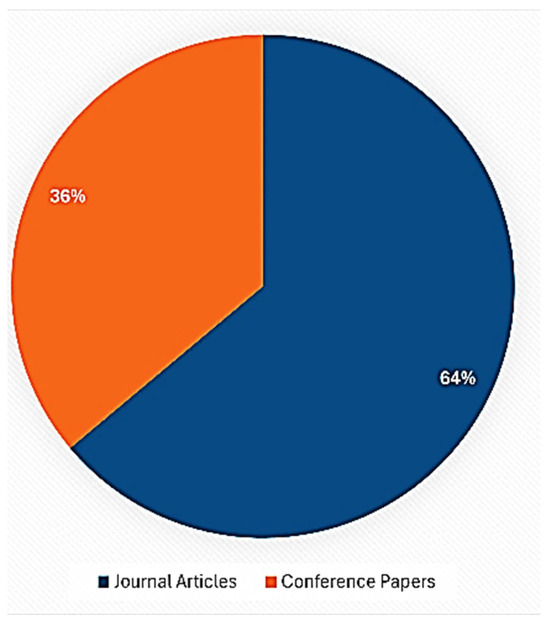

After running the search query and applying filters (year range 2017–2025, language: English, and only peer-reviewed journal articles and conference papers), Scopus returned 234 studies (123 journal articles and 111 conference papers), IEEE Xplore returned 159 (126 conference papers and 33 journal articles), and ACM DL returned 19 (17 conference papers and 2 journal articles). Altogether, 412 initial studies were collected from these three scholarly databases.
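As a quick consistency check, the per-database counts reported above can be tallied in a few lines of Python. The dictionary layout and variable names are illustrative choices; the numbers are those stated in the text.

```python
# Search results per database, split by publication type (as reported above).
results = {
    "Scopus": {"journal": 123, "conference": 111},
    "IEEE Xplore": {"journal": 33, "conference": 126},
    "ACM DL": {"journal": 2, "conference": 17},
}

per_database = {db: sum(counts.values()) for db, counts in results.items()}
total = sum(per_database.values())

print(per_database)  # prints {'Scopus': 234, 'IEEE Xplore': 159, 'ACM DL': 19}
print(total)         # prints 412
```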

3.5.7. Screening and Study Selection

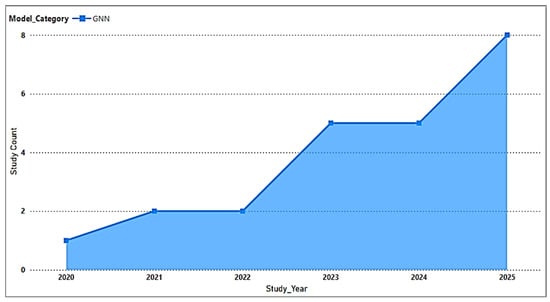

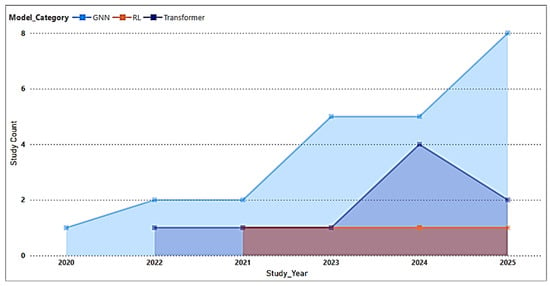

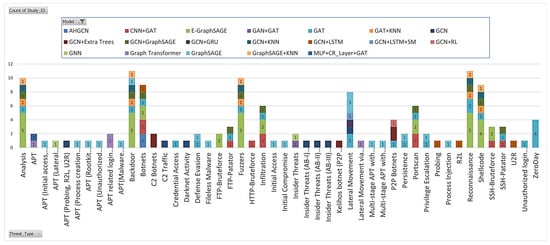

The three-stage screening (titles only, titles + abstracts, and full text) was designed to efficiently narrow down a high volume of search results while maintaining scientific rigor. Ambiguous cases were analyzed in depth to ensure the transparency of the review. Inclusion and exclusion criteria were clearly defined during the SLR protocol registration and strictly applied throughout the screening process. The citations of the finally selected papers were downloaded from each database, mostly in RIS format (some in EndNote and BibTeX formats as required), and imported into EndNote for reference management.