1. Introduction

Person re-identification (Re-ID) aims to match the identity (ID) of a query pedestrian with that of the same pedestrian captured previously in other scenes [1]. In recent years, countries around the world have been continuously increasing the number of surveillance devices to ensure the security of public areas. During the 2012 London Olympics, London added over 500,000 surveillance cameras for public security. As of 2021, there were an estimated 1 billion surveillance cameras globally. More cameras strengthen public security, but the resulting volume of data greatly increases the cost of manual retrieval. Therefore, the development of Re-ID technology has become essential.

As a specific pedestrian retrieval problem across non-overlapping cameras [2], Re-ID is seriously affected by variations in camera viewpoint, which disrupt feature extraction and reduce the accuracy of Re-ID algorithms. Thus, one of the key issues for an effective, scalable, and generalizable Re-ID model is how to eliminate the influence of perspective and pose variations [3]. Although many Re-ID methods [4,5] have attempted to solve this problem, most of them transform images from a given perspective into a specified standard image for recognition or use the transformed images as a supplement to the original image. This approach relies heavily on the generative model used, and generative models are generally unstable and difficult to train. Moreover, most of these methods use only a single type of image, which often results in poor performance at certain angles. Recently, multimodal methods that combine RGB images and gait images [6] have demonstrated excellent performance under external covariate changes. However, they do not fully consider the impact of perspective differences, so they are less effective for this problem.

To address the issue that perspective differences reduce the effectiveness of person Re-ID, this paper proposes an attention-enhanced person Re-ID algorithm based on appearance–gait information interaction. First, RGB images and gait energy images (GEIs) are combined in a dual-branch network structure to compensate for the information lost when a single image type is used at certain angles. A two-stage information interaction framework is proposed to integrate and exchange information between the two modalities during feature extraction and then feed it back to the corresponding branches to enhance the effectiveness of the features. To address the inherent differences between the two modalities and effectively mine deep semantic correlations between appearance and gait features, an attention-based Multimodal Information Exchange (MIE) module is designed. This module aims to bridge the significant discrepancies between RGB images and GEIs, thereby facilitating deeper and more effective feature extraction. Finally, the generated features are fused through an autoencoder using a weighting scheme guided by view information.

In summary, the main contributions of this paper are as follows:

To address the problem that the appearance and gait feature extraction processes are independent, preventing effective collaborative learning and guidance of information, a two-stage information interaction framework is proposed to enable appearance and gait features to learn from each other and enhance feature effectiveness.

To address the information discrepancy between appearance and gait modalities, this paper proposes an attention-based Multimodal Information Exchange (MIE) module. This module is designed to achieve deeper and more effective feature extraction through enhanced inter-modal guidance and integration, thereby improving the model’s overall information integration capability.

Registered appearance images and gait images are extracted from the gait dataset CASIA-B [7] for experimental evaluation. The results show that the proposed method outperforms existing methods on the performance metrics and is more stable under the evaluation protocol based on perspective differences.

The remainder of this paper is structured as follows:

Section 2 provides a comprehensive review of recent advances in person re-identification, encompassing classical handcrafted descriptors, deep learning-driven approaches, gait-based recognition paradigms, and attention-driven modeling techniques.

Section 3 delineates the proposed methodology in detail, outlining an integrated framework that synergistically combines appearance and gait modalities.

Section 4 presents a rigorous experimental evaluation, including a detailed description of the CASIA-B dataset, experimental protocol design, performance metrics, ablation studies examining the contributions of MIE operations and attention strategies, and comparative analyses against leading-edge methods in the field.

Finally, Section 5 offers concluding remarks and outlines promising directions for future research.

2. Related Work

2.1. Person Re-Identification

Before the emergence and popularity of deep learning, person Re-ID research relied on traditional handcrafted features, mostly color and texture features. Following the success of deep learning in image classification and object detection, deep learning was introduced into person Re-ID. Deep learning-based person Re-ID methods generally outperform handcrafted feature-based methods in accuracy, and the advantage is especially large on large-scale datasets. In 2014, Yi et al. and Li et al. [8,9] first introduced the Siamese network model into person Re-ID, improving accuracy by extracting image color and texture information. Sun et al. [10] proposed a partial refined pooling module, first evenly dividing the image horizontally and then refining each strip to enhance the internal consistency of block features. In person Re-ID for video sequences, since each matching unit corresponds to multiple images, most studies also use recurrent neural networks (RNNs) or long short-term memory networks for feature extraction. For example, McLaughlin et al. and Wu et al. [11,12] analyze appearance features (such as CNN, color, and LBP features) with an RNN, capture the temporal relationship between frames, and perform Re-ID after estimating the human pose. Regarding the perspective problem, Liu et al. [4] remove interference information from specific views through view confusion learning (VCFL) to learn view-invariant features and use SIFT [13,14] to guide the learning of neural networks. Wan et al. [15] proposed an angle-regularized perspective-aware loss, projecting features from different viewpoints onto a unified hypersphere to jointly model the distribution at the identity and perspective levels. These methods attempt to mitigate the impact of perspective in different ways, but because RGB images provide insufficient information at certain angles, their accuracy remains poor.

Similarly, some studies focus on multimodal Re-ID, leveraging combinations of different modalities to learn better features. A variety of data types are used, such as RGB-IR multimodal images [16,17], depth and color features [18], and image-text pairs [19,20]. Additionally, some studies combine RGB images and GEIs, effectively integrating appearance-based person Re-ID and gait recognition methods. Lu et al. [6] proposed a Re-ID framework consisting of a dual-branch model for fusing appearance and gait features, using an improved Sobel operator to extract gait features to overcome external covariate changes, i.e., changes in pedestrians’ clothing. Such methods combine data from various modalities, enriching the information contained in the features and enhancing their expressive power. However, they do not take into account the similarities and differences in data from different perspectives, and therefore do not effectively address the issue of perspective discrepancies.

2.2. Gait Recognition

Gait recognition is a biometric recognition technology developed on the basis of person Re-ID that aims to identify pedestrians using their gait information. It is a behavioral biometric method with unique advantages such as being contactless, difficult to forge, and passive. It usually does not require the explicit cooperation of the subject and has great application potential [21]. Gait features can be captured remotely in uncontrolled scenarios, which makes them a valuable tool in video surveillance with broad application prospects. In gait recognition, the two common types of data are appearance contours and skeletons, and appearance contours are used much more often than skeletons [22].

Wu et al. [23] proposed three possible network structures and conducted experiments demonstrating the clear advantages of deep learning in gait recognition. Yu et al. [24] used a GAN model to convert gait at any angle into a 90° side-view gait in ordinary clothing and then extracted features, designing real/fake discriminators and recognition discriminators to determine whether the generated image is real and whether the features in the image are retained. With the progress of deep learning, a series of efficient gait recognition methods have emerged in recent years, such as GaitPart [25], GLN [26], and PartialRNN [27], improving the accuracy of gait recognition. However, these methods do not account for the fact that images from different perspectives contain different amounts of gait information and key features, so changes in perspective degrade gait recognition performance. A side perspective at 90° contains richer gait information, whereas a front perspective at 0° consists primarily of static information, with almost negligible dynamic information.

2.3. Attention Mechanism

The attention mechanism was first proposed by Itti et al. [28] in 1998. In 2014, it became popular after being applied to the recurrent neural network (RNN) model by Mnih et al. [29]. In 2015, Xu et al. [30] introduced the attention mechanism into the image field, proposing two attention-based image description generation models. The most influential work is undoubtedly the Transformer architecture proposed by Vaswani et al. [31] of the Google team in 2017, which is based on the encoder–decoder structure. It addresses the inability of RNNs to process sequences in parallel and the difficulty CNNs have in capturing long-distance dependencies efficiently, and it has since been applied to computer vision.

Hu et al. [32] proposed the channel attention mechanism; their SE-Net is a representative application of attention in the channel dimension and won the 2017 ImageNet large-scale image classification task. Hu et al. [33] proposed GE-Net, making full use of spatial attention to better explore the context information between features. Wang et al. [34] introduced the self-attention mechanism into the image field. By modeling the global context through self-attention, long-distance feature dependencies can be effectively captured. Leng et al. [35] designed the Attention-Aware Occlusion Erasure (AAOE) module for irregular occlusions in the person Re-ID task, addressing the issue that fixed partitions cannot handle complex occlusions, and weakening the attention paid to the occluded parts.

3. Materials and Methods

3.1. Overall Framework

To address the decrease in person Re-ID accuracy caused by perspective differences, this paper proposes a method that fuses appearance features and gait features. Specifically, the information in RGB images and GEIs is integrated, and a dual-branch network structure is designed so that the two modalities complement each other, mitigating the drop in Re-ID performance that occurs when a single modality provides insufficient information at certain perspectives.

As shown in Figure 1, the overall framework consists of four main parts: the feature extraction module, the multimodal information exchange module, the importance weight estimation module, and the feature fusion module. First, the appearance features of RGB images and the gait features of GEIs are extracted using a dual-branch network architecture. During feature extraction, the multimodal information exchange module is applied twice, at the middle layer and at the end layer. The two modalities guide and reinforce each other progressively, making full use of the feature extraction process to enhance the model’s ability to integrate information from both modalities. Then, the perspective of the pedestrian is estimated, and the estimated angle is mapped to importance weights through a mapping function to quantify the importance of the features at different perspectives. Finally, weighted fusion and optimization are performed with an autoencoder to reduce redundant and interfering information while retaining key recognition information.

3.2. Feature Extraction Module

In computer vision research, deep learning methods are widely used in feature extraction tasks, and convolutional neural networks are generally regarded as effective feature extraction tools. ResNet-50 is a deep network architecture whose residual blocks effectively alleviate the problems of vanishing and exploding gradients, thereby enhancing the network’s ability to learn complex features and its overall performance. Therefore, this paper selects ResNet-50 as the backbone network for both the appearance branch and the gait branch to fully utilize its powerful feature representation ability.

For the appearance branch, since the person Re-ID algorithm in this paper operates on video data, the input should include multiple frames. According to Gao et al. [36], for data containing temporal cues, the temporal attention mechanism exhibits optimal performance. They also point out that temporal pooling performs comparably to temporal attention while being simpler to implement. Therefore, this paper uses temporal pooling to integrate the features extracted from the appearance branch. The formula for temporal pooling is shown in Equation (1):

In Equation (1), the t-th term denotes the t-th image-level feature in a sequence of length T, the output represents the feature after temporal pooling aggregation, and D is the dimensionality of the features extracted by ResNet-50. Gao et al. report that temporal pooling performs best when T is set to 4. Consequently, this paper adopts the same setting, selecting T = 4 as the window size for temporal pooling.
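The pooling step can be illustrated with a minimal sketch, assuming temporal pooling is a plain average of the T frame-level features. The function name `temporal_pool` and the toy dimension D = 3 are illustrative assumptions; with ResNet-50 the feature dimension would be much larger.

```python
from typing import List


def temporal_pool(features: List[List[float]]) -> List[float]:
    """Average T frame-level feature vectors of dimension D into one vector.

    Assumption: temporal pooling is an unweighted mean over the T frames.
    """
    if not features:
        raise ValueError("need at least one frame feature")
    t = len(features)
    d = len(features[0])
    if any(len(f) != d for f in features):
        raise ValueError("all frame features must share the same dimension")
    return [sum(f[j] for f in features) / t for j in range(d)]


# T = 4 frames with a toy dimension D = 3.
frames = [
    [1.0, 0.0, 2.0],
    [3.0, 0.0, 2.0],
    [1.0, 4.0, 2.0],
    [3.0, 4.0, 2.0],
]
pooled = temporal_pool(frames)
```

Each output element is the mean of the corresponding element across the four frames, so the pooled vector keeps dimension D regardless of T.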

For the gait branch, we consider the fact that GEI images are widely used in gait recognition because they integrate the static and dynamic information of pedestrians. Among them, static information has a certain robustness to angle changes and can resist the decline in recognition performance caused by perspective differences to a certain extent. Therefore, this paper selects GEI images as the input for the gait branch.

3.3. Multimodal Information Exchange Module

This paper argues that there is an inherent correlation between the appearance and gait modalities, and that they can guide each other to improve feature extraction. Therefore, a dual-modal information interaction mechanism is constructed during the feature extraction stage, aiming to enhance feature extraction through mutual guidance and reinforcement between the two modalities, thereby improving the model’s ability to integrate the two types of modal information. The key regions in RGB images and GEIs usually have irregular shapes, inconsistent sizes, and uncertain positions. For example, color and facial features in RGB images are mainly concentrated in the upper half, while GEIs focus more on gait information in the lower body. In addition, the sizes of these important regions also change under different perspectives, especially in GEIs. In Figure 2, the red boxes mark the key regions. Because of the differences between the two modalities, this paper learns the attention scores for each modality separately and returns this feedback to the respective branches to strengthen the learning of the important features of each modality.

In summary, because the important regions are irregular, this paper designs a Multimodal Information Exchange (MIE) module based on the AAOE module of Leng et al. [35] to address the information differences between the appearance and gait modalities. The MIE module adaptively adjusts the attention paid to the key features of each modality and feeds it back to the corresponding branch. The structure of the MIE module is shown in Figure 3, where F_a and F_g represent the feature vectors input from the appearance branch and the gait branch, respectively, and A_a and A_g represent the attention scores fed back to the appearance branch and the gait branch, respectively.

The MIE first integrates the information of appearance and gait by summation. Due to the significant differences between RGB images and GEI images, including inconsistent shapes, positions, and scales of the attention areas, if only a single attention feedback module is used, it may lead to information confusion between modalities and affect subsequent processing. Therefore, this paper sets up two independent attention modules for separate learning to ensure that the network can specifically learn the characteristics of the two modalities and avoid information confusion between modalities.

The key regions in RGB and GEI features usually have irregular shapes and differ significantly across perspectives, and the AAOE module handles irregular shapes adaptively and accurately. Therefore, this paper uses the AAOE module. However, whereas the original AAOE module weakens attention, this paper uses it to enhance attention. Given that element-wise addition preserves complementary information, while element-wise multiplication emphasizes the correlation between its operands, this paper changes the operation that integrates the attention of two partitions in AAOE from multiplication to addition so as to retain the attention information of each partition. This modification enables the network to select and adjust attention scores according to actual needs, achieving targeted feedback for specific regions. The number of partitions is an adjustable hyperparameter that can be tuned to the characteristics of specific tasks and datasets.

It is worth noting that the MIE module is used twice in this paper to enhance the model’s ability to process the person Re-ID task.

First, the first MIE module is introduced after the output of the second layer (layer2) of the ResNet-50 backbone. At this point, the model has four appearance features and one gait feature, so five attention maps are ultimately needed: one for each appearance feature and one for the gait feature. Each appearance feature is added to the gait feature to form four fused feature maps, which are input into the MIE module so that the network learns a dedicated attention pair for each appearance–gait feature pair. The MIE module therefore produces four attentions for the appearance features and four attentions for the gait feature. The four appearance attentions are fed back directly to their respective appearance features, while the four gait attentions are averaged before being fed back to the gait feature.
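The bookkeeping of this first interaction stage can be sketched as follows. This is only an illustration under stated assumptions: `toy_attend` is a hypothetical stand-in for the two learned attention heads, features are flat vectors rather than feature maps, and feedback is assumed to be an element-wise multiplication of each feature by its attention scores.

```python
from typing import Callable, List, Tuple

Vector = List[float]
AttentionFn = Callable[[Vector], Tuple[Vector, Vector]]


def toy_attend(fused: Vector) -> Tuple[Vector, Vector]:
    """Hypothetical attention heads: returns (appearance attention, gait attention)."""
    app_att = [0.5 for _ in fused]      # constant scores, for illustration only
    gait_att = [v / 10.0 for v in fused]
    return app_att, gait_att


def first_stage_mie(app_feats: List[Vector], gait_feat: Vector,
                    attend: AttentionFn) -> Tuple[List[Vector], Vector, Vector]:
    app_atts, gait_atts = [], []
    for f_a in app_feats:
        # Integrate each appearance feature with the gait feature by summation.
        fused = [a + g for a, g in zip(f_a, gait_feat)]
        a_a, a_g = attend(fused)
        app_atts.append(a_a)
        gait_atts.append(a_g)
    # Each appearance feature receives its own attention (assumed multiplicative).
    new_app = [[x * w for x, w in zip(f, a)] for f, a in zip(app_feats, app_atts)]
    # The gait attentions are averaged before being fed back.
    n = len(gait_atts)
    mean_gait_att = [sum(col) / n for col in zip(*gait_atts)]
    new_gait = [x * w for x, w in zip(gait_feat, mean_gait_att)]
    return new_app, new_gait, mean_gait_att


app = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]  # four frame features
gait = [1.0, 1.0]                                        # one GEI feature
new_app, new_gait, mean_att = first_stage_mie(app, gait, toy_attend)
```

The sketch yields four appearance attentions and four gait attentions, with the latter reduced to a single averaged attention, matching the count of five attentions that are fed back.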

Subsequently, after the fourth layer (layer4) of the backbone network ResNet-50, the MIE module is deployed again. At this time, the four appearance features have been combined into a comprehensive appearance feature through temporal pooling. The pooled appearance feature and the gait feature are summed and then input into the MIE module to learn the respective attention scores for these two features and feed them back to the corresponding features, further optimizing the model’s ability to capture important information about appearance and gait features.

3.4. Importance Weight Estimation Module

In this study, the importance weights of features from two modalities are estimated based on perspective information. Initially, the input data undergoes perspective estimation to identify the angle of the target pedestrian relative to the camera’s shooting position. Subsequently, through a weight mapping formula, the importance of the two modalities at different angles is expressed as weight values. The process of importance weight estimation is depicted in Figure 4.

The Gait Energy Image (GEI) is constructed from all complete target pedestrian segmentation maps within the video data. It aggregates angular information from multiple images and is free from interference from color information, making it suitable for perspective estimation. After the pedestrian’s perspective angle is estimated, the importance weight mapping formula maps it to the weights of the two features. The frontal view (0°) and rear view (180°) of the GEI contain relatively little gait information, while the side view (90°) contains richer gait information [21]. In the preliminary work of this study [37], five sets of importance weight mapping formulas were designed. Based on the experimental results, the importance weight mapping formulas are set as shown in Equations (2) and (3):

Here, w_a and w_g represent the weights of appearance features and gait features, respectively; x denotes the angle, measured in degrees.
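To make the idea of an angle-to-weight mapping concrete, the sketch below uses a purely hypothetical sine-based mapping. It is not the mapping of Equations (2) and (3); it only reproduces the qualitative property stated above, namely that the gait weight is largest at the 90° side view and smallest at 0° and 180°. The constants 0.25 and 0.5 and the function name are assumptions chosen for illustration.

```python
import math
from typing import Tuple


def importance_weights(angle_deg: float) -> Tuple[float, float]:
    """Hypothetical mapping from view angle x (degrees, 0 to 180) to (w_a, w_g).

    Assumption: w_g follows a sine curve peaking at 90 degrees and w_a = 1 - w_g.
    """
    if not 0.0 <= angle_deg <= 180.0:
        raise ValueError("angle must lie in [0, 180] degrees")
    w_g = 0.25 + 0.5 * math.sin(math.radians(angle_deg))
    w_a = 1.0 - w_g
    return w_a, w_g


front = importance_weights(0.0)   # appearance dominates at the frontal view
side = importance_weights(90.0)   # gait dominates at the side view
rear = importance_weights(180.0)  # appearance dominates again at the rear view
```

Any mapping with the same shape could be substituted; the point is only that the estimated angle determines how much each modality contributes to the fused feature.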

3.5. Feature Fusion Module

When dealing with multi-branch networks, the fusion function effectively integrates the advantages of each branch, especially when there are significant view differences [38]. The method proposed in this paper requires the fusion of appearance features extracted from RGB images and gait features extracted from GEIs. However, without proper processing, the fused features may contain redundant and interfering information. Therefore, this paper selects the Auto-Encoder (AE) for fusion optimization. The AE can refine and simplify features while retaining important information, effectively removing redundant and interfering information. Additionally, the AE is learnable and can be trained toward specific objectives. It mainly consists of an encoder and a decoder. The encoder is responsible for encoding the original features or data, while the decoder decodes the encoded features. In this study, both the encoder and decoder employ a three-layer network architecture. Each layer performs dimensionality reduction or expansion by a factor of two, and the latent feature’s output dimension is 2048. Under the constraint of the Mean Square Error (MSE) loss, the decoding process aims to generate features that are as close as possible to the original features or data.

The formula for the fusion module is shown in Equation (4), where the encoding function represents the encoder in the AE.

3.6. Loss Function

This paper employs three loss functions: the Label Smoothing Cross-Entropy Loss function, the Triplet Loss for hard samples, and the Mean Square Error Loss. The Cross-Entropy Loss function is the most fundamental loss function in the Re-ID task. A fully connected layer is connected after the feature layer as the classification layer, and the number of classification categories is equal to the number of pedestrian identities (IDs) in the training set. The Cross-Entropy Loss is calculated after the Softmax operation. During the testing and practical application phases, the classification fully connected layer and Softmax are discarded, and the features extracted from the feature layer are directly used for retrieval. The Label Smoothing technique can effectively prevent overfitting, especially when the amount of training data is limited. This technique adds slight noise to the true labels, enabling the model to better understand the boundaries of each category during training and thus improving generalization performance. Its formula is expressed as Equation (5):

where y is the true label, p_i is the predicted probability of the i-th class, the small constant ε ∈ [0, 1] is the label smoothing coefficient, and N is the number of classes.
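A minimal sketch of this loss is given below, assuming the common formulation in which the target for the true class is 1 − ε + ε/N and every other class receives ε/N; the exact form used in the paper may differ. The default ε = 0.1 and the function name are illustrative assumptions.

```python
import math
from typing import List


def label_smoothing_ce(probs: List[float], y: int, eps: float = 0.1) -> float:
    """Cross-entropy against a smoothed target distribution.

    probs: softmax outputs p_i over N classes; y: index of the true class.
    Assumption: q_i = 1 - eps + eps / N for i == y, and eps / N otherwise.
    """
    n = len(probs)
    loss = 0.0
    for i, p in enumerate(probs):
        q = (1.0 - eps + eps / n) if i == y else eps / n
        loss -= q * math.log(p)
    return loss


probs = [0.7, 0.2, 0.1]
hard_loss = label_smoothing_ce(probs, y=0, eps=0.0)    # plain cross-entropy
smooth_loss = label_smoothing_ce(probs, y=0, eps=0.1)  # smoothed targets
```

With ε = 0 the expression reduces to the ordinary cross-entropy −log p_y. With ε > 0 some target mass is placed on the other classes, which penalizes over-confident predictions.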

The Triplet Loss for hard samples, L_tri, is an optimized version of the traditional Triplet Loss. In each training batch, for each anchor sample, the most challenging (farthest) positive sample and the most difficult-to-distinguish (nearest) negative sample are selected to form a triplet. Its formula is expressed as Equation (6):

where the triplet consists of the anchor, positive sample, and negative sample of the i-th class, m represents the fixed margin of the Triplet Loss, and the distances are computed with the distance metric function.
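The hard-sample mining described above can be sketched as follows. The sketch assumes Euclidean distance, averaging over anchors, and a margin of 0.3; none of these values is stated in the text, so they are illustrative assumptions, as are the function names.

```python
import math
from typing import List, Sequence


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def batch_hard_triplet_loss(feats: List[List[float]], labels: List[int],
                            margin: float = 0.3) -> float:
    """Mean over anchors of max(0, margin + d(a, hardest pos) - d(a, hardest neg))."""
    losses = []
    for i, (f_a, y_a) in enumerate(zip(feats, labels)):
        pos = [euclidean(f_a, f) for j, (f, y) in enumerate(zip(feats, labels))
               if y == y_a and j != i]
        neg = [euclidean(f_a, f) for f, y in zip(feats, labels) if y != y_a]
        if not pos or not neg:
            continue  # anchor has no valid triplet in this batch
        # Farthest positive and nearest negative form the hardest triplet.
        losses.append(max(0.0, margin + max(pos) - min(neg)))
    return sum(losses) / len(losses) if losses else 0.0


labels = [0, 0, 1, 1]
separated = [[0.0, 0.0], [1.0, 0.0], [5.0, 0.0], [6.0, 0.0]]
interleaved = [[0.0, 0.0], [2.0, 0.0], [1.0, 0.0], [3.0, 0.0]]
loss_separated = batch_hard_triplet_loss(separated, labels)
loss_interleaved = batch_hard_triplet_loss(interleaved, labels)
```

When the two identities are well separated, every hardest negative is farther than the hardest positive by more than the margin, so the loss is zero. When the identities interleave, every anchor contributes a positive loss.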

In addition, the Mean Square Error Loss L_MSE is used in the AE structure to maintain the similarity between the original features and the decoded features. The formula is expressed as Equation (7):

where y_i is the label, i.e., the original feature, and ŷ_i is the predicted value, i.e., the decoded feature.

In light of the experimental results from the preliminary work of this paper [39], the L_MSE weight is still set to 1.25 in this paper. The final loss function is shown in Equation (8):
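How the three terms might be combined is sketched below. The weight of 1.25 on the MSE term comes from the text; the unit weights on the cross-entropy and triplet terms are an assumption, and the function names are illustrative.

```python
from typing import Sequence


def mse(original: Sequence[float], decoded: Sequence[float]) -> float:
    """Mean squared error between the original and the decoded feature."""
    if len(original) != len(decoded):
        raise ValueError("features must have the same length")
    return sum((o - d) ** 2 for o, d in zip(original, decoded)) / len(original)


def total_loss(l_ce: float, l_tri: float, l_mse: float,
               mse_weight: float = 1.25) -> float:
    """Assumed combination: L = L_ce + L_tri + mse_weight * L_MSE."""
    return l_ce + l_tri + mse_weight * l_mse


reconstruction_error = mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
combined = total_loss(l_ce=1.0, l_tri=0.5, l_mse=0.4)
```

The reconstruction term only constrains the autoencoder, while the other two terms drive identity discrimination, so the weight controls how strongly the fused feature is tied to the original features.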

4. Experiments and Analysis

4.1. Datasets

This paper uses the gait database CASIA-B [7], which was released in 2005 by the State Key Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences, China. The database contains two types of data: video data and pedestrian contour data. The video data covers 124 pedestrians, each filmed under three different clothing and carrying conditions and 11 perspectives ranging from 0° to 180°. Specifically, each person’s data consists of 6 normal walking (NM) sequences (NM-01 to NM-06), 2 sequences with a coat (CL) (CL-01 and CL-02), and 2 sequences with a backpack (BG) (BG-01 and BG-02). The pedestrian contour data is obtained by decomposing each pedestrian’s video into frames and applying traditional image segmentation algorithms, converting each frame of the video into a binary image.

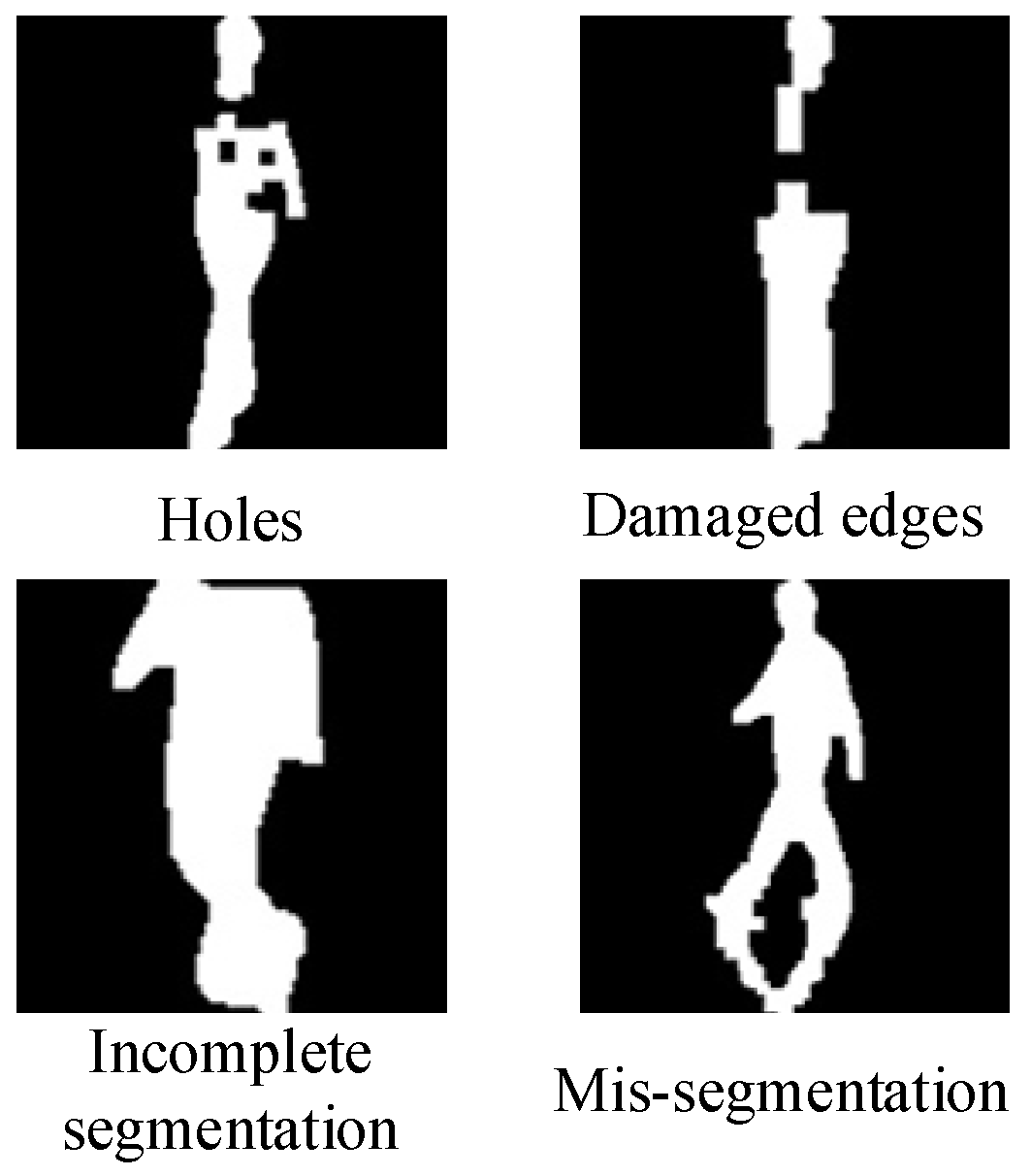

This paper aims to address the decrease in pedestrian re-identification performance caused by perspective differences. Therefore, only the NM sequences are used for testing to eliminate the influence of factors such as clothing changes. Traditional segmentation algorithms have low resource requirements, which suits scenarios with limited hardware, but some of the resulting contour data contains holes, damaged edges, and incomplete segmentation, as shown in Figure 5. These defects can adversely affect the pedestrian re-identification network.

To mitigate the reduction in recognition rate due to segmentation problems, this paper screens and removes GEIs with severe defects before training and testing the network. This helps to minimize the impact of segmentation problems on the recognition results and is also beneficial for evaluating the effectiveness and reliability of the proposed method in practical applications. The specific experimental data settings are presented in Table 1.

4.2. Experimental Details

4.2.1. Parameter Settings and Experimental Conditions

The backbone of the proposed framework is ResNet-50, initialized with weights pre-trained on the ImageNet dataset to ensure robust feature representation at the onset of training. During optimization, we employ the Adam optimizer, a widely adopted adaptive gradient method, with an initial learning rate of 1 × 10^−4 that decays by a factor of 0.96 after each epoch. The total number of epochs is set to 150, allowing sufficient convergence while mitigating overfitting risks.
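The decay schedule follows directly from these settings. The short sketch below computes the learning rate at a given epoch, assuming the decay is applied once per completed epoch starting from epoch 0; the function name is illustrative.

```python
def learning_rate(epoch: int, base_lr: float = 1e-4, gamma: float = 0.96) -> float:
    """Exponentially decayed learning rate after `epoch` completed epochs."""
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    return base_lr * gamma ** epoch


initial_lr = learning_rate(0)    # value at the start of training
after_one = learning_rate(1)     # value after the first decay step
final_lr = learning_rate(150)    # roughly 2e-7 after 150 decay steps
```

Over 150 epochs the rate therefore falls by a factor of several hundred. In PyTorch, the same schedule would typically be expressed with `torch.optim.lr_scheduler.ExponentialLR` using `gamma=0.96`, although the text does not say which scheduler was used.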

To simulate realistic deployment scenarios, where pose annotations may not be available during inference, we utilized a pre-trained ResNet-34 viewpoint classification model. This model was trained using GEIs based on the dataset split described in Table 1. During testing, this trained model functions as our perspective estimation module, allowing us to simulate potential misclassification errors that a real-world perspective estimation module might produce.

All experiments were conducted on a server equipped with an Intel(R) Xeon(R) CPU E5-2698 v4 @ 2.20GHz processor and a single NVIDIA Tesla V100-DGXS-32GB GPU. The software stack comprised Python v3.9.15, PyTorch v1.8, and CUDA v11.4, ensuring compatibility with modern deep learning pipelines and efficient memory utilization.

4.2.2. Evaluation Metrics

We adopt two well-established metrics in person Re-ID: Cumulative Matching Characteristics (CMC) [38] and mean Average Precision (mAP) [39]. These measures provide complementary perspectives on model performance. The CMC curve quantifies the probability of retrieving the correct identity within the top-k ranked candidates, commonly referred to as Rank-k accuracy. In this work, we emphasize Rank-1 performance, which reflects the model’s ability to place the true match at the very first position, an essential property for real-time applications requiring immediate identification.

In contrast, mAP offers a more holistic assessment by averaging precision values across all relevant samples, particularly beneficial when multiple ground-truth matches exist per query. Unlike CMC, which focuses narrowly on top-ranked results, mAP evaluates how effectively the model ranks all positive instances relative to negatives—an attribute critical for evaluating robustness in complex environments. By integrating both CMC and mAP into our evaluation protocol, we establish a rigorous, multi-dimensional benchmark that captures not only retrieval efficiency but also overall ranking quality.
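Both metrics can be computed from the ranked gallery list of each query, as in the minimal sketch below. It assumes each query is represented by a list of booleans marking which ranked gallery items share the query identity, and it omits protocol details such as excluding same-camera gallery samples. The function names are illustrative.

```python
from typing import List, Tuple


def rank_k_and_ap(ranked_matches: List[bool], k: int = 1) -> Tuple[float, float]:
    """Return (Rank-k hit, average precision) for one query.

    ranked_matches[i] is True if the i-th ranked gallery item has the query identity.
    """
    hit = 1.0 if any(ranked_matches[:k]) else 0.0
    n_pos = sum(ranked_matches)
    if n_pos == 0:
        return hit, 0.0
    hits = 0
    precision_sum = 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank  # precision at each correct match
    return hit, precision_sum / n_pos


def evaluate(all_ranked: List[List[bool]], k: int = 1) -> Tuple[float, float]:
    """Average Rank-k accuracy and mAP over all queries."""
    results = [rank_k_and_ap(r, k) for r in all_ranked]
    rank_k = sum(h for h, _ in results) / len(results)
    mean_ap = sum(ap for _, ap in results) / len(results)
    return rank_k, mean_ap


queries = [
    [True, False, True],   # correct match at rank 1 and rank 3
    [False, True, False],  # correct match only at rank 2
]
rank1, mean_ap = evaluate(queries, k=1)
```

In this toy case only the first query succeeds at Rank-1, while mAP also credits the second query for placing its match at rank 2, which illustrates how the two metrics complement each other.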

4.3. Ablation Experiments

4.3.1. MIE Information Integration Experiment

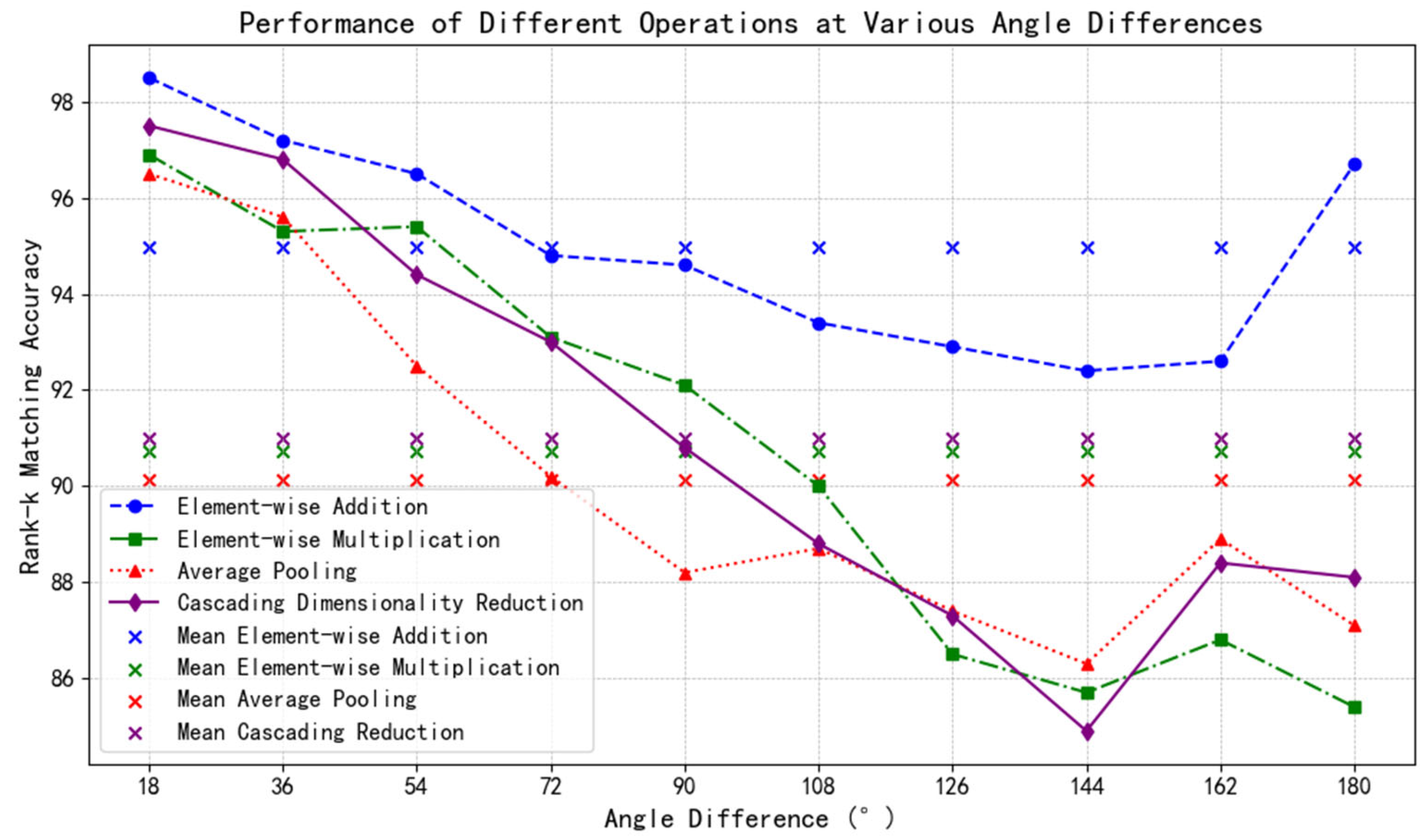

This section discusses the impact of the operation used to integrate the two modalities in the MIE module. Four operations are compared: element-wise addition, element-wise multiplication, average pooling, and concatenation with dimensionality reduction. Here, average pooling means adding the two features and then taking the element-wise average. The concatenation operation doubles the number of feature channels, so a 1 × 1 convolution is added for dimensionality reduction. The experimental results are presented in Figure 6 and Figure 7.

It can be seen that element-wise addition performs the best. This is because this operation can retain the original information of the two modalities and enhance the overall feature representation by simply accumulating their respective advantages. Since the pedestrian re-identification task usually requires capturing and fusing multiple visual features, this method more effectively integrates the complementary information of the two modalities, thereby improving the recognition performance. Element-wise multiplication emphasizes the relevance of information, which may lead to mutual suppression of features, especially when some element values of the two modalities are small or zero, resulting in the loss of important information. Although average pooling can fuse the information of the two modalities to a certain extent, the operation of taking the average may dilute some important feature details. Concatenation may cause problems such as information redundancy and unbalanced feature importance, and the subsequent convolution operation may also lead to the compression and loss of some information. Therefore, its effect is not optimal.
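The four integration operations can be compared on toy vectors, as sketched below. Features are flat channel vectors here, and the 1 × 1 convolution is modeled as a linear projection over channels at a single spatial position. The projection weights are hypothetical fixed values chosen for illustration, whereas in the network they would be learned.

```python
from typing import List

Vector = List[float]


def fuse_add(a: Vector, g: Vector) -> Vector:
    return [x + y for x, y in zip(a, g)]


def fuse_mul(a: Vector, g: Vector) -> Vector:
    return [x * y for x, y in zip(a, g)]


def fuse_avg(a: Vector, g: Vector) -> Vector:
    return [(x + y) / 2.0 for x, y in zip(a, g)]


def fuse_concat_reduce(a: Vector, g: Vector, weights: List[Vector]) -> Vector:
    """Concatenate to 2C channels, then project back to C channels."""
    concat = a + g  # list concatenation doubles the channel count
    return [sum(w * c for w, c in zip(row, concat)) for row in weights]


appearance = [0.0, 2.0, 1.0]
gait = [3.0, 0.0, 1.0]
# Hypothetical C x 2C projection; a trained 1 x 1 convolution would learn these values.
projection = [
    [0.5, 0.0, 0.0, 0.5, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.0, 0.5, 0.0],
    [0.0, 0.0, 0.5, 0.0, 0.0, 0.5],
]

added = fuse_add(appearance, gait)
multiplied = fuse_mul(appearance, gait)
averaged = fuse_avg(appearance, gait)
reduced = fuse_concat_reduce(appearance, gait, projection)
```

In the first two channels one modality is zero, so multiplication erases the other modality's response, whereas addition keeps it. This mirrors the mutual-suppression argument made above.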

4.3.2. MIE Partition Experiment

This section investigates the impact of different partitioning strategies within the Multi-Information Exchange (MIE) module on recognition accuracy. Specifically, we integrate MIE modules at two distinct layers—layer2 and layer4—of the ResNet-50 backbone, enabling hierarchical interaction between appearance and gait features.

As shown in Figure 8 and Figure 9, varying the number of partitions reveals a clear trade-off between resolution fidelity and noise sensitivity. When the partition configuration is set to 8-2 (i.e., 8 partitions in layer2 and 2 in layer4), the model achieves optimal performance in both Rank-1 and mAP scores.

The 8-2 configuration performs best for the following reasons. Layer2 produces shallow, high-resolution features that carry a large amount of detail. With too few partitions at this layer, each partition covers too large a region, so fine details are blurred or merged. The attention feedback mechanism then receives little useful information and may even receive misleading information, which reduces feature extraction quality. Layer4, in contrast, produces deep, low-resolution features that are rich in semantic information. With too many partitions at this layer, the importance of individual regions becomes difficult to judge, the semantic information is fragmented, and attention is diffused, which degrades overall performance. Eight partitions for the shallow features therefore capture abundant detail, while two partitions for the deep features retain more holistic semantic information. The 8-2 configuration thus balances signal against noise, filtering out noise while capturing and reinforcing key features, which supports the design of the MIE module.
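The resolution argument can be illustrated with a short NumPy sketch. The partition geometry assumed here (equal horizontal stripes, each average-pooled into one descriptor) and the feature-map sizes (those of a standard ResNet-50 on a hypothetical 256 × 128 input) are assumptions made for illustration; they are not taken from the experimental setup, and `stripe_descriptors` is a hypothetical helper.

```python
import numpy as np


def stripe_descriptors(feature_map, num_parts):
    """Split a (C, H, W) map into num_parts equal horizontal stripes and
    average-pool each stripe, giving an array of shape (num_parts, C)."""
    c, h, w = feature_map.shape
    if h % num_parts != 0:
        raise ValueError("feature-map height must be divisible by num_parts")
    stripes = feature_map.reshape(c, num_parts, h // num_parts, w)
    return stripes.mean(axis=(2, 3)).T


rng = np.random.default_rng(0)
layer2_map = rng.random((512, 32, 16))   # assumed layer2 output (stride 8)
layer4_map = rng.random((2048, 8, 4))    # assumed layer4 output (stride 32)

shallow_parts = stripe_descriptors(layer2_map, num_parts=8)  # 4 rows per part
deep_parts = stripe_descriptors(layer4_map, num_parts=2)     # 4 rows per part

print(shallow_parts.shape)  # one descriptor per shallow partition
print(deep_parts.shape)     # one descriptor per deep partition
```

Under these assumed sizes, 8 partitions at layer2 and 2 partitions at layer4 both pool four feature rows per partition. Splitting the 8-row layer4 map into 8 parts would leave a single row per descriptor, one way to picture the fragmentation described above.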

4.3.3. Comparative Study of Attention Mechanisms

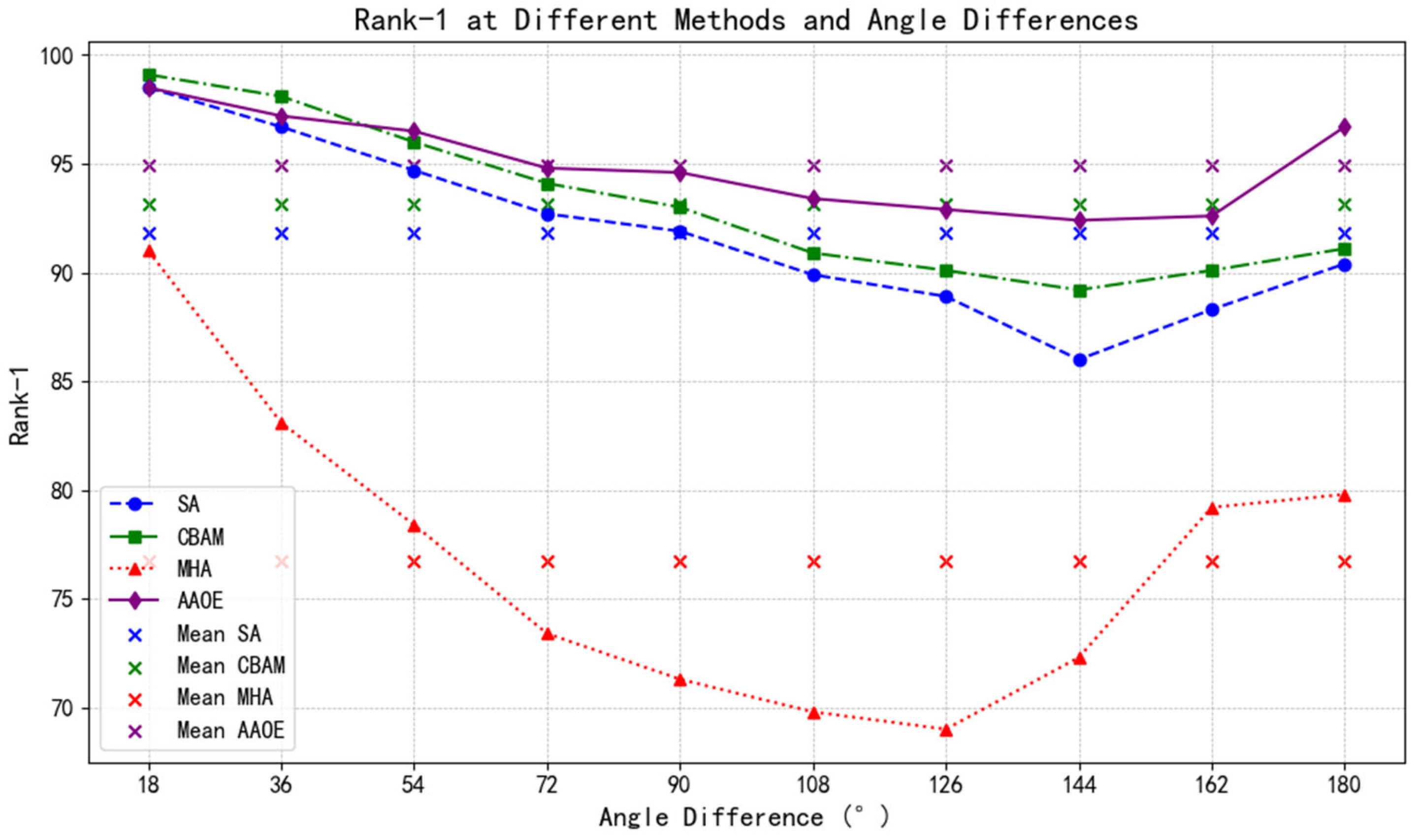

We further evaluate the efficacy of various attention mechanisms integrated into the MIE module. Four representative architectures are considered: Spatial Attention (SA), Convolutional Block Attention Module (CBAM), Multi-head Attention (MHA), and our proposed Adaptive Attention Output Estimator (AAOE).

Experimental results (Figure 10 and Figure 11) demonstrate that AAOE consistently outperforms other methods across all angular variations. This superiority stems from its inherent adaptability to irregularly shaped salient regions, a common characteristic in both RGB images and Gait Energy Images (GEIs), especially under non-frontal views. SA and CBAM, being predominantly global in scope, struggle to localize subtle yet discriminative patterns under large viewpoint changes. Meanwhile, MHA, though powerful in capturing pixel-wise dependencies, tends to amplify irrelevant details due to its exhaustive attention map generation, a behavior that can degrade overall performance through misallocation of focus.

By contrast, AAOE dynamically adjusts attention weights according to local context and modality-specific importance, yielding superior alignment between learned features and actual identity signals.

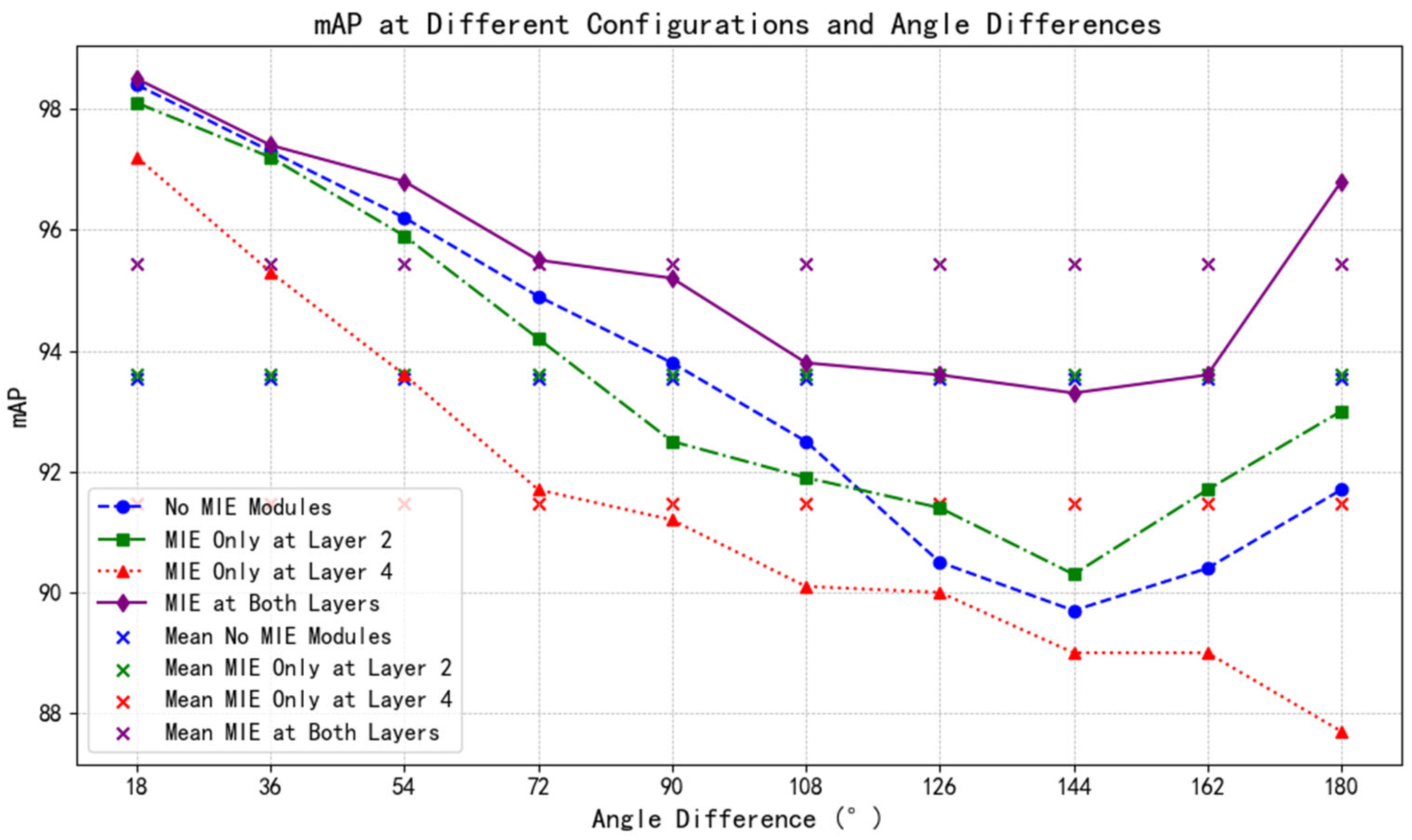

4.3.4. Overall Architecture Ablation

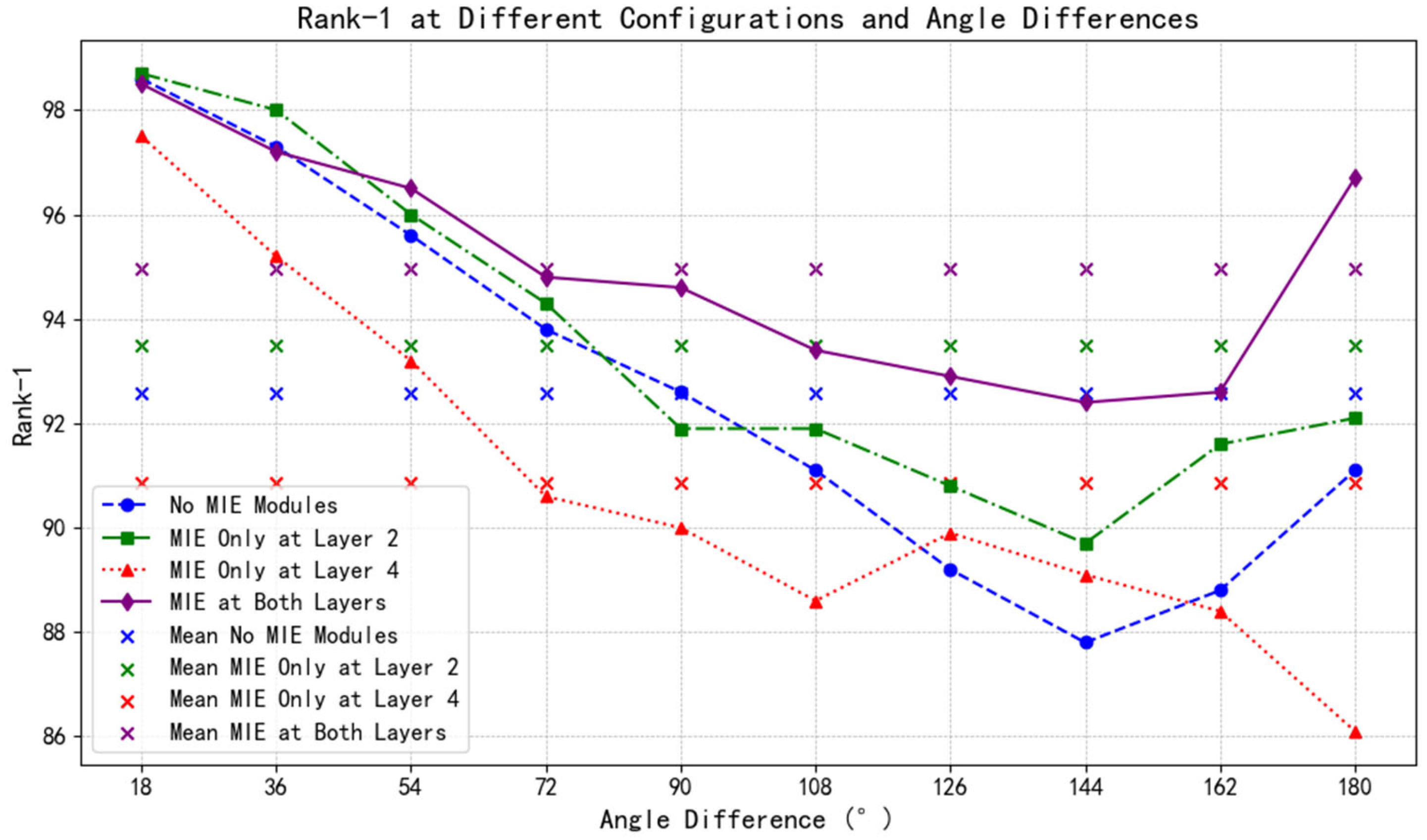

To assess the contribution of modular design choices, we conduct a comprehensive ablation study involving four configurations: (1) no MIE modules; (2) MIE only at layer2; (3) MIE only at layer4; and (4) MIE at both layers.

As evidenced by Figure 12 and Figure 13, the dual-MIE configuration yields the highest performance in both Rank-1 and mAP metrics. This outcome underscores the necessity of multi-level information fusion:

- Deploying MIE solely at layer2 leads to shallow feature integration without sufficient semantic guidance from deeper representations.
- Applying MIE exclusively at layer4 fails to leverage early-stage cross-modal interactions, resulting in suboptimal enrichment of high-level semantics and potential interference between branches.
- Employing MIE at both levels enables progressive refinement: layer2 facilitates coarse-grained feature alignment, while layer4 enhances fine-grained discrimination through contextual awareness, an architectural synergy that significantly boosts model resilience to viewpoint variation.

This layered approach exemplifies a principled strategy for building robust multimodal systems grounded in hierarchical feature engineering.

4.4. Comparative Evaluation

To rigorously validate the effectiveness of our method under challenging conditions, we compare against several state-of-the-art approaches: GaitGANv2, VGG16-MLP, MGOA, LTP, and LAVD. In all experiments, both the probe and gallery sets consisted exclusively of sequences captured under Normal Walking (NM) conditions, and all training and testing configurations were kept identical. As summarized in Table 2, our method achieves a higher mean accuracy (94.9%) than competing techniques, accompanied by a notably smaller variance (4.199).

The higher mean and smaller variance indicate that the proposed method is more stable and reliable under viewpoint changes. Three factors contribute. First, the method integrates appearance and gait features, combining the static information of appearance (e.g., morphology, contour) with the dynamic information of gait (e.g., stride length, stride frequency), and therefore improves on methods that use a single feature type (e.g., VGG16-MLP, GaitGANv2, and MGOA). Second, the viewpoint-based importance weight estimation fuses appearance and gait features through weighted integration, which balances signal against noise and yields an improvement over directly fusing the two, as in LTP. Third, the MIE module uses the attention mechanism to guide and strengthen collaborative learning between the two modalities while mitigating the large differences between them, which leads to deeper and more effective feature extraction. As a result, the method achieves higher accuracy and smaller variance than LAVD.
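The role of the two summary statistics can be illustrated with a small Python sketch. The per-view accuracies below are hypothetical placeholders, not values from Table 2, and the use of the population variance is an assumption, since the variance definition is not restated here. CASIA-B provides 11 views from 0° to 180°, but three are enough for the illustration.

```python
from statistics import fmean, pvariance


def summarize(per_view_accuracy):
    """Return (mean, population variance) of accuracy over probe views."""
    values = list(per_view_accuracy.values())
    return fmean(values), pvariance(values)


# Hypothetical accuracies (%) keyed by view angle in degrees.
stable_model = {0: 93.0, 90: 95.0, 180: 94.0}
unstable_model = {0: 99.0, 90: 85.0, 180: 98.0}

stable_mean, stable_var = summarize(stable_model)
unstable_mean, unstable_var = summarize(unstable_model)

print(f"stable:   mean={stable_mean:.1f}, variance={stable_var:.3f}")
print(f"unstable: mean={unstable_mean:.1f}, variance={unstable_var:.3f}")
```

Both hypothetical models have the same mean accuracy, yet the second one collapses at one view. The mean alone hides this, which is why the variance is reported alongside it as a measure of stability across viewpoints.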

Furthermore, we perform cross-domain evaluations to test generalization capabilities. Following standard protocol, models trained on NM sequences are applied directly to BG (backpack-wearing) sequences without retraining—a scenario mimicking real-world variability in clothing and posture.

Table 3 shows that, although all methods lose accuracy under domain shift, our approach retains both the highest mean accuracy (87.3%) and the lowest variance (27.43), outperforming even specialized models such as LTP (77.7% mean, 124.9 variance). This stems from the Multi-Information Exchange (MIE) module's interaction and integration of appearance and gait features, which guides and strengthens collaborative learning between the two modalities while mitigating the inherent discrepancies between them, thereby achieving deeper and more effective feature extraction. The result supports the robustness of our framework to environmental perturbations, a key requirement for scalable and trustworthy pedestrian recognition systems.

5. Discussion

In Section 4 (Experiments and Analysis), this paper validates the rationality of the proposed model structure through five sets of experiments. In the MIE information integration experiment, four operations were compared: element-wise addition, element-wise multiplication, average pooling, and concatenation with dimensionality reduction. Element-wise addition performed best, as it preserves the original information of both modalities and enhances the overall feature representation by accumulating their respective advantages, thereby integrating the complementary information of the two modalities more effectively.

In the MIE partition experiment, MIE modules with different partition sizes were placed after layer2 and layer4 of the backbone network to validate the rationale of the partition settings. The experiment demonstrated that the 8-2 partition configuration adopted in this paper balances signal against noise appropriately, filtering out noise while ensuring the capture and enhancement of key features.

In the MIE attention experiment, several classical attention mechanisms were selected for testing. The experiment showed that the performance was optimal when using AAOE, indicating that the AAOE module can more effectively capture and focus on the differences from different perspectives, thereby improving recognition accuracy. Moreover, an ablation experiment of the overall model structure was conducted, and the results showed that the model performed best when using the MIE module in both layer2 and layer4, allowing RGB and GEI to effectively interact with each other at shallow and deep features, respectively, further proving the rationality of the model structure proposed in this paper.

Finally, comparative experiments with state-of-the-art methods showed that the proposed method achieves a higher mean accuracy and a smaller variance, which we attribute to the two-stage information interaction of the MIE modules, and is therefore more stable and reliable under viewpoint changes. In the cross-domain experiments, re-identification accuracy decreased significantly for all methods, but the proposed algorithm still achieved the best mean and variance of accuracy, which supports its generalization capability and indicates that the MIE module helps the model remain relatively stable.

6. Conclusions

This paper presents a novel attention-enhanced network for person re-identification that leverages synergistic interactions between appearance and gait modalities. By designing a multi-information exchange (MIE) module informed by adaptive attention mechanisms, our framework dynamically aligns and fuses heterogeneous features across varying viewpoints. Crucially, we introduce a mechanism that maps angular cues into spatially aware weight maps, thereby optimizing the signal-to-noise balance in fused representations.

Extensive experiments on the CASIA-B dataset confirm that our method surpasses existing state-of-the-art solutions in both accuracy and robustness under significant viewpoint changes: across viewpoints, the mean accuracy reached 94.9% and the variance was reduced to 4.199. Moreover, cross-domain evaluations demonstrate strong generalization capabilities, validating the practical relevance of our approach.

Despite the significant progress made by the proposed method in handling viewpoint variations, it still exhibits certain limitations. Specifically, an analysis of failure cases indicates that the current importance weight estimation, which primarily emphasizes appearance features, causes re-identification failures when pedestrian appearances are overly similar (e.g., identically colored clothing). Consequently, future research should focus on enhancing the model's ability to discern subtle differences and on strengthening the expressive power of gait features.

Furthermore, deeper consideration should be given to effectively handling factors such as illumination and occlusion in future studies. Concurrently, exploring the integration of diverse image types (e.g., infrared, low-light) and investigating more effective ways to combine various biometric cues to boost recognition performance are promising avenues. Finally, the design of lightweight network models to reduce computational costs and resource consumption represents a crucial direction for future work.

Author Contributions

Conceptualization, Z.Y. and L.C.; methodology, Z.Y.; software, Y.C.; validation, Z.Y., L.C. and Y.C.; formal analysis, Z.Y.; investigation, Y.C.; resources, L.C.; data curation, Z.Y.; writing—original draft preparation, Z.Y.; writing—review and editing, Z.Y.; visualization, M.Y.; supervision, H.S.; project administration, H.X.; funding acquisition, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Xiangyu Zhao was employed by China Aerospace Science and Technology Corporation. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Zheng, L.; Yang, Y.; Hauptmann, A.G. Person re-identification: Past, present and future. arXiv 2016, arXiv:1610.02984.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C.H. Deep learning for person re-identification: A survey and outlook. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 2872–2893.

- Qian, X.; Fu, Y.; Xiang, T.; Wang, W.; Qiu, J.; Wu, Y.; Jiang, Y.-G.; Xue, X. Pose-normalized image generation for person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 650–667.

- Liu, F.; Zhang, L. View confusion feature learning for person re-identification. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6639–6648.

- Zhu, Z.; Jiang, X.; Zheng, F.; Guo, X.; Huang, F.; Sun, X.; Zheng, W. Aware loss with angular regularization for person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13114–13121.

- Lu, X.; Li, X.; Sheng, W.; Ge, S.S. Long-Term Person Re-Identification Based on Appearance and Gait Feature Fusion Under Covariate Changes. Processes 2022, 10, 770–789.

- Yu, S.; Tan, D.; Tan, T. A framework for evaluating the effect of view angle, clothing and carrying condition on gait recognition. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 4, pp. 441–444.

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Deep metric learning for person re-identification. In Proceedings of the 2014 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 34–39.

- Li, W.; Zhao, R.; Xiao, T.; Wang, X. DeepReID: Deep filter pairing neural network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 152–159.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- McLaughlin, N.; Del Rincon, J.M.; Miller, P. Recurrent convolutional network for video-based person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1325–1334.

- Wu, L.; Shen, C.; Hengel, A. Deep recurrent convolutional networks for video-based person re-identification: An end-to-end approach. arXiv 2016, arXiv:1606.01609.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Wu, A.; Zheng, W.-S.; Yu, H.-X.; Gong, S.; Lai, J. RGB-infrared cross-modality person re-identification. In Proceedings of the International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 5390–5399.

- Wan, L.; Sun, Z.; Jing, Q.; Chen, Y.; Lu, L.; Li, Z. G2DA: Geometry-guided dual-alignment learning for RGB-infrared person re-identification. Pattern Recognit. 2023, 135, 109150.

- Wu, A.; Zheng, W.S.; Lai, J.H. Robust depth-based person re-identification. IEEE Trans. Image Process. 2017, 26, 2588–2603.

- Li, S.; Xiao, T.; Li, H.; Zhou, B.; Yue, D.; Wang, X. Person search with natural language description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5187–5196.

- Liu, J.; Zha, Z.-J.; Hong, R.; Wang, M.; Zhang, Y. Deep adversarial graph attention convolution network for text-based person search. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 665–673.

- Yu, S.; Chen, H.; Garcia Reyes, E.B.; Poh, N. GaitGANv2: Invariant gait feature extraction using generative adversarial networks. Pattern Recognit. 2019, 87, 179–189.

- Sepas-Moghaddam, A.; Etemad, A. Deep gait recognition: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 264–284.

- Wu, Z.; Huang, Y.; Wang, L.; Wang, X.; Tan, T. A comprehensive study on cross-view gait based human identification with deep CNNs. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 209–226.

- Yu, S.; Chen, H.; Reyes, E.B.G.; Poh, N. GaitGAN: Invariant gait feature extraction using generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR Workshops), Honolulu, HI, USA, 21–26 July 2017; pp. 30–37.

- Fan, C.; Peng, Y.; Cao, C.; Liu, X.; Hou, S.; Chi, J.; Huang, Y.; Li, Q.; He, Z. Gaitpart: Temporal part-based model for gait recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14213–14221.

- Hou, S.; Cao, C.; Liu, X.; Huang, Y. Gait lateral network: Learning discriminative and compact representations for gait recognition. In Proceedings of the Computer Vision–ECCV 2020, Glasgow, UK, 23–28 August 2020; pp. 382–398.

- Sepas-Moghaddam, A.; Etemad, A. View-invariant gait recognition with attentive recurrent learning of partial representations. IEEE Trans. Biom. Behav. Identity Sci. 2020, 3, 124–137.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent models of visual attention. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 2204–2212.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.; Salakhudinov, R.; Zemel, R.; Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Vedaldi, A. Gather-excite: Exploiting feature context in convolutional neural networks. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 3–8 December 2018; pp. 9423–9433.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local Neural Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Leng, J.; Wang, H.; Gao, X.; Zhang, Y.; Wang, Y.; Mo, M. Where to look: Multi-granularity occlusion aware for video person re-identification. Neurocomputing 2023, 536, 137–151.

- Gao, J.; Nevatia, R. Revisiting temporal modeling for video-based person reid. arXiv 2018, arXiv:1805.02104.

- Wang, Y.; Song, C.; Huang, Y.; Wang, Z.; Wang, L. Learning view invariant gait features with two-stream GAN. Neurocomputing 2019, 339, 245–254.

- Wang, X.; Doretto, G.; Sebastian, T.; Rittscher, J.; Tu, P. Shape and appearance context modeling. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–8.

- Mogan, J.N.; Lee, C.P.; Lim, K.M.; Muthu, K.S. VGG16-MLP: Gait Recognition with Fine-Tuned VGG-16 and Multilayer Perceptron. Appl. Sci. 2022, 12, 7639.

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- Cai, Y.; Qin, P.; Zeng, J.; Jin, Z.; Qin, J.; Zhai, S. Research on Person Re-Identification Method for Large-Angle Viewpoint Differences. Comput. Eng. 2024, 50, 330–341.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).