An Adaptive JPEG Steganography Algorithm Based on the UT-GAN Model

Abstract

1. Introduction

- Combining non-data-driven gradient generation with UT-GAN preprocessing, the scheme reduces reliance on datasets and enhances the robustness of adversarial stego images.

- Presenting a new gradient calculator that computes adversarial gradients in place of deep-learning-based steganalyzers. Tailored to DCTR steganalysis, the gradient calculator serves as an approximate neural network for extracting stego features.

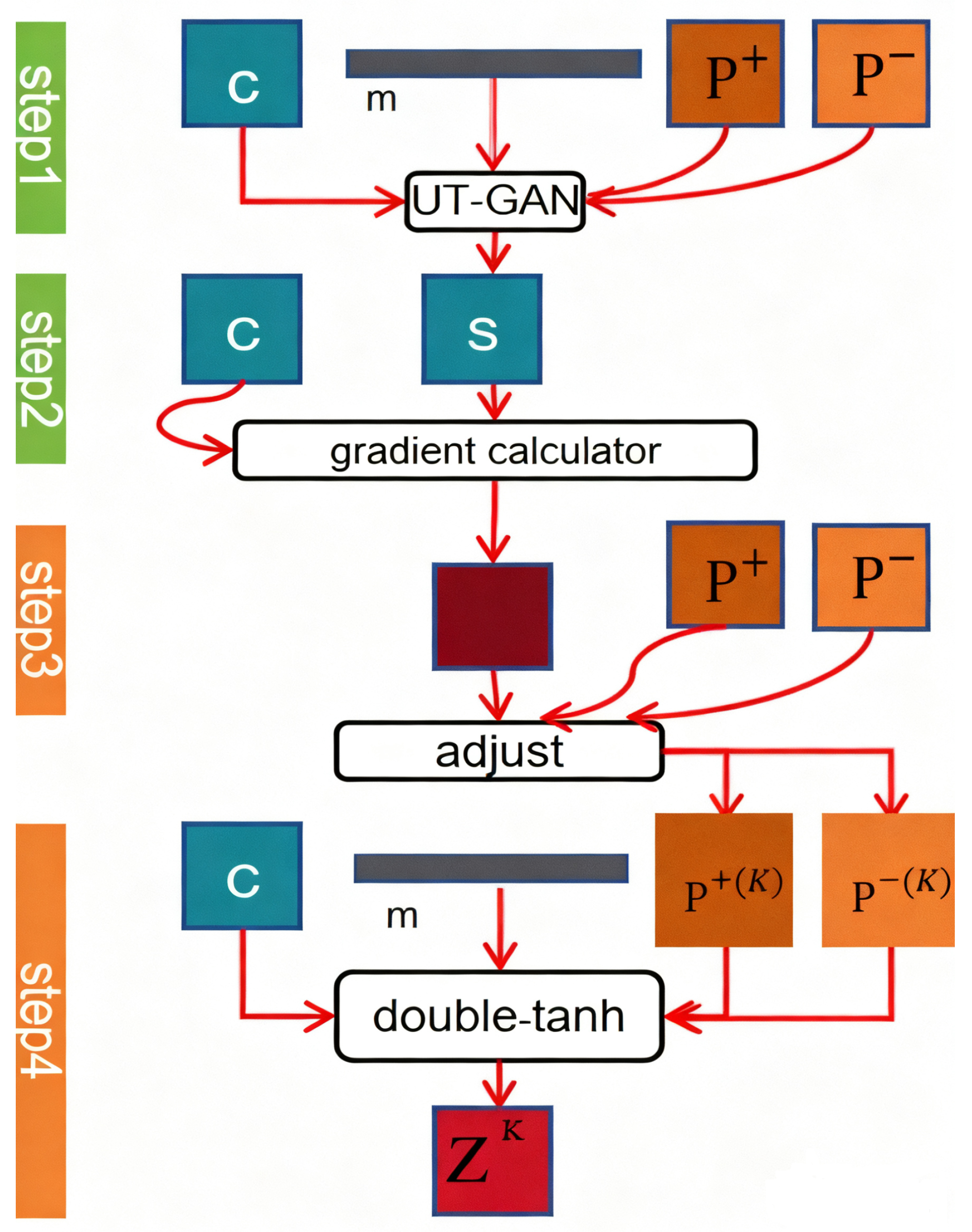

- Striking a balance between security and implementation efficiency. The UT-GAN adversarial framework is used to generate adversarial stego images, and the embedding cost function is refined iteratively with an embedding simulator.
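The contributions above mention an embedding simulator without specifying it. As an assumption, the sketch below follows the standard payload-limited simulator used with STC-based schemes: each coefficient is changed by +1 or -1 with Gibbs probabilities derived from its costs, and the multiplier λ is found by bisection so that the total ternary entropy equals the payload. GAN-based schemes such as UT-GAN need a differentiable stand-in for the random draw during training; that part is omitted, and all function names are hypothetical.

```python
import math
import random


def change_probs(rho_plus, rho_minus, lam):
    """Gibbs change probabilities (p_plus, p_minus) for ternary +1/0/-1 embedding."""
    probs = []
    for rp, rm in zip(rho_plus, rho_minus):
        ep = math.exp(-lam * rp)
        em = math.exp(-lam * rm)
        z = 1.0 + ep + em  # the "no change" option has zero cost
        probs.append((ep / z, em / z))
    return probs


def ternary_entropy(probs):
    """Total payload in bits carried by the given change probabilities."""
    total = 0.0
    for pp, pm in probs:
        for p in (pp, pm, 1.0 - pp - pm):
            if p > 0.0:
                total -= p * math.log2(p)
    return total


def find_lambda(rho_plus, rho_minus, payload_bits, iters=60):
    """Bisection on lambda: larger lambda -> fewer changes -> lower entropy."""
    def entropy_at(lam):
        return ternary_entropy(change_probs(rho_plus, rho_minus, lam))

    hi = 1.0
    for _ in range(100):  # grow the bracket; capped in case the payload is unreachable
        if entropy_at(hi) <= payload_bits:
            break
        hi *= 2.0
    lo = 0.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if entropy_at(mid) > payload_bits:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def simulate_embedding(coeffs, rho_plus, rho_minus, payload_bits, seed=0):
    """Return simulated stego coefficients: cover plus random {-1, 0, +1} changes."""
    lam = find_lambda(rho_plus, rho_minus, payload_bits)
    rng = random.Random(seed)
    stego = []
    for c, (pp, pm) in zip(coeffs, change_probs(rho_plus, rho_minus, lam)):
        u = rng.random()
        if u < pp:
            stego.append(c + 1)
        elif u < pp + pm:
            stego.append(c - 1)
        else:
            stego.append(c)
    return stego
```

With symmetric costs, pass the same list as `rho_plus` and `rho_minus`; asymmetric costs (for example, after a gradient-based adjustment) plug in unchanged.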

2. Related Work

3. Proposed Approach

3.1. Optimal Selection of Compliant Stego Images

- Indirect Performance Benchmarking: Section 4 evaluates the security of SE against a comprehensive suite of modern steganalyzers (DCTR, SRM, GFR, XuNet, SRNet, CovNet). The consistently high detection error rates across these diverse detectors demonstrate SE’s robust anti-detection capability, which, given the dataset-dependent nature of ADV-EMB, conceptually surpasses the security level that framework can be expected to achieve.

- Theoretical Superiority Validation: The fundamental advantages of SE’s design, particularly its non-data-driven gradient calculator and universal selection criterion, provide theoretical grounding for its superior generalizability and robustness compared with ADV-EMB’s model-specific approach.

- Future Comparative Commitment: We acknowledge the value of empirical comparison and commit to conducting a comprehensive experimental analysis against ADV-EMB in subsequent work, pending the development of a standardized implementation benchmark.
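The detection error rates cited above are, assuming the usual steganalysis convention, the minimal average error Pe (the symbol used in the NN-DCTR results table): Pe = min over thresholds of (P_FA + P_MD)/2 under equal priors, so 50% means the detector does no better than random guessing. A minimal sketch that computes it from detector scores; the function name is hypothetical.

```python
def detection_error(cover_scores, stego_scores):
    """Pe = min over thresholds of (P_FA + P_MD) / 2, assuming equal priors.

    A sample is classified as stego when its score is >= the threshold,
    so higher scores mean "more likely stego"."""
    if not cover_scores or not stego_scores:
        raise ValueError("both score lists must be non-empty")
    # Candidate thresholds: every observed score, plus +inf (flag nothing).
    thresholds = sorted(set(cover_scores) | set(stego_scores)) + [float("inf")]
    best = 0.5
    for t in thresholds:
        p_fa = sum(s >= t for s in cover_scores) / len(cover_scores)  # false alarms
        p_md = sum(s < t for s in stego_scores) / len(stego_scores)   # missed detections
        best = min(best, 0.5 * (p_fa + p_md))
    return best
```

Multiplying the result by 100 gives the percentage form used in the tables.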

3.2. Gradient Calculator

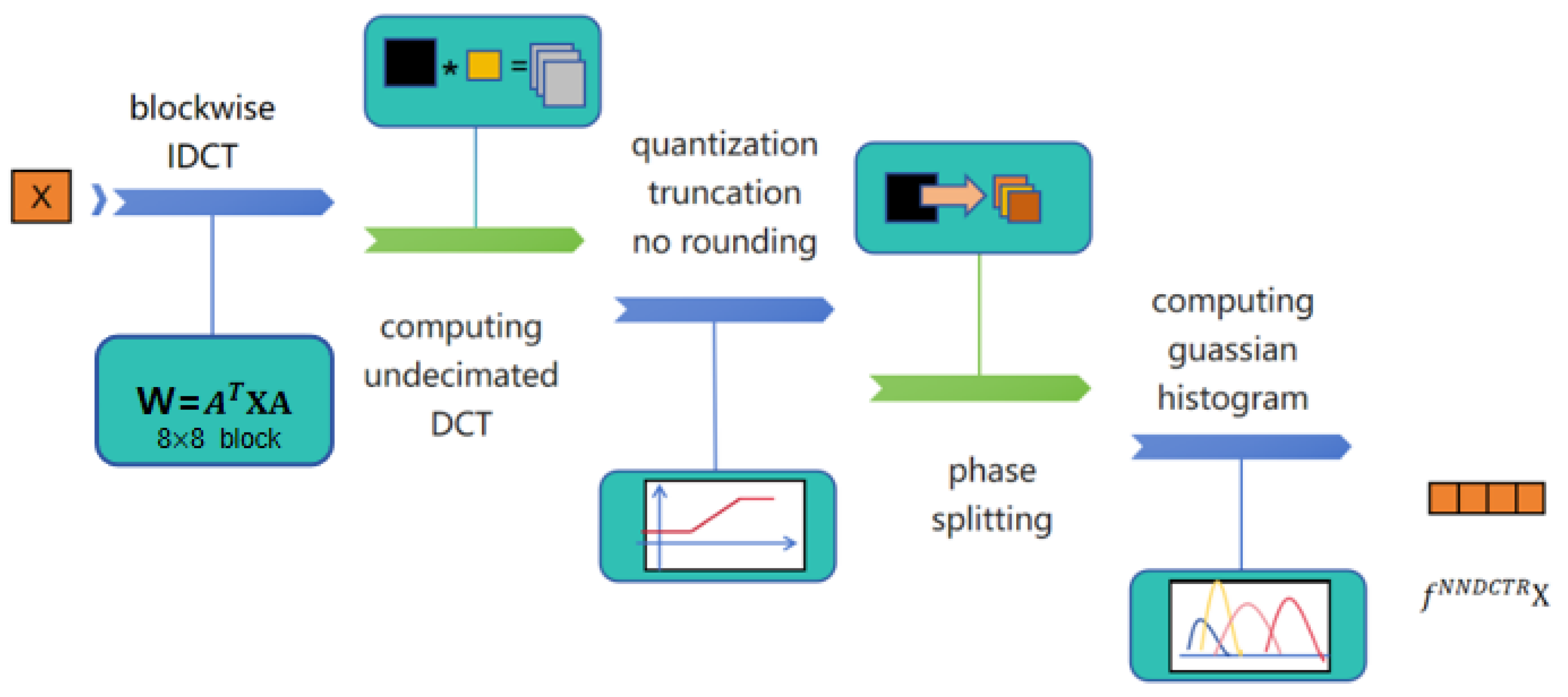
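The gradient calculator replaces a deep-learning steganalyzer as the source of adversarial gradients, but how those gradients update the embedding costs is not detailed here. One common pattern, loosely modelled on ADV-EMB, makes the costs asymmetric according to the gradient sign, so that the change direction which lowers the detector's stego score becomes cheaper. The sketch assumes `grad` is the gradient of a stego score with respect to each DCT coefficient and uses a scaling factor `alpha`; both names and the exact rule are illustrative assumptions, not the paper's method.

```python
def adjust_costs(rho, grad, alpha=2.0):
    """Make symmetric costs asymmetric from the sign of an adversarial gradient.

    rho   : symmetric costs rho_i (rho_i^+ = rho_i^- = rho_i before the update)
    grad  : hypothetical gradient of the detector's stego score w.r.t. each coefficient
    alpha : cost scaling factor, must be > 1 (2.0 is an illustrative default)
    Returns (rho_plus, rho_minus).
    """
    if alpha <= 1.0:
        raise ValueError("alpha must be greater than 1")
    rho_plus, rho_minus = [], []
    for r, g in zip(rho, grad):
        if g > 0:
            # Increasing the coefficient raises the stego score: make -1 cheaper.
            rho_plus.append(r)
            rho_minus.append(r / alpha)
        elif g < 0:
            # Increasing the coefficient lowers the stego score: make +1 cheaper.
            rho_plus.append(r / alpha)
            rho_minus.append(r)
        else:
            # No gradient information: keep the costs symmetric.
            rho_plus.append(r)
            rho_minus.append(r)
    return rho_plus, rho_minus
```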

3.3. NN-DCTR
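NN-DCTR is described in the introduction as an approximate neural network for extracting DCTR-style stego features. The first stage of DCTR correlates the decompressed image with the 64 8×8 DCT basis patterns, which amounts to a fixed 64-channel convolutional layer, and then quantizes the absolute residuals with a step q and truncates them at T. The sketch below implements only that stage in plain Python; the per-phase histograms are omitted, the defaults q = 4 and T = 4 are illustrative placeholders rather than the paper's settings, and how NN-DCTR makes rounding and histogramming differentiable is not specified here.

```python
import math


def dct_basis(k, l):
    """8x8 orthonormal 2-D DCT-II basis pattern B^(k,l) as a list of rows."""
    wk = 1.0 / math.sqrt(2.0) if k == 0 else 1.0
    wl = 1.0 / math.sqrt(2.0) if l == 0 else 1.0
    return [[wk * wl / 4.0
             * math.cos(math.pi * k * (2 * m + 1) / 16.0)
             * math.cos(math.pi * l * (2 * n + 1) / 16.0)
             for n in range(8)]
            for m in range(8)]


def filter_bank():
    """The 64 kernels, i.e. the frozen weights of a 64-channel 8x8 conv layer."""
    return [dct_basis(k, l) for k in range(8) for l in range(8)]


def residual(image, kernel):
    """'Valid' 2-D correlation of a grayscale image (list of rows) with an 8x8 kernel.

    Correlation instead of convolution is harmless here: flipping a DCT basis
    pattern only changes its sign, and the next step takes absolute values."""
    h, w = len(image), len(image[0])
    return [[sum(kernel[m][n] * image[i + m][j + n]
                 for m in range(8) for n in range(8))
             for j in range(w - 7)]
            for i in range(h - 7)]


def quantize_truncate(u, q=4.0, t=4):
    """Quantize |u| with step q and truncate at T (placeholder defaults)."""
    return [[min(round(abs(v) / q), t) for v in row] for row in u]
```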

3.4. Linear Mapper

3.5. The SE Implementation

4. Experiments

4.1. Experimental Setup

- J-UNIWARD: A traditional steganographic algorithm that adopts the Syndrome Trellis Code (STC) method for information embedding.

- UT-GAN: A steganographic model based on a generative adversarial network (GAN); in this work it also serves as the preprocessing stage of the proposed SE method.

- SPAR-RL: An adaptive steganographic method based on reinforcement learning that adjusts its embedding strategy dynamically.

- DBS: A generative steganographic method built on diffusion models that uses the iterative denoising process to carry hidden information.

4.2. Performance Evaluation of NN-DCTR Detection

4.3. Feature–Space Distance Analysis

4.4. Performance Under Different Quality Factors

4.5. Non-Adversarial Steganalysis Testing

4.6. Adversarial Steganalysis Testing

4.7. Computational Cost Analysis

- Training Time (GPU Hours): The total time required to train the models to convergence.

- Inference Time (ms per image): The average time required to process a single image, including both the UT-GAN preprocessing and the gradient calculator optimization.

- Computational Complexity (GFLOPs): Measured using a deep learning profiler (TensorFlow Profiler).

- Peak GPU Memory Usage (GB): The maximum GPU memory consumed during the inference phase.
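The per-image inference time above is an average over a set of images. A minimal timing harness in that spirit is sketched below; `process` stands for whatever callable runs the UT-GAN preprocessing and gradient-calculator step (a hypothetical name), and a few warm-up calls are excluded from the average. For GPU work the callable must block until the device has finished, otherwise asynchronous execution makes the measured times too short.

```python
import statistics
import time


def mean_inference_ms(process, images, warmup=2):
    """Average wall-clock time per image, in milliseconds.

    process : hypothetical callable that runs the full pipeline on one image;
              for GPU code it must wait for the device to finish before returning.
    warmup  : number of initial calls excluded from the average (caches, lazy init).
    """
    if not images:
        raise ValueError("need at least one image")
    for img in images[:warmup]:
        process(img)
    times_ms = []
    for img in images:
        start = time.perf_counter()
        process(img)
        times_ms.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(times_ms)
```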

- J-UNIWARD, as a conventional non-trainable method, exhibits the lowest overhead but also the lowest security performance (as shown in previous sections).

- DBS, the diffusion-based generative method, achieves high security but at an extremely high computational cost, making it less practical for real-time applications due to its iterative denoising process.

- Our SE method strikes an effective balance. It has the same training cost as UT-GAN (48.2 GPU hours) because it reuses the same pretrained network. Its inference time is moderately higher than that of UT-GAN and SPAR-RL (22.1 ms vs. 15.3 ms and 18.7 ms per image), because the added gradient calculator is a relatively lightweight network. SE's complexity (1.05 GFLOPs) and peak memory (2.1 GB) are comparable to those of UT-GAN (0.85 GFLOPs, 1.8 GB) and SPAR-RL (1.12 GFLOPs, 2.2 GB).
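The overhead comparison can be checked with simple arithmetic on the inference times in the computational-cost table: relative to UT-GAN and SPAR-RL, SE adds about 44% and 18% per image, while needing about 82% less time than DBS. A small sketch (the helper name is hypothetical):

```python
# Inference times in ms per image, copied from the computational-cost table.
INFERENCE_MS = {"UT-GAN": 15.3, "SPAR-RL": 18.7, "DBS": 125.6, "SE": 22.1}


def relative_overhead(method, baseline, table=INFERENCE_MS):
    """Extra inference time of `method` over `baseline`, in percent."""
    return 100.0 * (table[method] - table[baseline]) / table[baseline]


for base in ("UT-GAN", "SPAR-RL", "DBS"):
    print(f"SE vs {base}: {relative_overhead('SE', base):+.1f}%")
```

The loop prints `SE vs UT-GAN: +44.4%`, `SE vs SPAR-RL: +18.2%` and `SE vs DBS: -82.4%`.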

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Aspect | ADV-EMB | SE (Proposed) |
|---|---|---|
| Adversarial model | CNN-based steganalyzer | Gradient calculator |
| Data dependency | Dataset-specific | Non-data-driven |
| Selection criterion | Minimize cost | Minimize cover–stego distance |
| Gradient source | Pretrained CNN | Feature-based (DCTR-inspired) |
| Transferability | Limited to known models | High (generalizable) |
| Training overhead | High (requires labeled data) | Low (no dataset training) |
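The table gives SE's selection criterion as minimizing the cover–stego distance. Assuming the distance is Euclidean and is measured between steganalysis feature vectors (for example, DCTR-style features) of the cover and of each candidate stego image, the selection step reduces to an argmin over candidates. The names below are hypothetical.

```python
import math


def select_stego(cover_features, candidates):
    """Return (stego_id, distance) for the candidate closest to the cover.

    cover_features : feature vector of the cover image
    candidates     : list of (stego_id, feature_vector) pairs, one per candidate stego image
    """
    if not candidates:
        raise ValueError("need at least one candidate stego image")
    best_id, best_features = min(
        candidates, key=lambda c: math.dist(cover_features, c[1])
    )
    return best_id, math.dist(cover_features, best_features)
```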

| Steganalyzer | Steganography | Pe (rate 0.1) | Pe (rate 0.2) | Pe (rate 0.3) | Pe (rate 0.4) |
|---|---|---|---|---|---|
| NN-DCTR | J-UNIWARD | 48.7 | 46.1 | 41.6 | 38.7 |
| | UERWARD | 45.3 | 43.5 | 41.1 | 37.4 |
| DCTR | J-UNIWARD | 48.2 | 47.1 | 43.1 | 39.8 |
| | UERWARD | 46.0 | 44.2 | 42.2 | 38.8 |

| Steganalyzer | Method | QF = 50 | QF = 75 | QF = 85 | QF = 95 |
|---|---|---|---|---|---|
| SRNet | J-UNIWARD | 25.1 | 22.1 | 20.5 | 18.9 |
| | UT-GAN | 35.8 | 33.1 | 31.7 | 29.2 |
| | SPAR-RL | 36.5 | 34.1 | 32.8 | 30.5 |
| | DBS | 37.1 | 34.5 | 33.2 | 31.6 |
| | SE | 38.5 | 35.2 | 34.0 | 30.8 |
| XuNet | J-UNIWARD | 36.2 | 39.0 | 40.1 | 41.5 |
| | UT-GAN | 40.5 | 39.8 | 39.2 | 38.5 |
| | SPAR-RL | 41.8 | 40.8 | 38.4 | 39.3 |
| | DBS | 42.0 | 40.5 | 39.8 | 39.0 |
| | SE | 41.2 | 40.4 | 40.3 | 39.5 |
| CovNet | J-UNIWARD | 28.5 | 28.7 | 29.2 | 30.1 |
| | UT-GAN | 31.2 | 29.9 | 30.5 | 31.8 |
| | SPAR-RL | 33.8 | 32.5 | 31.9 | 32.4 |
| | DBS | 34.5 | 33.2 | 32.8 | 33.1 |
| | SE | 35.1 | 33.8 | 33.5 | 33.9 |

| Steganalyzer | Steganography | Embedding rate 0.1 | Embedding rate 0.2 | Embedding rate 0.3 | Embedding rate 0.4 | Average |
|---|---|---|---|---|---|---|
| DCTR | J-UNIWARD | 47.2 | 41.7 | 35.2 | 28.2 | 38.1 |
| | ASDL-GAN | 41.4 | 38.7 | 34.3 | 28.4 | 35.7 |
| | UT-GAN | 58.2 | 53.1 | 49.4 | 44.6 | 51.3 |
| | SPAR-RL | 59.3 | 54.5 | 50.6 | 46.7 | 52.8 |
| | DBS | 59.5 | 54.0 | 50.9 | 46.2 | 52.7 |
| | SE | 58.7 | 57.9 | 51.1 | 47.3 | 53.8 |
| SRM | J-UNIWARD | 42.3 | 33.8 | 27.4 | 22.1 | 31.4 |
| | ASDL-GAN | 37.0 | 32.1 | 27.0 | 22.5 | 29.7 |
| | UT-GAN | 46.8 | 43.5 | 38.3 | 33.1 | 40.4 |
| | SPAR-RL | 48.1 | 44.8 | 39.1 | 34.1 | 41.5 |
| | DBS | 48.1 | 45.2 | 38.5 | 34.9 | 41.6 |
| | SE | 47.8 | 44.3 | 39.7 | 35.2 | 41.8 |
| GFR | J-UNIWARD | 45.2 | 37.7 | 29.3 | 21.6 | 33.5 |
| | ASDL-GAN | 41.5 | 32.1 | 28.7 | 19.7 | 30.5 |
| | UT-GAN | 47.2 | 42.2 | 35.6 | 31.8 | 39.2 |
| | SPAR-RL | 49.1 | 43.4 | 36.1 | 33.6 | 40.6 |
| | DBS | 48.8 | 44.3 | 35.4 | 33.5 | 40.4 |
| | SE | 48.5 | 44.1 | 37.4 | 32.9 | 40.7 |
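The Average column in the table above appears to be the arithmetic mean of the four embedding-rate columns. Checking the DCTR block under that assumption, every reported average matches the mean to within one-decimal rounding. A small sketch (names hypothetical):

```python
# DCTR block: values at embedding rates 0.1-0.4, then the reported average.
DCTR_ROWS = {
    "J-UNIWARD": ([47.2, 41.7, 35.2, 28.2], 38.1),
    "ASDL-GAN": ([41.4, 38.7, 34.3, 28.4], 35.7),
    "UT-GAN": ([58.2, 53.1, 49.4, 44.6], 51.3),
    "SPAR-RL": ([59.3, 54.5, 50.6, 46.7], 52.8),
    "DBS": ([59.5, 54.0, 50.9, 46.2], 52.7),
    "SE": ([58.7, 57.9, 51.1, 47.3], 53.8),
}


def averages_match(rows, tol=0.05):
    """Return the methods whose reported average differs from the mean by more than tol.

    tol = 0.05 is half a unit in the last reported decimal place."""
    mismatches = []
    for method, (values, reported) in rows.items():
        mean = sum(values) / len(values)
        if abs(mean - reported) > tol + 1e-9:  # small slack for floating-point error
            mismatches.append(method)
    return mismatches


print(averages_match(DCTR_ROWS))
```

For the DCTR block this prints an empty list.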

| Steganalyzer | Steganography | Embedding rate 0.1 | Embedding rate 0.2 | Embedding rate 0.3 | Embedding rate 0.4 | Average |
|---|---|---|---|---|---|---|
| XuNet | J-UNIWARD | 47.4 | 45.1 | 42.8 | 39.0 | 43.6 |
| | ASDL-GAN | 43.0 | 40.2 | 37.1 | 33.3 | 38.4 |
| | UT-GAN | 48.9 | 46.3 | 43.5 | 39.8 | 44.6 |
| | SPAR-RL | 48.7 | 47.2 | 43.7 | 40.8 | 45.1 |
| | DBS | 49.0 | 47.5 | 44.3 | 40.5 | 45.1 |
| | SE | 49.6 | 47.0 | 44.8 | 40.4 | 45.5 |
| SRNet | J-UNIWARD | 48.1 | 39.2 | 34.7 | 32.6 | 38.7 |
| | ASDL-GAN | 48.2 | 32.3 | 29.0 | 27.6 | 34.3 |
| | UT-GAN | 49.0 | 40.1 | 37.3 | 33.4 | 40.0 |
| | SPAR-RL | 49.3 | 37.5 | 35.6 | 33.4 | 39.0 |
| | DBS | 49.5 | 38.8 | 35.2 | 33.7 | 39.2 |
| | SE | 48.6 | 39.9 | 38.1 | 34.2 | 40.2 |
| CovNet | J-UNIWARD | 36.0 | 32.1 | 30.1 | 28.7 | 31.7 |
| | ASDL-GAN | 32.4 | 26.9 | 23.9 | 22.9 | 26.5 |
| | UT-GAN | 36.3 | 33.1 | 32.5 | 29.9 | 33.0 |
| | SPAR-RL | 38.1 | 33.4 | 29.9 | 28.5 | 32.4 |
| | DBS | 37.9 | 33.8 | 30.3 | 30.0 | 33.0 |
| | SE | 37.7 | 34.1 | 31.1 | 30.8 | 33.4 |

| Method | Training Time (GPU hrs) | Inference Time (ms/img) | Complexity (GFLOPs) | GPU Memory (GB) |
|---|---|---|---|---|
| J-UNIWARD | - | 12.5 | 0.02 | 0.1 |
| UT-GAN | 48.2 | 15.3 | 0.85 | 1.8 |
| SPAR-RL | 62.5 | 18.7 | 1.12 | 2.2 |
| DBS | 105.4 | 125.6 | 15.32 | 4.5 |
| SE (Ours) | 48.2 | 22.1 | 1.05 | 2.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tan, L.; Li, Y.; Zeng, Y.; Chen, P. An Adaptive JPEG Steganography Algorithm Based on the UT-GAN Model. Electronics 2025, 14, 4046. https://doi.org/10.3390/electronics14204046