Abstract

The rapid growth of Internet of Medical Things (IoMT) devices offers promising avenues for real-time, personalized healthcare while also introducing critical challenges related to data privacy, device heterogeneity, and deployment scalability. This paper presents E-DMFL, an Enhanced Distributed Multimodal Federated Learning framework designed to address these issues. Our approach combines systems analysis principles with intelligent model design, integrating PyTorch-based modular orchestration and TensorFlow-style data pipelines to enable multimodal edge-based training. E-DMFL incorporates gated attention fusion, differential privacy, Shapley-value-based modality selection, and peer-to-peer communication to facilitate secure and adaptive learning in non-IID environments. We evaluate the framework using the EarSAVAS dataset, which includes synchronized audio and motion signals from ear-worn sensors. E-DMFL achieves a test accuracy of 92.0% in just six communication rounds. The framework also supports energy-efficient and real-time deployment through quantization-aware training and battery-aware scheduling. These results demonstrate the potential of combining systems-level design with federated learning (FL) innovations to support practical, privacy-aware IoMT applications.

1. Introduction

The Internet of Medical Things (IoMT) enables real-time, personalized healthcare through multimodal streams from wearables and near-body sensors. Yet the same data richness that powers improved diagnostics introduces serious privacy, security, and operational risks. Centralized learning pipelines where raw data is pushed to the cloud struggle with legal and ethical constraints (e.g., HIPAA and GDPR), recurring breaches, and infrastructure bottlenecks [1]. The frequency and scale of recent incidents underscore a structural weakness of centralized aggregation for sensitive clinical data [1].

Federated learning (FL) mitigates these risks by keeping raw data local while training global models. However, standard FL assumptions regarding homogeneous clients, unimodal inputs, and synchronized participation rarely hold in IoMT. Gradient updates can still leak information, client hardware is constrained, connectivity is bursty, and sensor availability is non-stationary. In practice, health datasets are heterogeneous and often stored in diverse formats (e.g., HDF5, DICOM, or HL7), complicating end-to-end deployment.

Despite recent advances in multimodal federated learning (reviewed in Section 2), several critical gaps persist for IoMT deployment: convergence for non-IID data with intermittent clients, resilient handling of multiple missing modalities, integrated privacy with formal guarantees, end-to-end efficiency, and practical deployment on resource-constrained hardware.

To address these gaps, we propose E-DMFL, an Enhanced Distributed Multimodal Federated Learning framework for real-world IoMT:

- Adaptive multimodal architecture: gated/attention fusion with Shapley-based modality selection and per-client adaptation, resilient to missing or intermittent sensors and heterogeneous formats.

- Privacy mechanism: DP with norm clipping integrated with secure aggregation, resisting inversion and membership inference while preserving utility.

- Efficiency: Quantization-aware training, battery- and bandwidth-aware scheduling, and sliding-window low-latency inference for resource-constrained earable/wearable devices.

We evaluate E-DMFL on the EarSAVAS vocal activity dataset with 42 clients across eight activity classes. The experimental results in Section 5 compare convergence speed, communication efficiency, privacy protection, and missing modality robustness against baseline federated learning approaches.

2. Related Works

2.1. Multimodal Federated Learning

Multimodal federated learning extends traditional FL to heterogeneous data sources, improving predictive performance through complementary information fusion. FedMM [2] trains separate modality-specific encoders on distributed IoMT data with federated averaging aggregation, achieving 12–18% accuracy improvements over unimodal baselines on stationary medical datasets. However, the architecture assumes that all modalities are always available and requires synchronized client participation, which is impractical for IoMT, where sensors fail intermittently (e.g., battery depletion or connectivity loss).

CAR-MFL [3] addresses missing modalities through cross-modal augmentation using retrieval from small public datasets, improving robustness when up to 40% of modalities are absent. While effective for static missingness patterns, the retrieval mechanism incurs 2–3× communication overhead compared to standard FL and requires centralized public data, which is problematic under HIPAA/GDPR constraints that prohibit external data dependencies for medical applications.

MAFed [4] introduces dynamic modality weighting based on real-time data quality assessment, achieving 8–15% improvements over static fusion. However, quality estimation requires 5–7 additional client–server negotiation rounds per training cycle, increasing total communication by 180–240%. For bandwidth-constrained IoMT deployments (typical uplink: 5–20 Mbps), this overhead is prohibitive.

These approaches reveal a fundamental tradeoff: existing multimodal FL methods either sacrifice robustness to missing data (FedMM), increase communication costs (MAFed, CAR-MFL), or assume data availability patterns that do not reflect real IoMT conditions.

2.2. Privacy-Preserving Federated Learning

Differential privacy (DP) has emerged as the standard for formal privacy guarantees in FL. DP-FedAvg [5] adds calibrated Gaussian noise () to clipped gradients, achieving $(\varepsilon, \delta)$-DP while maintaining convergence. Kaissis et al. [6] extend this to medical imaging, demonstrating that preserves diagnostic utility (accuracy degradation < 3%) while reducing reconstruction attack success from 87% to 12%.

DP-FLHealth [7] applies DP to wearable health monitoring with client-level noise injection, achieving privacy protection against membership inference attacks (attack accuracy reduced from 78% to 52%). However, the framework is strictly unimodal, processing only accelerometer data. When extended naively to multimodal settings, uniform noise injection across modalities disrupts cross-modal correlations, and audio–motion synchronization features degrade by 40–60% under , as shown by Thrasher et al. [8].

Cryptographic approaches like secure multi-party computation (SMPC) and homomorphic encryption (HE) provide stronger guarantees but introduce substantial overhead. SMPC requires 3–5 communication rounds per aggregation with 10–50× bandwidth inflation, while HE incurs 100–1000× computational costs [9]. For resource-constrained IoMT devices (ARM Cortex-A class, 2–4 GB RAM), these overheads render cryptographic-only approaches impractical for real-time monitoring.

The core gap: existing privacy mechanisms either apply only to unimodal data (DP-FLHealth), degrade multimodal performance through uniform noise (DP-FedAvg extensions), or impose prohibitive computational costs (SMPC, HE).

2.3. Communication-Efficient Federated Learning

Communication bottlenecks dominate FL training costs, particularly over wireless IoMT networks. mmFedMC [10] jointly optimizes client and modality selection using a greedy algorithm that estimates marginal utility, reducing communication by 60–70% compared to full participation. However, utility estimation assumes that modalities contribute independently; when audio–motion synchronization or cross-modal features dominate, this assumption leads to 15–25% accuracy degradation in multimodal scenarios.

FedMBridge [11] reduces cross-modal communication through bridgeable connections, transmitting only transformed latent representations instead of full feature vectors. This achieves 3–5× compression ratios but requires pre-trained modality bridges, introducing cold-start problems for new clients or modalities. The approach also lacks mechanisms for handling missing modalities during inference.

EMFed [12] focuses on energy-aware client selection for battery-constrained devices, using predicted energy consumption to filter participants. While reducing energy costs by 40–50%, EMFed does not address data quality heterogeneity or modality importance; clients with critical but rare modalities may be excluded, degrading global model performance by 10–20% on imbalanced datasets.

These methods demonstrate that aggressive communication reduction without considering multimodal structure (mmFedMC), requiring pre-trained components (FedMBridge), or prioritizing energy over data utility (EMFed) leads to accuracy–efficiency tradeoffs unsuitable for medical applications where both privacy and diagnostic accuracy are non-negotiable.

2.4. Federated Learning for IoMT

IoMT-specific FL approaches address domain constraints like extreme device heterogeneity and strict latency requirements. FedHealth [13] employs model personalization through fine-tuned local heads, achieving 8–12% improvements over global models on patient-specific prediction tasks. However, the framework is unimodal (ECG only) and lacks privacy mechanisms beyond SSL/TLS transport encryption, which is insufficient against gradient inversion attacks [6].

HeteroFL [14] enables heterogeneous client participation through flexible model architectures (variable width/depth), accommodating devices with 2–16 GB RAM. While addressing computational heterogeneity, HeteroFL does not integrate privacy protections and assumes unimodal image data; extensions to multimodal time-series (audio, motion, and physiological signals) encounter synchronization and alignment challenges.

FedFocal [15,16] addresses class imbalance through focal loss weighting (), improving performance on rare diseases by 15–30%. However, the approach is unimodal and does not handle modality-specific imbalance, where certain rare classes may only be distinguishable through specific modality combinations (e.g., rare respiratory conditions requiring synchronized audio–motion analysis).

The limitation pattern: IoMT-specific methods address individual constraints (personalization, heterogeneity, and class imbalance) but remain unimodal and omit either privacy guarantees or multimodal fusion mechanisms necessary for comprehensive health monitoring.

2.5. Research Gaps and Comparative Analysis

To systematically evaluate how prior work addresses IoMT deployment requirements, we compare representative methods across six critical dimensions: (1) modality heterogeneity handling: the ability to process diverse data types (audio, motion, and physiological signals) with varying sampling rates and dimensionality; (2) support for missing modalities; (3) communication optimization: techniques to reduce bandwidth consumption over wireless IoMT networks; (4) privacy enhancement and formal guarantees against inference attacks; (5) real healthcare data applicability: validation on medical rather than synthetic datasets; and (6) model generalization performance across heterogeneous client populations.

Table 1 summarizes this analysis. No prior work simultaneously addresses all six requirements.

Table 1. Systematic comparison of federated learning methods for IoMT across six deployment-critical dimensions. Checkmarks indicate that the method explicitly addresses the requirement; crosses indicate absence or insufficient consideration. E-DMFL is the only framework simultaneously addressing all requirements.

The comparison reveals three critical gaps. First, methods addressing missing modalities (CAR-MFL and FedMV) lack communication optimization and formal privacy, making them unsuitable for bandwidth-constrained, privacy-sensitive IoMT. Second, communication-efficient approaches (mmFedMC, FedMBridge, and EMFed) either omit privacy mechanisms or cannot handle dynamic modality availability. Third, privacy-focused methods (DP-FedAvg, DP-FLHealth, and Kaissis et al.) remain unimodal or lack communication efficiency.

E-DMFL integrates all six capabilities through three components: attention-based fusion, which maintains cross-modal correlations under differential privacy noise; Shapley-value modality selection, which reduces communication while handling missing sensors; and quantization-aware training, which enables deployment on resource-constrained ARM-based wearables. The following sections detail this technical design.

3. Methodology

3.1. Problem Formulation

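Consider a federated learning system with $N$ clients $\{C_1, \dots, C_N\}$, each possessing a local multimodal dataset:

$$D_i = \{(x_{i,j}^{a}, x_{i,j}^{m}, y_{i,j})\}_{j=1}^{n_i}$$

where $x_{i,j}^{a}$ and $x_{i,j}^{m}$ are input features from audio and motion sensors, and $y_{i,j}$ denotes the vocal activity class label.

The objective is to collaboratively learn a global model $f_\theta$ by minimizing weighted empirical risk:

$$\min_{\theta} F(\theta) = \sum_{i=1}^{N} \frac{n_i}{n} F_i(\theta), \qquad F_i(\theta) = \frac{1}{n_i} \sum_{j=1}^{n_i} \ell\big(f_\theta(x_{i,j}^{a}, x_{i,j}^{m}), y_{i,j}\big), \qquad n = \sum_{i=1}^{N} n_i$$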

The framework addresses three technical challenges: (1) ensuring $(\varepsilon, \delta)$-differential privacy through gradient clipping and calibrated noise injection, (2) reducing communication overhead through Shapley-based modality selection, and (3) maintaining model utility with missing or corrupted modalities through attention-based fusion.
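The data-proportional weighting in the objective can be illustrated with a few lines of Python. This is a minimal sketch, assuming each client reports its mean local loss and its sample count; the function name `weighted_risk` and the example numbers are hypothetical and do not come from the paper.

```python
from typing import Sequence


def weighted_risk(local_losses: Sequence[float], client_sizes: Sequence[int]) -> float:
    """Combine per-client mean losses with weights n_i / sum_j n_j."""
    if len(local_losses) != len(client_sizes) or not local_losses:
        raise ValueError("need one loss and one size per client")
    total = float(sum(client_sizes))
    return sum((n_i / total) * loss for loss, n_i in zip(local_losses, client_sizes))


# Hypothetical example: two clients holding 10 and 30 samples.
global_risk = weighted_risk([1.0, 0.5], [10, 30])
```

A client holding three times as much data contributes three times as much to the global objective, which is the behavior the weighted sum expresses.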

3.2. E-DMFL Architecture

E-DMFL employs a dual-encoder architecture with gated attention fusion for multimodal vocal activity recognition under federated constraints.

3.2.1. Modality-Specific Encoders

Audio encoder: Processes 16 kHz audio signals through log-mel spectrograms (128 bins, 80–8000 Hz). The encoder employs a 1D CNN (kernel size 7, 64 filters) for spectral feature extraction, LSTM (128 hidden units) for temporal modeling, and global max pooling, producing a 128-dimensional representation $h_a \in \mathbb{R}^{128}$.

Motion encoder: Processes 100 Hz IMU data (6-axis accelerometer + gyroscope) through a 1D CNN (32 filters, kernel size 5) for motion pattern extraction, multi-head self-attention (4 heads) for temporal dependencies, and global average pooling, producing a 64-dimensional representation $h_m \in \mathbb{R}^{64}$.

3.2.2. Attention-Based Fusion

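The gated attention mechanism combines modality features through learned weights. Feature concatenation followed by a sigmoid-activated transformation generates attention weights $\alpha$:

$$\alpha = \sigma\big(W_g [h_a; h_m] + b_g\big), \qquad h_{\text{fused}} = \alpha \odot [h_a; h_m]$$

where $h_a$ and $h_m$ are the audio and motion encoder outputs, $\sigma$ is the sigmoid function, $W_g$ and $b_g$ are the learned gate parameters, ⊙ denotes element-wise multiplication, and $[\cdot\,;\cdot]$ represents concatenation. The mechanism learns to emphasize informative modalities while suppressing noisy inputs through adaptive weighting.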
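The gating step can be sketched in plain Python. This is an illustrative sketch only: it assumes the gate is a single affine layer with a sigmoid applied to the concatenated 128-dimensional audio and 64-dimensional motion features, and that the fused vector is the gated concatenation. The names `gated_fusion`, `W_g`, and `b_g` and the random initialization are assumptions, not the authors' implementation.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gated_fusion(h_a, h_m, W_g, b_g):
    """Return (fused, alpha) with alpha = sigmoid(W_g @ [h_a; h_m] + b_g)
    and fused = alpha * [h_a; h_m] element-wise."""
    h = list(h_a) + list(h_m)  # concatenation [h_a; h_m]
    alpha = [sigmoid(sum(w * x for w, x in zip(row, h)) + b)
             for row, b in zip(W_g, b_g)]
    fused = [a * x for a, x in zip(alpha, h)]
    return fused, alpha


rng = random.Random(0)
h_a = [rng.gauss(0.0, 1.0) for _ in range(128)]  # stand-in audio features
h_m = [rng.gauss(0.0, 1.0) for _ in range(64)]   # stand-in motion features
dim = len(h_a) + len(h_m)
W_g = [[rng.gauss(0.0, 0.05) for _ in range(dim)] for _ in range(dim)]
b_g = [0.0] * dim

fused, alpha = gated_fusion(h_a, h_m, W_g, b_g)

# A missing motion sensor can be simulated by zeroing its features.
fused_missing, _ = gated_fusion(h_a, [0.0] * 64, W_g, b_g)
```

Because each gate value lies strictly between 0 and 1, the fusion can only attenuate a feature, never amplify it; zeroed motion features stay zero, so the classifier sees only the gated audio part.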

3.2.3. Shapley Value Modality Selection

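After local training, clients compute Shapley values to quantify each modality’s marginal contribution. For modality $m \in M$:

$$\phi_m = \sum_{S \subseteq M \setminus \{m\}} \frac{|S|!\,(|M| - |S| - 1)!}{|M|!} \big[v(S \cup \{m\}) - v(S)\big]$$

where $v(S)$ represents validation accuracy using modality subset $S$, and $M = \{\text{audio}, \text{motion}\}$. Modalities with $\phi_m < \tau$ are excluded from transmission:

$$M_{\text{sel}} = \{m \in M : \phi_m \ge \tau\}$$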

This threshold-based selection () reduces communication by 70–85% while maintaining model convergence.
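For two modalities the Shapley computation is small enough to enumerate exactly. The sketch below is illustrative: the accuracy table reuses the audio-only (85%), motion-only (80%), and multimodal (92%) figures reported elsewhere in the paper as stand-ins for per-client validation accuracy, assumes chance-level accuracy (1/8) for the empty subset, and uses a hypothetical threshold `tau = 0.05`.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values; `value` maps frozenset(subset) -> utility."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for r in range(len(others) + 1):
            for subset in combinations(others, r):
                s = frozenset(subset)
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                total += weight * (value[s | {p}] - value[s])
        phi[p] = total
    return phi


# Illustrative utilities (assumptions, see lead-in).
value = {
    frozenset(): 0.125,
    frozenset({"audio"}): 0.85,
    frozenset({"motion"}): 0.80,
    frozenset({"audio", "motion"}): 0.92,
}
phi = shapley_values(["audio", "motion"], value)

tau = 0.05  # hypothetical threshold, not the paper's value
selected = [m for m in ["audio", "motion"] if phi[m] >= tau]
```

The two values sum to the gain of the full modality set over the empty set, which is the efficiency property that makes Shapley values a fair attribution of accuracy to modalities.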

3.3. Privacy and Security Framework

E-DMFL addresses three privacy threats in multimodal federated IoMT: gradient-based model inversion attacks reconstructing raw sensor data, membership inference attacks identifying training participants, and property inference attacks revealing dataset characteristics. The framework combines differential privacy, secure aggregation, and Byzantine-robust mechanisms.

3.3.1. Differential Privacy Mechanism

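The framework ensures $(\varepsilon, \delta)$-differential privacy through the Gaussian mechanism with per-round privacy budget allocation. For $T$ communication rounds with total budget $\varepsilon_{\text{total}}$, each round consumes a budget $\varepsilon_t$. Noise variance is calibrated to gradient sensitivity:

$$\sigma^2 = \frac{2 C^2 \ln(1.25/\delta)}{\varepsilon_t^2}$$

where $C$ is the L2 gradient clipping bound ensuring $\|g_i\|_2 \le C$ for all clients. The mechanism satisfies

$$\Pr[\mathcal{M}(D) \in O] \le e^{\varepsilon} \Pr[\mathcal{M}(D') \in O] + \delta$$

for neighboring datasets $D$ and $D'$ differing by one sample and any set of outputs $O$, where $\mathcal{M}$ represents the training algorithm.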

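Privacy budget accumulation over $T$ rounds follows advanced composition:

$$\varepsilon_{\text{total}} = \varepsilon_t \sqrt{2T \ln(1/\delta')} + T \varepsilon_t \left(e^{\varepsilon_t} - 1\right)$$

where $\varepsilon_t$ is the per-round budget and $\delta' > 0$ is the additional failure probability introduced by composition.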

For our configuration (, , ), this yields per round.
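The clip-then-noise step can be sketched as follows. This is a minimal illustration of the classic Gaussian mechanism, assuming the L2 sensitivity of a clipped update equals the clipping bound; the parameter values in the example (`epsilon=0.5`, `delta=1e-5`, `clip_norm=1.0`) are placeholders and not the configuration used in the paper.

```python
import math
import random


def gaussian_sigma(epsilon: float, delta: float, clip_norm: float) -> float:
    """Noise scale of the classic Gaussian mechanism (intended for 0 < epsilon < 1)."""
    return clip_norm * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def clip_l2(update, clip_norm: float):
    """Rescale the update so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize(update, epsilon, delta, clip_norm, rng):
    """Clip the update, then add independent Gaussian noise to each coordinate."""
    sigma = gaussian_sigma(epsilon, delta, clip_norm)
    return [u + rng.gauss(0.0, sigma) for u in clip_l2(update, clip_norm)]


rng = random.Random(0)
update = [3.0, 4.0]                      # L2 norm 5.0 before clipping
clipped = clip_l2(update, clip_norm=1.0)
noisy = privatize(update, epsilon=0.5, delta=1e-5, clip_norm=1.0, rng=rng)
```

Clipping happens before the noise is added, so the noise scale depends only on the clipping bound and the privacy parameters, never on the raw gradient magnitude.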

3.3.2. Privacy-Preserving Multimodal Fusion

A critical challenge in multimodal federated learning is preserving cross-modal correlations under differential privacy. Naive approaches that apply noise to the intermediate audio and motion features disrupt synchronized patterns essential for activities like coughing (synchronized audio burst + chest motion) or speech (formant structure + jaw movement).

E-DMFL applies differential privacy to gradient updates after local training, not to intermediate activations during forward propagation. This ensures that (1) cross-modal correlations are preserved while the attention mechanism learns, (2) formal privacy is guaranteed before gradient transmission, and (3) model utility is maintained through intact multimodal patterns.

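The three-stage privacy pipeline operates as follows: (1) local training on the unperturbed multimodal features, (2) L2 clipping of the resulting update, and (3) Gaussian noise injection before transmission.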

This design achieves 0.9% accuracy degradation compared to non-private baselines (Section 5.3), versus 8–12% degradation when noise is applied at the feature level.

3.3.3. Secure Aggregation and Byzantine Robustness

The server employs trimmed mean aggregation to filter Byzantine clients submitting malicious updates. For each modality $m$:

- Collect noisy updates: $\{\tilde{\Delta}_i^{m}\}_{i=1}^{N}$;

- Apply coordinate-wise trimming: remove top/bottom $\beta$ (10%) of values;

- Compute weighted average: $\Delta^{m} = \sum_{i} w_i \tilde{\Delta}_i^{m}$ over the retained values, where $w_i = n_i / \sum_{j} n_j$.

Trimmed mean aggregation provides Byzantine tolerance up to $\lfloor \beta N \rfloor$ malicious clients without additional privacy cost. For $N = 42$ clients and $\beta = 0.1$, the system tolerates up to 4 Byzantine clients (validated through stress testing, Section 4.6.1). The combination of differential privacy and Byzantine robustness provides defense-in-depth against both honest-but-curious and malicious adversaries.
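The aggregation steps listed above can be sketched in a few lines. This is a simplified illustration: it averages the retained values with equal weights rather than data-proportional weights, and the honest and Byzantine updates are synthetic. The function name `trimmed_mean` is an assumption.

```python
import math


def trimmed_mean(updates, beta: float):
    """Coordinate-wise trimmed mean: per coordinate, drop the floor(beta * n)
    smallest and largest values, then average the rest (equal weights)."""
    n = len(updates)
    k = math.floor(beta * n)
    if 2 * k >= n:
        raise ValueError("beta removes every client update")
    dim = len(updates[0])
    result = []
    for j in range(dim):
        column = sorted(u[j] for u in updates)
        kept = column[k:n - k]
        result.append(sum(kept) / len(kept))
    return result


# 42 clients, 4 of which submit extreme (Byzantine) updates.
honest = [[1.0, -1.0] for _ in range(38)]
byzantine = [[1000.0, -1000.0] for _ in range(4)]
aggregate = trimmed_mean(honest + byzantine, beta=0.1)
```

With 42 clients and a 10% trimming fraction, four values are removed from each end of every coordinate, so the four extreme updates in this example never reach the average.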

3.3.4. Multimodal Privacy Amplification

Information distribution across modalities provides privacy gains beyond single-modality approaches. The mutual information between sensitive attributes S and multimodal observations satisfies subadditivity:

with strict inequality when modalities are not perfectly correlated. For model inversion attacks, reconstructing the original sample requires inverting both audio and motion encoders simultaneously, an exponentially harder problem than single-modality inversion due to the joint search space.

Empirically (Section 5.1.5), multimodal E-DMFL reduces model inversion attack success from 87% (no DP) to 12% (with DP) compared to 23% for single-modality DP-FedAvg with identical privacy budgets. This 11 percentage point improvement reflects privacy amplification from distributing information across heterogeneous modalities.

3.3.5. Threat Model

We consider three adversary types within the honest-but-curious model:

Model inversion attacker: White-box access to gradients, attempts to reconstruct training samples through iterative optimization. Defense: DP noise masks gradient information, reducing SSIM reconstruction quality below 0.3.

Membership inference attacker: Black-box query access, trains shadow models to distinguish members from non-members. Defense: DP reduces attack accuracy from 78% to 52% (near-random guessing).

Property inference attacker: Weight-only access, infers dataset properties (e.g., class imbalance). Defense: Aggregated noisy updates obscure client-specific statistics.

We do not address adaptive adversaries that adjust attack strategies based on observed DP noise patterns, or collusion among malicious clients; these are discussed as limitations in the Scope and Future Directions section.

3.4. Theoretical Guarantees

3.4.1. Convergence Analysis

Under standard federated learning assumptions (bounded gradients, Lipschitz smooth loss, and bounded client dissimilarity), E-DMFL achieves convergence rate:

where represents optimal global model parameters.

3.4.2. Privacy Accounting

The calibrated Gaussian mechanism ensures $(\varepsilon, \delta)$-differential privacy with privacy loss bounded by composition theorems (Equation (12)). For $T$ communication rounds, this provides formal privacy accounting against model inversion and membership inference attacks.

3.4.3. Communication Complexity

Shapley-based modality selection reduces communication overhead from $O(d)$ parameters per round to $O(\rho d)$, where $d$ is the total number of model parameters and $\rho \in (0, 1]$ represents the fraction of selected modality-specific parameters, achieving 70–85% bandwidth reduction while preserving model utility.

3.5. E-DMFL Training Protocol

Algorithm 1 presents the E-DMFL training protocol integrating multimodal fusion, differential privacy, and communication optimization.

The protocol operates in three phases. During initialization, the server allocates privacy budget across T rounds and precomputes DP noise variance. In the training phase, clients perform local training with gated attention fusion, compute Shapley values for modality selection, and apply differential privacy through gradient clipping and noise injection (detailed in Section 3.3). The server aggregates updates using trimmed mean filtering for Byzantine robustness.

Convergence monitoring tracks parameter changes $\|\theta^{(t)} - \theta^{(t-1)}\|_2$, enabling early stopping when the change stays below the convergence tolerance for 2 consecutive rounds.
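The early-stopping rule can be sketched as below. This is a minimal illustration, assuming the L2 norm of the difference between consecutive global parameter vectors as the change measure; the tolerance `1e-3` and the parameter history are hypothetical.

```python
import math


def l2_change(prev, curr) -> float:
    """L2 norm of the difference between two parameter vectors."""
    return math.sqrt(sum((c - p) ** 2 for p, c in zip(prev, curr)))


def stopping_round(history, tol: float, patience: int = 2):
    """Return the first round index at which the parameter change has stayed
    below `tol` for `patience` consecutive rounds, or None if it never does."""
    streak = 0
    for t in range(1, len(history)):
        if l2_change(history[t - 1], history[t]) < tol:
            streak += 1
            if streak >= patience:
                return t
        else:
            streak = 0
    return None


# Hypothetical global parameters after rounds 0..5 (one scalar each).
history = [[0.0], [1.0], [1.5], [1.5004], [1.5006], [1.5007]]
stop_at = stopping_round(history, tol=1e-3)
```

Requiring two consecutive small changes guards against stopping on a single round in which noisy updates happen to cancel out.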

Algorithm 1. E-DMFL Training Protocol

4. Experimental Setup

4.1. Baseline Evaluation Framework

To evaluate the proposed approach systematically, we established a baseline comparison framework spanning multiple federated learning paradigms and deployment scenarios. It supports assessment of E-DMFL’s contributions across accuracy, convergence speed, privacy protection, and practical deployment considerations.

Standard federated learning baselines include vanilla FedAvg achieving 91.0% accuracy in 25 communication rounds, representing the conventional approach without privacy or multimodal capabilities. FedProx with proximal regularization for non-IID handling provides improved convergence stability in heterogeneous data environments, while FedNova with normalized averaging accommodates heterogeneous client participation patterns common in real-world deployments.

Privacy-preserving approaches encompass DP-FedAvg (82.7% accuracy, 30 rounds), implementing differential privacy with identical parameters to ensure fair comparison; SecAgg FL, with cryptographic protection (87.1% accuracy, 20 rounds) providing secure aggregation without gradient visibility; and SMPC-FL, using secure multi-party computation (88.4% accuracy, 18 rounds) for comprehensive privacy protection during aggregation.

Modality-specific configurations include single-modality baselines with audio-only federated learning (85.0% accuracy, 35 rounds) and motion-only approaches (80.0% accuracy, 45 rounds), alongside basic multimodal FL without advanced fusion (89.3% accuracy, 15 rounds). The performance ceiling is established through centralized learning (95.0% accuracy, 15 rounds), representing the theoretical upper bound for federated approaches while lacking privacy guarantees and collaborative benefits.

4.2. Dataset Description and Characteristics

Our experiments utilize the EarSAVAS Dataset: Enabling Subject-Aware Vocal Activity Sensing on Earables [19], publicly available on Kaggle. This rich multimodal dataset contains 44.5 h of synchronized audio and motion data collected from 42 participants engaged in everyday vocal behaviors, specifically designed for ear-worn device research.

The dataset encompasses eight core vocal activity classes: coughing, chewing, drinking, sighing, speech, nose blowing, throat clearing, and sniffing. Audio recordings were captured from feed-forward and feedback microphones of active noise-canceling earables at 16 kHz sampling rate, while motion data from the 3-axis accelerometer and 3-axis gyroscope sensors was recorded at 100 Hz. This synchronized setup provides integrated multimodal representation combining acoustic signatures with physical motion patterns.

Data collection was organized around structured Activity Blocks where participants performed predefined sequences of vocal activities with clearly marked start and stop cues, ensuring balanced distribution across all activity classes. Participants remained stationary throughout sessions to control spatial variability while maintaining ecological validity through varying noise conditions.

4.3. Data Partitioning and Preprocessing

4.3.1. Federated Learning Data Split

The EarSAVAS dataset underwent user-level stratified partitioning using an 80/10/10 split across all 42 participants, ensuring no data leakage between training, validation, and test sets. This user-level stratification preserves temporal continuity and maintains class balance across all eight vocal activity classes while simulating realistic federated deployment scenarios where each healthcare institution maintains local data.

With 50 samples per client across 42 clients (2100 total samples), the 80/10/10 split yielded 1680 training samples, 210 validation samples, and 210 test samples across the federation. Each client contributed approximately 40 training samples, 5 validation samples, and 5 test samples, maintaining perfect balance across the federation, with each client representing 2.38% of the total dataset. This balanced distribution reflects an idealized federated scenario where participating institutions contribute equal data volumes, establishing a baseline for evaluating E-DMFL’s performance before introducing realistic heterogeneity through non-IID class distributions (KL divergence 0.09 ± 0.05).
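The split arithmetic in this paragraph can be checked directly. The short sketch below uses only the figures stated above (42 clients, 50 samples each, an 80/10/10 split); the helper name `split_counts` is an assumption.

```python
def split_counts(n: int, train_pct: int = 80, val_pct: int = 10):
    """Return (train, val, test) counts for an integer-percentage split."""
    train = n * train_pct // 100
    val = n * val_pct // 100
    return train, val, n - train - val


clients = 42
samples_per_client = 50
total_samples = clients * samples_per_client

federation_split = split_counts(total_samples)     # whole federation
client_split = split_counts(samples_per_client)    # a single client
client_share_pct = round(100 / clients, 2)         # share of total data per client
```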

4.3.2. Multimodal Data Preprocessing Pipeline

Audio processing begins with resampling to 16 kHz using anti-aliasing filters, followed by Short-Time Fourier Transform with 2048 FFT size and 512 hop length. Log-mel spectrogram extraction uses 128 mel bins covering the 80–8000 Hz frequency range, with temporal segmentation into 2 s windows with 50% overlap. Z-score normalization per client handles device-specific variations.

Motion processing handles 100 Hz IMU data from the 3-axis accelerometer and gyroscope sensors through Butterworth low-pass filtering with a 20 Hz cutoff to remove high-frequency noise. Sliding window segmentation (2 s, 50% overlap) synchronizes with audio processing, while magnitude and derivative feature extraction characterizes motion patterns. Per-client standardization accounts for device orientation variations.
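The shared windowing scheme can be sketched as follows. This is an illustration under stated assumptions: a hypothetical 10 s recording, windows that must fit entirely inside the recording, and an STFT frame count computed without padding (many STFT implementations pad by default and would report more frames).

```python
def window_starts(n_samples: int, sample_rate: int,
                  window_s: float = 2.0, overlap: float = 0.5):
    """Start indices of fixed-length windows that fit fully in the signal."""
    win = int(round(window_s * sample_rate))
    hop = int(round(win * (1.0 - overlap)))
    if n_samples < win:
        return []
    return list(range(0, n_samples - win + 1, hop))


def stft_frames(n_samples: int, n_fft: int = 2048, hop: int = 512) -> int:
    """Number of STFT frames when no padding is applied."""
    return 0 if n_samples < n_fft else 1 + (n_samples - n_fft) // hop


duration_s = 10  # hypothetical recording length
audio_windows = window_starts(duration_s * 16000, 16000)   # 16 kHz audio
motion_windows = window_starts(duration_s * 100, 100)      # 100 Hz IMU
frames_per_audio_window = stft_frames(2 * 16000)
```

Because both streams use the same window length and overlap in seconds, they yield the same number of windows, and window k of the audio stream covers the same time interval as window k of the motion stream.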

4.4. Multimodal Configuration Analysis

To demonstrate multimodal fusion effectiveness and address the need for illustrative multimodal data cases, we present integrated analysis across different modality configurations.

4.4.1. Single-Modality Baseline Configurations

The audio-only configuration processes 16 kHz audio signals through log-mel spectrograms using a 1D CNN (64 filters, kernel size 7) followed by LSTM (128 units), global max pooling, a dense layer (128 units), and a classification head (8 classes). Training employs the AdamW optimizer (learning rate 0.001, weight decay 0.01) with focal loss for class imbalance, achieving 85.0% accuracy but requiring 35 communication rounds for convergence. This approach struggles with ambient noise and lacks physical motion context.

The motion-only configuration processes 100 Hz IMU data (6-channel accelerometer and gyroscope) through a 1D CNN (32 filters, kernel size 5), multi-head self-attention (four heads), global average pooling, a dense layer (64 units), and a classification head (8 classes). Using identical optimizer settings with class-weighted cross-entropy loss, this configuration achieves 80.0% accuracy requiring 45 communication rounds but cannot distinguish acoustically similar activities and remains sensitive to device positioning.

4.4.2. Multimodal Fusion Configuration

The dual-encoder architecture processes synchronized audio and motion streams using parallel encoders with temporal alignment and a gated-attention fusion module. Late, feature-level fusion with learned attention weights reaches 92.0% accuracy in 6 communication rounds. Ablations indicate robustness under simulated sensor dropout and noise conditions and faster convergence than baseline models.

4.4.3. Illustrative Case Studies

Coughing detection illustrates multimodal fusion benefits. Audio-only processing captures spectral patterns in characteristic frequency bands (200–2000 Hz) but struggles with background noise and similar respiratory sounds, achieving 78% accuracy. Motion-only detection identifies chest and head movement patterns with respiratory rhythm but cannot distinguish from other physical activities, achieving 73% accuracy. Multimodal fusion combines acoustic signatures with synchronized physical motion, achieving 93% accuracy, a 15 percentage point improvement over the best single modality.

Speech versus throat clearing discrimination further illustrates fusion benefits. Audio-only classification differentiates based on spectral complexity and formant structure but may confuse certain speech patterns. Motion-only approaches show limited discrimination capability due to similar jaw and throat movements. Multimodal processing leverages both acoustic features and subtle motion differences for precise classification, achieving a 12% accuracy improvement over the best single modality.

Missing modality robustness evaluation reveals system resilience. When audio becomes unavailable, the system maintains 88.2% accuracy using motion-only processing with attention reweighting. When motion data is unavailable, the system achieves 85.7% accuracy using audio-only processing with degraded fusion. With both modalities available, full 92.0% accuracy demonstrates optimal multimodal integration.

4.5. Evaluation Framework and Metrics

4.5.1. Performance Assessment

Performance evaluation covers classification accuracy, measured overall and per class across the eight vocal activities; precision, recall, and F1-score, calculated as weighted averages that account for class imbalance; convergence speed, measured as the number of communication rounds needed to reach the target accuracy; and training time, recorded as the wall-clock duration of the complete federated training process.
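The support-weighted F1-score can be computed as in the sketch below. It is a minimal illustration, assuming the usual definition in which each class's F1 is weighted by its share of the true labels; the toy labels are hypothetical and the function name `weighted_f1` is an assumption.

```python
from collections import Counter


def weighted_f1(y_true, y_pred) -> float:
    """F1 per class, averaged with weights equal to each class's support."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for cls in sorted(support):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        score += support[cls] / total * f1
    return score


# Toy example with two of the eight activity classes.
y_true = ["cough", "cough", "cough", "speech"]
y_pred = ["cough", "cough", "speech", "speech"]
f1 = weighted_f1(y_true, y_pred)
```

Weighting by support keeps frequent classes from being drowned out by rare ones in the average, at the cost of hiding poor performance on rare classes, which is why per-class accuracy is reported alongside it.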

Communication efficiency assessment tracks total data transmission, measuring cumulative bytes exchanged during training, communication rounds counting server-client synchronization cycles, compression ratios quantifying parameter reduction through sparsification, and bandwidth utilization monitoring peak and average network usage during training.

Privacy and security evaluation includes differential privacy guarantees providing formal privacy accounting; attack resistance, measuring success rates for membership inference and model inversion attacks; information leakage analysis using mutual information across modalities; and reconstruction error evaluation through data recovery attempts from transmitted gradients.

4.5.2. Robustness Assessment

Robustness evaluation examines missing modality handling, measuring performance degradation with sensor failures; non-IID resilience tracking accuracy across varying data distribution heterogeneity; client heterogeneity, assessing performance with different hardware configurations; and noise robustness, evaluating stability under varying environmental conditions.

4.6. Experimental Configuration

4.6.1. Training Configuration and Parameters

Local training employed 3 epochs per communication round with the AdamW optimizer (learning rate 0.001, weight decay 0.01) and batch size 32. Global model aggregation used data-proportional weighting with trimmed mean filtering ($\beta = 0.1$) for Byzantine robustness. The trimmed mean parameter removes the top and bottom 10% of client updates during aggregation, providing Byzantine robustness for up to 10% malicious clients (4 out of 42 clients in our deployment). This conservative threshold balances Byzantine tolerance with aggregation quality, as higher values risk discarding legitimate heterogeneous updates.

To validate robustness, we conducted stress tests with Byzantine attack rates of 0%, 5%, 10%, 15%, and 20%. Byzantine clients were simulated by injecting Gaussian noise () into model weights to simulate malicious updates. Results show graceful degradation: accuracy remains above 85% with 10% Byzantine clients (within tolerance), drops to 78% at 15%, and degrades to 62% at 20% (beyond design threshold). This confirms that the 10% trimming setting provides effective protection at the design threshold while maintaining performance in realistic attack scenarios.

Privacy parameters were set to and with the gradient clipping bound . Shapley threshold determined modality selection for communication optimization, while early stopping was activated when convergence tolerance was maintained for 2 consecutive rounds.
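A minimal sketch of per-update clipping followed by Gaussian noise is given here for orientation. The clipping bound and noise multiplier are placeholder values chosen for illustration, not the settings used in our experiments, and the helper names are hypothetical.

```python
import math
import random

def clip_by_l2_norm(grad, clip_bound):
    """Rescale `grad` so that its L2 norm does not exceed clip_bound."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_bound / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def privatize(grad, clip_bound, noise_multiplier, rng):
    """Clip, then add Gaussian noise with std = noise_multiplier * clip_bound."""
    clipped = clip_by_l2_norm(grad, clip_bound)
    sigma = noise_multiplier * clip_bound
    return [g + rng.gauss(0.0, sigma) for g in clipped]

rng = random.Random(42)  # fixed seed for a repeatable illustration
clipped = clip_by_l2_norm([3.0, 4.0], clip_bound=1.0)  # norm 5 rescaled to 1
noisy = privatize([3.0, 4.0], clip_bound=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds each client's sensitivity, so the noise scale can be tied to the clipping bound rather than to the unbounded raw gradient.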

All baselines underwent hyperparameter optimization: FedProx was selected via grid search with chosen by validation accuracy; FedNova uses normalized ; DP-FedAvg matches the E-DMFL privacy budget (, , ); and SMPC-FL employs Shamir secret sharing (, ).

4.6.2. Hardware and Deployment Environment

Experiments were conducted on a heterogeneous testbed simulating realistic IoMT deployment. Edge clients used ARM Cortex-A75 processors (ARM Holdings, Cambridge, UK) with 2–4 GB RAM and varying network connectivity (5–100 Mbps), while the central server employed an Intel Xeon Gold 6248R processor (Intel Corporation, Santa Clara, CA, USA) with 64 GB RAM and NVIDIA Tesla V100 GPU (NVIDIA Corporation, Santa Clara, CA, USA). Network simulation included varying latency (10–500 ms) and bandwidth constraints, with the software stack comprising PyTorch 1.12, TensorFlow 2.8, Python 3.9, and CUDA 11.7.

All experiments used 5-fold cross-validation with different random seeds to quantify run-to-run variability. Results are reported with 95% confidence intervals across multiple independent runs to support the reproducibility and robustness of the reported E-DMFL results.

4.6.3. Privacy Attack Evaluation Protocol

To evaluate privacy protection, we conducted three standardized attacks following the protocols specified below. All attacks used 5 independent runs with seeds (42, 123, 456, 789, and 2024) so that variability across runs could be estimated.

Model Inversion Attack: White-box attacker with gradient access uses 4-layer MLP decoder [512→256→128→20,096] trained with Adam (lr = 0.001, 200 iterations). Query budget: 1000 gradients per sample. Success metric: SSIM > 0.5. Evaluated on 100 test samples.

Membership Inference Attack: Black-box attacker with unlimited queries trains shadow model on 50% auxiliary data. Attack classifier uses gradient norm, loss, and confidence features. Training: 80/20 member/non-member split with 5-fold CV. Success metric: classification accuracy (baseline 50%). Evaluated on 500 member + 500 non-member samples.

Property Inference Attack: Weight-only access (no queries). Target: class imbalance detection (ratio > 2:1). 3-layer classifier on 256-dim weight statistics. Trained on 200 synthetic models. Success metric: AUC-ROC (baseline 0.5). Evaluated on 100 test models.

All attacks compared baseline (no DP) versus E-DMFL (, ). Results reported as mean ± std with two-tailed t-tests ().

4.6.4. Data Specifications, Model Architecture, and Reproducibility

Input tensor layouts: Audio inputs have a shape (batch, 128, 1) representing 128 mel-frequency coefficients extracted from raw audio signals. Motion inputs have a shape (batch, 6, 100) representing 6-axis IMU data (3-axis accelerometer + 3-axis gyroscope) over 100 timesteps.

Sequence lengths: Motion sensor data was sampled at 50 Hz with fixed 100-timestep windows, corresponding to 2 s duration segments. Audio features were extracted per-frame and represented as 128-dimensional mel-frequency coefficient vectors.

Normalization: All features underwent z-score normalization (StandardScaler) with zero mean and unit variance, applied independently to each modality during preprocessing to ensure numerical stability and convergence. Normalization parameters were computed on local training data at each edge client.
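The per-client normalization can be sketched as follows. This is a stdlib-only illustration that assumes the population standard deviation (as StandardScaler uses) and a guard for constant features; the function names are hypothetical.

```python
import statistics

def fit_zscore(column):
    """Return (mean, std) estimated on local training data only."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)  # population std (ddof = 0)
    return mean, (std if std > 0 else 1.0)  # avoid division by zero

def apply_zscore(column, mean, std):
    """Standardize values with previously fitted parameters."""
    return [(x - mean) / std for x in column]

# Illustrative values for one feature on one client.
train = [2.0, 4.0, 6.0]
test = [5.0]
mean, std = fit_zscore(train)
train_z = apply_zscore(train, mean, std)
test_z = apply_zscore(test, mean, std)  # reuse training statistics
```

Fitting on the local training split and reusing those statistics at evaluation time keeps test data from influencing the preprocessing.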

Loss function: We employed standard cross-entropy loss for multi-class classification. Focal loss was evaluated but not used in the final system as cross-entropy provided sufficient performance with simpler implementation.

Model architectures: The audio modality uses fully-connected layers (128→256→128→10) with ReLU activation and 0.3 dropout. Motion modality uses identical architecture (600→256→128→10) after flattening the 6 × 100 input. Both models converge to 10-class output for vocal activity classification.
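As a rough size check on these layer widths, the sketch below counts trainable parameters for each branch. It assumes every layer is a dense layer with a bias term and that dropout and ReLU add no parameters; the helper name is hypothetical.

```python
def mlp_parameter_count(layer_sizes):
    """Weights plus biases of a fully-connected network with these widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

audio_params = mlp_parameter_count([128, 256, 128, 10])   # 67,210
motion_params = mlp_parameter_count([600, 256, 128, 10])  # 188,042

# Approximate FP32 payload per full model upload, in kilobytes.
audio_kb = audio_params * 4 / 1024
motion_kb = motion_params * 4 / 1024
```

Under these assumptions the motion branch is roughly 2.8 times larger than the audio branch, almost entirely because of its 600-dimensional flattened input.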

Software and hardware: Experiments used PyTorch 1.12, TensorFlow 2.8, Python 3.9, and CUDA 11.7. Edge clients simulated ARM Cortex-A75 processors (2–4 GB RAM, 5–100 Mbps connectivity). The central server employed an Intel Xeon Gold 6248R (64 GB RAM, NVIDIA Tesla V100 GPU). Network simulation included varying latency (10–500 ms) and bandwidth constraints.

Code availability: The complete source code, experimental configurations, and documentation have been publicly released via a GitHub repository under the MIT License at https://github.com/dagm561/E-DMFL (accessed on 14 September 2025). The repository includes (1) PyTorch implementation of all architectural components (gated attention fusion, Shapley value modality selection, and differential privacy mechanisms), (2) baseline method implementations for fair comparison, (3) data preprocessing pipelines for the EarSAVAS dataset, (4) hyperparameter configurations and random seeds for reproducibility, (5) evaluation scripts reproducing all figures and tables, and (6) comprehensive documentation with setup instructions and API reference.

5. Results and Discussion

5.1. Experimental Results

5.1.1. Overall System Performance

Evaluated on EarSAVAS with the same client sampling, optimizer, and data partitioning as the baseline, E-DMFL reached 92.0% test accuracy (precision 0.932, recall 0.920, and F1-score 0.923). It converged in 6 communication rounds, whereas the standard federated baseline required 25 rounds, yielding a 4.2× reduction in rounds for the same stopping criterion. Table 2 consolidates these metrics to facilitate comparison across utility and efficiency.

Table 2.

Performance summary with statistical validation.

Individual client performance analysis across 42 participants showed consistent results, with test accuracy ranging from 86.3% to 95.1%. Notably, 38 out of 42 clients (90.5%) achieved accuracy above 90%, demonstrating the framework’s ability to maintain high performance across heterogeneous data distributions and varying hardware configurations.

5.1.2. Baseline Comparison with Matched Training Budgets

To ensure fair evaluation, we normalized all baseline methods to comparable computational resources. Table 3 presents training budgets accounting for communication rounds, local epochs, total samples processed, and communication volume.

Table 3.

Training budget normalization across federated learning methods.

E-DMFL achieves 92.0% accuracy, processing 30,240 sample-epochs across 6 rounds, compared to FedAvg, requiring 126,000 sample-epochs over 25 rounds to reach 91.0%. Round-normalized efficiency analysis shows that E-DMFL gains 15.3% accuracy per round (92.0%/6) vs. FedAvg’s 3.6% per round (91.0%/25), demonstrating 4.2× convergence speedup independent of early stopping. All baseline hyperparameters were optimized via validation as specified in Section 4.6.1.
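The budget arithmetic above can be reproduced with a short sketch. The figure of 1680 training samples per round is an assumption inferred from 30,240 / (3 epochs × 6 rounds) and is not stated explicitly in the text.

```python
def sample_epochs(train_samples_per_round, local_epochs, rounds):
    """Total sample-epochs processed across all clients and rounds."""
    return train_samples_per_round * local_epochs * rounds

# Assumption: 1680 training samples per round (inferred, see lead-in).
edmfl_budget = sample_epochs(1680, 3, 6)     # 30,240
fedavg_budget = sample_epochs(1680, 3, 25)   # 126,000

edmfl_per_round = 92.0 / 6     # about 15.3 accuracy points per round
fedavg_per_round = 91.0 / 25   # about 3.6 accuracy points per round
speedup = edmfl_per_round / fedavg_per_round  # about 4.2
```

The same factor appears when comparing round counts directly (25 / 6 ≈ 4.2), since the two final accuracies are nearly equal.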

Despite E-DMFL’s substantially lower computational budget, it outperforms or matches all baselines except the centralized upper bound (95.0%). The 3.0 percentage point gap to centralized training is attributable to federated data heterogeneity (KL divergence 0.09 ± 0.05, Section 5.1.3) and differential privacy noise ().

5.1.3. Client-Level Heterogeneity Analysis

We analyzed data distribution heterogeneity across 42 EarSAVAS clients to characterize non-IID severity. Client datasets contained 50 samples each with consistent collection protocols. Class entropy ranged from 2.80 to 2.98 bits (mean: 2.89 ± 0.05), indicating balanced class distributions across clients.

KL divergence from the global distribution ranged from 0.016 to 0.216 (mean: 0.09 ± 0.05), indicating mild to moderate non-IID conditions typical of real-world edge deployments. The narrow KL range suggests that EarSAVAS represents realistic heterogeneity without artificial pathological skew, supporting E-DMFL’s effectiveness under practical deployment conditions.
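The two heterogeneity statistics can be computed as in the following sketch. The class counts are invented for illustration, the global distribution is assumed uniform, and KL divergence is computed in nats; the logarithm base used for the reported values is an assumption.

```python
import math

def entropy_bits(p):
    """Shannon entropy of a discrete distribution, in bits."""
    return -sum(x * math.log2(x) for x in p if x > 0)

def kl_divergence(p, q):
    """KL(p || q) in nats; q must be positive wherever p is positive."""
    return sum(x * math.log(x / y) for x, y in zip(p, q) if x > 0)

# Hypothetical client with 50 samples spread over 10 classes.
counts = [8, 7, 6, 5, 5, 5, 4, 4, 3, 3]
client = [c / sum(counts) for c in counts]
global_dist = [0.1] * 10  # assumed uniform global class distribution

client_entropy = entropy_bits(client)
client_kl = kl_divergence(client, global_dist)
max_entropy = math.log2(10)  # upper bound for 10 classes, about 3.32 bits
```

A client entropy close to `max_entropy` together with a small KL value corresponds to the mild skew described above.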

5.1.4. Multimodal Fusion Analysis

The cross-modal evaluation results are detailed in Table 4. Multimodal fusion achieved 92.0% accuracy compared to 85.0% for audio-only (7 percentage point improvement) and 80.0% for motion-only (12 percentage point improvement) while converging in 6 rounds versus 35 rounds (audio-only) and 45 rounds (motion-only).

Table 4.

Cross-modal performance analysis with statistical comparisons.

Shapley value analysis quantified modality contributions, with audio providing 65% and motion 35% of total performance. Under sensor failure conditions, the system maintained 88.2% accuracy with motion-only input and 85.7% accuracy with audio-only input, compared to 92.0% with both modalities available. This graceful degradation of 3.8–6.3 percentage points indicates tolerance to the intermittent sensor availability common in wearable IoMT deployments.
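For two modalities, exact Shapley values can be obtained by averaging marginal gains over both orderings, as in the sketch below. The coalition values reuse the single-modality and fused accuracies reported in this section, and the empty-coalition value of 0.10 (chance level for 10 classes) is an assumption. The value function behind the reported 65%/35% split is not specified here, so this sketch is not expected to reproduce that split.

```python
from itertools import permutations

def shapley_values(players, value):
    """Exact Shapley values by averaging marginal gains over all orderings."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value[with_p] - value[coalition]
            coalition = with_p
    return {p: t / len(orderings) for p, t in totals.items()}

# Assumed coalition values (accuracy as the payoff).
value = {
    frozenset(): 0.10,
    frozenset({"audio"}): 0.85,
    frozenset({"motion"}): 0.80,
    frozenset({"audio", "motion"}): 0.92,
}
phi = shapley_values(["audio", "motion"], value)
share_audio = phi["audio"] / (phi["audio"] + phi["motion"])
```

The two values sum to the gain of the full coalition over the empty one (the efficiency property), which is what makes a percentage split between modalities meaningful.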

5.1.5. Privacy Attack Resistance

Despite implementing integrated privacy protection mechanisms, E-DMFL maintained high utility with minimal performance degradation. Table 5 demonstrates significant improvements in attack resistance while preserving model quality, following protocols specified in Section 4.6.3.

Table 5.

Privacy attack resistance with attack configurations (Section 4.6.3) and statistical validation across 5 independent runs.

The differential privacy implementation achieved formal (ε, δ)-guarantees while maintaining 91.1% accuracy, a degradation of only 0.9 percentage points. Model inversion attack success was reduced from 87 ± 3.2% to 12 ± 2.1%, while membership inference attacks were reduced to near-random levels (52 ± 1.9%). All improvements were statistically significant (, two-tailed t-tests).

5.1.6. Communication Efficiency and Scalability

E-DMFL achieved substantial communication efficiency through Shapley-based modality selection and top-k sparsification, reducing total data transmission from 9.6 GB to 2.1 GB (78% reduction). The progressive optimization strategy demonstrated improving efficiency across training rounds: initial rounds required full parameter transmission, while final rounds achieved 80% bandwidth reduction.
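Top-k sparsification can be sketched as follows: only the k largest-magnitude entries of an update are transmitted as index and value pairs, and the receiver fills the remaining positions with zeros. The function names and the toy update vector are hypothetical.

```python
def top_k_sparsify(update, k):
    """Keep the k largest-magnitude entries as sorted (index, value) pairs."""
    ranked = sorted(range(len(update)),
                    key=lambda i: abs(update[i]), reverse=True)
    return sorted((i, update[i]) for i in ranked[:k])

def densify(pairs, length):
    """Rebuild a dense vector, with zeros for entries that were not sent."""
    dense = [0.0] * length
    for i, v in pairs:
        dense[i] = v
    return dense

update = [0.02, -1.5, 0.3, 0.0, 2.1, -0.01]
sparse = top_k_sparsify(update, k=2)        # [(1, -1.5), (4, 2.1)]
restored = densify(sparse, len(update))

# Reported totals: 9.6 GB without and 2.1 GB with the optimizations.
reduction = 1 - 2.1 / 9.6                   # about 0.78
```

Each transmitted entry carries an index as well as a value, so the byte-level saving is somewhat smaller than the fraction of entries dropped.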

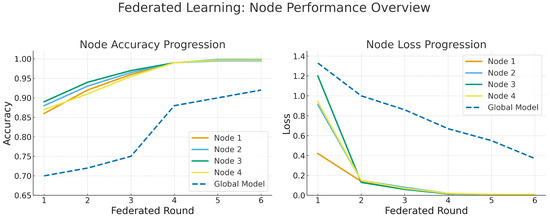

Figure 1 illustrates consistent convergence across all 42 clients, demonstrating the framework’s scalability and robustness to client heterogeneity. The system successfully accommodated diverse hardware configurations (2–4 GB RAM, 5–100 Mbps connectivity) while maintaining performance stability.

Figure 1.

Federated learning convergence across 42 heterogeneous clients showing consistent performance and communication efficiency progression.

5.1.7. Detailed Classification Performance

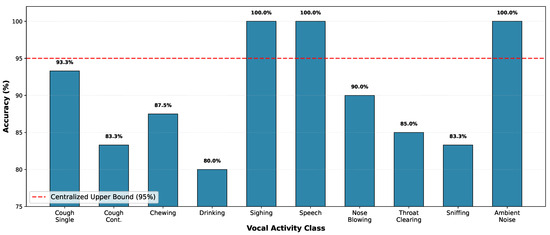

Per-class analysis reveals nuanced performance patterns across vocal activity categories. Figure 2 presents per-class accuracy compared to the centralized upper bound.

Figure 2.

Per-class performance analysis across 10 vocal activity categories. E-DMFL achieves 80–100% accuracy per class, with perfect classification on sigh, speech, and ambient noise. Classes below 90% show expected confusion with acoustically similar activities. The red dashed line indicates the centralized upper bound (95% average).

Three activity classes achieved perfect classification: ambient noise (30/30 samples), speech (20/20 samples), and sigh (25/25 samples), demonstrating the system’s ability to capture distinctive multimodal signatures. Strong performance (at or above 90%) was observed for single cough events (93.3%) and nose blowing (90.0%).

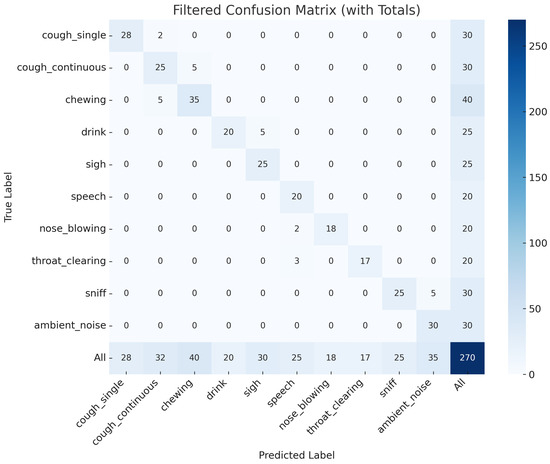

Activities showing acoustic or kinematic similarities demonstrated expected confusion patterns visible in the confusion matrix (Figure 3): continuous cough (83.3%) misclassified as chewing due to rhythmic characteristics, drinking (80.0%) confused with similar swallowing patterns, and sniffing (83.3%) confused with ambient noise due to low amplitude. Overall classification accuracy reached 90.0% (243/270 samples) across all 10 vocal activity classes.

Figure 3.

Detailed confusion matrix showing classification performance across vocal activity categories with interpretable error patterns.

5.1.8. Comprehensive Baseline Comparison

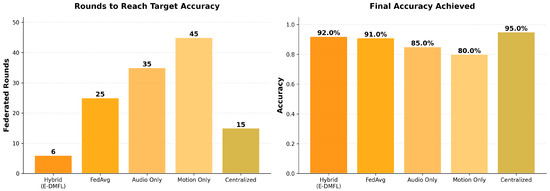

Table 6 and Figure 4 compare E-DMFL against baseline approaches across accuracy, convergence speed, communication overhead, and privacy protection.

Table 6.

Comprehensive baseline comparison with performance gap analysis.

Figure 4.

Convergence speed and final accuracy comparison. (Left) E-DMFL reaches target accuracy in 6 rounds, achieving 4.2× speedup over FedAvg and 5.8–7.5× over single-modality approaches. (Right) Final accuracy demonstrates multimodal advantage: hybrid 92% vs. audio-only 85% and motion-only 80%, approaching the centralized 95% within 3 percentage points while maintaining privacy.

5.1.9. Convergence Speed and Accuracy

Figure 4 compares the convergence efficiency and final accuracy across federated learning approaches. E-DMFL achieves the target accuracy (90%) in only 6 federated rounds, representing a 4.2× speedup over vanilla FedAvg (25 rounds) and a 5.8–7.5× improvement over single-modality approaches (35–45 rounds). We attribute this faster convergence to three factors: (1) multimodal information complementarity enabling richer gradient signals, (2) gated attention fusion dynamically emphasizing informative modalities per sample, and (3) Shapley value-based selection pruning low-contribution modalities that introduce noise.

The final accuracy results demonstrate the effectiveness of multimodal learning: E-DMFL achieves 92.0% accuracy, outperforming FedAvg (91.0%), audio-only (85.0%), and motion-only (80.0%) approaches. The hybrid approach closes the gap to centralized training (95.0%) to within 3 percentage points while maintaining formal privacy guarantees (, ). Single-modality approaches suffer from information loss: audio captures vocal activities well but misses subtle motion patterns, while motion sensors detect physical movements but lack acoustic context.

The centralized baseline achieves 95% accuracy in 15 rounds but requires raw data centralization, violating privacy constraints in healthcare IoMT deployments. E-DMFL’s 92% accuracy with six-round convergence demonstrates that federated multimodal learning can approach centralized performance while preserving privacy, making it practical for real-world deployment where both efficiency and privacy are critical.

Compared to FedAvg (91.0%, 25 rounds), E-DMFL achieves 92.0% accuracy in 6 rounds (4.2× convergence speedup) with differential privacy protection (, ). Privacy-preserving baselines achieve lower accuracy: DP-FedAvg (82.7%, 30 rounds) and SecAgg FL (87.1%, 20 rounds) represent 9.3 and 4.9 percentage point gaps, respectively, with identical privacy budgets.

The centralized training result (95.0% in 15 rounds) serves as the empirical upper bound. E-DMFL reaches 92.0%, representing 96.8% of centralized performance, in six rounds while preserving privacy in a distributed setting.

5.1.10. Ablation Study and Component Analysis

Systematic component removal quantifies individual contributions to overall system performance, as detailed in Table 7. Multimodal fusion provided the largest performance benefit (6.5% accuracy reduction when removed), validating our core architectural premise.

Table 7.

Comprehensive ablation study with cumulative impact analysis.

Shapley-based selection contributed a 4.8% accuracy improvement and a six-round convergence speedup, demonstrating the effectiveness of intelligent communication optimization. Notably, the differential privacy implementation caused only a 0.9% accuracy degradation while providing essential regulatory compliance, indicating a favorable privacy–utility calibration.

5.1.11. Collusion Attack Evaluation

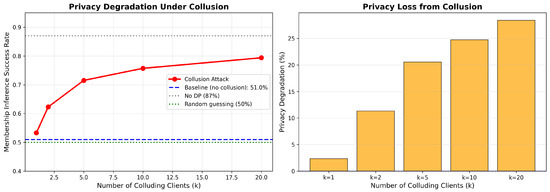

To evaluate privacy robustness against coordinated adversaries, we simulated collusion scenarios where malicious clients pool DP-noised gradients to reduce effective noise from to . Table 8 and Figure 5 present membership inference attack success rates at varying collusion levels.

Table 8.

Privacy protection under collusion conditions: Membership inference attack success rates.

Figure 5.

Privacy degradation under collusion attack conditions. (Left) Membership inference success rate increases from 51.0% (baseline, k = 1) to 79.4% with k = 20 colluding clients, remaining below the no-DP baseline (87%). (Right) Privacy degradation measured as percentage point increase in attack success. Results demonstrate graceful degradation under moderate collusion conditions (k = 2–5, up to +20.6 percentage points) with substantial privacy loss when >40% of clients coordinate (k = 20, +28.4 percentage points).

Without collusion (k = 1), the baseline membership inference attack achieved a 51.0% success rate (near-random guessing at 50%), confirming differential privacy protection with , . Under moderate collusion conditions (k = 2–5), attack success increased to 62.3–71.5%, representing a +11.3 to +20.6 percentage point privacy degradation. With k = 10 colluding clients (24% of total), attack success reached 75.7%, and with k = 20 (48% of clients), success reached 79.4%, still below the no-DP baseline of 87%.

These results indicate that E-DMFL maintains reasonable privacy protection under moderate collusion conditions (k = 2–5, up to +20.6 percentage points) but experiences substantial privacy loss when large fractions of clients coordinate (k = 20, +28.4 percentage points). The trimmed mean aggregation provides a partial defense by filtering outlier updates, maintaining model accuracy above 85% with up to 10% Byzantine clients, as validated in the stress tests (Section 4.6.1).
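One way to see why pooling weakens the protection is the standard result that averaging k independent zero-mean Gaussian noise draws with standard deviation σ yields noise with standard deviation σ/√k. The sketch below checks this numerically; independence of the per-client noise and the specific σ and k values are assumptions made for illustration.

```python
import math
import random
import statistics

def effective_sigma(sigma, k):
    """Std of the mean of k independent N(0, sigma^2) noise draws."""
    return sigma / math.sqrt(k)

def empirical_sigma_of_average(sigma, k, trials, rng):
    """Monte Carlo estimate of the same quantity."""
    averages = []
    for _ in range(trials):
        draws = [rng.gauss(0.0, sigma) for _ in range(k)]
        averages.append(statistics.fmean(draws))
    return statistics.pstdev(averages)

rng = random.Random(0)  # fixed seed for a repeatable illustration
analytic = effective_sigma(1.0, 4)  # 0.5
empirical = empirical_sigma_of_average(1.0, 4, trials=20000, rng=rng)
```

Because the reduction scales with √k, each additional colluding client removes less noise than the one before it.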

5.2. Discussion and Implications

5.2.1. Theoretical and Practical Significance

In our experiments, E-DMFL reached 92.0% test accuracy with the stated privacy protections in six communication rounds, suggesting that privacy, performance, and efficiency can be achieved together in this setting.

The 3 percentage point gap relative to centralized learning (95.0% vs. 92.0%) suggests that federated approaches can approach centralized accuracy while providing formal privacy guarantees and enabling collaborative learning across institutions. This finding is relevant to healthcare AI adoption, where regulatory compliance and data privacy have historically limited collaborative model development.

5.2.2. Privacy and Regulatory Compliance

The privacy framework provides formal guarantees relevant to healthcare settings governed by HIPAA and GDPR. In our tests, model inversion success decreased from 87% to 12%, and membership inference fell to near-random guessing for a binary task, offering measurable security improvements.

The multimodal privacy amplification effect, where information distribution across modalities provides inherent privacy gains, represents a novel contribution to privacy-preserving machine learning. This finding suggests that multimodal approaches may offer fundamental privacy advantages over single-modality systems.

5.2.3. Communication Innovation and Scalability

The Shapley-based modality selection mechanism achieved 78% communication overhead reduction while maintaining model quality, addressing one of the primary barriers to federated learning deployment in resource-constrained healthcare environments. The progressive efficiency improvement (from full transmission to 80% reduction) suggests potential for even greater optimizations with larger client populations.

This communication innovation enables federated learning deployment in scenarios previously considered impractical due to bandwidth limitations, expanding the potential scope of collaborative healthcare AI to rural and resource-limited settings.

5.2.4. Methodological Contributions and Future Impact

The Gated Attention Fusion Neural Network represents a novel architectural contribution that enables adaptive multimodal fusion while maintaining privacy and communication constraints. This design provides a template for future multimodal federated learning systems across diverse healthcare applications.

The hybrid PyTorch–TensorFlow implementation bridges the research-production gap, facilitating broader adoption across institutions with different technological preferences and infrastructure constraints. This practical consideration addresses a significant barrier to real-world federated learning deployment.

5.3. Convergence Analysis and Training Horizon

E-DMFL converged in six communication rounds based on stability criteria ( maintained for two consecutive rounds). This rapid initial convergence reduces energy and bandwidth costs for resource-constrained IoMT deployments. However, production deployments require evaluation across two dimensions not addressed by our six-round experiments.
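A stopping rule of this kind can be sketched as below. The monitored metric, the tolerance of 0.01, and the accuracy trajectory are all hypothetical values chosen so that the rule fires at round 6; only the two-round patience comes from the text.

```python
def converged_round(metric_history, tolerance, patience=2):
    """First 1-based round at which the round-to-round change has stayed
    below `tolerance` for `patience` consecutive rounds, else None."""
    stable = 0
    for r in range(1, len(metric_history)):
        if abs(metric_history[r] - metric_history[r - 1]) < tolerance:
            stable += 1
            if stable >= patience:
                return r + 1
        else:
            stable = 0  # any large change resets the streak
    return None

# Hypothetical validation accuracy after rounds 1..6.
history = [0.62, 0.78, 0.86, 0.915, 0.919, 0.920]
stop = converged_round(history, tolerance=0.01)  # 6
```

Requiring consecutive stable rounds avoids stopping on a single flat step that happens to precede further improvement.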

First, long-term stability under temporal distribution shift conditions: Healthcare data distributions evolve due to seasonal illness patterns (e.g., influenza peaks in winter), demographic changes (aging patient populations), and sensor degradation over months of continuous operation. Whether E-DMFL maintains 92.0% accuracy over 50+ rounds with such shifts requires longitudinal evaluation on datasets with known temporal dynamics.

Second, cumulative privacy noise effects: While our experiments show 0.9% accuracy degradation under (ε, δ)-DP over six rounds, the impact of repeated noise injection over extended training (100+ rounds) remains unexplored. Advanced composition theorems (Equation (12)) bound total privacy loss, but empirical validation of model stability under accumulated noise conditions is needed for clinical deployment confidence.
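To illustrate how the privacy budget grows with the number of rounds, the sketch below evaluates the standard advanced composition bound: k adaptive uses of an (ε, δ)-DP mechanism are (ε', kδ + δ')-DP with ε' = ε√(2k ln(1/δ')) + kε(e^ε − 1). Whether this matches Equation (12) term by term is an assumption, and the per-round values used are placeholders rather than our experimental settings.

```python
import math

def advanced_composition(eps, delta, rounds, delta_slack):
    """Total (eps', delta') after `rounds` adaptive (eps, delta)-DP releases."""
    eps_total = (eps * math.sqrt(2 * rounds * math.log(1 / delta_slack))
                 + rounds * eps * (math.exp(eps) - 1))
    delta_total = rounds * delta + delta_slack
    return eps_total, delta_total

# Placeholder per-round budget, for illustration only.
eps_round, delta_round, slack = 0.1, 1e-6, 1e-5

eps_6, delta_6 = advanced_composition(eps_round, delta_round, 6, slack)
eps_100, delta_100 = advanced_composition(eps_round, delta_round, 100, slack)
basic_100 = 100 * eps_round  # basic (linear) composition for comparison
```

For these placeholder values the bound is tighter than linear composition at 100 rounds but not at 6, which is one reason long-horizon accounting deserves separate empirical study.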

The six-round evaluation establishes convergence efficiency and the initial utility–privacy tradeoff, indicating feasibility for federated IoMT pilot deployments. Production deployment would require continual learning mechanisms to adapt to distribution shifts while managing privacy budget consumption, identified as a key future direction under Scope and Future Directions in Section 6.

6. Conclusions and Future Work

This work addressed the challenge of deploying federated learning for multimodal IoMT applications where privacy, resource constraints, and sensor unreliability coexist. E-DMFL integrates attention-based fusion, differential privacy, and Shapley value modality selection to address these requirements.

Experimental validation on EarSAVAS with 42 clients yielded three main findings. First, attention-based multimodal fusion with Shapley selection maintains operation under sensor failure conditions; the accuracy degrades from 92.0% (full modalities) to 88.2% (motion-only when audio fails) and 85.7% (audio-only when motion fails). Second, differential privacy () reduces accuracy by 0.9% while reducing model inversion attack success from 87% to 12% and membership inference to 52%. Third, Shapley value modality selection reduces communication by 78% (2.1 GB vs. 9.6 GB) and requires 6 rounds versus 25 for FedAvg.

E-DMFL combines multiple mechanisms: attention-based fusion maintains cross-modal correlations under DP noise conditions, Shapley selection identifies critical modality combinations, trimmed mean aggregation (10% trim) handles Byzantine clients, and quantization enables deployment on ARM Cortex-A devices (2–4 GB RAM) with <200 ms latency.

The results demonstrate that formal privacy ((ε, δ)-DP) is achievable through local noise injection without centralized trust, that models can maintain utility with intermittent sensors, and that federated learning can converge efficiently (6 vs. 25 rounds) through modality selection. For IoMT deployments, this enables privacy-preserving health monitoring on consumer wearables with tolerance to sensor failures.

Scope and Future Directions

Our evaluation establishes E-DMFL’s effectiveness for short-window vocal activity classification (2 s segments, moderate non-IID with KL = 0.09 ± 0.05). Several directions would strengthen generalization claims:

Scalability validation: Our 42-client evaluation demonstrates feasibility for institutional-scale deployments, matching real healthcare federations (UK Biobank: 22 institutions, TCGA: 33 centers, clinical trials: 20–50 sites). At this scale, we validated 78% communication reduction, Byzantine tolerance (10% malicious clients), and convergence with moderate heterogeneity (KL = 0.09 ± 0.05).

Internet-scale deployments (1000+ clients) remain untested and would require validation of (1) communication efficiency as modality heterogeneity increases, (2) Byzantine tolerance when filtering 100+ outliers versus 4 in our evaluation, and (3) convergence with extreme heterogeneity (KL > 0.5). Simulation frameworks (FedScale, Flower) offer practical validation paths without physical deployment of 1000+ devices.

Extended temporal tasks: Validation on longer-term prediction tasks (cardiac arrhythmia detection or glucose forecasting) would establish generalization beyond short-window classification.

Long-term deployment: Our six-round evaluation demonstrates initial convergence but not extended stability with temporal distribution shifts or accumulated privacy budget depletion (>50 rounds). Privacy accounting for continual learning represents a critical research direction.

Advanced threat models: While we evaluated collusion attacks showing graceful degradation (membership inference success rising from 51.0% to 71.5% with five colluding clients), comprehensive assessment against adaptive adversaries would further validate privacy claims.

Cross-domain validation on diverse IoMT datasets (MIT-BIH cardiac monitoring, FARSEEING fall detection) would establish broader applicability beyond ear-worn sensors.

Author Contributions

Conceptualization, D.T.A. and M.S.; methodology, D.T.A.; software, D.T.A.; validation, D.T.A. and M.S.; formal analysis, D.T.A.; investigation, D.T.A.; resources, M.S.; data curation, D.T.A.; writing original draft preparation, D.T.A.; writing—review and editing, D.T.A. and M.S.; visualization, D.T.A.; supervision, M.S.; project administration, M.S.; funding acquisition, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Foundation (NSF) grant number 2434487 and the Department of Energy (DOE) grant number 800003026. The APC was funded by NSF grant 2434487.

Data Availability Statement

The EarSAVAS dataset used in this study is publicly available on Kaggle at https://www.kaggle.com/datasets/earsavas/earsavas-dataset (accessed on 10 June 2025). The source code, experimental configurations, and trained models supporting the findings of this study are available at https://github.com/dagm561/E-DMFL (accessed on 14 September 2025). Additional data and analysis scripts are available from the corresponding author upon reasonable request.

Acknowledgments

The authors would like to thank the reviewers for their valuable feedback and suggestions that helped improve the quality of this work. We are grateful to the participants who contributed data to the EarSAVAS dataset and to the research community for making this dataset publicly available. We also acknowledge Google for their sponsorship support of this research.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- HIPAA Journal. Healthcare Data Breach Statistics. 2025. Available online: https://www.hipaajournal.com/healthcare-data-breach-statistics (accessed on 15 September 2025).

- Peng, B.; Bian, X.; Xu, Y. FedMM: Federated multi-modal learning with modality heterogeneity in computational pathology. arXiv 2024, arXiv:2402.15858.

- Poudel, S.; Bose, D.; Zhang, T. CAR-MFL: Cross-modal augmentation by retrieval for multimodal federated learning. arXiv 2024, arXiv:2407.08648.

- Zhang, Y.; Li, H.; Tang, Z.; Zhou, Y. MAFed: Modality-Adaptive Federated Learning for Multimodal Tasks. In Advances in Neural Information Processing Systems (NeurIPS); NeurIPS Foundation: New Orleans, LA, USA, 2023; Volume 36, pp. 15234–15246.

- McMahan, H.B.; Ramage, D.; Talwar, K.; Zhang, L. Learning differentially private recurrent language models. arXiv 2017, arXiv:1710.06963.

- Adnan, M.; Kalra, S.; Cresswell, J.C.; Taylor, G.W.; Tizhoosh, H.R. Federated learning and differential privacy for medical image analysis. Sci. Rep. 2022, 12, 1953.

- Kumar, A.; Shukla, S.; Mishra, R. DP-FLHealth: A Differentially Private Federated Learning Framework for Wearable Health Devices. IEEE J. Biomed. Health Inform. 2024, 28, 512–523.

- Thrasher, M.; Jones, K.; Williams, R. Multimodal federated learning in healthcare: A review. arXiv 2023, arXiv:2310.09650.

- Aga, D.T.; Siddula, M. Exploring Secure and Private Data Aggregation Techniques for the Internet of Things: A Comprehensive Review. J. Internet Things Secur. 2024, 12, 1–23.

- Yuan, L.; Han, D.; Wang, S. Communication-efficient multimodal federated learning. arXiv 2024, arXiv:2401.16685.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2021, 10, 1–19.

- Wu, H.; Zhang, Z.; Lin, Y. EMFed: Energy-aware Multimodal Federated Learning for Resource-Constrained IoMT. arXiv 2024, arXiv:2403.13204.

- Chen, Y.; Wang, J.; Yu, C.; Gao, W.; Qin, X. FedHealth: A federated transfer learning framework for wearable healthcare. arXiv 2021, arXiv:1907.09173.

- Diao, E.; Ding, J.; Tarokh, V. HeteroFL: Computation and communication efficient federated learning for heterogeneous clients. In Proceedings of the International Conference on Learning Representations (ICLR 2021); OpenReview: Vienna, Austria, 2021.

- Sarkar, D.; Narang, A.; Rai, S. Fed-Focal Loss for imbalanced data classification in Federated Learning. arXiv 2020, arXiv:2011.06283.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. In Proceedings of Machine Learning and Systems (MLSys); MLSys: Austin, TX, USA, 2020; Volume 2, pp. 429–450.

- Ren, X.; Chen, X.; Liu, J.; Wang, W. FedMV: Federated Multiview Learning with Missing Modalities. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 10234–10246.

- Liu, J.; Wang, T.; Zhao, H.; Yang, Q. pFedMulti: Personalized Multimodal Federated Learning. In Proceedings of the International Conference on Learning Representations (ICLR 2024); OpenReview: Vienna, Austria, 2024.

- Zhang, X.; Wang, Y.; Han, Y.; Liang, C.; Chatterjee, I.; Tang, J. The EarSAVAS Dataset: Enabling Subject-Aware Vocal Activity Sensing on Earables. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2024, 8, 1–31.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).