Abstract

The study of dramatic plays has long relied on qualitative methods to analyze character interactions, paying little attention to the structural patterns of communication involved. We propose a novel method to support addressee identification in tragedies using Large Language Models (LLMs), bridging NLP and literary studies and enabling scalable, data-driven analysis of interaction patterns and power structures in drama. Unlike conventional Social Network Analysis (SNA) approaches, which often flatten dialogue dynamics by relying on co-occurrence or adjacency heuristics, our LLM-based method captures directed speech acts, joint addresses, and listener interactions. In a preliminary evaluation on an annotated multilingual dataset of 14 scenes from nine plays in four languages, our top-performing LLM (Llama3.3-70B) achieved an F1-score of (P = , R = ), an exact match of 77.31%, and a partial match of 86.97% with human annotations, where partial match indicates any overlap between predicted and annotated receiver lists. By automatically extracting speaker–addressee relations, our method provides preliminary evidence for the scalability of SNA for literary analysis, as well as insights into the power relations, influence, and isolation of characters in tragedies, which we further visualize as social network graphs.

1. Introduction

Tragic plays thrive on tensions between characters: conflict dramatized through dialogue, soliloquy, and shifting alliances. From fatal misunderstandings to the inevitability of fate, such interactions form complex webs of power, persuasion, and betrayal. Understanding the structure of interaction in dramatic texts is a challenge for both NLP and literary analysis [1,2].

Traditional literary analysis largely treats such dynamics qualitatively, overlooking the structural patterns that underlie communication. While close reading remains crucial for extracting dramatic meaning, computational techniques offer complementary benefits: they can automatically detect patterns across large amounts of text that are hard for human readers to observe. For literary scholars, precise addressee identification supports the study of power structures, social hierarchies, and patterns of character isolation, all central to the analysis of dramatic plays. For computational studies, the task presents a complex pragmatic challenge that requires contextual understanding, coreference resolution, and implicit modeling of social relationships. The intersection of these domains offers opportunities to validate computational methods against established literary theories while enabling scalable analysis of dramatic corpora.

Multiple studies [1,3,4] applying Social Network Analysis (SNA) to drama have argued that centrality measures (e.g., betweenness, eigenvector) can quantify a character’s structural power. However, existing approaches tend to rely on simplifying heuristics, such as linking characters who appear in the same scene [5] or inferring links from adjacent speaker turns [6], rather than identifying directed speech acts (who addresses whom). Although scalable, such methods tend to misrepresent dialogue patterns by ignoring stated addressees, asides, or monologues. Manual annotation sidesteps this issue, but at a prohibitive cost for extensive studies. Large Language Models (LLMs) allow us to automate this labor-intensive process and to test classical literary hypotheses (e.g., the connection between tragic isolation and network periphery).

In this context, we investigate to what extent LLMs can accurately identify addressees in dramatic texts and how this automated approach refines our understanding of character interactions compared to traditional heuristic-based methods. Our work combines computational and literary methods by applying LLMs to automatically identify addressees (also referred to as receivers, listeners, or targets) in tragedies and then projecting these interactions as social networks.

By leveraging LLMs, our approach moves beyond probabilistic heuristics to represent not only structural dynamics but also the semantic and rhetorical nuances of dialogue. It resolves each addressee from the dialogue structure, the speaker’s intention, and the rhetorical context, enabling a deeper, context-aware analysis. Unlike traditional models that rely on static rules, LLMs can track the flow of the conversation and detect complex interactions such as subtle shifts of attention, interruptions, and indirect address, precisely the cases where traditional models break down because they rely on stationary transition probabilities. This capability enables a more precise analysis of conversational agency. By going beyond simple turn tracking, LLMs can trace the intricate network of dialogue and uncover the actual targets of speech even when they are not explicitly named, yielding a granular view of dramatic networks grounded in the actual structure of interaction.

Building on previous research available on platforms such as DraCor [7], which applies network analysis to dramatic texts, our approach also incorporates directed graph representations. Whereas existing systems consider co-occurrence and undirected relationships, we incorporate explicit speaker–addressee directionality through LLM-based speech act detection. This enables us to distinguish between A speaking to B and B speaking to A, an important distinction when considering power dynamics. This added modeling capability captures nuances of dramatic structure such as the passing of influence between characters (through directed edges), the structuring role of dominant figures in conversation networks, and patterns of exclusion or isolation that emerge from a systematic examination of address forms. Conventional undirected network models leave all of these nuances implicit at best.

Our main contributions are as follows:

- Introducing a multilingual LLM-based method for addressee detection in dramatic texts that can be applied to the entire DraCor corpus, addressing the laborious manual annotation process that currently limits large-scale dramatic analysis.

- Performing a comprehensive evaluation against expert annotations across nine plays in four languages. We release the human annotations as open source at https://huggingface.co/datasets/upb-nlp/metra_annotations (accessed on 4 September 2025).

- Generating directed, weighted graph models of character interactions, enabling finer-grained SNA metrics for theatrical discourse. Our end-to-end pipeline processes raw TEI-encoded dramatic texts, automatically identifies speaker–addressee relationships with high accuracy across four languages, and creates the participant graph that is rendered online. We release our code containing the pipeline and model prompts as open source at https://github.com/upb-nlp/METRA (accessed on 14 August 2025).

This work is structured as follows. Section 2 reviews earlier research on network analysis in drama, including heuristic-based approaches and more recent computational approaches. Section 3 describes our approach to addressee detection, including the dataset description, model selection, and usage. Section 4 presents the evaluation of our method against human annotation, as well as visualizations of social networks. Section 5 explores advantages and limitations, while Section 6 outlines directions for future research on LLM-based systems for addressee detection and social network creation.

2. Related Work

This section reviews prior work on modeling dramatic dialogue. We first present computational approaches within theatrical theory, emphasizing addressivity and turn-taking as foundations for analyzing communicative intent. We then survey methods for addressee detection, from co-occurrence models relying on heuristics to neural- and graph-based techniques, while highlighting recent advances with LLMs. Finally, we discuss the limitations of existing approaches and argue for our scalable, context-sensitive analysis of dramatic texts using LLMs.

2.1. Network Analysis in Drama and Literature

To situate computational work within theatrical and dramaturgical scholarship, we draw on theories of addressivity, speech acts, and performance. Pragmatics and speech act theory [8,9,10] provide formal categories to describe the purpose and intent behind what is said, which helps determine to whom a line is directed. Sacks et al. [11] provided an empirically grounded description of turn-taking in conversation, a foundational contribution to conversation analysis.

Performance and dramaturgy studies [12,13,14,15] emphasize staging, audience constructions, asides, and the embodied dynamics of address. Bakhtin’s [16] notion of “addressivity” underscores that every utterance is inherently directed toward an addressee, whether another character or the audience, highlighting the relational and anticipatory nature of dialogue, which is crucial for modeling communicative intent in dramatic texts.

Franco Moretti [1] pioneered the application of network theory to plot analysis, exploring how connections between characters (nodes) reveal narrative patterns. In Moretti’s more recent studies [17,18], social networks are based on an algorithm that models dramatic dialogue as a probabilistic network in which characters take turns speaking; each speaker selection is influenced by a “Centrality” parameter that accumulates advantage for frequent speakers, gradually creating hierarchical distinctions.

Within German literary studies, Trilcke et al. [3,4] explored centrality measures and co-occurrence-based network models for drama, while the DraCor infrastructure [7] provided a programmatically accessible corpus for large-scale analysis. Surveys such as the one by Labatut and Bost [19] further systematized techniques for extracting and analyzing fictional networks, highlighting the prevalence of heuristic approaches (e.g., scene co-occurrence) that may misrepresent actual communication flows.

However, these methods rarely examine what is actually being said. Connections between characters are inferred almost entirely from scene co-occurrence or adjacency heuristics, without validating whether a speaker is addressing a particular listener. This abstraction often reduces dramatic dialogue to structural patterns, overlooking communicative intent and rhetorical nuance, which are essential for understanding dramatic interaction.

2.2. Computational Approaches to Dialogue and Thread Disambiguation

A closely related challenge in modeling speaker–addressee relationships is thread disambiguation, which involves accurately identifying conversational threads in rich, dramatic dialogues. The early work of Elsner and Charniak [20] emphasized the difficulty in modeling turn-taking and conversational threads in theater scripts, establishing addressee identification as a fundamental challenge in computational drama analysis.

Traditional approaches to addressee detection have relied heavily on linguistic cues and positional heuristics. Joty et al. [21] developed topic-based methods for identifying addressees in multi-party conversations, while Zhang et al. [22] proposed attention-based neural networks for addressee prediction in online forums. Ouchi and Tsuboi [23] introduced a neural model that combines speaker embeddings with contextual features to predict addressees in Japanese dialogues, achieving notable improvements over rule-based baselines.

Subsequent approaches to thread detection and conversation disentanglement leveraged neural networks [24,25] to identify response relationships and semantic connections between messages. Other approaches were graph-based [26] or context-based [27]. Tan et al. [28] developed a context-sensitive LSTM-based thread detection classification model, which was evaluated on Reddit discussion data. Akhtiamov et al. [29] conducted a comprehensive investigation of human–machine addressee detection using complexity-identical utterances, introducing the Restaurant Booking Corpus (RBC) with controlled experimental conditions that eliminate confounding factors such as different dialogue roles and visible counterpart effects. Their work demonstrated that cross-corpus data augmentation using mixup algorithms improves classification performance, achieving results that surpass both baseline classifiers and human listeners.

Recent advances in computational pragmatics have addressed the challenge of identifying implicit addressees. Katiyar and Cardie [30] investigated the role of entity mentions and coreference chains in determining conversation targets. Zhao et al. [31] developed methods for automatically identifying addressees in historical correspondence, demonstrating the applicability of these techniques to literary and historical texts.

Masala et al. [32] introduced a hybrid framework combining string kernels with neural networks to detect semantic links in multi-participant educational chats. Their approach balances scalability (via lexical features) and nuance (via conversation-specific metadata), proving particularly effective for conversational datasets where deep learning alone struggles with limited training samples.

2.3. Large Language Models for Literary Analysis

The emergence of large language models has opened new avenues for literary analysis, although their application to dramatic texts remains underexplored. Devlin et al. [33] demonstrated BERT’s capabilities for modeling contextual relationships in text, laying the groundwork for more sophisticated dialogue analysis. Zhang et al. [34] showed how large-scale language models can capture literary patterns and generate contextually appropriate text, suggesting their potential for understanding dramatic conventions.

Recent work by Chang et al. [35] explored LLMs’ ability to identify speakers in dialogue-heavy texts, while Li et al. [36] investigated ChatGPT’s performance on various NLP tasks, including coreference resolution and entity recognition—capabilities essential for addressee detection. Qin et al. [37] specifically examined LLMs’ understanding of conversational context and turn-taking patterns, finding strong performance on dialogue understanding tasks.

Kocmi and Federmann [38] provided evidence of LLMs’ cross-linguistic capabilities, which are particularly relevant to our multilingual approach to dramatic analysis. Wei et al. [39] showed that LLMs exhibit emergent abilities in complex reasoning tasks, including understanding implicit social relationships, a key requirement for addressee detection in dramatic texts, where relationships are often implied rather than explicitly stated.

The application of LLMs to literary character analysis has shown promising results. Brahman and Zaiane [40] used large models to understand character motivations and relationships in narratives, while Sims [41] explored how neural language models can capture literary style and character voice. However, most studies focus on narrative fiction rather than dramatic texts, leaving open how LLMs perform on dialogue-intensive literary forms.

Recent work in NLP has begun to address related challenges of addressee recognition and pragmatics in dialogue. Penzo et al. [42] introduced a diagnostic framework for addressee recognition and response selection in multi-party conversations, highlighting persistent difficulties for LLMs. Similarly, Inoue et al. [43] proposed a benchmark for addressee recognition in multi-modal multi-party dialogue, further underlining the complexity of this task.

2.4. Contextualized Representations and Evaluation Practices

Radwan et al. [44] showed that Transformer embeddings outperform surface heuristics on informal social media text, which parallels our claim that LLMs can surpass adjacency or co-occurrence-based methods for addressee identification in dramatic dialogues. Alshattnawi et al. [45] applied LLM embeddings in downstream ML pipelines for short, informal, multilingual text, highlighting the importance of representational choices and transparent reporting from embedding extraction to evaluation. Al-Ahmad et al. [46] addressed LLM evaluation and reliability reporting, including systematic robustness checks to quantify uncertainty and validate model performance, which inform our evaluation strategy.

2.5. Limitations of Existing Approaches

Current approaches to dramatic network analysis suffer from several fundamental limitations. Co-occurrence-based methods, while scalable, misrepresent the directional nature of dramatic dialogue [4]. Fischer and Skorinkin [5] showed that adjacency-based heuristics can inflate connection weights between characters who never actually address each other directly, leading to networks that reflect scene structure rather than communicative relationships. The scalability–accuracy trade-off is another challenge in existing methodologies. While manual annotation provides high accuracy, Moretti [1] noted the prohibitive cost of scaling it to large corpora. Conversely, automated heuristics achieve scalability at the expense of interpretive validity, particularly in complex dramatic contexts involving asides, soliloquies, and the indirect forms of address common in tragic plays [6].

While prior LLM studies have shown impressive capabilities in understanding dialogue, they have largely focused on narrative fiction [40,47] or online and multi-party dialogue corpora [28,48,49] rather than on tragic or stage plays. In contrast to online forums or novels, dramatic texts often involve complex turn-taking, indirect address, asides, soliloquies, and multi-character interactions that unfold in tightly constrained spatial and temporal settings.

Overall, to the best of our knowledge, no previous study has addressed directed addressee detection in multilingual drama corpora using LLMs. By applying LLMs specifically to tragic plays, our study addresses this gap and shows that general-purpose models, used through prompting alone, can capture some of the nuanced communicative structures of drama. The models’ contextual understanding enables referent disambiguation in soliloquies through pronoun resolution, as well as long-range addressee detection: identifying when speakers in multi-character scenes (with 5+ participants) address listeners beyond the immediately adjacent turns. This is particularly relevant for dramatic texts, where a speaker may address a character whose turn lies several lines away within the scene, a case beyond the reach of common co-occurrence or adjacency-based methods. Our method balances accuracy, transferability, computational efficiency, and scalability, enabling effective addressee detection across large and complex dramatic corpora.

3. Method

3.1. Pipeline

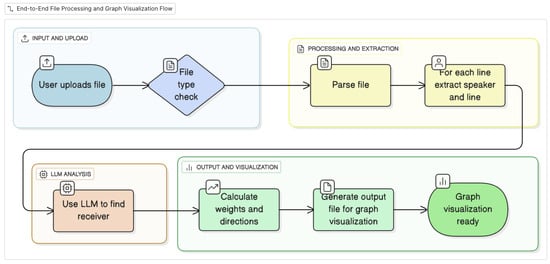

We construct a four-stage pipeline (Figure 1) to transform dramatic texts into an interactive network visualization. The process begins with the acquisition and preprocessing of the raw texts from various file formats. An LLM is then applied to identify the addressee(s) of each utterance. The output is used to construct a network graph in which nodes represent characters and weighted, directed edges quantify their interactions. Finally, the graph is deployed to a web-based visualization platform, enabling dynamic exploration and detailed analysis of the network’s structural properties, such as centrality and clustering.

Figure 1.

Pipeline for processing plays.
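To make the first pipeline stage concrete, the following minimal sketch extracts per-scene utterances from a DraCor-style TEI file using only Python’s standard library. It assumes that scenes are encoded as `<div type="scene">` and speeches as `<sp who="#id">`, with verse in `<l>` and prose in `<p>`; the function name `extract_scenes` is hypothetical and not taken from the released pipeline, and real files may need additional handling (e.g., plays without scene divisions).

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

TEI = "http://www.tei-c.org/ns/1.0"
NS = {"tei": TEI}
# Elements whose text counts as spoken content: verse lines and prose paragraphs.
SPOKEN_TAGS = {f"{{{TEI}}}l", f"{{{TEI}}}p"}


def extract_scenes(tei_xml: str) -> List[List[Tuple[str, str]]]:
    """Return one list of (speaker_id, utterance_text) pairs per scene.

    Assumes scenes are <div type="scene"> elements and speeches are
    <sp who="#id"> elements, as in many DraCor TEI files.
    """
    root = ET.fromstring(tei_xml)
    scenes = []
    for scene in root.iterfind(".//tei:div[@type='scene']", NS):
        utterances = []
        for sp in scene.iterfind(".//tei:sp", NS):
            # @who may list several ids ("#a #b"); strip the leading '#' of each.
            speaker = " ".join(w.lstrip("#") for w in sp.get("who", "").split())
            # Keep the text of verse lines and prose paragraphs. <speaker> labels and
            # standalone <stage> directions are skipped; stage directions nested
            # inside a line or paragraph are not removed.
            parts = [
                " ".join("".join(el.itertext()).split())
                for el in sp.iter()
                if el.tag in SPOKEN_TAGS
            ]
            utterances.append((speaker, " ".join(p for p in parts if p)))
        scenes.append(utterances)
    return scenes
```

Each scene then becomes a list of (speaker, text) pairs that the later stages can window, prompt on, and aggregate into a graph.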

3.2. Dataset

Our approach was evaluated on a preliminary annotated collection of 9 plays in 4 different languages publicly available in DraCor [7] (accessed on 14 August 2025), from which we extracted a selection of complex scenes (see Table 1). A scene was deemed complex if it met two conditions: (1) it has at least 5 participants, and (2) it contains dialogic features such as singular or plural targeted address, audience-directed speech, or soliloquies.

Table 1.

List of plays with complex scenes and their authors in the corpus.

The plays were sourced from the DraCor platform and then preprocessed. To obtain a reliable reference, two professional linguists independently annotated the speaker–receiver relations in the selected complex scenes. Inter-annotator agreement measured by Cohen’s kappa is , indicating moderate agreement that is substantially above chance, which is acceptable for this complex linguistic task.
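Cohen’s kappa compares the observed agreement between two annotators with the agreement expected by chance from each annotator’s label distribution. The minimal sketch below illustrates the computation. How receiver lists were turned into categorical labels for this statistic is not specified here, so treating each utterance’s sorted, lower-cased receiver set as a single label (`as_label`) is an assumption, and both function names are hypothetical.

```python
from collections import Counter
from typing import Hashable, Iterable, Sequence, Tuple


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label sequences")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: both annotators independently choose the same label.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:  # both annotators used one and the same label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


def as_label(receivers: Iterable[str]) -> Tuple[str, ...]:
    """Hypothetical canonicalization: one receiver set becomes one categorical label."""
    return tuple(sorted(r.strip().lower() for r in receivers))
```

For example, with labels `["x", "x", "y", "y"]` and `["x", "y", "y", "y"]`, observed agreement is 0.75, chance agreement is 0.5, and kappa is 0.5.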

We assess the LLM output with two methods. Under the first (exact match), a response is correct if it is identical to the annotation of at least one annotator. Under the second (partial match), a response is correct if it shares at least one receiver with either of the two annotations. The moderate agreement between the annotators illustrates the subjectivity of this task; we therefore introduce the partial match metric as a more realistic evaluation scenario, since a prediction that overlaps with at least one human annotator can be considered plausible.
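The two criteria can be sketched as follows. The sketch assumes that each utterance comes with one predicted receiver list and the two annotators’ lists, and that names are compared case-insensitively after trimming whitespace; this normalization and the helper names are assumptions made for illustration.

```python
from typing import FrozenSet, Iterable, List, Sequence, Tuple


def normalize(receivers: Iterable[str]) -> FrozenSet[str]:
    """Assumed normalization: trimmed, lower-cased receiver names."""
    return frozenset(r.strip().lower() for r in receivers)


def exact_match(predicted: Iterable[str], annotations: Sequence[Iterable[str]]) -> bool:
    """Correct if the predicted set equals at least one annotator's set."""
    pred = normalize(predicted)
    return any(pred == normalize(ann) for ann in annotations)


def partial_match(predicted: Iterable[str], annotations: Sequence[Iterable[str]]) -> bool:
    """Correct if the prediction shares at least one receiver with any annotation."""
    pred = normalize(predicted)
    return any(pred & normalize(ann) for ann in annotations)


def match_rates(items: List[Tuple[List[str], List[List[str]]]]) -> Tuple[float, float]:
    """items: (predicted, [annotation_1, annotation_2]) per utterance -> (% exact, % partial)."""
    exact = sum(exact_match(p, anns) for p, anns in items)
    partial = sum(partial_match(p, anns) for p, anns in items)
    return 100 * exact / len(items), 100 * partial / len(items)
```

A prediction that adds an extra receiver to an otherwise correct list fails the exact criterion but still counts under partial match, which is why partial match scores are always at least as high for non-empty predictions.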

3.3. Addressee Detection with LLM

For this study, we employ three models with zero-shot prompting to identify the addressees in each scene: Llama3.3:70B [50], Gemma3:27B [51], and Qwen3:8B [52]. Llama3.3:70B was included for its open availability and strong general-purpose performance at scale, which supports reproducibility. Gemma3:27B was chosen for its efficient architecture and competitive English performance. Qwen3:8B was selected for its documented multilingual coverage, which is essential for our cross-linguistic setting. Together, these open models balance efficiency and multilingual strength while covering a broad parameter range (from 8B to 70B), enabling a robust comparison.

We distinguish between three main cases: (1) directed speech, where the speaker addresses one or more specific characters (e.g., Horatio, or both Ophelia and Claudius); (2) collective address (labeled all), for utterances targeting all onstage characters; and (3) audience address (labeled audience), capturing direct speech that breaks the fourth wall.

Each prompt consists of a window of dialogue lines produced by a sliding window approach, the list of characters in the scene, and instructions specifying the JSON output format. We conduct four experiments, using sliding windows of 5, 7, and 10 lines as well as the whole scene, to compare their performance.
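The three prompt ingredients can be illustrated with a minimal sketch. The wording, line format, and JSON example below are hypothetical stand-ins rather than the study’s template (given in Table 2); only the ingredients themselves (dialogue window, character list, JSON output instructions) and the all/audience labels come from the text.

```python
import json
from typing import List, Sequence, Tuple


def build_prompt(window: Sequence[Tuple[int, str, str]], characters: List[str]) -> str:
    """Assemble a user prompt from a dialogue window and the scene's cast.

    Hypothetical wording; window holds (line_number, speaker, text) triples.
    """
    dialogue = "\n".join(f"{no}. {speaker}: {text}" for no, speaker, text in window)
    # Illustrative output format; field names are assumptions.
    example = json.dumps([{"line": 1, "speaker": "NAME", "receivers": ["NAME"]}])
    return (
        f"Characters in this scene: {', '.join(characters)}\n"
        "For each line, list who is addressed. Use \"all\" for everyone on stage "
        "and \"audience\" for speech directed at the audience.\n\n"
        f"Dialogue:\n{dialogue}\n\n"
        f"Reply only with JSON of the form {example}"
    )
```

Passing the cast list explicitly constrains the model to known character names, which makes the later schema check straightforward.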

The sliding window mechanism partitions the scene into overlapping chunks of window size $k \in \{5, 7, 10\}$ with step size $s = k - 2$, plus an additional setting where $k = n$, the total number of lines in the scene (i.e., the entire scene as a single window). Consecutive windows thus share exactly 2 lines: $|W_i \cap W_{i+1}| = k - s = 2$, where $W_i = \{\ell_{is+1}, \ldots, \ell_{is+k}\}$ (truncated at $\ell_n$) is the window with index $i = 0, 1, \ldots$ over the scene lines $\ell_1, \ldots, \ell_n$. This 2-line overlap preserves speaker–addressee continuity across windows while preventing excessive redundancy that could bias model predictions toward repeated patterns. This controlled overlap provides multiple contextual perspectives on turn transitions, enabling performance comparison across window sizes to identify the optimal configuration for detecting long-range addressees and multi-target utterances.
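The windowing scheme can be sketched in a few lines of Python: with step k − 2, consecutive windows share exactly two lines, and the last window may be shorter than k so that every line is covered. How predictions for the overlapping lines are reconciled is not specified here, so the sketch only produces the windows; the function name is hypothetical.

```python
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(lines: Sequence[T], k: Optional[int] = None) -> List[List[T]]:
    """Split a scene into windows of k lines with step k - 2 (2-line overlap).

    k=None, or k >= number of lines, yields the whole scene as a single window.
    """
    n = len(lines)
    if k is None or k >= n:
        return [list(lines)]
    if k < 3:
        raise ValueError("k must be at least 3 so that the step k - 2 is positive")
    step = k - 2
    windows, start = [], 0
    while True:
        end = min(start + k, n)
        windows.append(list(lines[start:end]))
        if end == n:  # this window reaches the last line of the scene
            return windows
        start += step
```

For an 11-line scene and k = 5, this yields the line-index windows [0–4], [3–7], and [6–10], each sharing two lines with its neighbor.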

Following the windowing strategy outlined above, each input to a model is formatted as shown in Table 2 and provided with a system context message.

Table 2.

Template structure for model input prompts in the addressee detection task.

The models are deployed using a vLLM inference engine with model-specific configurations as detailed in Table 3. All model responses are validated against a schema that contains line numbers, speakers, and receiver lists.
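A minimal validation step in the spirit of the schema check described above could look as follows. It assumes that each response is a JSON list of objects with `line`, `speaker`, and `receivers` fields (these field names are hypothetical) and that receivers must be scene characters or the collective labels `all` and `audience`; it uses only the standard library rather than a dedicated schema-validation package.

```python
import json
from typing import Iterable, List, Tuple

COLLECTIVE = {"all", "audience"}


def parse_response(raw: str, characters: Iterable[str]) -> List[Tuple[int, str, Tuple[str, ...]]]:
    """Parse a model reply and check it against a minimal (hypothetical) schema.

    Raises ValueError on any violation (json.JSONDecodeError is a subclass).
    """
    cast = set(characters)
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError("top-level value must be a list")
    records = []
    for item in data:
        if not isinstance(item, dict):
            raise ValueError(f"entry is not an object: {item!r}")
        line, speaker, receivers = item.get("line"), item.get("speaker"), item.get("receivers")
        if not isinstance(line, int) or isinstance(line, bool):
            raise ValueError(f"invalid line number: {line!r}")
        if not isinstance(speaker, str) or speaker not in cast:
            raise ValueError(f"unknown speaker: {speaker!r}")
        if not isinstance(receivers, list) or not receivers:
            raise ValueError(f"receivers must be a non-empty list: {receivers!r}")
        unknown = [r for r in receivers
                   if not isinstance(r, str) or (r not in cast and r not in COLLECTIVE)]
        if unknown:
            raise ValueError(f"unknown receivers: {unknown!r}")
        records.append((line, speaker, tuple(receivers)))
    return records
```

Rejected responses can then be retried or logged, so that only well-formed speaker–receiver records reach the graph construction stage.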

Table 3.

vLLM configuration parameters for each model.

Each model is used with the parameters listed in Table 4. The values were chosen to reduce randomness and make outputs more deterministic while retaining some diversity in token selection.

Table 4.

Generation parameters used in the study.

All three models were run sequentially on a single A100 GPU, which matters for reproducibility with standard academic computing resources.

3.4. Network Visualization

Following addressee detection, we construct directed, weighted graphs to represent character interactions within each scene. Each scene is modeled as a directed graph $G = (V, E)$, where $V$ is the set of characters (nodes) and $E$ is the set of communication links (edges) from a speaker to an addressee.

The weight of an edge from character a (speaker) to character b (addressee) is the total number of words that a addresses to b:

$$w(a, b) = \sum_{u \in U_{a \to b}} |u|$$

where $U_{a \to b}$ is the set of all utterances spoken by a to b and $|u|$ is the number of words in utterance u.

The visualizations employ directional arrows to indicate the communication flow, with edge thickness proportional to speaking volume. For example, if Character A speaks 50 words to Character B and B responds with 30 words, the network displays a thick A→B edge (weight 50) and a thinner B→A edge (weight 30), revealing asymmetric communication patterns invisible to co-occurrence methods. This approach enables both qualitative interpretation and quantitative analysis through standard SNA metrics such as centrality measures, network density, and clustering detection.
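The edge-weight computation and the A→B/B→A example can be reproduced with a short sketch. It assumes whitespace tokenization for word counts, ignores self-addressed lines, and credits an utterance with several addressees in full to each of them; the text does not state how joint addresses are weighted, so this last choice in particular is an assumption, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def edge_weights(utterances: Iterable[Tuple[str, List[str], str]]) -> Dict[Tuple[str, str], int]:
    """Directed edge weights: total words each speaker addresses to each receiver.

    utterances: (speaker, receivers, text) triples. Words are whitespace tokens;
    an utterance with several receivers adds its full word count to every edge.
    """
    weights: Dict[Tuple[str, str], int] = defaultdict(int)
    for speaker, receivers, text in utterances:
        n_words = len(text.split())
        for receiver in receivers:
            if receiver != speaker:  # skip self-loops
                weights[(speaker, receiver)] += n_words
    return dict(weights)
```

With one 50-word utterance from A to B and one 30-word reply from B to A, the result is `{("A", "B"): 50, ("B", "A"): 30}`, matching the asymmetric edges described above.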

4. Results

4.1. Evaluation

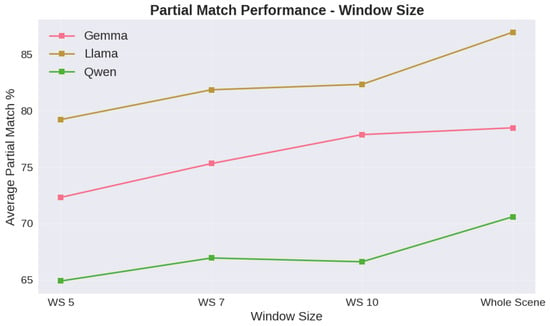

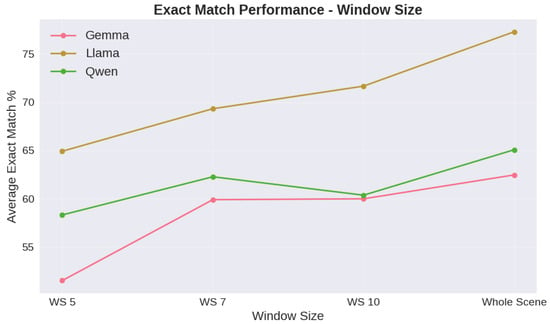

All three models agree well with the human annotators on our set of dramatic plays, as shown in Figures 2 and 3 and Table 5. Across all window sizes, Llama3.3-70B consistently outperforms Gemma3-27B and Qwen3-8B in both partial and exact match.

Figure 2.

Partial match percentage across all window sizes.

Figure 3.

Exact match percentage across all window sizes.

Table 5.

Model performance on exact and partial match tasks (%).

Increasing the context window generally improves performance for all models, with the most notable gains occurring when moving from WS 10 to the whole scene. An exception is Qwen, which exhibits a slight decrease in performance when moving from a window size of 7 to 10. Overall, these results suggest that larger context windows enhance both recall-oriented (partial match) and precision-oriented (exact match) retrieval.

Table 5 shows the results per model and window size for both exact and partial match. The top-performing model is Llama3.3 70B, with an exact match of 77.31% and a partial match of 86.97% across all scenes and plays.

Recognizing that partial match evaluation, where any overlap between predicted and annotated addressees counts as correct, may inflate performance metrics, we calculated precision, recall, and F1-score for the top-performing model and window size. The Llama3.3 model achieved a precision of , recall of , and F1-score of . This precision–recall tradeoff suggests that the model avoids over-generation but may miss some valid addressees.
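For reference, a minimal sketch of micro-averaged precision, recall, and F1 over receiver sets, where each predicted receiver counts as a true positive if it also appears in the reference list. Micro-averaging and the use of a single reference list per utterance are assumptions; the text does not specify how the two annotations are combined for this computation.

```python
from typing import Iterable, Set, Tuple


def micro_prf(pairs: Iterable[Tuple[Set[str], Set[str]]]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, F1 over (predicted, reference) receiver sets."""
    tp = fp = fn = 0
    for predicted, reference in pairs:
        p, r = set(predicted), set(reference)
        tp += len(p & r)   # correctly predicted receivers
        fp += len(p - r)   # predicted but not in the reference
        fn += len(r - p)   # in the reference but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Under this scheme, a model that predicts a single receiver where the reference lists two loses recall but not precision, which is the over- versus under-generation trade-off discussed above.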

Table 6 reports the per-scene results for Llama-3.3-70B, using the window size that yielded the highest performance—corresponding to the entire scene.

Table 6.

Llama3.3 whole scene performance.

Table 7 groups the results for Llama per language.

Table 7.

Average exact and partial match results by language.

For both partial and exact match, 13 out of 14 scenes have an agreement higher than 65%, which is comparable to the agreement between annotators, and 4 scenes reach near-perfect partial agreement (91.00–98.44%). Performance varies widely by genre: classical tragedies such as Hamlet (Act 1, Scene 2: 92.00% exact) score higher than Dantons Tod (Scene 2: 40.35% exact), a precursor of expressionist drama. The partial match range of 49.12–98.67% indicates that, although exact identification of all addressees remains difficult, the model finds at least one correct receiver in most cases.

Consider, for example, the fifth scene of the fifth act of Anarchia domowa, in which most utterances were initially labeled as addressed to the audience. Because the text itself is ambiguous on this point (a judgment shared by our expert annotators), we unify audience with all. This unification increases the exact match from 25.0% to 75.0% and the partial match from 50.0% to 87.5%.

A similar case occurs in Dantons Tod, Scene 2, where the annotators’ differing conceptions of collective addressees affected the agreement scores. The baseline evaluation treats leute (“people”) and all as distinct labels, yielding exact and partial matches of 40.35% and 49.12%, respectively. Treating these terms as equivalent, since both function as collective addressees, raises agreement to 70.00% (exact) and 78.00% (partial).
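Such unifications amount to a small, scene-specific normalization of labels before scoring. A minimal sketch, assuming lower-cased labels and hypothetical alias tables; as described above, each mapping should be applied only to the scene where the equivalence is justified (audience→all for Anarchia domowa, leute→all for Dantons Tod).

```python
from typing import Dict, Iterable, List

# Hypothetical, scene-specific alias tables mapping a collective label onto "all".
ANARCHIA_DOMOWA_ALIASES = {"audience": "all"}
DANTONS_TOD_ALIASES = {"leute": "all"}


def unify_collective(receivers: Iterable[str], aliases: Dict[str, str]) -> List[str]:
    """Lower-case receiver labels, map aliases to their canonical label, deduplicate."""
    return sorted({aliases.get(r.lower(), r.lower()) for r in receivers})
```

Both the predictions and the annotations of the affected scene are passed through the same mapping before exact and partial match are recomputed.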

4.2. Window Size Optimization and Statistical Robustness

Performance comparison reveals a consistent improvement with larger context windows. To address potential concerns about outliers, we performed a robustness analysis. Table 8 summarizes the mean exact-match accuracy per model and window size.

Table 8.

Mean addressee detection accuracy (%) by model and window size.

Analysis of individual scenes shows that 81.8% of measurements (36/44) improved when the window size increased from five lines to the whole scene, as also reflected in the percentages in Table 9.

Table 9.

Improvement in addressee detection from WS5 to whole scene context.

Paired t-tests [53] confirm that the improvement from WS5 to the whole-scene context is significant for all models (p < 0.05). IQR-based outlier detection shows that outliers have minimal impact: removing them changes the results by at most 2.84 percentage points. We also perform a one-way ANOVA [54] to assess whether addressee detection performance differs significantly across models for each window size. The results in Table 10 show that, although Llama generally achieves higher accuracy than Gemma and Qwen, the differences are not statistically significant at the 0.05 level. Post hoc pairwise comparisons using Tukey’s HSD [55,56] likewise reveal no significant differences between model pairs. Thus, while the performance trends consistently favor Llama, the observed differences between models may be due to chance.
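The robustness checks above rely on standard procedures. The sketch below computes the paired t statistic for per-scene scores and flags outliers with the conventional 1.5×IQR fences, using only the standard library; the 1.5 factor and the quartile method (the `statistics.quantiles` default) are assumptions, and the function names are hypothetical. For p-values and the model comparisons, SciPy provides `scipy.stats.ttest_rel`, `scipy.stats.f_oneway` (one-way ANOVA), and `scipy.stats.tukey_hsd`.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def paired_t_statistic(before: Sequence[float], after: Sequence[float]) -> Tuple[float, int]:
    """t statistic and degrees of freedom of a paired t-test on (after - before)."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(before, after)]
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def iqr_outliers(values: Sequence[float], factor: float = 1.5) -> List[float]:
    """Values outside [Q1 - factor*IQR, Q3 + factor*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = factor * (q3 - q1)
    return [v for v in values if v < q1 - spread or v > q3 + spread]
```

Re-running the comparison after dropping the flagged scores gives the kind of sensitivity estimate reported above (the change in percentage points with and without outliers).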

Table 10.

ANOVA results comparing addressee detection performance across models for different window sizes.

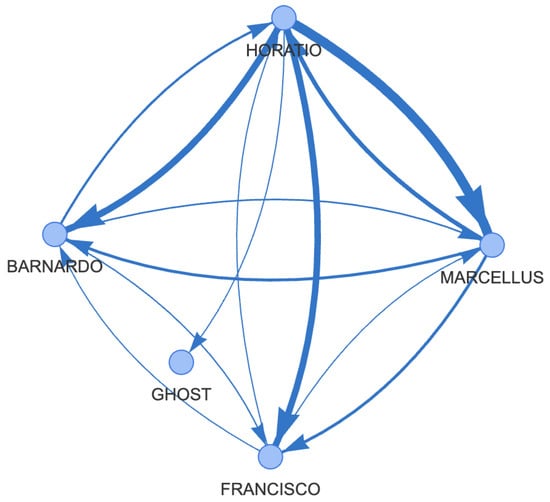

4.3. Visualizations

We create network graphs for each scene to visualize the complex relationships between characters. Edge direction indicates the direction of speech acts, and line thickness indicates the number of words spoken by one character to a target character. Link thickness thus provides a quantitative measure of the volume of communication between characters. These networks also enable the analysis of structural properties, such as network density (overall connectivity), central actors (individuals with high centrality), and sub-groups or cliques.

We also performed a network analysis across the 14 scenes to examine character interaction patterns and communication dynamics (see Table 11). The scenes contain between 7 and 19 participants, with 8 to 152 messages exchanged per scene. Graph density ranges from 0.11 to 0.64, indicating different levels of interconnectedness among characters; higher density corresponds to more distributed dialogue. Low-density scenes (such as Anarchia domowa, at 0.11) represent moments of social fragmentation or isolation, whereas high-density scenes (such as W małym domku, Act 2, at 0.64) show collective engagement. This is consistent with the view in tragic theory that social fragmentation precedes tragic moments. We identify between one and five dominant participants per scene based on centrality measures; these are the key characters who function as communication hubs or structural bridges within the dramatic network. To extract them, we compute the betweenness centrality of all participants and select the smallest set of top-ranked participants that cumulatively covers at least p = 0.8 (80%) of the total centrality in the graph. This threshold aligns with established practices in social network analysis for identifying core actors while minimizing the inclusion of peripheral characters. Interestingly, scenes with more messages do not necessarily have higher density, suggesting that some scenes concentrate dialogue among a few central characters while others distribute communication more evenly across the cast.
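A minimal sketch of these scene-level metrics: the density of a directed graph without self-loops, |E| / (|V|(|V| − 1)); betweenness centrality computed with Brandes’ algorithm; and the cumulative 80% selection of dominant participants. It assumes unweighted shortest paths (word-count weights are ignored for betweenness) and unnormalized scores; the analysis above may have relied on a library implementation with different settings, and all function names are hypothetical.

```python
from collections import deque
from typing import Dict, Hashable, Iterable, List, Sequence, Tuple

Edge = Tuple[Hashable, Hashable]


def density(nodes: Sequence[Hashable], edges: Iterable[Edge]) -> float:
    """Directed density |E| / (|V|(|V|-1)), counting distinct non-loop edges."""
    n = len(nodes)
    distinct = {(a, b) for a, b in edges if a != b}
    return len(distinct) / (n * (n - 1)) if n > 1 else 0.0


def betweenness(nodes: Sequence[Hashable], edges: Iterable[Edge]) -> Dict[Hashable, float]:
    """Unnormalized betweenness on an unweighted directed graph (Brandes' algorithm)."""
    succ: Dict[Hashable, List[Hashable]] = {v: [] for v in nodes}
    for a, b in {(a, b) for a, b in edges if a != b}:
        succ[a].append(b)
    bc = dict.fromkeys(nodes, 0.0)
    for s in nodes:
        # Breadth-first search from s, counting shortest paths (sigma) and predecessors.
        stack, preds = [], {v: [] for v in nodes}
        sigma = dict.fromkeys(nodes, 0)
        dist = dict.fromkeys(nodes, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in succ[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies in order of non-increasing distance from s.
        delta = dict.fromkeys(nodes, 0.0)
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    return bc


def dominant_participants(centrality: Dict[Hashable, float], p: float = 0.8) -> List[Hashable]:
    """Smallest top-ranked set whose centrality covers at least a share p of the total."""
    total = sum(centrality.values())
    if total == 0:
        return []
    selected, covered = [], 0.0
    for name in sorted(centrality, key=centrality.get, reverse=True):
        selected.append(name)
        covered += centrality[name]
        if covered >= p * total:
            break
    return selected
```

Because the selection uses each participant’s share of the total centrality, normalizing the betweenness scores (a constant factor per graph) would not change which characters are selected.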

Table 11.

Network analysis metrics by play and scene.

Table 12 showcases our method on a full play, namely Ojciec Makary, a Polish play that yielded the best results in addressee detection. Act 1 has the highest number of scenes and messages but the lowest density, Act 2 achieves the highest density with fewer participants and messages, and Act 3 balances moderate density with the highest average number of dominant participants.

Table 12.

Aggregated network analysis metrics by act for Ojciec Makary.
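Per-act summaries of the kind shown in Table 12 can be derived from scene-level metrics with a simple group-by. The sketch below is hypothetical: the field names are illustrative, and it assumes that message counts are summed while density and the number of dominant participants are averaged within each act.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_act(scene_rows):
    """Group scene-level metrics by act (hypothetical field names)."""
    groups = defaultdict(list)
    for row in scene_rows:
        groups[row["act"]].append(row)
    summary = {}
    for act in sorted(groups):
        rows_in_act = groups[act]
        summary[act] = {
            "scenes": len(rows_in_act),
            "messages": sum(r["messages"] for r in rows_in_act),
            "mean_density": mean(r["density"] for r in rows_in_act),
            "mean_dominant": mean(r["dominant"] for r in rows_in_act),
        }
    return summary


# Toy values for illustration only, not metrics from the study.
rows = [
    {"act": 1, "messages": 20, "density": 0.25, "dominant": 2},
    {"act": 1, "messages": 10, "density": 0.75, "dominant": 4},
    {"act": 2, "messages": 5, "density": 0.5, "dominant": 1},
]
print(aggregate_by_act(rows)[1]["mean_density"])  # 0.5
```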

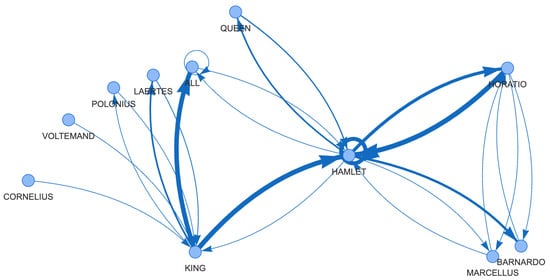

Figures 4 and 5 show how the network dynamics change from one scene to the next. In Figure 4 (Hamlet, Act 1, Scene 1), the interactions are distributed among the guards (Barnardo, Francisco, and Marcellus) and Horatio, with the Ghost as an important but isolated figure. The exchanges appear relatively balanced, reflecting the scene’s focus on establishing the play’s supernatural premise. In Figure 5 (Hamlet, Act 1, Scene 2), by contrast, Hamlet’s appearance markedly shifts the network structure: the other characters’ speeches gravitate toward him, as shown by the concentration of arrows pointing in his direction. This aligns with Hamlet’s role as the protagonist and the focal point of the court’s attention after his father’s death and his mother’s hasty remarriage. Notably, the thick arrows in both directions between Hamlet and Horatio indicate a strong bidirectional exchange, which corroborates their close relationship in the play, where Horatio is Hamlet’s most trusted friend and confidant. In contrast, the interactions between Hamlet and the King appear more one-sided or superficial (the King speaks to Hamlet considerably more than Hamlet speaks to the King), reflecting their strained relationship.

Figure 4.

Hamlet, Act 1, Scene 1.

Figure 5.

Hamlet, Act 1, Scene 2.
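The asymmetry described above between Hamlet and the King can also be summarized as a single number. One simple option, assumed here for illustration rather than taken from the analysis in this paper, is a directional balance score (w_ab - w_ba) / (w_ab + w_ba) over word-count edge weights: it is +1 when only a speaks to b, -1 when only b speaks to a, and 0 for a perfectly reciprocal exchange.

```python
def directional_balance(weights, a, b):
    """(w_ab - w_ba) / (w_ab + w_ba) for word-weighted directed edges.

    Returns a value in [-1, 1]; 0.0 is also returned when a and b never
    address each other.
    """
    w_ab = weights.get((a, b), 0)
    w_ba = weights.get((b, a), 0)
    total = w_ab + w_ba
    if total == 0:
        return 0.0
    return (w_ab - w_ba) / total


toy = {("X", "Y"): 300, ("Y", "X"): 100}  # toy word counts, not study data
print(directional_balance(toy, "X", "Y"))  # 0.5
```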

Addressee identification also makes the network graphs considerably more readable than views built without it, which are cluttered by many spurious connections. For comparison, see the web view of Hamlet on DraCor (https://dracor.org/gersh/hamlet-prinz-von-daenemark, accessed on 21 September 2025).
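The source of this clutter can be illustrated with a toy comparison. A co-presence rule, as used in co-occurrence-based networks, links every pair of characters who speak in the same scene, giving n(n-1)/2 undirected edges for n speakers, whereas addressee annotations yield only the directed pairs that actually occur. The helper names and the `(speaker, receivers)` record format are hypothetical.

```python
from itertools import combinations


def copresence_edges(speakers):
    """Undirected edges between every pair of distinct characters in a scene."""
    return {frozenset(pair) for pair in combinations(sorted(set(speakers)), 2)}


def addressee_edges(utterances):
    """Directed (speaker, receiver) edges from (speaker, receivers) records,
    ignoring self-addressed entries."""
    return {(s, r) for s, receivers in utterances for r in receivers if r != s}


# Toy scene: one central character A, addressed by everyone else.
utterances = [("A", ["B"]), ("B", ["A"]), ("C", ["A"]), ("D", ["A"])]
speakers = [s for s, _ in utterances]
print(len(copresence_edges(speakers)))  # 6 undirected edges
print(len(addressee_edges(utterances)))  # 4 directed edges over 3 pairs
```

In the toy scene, co-presence links B, C, and D to each other even though they never exchange a word; the addressee graph keeps only the star around A.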

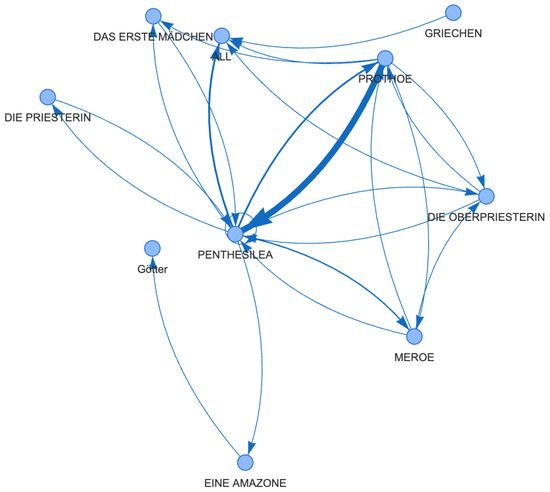

In the network diagram in Figure 6, PENTHESILEA is positioned at the center, connected to nearly every other character. This reflects her central role as protagonist and leader of the Amazons. The notably thick connection between PROTHOE and PENTHESILEA points to a close and frequent interaction. In the play, Prothoe is Penthesilea’s confidante, deeply aware of her emotional struggles.

Figure 6.

Penthesilea, Scene 9.

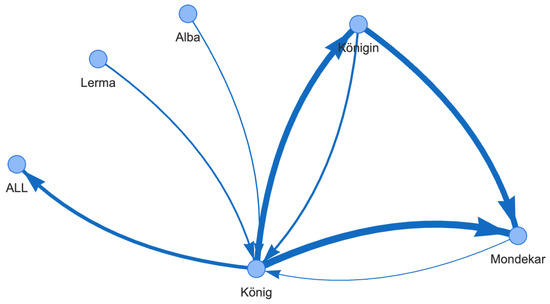

Figure 7 highlights the King’s (könig) dominant position in the social hierarchy of the scene, as shown by both the number and the variety of his contacts. The King addresses the group as a whole (all) but receives only individual replies; this unilateral addressing pattern illustrates the power dynamic within the scene.

Figure 7.

Don Carlos, Act 1, Scene 6.

5. Discussion

Our results suggest that LLM-based addressee detection can improve the social network analysis of tragic plays. By moving beyond typical co-occurrence heuristics to represent directed speech acts, our method can uncover the power dynamics and conversational structures central to tragic plays. The results also suggest that LLMs can automate a task that otherwise requires laborious manual annotation, while preserving literary interpretability.

Unlike rule-based methods, which are limited to turn-taking adjacency, LLMs can resolve long-range addressee references (e.g., deferred replies and interruptions) by drawing on the dialogue history. For instance, in Hamlet, Act 1, Scene 2, the model correctly linked Hamlet’s soliloquies to implicit audience addresses despite intervening dialogue.

The sliding-window ablation (Table 5) shows a 12.38% gain in exact match for Llama3.3-70B when the context is expanded from five lines to the full scene, underscoring the importance of broader discourse context.
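The two context settings compared in this ablation can be sketched as a windowing function over the lines of a scene. This is a hypothetical illustration: it assumes that the five-line window contains only the lines preceding the target utterance and that the full-scene setting passes the entire scene; the prompt wording is invented, and the actual prompts and window placement may differ.

```python
def context_lines(lines, index, window=5):
    """Return the context shown to the model for lines[index].

    window=k keeps only the k lines preceding the target (assumed placement);
    window=None returns the entire scene, mimicking a full-scene setting.
    """
    if window is None:
        return list(lines)
    return list(lines[max(0, index - window):index])


def build_prompt(lines, index, window=5):
    """Assemble a hypothetical addressee-detection prompt for one utterance."""
    context = "\n".join(context_lines(lines, index, window))
    return (
        f"Scene context:\n{context}\n\n"
        f"Target utterance:\n{lines[index]}\n\n"
        "Which characters is the target utterance addressed to?"
    )


scene = [f"line {i}" for i in range(10)]
print(context_lines(scene, 7))
# ['line 2', 'line 3', 'line 4', 'line 5', 'line 6']
print(len(context_lines(scene, 7, window=None)))  # 10
```

With a five-line window, a reply to a question asked six lines earlier loses its antecedent, which is one plausible reason the full-scene setting helps.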

Performance consistency across languages (e.g., 95.31% exact match for the Polish Ojciec Makary and 88.89% for the German Don Carlos) suggests that the method generalizes beyond English-centric corpora. We tentatively attribute this to the models’ multilingual pretraining data and their zero-shot transfer capabilities.

A limitation of the model is its tendency to over-generate receivers rather than predicting a single addressee. In most such cases, it still identifies at least one correct recipient that agrees with the human annotations. This limitation is reflected in the 9.66-percentage-point gap between partial and exact matches for Llama3.3-70B, which suggests a hidden precision–recall tradeoff in automatic addressee detection.
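The two agreement measures can be made concrete with a short sketch. It assumes that receiver lists are compared as sets of normalized character names, so order and duplicates are ignored: exact match requires identical sets, and partial match requires any overlap between predicted and annotated receivers. Over-generated lists that contain the correct addressee therefore count as partial but not exact matches, which is what produces the gap discussed above.

```python
def exact_match(pred, gold):
    """True if predicted and annotated receivers are the same set."""
    return set(pred) == set(gold)


def partial_match(pred, gold):
    """True if predicted and annotated receivers share at least one name."""
    return bool(set(pred) & set(gold))


def match_rates(pairs):
    """Exact and partial match rates over (predicted, gold) receiver lists."""
    if not pairs:
        raise ValueError("need at least one (predicted, gold) pair")
    n = len(pairs)
    exact = sum(exact_match(p, g) for p, g in pairs) / n
    partial = sum(partial_match(p, g) for p, g in pairs) / n
    return exact, partial


pairs = [
    (["A"], ["A"]),        # exact (and partial)
    (["A", "B"], ["A"]),   # over-generation: partial only
    (["ALL"], ["A"]),      # collective label: no match
    (["B"], ["B", "C"]),   # missing receiver: partial only
]
print(match_rates(pairs))  # (0.25, 0.75)
```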

We categorized the main error types as follows:

- (i) Over-broad collective receivers.

The model predicts generic group designations such as all or audience instead of the intended individual addressee. For example, in Act 5, Scene 9 of Don Carlos, the König’s utterance “Gib diesen Toten mir heraus. Ich muß / Ihn wiederhaben” (eng. Give me back this dead man. I must have him back) is directed specifically at Domingo, as annotated by both human annotators, but the model incorrectly assigned all as the receiver. A related and frequent issue is over-prediction: the model often includes several unintended recipients, inflating the receiver list beyond what is warranted. This occurs, for example, in the second scene of Dantons Tod.

- (ii) Missing receivers.

The reverse also occurs: in some instances, the model assigned fewer addressees than the human annotators did. An example is Scene 16 of Act 1 of W małym domku, where Doktór introduces Jurkiewicz by saying “A! pan inżynier!… Pan inżynier Jurkiewicz, mój lokator” (eng. Ah! the engineer!… Engineer Jurkiewicz, my tenant). The human annotators identify this line as directed to both JURKIEWICZ and SĘDZIA (eng. judge), but the model predicts only JURKIEWICZ, missing the fact that the introduction is also meant for the judge.

- (iii) Context-dependent misassignments.

In some cases, the model fails to incorporate the preceding dialogue context when determining addressees, producing assignments that contradict the established conversational flow or the character relationships within the scene. For example, in the first scene of Hamlet, the model predicted all in both instances where the receiver was, in fact, the Ghost, which does not speak but is present in the scene.

- (iv) Self-referential confusion.

The model incorrectly assigns utterances to the speaker rather than to the intended external addressee, particularly in cases involving reported speech or character reflections. For example, in the execution scene of Danton, Sanson says “E numai pentru păr ăsta” (eng. This one is only for the hair) while examining Danton before the execution. Both human annotators identify this line as directed toward DANTON, but the model erroneously assigns SANSON as the receiver, treating the executioner’s commentary as self-directed.

These error patterns suggest potential avenues for model calibration, including the implementation of list-size penalties to discourage over-broad collective assignments and re-ranking mechanisms to better incorporate contextual dialogue cues.
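As an illustration of the first idea, a list-size penalty could be applied when re-ranking candidate receiver lists. The sketch is entirely hypothetical: it assumes that each candidate comes with a model score (for example, a log-probability), subtracts a penalty for every receiver beyond the first and for each collective label, and keeps the best-scoring list. The penalty weights are arbitrary and would need tuning on annotated data.

```python
COLLECTIVE_LABELS = {"all", "audience"}  # assumed collective designations


def penalized_score(receivers, score, size_penalty=0.5, collective_penalty=1.0):
    """Model score minus penalties for long lists and collective labels."""
    extra = max(len(receivers) - 1, 0)
    n_collective = sum(r.lower() in COLLECTIVE_LABELS for r in receivers)
    return score - size_penalty * extra - collective_penalty * n_collective


def rerank(candidates, **penalties):
    """Pick the (receivers, score) candidate with the best penalized score."""
    return max(candidates, key=lambda c: penalized_score(c[0], c[1], **penalties))


candidates = [
    (["all"], -1.0),      # highest raw score, but a collective label
    (["A"], -1.4),
    (["A", "B"], -1.2),
]
print(rerank(candidates)[0])  # ['A']
```

Setting both penalties to zero recovers plain argmax selection, which here would return the collective label all.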

Although our LLM-based approach improves scalability and contextual understanding in addressee detection, it is not immune to potential biases. Models trained primarily on large corpora dominated by English or other widely represented languages may underperform on underrepresented languages (e.g., Romanian, Polish). Future studies could address this limitation by leveraging larger and more diverse corpora.

Our network visualizations represent hierarchical relationships and influence patterns through edge weighting, providing empirical support for literary analyses of character relationships.

These interactive network visualizations will form the core of a digital platform currently under development, where users will be able to explore character networks dynamically by adjusting visualization parameters (edge thresholds, centrality metrics). The platform aims to bridge quantitative network analysis and close reading by grounding the visualizations in the text while enabling macroscopic pattern recognition.
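An edge-threshold parameter of this kind amounts to filtering word-weighted edges before rendering. A minimal sketch, assuming hypothetical parameter names and a linear mapping from word counts to line widths relative to the heaviest remaining edge:

```python
def visible_edges(weights, min_words=0, min_width=1.0, max_width=5.0):
    """Drop edges below a word-count threshold and map the rest to widths.

    Widths scale linearly with each weight relative to the heaviest kept edge.
    """
    kept = {edge: w for edge, w in weights.items() if w >= min_words}
    if not kept:
        return {}
    heaviest = max(kept.values())
    if heaviest == 0:
        return {edge: min_width for edge in kept}  # avoid division by zero
    return {
        edge: min_width + (max_width - min_width) * w / heaviest
        for edge, w in kept.items()
    }


weights = {("A", "B"): 100, ("B", "A"): 10, ("A", "C"): 50}  # toy counts
print(visible_edges(weights, min_words=20))
# {('A', 'B'): 5.0, ('A', 'C'): 3.0}
```

A logarithmic mapping may be preferable for scenes where one exchange dwarfs all others, since linear scaling would then render most edges at nearly minimum width.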

Limitations

The moderate agreement between annotators shows that it is not always possible to determine definitively to whom an utterance is addressed, which also limits the accuracy that an LLM can be expected to achieve. However, the main goal of capturing a general view of the interactions in a play is not affected by differing interpretations of individual utterances.

In this study, the focus was on showing the feasibility of using LLMs for building the interaction networks. Another important aspect, not covered here, is the interpretability of the choices made by the models. Although not intrinsically interpretable, modern LLMs can be prompted to “reason” before answering, even though this reasoning does not always reflect a model’s beliefs [57].

In addition, because of the limited scope of this study, we cannot determine whether the observed differences between scenes can be attributed to the different languages, the complexity of each scene, or potential pretraining biases of the LLMs, as these plays are well known and multiple references to them exist on the Internet. Since plays are culturally and historically situated texts, interpretations may be skewed by the models’ reliance on web-based corpora. Such biases may influence how characters and relationships are represented, particularly across different languages and cultural contexts.

6. Conclusions

This research builds a pipeline that integrates computational methods with literary analysis, focusing on LLM-based addressee identification in tragic plays. Our approach extends conventional SNA approaches by representing directed speech acts, enabling a closer examination of power and social dynamics. The method’s ability to process texts in multiple languages, together with its accuracy against professional annotations in our preliminary dataset, suggests that it could support large-scale dramatic analysis. The pipeline achieves stable performance across four languages (English, German, Romanian, and Polish) without task-specific fine-tuning, underscoring the generalization capabilities of LLMs for low-resource literary NLP. For example, the model achieves an exact match rate of up to 95.31% on Polish texts, comparable to its performance on English texts.

Network visualizations created from our output provide both quantitative measures and intuitive representations of dramatic interactions, corroborating existing literary interpretations while revealing novel interaction patterns.

Our work opens several promising directions for future research. We plan to expand the corpus with more plays in the languages already covered in this study, as well as plays in other languages. The planned system will process raw play texts through our LLM pipeline to identify and visualize speaker–receiver relations, enabling researchers to study interaction patterns without manual annotation.

Author Contributions

Conceptualization, A.C.U., S.R., A.T. and M.D.; methodology, A.C.U., S.R. and M.D.; software, A.C.U. and S.R.; validation, A.C.U., S.R., L.-M.N. and M.D.; formal analysis, A.C.U. and S.R.; investigation, A.C.U., S.R., L.-M.N., O.O. and A.T.; resources, O.O., A.T. and M.D.; data curation, A.C.U., L.-M.N. and O.O.; writing—original draft preparation, A.C.U., S.R. and A.T.; writing—review and editing, A.C.U., S.R., A.T. and M.D.; visualization, A.C.U. and S.R.; supervision, A.T. and M.D.; project administration, A.T. and M.D.; funding acquisition, A.T. and M.D. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the EU’s NextGenerationEU instrument through the National Recovery and Resilience Plan of Romania – Pillar III-C9-I8, managed by the Ministry of Research, Innovation and Digitalization, within the project entitled Measuring Tragedy: Geographical Diffusion, Comparative Morphology, and Computational Analysis of European Tragic Form (METRA), contract no. 760249/28.12.2023, code CF 163/31.07.2023.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data used in this study can be found in the open-source DraCor platform at https://dracor.org/ (accessed on 14 August 2025). We made the code publicly available at https://github.com/upb-nlp/METRA (version v1.0.0, accessed on 14 August 2025).

Acknowledgments

The authors thank the METRA team for their support with annotation and conceptualization.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| LLM | Large Language Model |
| SNA | Social Network Analysis |
| NLP | Natural Language Processing |
| JSON | JavaScript Object Notation |

References

- Moretti, F. Network theory, plot analysis. New Left Rev. 2011, 68, 1–31.

- Moretti, F.; Terian, A. Measuring Tragedy—And Drawing Its Borders. Rev. Transilv. 2024, 5, 1–4.

- Trilcke, P. Social network analysis (SNA) als Methode Einer Textempirischen Literaturwissenschaft. In Empirie in der Literaturwissenschaft; Brill Mentis: Leiden, The Netherlands, 2013; pp. 201–247.

- Trilcke, P.; Fischer, F.; Kampkaspar, D. Digital network analysis of dramatic texts. In Proceedings of the DH2015: Global Digital Humanities, Sydney, Australia, 29 June–3 July 2015.

- Fischer, F.; Skorinkin, D. Social network analysis in Russian literary studies. In The Palgrave Handbook of Digital Russia Studies; Springer Nature: Berlin/Heidelberg, Germany, 2021; p. 517.

- Elson, D.; Dames, N.; McKeown, K. Extracting social networks from literary fiction. In Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics, Uppsala, Sweden, 11–16 July 2010; pp. 138–147.

- Fischer, F.; Börner, I.; Göbel, M.; Hechtl, A.; Kittel, C.; Milling, C.; Trilcke, P. Programmable Corpora: Introducing DraCor, an Infrastructure for the Research on European Drama. In Proceedings of the DH2019: “Complexities”, Utrecht, The Netherlands, 9–12 July 2019.

- Austin, J.L. How to Do Things with Words; Harvard University Press: Cambridge, MA, USA, 1975.

- Searle, J.R. Speech Acts: An Essay in the Philosophy of Language; Cambridge University Press: Cambridge, UK, 1969.

- Grice, H.P. Logic and conversation. Syntax Semant. 1975, 3, 43–58.

- Sacks, H.; Schegloff, E.A.; Jefferson, G. A simplest systematics for the organization of turn-taking for conversation. Language 1974, 50, 696–735.

- Schechner, R. Performance Theory; Routledge: Abingdon, UK, 2003.

- Fischer-Lichte, E.; Jain, S. The Transformative Power of Performance: A New Aesthetics; Routledge: Abingdon, UK, 2008.

- Lehmann, H.T.; Jürs-Munby, K. Postdramatic Theatre; Routledge: Abingdon, UK, 2006.

- Carlson, M. Theatre: A Very Short Introduction; Oxford University Press: Oxford, UK, 2014.

- Bakhtin, M. The Dialogic Imagination: Four Essays; University of Texas Press: Austin, TX, USA, 2010; Volume 1.

- Moretti, F. Simulating dramatic networks: Morphology, history, literary study. J. World Lit. 2020, 6, 24–44.

- Moretti, F. Network Semantics. A Beginning. Rev. Transilv. 2024, 5, 5–11.

- Labatut, V.; Bost, X. Extraction and analysis of fictional character networks: A survey. ACM Comput. Surv. 2019, 52, 1–40.

- Elsner, M.; Charniak, E. You talking to me? A corpus and algorithm for conversation disentanglement. In Proceedings of the ACL, Columbus, OH, USA, 15–20 June 2008; pp. 834–842.

- Joty, S.; Carenini, G.; Lin, C.Y. Topic Segmentation and Labeling in Asynchronous Conversations. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 521–531.

- Zhang, Z.; Li, J.; Zhu, P.; Zhao, H.; Liu, G. Modeling Multi-turn Conversation with Deep Utterance Aggregation. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 3740–3752.

- Ouchi, H.; Tsuboi, Y. Addressee and Response Selection for Multi-Party Conversation. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 2133–2143.

- Kummerfeld, J.K.; Gouravajhala, S.R.; Peper, J.; Athreya, V.; Gunasekara, C.; Ganhotra, J.; Patel, S.S.; Polymenakos, L.; Lasecki, W.S. Analyzing assumptions in conversation disentanglement research through the lens of a new dataset and model. arXiv 2018, arXiv:1810.11118.

- Mehri, S.; Carenini, G. Chat disentanglement: Identifying semantic reply relationships with random forests and recurrent neural networks. In Proceedings of the Eighth International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Taipei, Taiwan, 27 November–1 December 2017; pp. 615–623.

- Feng, D.; Shaw, E.; Kim, J.; Hovy, E. Learning to detect conversation focus of threaded discussions. In Proceedings of the Human Language Technology Conference of the NAACL, Main Conference, New York, NY, USA, 4–9 June 2006; pp. 208–215.

- Wang, L.; Oard, D.W. Context-based message expansion for disentanglement of interleaved text conversations. In Proceedings of the Human Language Technologies: The 2009 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Boulder, CO, USA, 31 May–5 June 2009; pp. 200–208.

- Tan, M.; Wang, D.; Gao, Y.; Wang, H.; Potdar, S.; Guo, X.; Chang, S.; Yu, M. Context-aware conversation thread detection in multi-party chat. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 6456–6461.

- Akhtiamov, O.; Siegert, I.; Karpov, A.; Minker, W. Using Complexity-Identical Human- and Machine-Directed Utterances to Investigate Addressee Detection for Spoken Dialogue Systems. Sensors 2020, 20, 2740.

- Katiyar, A.; Cardie, C. Investigating LSTMs for Joint Extraction of Opinion Entities and Relations. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 919–929.

- Zhao, R.; Sano, S.; Ouchi, H.; Tsuboi, Y. Automatically Identifying Addressees in Multi-party Conversations. Comput. Linguist. 2020, 46, 825–863.

- Masala, M.; Ruseti, S.; Rebedea, T.; Dascalu, M.; Gutu-Robu, G.; Trausan-Matu, S. Identifying the Structure of CSCL Conversations Using String Kernels. Mathematics 2021, 9, 3330.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Zhang, S.; Roller, S.; Goyal, N.; Artetxe, M.; Chen, M.; Chen, S.; Dewan, C.; Diab, M.; Li, X.; Lin, X.V.; et al. OPT: Open Pre-trained Transformer Language Models. arXiv 2022, arXiv:2205.01068.

- Chang, K.K.; Cramer, M.; Soni, S.; Bamman, D. Speak, Memory: An Archaeology of Books Known to ChatGPT/GPT-4. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 12747–12763.

- Gilardi, F.; Alizadeh, M.; Kubli, M. ChatGPT Outperforms Crowd-Workers for Text-Annotation Tasks. Proc. Natl. Acad. Sci. USA 2023, 120, e2305016120.

- Qin, C.; Zhang, A.; Zhang, Z.; Chen, J.; Yasunaga, M.; Yang, D. Is ChatGPT a General-Purpose Natural Language Processing Task Solver? In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 1339–1384.

- Kocmi, T.; Federmann, C. Large Language Models Are State-of-the-Art Evaluators of Translation Quality. In Proceedings of the 24th Annual Conference of the European Association for Machine Translation, Tampere, Finland, 12–15 June 2023; pp. 193–203.

- Wei, J.; Tay, Y.; Bommasani, R.; Raffel, C.; Zoph, B.; Borgeaud, S.; Yogatama, D.; Bosma, M.; Zhou, D.; Metzler, D.; et al. Emergent Abilities of Large Language Models. arXiv 2022, arXiv:2206.07682.

- Brahman, F.; Zaiane, O. Learning to Rationalize for Nonmonotonic Reasoning with Distant Supervision. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021; Volume 35, pp. 12592–12601.

- Sims, M. Literary Pattern Recognition: Modernism between Close Reading and Machine Learning. PMLA 2019, 134, 48–62.

- Penzo, N.; Sajedinia, M.; Lepri, B.; Tonelli, S.; Guerini, M. Do LLMs suffer from Multi-Party Hangover? A Diagnostic Approach to Addressee Recognition and Response Selection in Conversations. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 11210–11233.

- Inoue, K.; Lala, D.; Elmers, M.; Ochi, K.; Kawahara, T. An LLM Benchmark for Addressee Recognition in Multi-modal Multi-party Dialogue. In Proceedings of the 15th International Workshop on Spoken Dialogue Systems Technology, Bilbao, Spain, 27–30 May 2025; pp. 330–334.

- Radwan, A.; Amarneh, M.; Alawneh, H.; Ashqar, H.I.; AlSobeh, A.; Magableh, A.A.A.R. Predictive analytics in mental health leveraging LLM embeddings and machine learning models for social media analysis. Int. J. Web Serv. Res. (IJWSR) 2024, 21, 1–22.

- Alshattnawi, S.; Shatnawi, A.; AlSobeh, A.M.; Magableh, A.A. Beyond Word-Based Model Embeddings: Contextualized Representations for Enhanced Social Media Spam Detection. Appl. Sci. 2024, 14, 2254.

- Al-Ahmad, B.; Alsobeh, A.; Meqdadi, O.; Shaikh, N. A Student-Centric Evaluation Survey to Explore the Impact of LLMs on UML Modeling. Information 2025, 16, 565.

- Yan, Y.; Zhao, H.; Zhu, S.; Liu, H.; Zhang, Z.; Jia, Y. Dialogue-Based Multi-Dimensional Relationship Extraction from Novels. arXiv 2025, arXiv:2507.04852.

- Qamar, A.; Tong, J.; Huang, R. Do LLMs Understand Dialogues? A Case Study on Dialogue Acts. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; pp. 26219–26237.

- Dey, S.; Desarkar, M.S.; Ekbal, A.; Srijith, P. DialoGen: Generalized Long-Range Context Representation for Dialogue Systems. arXiv 2022, arXiv:2210.06282.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A.; Al-Dahle, A.; Letman, A.; Mathur, A.; Schelten, A.; Vaughan, A.; et al. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

- Team, G.; Kamath, A.; Ferret, J.; Pathak, S.; Vieillard, N.; Merhej, R.; Perrin, S.; Matejovicova, T.; Ramé, A.; Rivière, M.; et al. Gemma 3 technical report. arXiv 2025, arXiv:2503.19786.

- Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F.; et al. Qwen technical report. arXiv 2023, arXiv:2309.16609.

- Hsu, H.; Lachenbruch, P.A. Paired t test. In Wiley StatsRef: Statistics Reference Online; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2014.

- Ståhle, L.; Wold, S. Analysis of variance (ANOVA). Chemom. Intell. Lab. Syst. 1989, 6, 259–272.

- Tukey, J.W. Comparing individual means in the analysis of variance. Biometrics 1949, 5, 99–114.

- Abdi, H.; Williams, L.J. Tukey’s honestly significant difference (HSD) test. In Encyclopedia of Research Design; Sage: Thousand Oaks, CA, USA, 2010; Volume 3, pp. 1–5.

- Chen, Y.; Benton, J.; Radhakrishnan, A.; Uesato, J.; Denison, C.; Schulman, J.; Somani, A.; Hase, P.; Wagner, M.; Roger, F.; et al. Reasoning Models Don’t Always Say What They Think. arXiv 2025, arXiv:2505.05410.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).