Abstract

Face morphing attacks have become a serious threat to Face Recognition Systems (FRSs). De-morphing-based morphing attack detection, which uses a suspect image and a live capture, has been proposed and studied, but the morphing parameters of the attacker’s morphing algorithm are unknown, which makes de-morphing methods difficult to apply. This paper proposes a robust face morphing attack detection (FMAD) pipeline that leverages deep learning de-morphing networks. Inspired by the different ways in which similarity scores (i.e., cosine similarities between feature vectors) vary for morphed and non-morphed images, the detection pipeline learns the variation patterns of similarity scores between the live capture and the de-morphed suspect (morphed or bona fide) images obtained with different de-morphing factors. An effective deep de-morphing network based on StyleGAN and the pSp (pixel2style2pixel) encoder was developed. The network generates de-morphed images from suspect and live images with multiple de-morphing factors; similarity scores are then calculated between feature vectors from the ArcFace network and classified by the detection network. Experiments on morphing datasets from the Color FERET, FRGCv2, and SYN-MAD databases, including landmark-based and deep learning attacks, demonstrate that the proposed method achieves high accuracy in detecting unseen morphing attacks across different databases. It attains an Equal Error Rate (EER) of less than 1–4% and a Bona Fide Presentation Classification Error Rate (BPCER) of approximately 11% at an Attack Presentation Classification Error Rate (APCER) of 0.1%, outperforming previous methods.

1. Introduction

Biometric methods are widely used to identify individuals based on unique biological and behavioral characteristics. One particular area where biometrics, especially Face Recognition Systems (FRSs), have gained considerable traction is international airport security. By leveraging biometric data stored in electronic Machine-Readable Travel Documents (eMRTDs) [1], an FRS enables authorities to compare the facial features captured in passports with real-time images of travelers. Despite the high accuracy of state-of-the-art FRSs in controlled scenarios, several studies have shown that FRSs are vulnerable to many image modification attacks. One of the most dangerous threats to FRSs is the face morphing attack (FMA), which seamlessly blends multiple face images into a new facial image containing the facial features of all contributing subjects. In the context of biometrics, face morphing poses a serious risk, as it enables the creation of synthetic identities that can bypass security measures. Producing high-quality, convincing morphed images requires removing artifacts and abnormal pixels so that the image is visually plausible enough to convince the officer.

An FMA should maximize both the probability that the human officer accepts the morphed image at the enrollment stage and the probability that the attacker is identified as the same person during the verification stage. In the enrollment stage, the attacker (criminal) finds an accomplice and morphs the accomplice’s facial image with his/her own; the manipulated image is then used to apply for a passport or another form of electronic travel document. Once the electronic travel document containing the morphed image is issued to the accomplice, the criminal can use it to pass border controls, whether they are automated or manually operated. Such an attack exploiting the vulnerability of FRSs was first described in [2]. Numerous face morphing attack methods [3,4,5,6,7] have since been proposed, further highlighting the vulnerability of FRSs.

To counter the risk of such attacks, many face morphing attack detection (FMAD) strategies have been proposed to identify instances where facial images have been morphed. Two prominent approaches in this domain are single-image-based FMAD (S-FMAD) [8,9] and differential-image-based FMAD (D-FMAD) [10,11,12,13,14]. While S-FMAD evaluates individual images to determine whether they have undergone morphing, D-FMAD contrasts the suspect image with a trusted probe image to indicate cases of morphing. Among D-FMAD methods, de-morphing has emerged as a promising approach. De-morphing was first introduced by Ferrara et al. [15]. In this differential FMAD approach, the trusted live capture (TLC) is utilized to revert (de-morph) a potentially morphed image. Essentially, the TLC is subtracted from the suspect image with a predefined weight (de-morphing factor). The resulting de-morphed face image is then compared with the TLC using an FRS. Thus, the face recognition score between the TLC and the de-morphed image serves as the final FMAD score. If this comparison yields a non-match, it indicates that a morphing attack has been detected; otherwise, the authentication attempt is considered bona fide. Later, Ortega-Delcampo et al. [16] introduced autoencoders to restore accomplices’ facial images for detecting morphing. Banerjee et al. [17] restored the facial images of two contributors from a single morphed image. Shiqerukaj et al. [18] combined de-morphing with Deep Face Representation. Peng et al. [19] utilized a symmetric dual network and restoration losses for accomplice image restoration. Min Long et al. [20] proposed a diffusion-based method, focusing on accomplice image reconstruction.

Though some previous methods give reasonable performance, their practical utility in real-world scenarios remains limited. Environmental factors such as varying lighting conditions, facial expressions, and image resolutions can significantly impact the detection accuracy of these methods. Specifically in de-morphing methods, the morphing factor, which represents the weight of the attacker’s contribution, is an unknown variable that significantly influences performance. Due to this limitation, Ferrara et al. [15] proposed a practical range of morphing factors for successful enrollment with a landmark-based morphing method and tested the same range of blending weights for landmark-based de-morphing. The experimental results showed the effectiveness of the de-morphing method itself, but its performance varies with the combination of the two blending parameters (morphing and de-morphing). Estimating the morphing factor from the suspect image (morphed or bona fide) and the TLC is a mathematically ill-posed problem. Furthermore, recent deep learning-based methods use diverse blending parameters, so estimating the morphing contribution parameters has become difficult and impractical.

With the primary goal of classifying whether the suspect image is morphed or bona fide, rather than reconstructing the accomplice’s face from the morphed image, we aim to reduce the reliance of existing de-morphing methods on prior knowledge of face morphing generation and to improve the efficiency of detecting morphing attacks. To achieve this, we train a neural network to recognize the similarity score patterns of de-morphed images, considering different contribution factors associated with enrollment. As we will show in Section 5, the similarity score between the de-morphed image and the live capture varies with the de-morphing factor, and this variation follows different patterns for morphed and bona fide suspect images. Inspired by these differences, the detection pipeline learns the variation patterns of similarity scores between the live capture and de-morphed face images obtained with different de-morphing factors. An effective deep de-morphing network based on StyleGAN and the pSp (pixel2style2pixel) encoder [21] was developed. The network generates de-morphed images from suspect and live images with multiple de-morphing factors; similarity scores are then calculated between feature vectors from the ArcFace network and classified by the detection network.

The main contributions are as follows:

- We propose a D-FMAD method using a neural network that learns the patterns of similarity scores between TLC images and de-morphed images generated with different contribution factors.

- We propose a simple but effective and efficient deep learning face morphing and de-morphing method utilizing pixel2style2pixel [21], which does not need further fine-tuning. We used this de-morphing method for our pipeline of similarity pattern-based D-FMAD.

- We conduct experiments and analysis on the created FMAD databases and the SYN-MAD dataset. The results demonstrate that the proposed similarity pattern-based detection method outperforms existing FMAD methods in detecting unseen morphing attacks across different datasets.

The rest of this paper is organized as follows: Related works are reviewed in Section 2. Section 3 provides a detailed description of the proposed FMAD. The FMAD dataset is described in Section 4. The experiment and analysis are presented in Section 5. Finally, some conclusions are drawn in Section 6.

2. Related Works

2.1. Face Morphing Attack Methods

The face morphing process is defined as a special effect that blends multiple facial images to generate a single image containing identity features from multiple contributors. In recent years, obtaining a morphed image has become relatively simple and cost-effective for the general public due to advances in, and the public availability of, computer vision and image processing tools. There are various open-source solutions, alongside both free and commercial tools in the form of downloadable applications and online services. These methods are divided into two main approaches: landmark-based and deep learning-based.

2.1.1. Landmark-Based Morph Generation

The first and still most common creation method for generating morphs is the landmark-based approach [15], where morphing is accomplished by blending images according to corresponding landmark points within the facial region, such as the nose, eyes, and mouth areas. These reference points can be obtained through either manual annotation or automatic identification based on facial landmark detection algorithms such as Dlib [22]. Once the landmarks are identified on both faces involved in the morphing, they are used to deform or warp the facial features. During the morphing process, pixel replacement may result in misaligned pixels, causing noise and artifacts and yielding unrealistic and easily detectable images. To address this, post-processing steps like image smoothing, sharpening, edge correction, histogram equalization, and manual retouching are typically performed to minimize artifacts [23,24,25]. Common open-source tools reliant on landmarks include GIMP/GAP [26] and OpenCV [27]. Additionally, there are various commercial options, such as Face Fusion, FantaMorph, and FaceMorpher, that facilitate the creation of extensive morphed image sets.

2.1.2. Deep Learning-Based Morph Generation

Recent advancements in deep learning have opened new avenues for morph generation by interpolating two facial images in the latent space of Generative Adversarial Networks (GANs). Generally, GAN-based approaches, such as the MorGAN architecture [4], synthesize morphed images by sampling two facial images within the latent space of the deep learning network, employing a generator comprising encoders, decoders, and a discriminator. Venkatesh et al. [6] employed the StyleGAN architecture [28] to enhance the morph generation process by embedding images in the intermediate latent space and increasing the resolution of the generated images so that they meet the ICAO standard, which requires a minimum inter-eye distance of 90 pixels. Additionally, Zhang et al. [5] proposed the use of identity priors to facilitate high-quality morphed face generation (MIPGAN-I and MIPGAN-II), increasing the threat that GAN-based morphs pose to FRSs. Recently, Damer et al. [7] presented a diffusion-based method for face morphing, which shows high quality in morphing facial images compared with MIPGAN-I and II.

Recently, Zhang et al. [29] developed vision-language-model-based interactive morphing attack methods.

2.2. Face Morphing Attack Detection

Face morphing attack detection has been extensively studied in the literature and can be categorized into two types: single-image FMAD and differential FMAD.

2.2.1. Single-Image-Based FMAD (S-FMAD)

The objective of S-FMAD is to identify face morphing attacks using a single subject’s image as input to the algorithm. The FMAD system receives the image to be analyzed and extracts features to determine whether it is a morph or a genuine image. S-FMAD can be applied during both enrollment and verification processes. In the S-FMAD literature, various approaches have been proposed for detecting morph attacks. These include texture-based methods utilizing LBP, LPQ, BSIF, SIFT, and SURF features [30,31], as well as techniques aimed at extracting noise from images [32,33]. Some approaches detect morphing by assessing the quality differences between the original (bona fide) image and the resulting morphed image [34,35]. Deep learning methods [36,37] are increasingly utilized, showing superior performance compared with texture-based approaches. To detect morphing reliably, several approaches have been combined in hybrid form [38,39]. Additionally, a competition was organized based on Privacy-Aware Synthetic Training Data (SYN-MAD) [8], and other work highlights efforts to build a robust and generalized S-FMAD with a baseline solution [9].

S-FMAD can be used for both photo enrollment and on-site control, but it is very challenging because it must adapt to variations in image quality, diverse sensor types (cameras), various morph generation tools, and different print–scan processes.

2.2.2. Differential-Image-Based FMAD (D-FMAD)

D-FMAD, on the other hand, leverages a TLC alongside the suspected morph image. While the additional information offered by the TLC provides advantages, it is important to note that TLCs are commonly acquired in semi-supervised environments and are influenced by various external factors. D-FMAD can be classified into two primary approaches: one compares the biometric characteristics of the two facial images, while the other attempts to reverse the morphing process.

The idea of feature-based D-FMAD is to extract feature vectors from a suspect image and a TLC and then compare the extracted vectors to determine whether a suspicious facial image has been morphed. A typical technique is Deep Face Representations proposed by Scherhag et al. [13]. Muhammad Hamza et al. [14] proposed a method to detect morph attacks on datasets containing Morph-2 and Morph-3 images. Baaria Chaudhary et al. [12] employed a wavelet-based Siamese network to identify morph artifacts in the wavelet domain. Le Qin et al. [11] presented methods for detecting and locating face morphing attacks by utilizing feature-wise supervision for both single-image-based and differential-based approaches. Additionally, Raghavendra Ramachandra et al. [10] introduced a multispectral image captured as a trusted capture to detect morphing attacks.

Face de-morphing techniques reverse the morphing process, reconstructing the original component images employed in creating the morphed image. Effort in this domain was pioneered by Ferrara et al. in the landmark-based method [15], which, however, relies on prior knowledge of the face morphing generation. In contrast, Ortega-Delcampo et al. [16] introduced a convolutional neural network (CNN)-based method for detecting face morphing attacks. This approach reconstructs facial images of accomplices without requiring prior knowledge of morphed facial images. Nevertheless, the simplicity of the network architecture results in generated images of insufficient quality. Banerjee et al. [17] managed to restore the facial images of the two contributors from a single morphed facial image using a generator, three discriminators, and a series of adversarial losses. However, the performance significantly declines compared with de-morphing methods with a live image. Peng et al. [19] utilized a symmetric dual network and two layers of restoration losses to restore the facial image of the accomplice. More recently, Min Long et al. [20] proposed a diffusion-based method to encode facial images into semantic and stochastic latent spaces for face de-morphing. Both of these methods focus on reconstructing the facial image of the accomplice rather than detecting morphing attacks. Shiqerukaj et al. [18] combined two differential morphing attack detection methods, i.e., De-morphing [15] and Deep Face Representation [13]. This approach adopts de-morphing with a fixed de-morphing factor, potentially reducing its effectiveness when the attacker employs different priors.

Face Recognition System (FRS) performance is crucial in D-FMAD performance. Different varieties of FRS are available, including commercial-off-the-shelf (COTS) [40,41,42] and deep learning-based open-source FRS [43,44,45]. The accuracy and reliability of FRS enhance the effectiveness of methods, ensuring robust detection of morphing attacks in biometric security systems. The effectiveness of the deep face recognition network ArcFace in D-FMAD has been shown in many previous works [13,18]. Therefore, we have integrated ArcFace into our decision network.

3. Proposed FMAD Method

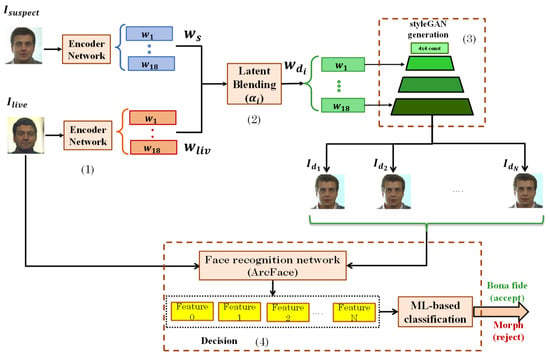

Figure 1 illustrates the pipeline of the proposed face morphing attack detection method. The pipeline consists of four main stages: a (face feature) encoder network, a latent space blending block, a face decoder (StyleGAN generator), and an ML-based decision stage with a similarity score evaluator. Latent vectors extracted from the suspect (morphed or bona fide) image and the TLC through the encoder network are blended in the StyleGAN W+ latent space at multiple de-morphing factors. The resulting latent codes are fed into the StyleGAN generator to produce the corresponding de-morphed images. These de-morphed images are compared with the TLC image using ArcFace features, and the resulting similarity scores are passed to a machine learning-based classifier that distinguishes between bona fide attempts and morph attacks.

Figure 1.

Proposed morphing attack detection pipeline. There are four main stages: (1) encoder network, (2) latent space blending block, (3) StyleGAN generator, and (4) morphing detection discriminator network.

It is noteworthy that we do not propose a new encoder, nor do we retrain StyleGAN. Instead, we leverage the strengths of existing models to carry out multiple de-morphing processes, thereby enabling the detection of morphed images. In this study, we employ the pSp encoder [21] with a StyleGAN-based decoder and ArcFace face similarity to benchmark the performance of the proposed method. However, the proposed similarity pattern-based method can be applied to other similar implementations.

Since Generative Adversarial Networks (GANs) were introduced in 2014, there have been a lot of improvements proposed that made it a state-of-the-art method to generate synthetic images including synthetic human faces. However, there was not much focus on control over the generator part of GAN. Face morphing and de-morphing processes require the controllability of features of human faces, such as pose, hair color, and eye color. StyleGAN provides excellent hierarchical control, enabling the development of numerous reconstruction methods [21,46,47] and deep learning face morphing [5,6].

In the StyleGAN model, a latent code $z$ in the input latent space $\mathcal{Z}$ is first transformed into $w$ in the intermediate latent space $\mathcal{W}$ using a nonlinear mapping network $f: \mathcal{Z} \rightarrow \mathcal{W}$, implemented as an 8-layer MLP. The dimensionality of both spaces is set to 512. Learned affine transformations then convert $w$ into styles $y = (y_s, y_b)$, which control the adaptive instance normalization (AdaIN) operations after each convolution layer of the synthesis network $G$. Additionally, the generator introduces explicit noise inputs to generate stochastic detail. These noise inputs are single-channel images of uncorrelated Gaussian noise, fed to each layer of the synthesis network and added to the output of the corresponding convolution through learned per-feature scaling factors.
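The AdaIN operation mentioned above can be illustrated with a minimal NumPy sketch. It follows the standard formulation from the StyleGAN literature, in which each feature map is normalized by its own mean and standard deviation and then scaled and shifted by the style. The function name `adain`, the array shapes, and the toy values are assumptions made for illustration only and are not taken from the pipeline described in this paper.

```python
import numpy as np


def adain(x, y_scale, y_bias, eps=1e-8):
    """Adaptive instance normalization for one sample.

    x:       feature maps of shape (C, H, W)
    y_scale: per-channel style scale of shape (C,)
    y_bias:  per-channel style bias of shape (C,)
    """
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = x.std(axis=(1, 2), keepdims=True)
    normalized = (x - mean) / (std + eps)  # zero mean, unit variance per channel
    return y_scale[:, None, None] * normalized + y_bias[:, None, None]


# Toy example: three channels of 4x4 features (illustrative values only).
rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4, 4))
y_scale = np.array([1.0, 2.0, 0.5])
y_bias = np.array([0.0, 1.0, -1.0])
out = adain(x, y_scale, y_bias)
```

After the operation, the per-channel statistics of `out` are dictated by the style rather than by the input features, which is what gives the style vectors their control over the generated image.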

The latent vectors are linearly combined to achieve image-level morphing. This approach is motivated by the well-established properties of StyleGAN’s latent space, in which linear interpolation between latent codes often results in smooth and semantically meaningful transitions in the image space. Specifically, given two latent vectors $w_1$ and $w_2$, a weighted combination

$$w = \alpha w_1 + (1 - \alpha) w_2 \tag{1}$$

produces an intermediate latent code that corresponds to a smooth morphing of the images generated from $w_1$ and $w_2$. While a rigorous mathematical derivation of the resulting image is generally intractable due to the inherent nonlinearity of the generator, extensive empirical evidence in the StyleGAN literature supports the validity and effectiveness of linear operations in $\mathcal{W}$-space.

Since the morphing and de-morphing processes operate on existing (real) face images rather than simply generating artificial face images, we need to obtain the latent feature vectors of the face images. GAN inversion aims to invert a given image back into the latent space of a pre-trained GAN model so that the image can be faithfully reconstructed from the inverted code by the generator [48]. We compared previous StyleGAN inversion methods, focusing on reconstruction performance after latent space operations for morphing and de-morphing applications, and found that the pSp encoder [21] performs best for morphing and de-morphing purposes. The pSp (pixel2style2pixel) framework builds on the power of a pre-trained StyleGAN and its W+ latent space. Rather than using explicit noise input, this model learns the latent code relative to the average style vector $\bar{w}$. Adapting to the different levels of detail in StyleGAN, the pSp encoder $E$ extends the backbone with a feature pyramid and map2style networks, generating 18 style vectors. This encoder is able to match each input image to an encoding in the latent domain and shows strong representation ability.

3.1. Virtual Morphing Method

To facilitate explanation, a virtual morphing model based on StyleGAN is defined. The actual morphing method may be similar to or different from this model. Assume that a suspect image $I_{susp}$ is a morphed image $I_m$ resulting from a morphing process involving a criminal image $I_c$ and an accomplice image $I_a$:

$$I_{susp} = I_m = \mathcal{M}(I_c, I_a, \alpha) \tag{2}$$

where $\mathcal{M}$ represents the morph generation and the morphing factor $\alpha$ controls the contribution of the criminal.

The criminal face image $I_c$ and accomplice face image $I_a$ are first transformed into $w_c$ (latent code for the criminal) and $w_a$ (latent code for the accomplice) through an encoder network, respectively. The morphed latent vectors $w_m$ are then generated via the following blending procedure:

$$w_m = \alpha w_c + (1 - \alpha) w_a \tag{3}$$

The morphed latent code $w_m$ is fed into the StyleGAN generator along with $\bar{w}$, the average style vector of the pre-trained generator, to produce the corresponding morphed image $I_m$, as follows:

$$I_m = G(w_m + \bar{w}), \quad w_c = E(I_c), \quad w_a = E(I_a) \tag{4}$$

where $G$ and $E$ denote the StyleGAN generator and encoder, respectively.
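The latent blending step of this virtual morphing model can be sketched in a few lines of NumPy. The sketch assumes that the blend is a plain convex combination in which the morphing factor weights the criminal's latent code, and that each latent code consists of 18 style vectors of size 512, as produced by the pSp encoder. The names `morph_latents`, `w_c`, `w_a`, and `w_m` are chosen for this illustration, and random arrays stand in for real encoder outputs because the encoder and generator require pre-trained weights.

```python
import numpy as np

N_STYLES, STYLE_DIM = 18, 512  # pSp emits 18 style vectors of size 512


def morph_latents(w_c, w_a, alpha):
    """Blend criminal and accomplice latent codes; alpha weights the criminal."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * w_c + (1.0 - alpha) * w_a


# Random stand-ins for the encoded criminal and accomplice images.
rng = np.random.default_rng(0)
w_c = rng.standard_normal((N_STYLES, STYLE_DIM))
w_a = rng.standard_normal((N_STYLES, STYLE_DIM))

w_m = morph_latents(w_c, w_a, alpha=0.3)
# Decoding w_m (plus the average style vector) with a StyleGAN generator is
# omitted here because it depends on pre-trained model weights.
```

With `alpha=1.0` the blend returns the criminal's code unchanged and with `alpha=0.0` it returns the accomplice's, which matches the role of the morphing factor as the criminal's contribution.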

3.2. The Proposed De-Morphing Method

The proposed de-morphing network is also based on the pSp network, which is again specific to StyleGAN. Given a trusted live capture (TLC) $I_{tlc}$, an inverse morphing process can be applied to recover the accomplice’s facial image $I_a$. From (3), the de-morphed latent codes $w_d$ can be obtained with the de-morphing factor $\alpha_d$ as follows:

$$w_d = \frac{w_{susp} - \alpha_d\, w_{tlc}}{1 - \alpha_d}, \quad w_{susp} = E(I_{susp}), \quad w_{tlc} = E(I_{tlc}) \tag{5}$$

Even though we could use a more sophisticated formula for latent space de-morphing, and the morphing method could use a different contribution factor for each latent element, we found that this simple and basic formula performs very well in general for detecting morphing attacks.

Finally, a de-morphed image $I_d$ is generated from the de-morphed latent vectors through the StyleGAN generator, as follows:

$$I_d = \mathcal{D}(I_{susp}, I_{tlc}, \alpha_d) = G(w_d + \bar{w}) \tag{6}$$

where $\mathcal{D}$ represents the de-morphing process.
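A minimal sketch of latent-space de-morphing is given below. It assumes that de-morphing is the algebraic inverse of a linear latent blend, that is, a share of the TLC latent weighted by the de-morphing factor is removed from the suspect latent and the result is renormalized. The function `demorph_latents` and all variable names are hypothetical, and the TLC latent is idealized as identical to the criminal's latent, which would not hold exactly for a real live capture.

```python
import numpy as np


def demorph_latents(w_susp, w_tlc, alpha_d):
    """Remove an alpha_d-weighted share of the TLC latent from the suspect latent."""
    if not 0.0 <= alpha_d < 1.0:
        raise ValueError("alpha_d must lie in [0, 1)")
    return (w_susp - alpha_d * w_tlc) / (1.0 - alpha_d)


rng = np.random.default_rng(1)
w_c = rng.standard_normal((18, 512))  # criminal latent (idealized TLC latent)
w_a = rng.standard_normal((18, 512))  # accomplice latent
alpha = 0.4
w_m = alpha * w_c + (1.0 - alpha) * w_a  # linear latent morph of the two codes

recovered = demorph_latents(w_m, w_c, alpha_d=alpha)  # matched factor
mismatched = demorph_latents(w_m, w_c, alpha_d=0.2)   # wrong factor

err_matched = float(np.abs(recovered - w_a).max())
err_mismatched = float(np.abs(mismatched - w_a).max())
```

In this idealized setting the accomplice's latent is recovered almost exactly when the de-morphing factor matches the morphing factor, while a mismatched factor leaves a residual of the criminal's code. This sensitivity to an unknown factor is the reason for sweeping several de-morphing factors instead of relying on a single one.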

In practical scenarios, the morphing factor (i.e., the contribution weight of the criminal in the morphed image) is not available. Experiments in Section 4 demonstrate that even if an associated de-morphing factor could be approximated through practical assumptions, reconstructing the facial features of the accomplice remains notably challenging because of post-processing. Ferrara et al. [15] also observed similar phenomena in the landmark-based de-morphing method. However, it may be possible to detect morphed images by performing de-morphing across various values of the de-morphing factor $\alpha_d$.

3.3. Morphing Detection Network with Similarity Scores

We apply the above de-morphing process to the same $I_{susp}$ and $I_{tlc}$ at N de-morphing factors $\alpha_d^{(i)}$, $i = 1, \dots, N$, within a fixed range and obtain multiple de-morphed images $I_d^{(i)}$. A face recognition network (ArcFace [43] in this work) is employed to extract face-related features of size 512 from the TLC and from the set of N de-morphed images: $f_d^{(i)}$ is the feature of the $i$-th de-morphed image for $i = 1, \dots, N$, and $f_{tlc}$ is the TLC’s feature. These features are combined to produce N similarity scores between $f_{tlc}$ and $f_d^{(i)}$. These scores are then fed into a fully connected MLP classifier with three layers: an input layer of size N, a hidden layer, and a single output node with sigmoid activation. The network is trained using the Adam optimizer with a learning rate of 0.001 for 10 epochs, with a 7:3 training-to-test split.

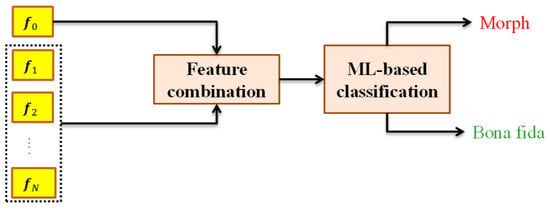

The parameter N denotes the number of de-morphing alpha factors. While the classification network employed is a relatively small MLP, increasing N proportionally increases the overall computational cost of the de-morphing process. We systematically evaluated N in the range of 2 to 10, and the results demonstrated that detection performance saturates beyond N = 5, with no statistically meaningful improvement thereafter. Accordingly, we selected N = 5 as a trade-off between computational efficiency and detection accuracy. The network estimates a probability score that indicates whether the suspect image is morphed or bona fide and reaches the decision as illustrated in Figure 2.

Figure 2.

The machine learning-based decision stage involves using face features from both TLC and de-morphed images in a feature combination to obtain an N-dimension feature, which is then aggregated in a classifier to make the final decision.
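The decision stage can be sketched as follows: one cosine similarity score is computed per de-morphing factor, and the resulting N-dimensional score pattern is passed through a small MLP with a sigmoid output. Everything beyond that outline is an assumption made for illustration: the hidden width `HIDDEN`, the ReLU activation, the random weights, and the random 512-dimensional vectors that stand in for ArcFace features. The sketch shows only the forward pass, not training.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two 1-D feature vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def similarity_pattern(f_tlc, f_demorphed):
    """One score per de-morphed image: returns an array of shape (N,)."""
    return np.array([cosine_similarity(f_tlc, f) for f in f_demorphed])


def mlp_forward(scores, W1, b1, W2, b2):
    """Forward pass: N inputs -> hidden layer -> one sigmoid output."""
    hidden = np.maximum(0.0, scores @ W1 + b1)  # ReLU is an assumed activation
    logit = hidden @ W2 + b2
    return 1.0 / (1.0 + np.exp(-logit))


N, FEAT_DIM, HIDDEN = 5, 512, 16  # HIDDEN is a hypothetical width
rng = np.random.default_rng(2)
f_tlc = rng.standard_normal(FEAT_DIM)             # stand-in for the TLC feature
f_demorphed = rng.standard_normal((N, FEAT_DIM))  # stand-ins for N de-morphed features
scores = similarity_pattern(f_tlc, f_demorphed)

# Untrained random weights, used only to exercise the forward pass.
W1 = rng.standard_normal((N, HIDDEN)) * 0.1
b1 = np.zeros(HIDDEN)
W2 = rng.standard_normal(HIDDEN) * 0.1
b2 = 0.0
p_morph = mlp_forward(scores, W1, b1, W2, b2)
```

Because the classifier sees only N scalar scores, its cost is negligible compared with the N generator passes needed to produce the de-morphed images.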

4. Dataset

An FMAD database has been created from the FRGCv2 and Color FERET databases (Table 1). First, selected images are verified to meet ISO/ICAO specifications [49], ensuring that there are no strong expressions, closed eyes, hats, or glasses. After filtering, the dataset includes 1239 subjects: 806 from Color FERET (466 male and 340 female) and 433 from FRGCv2 (241 male and 192 female). Each dataset is divided into two groups: set A is for morphing and set B contains probe images. For the images selected from the Color FERET database, one image is chosen as the criminal image and one as the probe image per subject. For the images from the FRGCv2 database, one image is chosen as the criminal image and five as probe images per subject.

Table 1.

Overview of the created morphed database.

Furthermore, the SYN-MAD dataset [8], which includes MIPGAN-I, MIPGAN-II, FaceMorpher, Webmorph, and OpenCV morphs, is additionally used for FMA detection evaluation. That dataset contains 4483 morphed face images (984 OpenCV, 1000 FaceMorpher, 500 Webmorph, 1000 MIPGAN-I, and 999 MIPGAN-II) and 204 bona fide images from the FRLL dataset. During the morphing and de-morphing processes, all images are normalized to a common resolution.

4.1. FMAD Dataset Creation

Generally, the strength of a morphing attack depends on the criminal’s face. When the criminal’s and accomplice’s faces are similar, the morphed face is hard to detect. Previous works do not agree on how to select the image pairs and generate morphed images only with equal weights for the criminal and accomplice. Therefore, in this paper, we generated morphed images according to two protocols.

4.1.1. Protocol 1: For De-Morphing Network’s Performance Evaluation

Protocol 1 is designed to evaluate de-morphing on morphing attacks with different morphing factors in the range [0.1, 0.45].

For each subject indicated as a criminal, candidate accomplices were selected to execute morphing as follows:

- The image of each subject (criminal) in set A is compared with those of the other subjects of the same gender in the same source database (FRGCv2 or Color FERET). The K subjects (K = 4 in the experiments) with the highest ArcFace cosine similarity scores with the criminal are chosen as the accomplices for morphing.

- Morphing with our StyleGAN-based method (Section 3.1) and with FaceMorpher [3] (landmark-based) is performed between each pair of criminal and accomplice at specific values of alpha in the range [0.1, 0.45]. This results in (number of accomplices per criminal) × (number of morphing factors) morphed attempts per criminal for each morphing method.

- For the generated morphed images, ArcFace similarity scores are calculated against the probe image of the criminal to determine the morphing attack success probability (Criminal Morph Acceptance Rate) at a specific morphing factor.
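The accomplice selection step in the list above can be sketched as a top-K search over cosine similarities, restricted to subjects of the same gender. The function `top_k_accomplices` and the toy two-dimensional features are hypothetical; in the protocol the features would be ArcFace embeddings, and the search would additionally be limited to subjects from the same source database.

```python
import numpy as np


def top_k_accomplices(features, genders, criminal_idx, k=4):
    """Return indices of the k same-gender subjects most similar to the criminal."""
    features = np.asarray(features, dtype=float)
    genders = np.asarray(genders)
    unit = features / np.linalg.norm(features, axis=1, keepdims=True)
    sims = unit @ unit[criminal_idx]  # cosine similarity to the criminal

    eligible = genders == genders[criminal_idx]
    eligible[criminal_idx] = False    # the criminal is not their own accomplice
    sims = np.where(eligible, sims, -np.inf)

    order = np.argsort(-sims)         # most similar first
    return [int(i) for i in order[:k] if eligible[i]]


# Toy data: five subjects with 2-D features (illustrative values only).
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.2], [1.0, 0.05]]
genders = ["m", "m", "m", "f", "m"]
accomplices = top_k_accomplices(features, genders, criminal_idx=0, k=2)
```

Subject 3 is skipped despite its high similarity because of the gender constraint, and fewer than `k` indices are returned when not enough eligible subjects exist.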

4.1.2. Protocol 2: For Morphing Image Detection Performance Evaluation

Protocol 2 provides a quantitative analysis of the vulnerability of the Face Recognition System (FRS) to morphed images generated with a morphing factor of 0.5. This follows [50], which demonstrates that morphed images with equal weights pose the greatest vulnerability to FRSs.

Accomplice candidates for each criminal are selected as in protocol 1. If a pair of subjects has been chosen in which the first is the criminal and the second is the accomplice, the reverse order (the first as accomplice and the second as criminal) is not selected. Instead, the candidate with the next-highest score is chosen. This results in (number of accomplices per criminal) morphed attempts per criminal for each morphing method. Due to the limited number of probes per subject in the FERET dataset and following previous works [5,51], the Mated Morphed Presentation Match Rate (MMPMR) and Fully Mated Morphed Presentation Match Rate (FMMPMR) in Table 2 are reported only on the FRGCv2 database.

Table 2.

Quantitative evaluation of the vulnerability of ArcFace [43] FRS at a morphing alpha of 0.5.

For the constructed FMAD dataset, morphed images that successfully match against criminal images are collected from protocols 1 and 2. Scherhag et al. [13] found that using post-processing techniques such as resizing and print-and-scan during training has minimal impact on detection performance. Ferrara et al. [15] also showed that de-morphing improves efficiency for printed and scanned images. While we do not conduct experiments on printed and scanned images, we believe that the same performance can be obtained in such scenarios. Table 1 summarizes the created database for face morphing attack detection. Each dataset, created with a different morphing method from its original dataset, is split into training and testing sets in a 7:3 ratio. We then trained a model on one dataset and tested it on all the other datasets. The SYN-MAD dataset is used solely for evaluation. Face de-morphing is executed on the suspect image (bona fide or morphed image) and the corresponding TLC from set B. For the FRGCv2 dataset, the first image in the probe set is selected as the TLC. Meanwhile, since each subject in the SYN-MAD dataset has only two genuine images (one neutral and one smiling), when one image is used as the criminal image for morphing, the remaining image of the subject is used as the TLC. The network was trained over 100 epochs.

4.2. Properties and Statistics of Morphing Attack Datasets

During the morphing and de-morphing experiments, a threshold score corresponding to a False Acceptance Rate (FAR) of 0.1% was used for both datasets, in accordance with the Frontex guidelines, where the target FAR is 0.1% and the False Rejection Rate (FRR) is at most 5%. Table 3 summarizes the Criminal Morph Acceptance Rate (CMAR) of morphed images in protocol 1. The results (Table 3) indicate that as the criminal’s contribution to the morphed image increases, the acceptance rate of morphed images increases significantly, eventually reaching a level comparable to that of the landmark-based method [3].
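The relationship between the operating threshold and the acceptance rate can be sketched as follows. The threshold is taken as the lowest score at which the fraction of impostor comparisons that are accepted does not exceed the target FAR, and the acceptance rate of morphed images is the fraction of morph-versus-criminal-probe scores above that threshold. The helper names, the score values, and the toy FAR of 0.25 are assumptions for illustration; a realistic FAR is far smaller and needs many more impostor scores.

```python
import numpy as np


def threshold_at_far(impostor_scores, far):
    """Lowest threshold at which the share of accepted impostors is <= far."""
    if not 0.0 <= far < 1.0:
        raise ValueError("far must lie in [0, 1)")
    scores = np.sort(np.asarray(impostor_scores, dtype=float))[::-1]  # descending
    n_allowed = int(np.floor(far * scores.size))  # impostors allowed above threshold
    return float(scores[n_allowed])


def acceptance_rate(scores, threshold):
    """Fraction of comparison scores strictly above the threshold."""
    return float(np.mean(np.asarray(scores, dtype=float) > threshold))


# Toy scores (illustrative values only).
impostor_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
morph_vs_criminal = [0.65, 0.55, 0.90, 0.61]

tau = threshold_at_far(impostor_scores, far=0.25)
cmar = acceptance_rate(morph_vs_criminal, tau)
```

A comparison counts as accepted only when its score is strictly greater than the threshold, so tied impostor scores can make the realized FAR slightly lower than the target.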

Table 3.

Comparison of the Criminal Morph Acceptance Rate (CMAR) of the (proposed) morphing attack method with the landmark-based method at different morphing alphas.

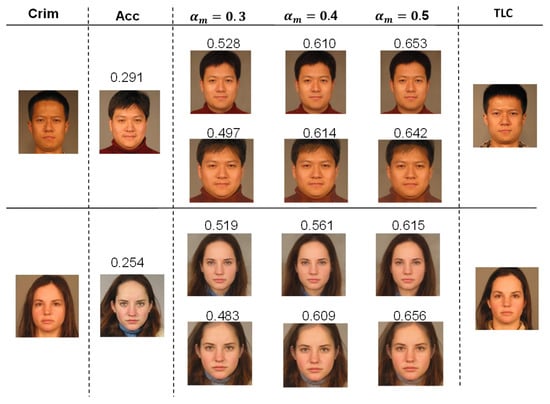

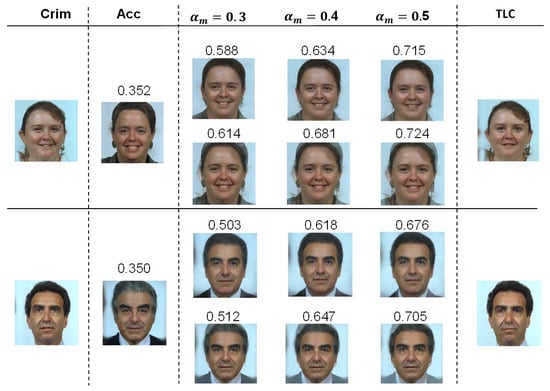

Figure 3 and Figure 4 present some examples of morphed images generated by the proposed StyleGAN-based morphing method and FaceMorpher [3]. The first and second columns show criminals and accomplices, respectively, with the cosine similarity score calculated between them. The third to fifth columns display morphed images with morphing alphas ranging from to . The scores of these morphed images are compared with the TLC images in the last column. It is obvious that as the morphing alpha increases, the morphed images exhibit higher similarity scores when compared with the criminal, indicating a greater correlation to the criminal.

Figure 3.

Examples of morphed images from FRGCv2. For each attack, the first and second columns show the criminals and accomplices with their similarity scores. The third to fifth columns display morphed images with alpha from to , and their scores are compared with live images in the last column. The upper row shows morphs generated by the proposed method, and the lower row shows morphs generated by FaceMorpher [3].

Figure 4.

Examples of morphed images from FERET. For each attack, the first and second columns show the criminals and accomplices with their similarity scores. The third to fifth columns display morphed images with alpha from to , and their scores are compared with live images in the last column. The upper row shows morphs generated by the proposed method, and the lower row shows morphs generated by FaceMorpher [3].

The Mated Morphed Presentation Match Rate (MMPMR) and Fully Mated Morphed Presentation Match Rate (FMMPMR) of the created morphs, which indicate the vulnerability of the Face Recognition System (FRS) on protocol 2, are shown in Table 2. It should be noted that the created databases differ from other databases used in scientific publications on FMA and FMAD. In particular, the intra-class variation is much higher in our database due to the number of selected subjects, which makes the database better suited to simulating real-world scenarios. Even though the comparisons are relative, the results show that morphs produced by the proposed method expose a high FRS vulnerability, exceeding both StyleGAN and Landmark-II and approaching the MIPGAN method. This emphasizes the high quality of the morphs produced by the proposed method, making them reliable for the evaluation of the proposed detection method.
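As an illustration of the two vulnerability metrics, the sketch below uses one common formulation: a morph counts toward MMPMR when its lowest score against the contributing subjects exceeds the threshold, and toward FMMPMR when every probe attempt of every contributing subject exceeds it. The array layouts, function names, and scores are assumptions made for this example, not the exact evaluation code behind Table 2.

```python
import numpy as np

def mmpmr(scores, threshold):
    """scores: [n_morphs, n_subjects], one similarity per contributing subject."""
    scores = np.asarray(scores, dtype=float)
    # A morph succeeds only if even its weakest subject comparison is accepted.
    return float(np.mean(scores.min(axis=1) > threshold))

def fmmpmr(scores, threshold):
    """scores: [n_morphs, n_subjects, n_probes], all probe attempts per subject."""
    scores = np.asarray(scores, dtype=float)
    # A morph succeeds only if every attempt of every subject is accepted.
    return float(np.mean(np.all(scores > threshold, axis=(1, 2))))

# Toy scores (hypothetical): three morphs, two contributing subjects each.
single_probe = [[0.8, 0.7], [0.9, 0.3], [0.6, 0.65]]
# Two morphs, two subjects, two probe attempts per subject.
multi_probe = [[[0.8, 0.6], [0.7, 0.4]],
               [[0.9, 0.9], [0.6, 0.7]]]

mmpmr_value = mmpmr(single_probe, threshold=0.5)
fmmpmr_value = fmmpmr(multi_probe, threshold=0.5)
```

Because FMMPMR requires all attempts to succeed, it is never higher than a per-subject best-attempt variant computed on the same scores.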

5. Experiments and Results

In this section, we will first evaluate the proposed de-morphing algorithm and then the similarity score pattern detection method with this de-morphing approach.

5.1. Performance of the Proposed De-Morphing Method

Table 4 reports the genuine acceptance rate (GAR) without de-morphing compared with those obtained with different values of the de-morphing factor in the range of .

Table 4.

Genuine acceptance rate (%) on FERET and FRGCv2 datasets with different values of de-morphing alpha using the proposed method.

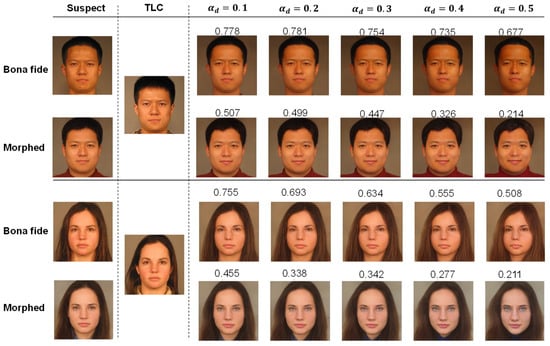

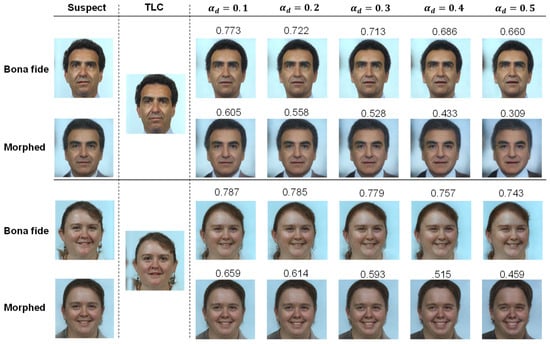

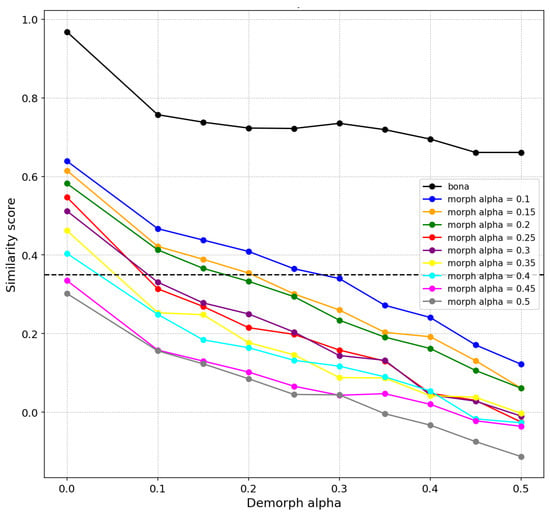

Table 5 and Table 6 present the Criminal Morph Acceptance Rate (CMAR) as a function of both the morphing factor used to create the morphed images and the de-morphing factor from FRGCv2 and the FERET database, respectively. The results indicate a trade-off between the False Acceptance Rate (FAR), also referred to as the CMAR, and the False Rejection Rate (FRR), equivalent to 1 - GAR, as the de-morphing factor increases. Moreover, the proposed de-morphing method demonstrates greater efficiency when applied to morphs from the FERET database compared with those from FRGCv2. Examples of de-morphing on bona fide and morphed images are presented in Figure 5 and Figure 6. The first and second columns show the suspect (bona fide or morph) and TLC. The third to seventh columns display de-morphed images with alphas from to , and their scores are compared with TLC. When the de-morphing alpha increases from to , the reduction in similarity score for bona fide images is around , whereas the reduction in similarity score for morphed images is more substantial, varying from to .

Table 5.

Criminal Morph Acceptance Rate (%) on the FRGCv2 dataset at different values of the morphing alpha when performing de-morphing with different values of de-morphing alpha (best score in boldface).

Table 6.

Criminal Morph Acceptance Rate (%) on the FERET dataset at different values of the morphing alpha when performing de-morphing with different values of de-morphing alpha.

Figure 5.

Examples of de-morphing on bona fide and morphed images from FRGCv2. The first and second columns show the suspect (bona fide or morph) and TLC. The third to seventh columns display de-morphed images with alphas from to , and their scores are compared with TLC.

Figure 6.

Examples of de-morphing on bona fide and morphed images from FERET. The first and second columns show the suspect (bona fide or morph) and TLC. The third to seventh columns display de-morphed images with alphas from to , and their scores are compared with TLC.

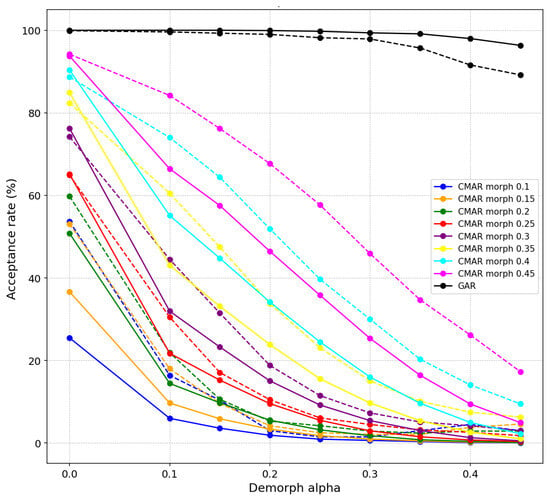

The performance of the proposed de-morphing method is compared with the landmark-based de-morphing method [15] in Figure 7. The solid line indicates the results of the proposed de-morphing, and the dashed line indicates the landmark-based de-morphing method [15]. When the morphing alpha is or higher, the proposed method significantly outperforms the landmark-based method in CMAR. Importantly, it shows no notable decline in GAR, unlike the landmark-based method.

Figure 7.

Comparison between the proposed and landmark-based de-morphing methods. The solid line indicates the results of the proposed de-morphing, and the dashed line indicates the landmark-based de-morphing method [15]. The proposed method significantly outperforms the landmark-based method in CMAR at the same morphing and de-morphing factor values. Notably, there is no significant decrease in GAR, unlike the decline seen with the landmark-based method.

5.2. Morphing Attack Detection Performance

For FMAD purposes, N de-morphing factors in the range of are used to produce de-morphed images from the suspect and TLC. As the validation experiments presented later show, is a good hyper-parameter value in terms of computational efficiency and detection performance.
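The idea of turning N de-morphing factors into one similarity-score pattern per suspect image can be sketched as follows. This is only a toy illustration: `toy_demorph` is a hypothetical linear stand-in for the StyleGAN/pSp de-morphing network, the identity function stands in for the ArcFace feature extractor, and the factor values are chosen arbitrarily for the example.

```python
import numpy as np

def cosine_similarity(a, b):
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def similarity_pattern(suspect, live, factors, demorph, embed):
    """One similarity score per de-morphing factor; the detector input."""
    live_feat = embed(live)
    return np.array([
        cosine_similarity(embed(demorph(suspect, live, a)), live_feat)
        for a in factors
    ])

def toy_demorph(suspect, live, a):
    # Hypothetical stand-in: remove a share `a` of the live identity linearly.
    return (np.asarray(suspect, float) - a * np.asarray(live, float)) / (1.0 - a)

def identity(x):
    # Stand-in for a face embedding network.
    return x

# Toy "images" represented directly as feature vectors (hypothetical).
live = np.array([1.0, 0.0, 0.0])
other = np.array([0.0, 1.0, 0.0])
bona_fide = np.array([1.0, 0.0, 0.1])   # same identity, small variation
morph = 0.5 * live + 0.5 * other        # equal-weight mix of two identities
factors = [0.1, 0.2, 0.3]               # illustrative values only

bona_pattern = similarity_pattern(bona_fide, live, factors, toy_demorph, identity)
morph_pattern = similarity_pattern(morph, live, factors, toy_demorph, identity)
```

In this toy setting the bona fide pattern stays close to 1 while the morph pattern falls quickly as the factor grows, which is the kind of difference a downstream classifier could learn from.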

The ability to detect FMA through multiple de-morphing was evaluated using the Equal Error Rate (EER) and the Bona Fide Presentation Classification Error Rate at an Attack Presentation Classification Error Rate (BPCER10). The results on the created morphed dataset are presented in Table 7. The D-FMAD method is generally more likely to detect FMAs with lower weights compared with FMAs where morphs have been created using equal weights of the contributing face images [13]. Therefore, while the training set includes morphed images with morphing factors ranging from to (Figure 8), Table 7 reports only the testing results for morphed images with a morphing factor of , which poses the highest risk to Face Recognition Systems (FRSs). The proposed detection method achieves an EER of approximately on the FERET dataset and roughly on the FRGCv2 dataset when morphed images are generated by the proposed morphing attack. Although the EER is higher for morphed images created using the landmark-based method [3], it is still around on the FERET dataset and not far off on the FRGCv2 dataset. It is evident from the results that the proposed method exhibits stability across different datasets and effectively detects unseen FMA, including both deep learning-based and landmark-based methods. Furthermore, results for testing with fully morphed images use morphing factors ranging from to , as detailed in Appendix A. The findings demonstrate that our method achieves strong performance even when the morphing factor is unknown.
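For readers who want to reproduce this kind of evaluation, the sketch below computes an EER and a BPCER at a fixed APCER operating point from detector scores. It assumes that a higher score means "more likely an attack" and that a sample is flagged as an attack when its score is at least the threshold; the function names and toy scores are hypothetical.

```python
import numpy as np

def error_rates(bona, attack, thresholds):
    """APCER and BPCER for each threshold (flag as attack if score >= threshold)."""
    bona = np.asarray(bona, dtype=float)[None, :]
    attack = np.asarray(attack, dtype=float)[None, :]
    t = np.asarray(thresholds, dtype=float)[:, None]
    apcer = (attack < t).mean(axis=1)   # attacks accepted as bona fide
    bpcer = (bona >= t).mean(axis=1)    # bona fide samples flagged as attacks
    return apcer, bpcer

def eer_and_bpcer_at(bona, attack, apcer_target):
    scores = np.concatenate([bona, attack]).astype(float)
    # Candidate thresholds: every observed score plus one above the maximum.
    thresholds = np.unique(np.concatenate([scores, [scores.max() + 1.0]]))
    apcer, bpcer = error_rates(bona, attack, thresholds)
    i = np.argmin(np.abs(apcer - bpcer))          # closest point to APCER == BPCER
    eer = (apcer[i] + bpcer[i]) / 2.0
    bpcer_at = bpcer[apcer <= apcer_target].min()  # best BPCER within the APCER budget
    return float(eer), float(bpcer_at)

# Toy detector scores (hypothetical).
bona_scores = [0.1, 0.2, 0.3, 0.7]
attack_scores = [0.25, 0.6, 0.8, 0.9]

eer, bpcer_at_10 = eer_and_bpcer_at(bona_scores, attack_scores, apcer_target=0.10)
```

With few samples the error curves are step functions, so the EER is taken as the midpoint at the threshold where APCER and BPCER are closest.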

Table 7.

The detection performance of the proposed MAD algorithms on the created MAD dataset using different morphing methods in a cross-database setting.

Figure 8.

An example of the change of similarity scores between TLC and de-morphed face images at several morphing alphas. As the de-morphing factor rises from to , the similarity scores between TLC and de-morphed images of a morphed image drop significantly below the threshold. In contrast, for bona fide images, these scores remain well above the threshold.

Table 8 presents the detection performance of the proposed method compared with six S-MAD methods (the top four solutions in the SYN-MAD 2022 competition [8] and two solutions using depth information [9]) on the SYN-MAD dataset. The proposed detection method excels at detecting morphed images from StyleGAN-based attacks. Although its EER is slightly higher on some landmark-based morphs, it maintains stable detection performance overall when applied to unseen morphing attacks.

Table 8.

The detection performance of the proposed MAD algorithms on the SYN-MAD database.

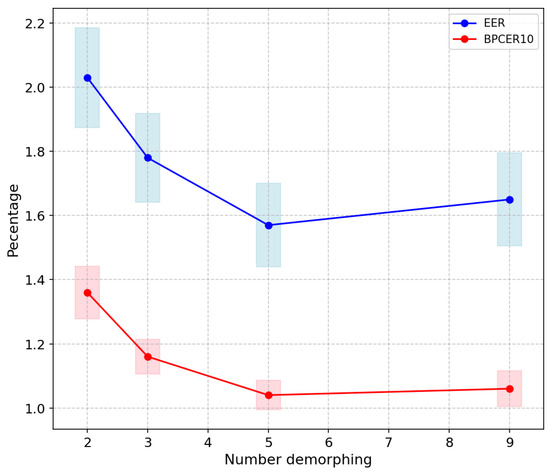

To evaluate the impact of varying the number of de-morphings, additional tests have been carried out for different numbers of de-morphing factors (). Figure 9 shows EER and BPCER10 for these values of N on all testing datasets. The line plot illustrates the average EER and BPCER10, while the rectangles surrounding each data point indicate the range of potential error, with the heights representing the standard deviation at a rate of . Increasing the number of de-morphing factors slightly reduces the EER but also increases detection time. In contrast, using a large number of de-morphing processes can lead to overfitting. For real-time applications, is a good trade-off.

Figure 9.

EER and BPCER10 for different numbers of de-morphing. The line plot illustrates the average values, while the rectangles surrounding each data point indicate the range of potential error, with the heights representing the standard deviation at a rate of .

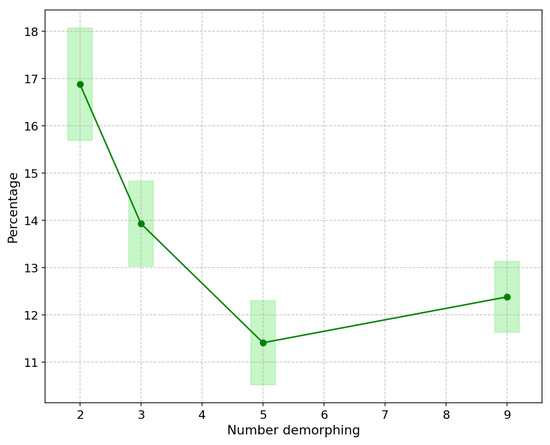

Additionally, to be applicable in real-world scenarios, the False Acceptance Rate (FAR) should be smaller than . The Bona Fide Presentation Classification Error Rate at an Attack Presentation Classification Error Rate of (BPCER0.1) for different numbers of de-morphing is also shown in Figure 10. The BPCER0.1 value at is , which is still higher than the Frontex requirements [52] of being smaller than . This remains a motivation for improving the FMAD method in the future.

Figure 10.

BPCER when APCER for different numbers of de-morphing. The line plot illustrates the average values, while the rectangles surrounding each data point indicate the range of potential error, with the heights representing the standard deviation at a rate of .

6. Conclusions

In this paper, we proposed a D-FMAD pipeline based on deep learning de-morphing technology that addresses the challenges posed by face morphing attacks against FRSs. Inspired by differences in similarity score variations between morphed and non-morphed images, the proposed approach learns the change patterns of similarity scores between live capture and de-morphed face images with different de-morphing factors. An effective deep de-morphing network based on StyleGAN and the pSp (pixel2style2pixel) encoder was developed. The method generates de-morphed images from suspect and live images with multiple de-morphing factors and calculates similarity scores between feature vectors from the ArcFace network, which are then classified by the detection network. Experiments on morphing datasets from the Color FERET, FRGCv2, and SYN-MAD databases, including landmark-based and deep learning attacks, demonstrate that the proposed method achieves high accuracy in detecting unseen morphing attacks across different databases.

It is important to emphasize that the approach using the similarity score variation in the proposed pipeline is not restricted to specific de-morphing techniques. This underscores the potential for improving FMAD tasks by employing advanced de-morphing techniques, particularly since current studies still fall short of meeting the needs of real-world systems.

Author Contributions

Conceptualization, T.T.H. and H.A.; formal analysis, T.T.H. and B.M.S.I.; algorithms, T.T.H. and H.A.; funding acquisition, H.A.; methodology, T.T.H. and H.A.; project administration, H.A.; resources, T.T.H. and B.M.S.I.; software, T.T.H., H.A., and B.M.S.I.; supervision, H.A.; validation, H.A.; writing—original draft, T.T.H.; writing—review and editing, T.T.H., B.M.S.I., and H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by a research program funded by SeoulTech (Seoul National University of Science and Technology).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. They are not publicly available because they include human identity information and were obtained from the original sources under data-sharing restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

This appendix contains additional results on test datasets with unknown morphing factors in the range . This result is superior to those obtained from morphed images with equal weight contributions (), indicating that the D-FMAD methods are generally more effective when dealing with FMAs created from face images with lower weights.

Table A1.

The detection performance of the proposed MAD algorithms on the created MAD dataset with unknown morphing factors in the range .

| Train Dataset | Test Dataset | EER (%) | BPCER10 (%) |
|---|---|---|---|
| FERET (proposed) | FERET (proposed) | 0.56 | 0.12 |
| | FRGCv2 (proposed) | 1.10 | 0.46 |
| | FERET (LM-based [3]) | 1.53 | 0.25 |
| | FRGCv2 (LM-based [3]) | 1.80 | 0.69 |
| FRGCv2 (proposed) | FERET (proposed) | 0.90 | 0.00 |
| | FRGCv2 (proposed) | 1.97 | 0.46 |
| | FERET (LM-based [3]) | 1.40 | 0.25 |
| | FRGCv2 (LM-based [3]) | 2.30 | 0.69 |
| FERET (LM-based [3]) | FERET (proposed) | 1.10 | 0.12 |
| | FRGCv2 (proposed) | 1.88 | 0.46 |
| | FERET (LM-based [3]) | 1.52 | 0.24 |
| | FRGCv2 (LM-based [3]) | 2.81 | 0.92 |
| FRGCv2 (LM-based [3]) | FERET (proposed) | 1.08 | 0.13 |
| | FRGCv2 (proposed) | 2.46 | 0.69 |
| | FERET (LM-based [3]) | 1.86 | 0.37 |
| | FRGCv2 (LM-based [3]) | 2.96 | 1.15 |
| Average | | 1.70 ± 0.67 | 0.44 ± 0.31 |
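The Average row of Table A1 can be cross-checked from the sixteen individual entries. The short sketch below does this with the Python standard library; the use of the population standard deviation is an assumption, chosen because it appears to reproduce the reported figures.

```python
from statistics import mean, pstdev

# EER (%) and BPCER10 (%) values copied row by row from Table A1.
eer = [0.56, 1.10, 1.53, 1.80,
       0.90, 1.97, 1.40, 2.30,
       1.10, 1.88, 1.52, 2.81,
       1.08, 2.46, 1.86, 2.96]
bpcer10 = [0.12, 0.46, 0.25, 0.69,
           0.00, 0.46, 0.25, 0.69,
           0.12, 0.46, 0.24, 0.92,
           0.13, 0.69, 0.37, 1.15]

# Mean and population standard deviation, rounded to two decimals.
eer_mean, eer_std = round(mean(eer), 2), round(pstdev(eer), 2)
bpcer_mean, bpcer_std = round(mean(bpcer10), 2), round(pstdev(bpcer10), 2)

print(f"EER: {eer_mean:.2f} ± {eer_std:.2f}")
print(f"BPCER10: {bpcer_mean:.2f} ± {bpcer_std:.2f}")
```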

References

1. LOGICAL DATA STRUCTURE-LDS. Machine Readable Travel Documents. Available online: https://www.icao.int/sites/default/files/publications/DocSeries/9303_p1_cons_en.pdf (accessed on 28 August 2025).

2. Ferrara, M.; Franco, A.; Maltoni, D. The magic passport. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–7.

3. FaceMorpher. Available online: https://github.com/alyssaq/face_morpher (accessed on 28 August 2025).

4. Damer, N.; Saladie, A.M.; Braun, A.; Kuijper, A. Morgan: Recognition vulnerability and attack detectability of face morphing attacks created by generative adversarial network. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Applications and Systems (BTAS), Redondo Beach, CA, USA, 22–25 October 2018; pp. 1–10.

5. Zhang, H.; Venkatesh, S.; Ramachandra, R.; Raja, K.; Damer, N.; Busch, C. Mipgan–generating strong and high quality morphing attacks using identity prior driven gan. IEEE Trans. Biom. Behav. Identity Sci. 2021, 3, 365–383.

6. Venkatesh, S.; Zhang, H.; Ramachandra, R.; Raja, K.; Damer, N.; Busch, C. Can GAN generated morphs threaten face recognition systems equally as landmark based morphs?-vulnerability and detection. In Proceedings of the 2020 8th International Workshop on Biometrics and Forensics (IWBF), Porto, Portugal, 29–30 April 2020; pp. 1–6.

7. Damer, N.; Fang, M.; Siebke, P.; Kolf, J.N.; Huber, M.; Boutros, F. Mordiff: Recognition vulnerability and attack detectability of face morphing attacks created by diffusion autoencoders. In Proceedings of the 2023 11th International Workshop on Biometrics and Forensics (IWBF), Barcelona, Spain, 19–20 April 2023; pp. 1–6.

8. Huber, M.; Boutros, F.; Luu, A.T.; Raja, K.; Ramachandra, R.; Damer, N.; Neto, P.C.; Gonçalves, T.; Sequeira, A.F.; Cardoso, J.S.; et al. SYN-MAD 2022: Competition on face morphing attack detection based on privacy-aware synthetic training data. In Proceedings of the 2022 IEEE International Joint Conference on Biometrics (IJCB), Abu Dhabi, United Arab Emirates, 10–13 October 2022; pp. 1–10.

9. Rachalwar, H.; Fang, M.; Damer, N.; Das, A. Depth-guided Robust Face Morphing Attack Detection. In Proceedings of the 2023 IEEE International Joint Conference on Biometrics (IJCB), Ljubljana, Slovenia, 25–28 September 2023; pp. 1–9.

10. Ramachandra, R.; Venkatesh, S.; Damer, N.; Vetrekar, N.; Gad, R.S. Multispectral Imaging for Differential Face Morphing Attack Detection: A Preliminary Study. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 1–6 January 2024; pp. 6185–6193.

11. Qin, L.; Peng, F.; Long, M. Face morphing attack detection and localization based on feature-wise supervision. IEEE Trans. Inf. Forensics Secur. 2022, 17, 3649–3662.

12. Chaudhary, B.; Aghdaie, P.; Soleymani, S.; Dawson, J.; Nasrabadi, N.M. Differential morph face detection using discriminative wavelet sub-bands. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 1425–1434.

13. Scherhag, U.; Rathgeb, C.; Merkle, J.; Busch, C. Deep face representations for differential morphing attack detection. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3625–3639.

14. Hamza, M.; Tehsin, S.; Karamti, H.; Alghamdi, N.S. Generation and detection of face morphing attacks. IEEE Access 2022, 10, 72557–72576.

15. Ferrara, M.; Franco, A.; Maltoni, D. Face demorphing. IEEE Trans. Inf. Forensics Secur. 2017, 13, 1008–1017.

16. Ortega-Delcampo, D.; Conde, C.; Palacios-Alonso, D.; Cabello, E. Border control morphing attack detection with a convolutional neural network de-morphing approach. IEEE Access 2020, 8, 92301–92313.

17. Banerjee, S.; Jaiswal, P.; Ross, A. Facial de-morphing: Extracting component faces from a single morph. In Proceedings of the 2022 IEEE International Joint Conference on Biometrics (IJCB), Abu Dhabi, United Arab Emirates, 10–13 October 2022; pp. 1–10.

18. Shiqerukaj, E.; Rathgeb, C.; Merkle, J.; Drozdowski, P.; Tams, B. Fusion of face demorphing and deep face representations for differential morphing attack detection. In Proceedings of the 2022 International Conference of the Biometrics Special Interest Group (BIOSIG), Darmstadt, Germany, 14–16 September 2022; pp. 1–5.

19. Peng, F.; Zhang, L.B.; Long, M. FD-GAN: Face de-morphing generative adversarial network for restoring accomplice’s facial image. IEEE Access 2019, 7, 75122–75131.

20. Long, M.; Yao, Q.; Zhang, L.B.; Peng, F. Face De-Morphing Based on Diffusion Autoencoders. IEEE Trans. Inf. Forensics Secur. 2024, 19, 3051–3063.

21. Richardson, E.; Alaluf, Y.; Patashnik, O.; Nitzan, Y.; Azar, Y.; Shapiro, S.; Cohen-Or, D. Encoding in style: A stylegan encoder for image-to-image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 2287–2296.

22. King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

23. Weng, Y.; Wang, L.; Li, X.; Chai, M.; Zhou, K. Hair interpolation for portrait morphing. In Computer Graphics Forum; John Wiley & Sons Ltd.: Hoboken, NJ, USA, 2013; Volume 32, pp. 79–84.

24. Seibold, C.; Samek, W.; Hilsmann, A.; Eisert, P. Detection of face morphing attacks by deep learning. In Proceedings of the Digital Forensics and Watermarking: 16th International Workshop, IWDW 2017, Magdeburg, Germany, 23–25 August 2017; Proceedings 16. Springer: Berlin/Heidelberg, Germany, 2017; pp. 107–120.

25. Neubert, T.; Makrushin, A.; Hildebrandt, M.; Kraetzer, C.; Dittmann, J. Extended StirTrace benchmarking of biometric and forensic qualities of morphed face images. IET Biom. 2018, 7, 325–332.

26. GIMP. GNU Image Manipulation Program Web Site. Available online: https://www.gimp.org (accessed on 28 August 2025).

27. OpenCV. Available online: https://learnopencv.com/face-morph-using-opencv-cpp-python (accessed on 28 August 2025).

28. Karras, T.; Laine, S.; Aila, T. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4401–4410.

29. Zhang, H.; Tang, H.; Sun, Y.; He, S.; Li, Z. Modality-Specific Interactive Attack for Vision-Language Pre-Training Models. IEEE Trans. Inf. Forensics Secur. 2025, 20, 5663–5677.

30. Scherhag, U.; Raghavendra, R.; Raja, K.B.; Gomez-Barrero, M.; Rathgeb, C.; Busch, C. On the vulnerability of face recognition systems towards morphed face attacks. In Proceedings of the 2017 5th International Workshop on Biometrics and Forensics (IWBF), Coventry, UK, 4–5 April 2017; pp. 1–6.

31. Scherhag, U.; Rathgeb, C.; Busch, C. Morph detection from single face image: A multi-algorithm fusion approach. In Proceedings of the 2018 2nd International Conference on Biometric Engineering and Applications, Amsterdam, The Netherlands, 16–18 May 2018; pp. 6–12.

32. Venkatesh, S.; Ramachandra, R.; Raja, K.; Spreeuwers, L.; Veldhuis, R.; Busch, C. Detecting morphed face attacks using residual noise from deep multi-scale context aggregation network. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 280–289.

33. Venkatesh, S.; Ramachandra, R.; Raja, K.; Spreeuwers, L.; Veldhuis, R.; Busch, C. Morphed face detection based on deep color residual noise. In Proceedings of the 2019 Ninth International Conference on Image Processing Theory, Tools and Applications (IPTA), Istanbul, Turkey, 6–9 November 2019; pp. 1–6.

34. Seibold, C.; Hilsmann, A.; Eisert, P. Reflection analysis for face morphing attack detection. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 1022–1026.

35. Scherhag, U.; Debiasi, L.; Rathgeb, C.; Busch, C.; Uhl, A. Detection of face morphing attacks based on PRNU analysis. IEEE Trans. Biom. Behav. Identity Sci. 2019, 1, 302–317.

36. Ferrara, M.; Franco, A.; Maltoni, D. Face morphing detection in the presence of printing/scanning and heterogeneous image sources. IET Biom. 2021, 10, 290–303.

37. Damer, N.; Zienert, S.; Wainakh, Y.; Saladie, A.M.; Kirchbuchner, F.; Kuijper, A. A multi-detector solution towards an accurate and generalized detection of face morphing attacks. In Proceedings of the 2019 22th International Conference on Information Fusion (FUSION), Ottawa, ON, Canada, 2–5 July 2019; pp. 1–8.

38. Venkatesh, S.; Ramachandra, R.; Raja, K.; Busch, C. Single image face morphing attack detection using ensemble of features. In Proceedings of the 2020 IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020; pp. 1–6.

39. Ramachandra, R.; Venkatesh, S.; Raja, K.; Busch, C. Towards making morphing attack detection robust using hybrid scale-space colour texture features. In Proceedings of the 2019 IEEE 5th International Conference on Identity, Security, and Behavior Analysis (ISBA), Hyderabad, India, 22–24 January 2019; pp. 1–8.

40. Eyedea. Available online: https://www.eyedea.cz/eyeface-sdk (accessed on 28 August 2025).

41. GmbH, C.S. Facevacs Technology. Available online: https://www.cognitec.com/facevacs-technology.html (accessed on 28 August 2025).

42. F. COTS. Verilook Cots. Available online: http://www.neurotechnology.com/verilook.html (accessed on 28 August 2025).

43. Deng, J.; Guo, J.; Xue, N.; Zafeiriou, S. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4690–4699.

44. Cao, Q.; Shen, L.; Xie, W.; Parkhi, O.M.; Zisserman, A. Vggface2: A dataset for recognising faces across pose and age. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 67–74.

45. Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

46. Zhu, J.; Shen, Y.; Zhao, D.; Zhou, B. In-domain gan inversion for real image editing. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2020; pp. 592–608.

47. Wei, T.; Chen, D.; Zhou, W.; Liao, J.; Zhang, W.; Yuan, L.; Hua, G.; Yu, N. E2Style: Improve the efficiency and effectiveness of StyleGAN inversion. IEEE Trans. Image Process. 2022, 31, 3267–3280.

48. Xia, W.; Zhang, Y.; Yang, Y.; Xue, J.H.; Zhou, B.; Yang, M.H. Gan inversion: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3121–3138.

49. ISO/IEC 19794-5; Information Technology – Biometric Data Interchange Formats – Part 5: Face Image Data. International Organization for Standardization: Geneva, Switzerland, 2011.

50. Raghavendra, R.; Raja, K.; Venkatesh, S.; Busch, C. Face morphing versus face averaging: Vulnerability and detection. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 555–563.

51. Abdal, R.; Qin, Y.; Wonka, P. Image2stylegan: How to embed images into the stylegan latent space? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4432–4441.

52. Frontex, R. Best practice operational guidelines for Automated Border Control (ABC) systems. Eur. Agency Manag. Oper. Coop. Res. Dev. Unit 2012, 9, 2013.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).