Abstract

The inspection of power equipment is vital for maintaining the safe and reliable operation of power systems. Among various inspection tasks, the detection of defects in insulators and wind turbine blades holds particular importance. However, existing detection methods often suffer from limited accuracy, largely due to substantial scale variations among defect targets and the loss of features associated with small objects. To address these challenges, this paper proposes Inspection of Power Equipment-YOLO (IPE-YOLO), an enhanced defect detection algorithm based on the YOLOv8n framework. First, a Cross Stage Partial Multi-Scale Edge Information Enhancement (CSP_MSEIE) module is introduced to improve multi-scale feature extraction, enhancing the detection of targets with significant scale diversity while reducing computational complexity. Second, we reconstruct the neck network with a Context-Guided Spatial Feature Reconstruction for Feature Pyramid Networks (CGRFPN), which promotes cross-scale feature fusion and enriches the fine-grained details of small objects, thereby alleviating feature loss in deeper network layers. Finally, a Lightweight Shared Convolutional Detection Head (LSCD) is employed, leveraging shared convolutional layers to decrease model parameters and computational costs without sacrificing detection precision. Experimental results demonstrate that, compared to the baseline YOLOv8n model, IPE-YOLO improves defect detection accuracy for insulators and wind turbine blades by 2.6% and 2.9%, respectively, while reducing the number of parameters by 12.3% and computational costs by 24.7%. These results indicate that IPE-YOLO achieves a superior balance between accuracy and efficiency, making it well-suited for practical engineering deployments in power equipment inspection.

1. Introduction

In the context of escalating global energy demand, the increasing proportion of electricity in total energy consumption has positioned clean and low-carbon renewable energy as a strategic imperative for ensuring a sustainable supply. Among these, wind power—representing a key frontier in emerging energy technologies—has become a vital pillar of the global power system, owing to its unique technical and environmental advantages [1,2,3].

The operational integrity of wind turbine blades, as primary external components, is a critical determinant of both the unit’s power generation efficiency and its overall service life. Their deployment in remote and often harsh environments leads to prolonged exposure to extreme weather conditions, rendering them susceptible to defects such as cracks, oil stains, and fractures [4,5]. Regular inspection is therefore essential to prevent defect propagation, avert catastrophic failures, and ensure the safe and stable operation of wind power systems. Concurrently, ensuring the stable and reliable transmission of wind-generated power via high-voltage lines is equally critical. As key elements of these transmission systems, insulators are also vulnerable to damage and flashover events caused by extended exposure to adverse environmental conditions, posing substantial risks to grid safety [6,7]. Accordingly, the regular and efficient inspection of insulators is indispensable to guarantee the safety and reliability of power transmission.

Current inspection practices for insulators and wind turbine blades are increasingly shifting toward unmanned aerial vehicle (UAV)-based approaches. The integration of object detection algorithms with UAV platforms has markedly streamlined inspection work. In contrast to traditional manual methods, this integrated approach offers superior operational efficiency and mitigates risks to both inspection personnel and grid infrastructure [8]. Nevertheless, the inherent computational and memory constraints of UAV platforms necessitate the development of lightweight yet highly accurate inspection algorithms capable of real-time, onboard processing. To address these challenges, this paper focuses on the inspection scenarios for insulators and wind turbine blades, taking their respective defects as the research subject, to further explore a defect detection algorithm that is both faster and more accurate.

In the task of detecting surface defects on insulators and wind turbine blades, a primary technical bottleneck is the difficulty in identifying multi-scale targets, particularly small ones, which stems from substantial variations in defect scale. This challenge is particularly pronounced for minute defects, such as insulator flashover marks and blade surface burn marks, which are frequently overlooked or misidentified by conventional detection models.

To address these detection challenges, this paper proposes an enhanced model based on YOLOv8n, which incorporates specialized modules for multi-scale feature fusion and contextual information extraction. These improvements substantially increase target recognition precision, thereby strengthening the model’s ability to robustly identify multi-scale defects on insulators and wind turbine blades. The main contributions of this work are as follows:

- To address the challenge of low detection accuracy for multi-scale defects, we propose the Cross Stage Partial Multi-Scale Edge Information Enhancement (CSP_MSEIE) module. This module simultaneously preserves gradient information across network depths and augments edge features to bolster multi-scale feature extraction, thereby enhancing detection accuracy while concurrently reducing computational cost.

- To overcome the information loss affecting minute defects in deep network layers, we integrate the Context-Guided Spatial Feature Reconstruction for Feature Pyramid Networks (CGRFPN). By capturing global context along horizontal and vertical axes and fusing features across hierarchical levels, CGRFPN substantially enriches the feature representation of small targets, leading to a marked improvement in their detection accuracy.

- To improve deployability on resource-constrained UAV platforms, we introduce the Lightweight Shared Convolutional Detection Head (LSCD). This design employs shared convolutions across detection layers, significantly reducing the model’s parameter count and computational cost while maintaining localization and classification performance.

- Comprehensive experiments on our custom-built insulator and wind turbine blade defect datasets validate the efficacy and efficiency of the proposed IPE-YOLO model. Compared to the YOLOv8n baseline, IPE-YOLO increases the mAP by 2.6% and 2.9% for the respective tasks, while simultaneously decreasing the parameter count by 12.3% and computational cost by 24.7%, highlighting its superior balance between accuracy and efficiency.

2. Related Work

To bolster the efficiency of inspection work, recent research has increasingly focused on integrating object detection algorithms for the identification of faults and defects in power equipment. For instance, Liu Xinyu et al. developed a fault detection method based on Faster R-CNN for sliding faults in transmission line dampers, improving the network’s average precision through structural modifications to achieve efficient, automated diagnosis [9]. Similarly, Zhang Qianyi et al. implemented a Faster R-CNN-based algorithm for detecting equipment in power machine rooms, which was successfully integrated into an intelligent inspection robot, offering a robust technical foundation for intelligent operation and maintenance (O&M) in the power sector [10]. In the domain of photovoltaic (PV) system maintenance, Yin Meile et al. addressed efficiency degradation from dust accumulation by proposing an R-CNN-based detection method. By optimizing the model structure, their model could effectively distinguish dust from other interfering factors in complex environments [11]. Peng Tao et al. introduced an intelligent detection method for photovoltaic panels based on Mask R-CNN, enhancing the model’s image representation by replacing the Feature Pyramid Network (FPN) and incorporating an attention mechanism to focus on key image regions [12]. Leveraging a Convolutional Neural Network (CNN), Chen Mengying et al. automated the detection of bird nests on power transmission towers, reporting significant improvements in recognition accuracy [13]. Wang Yifan et al. used the Faster R-CNN algorithm and ResNet-50 to locate insulators. However, their method could only identify whether a fault was present and could not classify the defect type [14]. Collectively, while these two-stage algorithms often yield high detection accuracy, their inherent structural complexity leads to substantial computational overhead and slow inference speeds, rendering them unsuitable for real-time deployment.

Given the inherent bottlenecks of two-stage algorithms in real-time scenarios, the YOLO algorithm [15] has emerged as a prominent solution in the evolution of object detection technology. Its speed advantage in real-time detection tasks has established it as a mainstream algorithm in numerous industrial applications, leading more scholars to shift their focus toward the YOLO family for its balance of detection accuracy and efficiency. For instance, Li Lirong et al. developed MSLV, a novel network based on YOLOv5s for high-voltage transmission line detection. Their proposed MSLconv module facilitates a lightweight architecture by preserving rich feature information despite channel pruning, thereby achieving a favorable balance between model compactness and accuracy [16]. To address power line damage detection in remote regions, Di Tanyao et al. leveraged both YOLOv5 and YOLOv7 in conjunction with UAV-captured imagery to enable the rapid identification and localization of anomalies such as line breaks and leaning poles [17]. Xiang Xinyue et al. adapted YOLOv4 for risk detection in high-voltage operational scenarios, enhancing model generalization by incorporating the Mish activation function and improving feature extraction via the integration of Cross Stage Partial and Spatial Pyramid Pooling modules [18]. To mitigate avian-related hazards to power equipment, Zhou Hongyi et al. developed an intelligent bird deterrence system based on an improved YOLOv5 algorithm, which integrates software and hardware platforms to achieve efficient and precise bird repelling [19]. Zhang Xiaofeng et al. employed a YOLOv3-based method, leveraging its Darknet-53 backbone to enhance feature extraction for identifying anomalous targets in complex scenes [20]. Zhang Bin et al. improved the YOLOv8n model to create Wear-YOLO for the real-time detection of safety equipment compliance among power personnel, significantly enhancing detection performance to prevent construction-related accidents [21].

In summary, while the YOLO algorithm plays a crucial role in various detection tasks within the power industry, it still exhibits limitations in insulator and wind turbine blade defect detection, particularly concerning the low detection accuracy for multi-scale targets, especially small ones. To address this challenge, this paper introduces a series of targeted enhancements across the three core components of the YOLOv8n architecture: the backbone, neck, and detection head. The objective is to enhance the model’s detection accuracy while simultaneously achieving a balance with a lightweight design, aiming to provide a more precise and lightweight object detection algorithm for insulator and wind turbine blade inspection.

3. IPE-YOLO

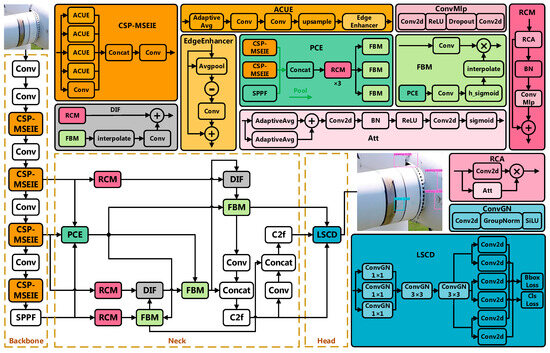

This paper introduces Inspection of Power Equipment-YOLO (IPE-YOLO), a novel architecture derived from YOLOv8, specifically engineered to enhance the detection of surface defects on insulators and wind turbine blades. To address the pronounced scale variations observed in defect targets for both application scenarios, a multi-scale detection method is employed. This approach effectively improves the model’s detection accuracy and strengthens its ability to detect multi-scale defects and small defects. The overall network architecture of IPE-YOLO is illustrated in Figure 1.

Figure 1. Model structure diagram.

3.1. CSP_MSEIE

To mitigate the persistent challenge of low detection accuracy for multi-scale targets in insulator and wind turbine blade inspection, this paper strengthens feature extraction at large, medium, and small scales. This directly improves the model’s multi-scale feature extraction capability and, in turn, its detection accuracy for multi-scale defect targets.

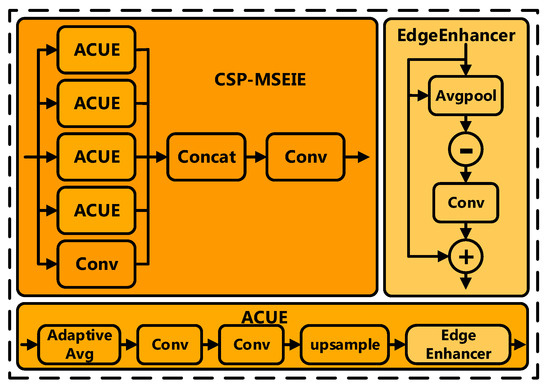

The C2f module within the YOLOv8n network extracts features at a single scale, so its local convolution operations are ineffective for complex images that contain multi-scale targets. This limitation contributes to diminished detection accuracy for multi-scale defects. To overcome it, we redesign the C2f module by integrating a Multi-Scale Edge Information Enhancement (MSEIE) module, which concurrently extracts information at various scales while explicitly enhancing edge information. Furthermore, the module is optimized using the Cross Stage Partial (CSP) structure from CSPNet [22], resulting in the CSP_MSEIE module, which has a lower parameter count and computational load. The structure of the CSP_MSEIE module is illustrated in Figure 2.

Figure 2. Structure diagram of CSP_MSEIE.

The CSP_MSEIE module is built on a multi-branch architecture in which every branch has an identical structure. The module first partitions the input feature map along the channel dimension and directs the resulting feature subsets into parallel multi-scale processing branches. Each branch independently processes feature information at its corresponding scale before the features are fused. This Cross Stage Partial design integrates gradient information across different stages, preserving a richer gradient flow while substantially reducing computational complexity without sacrificing detection accuracy. To achieve multi-scale analysis, average pooling is used to downsample the feature map to four distinct scales. Subsequently, a dedicated edge information enhancement operation is executed within each branch, refining the network’s ability to capture features across a wide range of sizes. Compared with the original C2f module, the CSP_MSEIE architecture provides stronger interaction between deep and shallow features. This leads to a marked improvement in detection accuracy for multi-scale defects on insulators and wind turbine blades, while concurrently reducing the overall parameter count and computational demands.
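The pooling-then-edge-enhancement idea described above can be illustrated with a small pure-Python sketch. It is not the authors' module: the learned convolutions, channel split, and fusion step are omitted, and the edge operator (adding back the residual between a map and its local mean) as well as the names `local_mean`, `enhance_edges`, `downsample`, and `multi_scale_edges` are assumptions made for illustration.

```python
def local_mean(img, radius=1):
    """Stride-1 average pooling over a (2*radius+1)^2 window.

    Border pixels average only over in-bounds neighbours, so a constant
    map stays constant after pooling.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            rows = range(max(0, i - radius), min(h, i + radius + 1))
            cols = range(max(0, j - radius), min(w, j + radius + 1))
            vals = [img[r][c] for r in rows for c in cols]
            out[i][j] = sum(vals) / len(vals)
    return out


def enhance_edges(img, radius=1):
    """Add the high-frequency residual (img - local mean) back onto img."""
    blur = local_mean(img, radius)
    return [[p + (p - b) for p, b in zip(row, blur_row)]
            for row, blur_row in zip(img, blur)]


def downsample(img, factor):
    """Non-overlapping average pooling by an integer factor."""
    h, w = len(img) // factor, len(img[0]) // factor
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            block = [img[i * factor + di][j * factor + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def multi_scale_edges(img, factors=(1, 2, 4, 8)):
    """Edge-enhance the map at four pooled scales (factors are assumed)."""
    return [enhance_edges(downsample(img, f)) for f in factors]
```

On a flat region the residual is zero and the map is returned unchanged, whereas across a step edge the contrast grows, which is the behaviour one would expect from an edge-enhancement branch.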

3.2. CGRFPN

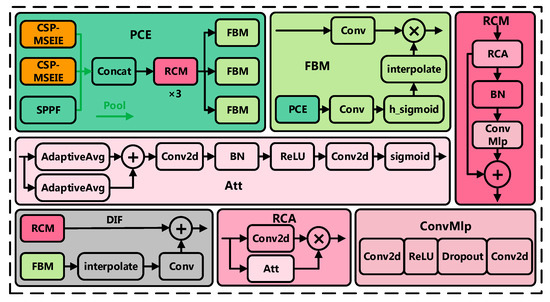

To tackle the challenge of diminished detection accuracy for minute targets in insulator and wind turbine blade inspection, this paper employs a method that strengthens multi-scale feature fusion. The core objective is to augment the semantic richness of features associated with small targets while preserving their fine-grained spatial details, thereby enhancing the model’s classification and localization precision. To realize this objective, we introduce a complete reconstruction of the neck network, termed CGRFPN, which is architecturally founded upon two novel components: the Rectangular Self-Calibration Module (RCM) and the Pyramid Context Extraction (PCE) module, as depicted in Figure 3.

Figure 3. Structure diagram of each module of CGRFPN.

The CGRFPN feature fusion network is designed with a multi-scale architecture that captures global context from both horizontal and vertical directions and leverages a ConvMLP to enhance feature representation. This network processes input features using the PCE module, which downsamples features to different scales via average pooling and integrates them across levels through channel-wise concatenation. Subsequently, stacked RCM modules are incorporated to facilitate rich, cross-level feature interaction. This design substantially improves the extraction of multi-scale spatial features and strengthens contextual awareness, which are critical for detecting small-scale defects.
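As a rough illustration of capturing global context along the horizontal and vertical axes, the sketch below pools a single-channel feature map along each axis, broadcasts the two pooled vectors back into a rectangular attention map, and uses it to recalibrate the features. This is a simplification under stated assumptions: the strip convolutions, ConvMLP, and multi-level concatenation of the actual RCM and PCE modules are omitted, and the function names are hypothetical.

```python
import math


def axis_context(feat):
    """Return per-row and per-column means of a 2D feature map."""
    h, w = len(feat), len(feat[0])
    row_mean = [sum(row) / w for row in feat]
    col_mean = [sum(feat[i][j] for i in range(h)) / h for j in range(w)]
    return row_mean, col_mean


def rectangular_attention(feat):
    """Broadcast-add row and column context, then squash with a sigmoid."""
    row_mean, col_mean = axis_context(feat)
    return [[1.0 / (1.0 + math.exp(-(r + c))) for c in col_mean]
            for r in row_mean]


def recalibrate(feat):
    """Reweight each position by its rectangular attention value."""
    attn = rectangular_attention(feat)
    return [[f * a for f, a in zip(f_row, a_row)]
            for f_row, a_row in zip(feat, attn)]
```

Positions lying on both an active row and an active column receive the largest weight, which is why this kind of axis-wise pooling tends to highlight compact rectangular regions.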

Furthermore, the design incorporates the Fuse Block Multi-Scale (FBM) and Dynamic Interpolation Fusion (DIF) modules to enable an adaptive, weighted fusion of features from disparate resolutions. This design significantly enhances feature representation in critical regions and outputs enriched features with greater detail, ultimately achieving a comprehensive optimization of both global context and local details. In essence, the CGRFPN architecture facilitates a profound integration of cross-level features with minimal additional computational overhead. This targeted design significantly improves the model’s generalization, leading to a substantial enhancement in its recognition performance for minute defects.

3.3. LSCD

Deployment scenarios involving unmanned aerial vehicles (UAVs) for defect inspection impose stringent constraints on model complexity due to limited onboard computational resources and real-time processing demands. Under these conditions, it becomes essential to reduce both the number of parameters and the computational load of the detection network. Within the YOLOv8n architecture, the backbone and neck are the most influential components regarding both parameter count and overall performance; consequently, attempts to slim down these core structures tend to degrade accuracy significantly. In contrast, the detection head, which constitutes approximately 25% of the total parameters, offers greater optimization potential. Given its comparatively lower impact on final performance, optimizing the head offers the most favorable trade-off, enabling substantial reductions in computational cost while preserving detection accuracy.

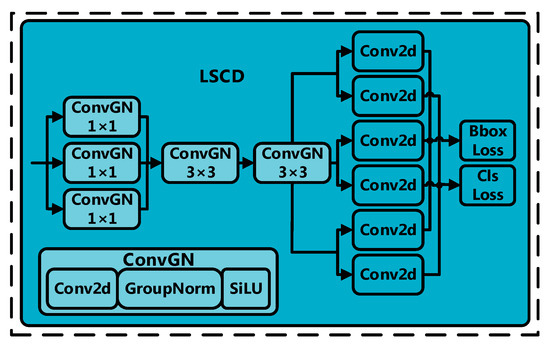

The standard YOLOv8n employs a decoupled head structure where each of its two branches performs two 3 × 3 convolutions and one 1 × 1 convolution. This hierarchical design inherently introduces significant parameter redundancy and computational overhead, which impedes inference speed and limits real-time applicability. To address this bottleneck and meet real-time processing requirements, this paper introduces a lightweight detection head, the LSCD, which eliminates this redundancy through structural reconstruction to significantly increase detection speed while maintaining accuracy.

As shown in Figure 4, the LSCD utilizes a shared convolutional layer architecture to improve feature reuse efficiency. This approach applies a unified convolutional kernel to multi-level input features, thereby establishing a parameter-sharing mechanism that eliminates the redundancy inherent in independent convolutional layers and markedly reduces model complexity. Furthermore, LSCD replaces standard convolutions with Conv_GN, which employs GroupNorm [23] to partition channels into multiple groups and normalize each group using a separate mean and variance. This strategy is critical for mitigating the performance degradation often observed with BatchNorm during small-batch training, a common scenario for resource-constrained platforms, thus enhancing overall model stability and accuracy.
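The effect of sharing one set of convolutional weights across detection levels can be checked with simple arithmetic. The sketch below counts the weights of a stack of 3 × 3 convolutions when each of three levels owns its stack versus when all levels reuse a single stack. The channel width of 64 and the helper names are hypothetical, and sharing presumes that every level has first been projected to the same channel count.

```python
def conv_params(c_in, c_out, k, bias=False):
    """Number of learnable parameters in a k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def branch_params(channels, k=3, n_convs=2):
    """Parameters of a stack of n_convs k x k convolutions at fixed width."""
    return n_convs * conv_params(channels, channels, k)


levels = 3      # number of detection scales
channels = 64   # hypothetical common channel width

independent = levels * branch_params(channels)  # one stack per level
shared = branch_params(channels)                # one stack reused by all levels
saving = 1 - shared / independent
```

Under these assumptions the shared stack needs one third of the weights of the independent design, so the saving grows with the number of detection levels.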

Figure 4. Structure diagram of LSCD.
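To make the GroupNorm step used in Conv_GN concrete, the following sketch normalizes the channels of a single sample group by group. It is a minimal illustration that omits the learnable scale and shift, and the data layout (a list of per-channel value lists) is an assumption chosen for readability.

```python
import math


def group_norm(channels, num_groups, eps=1e-5):
    """Normalize one sample: `channels` is a list of per-channel value lists."""
    if len(channels) % num_groups != 0:
        raise ValueError("channel count must be divisible by num_groups")
    size = len(channels) // num_groups
    out = []
    for g in range(num_groups):
        group = channels[g * size:(g + 1) * size]
        vals = [v for ch in group for v in ch]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        scale = 1.0 / math.sqrt(var + eps)
        # Every channel in the group shares the same mean and variance.
        out.extend([[(v - mean) * scale for v in ch] for ch in group])
    return out
```

Because the statistics are computed within one sample, the result does not depend on how many other samples are in the batch, which is the property that makes it attractive for small-batch training.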

3.4. WIoU

In the insulator and wind turbine blade defect datasets, some low-quality samples (e.g., images with blurry targets or unclear boundaries) lead to poor bounding box regression for defect targets, which severely constrains detection accuracy. Therefore, this paper introduces the Wise-Intersection over Union (WIoU) [24] loss function, which employs a dynamic non-monotonic focusing mechanism. This mechanism adaptively allocates gradient gains according to the quality of each anchor box: anchors of ordinary quality receive the largest gradient gain, while both very high-quality and low-quality anchors are down-weighted. This selective optimization strategy effectively suppresses the negative impact of ambiguous samples, improving both the robustness and precision of localization.

The bounding box loss function adopted by the YOLOv8 model is the Complete Intersection over Union (CIoU). CIoU builds upon IoU by introducing the aspect ratio of the bounding box as a penalty term to further enhance the model’s localization accuracy during detection. It is defined by the following equation:

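$$
L_{CIoU} = L_{IoU} + \frac{(x - x_{gt})^2 + (y - y_{gt})^2}{W_g^2 + H_g^2} + \alpha v
$$

$$
L_{IoU} = 1 - IoU
$$

$$
\alpha = \frac{v}{L_{IoU} + v}
$$

$$
v = \frac{4}{\pi^2}\left(\arctan\frac{w}{h} - \arctan\frac{w_{gt}}{h_{gt}}\right)^2
$$

where $(x, y)$ and $(x_{gt}, y_{gt})$ denote the center points of the predicted box and the ground truth box, respectively; $\alpha$ is a weighting coefficient; $L_{IoU}$ is the loss function of IoU, which measures the degree of overlap between the predicted and ground truth boxes; $v$ measures the shape difference between the predicted and ground truth boxes; $W_g$ and $H_g$ are the width and height of the smallest enclosing box containing both the ground truth and predicted boxes, respectively; $w$, $h$, $w_{gt}$, and $h_{gt}$ are the widths and heights of the predicted and ground truth bounding boxes, respectively; and IoU is the ratio of the overlapping area between the predicted box and the ground truth box to the union of their areas.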
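A minimal sketch of the CIoU loss in its standard formulation (IoU loss plus a normalized center-distance term plus an aspect-ratio penalty) is given below. Boxes are assumed to be `(cx, cy, w, h)` tuples, and the function names are illustrative rather than taken from any particular framework.

```python
import math


def corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def iou(pred, gt):
    """Intersection over union of two (cx, cy, w, h) boxes."""
    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    return inter / union


def ciou_loss(pred, gt):
    """IoU loss + normalized center distance + aspect-ratio penalty."""
    l_iou = 1.0 - iou(pred, gt)
    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)
    # Width and height of the smallest box enclosing both boxes.
    wg = max(px2, gx2) - min(px1, gx1)
    hg = max(py2, gy2) - min(py1, gy1)
    dist = ((pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2) / (wg ** 2 + hg ** 2)
    v = 4 / math.pi ** 2 * (math.atan(pred[2] / pred[3]) - math.atan(gt[2] / gt[3])) ** 2
    alpha = v / (l_iou + v) if v > 0 else 0.0
    return l_iou + dist + alpha * v
```

For two boxes of the same shape the aspect-ratio term vanishes, so the loss reduces to the IoU loss plus the center-distance penalty.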

In the actual detection process, CIoU applies the same penalty weight to all predictions, thereby ignoring the quality differences among samples. This indiscriminate weighting scheme can lead the model to over-prioritize high-quality samples, while simultaneously failing to learn effectively from low-quality examples. To overcome this deficiency, the loss function is optimized using WIoU, which features an adaptive gradient gain adjustment mechanism. WIoU redefines the quality assessment of anchor boxes by introducing a metric for “outlier degree,” which facilitates a more judicious allocation of gradient gains. This strategy effectively de-weights the gradient contribution from both high-quality anchor boxes (reducing their overwhelming influence) and low-quality examples (mitigating harmful gradients). Consequently, WIoU focuses more on anchor boxes of average quality, thereby enhancing the model’s overall generalization and regression accuracy. The specific implementation in this study utilizes WIoUv3, which is defined by the following equation:

$$
L_{WIoUv3} = r \cdot R_{WIoU} \cdot L_{IoU}
$$

$$
R_{WIoU} = \exp\left(\frac{(x - x_{gt})^2 + (y - y_{gt})^2}{\left(W_g^2 + H_g^2\right)^{*}}\right)
$$

$$
\beta = \frac{L_{IoU}^{*}}{\overline{L_{IoU}}}
$$

$$
r = \frac{\beta}{\delta \alpha^{\beta - \delta}}
$$

where $\beta$ is the outlier degree; $\overline{L_{IoU}}$ is the running mean of $L_{IoU}$; $r$ is the non-monotonic focusing coefficient; * denotes the detach operation, which separates the marked quantities, including $W_g$ and $H_g$, from the computational graph; and $\alpha$ and $\delta$ are hyperparameters. The “outlier degree”, a concept introduced in WIoUv3, serves as the primary metric for quantifying anchor box quality. A smaller outlier degree signifies a higher-quality anchor box, which is assigned a smaller gradient gain, thereby shifting the focus of bounding box regression onto anchor boxes of average quality. Conversely, a larger outlier degree indicates a lower-quality anchor box, and by allocating a smaller gradient gain, the mechanism prevents these anchors from generating excessive harmful gradients. This dynamic, non-monotonic gradient allocation strategy ensures that the training process is not dominated by either overly simple or excessively challenging examples, thereby leading to a more robust and accurate bounding box regression.
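The non-monotonic behaviour of the WIoUv3 gradient gain is easy to verify numerically. The sketch below assumes the usual form of the focusing coefficient, r = β / (δ · α^(β − δ)), with α = 1.9 and δ = 3 chosen as illustrative values; the hyperparameters actually used in this study are not stated in the text. In plain Python there is no automatic differentiation, so the detach operation has no counterpart here.

```python
import math


def focusing_gain(beta, alpha=1.9, delta=3.0):
    """Gradient gain r as a function of the outlier degree beta."""
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3_loss(l_iou, center_dist_sq, wg, hg, mean_l_iou,
                 alpha=1.9, delta=3.0):
    """WIoUv3 loss for one anchor box.

    l_iou:          IoU loss (1 - IoU) of this anchor
    center_dist_sq: squared distance between predicted and ground truth centers
    wg, hg:         width and height of the smallest enclosing box
    mean_l_iou:     running mean of the IoU loss over recent anchors
    """
    r_wiou = math.exp(center_dist_sq / (wg ** 2 + hg ** 2))
    beta = l_iou / mean_l_iou  # outlier degree
    return focusing_gain(beta, alpha, delta) * r_wiou * l_iou
```

With these assumed values the gain equals 1 at β = δ, peaks for anchors of moderate outlier degree, and falls off for both very small and very large β, which matches the qualitative description above.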

4. Experimental Results

To validate the effectiveness of the IPE-YOLO model, extensive experiments were conducted on the insulator and blade datasets. This section details the datasets, experimental conditions, evaluation metrics, and experimental results.

4.1. Datasets

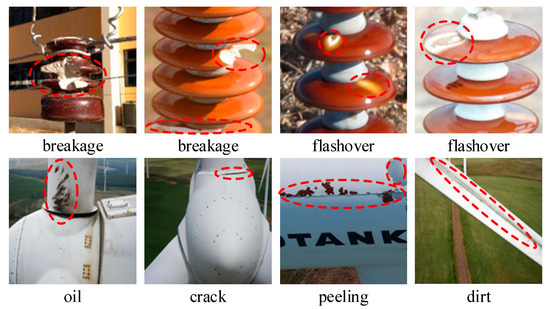

To facilitate robust training and evaluation, two custom-built datasets were constructed for this study, focusing on insulator and wind turbine blade defects, respectively. The first dataset, dedicated to insulator inspection, comprises 1195 images and encompasses three distinct classes: ‘insulator’, ‘flashover’, and ‘breakage’. The second, focusing on wind turbine blade inspection, consists of 4467 images categorized into seven classes: ‘burning’, ‘crack’, ‘deformity’, ‘dirt’, ‘oil’, ‘peeling’, and ‘rusty’. All images across both datasets were meticulously annotated using LabelImg 1.8.6. Following annotation, the entire collection was partitioned into training and validation sets according to a 7:3 ratio. Representative samples from each dataset are illustrated in Figure 5.
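A 7:3 partition of an annotated image list can be reproduced with a few lines of Python. The sketch below assumes a seeded random shuffle applied to each dataset separately; the text does not specify how the split was drawn, so the shuffling strategy, the seed, and the function name are illustrative.

```python
import random


def split_dataset(items, train_parts=7, val_parts=3, seed=0):
    """Shuffle reproducibly and split into (train, val) by an integer ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)
    cut = len(items) * train_parts // (train_parts + val_parts)
    return items[:cut], items[cut:]


# Hypothetical file names standing in for the annotated images.
image_names = [f"img_{i:04d}.jpg" for i in range(10)]
train_set, val_set = split_dataset(image_names)
```

Using integer arithmetic for the cut point avoids floating-point rounding surprises when the dataset size is not a multiple of ten.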

Figure 5. Samples of insulator and wind turbine blade defects. Defects are highlighted in red.

4.2. Experimental Conditions

To guarantee strict comparability among all models, the compute and storage budgets—as well as other hardware and runtime conditions—were held constant throughout all experiments, thereby eliminating confounding factors related to hardware variability. All experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 4090 GPU, running the Ubuntu 24.02 operating system. The development environment was based on CUDA 12.4, PyTorch 2.4, and Python 3.10.

To ensure a fair and direct comparison, both the baseline and the proposed models were trained and validated using an identical set of hyperparameters. The primary training parameters were set as follows: an input image size of 640 × 640, a batch size of 32, a momentum of 0.937, a weight decay of 0.0005, and a learning rate of 0.01. The models were trained for 350 epochs on the insulator dataset and 235 epochs on the wind turbine blade dataset, respectively, to ensure full convergence.

4.3. Evaluation Metrics

The performance of the proposed model is quantitatively evaluated using a suite of standard object detection metrics: precision, recall, and mean average precision (mAP). Precision is the proportion of correct detections among all positive detections and is used to evaluate the model’s detection accuracy. Recall is the ratio of correctly identified targets to the total number of targets in the test set, which assesses the model’s ability to comprehensively identify all correct samples. The mean average precision is the most important performance evaluation metric, representing the average precision across all classes, and is determined by both precision and recall. The mAP value calculated at an Intersection over Union (IoU) threshold of 0.5 is denoted as mAP0.5, while the average mAP computed over multiple thresholds in the 0.5–0.95 IoU range is denoted as mAP0.5–0.95. The specific definitions for each metric are as follows:

$$
P = \frac{TP}{TP + FP}
$$

$$
R = \frac{TP}{TP + FN}
$$

$$
AP = \int_0^1 P(R)\,dR
$$

$$
mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i
$$

where TP is the number of true positives, FP is the number of false positives, and FN is the number of false negatives. The denominator, TP + FP, represents the total number of detections made by the system. AP, or average precision, is used to measure the model’s detection performance for a single class. P(R) is the precision at a recall level R, and N is the total number of classes. The mAP is the mean of the AP values across all classes.
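The metric definitions above translate directly into code. The sketch below approximates AP as the area under the precision-recall curve using the trapezoidal rule; evaluation toolkits typically apply an interpolated precision envelope first, so this is a simplified illustration rather than the exact procedure used in the experiments.

```python
def precision(tp, fp):
    """Fraction of detections that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of ground truth targets that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls, precisions):
    """Area under the P-R curve via the trapezoidal rule."""
    pts = sorted(zip(recalls, precisions))
    area = 0.0
    for (r0, p0), (r1, p1) in zip(pts, pts[1:]):
        area += (r1 - r0) * (p0 + p1) / 2
    return area


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, 8 true positives with 2 false positives give a precision of 0.8, and the same 8 true positives with 8 missed targets give a recall of 0.5.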

4.4. Experimental Analysis

4.4.1. Result Analysis

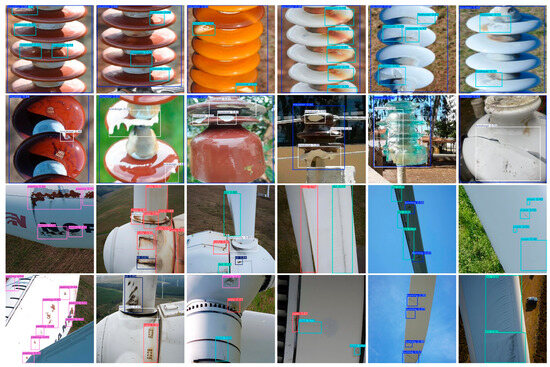

To qualitatively assess the model’s real-world performance, the IPE-YOLO model was tested on a representative set of images encompassing all defect categories. This selection deliberately included defects exhibiting significant scale variations—from small-scale targets such as ‘flashover’ and ‘burning’ to expansive ones like ‘breakage’ and ‘dirt’—thereby providing a rigorous test of the model’s multi-scale detection capabilities. The detection results are shown in Figure 6. As illustrated, the IPE-YOLO model demonstrates remarkable robustness in detecting the various defect types. It successfully localizes diminutive targets like ‘flashover’ and ‘burning’, despite these features subtending a minimal pixel area within the image. This high sensitivity to fine-grained details is directly attributable to the enhanced multi-scale feature extraction and fusion mechanisms integrated into the IPE-YOLO architecture. Collectively, these visual results confirm the efficacy of the proposed IPE-YOLO model, validating its ability to achieve precise and reliable localization of defects across a wide spectrum of scales.

Figure 6. Display of test results.

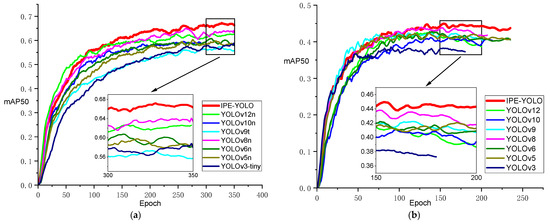

To verify the stability and reproducibility of the observed improvements in detection accuracy, two sets of repeated experiments were conducted. As summarized in Table 1 and Table 2, the proposed method achieved accuracy gains of 2.6% and 2.9% on the two respective datasets, confirming the robustness of the performance enhancements. Furthermore, the mAP curves obtained on both datasets are shown in Figure 7. The curves have been smoothed using the adjacent-averaging method: each smoothed point is the average of that point and a total of five points before and after it. This smoothing suppresses large random fluctuations in the training curves, thereby revealing clearer performance trends. The curves indicate that the mAP of the IPE-YOLO model surpasses that of other mainstream YOLO models of a comparable scale, such as YOLOv12n and YOLOv10n. This result confirms that the adopted improvements have enhanced the model’s detection accuracy, enabling more precise and comprehensive recognition of defect targets.
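Adjacent averaging is a centered moving average. The sketch below assumes a five-point window (the point itself plus two neighbours on each side) and shrinks the window at the ends of the curve; the exact window definition and boundary handling used for the figures are not specified, so both are assumptions.

```python
def adjacent_average(values, window=5):
    """Centered moving average; the window is truncated at the boundaries."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed
```

A single spike in an otherwise flat curve is spread over its neighbours and reduced in height, which is how the smoothing suppresses epoch-to-epoch fluctuations in the mAP curves.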

Table 1. Results of repeated experiments on the insulator dataset.

Table 2. Results of repeated experiments on the blade dataset.

Figure 7. (a) mAP curves of each model on the insulator dataset; (b) mAP curves of each model on the blade dataset.

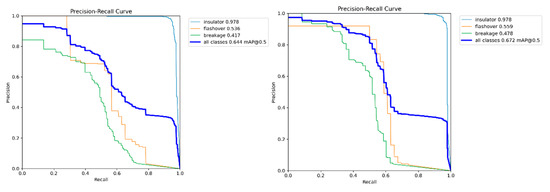

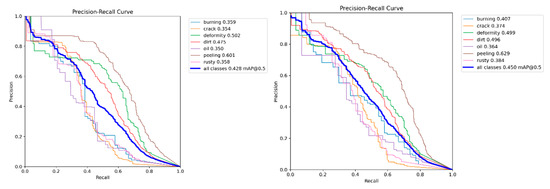

A comparative analysis of the precision–recall (P-R) curves for the proposed IPE-YOLO and the baseline YOLOv8n model is presented in Figure 8 and Figure 9 for the insulator and wind turbine blade datasets, respectively. The plots illustrate the P-R curves for each class at an IoU threshold of 0.5. A superior P-R curve lies closer to the top-right corner, as the area under the curve corresponds directly to the average precision. On the insulator dataset, the most substantial improvement is observed for the ‘breakage’ class. This class is characterized by considerable scale variation, with some instances occupying a minimal pixel area. The proposed improvements effectively enhance the model’s ability to detect such multi-scale defects, increasing its accuracy by 6.1%. Additionally, the curve for the ‘flashover’ class becomes steeper, indicating that the model maintains high precision for flashover defects even at high recall rates, with detection accuracy improving by 4.2%. Similarly, on the wind turbine blade dataset, the performance gain for the ‘burning’ class is the most pronounced. Given that ‘burning’ defects are typically small-scale objects, the 4.8 percentage point AP increase validates the model’s effectiveness in mitigating the common challenge of small object detection. Concurrently, for the ‘peeling’ defect, which also exhibits large scale variations, the model’s optimization resulted in an increased area under the curve, improving its detection accuracy by 2.8%.

Figure 8. P-R curve of IPE-YOLO on the insulator dataset.

Figure 9. P-R curve of IPE-YOLO on the blade dataset.

Overall, the comparative P-R curve analysis confirms that the proposed improvements substantially enhance the model’s multi-scale defect detection capability. This improvement is especially evident in classes involving small or scale-variable targets, which traditionally pose challenges for general-purpose object detectors.

4.4.2. Comparative Experiment

To better evaluate the proposed lightweight model, IPE-YOLO, comparative experiments were conducted on the insulator and blade datasets against other mainstream YOLO algorithms of a comparable scale, including YOLOv3 [25], YOLOv5 [26], YOLOv6 [27], YOLOv8 [28], YOLOv9 [29], YOLOv10 [30], and YOLOv12 [31]. To ensure a fair comparison, all experiments were conducted using identical hyperparameters and learning rates, with the results presented in Table 3 and Table 4.

Table 3. Experimental results comparing different algorithmic models on the insulator dataset.

Table 4. Experimental results comparing different algorithmic models on the blade dataset.

On the insulator dataset, the IPE-YOLO model achieved the best detection accuracy of 67.2%. In comparison with YOLOv3-tiny, a representative of early lightweight object detection models, IPE-YOLO reduced the number of parameters and computational cost by 78.3% and 66.4%, respectively, while achieving a 3% improvement in detection accuracy. Compared to YOLOv5n, an algorithm widely applied in real-time detection scenarios, IPE-YOLO improved detection accuracy by 6.1% with a 14% reduction in computational cost. The improvements were also evident when compared with YOLOv6n and YOLOv9t, with IPE-YOLO achieving 10.1% and 5.4% higher accuracy, respectively. In contrast to the state-of-the-art YOLOv12n, IPE-YOLO demonstrated a superior balance between lightweight design and accuracy, achieving a 20.8% accuracy improvement with a similar number of parameters and computational cost. Finally, compared with the baseline model, YOLOv8n, IPE-YOLO improved accuracy by 2.6% while reducing the number of parameters and computational cost by 12.3% and 24.7%, respectively. These results confirm that IPE-YOLO delivers notable improvements in multi-scale defect detection while further optimizing model efficiency.

On the blade dataset, the IPE-YOLO model also achieved the best detection performance, with an accuracy of 45.0%. Compared to earlier lightweight models such as YOLOv3-tiny and YOLOv5n, it improved accuracy by 6.0% and 2.4%, respectively. In comparison with YOLOv6n and YOLOv9t, IPE-YOLO achieved accuracy gains of 1.8% and 2.2%, respectively. Similarly, when compared with the state-of-the-art YOLOv12n model, IPE-YOLO delivered a 2% increase in accuracy, demonstrating higher detection performance with a comparable lightweight design. Furthermore, compared to the baseline YOLOv8n model, IPE-YOLO’s detection accuracy was 2.9% higher, which confirms the effectiveness of the proposed model for the wind turbine blade defect detection task.

To visually demonstrate the improvement, we conducted comparative detection tests on sample images using the proposed IPE-YOLO model and several mainstream YOLO models, with the results shown in Figure 10. In the figure, red dashed boxes highlight missed defects, red dashed lines indicate multiple overlapping detection boxes, and red dashed circles denote false detections. The results indicate that the comparison models suffer from numerous missed and false detections in the insulator and wind turbine blade inspection tasks and are prone to generating redundant detection boxes for small targets. Conversely, IPE-YOLO accurately localizes even very small defects with high confidence, reducing both false detections and missed detections. This visual comparison supports the efficacy of the proposed architectural enhancements.

Figure 10.

Test results of comparative experiment.

Overall, IPE-YOLO achieves a dual optimization objective: it improves detection accuracy over the baseline while concurrently reducing both parameter count and computational complexity. This accuracy–efficiency balance makes the model particularly suitable for onboard deployment in UAV inspection systems, facilitating practical real-time defect detection for power grid insulator and wind turbine blade maintenance.

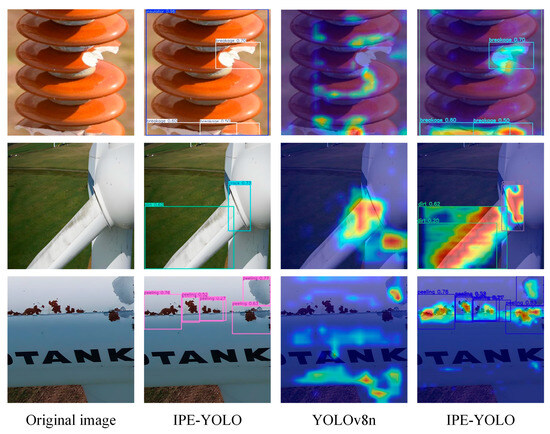

To provide insight into the models’ feature localization capabilities and attention distribution, we employed Gradient-weighted Class Activation Mapping (Grad-CAM) [32] to generate heatmap visualizations, as presented in Figure 11. The trained IPE-YOLO model was used to detect defects in images from both datasets, demonstrating its ability to accurately locate the targets. In these visualizations, a more effective result is indicated by red areas that are highly concentrated on the target’s center and interior. The comparative results in the figure show that the YOLOv8n model struggles to capture the target region and its attention is dispersed. In contrast, the attention of the improved IPE-YOLO model is consistently focused within the boundaries of the detected targets. Compared with YOLOv8n, IPE-YOLO exhibits a higher degree of focus on the defect regions, which indicates that it possesses a stronger ability to extract and integrate defect-relevant features while effectively suppressing interference from irrelevant background information. Such targeted attention distribution directly contributes to the improved detection accuracy observed in both insulator and wind turbine blade defect inspections, reinforcing the conclusion that the proposed enhancements lead to superior feature representation and localization robustness.

Figure 11.

Grad-CAM heat map visualization.
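To make the heatmap construction concrete, the following is a minimal sketch of the Grad-CAM computation [32] for a single target score: each channel weight is the spatial average of the gradient of the score with respect to that feature map, and the heatmap is the ReLU of the weighted sum of feature maps. The function name, the nested-list representation, and the toy inputs are assumptions for illustration; in practice the activations and gradients would be taken from a chosen layer of the trained detector.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients.

    activations, gradients: lists of K maps, each an H x W nested list.
    Returns an H x W heatmap normalized to [0, 1].
    """
    k = len(activations)
    h = len(activations[0])
    w = len(activations[0][0])
    # Channel weights: global average of the gradients over spatial positions.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of the feature maps, followed by ReLU.
    cam = [
        [max(0.0, sum(weights[c] * activations[c][i][j] for c in range(k)))
         for j in range(w)]
        for i in range(h)
    ]
    # Normalize by the peak value so the map can be rendered as a heatmap.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy example: channel 0 supports the target score, channel 1 opposes it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(acts, grads))
```

The ReLU discards locations that only reduce the target score, which is why a well-focused model shows high values concentrated inside the defect region.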

4.4.3. Ablation Experiment

To quantify the contribution of each proposed component, this section presents an ablation study. The CSP_MSEIE module, CGRFPN neck, LSCD detection head, and WIoU loss function were added incrementally to the YOLOv8n baseline, and their effects on mAP0.5, parameter count, and GFLOPs were measured on both datasets, as shown in Table 5 and Table 6. First, replacing the C2f module with the proposed CSP_MSEIE module yields a dual benefit: it reduces both parameter count and computational cost while improving detection accuracy. Integrating the WIoU loss function provides a notable accuracy gain at no additional parameter or computational cost. Although the reconstructed CGRFPN neck slightly increases the number of parameters, it also improves detection accuracy. Finally, replacing the detection head with LSCD reduces the model’s parameter count by 19.8% and its computational load by a further 19.7%, while also improving detection accuracy. This offsets the parameter increase introduced by the CGRFPN neck and contributes to the final model’s overall efficiency.

Table 5.

Ablation results of the IPE-YOLO algorithm on the insulator dataset.

Table 6.

Ablation results of the IPE-YOLO algorithm on the blade dataset.
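The parameter saving attributed to the LSCD head comes from sharing convolutional weights across detection levels. The sketch below illustrates only that general principle and is not the actual LSCD layout: it assumes three detection levels that have already been projected to a common channel width, with two 3×3 convolutions per branch. The channel width of 64 and all function names are hypothetical.

```python
def conv_params(c_in, c_out, kernel, bias=True):
    """Number of learnable parameters in a standard 2D convolution."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def head_params(channels, num_levels, shared):
    """Parameters of a toy head with two 3x3 convolutions per branch.

    shared=False: every detection level owns its own pair of convolutions.
    shared=True:  one pair of convolutions is reused by all levels.
    """
    per_branch = conv_params(channels, channels, 3) * 2
    return per_branch if shared else per_branch * num_levels


separate = head_params(channels=64, num_levels=3, shared=False)
shared = head_params(channels=64, num_levels=3, shared=True)
print(f"separate: {separate}, shared: {shared}, ratio: {separate / shared:.1f}x")
```

In this toy setting the shared variant needs one third of the convolutional parameters. The saving in a real head is smaller, since level-specific layers such as channel projections and output layers are not shared.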

The ablation results show that each proposed improvement contributed to detection accuracy to a varying degree. Compared with YOLOv8n, IPE-YOLO reduces the parameter count from 3.0 M to 2.63 M and the computational load from 8.1 GFLOPs to 6.1 GFLOPs, reductions of 12.3% and 24.7%, respectively, making it more suitable for deployment on resource-constrained inspection UAVs. Furthermore, the CSP_MSEIE module and the CGRFPN neck enhance multi-scale feature extraction and fusion and improve the interaction between deep and shallow features. This allows IPE-YOLO to maintain reliable detection accuracy while remaining lightweight.
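The reported reductions follow directly from the parameter and GFLOPs figures quoted above. As a quick check, the relative reduction can be computed as (baseline − improved) / baseline; the helper name below is hypothetical.

```python
def relative_reduction(baseline, improved):
    """Relative reduction in percent, measured against the baseline value."""
    return (baseline - improved) / baseline * 100.0


# Figures quoted in the text: parameters in millions, cost in GFLOPs.
param_cut = relative_reduction(3.0, 2.63)
flops_cut = relative_reduction(8.1, 6.1)
print(f"parameters: {param_cut:.1f}%, GFLOPs: {flops_cut:.1f}%")
```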

Focusing on insulator and wind turbine blade inspections, this study introduces an innovative approach by jointly addressing two distinct detection tasks that share the common challenge of multi-scale target detection. To tackle this problem, we propose IPE-YOLO, a systematically enhanced lightweight detection model. The architecture integrates a multi-scale feature extraction module (CSP_MSEIE) and a feature fusion network (CGRFPN) to improve detection accuracy, with particular emphasis on small defect localization. Furthermore, the incorporation of the WIoU bounding box regression loss and the substitution of the baseline detection head with the LSCD head enhance detection precision while simultaneously reducing parameter count and computational cost. These targeted optimizations distinguish IPE-YOLO from conventional YOLO-based models, with the specific modifications and their quantitative impacts summarized in Table 7. Collectively, these enhancements enable IPE-YOLO to deliver superior detection performance and improved inspection efficiency in the context of UAV-based insulator and wind turbine blade defect detection.

Table 7.

Differences and impact between IPE-YOLO and early designs.

5. Conclusions

To address the low accuracy of multi-scale defect detection in insulator and wind turbine blade inspection tasks, this paper proposes a lightweight defect detection model based on YOLOv8, namely IPE-YOLO. The model improves detection accuracy and efficiency simultaneously by significantly reducing parameter count and computational complexity while enhancing multi-scale detection performance. The proposed CSP_MSEIE module, constructed using the CSP architecture to replace the baseline C2f block, enhances feature representation capacity while contributing to model lightweighting. The CGRFPN neck is reconstructed using the PCE and RCM modules to strengthen feature fusion, comprehensively integrating features from different levels and thereby improving the model’s contextual awareness. Furthermore, the WIoU bounding box loss function, which features a dynamic non-monotonic focusing mechanism, is introduced to balance the influence of samples of varying quality on detection accuracy, further improving model performance. Finally, the LSCD detection head, employing a shared convolutional layer architecture, enables feature sharing across detection layers, substantially reducing both parameters and FLOPs while improving feature extraction efficiency.

Experiments on two datasets verify the effectiveness of the IPE-YOLO model. Compared with YOLOv8n, IPE-YOLO improves mAP0.5 by 2.6% on the insulator dataset and 2.9% on the blade dataset. Its number of parameters is reduced from 3.0 M to 2.63 M (a 12.3% decrease), and its computational cost is reduced from 8.1 GFLOPs to 6.1 GFLOPs (a 24.7% decrease). In comparison to mainstream YOLO detection algorithms of a similar scale, IPE-YOLO exhibits a better trade-off between detection accuracy and lightweight design, demonstrating its feasibility for insulator and wind turbine blade defect detection. Furthermore, each of the proposed improvements contributes to the model’s detection performance to a varying degree. The resulting model not only achieves higher detection accuracy than the original but also has a substantially lower parameter count and computational load, which facilitates its deployment on resource-constrained UAV equipment.

Looking ahead, our future work will focus on translating these research advancements into practice. The primary objective is the deployment of the IPE-YOLO model onto a custom-developed UAV inspection platform. This will involve rigorous field testing to validate and enhance its robustness and practical utility in complex operational environments. Concurrently, we will continue to pursue algorithmic refinements to further advance the state of the art in efficient object detection.

Author Contributions

Conceptualization, C.X. and M.X.; methodology, M.X. and K.C.; software, Z.C. and Y.M.; validation, Z.C., M.X. and D.L.; formal analysis, Z.C. and C.X.; investigation, K.C.; resources, C.X.; data curation, Z.C. and D.L.; writing—original draft preparation, M.X. and Z.C.; writing—review and editing, K.C. and Y.M.; supervision, M.X.; project administration, K.C.; funding acquisition, M.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Liaoning Province, grant number 2025-BS-0448; the Liaoning Province Transportation Science and Technology Project, grant number 2024066; and the Fundamental Research Funds for the Provincial Universities of Liaoning, grant number LJ212410150040.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Anthony, K.; Arunachalam, V. Voltage Stability Monitoring and Improvement in a Renewable Energy Dominated Deregulated Power System: A Review. E-Prime-Adv. Electr. Eng. Electron. Energy 2025, 11, 100893.

- Saleh, A.M.; István, V.; Khan, M.A.; Waseem, M.; Ali Ahmed, A.N. Power System Stability in the Era of Energy Transition: Importance, Opportunities, Challenges, and Future Directions. Energy Convers. Manag. X 2024, 24, 100820.

- He, W.; Gao, Q.; Chen, X.; Zhang, Z.; Guo, J.; Han, C.; Lin, Z. Development Model and Path of Future Power Grids under the Ubiquitous Electrical Internet of Things. In Proceedings of the 2019 IEEE 3rd Conference on Energy Internet and Energy System Integration (EI2), Changsha, China, 8–10 November 2019; pp. 599–603.

- Dai, J.; Yang, W.; Cao, J.; Liu, D.; Long, X. Ageing Assessment of a Wind Turbine over Time by Interpreting Wind Farm SCADA Data. Renew. Energy 2018, 116, 199–208.

- Liao, G.; Zhao, Y.; Hong, Z.; Zhang, Z.; Zeng, H. Closed-Loop Control of Biaxial Fatigue Loading of Wind Turbine Blades Based on Visual Inspection. J. Phys. Conf. Ser. 2023, 2503, 012088.

- Bueno-Barrachina, J.-M.; Ye-Lin, Y.; Nieto-del-Amor, F.; Fuster-Roig, V. Inception 1D-Convolutional Neural Network for Accurate Prediction of Electrical Insulator Leakage Current from Environmental Data during Its Normal Operation Using Long-Term Recording. Eng. Appl. Artif. Intell. 2023, 119, 105799.

- Stefenon, S.F.; Furtado Neto, C.S.; Coelho, T.S.; Nied, A.; Yamaguchi, C.K.; Yow, K.-C. Particle Swarm Optimization for Design of Insulators of Distribution Power System Based on Finite Element Method. Electr. Eng. 2022, 104, 615–622.

- Luo, Y.; Yu, X.; Yang, D.; Zhou, B. A Survey of Intelligent Transmission Line Inspection Based on Unmanned Aerial Vehicle. Artif. Intell. Rev. 2023, 56, 173–201.

- Liu, X.; Lin, Y.; Jiang, H.; Miao, X.; Chen, J. Slippage Fault Diagnosis of Dampers for Transmission Lines Based on Faster R-CNN and Distance Constraint. Electr. Power Syst. Res. 2021, 199, 107449.

- Zhang, Q.; Chang, X.; Meng, Z.; Li, Y. Equipment Detection and Recognition in Electric Power Room Based on Faster R-CNN. Procedia Comput. Sci. 2021, 183, 324–330.

- Yin, M.L.; Li, Z.Q.; Wen, X.; Dou, X.; Xing, C.J.; Xi, L.X. Recognition Algorithm for Dust on Solar Photovoltaic Panels Based on R-CNN. In Proceedings of the 2024 IEEE 5th International Conference on Advanced Electrical and Energy Systems (AEES), Lanzhou, China, 29 November–1 December 2024; pp. 261–265.

- Peng, T.; Wen, F. Photovoltaic Panel Fault Detection Based on Improved Mask R-CNN. In Proceedings of the 2023 IEEE International Conference on Control, Electronics and Computer Technology (ICCECT), Jilin, China, 28–30 April 2023; pp. 1187–1191.

- Chen, M.; Xu, C. Bird’s Nest Detection Method on Electricity Transmission Line Tower Based on Deeply Convolutional Neural Networks. In Proceedings of the 2020 IEEE 4th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 12–14 June 2020; pp. 2309–2312.

- Wang, Y.; Li, Z.; Yang, X.; Luo, N.; Zhao, Y.; Zhou, G. Insulator Defect Recognition Based on Faster R-CNN. In Proceedings of the 2020 International Conference on Computer, Information and Telecommunication Systems (CITS), Hangzhou, China, 5–7 October 2020; pp. 1–4.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Li, L.R.; Cui, H.; Dai, J.W.; Mei, B.; Zang, Z.J.; He, Z.Q.; Li, J. High-Voltage Transmission Line Inspection Based on Multi-Scale Lightweight Convolution. Measurement 2025, 251, 117193.

- Di, T.; Feng, L.; Guo, H. Research on Real-Time Power Line Damage Detection Method Based on YOLO Algorithm. In Proceedings of the 2023 IEEE 3rd International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 26–28 May 2023; pp. 671–676.

- Xiang, X.; Zhao, F.; Peng, B.; Qiu, H.; Tan, Z.; Shuai, Z. A YOLO-v4-Based Risk Detection Method for Power High Voltage Operation Scene. In Proceedings of the 2021 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC), Xi’an, China, 17–19 August 2021; pp. 1–5.

- Zhou, H.; Zhang, H.; Wang, A.; Fang, X.; Xu, H.; Song, Y. Application of Intelligent Bird Repelling Technology for Power Transmission and Transformation Equipment. In Proceedings of the 2024 IEEE 6th International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 26–28 July 2024; pp. 289–293.

- Zhang, X.; Zhang, L.; Li, D. Transmission Line Abnormal Target Detection Based on Machine Learning Yolo V3. In Proceedings of the 2019 International Conference on Advanced Mechatronic Systems (icamechs), Kusatsu, Shiga, Japan, 26–28 August 2019; pp. 344–348.

- Zhang, B.; Song, J.; Liu, Q.; Yan, Y. Wear-YOLO: Research on Detection Methods of Safety Equipment for Power Personnel in Substations. In Proceedings of the 2024 5th International Conference on Clean Energy and Electric Power Engineering (ICCEPE), Yangzhou, China, 9–11 August 2024; pp. 274–278.

- Wang, C.-Y.; Liao, H.-Y.M.; Yeh, I.-H.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. arXiv 2019, arXiv:1911.11929.

- Wu, Y.; He, K. Group Normalization. arXiv 2018, arXiv:1803.08494.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 12 August 2025).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

- Ultralytics. Available online: https://github.com/ultralytics/ultralytics (accessed on 15 August 2025).

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).