Abstract

Infrared small target detection (IRSTD) remains a critical yet challenging task due to the inherent low signal-to-noise ratio, weak target features, and complex backgrounds prevalent in infrared images. Existing methods often struggle to capture the subtle edge features of targets and suppress background clutter simultaneously. To address these limitations, this study proposes a novel Multi-directional Learnable Edge-assisted Dense Nested Attention Network (MLEDNet). First, we propose a multi-directional learnable edge extraction module (MLEEM), which is designed to capture rich directional edge information. The extracted multi-directional edge features are hierarchically integrated into the dense nested attention module (DNAM) to enhance the model’s capability in discerning the crucial edge features of infrared small targets. Second, we design a feature fusion module guided by residual channel spatial attention (ResCSAM-FFM). This module leverages channel and spatial contextual cues to fuse the features output by different levels of the DNAM, enhancing target representation while suppressing complex background interference. Combining the MLEEM and the ResCSAM-FFM within a dense nested attention framework yields the proposed MLEDNet. Extensive experiments conducted on the benchmark datasets NUDT-SIRST and NUAA-SIRST demonstrate that the proposed MLEDNet achieves superior performance compared to state-of-the-art methods.

1. Introduction

Infrared small target detection (IRSTD) is a key technology in infrared imaging systems, holding significant strategic value in military early warning, maritime search and rescue, and the monitoring of low-altitude slow-moving drones [1,2,3]. With advancements in infrared detector sensitivity, small targets are typically characterized by a signal-to-noise ratio (SNR) below 2 dB and a size of less than 9 × 9 pixels [4,5]. While generalized object detection methods have achieved remarkable progress, infrared image target detection remains a significant challenge due to its inherent characteristics. Specifically, targets in infrared images typically exhibit small sizes, limited shape features, and substantial variations in both shape and size.

Traditional IRSTD methods, such as local contrast and low-rank sparse decomposition, can partially separate the target from the background in some simple scenes [6,7,8]. However, they adapt poorly to complex scenes, and their parameters must be tuned manually. Recently, deep learning-based methods have made significant progress on the IRSTD task. The U-shaped densely skip-connected deep learning network achieved a very high detection probability on the SIRST dataset. Li et al. [9] proposed a dense nested attention network (DNANet) that utilizes a dense nested interactive module to achieve interaction between high-level and low-level features and adopts a channel-spatial attention module to enhance the network performance. However, DNANet provides no targeted strategy for extracting the edge features of targets and is therefore deficient in capturing the edge features of small targets. Zhang et al. [10] proposed an attention-guided pyramidal context network (AGPCNet) for computing local and global associations between IR small targets. This method uses the attention mechanism to extract target features but does not consider prior knowledge such as target edges, resulting in a large number of parameters but only average performance. Guo et al. [11] introduced position attention into dense nested networks, guiding the network to focus on the center position of the target and thereby improving detection accuracy. The main contribution of this method is the introduction of pixel attention on the foundation of DNANet. However, it still struggles with missed detections of small targets.

Although the above-mentioned methods have made remarkable progress, they still have some problems. First, because the targets in infrared images are very small, deep neural networks have difficulty capturing their features; in particular, some edge features are lost as the network deepens. Furthermore, due to the inherently complex backgrounds in which infrared targets typically appear, conventional CNN-based U-shaped models often struggle to achieve sufficient feature representation for robust detection. When a model cannot effectively distinguish between the target and background interference, it generates a large number of missed detections and false alarms.

To solve the above problems, we propose a new multi-directional learnable edge-assisted dense nested attention network (MLEDNet). Unlike methods such as DNANet [9], AGPCNet [10], and UIU-Net [12], the network introduces a multi-directional learnable edge extraction module (MLEEM) into DNANet. The edge information of the small targets extracted by this module is fused with the lower and upper layers of the densely connected network to enhance the overall perception ability of the network for target edges. MLEEM therefore makes the extracted target features more complete, thereby reducing missed detections. In addition, to fuse the features from each layer of the dense nested module more effectively, a feature fusion module guided by the residual channel spatial attention module (ResCSAM-FFM) is designed. In the feature fusion module, the features from each layer of the dense nested module are first processed by the residual channel spatial attention and then fused. The ResCSAM-FFM can further suppress background interference while enhancing target features, thereby reducing false detections. To sum up, the main contributions of this paper are as follows:

- (1) We propose an MLEEM, which adaptively captures multi-directional edge features and hierarchically integrates them into the dense nested module. This integration enhances the model’s capacity to extract discriminative edge features from infrared targets, particularly under challenging conditions such as low contrast.

- (2) We propose a ResCSAM-FFM, which enables the multi-level features output by the DNAM to better represent small targets and suppress background interference, thereby achieving better feature fusion performance.

- (3) We propose a new multi-directional learnable edge-assisted dense nested attention network (MLEDNet). Experiments on the NUDT-SIRST and NUAA-SIRST datasets show that it outperforms the compared state-of-the-art methods.

2. Related Works

2.1. Traditional Algorithms

TopHat [13] is a classical morphological algorithm for infrared small target detection, which employs structural operations to suppress the background texture and enhance small targets in uniform background scenarios. However, this method exhibits significant limitations, including being sensitive to complex cloud interference and dynamic backgrounds, resulting in a high false alarm rate. To address these challenges, Chen et al. [14] proposed the Local Contrast Measurement (LCM) framework, which leverages local contrast analysis to discriminate between target and background pixels. Meanwhile, Wu et al. [15] developed an infrared weak target detection algorithm based on dual-neighborhood gradient analysis. Their approach incorporates a specifically designed gradient filter to generate saliency maps, followed by potential target identification within these maps. Gao et al. [16] made a notable advancement by extending the traditional framework through the introduction of the Infrared Patch Image (IPI) model, implemented via a novel localized block construction method. Although these conventional methods demonstrate certain advantages in specific scenarios, they generally suffer from limited adaptability, often requiring manual parameter tuning, as well as exhibiting severe performance degradation when confronted with complex background conditions.

2.2. U-Net for Infrared Small Target Detection

With the rapid advancement of deep learning, CNN-based IRSTD methods have achieved significant progress. Among these approaches, the U-Net architecture has been widely adopted for IRSTD tasks [17]. Yang et al. [18] proposed the FATCNet method, which builds a dual-branch decoder network using a CNN and a Transformer to obtain both local and global features, thereby improving detection accuracy. While the incorporation of the Transformer enhances the model’s capability to extract global image features, it substantially increases model complexity. The Transformer [19] models the global features of sequences through the self-attention mechanism, but it has difficulty modeling local features directly and relies on a large amount of training data. Therefore, using the Transformer model alone yields limited performance on infrared small targets.

Wu et al. [12] developed UIU-Net, which incorporates resolution–maintenance deep supervision and interactive-cross attention modules. This architecture effectively addresses the challenges of tiny object loss and low contrast in infrared small target detection while demonstrating state-of-the-art performance on benchmark datasets. Liu et al. [20] introduced a Multi-Scale Head U-Net (MSHNet) with an enhanced loss function to improve scale and location sensitivity while maintaining a streamlined model architecture. Zhang et al. [21] proposed SCAFNet to address the limitation of existing deep learning models in exploiting complementary features across different layers. The model employs a multi-resolution-assisted enhancement encoder to preserve detailed information in deep features. However, this method relies solely on CNNs for feature extraction and cross-layer complementarity, which constrains its enhancement capability due to the inherent limitations of CNNs. Du et al. [22] proposed an improved dense nested U-Net network, which designed a bottom-up feature pyramid fusion module for integrating low-level features into high-level features. Nevertheless, this fusion approach may lead to the loss of critical features, potentially resulting in the missed detection of small targets.

The above-mentioned studies presented new U-Net-based infrared small target detection models. Although they achieve good results, they give little consideration to the small size of the targets, so target features are prone to being lost as the network deepens.

2.3. IRSTD Methods Incorporating Edge Information

Recent studies have increasingly recognized edge feature extraction as a critical component in object detection frameworks [23,24]. By leveraging target edge information, these approaches achieve a certain degree of improvement in small target detection accuracy.

Zhang et al. [25] proposed MDIGCNet, a multi-directional information-guided contextual network integrating domain-inspired modules with reparameterized local–global feature fusion, which effectively addresses the lack of structural information in infrared small targets. However, this model is rather complex and may still miss detections. The GVSLNet [26] method introduced a self-learning gradient vector module to enhance the target–background discrimination in complex environments. However, GVSLNet’s cascaded gradient extraction module, while integrated into the backbone network, may not effectively capture optimal gradient features. Liu et al. [27] proposed PGDN-Net, which is a prior-guided network that fuses traditional manual features with a dense nested architecture. The ISNet framework [28] implemented a physics-inspired edge enhancement strategy using Taylor finite difference operators, combined with bidirectional attention aggregation for shape-aware feature learning, demonstrating exceptional robustness against cluttered infrared backgrounds. Zhan et al. [29] proposed EGISD-YOLO, which is an enhanced YOLO-based architecture incorporating hierarchical edge-guided feature fusion and channel attention mechanisms to tackle low-contrast, small-pixel infrared ship detection in cluttered maritime environments.

While these edge-based methods have demonstrated impressive performance, they still face three major challenges: (1) persistent missed detections of small targets, (2) high false alarm rates in complex backgrounds, and (3) substantial computational overhead. To address these limitations, we propose MLEDNet, which combines a dense nested interaction module with a multi-directional learnable edge extraction module to preserve finer target details. Furthermore, we incorporate a residual channel-spatial attention mechanism in the feature fusion stage. The proposed model achieves high detection accuracy and improves on the performance of existing representative methods.

3. Methods

3.1. Overall Architecture

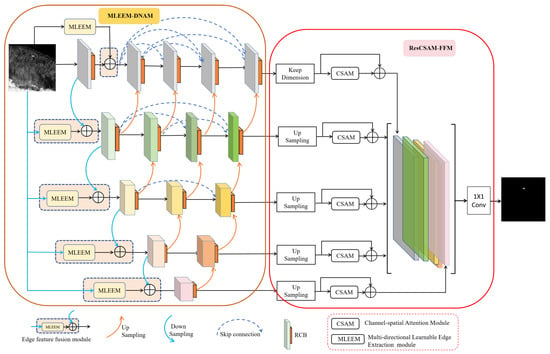

The architecture of the proposed MLEDNet is shown in Figure 1, which is based on DNANet. MLEDNet consists of two key modules: MLEEM-DNAM and ResCSAM-FFM. Unlike the transformer-based object detection methods [30] that rely on global self-attention, our proposed method uses MLEEM to extract edge features and DNAM to model multi-scale global features. Unlike Guo’s method [11], which directly uses channel attention to fuse features of each layer, in our method, residual connections are introduced on the basis of CSAM to maximally preserve target features. Finally, ResCSAM-FFM uses a local attention window to suppress background clutter without sacrificing target details.

Figure 1.

The overall framework diagram of the proposed method.

In MLEEM-DNAM, the infrared image is first processed by MLEEM to extract the edge features of the target. These edge features are then concatenated with the initial convolutional features from each layer of the DNAM. This integration propagates target edge information to deeper feature layers of DNAM, thereby enhancing the accuracy of small target detection. Furthermore, DNAM strengthens the extraction of semantic and detailed features by expanding the network depth and introducing skip connections between residual convolutional attention modules.

Subsequently, the feature outputs from each DNAM layer are fused via ResCSAM-FFM. In this module, ResCSAM is employed to selectively emphasize useful features while suppressing irrelevant interference, improving the robustness of the feature representation. Finally, the network adopts a Conv module to resize outputs to and further obtain the binarized target map. In this paper, all models, including comparison models such as DNANet, are trained from scratch.

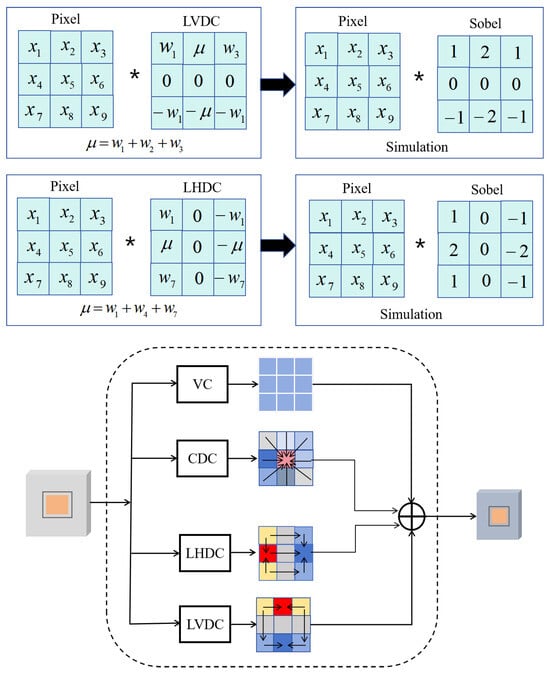

3.2. Feature Extraction with MLEEM

To enhance the ability of DNANet to extract edge features of small targets, we propose MLEEM, which integrates central differential convolution (CDC) [31], learnable horizontal differential convolution (LHDC), learnable vertical differential convolution (LVDC), and vanilla convolution (VC), as shown in Figure 2. In this module, the edge features of the image are obtained using CDC, LVDC, and LHDC, respectively, and the outputs of the operators are then added point by point. LVDC and LHDC emulate the Sobel operator to adaptively obtain the edge features of small targets in the vertical and horizontal directions, respectively. The derivation process of LVDC and LHDC is shown in Figure 2, where is the pixel of the current patch, and and are the weight parameters of a learnable convolution kernel. For the LVDC, , (), and , , . For LHDC, its convolution kernel parameters are similar to those of LVDC, except that the positions of learnable parameters are different. The LVDC and LHDC kernels are central to MLEEM’s ability to capture directional edge information: they compute gradient approximations along the vertical and horizontal axes, respectively. Unlike fixed operators, however, LVDC and LHDC employ learnable convolutional weights, so their responses adapt during training to the edge contrasts specific to dim and small infrared targets, which gives them stronger scene adaptability than fixed operators.

Figure 2.

Framework of MLEEM.
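The idea of a learnable, Sobel-like directional difference kernel can be illustrated with a small sketch. The exact LVDC and LHDC kernel layouts are not recoverable from the text here, so the antisymmetric layout below (one learnable column or row, a zero middle, and the negated weights opposite) is an assumption, as are the function names `horizontal_difference_kernel`, `vertical_difference_kernel`, and `conv3x3_at`. With the weights fixed to (1, 2, 1), the kernels reduce to the Sobel operator up to sign.

```python
def conv3x3_at(img, r, c, kernel):
    """Correlate a 3x3 kernel with the 3x3 patch of img centred at (r, c)."""
    return sum(
        kernel[i][j] * img[r - 1 + i][c - 1 + j]
        for i in range(3)
        for j in range(3)
    )


def horizontal_difference_kernel(w):
    """Hypothetical layout: left column +w, right column -w.

    Responds to intensity changes along the horizontal axis. In a network,
    w would be a learnable parameter vector updated by backpropagation.
    """
    return [[w[0], 0.0, -w[0]],
            [w[1], 0.0, -w[1]],
            [w[2], 0.0, -w[2]]]


def vertical_difference_kernel(w):
    """Hypothetical layout: top row +w, bottom row -w."""
    return [[w[0], w[1], w[2]],
            [0.0, 0.0, 0.0],
            [-w[0], -w[1], -w[2]]]


# A patch whose intensity changes only from left to right.
step_edge = [[0, 0, 1],
             [0, 0, 1],
             [0, 0, 1]]
sobel_like = [1.0, 2.0, 1.0]  # initial values; learnable in practice
h_resp = conv3x3_at(step_edge, 1, 1, horizontal_difference_kernel(sobel_like))
v_resp = conv3x3_at(step_edge, 1, 1, vertical_difference_kernel(sobel_like))
```

On this patch only the horizontal-difference kernel produces a non-zero response, which is the directional selectivity that the module relies on.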

To model the local relations between pixels and their surrounding pixels, we introduce CDC in MLEEM, which aggregates the center-oriented gradient of sampled pixels. CDC augments the standard convolution with a central difference term, which is expressed as follows:

$$y(p_0) = \theta \cdot \sum_{p_n \in \mathcal{R}} w(p_n) \cdot \big(x(p_0 + p_n) - x(p_0)\big) + (1 - \theta) \cdot \sum_{p_n \in \mathcal{R}} w(p_n) \cdot x(p_0 + p_n)$$

Here, $p_0$ denotes the current location on both input and output feature maps, while $p_n$ enumerates the locations in $\mathcal{R}$. $x(p_0 + p_n)$ represents the pixel value at position $p_0 + p_n$ in the input feature map; $w(p_n)$ is the corresponding kernel weight at offset $p_n$. $\theta$ is a learnable parameter with a default value of 0.7.
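The CDC operation can be sketched for a single 3 × 3 patch as follows. This is a minimal illustration, assuming the generalized central difference form of [31], in which the response equals the vanilla convolution minus θ times the center pixel times the sum of the kernel weights. The function name `central_difference_conv` and the list-based patch representation are assumptions made for this example, not the authors' implementation.

```python
import math


def central_difference_conv(patch, weights, theta=0.7):
    """CDC response at the centre of a 3x3 patch.

    patch, weights: 3x3 nested lists of numbers.
    theta: balance between the vanilla term and the central-difference term
           (0.7 is the default value quoted in the text).
    """
    center = patch[1][1]
    # Vanilla convolution (correlation) over the 3x3 neighbourhood.
    vanilla = sum(weights[i][j] * patch[i][j] for i in range(3) for j in range(3))
    # Central-difference correction: theta * x(p0) * sum of kernel weights.
    weight_sum = sum(sum(row) for row in weights)
    return vanilla - theta * center * weight_sum


ones = [[1.0] * 3 for _ in range(3)]
flat = [[5.0] * 3 for _ in range(3)]      # no local contrast
spike = [[0.0, 0.0, 0.0],
         [0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0]]                 # isolated bright pixel

flat_pure_difference = central_difference_conv(flat, ones, theta=1.0)
spike_response = central_difference_conv(spike, ones)
```

With θ = 1 the operator is a pure difference and gives no response on a flat patch, while θ = 0 recovers the vanilla convolution.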

To ensure robust multi-scale object detection, this work employs DNAM as the backbone network. The multi-level skip connections within DNAM effectively preserve scale-invariant target features. Within this architecture, we introduce the Residual Channel-Spatial Attention Block (RCB), constructed by integrating residual connections with the Channel-Spatial Attention Module (CSAM) [9,32].

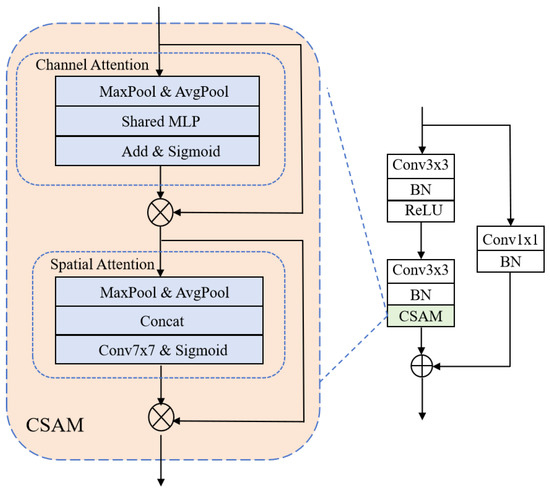

As shown in Figure 3, the RCB is formed by adding a residual connection to CSAM. The CSAM cascades channel attention and spatial attention. The channel attention module is used to focus on “what the useful information is”, and the process can be described as follows:

$$M_c(L) = \sigma\big(\mathrm{MLP}(P_{max}(L)) + \mathrm{MLP}(P_{avg}(L))\big)$$

$$L' = M_c(L) \otimes L$$

where ⊗ denotes element-wise multiplication; $\sigma$ denotes the sigmoid function; $M_c$ denotes the weight matrix of channel attention; $L$ denotes the input features of the channel attention module and $L'$ its output; MLP represents the shared multilayer perceptron; and $P_{max}$ and $P_{avg}$ represent the max-pooling and average-pooling operations, respectively.

Figure 3.

Framework of RCB.

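Similarly, the spatial attention module is used to focus on “where the useful information is”, and the process can be described as follows:

$$M_s(L') = \sigma\big(f([P_{max}(L'); P_{avg}(L')])\big)$$

$$L'' = M_s(L') \otimes L'$$

where $M_s$ denotes the weight matrix of spatial attention, $L'$ denotes the features output by the channel attention module, $\sigma$ denotes the sigmoid function, $P_{max}$ and $P_{avg}$ denote max-pooling and average-pooling, ⊗ denotes element-wise multiplication, and $f$ denotes the convolution operation.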
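The cascade of channel attention, spatial attention, and the residual connection can be sketched in plain Python. This is a simplified illustration under stated assumptions: the shared MLP is passed in as a hypothetical callable `mlp`, and the convolution in the spatial branch is replaced by a per-pixel weighted sum (`w_max`, `w_avg`) so that the example stays short; a real CSAM uses a learned spatial convolution. All function names are chosen for this example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feat, mlp):
    """feat: list of C channels, each an H x W nested list of floats."""
    # Global max- and average-pooled descriptors, one value per channel.
    max_desc = [max(max(row) for row in ch) for ch in feat]
    avg_desc = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]
    # The same (shared) MLP is applied to both descriptors.
    gates = [sigmoid(a + b) for a, b in zip(mlp(max_desc), mlp(avg_desc))]
    return [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feat)]


def spatial_attention(feat, w_max=1.0, w_avg=1.0):
    """Simplified spatial gate: pointwise mix of channel-wise max and mean."""
    n_ch, height, width = len(feat), len(feat[0]), len(feat[0][0])
    out = [[[0.0] * width for _ in range(height)] for _ in range(n_ch)]
    for r in range(height):
        for c in range(width):
            vals = [feat[k][r][c] for k in range(n_ch)]
            gate = sigmoid(w_max * max(vals) + w_avg * sum(vals) / n_ch)
            for k in range(n_ch):
                out[k][r][c] = gate * feat[k][r][c]
    return out


def residual_csam(feat, mlp):
    """Residual block: input plus the attention-refined features."""
    attended = spatial_attention(channel_attention(feat, mlp))
    return [
        [[x + a for x, a in zip(row_x, row_a)] for row_x, row_a in zip(ch_x, ch_a)]
        for ch_x, ch_a in zip(feat, attended)
    ]


feat = [[[1.0, 2.0]], [[3.0, 4.0]]]          # 2 channels, 1 x 2 pixels
out = residual_csam(feat, mlp=lambda d: d)   # identity stands in for the MLP
```

Because both gates lie strictly between 0 and 1, the residual output for a positive input always lies between the input and twice the input, so the identity path guarantees that the original target response is never attenuated.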

Considering that the classical DNAM lacks a targeted enhancement of edge detail features for small targets, MLEDNet integrates the MLEEM at each DNAM layer. As DNAM’s hierarchical features undergo progressive downsampling, we correspondingly downsample the input infrared image at each stage. MLEEM then extracts multi-scale edge features from these downsampled inputs. These edge features are fused with DNAM’s features at the corresponding layers through channel-wise concatenation, yielding enhanced representations that preserve critical small-target characteristics while suppressing background interference.
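The multi-scale injection of edge features can be sketched as follows. The downsampling operator is not specified in the text, so 2 × 2 average pooling is an assumption here, as are the helper names `downsample2x`, `image_pyramid`, and `concat_channels`. Feature maps are represented as lists of 2D channels.

```python
def downsample2x(img):
    """Halve the resolution with 2x2 average pooling (assumed operator)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * r][2 * c] + img[2 * r][2 * c + 1]
             + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(w)
        ]
        for r in range(h)
    ]


def image_pyramid(img, levels):
    """Input image at full resolution followed by successively halved copies."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


def concat_channels(features, edge_maps):
    """Channel-wise concatenation of two lists of same-sized 2D channels."""
    return list(features) + list(edge_maps)


img = [[1.0] * 4 for _ in range(4)]
pyramid = image_pyramid(img, levels=3)
# At each level, an edge extractor would run on pyramid[k] and its output
# would be concatenated with the backbone features of the same resolution.
fused = concat_channels([img, img], [img])
```

Concatenation keeps the edge channels separate from the backbone channels, leaving it to the following convolution to learn how to mix them.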

3.3. Feature Fusion Module Guided by ResCSAM

To enable the optimal fusion of hierarchical features from DNAM while maximally preserving discriminative information, we introduce the CSAM at the DNAM output stage. To retain the detailed characteristics of the target in the deep layers of the network and to make the network easier to train, a parallel residual connection is added to CSAM. The residual structure alleviates the vanishing gradient problem in deep networks through identity mapping, which allows gradients to propagate directly back to shallow layers during backpropagation. In addition, the residual structure helps avoid the loss of small-target edge information caused by channel concatenation operations.

As illustrated in Figure 1, features from different DNAM layers first undergo dimension unification to a standardized tensor—. Among them, the first layer only needs to maintain its size, while the features of other layers need to have their size adjusted to by upsampling. As illustrated in Figure 4, the features of each layer will be processed by CSAM to focus on the important channels and spatial positions. Then, the output of the CSAM is combined with through element-wise addition, yielding the enhanced feature representation. The output features can be expressed as follows:

where is the output of the keep dimension module, and the is the output of ResCSAM.

Figure 4.

Structure diagram of Res-CSAM.

Finally, we concatenated all the features according to the channel dimension and then sent them into a Conv module to obtain a feature map with a size of , which is expressed as follows:

where Conv represents Conv; Concat represents the concatenating operation.
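The two fusion steps described in this subsection can be summarized compactly as a sketch. The symbols $F_i$ (dimension-unified feature of layer $i$), $\hat{F}_i$ (ResCSAM output), $K$ (number of fused layers), and $F_{out}$ are names assumed here for illustration and are not taken from the original equations; the kernel size of the final Conv module and the tensor sizes are left unspecified.

```latex
\begin{align*}
\hat{F}_i &= F_i + \mathrm{CSAM}(F_i), \qquad i = 1, \dots, K \\
F_{out} &= \mathrm{Conv}\big(\mathrm{Concat}(\hat{F}_1, \dots, \hat{F}_K)\big)
\end{align*}
```

The first line is the residual attention step applied to each layer, and the second is the channel-wise concatenation followed by the Conv module.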

3.4. Loss Function

Inspired by reference [11], we combine the Soft Intersection-over-Union (SoftIoU) loss and the Binary Cross-entropy (BCE) loss as the loss function of the proposed model. BCE optimizes pixel-level errors, while SoftIoU ensures the global consistency of the target. The combination of the two loss functions is conducive to obtaining complete targets with clear boundaries.

SoftIoU is widely adopted in segmentation and detection tasks to directly optimize the overlap between predictions and ground truths. For infrared small target detection, this loss function enhances the model’s sensitivity to subtle geometric features by measuring pixel-wise similarity in a continuous probability space. The SoftIoU loss is defined as follows:

$$L_{SoftIoU} = 1 - \frac{\sum \mathrm{Pre} \cdot \mathrm{GT}}{\sum \mathrm{Pre} + \sum \mathrm{GT} - \sum \mathrm{Pre} \cdot \mathrm{GT}}$$

where Pre and GT denote the predicted probability map and the binary ground truth, respectively, and the sums run over all pixels. The “·” represents element-wise multiplication. This formulation ensures gradient stability for small targets and mitigates optimization biases caused by extreme foreground–background imbalance.

BCE loss measures the gap between predicted probabilities and true labels, thus guiding the model to classify the target and background more accurately. The expression of BCE loss is as follows:

$$L_{BCE} = -\frac{1}{N}\sum_{i=1}^{N}\big[y_i \cdot \log(p_i) + (1 - y_i) \cdot \log(1 - p_i)\big]$$

where $y_i$ is the true label of sample $i$; $p_i$ represents the probability, predicted by the model, that sample $i$ belongs to the positive class. The “·” represents element-wise multiplication and $N$ is the total number of samples.

To integrate the advantages of these two loss functions and enable the model to achieve higher accuracy, we multiply the SoftIoU loss and the BCE loss by a coefficient each and add them together to obtain the final model loss function, as shown below:

$$L = \lambda_1 L_{SoftIoU} + \lambda_2 L_{BCE}$$

where $\lambda_1$ and $\lambda_2$ denote the weighting coefficients for the SoftIoU loss $L_{SoftIoU}$ and the BCE loss $L_{BCE}$, respectively. Both are set to 0.5, a value determined experimentally.
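The joint loss can be sketched in plain Python over flattened pixel lists. This is a minimal illustration rather than the training code: the small constants `eps` that guard against division by zero and log(0) are assumptions added for numerical safety, and the function names are chosen for this example. The two weights default to 0.5 as stated in the text.

```python
import math


def soft_iou_loss(pred, gt, eps=1e-6):
    """1 - soft IoU between predicted probabilities and binary labels."""
    inter = sum(p * g for p, g in zip(pred, gt))
    union = sum(pred) + sum(gt) - inter
    return 1.0 - (inter + eps) / (union + eps)


def bce_loss(pred, gt, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clamped away from 0 and 1."""
    total = 0.0
    for p, g in zip(pred, gt):
        p = min(max(p, eps), 1.0 - eps)
        total += g * math.log(p) + (1 - g) * math.log(1.0 - p)
    return -total / len(pred)


def joint_loss(pred, gt, lam_iou=0.5, lam_bce=0.5):
    """Weighted sum of the SoftIoU and BCE losses."""
    return lam_iou * soft_iou_loss(pred, gt) + lam_bce * bce_loss(pred, gt)


gt = [1, 0, 1, 0]
perfect = joint_loss([1.0, 0.0, 1.0, 0.0], gt)
uncertain = joint_loss([0.5, 0.5, 0.5, 0.5], gt)
```

A perfect prediction drives both terms to (nearly) zero, whereas an uninformative prediction of 0.5 everywhere is penalized by both the overlap term and the per-pixel term.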

4. Results

4.1. Datasets

In this paper, two widely used public datasets are used to validate the proposed method.

The NUDT-SIRST dataset comprises 1327 images featuring five distinct scenes: cloud, city, sea, field, and highlight. Each image contains real backgrounds and synthesized targets, with dimensions of pixels. This dataset contains rich verification scenarios and various synthetic complex backgrounds. The target size ranges from 5 to 98 pixels. This dataset can better reflect the application scenarios of small targets. A total of 663 images in this dataset serve as training images, and the rest are test images.

The NUAA-SIRST dataset comprises infrared images from three different scenes—cloud, city, and sea. The target size ranges from 4 to 330 pixels. It contains 427 images, and 213 images are used for training; the rest are the test images.

4.2. Evaluation Metrics

Three metrics are employed to evaluate the performance of the model. The pixel-level IoU metric is used to evaluate the model’s ability to describe the target shape. The target-level probability of detection (Pd) and the false-alarm rate (Fa) are used to evaluate the model’s ability to detect targets.

- (1) IoU: A pixel-level evaluation metric that calculates the overlap ratio between the predicted and ground-truth target regions. The IoU is expressed as follows:

  $$IoU = \frac{A_{inter}}{A_{union}}$$

  where $A_{inter}$ represents the intersection area and $A_{union}$ represents the union area.

- (2) Pd: A target-level evaluation index, indicating the proportion of correctly predicted targets to the total number of targets. The centroid of the target is determined using the eight-connected method. A predefined centroid deviation threshold of 3 pixels is used; if the centroid deviation is less than 3, the target prediction is considered correct. The Pd is expressed as follows:

  $$P_d = \frac{T_{correct}}{T_{all}}$$

  where $T_{all}$ represents the total number of targets and $T_{correct}$ represents the number of correctly predicted targets.

- (3) Fa: A pixel-level evaluation index, indicating the ratio of falsely predicted target pixels to all pixels in the whole dataset:

  $$F_a = \frac{P_{false}}{P_{all}}$$

  where $P_{false}$ is the number of falsely predicted target pixels, and $P_{all}$ is the number of all pixels in the whole dataset.
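The three metrics can be sketched for a single binary prediction as follows. This is an illustrative implementation under stated assumptions: targets are found as 8-connected components, a ground-truth target counts as detected when an unmatched predicted component has its centroid within 3 pixels, and the pixels of predicted components that match no target are counted as false alarms. The matching rule and the names `label_components`, `centroid`, and `evaluate` are assumptions of this sketch.

```python
import math


def label_components(mask):
    """Return the 8-connected components of a binary mask as pixel lists."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    comps = []
    for r in range(h):
        for c in range(w):
            if not mask[r][c] or seen[r][c]:
                continue
            stack, comp = [(r, c)], []
            seen[r][c] = True
            while stack:
                y, x = stack.pop()
                comp.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            stack.append((ny, nx))
            comps.append(comp)
    return comps


def centroid(comp):
    n = len(comp)
    return sum(y for y, _ in comp) / n, sum(x for _, x in comp) / n


def evaluate(pred, gt, max_dist=3.0):
    """Compute IoU, Pd and Fa for one pair of binary masks."""
    h, w = len(gt), len(gt[0])
    inter = sum(1 for r in range(h) for c in range(w) if pred[r][c] and gt[r][c])
    union = sum(1 for r in range(h) for c in range(w) if pred[r][c] or gt[r][c])
    pred_comps, gt_comps = label_components(pred), label_components(gt)
    matched, correct = set(), 0
    for g in gt_comps:
        gy, gx = centroid(g)
        for i, p in enumerate(pred_comps):
            py, px = centroid(p)
            if i not in matched and math.hypot(py - gy, px - gx) < max_dist:
                matched.add(i)
                correct += 1
                break
    false_pixels = sum(len(p) for i, p in enumerate(pred_comps) if i not in matched)
    return {
        "iou": inter / union if union else 0.0,
        "pd": correct / len(gt_comps) if gt_comps else 0.0,
        "fa": false_pixels / (h * w),
    }


gt = [[0] * 6 for _ in range(6)]
for r, c in [(1, 1), (1, 2), (2, 1), (2, 2)]:
    gt[r][c] = 1
pred = [row[:] for row in gt]
pred[5][5] = 1                      # one isolated false-alarm pixel
scores = evaluate(pred, gt)
```

For a whole dataset, the intersection, union, target counts, and false-alarm pixel counts would be accumulated over all images before forming the ratios.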

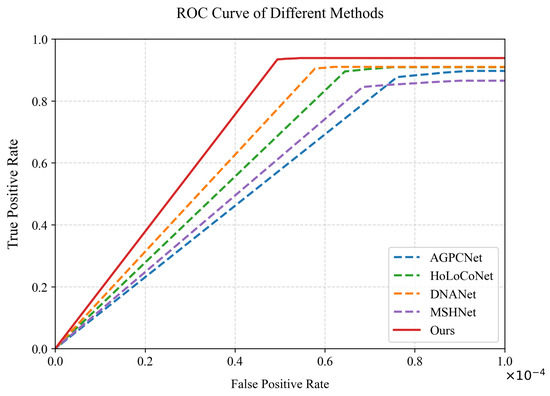

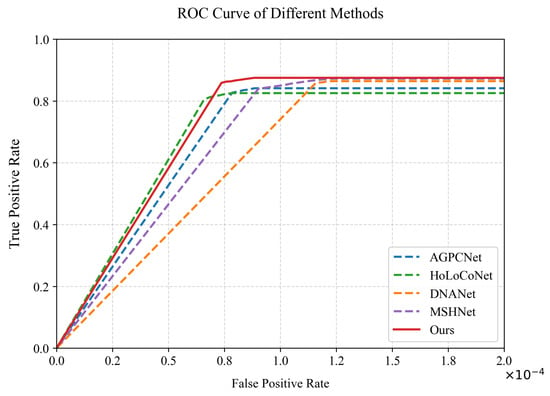

In addition, to better evaluate the detection accuracy of the algorithm, the receiver operating characteristic (ROC) curve was introduced. The ROC curve is used to reflect the relationship between the False Positive Rate and the True Positive Rate of the tested algorithm under different segmentation thresholds.
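An ROC curve of this kind can be traced by sweeping the segmentation threshold and recording one (False Positive Rate, True Positive Rate) pair per threshold. The sketch below works at the pixel level on flattened score and label lists, which is an assumption of this example; the function names `roc_points` and `auc` are also chosen here. It assumes that both classes are present in the labels.

```python
def roc_points(scores, labels, thresholds):
    """Return (FPR, TPR) pairs, one per threshold."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s > t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s > t and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    pts = sorted(points)
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


scores = [0.9, 0.8, 0.3, 0.1]       # predicted target probabilities
labels = [1, 1, 0, 0]               # ground-truth pixel labels
curve = roc_points(scores, labels, thresholds=[0.0, 0.5, 1.0])
area = auc(curve)
```

A curve that hugs the upper-left corner corresponds to an area close to 1, which is the criterion used when comparing methods by their ROC curves.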

4.3. Implementation Details

The backbone network of the proposed method is ResNet-10, and the joint loss function is used to train the network. The Adam optimizer and the CosineAnnealingLR scheduler are used to optimize the network. The initial learning rate is 0.01, the batch size is 4, and the network is trained for 400 epochs. All deep learning models are run under the PyTorch framework on an NVIDIA GeForce RTX 4090 Laptop GPU.
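The learning-rate schedule implied by these settings can be sketched with the closed-form cosine annealing rule. The initial rate (0.01) and the number of epochs (400) come from the text; setting the annealing period `t_max` equal to the number of epochs and the minimum rate `eta_min` to 0 are assumptions, since neither value is stated.

```python
import math


def cosine_annealing_lr(epoch, base_lr=0.01, t_max=400, eta_min=0.0):
    """Learning rate at a given epoch under cosine annealing without restarts."""
    cosine = 1.0 + math.cos(math.pi * epoch / t_max)
    return eta_min + 0.5 * (base_lr - eta_min) * cosine


# Learning rate at the start, middle and end of training.
schedule = [cosine_annealing_lr(e) for e in (0, 200, 400)]
```

Under these assumptions the rate starts at 0.01, passes through 0.005 at the midpoint, and decays smoothly to 0 at the final epoch.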

4.4. Comparison to State-of-the-Art Methods

State-of-the-art methods, including DNANet [9], HoLoCoNet [12], AGPCNet [10], and MSHNet [20], are compared with the proposed method to verify its superiority. All of these methods are data-driven and implemented in PyTorch. For fairness, all methods use the same training and testing data. Table 1 lists the test results of all methods, where mIoU is the mean of the test-set mIoU over the last 5 epochs and std is the corresponding standard deviation. As the table shows, the proposed method has clear advantages over the other methods. For NUDT-SIRST, our method achieves the best results on all three metrics. For NUAA-SIRST, the Fa of the proposed method is slightly higher than that of AGPCNet, while on the other metrics our method is superior to the other models.

Table 1.

Results of state-of-the-art methods on NUDT-SIRST and NUAA-SIRST.

Table 2 reports the number of parameters, FLOPs, and running time of the existing models and of our model. The inference time of our model is 146.08 ms, which is slightly higher than that of DNANet, so the model retains good prospects for deployment in practical applications. While MSHNet achieves the lowest FLOPs, its mIoU is significantly lower than that of MLEDNet. Our method achieves a good balance: its FLOPs are lower than those of AGPCNet, while its IoU is higher than that of the other models.

Table 2.

Model complexity and inference efficiency comparison.

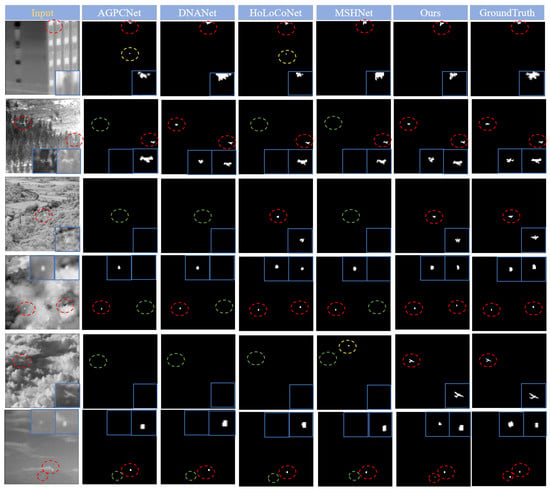

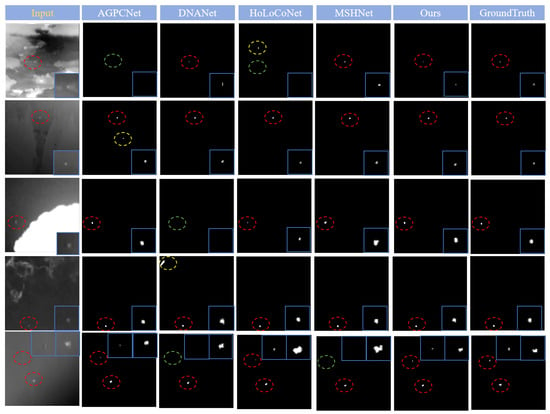

Before visualizing the results, threshold segmentation was performed on the prediction results of the model. The fixed threshold is set to 0. If the prediction result exceeds the threshold, the pixel is set to 255; otherwise, it is set to 0. As shown in Figure 5, our method shows no missed detections or false alarms in the first five scenes on NUDT-SIRST, while the other methods exhibit varying degrees of missed detections or false alarms. Moreover, the target shape obtained by the proposed method is closer to that of the ground truth. In the sixth scene, our model misses some pixels of the target on the left, while the other models miss this target completely. This is because the contrast of the target is very low and there is interference around the target, which degrades detection. Similarly, as shown in Figure 6, our method performed best on the NUAA-SIRST dataset, with no missed detections or false alarms.
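The threshold segmentation step described above can be sketched as follows. The threshold of 0 and the output values 255 and 0 come from the text; treating the prediction as a 2D list of raw network outputs, and the function name `binarize`, are assumptions of this example.

```python
def binarize(pred, threshold=0.0):
    """Map each pixel to 255 if it exceeds the threshold, otherwise to 0."""
    return [[255 if value > threshold else 0 for value in row] for row in pred]


raw_output = [[-1.2, 0.0],
              [0.3, 2.0]]
mask = binarize(raw_output)
```

Note that a pixel exactly equal to the threshold is assigned to the background, because only values that exceed the threshold are kept.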

Figure 5.

The visualization results of all models on the NUDT-SIRST dataset. The red box, green box, and yellow box denote the correctly detected target, missed detection, and incorrectly detected target, respectively. The detected targets or missed areas are zoomed in and framed in blue.

Figure 6.

The visualization results of all models on the NUAA-SIRST dataset. The red box, green box, and yellow box denote the correctly detected target, missed detection, and incorrectly detected target, respectively. The detected targets or missed areas are zoomed in and framed in blue.

According to Figure 7, the ROC curve of our method lies closest to the upper-left corner and encloses the largest area under the curve, so the detection accuracy of our method is the best on NUDT-SIRST. Figure 8 shows the ROC curves of our method and the other models on NUAA-SIRST. As shown in the figure, our method still has certain advantages over other methods. Although the Pd of HoLoCoNet is slightly larger than that of our method when Fa is less than , ultimately, our method is significantly higher than HoLoCoNet when Fa is greater than .

Figure 7.

ROC Curve of all models on the NUDT-SIRST dataset.

Figure 8.

ROC Curve of all models on the NUAA-SIRST dataset.

4.5. Ablation Study

In order to better demonstrate the effectiveness and performance of each part of the proposed MLEDNet, we conducted detailed ablation studies on DNAM-ResCSAM and MLEEM-DNAM.

- (1) DNAM-ResCSAM: Obtained from the proposed MLEDNet by removing the MLEEM part, that is, by adding ResCSAM to the feature fusion part of the original DNANet.

- (2) MLEEM-DNAM: Obtained from the proposed MLEDNet by removing ResCSAM. This model is used to verify the role of the ResCSAM-based feature fusion module in the proposed model.

- (3) MLEDNet-noLHDC: LHDC is removed from MLEEM, and the rest remains the same as the proposed model.

- (4) MLEDNet-noLVDC: LVDC is removed from MLEEM, and the rest remains the same as the proposed model.

The results of the comparative experiments are shown in Table 3. DNAM-ResCSAM improves on DNANet in all three metrics on both datasets, which indicates that introducing ResCSAM in the feature fusion part of DNANet effectively enhances performance. The decrease in the Fa of DNAM-ResCSAM compared with DNANet on both datasets verifies the effectiveness of ResCSAM in suppressing the background. The performance of MLEEM-DNAM has also improved to a certain extent compared with DNANet, which shows that adding MLEEM enhances DNANet. The improvement of DNAM-ResCSAM over DNANet on the two datasets is slightly smaller than that of MLEEM-DNAM over DNANet. Since MLEEM enhances edge features at the shallow layers of the network, while ResCSAM relies on the aggregation of high-level deep features and requires the support of shallow-layer modules, MLEEM brings the larger improvement. Table 3 also shows that the metrics of MLEDNet-noLHDC and MLEDNet-noLVDC are lower than those of our full model but still better than those of DNANet. This result shows that removing either LHDC or LVDC degrades the model performance.

Table 3.

Results of ablation experiments on NUDT-SIRST and NUAA-SIRST.

Finally, our model achieved the best metrics among all compared variants. In particular, compared with DNANet, the nIoU and Pd of our model are higher by 3.72% and 2.01%, respectively, on NUDT-SIRST, and Fa decreased by 4.6%. On NUAA-SIRST, nIoU and Pd exceeded 3.54%, respectively, and Fa decreased by 28.45%. These results verify the advantages of our improved method in maintaining the integrity of the target, enhancing the detection probability, and reducing false detections.

While NUDT-SIRST and NUAA-SIRST serve as benchmark datasets for IRSTD, they exhibit inherent biases. For example, both datasets lack dynamic elements (e.g., moving clouds and ocean waves), which limits the validation of model robustness under motion blur. In future work, the model will be tested on additional datasets.

5. Conclusions

IRSTD remains fundamentally challenged by low signal-to-noise ratios, weak target signatures, and complex clutter in real-world environments. To overcome the limitations of existing methods in simultaneously capturing subtle edge features and suppressing background interference, we proposed MLEDNet, a novel architecture that integrates MLEEM and ResCSAM-FFM within a densely nested framework. The proposed MLEEM extracts discriminative directional edge information through learnable kernels, while ResCSAM-FFM enables cross-level feature fusion by adaptively weighting spatial-channel contexts. Comprehensive evaluations on the NUDT-SIRST and NUAA-SIRST datasets validate MLEDNet’s state-of-the-art performance, where it outperforms existing methods across the critical metrics Pd, Fa, and nIoU. These results confirm that the explicit modeling of directional edges coupled with attention-based feature fusion substantially advances IRSTD capabilities in complex environments. Future work will explore lightweight implementations for real-time deployment.

Author Contributions

Conceptualization: Y.L.; methodology: Y.L.; software: Y.L.; validation: Y.L., W.K. and W.Z.; formal analysis: X.L.; investigation: X.L.; data curation: Y.L.; writing—original draft preparation: Y.L.; writing—review and editing: Y.L.; visualization: Y.L., W.K. and W.Z.; supervision: X.L.; project administration: W.K.; funding acquisition: X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Research and Development Program of Hunan Province, China (2024AQ2023) and Major Science and Technology Research Projects in Hunan Province (Project Numbers: 2025QK2008 and 2024QK2010).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ma, T.; Guo, G.; Li, Z.; Yang, Z. Infrared small target detection method based on high-low-frequency semantic reconstruction. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6012505.

- Zang, D.; Su, W.; Zhang, B.; Liu, H. DCANet: Dense Convolutional Attention Network for infrared small target detection. Measurement 2025, 240, 115595.

- Rawat, S.S.; Verma, S.K.; Kumar, Y. Review on recent development in infrared small target detection algorithms. Procedia Comput. Sci. 2020, 167, 2496–2505.

- Liu, Y.; Huang, H.; Zhao, Q.; Pu, C.; Yang, L. An Asymmetric Intensive Interactive Fusion Network for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2025, 22, 6002605.

- Wang, X.; Han, C.; Li, J.; Nie, T.; Li, M.; Wang, X.; Huang, L. Multiscale Feature Extraction U-Net for Infrared Dim- and Small-Target Detection. Remote Sens. 2024, 16, 643.

- Zhao, M.; Li, W.; Li, L.; Hu, J.; Ma, P.; Tao, R. Single-frame infrared small-target detection: A survey. IEEE Geosci. Remote Sens. Mag. 2022, 10, 87–119.

- Han, J.; Moradi, S.; Faramarzi, I.; Zhang, H.; Li, N. Infrared small target detection based on the weighted strengthened local contrast measure. IEEE Geosci. Remote Sens. Lett. 2021, 18, 1670–1674.

- Hou, Q.; Wang, Z.; Tan, F.; Zhao, Y.; Zhang, W. RISTDnet: Robust Infrared Small Target Detection Network. IEEE Geosci. Remote Sens. Lett. 2021, 99, 7000805.

- Li, B.; Xiao, C.; Wang, L.; Wang, L.; Lin, Z.; Li, M.; An, W.; Guo, Y. Dense Nested Attention Network for Infrared Small Target Detection. IEEE Trans. Image Process. 2023, 32, 1745–1758.

- Zhang, T.; Li, L.; Cao, S.; Pu, T.; Peng, Z. Attention-Guided Pyramid Context Networks for Detecting Infrared Small Target Under Complex Background. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 4250–4261.

- Guo, H.; Zhang, N.; Zhang, J.; Zhang, W.; Sun, C. Location-Guided Dense Nested Attention Network for Infrared Small Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 18535–18548.

- Wu, X.; Hong, D.; Chanussot, J. UIU-Net: U-Net in U-Net for infrared small object detection. IEEE Trans. Image Process. 2022, 32, 364–376.

- Rivest, J.; Fortin, R. Detection of dim targets in digital infrared imagery by morphological image processing. Opt. Eng. 1996, 35, 1886–1893.

- Chen, C.; Li, H.; Wei, Y.; Xia, T.; Tang, Y. A local contrast method for small infrared target detection. IEEE Trans. Geosci. Remote Sens. 2013, 52, 574–581.

- Wu, L.; Ma, Y.; Fan, F.; Wu, M.; Huang, J. A double-neighborhood gradient method for infrared small target detection. IEEE Geosci. Remote Sens. Lett. 2021, 10, 1476–1480.

- Gao, C.; Meng, D.; Yang, Y.; Wang, Y.; Zhou, X.; Hauptmann, A.G. Infrared patch-image model for small target detection in a single image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

- Dai, Y.; Wu, Y.; Zhou, F.; Barnard, K. Asymmetric Contextual Modulation for Infrared Small Target Detection. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 949–958.

- Yang, J.; Deng, S.; Zhang, F.; Pan, A.; Yang, Y. FATCNet: Feature Adaptive Transformer and CNN for Infrared Small Target Detection. IEEE Trans. Aerosp. Electron. Syst. 2024, 6, 9231–9246.

- Wu, H.; Huang, X.; He, C.; Xiao, H.; Luo, S. Infrared Small Target Detection With Swin Transformer-Based Multiscale Atrous Spatial Pyramid Pooling Network. IEEE Trans. Instrum. Meas. 2025, 74, 5003914.

- Liu, Q.; Liu, R.; Zheng, B.; Wang, H.; Fu, Y. Infrared small target detection with scale and location sensitivity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 17490–17499.

- Zhang, S.; Wang, Z.; Xing, Y.; Lin, Y.; Su, X.; Zhang, Y. SCAFNet: Semantic-Guided Cascade Adaptive Fusion Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5007712.

- Du, X.; Cheng, K.; Zhang, J.; Wang, Y.; Yang, F.; Zhou, W.; Lin, Y. Infrared Small Target Detection Algorithm Based on Improved Dense Nested U-Net Network. Sensors 2025, 25, 814.

- Lin, F.; Ge, S.; Bao, K.; Yan, C.; Zeng, D. Learning Shape-Biased Representations for Infrared Small Target Detection. IEEE Trans. Multimed. 2024, 26, 4681–4692.

- Li, Q.; Zhang, M.; Yang, Z.; Yuan, Y.; Wang, Q. Edge-Guided Perceptual Network for Infrared Small Target Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5643510.

- Zhang, L.; Luo, J.; Huang, Y.; Wu, F.; Cui, X.; Peng, Z. MDIGCNet: Multidirectional Information-Guided Contextual Network for Infrared Small Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 2063–2076.

- Zhang, X.; Zheng, X.; Wu, C.; Ji, P.; Ru, J. A Gradient Vector Self-Learning Network for Infrared Small Target Detection. IEEE Geosci. Remote Sens. Lett. 2024, 21, 7002105.

- Liu, C.; Song, X.; Yu, D.; Qiu, L.; Xie, F.; Zi, Y.; Shi, Z. Infrared Small Target Detection Based on Prior Guided Dense Nested Network. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5002015.

- Zhang, M.; Zhang, R.; Yang, Y.; Bai, H.; Zhang, J.; Guo, J. ISNet: Shape matters for infrared small target detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 877–886.

- Zhan, W.; Zhang, C.; Guo, S.; Guo, J.; Shi, M. EGISD-YOLO: Edge Guidance Network for Infrared Ship Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 10097–10107.

- Wang, S. Automated non-PPE detection on construction sites using YOLOv10 and transformer architectures for surveillance and body worn cameras with benchmark datasets. Sci. Rep. 2025, 15, 27043.

- Yu, Z.; Zhao, C.; Wang, Z.; Qin, Y.; Su, Z.; Li, X.; Zhou, F.; Zhao, G. Searching central difference convolutional networks for face anti-spoofing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5295–5305.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).