1. Introduction

Text detection in natural scenes is a crucial research direction in the field of computer vision, with wide-ranging applications in areas such as autonomous navigation, augmented reality, and intelligent document analysis. In recent years, deep learning-based methods have significantly improved the performance of text detection, achieving remarkable results on modern datasets such as ICDAR and COCO-Text [1,2]. However, most existing studies focus on general scenarios involving English or modern Chinese characters, while research on the detection of ancient Chinese scene text, such as stone inscriptions, bamboo slips, ancient books, or calligraphic works, remains extremely limited.

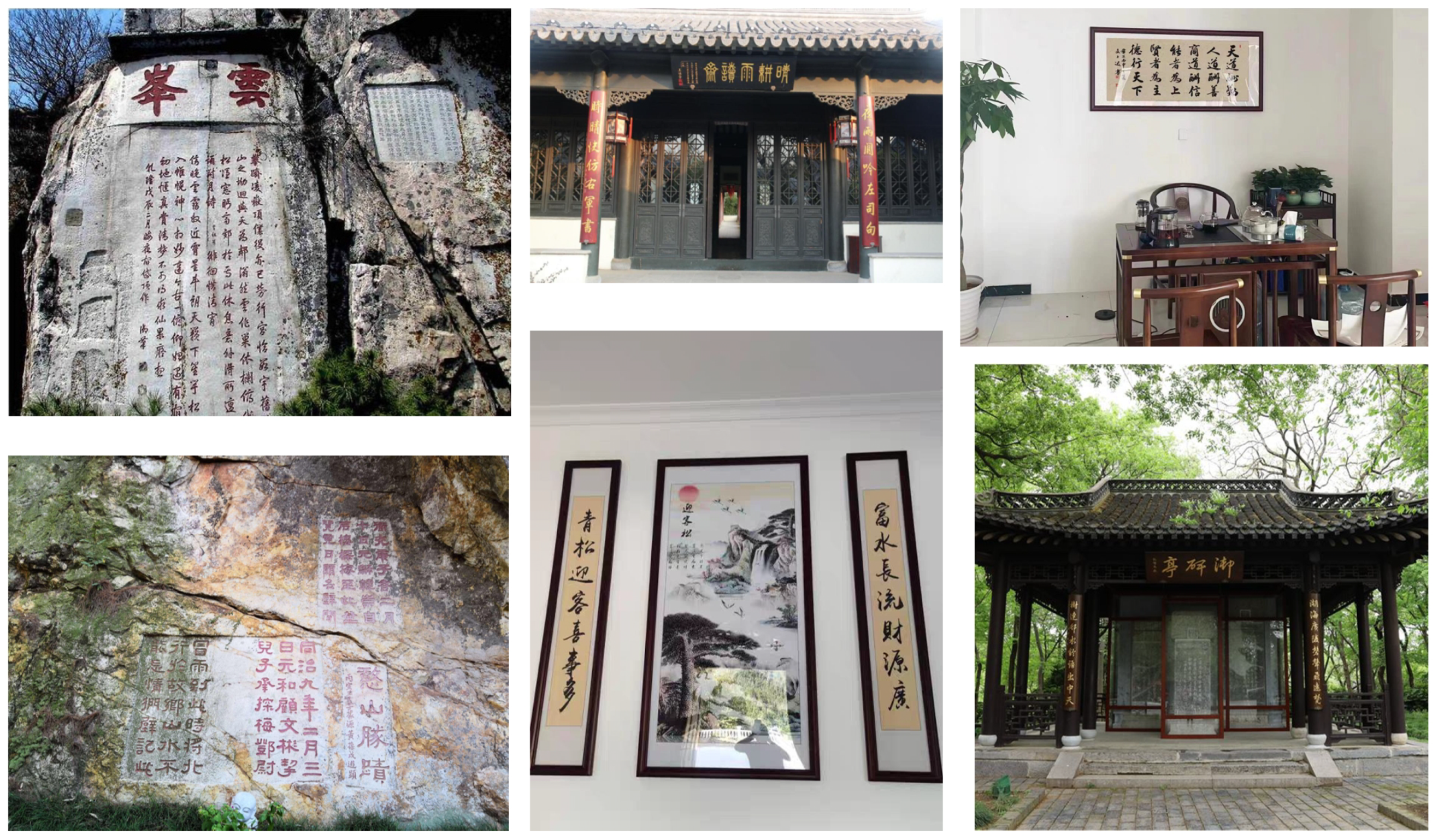

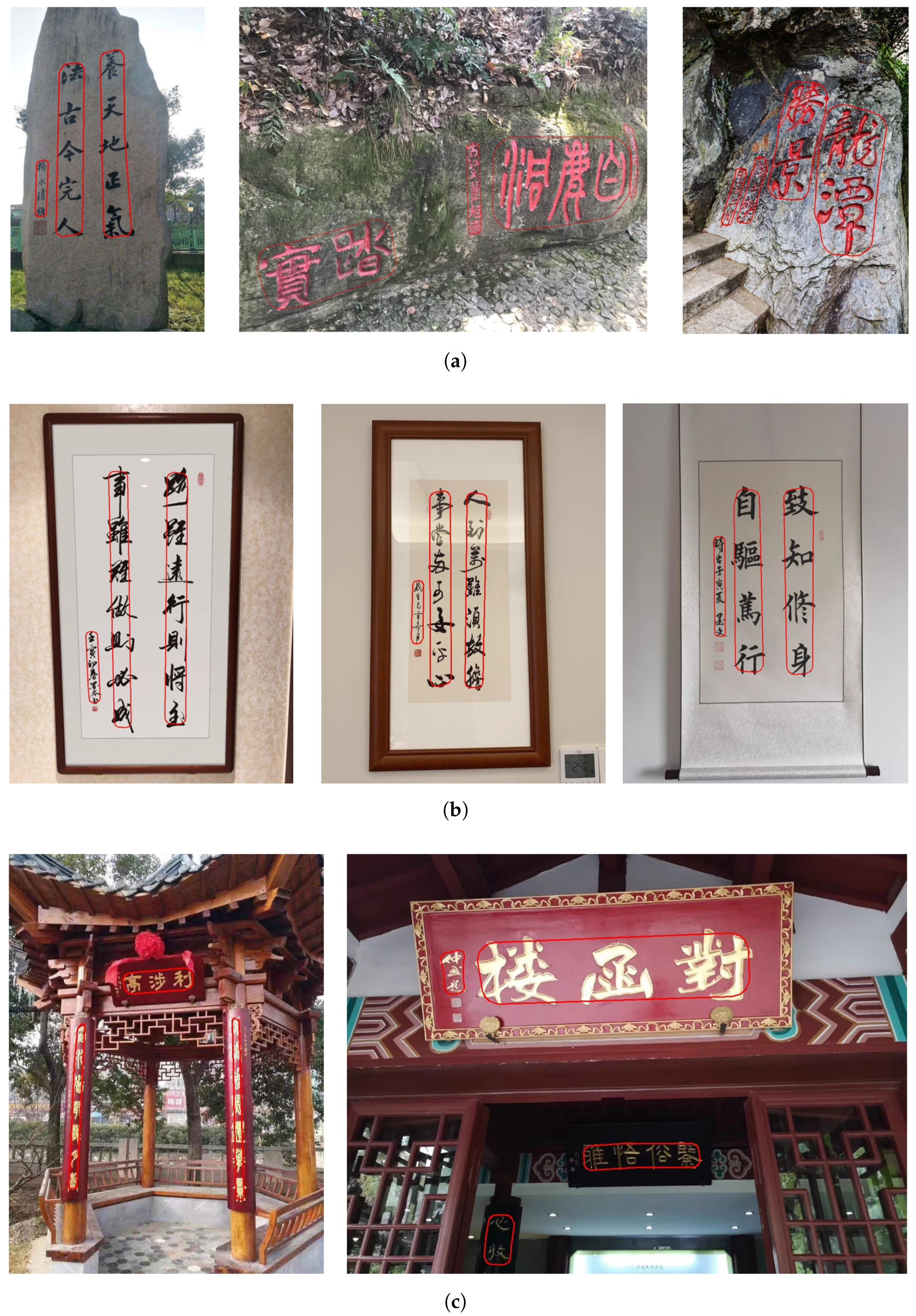

Ancient Chinese text detection plays a vital role in the digital preservation of cultural heritage and provides technical support for interdisciplinary research in archaeology, linguistics, history, and related fields. However, it presents a range of unique challenges: the diversity of script styles (e.g., seal script, clerical script, regular script), the complexity of historical evolution (e.g., oracle bone script, bronze inscriptions), and common issues such as stroke adhesion, character deformation, damaged layouts, irregular line spacing, and skewed or curved arrangements. Additionally, ancient texts often exhibit extreme aspect ratio variations and are affected by complex background textures (such as stone grain, paper degradation, and ink diffusion), as illustrated in Figure 1. These characteristics greatly increase the difficulty of detection, which is further compounded by the lack of dedicated datasets.

Among previous text detection models, EAST [3] achieves efficient geometric shape prediction through a feature fusion strategy similar to an FPN. PSENet [4], DBNet [5], and DBNet++ [6] utilize FPNs to enhance the segmentation accuracy of arbitrarily shaped text, making them suitable for curved text and real-time scenes. CRAFT [7] captures character-level features using an FPN, making it suitable for high-density text. PAN [8] combines FPNs to achieve lightweight detection, making it adaptable to resource-constrained devices. However, for text detection tasks, truly useful features must contain both detailed information and semantic information about the target, and the neural network should fully exploit both. In existing feature pyramid architectures [9,10,11,12], the high-level features at the top of the pyramid must be propagated through multiple intermediate scales, interacting with features at these scales before being fused with the low-level features at the bottom. During this propagation and interaction, semantic information from higher-level features may be lost or degraded. This issue becomes especially severe for ancient texts affected by complex background noise and significant deformation, leading to a notable decline in detection performance.

In recent years, several researchers have proposed dedicated models for the detection of ancient Chinese characters, such as RGD [13] and AncientGlyphNet [14]. These models have made notable progress in addressing the unique characteristics of ancient scripts, laying a preliminary foundation for the digitization of ancient documents. However, with the rapid advancement of optical character recognition (OCR) technology and the evolving demands of real-world applications, the technical paradigm of modern OCR systems has undergone a significant shift. Increasingly, state-of-the-art recognition models are focusing on text-line recognition tasks, reflecting the growing need for continuous text understanding and contextual semantic analysis in practical scenarios. Text-line recognition not only enhances recognition accuracy but also improves the handling of semantic relationships and contextual information between characters, thereby enabling more intelligent document understanding. This technological shift poses adaptation challenges for traditional character-level detection models. Early models that focused on the detection of individual characters differ significantly from text-line recognition models in terms of architectural design, output format, and feature representation, making it difficult to achieve seamless technical integration and system interoperability between the two. Specifically, the discrete character-level outputs generated by single-character detection models cannot directly fulfill the input requirements of text-line recognition models, which expect continuous text regions. This mismatch has become a major bottleneck, severely limiting the overall performance and practical utility of OCR systems for ancient Chinese texts.

To address the challenges associated with ancient Chinese scene text detection, this study constructs a dedicated dataset covering a variety of real-world scenarios and proposes a novel method, the dynamic feature fusion upsampling and text-region focus network (DRA-Net), for ancient Chinese scene text detection. Specifically, we first design a dynamic fusion upsampling module, which leverages a gating mechanism to dynamically fuse high-level and low-level features, thereby avoiding the large semantic gap that often arises in multi-scale feature integration. Subsequently, we introduce an adaptive text-region focus module, aimed at guiding the model to more effectively attend to foreground text regions while suppressing the interference of background noise. Experimental results demonstrate that the proposed model achieves state-of-the-art performance on the constructed ancient Chinese scene text detection dataset under complex and diverse conditions. The code and dataset are available at https://github.com/AnkhXXX/DRA-Net (accessed on 14 July 2025).

The main contributions of this paper are as follows:

We construct a complex-scene ancient Chinese scene text detection (ACST) dataset. This dataset includes diverse instances of ancient Chinese characters in natural scenes, such as stone inscriptions, couplets, ancient books, and calligraphic scrolls, covering a wide range of script styles from different historical periods.

We propose a novel dynamic feature fusion upsampling and text-region focus network, DRA-Net, for ancient Chinese scene text detection. Extensive comparisons with several mainstream methods on the ACST dataset demonstrate that our method achieves superior performance across multiple evaluation metrics, validating its effectiveness in complex ancient text scenarios.

We introduce the adaptive text-region focus module, which incorporates axial context modeling and a shape self-calibration mechanism to guide the model in accurately focusing on text regions. This effectively addresses the interference and confusion between ancient texts and complex backgrounds.

We propose the dynamic fusion upsampling module, which employs a gating mechanism to dynamically fuse high-level semantic features with low-level detailed features. This adaptive fusion helps retain both types of information while avoiding the large semantic gap typically present in conventional feature fusion strategies.

2. Related Works

2.1. Text Detection

Early scene text detection methods typically first detected individual characters or components and then combined them into words. Neumann and Matas [15] proposed locating characters by classifying extremal regions (ERs), formulating character detection as an efficient sequential selection from a set of extremal regions; the detected ERs were then grouped into words. Jaderberg et al. [16] first used a proposal generator to produce candidate words, then filtered these candidates with a random forest classifier, and finally refined the remaining candidates with a regression network.

Recently, deep learning has come to dominate the field of scene text detection. Deep learning-based scene text detection methods can generally be divided into two categories based on the granularity of the predicted targets: regression-based methods and segmentation-based methods.

Regression-based methods directly regress the bounding boxes of text instances. TextBoxes [17] adapts the default boxes (anchors) and the convolutional kernel shapes of SSD [18] to text detection. TextBoxes++ [2] and DMPNet [19] apply quadrilateral regression to detect multi-oriented text. SSTD [20] uses an attention mechanism to roughly identify text regions. RRD [21] decouples classification and regression by using rotation-invariant features for classification and rotation-sensitive features for regression, leading to better performance on multi-oriented and long text instances. EAST [3] achieves multi-angle text detection by regressing rotation angles and vertex coordinates. PCR [22] employs an iterative regression process to refine the bounding boxes. ABCNet [23] and FCENet [24] model text boundaries with Bézier curves and Fourier contours, respectively, to predict arbitrarily shaped text. DPText-DETR [25] uses an enhanced factorized self-attention module to explicitly capture circular-shaped structures, thereby enhancing performance.

Segmentation-based algorithms predict pixel-level masks to precisely locate text regions. PANet [10] adopts a lightweight structure and simplifies post-processing to improve the speed of text detection. CRAFT [7] uses a weakly supervised model for character-level image segmentation, improving detection accuracy even when only text-line annotations are available for real-world samples. To detect curved text, PSENet [4] progressively enlarges the scale of text instances to accurately capture the shape information of the text, thereby improving detection accuracy. DBNet [5] and DBNet++ [6] define differentiable binarization modules, simplifying post-processing while enhancing detection performance. RSCA [26] employs Local Context-Aware Upsampling (LCAU) in the decoding stage to dynamically reassemble features using local attention weights, capturing curved spatial transformations without relying on global self-attention. MobileTDNet [27] integrates an ECA channel attention block to enhance text saliency.

Although the above algorithms are capable of detecting text in arbitrary shapes, their final performance remains limited due to the lack of sufficient high-level semantic information and their inability to effectively resolve the confusion between ancient texts and complex backgrounds. To address this issue, this paper introduces the adaptive text-region focus module.

2.2. Feature Pyramid Network

A feature pyramid network (FPN) [9] adopts a top-down pathway to propagate high-level features to low-level features, achieving feature fusion across different levels. However, in this process, high-level features are not sufficiently fused with low-level features. To address this, PAFPN [10] adds a bottom-up path on top of an FPN, allowing high-level features to acquire details from low-level features. Unlike fixed network architectures, NASFPN [28] uses a neural architecture search algorithm to automatically find the optimal connection structure. Recently, ideas from other areas have also been applied to feature pyramid design. For instance, GraphFPN [10] uses graph neural networks to exchange and propagate information across the feature pyramid, and FPT [29] introduces the self-attention mechanism from natural language processing to extract features at different levels and uses a multi-scale attention network to aggregate them. Although GraphFPN enables direct interaction between non-adjacent levels, its reliance on graph neural networks significantly increases the number of parameters and the computational complexity; FPT suffers from similar issues. In contrast, AFPN introduces only standard convolutional components. To address the above challenges, this paper designs a dynamic fusion upsampling module that fuses features from different levels while avoiding the introduction of a large semantic gap.

3. Method

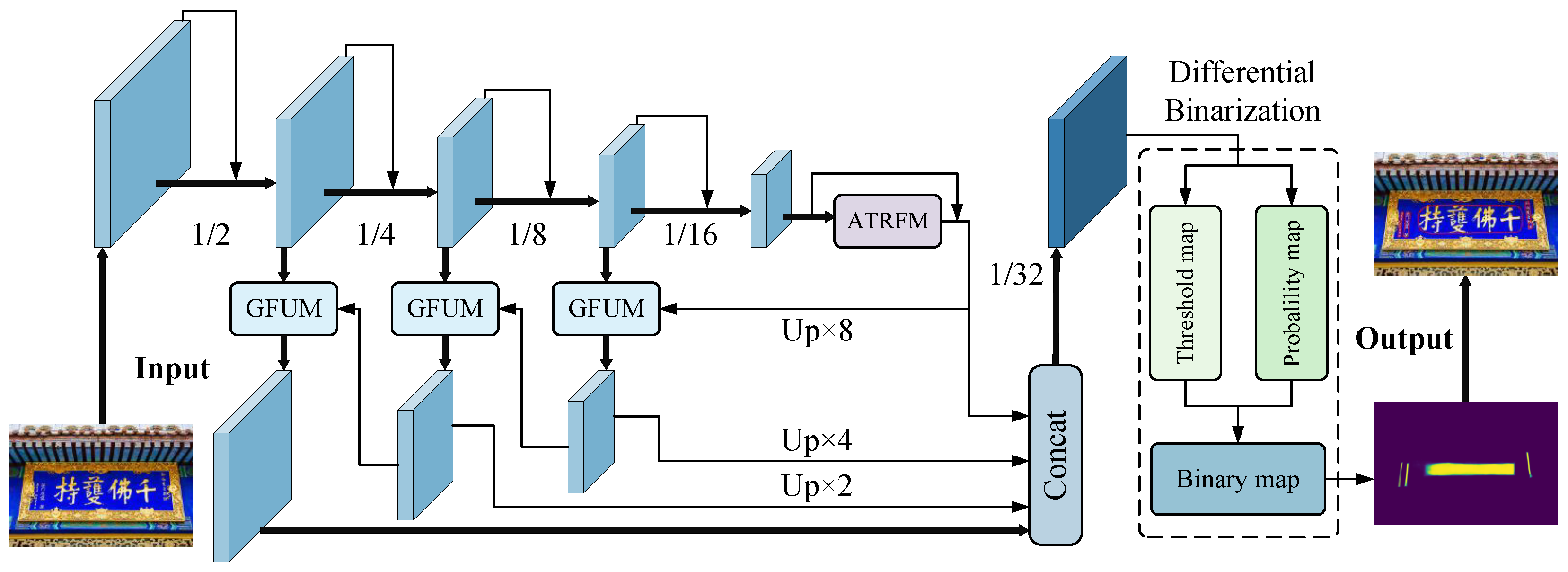

Figure 2 illustrates the architecture of the proposed method. First, the input image is fed into ResNet-18 to extract image features. The features extracted from the final layer are passed to the adaptive text-region focus module, and the resulting features are then fed into the feature pyramid network. The pyramid features are progressively fused through the dynamic fusion upsampling module to generate the fused feature map F. Next, F is used to predict the probability map P and the threshold map T, from which an approximate binary map B is computed. During training, supervision is applied to the probability map, the threshold map, and the approximate binary map; the probability map and the approximate binary map share the same supervision. During inference, a box formation module can extract bounding boxes from either the approximate binary map or the probability map.
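The box formation step at inference can be illustrated with a minimal sketch. It assumes a simplified procedure: binarize the probability map with a fixed threshold (the 0.3 default is an assumption), group foreground pixels into 4-connected components, and report an axis-aligned box per component. The function name `extract_boxes` and the `min_area` filter are hypothetical. DBNet-style post-processing additionally dilates ("unclips") each shrunk region and can output rotated or polygonal boxes; that step is omitted here.

```python
from collections import deque


def extract_boxes(prob_map, threshold=0.3, min_area=2):
    """Hypothetical box formation: threshold, 4-connected components, axis-aligned boxes.

    prob_map is a 2D list of probabilities; returns a list of (x_min, y_min, x_max, y_max).
    """
    h, w = len(prob_map), len(prob_map[0])
    binary = [[prob_map[i][j] > threshold for j in range(w)] for i in range(h)]
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for i in range(h):
        for j in range(w):
            if not binary[i][j] or seen[i][j]:
                continue
            # Breadth-first search collects one connected text region.
            queue = deque([(i, j)])
            seen[i][j] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) < min_area:
                continue  # discard tiny components, which are likely noise
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes
```

Because the probability-map label is a shrunk version of each text polygon, a full implementation would expand each component before reporting it. Without that expansion, the boxes would systematically undershoot the true text extent.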

3.1. Adaptive Text-Region Focus Module

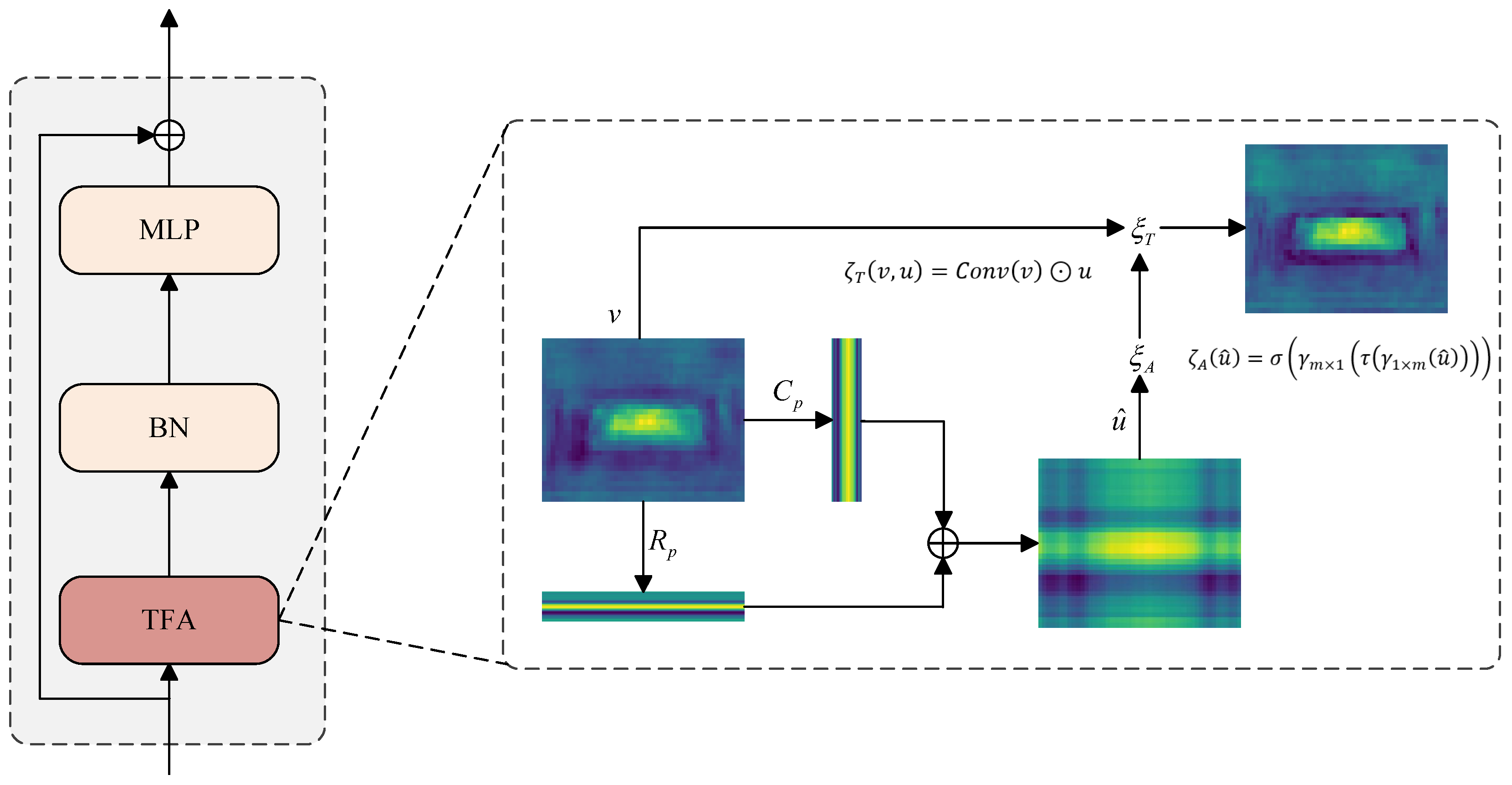

The adaptive text-region focus module (ATRFM) is designed to enhance the model’s ability to focus on text regions in complex scenes, particularly where text and background are intertwined and layouts are irregular. By effectively capturing axial global contextual information, this module helps the model better distinguish text regions from the background, thereby improving detection accuracy. The ATRFM consists of a text-focus attention (TFA) module, batch normalization (BN), and a multilayer perceptron (MLP).

The ATRFM captures axial global contextual information by introducing horizontal and vertical pooling operations. Based on this, two independent axial vectors are generated to effectively model the text regions. Subsequently, a shape calibration function is designed to precisely adjust the target regions, making them better aligned with the foreground objects. During this process, large-kernel strip convolutions are employed to adaptively refine the attention maps along the horizontal and vertical axes, enabling adaptive shape calibration of text regions. Specifically, horizontal strip convolutions are first applied to adjust the horizontal morphology of the text regions, bringing them closer to the foreground areas. This is followed by batch normalization (BN) and ReLU activation to introduce non-linearity. Finally, vertical strip convolutions are used to further refine the vertical morphology of the text regions, allowing the model to effectively handle text regions of various shapes.

The weights of these strip convolutions are learnable, and the shape of the rectangular region is collaboratively adjusted by the horizontal and vertical strip convolutions, resulting in more precise focusing on text regions. This learning process enables the model to adaptively adjust the target regions based on the training data, thereby improving the accuracy of text detection. Given the axial context feature y obtained from the horizontal and vertical pooling, the shape calibration function is

$$\zeta_C(y) = \sigma\left(\psi_{m \times 1}\left(\delta\left(\psi_{1 \times m}(y)\right)\right)\right)$$

where $\psi_{1 \times m}$ and $\psi_{m \times 1}$ denote the horizontal and vertical large-kernel strip convolutions, m is the kernel size, $\delta(\cdot)$ represents batch normalization (BN) followed by the ReLU activation function, and $\sigma(\cdot)$ is the sigmoid activation function.

To further enhance the model’s ability to focus on text regions, we design a feature fusion function. Specifically, a depthwise convolution is employed to extract local details from the input features X. The calibrated attention features are then weighted and fused with the refined input features via the Hadamard product, thereby further strengthening the model’s attention to the foreground text. The specific operation is as follows:

$$\zeta_F\left(\zeta_C(y), X\right) = \zeta_C(y) \odot \phi(X)$$

where $\zeta_C(y)$ is the calibrated attention map produced by the shape calibration function, $\phi(\cdot)$ denotes the depthwise convolution, and ⊙ represents the Hadamard product, i.e., element-wise multiplication of the features.

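Finally, a residual connection is employed to enhance feature reuse, thereby improving overall model performance. As illustrated in Figure 3, the overall architecture of the ATRFM can be expressed as

$$F_{\text{out}} = \rho\left(\zeta_F\left(\zeta_C\left(\mathcal{H}_P(X) \oplus \mathcal{V}_P(X)\right), X\right)\right) + X$$

where $\zeta_C$ and $\zeta_F$ are the shape calibration and feature fusion functions, $\rho(\cdot)$ denotes BN followed by the MLP, ⊕ denotes the broadcasting addition operation, and $\mathcal{H}_P(\cdot)$ and $\mathcal{V}_P(\cdot)$ represent horizontal pooling and vertical pooling, respectively.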
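To make the ATRFM data flow concrete, here is a minimal single-channel sketch in plain Python. It follows the steps described above: axial pooling, broadcasting addition, horizontal strip convolution, nonlinearity, vertical strip convolution, sigmoid, a Hadamard product with depthwise-convolved input features, and a residual connection. Several simplifications are assumptions. Average pooling is used for the axial pooling. BN is omitted, so only ReLU remains between the strip convolutions. With one channel, the depthwise convolution reduces to an ordinary 2D convolution, and the BN + MLP stage is replaced by a scalar `mlp_scale`. The function names are hypothetical, and the sketch is not the authors' implementation.

```python
import math


def conv2d_same(x, k):
    """'Same'-size 2D cross-correlation with zero padding (odd kernel sizes)."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    yy, xx = i + a - ph, j + b - pw
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += k[a][b] * x[yy][xx]
            out[i][j] = acc
    return out


def atrfm_single_channel(x, k_horizontal, k_vertical, k_depthwise, mlp_scale=1.0):
    """Toy ATRFM forward pass on one H x W channel; returns (output, attention map)."""
    h, w = len(x), len(x[0])
    # Horizontal pooling: one average per row (H values).
    row_ctx = [sum(row) / w for row in x]
    # Vertical pooling: one average per column (W values).
    col_ctx = [sum(x[i][j] for i in range(h)) / h for j in range(w)]
    # Broadcasting addition of the two axial vectors gives an H x W axial context.
    ctx = [[row_ctx[i] + col_ctx[j] for j in range(w)] for i in range(h)]
    # Shape calibration: 1 x m strip conv -> ReLU (BN omitted) -> m x 1 strip conv -> sigmoid.
    t = conv2d_same(ctx, [list(k_horizontal)])
    t = [[max(0.0, v) for v in row] for row in t]
    t = conv2d_same(t, [[c] for c in k_vertical])
    attn = [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in t]
    # Feature fusion: Hadamard product of the attention map and the convolved input.
    local = conv2d_same(x, k_depthwise)
    fused = [[attn[i][j] * local[i][j] for j in range(w)] for i in range(h)]
    # Scalar stand-in for BN + MLP, followed by the residual connection.
    out = [[mlp_scale * fused[i][j] + x[i][j] for j in range(w)] for i in range(h)]
    return out, attn
```

With all-zero strip kernels, the attention map is uniformly 0.5. This is a convenient sanity check: with an identity 3 × 3 kernel for the depthwise step, the output becomes 1.5 × the input.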

3.2. Dynamic Fusion Upsampling Module

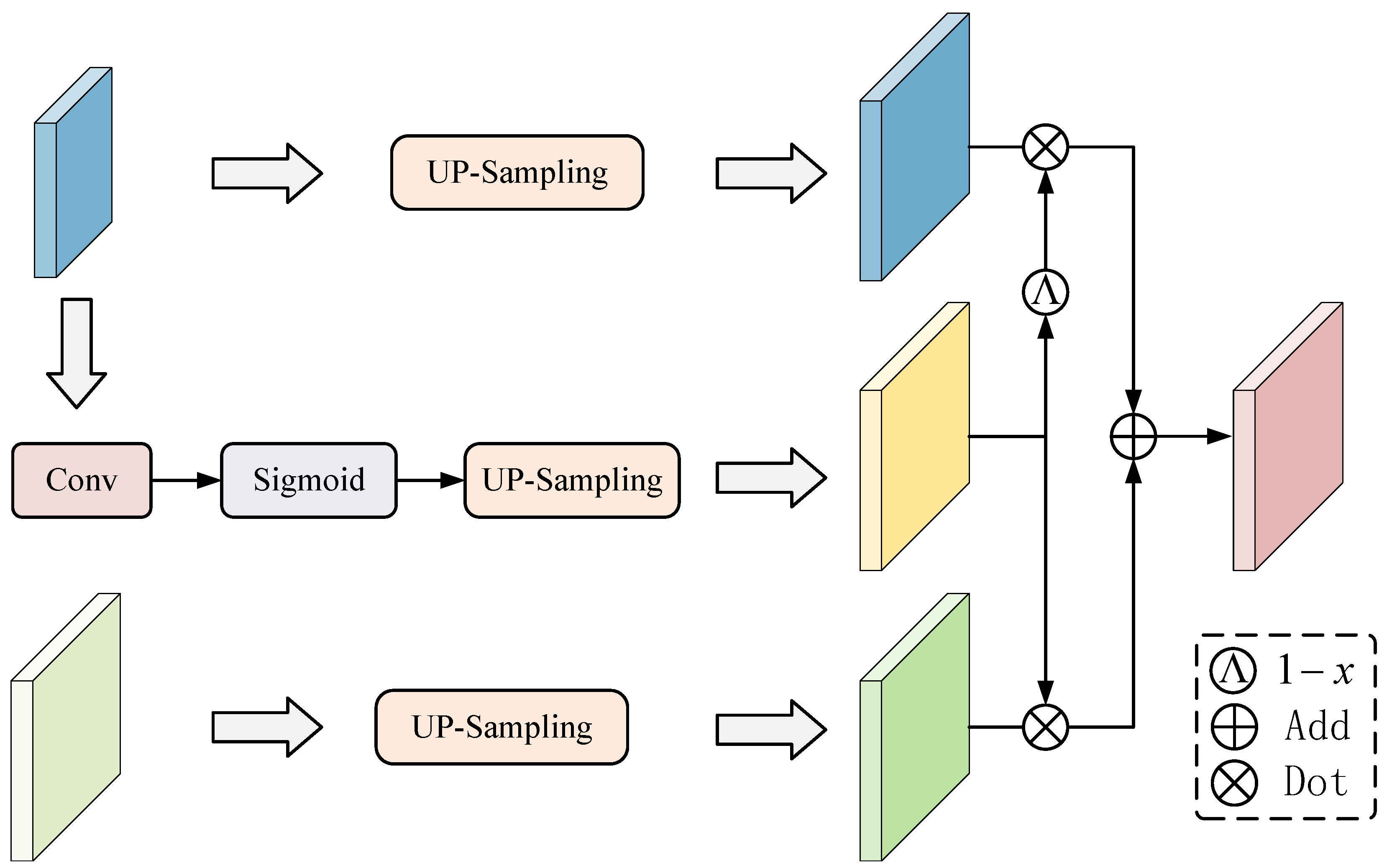

Because features at different scales have different receptive fields and perceptual capabilities, they are suited to describing text instances of different sizes. For instance, shallow (large-scale) features can perceive the details of small text instances but struggle to capture the global view of large text instances. Conversely, deep (small-scale) features struggle to perceive the details of small text instances but excel at capturing the global view of large text instances. To fully utilize features at different scales, semantic segmentation methods commonly use feature pyramid [9] or U-Net [30] structures. Unlike most semantic segmentation methods, which simply concatenate or sum features from different scales, the dynamic fusion upsampling module (DFUM) proposed in this paper dynamically fuses features at different scales while preserving both high-level semantic features and low-level detailed features, as shown in Figure 4.

The DFUM relies on a gating mechanism that dynamically adjusts the fusion strategy by controlling the fusion ratio of features from different layers. Negative weights during feature fusion could intensify feature confusion and mistakenly suppress informative text features, so we adopt the sigmoid function to constrain the gating values to the range (0, 1). Let the input feature maps be $F_h$ (from a higher layer) and $F_l$ (from a lower layer). We first apply a convolution to $F_h$ to generate a gating value G:

$$G = \sigma\left(\mathrm{Conv}(F_h)\right)$$

where $\sigma(\cdot)$ is the sigmoid function.

The gating value maps the importance of the high-level feature map to a value between 0 and 1, representing its weight in the fusion process. It determines the fusion ratio between high-level and low-level features: if the gating value is close to 1, the high-level features play a greater role in the fusion; if it is close to 0, the low-level features dominate. Next, both the gating value and the high-level feature map are upsampled to match the spatial resolution of the low-level feature map, and fusion is performed using the following equation:

$$F_{\text{fused}} = \mathrm{Up}(G) \odot \mathrm{Up}(F_h) + \left(1 - \mathrm{Up}(G)\right) \odot F_l$$

where G is the gating value computed from the high-level feature map $F_h$, $F_l$ is the low-level feature map, $\mathrm{Up}(\cdot)$ denotes upsampling to the resolution of $F_l$, and ⊙ is the Hadamard product.

In this fusion process, the gating value G assigns complementary weights to the upsampled high-level feature map and the low-level feature map. When G approaches 1, the high-level feature map has greater influence, and the network relies more on its global semantic information. When G approaches 0, the low-level feature map has greater influence, and the network focuses more on low-level detailed information. This adaptive fusion enables the model to select the appropriate feature representation for different text conditions, which is crucial in complex backgrounds, especially in ancient Chinese text scenes, where text size, font, and background complexity often differ significantly. Fusion schemes with fixed weights, such as plain summation or concatenation, cannot adapt the balance between low-level detailed features and high-level semantic features to these variations. The DFUM instead adjusts the fusion ratio adaptively, ensuring that the network captures both detailed and semantic features and thereby providing more accurate detection results in complex ancient Chinese text scenes.
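The gated fusion can be sketched on single-channel maps as follows. The sketch makes several assumptions. The convolution that produces the gate is taken to be 1 × 1, which for one channel is just an affine map `weight * v + bias`. Upsampling is nearest-neighbour with a factor of 2. The names `dfum_single_channel` and `upsample_nearest_2x` are hypothetical. The point of the sketch is that the sigmoid gate turns fusion into a per-pixel convex combination, so each fused value lies between the corresponding upsampled high-level and low-level values.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def upsample_nearest_2x(m):
    """Nearest-neighbour 2x upsampling of a 2D list."""
    out = []
    for row in m:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def dfum_single_channel(f_high, f_low, weight=1.0, bias=0.0):
    """Toy DFUM: gate from the high-level map, upsample, then convex fusion."""
    # Gate: an assumed 1x1 convolution (affine map on one channel) followed by sigmoid.
    gate = [[sigmoid(weight * v + bias) for v in row] for row in f_high]
    # Upsample the gate and the high-level map to the low-level resolution.
    gate_up = upsample_nearest_2x(gate)
    high_up = upsample_nearest_2x(f_high)
    h, w = len(f_low), len(f_low[0])
    assert len(gate_up) == h and len(gate_up[0]) == w, "expects f_low at 2x the f_high size"
    # Gate near 1: high-level semantics dominate; gate near 0: low-level detail dominates.
    fused = [[gate_up[i][j] * high_up[i][j] + (1.0 - gate_up[i][j]) * f_low[i][j]
              for j in range(w)] for i in range(h)]
    return fused, gate
```

For example, a zero high-level value with `bias=0` gives a gate of 0.5, so the fused map is half of the low-level map. A strongly negative bias drives the gate toward 0, so the low-level map passes through almost unchanged.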

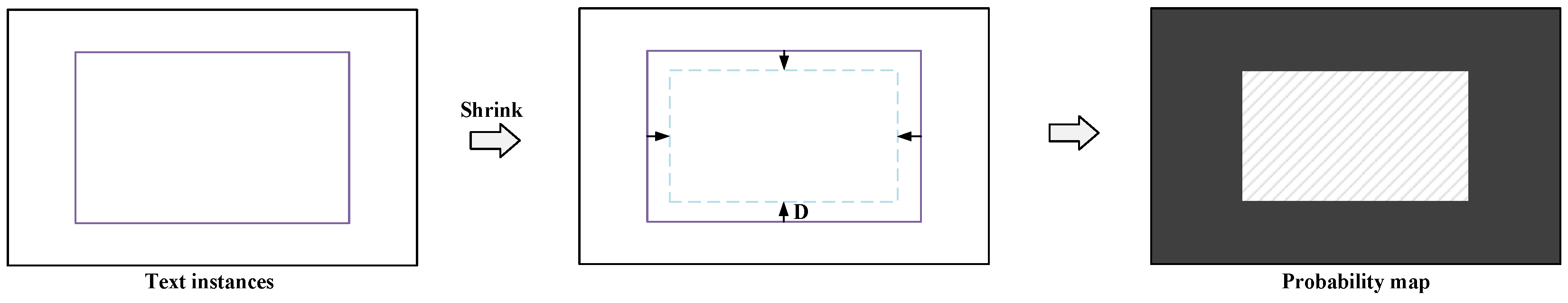

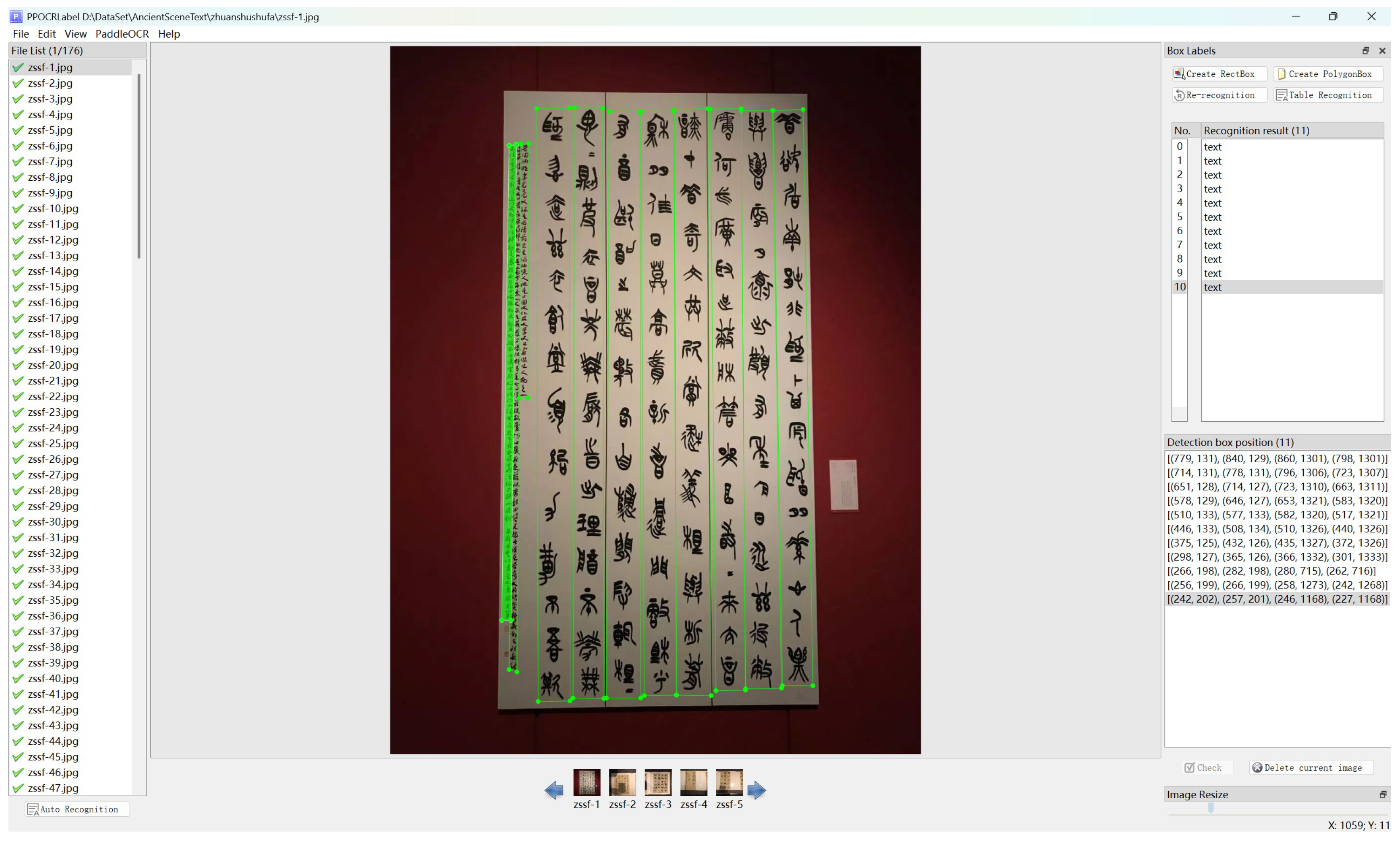

3.3. Differentiable Binarization

Because complex scenes in the ancient Chinese scene text detection dataset significantly affect text localization, this paper adopts a differentiable binarization module to reduce their impact and improve the accuracy of text localization. First, each text instance is shrunk and dilated to obtain the labels for the probability map P and the threshold map T. The network is then optimized using the approximate binary map generated from these two maps.

The label of the probability map P is generated using the Vatti clipping algorithm [31]: each text polygon is shrunk inward by D pixels, thereby separating the text and non-text regions. As shown in Figure 5, the black background represents the non-text area, while the white shaded region represents the text area, which forms the probability map label P. The clipping distance D is computed as

$$D = \frac{A\left(1 - r^{2}\right)}{p}$$

where A is the area of the polygon, r is the shrinkage ratio, which is set to 0.4, and p is the perimeter. Similarly, the threshold map T is derived by dilating each text polygon outward by D pixels using the Vatti clipping algorithm. The gray region between the shrunk polygon and the dilated polygon forms the threshold map T, as shown in Figure 6. The probability map and threshold map are used to generate the approximate binary map B via differentiable binarization:

$$B_{i,j} = \frac{1}{1 + e^{-n\left(P_{i,j} - T_{i,j}\right)}}$$

where (i, j) indexes pixel positions and n represents the scaling factor, which is set to 50. Because this function is differentiable, it is computed at every pixel during training, allowing the binarization step to be optimized jointly with the network.
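The two formulas in this subsection are simple to check numerically. The sketch below computes the clipping distance D = A(1 − r²)/p for a polygon, using the shoelace formula for the area, with r = 0.4. It also applies the differentiable binarization B = 1 / (1 + exp(−n(P − T))) element-wise with n = 50. The helper names are hypothetical, and the polygon offsetting itself (Vatti clipping) is not reproduced here.

```python
import math


def polygon_area_perimeter(pts):
    """Shoelace area and perimeter of a simple polygon given as [(x, y), ...]."""
    n = len(pts)
    area2, perim = 0.0, 0.0
    for k in range(n):
        x1, y1 = pts[k]
        x2, y2 = pts[(k + 1) % n]
        area2 += x1 * y2 - x2 * y1
        perim += math.hypot(x2 - x1, y2 - y1)
    return abs(area2) / 2.0, perim


def shrink_distance(pts, r=0.4):
    """Clipping distance D = A (1 - r^2) / p."""
    area, perim = polygon_area_perimeter(pts)
    return area * (1.0 - r * r) / perim


def approx_binary(p_map, t_map, n=50.0):
    """Differentiable binarization B = 1 / (1 + exp(-n (P - T))), element-wise."""
    return [[1.0 / (1.0 + math.exp(-n * (p - t))) for p, t in zip(p_row, t_row)]
            for p_row, t_row in zip(p_map, t_map)]
```

For a 10 × 10 square, A = 100 and p = 40, so D = 100 × 0.84 / 40 = 2.1 pixels. In the binarization, a pixel whose probability equals its threshold maps to exactly 0.5. Because n = 50 is large, a margin of a few tenths pushes the output very close to 0 or 1 while keeping the gradient defined.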

3.4. Deformable Convolution

In traditional convolution operations, the receptive field of the convolutional kernel is fixed: the kernel samples the input feature map on the same regular grid at every position as it slides. However, in ancient Chinese scene text detection, particularly for long text instances with greatly varying aspect ratios, traditional convolutions struggle to capture both the overall structure and the fine details of the text, which limits detection performance. To address this issue, deformable convolution is introduced into the conv3 and conv4 layers of ResNet-18 in this work. Deformable convolution learns offsets for its sampling locations and thereby dynamically adjusts the receptive field, allowing the model to adapt more flexibly to elongated, curved, or rotated text shapes. This significantly enhances the model’s ability to perceive and detect text instances.
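The core idea of deformable convolution, sampling at a regular grid position plus a learned fractional offset, can be sketched for a single 3 × 3 output location. In the sketch below, values at fractional positions are obtained by bilinear interpolation, and points outside the map count as zero. The offsets are passed in directly rather than predicted by a separate convolution branch, which is a simplification. The function names are hypothetical. In a PyTorch codebase, the equivalent batched operator is `torchvision.ops.deform_conv2d`.

```python
import math


def bilinear(x, py, px):
    """Bilinearly sample 2D list x at fractional (py, px); outside points count as 0."""
    h, w = len(x), len(x[0])
    y0, x0 = math.floor(py), math.floor(px)
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                weight = (1 - abs(py - yy)) * (1 - abs(px - xx))
                val += weight * x[yy][xx]
    return val


def deform_conv_at(x, kernel, offsets, cy, cx):
    """One output value of a 3x3 deformable convolution centred at (cy, cx).

    offsets[k] = (dy, dx) is the (here hand-supplied) offset of the k-th kernel tap,
    in row-major tap order.
    """
    out = 0.0
    k = 0
    for a in range(3):
        for b in range(3):
            dy, dx = offsets[k]
            # Regular grid position plus offset, sampled bilinearly.
            out += kernel[a][b] * bilinear(x, cy + a - 1 + dy, cx + b - 1 + dx)
            k += 1
    return out
```

With all offsets set to zero, the result equals an ordinary 3 × 3 convolution at that location. Non-zero offsets let the taps drift along a long or slanted stroke, which is the property the text relies on for elongated inscriptions.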

3.5. Optimization

The loss function L in this paper is the weighted sum of the losses of the probability map $L_s$, the approximate binary map $L_b$, and the threshold map $L_t$:

$$L = L_s + \alpha L_b + \beta L_t$$

where α and β are weighting coefficients. Considering that learning the threshold map is relatively challenging and has a significant impact on the final differentiable binarization result, α and β are set accordingly.

We apply binary cross-entropy (BCE) loss to $L_s$ and $L_b$. To address the class imbalance between positive and negative samples, hard negative mining is introduced during the BCE loss computation. Sampling hard negatives improves the model’s ability to recognize challenging samples.

$$L_s = L_b = -\sum_{i \in S_l} \left[ y_i \log x_i + (1 - y_i) \log (1 - x_i) \right]$$

where $S_l$ is the sampled set of positive and negative samples, with a positive-to-negative ratio of 1:3, $x_i$ is the predicted value, and $y_i$ is the label. $L_t$ is the sum of the L1 distances between the prediction and the ground truth:

$$L_t = \sum_{i \in R_d} \left| y_i^{*} - x_i^{*} \right|$$

where $R_d$ is the set of indices of pixels within the dilated polygon, $y_i^{*}$ is the label of the threshold map, and $x_i^{*}$ is the predicted threshold.
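The loss terms can be sketched on flattened pixel lists. The sketch keeps every positive pixel and only the hardest negatives (those with the largest BCE), capped at three times the number of positives, to match the 1:3 ratio. Losses are summed, following the formulas rather than averaged. The threshold loss sums absolute errors over the pixels inside the dilated polygon. The weights α and β are plain parameters here, because the specific values used by the authors are not reproduced in this section. All function names and the `eps` guard are illustrative assumptions.

```python
import math


def bce_with_hard_negatives(pred, gt, neg_ratio=3, eps=1e-6):
    """Summed BCE over all positives plus the hardest negatives (at most neg_ratio x #pos)."""
    losses = [-(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
              for p, y in zip(pred, gt)]
    pos = [l for l, y in zip(losses, gt) if y == 1]
    # Hard negative mining: keep the negatives with the largest loss.
    neg = sorted((l for l, y in zip(losses, gt) if y == 0), reverse=True)
    hard_neg = neg[: neg_ratio * len(pos)]
    return sum(pos) + sum(hard_neg)


def l1_threshold_loss(pred_t, gt_t, in_dilated):
    """Sum of |y* - x*| over pixels inside the dilated polygon (mask value truthy)."""
    return sum(abs(y - x) for x, y, m in zip(pred_t, gt_t, in_dilated) if m)


def total_loss(l_s, l_b, l_t, alpha, beta):
    """L = L_s + alpha * L_b + beta * L_t."""
    return l_s + alpha * l_b + beta * l_t
```

Note that an image region with no positive pixels selects no negatives under this rule. Practical implementations usually guard against that case, for example by keeping a minimum number of negatives.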

4. Experiments

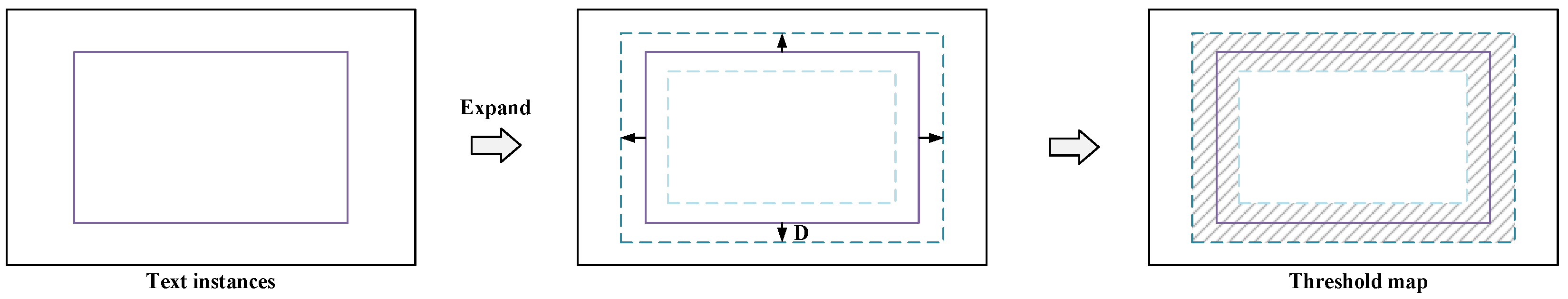

4.1. Ancient Chinese Scene Text Detection Dataset

This section introduces a complex ancient Chinese scene text detection dataset comprising various categories such as stone carvings, calligraphy, and couplets (see Figure 7 and Table 1). The subsequent sections address the selection, classification, and partitioning of the data. Each scene type is treated as a primary category, with the text in each image designated as the detection target. This organization facilitates structured analysis and supports effective model training for recognizing and interpreting ancient texts in diverse and challenging environments. As there is currently no dedicated dataset for ancient Chinese scene text detection, the dataset proposed in this paper holds significant research value.

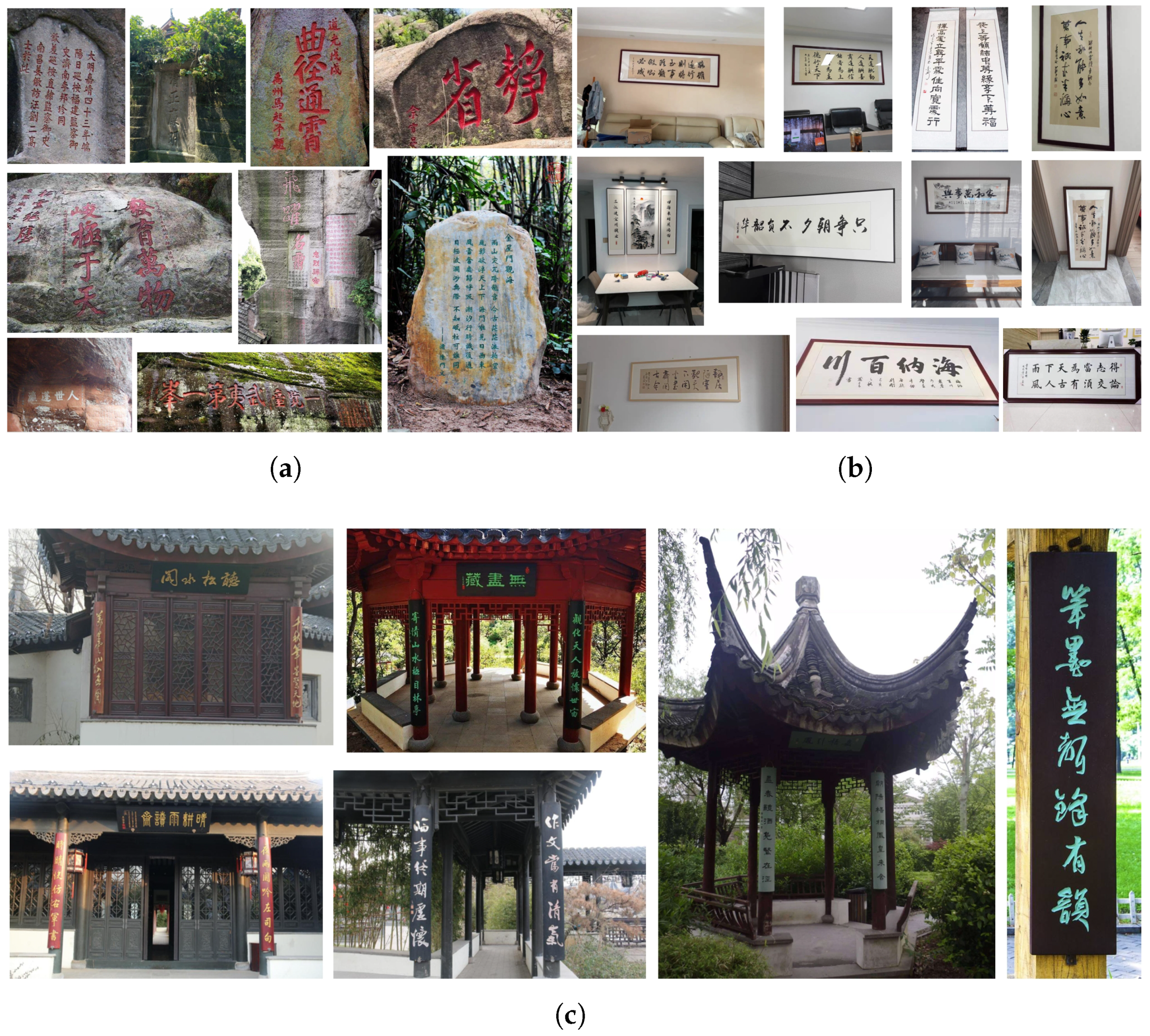

4.1.1. Dataset Collection and Annotation

The dataset includes scenes featuring various forms of ancient Chinese characters (such as stone carvings, calligraphy, and couplets), covering different font styles and scenes from ancient to modern times. In this paper, we filtered and supplemented the HUSAM-SinoCDCS dataset [14], resulting in a total of 2189 high-quality images (see Table 1), all captured on-site. All images were unified to a size of 750 × 1000. The scenes in the dataset include the Tianyi Pavilion in Ningbo, China, the Chinese Writing Museum in Anyang, China, and the local Chinese Writing Museum in Huzhou.

For the annotation of the dataset, we employed the PPOCRLabel tool to perform semi-automated processing, as illustrated in Figure 8. First, the tool automatically scanned and recognized all images. However, this automated annotation exhibited significant limitations on ancient Chinese scene text, particularly for categories such as stone inscriptions, and produced missed or incorrect detections. We therefore manually corrected and refined the results and then conducted a statistical analysis of annotation consistency (IoU > 0.95), as shown in Table 2. Through this two-step process, automated detection followed by manual refinement, we ensured high accuracy and quality in the dataset annotations, thereby enhancing its research value and reliability.

4.1.2. Data Collection Process

To ensure balanced distribution across scene categories, the dataset was randomly partitioned into separate training and testing sets using a 4:1 ratio. After partitioning, the final training set comprised 1750 images, and the test set contained 439 images.
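One way to obtain a random 4:1 split that keeps every scene category represented in both subsets is to shuffle and cut within each category. The text does not say whether the split was stratified in this way, so the sketch below is an assumption. Per-category rounding can also make the totals differ slightly from a single global cut. The function name and the `(image_id, category)` input format are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(items, train_ratio=0.8, seed=0):
    """items: list of (image_id, category); shuffle within each category and cut at train_ratio."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    by_category = defaultdict(list)
    for image_id, category in items:
        by_category[category].append(image_id)
    train, test = [], []
    for category in sorted(by_category):
        ids = by_category[category][:]
        rng.shuffle(ids)
        cut = round(len(ids) * train_ratio)
        train += [(i, category) for i in ids[:cut]]
        test += [(i, category) for i in ids[cut:]]
    return train, test
```

Fixing the seed makes the partition reproducible, which matters when the split is released together with the dataset.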

4.2. Evaluation Metrics

Evaluating a text detection model requires metrics that measure how accurately the detected text regions match the ground truth. Text detection tasks often use QuadMetric, which is specifically designed to evaluate the accuracy of detected text regions.

This paper uses QuadMetric, which reports precision, recall, and F1-score as the main evaluation metrics, to comprehensively assess the performance of the text detection model. These metrics are calculated by comparing the overlap between the text regions detected by the model and the ground-truth text regions:

$$\text{Precision} = \frac{TP}{TP + FP}, \quad \text{Recall} = \frac{TP}{TP + FN}, \quad F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP is the number of detected text regions that correctly match a ground-truth region, FP is the number of detected regions that do not match any ground-truth region, and FN is the number of ground-truth text regions that are missed.
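The metric computation can be sketched as a greedy one-to-one matching between detections and ground truth, followed by the three formulas above. Two simplifications are assumptions. Boxes are axis-aligned rectangles rather than the quadrilaterals QuadMetric compares. A detection counts as a true positive when its IoU with an unmatched ground-truth box is at least 0.5. The function names are hypothetical.

```python
def iou(a, b):
    """IoU of axis-aligned boxes (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detection_prf(preds, gts, iou_thr=0.5):
    """Greedy one-to-one matching; returns (precision, recall, f1)."""
    matched_gt = set()
    tp = 0
    for p in preds:
        best, best_iou = None, iou_thr
        for k, g in enumerate(gts):
            if k in matched_gt:
                continue  # each ground-truth box can be matched only once
            v = iou(p, g)
            if v >= best_iou:
                best, best_iou = k, v
        if best is not None:
            matched_gt.add(best)
            tp += 1
    fp = len(preds) - tp  # detections without a matching ground truth
    fn = len(gts) - tp    # ground-truth regions that were missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, with three ground-truth boxes and three detections, of which two overlap their targets sufficiently and one is spurious, precision, recall, and F1 are all 2/3.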

4.3. Implementation Details

The method in this paper was implemented using PyTorch 1.13, and all experiments were conducted on an NVIDIA RTX 4090 GPU (NVIDIA, Santa Clara, CA, USA) with 24 GB of memory. All models were trained for 800 epochs on the ACST dataset, with a batch size of 16 for training and 1 for testing. The network was trained end to end using the Adam optimizer with a learning rate of 1 × 10⁻⁴.

Data augmentation for the training data included the following: (1) random rotation within an angle range of (−10°, 10°); (2) random cropping; and (3) random flipping. All processed images were resized to 640 × 640 to improve training efficiency. During inference, we maintained the aspect ratio of the test images and resized the input images by adjusting the height appropriately.
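Geometric augmentations must be applied identically to the image and to its text polygons. The sketch below shows the label side for rotation, horizontal flipping, and resizing. It makes several assumptions. Polygon vertices are continuous (x, y) coordinates. Rotation is about the image centre with an angle drawn uniformly from [−10°, 10°]. Flipping happens with probability 0.5. Random cropping is omitted. All function names are hypothetical, and the image warping itself is not shown.

```python
import math
import random


def rotate_points(points, angle_deg, cx, cy):
    """Rotate (x, y) points by angle_deg about (cx, cy) (counter-clockwise in a y-up frame)."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    return [(cx + (x - cx) * cos_a - (y - cy) * sin_a,
             cy + (x - cx) * sin_a + (y - cy) * cos_a) for x, y in points]


def hflip_points(points, width):
    """Mirror continuous x coordinates for an image of the given width."""
    return [(width - x, y) for x, y in points]


def resize_points(points, sx, sy):
    """Scale coordinates, e.g. sx = 640 / width, sy = 640 / height."""
    return [(x * sx, y * sy) for x, y in points]


def augment_polygon(points, width, height, rng, max_angle=10.0):
    """Random rotation in [-max_angle, max_angle] degrees, then a coin-flip horizontal flip."""
    angle = rng.uniform(-max_angle, max_angle)
    pts = rotate_points(points, angle, width / 2.0, height / 2.0)
    if rng.random() < 0.5:
        pts = hflip_points(pts, width)
    return pts, angle
```

For a 750 × 1000 image resized to 640 × 640, the scale factors are 640/750 horizontally and 0.64 vertically. The image centre (375, 500) therefore maps to (320, 320).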

4.4. Comparison with Previous Methods

In Table 3, we compare several representative scene text detection methods with recent ancient Chinese character detection approaches, using the proposed ACST dataset as the sole evaluation benchmark. We also present detection results in Figure 9. The experimental results show that the proposed model achieved superior performance in the key evaluation metrics (recall, precision, and F1-score), outperforming most existing mainstream scene text detection methods. Although the model did not achieve the best results in terms of model size and inference speed, it maintained a good overall balance. These results indicate that the proposed model can effectively address the challenges posed by the ACST dataset.

It is worth noting that the ACST dataset exhibits a high degree of diversity and complexity, covering a wide range of challenging text scenarios. For instance, it contains significant variations in backgrounds, fonts, text sizes, and arrangement styles, and even includes some small-scale text instances. These factors pose serious challenges for many mainstream text detection algorithms, leading to a noticeable drop in performance. DRA-Net also faces limitations under such conditions, particularly in recall. Despite the integration of the adaptive text-region focus module (ATRFM), the model remains insufficiently sensitive to small-scale text and occasionally fails to detect fine-grained text regions. Nevertheless, DRA-Net still achieves a significant performance improvement over existing methods. By dynamically adjusting the feature fusion strategy, DRA-Net preserves deep semantic information while effectively capturing textual details. This is especially beneficial in scenarios involving fine-grained features and complex backgrounds, where the model achieves higher detection accuracy and maintains strong performance across the diverse scenes of the ACST dataset.

4.5. Ablation Experiment

4.5.1. Impact of Different Backbones

In ancient Chinese scene text detection, the aspect ratio of text instances, especially long ones, often varies to an extreme degree. To address this challenge, this paper introduces deformable convolution into the backbone. As shown in Table 4, the proposed model achieved better performance on the ACST dataset after deformable convolution was added. We also compared it with other backbones, and the results highlight the advantage of ResNet-18 with deformable convolution. The poorer performance of the other backbones may be attributed to the deeper ResNet models tending to focus more on background noise when extracting features; given the complex backgrounds in the ACST dataset, this affects these models more.

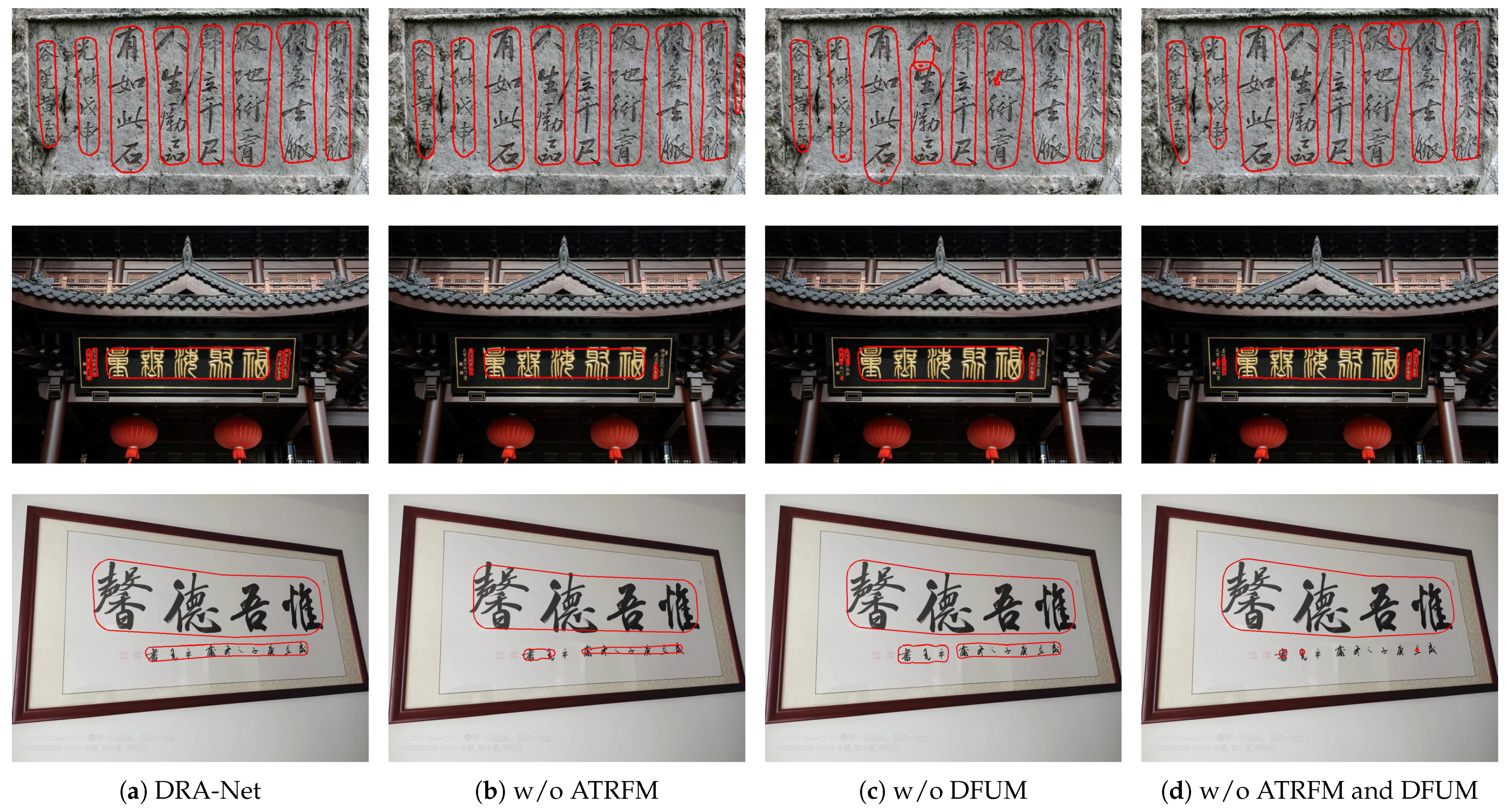

4.5.2. Impact of the DFUM

To verify the effectiveness of the proposed dynamic fusion upsampling module (DFUM), an ablation study was conducted, as shown in Table 5. In this experiment, the DFUM was removed from the model, and a comparison was performed under the same dataset and task settings. The results show that removing the DFUM led to a significant drop in key performance metrics, including recall, precision, and F1-score. Furthermore, failure cases are illustrated in Figure 10, showing that the absence of the DFUM resulted in missed detections and disconnected detection regions. These findings indicate that dynamically adjusting the feature fusion strategy substantially enhances the model’s ability to capture multi-level text features, particularly under diverse scenes and complex backgrounds, highlighting the critical role of the DFUM in robust text detection.

A further ablation study was conducted, as presented in Table 6, to evaluate the effectiveness of the sigmoid function in the gating mechanism. Specifically, we replaced the sigmoid function with the tanh function and observed a notable performance drop in DRA-Net. This decline is attributed to the fact that tanh maps its output to the range (−1, 1), which may introduce negative weights. Such negative weights can mistakenly suppress useful text features, especially in ancient text scenarios where the distinction between the foreground and background is often ambiguous, thus exacerbating feature confusion. In contrast, the sigmoid function, with its output constrained between 0 and 1, can be regarded as a form of normalized weighting that represents the relative importance of different feature maps. It enables the model to dynamically adjust the contribution of each feature without introducing negative interference.
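The effect of the gate's output range can be seen with a scalar example of the fusion rule g · high + (1 − g) · low. The activation values 0.2 and 1.0 and the logits are arbitrary illustrations. With sigmoid, the fused value always stays between the two inputs. With tanh, a negative logit yields a negative gate, so the high-level feature is subtracted and the fused value overshoots both inputs.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gated_fuse(high, low, logit, gate_fn):
    """Return (gate, fused) with fused = g * high + (1 - g) * low."""
    g = gate_fn(logit)
    return g, g * high + (1.0 - g) * low


if __name__ == "__main__":
    for logit in (-3.0, 0.0, 3.0):
        gs, fs = gated_fuse(0.2, 1.0, logit, sigmoid)
        gt, ft = gated_fuse(0.2, 1.0, logit, math.tanh)
        print(f"logit={logit:+.1f} sigmoid: g={gs:.3f}, fused={fs:.3f} | "
              f"tanh: g={gt:.3f}, fused={ft:.3f}")
```

At a logit of −3, tanh gives a gate of about −0.995 and a fused value of about 1.80, which lies outside the interval [0.2, 1.0] spanned by the inputs. This is the kind of negative interference the ablation attributes the tanh performance drop to.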

4.5.3. Impact of the ATRFM

To investigate the effectiveness of the proposed adaptive text-region focus module (ATRFM) in ancient Chinese scene text detection, we conducted ablation experiments, as shown in Table 5. Specifically, we compared model performance with and without the ATRFM while keeping the rest of the network architecture unchanged. Removing the ATRFM lowered recall from 72.9 to 71.6, precision from 82.8 to 82.7, and the F1-score from 77.5 to 76.7. This is the largest F1 drop among the single-module ablations. Further detection examples are illustrated in Figure 10, where the model without the ATRFM mistakenly detected non-text regions. This degradation indicates that, without the ATRFM, the model’s ability to focus on text regions is weakened. In particular, under complex backgrounds and blurred boundaries, the distinction between foreground text and background becomes less reliable. Ancient Chinese text scenes often involve intricate strokes, diverse font styles, and strong background noise. A model that cannot sufficiently exploit contextual information or adaptively attend to key regions is therefore more susceptible to background interference, resulting in confused or even missed detections.

We then conducted additional ablation experiments, shown in Table 7, in which we systematically varied the strip convolutional kernel size m to evaluate its contribution to the overall performance of DRA-Net. The best F1-score (77.5) is obtained with m = 9. Increasing the kernel size beyond 9 lowers the F1-score to 77.1, 76.7, and 77.2 for m = 11, 13, and 15, respectively. Reducing it to 7 lowers the F1-score to 76.3. We attribute the degradation at larger sizes primarily to background information: an excessively large kernel causes the ATRFM to capture too much of it, which in turn leads to confusion between the ancient texts and the background.
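The background-dilution effect can be illustrated with a toy example. The sketch below applies a uniform 1 × m strip kernel across a row containing a text stroke of width 3 on a zero background. The kernel is a hypothetical stand-in: the actual ATRFM strip convolutions have learned weights. In this toy, the peak response equals the share of the window covered by text, 3/m, so each increase in m mixes in more background. The sketch models only this side of the trade-off. It does not capture the benefit of longer kernels for covering elongated text lines, which is why it does not explain the drop at m = 7.

```python
from typing import List


def strip_conv_row(row: List[float], m: int) -> List[float]:
    """Uniform 1 x m strip convolution along one row (zero padding, stride 1).

    m must be odd so that each window is centred on its output position.
    """
    if m % 2 == 0:
        raise ValueError("m must be odd")
    half = m // 2
    out = []
    for x in range(len(row)):
        window = [row[i] if 0 <= i < len(row) else 0.0
                  for i in range(x - half, x + half + 1)]
        out.append(sum(window) / m)   # share of the window covered by text
    return out


def text_row(width: int = 31, stroke: int = 3) -> List[float]:
    """Background (0.0) with a centred text stroke (1.0) of the given width."""
    start = (width - stroke) // 2
    return [1.0 if start <= i < start + stroke else 0.0 for i in range(width)]


row = text_row()
for m in (7, 9, 11, 13, 15):
    peak = max(strip_conv_row(row, m))
    print(m, round(peak, 3))   # peak equals 3 / m: the text share shrinks as m grows
```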

4.5.4. Combined Effect of DFUM and ATRFM

To further verify the effectiveness and necessity of the proposed modules within the overall architecture, we conducted an ablation study in which we removed both the adaptive text-region focus module (ATRFM) and the dynamic fusion upsampling module (DFUM). This reduces the neck to a conventional feature pyramid network (FPN) structure and isolates the combined contribution of the two modules. As shown in Table 8, the degraded model performed worse on all three metrics. The drop is clearest in recall (71.2 vs. 72.9) and F1-score (76.5 vs. 77.5), while precision fell slightly from 82.8 to 82.5. Relevant detection examples are illustrated in Figure 10, where removing the two modules resulted in a clear drop in detection quality.

In contrast, the full version of DRA-Net, which integrates both the ATRFM and DFUM, performs best for ancient Chinese scene text detection. We attribute this mainly to two aspects. First, the multi-level feature fusion mechanism enables DRA-Net to effectively integrate semantic information across different spatial scales, narrowing the semantic gap between feature levels that traditional architectures often leave. Second, the region-focused attention strategy allows the model to concentrate on salient text regions during feature extraction, suppress background interference, and enhance the representation of fine-grained text features.
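For reference, the degraded baseline in Table 8 uses the standard FPN top-down pathway [9]. Each coarser map is upsampled by a factor of 2 with nearest-neighbour interpolation and added element-wise to the finer lateral map. Every level therefore contributes with a fixed, input-independent weight, in contrast to the gated fusion of the DFUM. The pure-Python sketch below shows only this merge step on single-channel maps, and its function names are illustrative. It omits the 1 × 1 lateral and 3 × 3 output convolutions of a full FPN.

```python
from typing import List

Grid = List[List[float]]


def upsample2x_nearest(x: Grid) -> Grid:
    """Nearest-neighbour upsampling by a factor of 2 in both directions."""
    out: Grid = []
    for row in x:
        wide = [v for v in row for _ in range(2)]   # repeat each column
        out.append(wide)
        out.append(list(wide))                      # repeat each row
    return out


def fpn_merge(coarse: Grid, lateral: Grid) -> Grid:
    """One top-down FPN step: upsample the coarser level and add the lateral level."""
    up = upsample2x_nearest(coarse)
    if len(up) != len(lateral) or len(up[0]) != len(lateral[0]):
        raise ValueError("lateral map must be twice the size of the coarse map")
    return [[u + lat for u, lat in zip(ur, lr)] for ur, lr in zip(up, lateral)]


def top_down(levels: List[Grid]) -> List[Grid]:
    """levels ordered fine -> coarse (e.g. 1/4, 1/8, 1/16, 1/32); returns merged maps."""
    merged = [levels[-1]]                 # the coarsest level passes through unchanged
    for lateral in reversed(levels[:-1]):
        merged.append(fpn_merge(merged[-1], lateral))
    return list(reversed(merged))         # back to fine -> coarse order
```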

5. Discussion

The proposed DRA-Net method improves ancient Chinese scene text detection, reaching a recall of 72.9%, a precision of 82.8%, and an F1-score of 77.5% on the ACST dataset. This outperforms the compared mainstream methods; the strongest baseline, DBNet++, reaches an F1-score of 73.3% (Table 3). We attribute this gain primarily to the synergy between the dynamic fusion upsampling module (DFUM) and the adaptive text-region focus module (ATRFM). The DFUM uses a gating mechanism to adaptively fuse high- and low-level features, mitigating the semantic information loss commonly observed in traditional feature pyramid structures. The ablation studies show that adding the DFUM improves model performance by reducing missed detections and fragmented text regions, particularly in scenarios with complex backgrounds. Using the sigmoid function rather than the tanh function avoids negative weights, which can suppress useful text features. This matters especially in ancient text scenarios, where the distinction between foreground and background is often ambiguous. The ATRFM, in turn, incorporates axial attention mechanisms and a shape calibration function to improve the model’s ability to localize text regions. Removing this module leads to false positives in non-text regions (Figure 10), underscoring its role in suppressing background interference. Further ablation studies on the strip convolutional kernel size indicate that an appropriately sized receptive field is needed to balance the capture of textual features against the suppression of background noise.
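Axial attention restricts self-attention to a single row and then a single column. This reduces the number of positions each pixel attends to from H × W to H + W (W in the row pass, H in the column pass). The sketch below is a generic single-head, single-channel version with identity query, key, and value projections, chosen to keep it short. It is not the ATRFM implementation, whose projections and shape calibration function are not specified in this section.

```python
import math
from typing import List

Grid = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)                                   # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend_1d(seq: List[float]) -> List[float]:
    """Scalar self-attention along one axis with q = k = v = the input values."""
    out = []
    for q in seq:
        weights = softmax([q * k for k in seq])   # similarity to every position on the axis
        out.append(sum(w * v for w, v in zip(weights, seq)))
    return out


def axial_attention(x: Grid) -> Grid:
    """Row-wise attention followed by column-wise attention on an H x W map."""
    rows = [attend_1d(r) for r in x]
    cols = [attend_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]          # transpose back to H x W
```

Each output is a convex combination of values along its row and then its column. The map is therefore smoothed along the two text directions (horizontal and vertical) rather than over an isotropic neighbourhood.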

Notably, the model achieves the highest precision among the compared methods (82.8%). This matters for the digitization of ancient documents, because false positives may lead to incorrect textual interpretations. However, its recall is relatively lower (72.9%), which we attribute primarily to the model’s limited ability to detect small-scale text instances. In ancient Chinese scene images, such small-scale text often appears in marginal annotations or decorative inscriptions. This text is characterized by small size, blurred boundaries, and interference from complex backgrounds, and it tends to be weakened or even lost during feature processing. Addressing this limitation remains a critical direction for future work.
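The three metrics are linked: the F1-score is the harmonic mean of precision P and recall R, F1 = 2PR/(P + R). Because the harmonic mean is pulled toward the smaller value, the lower recall limits the F1-score more than the high precision raises it. Recomputing F1 from the precision and recall in Table 3 is a quick consistency check.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and output in the same units)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall in percent, taken from Table 3.
print(round(f1_score(82.8, 72.9), 1))   # DRA-Net: 77.5
print(round(f1_score(79.5, 68.0), 1))   # DBNet++: 73.3
```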

In addition, the ACST dataset fills a notable gap in ancient Chinese scene text detection. However, the PPOCRLabel tool shows clear limitations in labeling ancient texts. This is especially true for the stone inscription category, whose manual change rate reached 77.39% (Table 2). These limitations highlight the complexity of ancient scripts and the higher demands they place on existing annotation tools. The dataset’s current limitations in geographical diversity and scale also indicate areas for further expansion in future research.
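Table 2 compares automatic PPOCRLabel boxes with the final manual boxes at IoU > 0.95. The formula for the change rate is not stated in this section. The reported values are consistent with change rate = 1 − matched / manual labels (e.g., 1 − 609/2693 ≈ 77.39% for stone inscriptions). The sketch below rests on two assumptions: the boxes are axis-aligned rectangles (the annotation format listed in Table 1), and matching is greedy and one-to-one. This is not necessarily the authors’ procedure.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned rectangles."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(auto: List[Box], manual: List[Box], thr: float = 0.95) -> int:
    """Assumed greedy one-to-one matching: each manual box takes the best unused auto box."""
    used = set()
    matched = 0
    for m in manual:
        best: Optional[int] = None
        best_iou = thr                    # a match must exceed the threshold strictly
        for i, a in enumerate(auto):
            if i not in used:
                v = iou(a, m)
                if v > best_iou:
                    best, best_iou = i, v
        if best is not None:
            used.add(best)
            matched += 1
    return matched


def change_rate(matched: int, manual: int) -> float:
    """Percentage of manual labels without a matching automatic label (assumed formula)."""
    return 100.0 * (1.0 - matched / manual)


# (matched count, manual labels) from Table 2.
for name, matched, manual in [("Stone Inscriptions", 609, 2693),
                              ("Calligraphy", 1471, 3491),
                              ("Couplets", 1382, 2572),
                              ("All", 3462, 8756)]:
    print(name, f"{change_rate(matched, manual):.2f}")
```

Under this reading, the output reproduces the Manual Change Rate column of Table 2.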

The technical contributions of this study not only enhance ancient text detection performance but also show potential for broader applications. Specifically, the proposed dynamic feature fusion strategy and region-focused mechanism could be adapted to other scene text detection tasks involving complex backgrounds. Future work could explore more efficient multi-scale feature fusion strategies, develop end-to-end detection and recognition systems, and investigate few-shot learning approaches to reduce the dependence of ancient text detection on large-scale annotated datasets.

6. Conclusions

This paper proposes a novel method—the dynamic feature fusion upsampling and text-region focus network (DRA-Net)—for ancient Chinese scene text detection, which effectively addresses multiple challenges in this domain, including background interference and extreme aspect ratios. First, by introducing the dynamic fusion upsampling module, DRA-Net achieves dynamically weighted fusion of multi-scale features, thereby mitigating common issues, such as information loss and degradation, during multi-level feature propagation. Second, the adaptive text-region focus module leverages axial attention mechanisms to perform directional modeling of text regions, guiding the network to more accurately focus on text regions and alleviating the confusion between ancient texts and complex backgrounds. In addition, considering the unique characteristics of ancient Chinese text, we constructed a comprehensive dataset containing a wide variety of scene instances across multiple historical periods, filling the gap left by existing public datasets in the field of ancient Chinese scene text detection. Experimental results demonstrate that the proposed DRA-Net method achieves superior performance in detecting ancient Chinese text under complex scene conditions, outperforming previous detection methods and validating its effectiveness and feasibility. Future work may focus on further optimizing the model architecture, exploring more advanced and adaptive feature fusion strategies, and expanding the scale and diversity of the dataset to advance the digital preservation of ancient Chinese texts and support ongoing research in cultural heritage conservation.

Author Contributions

Funding acquisition, H.Q.; Investigation, Y.W. and C.F.; Methodology, Q.X. and C.Z.; Supervision, C.Z., Q.L., and H.Q.; Validation, Y.W., C.F., H.Y., and Q.L.; Writing—original draft, Q.X.; Writing—review and editing, Q.X., C.Z., and H.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Postgraduate Research and Innovation Project of Huzhou University (2025KYCX54), the Chaomi S&T Company Cooperation Project (HK16003), and the Zhejiang Key R&D Plan (2017C03047).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors would like to thank Huzhou University for its support.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ye, Q.; Doermann, D. Text detection and recognition in imagery: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1480–1500.

2. Liao, M.; Shi, B.; Bai, X. TextBoxes++: A single-shot oriented scene text detector. IEEE Trans. Image Process. 2018, 27, 3676–3690.

3. Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. EAST: An efficient and accurate scene text detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5551–5560.

4. Wang, W.; Xie, E.; Li, X.; Hou, W.; Lu, T.; Yu, G.; Shao, S. Shape robust text detection with progressive scale expansion network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9336–9345.

5. Liao, M.; Wan, Z.; Yao, C.; Chen, K.; Bai, X. Real-time scene text detection with differentiable binarization. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11474–11481.

6. Liao, M.; Zou, Z.; Wan, Z.; Yao, C.; Bai, X. Real-time scene text detection with differentiable binarization and adaptive scale fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 919–931.

7. Baek, Y.; Lee, B.; Han, D.; Yun, S.; Lee, H. Character region awareness for text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9365–9374.

8. Wang, W.; Xie, E.; Song, X.; Zang, Y.; Wang, W.; Lu, T.; Yu, G.; Shen, C. Efficient and accurate arbitrary-shaped text detection with pixel aggregation network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8440–8449.

9. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

10. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

11. Kirillov, A.; Girshick, R.; He, K.; Dollár, P. Panoptic feature pyramid networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6399–6408.

12. Qiao, S.; Chen, L.C.; Yuille, A. DetectoRS: Detecting objects with recursive feature pyramid and switchable atrous convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10213–10224.

13. Yang, H.; Jin, L.; Huang, W.; Yang, Z.; Lai, S.; Sun, J. Dense and tight detection of Chinese characters in historical documents: Datasets and a recognition guided detector. IEEE Access 2018, 6, 30174–30183.

14. Qi, H.; Yang, H.; Wang, Z.; Ye, J.; Xin, Q.; Zhang, C.; Lang, Q. AncientGlyphNet: An advanced deep learning framework for detecting ancient Chinese characters in complex scene. Artif. Intell. Rev. 2025, 58, 88.

15. Neumann, L.; Matas, J. Real-time scene text localization and recognition. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3538–3545.

16. Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Reading text in the wild with convolutional neural networks. Int. J. Comput. Vis. 2016, 116, 1–20.

17. Liao, M.; Shi, B.; Bai, X.; Wang, X.; Liu, W. TextBoxes: A fast text detector with a single deep neural network. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

18. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision, Proceedings of the ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14; Springer: Cham, Switzerland, 2016; pp. 21–37.

19. Liu, Y.; Jin, L. Deep matching prior network: Toward tighter multi-oriented text detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1962–1969.

20. He, P.; Huang, W.; He, T.; Zhu, Q.; Qiao, Y.; Li, X. Single shot text detector with regional attention. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3047–3055.

21. Liao, M.; Zhu, Z.; Shi, B.; Xia, G.S.; Bai, X. Rotation-sensitive regression for oriented scene text detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5909–5918.

22. Dai, P.; Zhang, S.; Zhang, H.; Cao, X. Progressive contour regression for arbitrary-shape scene text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7393–7402.

23. Liu, Y.; Chen, H.; Shen, C.; He, T.; Jin, L.; Wang, L. ABCNet: Real-time scene text spotting with adaptive Bezier-curve network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9809–9818.

24. Zhu, Y.; Chen, J.; Liang, L.; Kuang, Z.; Jin, L.; Zhang, W. Fourier contour embedding for arbitrary-shaped text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3123–3131.

25. Ye, M.; Zhang, J.; Zhao, S.; Liu, J.; Du, B.; Tao, D. DPText-DETR: Towards better scene text detection with dynamic points in transformer. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 3241–3249.

26. Li, J.; Lin, Y.; Liu, R.; Ho, C.M.; Shi, H. RSCA: Real-time segmentation-based context-aware scene text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2349–2358.

27. Hassan, E. Scene text detection using attention with depthwise separable convolutions. Appl. Sci. 2022, 12, 6425.

28. Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

29. Zhang, D.; Zhang, H.; Tang, J.; Wang, M.; Hua, X.; Sun, Q. Feature pyramid transformer. In Computer Vision, Proceedings of the ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXVIII 16; Springer: Cham, Switzerland, 2020; pp. 323–339.

30. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention, Proceedings of the MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241.

31. Vatti, B.R. A generic solution to polygon clipping. Commun. ACM 1992, 35, 56–63.

32. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

Figure 1.

Ancient Chinese texts in different scenes.

Figure 2.

Architecture of the proposed method, where “1/2”, “1/4”, “1/8”, …, “1/32” represent the scaling ratios relative to the input image.

Figure 3.

Adaptive text-region focus module (ATRFM).

Figure 4.

Dynamic fusion upsampling module (DFUM).

Figure 5.

Generation of the probability map p.

Figure 6.

Generation of the threshold map T.

Figure 7.

Some examples from the ACST dataset: (a) stone inscriptions, (b) calligraphy, (c) couplets.

Figure 8.

Annotation process of the ACST dataset.

Figure 9.

Detection examples of DRA-Net in different scenes: (a) stone inscriptions, (b) calligraphy, (c) couplets.

Figure 10.

Ablation experiments on DRA-Net.

Table 1.

Data distribution across different scenarios.

| Scene Category | Text Instances by Orientation (Vertical/Horizontal) | Text Density | Annotation Format | Scene Type | Image Count |
|---|---|---|---|---|---|
| Stone Inscriptions | 2393/300 | 7.01 | Rectangle | Outdoor | 384 |
| Calligraphy | 3036/455 | 4.69 | Rectangle | Indoor | 920 |
| Couplets | 1657/915 | 2.91 | Rectangle | Outdoor | 885 |
| All | 7086/1670 | 4.00 | Rectangle | Mixed | 2189 |

Table 2.

Results of the statistical analysis of annotation consistency (IoU > 0.95).

| Scene Category | Auto Labels | Manual Labels | Matched Count | Manual Change Rate (%) |
|---|---|---|---|---|
| Stone Inscriptions | 2403 | 2693 | 609 | 77.39 |
| Calligraphy | 2711 | 3491 | 1471 | 57.86 |
| Couplets | 2367 | 2572 | 1382 | 46.27 |
| All | 7481 | 8756 | 3462 | 60.46 |

Table 3.

Comparison with previous methods. (Bold indicates the best data performance).

| Method | Recall (%) | Precision (%) | F1-Score (%) | FPS | Params (M) |
|---|---|---|---|---|---|
| Mask R-CNN [32] | 68.6 | 53.1 | 58.4 | 5.7 | 43.7 |
| PSENet [4] | 72.0 | 61.9 | 66.5 | 6.9 | 29.2 |
| DBNet [5] | 63.2 | 77.7 | 69.7 | 18.0 | 12.4 |
| FCENet [24] | 50.3 | 46.2 | 48.1 | 2.2 | 26.3 |
| DBNet++ [6] | 68.0 | 79.5 | 73.3 | 18.4 | 12.7 |
| AncientGlyphNet [14] | 34.5 | 65.8 | 45.3 | 29.8 | 24.8 |
| Ours | 72.9 | 82.8 | 77.5 | 18.0 | 13.6 |

Table 4.

Impact of different backbones. (Bold indicates the best data performance).

| Backbone | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|
| ResNet-18 | 63.2 | 77.7 | 69.7 |
| ResNet-34 | 43.6 | 67.7 | 53.0 |
| ResNet-50 | 67.1 | 75.3 | 70.9 |
| ResNet-101 | 39.5 | 63.8 | 48.8 |
| ResNet-152 | 34.3 | 73.7 | 46.8 |
| MobileNetV3 | 60.9 | 82.8 | 70.2 |
| Ours | 72.9 | 82.8 | 77.5 |

Table 5.

Ablation study of DRA-Net. (“w/o” denotes the removal of the specified component while keeping the rest of the architecture unchanged, Bold indicates the best data performance).

| Method | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|
| w/o DFUM | 72.0 | 82.6 | 77.0 |
| w/o ATRFM | 71.6 | 82.7 | 76.7 |
| Ours | 72.9 | 82.8 | 77.5 |

Table 6.

Ablation study of the DFUM. (Bold indicates the best data performance).

| Activation Function | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|
| Tanh | 71.1 | 84.2 | 77.1 |
| Sigmoid | 72.9 | 82.8 | 77.5 |

Table 7.

Ablation study of the ATRFM, where m denotes the size of the strip convolutional kernel. (Bold indicates the best data performance).

| m | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|
| 15 | 73.6 | 81.1 | 77.2 |
| 13 | 69.5 | 85.8 | 76.7 |
| 11 | 72.6 | 82.1 | 77.1 |
| 9 | 72.9 | 82.8 | 77.5 |
| 7 | 70.7 | 82.8 | 76.3 |

Table 8.

Impact of different necks on DRA-Net performance. (Bold indicates the best data performance).

| Neck | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|
| FPN | 71.2 | 82.5 | 76.5 |
| Ours | 72.9 | 82.8 | 77.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).