1. Introduction

Human–machine collaboration (HMC) refers to the synergistic partnership between humans and machines, where both entities work together to achieve shared goals by leveraging their respective strengths [1]. This collaboration includes scenarios where humans oversee and refine artificial intelligence (AI) outputs, combining human adaptability with machine precision in manufacturing processes, or integrating human intuition with machine speed in data analysis. The essence of HMC lies in creating a partnership that surpasses the capabilities of humans or machines operating independently. While, initially, research tended to emphasize technological efficiency and machine capabilities to make production more effective, recent years have seen a notable shift towards human centricity [2]. Influenced by broader trends in human-focused design, contemporary HMC prioritizes human experience, values, and empowerment, ensuring that technology enhances human capabilities and places human needs at the core of collaboration.

Multimodal AI significantly enriches HMC by enabling more natural, intuitive, and effective interactions through multiple communication channels, such as text, speech, gestures, images, and videos [3]. By combining diverse modalities, multimodal AI systems can better understand human intentions, context, and emotions, enhancing the depth and quality of collaboration. In the context of human-centric HMC, multimodal AI aligns technology more closely with human behaviors and cognitive processes, enabling machines to adapt to users’ preferred modes of communication. This fosters smoother interactions, greater trust, and improved decision making, ultimately resulting in more effective, responsive, and personalized human–machine partnerships.

Despite this promise, previous work in HMC has often been limited to either unimodal interactions or narrowly defined benchmarks, focusing mainly on performance efficiency while overlooking human-centric criteria such as trust, explainability, or usability. These limitations create a gap in understanding how multimodal AI can be systematically embedded into real-world HMC systems that balance autonomy with human oversight.

Multimodal large language models (MLLMs) [4] represent a key advancement in HMC, combining text, images, audio, and more to create systems that understand and interact in ways more aligned with human cognition. These models enhance human-centric HMC by adapting to multiple forms of human communication, improving both user experience and machine capabilities. As a result, MLLMs enable more intuitive, responsive, and personalized collaborations. As a subset of MLLMs, vision–language models (VLMs) [4] offer a focused approach by aligning visual perception with natural-language understanding. They improve transparency, responsiveness, and personalization in collaborative tasks, making them especially promising for explainable and context-aware autonomy. However, challenges remain in systematically embedding VLMs into HMC systems and aligning them with real-world human needs. This study addresses that gap by proposing a reference architecture and evaluating its effectiveness in enhancing explainability and task performance in practical HMC scenarios.

This study aims to bridge existing gaps by specifically exploring how emerging multimodal AI techniques—particularly MLLMs—can be effectively leveraged to foster a more intuitive, human-centric approach to developing HMC applications, demonstrated by our UAV experimental use case. Achieving this goal requires systematic design principles that ensure transparency, adaptability, and effective integration within HMC systems. It also calls for empirical validation to assess the impact of multimodal AI on both the explainability and the performance of collaborative tasks in real-world scenarios. Consequently, this research study is guided by two hypothesis-driven questions:

Can a reference multimodal AI architecture be designed to support transparent, adaptable, and human-centric collaboration in real-time UAV navigation?

Does embedding vision–language models within UAV control pipelines significantly enhance explainability and task performance in complex, dynamic scenarios?

To address these questions, this study proposes a reference multimodal AI–HMC architecture derived from a literature review and theoretical analysis. We empirically validate our experimental use case with a focus on VLMs in UAV navigation, as drones are increasingly used in safety-critical tasks and unstructured and dynamic environments, where explainable and adaptive autonomy is essential. Their constrained controls and strict safety requirements also make them a suitable testbed for human-in-the-loop collaboration. By conducting this focused evaluation, we aim to demonstrate the practical utility of our use case, providing insights and guidelines to enhance the development and integration of MLLMs. Ultimately, this work contributes to ensuring that technological advancements meaningfully enrich human–machine interactions, prioritizing both effectiveness and human well-being in collaborative environments.

Despite prior conceptual efforts and limited evaluation frameworks, few studies have implemented and validated a fully operational multimodal HMC system. To the best of our knowledge, this work is the first to implement and evaluate an experimental multimodal AI-HMC system that combines vision–language reasoning with real-time drone control in a controlled laboratory environment. This hands-on deployment advances the field by translating theoretical evaluation approaches into a tangible AI-HMC system, demonstrating how design principles can be operationalized in practice.

2. Related Work

Human centricity lies at the core of emerging technological paradigms, emphasizing the prioritization of human well-being, creativity, and agency in industrial innovation. Building on this foundation, Industry 5.0 represents a shift from purely technology-driven processes toward synergistic collaboration between humans and machines, aiming to boost productivity while safeguarding human well-being and experience [5]. This evolution builds upon concepts such as Operator 4.0, where technologies such as augmented reality, wearables, and cognitive support tools are employed to enhance the skills and decision-making capabilities of industrial workers [6,7]. To fully leverage the potential of Industry 5.0, several studies highlight the necessity of establishing clear human-centric metrics and evaluation criteria, ensuring that technology integration genuinely prioritizes human values, cognitive capacities, and user experience [8,9]. While some evaluation frameworks for human–machine collaboration exist, they often focus on performance or task success rather than human-centric aspects such as well-being, trust, or cognitive workload. For example, Coronado et al. [10] proposed models addressing human factors in human–robot interaction (HRI), and Verna et al. [11] introduced a tool combining product quality analysis with stress-based well-being metrics. Hopko et al. [12] also highlighted trust and cognitive load as key factors in shared human–robot spaces. However, such evaluations are rarely applied to systems using vision–language models or multimodal AI, particularly in real-world UAV contexts. This paper addresses the research gap in the systematic design and development of effective human-centric HMC applications. Previous studies that have explored this challenge [8,10,13,14,15,16] have identified key barriers in defining consistent guidelines for the design, implementation, and assessment of HMC systems that place human needs at the center. In our earlier work [17], we introduced a human-centric reference framework for HMC, involving heterogeneous robots (such as unmanned ground and aerial vehicles and cobots) collaborating in a shared workspace using various HRI techniques and enabling technologies.

Multimodal AI, particularly MLLMs, has emerged as a transformative approach to advancing HMC by integrating diverse data modalities, such as vision, language, and action [3]. A prominent class of MLLMs is vision–language models (VLMs) [18], which combine visual and textual understanding to enable systems to interpret and respond to complex human inputs more naturally and contextually. These capabilities are particularly valuable in dynamic industrial environments, where interpreting multimodal cues is critical. While MLLMs form the foundation of this progress, their effectiveness is often amplified through integration with other key technologies, such as large language models (LLMs) [19], deep reinforcement learning (DRL) [20], and augmented reality (AR) interfaces [21], which together enhance robotic perception, decision making, and interaction. Frameworks leveraging multimodal AI aim to support seamless collaboration between humans and machines by processing verbal commands, visual cues, and environmental context in real time. Despite these advances, the full impact of MLLMs on human-centric evaluation and user experience in HMC remains underexplored. For instance, Faggioli et al. [22] investigated how LLMs can assist human assessors in HMC scenarios while also highlighting associated risks. Similarly, other studies have shown that integrating LLMs into collaborative robotic tasks, such as robot arm control, can significantly enhance human trust [23].

Multimodal AI has also been used to build personalized human-centered systems such as LangWare [24], which leverages LLMs to provide natural-language feedback to users and focuses on evaluating collaboration quality and subjective user ratings. To strengthen robotic cognition capabilities in an unstructured environment, the authors in [25] modeled a collaborative manufacturing system framework comprising a mixed reality head-mounted display, deep reinforcement learning, and a vision–language model, and evaluated its performance. Similarly, the GPT-4 language model was used to decompose commands into sequences of motions that can be executed by a robot [26]. LLMs have also been studied for use in remanufacturing [27]. When evaluating these MLLM use cases, previous studies often focused on performance and task success, omitting human aspects.

Vision–language models (VLMs) are increasingly being applied in UAV and robotic systems to enhance autonomy, safety, contextual reasoning, and human–machine interaction through natural language. As summarized in Table 1, existing UAV vision–language navigation (VLN) studies differ significantly in methodology, evaluation focus, and degree of real-world validation. For example, Liu et al. [28] introduced AerialVLN, a vision-and-language navigation framework specifically designed for UAVs, which enables drones to interpret visual scenes and follow natural-language instructions for aerial trajectory planning. More recently, Chen et al. [29] proposed GRaD-Nav++, a lightweight vision–language–action framework trained in a photorealistic simulator that runs fully onboard drones and follows natural-language commands in real time. In the domain of aerial visual understanding, benchmarks such as HRVQA [30] have been developed to advance research in visual question answering with high-resolution aerial imagery. Recent VLN-specific advances for UAVs include UAV-VLN [31], an end-to-end vision–language navigation framework that integrates LLM-based parsing of instructions with visual detection and planning for semantically grounded aerial trajectory generation. Similarly, Wang et al. [32] presented a realistic UAV vision–language navigation benchmark using the OpenUAV simulation platform along with assistant-guided tasks to better capture the complexity of realistic aerial VLN challenges. Across studies, human-centric factors such as trust, cognitive load, or explanation clarity remain underexplored, especially in UAV contexts. Our work extends this direction by integrating vision–language reasoning into a real-time UAV platform and empirically evaluating human-centric outcomes such as safety, usability, trust, and explainability in collaborative scenarios.

While previous research has contributed frameworks and metrics for evaluating HMC, these approaches have limited real-world validation, especially involving users. Moreover, many do not fully leverage the capabilities of multimodal AI to explore human-centric aspects. In contrast, our research introduces a reference multimodal AI–HMC architecture that helps systematize the development and design of these applications, applying it to our real-world laboratory use case and evaluating it based on human-centric criteria, increasing trust. This approach builds upon existing methodologies and also extends some of them by emphasizing human-centric factors while underscoring the importance of multimodal AI, especially VLMs, for enhancing these human-centric applications.

3. Proposed Multimodal AI-HMC System Architecture and Use Case Implementation

This chapter introduces the architectural foundation and practical implementation of the proposed HMC system powered by multimodal AI. First, we present a reference multimodal AI-HMC system architecture that defines the modular structure, data flow, and integration principles necessary for deploying intelligent, human-centric systems. This framework serves as a blueprint for combining perception, reasoning, and interaction in a unified HMC pipeline. Building on this foundation, we describe a particular multimodal AI-HMC system architecture implemented in a laboratory setting. This platform features a VLM-powered UAV use case, integrating depth estimation, language-based reasoning, and real-time human feedback. The implementation demonstrates how the reference architecture can be realized in practice and forms the basis for the evaluation presented in subsequent sections.

3.1. Reference Multimodal AI-HMC System Architecture

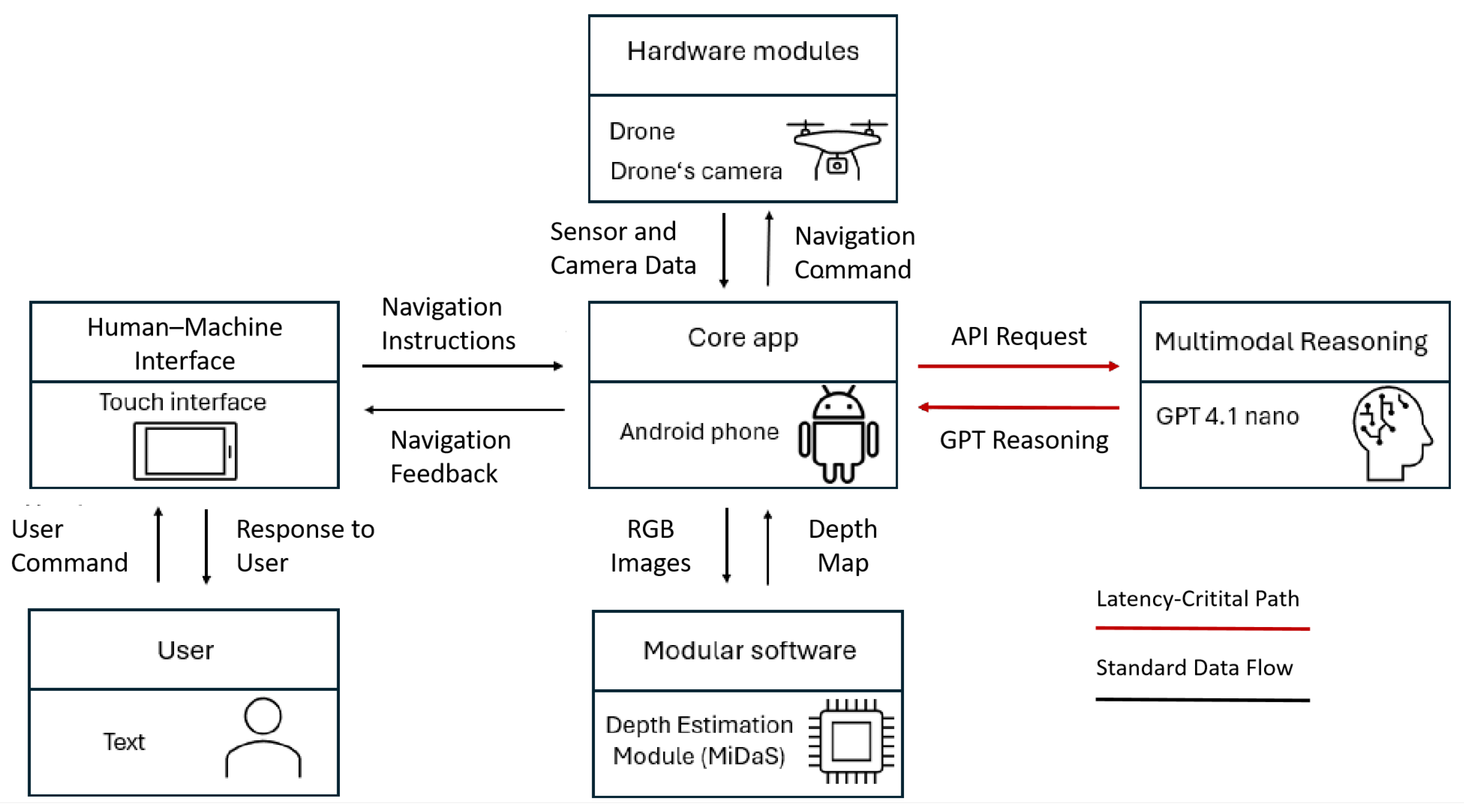

The reference multimodal AI-HMC system architecture shown in Figure 1 consists of five distinct but interrelated modules:

- (i) The core application, which operates on a computing platform such as a smartphone or desktop, acts as the central control hub. It facilitates two-way communication between the different modules, ranging from hardware components and software services to AI reasoning engines and user interfaces. This component processes incoming commands, initiates reasoning workflows, manages execution routines, and maintains a continuous feedback cycle within the HMC framework.

- (ii) The human–machine interface (HMI) serves as the main interaction channel for users. Whether implemented through extended reality (XR), natural input methods, or conventional interfaces like web or touchscreen displays, this module gathers various forms of user input (e.g., speech, touch, and gestures) and translates them into structured data formats. It also delivers system feedback to users in an understandable and accessible manner, effectively closing the communication loop.

- (iii) The modular software stack contains all AI and service components, including locally hosted inference engines and cloud-based APIs. These software elements are designed as modular and callable services, enabling tasks such as reasoning, classification, or control to be dynamically activated via standard interface protocols.

- (iv) The hardware layer consists of physical devices (such as sensors, robotic systems, and actuators) that handle environmental perception and task execution. Sensor data are transmitted to the core application for processing, while instructions (e.g., for movement or manipulation) are routed back to the hardware. Bidirectional communication in this layer ensures responsive, real-time behavior in changing environments.

- (v) The multimodal reasoning module, typically powered by MLLMs, provides advanced cognitive processing. Accessed through API calls or messaging protocols, this module processes complex, semantically rich inputs (including images, metadata, and textual prompts) to generate outputs like recommendations, path plans, or explanations. These results are integrated into the core application and presented to users for validation or further action.

Data transfer within the system is managed using a hybrid model that combines event-based triggers with request–response interactions. When a user initiates a command, the interface parses it, and the core application determines the appropriate processing route. Environmental inputs and system responses are continuously exchanged, enabling adaptive behavior based on context. This architecture emphasizes flexibility and modular design, ensuring that components can be reused, tested, or replaced across different platforms and use cases. By bringing together perception, reasoning, and execution in a coordinated manner, the system enables intuitive and intelligent cooperation between human users and autonomous machines, bridging digital inputs and real-world actions.
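The hybrid data-transfer model described above can be illustrated with a minimal Python sketch. It is not the implementation used in this work: the class name `CoreApplication`, the topic name `obstacle_detected`, and the stub reasoning service are all hypothetical and serve only to show how event-based triggers and request–response calls can coexist in one hub.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class CoreApplication:
    """Toy control hub combining event triggers with request-response calls."""

    def __init__(self) -> None:
        # topic name -> handlers invoked whenever an event is published
        self._handlers: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)
        # service name -> callable that returns a response for a request
        self._services: Dict[str, Callable[[Any], Any]] = {}

    def subscribe(self, topic: str, handler: Callable[[Any], None]) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> None:
        """Event-based path: fan the payload out to every subscriber."""
        for handler in self._handlers[topic]:
            handler(payload)

    def register_service(self, name: str, service: Callable[[Any], Any]) -> None:
        self._services[name] = service

    def request(self, name: str, payload: Any) -> Any:
        """Request-response path: call one service and return its answer."""
        return self._services[name](payload)


# Wiring example (hypothetical names): a sensor event triggers a reasoning
# request, and the answer is queued for the operator to review.
app = CoreApplication()
pending_review: List[Any] = []
app.register_service("reasoning", lambda frame: {"plan": ["move_right"], "frame": frame})
app.subscribe(
    "obstacle_detected",
    lambda frame: pending_review.append(app.request("reasoning", frame)),
)
app.publish("obstacle_detected", "frame-001")
```

Because modules only meet through topic and service names, any one of them can be swapped for a test double, which is the reuse and replaceability property the architecture aims for.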

3.2. Use Case Implementation: Multimodal AI-HMC System for UAV Navigation

This section presents the implementation details of a practical AI-HMC system developed to assess the proposed architectural approach in a controlled laboratory environment. The use case, centered on a UAV navigation scenario, is grounded in the previously introduced reference architecture and serves to demonstrate how its key components can be realized in practice. The system integrates elements such as the core application, user interface, multimodal reasoning module, software tools, and hardware platform to enable intelligent, user-directed navigation by the operator. By combining real-time depth estimation, visual perception, and natural-language-based decision making powered by GPT-4.1 [33], the platform offers a test environment for evaluating responsiveness, usability, and human-centered interaction in collaborative tasks. The overall lab platform layout is illustrated in Figure 2.

The lab platform architecture consists of the following components:

- (i) The core application was developed in the Kotlin programming language, leveraging its full compatibility with the DJI Mobile SDK V5, which enables precise control over the drone, including direct manipulation through virtual stick input. The system was deployed on a commercially available Android smartphone powered by a Snapdragon 778G octa-core processor (up to 2.4 GHz), 6 GB RAM, and an Adreno 642L GPU, running Android 12. This mobile platform was capable of handling sensor data processing, user interface interaction, and communication with external services such as the GPT-based reasoning engine.

- (ii) The Depth Estimation Module utilized the MiDaS 2.1 v21 small 256 neural network, integrated as a standalone component using ONNX Runtime for on-device inference. MiDaS was selected for its robust generalization in single-image depth estimation across varied environments and lighting. High-resolution 4K RGB images from the drone were resized to 256 × 192 pixels to ensure efficient processing on mobile hardware. The resulting depth maps were computed in real time and used for environmental understanding and obstacle detection. We employed a lighter version of MiDaS because it balances accuracy with computational efficiency, enabling real-time inference on mobile devices. Its use was particularly important for obstacle detection, as the DJI Mobile SDK does not provide full access to all onboard obstacle detection sensors of the DJI Mini 3 Pro, making monocular depth estimation the most practical option. While heavier MiDaS variants deliver higher accuracy, they would have exceeded the available hardware capacity and introduced unacceptable latency. The lighter model, therefore, represented the best compromise between robustness and efficiency under our platform constraints.

- (iii) The multimodal reasoning component relied on the GPT-4.1-nano model, accessed through a remote HTTP API. Due to its computational intensity, reasoning was offloaded to a cloud-based environment. The core application transmitted a combination of base64-encoded images, summarized depth data, and relevant metadata to the reasoning module, which then returned structured responses. These included stepwise navigation commands and natural-language explanations. The responses were parsed by the core application before execution. While remote deployment offered scalability, it introduced latency challenges, which are further examined in the Evaluation Section. We selected GPT-4.1-nano because it provides a balance between reasoning capability and response latency, making it suitable for real-time UAV navigation with human oversight. Other GPT variants would have offered higher accuracy but at the cost of slower responses and greater resource demands. The nano model, therefore, represented the best compromise for integrating multimodal reasoning and natural-language explanations under our experimental constraints.

- (iv) The hardware layer comprised the DJI Mini 3 Pro UAV, chosen for its compact design, stable gimbal-mounted RGB camera, and compatibility with SDK-based control. The camera supplied visual data for depth inference and scene interpretation. The UAV maintained a wireless link to the Android control device via Wi-Fi, enabling continuous transmission of telemetry data and command instructions during flight operations.

- (v) The human–machine interface was built as a touch-based application on the Android device. It allowed users to define navigation targets in 0.5 m increments and confirm or reject AI-generated plans for obstacle avoidance. The interface featured a live video stream, control buttons for command execution, safety mechanisms such as emergency stop, and clear visual outputs for user comprehension. It also managed interaction workflows, including target confirmation and fallback procedures.
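The request that the core application sends to the reasoning module in item (iii) could be assembled roughly as follows. This is only a sketch: the text states that base64-encoded images, summarized depth data, and metadata are transmitted, but the JSON layout and every field name below (`image_base64`, `depth_summary`, `target_offset_m`) are assumptions made for illustration, not the actual wire format.

```python
import base64
import json
from typing import Dict


def build_reasoning_request(
    image_bytes: bytes,
    depth_summary: Dict[str, float],
    target_offset_m: Dict[str, float],
) -> str:
    """Serialize one camera frame plus context into a JSON request body.

    All field names are hypothetical; only the three kinds of content
    (image, depth summary, metadata) come from the system description.
    """
    payload = {
        # Binary image data must be text-encoded to travel inside JSON.
        "image_base64": base64.b64encode(image_bytes).decode("ascii"),
        "depth_summary": depth_summary,
        "metadata": {"target_offset_m": target_offset_m},
    }
    return json.dumps(payload)


# Example with placeholder bytes standing in for a real camera frame.
body = build_reasoning_request(
    b"\xff\xd8placeholder",
    {"left": 0.2, "center": 0.9, "right": 0.3},
    {"forward": 1.5, "right": 0.5},
)
```

Sending a compact depth summary instead of the full depth map keeps the request small, which matters because this path is latency-critical.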

This system architecture was purposefully modular, enabling independent updates or substitutions of software components—such as the MiDaS model, GPT interface, or UI—without affecting system interoperability. Integration was maintained through standardized data formats and well-defined communication protocols. To make system behavior more transparent, the architecture explicitly distinguishes between standard data flows (black arrows) and latency-critical paths (red arrows). This distinction emphasizes performance-relevant components, particularly the interaction between the core application and the multimodal reasoning engine. Since the reasoning module is deployed in the cloud rather than locally on the device, remote API calls can introduce delays that directly affect responsiveness. The diagram also specifies exchanged data types—such as RGB images, depth maps, and navigation commands.
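As an illustration of the kind of standardized data that can pass between the depth module and the reasoning engine, the sketch below condenses a depth map into three zone summaries. The left/center/right split, the normalization to [0, 1], the convention that larger values mean closer surfaces, and the threshold of 0.7 are all assumptions for this example; the text does not specify how the depth data were summarized.

```python
from statistics import mean
from typing import Dict, List


def summarize_depth(
    depth_map: List[List[float]], near_threshold: float = 0.7
) -> Dict[str, Dict[str, object]]:
    """Reduce a 2D depth map to per-zone closeness and a blocked flag.

    Assumes values normalized to [0, 1] where larger means closer
    (inverse-depth convention); near_threshold is a hypothetical value.
    """
    width = len(depth_map[0])
    # Split the image columns into three vertical bands.
    bounds = {
        "left": (0, width // 3),
        "center": (width // 3, 2 * width // 3),
        "right": (2 * width // 3, width),
    }
    summary: Dict[str, Dict[str, object]] = {}
    for zone, (start, end) in bounds.items():
        values = [row[col] for row in depth_map for col in range(start, end)]
        closeness = mean(values)
        summary[zone] = {
            "mean_closeness": round(closeness, 3),
            "blocked": closeness >= near_threshold,
        }
    return summary


# Tiny synthetic map: something close in the middle, free space on both sides.
demo_map = [[0.1, 0.1, 0.9, 0.9, 0.2, 0.2] for _ in range(2)]
demo_summary = summarize_depth(demo_map)
```

A summary of this shape is small enough to embed directly in a text prompt, so the depth model can be replaced without changing what the reasoning module receives.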

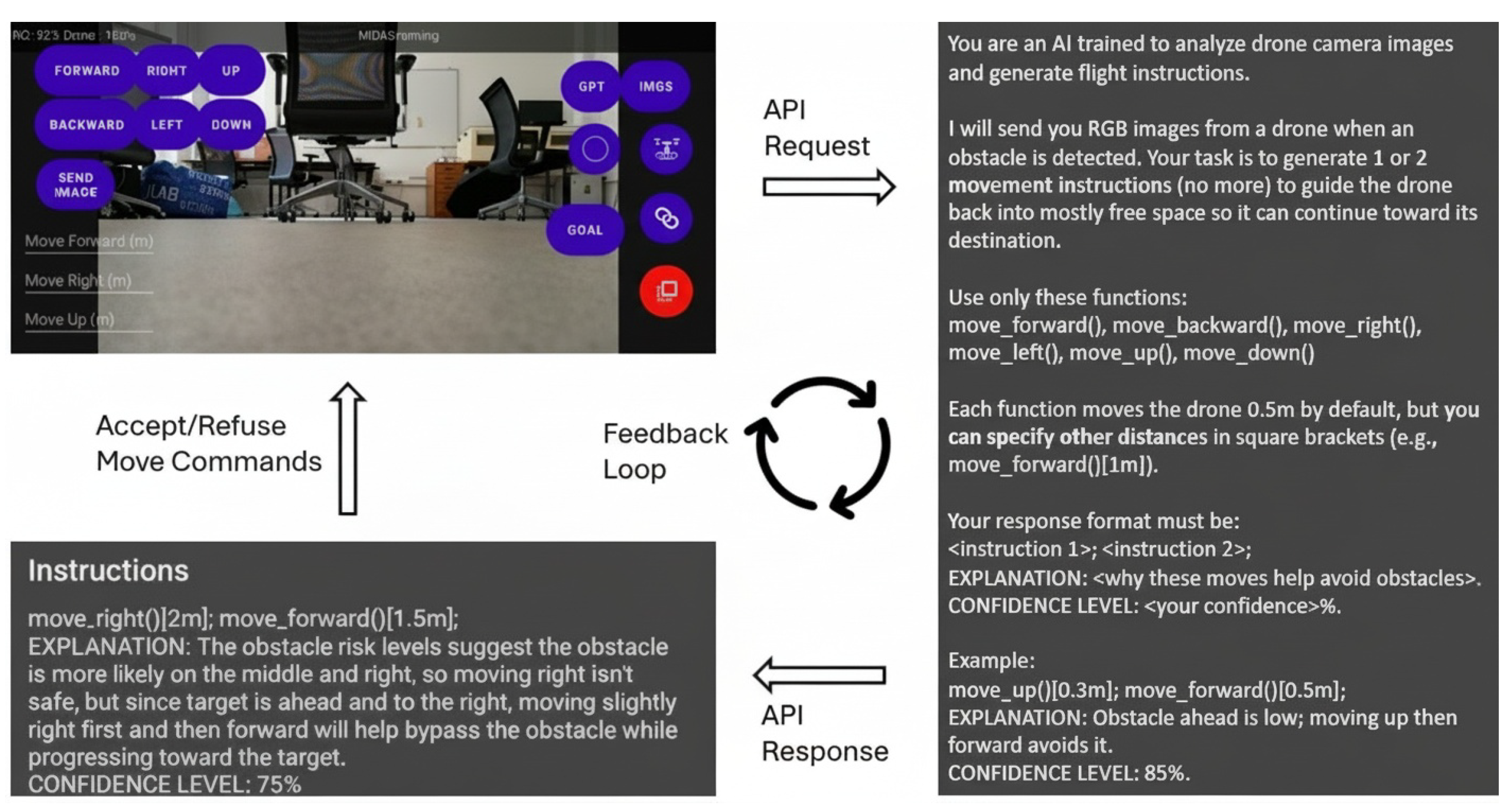

The Interactive Reasoning and Feedback Loop illustrates the HMC workflow in our scenario. It demonstrates how the integration of the cloud-based GPT-4.1-nano enables adaptive, vision-guided navigation planning. As depicted in Figure 3, when the UAV detects an obstacle, the core application captures the live RGB stream and sends an API request containing both the visual input and relevant navigation context. The external reasoning module interprets this information and generates movement instructions (usually one or two discrete commands) together with a natural-language explanation and an associated confidence level. In our current implementation, the prompting strategy follows a one-shot format, where the model receives a single example of the expected output structure to guide its responses. The core app then parses these structured outputs and displays them through the user interface, where the operator can approve or reject the suggested action. This workflow establishes a human-in-the-loop control cycle that combines automated reasoning with user supervision.
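The parse-then-approve step of this loop can be sketched as follows. The text says the reasoning module returns commands, an explanation, and a confidence level in a structured form, but it does not give the schema; the JSON keys `commands`, `explanation`, and `confidence` and the class name `NavigationProposal` are therefore assumptions for illustration.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class NavigationProposal:
    commands: List[str]
    explanation: str
    confidence: float  # percent, expected within 0-100


def parse_proposal(raw: str) -> NavigationProposal:
    """Parse a structured model response and reject malformed content."""
    data = json.loads(raw)
    confidence = float(data["confidence"])
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must be within 0-100")
    commands = list(data["commands"])
    if not commands:
        raise ValueError("at least one command is required")
    return NavigationProposal(commands, str(data["explanation"]), confidence)


def review(proposal: NavigationProposal, operator_approves: bool) -> List[str]:
    """Human-in-the-loop gate: nothing is executed without approval."""
    return proposal.commands if operator_approves else []


# Hypothetical response text, shaped like the assumed schema.
raw_response = (
    '{"commands": ["move_right"], '
    '"explanation": "A chair blocks the center; the right side is clear.", '
    '"confidence": 80}'
)
proposal = parse_proposal(raw_response)
approved = review(proposal, operator_approves=True)
rejected = review(proposal, operator_approves=False)
```

Keeping the approval gate as a separate function makes the supervision step explicit: a rejected plan yields an empty command list rather than a partial execution.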

To ensure compatibility with the UAV’s control system, the AI’s instructions were limited to a predefined set of basic movement primitives (e.g., move_forward() [0.5 m] and move_right() [1 m]). These were designed for interpretability and safe execution. The UI visualized the proposed action plan and its rationale, enhancing transparency and fostering user trust. This feedback-driven architecture supports real-time adjustments in dynamic environments, highlighting how multimodal AI can enhance situational awareness and adaptive collaboration in drone navigation tasks.
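Restricting the model to a fixed set of primitives also makes its output easy to validate before execution. The sketch below checks commands written in the notation used above, such as `move_forward() [0.5 m]`. The exact list of allowed primitives and the per-command distance limit are assumptions chosen for this example; the text only gives `move_forward()` and `move_right()` as examples.

```python
import re
from typing import Tuple

# Assumed whitelist and limit; only the first and last names appear in the text.
ALLOWED_PRIMITIVES = {"move_forward", "move_backward", "move_left", "move_right"}
MAX_STEP_M = 1.0

# Matches e.g. "move_forward() [0.5 m]" and captures the name and distance.
_COMMAND_PATTERN = re.compile(r"^(\w+)\(\)\s*\[(\d+(?:\.\d+)?)\s*m\]$")


def parse_primitive(text: str) -> Tuple[str, float]:
    """Return (primitive, distance in meters) or raise ValueError."""
    match = _COMMAND_PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized command format: {text!r}")
    name, distance = match.group(1), float(match.group(2))
    if name not in ALLOWED_PRIMITIVES:
        raise ValueError(f"primitive not allowed: {name}")
    if not 0 < distance <= MAX_STEP_M:
        raise ValueError(f"distance out of range: {distance} m")
    return name, distance


first_step = parse_primitive("move_forward() [0.5 m]")
second_step = parse_primitive("move_right() [1 m]")
```

Anything outside the whitelist fails before it can reach the flight controller, so a malformed or unexpected model output degrades to a rejected plan instead of an unsafe maneuver.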

It is important to note that the proposed system represents an architectural framework rather than a novel optimization algorithm. Therefore, a formal mathematical derivation or convergence proof is not included. Stability is addressed at the architectural level by constraining UAV control to a set of safe, discrete primitives and by ensuring component interoperability through modular design and standardized communication protocols. Robustness was further evaluated empirically through repeated UAV experiments in controlled scenarios.

4. Evaluation and Findings

This chapter presents the evaluation of the proposed multimodal AI–HMC system, with a focus on its impact on human–machine collaboration in a real-world UAV navigation use case. The Methodology Section describes the controlled test scenarios, experimental setup, and standardized conditions used to ensure consistent and comparable results. The subsequent analysis examines how the integration of a VLM affects key human-centric aspects such as explainability, safety, usability, performance, and collaboration synergy. The evaluation is structured into four parts: transparency and explainability, usability, safety and security, and performance and collaboration synergy. Each section highlights different dimensions of the system’s behavior and interaction quality, providing insights into the role of multimodal AI in supporting adaptive and trustworthy HMC.

4.1. Evaluation Methodology

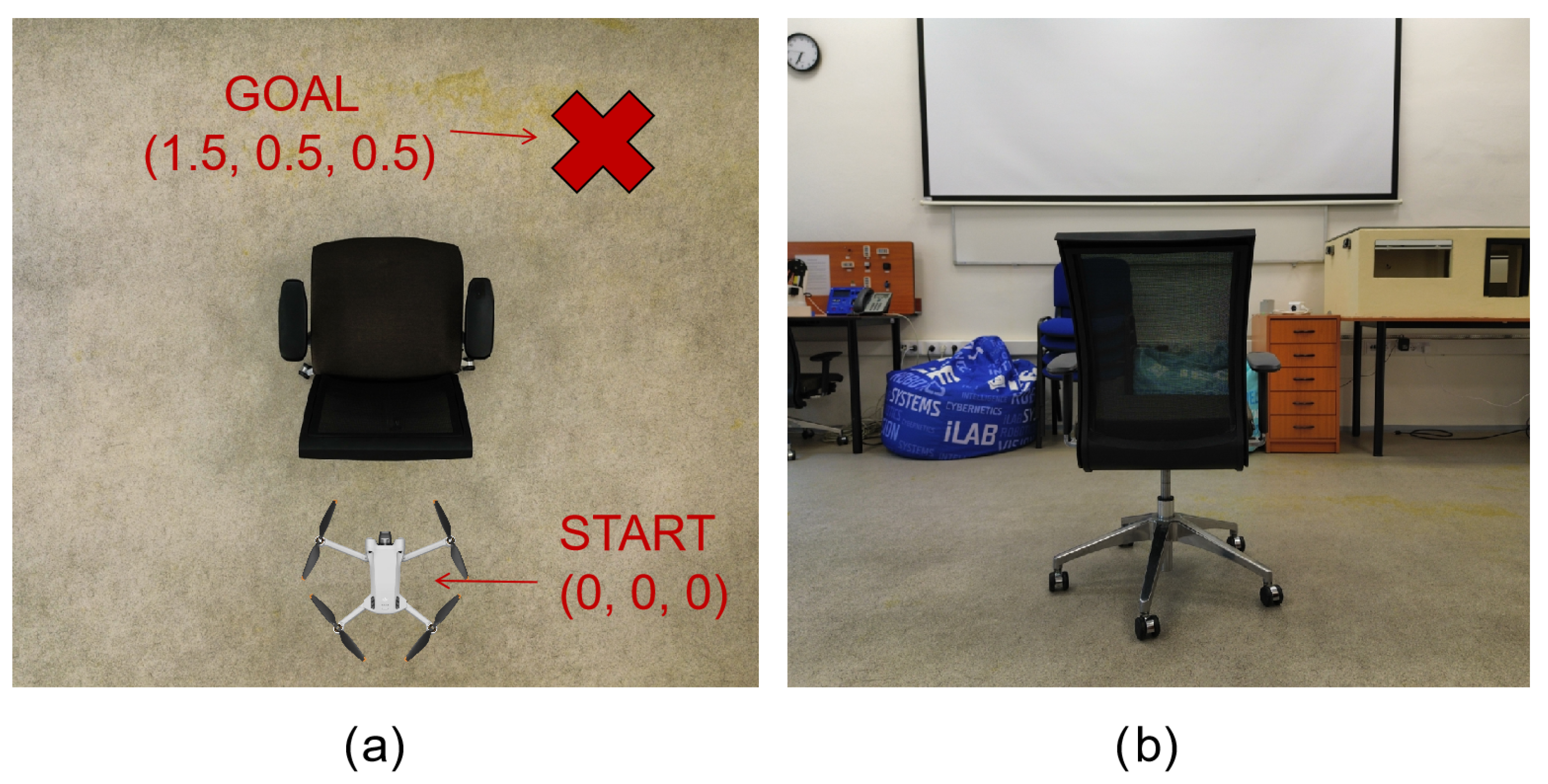

To evaluate the defined metrics in a practical setting, experiments were conducted within a controlled laboratory environment replicating two distinct navigation scenarios. Scenario 1 (Figure 4) served as a baseline, focusing on a simple obstacle avoidance task. Here, the UAV was required to bypass a single stationary object (a chair) positioned directly in its path. The object’s placement remained unchanged throughout all test runs to maintain experimental consistency. From its starting position, the UAV’s goal was to reach a target located 1.5 m forward and 0.5 m to the right while detecting and avoiding the obstacle. Uniform conditions, including fixed lighting, static obstacle placement, and identical target coordinates, were preserved to ensure that differences in results reflected variations in navigation strategy rather than environmental factors.

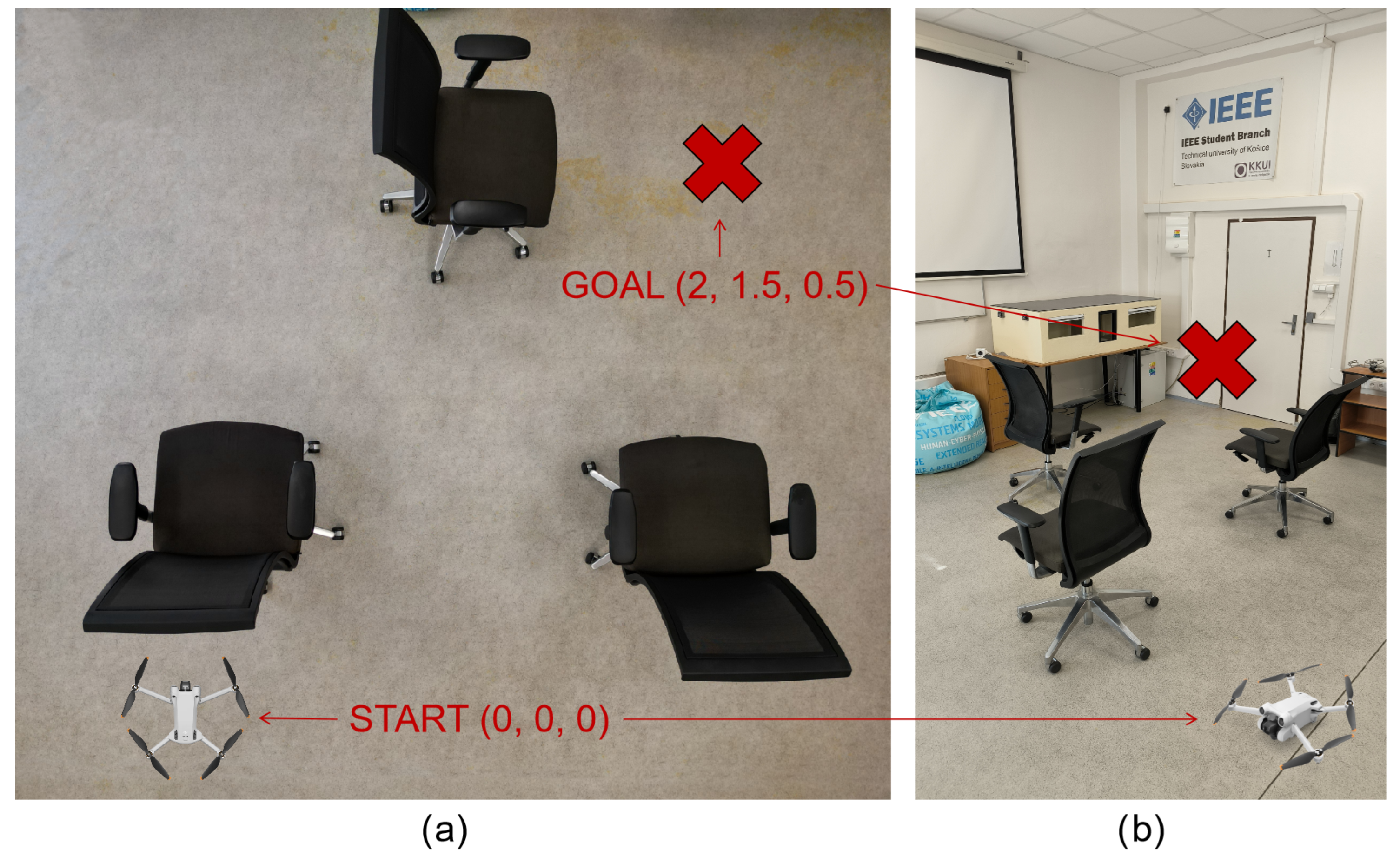

To assess performance under more complex conditions, the second scenario introduced a denser configuration of static obstacles, designed to resemble a cluttered indoor space. Reaching the target position in this scenario required more sophisticated path planning. Immediately after takeoff, the UAV faced a chair directly ahead, another positioned to its right blocking lateral movement, and a third partially ahead on the right obstructing a diagonal route. As shown in Figure 5, this arrangement demanded that the UAV interpret a more intricate environment and adjust its route dynamically.

This second setup tested the UAV’s capacity for adaptive navigation in constrained spaces, challenging its ability to avoid multiple obstacles in quick succession. Similar to the first scenario, environmental parameters were kept constant across all ten trials to ensure that performance differences were attributable solely to the system’s decision-making process.

Before each trial, a short calibration routine was performed to minimize navigation misalignment and ensure repeatability. The UAV’s local coordinate system was initialized by aligning the take-off position with a predefined origin point (0, 0, 0) marked on the laboratory floor. Target coordinates were measured relative to this origin using a measuring tape, and the environment layout (including obstacle placement) was verified against a reference diagram to ensure consistency across trials. The UAV’s telemetry from the DJI SDK was cross-checked to confirm stable altitude and orientation before issuing the first command. This calibration step reduced systematic drift and ensured that variations in task success were attributable to perception and reasoning rather than initial misalignment.

4.2. Evaluation of Transparency and Explainability

This evaluation employed three key measures: model confidence correlation, explanation clarity, and explanation informativeness.

The first measure, model confidence correlation, investigated the relationship between GPT’s self-assessed confidence levels and the authors’ independent judgment regarding the likelihood of successful task completion. In Scenario 1—which consisted of ten individual trials, each containing a single obstacle and a navigation plan generated by GPT-4.1-nano—the model provided a numerical confidence estimate (ranging from 0 to 100%) for each decision. These values were then compared against the authors’ separate confidence ratings for the same tasks.

To determine the level of agreement between model-generated and human-assigned scores, the following formula was applied (Equation (1)):
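Assuming Equation (1) is the standard sample Pearson correlation coefficient, as the Pearson’s value reported for this comparison suggests, it can be written as follows. Here $x_i$ denotes the model’s confidence and $y_i$ the authors’ rating for trial $i$, $\bar{x}$ and $\bar{y}$ are the respective means, and $n = 10$ is the number of trials in Scenario 1; the symbol names are chosen for illustration.

```latex
r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}
         {\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^{2}}\;\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^{2}}}
```

Values of $r$ near 0 indicate little linear agreement between the two sets of scores, while values near 1 or $-1$ indicate strong positive or negative agreement.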

As indicated in Table 2, the resulting Pearson correlation between GPT’s numeric confidence and the authors’ assessment was minimal. This weak relationship suggests that GPT-4.1-nano’s confidence scores are not a dependable predictor of either its true performance or the degree of trust a human evaluator might place in it.

The system-generated natural-language explanations of navigation decisions were also evaluated for understandability and informativeness, focusing on their interpretability and usefulness. Each explanation was categorized as “good” or “not good” based on its clarity, logical soundness, and relevance to the context. A “good” explanation provided a clear, coherent rationale for the movement choice and was consistent with the scene’s conditions. Conversely, explanations that were vague, internally contradictory, or poorly organized were marked as “not good”.

Of the ten explanations examined, eight met the “good” criterion, giving an overall explanation quality score of 80%. Nevertheless, several issues emerged. In one instance, GPT advised moving left while simultaneously labeling both the left and center high risk—an internal contradiction. In another case, the model recommended heading toward an area close to an obstacle without adequately explaining why that risk was acceptable. These findings highlight the need for stronger consistency and better context-sensitive reasoning, particularly in safety-critical scenarios.

Alongside the authors’ evaluation, a user study was conducted to assess the same three dimensions—confidence, understandability, and informativeness—from the perspective of external participants. Fourteen students from the department were recruited and shown GPT-4.1-nano’s navigation commands paired with the pictures from the drone’s camera and its explanations. Using a structured questionnaire, they rated 10 different trials, each with a unique model output. The UAV laboratory trials were carried out exclusively by the authors, who acted as system operators to ensure safety and reproducibility. These trials did not involve external participants. In contrast, the 14 students participated solely as observers and evaluators, providing subjective ratings on clarity, usefulness, and trustworthiness of the system’s outputs. Thus, the operator role was limited to the authors, while the observer role was fulfilled by the user study participants. To ensure that judgments focused on explanation quality rather than spatial details, all quantitative distance references were removed.

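Two versions of the questionnaire were created: one in which GPT’s self-reported confidence score was visible to participants (Group 1) and one in which it was hidden (Group 2).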

This setup was intended to determine whether the visibility of the model’s self-reported confidence influenced participants’ evaluations, given that language models are known to sometimes overestimate their own certainty. Participants were randomly assigned to one of the two groups.

For each trial, participants answered three questions, each rated on a scale from 1% to 100%, to evaluate the model’s output according to the following criteria:

Confidence: How would you rate your confidence that the drone will avoid the obstacle?

Understandability: How would you rate the clarity of the explanation?

Informativeness: How would you rate the informativeness of the explanation?

The responses enabled a secondary assessment of model confidence correlation, specifically comparing GPT’s self-reported confidence to participant-rated confidence. In addition, they provided quantitative insights into explanation clarity and informativeness, enabling comparisons between groups with and without confidence visibility.

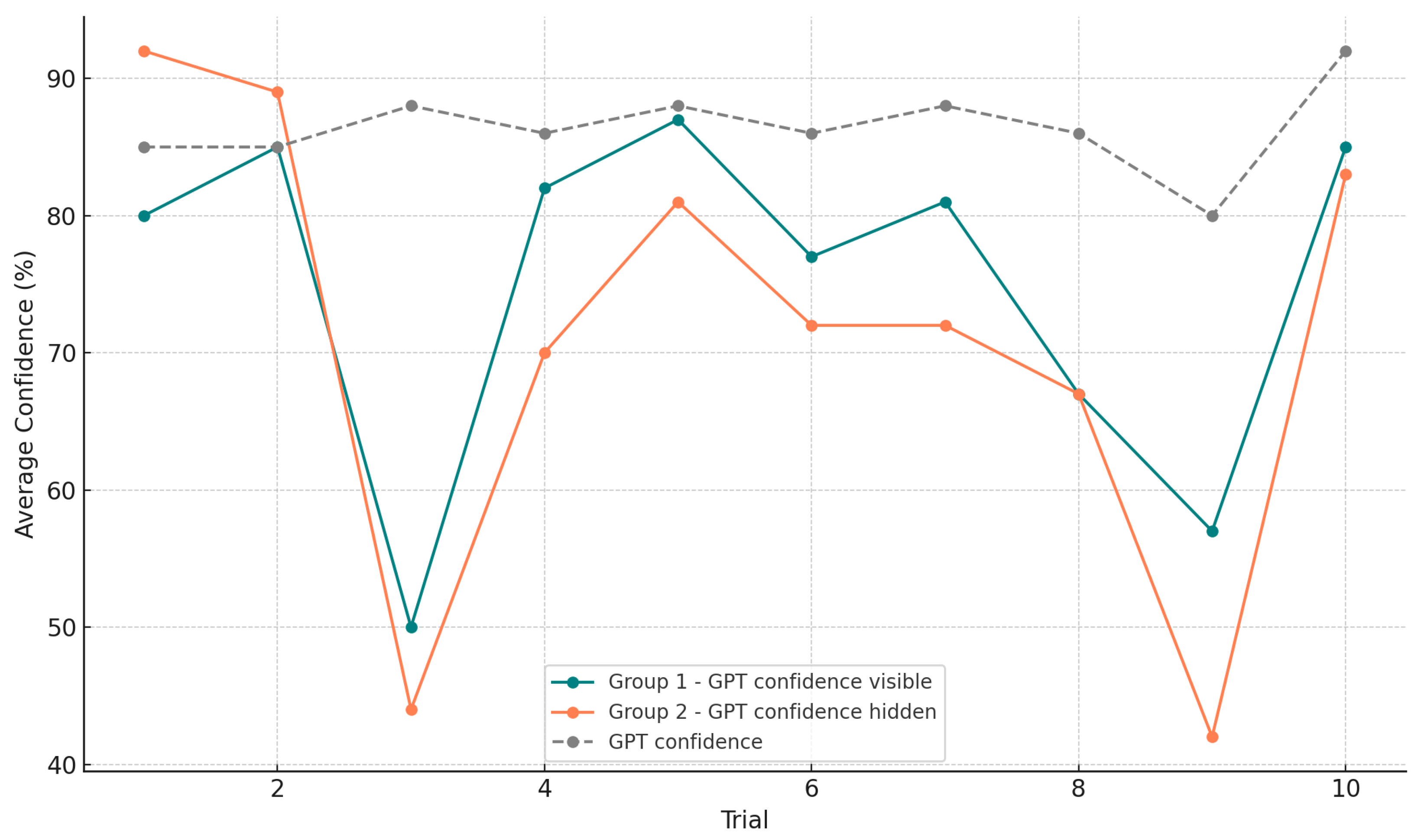

As shown in Figure 6, both groups’ confidence ratings demonstrated a moderate positive correlation with GPT’s confidence values, but the relationship was not statistically significant. Group 1 (where confidence was visible) tended to rate slightly higher across most trials.

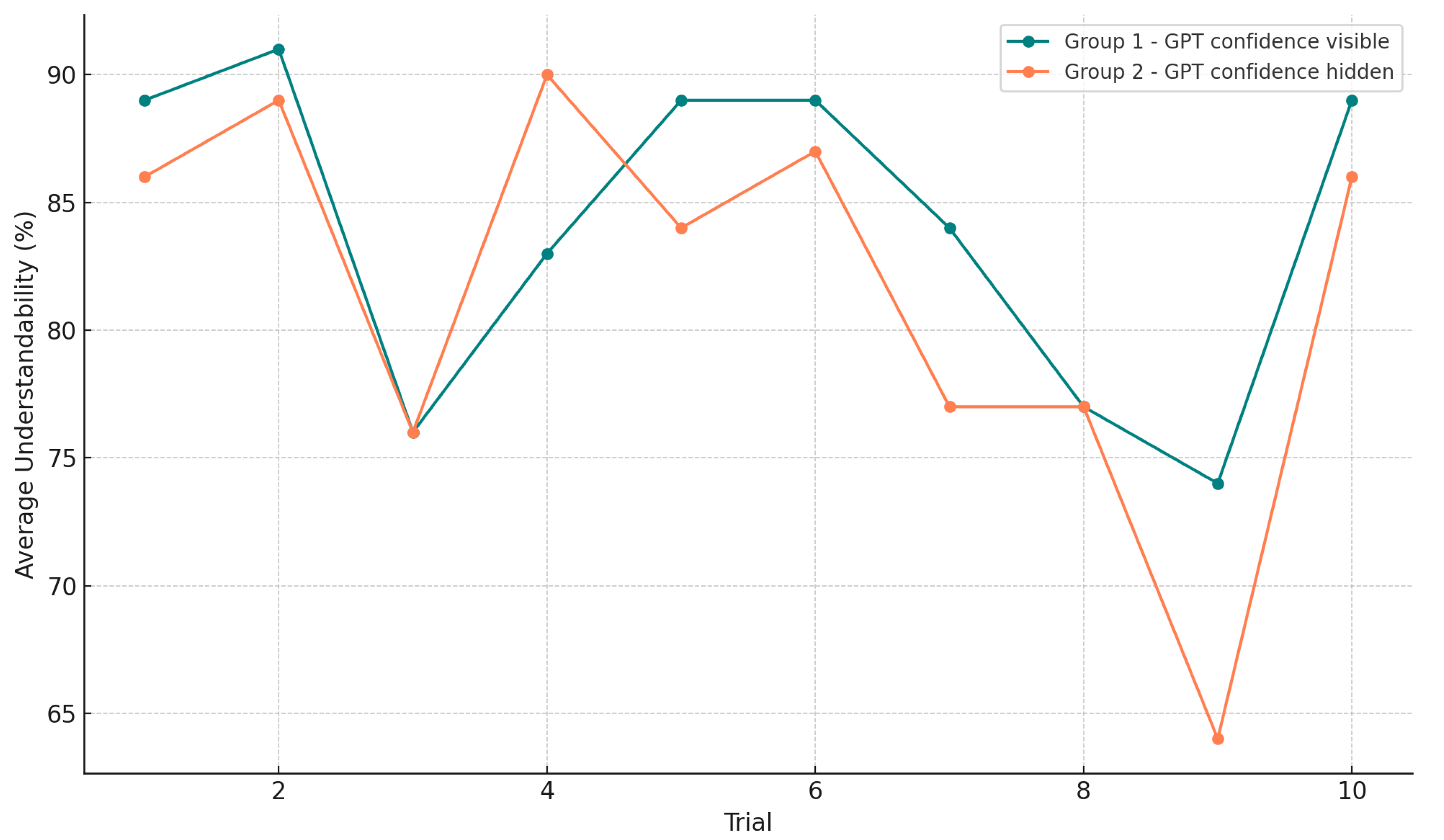

Figure 7 shows that both groups followed similar rating trends, with Group 1 generally giving slightly higher clarity scores. This suggests a possible benefit from showing GPT’s confidence score, though the effect size (Cohen’s d) was small and the difference was not statistically significant.

In contrast, Figure 8 suggests that Group 2 (where confidence was hidden) slightly outperformed Group 1 in perceived informativeness. This may indicate that removing the confidence score shifts the focus toward the explanation itself. This difference corresponded to a small-to-moderate effect size (Cohen’s d) but again did not reach statistical significance.

For completeness, we also compared GPT’s confidence to the authors’ ratings in Scenario 1, where the relationship was negligible. Although none of these results were statistically significant, including effect sizes provides a fuller picture of the findings and highlights small practical effects that may warrant further study with larger samples.
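The two statistics referred to above, a correlation coefficient between model and participant confidence and Cohen’s d between the two questionnaire groups, can be computed as in the following minimal sketch. It assumes a Pearson correlation and a pooled-standard-deviation form of Cohen’s d, which the text does not specify, and all numeric values are hypothetical placeholders rather than data from the study.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = (
        (na - 1) * stdev(group_a) ** 2 + (nb - 1) * stdev(group_b) ** 2
    ) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical placeholder values in percent, NOT data from the study.
model_confidence = [80, 90, 70, 85, 95, 60, 75, 88, 92, 65]    # one per trial
group1_mean_rating = [70, 75, 72, 68, 80, 66, 71, 77, 74, 69]  # one per trial
group1_clarity = [72, 80, 75, 78, 70, 74, 77]                  # one per participant
group2_clarity = [70, 76, 73, 75, 69, 71, 74]                  # one per participant

r = pearson_r(model_confidence, group1_mean_rating)
d = cohens_d(group1_clarity, group2_clarity)
print(f"r = {r:.2f}, d = {d:.2f}")
```

With only ten trials and two small groups, both statistics have wide uncertainty, which is consistent with the non-significant results reported above.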

Participant feedback revealed several recurring issues. Some found it difficult to evaluate the explanation without knowing the drone’s target position. Others interpreted large lateral movements as failures in obstacle avoidance. A few chose to assess the explanation independently of the movement command, focusing solely on narrative quality. This disconnect was amplified when explanations referred to future steps not represented in the immediate movement instructions, leading to confusion. Additionally, omitting quantitative movement data reduced perceived clarity and informativeness.

Overall, this study indicates that GPT-4.1-nano’s self-reported confidence has only a moderate, non-significant relationship with user confidence ratings. Participants generally judged the explanations as clear and informative, but the visibility of the model’s confidence had only minor effects on perception. The findings suggest that improving alignment between instructions and justifications, incorporating goal information, and experimenting with different confidence presentation formats may enhance trust and comprehension in future iterations.

4.3. Evaluation of Usability

Usability was assessed using the user task success rate, defined as the proportion of trials in which the drone reached its designated target without collisions or operational malfunctions (e.g., becoming stuck or losing orientation). A trial was marked as successful if the drone completed its assigned route without straying from the planned trajectory and without making contact with any obstacles. The rate was calculated using Equation (2):

$$\text{Task success rate} = \frac{\text{number of successful trials}}{\text{total number of trials}} \times 100\% \tag{2}$$

In the first scenario, where navigation involved avoiding a single static obstacle, the drone succeeded in 7 of 10 trials, resulting in a 70% success rate. Failures were mostly linked to three factors: misalignment between the user-defined coordinates and the drone’s interpreted navigation space, delayed obstacle detection leading to collisions, and deviations from the planned path caused by minor positioning errors or unexpected features in the environment.

The second scenario introduced a denser and more complex obstacle arrangement, requiring greater spatial reasoning. Here, the drone completed 5 out of 10 trials successfully (50%). Failures were mainly due to incorrect distance estimation or flawed movement plans that inadvertently guided the drone into nearby objects. The increased complexity meant that small perception or reasoning errors more frequently resulted in collisions.

Across both setups, direct contact with obstacles was the primary cause of task failure, highlighting the need for enhanced path accuracy and improved detection in cluttered or ambiguous environments.
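The success criterion and rate described in this subsection can be expressed as a short sketch. The `Trial` record and its field names are hypothetical; the trial counts (7 of 10 and 5 of 10) come from the text, while marking every failed trial as a collision is an illustrative simplification.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    reached_target: bool
    collided: bool = False
    malfunctioned: bool = False  # e.g., became stuck or lost orientation

    @property
    def successful(self) -> bool:
        # A run counts only if the target was reached with no collision
        # and no operational malfunction.
        return self.reached_target and not self.collided and not self.malfunctioned


def task_success_rate(trials):
    """Percentage of successful trials."""
    if not trials:
        raise ValueError("no trials recorded")
    return 100.0 * sum(t.successful for t in trials) / len(trials)


# Counts from the text; the failure flag chosen for failed trials is illustrative.
scenario_1 = [Trial(True)] * 7 + [Trial(False, collided=True)] * 3
scenario_2 = [Trial(True)] * 5 + [Trial(False, collided=True)] * 5

print(task_success_rate(scenario_1))  # 70.0
print(task_success_rate(scenario_2))  # 50.0
```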

4.4. Evaluation of Safety

For safety evaluation, the system’s reaction time was measured as the interval between obstacle detection and the drone coming to a full stop. Tests were carried out for both static and moving obstacles, as described in [34]. Because the depth sensor provided only relative measurements, distances were verified manually using a measuring tape.

Three performance indicators were recorded:

Detection distance (m)—Distance from the obstacle at the moment it was first detected.

Speed (m/s)—The drone’s velocity at the time of detection.

Obstacle distance (m)—Separation from the obstacle once stopped.

Table 3 shows the average results for static obstacles at different speeds. Obstacle appearance (texture or surface detail) had little effect on detection range, suggesting that recognition was influenced more by size and contrast than by visual complexity.

Processing power can also affect detection performance, with faster devices potentially improving reaction time and reliability. However, no device-to-device performance comparison was conducted in this study.

Subsequent tests examined the drone’s response to dynamic obstacles appearing suddenly in its path.

Table 4 lists the average measurements. Results indicate safe operational speeds of about 0.6 m/s for static objects and 0.35 m/s for moving ones. Higher velocities reduced both stopping and detection distances, limiting available reaction time and raising collision risk.
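To illustrate how the three recorded indicators relate to the available reaction time, the sketch below derives a constant-speed time-to-contact budget and the distance consumed while stopping. The class and field names are hypothetical, the example measurements are placeholders rather than values from Table 3 or Table 4, and the 0.10 m margin is the stopping-distance figure quoted in the Discussion.

```python
from dataclasses import dataclass


@dataclass
class StopMeasurement:
    detection_distance_m: float  # distance to the obstacle when first detected
    speed_m_s: float             # drone speed at the moment of detection
    obstacle_distance_m: float   # remaining separation once the drone has stopped

    @property
    def time_to_contact_s(self) -> float:
        """Time until contact if the drone kept its speed (reaction-time budget)."""
        if self.speed_m_s <= 0:
            raise ValueError("speed must be positive")
        return self.detection_distance_m / self.speed_m_s

    @property
    def distance_used_m(self) -> float:
        """Distance covered between detection and full stop."""
        return self.detection_distance_m - self.obstacle_distance_m

    def is_safe(self, min_margin_m: float = 0.10) -> bool:
        """True if the drone stopped with at least the required margin."""
        return self.obstacle_distance_m >= min_margin_m


# Hypothetical placeholder measurement, NOT taken from the study's tables.
sample = StopMeasurement(detection_distance_m=0.9, speed_m_s=0.6, obstacle_distance_m=0.3)
print(round(sample.time_to_contact_s, 2), round(sample.distance_used_m, 2), sample.is_safe())
```

Because the budget is detection distance divided by speed, a higher speed shrinks it even when the detection distance is unchanged, and it shrinks further when detection distance also drops, as the results above indicate.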

Although MiDaS performed well in producing monocular depth maps, its accuracy and timeliness were not always sufficient for real-time, safety-critical navigation. Occasional delays or errors in depth estimation caused late obstacle detection, sometimes leading to collisions. Limited computational resources on mobile devices also introduced latency and degraded depth quality.

Improving system reliability will require reducing processing delays and adopting faster, resource-efficient depth estimation models optimized for real-time use on lightweight hardware.

4.5. Evaluation of Performance

Performance analysis focused on two speed-related metrics: response time and task time. GPT’s average response latency was under 4 s, which was generally acceptable for semi-structured tasks but could hinder operation in high-speed scenarios.

Task time measured the total duration of a successful navigation, from initial movement to reaching the target, excluding failed trials (Table 5).

In Scenario 1, the mean completion time for seven successful runs was 31.67 s (SD = 5.42). The target was positioned 0.5 m to the right of a central obstacle. Most runs used the right-hand path, averaging 28.51 s, whereas two runs (Trials 7 and 8) used the left-hand route, averaging 39.55 s. This difference suggests a possible time efficiency for the right-hand path, though the small sample size limits conclusions.
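As a consistency check on these figures, and assuming the seven successful runs split into five right-hand and two left-hand runs, the two path averages recombine to the reported overall mean up to rounding of the subgroup averages:

$$\bar{t} = \frac{5 \times 28.51 + 2 \times 39.55}{7} = \frac{221.65}{7} \approx 31.66\ \text{s}$$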

Scenario 2, involving a denser obstacle layout, produced a higher mean task time of 76.78 s (SD = 3.39) across five successful runs. The tighter navigation space required slower speeds and frequent pauses for path adjustment.

Response time was measured as the elapsed time between obstacle detection (via MiDaS) and the receipt of GPT-4.1-nano’s full navigation response (i.e., end-to-end response time from request initiation to last token). In Scenario 1, which featured only one obstacle per trial, the mean latency was 2.11 s (SD = 1.55 s). Most trials clustered between 1.43 s and 1.67 s, but two outliers, Trial 2 (6.44 s) and Trial 3 (2.89 s), inflated variability (Table 6). Because the experiment relied on cloud-hosted inference, such anomalies may have been caused by transient network jitter, fluctuations in cloud service availability, or server load, in addition to the complexity of specific visual inputs.

While average response times remained acceptable for semi-structured tasks, the high-latency outliers demonstrate potential vulnerability in time-critical scenarios. One way to mitigate such delays in future implementations is through caching and batching strategies. Caching scene representations or depth maps when consecutive frames are highly similar would avoid redundant uploads to the reasoning module, while batching multiple frames for joint processing could reduce the overhead of repeated network requests. These methods were not yet implemented in our prototype but represent practical directions for improving robustness and ensuring more consistent latency in real-time UAV navigation.
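The caching idea can be sketched as follows. This is a minimal illustration rather than part of the prototype: the `SceneCache` class, the mean-absolute-difference similarity measure, and the 0.02 threshold are all assumptions, and frames are represented as flattened lists of values in [0, 1]. Batching is not shown.

```python
from typing import Callable, List, Optional, Sequence

Frame = Sequence[float]  # flattened depth map or grayscale frame, values in [0, 1]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("frames must be non-empty and of the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


class SceneCache:
    """Reuses the last reasoning response while the scene stays nearly unchanged."""

    def __init__(self, query_fn: Callable[[Frame], str], threshold: float = 0.02):
        self.query_fn = query_fn    # hypothetical call to the remote reasoning module
        self.threshold = threshold  # hypothetical similarity threshold
        self._last_frame: Optional[List[float]] = None
        self._last_response: Optional[str] = None
        self.remote_calls = 0

    def get_response(self, frame: Frame) -> str:
        # Compare against the frame that produced the cached response, so that
        # slow drift across many frames eventually triggers a fresh query.
        if (
            self._last_frame is not None
            and self._last_response is not None
            and mean_abs_diff(frame, self._last_frame) <= self.threshold
        ):
            return self._last_response
        self.remote_calls += 1
        self._last_response = self.query_fn(frame)
        self._last_frame = list(frame)
        return self._last_response
```

In a safety-critical loop, any such threshold would need to be conservative, since reusing a stale response near an obstacle trades latency for risk.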

4.6. Evaluation of Collaboration Synergy

Human–AI collaboration quality was measured via the Instruction Approval Rate, defined as the proportion of AI-generated navigation commands executed by the user without changes. This reflects how well the AI’s recommendations matched the user’s situational understanding and intended strategy.

In Scenario 1, 9 out of 10 instructions were accepted, giving an approval rate of 90%. Rejections occurred when the proposed movements did not sufficiently account for nearby obstacles or when overly complex maneuvers were suggested despite simpler alternatives being available. These instances emphasize the importance of refining environmental awareness and simplifying instructions when appropriate.

The high acceptance rate indicates strong operational compatibility between the AI and the user while leaving room for improvements in spatial reasoning and context-sensitive decision making.

5. Discussion

This study investigated the integration of multimodal AI—specifically VLMs—into UAV-based HMC systems, with the goal of enhancing transparency, trust, and usability in real-world, human-in-the-loop environments. Consequently, the research was guided by two hypothesis-driven questions: whether a reference multimodal AI-HMC system architecture can be designed to support transparent, adaptable, and human-centric collaboration in real-time UAV navigation (RQ1) and whether embedding vision–language models within UAV control pipelines significantly enhances explainability and task performance in complex, dynamic scenarios (RQ2).

For RQ1, our findings demonstrate that the proposed reference architecture successfully supported transparency and adaptability in UAV navigation. The architecture we propose was validated through the development of a functional experimental laboratory platform, featuring real-time drone control, GPT-4.1-based vision–language reasoning, depth estimation, and a touch-based human–machine interface. The modular system architecture effectively enabled the integration of perception, reasoning, and execution modules, facilitating context-aware, explainable behavior.

For RQ2, the experimental results indicate that embedding vision–language reasoning into UAV control pipelines enhanced both explainability and performance, though with certain constraints, and that multimodal AI enhances HMC in several human-centric dimensions. For example, the integration of natural-language explanations and visual feedback contributed to high ratings for understandability and informativeness. While GPT’s self-rated confidence scores did not strongly correlate with user confidence, users still appreciated the presence of explanations. The user study also revealed that explanation quality is sensitive to visual context, phrasing, and interface design, suggesting that a more cohesive design between reasoning output and presentation layer could further improve user trust.

In terms of usability and safety, the system demonstrated promising results, with a 70% success rate in simpler navigation tasks. Based on our empirical tests, the safe operational threshold of the proposed system for obstacle avoidance can be estimated at approximately 0.6 m/s for static obstacle avoidance and 0.35 m/s for dynamic obstacles. Below these speeds, the UAV consistently detected obstacles with sufficient stopping distance (≥0.10 m), while higher velocities led to unreliable detection and increased collision risk. These thresholds should be interpreted as approximate values specific to our hardware and laboratory setting, but they provide an important baseline for future system scaling. However, more complex tasks exposed limitations in perception accuracy, instruction generation, and latency. Failures in path planning, inconsistent explanations, and delayed responses pointed to the need for better error handling, more robust fallback behaviors, and improvements in depth estimation under real-time constraints. These findings suggest that while current VLMs can enhance the collaborative experience, their integration into embodied systems remains non-trivial and requires careful design trade-offs.

The analysis of explanation ratings (see Figure 6, Figure 7 and Figure 8) shows that inconsistencies were not confined to isolated cases but occurred to varying degrees across trials. While most outputs were rated positively in terms of clarity and informativeness, several exhibited mismatches between the recommended action and its justification, or inconsistencies between confidence levels and user trust. These deviations appeared in multiple trials rather than being restricted to a small number of cases, indicating that inconsistency is a recurring challenge rather than a rare anomaly. Mitigation strategies include structured prompting (e.g., fixed action–justification templates) or introducing post-processing checks to detect contradictions before outputs are presented to users.
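A post-processing check of this kind could look like the following sketch. It assumes the model output has already been parsed into a structured form; the `NavigationOutput` schema, the three risk labels, and the region names are hypothetical and not the prototype’s actual output format. The example mirrors the contradiction reported in Section 4.2, where a move to the left was advised although left and center were both labeled high risk; the risk label for the right region is an assumed placeholder.

```python
from dataclasses import dataclass
from typing import Dict, List

RISK_ORDER = {"low": 0, "medium": 1, "high": 2}  # hypothetical label set


@dataclass
class NavigationOutput:
    action: str                  # e.g., "left", "center", "right"
    region_risk: Dict[str, str]  # risk label per region
    explanation: str


def find_contradictions(out: NavigationOutput) -> List[str]:
    """Return human-readable issues; an empty list means no contradiction found."""
    issues: List[str] = []
    unknown = sorted({r for r in out.region_risk.values() if r not in RISK_ORDER})
    if unknown:
        return [f"unknown risk labels: {unknown}"]
    if out.action not in out.region_risk:
        return [f"action '{out.action}' has no risk assessment"]
    chosen = RISK_ORDER[out.region_risk[out.action]]
    safest = min(RISK_ORDER[r] for r in out.region_risk.values())
    if chosen > safest:
        issues.append(
            f"action '{out.action}' is rated '{out.region_risk[out.action]}' "
            "although a lower-risk option exists"
        )
    elif chosen == RISK_ORDER["high"]:
        issues.append("every option is rated high risk; consider stopping instead")
    return issues


# Illustrative case; the label for "right" is an assumption.
output = NavigationOutput(
    action="left",
    region_risk={"left": "high", "center": "high", "right": "medium"},
    explanation="Move left to avoid the obstacle.",
)
print(find_contradictions(output))
```

Outputs that fail the check could be withheld from the operator or trigger a new query, depending on how much latency the task tolerates.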

Another important outcome relates to collaboration synergy. With a 90% instruction approval rate, the system exhibited strong alignment between user expectations and AI-generated decisions. Nonetheless, occasional rejections highlighted challenges in adapting to nuanced environmental layouts. Addressing this will require better spatial grounding in AI outputs, multimodal feedback loops, and possibly user intent modeling.

Overall, our findings provide evidence that multimodal reasoning contributes positively to both explainability and performance, thereby answering both RQ1 and RQ2. By explicitly linking experimental results to our research questions, we show that the proposed system architecture not only advances technical feasibility but also contributes to human-centric outcomes such as trust, clarity, and safety.

At the same time, we acknowledge that the number of experiments conducted was limited and the participant sample size relatively small, which constrains the ability to draw statistically significant conclusions. Some evaluation indicators also relied on subjective user ratings, without systematic user research or statistical verification. Furthermore, all experiments were conducted in a controlled laboratory setting with static obstacles and limited environmental complexity. While this was necessary for safety and reproducibility, it differs from real-world UAV applications, where noise, dynamic objects, and variable conditions introduce additional challenges. These limitations reflect the exploratory nature of this laboratory study and the practical constraints of UAV experimentation, where safety and resources restrict large-scale trials. Another limitation on implementation in real-world conditions lies in the exclusion of external interference effects, such as intentional electromagnetic interference, which can propagate errors into multimodal reasoning in UAVs.

In addition, our current implementation employs a one-shot prompting strategy with GPT-4.1-nano, where only a single example is provided to guide the expected response format. While effective for producing structured navigation commands and explanations, this approach does not fully exploit the potential of advanced prompt engineering. As suggested by the reviewers, incorporating explicit formatting rules and few-shot prompt examples could improve response consistency, interpretability, and robustness. We identify this as an important avenue for future work, particularly to enhance the reliability of reasoning outputs in complex or safety-critical UAV operations.
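The difference between one-shot and few-shot prompting with explicit formatting rules can be sketched as below. This is an illustration only: the rule text, the action vocabulary, and the use of text scene descriptions in place of images are assumptions, and the role/content message layout follows the common chat-message convention rather than the prototype’s actual request format. No API call is made.

```python
from typing import Dict, List, Tuple

# Hypothetical formatting rules; the prototype's real prompt is not reproduced here.
FORMAT_RULES = (
    "Reply with exactly three lines:\n"
    "ACTION: <left|right|forward|stop>\n"
    "CONFIDENCE: <0-100>\n"
    "EXPLANATION: <one or two sentences consistent with ACTION>"
)


def build_messages(
    scene_description: str, examples: List[Tuple[str, str]]
) -> List[Dict[str, str]]:
    """Build a chat-style prompt: rules first, then worked examples, then the query."""
    messages = [{"role": "system", "content": FORMAT_RULES}]
    for example_scene, example_reply in examples:
        messages.append({"role": "user", "content": example_scene})
        messages.append({"role": "assistant", "content": example_reply})
    messages.append({"role": "user", "content": scene_description})
    return messages


# Hypothetical worked examples; passing one pair gives one-shot, several give few-shot.
EXAMPLES = [
    ("Obstacle ahead in the center, left side clear.",
     "ACTION: left\nCONFIDENCE: 85\nEXPLANATION: The left side is clear of obstacles."),
    ("Obstacles on both sides and ahead.",
     "ACTION: stop\nCONFIDENCE: 90\nEXPLANATION: No direction is free of obstacles."),
]

few_shot = build_messages("Obstacle on the left, target to the right.", EXAMPLES)
one_shot = build_messages("Obstacle on the left, target to the right.", EXAMPLES[:1])
print(len(one_shot), len(few_shot))  # 4 6
```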

Despite these constraints, the results provide valuable preliminary insights into the feasibility and human-centric benefits of multimodal reasoning in UAV navigation. Future work will focus on expanding the number of participants, applying standardized evaluation instruments, incorporating statistical analyses to validate the findings more robustly, and exploring improved prompting strategies such as few-shot prompting to strengthen reasoning quality and consistency, as well as incorporating EMI-resilient control strategies to improve real-world robustness [35].

6. Conclusions

This paper presented a novel, fully implemented architecture for human-centric HMC using multimodal AI, specifically VLMs, in a UAV navigation context. Through the development of a real-time laboratory prototype and its evaluation, we demonstrated that the integration of depth-aware perception and natural-language reasoning can improve transparency, usability, and user trust in collaborative robotic systems.

Key contributions include a modular reference architecture for multimodal AI–HMC integration, a working system combining VLMs with UAV control and human-in-the-loop reasoning, and empirical results demonstrating high-quality explanations (80%), strong instruction approval rates (90%), and effective obstacle avoidance up to 0.6 m/s in structured environments. While limitations such as response latency, explanation inconsistency, and depth estimation inaccuracies persist, our findings validate the feasibility of deploying explainable, multimodal AI systems in interactive robotic applications.

This study bridges the gap between theoretical frameworks and the applied deployment of human-centric AI. By emphasizing usability, transparency, and system-level integration, it provides both a proof of concept and a foundation for future research in adaptive, human-aligned AI systems. Moving forward, efforts should focus on improving real-time robustness, optimizing system responsiveness on edge devices, enhancing intent awareness, and expanding validation across diverse users and domains. These steps will be critical to advancing safe, intelligent, and trustworthy human–AI collaboration in increasingly complex real-world environments.

One crucial avenue for future work is the migration of multimodal reasoning capabilities from cloud-based servers to fully on-device embedded platforms. Transitioning VLM inference and depth modeling onto UAV hardware will be necessary to eliminate network-induced latency, enhance system resilience against connectivity loss, and further improve operational robustness for autonomous missions in the field. The main challenge lies in the computing requirements of such systems. Edge devices, in this case UAVs, often do not possess the hardware needed to run VLMs in their current form, requiring changes which may affect the performance and accuracy of the models used.