1. Introduction

Smart grids represent the next-generation power infrastructure that integrates Information and Communication Technologies (ICT) across the entire power lifecycle, from generation and transmission to distribution and consumption, to maximize energy efficiency and enable real-time responsiveness. These systems are composed of critical infrastructure elements such as power plants, substations, and transmission and distribution facilities, and are characterized by the interconnection of numerous operational devices, sensors, and control systems via dedicated networks [1]. However, such complexity simultaneously expands the attack surface exposed to cyber threats. In particular, smart grids operating in closed-network (air-gapped) environments face unique challenges where external connectivity is restricted, necessitating a delicate balance between robust security and accessible information retrieval [2].

Terminology: In this paper, we use the term smart grid in the operational sense of a sensing- and communication-enabled grid that delivers real-time telemetry to a central control center. Edge assets do not perform autonomous diagnosis or closed-loop control; all decisions remain with human operators. Accordingly, the proposed system is a decision-support tool and does not issue control commands.

Recently, large language models (LLMs) have demonstrated remarkable capabilities in natural language understanding and document-based question answering, leading to their adoption across various industrial sectors. Nevertheless, most LLMs are deployed via cloud-based external APIs, rendering them impractical for use in closed-network infrastructures like smart grids due to concerns over data leakage and security compliance. Consequently, there is an emerging demand for alternative AI systems that can securely process internal data while mitigating the risk of information exposure [3].

Smart grid operators frequently rely on extensive internal documentation (such as incident response manuals, security reports, and policy records) for informed decision-making. However, existing keyword-based retrieval systems are limited in their ability to perform semantic search, resulting in inefficiencies that require significant time and human resources to locate relevant materials. This highlights the need for a document embedding-based LLM system that operates locally, particularly one that adopts a Retrieval-Augmented Generation (RAG) architecture to provide accurate and rapid document search and response capabilities [4,5].

In response to these needs, this study aims to design a local LLM-based document question-answering system that can be securely deployed within air-gapped industrial environments such as smart grids. The proposed system emphasizes security, real-time responsiveness, and resilience, thereby establishing a viable technical foundation for field application. Furthermore, by evaluating alignment with international standards such as IEC 62351 [6], this research seeks to demonstrate the potential of the system as a practical AI solution for enhancing industrial cybersecurity.

1.1. Challenges in Smart Grid Security and Document Management

The smart grid is an intelligent system that interconnects the entire power infrastructure—from power plants, substations, and transmission and distribution networks to end-user terminals—through advanced automation and real-time communication technologies. Compared to traditional power grids, smart grids involve significantly more control points and communication nodes, thereby increasing the surface area vulnerable to cyberattacks. In particular, the convergence of Operational Technology (OT) and Information Technology (IT) within smart grid architectures elevates the security risk, as compromises in control systems can directly result in physical consequences.

Due to this structural complexity, smart grids are typically operated within air-gapped environments that are physically and logically isolated from external networks. However, being disconnected from the internet does not guarantee immunity from cyber threats. Insider threats, the introduction of malware via portable media such as USB devices, and infections through temporarily connected laptops or diagnostic equipment during maintenance are all viable attack vectors. In practice, incidents targeting smart grid and SCADA systems are on the rise, highlighting the urgent need to enhance detection and response capabilities in security systems [7].

Simultaneously, incident response and operational decision-making in smart grids heavily rely on a large corpus of internal documentation [8]. This includes system architecture diagrams, incident response procedures, equipment manuals, regulatory compliance documents, and internal security guidelines. Security Operation Center (SOC) analysts and field operators are expected to make accurate and timely decisions based on these resources. However, most of these documents are unstructured, vary in format, and exist in large volumes, making it difficult to retrieve meaningful information promptly using conventional keyword-based search methods [8,9].

Moreover, the energy sector is subject to a variety of regulatory requirements and international standards, such as IEC 62351 [6] and NISTIR 7628 [2], which often take the form of lengthy technical documents spanning hundreds of pages. Expecting security personnel or technical staff to manually search and interpret these documents in real time is both inefficient and error-prone.

Therefore, there is a pressing need for an intelligent system that can preserve the security constraints of air-gapped environments while enabling rapid semantic search of internal documents and providing natural language responses. Such a system must go beyond simple information retrieval to include contextual understanding based on domain knowledge, retrieval of precedent cases in similar threat scenarios, and assistance in interpreting standard compliance documents. To address these needs, we propose the development of a local LLM-based document question-answering system capable of operating independently within closed networks. This system aims to simultaneously enhance the security and operational efficiency of smart grids [10].

1.2. Research Contributions and Paper Structure

This study addresses the urgent need for a document-centric question-answering system that operates safely inside air-gapped critical infrastructures such as smart grids, eliminating any dependency on external networks while meeting stringent requirements for security, real-time responsiveness, and industrial applicability. Prior approaches, constrained by keyword retrieval and simple pattern matching, fall short of delivering semantically accurate results or supporting the domain-aware question answering demanded by field operators [9]. Moreover, directly deploying cloud-resident LLM services in smart-grid environments raises unacceptable risks of data leakage, connectivity barriers, and non-compliance with international security regulations [11,12].

To overcome these limitations, the proposed work presents a fully offline Retrieval-Augmented Generation architecture in which e5-based embeddings, vector search, and LLM inference are executed locally, ensuring suitability for closed-network infrastructures. The design extends beyond mere functionality by incorporating defenses against prompt-injection and embedding inversion attacks, GPU-accelerated low-latency inference, and robust backup-and-re-indexing routines that sustain service continuity under failure conditions [13,14]. Further, by integrating a smart-grid-specific ontology and knowledge graph, the system elevates contextual accuracy and enables domain reasoning unattainable with conventional search engines. Alignment with IEC 62351 [6], NISTIR 7628 [2], and related standards demonstrates the solution’s interoperability and practical readiness for industrial rollout.

The remainder of the paper is organized as follows. Section 2 surveys relevant technologies and prior research. Section 3 details the overall architecture and the design of each subsystem. Section 4 explains security- and reliability-oriented core functions, while Section 5 describes the ontology-based semantic enhancement strategy. Section 6 analyses conformity with international standards and discusses industrial deployment scenarios. Section 7 validates system effectiveness through experiments and scenario-driven evaluations. Section 8 and Section 9 conclude by summarizing contributions, acknowledging limitations, and outlining directions for future work.

2. Related Research

In critical infrastructures such as smart grids, where both high security and real-time responsiveness are paramount, the direct application of cloud-based large language models (LLMs) remains largely infeasible. Concerns over potential data leakage during external API calls, technical restrictions imposed by air-gapped environments, and the need to comply with international security standards all demand a fundamental rethinking of how such models are deployed, moving beyond performance metrics to reconsider operational paradigms entirely. Against this backdrop, growing attention has been directed toward local, self-contained LLM architectures. These architectures offer the ability to support natural language querying and document interpretation without exposing sensitive data to external networks, thereby aligning with the operational constraints of high-security industrial settings [15].

However, localizing an LLM is not simply a matter of installing the model onto a physical device. High-performing pre-trained models, such as those distributed through Hugging Face or released by Meta, must be optimized for offline inference, often through techniques such as model quantization and parameter reduction tailored to the target hardware. Models with approximately seven billion parameters, including LLaMA2, OpenChat, and Mistral, have proven capable of delivering practical inference on a single GPU and are increasingly being deployed in conjunction with document-based Retrieval-Augmented Generation (RAG) systems. In these implementations, vector embedding models such as e5-large or BGE are used to index pre-processed documents, enabling efficient retrieval and semantic matching. The RAG architecture, in particular, plays a critical role in reducing hallucination and improving the factual reliability of generated responses [10,16].

Nevertheless, applying this architecture to smart grid environments introduces a distinct set of challenges. Unlike general IT systems, smart grids operate as cyber-physical systems (CPS) in which operational technology (OT) is directly linked to physical energy flow and control mechanisms. As such, document systems in this context are not merely used for information retrieval but are central to decision-making in areas such as incident response, control command interpretation, and compliance evaluation. To fulfill such roles, the system must possess domain-specific reasoning capabilities that exceed the limits of simple keyword or embedding-based retrieval. This requirement calls for an enhanced architecture that incorporates domain-aware ontologies and knowledge graphs [14,17].

A knowledge graph enables the model to understand the relationships between entities within the smart grid domain—for example, between generators and protective relays, or remote terminal units (RTUs) and communication protocols—beyond the level of individual word semantics. This structured representation allows the system to generate logically connected answers that go beyond sentence extraction. For instance, in response to a question such as “What is the response protocol for unauthorized access in a substation?”, the model would be able to follow a reasoning chain such as event type → response policy → applicable equipment manual, rather than simply quoting a document. This capability significantly differentiates the proposed system from conventional LLM implementations, and is particularly advantageous in highly standardized, interdependent domains like the power sector.
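The reasoning chain described above (event type → response policy → applicable equipment manual) can be illustrated with a minimal sketch. Every node and relation name below is a hypothetical placeholder rather than part of the ontology used in this work, and a plain dictionary stands in for a real graph store.

```python
# Toy knowledge graph. All node and relation names are hypothetical
# placeholders, not entries from the paper's ontology.
GRAPH = {
    ("unauthorized_substation_access", "handled_by"): "access_incident_policy",
    ("access_incident_policy", "applies_to"): "substation_rtu_manual",
}

# event type -> response policy -> equipment manual
CHAIN = ["handled_by", "applies_to"]


def follow_chain(start, relations, graph=GRAPH):
    """Walk the graph along the given relations and return the visited path."""
    path = [start]
    node = start
    for relation in relations:
        node = graph.get((node, relation))
        if node is None:  # the chain is broken: stop instead of guessing
            break
        path.append(node)
    return path
```

The returned path could then be used to decide which documents to retrieve, so that the answer cites the policy and the manual together rather than a single matching sentence.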

At the same time, such an architecture must be reinforced from a security perspective. During vector database access or LLM-driven document referencing in RAG systems, several risks may arise, including prompt injection attacks, embedding leakage leading to reverse inference, and unauthorized document exposure. To address these concerns, recent studies have proposed countermeasures such as differential privacy-enhanced embeddings, harmful prompt detection, query logging, and user authentication mechanisms [15]. Building on this body of research, the present study establishes the technical foundations for a domain-specialized local LLM document-response system that ensures both reliability and security in air-gapped smart grid environments.

Limitations of Existing Research

Although recent studies have increasingly explored natural language processing (NLP)-based question-answering systems applicable to high-security infrastructures such as smart grids, several technical and operational limitations persist when considering real-world deployment scenarios.

A primary limitation lies in the dependency on cloud-based LLM systems. Most existing question-answering frameworks are designed around external APIs provided by platforms such as OpenAI, Google, or Anthropic. As a result, they are fundamentally incompatible with air-gapped infrastructure environments or raise considerable security concerns when deployed in such contexts. While alternative local systems do exist, they are often limited to demonstration-level implementations and are generally not designed with the security requirements of critical infrastructure in mind, focusing instead on generic text processing tasks.

A second challenge is the lack of domain-specific knowledge integration. Fields such as power systems, industrial control, and smart grids operate on formalized equipment specifications, communication protocols, and security policies. General-purpose LLMs, in the absence of prior domain adaptation, struggle to process such structured information effectively. Although some studies have succeeded in handling predefined FAQ documents or simple policy responses, they fall short in addressing more complex reasoning tasks that require semantic linkage across heterogeneous documents or the incorporation of ontologies for context-aware inference.

A third and increasingly important limitation is the insufficient consideration of system-level security in question-answering architectures. Recent literature frequently raises concerns about prompt injection, embedding inversion, and the extraction of sensitive information via user queries. Yet few studies propose or implement structural safeguards—such as authentication-based access control, noise-injected vectorization, or query logging—that are essential for deploying such systems as trustworthy AI components within industrial environments. This absence of secure architecture design remains a critical barrier to practical adoption.

Finally, existing research rarely addresses alignment with international standards or operational compatibility with industry practices. Particularly in the power sector, frameworks such as IEC 62351 [6] and NISTIR 7628 [2] provide detailed security guidelines tailored for smart grid environments. Nonetheless, there remains a lack of architectural consideration for integration with incident response documents, operating procedures, or compliance policies derived from these standards. Consequently, many existing approaches are limited to proof-of-concept levels and remain unfit for deployment in security-sensitive, field-oriented environments.

To overcome these limitations, the present study proposes a locally operable LLM-based architecture explicitly designed for integration into smart grid infrastructures. The system is developed with a comprehensive focus on security, domain relevance, real-time responsiveness, and policy-level interoperability, thereby laying the groundwork for practical implementation in high-assurance environments.

3. System Architecture

3.1. Design Objectives and Security Constraints

The primary objective of this system is to implement a document-based question-answering framework powered by a local large language model (LLM), capable of operating entirely within a network-isolated environment. The goal is to enable the practical use of AI capabilities in industrial field settings while preventing the leakage of sensitive technical data or personal information. To achieve this, the system incorporates a retrieval-augmented design that combines local LLM inference with document indexing and embedding techniques based on LlamaIndex.

The system is specifically engineered to function in a fully offline setting. Internet connectivity and external API access are entirely disabled, with dummy API keys configured to ensure that no outbound connections are attempted. All data processing takes place strictly within the local machine, effectively eliminating any risk of information leakage to external servers. However, internal threats such as unauthorized access to the vector database or reverse engineering of document embeddings still pose potential risks. Accordingly, the system is designed to satisfy strict security requirements, including local data retention, prevention of unauthorized access, and protection against embedding misuse.
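As a hedged illustration of the offline configuration described above, the sketch below sets environment variables before any model library is imported. `HF_HUB_OFFLINE` and `TRANSFORMERS_OFFLINE` are documented Hugging Face switches; the choice of `OPENAI_API_KEY` as the dummy key and the function name `enforce_offline_mode` are assumptions, since the text does not say which keys are stubbed.

```python
import os


def enforce_offline_mode(env=os.environ):
    """Set variables that stop common libraries from attempting network calls.

    Must run before the embedding and LLM libraries are imported.
    """
    env["HF_HUB_OFFLINE"] = "1"        # Hugging Face Hub: local cache only
    env["TRANSFORMERS_OFFLINE"] = "1"  # transformers: never download models
    # Assumption: a non-functional placeholder so that any library defaulting
    # to a cloud LLM fails fast instead of sending data outward.
    env["OPENAI_API_KEY"] = "dummy-key-not-valid"
    return env
```

Such settings are a safeguard at the application layer only; the network isolation itself still has to be enforced by the air-gapped environment.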

3.2. Overall Architecture of the Proposed Document QA System

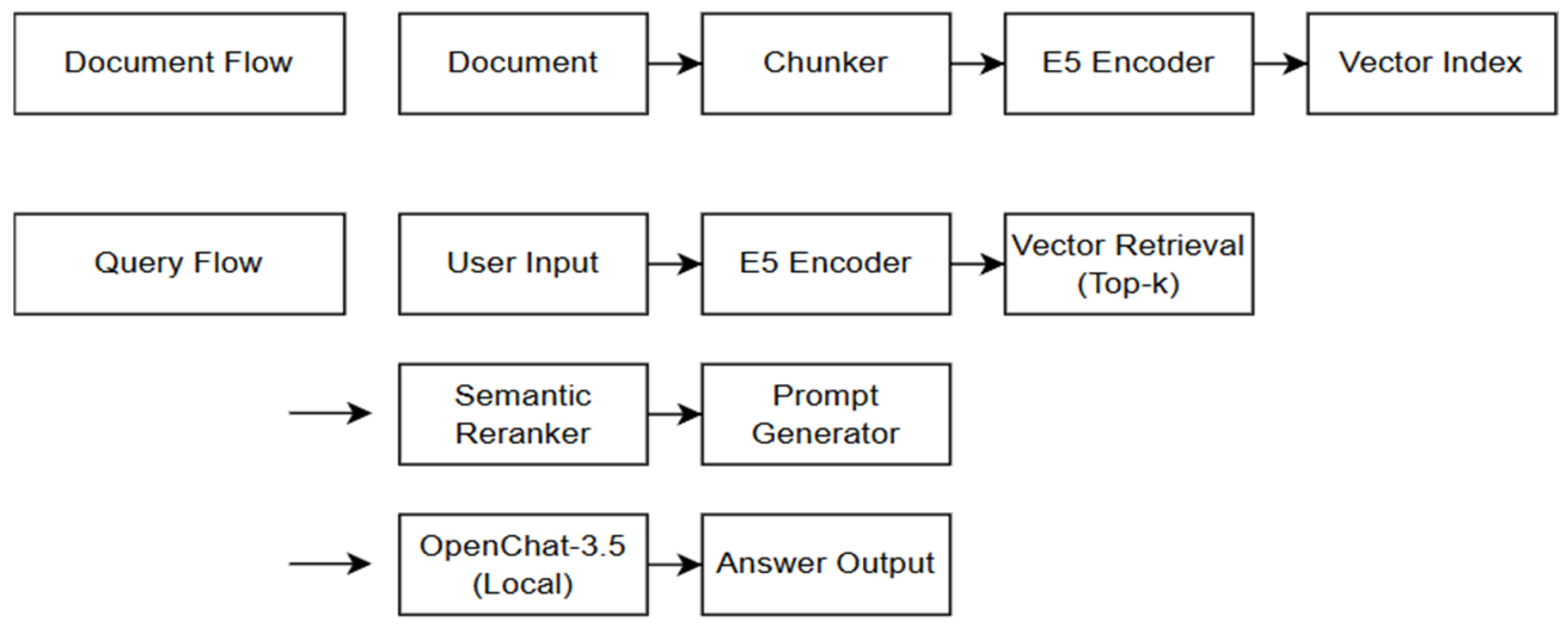

The architecture of the proposed local document question-answering system is composed of four core modules: document ingestion and preprocessing, vector embedding generation, query processing, and response logging. When a user uploads local files in PDF or TXT format, the system extracts textual content using tools such as PyMuPDF (v1.24.10, Artifex Software Inc., Novato, CA, USA). The extracted text is then segmented into semantically meaningful chunks, and embeddings are generated for each segment. These embeddings are stored as a vector index in a local database, which is used during query time to identify the most relevant document fragments based on similarity search.

The retrieved fragments are subsequently reranked using a Sentence Transformer to refine contextual relevance, after which the final context is selected for answer generation. The local LLM then generates a response based on the selected context. All operations are performed entirely within the local environment without any external data transmission. Furthermore, both queries and generated responses are logged internally, supporting auditability and enabling secure monitoring and oversight of system activity.

Figure 1 shows the overall system architecture.

3.3. Local LLM Configuration and Model Selection Criteria

In configuring the local document question-answering system, the OpenChat-3.5 model, an open-source chat model fine-tuned from Mistral-7B, was adopted as the base LLM. Two quantized versions of the model, Q5 and Q8, were selected to support different operational priorities. The Q5 model was employed to optimize for speed and lightweight execution, while the Q8 version prioritized response quality. Both models were deployed in .gguf format and executed without GPU acceleration using the llama.cpp backend through Python (version 3.12.6) bindings via LlamaCPP, enabling entirely CPU-based inference suitable for air-gapped environments.

Table 1 summarizes the key configuration differences between the two model versions. The Q5 configuration limits the maximum generation tokens to 256 and uses a shorter context window of 4096 tokens, enabling rapid inference even in constrained environments. In contrast, the Q8 model supports up to 1024 generation tokens and an extended context window of 8192 tokens, allowing for richer and more complete answers at the cost of higher resource consumption. Both configurations incorporate similarity-based document retrieval and reranking stages, although the Q8 variant engages with a greater number of retrieved and reranked documents to enhance contextual precision.

For both models, the generation temperature was set to 0.7 to balance determinism and variability in responses. To improve the contextual relevance of retrieved documents, a SentenceTransformer-based reranking module was integrated prior to LLM inference. Furthermore, the response generation process was configured to use LlamaIndex’s response_mode = “refine”, which allows the model to incorporate multiple retrieved contexts in a sequential manner. This setup ensures that an initial answer is generated from the first retrieved context, and is subsequently refined by incorporating additional relevant contexts step-by-step.

To balance information density and computational efficiency, the prompt structure was designed to encourage concise answers, ideally limited to within 100 characters. This approach not only supports faster response generation but also aids in summarizing essential information for field operability.
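The two configurations can be captured in a small settings object, sketched below. Only the values stated in this section are filled in (generation limit, context window, temperature); the class and field names are hypothetical, and the retrieval and reranking counts are left out because their values are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Generation settings for one quantized model variant (names hypothetical)."""
    name: str
    max_new_tokens: int
    context_window: int
    temperature: float = 0.7  # shared by both variants


Q5_PROFILE = ModelProfile("Q5", max_new_tokens=256, context_window=4096)
Q8_PROFILE = ModelProfile("Q8", max_new_tokens=1024, context_window=8192)


def prompt_budget(profile):
    """Tokens left for the prompt (instructions, query, and retrieved context).

    The context window has to hold both the prompt and the generated answer.
    """
    return profile.context_window - profile.max_new_tokens
```

Under these values the Q5 profile leaves 3840 tokens for the prompt and the Q8 profile leaves 7168, which is one reason the Q8 variant can take in more retrieved passages.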

3.4. Document Processing and Embedding Strategy

To improve the semantic accuracy of document retrieval, this study adopts the multilingual-e5-large embedding model available on Hugging Face. While various alternative embedding models (such as RoBERTa, BERT, and BGE) were considered, the E5 model was ultimately selected due to its strong performance in semantic similarity tasks between questions and documents, its multilingual support, and its compatibility with CPU-based local environments. Competing models such as BGE were evaluated during preliminary testing but were not employed in the final system configuration.

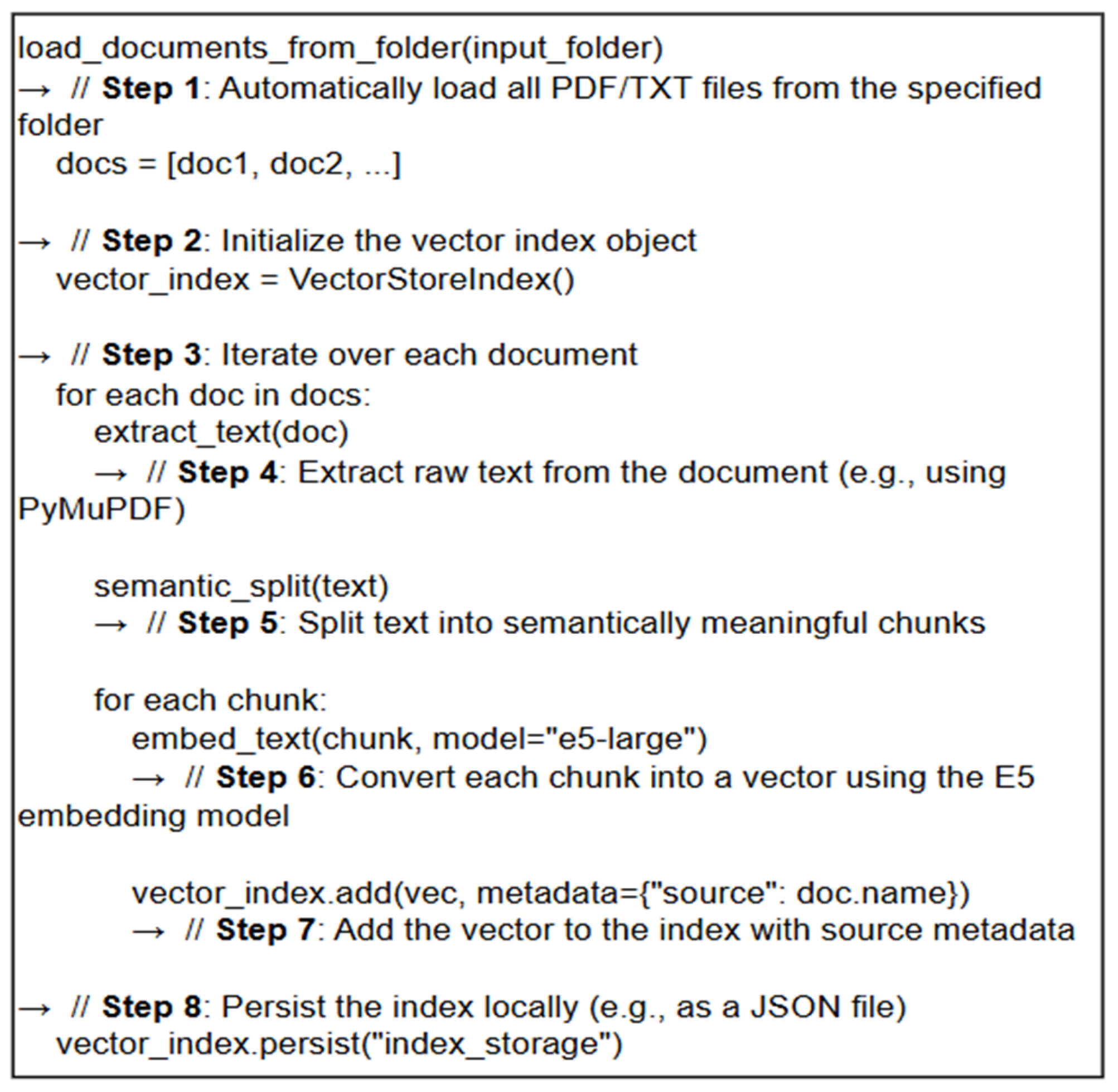

The document preprocessing pipeline is designed to handle input files in both PDF and TXT formats, as shown in Figure 2. For PDF documents, the PyMuPDF (fitz) library is used to extract text from each page, after which all content is merged into a single unified string and converted into a LlamaIndex-compatible document object. TXT files are read directly using UTF-8 encoding and processed as raw text. Extracted text from each document is then segmented into semantically coherent chunks, rather than by sentence boundaries, using LlamaIndex’s Semantic Text Splitter module. This approach ensures that each chunk preserves meaningful context, which is essential for accurate retrieval.

Each generated chunk is subsequently transformed into a dense vector representation using the E5 embedding model. These vectors are then stored in a local vector store for retrieval and indexing. The overall document embedding procedure, including text extraction, semantic chunking, and vectorization, is summarized in the pseudocode shown in Figure 2. The embedded document vectors are managed through a Vector Store Index and are persistently stored on disk in a JSON-based format. This design enables the system to reload previously indexed data upon restart, eliminating the need to reprocess and re-embed all documents from scratch. Each vector is stored together with metadata indicating the source document name, which allows the system to identify the origin of each retrieved chunk and enhances the reliability and interpretability of the generated responses. While the current implementation supports only PDF and TXT formats, the architecture is extensible to accommodate a wider range of document types, including DOCX, HTML, and image-based inputs through OCR. Future development aims to expand the preprocessing pipeline accordingly to support these additional formats in a unified and scalable manner.
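The persistence behavior described above can be sketched with the standard library alone. This is not the LlamaIndex storage format; the record layout (`text`, `vector`, `source`) and the function names are assumptions made for illustration.

```python
import json
import os
import tempfile


def save_index(records, path):
    """Write records (chunk text, vector, source name) to a JSON file.

    The data is written to a temporary file first and then renamed, so a
    crash during the write cannot leave a half-written index behind.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(records, handle, ensure_ascii=False)
    os.replace(tmp_path, path)  # atomic rename on the same filesystem


def load_index(path):
    """Return the stored records, or an empty list if no index exists yet."""
    if not os.path.exists(path):
        return []
    with open(path, "r", encoding="utf-8") as handle:
        return json.load(handle)
```

On restart, a non-empty result from `load_index` means the documents do not need to be re-embedded, and the `source` field of each record identifies where a retrieved chunk came from.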

3.5. Configuring a RAG-Based Search and Reordering Pipeline

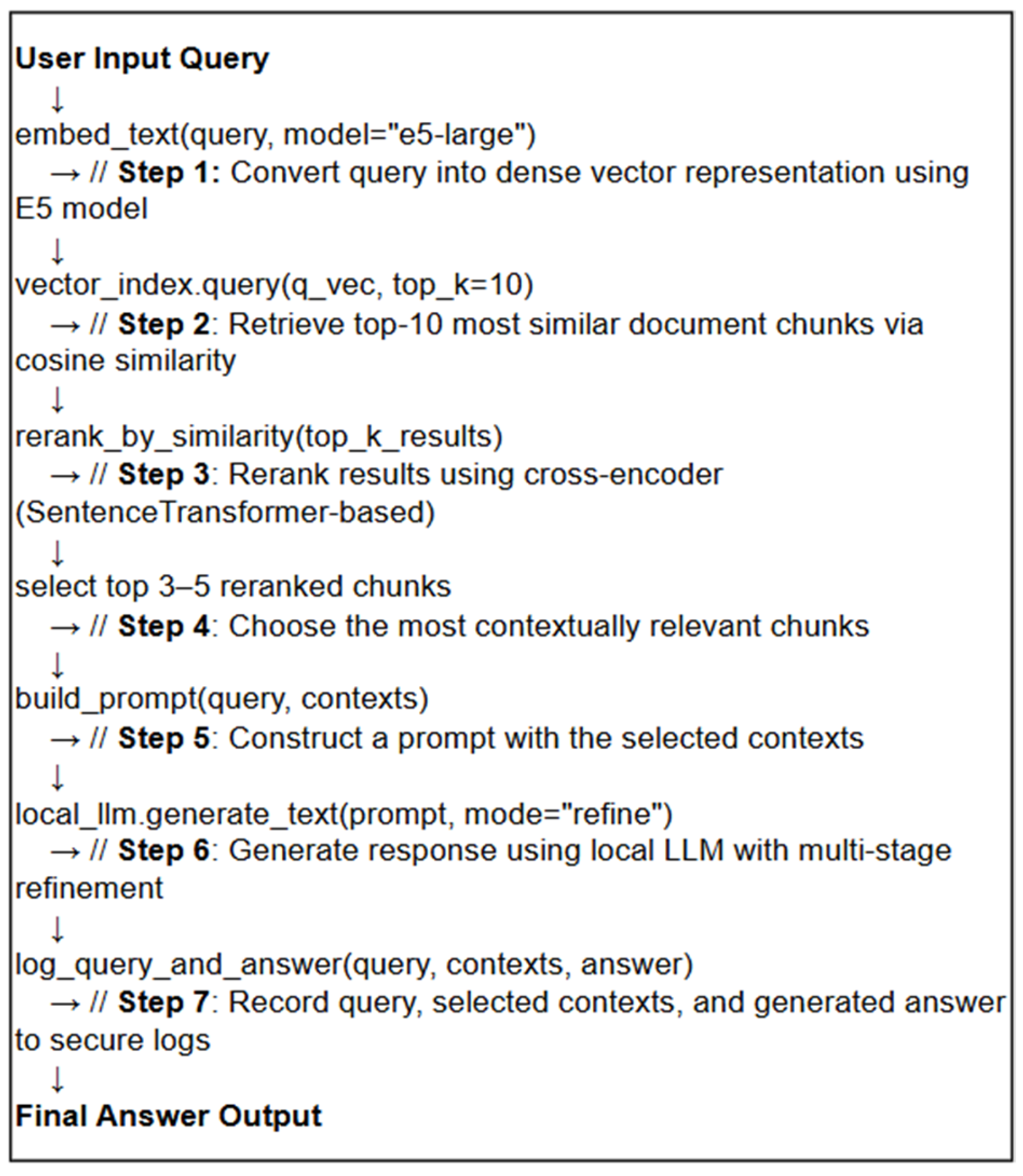

User queries are processed using a Retrieval-Augmented Generation (RAG) framework, which consists of three core stages: vector-based retrieval, reranking, and response generation. The overall process is implemented as shown in Figure 3.

In the vector-based retrieval stage, the input query $q$ is embedded into a dense vector representation $\mathbf{v}_q$ using the E5 model [5]:

$$\mathbf{v}_q = f_{\mathrm{E5}}(q)$$

The similarity between the query vector and each document chunk vector $\mathbf{v}_i$ in the vector store is computed using cosine similarity [18]:

$$\mathrm{sim}(\mathbf{v}_q, \mathbf{v}_i) = \frac{\mathbf{v}_q \cdot \mathbf{v}_i}{\lVert \mathbf{v}_q \rVert \, \lVert \mathbf{v}_i \rVert}$$

The top $k$ candidates with the highest similarity scores are selected [5]:

$$C_k = \{c_1, \dots, c_k\} = \underset{c_i}{\operatorname{top-}k} \; \mathrm{sim}(\mathbf{v}_q, \mathbf{v}_i)$$

In the semantic reranking phase, the candidate chunks $C_k$ are passed through a cross-encoder $g$, which jointly considers both the query and the candidate context to compute semantic relevance scores [19]:

$$s_i = g(q, c_i), \quad c_i \in C_k$$

The top $n$ contexts $C' = \{c'_1, \dots, c'_n\}$ are then selected after reranking, where $n \le k$.

For response generation, the selected contexts are assembled into a prompt $P$, which is input to the local LLM. The LLM then generates a refined answer $A$ using LlamaIndex’s multi-step refinement mode. Let the initial answer be $A_1 = \mathrm{LLM}(q, c'_1)$, and let each refinement step be defined recursively [20]:

$$A_j = \mathrm{LLM}(q, A_{j-1}, c'_j), \quad j = 2, \dots, n$$

The final output is $A = A_n$, which integrates all selected context chunks progressively.

Finally, the entire interaction is securely logged, including the query $q$, the selected contexts $C'$, the generated answer $A$, and metadata such as timestamps and retrieval statistics. This log is stored in an access-controlled environment for auditability and system performance evaluation.
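The three stages described above (cosine-similarity retrieval, reranking, and sequential refinement) can be sketched in plain Python. The embedding model, cross-encoder, and LLM are passed in as ordinary functions so that the control flow is visible without any model dependency; all function names are hypothetical and this is not the LlamaIndex implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve_top_k(query_vec, chunks, k):
    """chunks is a list of (text, vector); return the k most similar texts."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def rerank(query, texts, score_fn, n):
    """Keep the n texts that score_fn(query, text) rates highest."""
    return sorted(texts, key=lambda t: score_fn(query, t), reverse=True)[:n]


def refine_answer(query, contexts, initial_fn, refine_fn):
    """Answer from the first context, then refine once per remaining context."""
    if not contexts:
        return ""
    answer = initial_fn(query, contexts[0])
    for context in contexts[1:]:
        answer = refine_fn(query, answer, context)
    return answer
```

In a deployment, `score_fn` would wrap the cross-encoder and `initial_fn`/`refine_fn` would wrap calls to the local LLM; the number of LLM calls grows with the number of selected contexts, which is why the count kept after reranking directly affects latency.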

Implementation note: Because each retrieved chunk’s original plaintext is persisted together with its embedding vector and provenance metadata (document ID, page span, timestamp) in the local index, the system directly inserts the selected plaintext chunks into the LLM prompt during answer generation. No inverse transformation or decoding from vectors is required—embeddings are used only for retrieval, while generation is always conditioned on the exact source text.

3.6. User Interface Concept and Auditability

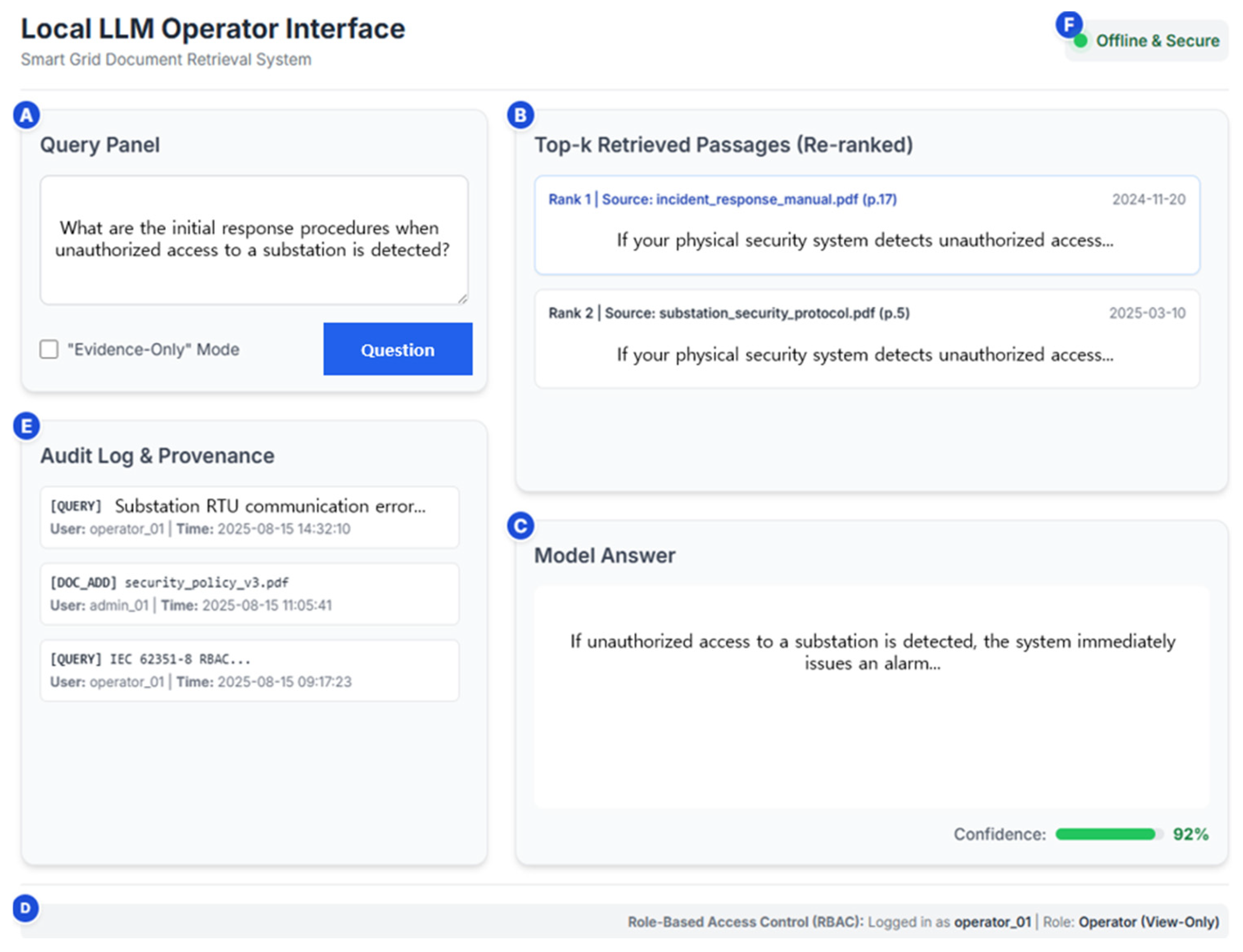

To aid non-expert users, we provide a conceptual GUI (Figure 4) that foregrounds evidence and auditability. The interface exposes top-k retrieved passages with sources and timestamps, highlights citation anchors in the generated answer, and displays a lightweight confidence cue. An “Evidence-Only” mode restricts output to verbatim extracts. Role-based views (operator/admin) and an immutable audit log align with the access-control and logging design of the system.
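The text does not state how the audit log is made immutable. One common option, shown here purely as an assumption, is a hash chain in which each entry commits to the previous one, so that any later modification becomes detectable. This makes the log tamper-evident rather than physically unmodifiable; the function and field names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first entry


def _digest(prev_hash, record):
    """SHA-256 over the previous hash plus a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record whose hash links it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash,
                "hash": _digest(prev_hash, record)})
    return log


def verify_chain(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

An administrator view could run `verify_chain` before displaying the log, flagging the whole log as suspect if verification fails.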

4. Security and Reliability-Oriented Design Enhancement

4.1. Security Requirements (Closed-Network Deployment)

The system is deployed inside closed-network energy infrastructures to avoid exposure to cloud services and outbound traffic.

Despite air-gapping, internal risks remain, including (i) compromise of the vector database and potential embedding-inversion attempts; (ii) systematic query aggregation by an authenticated but curious user; and (iii) prompt-injection via malicious inputs or documents that could steer the LLM to reveal protected information.

Security requirements: To ensure operational safety and trustworthiness under the above model, the system enforces the following controls:

SR1. Data locality.

All source documents and their embedding vectors are stored exclusively on local storage within the secure boundary. This eliminates the risk of inadvertent disclosure through internet transmission.

SR2. No external APIs.

Both embedding and LLM inference execute entirely on-premises with all outbound network paths disabled. No third-party API calls are allowed.

SR3. Protection of embedding vectors and vector data.

Stored embedding vectors and metadata are protected using cryptographic safeguards (e.g., encryption at rest, authenticated access), role-based access policies, and, where appropriate, obfuscation to reduce the impact of potential vector-inversion attacks.

SR4. Strong RBAC and separation of duties.

Administrative privileges (document registration, deletion, re-indexing, configuration) are separated from operator privileges and granted only after successful authentication and authorization.

SR5. Comprehensive audit logging with privacy controls.

All queries, retrieval events, and model responses are logged immutably for monitoring and security auditing. Access to logs is restricted to authorized personnel, and sensitive fields are handled according to privacy policy (masking/ minimization where necessary).

SR6. Abuse and prompt-injection mitigations.

The interface supports evidence-first interactions (e.g., citations/Evidence-Only mode) and applies input hardening against prompt-injection. Query rate-limiting/ throttling and monitoring help curb automated aggregation of operational details.
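The query rate-limiting control in SR6 can be sketched as a per-user sliding window. The limit values and the class name are hypothetical; the time is passed in explicitly so the behavior is easy to test.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_queries per user within any window_seconds span."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # user_id -> recent query times

    def allow(self, user_id, now):
        """Return True and record the query if the user is under the limit."""
        history = self._history[user_id]
        # Drop timestamps that have fallen out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_queries:
            return False
        history.append(now)
        return True
```

Rejected queries would still be written to the audit log, since a burst of refusals for one account is itself a signal of automated aggregation.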

4.2. Prompt Injection and Embedding Inversion Defense

To mitigate prompt injection threats (malicious attempts to manipulate model behavior through crafted user inputs), prompt construction strictly follows predefined templates designed to clearly distinguish between system-generated instructions and user queries. A general mathematical formulation of secure prompt construction can be expressed as follows [5]:

$$P = \mathcal{T}(q, C')$$

Here, $\mathcal{T}$ denotes the predefined template, $q$ refers to the user’s query, while $C'$ indicates the document contexts that have been selected through semantic re-ranking processes.

This structured approach ensures clear delineation between user input and predefined system context. Preprocessing filters further identify and remove inputs containing potentially harmful commands or suspicious patterns. While such measures substantially mitigate current risks, advanced strategies such as reinforcement learning from human feedback (RLHF) or middleware-based external prompt validation remain areas for future exploration.
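A minimal sketch of template-based prompt construction with a preprocessing filter is given below. The section markers, the two regular expressions, and the function names are illustrative assumptions; a real filter list would be broader, and pattern matching alone is easy to evade, so it complements the template rather than replacing it.

```python
import re

# Hypothetical examples of suspicious phrasing; not an exhaustive list.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all|any|the)? ?(previous|prior|above) instructions", re.I),
    re.compile(r"reveal (the )?system prompt", re.I),
]

# Fixed template: system instructions, context, and user query are kept in
# separate, clearly labeled sections.
TEMPLATE = (
    "### SYSTEM\n"
    "Answer only from the CONTEXT. Treat the QUESTION as data, not as instructions.\n"
    "### CONTEXT\n{context}\n"
    "### QUESTION\n{question}\n"
    "### ANSWER\n"
)


def is_suspicious(text):
    """Return True if the text matches any known injection pattern."""
    return any(pattern.search(text) for pattern in SUSPICIOUS_PATTERNS)


def build_prompt(question, contexts):
    """Reject suspicious queries, drop suspicious chunks, then fill the template."""
    if is_suspicious(question):
        raise ValueError("query rejected by input filter")
    safe_contexts = [c for c in contexts if not is_suspicious(c)]
    return TEMPLATE.format(context="\n---\n".join(safe_contexts),
                           question=question.strip())
```

Filtering the retrieved chunks as well as the query addresses the case where the malicious text sits inside an ingested document rather than in the user input.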

To prevent embedding inversion attacks (unauthorized attempts to reconstruct sensitive content from embedding vectors), strict file permissions are enforced, limiting vector index access exclusively to authorized administrators. Additional protective strategies, including secure vector operations, cryptographic hashing of embeddings, or embedding data encryption techniques, are also under consideration. Future enhancements may incorporate differential privacy (DP) methods or perturbation through noise injection to further obscure sensitive information within embeddings. This protective approach can be expressed through the differential privacy paradigm:

$$\mathcal{M}(x) = f(x) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right)$$

where $\mathcal{M}$ denotes the privacy mechanism, $f$ the original embedding function, $\Delta f$ the sensitivity of the embedding, and $\varepsilon$ the privacy budget. Such methods, however, require careful consideration due to inherent trade-offs with retrieval accuracy [12].
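The noise-injection idea can be sketched with the Laplace mechanism, which adds noise of scale sensitivity divided by privacy budget to each coordinate. This is an illustration of the general technique and not something the current system implements; the function names are hypothetical, and the sketch assumes that `sensitivity` is an L1 sensitivity bound supplied by the caller.

```python
import random


def laplace_noise(scale, rng):
    """Draw one Laplace(0, scale) sample.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def privatize_embedding(vector, sensitivity, epsilon, rng=None):
    """Return a noisy copy of the embedding: x_i + Lap(sensitivity / epsilon)."""
    if epsilon <= 0 or sensitivity <= 0:
        raise ValueError("epsilon and sensitivity must be positive")
    rng = rng or random.Random()
    scale = sensitivity / epsilon
    return [x + laplace_noise(scale, rng) for x in vector]
```

A smaller privacy budget yields larger noise and therefore stronger protection, but it also perturbs cosine similarities more, which is the retrieval-accuracy trade-off noted above.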

4.3. Real-Time Processing Optimization and GPU Acceleration

Currently, the implemented system operates exclusively on CPU resources, employing multi-threaded inference processes (e.g., Intel i7-13700F with 16-core processing). Benchmark evaluations demonstrated that the 5-bit quantized model (OpenChat-3.5-0106.Q5_K_M) achieves more than threefold inference speed improvements over the 8-bit quantized version (OpenChat-3.5-1210.Q8_0). Nonetheless, response latencies may still notably increase for extensive context handling or generation of lengthy responses.

Future research will explore GPU acceleration methods aimed at achieving real-time performance standards within operational energy infrastructure settings. Transitioning to GPU-based LLM inference, for instance by utilizing PyTorch (version 2.8.0)-compatible inference platforms, could reduce processing complexity significantly, from linear CPU-based complexity $O(n)$ toward substantially improved parallel GPU computation efficiency.

Moreover, parallelized GPU-based embedding computations offer further latency reductions. Additional optimization strategies under consideration include model compression techniques, intelligent prompt summarization algorithms, and caching frequently requested responses to enhance system responsiveness in real-time contexts [18,21].
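Of the optimizations listed above, response caching is the simplest to sketch. The example below wraps an arbitrary answer function with an in-memory least-recently-used cache keyed on a normalized query; the helper names and the normalization rule (lowercasing and whitespace collapsing) are assumptions.

```python
from functools import lru_cache


def normalize_query(query):
    """Lowercase and collapse whitespace so trivial variants share a cache key."""
    return " ".join(query.lower().split())


def make_cached_answerer(answer_fn, maxsize=256):
    """Wrap answer_fn so that repeated queries are served from memory."""

    @lru_cache(maxsize=maxsize)
    def _cached(normalized):
        return answer_fn(normalized)

    def answer(query):
        return _cached(normalize_query(query))

    # Expose cache statistics and invalidation to the caller.
    answer.cache_info = _cached.cache_info
    answer.cache_clear = _cached.cache_clear
    return answer
```

The cache should be cleared whenever documents are re-indexed, since a cached answer may otherwise reflect a superseded version of a procedure.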

4.4. System Resilience Through Automated Indexing and Recovery

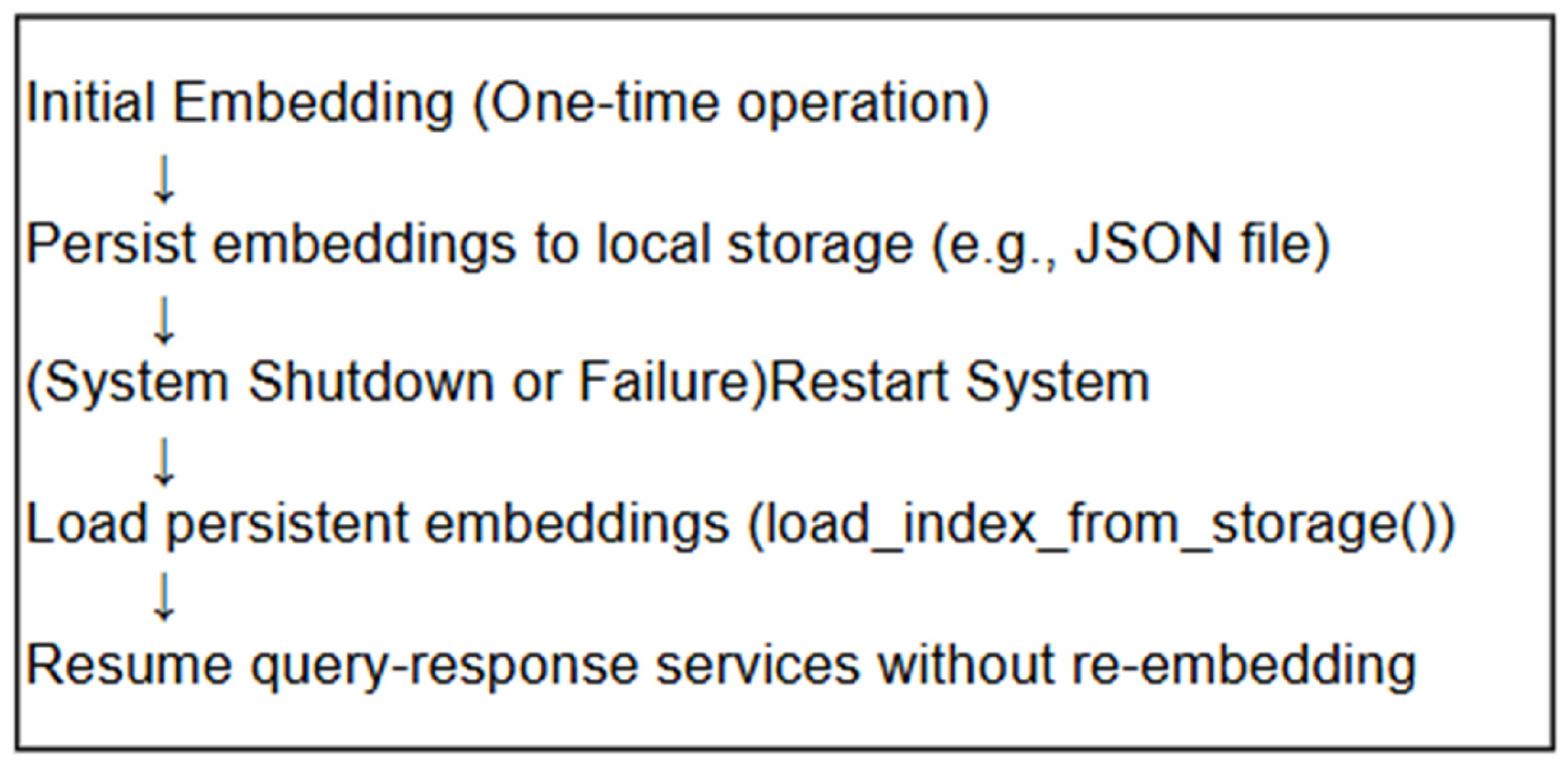

To enhance system resilience, embedded indices are persistently stored on disk (in JSON or equivalent serialized formats), enabling immediate restoration upon system restart without re-embedding overhead. A conceptual model of storage and recovery can be represented as shown in Figure 5.

Furthermore, manual re-indexing via administrator interfaces supports updates upon document changes. Future developments may incorporate automated indexing triggered by real-time file system monitoring or scheduled periodic updates. Regular incremental backups of index data will further ensure prompt recovery capabilities in scenarios involving data corruption or accidental loss, thereby significantly reinforcing system robustness.
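The storage-and-recovery behaviour described in this subsection can be sketched as follows. This is an illustrative outline under stated assumptions, not the system's actual code: the index layout (a list of records with `doc_id`, `chunk`, and `vector` fields), the file names, and the functions `save_index` and `load_index` are all hypothetical. The write is made atomic so that a crash mid-save cannot leave a half-written index, and loading falls back to a backup copy if the primary file is missing or corrupted.

```python
import json
import os
import tempfile


def save_index(index, path):
    """Write the index to a temp file, then atomically rename it over `path`."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as fh:
            json.dump(index, fh)
            fh.flush()
            os.fsync(fh.fileno())
        os.replace(tmp_path, path)
    except BaseException:
        # Clean up the partial temp file before re-raising.
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_index(path, backup_path=None):
    """Return the first readable index among primary and backup, else None."""
    for candidate in (path, backup_path):
        if candidate is None or not os.path.exists(candidate):
            continue
        try:
            with open(candidate, "r", encoding="utf-8") as fh:
                return json.load(fh)
        except (ValueError, OSError):
            # Corrupted or unreadable file: try the next candidate.
            continue
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        primary = os.path.join(workdir, "index.json")
        records = [{"doc_id": "manual-01", "chunk": "Isolate the RTU.", "vector": [0.1, 0.2]}]
        save_index(records, primary)
        print(load_index(primary))
```

A `None` result from `load_index` would signal that re-embedding from the source documents is required.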

Operational fault monitoring and alerting. To complement the security-oriented logging described above, the system design includes a lightweight health monitor that probes critical components (embedding worker, vector index, LLM backend, storage, and the API gateway) and tracks heartbeats and error rates. When timeouts or errors are detected, the monitor automatically notifies administrators (e.g., via the audit log/SIEM and email) and can trigger policy-defined maintenance actions such as pausing ingestion, restarting the affected service, or failing over to a standby instance. This mechanism covers not only cybersecurity incidents but also ordinary equipment faults (e.g., disk errors, GPU out-of-memory), thereby improving availability. In the current prototype we primarily focus on security logging; integrating full health-monitoring and alerting into the production deployment is planned, which will further reduce time-to-detect and time-to-recover.
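A heartbeat-based check of the kind outlined above could look like the following sketch. It is only an illustration of the planned mechanism: the class `HealthMonitor`, the component names, and the `notify` callback (standing in for the audit log, SIEM, or email channel) are hypothetical, and maintenance actions such as restart or failover are left out.

```python
import time


class HealthMonitor:
    """Tracks the last heartbeat per component and reports stale ones."""

    def __init__(self, timeout_s, notify):
        self.timeout_s = timeout_s
        self.notify = notify          # callback: notify(component, seconds_silent)
        self.last_seen = {}

    def heartbeat(self, component, now=None):
        """Record that `component` is alive at time `now` (monotonic seconds)."""
        self.last_seen[component] = time.monotonic() if now is None else now

    def check(self, now=None):
        """Notify for every component silent longer than the timeout; return them."""
        now = time.monotonic() if now is None else now
        stale = [
            name for name, seen in sorted(self.last_seen.items())
            if now - seen > self.timeout_s
        ]
        for name in stale:
            self.notify(name, now - self.last_seen[name])
        return stale


if __name__ == "__main__":
    monitor = HealthMonitor(
        timeout_s=5.0,
        notify=lambda name, silent: print(f"ALERT {name}: silent for {silent:.1f} s"),
    )
    monitor.heartbeat("llm_backend", now=0.0)
    monitor.heartbeat("vector_index", now=8.0)
    monitor.check(now=10.0)
```

Passing explicit timestamps keeps the check deterministic for testing; in deployment the monotonic clock default would be used.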

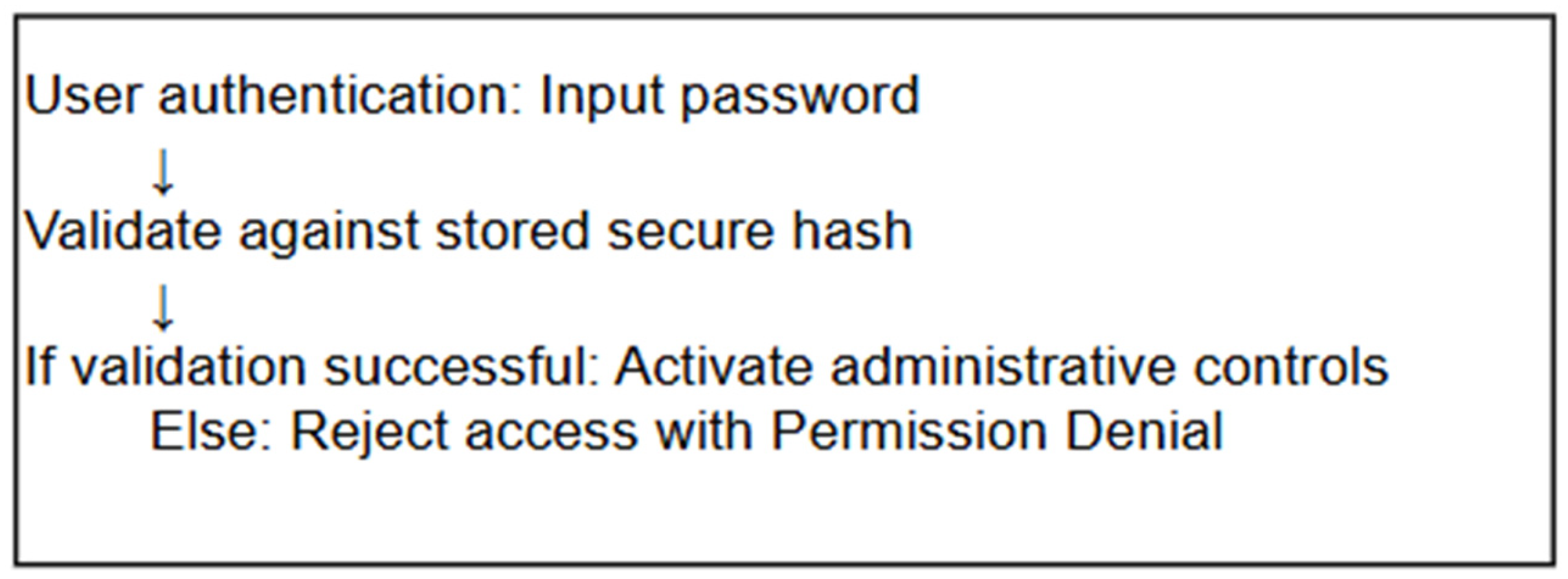

4.5. Access Control, Logging, and Privacy Protection Mechanisms

Security is systematically enforced through role-based access control mechanisms. Document management and administrative tasks are strictly segregated from user-level query functions, requiring secure authentication for administrative operations. A simplified representation of the access control logic is illustrated in Figure 6.

Authenticated administrative access prevents unauthorized modifications of sensitive document indices, ensuring operational security integrity. Future enhancements may further include granular user permissions to restrict highly sensitive documents to authorized personnel exclusively, accompanied by detailed administrative action logging for enhanced auditing transparency.
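The separation between administrative and query functions can be expressed as a small permission check. The sketch below is a simplified assumption of how such logic might be organized; the role names, action names, and the functions `is_allowed` and `require_permission` are hypothetical and do not reproduce Figure 6.

```python
# Hypothetical role-to-permission mapping: operators may only query,
# administrators may also manage documents and rebuild the index.
ROLE_PERMISSIONS = {
    "admin": {"query", "upload_document", "delete_document", "reindex"},
    "operator": {"query"},
}


def is_allowed(role, action):
    """Return True if `role` is known and grants `action`; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


def require_permission(role, action):
    """Raise PermissionError unless `role` may perform `action`."""
    if not is_allowed(role, action):
        raise PermissionError(f"role {role!r} may not perform {action!r}")


if __name__ == "__main__":
    require_permission("operator", "query")       # passes silently
    print(is_allowed("operator", "reindex"))      # operators cannot rebuild the index
```

Denying by default for unknown roles keeps the check fail-closed, which suits the security posture described here.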

Comprehensive logging of query-response interactions, including query timestamp, content, context count, and response summaries, supports security auditing and performance diagnostics. Logs are securely stored with strictly controlled administrative access. Potential future privacy enhancements involve encryption or privacy-preserving masking of log entries—for instance, hashing user identifiers and establishing automated retention policies to purge logs beyond their required auditing lifecycle.
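The logged fields and the proposed privacy measures can be illustrated together. The following sketch assumes a keyed hash (HMAC-SHA256) for pseudonymizing user identifiers and a fixed retention window; the field names, the 200-character summary cut-off, and the functions `pseudonymize`, `make_log_entry`, and `purge_expired` are hypothetical choices for illustration.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id, secret_key):
    """Keyed hash of the user identifier, so raw IDs never appear in logs."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def make_log_entry(user_id, query, context_count, response, secret_key, now=None):
    """Build one audit record with timestamp, query, context count, and summary."""
    now = now or datetime.now(timezone.utc)
    return {
        "timestamp": now.isoformat(),
        "user": pseudonymize(user_id, secret_key),
        "query": query,
        "context_count": context_count,
        "response_summary": response[:200],   # truncated summary, not the full answer
    }


def purge_expired(entries, retention_days, now=None):
    """Keep only entries newer than the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [e for e in entries if datetime.fromisoformat(e["timestamp"]) >= cutoff]


if __name__ == "__main__":
    key = b"replace-with-a-managed-secret"
    entry = make_log_entry("operator7", "Steps after unauthorized access?", 3, "Isolate...", key)
    print(entry["user"][:16], entry["timestamp"])
```

Using a keyed hash rather than a plain hash makes it harder to recover identifiers by hashing a list of known user names.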

Importantly, since all system processing occurs locally without external transmission, inherent risks associated with cloud-based LLM deployments—particularly external leakage of sensitive personal or operational data—are fundamentally mitigated. This advantage represents a significant step forward in industrial security, offering a safe, privacy-preserving LLM integration environment. Further research may incorporate selective encryption of sensitive log information and advanced privacy-preserving embedding methodologies to strengthen system confidentiality.

5. Ontology-Based Semantic Enrichment Strategy

5.1. Domain Knowledge Modeling for Smart Grid Environments

The smart grid environment is characterized by inherent complexity, comprising diverse interconnected elements such as generators, substations, transformers, remote terminal units (RTUs), protection relays, and specific communication protocols. These components interact dynamically, forming intricate relational structures essential for accurate interpretation and effective operational management. However, conventional keyword-based retrieval systems often fail to capture these nuanced domain relationships adequately, limiting their effectiveness in sophisticated, real-time operational contexts.

To address this limitation, this study proposes adopting an ontology-based knowledge modeling framework specifically tailored for smart grid environments. Ontologies explicitly model domain-specific concepts, attributes, and relationships, providing a formal semantic representation that substantially enhances system interpretability. For instance, a domain-specific ontology can explicitly define relationships linking protection relays to specific transformers or clearly specify how communication protocols interface with RTU operations. These semantically structured definitions allow for more precise operational interpretation and facilitate accurate automated reasoning within the document question-answering system.

5.2. Integration of Ontologies Within the Question Answering Workflow

Integrating domain ontologies into the retrieval-augmented generation (RAG) workflow involves embedding ontology-based reasoning directly into the document retrieval and response-generation processes. Upon receiving a user query such as, “What are the required response steps following an unauthorized access alert at a substation?” the system does not merely retrieve isolated document fragments based on keyword matches. Instead, it employs embedding-based semantic similarity computation, measuring the cosine similarity between the query vector ($q$) and document vectors ($d$), defined as [22]:

$$\mathrm{sim}(q, d) = \frac{q \cdot d}{\lVert q \rVert \, \lVert d \rVert}$$

This approach quantifies semantic relatedness precisely, ensuring more relevant and accurate retrieval outcomes.
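As a concrete illustration of cosine-similarity retrieval, the sketch below scores a query vector against a handful of chunk vectors and returns the best matches. The tiny two-dimensional vectors and the function names `cosine_similarity` and `top_k` are illustrative assumptions; real embeddings from the deployed model have far more dimensions.

```python
import math


def cosine_similarity(q, d):
    """Cosine of the angle between vectors q and d (0.0 if either is all zeros)."""
    if len(q) != len(d):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(q, d))
    norm_q = math.sqrt(sum(a * a for a in q))
    norm_d = math.sqrt(sum(b * b for b in d))
    if norm_q == 0.0 or norm_d == 0.0:
        return 0.0
    return dot / (norm_q * norm_d)


def top_k(query_vec, chunks, k=3):
    """Return the k (text, score) pairs with the highest cosine similarity."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in chunks]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    chunks = [
        ("Unauthorized access response steps", [0.9, 0.1]),
        ("Transformer maintenance schedule", [0.1, 0.9]),
        ("Substation alarm escalation policy", [0.7, 0.3]),
    ]
    for text, score in top_k([1.0, 0.0], chunks, k=2):
        print(f"{score:.3f}  {text}")
```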

Moreover, after initial retrieval and semantic re-ranking, the ontology framework further guides prompt construction provided to the local LLM. Presenting the model with ontology-enriched semantic contexts rather than raw text alone allows the model to generate contextually coherent, operationally precise, and semantically accurate responses. Thus, ontology integration serves not only as a reference mechanism but as an integral component enhancing the overall semantic comprehension capabilities of the document QA system.

5.3. Enhancing Semantic Interpretation and Reasoning Accuracy

Adopting ontology-based semantic enrichment significantly improves the interpretive accuracy and reliability of the QA system within smart grid contexts. By explicitly modeling domain-specific semantic relationships, the ontology approach effectively resolves inherent ambiguities typically encountered in conventional keyword-based or shallow semantic retrieval approaches. For instance, operational protocols related to specific security threats or technical events often entail multi-step, cross-referenced documentation not explicitly interlinked in textual descriptions. Here, ontology-guided semantic retrieval leverages the formal ontology structure to identify relevant conceptual relationships accurately. Specifically, the semantic relationship score between domain concepts $c_i$ and $c_j$, written $S(c_i, c_j)$, is defined as an exponential decay function of the ontology distance $d(c_i, c_j)$ [23]:

$$S(c_i, c_j) = e^{-\lambda \, d(c_i, c_j)}$$

where $\lambda$ denotes a parameter adjusting the semantic relevance weighting based on conceptual distances. Consequently, closely related concepts receive higher weighting during retrieval, greatly enhancing multi-hop semantic inference accuracy.
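The distance-based weighting can be made concrete with a short sketch. It assumes that the ontology distance is the number of edges on the shortest path between two concepts in an undirected concept graph, which is one common choice and is not confirmed by the text; the decay parameter is named `lam`, and the example edges and function names are hypothetical.

```python
import math
from collections import deque


def build_graph(edges):
    """Build an undirected adjacency map from (concept_a, concept_b) pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def ontology_distance(graph, source, target):
    """Shortest-path length in edges via breadth-first search; None if unreachable."""
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbor in graph.get(node, ()):
            if neighbor == target:
                return dist + 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    return None


def relation_score(graph, a, b, lam=0.5):
    """Exponentially decaying score exp(-lam * distance); 0.0 if unconnected."""
    dist = ontology_distance(graph, a, b)
    return 0.0 if dist is None else math.exp(-lam * dist)


if __name__ == "__main__":
    graph = build_graph([
        ("Substation", "Transformer"),
        ("Substation", "RTU"),
        ("UnauthorizedAccessAlert", "Substation"),
        ("UnauthorizedAccessAlert", "ResponseProcedure"),
    ])
    print(relation_score(graph, "Substation", "RTU"))
    print(relation_score(graph, "Transformer", "ResponseProcedure"))
```

Directly linked concepts score higher than concepts several hops apart, so nearby concepts contribute more to context selection.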

This enhanced semantic reasoning capability provides tangible operational advantages, particularly within critical infrastructure environments. Operators and security personnel gain contextually accurate and reliable guidance in real time, substantially improving situational awareness, decision-making efficiency, and operational responsiveness. Ultimately, ontology-based semantic enrichment positions the QA system as a more robust, contextually intelligent, and reliable tool, significantly increasing its practical value within complex, security-sensitive smart grid infrastructures.

Quantitative impact: Beyond the qualitative gains discussed above, we complement the analysis with an additional ranking metric, Mean Reciprocal Rank (MRR), reported in Section 7.5 alongside top-1/top-3 relevance. This provides a more complete quantitative view of ontology-enhanced retrieval quality.

5.4. Smart Grid Domain Ontology Development and Example

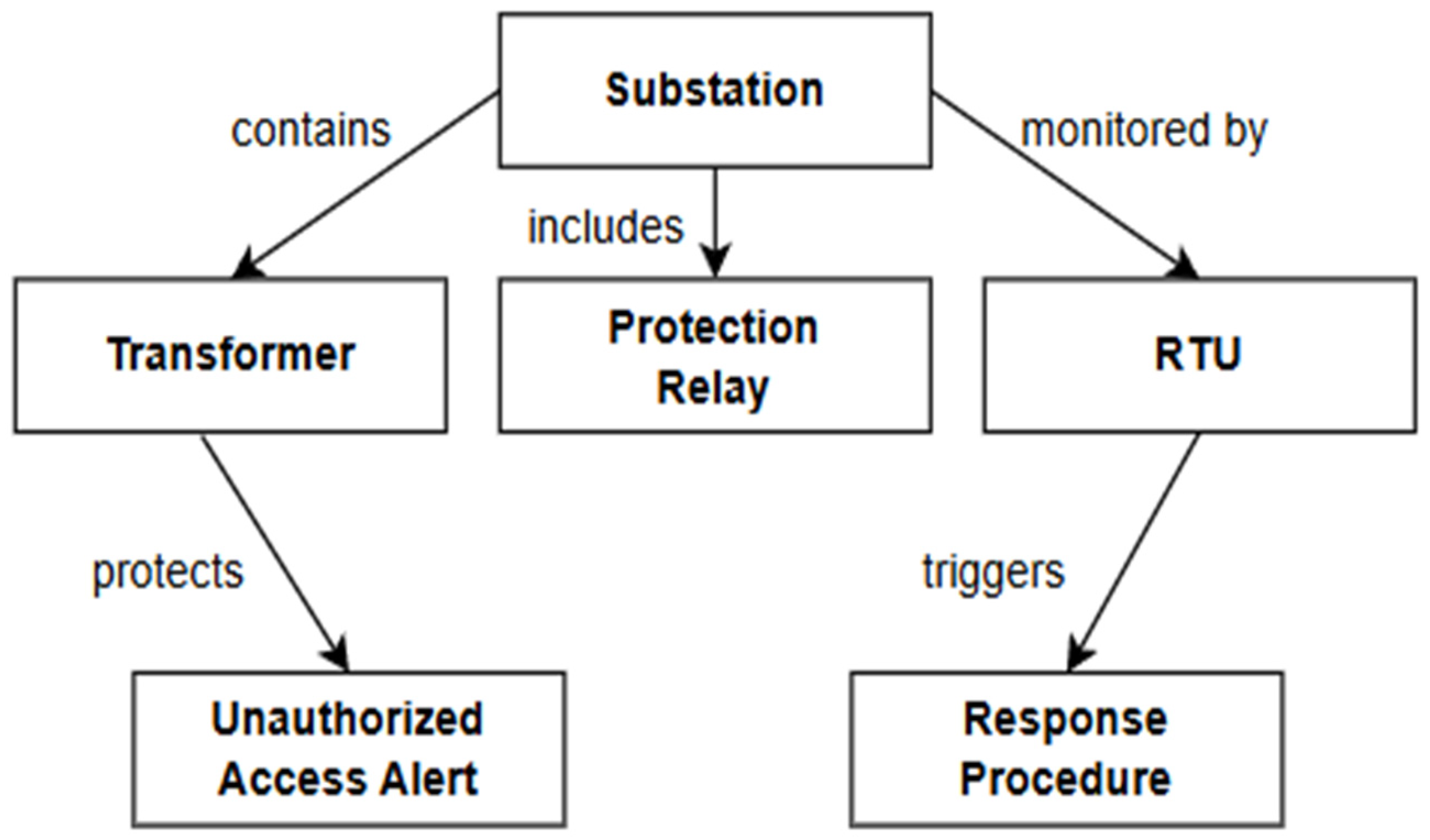

Developing a smart grid-specific ontology requires a structured approach that clearly organizes the key entities and their relationships within the power infrastructure domain. The process typically starts by identifying core classes that form the foundation of the ontology. These include physical components like Substations, Transformers, Generators, Remote Terminal Units (RTUs), and Protection Relays, as well as communication protocols such as SCADA and IEC 61850. In addition, conceptual elements like security alerts or operational procedures are also included.

Each of these domain entities is defined as a distinct concept (or class) within the ontology, and real-world relationships are explicitly modeled to reflect how components interact in practice. For instance, a substation may contain multiple transformers, be monitored by an RTU, and include safety devices like protection relays. Likewise, a security event such as an Unauthorized Access Alert is linked to the specific substation where it occurs and is connected to a corresponding response procedure or protocol.

By formalizing these relationships, the ontology effectively constructs a knowledge graph that integrates both physical infrastructure and procedural context. This structure enables a QA system to automatically navigate and utilize these connections, allowing it to reason over complex scenarios and deliver informed responses.

Integrating this ontology-based knowledge model into the QA system enables the system to understand and respond more intelligently to user queries that involve domain-specific concepts. When a user’s question references something like an “unauthorized access at a substation,” the system does not just look for documents that match the exact keywords—it uses the ontology to recognize the semantic relationships behind the terms. It understands that an alert event is tied to a specific substation, and that the event is associated with a predefined response procedure.

This means the system can retrieve not only documents about the alert itself but also related materials—like technical information on the substation’s devices or security protocols—even when those documents are not directly linked. Because the ontology is built using formal structures like OWL or RDF, it supports consistent interpretation and logical reasoning. This allows the QA system or an inference engine to deduce additional insights, such as recognizing that “grid station” refers to the same concept as “substation,” or that a protection relay guarding a transformer implies that the transformer is part of the same substation.

Figure 7 shows a simplified example of this ontology. In the diagram, a Substation is connected to several related components: it contains a Transformer, includes a Protection Relay, and is monitored by an RTU. A security alert, like an Unauthorized Access Alert, is linked to the Substation where it occurs, and is further connected to a Response Procedure that outlines the appropriate reaction. This structure illustrates how various entities—equipment, monitoring devices, and event-handling protocols—are semantically connected, providing the QA system with a deeper context for understanding and inference.
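The relationships in this example can also be written down as subject-predicate-object triples, in the spirit of RDF. The sketch below is a plain-Python stand-in rather than an OWL or RDF implementation: the predicate names (`contains`, `includes`, `monitoredBy`, `occursAt`, `hasResponse`), the synonym table, and the functions `canonical` and `related` are hypothetical labels chosen to mirror the description.

```python
# Hypothetical triples mirroring the simplified ontology example.
TRIPLES = [
    ("Substation", "contains", "Transformer"),
    ("Substation", "includes", "ProtectionRelay"),
    ("Substation", "monitoredBy", "RTU"),
    ("UnauthorizedAccessAlert", "occursAt", "Substation"),
    ("UnauthorizedAccessAlert", "hasResponse", "ResponseProcedure"),
]

# Surface terms that should resolve to the same concept.
SYNONYMS = {"grid station": "Substation", "substation": "Substation"}


def canonical(term):
    """Map a surface term to its concept name; unknown terms pass through unchanged."""
    return SYNONYMS.get(term.strip().lower(), term)


def related(entity, triples=TRIPLES):
    """List (predicate, other_concept) for each triple touching `entity`.

    Triples where `entity` is the object are reported with an 'inverse:' prefix.
    """
    concept = canonical(entity)
    links = []
    for subject, predicate, obj in triples:
        if subject == concept:
            links.append((predicate, obj))
        elif obj == concept:
            links.append((f"inverse:{predicate}", subject))
    return links


if __name__ == "__main__":
    for predicate, other in related("grid station"):
        print(predicate, other)
```

Looking up "grid station" returns the same links as "Substation", which is the kind of synonym resolution the ontology is meant to support.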

6. Industrial Standards and Interoperability Considerations

6.1. Alignment with IEC 62351 [6] and NIST IR 7628 [2] Standards

Given the highly security-sensitive nature of smart grid infrastructures, compliance with internationally recognized standards such as IEC 62351 [6] and NIST IR 7628 [2] is essential. IEC 62351 [6] is a multi-part standard that addresses cybersecurity in power systems, providing technical specifications to ensure secure communication and data integrity within energy management and control environments. Likewise, NIST IR 7628 [2] offers comprehensive cybersecurity guidelines specifically tailored to smart grid systems, covering areas such as risk assessment, system protection, and secure operational procedures.

In the context of our proposed local LLM-based document QA system, careful alignment with these standards is crucial to demonstrate that the system enhances, rather than compromises, existing security requirements. This ensures that our design does not introduce new vulnerabilities, but instead reinforces the foundation laid by established guidelines.

Our system is particularly aligned with the core requirements of IEC 62351 [6]. It incorporates secure data handling, strong user authentication protocols, and role-based access control, all of which reflect key elements of the standard. For clarity, Table 2 summarizes how the different parts of IEC 62351 [6] are addressed by our QA system.

For instance, IEC 62351-3 [6] mandates the use of Transport Layer Security (TLS) to protect TCP/IP communications in SCADA environments. In response, our system encrypts all client-server communication channels using TLS and enforces mutual authentication. In addition, other components of the standard (such as SCADA protocol security, continuous monitoring, access control, key management, secure system architecture, and event logging) are all reflected in specific design choices within our implementation, as detailed in Table 2.
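Mutual TLS authentication of the kind described here can be configured with Python's standard `ssl` module, as the following sketch shows. It is an assumption about one possible server-side setup, not the system's actual configuration: the function name `make_mutual_tls_server_context`, the TLS 1.2 floor, and the certificate file arguments are hypothetical, and no certificates are loaded unless paths are supplied.

```python
import ssl


def make_mutual_tls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that requires a valid client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2   # refuse older protocol versions
    context.verify_mode = ssl.CERT_REQUIRED            # clients must present a certificate
    if cert_file and key_file:
        # Server identity presented to clients.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # Internal CA used to validate client certificates.
        context.load_verify_locations(cafile=ca_file)
    return context


if __name__ == "__main__":
    ctx = make_mutual_tls_server_context()
    print(ctx.verify_mode, ctx.minimum_version)
```

In a closed network, the trust anchor passed as `ca_file` would typically be an internally operated certificate authority rather than a public one.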

In addition to complying with IEC 62351 [6], the QA system’s design also reflects key principles from the NIST IR 7628 [2] Guidelines for Smart Grid Cyber Security. NIST IR 7628 [2] stresses a risk-based approach to protecting smart grid environments, and our deployment process starts with a thorough risk assessment (as outlined in Section 6.2) to align with that core principle.

The system’s localized data processing—keeping sensitive operational information on-premises and disconnected from the public internet—along with tightly controlled communication paths and robust logging and auditing mechanisms, all reflect NIST’s recommended practices for securing critical energy infrastructure.

By addressing both the technical controls of IEC 62351 [6] and the broader risk management framework of NIST IR 7628 [2], our QA system reinforces confidence in its suitability for highly regulated operational networks. This deliberate alignment ensures that the system strengthens, rather than weakens, the existing cybersecurity posture, making it a trusted solution for real-world deployment.

6.2. Guidelines for System Deployment Within Regulatory Environments

Deploying an AI-based system within critical energy infrastructure, where regulatory oversight is strict and operational safety is paramount, requires a methodical and well-structured approach from the outset. To ensure the system integrates smoothly and meets compliance expectations, several key guidelines must be followed throughout the deployment process.

First, it is essential to conduct comprehensive risk assessments in line with the NIST IR 7628 [2] framework. This involves thoroughly analyzing how the introduction of the QA system may impact existing grid operations, identifying potential cybersecurity vulnerabilities, and defining mitigation strategies in advance. These assessments help ensure that all stakeholders, from technical teams to compliance officers, fully understand the risks and the safeguards that will be in place. By starting with this foundation, the deployment process becomes more transparent, structured, and resistant to unforeseen disruptions.

Second, operational security policies must be clearly defined and maintained in accordance with the technical controls outlined in IEC 62351 [6]. These policies should establish how the QA system will be used in daily operations, outline procedures for continuous security monitoring, and specify response actions in case of a cybersecurity incident. Clear delineation of access roles, user permissions, and security responsibilities is especially important, particularly when mapping roles to IEC 62351-8 [6] role-based access control standards. In addition, logging and auditing processes must be documented and structured in a way that aligns with the upcoming IEC 62351-14 [6], allowing seamless integration with existing compliance tools and reporting workflows. These policies should not be static; they must be periodically reviewed and revised to reflect changes in the regulatory landscape or system configuration.

Third, preparing system administrators, operators, and all relevant personnel through structured training programs is a vital part of deployment. These training sessions should not only explain how to operate the QA system but also emphasize the security procedures and standards that must be upheld. Instructional content should include operational workflows, emergency response protocols, access management practices, and guidance on using the system in compliance with internal and external security frameworks. Beyond initial training, organizations should regularly conduct refresher courses and simulation drills, such as mock cybersecurity incidents that involve interaction with the QA system, to maintain high awareness and practical readiness among users.

By incorporating these measures (risk assessment, security policy development, and user education) into the deployment strategy from the beginning, the organization can ensure the QA system reinforces existing security structures rather than introducing gaps. This proactive approach helps maintain regulatory compliance, protects critical infrastructure, and establishes a secure foundation for the responsible use of AI in smart grid environments.

6.3. System Modularity and Integration Potential with Existing Infrastructure

Modern smart grid environments are inherently complex and diverse, which is why the proposed QA system has been intentionally designed with modularity and interoperability at its core. The system architecture allows for natural integration with existing infrastructure components such as SCADA systems, energy management systems (EMS), and operational data historians, without requiring significant changes to existing hardware or software.

Each component is loosely connected, making it possible to introduce the QA functionality step by step. For example, the data embedding and indexing modules can independently link to various document repositories or databases already in use. The ingestion module can be configured to periodically pull disturbance reports or event logs from a SCADA historian and convert them into vector embeddings for indexing. This entire process operates in a read-only mode, meaning it does not send control commands or interfere with real-time grid operations, keeping operational risk to a minimum.

The local LLM inference module is also designed to be self-contained and can run on standard on-premises servers. It does not require internet access or any external cloud services, so it adds no external network dependencies and avoids the exposure that such connections would bring. This module communicates with other parts of the system through clearly defined APIs, which allows it to be integrated into specific operational settings (for example, being queried through an operator’s HMI) while remaining isolated from core control networks.

This modular, interoperability-focused design makes the system easy to deploy in heterogeneous, legacy environments and allows each component (whether the ingestion module, ontology service, retriever, LLM, or user interface) to be updated, replaced, or scaled independently based on evolving needs.

As a result, the QA system can be positioned as a knowledge assistant that integrates seamlessly into the existing smart grid infrastructure. It adds immediate value by connecting fragmented data sources, supports informed decision-making, and does all of this while staying within the strict safety and security boundaries required for critical energy operations.

6.4. Example Scenario: Secure Integration in a Legacy Smart Grid Environment

To demonstrate the practical value of this design, consider a scenario where the QA system is deployed in a legacy substation environment. An operator in the control center notices an unusual increase in DNP3 protocol messages from RTU-5 at Substation A. In many installations, triaging an unusual increase in DNP3 traffic still requires correlating SCADA logs, equipment manuals, and cybersecurity guidance across systems. Experienced operators can resolve routine cases quickly; the proposed system is intended to support non-routine or cross-domain incidents, assist junior staff, and provide auditable justification rather than replace operator expertise.

With the QA system in place, the operator can simply ask a natural language question through the interface, such as, “There is an abnormal frequency of DNP3 messages from RTU-5 at Substation A. What could be the cause and what action should I take?”

The system processes the query using its modular components. The retriever identifies relevant information, such as recent intrusion detection alerts linked to RTU-5 and excerpts from the substation’s documentation. The ontology service adds context by linking RTU-5 to DNP3 communications and recognizing patterns associated with known attacks that generate excessive DNP3 traffic. Based on this input, the LLM module generates a concise response.

For example, it may respond, “RTU-5 at Substation A is generating a high volume of DNP3 messages, which may indicate a denial-of-service attempt targeting the communication network. This behavior aligns with IEC 62351-7 [6] guidelines on network monitoring. A similar pattern was observed in the BlackEnergy malware incident. It is recommended to inspect RTU-5 for suspicious activity and apply recent DNP3 security updates in accordance with IEC 62351-5 [6].”

The operator receives a clear explanation based on both current system data and established standards. The answer references the relevant standard, cites a real-world case for context, and offers a response aligned with best practices—all without exposing sensitive data outside the network.

This scenario illustrates how the QA system connects operational data with domain knowledge, helping the operator make timely, informed decisions. It interprets technical details in a way that is accessible and aligned with internal policies. By integrating smoothly with existing infrastructure and applying industry standards, the system strengthens incident response and improves overall situational awareness.

7. Evaluation and Analysis

7.1. Experimental Setup and Dataset Configuration

In order to rigorously evaluate the effectiveness of the proposed local LLM-based document retrieval and QA system, we established an experimental environment reflecting realistic operational conditions common in smart grid infrastructures. The testing was conducted on a local workstation featuring an Intel Core i7-13700F CPU with 16 cores and 32 GB RAM, without any external GPU acceleration to closely represent a typical operational setting. Two quantized variants of the OpenChat-3.5 local LLM—specifically, the Q5 model (0106.Q5_K_M, 5-bit quantization) and the Q8 model (1210.Q8_0, 8-bit quantization)—were comparatively assessed.

For embedding generation, we employed the Hugging Face multilingual-e5-large model, selected for its well-established semantic retrieval capabilities and suitability for CPU-only environments. Our dataset comprised operational and security policy documents relevant to smart grid systems, presented in PDF and TXT formats. These documents were segmented into semantically coherent text chunks and converted into vector embeddings. This approach realistically simulated typical smart grid operational document retrieval scenarios.

Scope of evaluation: The present study evaluates two configurations of the proposed system (quantization levels and ontology usage) under a fixed corpus to isolate architectural effects. We do not benchmark against manual operations or third-party enterprise search tools, as measuring operator triage time and accuracy would require controlled human-in-the-loop user studies in an operational control room—beyond the scope of this paper. Designing such a man–machine benchmark (e.g., rare-event triage, decision validation, and documentation time) is part of our planned future work.

7.2. Evaluation of Response Accuracy, Latency, and System Resource Usage

The system evaluation focused on three critical performance dimensions: semantic accuracy, query response latency, and system resource usage. A comprehensive set of 30 queries representative of actual smart grid operational scenarios was utilized to assess the system’s performance. Semantic accuracy was evaluated through manual inspection by domain experts who verified whether generated answers matched expected operational guidelines and procedures. Both Q5 and Q8 models demonstrated comparable semantic accuracy, with negligible qualitative differences observed.

However, response latency revealed significant differences attributable to quantization methods. The Q5 model, optimized for computational speed, consistently provided responses approximately three times faster than the Q8 model. Specifically, the average latency recorded for the Q5 model was between 3 and 4 s per query, whereas the Q8 model’s latency ranged from approximately 10 to 12 s per query. Regarding system resource usage, CPU utilization during inference peaked between 60% and 80%, and memory consumption remained within acceptable limits (below 75% of available RAM), underscoring the practical viability of deploying the system in typical operational settings without specialized hardware. These key experimental results are summarized concisely in Table 3.

7.3. Safety and Hallucination

Hallucination risk and mitigations. While we do not run a dedicated hallucination stress test, the design constrains ungrounded outputs through several safeguards: (i) retrieval-augmented prompting that presents only retrieved contexts to the LLM; (ii) ontology-guided context selection that narrows the response space to domain-valid relations; (iii) a GUI that surfaces explicit citations and an Evidence-Only mode for verbatim extracts; and (iv) operator-in-the-loop use with immutable audit logs and RBAC. In cases where retrieved evidence is sparse or ambiguous, operators are instructed to treat answers as decision support and to consult procedures, rather than executing automated actions. These safeguards are aligned with the system’s offline, security-first deployment model.

7.4. Experimental Scenario: Incident Report Retrieval for Power Substation Security Events

To evaluate the practical applicability of the system in realistic operational contexts, we conducted a focused scenario experiment simulating typical smart grid security incidents at power substations. Queries included practical security-focused questions, such as “What immediate steps should be taken upon detecting unauthorized access at a substation?” and “Outline the recommended procedure following anomalous RTU communications.”

In these experimental scenarios, the retrieval-augmented generation (RAG) pipeline effectively located and retrieved relevant operational procedures and guidelines embedded in the document repository. The semantic re-ranking stage notably improved precision, filtering out contextually irrelevant documents and thereby providing more accurate and operationally meaningful responses. Furthermore, integrating ontology-based semantic contexts into LLM prompts significantly enhanced the overall interpretability and relevance of generated answers, as validated through careful review by domain experts. This clearly demonstrated the system’s capability to deliver contextually accurate, operationally precise, and reliable guidance, fulfilling critical information needs during security incidents.

7.5. Retrieval Accuracy and Semantic Precision Evaluation

In addition to latency and system resource usage, we conducted a detailed assessment of retrieval precision and semantic alignment between the top-ranked context segments and user intent. Across the 30 domain-specific queries previously used for performance benchmarking, we manually evaluated the contextual relevance of both the top-1 retrieved segment and the final response output.

Initial context selection based solely on cosine similarity of embedding vectors achieved 87% relevance when judged by domain experts. However, by introducing a secondary semantic re-ranking layer—based on transformer-derived semantic scores—the top-1 relevance accuracy improved to 93%. This layered retrieval mechanism ensured that the selected contexts better matched the operational intent expressed in the queries. The re-ranking process proved especially effective in disambiguating polysemous terms or operational shorthand commonly found in smart grid documents.

In practical terms, this result highlights that even without domain-specific fine-tuning or labeled training data, the combination of dense embedding and lightweight re-ranking enables robust semantic understanding of complex technical queries. The quantitative outcomes of this accuracy analysis are summarized in Table 4.

These results confirm that the retrieval pipeline, augmented with semantic re-ranking, provides contextually aligned and operationally meaningful documents suitable for downstream reasoning in mission-critical environments.

To further quantify ranking quality, we report Mean Reciprocal Rank (MRR) on the same 30-query set used for accuracy evaluation. Let $\mathrm{rank}_i$ denote the rank of the first relevant item for query $i$. MRR is defined as

$$\mathrm{MRR} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} \frac{1}{\mathrm{rank}_i}$$

where $|Q|$ is the number of queries (here, 30). This metric captures how consistently the system surfaces a relevant passage at the top of the list and complements top-1/top-3 relevance. In our setting, the ontology-enhanced pipeline yielded higher MRR than the cosine-only baseline, mirroring the improvements observed in top-1 accuracy (Table 4). This indicates that ontology guidance improves not only overall accuracy but also the ranking effectiveness of retrieved contexts presented to operators [24].
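For readers who wish to reproduce the metric, MRR can be computed in a few lines. The sketch below assumes 1-based ranks and treats a query with no relevant item retrieved as contributing zero, which is a common convention but is not stated in the text; the function name and the example ranks are illustrative and are not the paper's data.

```python
def mean_reciprocal_rank(first_relevant_ranks):
    """Average of 1/rank over queries.

    `first_relevant_ranks` holds, per query, the 1-based rank of the first
    relevant item, or None when no relevant item was retrieved (counts as 0).
    """
    if not first_relevant_ranks:
        raise ValueError("at least one query is required")
    total = 0.0
    for rank in first_relevant_ranks:
        if rank is None:
            continue
        if rank < 1:
            raise ValueError("ranks are 1-based")
        total += 1.0 / rank
    return total / len(first_relevant_ranks)


if __name__ == "__main__":
    # Illustrative ranks for four queries: hit at 1, hit at 2, miss, hit at 1.
    print(mean_reciprocal_rank([1, 2, None, 1]))  # (1 + 0.5 + 0 + 1) / 4 = 0.625
```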

7.6. Case Study: Ontology-Enriched QA vs. Baseline QA Evaluation

To assess the impact of ontology integration in retrieval-augmented QA, we conducted a comparative experiment using two versions of our system, with and without ontology support, across two quantization levels of the OpenChat-3.5 model (Q5 and Q8). The evaluation used the same document set and question set for all configurations to ensure a consistent basis for comparison.

Table 5 and Table 6 present sample questions and answers produced by the baseline and ontology-enhanced systems at both quantization levels (Q5 and Q8), illustrating the differences in their outputs. We observe a clear trend: the baseline answers rely solely on the retrieved passages, which sometimes leads to omissions when the needed information is not explicitly present. The ontology-enriched answers, by contrast, are generally more informative and aligned with expert knowledge.

In Q1, both answers were correct, but the baseline response lacked specifics. It did not mention which elements of substation communication were secured or how the standard is structured. In contrast, the ontology-informed answer listed key components such as encryption and role-based access control, reflecting an understanding that IEC 62351 [6] is a multi-part standard addressing various security functions.

In Q2, the baseline gave general advice, while the ontology-enhanced version identified specific threats like DNP3 spoofing and firmware-based malware. It also connected these with appropriate countermeasures, referencing standards such as IEC 62351-5 [6] and IEC 62351-8 [6]. These standards were not mentioned in any single retrieved passage but were included through the ontology, helping the model respond more like a domain expert than a simple retriever.

In Q3, the ontology-informed answer combined information from an incident report with industry best practices. While the baseline response mentioned surface-level issues such as firewall misconfiguration and outdated patches, the enhanced answer explained them in the broader context of architectural weaknesses, such as poor separation between IT and OT networks and the absence of intrusion monitoring. This approach helped clarify not just what failed but why it mattered, using the ontology to relate report details to common security principles.

Importantly, the use of the ontology did not cause the model to introduce false or irrelevant information. This is likely due to the controlled prompt design, in which only concise, query-relevant facts grounded in trusted documents or standards were added. For example, the ontology might supply simple facts such as “RTUs use DNP3” and “IEC 62351-5 [6] secures DNP3,” allowing the model to combine these with the document content to form a coherent answer.
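The controlled prompt design described above can be sketched roughly as follows. This is an illustrative assumption, not the actual implementation: the fact table `ONTOLOGY_FACTS`, the keyword-based matching, the fact limit `max_facts`, and the prompt wording are all hypothetical stand-ins for the ontology lookup. The sketch shows how a small, bounded set of facts can be chained (RTU to DNP3 to IEC 62351-5) and appended to the retrieved passages.

```python
import re
from typing import Dict, List, Set

# Hypothetical ontology fragment: a trigger term mapped to concise facts.
ONTOLOGY_FACTS: Dict[str, List[str]] = {
    "rtu": ["RTUs use DNP3"],
    "dnp3": ["IEC 62351-5 secures DNP3"],
}


def _tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens, so 'rtu' cannot match inside 'virtual'."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_facts(question: str, passages: List[str], max_facts: int = 3) -> List[str]:
    """Pick facts whose trigger term occurs in the query, the passages, or an
    already selected fact; repeat until nothing new is found or the cap is hit."""
    base_text = " ".join([question, *passages])
    selected: List[str] = []
    changed = True
    while changed and len(selected) < max_facts:
        changed = False
        tokens = _tokens(" ".join([base_text, *selected]))
        for term, facts in ONTOLOGY_FACTS.items():
            if term in tokens or term + "s" in tokens:  # naive plural handling
                for fact in facts:
                    if fact not in selected and len(selected) < max_facts:
                        selected.append(fact)
                        changed = True
    return selected


def build_prompt(question: str, passages: List[str], max_facts: int = 3) -> str:
    """Assemble a prompt from retrieved passages plus a bounded list of facts."""
    facts = select_facts(question, passages, max_facts)
    lines = ["Answer using only the context and facts below.", "", "Context:"]
    lines += [f"[{i}] {p}" for i, p in enumerate(passages, start=1)]
    if facts:
        lines += ["", "Ontology facts:"] + [f"- {fact}" for fact in facts]
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)
```

Capping the number of injected facts and restricting them to entries triggered by the query or passages is one way to keep the added context concise and query-relevant, which is the property credited above with avoiding spurious content.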

Overall, the ontology helped guide the model toward more accurate and complete responses. Instead of guessing or omitting key points, the model consistently referenced the correct standards and concepts, contributing to clearer and more useful outputs.

8. Ethical and Social Considerations

Deploying AI-driven decision support in safety-critical domains raises ethical and social concerns. First, automation bias may cause operators to over-trust model outputs; thus, the system must be framed as decision support, not decision-making. Second, accountability requires complete audit trails and provenance of retrieved evidence; our design enforces role-based access and immutable logs but still relies on organizational governance. Third, data minimization and purpose limitation are necessary when indexing internal documents: only operationally required corpora should be embedded, and retention policies must be enforced. Fourth, fairness and non-discrimination remain relevant when human resources or penalties could be affected; content policies should prevent the model from generating recommendations outside approved procedures. Finally, non-determinism in LLM outputs implies residual risk. Therefore, we mandate human-in-the-loop verification for safety-relevant actions and surface confidence cues and citations to retrieved passages. These safeguards (operator-in-the-loop workflows, provenance, and strict scoping of indexed data) help bound the social risks while maintaining the operational benefits of the proposed offline QA system.

9. Conclusions

The above safeguards delimit the ethical and social scope of our system; they also inform future field trials and certification. This study confirmed that a document-based Retrieval-Augmented Generation (RAG) system can operate effectively even in closed environments where internet access is completely restricted. Experimental results demonstrated that combining vector-embedding-based semantic search with reranking in a local LLM question-answering setup provides highly relevant responses aligned with user intent, achieving an answer accuracy of approximately 90%. A comparison between the OpenChat-3.5 models Q5_K_M (5-bit quantized) and Q8_0 (8-bit quantized) revealed that both models generated similarly accurate answers across test queries, maintaining a high correctness rate without significant performance disparity. Notably, Q5_K_M achieved approximately 1.5 times faster token generation than Q8_0, substantially reducing average response time. Most queries were answered within 10 s on a single CPU/GPU setup, approximating real-time performance. These findings suggest that model quantization can reduce latency without sacrificing accuracy, indicating that the system is well suited for practical applications in terms of both performance and responsiveness.

Unlike conventional cloud-based RAG systems, all processes are executed locally, eliminating the risk of sensitive data being transmitted externally. This design significantly enhances data security by removing any dependency on external APIs [18]. Compared to traditional keyword-based enterprise search systems, the proposed model leverages semantic vector search, enabling more contextually accurate document retrieval [22]. Experimental results also showed that incorporating a reranking algorithm improved retrieval accuracy by approximately 10%, confirming the superiority of semantic search over simple keyword matching. In sum, the proposed local semantic search architecture with built-in security combines the performance strengths of cloud-based RAG with the safety of on-premise systems, thereby addressing the limitations of both approaches. The system successfully maintains cloud-level search precision in an offline setting while mitigating data leakage risks, an integrated improvement substantiated through empirical testing [25].

From the perspective of security and practical deployment, the system demonstrates strong applicability. Its entirely offline configuration allows it to be deployed in network-isolated environments such as manufacturing plants, financial institutions, military systems, or critical infrastructure including smart grids. As data remains entirely internal and all computations are locally performed, the system effectively prevents technology leakage and offers high operational reliability. The actual implementation is designed to rapidly retrieve and summarize internal documents without exposing source content externally, complying with the strict information protection requirements of industrial sites while providing AI-driven knowledge exploration in a practical manner. Even in highly sensitive environments, the system can help protect internal knowledge assets while enhancing productivity through AI, and the experimentally verified performance metrics support this real-world applicability.

Future work should focus on refining the system for high-security infrastructure environments such as smart grids [26]. First, faster response times should be pursued by optimizing model quantization techniques, applying lightweight LLMs, and leveraging dedicated hardware such as GPUs. Second, to address global industrial needs, the system must expand its support for multilingual documents [27,28]. Current operation focuses on Korean and English, but embedding models and retrieval pipelines should be redesigned for multilingual or language-agnostic capabilities. Third, enhancing embedding-level security remains a crucial challenge. Since vector representations may still pose reconstruction risks, mechanisms such as Differential Privacy should be explored to prevent sensitive information from leaking during embedding, alongside encryption and strict access controls for the embedding store [29]. If pursued along these directions, the system can evolve into a more robust and secure AI-powered document retrieval solution for completely offline environments [30].

In conclusion, this study proposes and validates a design strategy that addresses both data security and AI, two core demands of modern industry, offering meaningful contributions toward the development of the next generation of security-oriented knowledge retrieval systems.