Abstract

In this paper, we introduce a new vehicle automation model that describes the latest intelligent vision systems, though it is not limited to them. The model is based on interactions between the vehicle, its user, and the environment, occurring simultaneously or at different times. It addresses the lack of vehicle automation models that simultaneously incorporate the latest vision systems and human actions, organize them by interaction type, and enable quantitative performance analysis. Based on interactions, the model organizes by type, and enables parametric analysis of, both the operation of the latest automatic vision systems and current knowledge of human behavior based on the perception of visual information. We introduce the concept of interaction cycles, which makes it possible to analyze successively occurring interactions, i.e., cases in which actions trigger reactions. Interactions were decomposed into fragments, each containing a single direct unidirectional interaction. The interactions were assigned consistent numerical effectiveness parameters related to image recognition by the individual systems, which enables numerical analysis at different levels of detail, depending on the needs. For each of the six interaction types, ten applications of the newest available vision systems, including those prepared by the authors, were reviewed and selected for effectiveness analysis using the presented model. The analysis was performed by appropriately weighting and averaging or multiplying interaction effectiveness values. The overall effectiveness of the interaction model for the selected solutions was over 80%. The model also allows weights to be selected for individual components, depending on the criterion being analyzed, e.g., safety or environmental protection.
Humans turned out to be the weakest link in the interactions: reducing the role of the human driver increased the overall effectiveness of interactions to almost 90%, whereas increasing it reduced the effectiveness to over 70%. Examples of selecting solutions for implementation based on the interaction cycle and its fragment, taking into account the effectiveness of successive interactions, are also presented. The presented model is comprehensive, simple, and scalable according to needs. It can be used both for the scientific analysis of existing solutions and to help vehicle manufacturers select solutions for modification and implementation.

1. Introduction

The systematic development of automated systems in vehicles and the automation of road traffic require the preparation of appropriate system and traffic models. Many road traffic models have been defined so far [1,2].

However, these models may not completely describe reality, since they may be simplified and limited, or concerned only with specific cases, for example, following a vehicle in front or approaching traffic lights [1].

Models may also not directly account for the effectiveness of newly added automatic systems in vehicles. An example of such a system is the recognition of wheels detached from another vehicle [3].

They may also lack comprehensiveness in a general sense, because they focus on movement rather than on interactions between the vehicle, the user, and the environment. These interactions can be multi-stage or complex. Their effectiveness can be assessed by how correctly user, vehicle, or environmental parameters are read, for example, road surface readings by a camera mounted on the vehicle [4]. This effectiveness is important from the point of view of safety, traffic organization, and reduced energy consumption.

This article presents a detailed description of the proposed interaction-based vehicle automation model. As an application example, the image recognition performance of both the latest intelligent systems and humans is considered. The model is general, so systems can be modeled and analyzed not only from the vehicle perspective but also from the perspective of the environment, e.g., monitoring systems. This means that the model can take into account not only the effectiveness of systems implemented in the vehicle but also that of external systems operating on the vehicle or its user, e.g., recognition of vehicle and/or driver features by street cameras.

2. Related Work

In the era of widespread vehicle automation, a model systematizing issues in this area has become desirable. Therefore, the authors undertook the task of defining such a model. The initially proposed vehicle automation model [5] divided issues related to vehicle automation into groups, particularly those related to signal acquisition, transmission, and processing, taking into account the passage of time. There were seven groups of interest: automation of vehicles, vehicle environment sensors, automatic perception of the environment based on video signal processing, communication of vehicles with external devices, interaction between vehicle and user, direct transmission of signals from the vehicle to the environment, and development of autonomous vehicles. The defined groups were placed within the mutual interactions between the vehicle, the user, and the environment. In two of these groups, the passage of time was taken into account [5].

This model was then refined to cover vision systems only [6]. It allowed for a clear understanding of the field of vehicle automation and the various issues related to it. This approach differed from those available in the literature, e.g., the analysis of computer vision applications in intelligent transportation systems in [7], in which applications are not divided according to interactions.

The groups not only concerned a given type of interaction but also combined different types of interactions or divided given interactions into various areas of interest. For example, both the vehicle environment sensors group and the automatic perception of the environment based on video signal processing group were included in the interactions between the vehicle and the environment. However, although this approach was intuitive given the names of the groups, it made it difficult to define particular groups, especially with regard to their mathematical description and the quantitative analysis of data [5,6].

On the other hand, direct reference to the types of interactions, and especially to the directions of these interactions, can facilitate such analysis [8]. This may be applied, among others, to newly designed systems, including vision systems, and can enable a precise assignment of a given system to a specific group. Solutions that could previously belong to various groups can instead be assigned to specific types of interactions. For these reasons, the authors proposed the interaction-based vehicle automation model.

To the authors’ knowledge, no model similar to the one presented in this paper has existed so far. Only one model [8], which considers the user, the car, and the environment, shows similarities to the presented model, but it has certain limitations. It focuses exclusively on cooperative advanced driver assistance systems. It only describes interactions, without attempting to define them using mathematical formulas. It does not propose any common, unified performance measure for all systems. For example, it does not consider the human recognition stage, even when a human is the target of the interaction. It also cannot be applied to other systems, such as external monitoring.

In contrast, the new interaction-based model presented in this paper is formally defined. Groups of issues describing various systems are not embedded in interactions but are designated by exactly the same names as the individual interaction types. This allows for a clear and transparent mathematical framework based on individual interactions and for further data analysis.

Additionally, a unified performance measure was calculated. Analytical measures for estimating the effectiveness of interactions are desirable. The proposed model enables the determination of their source, intermediate, and resulting values. The measures can relate to a wide range of systems or to a limited number of them, e.g., those operating at a given moment, those constituting an original proposal, or those being selected for implementation in autonomous vehicles.

3. Proposed Interaction-Based Automation Analysis

3.1. Definitions of Concepts and Notations

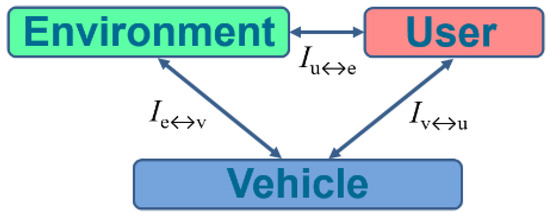

Let us define the following terms: an automated vehicle (v) transports a user (u) and moves in a specific environment (e). Mutual influences, in other words interactions, occur between these three v-u-e elements. A general representation is illustrated in Figure 1.

Figure 1.

General diagram of the interactions between a vehicle, a user, and an environment.

The proposed model attempts to present interactions in an accessible way. In general, interactions need not be linear; this depends on their individual nature. Interactions occur over a specific period of time (the system may operate in real time or with a delay) and under various environmental conditions. The authors aimed to distill the complex nature of interactions into a concise and simple description that allows them to be analyzed regardless of their type.

Describing interactions between a vehicle, a user, and an environment in more detail is not a simple task, because interactions may be triggered sequentially, or different ones may occur at the same time. For example, when a potential hazard appears on the road, e.g., an animal suddenly running onto it, the vehicle registers it using sensors and alerts the user. The user then controls the vehicle appropriately, and a maneuver to avoid a collision can be performed. This maneuver changes the environment in which the vehicle is moving; e.g., tire tracks are created on the ground surface next to the road. Afterwards, surveillance cameras can register the new position of the vehicle. The vehicle sensors can register changes in the parameters of the environment, e.g., a new type of road surface. The situation could continue in this way, interaction after interaction. On the other hand, some interactions, despite the cause-and-effect chain, may occur simultaneously. For example, an oncoming car loses a wheel and veers into the opposite lane in front of another car. The damaged car can be detected simultaneously in two ways: by the other car’s driver’s eyesight and by that car’s onboard automatic systems. In the same example, the damaged car can be detected by one intelligent system while its damaged wheel is detected at the same time by another system.

Subsequent interactions can be triggered without restriction: an action triggers a reaction, the reaction becomes an action that triggers another reaction, and so on continuously. Therefore, the concept of so-called interaction cycles, which pass through successive v-u-e elements, can be introduced. We assume that an individual interaction cycle starts and ends at the same element (environment, user, or vehicle) and passes through at least two elements. Interactions in subsequent or repeated cycles can pass through a given element multiple times.
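The cycle definition can be made concrete with a minimal sketch, assuming a cycle is written as a list of element labels ("v", "u", "e") whose last entry repeats the first. The function names are hypothetical, and the rule that consecutive elements must differ is our assumption, based on an interaction always linking two different elements.

```python
ELEMENTS = {"v", "u", "e"}  # vehicle, user, environment


def is_interaction_cycle(path):
    """Return True if `path` starts and ends at the same element and passes
    through at least two different elements (the definition in the text)."""
    if len(path) < 3 or not set(path) <= ELEMENTS:
        return False
    # Assumption: every step links two different elements (no self-interaction).
    if any(a == b for a, b in zip(path, path[1:])):
        return False
    return path[0] == path[-1] and len(set(path)) >= 2


def direct_steps(path):
    """Split a cycle into its direct unidirectional interactions (source, target)."""
    return list(zip(path, path[1:]))


# Hazard example from the text: the environment is registered by the vehicle, the
# vehicle alerts the user, the user steers the vehicle, and the maneuver changes
# the environment.
hazard = ["e", "v", "u", "v", "e"]
print(is_interaction_cycle(hazard), direct_steps(hazard))
```

Splitting the hazard cycle yields the four direct interactions e → v, v → u, u → v, and v → e, which is the kind of decomposition into single unidirectional interactions that the model relies on.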

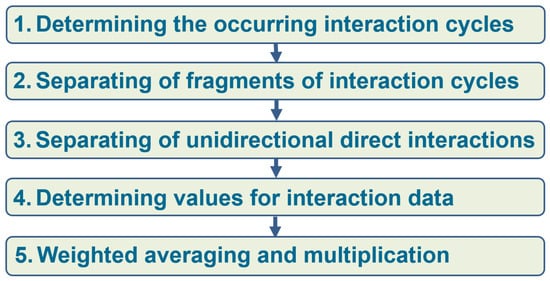

Therefore, the structure of our model is based on the concept of interaction cycles. To facilitate analysis, the cycles are decomposed into fragments, down to the smallest ones, which are direct, unidirectional interactions. Subsequent synthesis, based on weighted averaging or multiplication, enables the analysis of automation effectiveness. The proposed model concept is shown schematically in Figure 2. The presented stages are discussed in the following part of the article.

Figure 2.

General block diagram of proposed model concept.

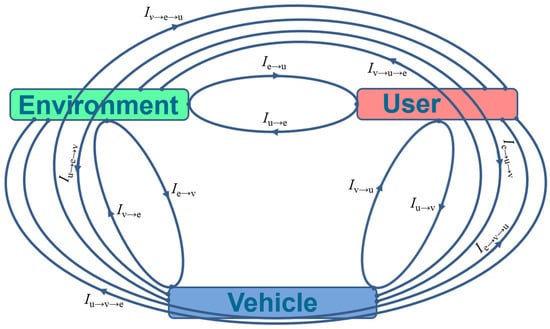

It is possible to distinguish unique fragments of interaction cycles, i.e., fragments in which we assume that neither elements nor types of interactions are repeated. These fragments represent sequences of interactions and, as representative samples, allow complex sequences of interactions to be reduced to a clear and understandable form. Each extracted fragment is an interaction passing through two or three different elements. Selected fragments of interaction cycles are shown in Figure 3.

Figure 3.

Selected fragments of interaction cycles.

Interactions that pass through two elements are direct interactions, and those that pass through three are indirect interactions.
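The distinction between direct and indirect fragments depends only on how many different elements a unique fragment passes through. A minimal sketch, with a hypothetical function name and fragments written as tuples of element labels:

```python
ELEMENTS = {"v", "u", "e"}  # vehicle, user, environment


def classify_fragment(fragment):
    """Classify a unique cycle fragment (no repeated elements) as direct or indirect."""
    if not set(fragment) <= ELEMENTS or len(set(fragment)) != len(fragment):
        raise ValueError("a unique fragment uses distinct v-u-e elements")
    if len(fragment) == 2:
        return "direct"    # e.g., environment -> user
    if len(fragment) == 3:
        return "indirect"  # e.g., environment -> vehicle -> user
    raise ValueError("a fragment passes through two or three elements")


print(classify_fragment(("e", "u")), classify_fragment(("e", "v", "u")))
```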

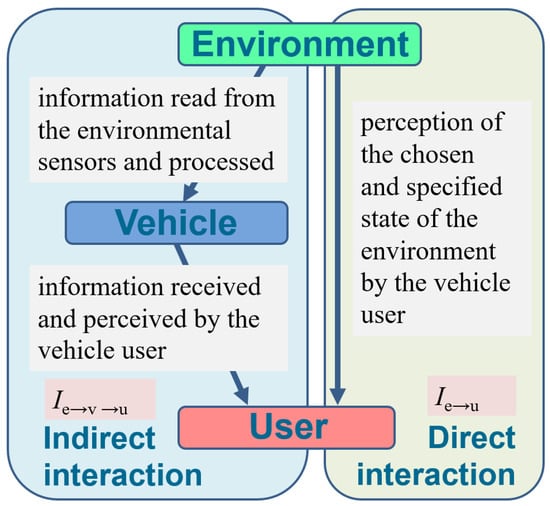

An example of a direct interaction (I) is the perception of a chosen, specific state of the environment by the vehicle user. The environment therefore directly affects the vehicle user.

The corresponding example of an indirect interaction is a situation in which the vehicle, based on information read from its environmental sensors and processed, transmits information that is received and perceived by the user. The environment therefore affects the user, with the vehicle acting as an intermediary. An illustration of an example of direct and indirect interaction is shown in Figure 4.

Figure 4.

Illustration of direct and indirect interaction.

In the symbols introduced above, an arrow means that the element at its shaft affects the element marked with the arrowhead. Thus, the element marked with the arrowhead is influenced by the element at the arrow shaft.

3.2. Mathematical Relationships

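In order to introduce formulas describing interactions, we start from two-way (bidirectional) interactions. Each bidirectional interaction consists of two unidirectional interactions. The sum of the bidirectional interactions is therefore the sum of the unidirectional interactions occurring between all possible pairs of two different v-u-e model elements. In v-u-e notation, it is described by the formula [6]:

$$(v \leftrightarrow u) + (u \leftrightarrow e) + (e \leftrightarrow v) = (v \to u) + (u \to v) + (u \to e) + (e \to u) + (e \to v) + (v \to e) \tag{1}$$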

Unidirectional interactions can be written by considering the input (source) and output elements of the component interactions.

Let us introduce an auxiliary matrix. This matrix will be used to indicate the elements between which interactions take place, i.e., the vehicle (v), the user (u), or the environment (e):

As we can see, for the three elements mentioned above, there are six combinations of two-element unidirectional interactions. Below, we use the index m to select a given combination of interaction (i.e., a row of the auxiliary matrix).

In general, the number of direct interaction types in a given model equals the number of indices denoting the different direct interactions between elements (interaction objects). For a model with n elements, the number of unordered pairs follows from the well-known formula for combinations without repetition, and the total number of types is twice as high because each pair interacts in two directions:

$$2\binom{n}{2} = n(n-1) \tag{3}$$

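In the case of our model, there are three elements (v, u, and e), so there are six types of direct unidirectional interaction, and the index m takes the values 1, 2, …, 6.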
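The count for three elements can be checked by enumeration. This sketch lists the ordered (source, target) pairs that would form the rows of the auxiliary matrix; the variable names and the row order are our assumptions, not the paper's notation.

```python
from itertools import combinations, permutations
from math import comb

elements = ("v", "u", "e")  # vehicle, user, environment
n = len(elements)

# Unordered pairs: combinations without repetition, C(n, 2).
unordered = list(combinations(elements, 2))
# Ordered (source, target) pairs: both directions of every unordered pair.
# The row order of the paper's auxiliary matrix is not reproduced; this order is assumed.
rows = list(permutations(elements, 2))

assert len(unordered) == comb(n, 2)
assert len(rows) == 2 * comb(n, 2) == n * (n - 1)

for m, (source, target) in enumerate(rows, start=1):
    print(f"m = {m}: {source} -> {target}")
```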

One-way interactions include both direct and indirect interactions, and there are six possible types. Each type of interaction can be divided into component interactions. Below, each component interaction is denoted by the index j for two-element direct interactions and by the index k for three-element indirect interactions. Thus, the indices j and k denote separate interactions within a given type. The number of direct interactions and the number of indirect interactions of a given type depend on the type of unidirectional interaction, and consequently on the row m of the auxiliary matrix, so both are indexed by m.

We can calculate the total interaction of the collective model as the sum of all j-th and k-th interactions. Referring to the elements of the auxiliary matrix and using the indices m to refer to its rows, we can calculate it as follows:

For example, for a selected value of m, based on the auxiliary matrix and using (4), we can write the following (in general or in v-u-e notation):

In turn, the k-th interactions passing through three elements, i.e., indirect interactions, can be decomposed into two interactions passing through two elements, i.e., direct interactions. It follows that a three-element interaction between the input and output elements consists of direct interactions leaving the input element and direct interactions reaching the output element. After the decomposition, we have the following:

For example, for a selected value of m, using the elements of the auxiliary matrix and (6), we can write:

Direct interactions of the same type, i.e., with the same elements and direction according to the auxiliary matrix, occur in the sums in (6) for three different values of m. For example, the component interactions of one direct type comprise the direct interactions of that type and the corresponding parts of two decomposed indirect interactions. Therefore, the number of all component interactions of that type is the sum of these three counts.
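The claim that each direct type appears for three values of m can be checked mechanically. The sketch below (function names hypothetical) splits each indirect interaction source → mid → target into its two direct parts and then records, for every direct type, which unidirectional types contribute a term of that type.

```python
from collections import defaultdict
from itertools import permutations

ELEMENTS = ("v", "u", "e")  # vehicle, user, environment


def decompose_indirect(source, target):
    """Split the indirect interaction source -> mid -> target into the direct
    interaction leaving the input element and the one reaching the output element."""
    (mid,) = [x for x in ELEMENTS if x not in (source, target)]
    return (source, mid), (mid, target)


def contributing_types():
    """For every direct type (a, b), list the unidirectional types (rows m, given
    as (input, output) pairs) whose sum in the decomposition contains an a -> b term."""
    found = defaultdict(list)
    for source, target in permutations(ELEMENTS, 2):
        found[(source, target)].append((source, target))   # direct j-th terms
        first, second = decompose_indirect(source, target)  # parts of indirect k-th terms
        found[first].append((source, target))
        found[second].append((source, target))
    return dict(found)


types = contributing_types()
print(decompose_indirect("e", "u"))  # (('e', 'v'), ('v', 'u'))
print(types[("v", "u")])  # [('v', 'u'), ('v', 'e'), ('e', 'u')]
```

For vehicle → user, the contributions come from the direct vehicle → user interactions, from the indirect vehicle → user → environment interactions, and from the indirect environment → vehicle → user interactions. Each indirect interaction contributes exactly one vehicle → user part, so the component count is the sum of the three corresponding counts.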

After collecting and ordering direct interactions of the same type, they can be written as a vector. Each vector concerns direct interactions of the same specific type, i.e., the same elements and the same directions.

Now, we introduce the index i to denote unidirectional direct interactions in the model that uses only direct, i.e., two-element, interactions:

The number of indices is equal to the number of i-th component direct interactions of a given type, i.e., the cardinality of a set:

For example, for the direct interaction from the vehicle to the user, using (8) we can write the following:

Let us use directed graph theory, in which a directed graph consists of a set of vertices connected by directed edges. In our model, the vertices are the objects between which interactions take place, i.e., the vehicle (v), the user (u), and the environment (e). Direct interactions (directed edges) of various types consist of i-th interactions. So, the model, as a directed graph, is composed of the following:

All vectors defined in (8) can be collected into the model that includes the vehicle, the user, and the environment:

For readability, definition (12) can be written using the v-u-e notation:

The advantage of introducing such a graph model is that any complex interaction cycle can be reduced to single unidirectional direct interactions. In other words, all interactions between the vehicle, the user, and the environment can be described and then decomposed into two-element unidirectional direct interactions, each relating to a specific i-th system, e.g., a smart vision system.
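One possible in-memory form of definitions (12) and (13) maps each directed edge to the list of its i-th component systems. The class and method names below are hypothetical. The example entries are the first three vehicle → environment rows of Table 3, with effectiveness written as fractions.

```python
from dataclasses import dataclass, field

VERTICES = ("v", "u", "e")  # vehicle, user, environment


@dataclass
class InteractionGraph:
    """Directed graph of the v-u-e model: each directed edge (a direct
    unidirectional interaction type) stores its i-th component systems."""
    edges: dict = field(default_factory=dict)

    def add_system(self, source, target, name, effectiveness):
        if source == target or source not in VERTICES or target not in VERTICES:
            raise ValueError("an edge joins two different v-u-e elements")
        self.edges.setdefault((source, target), []).append((name, effectiveness))

    def vector(self, source, target):
        """Effectiveness values of the i-th systems of one direct type, in order."""
        return [eff for _, eff in self.edges.get((source, target), [])]


graph = InteractionGraph()
graph.add_system("v", "e", "vehicle body element classification [21]", 0.990)
graph.add_system("v", "e", "vehicle interior element classification [21]", 1.000)
graph.add_system("v", "e", "vehicle engine element classification [21]", 1.000)
print(len(graph.vector("v", "e")), graph.vector("u", "v"))
```

The length of each edge's vector is the cardinality of the set of i-th component interactions of that type, and an edge with no systems yet simply yields an empty vector.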

3.3. Reference to Vision Systems

Knowing the parameters of individual systems, one can build interactions and complex structures containing interaction cycles. For example, such a division of automatic systems allows for their comprehensive analysis in terms of various parameters. For vision systems that may be used in the automotive industry, especially in passenger cars, all six unidirectional direct interactions related to image recognition, each occurring between two elements, can be described. Their effectiveness can be taken to be the effectiveness of object recognition, as shown in Table 1.

Table 1.

Unidirectional direct vehicle–user–environment (v-u-e) interactions described using object recognitions by vision systems.

Interactions where the arrowhead element is a vehicle or the environment may include various automatic vision systems that recognize objects from the perspective of the vehicle or the environment.

Interactions where the arrowhead element is a user mean that the user, using visual perception, recognizes objects. Objects can come from the external environment or be generated using vehicle displays.

Such an analysis groups vision-based systems and arrangements by interaction type. Activities performed by humans are separated from those performed by automatic systems, yet the two can still be compared, which enables comparative analysis.

3.4. Measures of Effectiveness

We can apply the proposed model to organize and analyze the effectiveness of interactions for any system. Interaction effectiveness means the degree to which the interaction goals have been achieved, i.e., the extent to which the planned effects of the interaction have been achieved. In the case of vision systems, this goal may be image recognition, especially the recognition of specific objects, in line with the general definition of effectiveness [9].

For the interaction-based approach, it is possible to introduce measures of the effectiveness of the interaction between the input and output elements. In the case of v-u-e interactions, this can be done for six types of direct interaction, i.e., combinations of two of the three elements, including both directions (see (3)). During model application, each interaction occurring between two elements corresponds to an i-th system. Therefore, for every type of direct interaction, effectiveness measures can be determined for the i-th component interactions, each relating to a specific i-th system:

For example, for a vehicle-to-user interaction, the effectiveness of the interaction is the effectiveness of the particular i-th system operating on that interaction:

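Effectiveness, understood as the values of basic test performance metrics, may include many different measures, e.g., accuracy, precision, sensitivity, and F1 score. In the following formulas, TP, TN, FP, and FN denote the numbers of true positive, true negative, false positive, and false negative samples, respectively [10]:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{16}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{17}$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{18}$$

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}} = \frac{2\,TP}{2\,TP + FP + FN} \tag{19}$$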

Often, in image recognition, determining true negative (TN) samples can be problematic or ambiguous. If we substitute TN = TP in the accuracy measure, we obtain the F1 score measure (cf. (16) and (19)). Thus, some similarity between the accuracy and F1 score measures can be stated.
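The relationship between accuracy and F1 score can be checked numerically. This sketch uses hypothetical confusion counts and function names of our own. It confirms that F1 written in sample counts equals the harmonic mean of precision and sensitivity, and that accuracy with TN replaced by TP reproduces F1.

```python
def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp, fp):
    return tp / (tp + fp)


def sensitivity(tp, fn):
    return tp / (tp + fn)


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and sensitivity, written in sample counts."""
    return 2 * tp / (2 * tp + fp + fn)


# Hypothetical confusion counts, used only to illustrate the identities.
tp, fp, fn = 90, 7, 3
p, r = precision(tp, fp), sensitivity(tp, fn)
assert abs(f1_score(tp, fp, fn) - 2 * p * r / (p + r)) < 1e-12
# Substituting TN = TP into the accuracy formula reproduces the F1 score.
assert abs(accuracy(tp, tp, fp, fn) - f1_score(tp, fp, fn)) < 1e-12
```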

In general, any single metric, set of metrics, or combination of metrics can be used in the model. In the considered example of vision systems, accuracy and F1 score were the most frequently reported or calculable metrics.

For these reasons, in this article, the accuracy, the F1 score, or the arithmetic mean of both, depending on availability in the literature, is taken as the effectiveness of the i-th system:

The model can be used to comprehensively determine the effectiveness of all vision systems related to vehicles, their users, and the environment together. Although this is possible, the huge number of different systems would make such an analysis very time-consuming and error-prone. A much more practical application is the analysis of selected systems used in a given case for a specific vehicle. These may be systems owned by a given car manufacturer or systems developed by specific authors.
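The rule for the effectiveness of the i-th system, namely accuracy, F1 score, or the mean of both depending on availability, can be sketched as a small function. The name is hypothetical, and taking the mean whenever both values are reported is our reading of "depending on availability".

```python
from typing import Optional


def system_effectiveness(acc: Optional[float] = None,
                         f1: Optional[float] = None) -> float:
    """Effectiveness of the i-th system: the arithmetic mean of accuracy and F1
    score when both are reported, otherwise whichever one is available."""
    if acc is not None and f1 is not None:
        return (acc + f1) / 2
    if acc is not None:
        return acc
    if f1 is not None:
        return f1
    raise ValueError("neither accuracy nor F1 score is reported")
```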

3.5. Analysis Based on Averaging and Multiplication

The model can be used for two illustrative types of analysis: one based on effectiveness averaging and one based on effectiveness multiplication.

The effectiveness averaging analysis may be used to estimate effectiveness under the assumption of a high degree of independence between the vision systems under consideration. We can assume that vision systems designed to recognize one type of object, e.g., line detection, do not change the effectiveness of other systems, e.g., traffic light detection. These systems therefore operate in parallel without interfering with each other. Consequently, the low effectiveness of a given vision system only slightly reduces the effectiveness of the entire system.

The effectiveness of direct unidirectional interactions is introduced as averaged (arithmetic mean) over the available i-th interactions of a given type corresponding to a given system:

As an example, for the interaction from the environment to the vehicle with ten component vision systems, it can be defined as follows:

We can define measures of the effectiveness of bidirectional direct interactions as averages over both directions, where only every second value of m occurs:

As an example, for the bidirectional interaction between the environment and the vehicle, it can be written as follows:

Finally, a measure of interaction effectiveness for the entire v-u-e interaction model can be calculated as the arithmetic mean of the bidirectional interaction effectiveness values. It can be written as follows:

Effectiveness values for direct interactions can be multiplied to compare different system configurations. Two or more unidirectional direct interaction effectiveness values can be multiplied.

In the analysis based on multiplying effectiveness values, we assume that successive interactions are dependent, each being a consequence of the previous one. We also assume that their aggregate effectiveness is of interest.

Effectiveness for sequences of the same interactions but using different systems can be compared. Different interaction sequences, such as direct and indirect, can also be compared. Such analysis can facilitate the selection of the best solutions for implementation.
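Both analyses described in this subsection can be sketched in a few lines, assuming equal weights. The function names are hypothetical, and the input values are arbitrary placeholders for illustration, not results from this paper. Averaging goes from the i-th systems to one direct type, then to a bidirectional pair, and then to the whole model. Multiplication scores a sequence of successive interactions.

```python
import math
from statistics import mean


def unidirectional(values):
    """Averaging analysis: mean effectiveness of the i-th systems of one direct type."""
    return mean(values)


def bidirectional(forward, backward):
    """Mean of the two opposite directions between the same pair of elements."""
    return (forward + backward) / 2


def model_effectiveness(uni):
    """Mean of the three bidirectional values; `uni` maps (source, target) to the
    unidirectional effectiveness of each of the six types."""
    pairs = [("e", "v"), ("u", "v"), ("u", "e")]  # one entry per unordered pair
    return mean(bidirectional(uni[(a, b)], uni[(b, a)]) for a, b in pairs)


def chain_effectiveness(steps):
    """Multiplication analysis: successive interactions, each caused by the previous one."""
    return math.prod(steps)


# Placeholder values for illustration only (not results from the paper).
uni = {("e", "v"): 0.90, ("v", "e"): 0.95, ("u", "v"): 0.97,
       ("v", "u"): 0.80, ("u", "e"): 0.85, ("e", "u"): 0.75}
print(round(model_effectiveness(uni), 3))

# Compare a direct fragment e -> u with an indirect one e -> v -> u.
direct = chain_effectiveness([uni[("e", "u")]])
indirect = chain_effectiveness([uni[("e", "v")], uni[("v", "u")]])
print("indirect" if indirect > direct else "direct")
```

With equal weights, the mean of the three bidirectional values equals the plain mean of all six unidirectional values. Unequal weights, chosen for example for a safety criterion, would change this.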

4. Application of the Proposed Model to Vision Systems

In this section we describe the application of the model to the automotive vision systems, which are in the authors’ research area.

4.1. Input Data from the Literature Review of Intelligent Automotive Vision Systems

For the illustrative example, the newest available published vision systems, including some developed by the authors, were selected. Smart systems were chosen in which image recognition was performed using artificial intelligence methods, i.e., artificial neural networks, or supported by human intelligence. It was assumed that the adopted vision systems operated in and on the vehicle with the effectiveness described in the publications. The highest possible interaction effectiveness is desirable; for example, car systems that recognize their surroundings very well improve safety and help save energy.

The reviewed systems were selected to be as different as possible, to avoid duplication, and only systems for which typical recognition effectiveness measures were available were chosen. Where only an approximate value without a decimal part was reported, a zero was added after the decimal point. Some values were read directly from graphs and, despite all efforts, may be slightly inaccurate.

For each of the six types of direct unidirectional interaction, to keep the analysis transparent, ten examples of interaction-based vision recognition systems were selected from the state-of-the-art literature and are presented in Table 2, Table 3, Table 4, Table 5, Table 6 and Table 7. For each vision system, key characteristics relevant to the v-u-e interaction, such as environmental conditions or classes, are given in parentheses. The tables also present the corresponding recognition effectiveness values and the arithmetic mean, product, variance, and standard deviation of effectiveness. The arithmetic mean is used in the model calculations. The product represents the extreme case in which all ten systems operate in series, i.e., their interactions occur sequentially. The variance and standard deviation show how much the systems differ and can be used to assess the variation in the performance of the presented systems.
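The summary rows of the tables can be reproduced from the per-system values. In this sketch (function name hypothetical), the variance is computed as the sample (n − 1) variance. This is our reading: it reproduces the variance and standard deviation reported in Tables 3 and 4, but the text does not state which definition was used.

```python
import math
import statistics


def summarize(effectiveness_pct):
    """Summary rows for a list of per-system effectiveness values given in %."""
    return {
        "mean": statistics.mean(effectiveness_pct),
        # all systems in series: multiply the effectiveness fractions
        "product": 100 * math.prod(x / 100 for x in effectiveness_pct),
        # sample (n - 1) variance and standard deviation
        "variance": statistics.variance(effectiveness_pct),
        "std": statistics.stdev(effectiveness_pct),
    }


table3 = [99.0, 100, 100, 100, 99.3, 84.1, 96.0, 91.0, 98.4, 90.0]
print({name: round(value, 1) for name, value in summarize(table3).items()})
# {'mean': 95.8, 'product': 64.0, 'variance': 30.6, 'std': 5.5}
```

Applied to the ten Table 4 values, the same function rounds to 97.1, 74.1, 10.0, and 3.2, matching that table's summary rows.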

Table 2.

Ten selected examples of recognition effectiveness for environment → vehicle interactions.

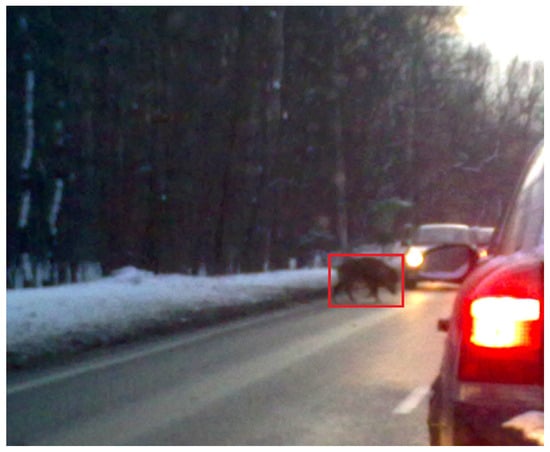

Table 2 presents selected examples of automatic recognition systems operating on interactions from the environment to the vehicle. Vehicle systems using vision sensors can recognize the type of road surface and its defects [4,11]. Infrastructure elements such as lanes, road signs, traffic lights, pedestrian crossings, or barrier cones can be detected [4,11,12,13,14,15,16]. Pedestrians, other vehicles (including privileged, e.g., emergency, vehicles), objects posing a threat, and animals can also be detected [3,16,17,18]. An example of detecting a wild boar crossing the road in Central Europe in winter, under conditions of limited visibility, is shown in Figure 5.

Figure 5.

Detection of an animal crossing the road in winter under conditions of limited visibility (the red box indicates the wild boar object).

Sources of error in vision-based recognition include similarities between objects belonging to different classes and low-quality recordings [19]. Expanding the database of training images [19] and improving cameras can reduce these errors. Water splashes on flooded roads or hail can also cause problems, although drivers commonly avoid splashes [20]. Appropriate databases, the further development of artificial neural network architectures, and the integration of data from various sensors can improve the effectiveness of vision systems.

Many systems described in the literature address this type of interaction. To show this diversity, Table 2 sometimes gives overall effectiveness values for groups of objects consisting of individual classes. A more detailed analysis could give values for individual object classes, but this was not considered necessary for the presented analysis.

Next, interactions from the vehicle to the environment (Table 3) may include surveillance systems or smartphone applications for the user, aimed at effectively recognizing various vehicle elements and their condition. Since we mainly consider smart systems intended for the vehicle and their selection by its manufacturer, the focus was placed more on smartphone applications. Recognizable vehicle elements include body elements, interior elements, engine elements, and dashboard icons [21,22]. The recognized vehicle condition may concern tire inflation pressure, tire tread height, vehicle corrosion, vehicle damage, or, in particular, tire damage or rim damage [23,24,25,26,27].

Table 3.

Ten selected examples of recognition effectiveness for vehicle → environment interactions.


| Index (i) | Vehicle → Environment Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | classification of vehicle body elements (e.g., side indicator, door handle, fuel filler cap, headlight, front bumper) [21] | 99.0% |
| 2 | classification of vehicle interior elements (e.g., steering wheel, door handle, interior lamp, door lock mechanism) [21] | 100% |
| 3 | classification of vehicle engine elements (engine block, voltage regulator, air filter, coolant reservoir) [21] | 100% |
| 4 | car dashboard icon classification (e.g., power steering, brake system, seat belt, battery, exhaust control) [22] | 100% |
| 5 | tire inflation pressure recognition (normal and abnormal) [23] | 99.3% |
| 6 | tire tread height classification (proper and worn tread) [24] | 84.1% |
| 7 | vehicle corrosion detection (identification and analysis of surface rust and appraisal of its severity) [25] | 96.0% |
| 8 | vehicle damage detection and classification (various settings, lighting, makes and models; damage types: bend, cover damage, crack, dent, glass shatter, light broken, missing, scratch) [26] | 91.0% |
| 9 | tire damage recognition (under various interfering conditions, including stains, wear, and complex mixed damage) [27] | 98.4% |
| 10 | rim damage detection (various weather and lighting conditions) [24] | 90.0% |
| | mean value of effectiveness | 95.8% |
| | product of effectiveness | 64.0% |
| | variance (%²) | 30.6 |
| | standard deviation (%) | 5.5 |

The next type of interaction, from the user to the vehicle, is presented in Table 4. Vision systems are used to recognize the state of a human, their commands, and the activities they perform. Facial images can be used for vehicle authentication and for detecting driver drowsiness or emotions [28,29,30]. Driver distraction can be detected from parts of the face, namely by eye gaze analysis [31]. Recognized hand gestures [32] can serve as commands for various on-board car systems. Hands on the steering wheel [33] or feet used to open the power liftgate [34] can also be detected visually. A camera placed in the vehicle interior can be used to determine whether the driver is changing gears, eating, smoking, or using a mobile phone [35].

Table 4.

Ten selected examples of recognition effectiveness for user → vehicle interactions.


| Index (i) | User → Vehicle Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | facial recognition-based vehicle authentication (analysis of facial features to authorize or reject a person) [28] | 98.0% |
| 2 | driver drowsiness detection using facial analysis (recognition of drowsy and awake driver behavior) [29] | 100% |
| 3 | driver emotion recognition using face images (anger, happiness, fear, sadness) [30] | 96.6% |
| 4 | driver distraction detection based on eye gaze analysis (recognition of the distracted state, i.e., gazing off the forward roadway, and the alert state, i.e., looking at the road ahead) [31] | 90.9% |
| 5 | hand gesture image recognition (multi-class hand gesture classification) [32] | 97.6% |
| 6 | visual detection of the presence of both hands on the steering wheel (images collected from behind the steering wheel) [33] | 95.0% |
| 7 | vision-based feet detection for a power liftgate (various weather and illumination conditions, different types of shoes in various seasons, and various ground surfaces) [34] | 93.3% |
| 8 | classification of the driver's pose when operating the gear shift (driver hand position to predict safe/unsafe driving posture) [35] | 100% |
| 9 | classification of the driver's pose when eating or smoking (driver hand position to predict safe/unsafe driving posture) [35] | 100% |
| 10 | classification of the driver's pose when responding to a cell phone (driver hand position to predict safe/unsafe driving posture) [35] | 99.6% |
| | mean value of effectiveness | 97.1% |
| | product of effectiveness | 74.1% |
| | variance | 10.0 |
| | standard deviation | 3.2 |
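The summary rows of Tables 3 to 7 can be checked with a few lines of Python. This is our own illustrative sketch, not the authors' tooling. It assumes that the variance row is the sample variance (denominator n - 1), because that choice reproduces the tabulated 10.0 for Table 4 (the population variance would give 9.0). It also assumes that the product row is the product of the ten values expressed as fractions. The function name `summarize` is hypothetical.

```python
import math
import statistics


def summarize(effectiveness_pct):
    """Return mean, product, sample variance and standard deviation
    for a list of effectiveness values given in percent."""
    mean = statistics.mean(effectiveness_pct)
    # Product of the values as fractions, converted back to percent.
    product = math.prod(v / 100 for v in effectiveness_pct) * 100
    # Sample variance (n - 1 in the denominator), assumed to match the tables.
    variance = statistics.variance(effectiveness_pct)
    std_dev = math.sqrt(variance)
    return mean, product, variance, std_dev


# Recognition effectiveness values from Table 4 (user -> vehicle), in percent.
table4 = [98.0, 100.0, 96.6, 90.9, 97.6, 95.0, 93.3, 100.0, 100.0, 99.6]

mean, product, variance, std_dev = summarize(table4)
print(f"mean {mean:.1f}%, product {product:.1f}%, "
      f"variance {variance:.1f}, std {std_dev:.1f}")
# mean 97.1%, product 74.1%, variance 10.0, std 3.2
```

The same function applied to the Table 5 values gives 71.7%, 1.1%, 545.6 and 23.4, in agreement with the rows of that table.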

The user-to-environment interactions mainly concern the recognition of people inside a car using external cameras (Table 5). Surveillance cameras can also detect, for example, fastened seat belts [36], but because the literature does not report accuracy, F1 score, or both precision and sensitivity for such systems, they were not included in further analysis. While discerning users of electric scooters or bicycles is not a difficult task [37], recognizing people in passenger cars is considerably harder. Passengers must be visible to an external vision sensor through windows that may be tinted to various degrees [38]. Thus, when designing a car to facilitate the recognition of people by external camera systems, it is worth avoiding fully darkened windows. People sitting in the front are easier to see than those in the rear of the car [38].

Optical filters can improve the effectiveness of passenger detection compared with imaging without filters, and the improvement also depends on the signal processing methods used [39]. Because few vision systems based on user-to-environment interactions are available, Table 5 also lists the best results obtained for individual filters and filter combinations.

The last two types of interactions are those directed at humans, i.e., those in which the effectiveness of human recognition can be determined. In the examples listed, humans recognize specific objects based on their sense of sight.

Table 5.

Ten selected examples of recognition effectiveness for user → environment interactions.


| Index (i) | User → Environment Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | binary classification of the presence/absence of a passenger in addition to the driver using external camera images (two cameras, one facing oncoming traffic and one perpendicular to it) [38] | 97.0% |
| 2 | rear passenger count using external camera images (two cameras, one facing oncoming traffic and one perpendicular to it) [38] | 91.1% |
| 3 | passenger detection using surveillance images without filter (various window tinting) [39] | 10.2% |
| 4 | passenger detection using surveillance images with neutral gray filter (various window tinting) [39] | 68.8% |
| 5 | passenger detection using surveillance images with near-infrared filter (various window tinting) [39] | 71.6% |
| 6 | passenger detection using surveillance images with near-infrared and ultraviolet filter (various window tinting) [39] | 72.2% |
| 7 | passenger detection using surveillance images with polarizing filter (various window tinting) [39] | 74.7% |
| 8 | passenger detection using surveillance images with near-infrared and neutral gray filter (various window tinting) [39] | 75.8% |
| 9 | passenger detection using surveillance images with near-infrared and polarizing filter (various window tinting) [39] | 76.6% |
| 10 | passenger detection using surveillance images with ultraviolet filter (various window tinting) [39] | 79.0% |
| | mean value of effectiveness | 71.7% |
| | product of effectiveness | 1.1% |
| | variance | 545.6 |
| | standard deviation | 23.4 |

Table 6 presents examples of recognition for interactions from the environment to the user. The recognition accuracies refer to the recognition of various objects by humans. Vehicles, people, trains, dogs, cats, and birds were selected for this analysis [40]. Observers can also count people and estimate the relationships between movement trajectories. In these cases, the ratio of correct cases to all cases, expressed as a percentage, was taken as effectiveness [41]. The effectiveness of human image analysis tasks varies widely. Simple tasks, similar to everyday situations, are performed with high accuracy. As object size decreases (compare human and bird recognition, Table 6) or as tasks become more complex, effectiveness decreases. Three-dimensional (3D) visualization can improve the accuracy of human analysis because it allows depth to be perceived. In tests on images with limited object visibility, objects in 3D images were recognized better than in two-dimensional (2D) images (e.g., 54.8% versus 33.3% for counting people, Table 6), but the results were still far from accurate [41]. User testing on 3D images is therefore justified, e.g., for recognizing movement trajectories. In general, it is advisable to perform tests under 3D conditions, because 2D conditions may not fully reflect reality, especially when objects are occluded.

For example, for a vehicle that has stopped at a stop sign, we would like to recognize whether it has stopped in front of or behind the sign. If it has stopped behind the sign, its trajectory may intersect with the trajectories of vehicles on the road it is about to enter. Better recognition effectiveness can be achieved with stereo images than with 2D images [41]. Providing two views of the scene as a 3D image (Figure 6b) instead of a single 2D image (Figure 6a) can therefore be important for various types of recognition, not only by the user but also by automatic systems.

Figure 6.

Recognition of environment using 3D view: (a) left eye view, (b) 3D view visualized as an anaglyph.

The results presented in Table 6 include recognition values for corresponding 2D and 3D images, so that the two cases can be compared and the appropriate one selected.

Table 6.

Ten selected examples of recognition effectiveness for environment → user interactions.


| Index (i) | Environment → User Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | car recognition accuracy by human observers (human viewing behavior: spatial object recognition) [40] | 94.0% |
| 2 | human recognition accuracy by human observers (human viewing behavior: spatial object recognition) [40] | 98.0% |
| 3 | train recognition accuracy by human observers (human viewing behavior: spatial object recognition) [40] | 94.0% |
| 4 | dog recognition accuracy by human observers (human viewing behavior: spatial object recognition) [40] | 88.0% |
| 5 | cat recognition accuracy by human observers (human viewing behavior: spatial object recognition) [40] | 81.0% |
| 6 | bird recognition accuracy by human observers (human viewing behavior: spatial object recognition) [40] | 83.0% |
| 7 | counting people with various degrees of occlusion by observers in 2D images (several to a dozen people to count) [41] | 33.3% |
| 8 | counting people with various degrees of occlusion by observers in 3D images (several to a dozen people to count) [41] | 54.8% |
| 9 | estimation of the dependence of object motion trajectories with limited visibility by observers for 2D images (relationships between people, vehicles and the traffic sign line) [41] | 36.5% |
| 10 | estimation of the dependence of object motion trajectories with limited visibility by observers for 3D images (relationships between people, vehicles and the traffic sign line) [41] | 45.3% |
| | mean value of effectiveness | 70.8% |
| | product of effectiveness | 1.5% |
| | variance | 650.5 |
| | standard deviation | 25.5 |

The last type of interaction considered is the vehicle-to-user interaction. Table 7 presents how often individuals recognized different on-screen reminders. Only one recent, closely related work reporting the required metric values was found in this category [42]; we expect the number of such studies to grow. Effectiveness is the percentage of all participants who noticed a given reminder.

The reminders were presented in text and image form in the pre-driving and during-driving stages. They concerned safety belts, fuel checks, mirror checks, obstacles, and drowsy driving [42]. Effectiveness can be improved by combining an icon with a sound effect [42]. However, because this study focuses on vision systems, results for images supported by sound alerts are not given here.

Table 7.

Ten selected examples of recognition effectiveness for vehicle → user interactions.


| Index (i) | Vehicle → User Interaction Example (Additional Notes) | Recognition Effectiveness |
|---|---|---|
| 1 | recognition of text reminder about safety belt displayed on the screen (noticed in pre-driving stage) [42] | 50.0% |
| 2 | recognition of image reminder about safety belt displayed on the screen (noticed in pre-driving stage) [42] | 83.3% |
| 3 | recognition of text reminder about fuel check displayed on the screen (noticed in pre-driving stage) [42] | 83.3% |
| 4 | recognition of image reminder about fuel check displayed on the screen (noticed in pre-driving stage) [42] | 83.3% |
| 5 | recognition of text reminder about mirror check displayed on the screen (noticed in pre-driving stage) [42] | 50.0% |
| 6 | recognition of image reminder about mirror check displayed on the screen (noticed in pre-driving stage) [42] | 66.7% |
| 7 | recognition of text reminder about obstacles displayed on the screen (noticed in during-driving stage) [42] | 33.3% |
| 8 | recognition of image reminder about obstacles displayed on the screen (noticed in during-driving stage) [42] | 33.3% |
| 9 | recognition of text reminder about drowsy driving displayed on the screen (noticed in during-driving stage) [42] | 33.3% |
| 10 | recognition of image reminder about drowsy driving displayed on the screen (noticed in during-driving stage) [42] | 16.7% |
| | mean value of effectiveness | 53.3% |
| | product of effectiveness | 0.1% |
| | variance | 604.5 |
| | standard deviation | 24.6 |

Comparing the results in Tables 2–7, the highest mean and product of effectiveness values were obtained for the following interactions: environment to vehicle, vehicle to environment, and user to vehicle. The lowest variance and standard deviation values were obtained for the same types of direct interactions.

In contrast, the highest variance and standard deviation values concern systems in which the analysis is performed by a human, as well as automatic systems performing complex tasks with difficult information flow (e.g., recognition of people in vehicles by stationary surveillance cameras).
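The contrast in spread can be reproduced from the four tables that are complete in this section. This minimal sketch uses only the values listed in Tables 4 to 7, because Tables 2 and 3 are not fully reproduced here. As in the summary rows, it assumes the sample standard deviation.

```python
import statistics

# Effectiveness values (percent) copied from Tables 4-7.
tables = {
    "user -> vehicle (Table 4)": [98.0, 100.0, 96.6, 90.9, 97.6, 95.0, 93.3, 100.0, 100.0, 99.6],
    "user -> environment (Table 5)": [97.0, 91.1, 10.2, 68.8, 71.6, 72.2, 74.7, 75.8, 76.6, 79.0],
    "environment -> user (Table 6)": [94.0, 98.0, 94.0, 88.0, 81.0, 83.0, 33.3, 54.8, 36.5, 45.3],
    "vehicle -> user (Table 7)": [50.0, 83.3, 83.3, 83.3, 50.0, 66.7, 33.3, 33.3, 33.3, 16.7],
}

# Sample standard deviation per interaction type, sorted from lowest to highest spread.
spread = sorted((statistics.stdev(values), name) for name, values in tables.items())
for sd, name in spread:
    print(f"{name}: std {sd:.1f}")
```

The automatic user-to-vehicle systems have a standard deviation of about 3.2. The three remaining types, which involve either human recognition or surveillance-camera passenger detection, all exceed 23.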

4.2. Effectiveness Averaging

In the effectiveness averaging analysis, the effectiveness values were averaged based on data obtained from the literature presented in Table 2, Table 3, Table 4, Table 5, Table 6 and Table 7.

As an example, the effectiveness of direct unidirectional interactions from the environment to the vehicle, based on literature results presented in Table 2, according to Formulas (21) and (22) is equal to 91.7%.

The average effectiveness value for the interactions between the environment and the vehicle for both directions, calculated from literature results presented in Table 2 and Table 3, according to Formulas (23) and (24), is equal to 93.8%.

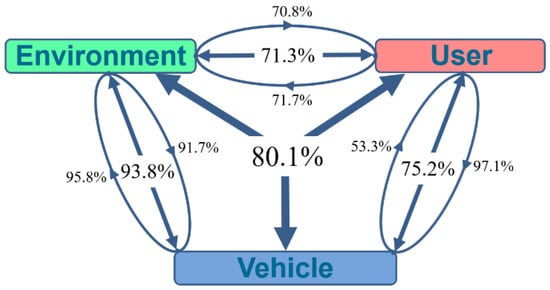

The effectiveness value calculated for the interaction examples presented in Table 2, Table 3, Table 4, Table 5, Table 6 and Table 7, averaged for the entire model in the manner described by Formula (25), is 80.1%. The averaged values at different levels of generalization are schematically presented in Figure 7.

Figure 7.

Average effectiveness values for vision systems at different levels of interaction generalization.

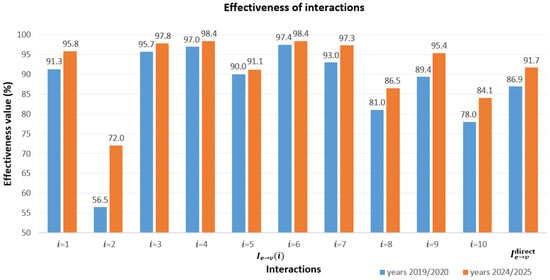
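A minimal sketch of the averaging hierarchy follows. Formulas (21) to (25) are not reproduced in this section, so the sketch assumes that each level is a plain arithmetic mean. The ten values of one interaction type are averaged first, then the two directions between a pair of elements, and finally the three pairs. Under that assumption, the sketch reproduces the reported 93.8% for the environment–vehicle pair and 80.1% for the entire model. The inputs are the per-type means reported for Tables 2 to 7. Because every type contains ten examples, averaging the three pair means is equivalent to averaging all six type means.

```python
# Per-type mean effectiveness (percent) as reported for Tables 2-7.
type_means = {
    ("environment", "vehicle"): 91.7,  # Table 2
    ("vehicle", "environment"): 95.8,  # Table 3
    ("user", "vehicle"): 97.1,         # Table 4
    ("user", "environment"): 71.7,     # Table 5
    ("environment", "user"): 70.8,     # Table 6
    ("vehicle", "user"): 53.3,         # Table 7
}


def pair_mean(a, b):
    """Average of both directions of the interaction between elements a and b."""
    return (type_means[(a, b)] + type_means[(b, a)]) / 2


pairs = [("environment", "vehicle"), ("user", "vehicle"), ("user", "environment")]
pair_values = {pair: pair_mean(*pair) for pair in pairs}
model = sum(pair_values.values()) / len(pair_values)

for (a, b), value in pair_values.items():
    print(f"{a} <-> {b}: {value:.2f}%")
print(f"entire model: {model:.1f}%")  # entire model: 80.1%
```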

4.2.1. Effectiveness over Time

It is worth examining how the effectiveness of selected solutions has changed over time, e.g., over a 5-year period. For this purpose, the environment-to-vehicle interaction from Table 2, which covers solutions from 2024 to 2025, was analyzed first. Next, the most similar smart solutions based on artificial neural networks published from 2019 to 2020 were selected [43,44,45,46,47,48,49,50,51,52]. The 5-year period was chosen because many older publications did not concern systems based on artificial neural networks, whereas we were interested in smart systems.

Despite the selection of the most similar solutions, the largest differences were found in three cases. In the first case, the older solution was simpler and did not consider pedestrians or safety cones [50]. In the second case, instead of detached wheels, a similar object, the vehicle axles, was chosen for detection [51]. In the third case, for animal detection, mean average precision (mAP) was used as an exception, because the source paper reported neither accuracy nor F1 metrics [52].

The effectiveness values are summarized in Figure 8. Over the five years, the effectiveness of the environment-to-vehicle interaction increased from 86.9% to 91.7%. If the older value were retained for this interaction and the newer values were used for all others, the effectiveness of the entire model would decrease slightly from 80.1% to 79.3%, i.e., below 80%.

Figure 8.

Comparison of the effectiveness of interactions from the environment to the vehicle between 2019/2020 and 2024/2025 for systems using artificial neural networks (data sources for subsequent indices i for years 2019/2020 [43,44,45,46,47,48,49,50,51,52], and for years 2024/2025 [3,4,11,12,13,14,15,16,17,18]).
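The entire-model values quoted in Section 4.2.1 can be checked by swapping a single per-type mean. This sketch again assumes that the entire-model value is the unweighted mean of the six per-type means. The dictionary keys are hypothetical shorthand.

```python
# Per-type mean effectiveness (percent); keys are shorthand for the six interaction types.
newer = {"env->veh": 91.7, "veh->env": 95.8, "user->veh": 97.1,
         "user->env": 71.7, "env->user": 70.8, "veh->user": 53.3}
older_env_to_vehicle = 86.9  # 2019/2020 systems (Figure 8)


def model_mean(means):
    """Unweighted mean over the six interaction types."""
    return sum(means.values()) / len(means)


# Keep all 2024/2025 values except the environment-to-vehicle one.
mixed = {**newer, "env->veh": older_env_to_vehicle}

print(f"2024/2025 only: {model_mean(newer):.1f}%")             # 80.1%
print(f"with 2019/2020 env->veh: {model_mean(mixed):.1f}%")    # 79.3%
```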

4.2.2. Effectiveness for Special Application Requirements

To adapt the model to specific application requirements, the effectiveness values can be weighted. For example, one can change the weights of the averaged effectiveness values for interactions in which object recognition is performed by a human. Table 8 presents the averaged effectiveness of the entire model when the weights of the environment-to-user and vehicle-to-user interactions change simultaneously from 0 to 2 in steps of 0.2.

Table 8.

Averaged effectiveness of interaction for the entire model depending on weights of interactions towards vehicle users.

During the weighted averaging of the vehicle–user and user–environment effectiveness values, the weights in one interaction direction were increased, while the weights in the opposite direction were reduced by the same amount, so the weights ranged from 0 to 2. The step of 0.2 corresponds to the assumption of five levels of decreasing driver involvement and increasing driving automation, and the same number of levels for the opposite changes. In the taxonomy described in the literature, level 5 denotes full driving automation [53].

According to this analysis, reducing human intervention can increase overall effectiveness.
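The individual values of Table 8 are not reproduced in this section, so the following is only a sketch of one reading of the weighting scheme described above. Within each pair involving the user, the direction towards the user (environment → user, vehicle → user) gets weight w and the opposite direction gets 2 - w. The environment–vehicle pair keeps equal weights. Under this assumption, w = 1 gives the unweighted 80.1%. The end points, about 87.5% at w = 0 and 72.6% at w = 2, agree with the "almost 90%" and "over 70%" stated in the abstract. The function name `weighted_model` is hypothetical.

```python
# Per-type mean effectiveness (percent) as reported for Tables 2-7.
type_means = {"env->veh": 91.7, "veh->env": 95.8, "user->veh": 97.1,
              "user->env": 71.7, "env->user": 70.8, "veh->user": 53.3}


def weighted_model(w_human):
    """Entire-model effectiveness when recognition by the user gets weight w_human.

    Assumed scheme: in each pair involving the user, the direction towards the
    user is weighted by w_human and the opposite direction by 2 - w_human,
    so the weights within each pair always sum to 2.
    """
    env_veh = (type_means["env->veh"] + type_means["veh->env"]) / 2
    user_veh = (w_human * type_means["veh->user"]
                + (2 - w_human) * type_means["user->veh"]) / 2
    user_env = (w_human * type_means["env->user"]
                + (2 - w_human) * type_means["user->env"]) / 2
    return (env_veh + user_veh + user_env) / 3


weights = [round(0.2 * k, 1) for k in range(11)]  # 0.0, 0.2, ..., 2.0
for w in weights:
    print(f"w = {w:.1f}: {weighted_model(w):.1f}%")
```

The result decreases linearly as the weight of human recognition grows, which matches the conclusion that reducing human intervention raises overall effectiveness.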

4.2.3. Effectiveness for Special Environmental Conditions

Another possible analysis concerns the use of vehicle vision systems under specific environmental conditions, e.g., on a highway. In this case, we are dealing with an interaction from the environment to the vehicle, and weights must be assigned to specific solutions. We assumed that there are no traffic lights or pedestrian crossings on the highway, so the systems that detect them are assigned weights of 0. The effectiveness for this interaction dropped from the overall value of 91.7% to 90.9%, a value more appropriate to these conditions. The effectiveness of the entire model decreased only slightly, to 80.0%. The weights for the individual i-th systems are presented in Table 9.

Table 9.

Weight values of effectiveness for particular vision systems for environment-to-vehicle interactions in highway conditions.

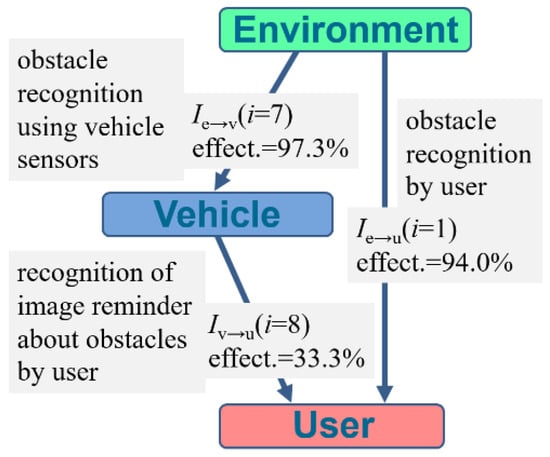

4.3. Effectiveness Multiplication

As the first example of analysis based on multiplying effectiveness values, consider the interaction between the environment and the vehicle user. This interaction concerns the recognition of an obstacle, which is another car. As introduced in Section 3, there are two interaction types: indirect and direct. In the indirect interaction, the obstacle is first detected by the vehicle system, and the driver is then informed about it by means of an image and must recognize this reminder. In the direct interaction, the driver recognizes the obstacle simply by seeing it in the surrounding environment. This example is illustrated in Figure 9.

Figure 9.

Illustrative fragment of interaction cycle as indirect and direct interaction using vision systems.

Multiplying the effectiveness values of the direct interactions that make up the indirect interaction (from Table 2 and Table 7) yields 32.4%. This is significantly lower than the 94.0% effectiveness of the direct interaction (from Table 6). According to these results, merely detecting an obstacle and informing the driver is less effective than direct obstacle recognition by the driver. To increase the effectiveness of such a system, the driver needs to be informed better, for example, by additionally using an audible alert [42]. In addition, the vehicle should automatically perform a specific action, such as braking.

This example could be expanded further. A driver could become frightened after recognizing an obstacle, and the vision system could use facial images to recognize fear as an emotion and apply an appropriate strategy to mitigate it. The previously obtained values would then be multiplied by 96.6% (Table 4), giving 31.3% and 90.8%, respectively. Further interactions could be added in a similar manner, depending on the needs.
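The chained multiplication can be sketched as follows. The Table 2 value for car detection is not reproduced in this section, so the sketch starts from the reported 32.4% product of the indirect path rather than from its two factors. The helper name `chain_effectiveness` is hypothetical.

```python
import math


def chain_effectiveness(*steps):
    """Effectiveness of successive direct interactions: product of fractional values."""
    return math.prod(steps)


# Reported product for the indirect path (vehicle detects the car, driver reads the reminder).
indirect = 0.324
direct = 0.940            # driver recognizes the car directly (Table 6, i = 1)
fear_recognition = 0.966  # driver emotion recognition from face images (Table 4, i = 3)

print(f"indirect + fear: {chain_effectiveness(indirect, fear_recognition):.1%}")  # 31.3%
print(f"direct + fear:   {chain_effectiveness(direct, fear_recognition):.1%}")    # 90.8%
```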

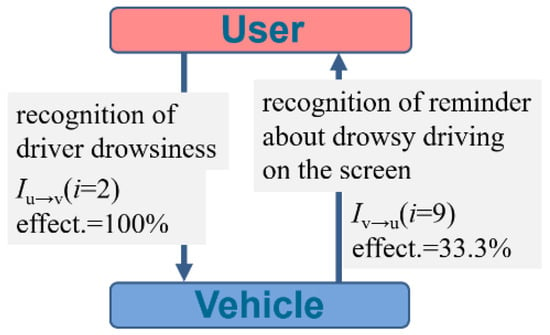

As a second example of multiplying effectiveness values, consider an interaction cycle containing two elements: the driver and the vehicle. The vehicle detects the driver's drowsiness with 100% effectiveness (Table 4) and then displays a visual alert. The driver, in turn, recognizes the text reminder about drowsy driving displayed on the screen with an effectiveness of 33.3% (Table 7). Thus, the multiplied effectiveness for both interactions is also 33.3%. Adding an audible alert for the driver can increase this value to 100% [42]. This interaction cycle for vision systems is shown in Figure 10.

Figure 10.

Interaction cycle consisting of two direct interactions using vision systems.

Determining interaction cycles and the effectiveness of the vision systems involved allows the effectiveness of the interactions to be determined. On this basis, we can quickly assess the level of safety and identify weak points for improvement. However, estimates based on the literature are approximate, because they may not fully reflect the conditions under which a given vision system actually operates.
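One way to make the search for weak points concrete is to store each cycle as a list of direct interactions and report both the product and the interaction with the lowest effectiveness. The sketch below is a hypothetical illustration applied to the drowsiness cycle from Figure 10. The `Interaction` class and the `analyze_cycle` function are not part of the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Interaction:
    """One direct unidirectional interaction within an interaction cycle."""
    description: str
    effectiveness: float  # fraction in [0, 1]


def analyze_cycle(cycle):
    """Return the cycle effectiveness (product of its interactions) and its weakest interaction."""
    total = math.prod(step.effectiveness for step in cycle)
    weakest = min(cycle, key=lambda step: step.effectiveness)
    return total, weakest


# Drowsiness cycle from Figure 10: Table 4 (i = 2) followed by Table 7 (i = 9).
drowsiness_cycle = [
    Interaction("vehicle detects driver drowsiness from facial images", 1.000),
    Interaction("driver notices the on-screen text reminder about drowsy driving", 0.333),
]

total, weakest = analyze_cycle(drowsiness_cycle)
print(f"cycle effectiveness: {total:.1%}")  # 33.3%
print(f"weakest link: {weakest.description}")
```

In this cycle, the driver's recognition of the on-screen reminder is the weak point. This agrees with the suggestion above to add an audible alert.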

5. Conclusions

The introduced model describes the interactions between the vehicle user, the vehicle, and the environment. It is possible to comprehensively analyze interactions occurring simultaneously and at different times.

The concept of interaction cycles was introduced, thanks to which it is also possible to analyze interactions occurring one after another, when subsequent actions trigger subsequent reactions.

The model organizes the latest automatic vision systems in terms of the type of interaction and also takes into account human recognition of objects.

The interactions have been assigned numerical effectiveness parameters related to object recognition by individual systems, enabling numerical analysis at different levels of detail, depending on the needs. High effectiveness translates into higher safety and better environmental protection. To be useful in practice, a system should generate as few false alarms as possible while maintaining high detection sensitivity, so practical solutions must balance these two requirements. A system with higher recognition effectiveness is better at detecting objects and situations. For example, more animals crossing the road are correctly detected, which means better driver warnings and greater potential for emergency braking. This can reduce the number of accidents.

In the presented examples, we determined the overall effectiveness of selected solutions; the impact of the passage of time, of reducing or increasing the human role, and of environmental conditions on this value; and the effectiveness of successive interactions. Given a choice between solution variants with different component systems, the variant with the highest overall effectiveness can be selected.

The comprehensiveness of the presented model refers to its coverage of all types of interactions and possible systems, both automatic and based on human action. Thanks to its comprehensiveness, simplicity, and scalability to the needs at hand, the model can be used both for the scientific analysis of existing solutions and to help vehicle manufacturers select solutions for implementation.

The current model is limited to three elements: vehicle, user, and environment. In the future, the number of elements between which interactions occur could be increased, e.g., by taking into account different types of vehicles and communication between them. The user’s smartphone could also be a separate element of the environment. Thus, the number of different interactions and component systems would also increase. This may increase the number of weights that need to be determined and the number of calculations performed, but the systems should still be transparently ordered in terms of interactions.

Further limitations stem from the component systems themselves, which perform only under specific conditions and with specific effectiveness. As technology advances, newer and more effective systems can be selected for analysis within the model.

In specific future implementations, the weights for individual interactions could be selected more precisely. For example, the weights for interactions of safety systems could be determined based on accident statistics.

Furthermore, metrics suited to particular tasks and performance requirements could be selected, which could also provide material for future analysis. In the future, a new universal metric or set of metrics could be considered for use in research.

However, this tool already enables parametric analysis of the operation of vision systems and human behavior based on the perception of visual information.

Author Contributions

Conceptualization, J.B.; methodology, J.B. and P.P.; validation, J.B. and P.P.; formal analysis, J.B. and P.P.; investigation, J.B. and P.P.; data curation, J.B.; writing—original draft preparation, J.B. and P.P.; writing—review and editing, J.B. and P.P.; visualization, J.B. and P.P.; supervision, J.B. and P.P. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was prepared with financial means from the research subsidy 0211/SBAD/0225 of Poznan University of Technology.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wagner, P. Traffic Control and Traffic Management in a Transportation System with Autonomous Vehicles. In Autonomous Driving: Technical, Legal and Social Aspects; Maurer, M., Gerdes, J.C., Lenz, B., Winner, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; pp. 301–316.

- Ali, F.; Khan, Z.H.; Gulliver, T.A.; Khattak, K.S.; Altamimi, A.B. A Microscopic Traffic Model Considering Driver Reaction and Sensitivity. Appl. Sci. 2023, 13, 7810.

- Balcerek, J.; Pawłowski, P.; Cierpiszewska, J.; Kus, Ł.; Zborowski, P. Automatic recognition of dangerous objects in front of the vehicle’s windshield. In Proceedings of the 2024 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 25–27 September 2024; IEEE: New York, NY, USA, 2024; pp. 91–96.

- Tollner, D.; Zöldy, M. Road Type Classification of Driving Data Using Neural Networks. Computers 2025, 14, 70.

- Balcerek, J.; Pawłowski, P. How to combine issues related to autonomous vehicles—A proposal with a literature review. In Proceedings of the 2023 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 20–22 September 2023; IEEE: New York, NY, USA, 2023; pp. 189–194.

- Balcerek, J.; Dąbrowski, A.; Pawłowski, P. Vision signals and systems in the vehicle automation model. Prz. Elektrotech. 2024, 100, 208–211.

- Dilek, E.; Dener, M. Computer Vision Applications in Intelligent Transportation Systems: A Survey. Sensors 2023, 23, 2938.

- González-Saavedra, J.F.; Figueroa, M.; Céspedes, S.; Montejo-Sánchez, S. Survey of Cooperative Advanced Driver Assistance Systems: From a Holistic and Systemic Vision. Sensors 2022, 22, 3040.

- Effectiveness, Awork, Glossary. Available online: https://www.awork.com/glossary/effectiveness (accessed on 26 August 2025).

- Noola, D.A.; Basavaraju, D.R. Corn leaf image classification based on machine learning techniques for accurate leaf disease detection. Int. J. Electr. Comput. Eng. 2022, 12, 2509–2516.

- Fan, X.; Liu, R. Automatic Detection and Classification of Surface Diseases on Roads and Bridges Using Fuzzy Neural Networks. In Proceedings of the 2024 International Conference on Electrical Drives, Power Electronics & Engineering (EDPEE), Athens, Greece, 27–29 February 2024; IEEE: New York, NY, USA, 2024; pp. 770–775.

- Maddiralla, V.; Subramanian, S. Effective lane detection on complex roads with convolutional attention mechanism in autonomous vehicles. Sci. Rep. 2024, 14, 19193.

- Prakash, A.J.; Sruthy, S. Enhancing traffic sign recognition (TSR) by classifying deep learning models to promote road safety. Signal Image Video Process. 2024, 18, 4713–4729.

- Lin, H.-Y.; Lin, S.-Y.; Tu, K.-C. Traffic Light Detection and Recognition Using a Two-Stage Framework From Individual Signal Bulb Identification. IEEE Access 2024, 12, 132279–132289.

- Wang, K.-C.; Meng, C.-L.; Dow, C.-R.; Lu, B. Pedestrian-Crossing Detection Enhanced by CyclicGAN-Based Loop Learning and Automatic Labeling. Appl. Sci. 2025, 15, 6459.

- Dhatrika, S.K.; Reddy, D.R.; Reddy, N.K. Real-Time Object Recognition For Advanced Driver-Assistance Systems (ADAS) Using Deep Learning On Edge Devices. Procedia Comput. Sci. 2025, 252, 25–42.

- Balcerek, J.; Pawłowski, P.; Dolny, K.; Skotnicka, J. Automatic recognition of emergency vehicles in images from video recorders. In Proceedings of the 2024 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 25–27 September 2024; IEEE: New York, NY, USA, 2024; pp. 85–90.

- Parkavi, K.; Ganguly, A.; Sharma, S.; Banerjee, A.; Kejriwal, K. Enhancing Road Safety: Detection of Animals on Highways During Night. IEEE Access 2025, 13, 36877–36896.

- Balcerek, J.; Pawłowski, P.; Trzciński, B. Vision system for automatic recognition of animals on images from car video recorders. Prz. Elektrotech. 2023, 99, 278–281.

- Wiseman, Y. Real-time monitoring of traffic congestions. In Proceedings of the 2017 IEEE International Conference on Electro Information Technology (EIT), Lincoln, NE, USA, 14–17 May 2017; IEEE: New York, NY, USA, 2017; pp. 501–505.

- Balcerek, J.; Dąbrowski, A.; Pawłowski, P.; Tokarski, P. Automatic recognition of elements of Polish historical vehicles. Prz. Elektrotech. 2024, 100, 212–215.

- Balcerek, J.; Hinc, M.; Jalowski, Ł.; Michalak, J.; Rabiza, M.; Konieczka, A. Vision-based mobile application for supporting the user in the vehicle operation. In Proceedings of the 2019 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 18–20 September 2019; IEEE: New York, NY, USA, 2019; pp. 250–255.

- Zhang, J.; Peng, J.; Kong, X.; Deng, L.; Obrien, E.J. Vision-based identification of tire inflation pressure using Tire-YOLO and deflection. Measurement 2025, 242, 116228.

- Balcerek, J.; Konieczka, A.; Pawłowski, P.; Dankowski, C.; Firlej, M.; Fulara, P. Automatic recognition of vehicle wheel parameters. Prz. Elektrotech. 2022, 98, 205–208.

- Dhonde, K.; Mirhassani, M.; Tam, E.; Sawyer-Beaulieu, S. Design of a Real-Time Corrosion Detection and Quantification Protocol for Automobiles. Materials 2022, 15, 3211.

- Amodu, O.D.; Shaban, A.; Akinade, G. Revolutionizing Vehicle Damage Inspection: A Deep Learning Approach for Automated Detection and Classification. In Proceedings of the 9th International Conference on Internet of Things, Big Data and Security (IoTBDS 2024), Angers, France, 28–30 April 2024; SciTePress: Setúbal, Portugal, 2024; pp. 199–208.

- Shen, D.; Cao, J.; Liu, P.; Guo, J. Intelligent recognition system of in-service tire damage driven by strong combination augmentation and contrast fusion. Neural Comput. Applic. 2025, 37, 5795–5813.

- Gade, M.V.; Afroj, M.; Kadaskar, S.; Wagh, P. Facial Recognition Based Vehicle Authentication System. IJIREEICE 2025, 13, 124–129.

- Essahraui, S.; Lamaakal, I.; El Hamly, I.; Maleh, Y.; Ouahbi, I.; El Makkaoui, K.; Filali Bouami, M.; Pławiak, P.; Alfarraj, O.; Abd El-Latif, A.A. Real-Time Driver Drowsiness Detection Using Facial Analysis and Machine Learning Techniques. Sensors 2025, 25, 812.

- Luan, X.; Wen, Q.; Hang, B. Driver emotion recognition based on attentional convolutional network. Front. Phys. 2024, 12, 1387338.

- Hermandez, J.-S.; Pardales, F.-T., Jr.; Lendio, N.M.-S.; Manalili, I.E.-S.; Garcia, E.-A.; Tee, A.-C., Jr. Real-time driver drowsiness and distraction detection using convolutional neural network with multiple behavioral features. World J. Adv. Res. Rev. 2024, 23, 816–824.

- Ahmed, I.T.; Gwad, W.H.; Hammad, B.T.; Alkayal, E. Enhancing Hand Gesture Image Recognition by Integrating Various Feature Groups. Technologies 2025, 13, 164.

- Borghi, G.; Frigieri, E.; Vezzani, R.; Cucchiara, R. Hands on the wheel: A Dataset for Driver Hand Detection and Tracking. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; IEEE: New York, NY, USA, 2018; pp. 564–570.

- Liu, J.; Chen, Y.; Yan, F.; Zhang, R.; Liao, X.; Wu, Y.; Sun, Y.; Hu, D.; Chen, N. Vision-based feet detection power liftgate with deep learning on embedded device. J. Phys. Conf. Ser. 2022, 2302, 012010.

- Yan, C.; Coenen, F.; Zhang, B. Driving posture recognition by convolutional neural networks. IET Comput. Vis. 2016, 10, 103–114.

- Gu, X.; Lu, Z.; Ren, J.; Zhang, Q. Seat belt detection using gated Bi-LSTM with part-to-whole attention on diagonally sampled patches. Expert Syst. Appl. 2024, 252, 123784.

- Balcerek, J.; Konieczka, A.; Pawłowski, P.; Rusinek, W.; Trojanowski, W. Vision system for automatic recognition of selected road users. In Proceedings of the 2022 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 21–22 September 2022; IEEE: New York, NY, USA, 2022; pp. 155–160.

- Kumar, A.; Gupta, A.; Santra, B.; Lalitha, K.; Kolla, M.; Gupta, M.; Singh, R. VPDS: An AI-Based Automated Vehicle Occupancy and Violation Detection System. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9498–9503.

- Kuchár, P.; Pirník, R.; Kafková, J.; Tichý, T.; Ďurišová, J.; Skuba, M. SCAN: Surveillance Camera Array Network for Enhanced Passenger Detection. IEEE Access 2024, 12, 115237–115255. [Google Scholar] [CrossRef]

- van Dyck, L.E.; Kwitt, R.; Denzler, S.J.; Gruber, W.L. Comparing Object Recognition in Humans and Deep Convolutional Neural Networks—An Eye Tracking Study. Front. Neurosci. 2021, 15, 750639. [Google Scholar] [CrossRef]

- Balcerek, J. Human-Computer Supporting Interfaces for Automatic Recognition of Threats. Ph.D. Thesis, Poznan University of Technology, Poznan, Poland, 2016. [Google Scholar]

- Zou, Z.; Alnajjar, F.; Lwin, M.; Ali, L.; Jassmi, H.A.; Mubin, O.; Swavaf, M. A preliminary simulator study on exploring responses of drivers to driving system reminders on four stimuli in vehicles. Sci. Rep. 2025, 15, 4009. [Google Scholar] [CrossRef]

- Balcerek, J.; Konieczka, A.; Piniarski, K.; Pawłowski, P. Classification of road surfaces using convolutional neural network. In Proceedings of the 2020 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 23–25 September 2020; IEEE: New York, NY, USA, 2020; pp. 98–103. [Google Scholar] [CrossRef]

- Naddaf-Sh, S.; Naddaf-Sh, M.-M.; Kashani, A.R.; Zargarzadeh, H. An Efficient and Scalable Deep Learning Approach for Road Damage Detection. In Proceedings of the 2020 IEEE International Conference on Big Data (Big Data), Atlanta, GA, USA, 10–13 December 2020; IEEE: New York, NY, USA, 2020; pp. 5602–5608. [Google Scholar] [CrossRef]

- Zhang, X.; Huang, H.; Meng, W.; Luo, D. Improved lane detection method based on convolutional neural network using self-attention distillation. Sens. Mater. 2020, 32, 4505–4516. [Google Scholar] [CrossRef]

- Radu, M.D.; Costea, I.M.; Stan, V.A. Automatic Traffic Sign Recognition Artificial Inteligence—Deep Learning Algorithm. In Proceedings of the 2020 12th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Bucharest, Romania, 25–27 June 2020; IEEE: New York, NY, USA, 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Che, M.; Che, M.; Chao, Z.; Cao, X. Traffic Light Recognition for Real Scenes Based on Image Processing and Deep Learning. Comput. Inform. 2020, 39, 439–463. [Google Scholar] [CrossRef]

- Malbog, M.A. MASK R-CNN for Pedestrian Crosswalk Detection and Instance Segmentation. In Proceedings of the 2019 IEEE 6th International Conference on Engineering Technologies and Applied Sciences (ICETAS), Kuala Lumpur, Malaysia, 20–21 December 2019; IEEE: New York, NY, USA, 2019; pp. 1–5. [Google Scholar] [CrossRef]

- Kim, J.-A.; Sung, J.-Y.; Park, S.-h. Comparison of Faster-RCNN, YOLO, and SSD for Real-Time Vehicle Type Recognition. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics—Asia (ICCE-Asia), Seoul, Republic of Korea, 1–3 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–4. [Google Scholar] [CrossRef]

- Kaushik, S.; Raman, A.; Rajeswara Rao, K.V.S. Leveraging Computer Vision for Emergency Vehicle Detection-Implementation and Analysis. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; IEEE: New York, NY, USA, 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Gothankar, N.; Kambhamettu, C.; Moser, P. Circular Hough Transform Assisted CNN Based Vehicle Axle Detection and Classification. In Proceedings of the 2019 4th International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 5–7 September 2019; IEEE: New York, NY, USA, 2019; pp. 217–221. [Google Scholar] [CrossRef]

- Yudin, D.; Sotnikov, A.; Krishtopik, A. Detection of Big Animals on Images with Road Scenes using Deep Learning. In Proceedings of the 2019 International Conference on Artificial Intelligence: Applications and Innovations (IC-AIAI), Belgrade, Serbia, 30 September–4 October 2019; IEEE: New York, NY, USA, 2019; pp. 100–103.

- J3016_202104; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. SAE International: Warrendale, PA, USA, 2021. Available online: https://www.sae.org/standards/content/j3016_202104/ (accessed on 22 August 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).