Surface Reading Model via Haptic Device: An Application Based on Internet of Things and Cloud Environment

Abstract

1. Introduction

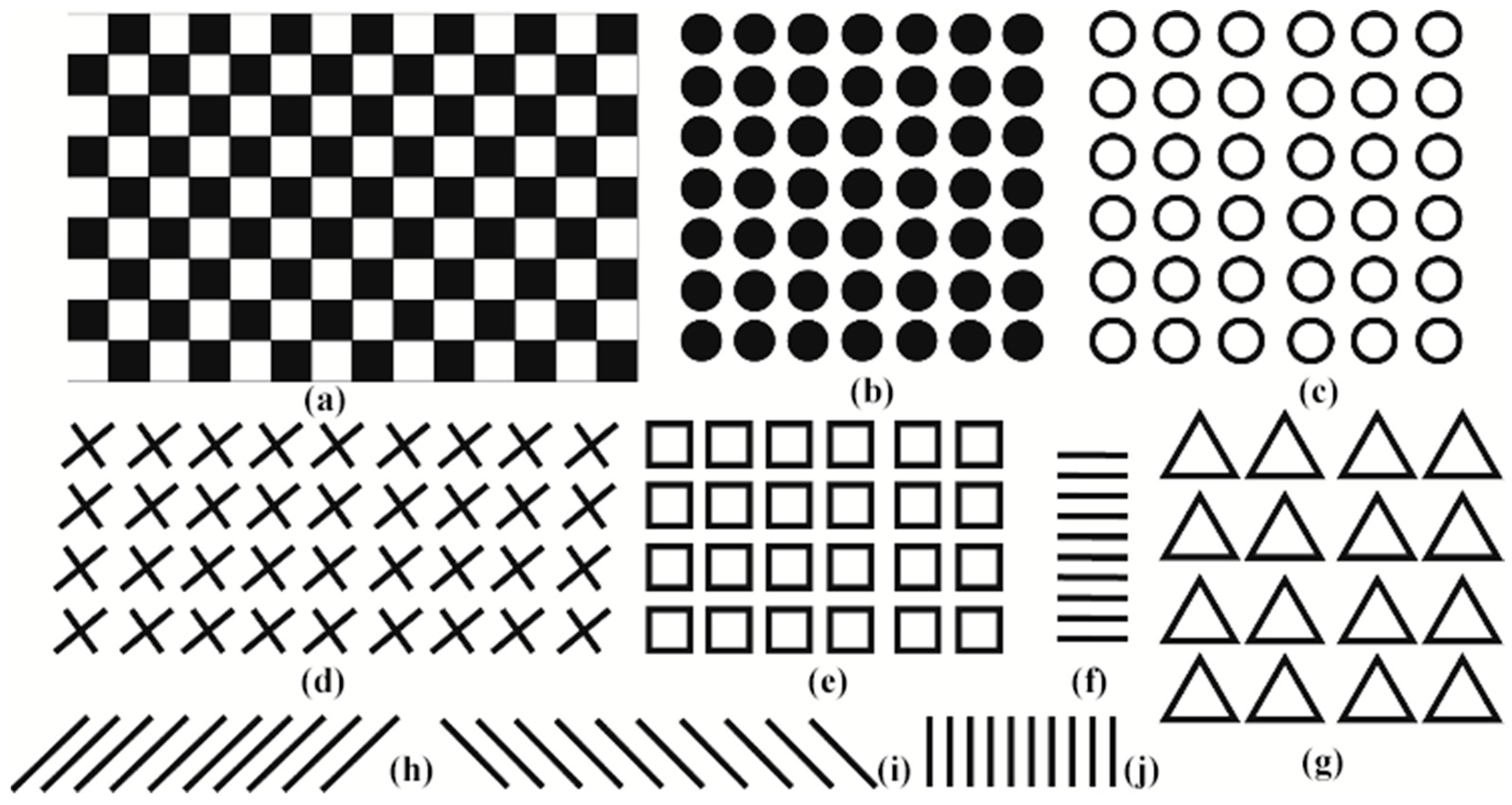

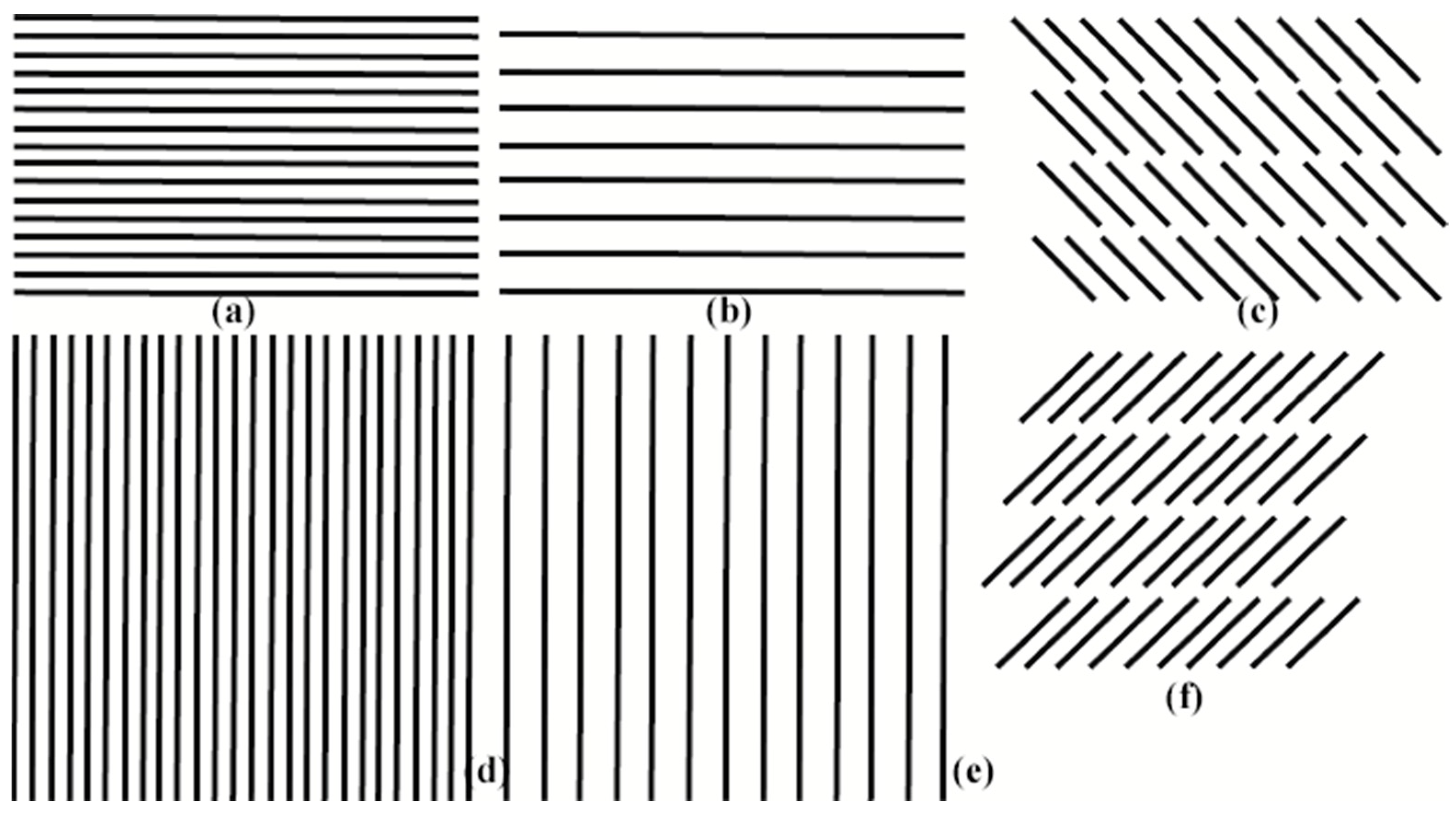

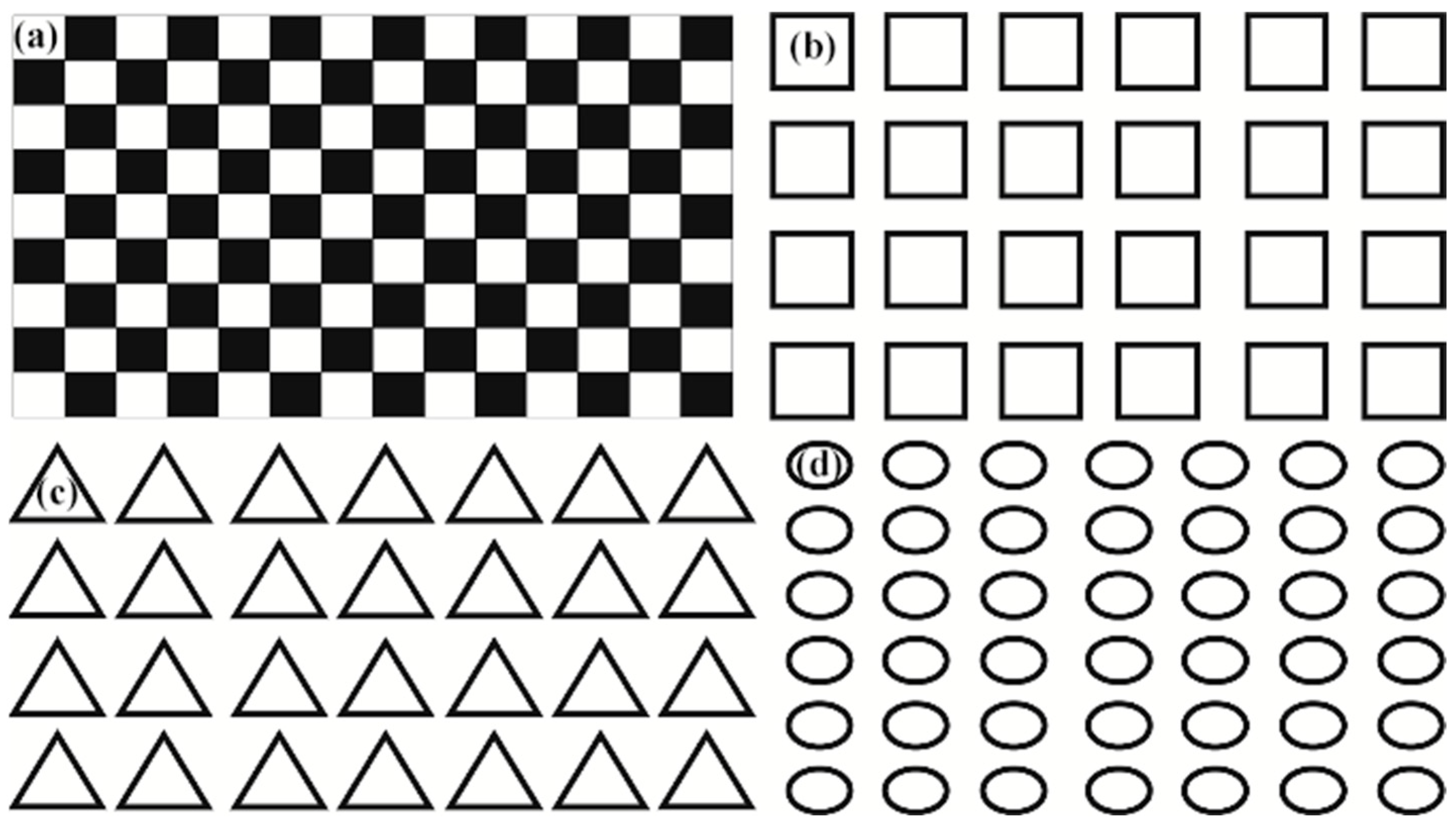

- A novel set of image patterns is proposed that can be classified as distinct textures, so that people with reduced vision can identify them.

- Instances of different surface models are proposed.

- A system is proposed that can serve visually impaired people remotely with the help of IoT haptic devices and a Cloud server.

2. Literature Review

3. Device Installation

3.1. Installation Step-by-Step

- To pair the device, click the Pairing button.

- A time bar (the green bar that gradually empties) appears immediately. Press the Pairing button on the back of the device right away, and make sure you press it before the time bar runs out.

- Finally, a notification confirms that the device was paired successfully. Select Apply, then select OK. As shown below, the indicator turns green once the calibration is finished.

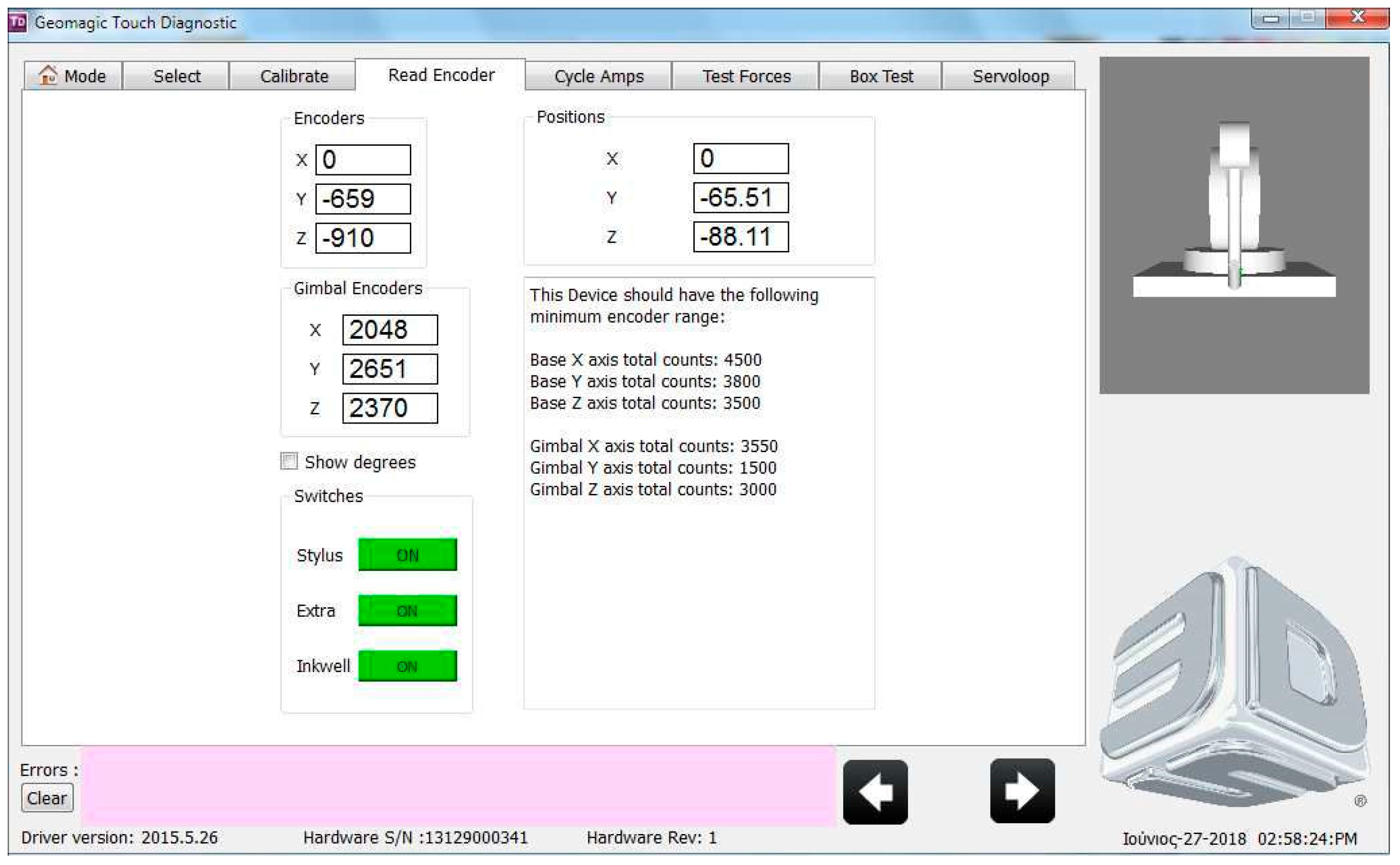

- In this stage, press the two buttons on the haptic device and then, without moving them, click Next. Figure 1 shows the result of pressing the two buttons.

- In this step, simply click Next once both indicators turn green.

- In this stage, you can adjust the forces applied to the haptic device. When you are done, select Next.

- In this stage, you can test the device by pointing the stylus in all directions. Then select Next.

- In the last stage, you can view some haptic device metrics. When you are done, click X to exit the Diagnostic Tool.

3.2. How to Execute the Code

4. Proposed Method

4.1. Problem Evaluation

4.2. Algorithm Approach

Algorithm 1. XML Source Code

    <Scene>
      <Shape>
        <Appearance>
          <Material/>
          <ImageTexture url="Surface1.tif" DEF="IMT" repeatS="false" repeatT="false"/>
        </Appearance>
        <Box DEF="FLOOR" size="0.95 0.49 0"/>
      </Shape>
    </Scene>

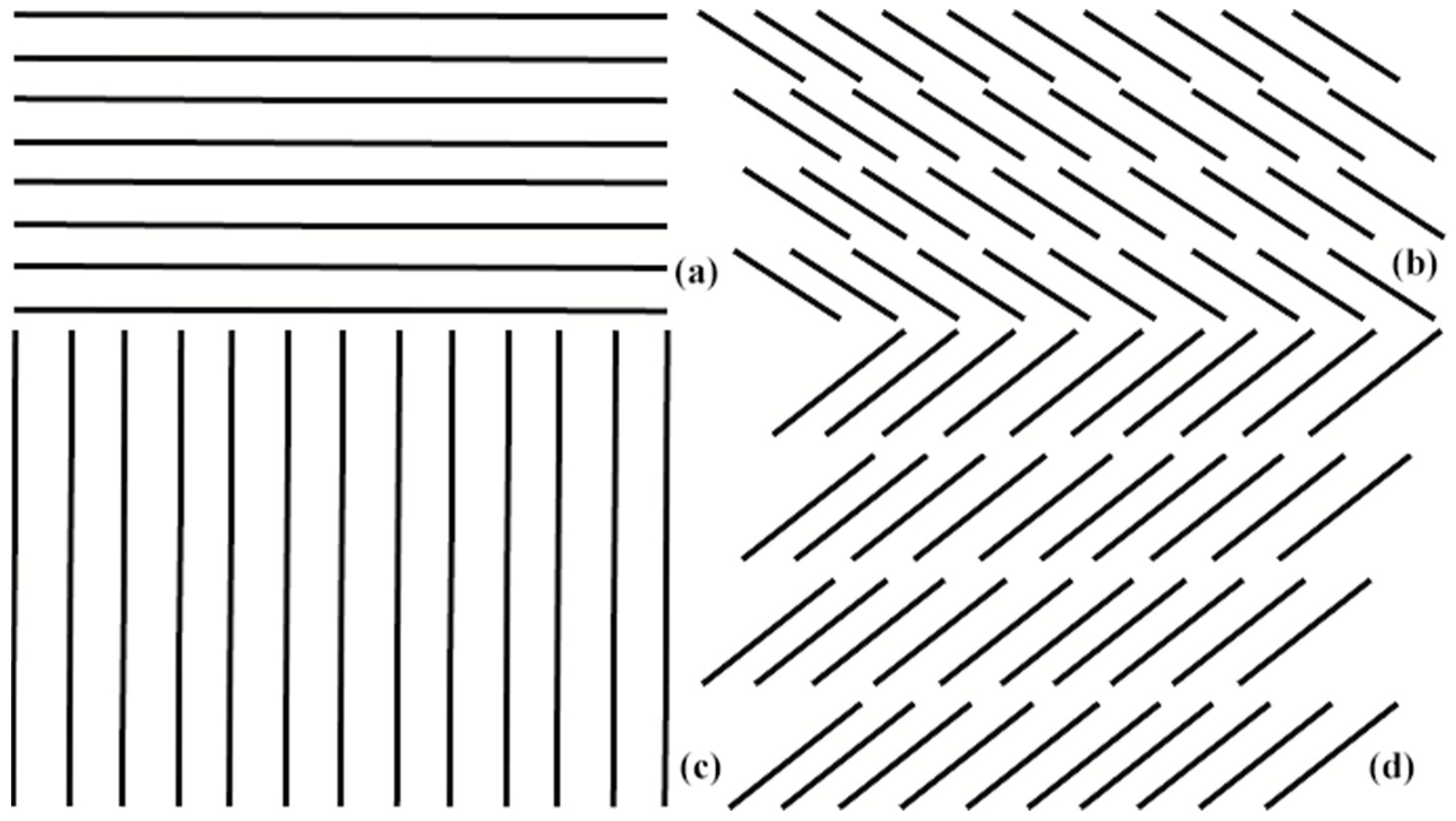
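The scene in Algorithm 1 has a simple fixed structure: one textured box whose image file selects the surface. As a minimal sketch of how such a scene could be generated and inspected programmatically, the Python snippet below rebuilds the same element tree with the standard library. The helper names `build_surface_scene` and `describe_scene` are hypothetical and are not part of the original implementation; only the element names, attributes, and values are taken from Algorithm 1.

```python
import xml.etree.ElementTree as ET


def build_surface_scene(texture_url, size=(0.95, 0.49, 0.0)):
    """Build a <Scene> element with the same structure as Algorithm 1.

    Hypothetical helper: the function name and signature are illustrative.
    """
    scene = ET.Element("Scene")
    shape = ET.SubElement(scene, "Shape")
    appearance = ET.SubElement(shape, "Appearance")
    ET.SubElement(appearance, "Material")
    # The image file that defines the surface pattern.
    ET.SubElement(
        appearance,
        "ImageTexture",
        {"url": texture_url, "DEF": "IMT", "repeatS": "false", "repeatT": "false"},
    )
    # A flat box (zero depth) that carries the texture.
    size_text = " ".join(f"{value:g}" for value in size)
    ET.SubElement(shape, "Box", {"DEF": "FLOOR", "size": size_text})
    return scene


def describe_scene(scene):
    """Read the texture file name and box dimensions back from a scene."""
    texture = scene.find("./Shape/Appearance/ImageTexture")
    box = scene.find("./Shape/Box")
    return {
        "texture_url": texture.get("url"),
        "box_size": tuple(float(value) for value in box.get("size").split()),
    }


if __name__ == "__main__":
    scene = build_surface_scene("Surface1.tif")
    print(ET.tostring(scene, encoding="unicode"))
    print(describe_scene(scene))
```

Passing a different image file name to `build_surface_scene` would produce a scene for another surface while keeping the box geometry unchanged.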

4.3. Image Patterns

5. Experimental Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Emami, M.; Bayat, A.; Tafazolli, R.; Quddus, A. A Survey on Haptics: Communication, Sensing and Feedback. IEEE Commun. Surv. Tutor. 2025, 27, 2006–2050.

2. Antonakoglou, K.; Xu, X.; Steinbach, E.; Mahmoodi, T.; Dohler, M. Toward Haptic Communications Over the 5G Tactile Internet. IEEE Commun. Surv. Tutor. 2018, 20, 3034–3059.

3. Minopoulos, G.; Kokkonis, G.; Psannis, K.E.; Ishibashi, Y. A Survey on Haptic Data Over 5G Networks. Int. J. Future Gener. Commun. Netw. 2019, 12, 37–54.

4. IEEE P1918 Tactile Internet Emerging Technologies Subcommittee. Available online: http://ti.committees.comsoc.org (accessed on 22 February 2025).

5. Oteafy, S.M.A.; Hassanein, H.S. Leveraging Tactile Internet Cognizance and Operation via IoT and Edge Technologies. Proc. IEEE 2019, 107, 364–375.

6. Aijaz, A.; Dohler, M.; Aghvami, A.H.; Friderikos, V.; Frodigh, M. Realizing the Tactile Internet: Haptic Communications over Next Generation 5G Cellular Networks. IEEE Wirel. Commun. 2017, 24, 82–89.

7. Cao, H. Technical Report: What is the next innovation after the Internet of Things? People in Motion Lab (Cisco Big Data Analytics), Department of Geodesy and Geomatics Engineering, University of New Brunswick. arXiv 2017, arXiv:1708.07160.

8. Fettweis, G. The Tactile Internet: Applications and Challenges. IEEE Veh. Technol. Mag. 2014, 9, 64–70.

9. Pacchierotti, C.; Sinclair, S.; Solazzi, M.; Frisoli, A.; Hayward, V.; Prattichizzo, D. Wearable Haptic Systems for the Fingertip and the Hand: Taxonomy, Review, and Perspectives. IEEE Trans. Haptics 2017, 10, 580–600.

10. Simsek, M.; Aijaz, A.; Dohler, M.; Sachs, J.; Fettweis, G. The 5G-Enabled Tactile Internet: Applications, requirements, and architecture. In Proceedings of the 2016 IEEE Wireless Communications and Networking Conference, Doha, Qatar, 1 April 2016; pp. 1–6.

11. The NIST Definition of Cloud Computing; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2011.

12. Skourletopoulos, G.; Mavromoustakis, C.X.; Mastorakis, G.; Batalla, J.M.; Sahalos, J.N. An Evaluation of Cloud-Based Mobile Services with Limited Capacity: A Linear Approach. Soft Comput. J. 2016, 21, 4523–4530.

13. Garg, S.K.; Versteeg, S.; Buyya, R. A framework for ranking of cloud computing services. Future Gener. Comput. Syst. 2013, 29, 1012–1023.

14. Haghighat, M.; Zonouz, S.; Abdel-Mottaleb, M. CloudID: Trustworthy cloud-based and cross-enterprise biometric identification. Expert Syst. Appl. 2015, 11, 7905–7916.

15. Kumar, J. Integration of Artificial Intelligence, Big Data, and Cloud Computing with Internet of Things. In Convergence of Cloud with AI for Big Data Analytics: Foundations and Innovation; Wiley: Hoboken, NJ, USA, 2023; pp. 1–12.

16. Stergiou, C.; Psannis, K.E.; Gupta, B.; Ishibashi, Y. Security, Privacy & Efficiency of Sustainable Cloud Computing for Big Data & IoT. Sustain. Comput. Inform. Syst. 2018, 19, 174–184.

17. Hilbert, M.; López, P. The World’s Technological Capacity to Store, Communicate, and Compute Information. Science 2011, 332, 60–65.

18. Fu, Z.; Ren, K.; Shu, J.; Sun, X.; Huang, F. Enabling Personalized Search over Encrypted Outsourced Data with Efficiency Improvement. IEEE Trans. Parallel Distrib. Syst. 2015, 27, 2546–2559.

19. Stergiou, C.; Psannis, K.E. Efficient and Secure Big Data delivery in Cloud Computing. Multimed. Tools Appl. 2017, 76, 22803–22822.

20. Galego, N.M.C.; Martinho, D.S.; Duarte, N.M. Cloud computing for big data analytics: How cloud computing can handle processing large amounts of data and improve real-time data analytics. Procedia Comput. Sci. 2024, 237, 297–304.

21. Jiménez, M.F.; Mello, R.C.; Bastos, T.; Frizera, A. Assistive locomotion device with haptic feedback for guiding visually impaired people. Med. Eng. Phys. 2020, 80, 18–25.

22. Mueen, A.; Awedh, M.; Zafar, B. Multi-obstacle aware smart navigation system for visually impaired people in fog connected IoT-cloud environment. Health Inform. J. 2022, 28, 146045822211126.

23. Abburu, K.S.; Rout, K.K.; Mishra, S. Haptic Display Unit: IoT For Visually Impaired. In Proceedings of the 2018 International Conference on Information Technology (ICIT), Bhubaneswar, India, 19–21 December 2018; pp. 244–247.

24. Chaudary, B.; Pohjolainen, S.; Aziz, S.; Arhippainen, L.; Pulli, P. Teleguidance-based remote navigation assistance for visually impaired and blind people—Usability and user experience. Virtual Real. 2023, 27, 141–158.

25. Ganesan, J.; Azar, A.T.; Alsenan, S.; Kamal, N.A.; Qureshi, B.; Hassanien, A.E. Deep Learning Reader for Visually Impaired. Electronics 2022, 11, 3335.

26. Bouteraa, Y. Design and Development of a Wearable Assistive Device Integrating a Fuzzy Decision Support System for Blind and Visually Impaired People. Micromachines 2021, 12, 1082.

27. Poggi, M.; Mattoccia, S. A wearable mobility aid for the visually impaired based on embedded 3D vision and deep learning. In Proceedings of the 2016 IEEE Symposium on Computers and Communication (ISCC), Messina, Italy, 27–30 June 2016; pp. 208–213.

28. Rao, S.; Singh, V.M. Computer Vision and IoT Based Smart System for Visually Impaired People. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; pp. 552–556.

29. Farooq, M.S.; Shafi, I.; Khan, H.; Díez, I.D.; Breñosa, J.; Espinosa, J.C.; Ashraf, I. IoT Enabled Intelligent Stick for Visually Impaired People for Obstacle Recognition. Sensors 2022, 22, 8914.

30. See, A.R.; Costillas, L.V.M.; Advincula, W.D.C.; Bugtai, N.T. Haptic Feedback to Detect Obstacles in Multiple Regions for Visually Impaired and Blind People. Sens. Mater. 2021, 33, 1.

31. Pratticò, D.; Laganà, F.; Oliva, G.; Fiorillo, A.S.; Pullano, S.A.; Calcagno, S.; De Carlo, D.; La Foresta, F. Integration of LSTM and U-Net Models for Monitoring Electrical Absorption With a System of Sensors and Electronic Circuits. IEEE Trans. Instrum. Meas. 2025, 74, 2533311.

| Ref. | Authors | Technology Used | Advantages | Disadvantages |
|---|---|---|---|---|
| [21] | M. Jiménez et al. | Haptic feedback via smart walker | | |
| [22] | A. Mueen et al. | Fog-assisted IoT with Cloud and NS-3 simulation | | |
| [23] | A. K. Srinivas et al. | IoT-based haptic display (refreshable Braille) | | |
| [24] | B. Chaudary et al. | Teleguidance with haptic actuators | | |
| [25] | J. Ganesan et al. | CNN + LSTM for text/image captioning | | |
| [26] | Y. Bouteraa | Wearable device with Fuzzy Logic + ROS | | |
| [27] | M. Poggi & S. Mattoccia | 3D vision + deep learning with CNN | | |
| [28] | S. Rao & V. M. Singh | Smart shoe with sensors + smartphone app | | |
| [29] | M. S. Farooq et al. | Smart IoT-based stick with audio/haptic + GPS | | |
| [30] | A. R. See et al. | Haptic feedback + optimized obstacle detection | | |


| Texture | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| texture 1 (Figure 5a) | | | | | |
| texture 2 (Figure 5b) | | | | | |
| texture 3 (Figure 5c) | | | | | |
| texture 4 (Figure 5d) | | | | | |
| texture 5 (Figure 6a) | | | | | |
| texture 6 (Figure 6b) | | | | | |
| texture 7 (Figure 6c) | | | | | |
| texture 8 (Figure 6d) | | | | | |
| texture 9 (Figure 7a) | | | | | |
| texture 10 (Figure 7b) | | | | | |
| texture 11 (Figure 7c) | | | | | |
| texture 12 (Figure 7d) | | | | | |

| Texture | Candidate 1 | Candidate 2 | Candidate 3 | Candidate 4 | Candidate 5 | Candidate 6 | Candidate 7 | Candidate 8 | Candidate 9 | Candidate 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| texture 1 (Figure 5a) | 5 | 4 | 4 | 4 | 5 | 4 | 4 | 5 | 5 | 4 |
| texture 2 (Figure 5b) | 4 | 4 | 5 | 4 | 5 | 5 | 4 | 5 | 4 | 5 |
| texture 3 (Figure 5c) | 5 | 5 | 3 | 4 | 4 | 3 | 5 | 4 | 5 | 5 |
| texture 4 (Figure 5d) | 4 | 3 | 2 | 3 | 4 | 3 | 3 | 3 | 4 | 3 |
| texture 5 (Figure 6a) | 5 | 5 | 3 | 3 | 3 | 3 | 4 | 4 | 4 | 4 |
| texture 6 (Figure 6b) | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 5 | 4 | 5 |
| texture 7 (Figure 6c) | 3 | 2 | 5 | 4 | 3 | 4 | 5 | 5 | 5 | 5 |
| texture 8 (Figure 6d) | 5 | 3 | 4 | 2 | 3 | 2 | 3 | 3 | 3 | 3 |
| texture 9 (Figure 7a) | 5 | 4 | 5 | 5 | 4 | 5 | 5 | 5 | 4 | 5 |
| texture 10 (Figure 7b) | 3 | 4 | 1 | 1 | 2 | 1 | 3 | 3 | 2 | 2 |
| texture 11 (Figure 7c) | 5 | 5 | 5 | 5 | 4 | 5 | 5 | 5 | 4 | 5 |
| texture 12 (Figure 7d) | 3 | 1 | 1 | 1 | 2 | 2 | 2 | 3 | 3 | 2 |
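The per-candidate scores in the table above can be summarized with a simple average per texture. The Python sketch below is one possible way to do this and is not the authors' analysis: the scores are copied from the table, the helper names `mean_scores` and `rank_textures` are hypothetical, and the code only averages and sorts the 1 to 5 scores without assuming what the scale measures.

```python
# Scores copied from the table above: one list per texture,
# ordered Candidate 1 to Candidate 10.
RATINGS = {
    "texture 1 (Figure 5a)": [5, 4, 4, 4, 5, 4, 4, 5, 5, 4],
    "texture 2 (Figure 5b)": [4, 4, 5, 4, 5, 5, 4, 5, 4, 5],
    "texture 3 (Figure 5c)": [5, 5, 3, 4, 4, 3, 5, 4, 5, 5],
    "texture 4 (Figure 5d)": [4, 3, 2, 3, 4, 3, 3, 3, 4, 3],
    "texture 5 (Figure 6a)": [5, 5, 3, 3, 3, 3, 4, 4, 4, 4],
    "texture 6 (Figure 6b)": [3, 3, 4, 4, 4, 4, 4, 5, 4, 5],
    "texture 7 (Figure 6c)": [3, 2, 5, 4, 3, 4, 5, 5, 5, 5],
    "texture 8 (Figure 6d)": [5, 3, 4, 2, 3, 2, 3, 3, 3, 3],
    "texture 9 (Figure 7a)": [5, 4, 5, 5, 4, 5, 5, 5, 4, 5],
    "texture 10 (Figure 7b)": [3, 4, 1, 1, 2, 1, 3, 3, 2, 2],
    "texture 11 (Figure 7c)": [5, 5, 5, 5, 4, 5, 5, 5, 4, 5],
    "texture 12 (Figure 7d)": [3, 1, 1, 1, 2, 2, 2, 3, 3, 2],
}


def mean_scores(ratings):
    """Return the arithmetic mean score of each texture."""
    return {name: sum(scores) / len(scores) for name, scores in ratings.items()}


def rank_textures(ratings):
    """Return (texture, mean score) pairs sorted from highest to lowest mean."""
    means = mean_scores(ratings)
    return sorted(means.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank_textures(RATINGS):
        print(f"{name}: {score:.1f}")
```

For the scores in the table, texture 11 has the highest mean (4.8) and texture 12 the lowest (2.0).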

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Plageras, A.P.; Stergiou, C.L.; Memos, V.A.; Kokkonis, G.; Ishibashi, Y.; Psannis, K.E. Surface Reading Model via Haptic Device: An Application Based on Internet of Things and Cloud Environment. Electronics 2025, 14, 3185. https://doi.org/10.3390/electronics14163185
