Abstract

This paper introduces a novel system that uses OpenPose for skeleton estimation to enable a tabletop robot to interact with humans proactively. By recognizing upper-body poses from the skeleton information, the robot autonomously approaches individuals and initiates conversations. The contributions of this paper can be summarized as three main points. First, we conducted a comprehensive data collection process, capturing five different table-front poses: looking down, looking at the screen, looking at the robot, resting the head on hands, and stretching both hands. These poses were selected to represent common interaction scenarios. Second, we designed the robot’s dialog content and movement patterns to correspond to the identified table-front poses. By aligning the robot’s responses with the specific pose, we aimed to create a more engaging and intuitive interaction experience for users. Finally, we performed an extensive evaluation of three classification models for recognizing table-front poses: a non-linear Support Vector Machine (SVM), an Artificial Neural Network (ANN), and a convolutional neural network (CNN). We used an Asus Zenbo Junior robot to acquire images and leveraged OpenPose to extract 12 upper-body skeleton points as input for training the classification models. The experimental results indicate that the ANN model outperformed the other models, demonstrating its effectiveness in pose recognition. Overall, the proposed system showcases the potential of OpenPose for proactive human–robot interaction and indicates its real-world applicability. By combining pose recognition with carefully designed dialog and movement patterns, the tabletop robot engages with humans in a proactive manner.

1. Introduction

Human–robot interaction in a social environment has gained significant attention from researchers. Various studies have explored the capabilities of robots to engage in interactive activities, such as playing games like red light-green light [1], soccer, and rock-paper-scissors [2,3], as well as providing companionship and emotional support [4]. However, these existing robots primarily adopt a passive approach, lacking the ability to proactively interact with humans and understand their needs in near real time. This study aims to address this limitation by enabling robots to engage in proactive interactions with humans.

In real-world desktop environments, challenges such as variable lighting conditions, partial occlusion of keypoints, and a limited field of view complicate the reliable recognition of upper-body poses. These issues necessitate robust yet lightweight pose classification models that can generalize across diverse users and settings.

Recent research has increasingly explored proactive behaviors in human–robot interaction (HRI), including recognizing user intent through body posture and facial cues. For example, Cha et al. [5] proposed a robot that proactively greets users based on gaze direction, while Li et al. [6] used pose estimation to enable robots to initiate interactions during a multiplayer game. These studies underscore the importance of proactive perception for natural and engaging human–robot communication.

Several studies have employed skeleton estimation for near real-time human–robot interaction. For instance, Li et al. [6] used OpenPose to evaluate participants’ pose responses in a “Simon Says” game, while Hsieh et al. [7] developed a rehabilitation system in which Kinect-based skeleton tracking helps a robot respond to patient movements.

Advancements in deep learning have greatly contributed to the progress of human pose estimation. Notably, the Convolutional Pose Machines (CPM) method proposed by Wei et al. in 2016 [8] introduced a sequential convolutional architecture that effectively captures spatial and texture information for accurate human skeleton estimation. Additionally, the stacked hourglass network developed by Newell et al. [9] has been widely adopted for single-person skeleton estimation, as in the work of Yang et al. [10] and Ke et al. [11]. For multi-person skeleton estimation, two primary approaches have emerged. The top-down approach, as employed by Fang et al. [12], first detects each individual and then estimates each person’s pose independently. The bottom-up approach, exemplified by the OpenPose framework proposed by Cao et al. [13,14], first detects all body joints in the image and then assembles them into individual skeletons by associating the joints that belong to the same person.

This paper takes the Asus Zenbo Junior robot, designed for business-oriented applications, and applies it to personal home and work environments. When placed on a regular desktop, the robot’s field of view mainly covers the upper body of individuals. To better understand human activities at the table and enable further interaction, we combine deep learning-based skeleton estimation with a pose classifier to recognize the upper-body poses of individuals seated in front of the table. Through speech and movement, the robot actively engages with people, responding attentively to their actions and needs.

2. Related Work

Pose recognition systems have extensively used OpenPose in recent studies. Rishan et al. [15] developed a yoga pose detection and correction system based on OpenPose. This system captures human movements with a mobile camera and transmits them to the detection system at a resolution of 1280 × 720 pixels and 30 frames per second. By extracting pose features with OpenPose and employing deep learning models such as time-distributed convolutional neural networks (CNNs), long short-term memory (LSTM) networks [16], and softmax regression, the system analyzes frame sequences to predict whether body poses are correct. Chen [17] focused on recognizing classroom students’ sitting postures using an OpenPose-based system. Surveillance cameras in the classroom capture the students, and pose features are extracted using OpenPose. These features are then transformed into image feature maps and analyzed with a CNN [18] to determine whether the sitting postures are correct.

In the context of proactive interaction between robots and humans, Vaufreydaz et al. [19] used multimodal features for early engagement detection via Kinect. Cha et al. [5] designed an interactive robot that proactively greets people using the facial recognition package in Google’s ML Kit. When the robot detects someone looking at it, it initiates a greeting. Building upon the use of skeletal estimation from human bodies for pose prediction and proactive interaction, Li et al. [6] developed a multiplayer game called “Simon Says” involving NAO humanoid robots and humans. The robot announces a specific pose, and players must replicate it. OpenPose is used to extract pose features from the images seen by the robot, which are then converted into image feature maps. A convolutional neural network determines the correctness of the poses, and players who fail to replicate the correct pose are identified and declared out of the game. Hsieh et al. [7] developed an interactive health promotion system that employs Kinect to extract the human body skeleton and identify patients’ postures. Deep neural networks analyze the extracted skeleton to determine correctness. Users engage in rehabilitation therapy games with the Zenbo robot, aiming to increase motivation for recovery. More recently, Lin et al. [20] exploited gesture-informed analysis using foundation models to enable proactive robot responses. Belardinelli [21] also reviewed gaze-based intention estimation techniques, emphasizing anticipatory HRI mechanisms.

The literature review indicates that skeletal estimation of the human body is a feasible basis for pose prediction. The research objective of this paper is therefore to combine OpenPose with an Artificial Neural Network (ANN) classifier to develop an interactive upper-body pose recognition system, implemented on the Zenbo Junior robot. The robot speaks and moves in response to different poses, enabling it to engage proactively with individuals and initiate conversations.

Compared with previous studies that apply CNNs to full-image or feature-map inputs, or that use LSTM-based models, our ANN-based approach operates directly on the compact skeleton features already extracted by OpenPose, offering a more lightweight and robust classification method for near real-time scenarios.

3. Methodology

3.1. System Overview

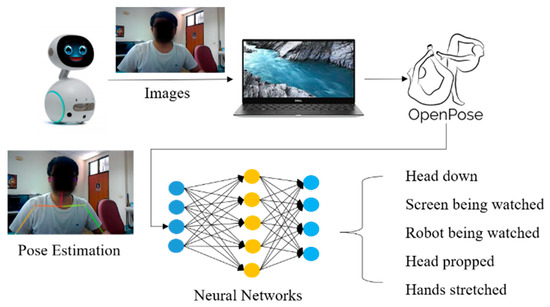

The upper-body pose recognition interactive system consists of two main parts. In the first part, shown in Figure 1, the image captured by Zenbo Junior is transmitted to the server, which uses OpenPose to estimate the body pose from the received image. The resulting pose information is used to train an Artificial Neural Network (ANN) model for pose recognition. Specifically, the system recognizes five poses (head down, looking at the screen, looking at the robot, supporting the head, and stretching both hands) plus an “other” category for all remaining poses; recognized poses trigger responses from the robot. We also collected a training dataset and compared the ANN with other classification models, namely a Support Vector Machine (SVM) and a convolutional neural network (CNN).

Figure 1.

Flow chart of upper-body posture recognition.

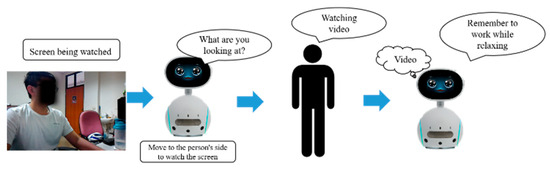

The second part concerns the robot’s interaction behavior. After the robot recognizes the user’s upper-body pose, it moves to the person’s side and speaks. It then waits for the person’s reply, using speech recognition. Once the person responds, the robot interprets what the person is doing and provides a caring response, as shown in Figure 2.

Figure 2.

Flow chart of human–robot interaction.

3.2. Upper-Body Pose Recognition Using Neural Networks

3.2.1. Upper-Body Feature Extraction and Labeling

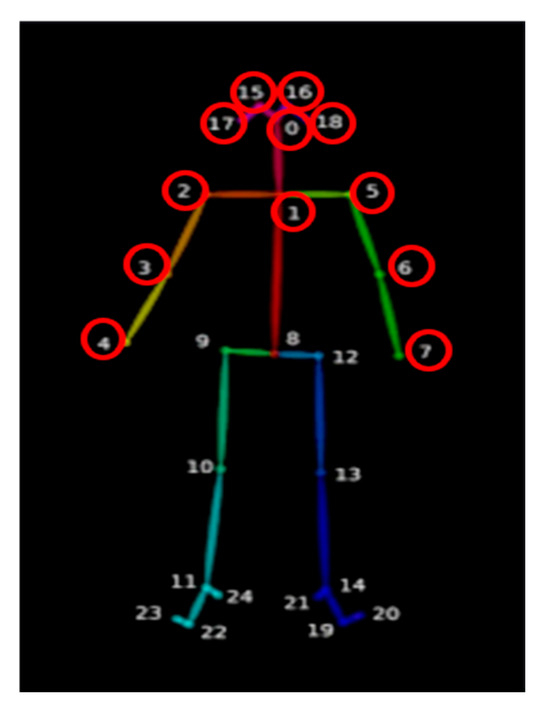

The upper-body pose recognition process begins with the extraction and labeling of relevant features. OpenPose initially provides 25 keypoints for the human body (as shown in Figure 3). Because this study focuses exclusively on the upper body, only 12 of these keypoints are used: 0, 1, 2, 3, 4, 5, 6, 7, 15, 16, 17, and 18. In OpenPose’s 25-keypoint (BODY_25) layout, these correspond to the nose, neck, shoulders, elbows, wrists, eyes, and ears; including point 1 (neck) captures both central and lateral posture dynamics. Each keypoint provides X and Y image coordinates, yielding 24 values per sample for annotation and training. Because of occasional occlusion or motion blur, OpenPose may fail to detect all 12 upper-body keypoints in a given image. In such cases, each missing keypoint is filled with the default coordinate (0, 0), so the input keeps a fixed dimension for model training and inference.
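The keypoint selection and zero-filling described above can be sketched as follows. This is a minimal illustration, assuming the per-person `pose_keypoints_2d` array that OpenPose writes in its JSON output for the BODY_25 model (25 keypoints, each stored as x, y, confidence). The function name `upper_body_features` and the `min_conf` threshold are hypothetical; OpenPose typically reports undetected keypoints as zeros already, and the threshold simply treats low-confidence detections the same way.

```python
# The 12 upper-body keypoints used in the text, in OpenPose BODY_25 order:
# 0 nose, 1 neck, 2-4 right shoulder/elbow/wrist, 5-7 left shoulder/elbow/wrist,
# 15-16 right/left eye, 17-18 right/left ear.
UPPER_BODY_IDS = [0, 1, 2, 3, 4, 5, 6, 7, 15, 16, 17, 18]


def upper_body_features(pose_keypoints_2d, min_conf=0.1):
    """Return a fixed-length list of 24 values (x, y for each of 12 keypoints).

    pose_keypoints_2d: flat list [x0, y0, c0, x1, y1, c1, ...] for 25 keypoints.
    Keypoints whose confidence is below `min_conf` (hypothetical threshold)
    are replaced by (0, 0) so every sample keeps the same dimension.
    """
    if len(pose_keypoints_2d) != 25 * 3:
        raise ValueError("expected 75 values (25 keypoints x 3)")
    features = []
    for idx in UPPER_BODY_IDS:
        x, y, conf = pose_keypoints_2d[3 * idx: 3 * idx + 3]
        if conf < min_conf:
            x, y = 0.0, 0.0
        features.extend([x, y])
    return features


# Usage with a synthetic frame in which only the nose (index 0) was detected.
frame = [0.0] * 75
frame[0:3] = [360.0, 120.0, 0.9]
print(len(upper_body_features(frame)))
```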

Figure 3.

Points of upper body.

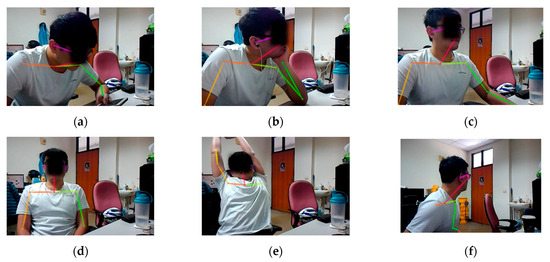

For annotation, five distinct poses are labeled: head down, head propped, screen being watched, robot being watched, and hands stretched. Any other pose is labeled as “Other.” The “head down” pose covers instances where a person looks down at a mobile phone or eats while seated at a computer desk (Figure 4a). The “head propped” pose corresponds to a person supporting their head with their left hand, regardless of whether they are looking at the screen or the robot (Figure 4b). The “screen being watched” pose represents the natural placement of the hands on the table while a person focuses on the screen (Figure 4c). Similarly, the “robot being watched” pose captures a person facing the robot with their hands resting naturally on their thighs (Figure 4d). The “hands stretched” pose corresponds to raising both hands upwards (Figure 4e).

Figure 4.

Five postures of upper body. (a) Head down, (b) head propped, (c) screen being watched, (d) robot being watched, (e) hands stretched, (f) “Other” category: turning the head.

Lastly, any poses not falling within the five defined categories, such as turning the head, looking up, looking sideways, or sitting idly, were grouped into the “Other” category (Figure 4f). These poses are characterized by the absence of task-oriented body alignment and of intentional engagement with the screen or robot. The “Other” class represents neutral or ambiguous postures commonly observed during transitions or brief breaks in desk-based activities.

3.2.2. Artificial Neural Network (ANN) Model Construction

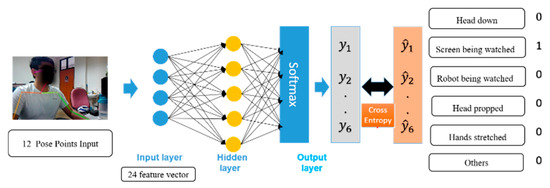

After obtaining the pose estimation points through OpenPose, an Artificial Neural Network architecture for upper-body recognition is established, as shown in Figure 5. Each coordinate of the upper-body keypoints in the image is treated as an input variable $x_i$, giving 24 inputs in total (12 keypoints × 2 coordinates). Each input is multiplied by a weight and propagated to the hidden layer, where each node $j$ receives the weighted sum $z_j$ of the input variables, defined as follows:

$$
z_j = \sum_{i=1}^{24} w_{ij}\, x_i \tag{1}
$$

where $w_{ij}$ is the weight connecting input $i$ to hidden node $j$.

Figure 5.

Training for a neural network architecture of upper-body recognition.

Two activation functions commonly used in the hidden layer are the Rectified Linear Unit (ReLU) and the Sigmoid. ReLU is defined as follows:

$$
f(z) = \max(0, z) \tag{2}
$$

ReLU outputs its input unchanged if the input is positive and 0 otherwise. It introduces non-linearity into the neural network and, because its gradient is 1 for positive inputs, helps mitigate the vanishing-gradient problem.

The Sigmoid, on the other hand, is defined as follows:

$$
\sigma(z) = \frac{1}{1 + e^{-z}} \tag{3}
$$

It maps any real input into the range (0, 1), providing a probability-like output.
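For concreteness, the two activation functions described above can be written in a few lines of Python. This is an illustrative sketch rather than the framework code used in the study (which relied on TensorFlow/Keras); the sigmoid branches on the sign of its input so that `math.exp` is never called with a large positive argument and cannot overflow.

```python
import math


def relu(z):
    """ReLU: pass positive inputs through unchanged, clamp negatives to 0."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid: squash any real input into the interval (0, 1)."""
    # Branch on the sign so math.exp only ever receives a non-positive argument.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


for z in (-2.0, 0.0, 2.0):
    print(z, relu(z), round(sigmoid(z), 4))
```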

For gradient calculation and loss optimization, two optimization algorithms are compared in this study: Stochastic Gradient Descent (SGD) and the Adam optimizer. SGD [22] updates the weight parameters in the direction opposite to the gradient of the loss, scaled by a learning rate:

$$
w \leftarrow w - \eta \, \frac{\partial L}{\partial w} \tag{4}
$$

where $w$ represents the weight parameters, $L$ is the loss function, $\eta$ is the learning rate, and $\partial L / \partial w$ is the gradient of the loss function with respect to the parameters.
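A toy illustration of the SGD update described above, applied to a hypothetical one-parameter loss $L(w) = (w - 3)^2$ instead of the paper’s network. In actual training the gradient would come from backpropagation over a mini-batch; here it is computed analytically.

```python
def sgd_step(w, grad, lr):
    """One SGD update: step against the gradient, scaled by the learning rate."""
    return w - lr * grad


# Hypothetical loss L(w) = (w - 3)^2 with gradient dL/dw = 2 * (w - 3).
w = 0.0
for _ in range(100):
    w = sgd_step(w, 2.0 * (w - 3.0), lr=0.1)
print(round(w, 4))
```

Each step multiplies the distance to the minimum by $1 - 2\eta = 0.8$, so the weight converges to 3.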

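The Adam optimizer [23] combines Momentum and RMSprop to adapt the learning rate of each parameter dynamically. It maintains a momentum (first-moment) estimate and a velocity (second-moment) estimate for each parameter and updates the weights accordingly:

$$
m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \tag{5}
$$

$$
v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \tag{6}
$$

$$
\hat{m}_t = \frac{m_t}{1 - \beta_1^t} \tag{7}
$$

$$
\hat{v}_t = \frac{v_t}{1 - \beta_2^t} \tag{8}
$$

$$
w_t = w_{t-1} - \eta \, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon} \tag{9}
$$

where $g_t = \partial L / \partial w$ is the gradient at step $t$, $\eta$ is the learning rate, $\beta_1$ and $\beta_2$ are the exponential decay rates, $m_t$ and $v_t$ are the momentum and velocity computed in the manner of Momentum and RMSprop, respectively, $\hat{m}_t$ and $\hat{v}_t$ are the bias-corrected versions of $m_t$ and $v_t$, and $\epsilon$ is a small value used to prevent division by zero.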
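The Adam update described above can be sketched for a single scalar weight as follows. The default hyperparameters ($\beta_1 = 0.9$, $\beta_2 = 0.999$, $\epsilon = 10^{-8}$) are the values suggested in the original Adam paper [23]; the learning rate and the toy quadratic loss are illustrative assumptions, not the settings listed in Table 2.

```python
import math


def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar weight at step t (t starts at 1).

    Returns the new weight and the updated first/second moment estimates.
    """
    m = beta1 * m + (1 - beta1) * grad          # first moment (momentum)
    v = beta2 * v + (1 - beta2) * grad * grad   # second moment (RMSprop-style)
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v


# Hypothetical loss L(w) = (w - 3)^2 with gradient 2 * (w - 3); minimum at w = 3.
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 5001):
    w, m, v = adam_step(w, 2.0 * (w - 3.0), m, v, t, lr=0.01)
print(round(w, 3))
```

On the first step the bias correction gives $\hat{m}_1 = g_1$ and $\hat{v}_1 = g_1^2$, so the weight moves by almost exactly the learning rate regardless of the gradient’s magnitude; this per-parameter normalization is what distinguishes Adam from plain SGD.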

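Each hidden-layer output is then multiplied by a weight and propagated to the output layer. Each node in the output layer receives the weighted sum of the hidden-layer outputs, as defined in Equation (10):

$$
o_k = \sum_{j} u_{jk}\, h_j \tag{10}
$$

where $h_j$ is the activated output of hidden node $j$ and $u_{jk}$ is the weight connecting hidden node $j$ to output node $k$.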

Finally, a SoftMax classifier is applied to the output layer, normalizing the outputs into probability values between 0 and 1 that sum to 1. The SoftMax equation is as follows [24]:

$$
\hat{y}_k = \frac{e^{o_k}}{\sum_{c=1}^{C} e^{o_c}} \tag{11}
$$

where $o_k$ represents the output value of node $k$ in the output layer and $C$ is the number of classes (six in this study). The outputs of all output-layer nodes pass through the exponential function and are summed to form the denominator, while each individual exponentiated output serves as the numerator, yielding the predicted class probability $\hat{y}_k$.
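The forward pass described above (weighted sums into a hidden layer, a non-linear activation, a second weighted sum, and SoftMax) can be sketched in plain Python as follows. The layer sizes (24 inputs, 32 hidden ReLU units, 6 output classes) follow the text, but the weights are random placeholders rather than trained values, and the bias terms are an assumption (fully connected layers in Keras include them by default).

```python
import math
import random


def dense(inputs, weights, biases):
    """Weighted sum per node: out_j = sum_i weights[i][j] * inputs[i] + biases[j]."""
    return [
        sum(x * weights[i][j] for i, x in enumerate(inputs)) + biases[j]
        for j in range(len(biases))
    ]


def softmax(logits):
    """Normalize raw outputs into probabilities that sum to 1."""
    m = max(logits)                        # subtract the max for numerical stability
    exps = [math.exp(o - m) for o in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """One hidden ReLU layer followed by a SoftMax output layer."""
    hidden = [max(0.0, z) for z in dense(x, w_hidden, b_hidden)]
    return softmax(dense(hidden, w_out, b_out))


# Untrained, randomly initialized network with the sizes given in the text.
random.seed(0)
x = [random.random() for _ in range(24)]
w_h = [[random.gauss(0, 0.1) for _ in range(32)] for _ in range(24)]
b_h = [0.0] * 32
w_o = [[random.gauss(0, 0.1) for _ in range(6)] for _ in range(32)]
b_o = [0.0] * 6
probs = forward(x, w_h, b_h, w_o, b_o)
print(len(probs), round(sum(probs), 6))
```

Subtracting the largest output before exponentiating does not change the result, because the common factor cancels between numerator and denominator, but it prevents overflow for large outputs.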

The predicted class probabilities obtained through SoftMax are then compared with the true class probabilities $y_k$ to calculate the loss. In this paper, categorical cross-entropy [25] is employed as the loss function, defined as follows:

$$
L = -\sum_{k=1}^{C} y_k \log \hat{y}_k \tag{12}
$$

where $\hat{y}_k$ represents the predicted probability distribution and $y_k$ the actual probability distribution. The loss measures the discrepancy between the predicted and actual probability distributions.

The true class labels are one-hot encoded: each class is represented as a vector with a 1 at the position of that class and 0 elsewhere.

For example, if the observed label is “2”, its one-hot representation is (0, 1, 0, 0, 0, 0). Each true probability value is multiplied by the logarithm of the corresponding predicted probability, these products are summed over all classes, and the sum is negated to give the value of the loss function. With one-hot labels, only the term for the true class remains, so the loss reduces to the negative logarithm of the probability predicted for that class.
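The worked example above can be checked numerically. This sketch assumes six classes indexed from 1 (five poses plus “Other”; the class ordering is an assumption), and the predicted probabilities are hypothetical values, not model outputs from this study.

```python
import math

NUM_CLASSES = 6  # five poses plus "Other" (class ordering assumed)


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode a 1-based class label as a one-hot list."""
    vec = [0] * num_classes
    vec[label - 1] = 1
    return vec


def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Negated sum of true probabilities times log predicted probabilities.

    `eps` clips predictions away from 0 so that log(0) is never evaluated.
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


y_true = one_hot(2)                              # label "2" -> [0, 1, 0, 0, 0, 0]
y_pred = [0.05, 0.70, 0.10, 0.05, 0.05, 0.05]    # hypothetical SoftMax output
loss = categorical_cross_entropy(y_true, y_pred)
print(y_true, round(loss, 4))
```

Because only the true-class term survives, the loss here equals $-\log 0.70 \approx 0.357$; a confident wrong prediction would make it much larger.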

A separate classification model was used to allow modularity between skeleton extraction and pose classification. This design enables independent tuning of the classification model without modifying the OpenPose architecture, facilitating lightweight deployment and flexibility in updating the pose-recognition logic.

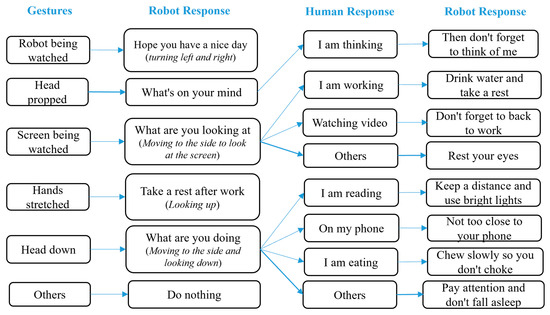

3.3. Active Care System for Zenbo Junior

During the interaction with the robot, the main focus is on actively recognizing the human’s posture and responding in a caring manner. The designed actions and responses of the robot are based on the five postures mentioned earlier: bowing the head, looking at the screen, looking at the robot, propping up the head, and stretching hands. The complete process is illustrated in Figure 6.

Figure 6.

Robot’s actions and responses for upper-body postures.

These five postures commonly occur in a typical desk-based working environment and involve static positions. The robot’s response process upon detecting these postures is as follows:

- Bowing the head: When people engage in activities such as using their smartphones, having a meal, or reading a book, they often bow their heads. Upon recognizing the posture of bowing the head, the robot moves in front of the person, lowers its head to look at the person, and asks, “What are you doing?” The robot activates its voice recognition function and provides appropriate reminders based on the person’s response.

- Looking at the screen: When people are focused on their screens while working, they assume a posture of looking at the screen. When the robot recognizes this posture, it moves next to the person, looks at the screen, and asks, “What are you looking at?” The robot activates its voice recognition function and offers reminders or suggestions based on the person’s response.

- Looking at the robot: When people look at the robot, they assume a posture of looking at the robot. Upon recognizing this posture, the robot rotates left and right and responds, “Wishing you a wonderful day.”

- Propping up the head: When people are engaged in deep thinking or pondering, they assume a posture of propping up their head. When the robot detects this posture, it asks, “What are you thinking about?” The robot activates its voice recognition function and responds accordingly to the person’s input.

- Stretching hands: When people have been working for a while and want to relax, they assume a posture of stretching their hands. Upon recognizing this posture, the robot looks up and reminds the person to take proper rest.

The system infers user intention in a rule-based manner by associating each classified upper-body pose with pre-designed robot actions. For instance, a ‘head-down’ pose is interpreted as disengagement, prompting the robot to approach the user and ask, ‘What are you doing?’ This rule-based mapping allows the robot to simulate intentional interpretation without requiring complex cognitive modeling. It is important to note that while some poses trigger a single predefined response, others may lead to multiple possible actions depending on user feedback. Through this mechanism, the Zenbo Junior robot actively recognizes and responds to user postures, enhancing the overall interactive experience and providing caring support in desk-based environments.
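The rule-based mapping can be expressed as a lookup table from the classified pose to a scripted behavior. The sketch below is hypothetical: the `Response` structure and `respond` function are illustrative names, it does not call the Zenbo Junior SDK, and the wording of the “hands stretched” reminder is a placeholder because the text does not quote it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Response:
    """One scripted robot behavior (hypothetical structure, not the Zenbo SDK)."""
    motion: str      # description of the robot's movement
    utterance: str   # what the robot says
    listen: bool     # whether speech recognition is activated afterwards


# Pose label -> scripted behavior, paraphrasing the rules listed above.
RULES = {
    "head down": Response("move in front of the user and lower its head",
                          "What are you doing?", True),
    "screen being watched": Response("move next to the user and look at the screen",
                                     "What are you looking at?", True),
    "robot being watched": Response("rotate left and right",
                                    "Wishing you a wonderful day.", False),
    "head propped": Response("none specified",
                             "What are you thinking about?", True),
    "hands stretched": Response("look up",
                                "Remember to take a proper rest.", False),  # placeholder wording
}


def respond(pose: str) -> Optional[Response]:
    """Return the scripted response for a recognized pose.

    Poses without a rule (e.g. "other") return None, i.e. the robot stays idle;
    this fallback is an assumption, not something the text specifies.
    """
    return RULES.get(pose)


reply = respond("head down")
if reply is not None:
    print(reply.motion, "|", reply.utterance, "| listen:", reply.listen)
```

Keeping the rules in a table means responses can be edited without retraining the pose classifier.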

4. Experiments and Results

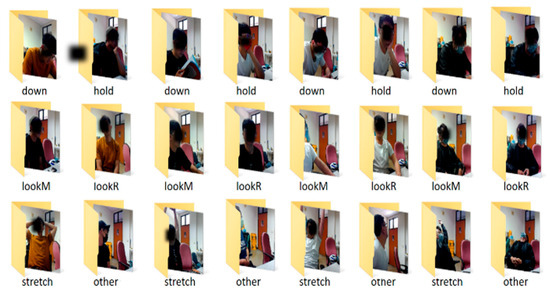

4.1. Data Collection

The dataset used in this paper was collected in collaboration with partners from the Artificial Intelligence Laboratory of the Department of Computer and Communication Engineering at National Kaohsiung University of Science and Technology (NKUST). Images were captured by Zenbo Junior and transmitted to the server over a socket connection as .png files with a resolution of 720 × 480 pixels. The dataset consists of images of four individuals in six pose categories: bowing the head, looking at the screen, looking at the robot, propping up the head, stretching hands, and others (Figure 7). For each individual, each pose category contains 300 images, giving 4 × 6 × 300 = 7200 images in total. During the experiment, Zenbo Junior was positioned at the right front of the tabletop, facing the person. Images were collected at a rate of one image every three seconds, for a total collection time of 900 s per pose and individual (900 s ÷ 3 s = 300 images). The ground-truth label of each pose is encoded as a one-hot vector. The dataset was collected in a typical office environment with mixed lighting sources. Natural variation in brightness and occasional occlusion (e.g., partial body or face coverage during motion) were retained to simulate real-world interaction conditions.

Figure 7.

Dataset of upper-body postures.

To evaluate the model’s generalization capability, we adopted a subject-independent validation scheme. Specifically, the dataset consisted of images from four participants, with 5400 samples from three individuals used for training and 1800 samples from the remaining individual used for testing. This ensures that the model is evaluated on previously unseen subjects, closely reflecting real-world human–robot interaction scenarios where the robot must recognize poses from new users. To enhance reliability, the process was repeated across four folds, such that each participant served once as the test subject.
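The subject-independent protocol described above amounts to leave-one-subject-out cross-validation. A minimal sketch, assuming each sample is tagged with the index of the participant it came from; scikit-learn’s `LeaveOneGroupOut` provides the same split if that library is available.

```python
def leave_one_subject_out(subject_ids):
    """Yield (test_subject, train_indices, test_indices), one fold per subject."""
    for test_subject in sorted(set(subject_ids)):
        train_idx = [i for i, s in enumerate(subject_ids) if s != test_subject]
        test_idx = [i for i, s in enumerate(subject_ids) if s == test_subject]
        yield test_subject, train_idx, test_idx


# Hypothetical sample layout matching the counts in the text:
# 4 participants x 6 classes x 300 images = 7200 samples.
subjects = [p for p in range(4) for _ in range(6 * 300)]
for subject, train_idx, test_idx in leave_one_subject_out(subjects):
    print(subject, len(train_idx), len(test_idx))
```

Each fold trains on 5400 samples from three participants and tests on the 1800 samples of the held-out participant, so no individual ever appears in both sets.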

4.2. Experimental Setting

The experimental setup involved two main components: the server-side framework and devices, and the robot-side components (Table 1). On the server side, models were developed with TensorFlow [26], Google’s deep learning framework, and Keras [27], a high-level deep learning library. OpenPose was used to acquire skeleton information, and the models were trained on these data. On the robot side, Android Studio was used to develop the application that captures the robot’s images and controls its actions and speech.

Table 1.

Experimental hardware and software.

During training, 5-fold cross-validation was conducted on the training dataset to ensure robustness and reduce variance in performance evaluation. The training set of 5400 samples was first divided 80:20 into training and validation portions (4320 and 1080 samples, respectively). The 4320-sample training portion then underwent 5-fold cross-validation. This partitioning helped monitor the training process and identify signs of overfitting.
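A sketch of this partitioning, assuming a plain random shuffle for the 80:20 split and contiguous, unstratified folds (the text does not state whether shuffling or stratification was used). scikit-learn’s `train_test_split` and `KFold` offer equivalent, more featureful versions.

```python
import random


def split_train_val(indices, val_fraction=0.2, seed=0):
    """Shuffle the indices and hold out a fraction of them for validation."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def k_fold(indices, k=5):
    """Yield (train, held_out) index lists for k contiguous, near-equal folds."""
    n = len(indices)
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        held_out = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, held_out
        start += size


train_idx, val_idx = split_train_val(range(5400))      # 4320 / 1080 samples
for fold, (tr, ho) in enumerate(k_fold(train_idx, k=5)):
    print(fold, len(tr), len(ho))                       # 3456 / 864 per fold
```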

The ReLU (Rectified Linear Unit) activation function was used together with the Adam (Adaptive Moment Estimation) optimizer. The hidden layers consisted of 32 neurons; the other parameter settings are listed in Table 2. The experiments compare activation functions (ReLU vs. Sigmoid), optimizers (Adam vs. Stochastic Gradient Descent, SGD), and hidden-layer sizes (32 vs. 64 neurons). They also compare the ANN used in this study with a traditional non-linear Support Vector Machine (SVM) classifier and with the convolutional neural network (CNN) method used in the related literature.

Table 2.

Parameter settings of training model.

4.3. Experimental Results

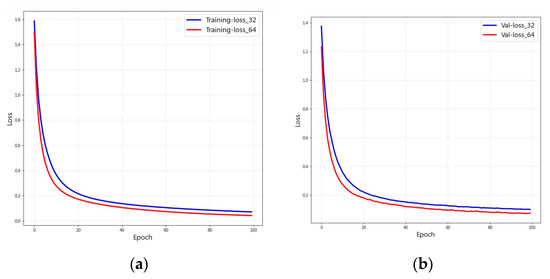

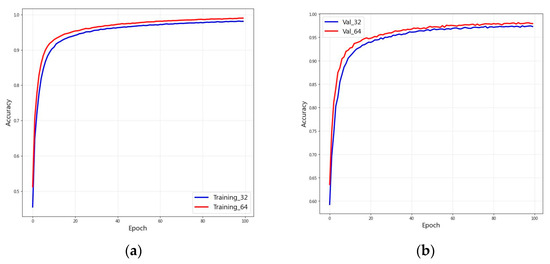

When comparing 32 and 64 neurons in the hidden layer, we observed the training loss (Figure 8a) and validation loss (Figure 8b), as well as the training accuracy (Figure 9a) and validation accuracy (Figure 9b). Although 64 neurons yielded a slightly lower loss, there was no significant difference in accuracy. Because 32 neurons performed comparably while training faster (9.4541 s with 32 neurons vs. 10.4302 s with 64 neurons), all subsequent experiments used 32 neurons.
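To put the 32- versus 64-neuron comparison in perspective, the number of trainable parameters can be counted directly. This assumes a single fully connected hidden layer with bias terms between the 24 inputs and the 6 output classes, which is an assumption about the exact architecture summarized in Table 2.

```python
def dense_params(n_in, n_out):
    """Weights plus one bias per output unit of a fully connected layer."""
    return n_in * n_out + n_out


def mlp_params(n_inputs=24, n_hidden=32, n_classes=6):
    """Trainable parameters of a single-hidden-layer classifier (assumed layout)."""
    return dense_params(n_inputs, n_hidden) + dense_params(n_hidden, n_classes)


for hidden in (32, 64):
    print(hidden, mlp_params(n_hidden=hidden))
```

Under these assumptions, doubling the hidden width roughly doubles the parameter count (998 to 1990), while the measured training time rose by only about 10% (9.4541 s to 10.4302 s); both networks are very small by deep learning standards.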

Figure 8.

Loss value for different neurons. (a) Training. (b) Validation.

Figure 9.

Accuracy for different neurons. (a) Training. (b) Validation.

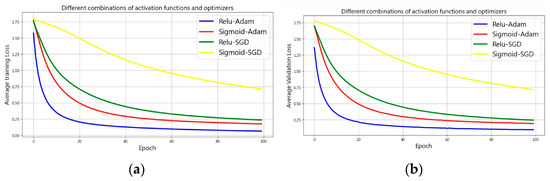

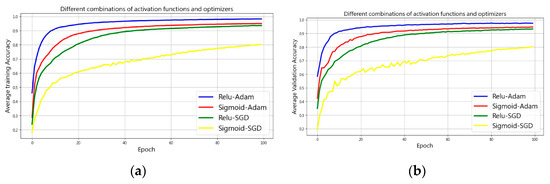

Comprehensive experiments were conducted comparing different combinations of activation functions and optimizers. The results showed variations in loss values during training (Figure 10a) and validation (Figure 10b), as well as changes in accuracy during training (Figure 11a) and validation (Figure 11b). Among these combinations, using the ReLU activation function and the Adam optimizer yielded the best results, while using the Sigmoid activation function and the SGD optimizer resulted in the poorest performance.

Figure 10.

Loss value for different combinations of activation functions and optimizers. (a) Training. (b) Validation.

Figure 11.

Accuracy for different combinations of activation functions and optimizers. (a) Training. (b) Validation.

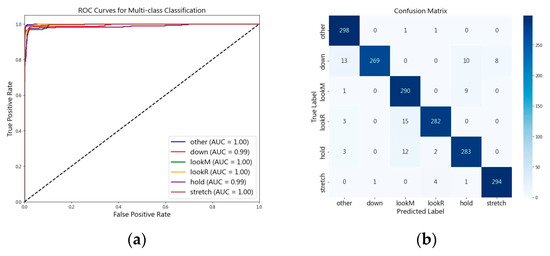

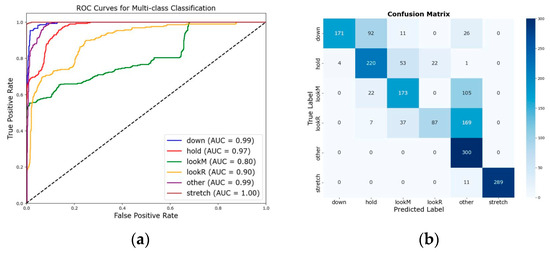

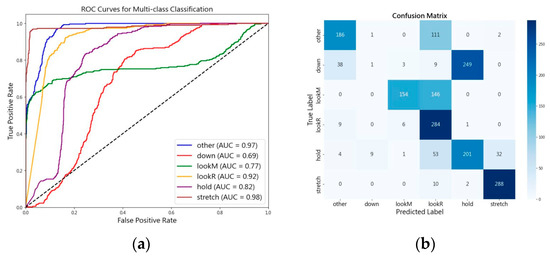

Comparing the test results of the Artificial Neural Network (ANN) and the non-linear Support Vector Machine (SVM) in terms of ROC curves and confusion matrices shows that the ANN consistently outperforms the non-linear SVM. Specifically, the ANN performs better for every class, as shown in Figure 12. In contrast, the non-linear SVM struggles to classify looking at the robot, looking at the screen, and propping up the head correctly, as shown in Figure 13, which lowers its overall average score.

Figure 12.

Upper-body posture recognition with ANN. (a) ROC curve. (b) Confusion matrix.

Figure 13.

Upper-body posture recognition with non-linear SVM. (a) ROC curve. (b) Confusion matrix.

This paper implemented the CNN architecture from Li et al.’s research [17]. Following that approach, images were processed with OpenPose to extract pose features, which were then converted into feature maps for CNN training. According to the ROC curve and confusion matrix in Figure 14, the model performs satisfactorily only for looking at the robot and stretching hands, and it consistently misclassifies the head-down posture, resulting in lower scores than the ANN and SVM methods. A plausible explanation is that the CNN’s inferior performance stems not only from the nature of the input features but also from a mismatch between the architecture and the spatially sparse input data. Unlike dense image inputs, skeletal data lack the spatial continuity that CNN kernels exploit, leading to suboptimal feature learning. As a result, the CNN model performed worse than the other two classification models.

Figure 14.

Upper-body posture recognition with CNN. (a) ROC curve. (b) Confusion matrix.

To provide a more comprehensive evaluation of model performance, we report additional metrics including precision, recall, and F1-score, alongside accuracy. These indicators are crucial for assessing classifier effectiveness, particularly in multi-class classification settings. The final evaluation of the ANN, non-linear SVM, and CNN models is shown in Table 3. The ANN model achieved the highest scores across all metrics, with an F1-score of 0.95, a precision of 0.95, a recall of 0.95, and an accuracy of 0.95. In contrast, the non-linear SVM attained an F1-score of 0.68 and an accuracy of 0.69 while the CNN model showed the lowest performance with an F1-score of 0.57 and an accuracy of 0.56.
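The metrics in Table 3 can be derived from a confusion matrix. The paper does not state how per-class scores were averaged, so this sketch assumes macro averaging (an unweighted mean over classes), which is what scikit-learn’s `precision_recall_fscore_support` computes with `average="macro"`. The confusion matrix in the usage example is hypothetical, not data from this study.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of samples with true class i predicted as class j."""
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, actually other
        fn = sum(cm[k]) - tp                        # actually k, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append((precision, recall, f1))
    return metrics


def macro_average(cm):
    """Unweighted mean of per-class precision, recall, F1, plus overall accuracy."""
    per_class = per_class_metrics(cm)
    n = len(per_class)
    precision = sum(m[0] for m in per_class) / n
    recall = sum(m[1] for m in per_class) / n
    f1 = sum(m[2] for m in per_class) / n
    accuracy = sum(cm[k][k] for k in range(n)) / sum(map(sum, cm))
    return precision, recall, f1, accuracy


# Hypothetical 3-class confusion matrix (not data from this paper).
cm = [[8, 2, 0],
      [1, 9, 0],
      [0, 0, 10]]
precision, recall, f1, accuracy = macro_average(cm)
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f} Acc={accuracy:.3f}")
```

When every class has the same number of test samples, as in the subject-independent split where each class contributes 300 test images, macro and support-weighted averages coincide.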

Table 3.

Evaluation results of the ANN, non-linear SVM, and CNN models.

In practice, the average end-to-end inference time per interaction, including image capture, pose estimation, and classification, was approximately 2 s, which we consider near real-time and suitable for desk-based interaction scenarios. The delay ranged from 1.8 to 2.7 s, with the upper bound observed under strong backlighting, indicating that ambient lighting affects processing stability and should be considered when deploying the system. Kinect-based systems are often reported to have delays of 1.5–3 s, depending on preprocessing load. Our system’s 2 s response time falls within this range and remains acceptable for interactions involving static upper-body postures and short response intervals in a seated environment.

5. Conclusions and Future Work

This work presents the development of an interactive system for the Zenbo Junior robot based on upper-body pose recognition. The system successfully recognizes five different poses: head lowered, looking at the screen, looking at the robot, head propped, and hands stretched. Through speech and movement, the robot actively engages with individuals, providing care and participating in conversations.

The experimental design used OpenPose to extract skeletal information from images, taking the 12 upper-body keypoints as input features for training a neural network classifier. Training employed the ReLU activation function, the Adam optimizer, and a hidden layer with 32 neurons. Comparative results demonstrate that the proposed Artificial Neural Network (ANN) model outperforms both a non-linear SVM and the CNN model adopted from the related literature.

The current rule-based mapping enables deterministic behavior, but it lacks feedback adaptation and may not align with user expectations. Future work will therefore involve user studies to assess acceptance and will explore reinforcement learning and adaptive or supervised dialog-generation models, enabling the robot to adjust its responses dynamically based on user feedback and behavior patterns.

Additional poses can be incorporated to expand the range of recognized gestures and enable the robot to produce more diverse responses. Furthermore, we plan to integrate object recognition—specifically by employing a real-time detection algorithm such as YOLO—into the system. By recognizing objects like smartphones, utensils, or books in conjunction with upper-body pose, the robot can more accurately infer users’ ongoing activities (e.g., eating, reading, or using a mobile device). This multimodal approach enhances contextual awareness and reduces reliance on follow-up queries, thereby improving response precision and user experience.

The developed system shows promising results in the field of upper-body pose recognition and robot interaction. By further expanding the repertoire of poses and incorporating advanced recognition capabilities, the system can enhance its effectiveness and responsiveness in future iterations.

Author Contributions

Conceptualization, S.-H.T. and H.-P.Y.; methodology, S.-H.T. and J.-C.C.; software, J.-C.C.; validation, S.-H.T., J.-C.C. and C.-E.S.; formal analysis, S.-H.T. and H.-P.Y.; investigation, J.-C.C. and C.-E.S.; resources, J.-C.C. and C.-E.S.; data curation, J.-C.C. and C.-E.S.; writing—original draft preparation, J.-C.C. and C.-E.S.; writing—review and editing, S.-H.T. and H.-P.Y.; visualization, J.-C.C. and C.-E.S.; supervision, S.-H.T. and H.-P.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Science Council, R.O.C. under Grant NSC 107-2923-S-002-001-MY3.

Data Availability Statement

The data presented in this study will be made available in an anonymized format upon reasonable request to the corresponding author. The dataset will also be deposited in a public repository upon acceptance.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sakai, K.; Hiroi, Y.; Ito, A. Playing with a Robot: Realization of “Red Light, Green Light” Using a Laser Range Finder. In Proceedings of the 2015 Third International Conference on Robot, Vision and Signal Processing (RVSP), Kaohsiung, Taiwan, 18–20 November 2015.

- Brock, H.; Sabanovic, S.; Nakamura, K.; Gomez, R. Robust real-time hand gestural recognition for non-verbal communication with tabletop robot Haru. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 891–898.

- Brock, H.; Chulani, J.P.; Merino, L.; Szapiro, D.; Gomez, R. Developing a Lightweight Rock-Paper-Scissors Framework for Human-Robot Collaborative Gaming. IEEE Access 2020, 8, 202958–202968.

- Hsu, R.C.; Lin, Y.-P.; Lin, C.-J.; Lai, L.-S. Humanoid robots for searching and kicking a ball and dancing. In Proceedings of the 2020 IEEE Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 23–25 October 2020; pp. 385–387.

- Cha, N.; Kim, I.; Park, M.; Kim, A.; Lee, U. HelloBot: Facilitating Social Inclusion with an Interactive Greeting Robot. In Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers, Singapore, 8–12 October 2018; pp. 21–24.

- Li, C.; Imeokparia, E.; Ketzner, M.; Tsahai, T. Teaching the NAO robot to play a human-robot interactive game. In Proceedings of the 2019 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 5–7 December 2019; pp. 712–715.

- Hsieh, C.-F.; Lin, Y.-R.; Lin, T.-Y.; Lin, Y.-H.; Chiang, M.-L. Apply Kinect and Zenbo to Develop Interactive Health Enhancement System. In Proceedings of the 2019 8th International Conference on Innovation, Communication and Engineering (ICICE), Zhengzhou, China, 25–30 October 2019; pp. 165–168.

- Wei, S.-E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional pose machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4724–4732.

- Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 483–499.

- Yang, W.; Li, S.; Ouyang, W.; Li, H.; Wang, X. Learning feature pyramids for human pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1281–1290.

- Ke, L.; Chang, M.-C.; Qi, H.; Lyu, S. Multi-scale structure-aware network for human pose estimation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 713–728.

- Fang, H.-S.; Xie, S.; Tai, Y.-W.; Lu, C. RMPE: Regional multi-person pose estimation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2334–2343.

- Cao, Z.; Simon, T.; Wei, S.-E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.

- Rishan, F.; De Silva, B.; Alawathugoda, S.; Nijabdeen, S.; Rupasinghe, L.; Liyanapathirana, C. Infinity Yoga Tutor: Yoga Posture Detection and Correction System. In Proceedings of the 2020 5th International Conference on Information Technology Research (ICITR), Moratuwa, Sri Lanka, 2–4 December 2020; pp. 1–6.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chen, K. Sitting posture recognition based on OpenPose. IOP Conf. Ser. Mater. Sci. Eng. 2019, 677, 032057.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Vaufreydaz, D.; Johal, W.; Combe, C. Starting engagement detection towards a companion robot using multimodal features. Robot. Auton. Syst. 2016, 75, 4–16.

- Lin, L.-H.; Cui, Y.; Hao, Y.; Xia, F.; Sadigh, D. Gesture-informed robot assistance via foundation models. In Proceedings of the 7th Annual Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023.

- Belardinelli, A. Gaze-based intention estimation: Principles, methodologies, and applications in HRI. ACM Trans. Hum.-Robot Interact. 2024, 13, 1–30.

- Recht, B.; Ré, C.; Wright, S.; Niu, F. Hogwild!: A lock-free approach to parallelizing stochastic gradient descent. Adv. Neural Inf. Process. Syst. 2011, 24, 1–9.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep feedforward networks. In Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; MIT Press: Cambridge, MA, USA, 2012.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

- Chollet, F. Deep Learning with Python; Simon and Schuster: New York, NY, USA, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).