1. Introduction

Artificial intelligence (AI) has become pervasive in almost all application domains, from everyday life services to various industries, enabling more efficient and personalized interactions, new features, enhanced system autonomy, and predictive capabilities based on historical and real-time data. AI algorithms are based on increasingly complex models and mainly leverage artificial Neural Networks (NNs). Due to the large variety of use cases, all papers in the literature focus on a given application subset or a given type of model or NN. This paper focuses on Deep Learning systems, and, in particular, Convolutional Neural Networks (CNNs), a subset of Deep Neural Networks (DNNs).

A common point between all use cases is the need to manipulate very large amounts of data with increasingly complex algorithms that require high computational capability. Such services were therefore initially centralized in cloud infrastructures with extensive computing capabilities, including, e.g., GPUs supporting high-performance floating-point operations. This approach has two main limitations: the requirements on network bandwidths to send all the data to the datacenters, and the induced costs in particular in terms of energy consumption and strong environmental impact. The rapidly increasing number of Internet of Things (IoT) devices that produce and collect data has raised the need for AI computations performed on the edge, i.e., as close as possible to data production or collection. In many cases, edge computations are limited to inferences, the system training being performed before in-field commissioning. In this paper, we will therefore focus on inferences performed in AI-powered embedded systems, enabling real time data-driven decision-making.

In this context of Edge AI, many resources have to be developed, including both hardware and software [1]. Designers face many conflicting constraints such as limitations on the amount of hardware and memory (for system cost, and also equipment physical volume) and low power/energy consumption specifications (for reduced cooling, increased autonomy, simplified power delivery, and reduced environmental impact), in spite of increasingly high computing power requirements. Addressing all these concerns often leads to optimizing the implementation of the NNs, resorting to quantized networks [2] taking advantage of smaller data representation formats and cheaper arithmetic operations, at the expense of a small precision decrease. Quantization can lead to the use of floating point numbers with a reduced number of bits, fixed point representations, integers or even binary or ternary networks [3].

Critical applications are not spared by this evolution and require a maximal level of trustworthiness. NNs are increasingly used in avionics and space [4,5] or in safety-critical applications, such as transportation (towards autonomous vehicles [6]) or healthcare devices (for, e.g., remote patient monitoring). Real-time decision-making in, e.g., smart factories or smart grids also requires embedded systems to be reliable and available. An increasing number of devices are also at risk with respect to malicious attempts, including remote attacks and cybersecurity threats but also hardware attacks. The latter exploit security vulnerabilities through direct physical attacks on the devices, including so-called fault-based attacks perturbing the system behavior to recover confidential sensitive data. All these threats induce additional concerns for designers, who must manage the potential effects of perturbations inducing logical errors during the computations. Various fault models can be considered; several will be cited later in this paper. However, the focus here is on the most common effect in digital systems, corresponding to one erroneous bit among the system data, which is called “bit-flip”.

No matter the most stringent attribute for the application (reliability, availability, safety or security), the occurrence of spurious bit-flips has become a threat to be seriously taken into account, from Silent Data Errors in exascale datacenters [7] down to single-bit disturbances in resource-constrained devices. However, not all bit-flips have the same consequences from the application point of view. Some of them are masked due to the application characteristics. The others may lead to benign errors or in some cases to catastrophic consequences. It is not possible to ensure in practice that all spurious bit-flips will be avoided or masked. It is therefore of utmost importance to characterize those bit-flips that can be the most harmful in order to devise protections with the best trade-off between costs and efficiency, maximizing the system’s resiliency while meeting the other requirements. Analyzing the impact of bit-flips in CNNs, taking into account the quantization choices, is the goal of the study reported in this paper. We report the results of extensive fault injections on a use case. These results have been obtained on an integer version [8] of LeNet [9] performing digit recognition. This choice allows comparisons with a large set of previous contributions since LeNet is the NN example that has been the most used in the literature discussing AI implementation dependability. We revisit the criteria used in the literature to characterize the criticality of a bit-flip, and we explain some contradictions between previous claims and our findings, highlighting parameters that influence the efficiency of hardening techniques aiming at maximizing resiliency and trustworthiness.

A comprehensive review of the literature will be discussed in the next section. The main outcomes and novel contributions of this work are as follows:

A very comprehensive set of fault injection results, independent of any specific hardware/software implementation and using a much larger dataset than previous studies, on a typical example of quantized CNN;

A global analysis of the bit-flip impacts, highlighting an aspect mostly neglected in the literature, i.e., the positive impact many bit-flips can also have on the NN classification accuracy, limiting the accuracy loss in harsh environments;

An in-depth analysis of bit-flips leading in this case study to misclassifications, especially with respect to usual criticality criteria identified in the literature, i.e., their direction (0 → 1 or 1 → 0) and their impact on the modified value (closer to 0 or further from 0). Results demonstrate that the validity of these criteria depends on several parameters, including the considered layer and type of data;

A discussion of contradictory outcomes from this study and the literature, leading to identifying an extended list of parameters to be considered when forecasting the impact of a bit-flip. The main novelty is to explicitly include the definition of the activation functions in the layers as part of these parameters;

Insights into careful and efficient use of recently proposed hardening schemes.

Previous contributions are summarized and analyzed in Section 2, emphasizing the motivations and landscape of our study. Section 3 defines the use case and the targeted faults. Section 4 presents and discusses the main results, before the conclusion and research perspectives.

2. Analysis of Related Works

In the literature, many works discuss the effect of faults occurring in NNs during inferences. It is not possible to cover all of them, but we will cite and discuss here the outcomes of significant, diversified, and representative contributions in the domain from 2016 to 2024 [10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25]. Most works are focused on the reliability or safety of the NNs; some are more concerned by fault-based attacks in the context of hardware security [15,21]. In the following, we will discuss the effect of bit-flips, no matter the targeted dependability attribute. The impact of optimization techniques such as pruning or retraining will not be discussed. The focus is on inferences in the field, after final implementation. Quantization is performed before putting the system into operation and thus will only be discussed with respect to its impact on the data representation format and on the related effect of bit-flips.

2.1. Global Overview: Networks, Targeted Data and Representation Formats, Fault Models

As illustrated in Table 1, various NNs have been studied, trained with various datasets. However, one network is much more represented than the others, namely LeNet (also referred to as LeNet-5) [9]. Some benchmark datasets are usually associated with each NN, such as MNIST for LeNet.

Different types of data are manipulated by the NNs and can be considered as fault targets. Some data are independent of any particular hardware or software implementation of the NN because they always need to be computed and stored for some time at some location within the system. These data are in three groups: inputs (e.g., image to classify or analyze), NN parameters (weights and biases), and intermediate data computed as outputs of a given NN layer and reused as inputs of the next layer in a feedforward network, and/or other layers in case of, e.g., a Feedback (or Recurrent) NN or a Deep Residual Network [26] with skip connections. Other data strongly depend on the hardware/software implementation of the NN, corresponding to bits in memory blocks and in registers for systems based on CPUs, GPUs or hardware accelerators, and also bits in configuration memories for FPGAs.

In early dependability studies, the data assumed to be perturbed were almost restricted to the NN parameters, and, in particular, weights [10,13,15,16,17]. Since the weights are used for all inferences, they stay permanently in the system memories and have therefore a higher probability of being perturbed than data used during only a fraction of a single inference computation. However, in case of critical applications, it is not possible to neglect the possible perturbations in all other data. Trustworthiness requires taking into account all possible sources of errors leading to potential misclassifications, and the scope of recent studies has been enlarged in particular to intermediate data, as illustrated in Table 1. Faults that are dependent on the implementation are also useful to consider, e.g., when characterizing a particular architecture [11,14,20,22] or a particular platform [15,19], but the results cannot be directly generalized to any other implementation. Furthermore, when the implementation is based on embedded software developed for a CPU- or GPU-based platform, the results also depend on design decisions such as compiler selection and selected optimization options. Many studies also report results obtained thanks to physical experiments, such as experiments under particle beams [12]. In that case, of course, all types of data can be perturbed during the same experiment, and it is not always possible to distinguish what initial perturbation led to an identified effect for the studied implementation. For our study, we focused on the effect of perturbations in data that are independent of the implementation, so that results can lead to general conclusions.

Most of the early studies considered Floating Point (FP) data formats and, in particular, single-precision 32-bit binary FP format FP32 (IEEE 754) or sometimes double precision 64-bit FP. With the increasing need for implementation in embedded systems, quantized networks were also studied from the robustness point of view, first showing that they could achieve higher resilience and then analyzing the fault effects they must avoid. As illustrated in Table 1, many data representation formats have thus been considered, from standard or custom variants of fixed-point representations with various numbers of bits for integers and fractions, to several sizes of integers and down to binary or ternary formats, although few works addressed the effect of faults with this level of quantization. Let us underline that in the case of integers, two formats can be found, although details are rarely given in the papers. In [15], the authors clearly show that N-bit signed integers are coded using a sign bit and N-1 bits for the magnitude. In most cases, signed integers use the two’s complement representation as in [24], or in [25] where variables are defined in a C program. Regarding [22], the authors confirmed to us that two’s complement was also used.
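The practical difference between the two signed-integer formats can be illustrated with a minimal Python sketch. The helper names `decode_twos_complement` and `decode_sign_magnitude` are hypothetical and are not taken from any of the cited works; the sketch only shows that the same flipped bit pattern is read as two very different values depending on the encoding.

```python
def decode_twos_complement(pattern: int, n_bits: int = 8) -> int:
    """Interpret an n-bit pattern as a two's complement signed integer."""
    pattern &= (1 << n_bits) - 1
    sign_bit = 1 << (n_bits - 1)
    return pattern - (1 << n_bits) if pattern & sign_bit else pattern


def decode_sign_magnitude(pattern: int, n_bits: int = 8) -> int:
    """Interpret an n-bit pattern as one sign bit plus (n-1) magnitude bits."""
    pattern &= (1 << n_bits) - 1
    sign_bit = 1 << (n_bits - 1)
    magnitude = pattern & (sign_bit - 1)
    return -magnitude if pattern & sign_bit else magnitude


# The pattern 0b00000101 encodes +5 in both formats.
pattern = 0b00000101
# Flip the most significant bit (0 -> 1).
flipped = pattern ^ 0b10000000

print(decode_twos_complement(flipped))  # -123
print(decode_sign_magnitude(flipped))   # -5
```

With two's complement, the flip of the most significant bit moves the value far from zero, whereas with sign and magnitude it only changes the sign. This is one reason why the encoding has to be known before results from different studies are compared.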

The fault models used to evaluate the NN robustness are, in general, either stuck-ats or bit-flips, often called Single Event Upsets (SEUs). Stuck-ats can be assimilated in this context to permanent bit errors, similar to, e.g., bit-flips occurring in memory before the inference starts, a frequent assumption when weights are the injection target. More generally, in the literature, SEUs are usually the model used to represent transient perturbation effects. With this model, a bit can be changed in a piece of data at any time. When this piece of data is used several times during the inference, the correct value may be used before the SEU occurs and then be replaced by the modified value for the following computation steps. In practice, due to such a byzantine fault, the behavior can only be analyzed when considering injections on a given platform, but even in these cases most papers do not really consider a possible modification leading to the use of two different values of the same piece of data during a single inference. In consequence, for most papers, the SEU fault model corresponds in fact to a single bit-flip in a piece of data before its first use, with of course the exception of results obtained under particle beams. A few papers also consider other fault models, such as timing errors due to marginalities or process/voltage/temperature variations [14]. In such cases, faults are assumed to occur with similar effects to stuck-ats or bit-flips. To our knowledge, few works consider these fault models in the case of binary or ternary NNs. For such networks, authors consider a percentage of flipped bits when reading weights from memory, called bit error rate (BER) [15].

2.2. Most Critical Fault Locations

An important point discussed in the literature is the identification of the most critical locations of errors, characterized with respect to the bits in data representation, to the type of data (input, weights, biases or intermediate data) or to the impacted layer in the NN. Most studies agree that the most significant bits are the most impactful, associated in some cases with the sign bit.

When parameters are floating- or fixed-point numbers, most studies agree that the most significant bits in the exponent or integer part are highly sensitive while the mantissa or fraction bits have only little impact. For the sign bit, claims in the various publications diverge more, from not critical in many works to critical for some layers [18] or claimed to impact NN accuracy as the exponent bits do, although this is not clear from the presented figures [23].

Regarding the layers, in [13], the convolutional layers in LeNet are reported as the most sensitive to faults in weights, with a lower sensitivity of fully connected layers. For the same network, [23] identifies the last layer among the most sensitive ones for weights and shows that for intermediate data the criticality of faults increases when the layers are closer to the output. Reference [25] mentioned a similar trend for the sensitivity to faults in intermediate data. This illustrates that reported results are not always consistent, probably due to some differences in the evaluated network that are not explicit in the papers and/or to the different data representation formats.

More detailed findings about the critical bit-flips led to several design hardening proposals aiming at increasing the NN robustness with limited overheads. These techniques, which are validated in case studies, mainly rely on two claims that can also sometimes appear as conflicting and will be discussed in the next sections.

2.3. Bit-Flip Direction as Criticality Criterion

Previous studies analyzed the critical bit-flips found during the fault injection experiments, i.e., bit-flips leading to additional misclassifications compared to the nominal NN precision. Whether stuck-ats or bit-flips are assumed, many papers claim that erroneous bits equal to one have much more critical consequences than wrong bits at zero. This is the basis of several proposals for mitigations, proved to noticeably increase the robustness to faults with limited costs by forcing bits to zero when an error is detected [20,23]. In most cases, studies converging towards this claim used floating-point data representations, but the same claim can be found for quantized NNs using 8-bit signed integers [22]. However, results shown in [25] indicate an opposite trend, with all the bit-flips found critical during the experiments being in the direction 1 → 0. It has already been identified in some papers such as [11] that different DNNs have different sensitivities to faults, depending on the topology of the network, the data representation used, and the bit position of the fault. However, the network considered as case study in both [22,25] is LeNet with 8-bit signed integers in both cases. Further work is therefore required to explain the different observations.

2.4. Value Further from Zero as Criticality Criterion

The authors of [23] present in [24] a different point of view for the same NN and the same data type as [22,25]. While [23] only highlighted the criticality of bit-flips in the direction 0 → 1, [24] discusses the impact of the data representation. The authors propose a different mitigation approach, taking into account the sign value in addition to the bit-flip direction to force the erroneous piece of data to the value that is closest to zero. The idea is based on the histogram of correct values, narrowly centered around zero. The claim is that errors moving a value closer to zero have less impact on the output of the CNN than errors generating unlikely large values during the computations. The idea is similar to the approach in [10], rounding faulty weights towards zero in ad hoc NN topologies. It is also related to the approach in [14], which prunes (or sets to zero) weights mapped to faulty MAC units, due to large absolute errors in the matrix–vector product induced by stuck-at faults in the unit and resulting in a large drop in accuracy; here, the data representation format is not indicated but has no impact since the goal is to force the result to zero.

Although presented differently, the proposals in [23,24] are consistent since forcing the bits to 0 in FP representations leads to bringing the value closer to zero. However, this different criticality criterion still does not explain the observations reported in [22,25].
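The two criteria discussed in Sections 2.3 and 2.4 can be made concrete for 8-bit two's complement integers with a minimal Python sketch. The function `describe_flip` is a hypothetical helper written for illustration, not a method from the cited papers. It reports, for a given value and bit position, the flip direction, the faulty value, and whether the value moves closer to or further from zero.

```python
def to_int8(pattern: int) -> int:
    """Interpret the low 8 bits of `pattern` as a two's complement integer."""
    pattern &= 0xFF
    return pattern - 256 if pattern & 0x80 else pattern


def describe_flip(value: int, bit: int):
    """Return (direction, faulty value, magnitude change) for one bit-flip."""
    faulty_pattern = (value & 0xFF) ^ (1 << bit)
    faulty = to_int8(faulty_pattern)
    # The bit is set after the flip if and only if the flip was 0 -> 1.
    direction = "0->1" if faulty_pattern & (1 << bit) else "1->0"
    if abs(faulty) > abs(value):
        change = "further from zero"
    elif abs(faulty) < abs(value):
        change = "closer to zero"
    else:
        change = "same magnitude"
    return direction, faulty, change


print(describe_flip(3, 6))   # ('0->1', 67, 'further from zero')
print(describe_flip(-3, 6))  # ('1->0', -67, 'further from zero')
print(describe_flip(-3, 1))  # ('0->1', -1, 'closer to zero')
```

For positive values, a 0 → 1 flip always increases the magnitude. For negative two's complement values, a 1 → 0 flip can move the value further from zero and a 0 → 1 flip can bring it closer, so the two criteria are not interchangeable for signed integers.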

2.5. Conclusion on the Literature Review

The first conclusion of our review is that in most cases “stuck-ats”, “bit-flips” or “SEUs” correspond in fact in the papers to the same perturbation type, i.e., a bit with a wrong value during the period of time the piece of data is used to compute the inference. In this paper, we will use the generic term “bit-flip” to represent an erroneous bit.

It also appears from this summary that further analyses are required to better understand the different reported trends or different interpretations and establish more general bases. In almost all papers, the time required by the experiments led to using only a small number of input samples, leading to uncertainties about the possibility of generalizing the conclusions derived from the observations. For example, looking at studies having used the MNIST test set, the results in [13] have been obtained from only 37 images for bit-flips in weights, those in [24] have been obtained from 52 images for bit-flips in weights and 370 images for bit-flips in intermediate data, the number of images used in [23] is not mentioned and the observations in [25] have been made with only 26 image samples. All the previous observations therefore deserve consolidated analyses based on a much larger set of images in order to generalize the conclusions and resolve apparent contradictions.

3. Case Study Definition

3.1. Convolutional Neural Network

Many AI models have been proposed in recent decades, with remarkable diversity and improvements in recent years. Since our goal is not to evaluate the latest achievements, we selected a well-known CNN as demonstrator: LeNet, proposed in 1998 for character recognition [9]. CNNs are highly used in critical and IoT applications, as demonstrated by many contributions in the recent literature. Pattern recognition being a very typical feature, this illustrates usual concerns of Edge AI designers. Furthermore, as shown in the previous section, CNNs are a major target for dependability-related investigations and LeNet has been used in many previous works, allowing us to compare our results with a significant number of previous contributions.

This NN is composed of six hidden layers, three of them being convolutions [9], and a final output layer. Since our focus is on Edge AI and sparse resource usage, we selected a quantized version written in C using 8-bit integer representation for weights and intermediate data and 16-bit integers for biases. The integers are defined in int8_t or int16_t data types, so they are encoded using two’s complement. The C code is publicly available at [8]. In this version, the two convolutional layers identified as C1 and C3 in the sequel have outputs computed through a Rectified Linear Unit (ReLU). Layers S2 and S4 perform downsampling with MaxPool. Dense fully connected layers F5, F6 and F7 also use ReLU. The digit in these names indicates the appearance order in the network dataflow.

The network was trained using the images of handwritten digits provided in the training set of the MNIST database. The accuracy obtained with our set of weights and biases is 98.05% on the MNIST test set, containing 10,000 images.

3.2. Dependability Evaluation: Fault Model and Injections

Our goal is to provide results that (1) are based on a much more comprehensive dataset than usually reported in the literature, i.e., the whole MNIST test set (10,000 images), and (2) remain independent of any specific implementation. We will therefore focus on faults occurring in data that must be used during the inferences no matter the hardware characteristics or software optimizations, i.e., the input data (images), NN parameters (weights and biases) and intermediate datasets at the interface between two successive layers.

Perturbations are assumed to provoke a single bit-flip in one piece of data at any physical location and any clock cycle during an inference, provided that the value of the perturbed data is coherent for the whole inference duration (e.g., a given weight has the same correct or erroneous value at each use in the inference, and an intermediate value is corrupted at any time before its first use but then remains constant during the next layer computation).
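The fault model above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the injection tool used in the study: the helpers `flip_bit_int8` and `inject_single_bit_flip` and the toy `weights` list are hypothetical. The corrupted copy is built once, before any use, so the same (possibly erroneous) value is seen at every use during the inference.

```python
import random


def flip_bit_int8(value: int, bit: int) -> int:
    """Flip one bit of an 8-bit two's complement integer."""
    pattern = (value & 0xFF) ^ (1 << bit)
    return pattern - 256 if pattern & 0x80 else pattern


def inject_single_bit_flip(data, rng):
    """Return a copy of `data` with exactly one uniformly chosen bit flipped.

    The copy is created before the inference starts, so the corrupted value
    stays coherent for the whole inference duration.
    """
    index = rng.randrange(len(data))  # which piece of data is hit
    bit = rng.randrange(8)            # which of its 8 bits is hit
    faulty = list(data)
    faulty[index] = flip_bit_int8(data[index], bit)
    return faulty, index, bit


weights = [12, -7, 0, 55]  # toy int8 values, for illustration only
faulty_weights, index, bit = inject_single_bit_flip(weights, random.Random(0))
print(index, bit, weights[index], faulty_weights[index])
```

Choosing the element index and the bit index uniformly makes every bit of the subset equally likely to be hit, which matches the uniform random sampling assumption used for statistical fault injection.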

In other words, faults occurring in specific registers or operators related to a specific implementation are only partially represented. However, the considered bit-flips encompass part of many fault models and perturbation causes including, e.g., stuck-ats in memory, transmission errors, particle strikes, etc. Timing errors due to variations, marginalities or undetected defects are also partially covered. The injections, although performed at precise instants defined on a functional basis, cover real bit-flip occurrences at many instants during an inference, as well as many different physical fault locations.

Representing all possible effects of transient faults, in order to obtain results comparable with, e.g., those presented in the literature after experiments under particle beams, would require considering a specific implementation. This would however restrict the generality of the conclusions and lead to very long fault injection experiments for each input sample. On the other hand, the presented results have been obtained for a much larger number of different inferences than usually reported, thanks to highly parallelized software-level fault injection experiments. Such a large number of input images would be out of reach, even with a small network as targeted here, if all the specificities of a given platform architecture were taken into account or a beam had to be reserved for the experiments.

The preliminary results presented in [25] were obtained by injections on an FPGA-based platform, targeting a small subset of the faults considered in this paper. We have verified that for the targeted bit-flips the fault injection results are the same on the platform and using the software-level injection parallelized on a server farm. The only difference is the experimental time required, several orders of magnitude shorter on the server farm. Of course, the use of a physical platform would be unavoidable to target faults that depend on the platform, but it is not the goal of the presented study.

During our case study, single bit-flip Statistical Fault Injection (SFI) has been performed using uniform random sampling of faults in each (layer, data type) subset with a target of 1% error margin and 99% confidence in each subset according to [27]. The number of faults injected for each image classification is summarized in Table 2. The number for each subset of course depends on the total number of bits in each subset and is therefore very different between the two extreme values corresponding to the weights in F5 (15,901 injections) and the intermediate data at the output of F7 (80 injections). A total of 5484 bit-flips were injected into the image pixels. For the whole MNIST test set, nearly 825 million faulty inferences have been computed.
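The dependence of the number of injections on the subset size can be sketched with the finite-population sample size formula commonly used for statistical fault injection, n = N / (1 + e²(N − 1) / (t² p(1 − p))). The Python sketch below rests on assumptions that are not stated in the text: the worst-case proportion p = 0.5, rounding up to the next integer, and the function name `sfi_sample_size`, which is hypothetical.

```python
import math
from statistics import NormalDist


def sfi_sample_size(population: int, margin: float = 0.01,
                    confidence: float = 0.99, p: float = 0.5) -> int:
    """Number of faults to inject for a finite population of possible faults.

    population: number of bits in the (layer, data type) subset (N)
    margin:     target error margin (e)
    confidence: target confidence level, converted to the normal quantile t
    p:          assumed proportion of critical faults (0.5 is the worst case)
    """
    t = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = population / (1 + margin ** 2 * (population - 1) / (t ** 2 * p * (1 - p)))
    return math.ceil(n)


# Assumption: the F7 output holds ten 8-bit values, i.e., 80 bits.
print(sfi_sample_size(80))     # 80
print(sfi_sample_size(10**9))  # saturates well below 16,600
```

For very small subsets nearly every bit has to be injected, while for very large subsets the sample size saturates, which is why the number of injections is not proportional to the number of bits in a subset.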

4. Discussion of Results

4.1. Global Impact of Bit-Flips on Accuracy

As expected from the literature and the known intrinsic fault-tolerance of NNs, single bit-flips occurring during the inferences have only a small impact on the NN accuracy: 99.85% of the injected bit-flips had no impact on the classification result. In addition, 0.01% of the bit-flips led to a different misclassification with respect to the nominal inferences. Global accuracy with respect to the MNIST labels therefore only drops from 98.05% to 97.99% when single bit-flips occur. For the bit-flips leading to a different misclassification, the actual impact is application-dependent since the different outcome may be either more harmful, equivalent or less disturbing than the nominal misclassification.

As shown in Table 3 and Table 4, the perturbed layer and the type of perturbed data have only a marginal influence on the accuracy evaluation. The most sensitive layer is, in our case, the output layer, as previously found in [23] for data in custom reduced FP formats and in [25] for the same NN but with a much smaller number of image samples. The most sensitive data are the biases and then the intermediate data, while weights exhibit the smallest sensitivity, although they were the most studied data in the literature. Let us mention that for the first layer (C1), faults in weights are more significant than faults in the input image (97.86% accuracy with a bit-flip in the weights compared to 98.02% accuracy with a bit-flip in the image).

We must underline that the accuracy variations shown in

Table 3 and

Table 4 are computed taking into account only the faulty inferences for the target layer or the target data type. A more detailed view is given in

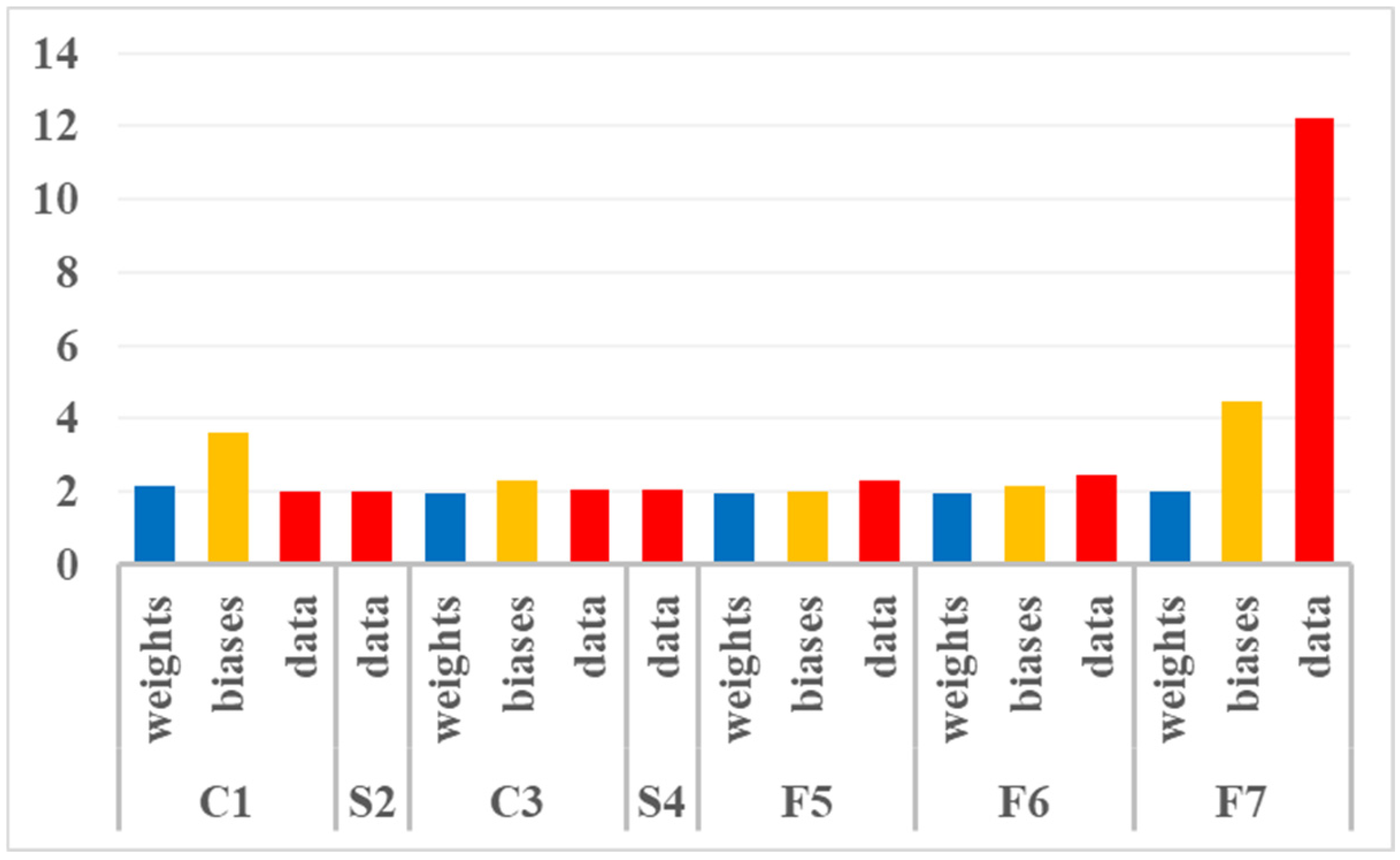

Figure 1 using the same principle, so computing the percentage of misclassifications taking into account only the inferences with bit-flips in the target (layer, data type) subset. It can be observed here that the most sensitive bits are in the output intermediate data of F7, and then in biases for F7 and C1. The high sensitivity of the output intermediate data of F7 is not surprising since it is just before SoftMax and the final classification result.

Figure 1 indicates that 12.23% of the faulty inferences with bit flips injected in this data led to a misclassification with respect to the MNIST labels. This percentage is much higher than the 1.95% inaccuracy without faults. However, this data represents the smallest subset of all the bits involved in an inference computation. Taking into account the small probability that these bits are actually perturbed in the field, among all the others, considerably reduces their actual criticality from the application point of view. In

Table 3 and

Table 4, the relative sizes of the subsets are partially taken into account since the number of injections per subset, indicated in

Table 2, depends on the number of bits in the subset. When the accuracy is computed globally on several subsets (e.g., weights, biases and intermediate data in layer C1), the relative size of each subset impacts the global result by balancing the influence of each subset with respect to its size. It must be noticed however that the random sampling used for SFI generates a number of faults to inject that is not proportional to the number of bits in the subset but rather asymptotic [

27]. The results discussed here are therefore not directly representative of the probability of misclassifications due to bit-flips occurring with the same probability in each bit when the NN operates in a harsh environment, since the influence of the largest subsets is underestimated.

Analyzing the results more deeply, it appears that bit-flips can be grouped into five classes with respect to the impact on the inference results, as summarized in Table 5. A bit-flip can have no impact on the classification either because a correct answer with respect to the MNIST label is obtained with and without fault, or because the same misclassification is obtained in both cases (cases O1 and O2). As previously mentioned, in a small number of cases a different misclassification occurs with and without fault (case O3). As expected, bit-flips can also have a negative effect, inducing a wrong classification while the nominal NN answer is correct (case O4, called “added misclassification”). However, the most interesting observation is the relatively large number of bit-flips with an unexpected correction effect, i.e., yielding the correct classification result while a misclassification was obtained without fault (case O5, called “corrected misclassification”). This last case is neglected in the literature. An exception is [19], reporting such observations after injecting bit-flips in an FPGA configuration memory and qualifying this case as “benign errors” with respect to the nominal behavior of the NN. In practice, the right reference is the expected answer (in our case, the MNIST label), not the nominal NN answer with imperfect accuracy. Case O5 does not correspond to benign errors, but to an increase in the probability of right classification thanks to faults, partially compensating for the negative fault effects. Since this case of a positive effect is usually neglected, it will be analyzed in more detail in the next section.
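The five outcome classes can be expressed as a small decision function. The Python sketch below is a hedged illustration: the function name `classify_outcome` is hypothetical, and the assignment of O1 to "correct with and without fault" and O2 to "same misclassification" is an assumption based on the order in which the two cases are described above.

```python
def classify_outcome(label: int, nominal: int, faulty: int) -> str:
    """Classify one faulty inference against the expected label.

    label:   expected answer (e.g., the MNIST label)
    nominal: class predicted by the fault-free network
    faulty:  class predicted when a bit-flip is injected
    """
    if nominal == label and faulty == label:
        return "O1"  # correct with and without fault
    if nominal != label and faulty == nominal:
        return "O2"  # same misclassification in both cases
    if nominal != label and faulty != label:
        return "O3"  # different misclassification
    if nominal == label and faulty != label:
        return "O4"  # added misclassification (critical bit-flip)
    return "O5"      # corrected misclassification (positive bit-flip)


print(classify_outcome(label=7, nominal=7, faulty=1))  # O4
print(classify_outcome(label=7, nominal=1, faulty=7))  # O5
```

Using the expected label rather than the nominal prediction as the reference is what separates O5 from a mere deviation from the nominal behavior.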

In the following, bit-flips will be considered critical only if they decrease the NN accuracy, i.e., in the case O4. Considering only this category, F7 is the most sensitive layer (10.38% of the bit-flips injected in the intermediate data before SoftMax were recorded as critical, 2.58% in biases and 0.28% globally for all data types because most of the bits are in weights and much less sensitive). Then, in decreasing sensitivity order we found S4 (0.20% in output data), C1 (0.13% global due to 1.79% in biases), S2 and C3 (0.11% global each). The less sensitive layers are F6 (0.05% global) and F5 (0.03% global).

In the following, bit-flips falling in case O5 will be called positive bit-flips.
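The five outcome cases can be summarized by a small decision function. The following Python sketch is only an illustration of the definitions given above, not the tooling used for the experiments; the function name `classify_outcome` and the sample triples are hypothetical, and the assignment of O1 to "correct in both runs" and O2 to "same misclassification in both runs" assumes the order in which the two cases are listed in the text.

```python
from collections import Counter

def classify_outcome(label, nominal, faulty):
    """Return the outcome case of one injection experiment.

    label   : expected class (e.g., the MNIST label)
    nominal : class predicted without fault
    faulty  : class predicted with the injected bit-flip
    """
    if nominal == label:
        # The fault-free answer is correct: the fault is either harmless
        # or adds a misclassification (critical bit-flip).
        return "O1" if faulty == label else "O4"
    if faulty == label:
        # The fault corrects a nominal misclassification (positive bit-flip).
        return "O5"
    # Both answers are wrong: same or different misclassification.
    return "O2" if faulty == nominal else "O3"

# Hypothetical (label, nominal, faulty) triples, one per outcome case.
experiments = [(7, 7, 7), (7, 1, 1), (7, 1, 9), (7, 7, 1), (7, 1, 7)]
counts = Counter(classify_outcome(*e) for e in experiments)
print(counts)
```

With such a classification, critical bit-flips are those counted in O4 and positive bit-flips those counted in O5.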

4.2. Detailed Analysis of Positive Bit-Flip Occurrences and Compensations

Positive bit-flips were identified in a significant number of injection experiments (325,784), compensating for about 40% of the total number of new misclassifications (case O4). This noticeably contributes to the small 0.06% global NN accuracy loss.

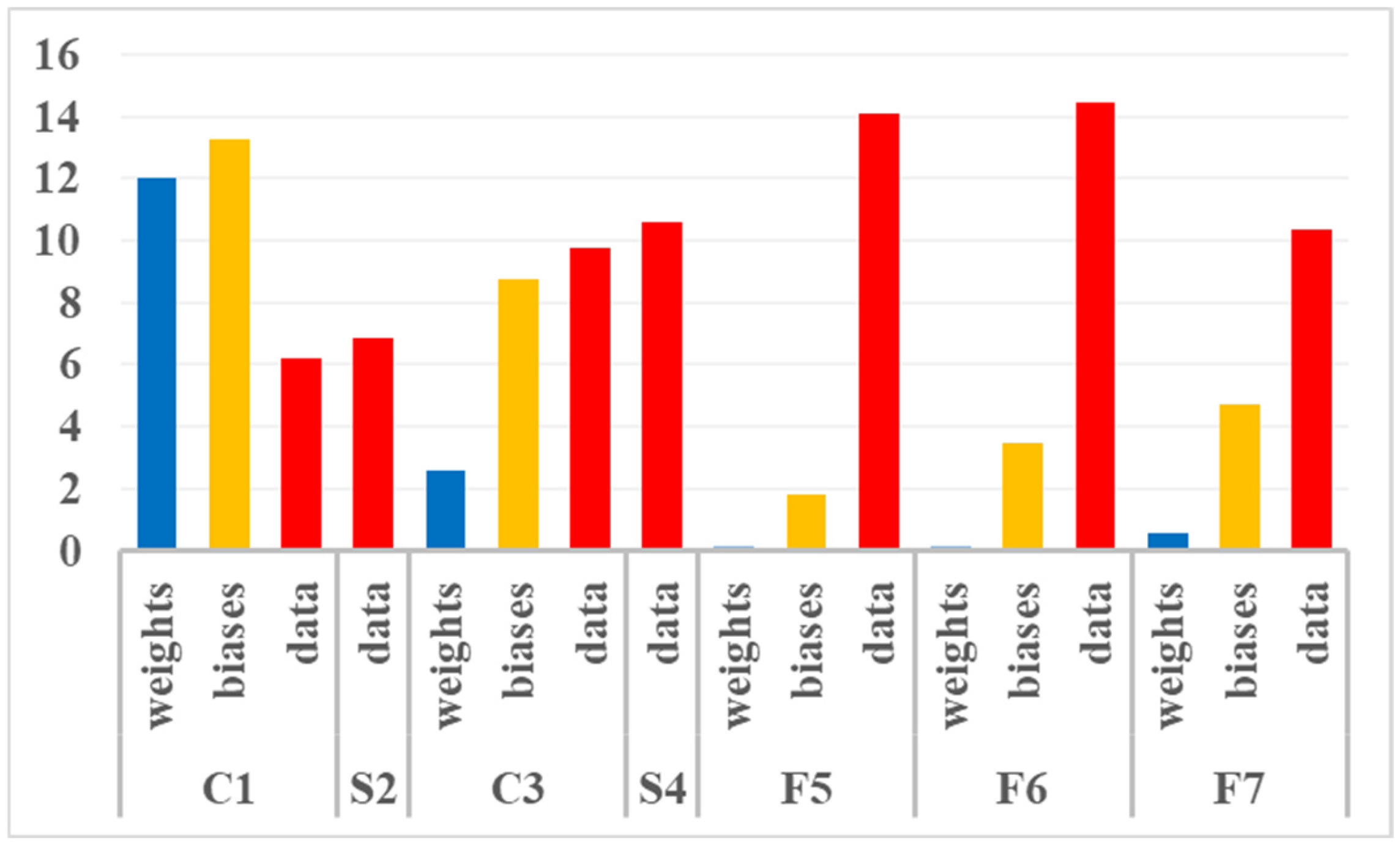

Looking only at these positive bit-flips, Figure 2 illustrates the percentage recorded among all the faulty inferences for each (layer, data type) subset. The first observation is that such bit-flips have been recorded in all subsets. For intermediate data, the percentage increases when moving forward in the NN, then drops in F7. For weights, it is at its maximum in C1, falls in C3 and remains very low in all the fully connected layers, although slightly increasing. For biases, it decreases when moving forward in the feature extraction part of the NN (C1 then C3), is minimum for F5 and then increases again when crossing the other fully connected layers in the classification part.

However, what is most important for the application trustworthiness is the global accuracy the user can expect from the NN. The real question is therefore to what extent positive bit-flips compensate for the negative impact of other bit-flips, i.e., what the difference is in terms of occurrence rate between positive and critical (or negative) bit-flips.

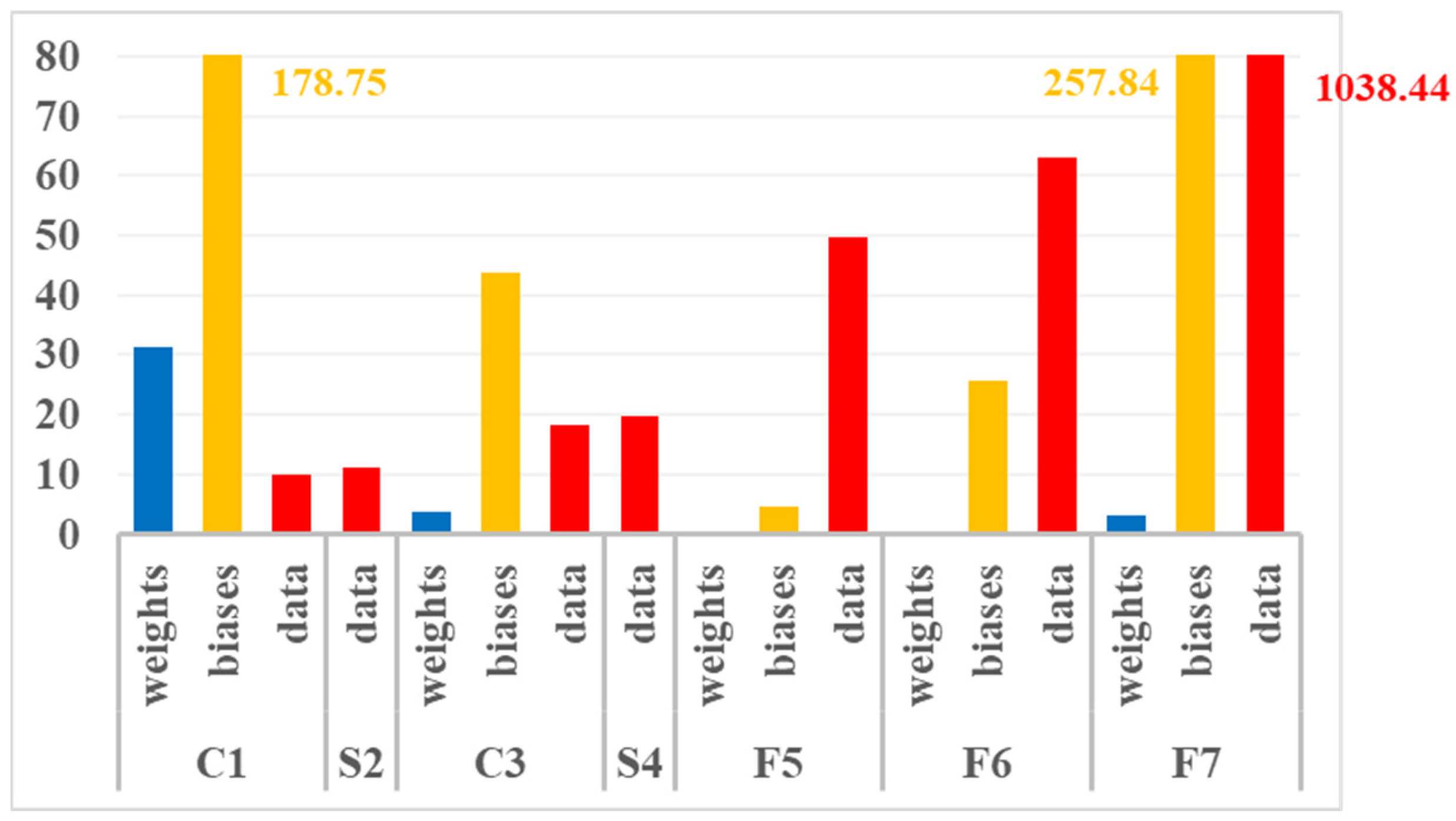

Figure 3 illustrates the recorded percentage of critical bit-flips among the faulty inferences for each (layer, data type) subset. Although values are much higher, the global trends observed for the data types are quite similar to those identified in Figure 2, except for intermediate data, which reach a very high maximum in layer F7.

Table 6 summarizes the percentage of bit-flips with a positive consequence, among all bit-flips having an impact on accuracy computation. All (layer, data type) subsets have benefited from positive bit-flips, but the benefit is very limited for layer F7 and very large for layer S2. In fact, layers in the feature extraction part of the NN are more prone to compensation than layers in the classification part. Bit-flips in the classification part (fully connected layers) increasingly impact accuracy when occurring closer to the NN output.

Figure 2 and Figure 3 show that the weights in C1 are clearly those that can have the highest impact on accuracy. However, the compensation level is quite high since the impact can be significantly either positive or negative. Also, they account for less than 1% of the weights, so the probability that a bit-flip occurs in this data subset is small. Although bit-flips in weights have been considerably studied in the literature, they do not appear here as the major concern.

Another observation is that biases are clearly the type of data with the smallest amount of compensation. It is especially the case for the first and last layers.

In addition to the data previously analyzed, let us mention that bit-flips in the input image were found to be those inducing the highest percentage of positive effects. Among those modifying the accuracy, 40.38% lead to corrected classifications compared to the fault-free inferences, compensating for 67.73% of the critical bit-flip effects.
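The two percentages quoted for the image inputs are linked by simple arithmetic. The sketch below assumes that the compensation level is the ratio between the number of positive bit-flips (case O5) and the number of critical bit-flips (case O4), and that these two cases are the only ones modifying the accuracy; under this reading, the 40.38% share reproduces the reported 67.73%. The function name `compensation_ratio` is hypothetical.

```python
def compensation_ratio(positive_share):
    """Ratio of positive to critical bit-flips.

    positive_share: fraction of positive bit-flips among all bit-flips
    modifying the accuracy (positive + critical), between 0 and 1.
    """
    critical_share = 1.0 - positive_share
    return positive_share / critical_share

# Share reported in the text for bit-flips in the input image.
ratio = compensation_ratio(0.4038)
print(f"{100 * ratio:.2f}% of the critical bit-flip effects are compensated")
```

A share of 50% would correspond to a full compensation, i.e., no accuracy loss for the considered data subset.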

More details on positive bit-flips will be given in the next sections.

4.3. Impact of Bit-Flip Direction on Misclassifications

As mentioned in Section 2.3, a very common claim in the literature is that bit-flips in the direction 0 → 1 are more critical than bit-flips in the opposite direction. The distribution of the critical bits recorded during our case study is summarized in Table 7 for weights, Table 8 for biases and Table 9 for intermediate data. In each table, red bold numbers highlight the majority cases. Bit-flips in both directions have been found, no matter the layer or the data type. However, the majority case is the direction 1 → 0, with 51.78% in this direction for the weights, 55.79% for the intermediate data, up to 88.45% for the biases and 60.14% overall. Bit-flips in the image inputs are the exception, with only 7.12% of the critical ones being in the direction 1 → 0.

Analyzing the results more precisely, it appears that the most critical direction depends on both the perturbed data and the layer. In the case of weights, the two directions are quite equivalent globally, but critical bits have been forced to 0 in the first layers and to 1 in the last layers. For intermediate data, on the other hand, critical bits forced to 1 are more numerous only in the first two layers. For biases, bit-flips with direction 1 → 0 are clearly the most critical ones in all layers.

As discussed in the previous section, another important aspect is the compensation allowed by positive bit-flips. A question that was not previously addressed in the literature, at least to our knowledge, is the potentially privileged direction of such bit-flips.

Table 10, Table 11 and Table 12 summarize their distribution in the context of our case study. It appears that there is no general privileged direction: both directions are almost equivalent for weights (50.67% in the direction 0 → 1), direction 1 → 0 clearly has the majority for biases (79.19%) but direction 0 → 1 has the majority for intermediate data (60.21%). Overall, 57.19% of the recorded positive bit-flips for these three data types were in the direction 0 → 1. A total of 92.50% of the positive bit-flips in the image inputs were also in the direction 0 → 1.

In conclusion, regarding the direction of critical bit-flips, it appears that the usual claim is not valid for our case study. With the exception of the image inputs, the majority of critical bit-flips have been found in the direction 1 → 0. Furthermore, a majority of positive bit-flips are in the direction 0 → 1. We will discuss the consequences for design hardening in Section 4.6.

The findings for our case study may appear at first glance contradictory to the findings reported in [22], also obtained for a quantized implementation of LeNet using 8-bit signed integers. This point will be discussed in Section 4.5.

4.4. Impact of Data Value Modification on Misclassifications

As mentioned in Section 2.4, another way in the literature to characterize the criticality of bit-flips is to focus on those moving the number value further from zero. The distribution of the critical bits recorded during our case study is summarized with respect to this second criterion in Table 13 for weights, Table 14 for biases and Table 15 for intermediate data. As with the previous criterion, bit-flips leading to both value modification directions have been found, no matter the layer or the data type (values at zero for the biases are due to the rounding of very small percentages). However, the majority case clearly depends on the data type. Critical bit-flips mainly correspond to values further from zero in the case of weights (80.59%) and biases (99.91%). But they mainly correspond to the opposite direction in the case of intermediate data (only 16.53% further from zero), with a critical direction reversal for F7. Overall, taking into account the difference in terms of the number of bits in each data type, only 36.86% of the critical bit-flips correspond to values further from zero. For the image inputs, 96.37% of the critical bits correspond to values further from zero.

Positive bit-flips were also recorded with both value modification directions. Their distribution is summarized with respect to this second criterion in Table 16 for weights, Table 17 for biases and Table 18 for intermediate data. Again, there is no general privileged direction, but with this criterion critical and positive bit-flips roughly follow the same tendencies.

Positive bit-flips mainly correspond to values further from zero in the case of weights (77.01%) and biases (99.40%) while they mainly correspond to the opposite direction in the case of intermediate data (only 3.97% further from zero), also with a critical direction reversal for F7. Overall, 18.82% of the positive bit-flips correspond to values further from zero. For the image inputs, 96.00% of the positive bits correspond to values further from zero.

In conclusion, regarding the modification of the value due to the bit-flips, the results are more consistent between critical and positive cases. This criterion is therefore better suited to take into account possible compensations. However, it cannot be asserted as universal since there is a strong dependency with respect to the data type, and also with respect to the layer F7 in our case. Also, if applied in an undifferentiated manner, the usual claim is again not valid for our case study since most of the critical bit-flips correspond to values closer to zero. As previously mentioned, we will discuss the consequences for design hardening in Section 4.6.

4.5. Relationship Between Direction and Modified Value

As mentioned in Section 2.4, some relationship exists between the direction of the bit-flip and the direction of the value modification, when some data representation formats are used.

A bit-flip in the direction 0 → 1 occurring in a floating- or fixed-point number will either change the sign of the number, leaving the absolute value unchanged, or increase the absolute value. When the sign is changed, the value remains at the same distance from zero, so, assuming that critical modifications are those moving the value further from zero, this type of bit-flip should not be critical. When the bit-flip occurs in mantissa or fraction bits, the value is only slightly changed. Conversely, when it occurs in the exponent or integer part, especially in the most significant bits, the value is greatly increased and should have more consequences on the inference computation according to usual claims. Also, the most significant bits will have a larger impact when there are more bits in the exponent or integer part since the absolute value modification will be larger. All this is consistent with multiple findings reported in the literature and may support both usual claims about bit-flip criticality characterization.
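These three situations can be reproduced on a single value. The following sketch assumes the IEEE 754 single-precision format (1 sign bit, 8 exponent bits, 23 mantissa bits), which is only one of the floating-point formats covered by the discussion above; the helper name `flip_float32_bit` and the example value 0.5 are chosen for illustration. All three injected bit-flips are in the direction 0 → 1.

```python
import struct

def flip_float32_bit(value, bit):
    """Flip one bit (0 = mantissa LSB, 31 = sign) of a float32 encoding."""
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    (faulty,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return faulty

x = 0.5  # encoded as 0x3F000000: sign 0, exponent 126, mantissa 0

sign_flip = flip_float32_bit(x, 31)      # sign bit: same distance to zero
mantissa_flip = flip_float32_bit(x, 0)   # mantissa LSB: tiny increase
exponent_flip = flip_float32_bit(x, 30)  # exponent MSB: huge increase

print(sign_flip, mantissa_flip, exponent_flip)
```

The sign flip yields −0.5, the mantissa flip adds 2^−24 to the value, and the exponent flip turns 0.5 into 2^127, which illustrates why the most significant exponent bits are usually reported as the most critical ones in floating-point implementations.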

The question is different when turning to quantized NNs and the use of integers. If the number is represented by its sign and its absolute value as in [15], the situation is similar to floating- or fixed-point numbers. However, in most cases, the integers use a two’s complement representation. In that case, a single bit-flip cannot just change the sign of the number, and a bit-flip in a given direction can either increase or decrease the absolute value. The two usual criteria about the bit-flip direction and the value modification are then no longer consistent. This led the authors of [24] to force an erroneous bit depending on the sign value, in order to move the absolute value closer to zero. But the findings from our case study show that this approach cannot be applied universally and in an undifferentiated manner.
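The loss of consistency between the two criteria can be checked on a few 8-bit values. The sketch below is a minimal illustration assuming 8-bit two’s complement integers; the helper names `flip_int8_bit` and `describe_flip` and the example values are hypothetical and are not taken from the studied implementation.

```python
def flip_int8_bit(value, bit):
    """Flip one bit of an 8-bit two's complement integer."""
    raw = (value & 0xFF) ^ (1 << bit)        # unsigned 8-bit pattern
    return raw - 256 if raw >= 128 else raw  # back to a signed value

def describe_flip(value, bit):
    """Return (bit-flip direction, faulty value, effect on distance to zero)."""
    direction = "1->0" if ((value & 0xFF) >> bit) & 1 else "0->1"
    faulty = flip_int8_bit(value, bit)
    if abs(faulty) > abs(value):
        effect = "further"
    elif abs(faulty) < abs(value):
        effect = "closer"
    else:
        effect = "same"
    return direction, faulty, effect

examples = [
    describe_flip(5, 1),   # positive value, 0->1: 5 becomes 7
    describe_flip(-6, 0),  # negative value, 0->1: -6 becomes -5
    describe_flip(-1, 0),  # negative value, 1->0: -1 becomes -2
    describe_flip(5, 7),   # sign bit, 0->1: 5 becomes -123
]
for example in examples:
    print(example)
```

The same direction 0 → 1 moves a positive value further from zero and a negative value closer to zero, while flipping the sign bit also changes the absolute value.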

Firstly, critical bit-flips can occur in either direction and move the value either further from or closer to zero. This may depend on the type of perturbed data and also on the layer in which the fault occurs. Neither of the two usual criteria therefore holds generally for any NN implementation and any data subset.

Secondly, our findings differ from the observations in [22] that led to the assertion that critical bit-flips are only in the direction 0 → 1, although in both cases 8-bit signed integers are used and the authors of [22] confirmed to us that they also use a two’s complement representation. We therefore investigated this contradiction more deeply. It appeared, as confirmed by the authors, that the observation in [22] was made at the output of the ReLU activation function. This function is usually defined as ReLU(x) = max(0, x). After such a rectification, any wrong negative value is forced to zero and therefore has no further consequence on the completion of the inference computation. The consequence is that a bit-flip can be critical only if it modifies the sign bit or if it modifies another bit of a positive integer. Apart from the sign, the two criticality criteria become consistent again since a bit-flip in the direction 0 → 1 increases the absolute value. The question remains open about the criticality of the sign bits forced to 0. In any case, this does not explain why in our case the conclusion is that critical bits are mostly in the direction 1 → 0.

Looking more deeply into the inference computations, it appeared that the eight most frequent intermediate data modifications induced by bit-flips classified as critical are those transforming −128 into another negative value or zero, except for F7. Surprisingly, it is similar for the bit-flips classified as positive, but with a lower number of recorded occurrences. Anyway, −128 appears as a special value in the studied implementation of LeNet. In fact, we found that in our case the ReLU function is not implemented in the usual way. The author of the C code available at [8] mentions that the operations were derived from an analysis of the computations performed by TensorFlow Lite, at the time the code was written. The result is that the minimal activation output value was set to −128 instead of 0. The accuracy obtained with this description demonstrates that the code is functional, but the intermediate data is no more centered than usual. On the other hand, its histogram shows a very high peak of occurrence of the value −128 and the ReLU function does not filter the bit-flips as usual. We can generalize this finding, saying that the propagation of bit-flips partially depends on the activation functions implemented in the different layers. A usual ReLU will have a different impact compared to a modified ReLU but also compared to other functions such as the Unit Step functions, piecewise linear functions (with a behavior depending also on its value at zero), Gaussian functions or sigmoid, to cite the most common functions found in the literature. This also means that the choice of the activation function, when defining the NN model for a given application, has to be made with care when dependability and trustworthiness have to be maximized.
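The special role of −128 can be illustrated by enumerating the eight single bit-flips of the lower bound of the activation output, which is 0 for the usual ReLU and −128 in the studied code. The sketch below assumes 8-bit two’s complement intermediate data; the helper names are hypothetical, and the enumeration only illustrates the value modifications, not the criticality analysis itself.

```python
def flip_int8_bit(value, bit):
    """Flip one bit of an 8-bit two's complement integer."""
    raw = (value & 0xFF) ^ (1 << bit)
    return raw - 256 if raw >= 128 else raw

def single_bit_flips(value):
    """Faulty values obtained by flipping bits 0 (LSB) to 7 (sign bit)."""
    return [flip_int8_bit(value, bit) for bit in range(8)]

# Lower bound of a usual ReLU output: all bits at 0.
flips_of_zero = single_bit_flips(0)
# Lower bound in the studied code: only the sign bit at 1.
flips_of_min = single_bit_flips(-128)

print(flips_of_zero)  # every flip is 0 -> 1 and moves the value away from zero
print(flips_of_min)   # every flip moves the value closer to zero
```

Starting from 0, all eight bit-flips are in the direction 0 → 1 and move the value further from zero, so both usual criteria agree. Starting from −128, the eight bit-flips produce seven other negative values and zero, all closer to zero, and the flip of the sign bit is in the direction 1 → 0.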

This case study therefore demonstrates that the criteria used to determine whether a bit-flip is critical or not cannot be claimed to depend generally only on the bit-flip direction or the value modification of the perturbed number. A whole set of information has to be taken into account, including the network model, the data representation format, the type of target data, the target layer and the implemented activation function(s).

4.6. Impact on the Efficiency of Hardening Techniques

The first consequence of the findings discussed in the previous sections is that any hardening technique based on one of the usual criticality assumptions can only have a real positive effect on trustworthiness if applied in a pertinent use case. As an example, the technique proposed in [24], if systematically applied to all data types in our use case, would have a very limited impact on dependability. The approach may appear to be pertinent in the case of weights with 80.59% of critical bit-flips corresponding to values further from zero, but not for the whole data with a percentage reduced to 36.86% of potentially corrected critical bit-flips and even less in the case of the intermediate data alone, with a percentage dropping down to 16.53%.

Another very important point is to take into account the noticeable number of positive bit-flips, leading to an increase in accuracy. An efficient hardening technique should allow maximizing the number of critical bit-flips corrected or at least managed in some way but should also minimize the number of positive bit-flips that would be considered critical and managed similarly. Let us consider the previous example. For weights, 77.01% of the positive bit-flips correspond to values further from zero. Let us assume that all bit-flips leading to values further from zero in the weights are corrected, regardless of their positive or negative impact. The NN accuracy loss compared to the nominal behavior would in that case be 0.00093%. It would be 0.00576% without any hardening technique, just benefiting from compensations (0.011% when not taking into account the compensations). Compared to the cost of the redundancy in terms of hardware and power/energy consumption, the decision to apply the protection has to be considered with care, taking into account the nominal inaccuracy of the NN that is, in general, much higher than the 0.00483% improvement obtained in the previous example thanks to the hardening technique (in our case, the nominal inaccuracy is 1.95% without fault).

Finally, to optimize the global robustness and since the most critical bit-flips depend on the data type and the layer, multiple hardening techniques should be efficiently combined in the different parts of the same NN instead of applying a single technique that would not fit all. In addition, the exact location of the hardening should also be optimized, e.g., preferring a protection on the outputs of some activation functions.

5. Conclusions and Further Work

We have shown in this paper that revisiting usual claims about the characterization of most critical bit-flips in NNs led to identifying several important points to better ensure the trustworthiness of AI-based applications in harsh environments.

The relatively large number of positive bit-flips and the resulting compensation on the global NN accuracy have been highlighted. Hardening techniques can be optimized for reduced hardware overhead by favoring the less impacting errors, e.g., forcing bits to a predefined value or forcing a number closest to zero. However, designers must be aware that such approaches can also prevent compensation from occurring, globally leading to reduced benefits. Investigating more deeply the conditions for these unexpected corrections is planned in further work, aiming at taking better advantage of them and avoiding counter-productive mitigation actions.

Our findings also show that the bit-flip impact (direction of the bit-flip, or modified number value) is only a piece of information allowing us to evaluate the criticality of a fault. It was already mentioned in the literature that the considered network model, layer and data type had to be taken into account. We detail the differences for our case study on the basis of extensive experiments. The data representation format was also considered previously, but we extend the conclusions to the exact representation format of signed integers that may change the validity of some claims. Our detailed analysis also demonstrates that the choice of activation functions can noticeably impact the validity of some criticality criteria. To our knowledge, this point was previously overlooked but could be an important design parameter to define trustworthy AI-based features.

These conclusions have been derived from one quantized version of LeNet, which is a simple model compared to more recent ones such as ResNet or MobileNet, to cite a few. It is not possible to generalize the quantified results provided in this paper. But the qualitative conclusions can be generalized and have a wide significance for design practice since they are based on valid counterexamples and insights to be taken into account for any model. Obtaining quantified evaluations on more contemporary models is part of further work but will require noticeably heavier experiments to reach a similar level of comprehensiveness.

Let us mention that preliminary results have shown that training the NN with a different dataset may also exhibit a different sensitivity characterization of the layers and data types. If confirmed, the dataset and potentially the training procedure (e.g., number of epochs) may have to be added to the global information required to decide on the criticality of a given fault and therefore on an adequate hardening scheme. This question will also be part of further work.

Other extensions of the study are planned in order to further increase the trustworthiness of Edge AI implementations, considering also faults occurring in the micro-architecture of representative processing elements or AI accelerators. One step that would be meaningful for many different hardware or software implementations will be to analyze the criticality of Byzantine faults with, e.g., NN parameters modified during their use within an inference and thus having two different values during the sequence of computations of this particular inference even if not modified in the main memory.