1. Introduction

The polygon partition problem has been extensively studied, focusing on dividing rectangular and rectilinear shapes in n-dimensional bitmaps into simple, non-overlapping n-cuboids [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19,

20,

21,

22,

23,

24,

25,

26,

27]. The problem has applications in robotics [

1,

14], DNA microsequencing [

1], Very-Large-Scale Integration (VLSI) [

1,

18], audio propagation modeling [

2,

3], bitmap and data compression [

1,

4,

5,

6,

16], object recognition [

6,

14], image processing [

6,

22], manufacturing and feature extraction [

6], geographic information systems (GIS) [

7], autonomous route-planning [

8], data visualization [

9], database management [

10,

22], load balancing [

10,

11], motion detection and tracking [

14], floorplan optimization [

20,

21], and parallel computing [

22].

Recent (post-2019) contributions have further diversified the scope and methodology of bitmap and rectilinear polygon decomposition. Hanauer et al. [

23] introduced an area-weighted rectangle covering technique for rectilinear polygons with holes, benchmarking integer linear programming (ILP) and approximation or heuristic strategies on large-scale datasets. Biedl et al. [

24] studied conforming partitions of rectilinear polygons with a bounded (low) stabbing number, offering fixed-parameter-tractable algorithms, NP-hardness proofs, and theoretical bounds on partition complexity, thus broadening the theoretical landscape of rectilinear partitioning. In parallel, Oliveira et al. [

25] surveyed two-dimensional rectangular cutting and packing problems in operations research applications, highlighting greedy and skyline-based heuristics. However, these methods address packing predefined item sets into containers rather than decomposing arbitrary foreground regions in bitmaps. Complementing these, Jing et al. [

26] developed a fast content-aware partitioning algorithm for video coding blocks that segments images using gradient structural similarity and texture features. While this illustrates how perceptual models increasingly inform partitioning decisions in applied settings, its domain (versatile video coding units) and objectives (rate-distortion/time trade-offs) differ substantially from element-level bitmap decomposition. These works highlight the rectangular decomposition strategies’ growing methodological diversity and interdisciplinary relevance. Nonetheless, none directly evaluates a pure greedy heuristic for arbitrary bitmap foregrounds to minimize the number of axis-aligned n-cuboids; our empirical finding—that a simple greedy approach can outperform more advanced methods on bitmap decomposition benchmarks—remains novel in demonstrating its practical effectiveness and scalability.

Additional applications may include metrology, dimensionality reduction, component analysis, game and architectural design, simulations, and data analytics [

27].

This work presents four novel methods for decomposing rectangular and rectilinear shapes into simple, non-overlapping n-cuboids. To the best of our knowledge, the specific variants of the greedy heuristic presented here (Greedy, Greedy BT, Greedy PQ) and the iterative integer linear programming (IILP) approach have not appeared in prior literature and were developed independently in the context of element-level bitmap decomposition.

While building on the foundational problem addressed in [

27], the study differs substantially in scope, approach, and implementation. The methods in [

27] are based on sequential region-growing heuristics or, in some cases, randomized selection strategies. In contrast, our proposed algorithms are deterministic, modular, and formally analyzed in terms of runtime complexity and computational overhead. Notably, the pure greedy method introduced here uses a volumetric-first decomposition strategy that consistently performs well across a wide range of synthetic and structured input shapes. Although neither our methods nor those in [

27] are fully invariant to rotation, our results demonstrate that even this simple strategy can achieve near-optimal decompositions in practice. These distinctions are critical for pattern recognition workflows where predictability, generality, and computational scalability are essential.

To the best of our knowledge, Ref. [

27] is the only published method explicitly designed to partition general n-dimensional binary shapes—including non-convex and holed structures—into disjoint n-cuboids with topological correctness. While works [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19,

20,

21,

22,

23,

24,

25,

26] were reviewed, none satisfy all the following criteria: applicability to arbitrary dimensions (n ≥ 1), ability to handle internal holes or concavities, output disjointness, and full label coverage. As such, Ref. [

27] is the sole benchmark suitable for evaluating the proposed methods’ correctness and generality. Recent related studies primarily focus on 2D or 3D segmentation, convex partitioning, or object fitting and thus fall outside the practical scope of this study.

(Note: In the following list, the phrase “except [

27]” indicates that the corresponding feature is already present in the methods from [

27]; therefore, the new techniques do not improve over [

27] in that regard.)

These methods improve upon previous techniques (as described in [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19,

20,

21,

22,

23,

24,

25,

26,

27]) in the following ways:

Generalizability to any number of dimensions (

n ∈ ℕ), except [

27];

Ability to handle shapes with holes and concavities, except [

27] and some others;

Deterministic partitioning not based on randomness, unlike some methods in [

27];

Equal or superior partitioning quality compared to methods in [

27], often at the cost of increased processing time.

Additionally, one proposed method achieves near-constant runtime and exhibits reduced sensitivity to shape orientation compared to multiple existing techniques. These improvements establish a new benchmark for high-dimensional rectangular and rectilinear decomposition, making the proposed methods well-suited for complex computational geometry and pattern recognition problems.

In the context of this work, the polygon partition problem is defined as follows:

An n-dimensional bitmap

A with shape shp = (

s1,

s2, …,

sn) can be represented as a function, as shown in Equation (1) (Assume

si ≥ 1 for all

i ∈ {1, …,

n}.)

where

A(x) = 1 if the voxel/pixel/cell x belongs to the foreground (i.e., “filled”);

A(x) = 0 if x is background (i.e., “empty”).

The objective is to partition the foreground {x | A(x) = 1} into a set of non-overlapping, axis-aligned n-dimensional cuboids (also referred to as rectangles, cuboids, hyper-cuboids, or n-cuboids), ideally using as few and as large n-cuboids as possible.

Let I be the set of all valid coordinates in

A:

Equation (2) represents the index space of the n-dimensional bitmap, where si is the size of the bitmap along dimension i and i is an index ranging over the dimensions (i = 1, …, n).

(If one allows si = 0, I would be empty and the foreground empty; this work focuses on non-empty bitmaps, so take si ≥ 1.)

Define the foreground set U

0 as follows in Equation (3):

In this work, an n-cuboid is an axis-aligned hyperrectangle in ℤn (the n-dimensional integer lattice) that satisfies the following properties:

Here, is the binomial coefficient and ! denotes the factorial function.

Since the methods only use 2D faces, we focus on this quantity. The derivation follows standard combinatorial reasoning and is detailed in

Appendix A.

This axis-aligned bounding box (n-cuboid) B ⊆ ℤn is uniquely defined by its two opposite corners:

The minimum corner m = (m1, …, mn);

The maximum corner M = (M1, …, Mn).

Here, i is an index ranging over the dimensions (i = 1, …, n), and each coordinate satisfies mi ≤ Mi. These two corners define the n-cuboid as the Cartesian product of the integer intervals [mi, Mi], and the terms minimum and maximum refer to the component-wise minimum and maximum values along each axis.

The n-cuboid is then defined as shown in Equation (4):

The volume (or size) of

B is calculated as demonstrated in Equation (5):

A valid decomposition is a set of disjoint axis-aligned n-cuboids satisfying the following:

where

Bi and

Bj are two distinct n-cuboids in the decomposition;

i,

j ∈ {1, 2, …,

k} are indices referring to specific n-cuboids; and ∅ represents the empty set, meaning two n-cuboids share common elements.

When finding an optimized partition, n-cuboid neighborhoods can be processed using hypercubic mesh processing techniques from [

28], as demonstrated in [

27].

The problem of decomposing an n-dimensional bitmap into the minimum number of axis-aligned n-cuboids is known to be NP-hard (see, for example, [

29,

30]). Informally, a decision problem is NP-hard if every problem in NP can be polynomial-time-reduced to it, implying that it is at least as hard as the hardest problems in NP. Consequently, unless P = NP, no known polynomial-time algorithm can optimally solve all instances of this problem.

Greedy algorithms are heuristic methods that incrementally build a solution, always making the locally optimal choice with the hope of reaching a global optimum. At the same time, they are often fast and straightforward to implement, but they do not generally guarantee optimal solutions [

31]. One proposed method improves upon a greedy strategy by incorporating backtracking (BT), a systematic search technique exploring partial solutions and abandoning paths that cannot lead to valid or improved solutions [

31,

32]. This approach, implemented using depth-first search (DFS), ensures that alternative decompositions are considered, potentially leading to better results. DFS is a traversal algorithm that explores a structure as far as possible before backtracking. It is commonly used for recursive problem-solving and is particularly useful in partitioning algorithms that iteratively refine or expand regions before adjusting previous decisions [

31,

32].

Another method, based on a greedy strategy like the two discussed before, leverages priority queues (PQs) to prioritize larger or more promising subregions during decomposition. A priority queue is an abstract data structure where elements are dequeued based on priority rather than insertion order [

31,

32]. This structure is commonly implemented with heaps and is useful in optimization problems where elements must be processed in an order that maximizes efficiency or quality of results [

31,

32]. The proposed algorithm efficiently selects the next partitioning step using a PQ-based approach, leading to more structured and adaptive decompositions than traditional greedy methods.

A different approach combines integer linear programming (ILP) with an iterative refinement process. ILP is a mathematical optimization framework where some or all decision variables are constrained to be integers, making it suitable for solving combinatorial and discrete problems optimally, albeit at a higher computational cost [

32,

33]. Unlike purely ILP-based methods, this iterative approach applies ILP locally to subproblems, refining the partition step by step. This balances computational feasibility with the ability to produce highly optimized decompositions. In short, the method functions as an iterative wrapper around ILP subproblems and is referred to throughout the paper as IILP.

Functional, lightweight, ready-to-use implementations of the four methods are provided in the

Supplementary Materials, written in [

34].

2. Materials and Methods

2.1. The Existing Methods and Dataset

The three methods in [

27] were chosen as benchmark references because, to the authors’ knowledge, they were the only ones able to handle convex, concave, and holed n-dimensional shapes and were easy to implement.

The Special method identifies and segments non-zero regions in an image by locating the first unvisited foreground element and creating slices extending along each dimension until encountering a background or visited element [

27]. It adjusts these slices to exclude such components, defining the n-cuboid corners. This process repeats until all foreground elements are visited [

27].

The General I method starts at a target (seed) point, iterating forward and backward in each dimension to find the n-cuboid boundaries, updating the coordinates upon encountering a background pixel [

27]. It marks foreground regions, adjusts the n-cuboid by refining the area of interest, and splits it into smaller regions if the shape remains unchanged [

27].

The General II method generates permutations of slice dimensions to partition the array, calculates the maximum length of non-zero elements along each axis, and iterates through these permutations to find the best-fitting n-cuboid for the target label [

27]. It refines the target block by marking the starting point (target element) and identifying the optimal n-cuboid enclosing it using the best-fitting permutation [

27].

While the Special method decomposes the entire array into regions, General I and II are designed to find only one n-cuboid encompassing a starting (or target) point [

27]. The whole image can be processed by repeatedly applying these two methods and randomly choosing an unvisited foreground element [

27].

The three methods from [

27]—Special, General I, and General II—represent prior state-of-the-art approaches to n-dimensional bitmap decomposition. Special performs a single region-growing pass over the entire image and is deterministic, making it efficient and reproducible. However, it may produce suboptimal decompositions for images with irregular structures or intricate internal boundaries. Conversely, General I and II always select one n-cuboid at a time using randomized seed-point selection. While these methods are more flexible, they can yield non-deterministic outputs, lacking formal runtime guarantees. In contrast, the algorithms introduced in this work aim to balance runtime efficiency and decomposition quality, particularly on complex shapes, while remaining fully deterministic and formally analyzed.

The dataset used for testing (

Table 1) was the same as in [

27]. It consists of 13 bitmap images, spanning 1D to 4D, with dimension axis lengths varying between 4 and 10 elements. The foreground density (number of filled elements divided by the total image size) ranges from 3.5% to 50%.

2.2. Proposed Methods

The four decomposition methods presented in this study were selected to represent a progression of increasing algorithmic complexity and search sophistication. Pure greedy selection serves as a fast and interpretable baseline. Backtracking extends this approach by locally exploring multiple continuations beyond the first optimal choice. The priority queue method incorporates a deferred decision mechanism, giving it a limited global view of alternatives. Finally, the iterative ILP-based strategy applies exact optimization, sacrificing speed for potential improvements in minimality. This range enables structured comparisons between fast heuristics and more theoretically grounded optimization techniques.

2.2.1. Pure Greedy

The pure greedy approach iteratively selects the unvisited foreground region with the largest volume, forming a valid n-cuboid. Once selected, this n-cuboid is marked as visited and added to the decomposition. The process repeats until the entire foreground is covered.

Mathematically, the pure greedy method can be expressed as an iterative covering of the set of “foreground” points by successively extracting the single largest axis-aligned box contained in the unvisited set. Each iteration labels and removes that box until no unvisited points remain. This procedure is greedy because it always picks the largest immediate box, rather than trying multiple smaller boxes that might, later, lead to fewer total boxes overall. Nonetheless, this approach yields a very compact decomposition.

The result will be a set {B1, B2, …, Bk} (k ∈ ℕ) of boxes (axis-aligned, solid n-cuboids), each assigned a unique label.

The procedure of the pure greedy decomposition algorithm is as follows:

Initialization

Let k = 0, the iteration counter.

Define the set of unvisited foreground points as shown in Equation (8):

- 2.

Iterative Partitioning

While U ≠ ∅, perform the following steps:

Step 2.1: Finding the Largest Expandable Box

For each point

p ∈ U, compute the maximal axis-aligned n-cuboid

Bp centered at

p that remains inside U. Formally,

In Equation (9),

- •

p ∈ B (m, M) ⊆ U.

- •

m, M are expanded along each dimension until further expansion would exit U.

Procedure:

- •

Initialize m = M = p.

- •

For each dimension i:

- ○

Expand Mi in the positive direction as long as all new points remain in U.

- ○

Expand mi in the negative direction as long as all new points remain in U.

Step 2.2: Selecting the Largest Box

Among all computed boxes

Bp for

p ∈ U, choose the one with the maximal volume, as follows:

In Equation (10), |Bp| denotes the volume (number of elements) of Bp.

Step 2.3: Marking the Selected Box

- •

Remove all points in B* from U (mark them as visited).

- •

Assign them the new label k + 1.

Step 2.4: Increment Counter

- •

Update the iteration counter as shown in Equation (11):

- 3.

Output

Once no unvisited foreground points remain, the final decomposition consists of

k labeled n-cuboids as defined in Equation (12):

where

k ∈ ℕ.

Each n-cuboid Bj is assigned a unique label j (j ∈ ℕ) in the final labeled image.

The method has “built-in” optimization because it inherently selects the maximal possible axis-aligned n-cuboid around each unvisited point at every step. This local optimization eliminates the need for a separate post-processing step to merge adjacent regions. As a result, each labeling decision is final upon assignment, leading to no changes in label count before and after optimization and no additional reductions in the results table.

At each iteration

t, the algorithm selects an n-cuboid

such that

In Equation (13),

- -

Ut is the set of unvisited foreground points at iteration t;

- -

denotes any axis-aligned n-cuboid fully contained within the unvisited foreground set at iteration t;

- -

is the volume of n-cuboid B;

- -

(m, M) are the opposite corners defining B;

- -

argmax selects the B with maximum volume from all valid candidates.

Since the algorithm greedily expands each selected n-cuboid along every dimension until further expansion would violate disjointness or exit Ut, guaranteeing that no larger n-cuboid could have been placed at that iteration. Thus, every n-cuboid selected is locally maximal, meaning it cannot be expanded further when selected.

However, this does not mean that the final decomposition is minimal.

Suppose the pure greedy algorithm picks a smaller n-cuboid

B1 out of multiple options. After selecting

B1, it must choose another n-cuboid, then another, until the entire region is covered. The total number of n-cuboids required in the worst case is

s1, i.e., the one shown in Equation (14):

Since s1 can be larger than the number of optimal n-cuboids , the greedy method may produce many more n-cuboids than necessary.

A globally optimal decomposition minimizes

k, meaning

In Equation (15), represents any possible valid decomposition (any other way of covering U0 with disjoint n-cuboids).

In contrast, the pure greedy method may find a decomposition with a suboptimal

k as shown in Equation (16):

because it only optimizes locally, not globally.

In conclusion, the pure greedy method guarantees maximality but not minimality, proving that it is locally but not (always) globally optimal.

The pure greedy method was implemented in [

34], utilizing [

35] for numerical and matrix operations. The worst-case computational complexity is estimated as

O(

V2⋅

D), where

V is the number of foreground elements in the input array, and

D is its dimensionality. This complexity arises from the brute-force search for the largest unvisited n-cuboid in each iteration.

The method evaluates up to O(V) possible starting points at each step. For each candidate, it attempts to expand the n-cuboid as far as possible along all D dimensions, which can take up to O(V) steps in the worst case. This results in an overall O(V2) factor. The additional O(D) factor accounts for the dimensional expansion process. However, D is usually small in practical applications, so the dominant growth term remains O(V2).

The figure titles have three tiers: “Main procedure” identifies the method’s top-level function, “Procedure” refers to a named subfunction invoked by the main function or another subfunction, and “Subprocess” denotes an internal code segment within a subfunction that is not a separate function in the Python (version 3.10) code.

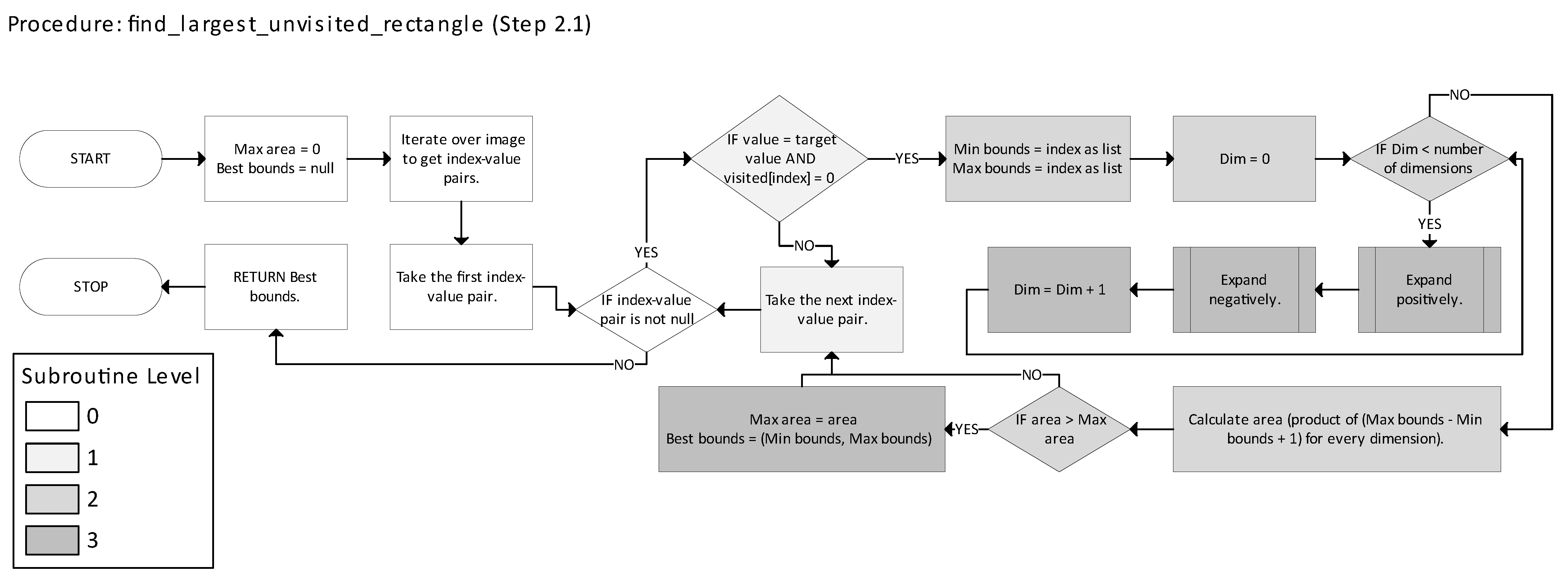

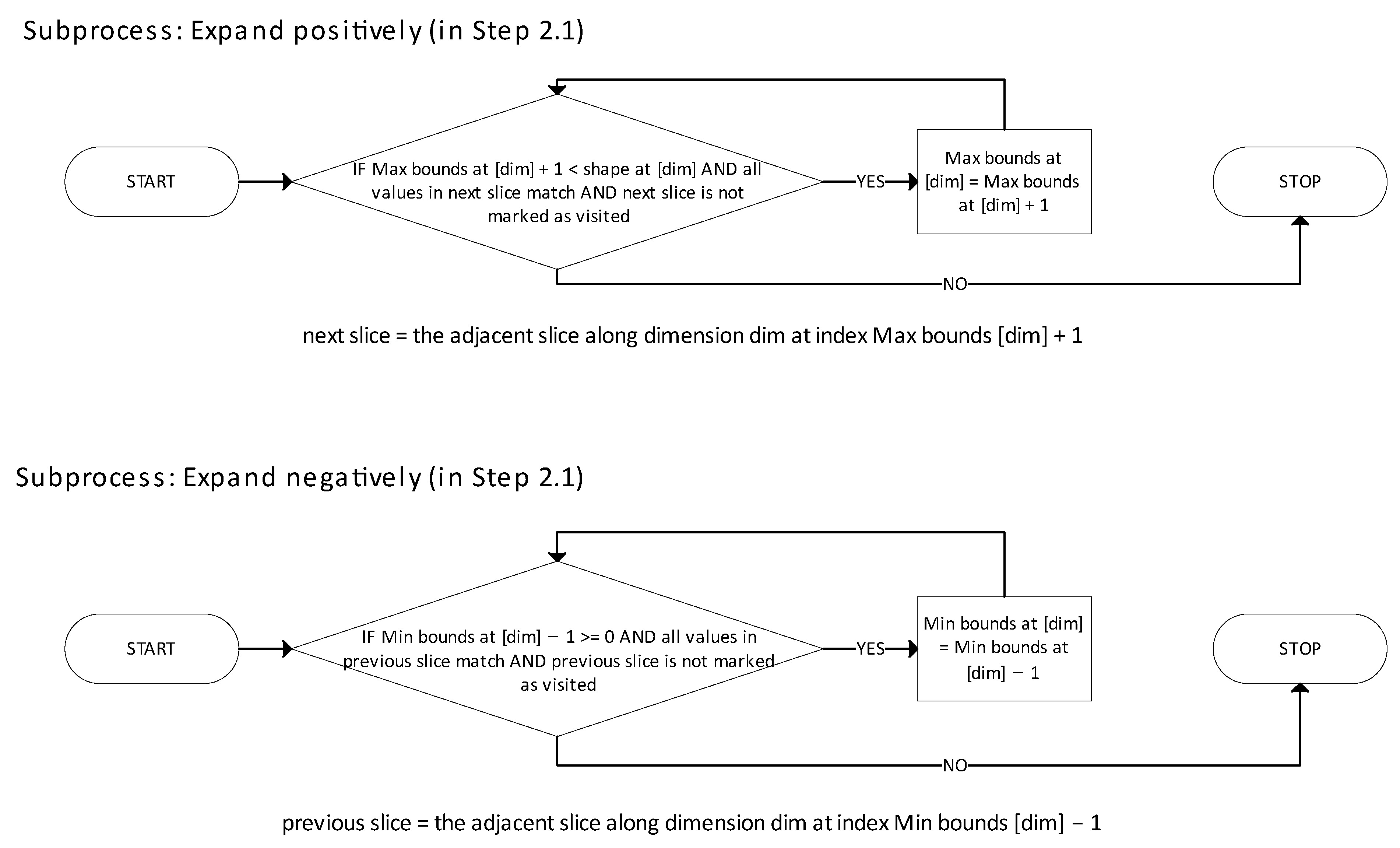

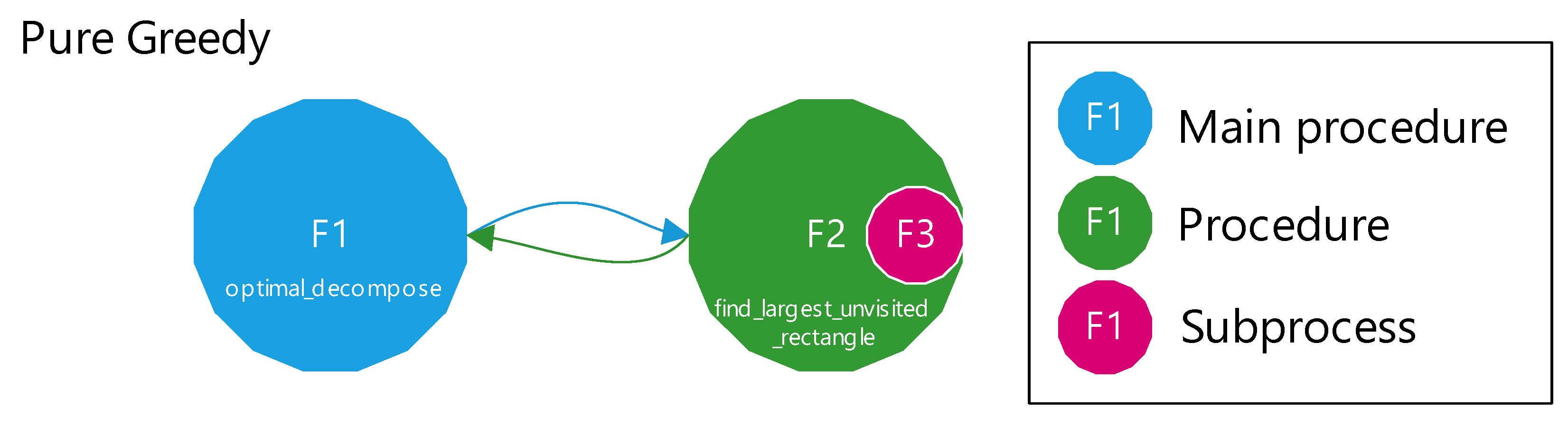

Figure 1 illustrates the main function of the Python implementation of the pure greedy method.

Figure 2 presents the subfunction responsible for identifying the largest unvisited n-cuboid, while

Figure 3 details the logic used to expand the n-cuboid. The step numbers shown in each figure correspond directly to the algorithm description provided earlier. In

Figure 1, the legend includes a number in parentheses for each step, indicating how many blocks are associated with that step; for example, Step 1 (Initialization) consists of three blocks. The table at the left or bottom of the figure lists the step identifiers and their corresponding descriptions; this visual key links the diagram to the step-by-step procedure defined earlier in the text (e.g., Step 2.1 corresponds to “Finding the Largest Expandable Box”). In

Figure 2, a “Subroutine Level” color tag denotes the depth of code nesting (indentation) for each process block. Level 0 corresponds to top-level statements within a function, with increasing levels representing deeper code nesting.

Figure 4 provides a top-level overview of the pure greedy method. Each node is labeled with an F identifier corresponding to the figure number of the detailed flowchart for that function (for example, F1 refers to

Figure 1).

2.2.2. Greedy with Backtracking

The greedy method with backtracking (Greedy BT) combines a depth-first search with a greedy expansion strategy. It aims to find larger n-cuboids than a purely greedy method by exploring multiple extensions before committing to a choice. Theoretically, this approach yields more globally optimal results by balancing local expansion with selective backtracking.

To begin the decomposition, the set of valid endpoints from m is defined as shown in Equation (17):

Our goal is to choose, for each starting point m with

B(

m) = 1 that has not yet been “covered” by a previously chosen n-cuboid, an endpoint as defined in Equation (18):

that maximizes the volume according to Equation (19):

For each dimension

i, the maximum extension

ei is defined as shown in Equation (20):

That is,

ei is the furthest index along dimension

i where the n-cuboid can be extended without hitting a 0, while all other coordinates are held fixed at their start values. This gives an upper bound for each coordinate of any valid endpoint

; that is, for all

we have

Mi ≤

ei. A more abstract form of Equation (20) is shown in

Appendix B.

The algorithm uses a depth-first search (DFS) to explore candidates

M for endpoints. When at a current candidate

M = (

M1, …,

Mn), any extension must satisfy

Mi ≤

ei ∀

i. The key observation is that if we define an upper bound

V(

M) on the volume

V that any extension of the current candidate can achieve by applying Equation (21) as follows:

In the above expression:

- -

denotes the axis-aligned n-cuboid from start point m to current candidate endpoint M;

- -

represents the maximal extensions in each dimension;

- -

estimates how many extensions remain from M to e;

- -

gives an upper bound on any descendant n-cuboid’s volume starting from m and extending beyond M.

Thereafter, for every descendant

that is an extension of

M (i.e., with

), it holds that, as shown in Equation (22):

If at any point we have a candidate M with (where is the best endpoint found so far), then no further extension of M can improve upon , and the DFS branch can be safely pruned.

The complete decomposition of the bitmap is achieved by scanning all points

x in the domain of

A (e.g., via

). For each

x such that

A(

x) = 1 and which has not yet been “visited” (i.e., included in any previously chosen n-cuboid). The above DFS procedure is invoked starting from

m =

x. Once an n-cuboid

is chosen, all points in that n-cuboid are marked as visited. In this way, the algorithm partitions the set as shown in Equation (23):

into disjoint n-cuboids.

Proof that the method correctly finds, for each starting point m, a maximal n-cuboid (concerning volume) and that the entire set {x: A(x) = 1} is partitioned by these n-cuboids is as follows:

- 1.

Termination

Bounded DFS Tree

For a given starting point

m, each coordinate

Mi is bounded by

ei. Therefore, the number of possible endpoints is at most as described in Equation (24):

which is finite. Hence, the DFS is over a finite tree and must terminate.

- 2.

Soundness of the Pruning Rule

Monotonicity of the Volume Function

Let

M be any candidate endpoint and

be any extension of

M (i.e.,

). Then, by definition as in Equation (25):

Pruning Validity

Suppose that at some candidate

M we have as shown in Equation (26):

where

is the best endpoint found so far. Then, for every extension

of

M as defined in Equation (27):

Consequently, no descendant of M can yield a volume more extensive than the current best, and it is safe to prune the branch at M.

- 3.

Optimality of the DFS

Completeness

The DFS algorithm explores all possible candidates except those pruned by the safe rule above. By the pruning argument, no pruned branch could contain an endpoint with a higher volume than .

Inductive Argument

Assume by induction that, for all nodes up to depth

k, the algorithm has correctly maintained the invariant that any unfinished candidate (or descendant) cannot exceed the current best volume

if its upper bound is not higher than

. Then at depth

k + 1, if a candidate were to yield a higher volume than

, its branch would not have been pruned. Hence, when the DFS completes, we must have what is shown in Equation (28):

- 4.

Global Partitioning

Disjointness

For each x in the domain of A such that A(x) = 1, the algorithm checks whether x is already “visited”. When an n-cuboid is selected, all are marked as visited. Thus, no point is assigned to more than one n-cuboid.

Coverage

The outer loop (iterating over all x) ensures that every x with A(x) = 1 not covered by a previously selected n-cuboid becomes the starting point for a new DFS. Therefore, every such x is eventually included in one of the n-cuboids.

In summary,

- -

The DFS terminates because the search space is finite.

- -

The pruning rule is valid because the volume function is monotonic, and any descendant’s computed upper bound V(m) is overestimated.

- -

The method is complete in finding the maximal-volume n-cuboid starting at each m, and the global partition is both disjoint and covers all required indices.

However, dense images, where most or all foreground elements are ones (or non-zero), can cause performance issues due to several factors. Firstly, in a dense matrix, nearly every contiguous block of ones is a valid candidate for an n-cuboid. The DFS procedure must consider many possible endpoints because every dimension can be extended nearly to its maximum. This creates an exponentially vast search space of potential n-cuboids, or a combinatorial explosion of candidates. Secondly, the algorithm prunes branches by computing an upper bound for the volume of any extension of a candidate endpoint M, as described in Equation (21). In a dense image, the maximum extensions ei are very high because there are few (if any) zeros to stop the extension. Thus, the upper bound remains significant, and the pruning condition rarely triggers, forcing the DFS to explore many branches, leading to ineffective pruning.

The greedy method with backtracking, like the pure greedy approach, was implemented in [

34], utilizing [

35] for numerical and matrix operations. The worst-case computational complexity is estimated as

, which expresses the exponential growth in terms of the sizes of each dimension, when each dimension

i has a different size

Di, and the image is n-dimensional. This complexity reflects that in the worst-case scenario (e.g., a dense matrix), the DFS could potentially explore every candidate n-cuboid defined by valid endpoints in each dimension.

The implementation consists of three core parts:

Validation, which checks if a candidate n-cuboid is within bounds and free.

Search that employs a DFS with heuristics and caching to find a near-optimal n-cuboid from a given start.

Marking and partitioning ensure that once an n-cuboid is found, it is not reused, while the primary function labels the regions accordingly.

The modular design assigns each function a clear purpose, making the code easy to follow and maintain. Using a cache prevents redundant calculations, which is especially beneficial in recursive DFS. The search applies a heuristic by sorting the dimensions by available extension. The upper-bound pruning, where the current volume is multiplied by the maximum possible extension in each direction, avoids unnecessary recursion. Isolating the marking of visited regions and partitioning logic into separate functions improves the testability and reusability of the code in different contexts. Still, limitations introduced by expensive slicing operations, high overhead from recursion and caching, and iteration over all indices remain.

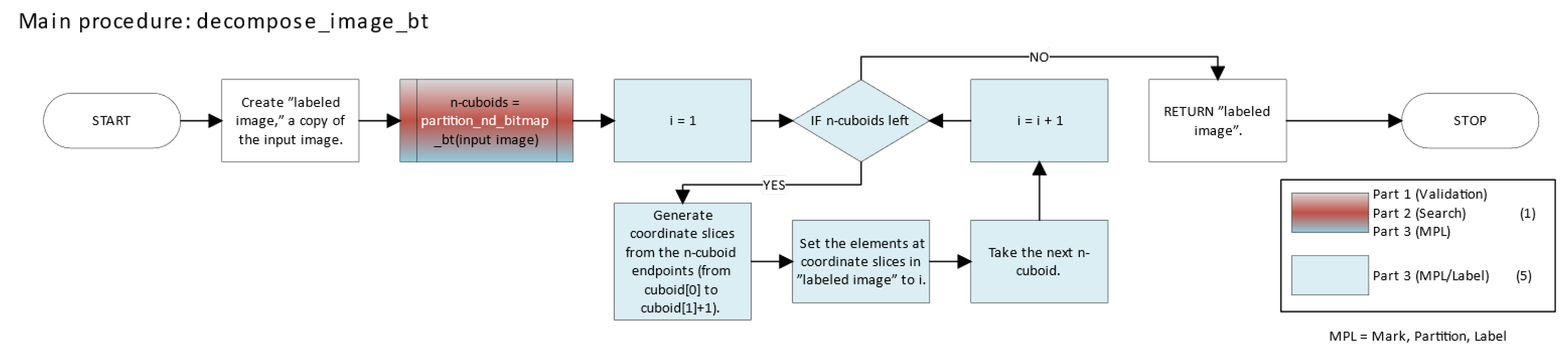

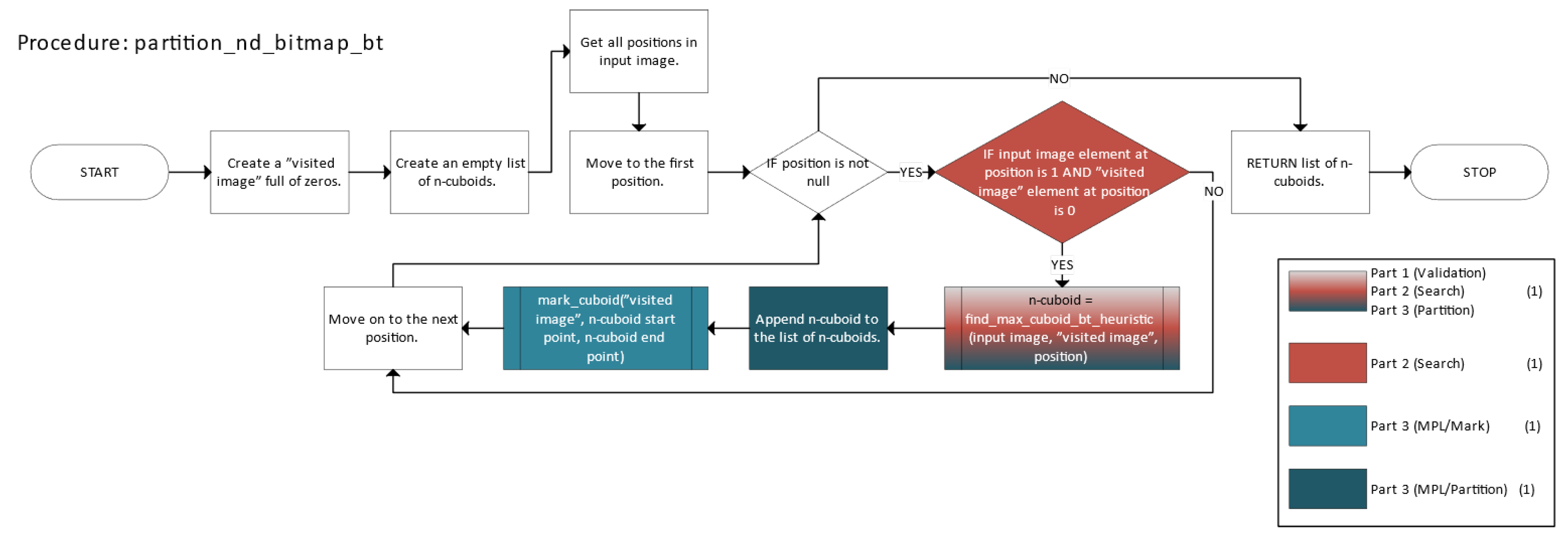

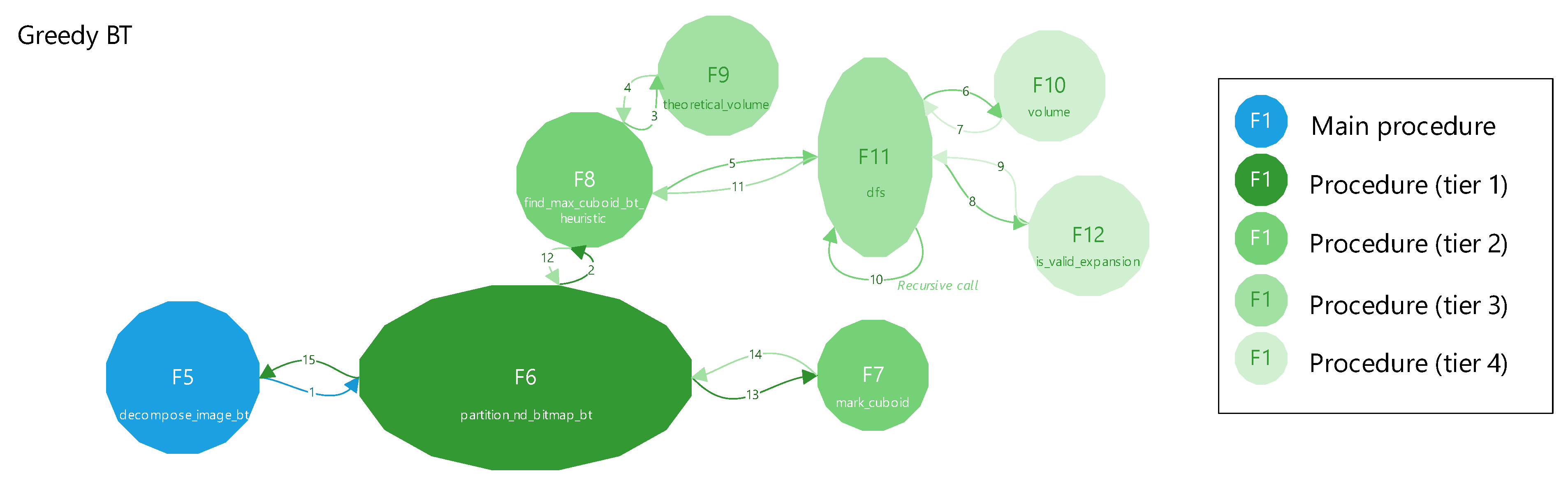

Figure 5 shows the main function of the Python implementation of the Greedy BT method.

Figure 6 depicts the partition function (partition_nd_bitmap_bt), which includes validation, search, and parts of the marking, partitioning, and labeling (MPL) stages for the n-cuboids.

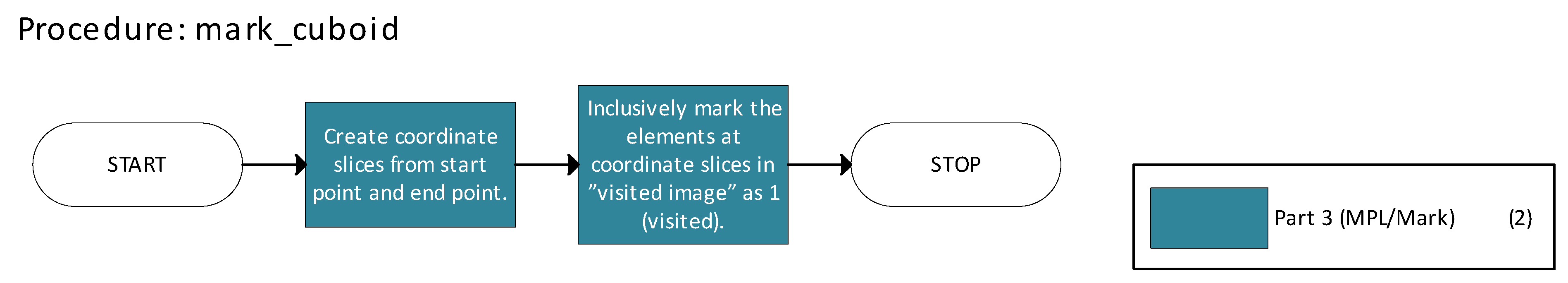

Figure 7 details the mark_cuboid function responsible for marking regions corresponding to n-cuboids as visited.

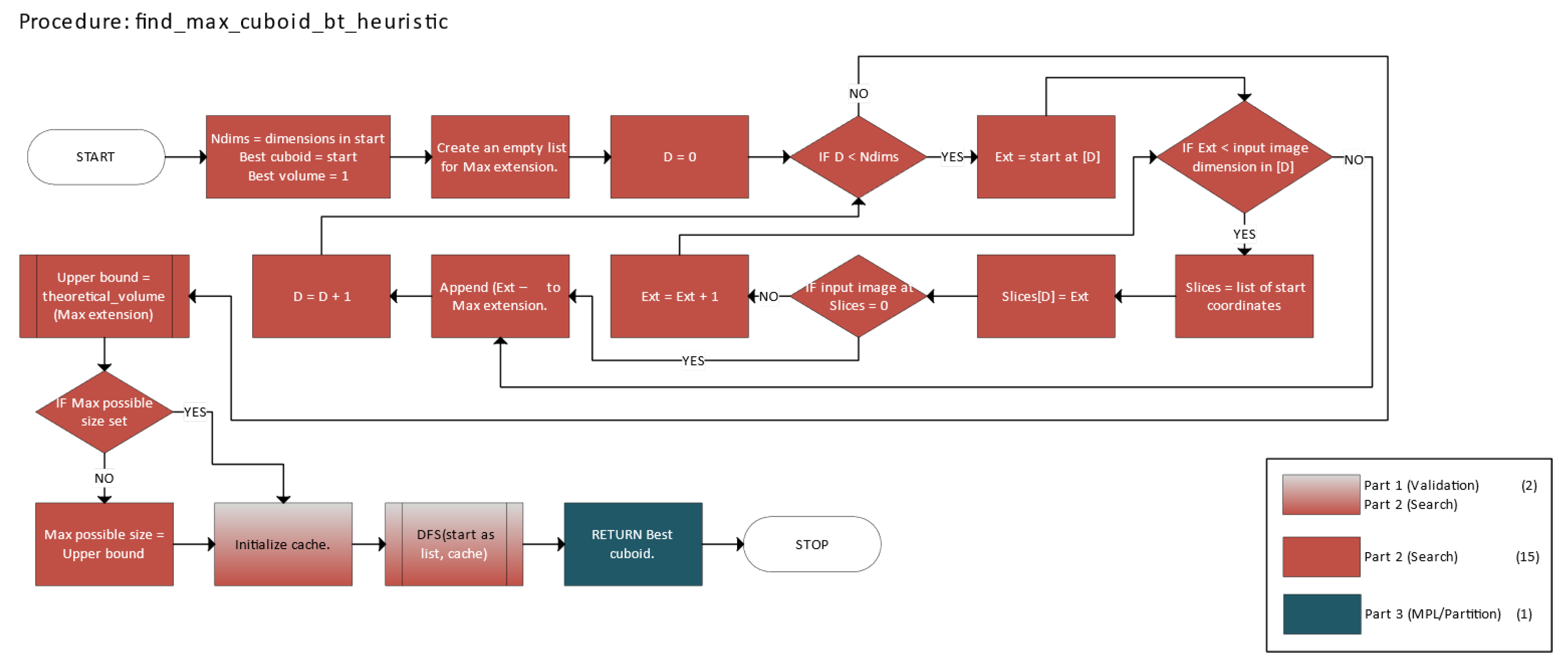

Figure 8 describes the find_max_cuboid_bt_heuristic function, which uses heuristics and backtracking to identify the largest available n-cuboid.

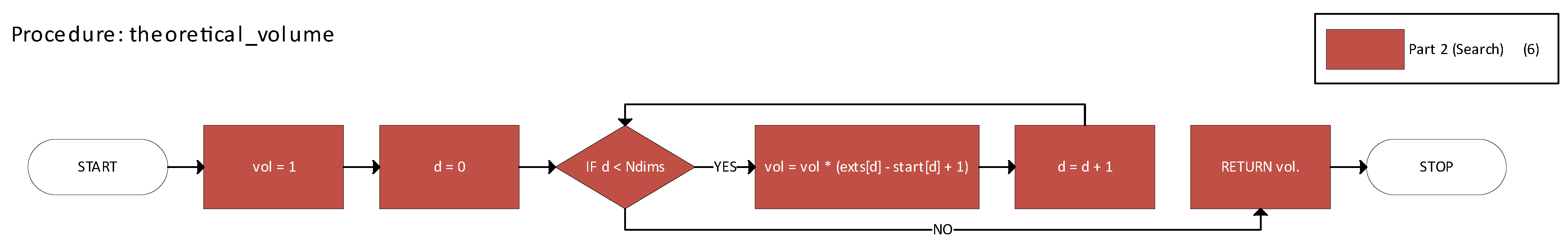

Figure 9 illustrates the theoretical_volume function used to compute the maximal theoretical size of a given n-cuboid.

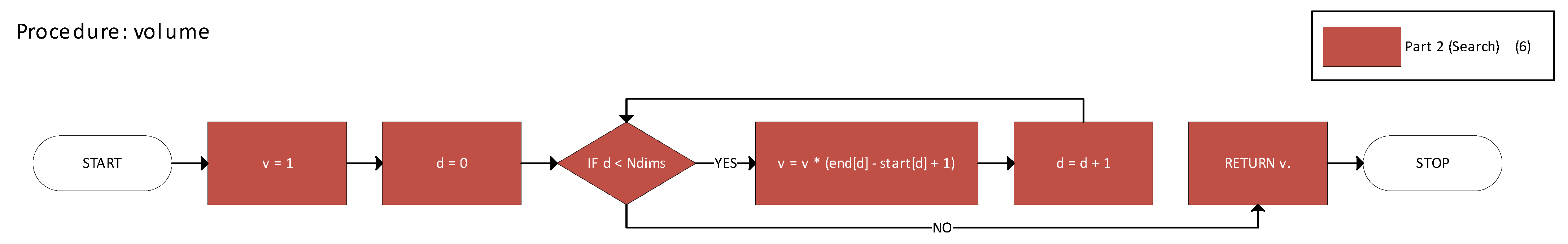

Figure 10 shows the volume function for calculating the actual size of an n-cuboid.

Figure 11 documents the dfs function used to explore candidate n-cuboid extensions.

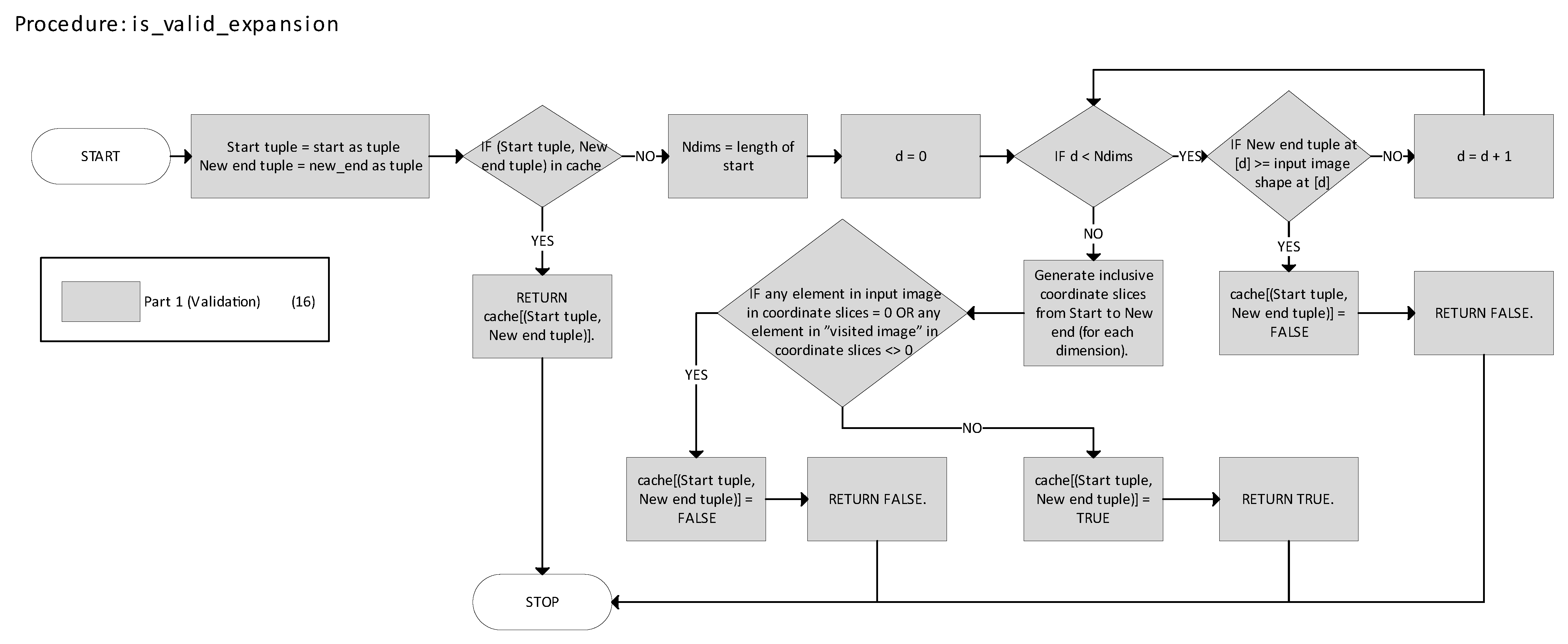

Figure 12 represents the is_valid_expansion function, determining whether an n-cuboid expansion is valid. In

Figure 5 and

Figure 12, parts 1–3 correspond directly to the three core components of the method described earlier: validation, search, and MPL. Each part is color-coded, and blocks involving multiple parts are shown with a gradient fill. For example, in

Figure 5, the block corresponding to partition_nd_bitmap_bt is shaded with a three-color gradient, indicating that it contributes to all three core parts of the method. The number in parentheses in the legend indicates how many blocks are associated with each part.

Figure 13 presents a top-level function call graph of the Greedy BT method. Each function is labeled by its figure number (F*), and the functions are tiered based on their call depth from the main procedure. For example, reaching the volume function (F10) from the main function decompose_image_bt (F5) requires four levels of calls. The arrows indicate execution flow, which proceeds in a depth-first, clockwise traversal. Additionally, superimposed numbers indicate the order in which function calls and returns are executed; for example, the function in F6 is invoked at step 1, which subsequently calls F8 at step 2, and control eventually returns to F5 at step 15.

2.2.3. Greedy with a Priority Queue

The greedy method with a priority queue (Greedy PQ) combines a greedy expansion strategy with a heap-based data structure, aiming to identify larger n-cuboids than a purely greedy approach. Unlike the standard greedy method, which immediately commits to a local expansion, Greedy PQ evaluates multiple expansion options in advance, prioritizing those that lead to potentially more optimal decompositions. This theoretically enables more globally favorable results while maintaining greedy efficiency. However, empirical results vary across images.

In contrast to the two previously discussed greedy methods, Greedy PQ prioritizes local expansions based on a priority queue rather than following a fixed order. However, this technique does not inherently enforce unique labeling for all disconnected foreground regions nor explicitly consider adjacency relationships between elements. As a result, it may incorrectly merge diagonally connected foreground elements, leading to errors in decomposition. A connected-component labeling (CCL) preprocessing step using 2n-connectivity is applied to the input image to ensure correct partition (compare with the approaches of Hoshen–Kopelman [

36] and Hawick et al. [

28]). In this step, two elements belong to the same component if they share an (n − 1)-dimensional face (e.g., 4-connected in 2D, 6-connected in 3D, and 8-connected in 4D), ensuring that each region is processed separately. This ensures purely face-connected regions (not diagonally connected ones) are grouped, avoiding over-merging.

While the priority queue approach remains fundamentally greedy and does not guarantee a globally optimal solution, it effectively balances accuracy, scalability, and computational efficiency. This trade-off makes Greedy PQ highly practical for real-world applications where decomposition quality and speed are critical.

The heap-like structure used in the priority queue is a max-heap, a complete binary tree where the value of each node is greater than or equal to the values of its children, ensuring that the maximum element is always at the root [

31,

32]. In contrast, a min-heap is a complete binary tree where the value of each node is less than or equal to the values of its children, which ensures that the minimum element is always at the root [

31,

32]. In Python’s heapq library, the data structure is a min-heap. It can be used as a max-heap by negating the values pushed and popped.

The code runs a CCL to identify each connected foreground component and processes them independently. For each object C ⊆ I (where I is the index set of all valid coordinates), the goal is to decompose C into a set of axis-aligned n-cuboids {BC1, BC2, …}.

The Greedy PQ approach consists of two main parts: a priority queue of candidate seeds and finding the largest n-cuboid from a seed.

- 1.

Priority Queue of Candidate Seeds

- 1.1.

Initial Seeding

- -

Scan all coordinates x in the component C.

- -

For each unvisited x, compute the “largest” n-cuboid that can be formed starting at x (ignoring visits for the moment), and record its volume.

- -

Push (-volume, x) into a max-heap.

- 1.2.

Pop from the Heap

- -

Repeatedly pop the entry (-volume, x) corresponding to the “candidate seed” among unvisited points.

- -

If x has already been visited, skip it. Otherwise:

Recompute the largest n-cuboid from x using the current visited state.

If the resulting n-cuboid has positive volume and is still unvisited, mark it as a new labeled region.

- 2.

Largest n-Cuboid from a Seed

The function find_max_cuboid_priority (matrix, visited, start) finds the largest n-cuboid as follows:

- 2.1.

Initialize: end ← start.

- 2.2.

Check Expansions: In each iteration, the function checks if it can expand end by +1 in any dimension (checked in reverse order, but conceptually each dimension is tried).

- -

A dimension d is feasible if endd + 1 < sd and that “new slice” is still foreground (A = 1) and not visited.

- -

Among all feasible expansions in a single iteration, the code picks the one yielding the largest new volume.

- 2.3.

Repeat until no expansions are possible.

- 2.4.

Return the final end and the volume as shown in Equation (29):

The algorithm always picks the dimension yielding the most significant immediate volume increase in each step, so the resulting box is “locally maximal” from that seed.

The mathematical formulation of the Greedy PQ method is as follows:

- -

Let C ⊆ I be a connected component in the n-dimensional grid. We maintain a Boolean array W to mark visited points.

- -

To get priority n-cuboid from a seed point, define a function MaxCuboidPriority(A, W, start) → (end, volume):

- 1.

Initialize: end ← start.

- 2.

While expansions are possible:

- 2.1.

Let

be the set of feasible one-step expansions of end along any dimension

d, i.e.,

, provided as shown in Equation (30):

- 2.2.

If , stop.

- 2.3.

Otherwise, pick that maximizes .

- 2.4.

Set end ← .

- 3.

Compute volume with Equation (29).

- 4.

Return end and volume.

Here,

denotes the axis-aligned box as defined in Equation (31):

- -

In priority-based partitioning, we maintain a max-heap . For each unvisited x ∈ C:

- 1.

Compute (end, vol) = MaxCuboidPriority(M, W, x).

- 2.

Push (-vol, x) onto .

- -

Then, while is not empty:

- 1.

Pop (-vol, x) from .

- 2.

If W(x) = true, skip (already visited).

- 3.

Recompute MaxCuboidPriority (M, W, x) to get a possibly updated end and volume.

- 4.

If the new volume is zero, skip.

- 5.

If

BC(x, end) is unvisited, mark it visited and assign a new label as shown in Equation (32):

This process continues until is empty, and at that point, disjoint n-cuboids have covered all of C.

Key differences from other greedy methods include the priority queue and recalculation. Instead of scanning left-to-right or picking the single largest unvisited n-cuboid from among all points (like the pure greedy method might do), the technique stores a heap of “candidate expansions” for every point and always picks the most voluminous candidate first. This is a big difference from a purely sequential approach, and—in theory—it often leads to fewer, larger n-cuboids. However, it remains a greedy approach and therefore is not guaranteed to be globally optimal in all scenarios. Recalculation means that each time a seed is popped from the priority queue, the algorithm recomputes the largest valid n-cuboid starting from that point based on the current visited state to account for newly visited points. While this recalculation can improve accuracy, it adds overhead that scales with the number of seeds processed.

In practice, the number of recalculations can be substantial, especially in densely populated regions where many overlapping candidate n-cuboids compete for expansion. As a result, the time complexity tends to grow faster than linearly with input size, depending on object geometry and dimensionality. Despite its greedy nature, including a heap and dynamic recalculation introduces overhead that is not present in more straightforward methods. Still, the gain in accuracy and reduction in n-cuboid count often justifies the trade-off in theory. The algorithm scales well in low to moderate dimensions, but as n increases, the number of potential expansions and the volume of candidate n-cuboids proliferate, potentially impacting performance.

The method has a limitation arising from its inability to perform the greedy search bidirectionally along each axis. This is because bidirectional expansion is generally incompatible with a priority-queue-based greedy approach, particularly in geometric decomposition problems. A priority queue enforces a strict global ordering of expansion candidates based on a scalar score, such as volume, and always selects the highest-priority option. In contrast, bidirectional growth explores in two or more opposing directions simultaneously, introducing ambiguity: different directions may yield competing partial expansions that cannot be thoroughly evaluated or compared until both sides are grown. For example, in a 1D case, expanding left and right from an inner seed may yield segments of different lengths, and the total size of the combined interval is unknown until both directions are explored in full. A priority queue, however, requires a definitive score (such as volume) to rank candidates and cannot handle such incomplete, speculative expansions without breaking its greedy, one-step-at-a-time logic. As a result, combining bidirectional expansion with heap-based selection requires significant structural changes to the algorithm or forfeits the simplicity and performance of a priority-queue-driven strategy.

In summary, the Greedy PQ method is an iterative, priority-driven approach with the following three steps:

Identify seeds for each point in a connected component, approximate the largest local n-cuboid, and push it into a max-heap.

Pop the most voluminous candidate, recompute that n-cuboid given the current visited state, and if still valid, mark it as a labeled n-cuboid.

Repeat until the heap is empty.

Mathematically, it is a multi-step greedy process that frequently attempts to place the largest n-cuboid first among all unvisited seeds, recalculating expansions on demand.

The greedy method with a priority queue, like the pure greedy and backtracking-based variants, was implemented in [

34], using [

35] for numerical and matrix operations. Connected-component labeling (CCL) was performed via the labeling function in [

37], although a NumPy-only implementation is also provided in the

Supplementary Materials. The algorithm’s computational complexity is

O(

N log

N), where

N is the number of nonzero elements in the input bitmap. Despite its higher computational overhead than the pure greedy method, the approach remains efficient even as the image dimensionality n increases. Memory usage scales linearly with the size of the input and the auxiliary visited array, making it suitable for large-scale, high-dimensional data. However, in higher dimensions, the number of feasible expansion directions and the potential size of candidate n-cuboids increase, which can impact runtime performance despite the memory efficiency of the method.

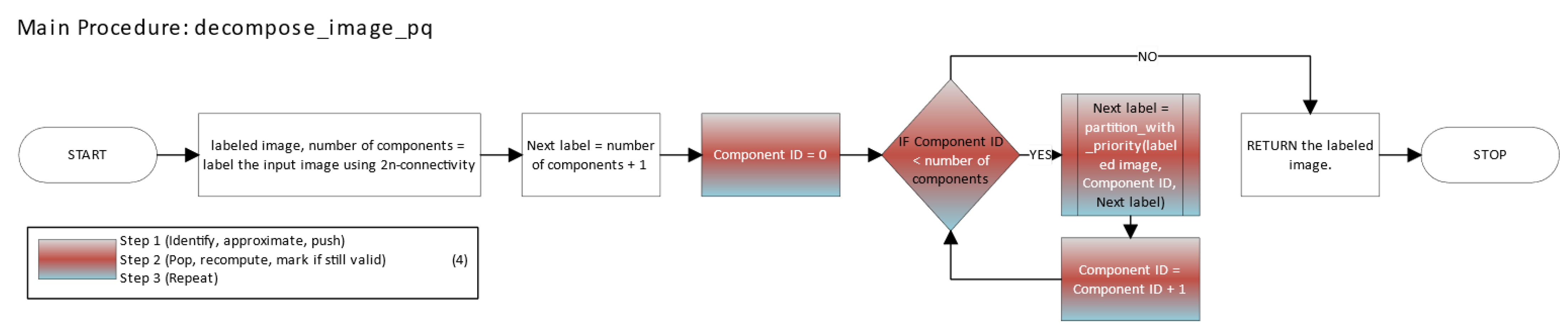

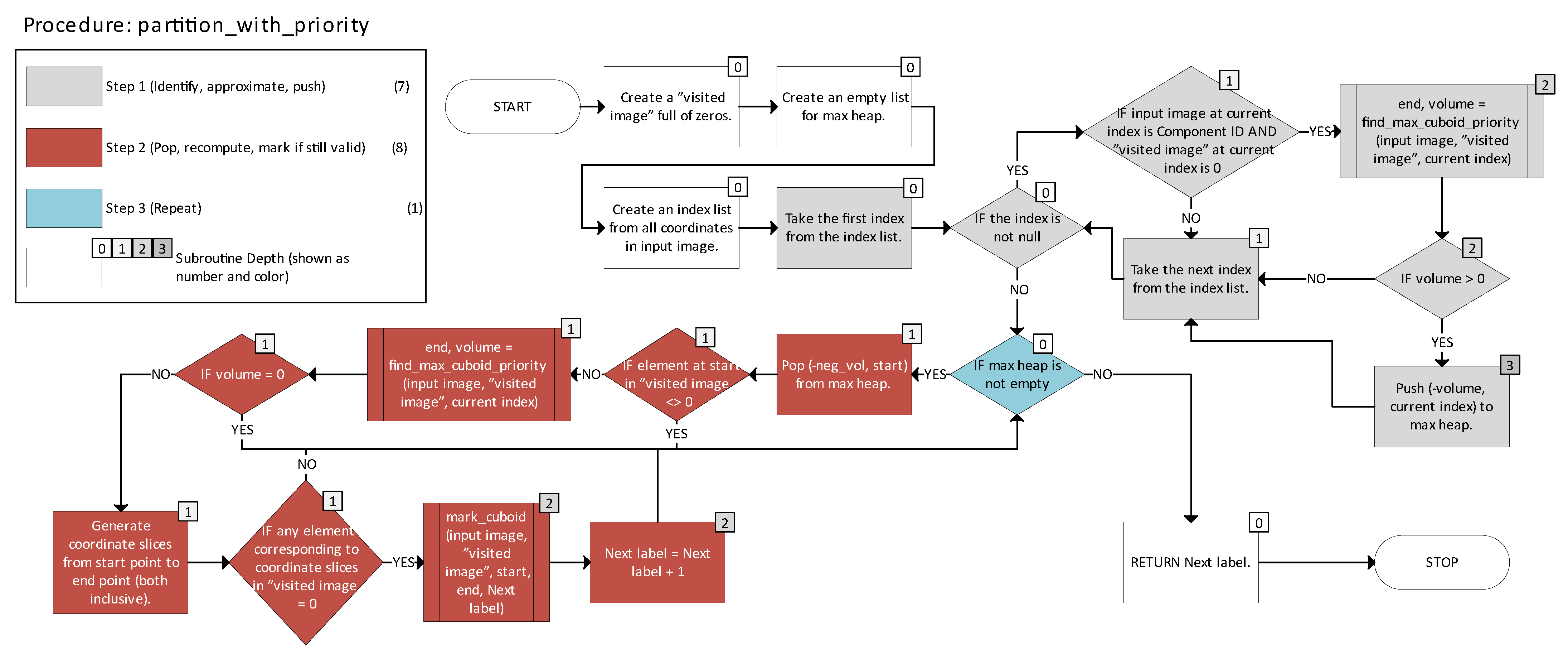

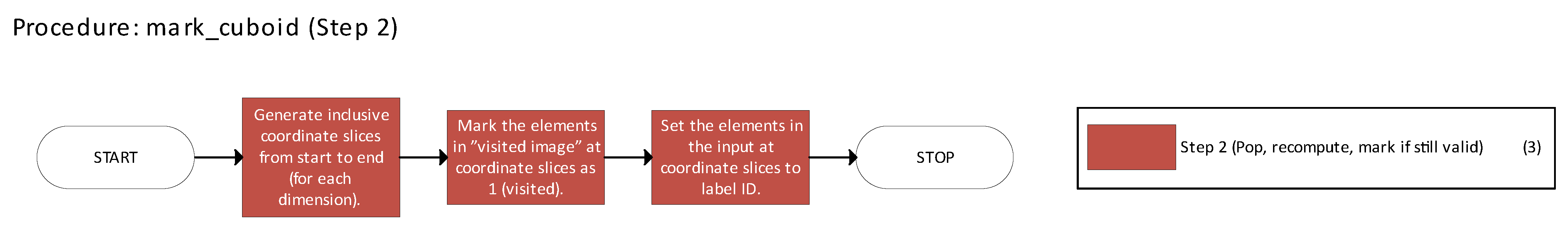

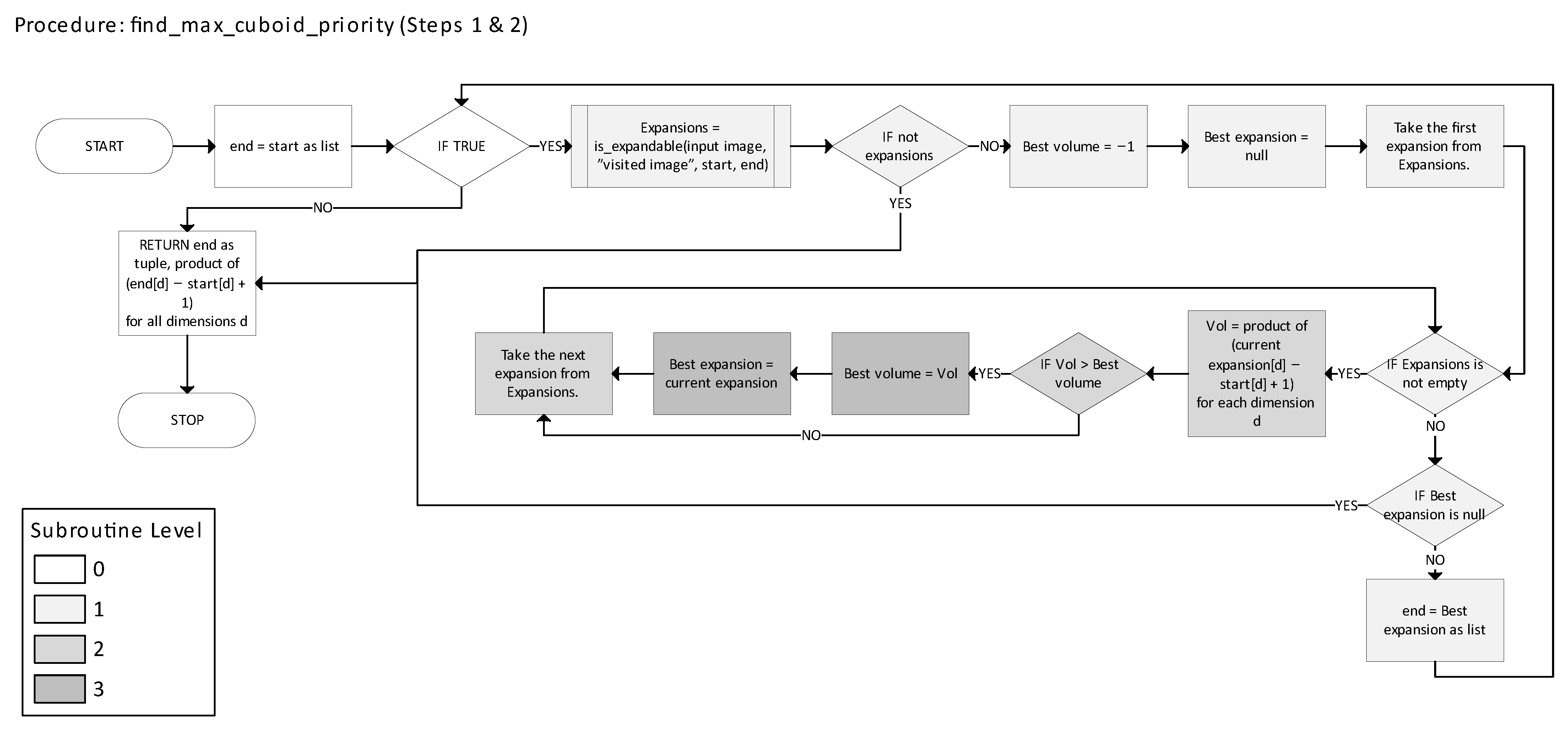

Figure 14 shows the main function of the Greedy PQ method.

Figure 15 depicts the partition_with_priority function, which handles the partitioning process and manages the priority queue to select n-cuboids.

Figure 16 illustrates the mark_cuboid function (not to be confused with the function of the same name from Greedy BT).

Figure 17 describes the find_max_cuboid_priority function, identifying the largest expandable n-cuboid from a given starting point.

Figure 18 presents the is_expandable function, which determines valid directions for n-cuboid expansion. In

Figure 14 and

Figure 17, Steps 1–3 correspond to the three core stages of the method described earlier as Identify–Approximate–Push, Pop–Recompute–Mark, and Repeat. In

Figure 14 and

Figure 16, each step is color-coded, and blocks spanning multiple steps are shown with a gradient fill. For example, the loop blocks in

Figure 14 that iterate through all labeled components use a three-color gradient, indicating these blocks belong to all three core steps. In

Figure 14 and

Figure 16, the number in parentheses within the legend denotes how many blocks are associated with each step. In

Figure 17 and

Figure 18, color shading reflects code nesting, with darker shades representing deeper indentation levels. In contrast, in

Figure 15, nesting depth is indicated by small, color-coded squares with numbers in the top-right corners of each block.

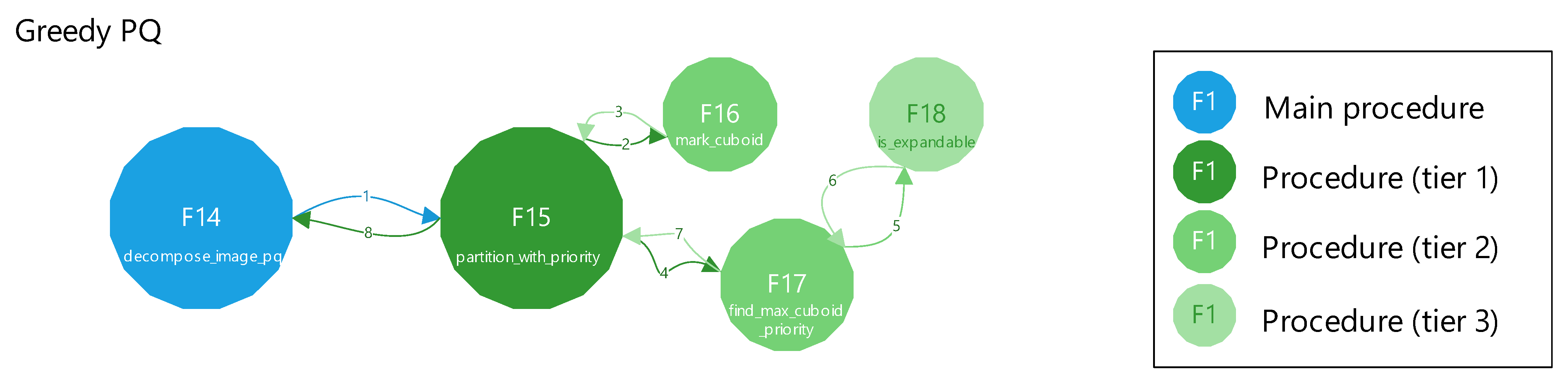

Figure 13 and

Figure 19 present a top-level function call graph for the Greedy PQ method. Each node is labeled by its figure number (F*), and the nodes are tiered based on their call depth from the main procedure. For example, reaching the is_expandable function (F18) from the main function decompose_image_pq (F14) requires three call levels. Arrows indicate execution flow in a clockwise, depth-first traversal. Superimposed numbers along the arrows indicate the sequence of function calls and returns; for example, the function in F15 is invoked at step 1, which subsequently calls F16 at step 2, and control eventually returns to F14 at step 8. It should be noted that when discussing

Figure 19, step refers to a function call or return; this differs from a procedure step defined above and used with

Figure 14 and

Figure 18.

2.2.4. Iterative ILP

The iterative integer linear programming approach (IILP) combines global optimization with incremental refinement. Traditional ILP solves a single global optimization problem but applying it directly to decompose high-dimensional binary shapes is often computationally infeasible due to the size and complexity of the solution space. Instead, iterative ILP divides the image into smaller subregions and solves an ILP subproblem at each iteration.

The algorithm generates a set of candidate n-cuboids covering the current foreground region at every step. These candidates are passed to an ILP solver, which selects a minimal subset that fully covers the area. After labeling the selected cuboids, the remaining foreground is updated, and the process repeats. A validation step ensures that all labeled components are valid n-cuboids; any invalid regions are reprocessed in a refinement stage.

This hybrid strategy balances the optimality of ILP with the scalability of iterative local refinement. It differs from traditional ILP in that it does not attempt to solve the entire decomposition in one pass. The term IILP distinguishes this iterative application of ILP from conventional single-shot ILP formulations.

High-Level Algorithm Overview

- 2.

The main decomposition loop is run after initialization as follows:

- ○

While working_copy still has foreground points (any value 1):

- 1.

Generate candidate n-cuboids from working_copy.

- 2.

Solve ILP subproblem on to pick a minimal subset of n-cuboids covering all 1s in working_copy.

- 3.

Label and remove: For each chosen n-cuboid , assign label , set these voxels to 0 in working_copy, and increment .

- 3.

After the main decomposition loop, a validation and refinement step proceeds as follows:

- ○

After no foreground remains in working_copy, check whether each label in labeled indeed corresponds to a single axis-aligned n-cuboid.

- ○

If any labeled region is not a perfect n-cuboid, isolate that region, re-run the decomposition, and then re-merge the results.

- ○

Keep iterating until all labeled regions pass validation (i.e., each label is a proper n-cuboid).

- 4.

Finally, the output of the method is returned as follows:

- ○

Return the final labeled array in which each non-zero label identifies a valid n-cuboid.

The core steps consist of candidate n-cuboid generation, solving the ILP subproblem, and iterating the image to cover the entire region as follows:

Denote the final set of candidates by . is the set of all (start, end) pairs that form a valid, axis-aligned n-cuboid of 1s that cannot be expanded further.

- 2.

Solving the ILP subproblem takes place in the function solve_ilp_subproblem (bitmap, candidates)

We want to cover all 1s in bitmap exactly once with the fewest n-cuboids from .

- 2.1.

Setup variables

- -

For each candidate , define a binary variable xc.

- -

xc = 1 means we select n-cuboid c in the decomposition.

- 2.2.

Define constraints

- -

Let P = {x ∈ I | bitmap(X) = 1} be the set of all points that need coverage.

- -

For each point x ∈ P, let .

- -

We require exactly one n-cuboid to cover

x as shown in Equation (33):

- 2.3.

Objective is expressed in Equation (34) as follows:

i.e., choose the fewest n-cuboids that cover all 1s exactly once.

- 2.4.

Solve using an ILP solver. The solver returns {c | xc = 1}.

- 2.5.

Result

- -

The set of chosen n-cuboids is an exact cover of all 1s in bitmap.

- 3.

Iterating the image to cover the entire region takes place in the function iterative_decomposition (image)

- 3.1.

Initialize

- -

working_copy ← A, labeled ← 0-array, .

- 3.2.

Repeat

- -

While working_copy still has 1s:

- 1.

Generate .

- 2.

If , break.

- 3.

Solve the ILP to get a minimal cover .

- 4.

For each n-cuboid :

- ○

Mark labeled(x) = for .

- ○

Set working_copy(x) = 0 in that region.

- ○

Increment .

- 3.3.

Validate

- -

Check that each label in labeled indeed forms a single axis-aligned n-cuboid. If not, let bad be the set of problematic labels.

- -

For each label b ∈ bad:

- 1.

Isolate that label’s region in a new working_copy.

- 2.

Re-run the candidate generation + ILP cover (generate_candidate_cuboids and solve_ilp_subproblem).

- 3.

Merge the newly labeled sub-decomposition back into labeled.

- -

Repeat until no invalid labels remain.

- 3.4.

Remap labels to a contiguous set {1, …, L}.

- 3.5.

Return labeled.

Candidate generation has potentially significant complexity, since it attempts expansions in all permutations of dimensions for each point. In ILP, each subproblem is a classic set cover or exact cover problem, which is NP-hard, but practical solvers can handle moderate sizes. If a labeled region fails to form a valid n-cuboid (e.g., due to disconnection or irregular shape), the method isolates and re-decomposes it, effectively performing a refinement pass.

Mathematically and logically, the correctness can be proven as follows:

- -

Each ILP subproblem ensures coverage of the “working copy” in two steps:

- ○

Firstly, the constraints for each x guarantee that every x is covered by precisely one n-cuboid in the chosen subset.

- ○

Secondly, the algorithm progresses towards the desired outcome by removing these elements from the working copy.

- -

Termination:

- ○

Each pass covers at least one 1. The number of 1s is finite, so they all get removed or re-labeled.

- ○

The validation step only triggers a sub-decomposition for “bad” labels; that sub-decomposition again covers a strictly smaller subset of points each time.

- ○

Hence, after finitely many re-labelings, no invalid labels remain.

- -

Disjointness:

- ○

The ILP enforces that each 1 is covered precisely once in that subproblem.

- ○

Across subproblems, once a set of points is labeled and removed, subsequent subproblems cannot reuse them.

Hence, the final labeling is a valid decomposition.

The Iterative ILP can be summarized into the following five steps:

Define a subproblem so that at each step, generate a set of candidate n-cuboids that completely covers the current subset of 1s.

Solve a minimal exact cover ILP to pick the smallest subset of n-cuboids.

Remove those covered 1s from the image and label them.

Validate whether each labeled region is a single, valid n-cuboid; if it is invalid, re-decompose that region.

Continue until all foreground points are covered and each label is valid.

While still potentially expensive, the iterative approach is typically more tractable than a single ILP. It systematically ensures holes and concavities are preserved and each label becomes a proper n-cuboid.

The IILP method was implemented in [

34], using [

35] for numerical and matrix operations. ILP subproblems were solved using [

38]. The total time complexity is approximately

O(

T ⋅ (

k ⋅

n! ⋅

n ⋅

D + ILP(

C,

k’))), where

T is the number of decomposition iterations,

k is the number of foreground elements, and

C is the number of n-cuboid candidates. Space complexity is

O(

N +

C ⋅

n), with

N the image size. In practice, performance depends heavily on the structure of the foreground and the effectiveness of n-cuboid pruning.

The implementation introduces several practical constraints not fully addressed in the theoretical model. Candidate generation explores all permutations of dimension orderings for each foreground element, introducing redundancy and increased computation, especially in high dimensions. While exact duplicates are filtered, semantically overlapping or nested n-cuboids are not explicitly removed. The validation step checks that each labeled region’s bounding box contains only its label and no background, effectively detecting internal voids or mislabelings. However, it does not explicitly verify geometric n-cuboid regularity (though this is partially ensured by the labeling uniformity and axis-aligned bounding box criteria). Additionally, when invalid labels are detected, the refinement process requires full reprocessing of those regions, which may add considerable overhead in large or fragmented images. Lastly, the ILP solver is invoked without early-stopping heuristics, which may limit scalability when subproblem complexity spikes unexpectedly.

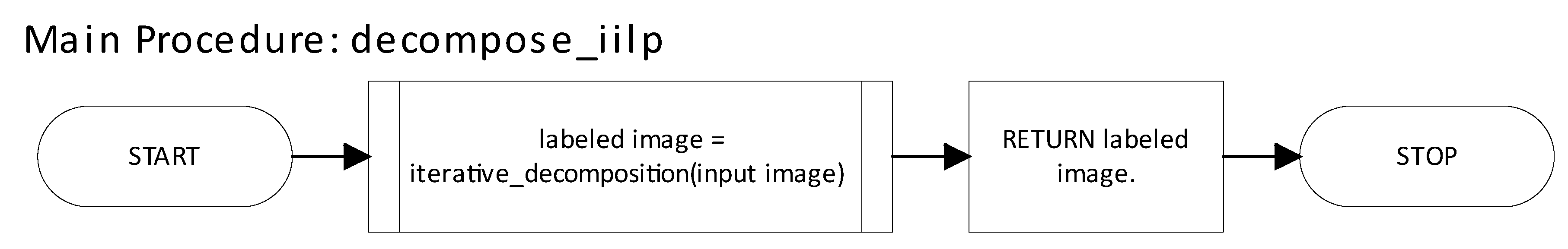

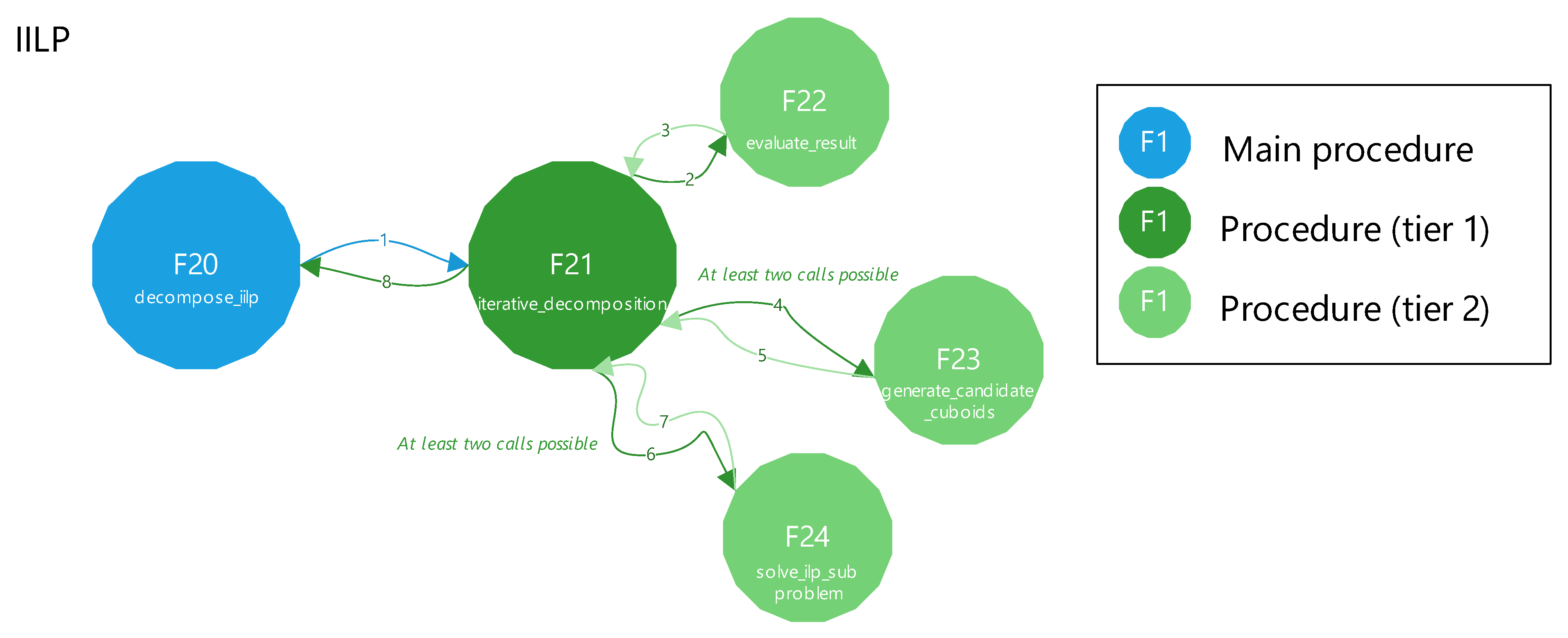

Figure 20 shows the main function decompose_iilp of the Python implementation. Its structure is simple: it invokes the subfunction iterative_decomposition and returns its result.

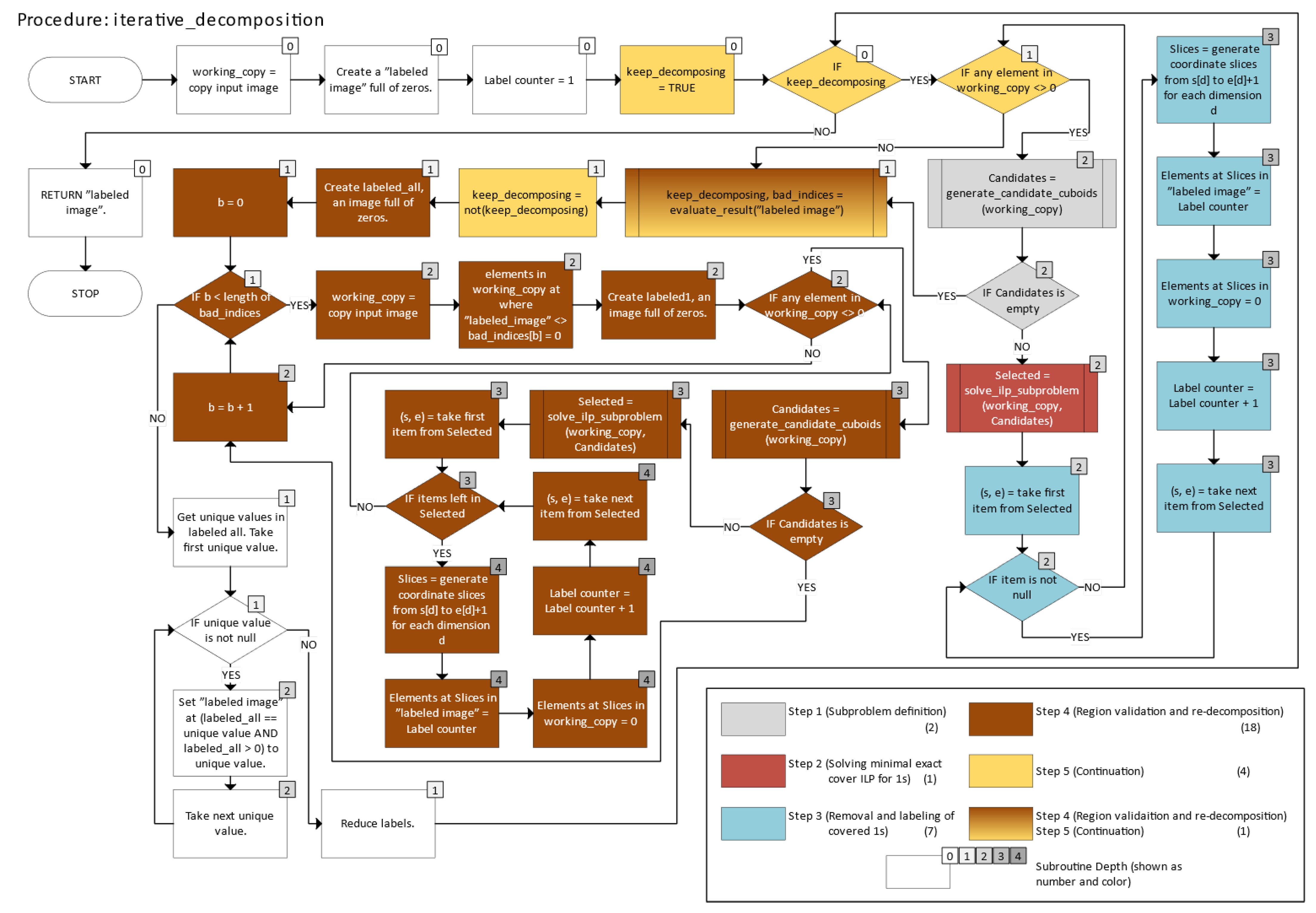

Figure 21 illustrates the iterative_decomposition function, which partitions the image into n-cuboids using the iterative ILP approach.

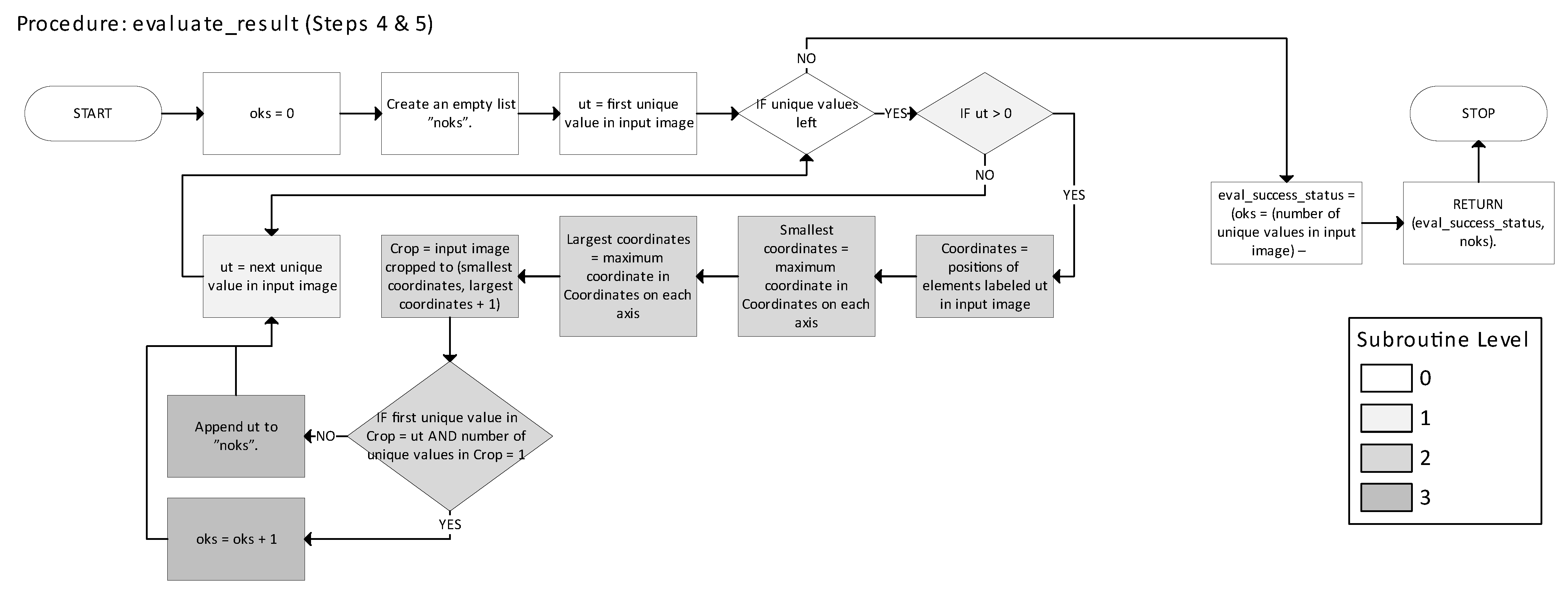

Figure 22 presents the evaluate_result function, which extends the validation procedure from [

27] by returning the labels of incorrectly extracted shapes for further reprocessing.

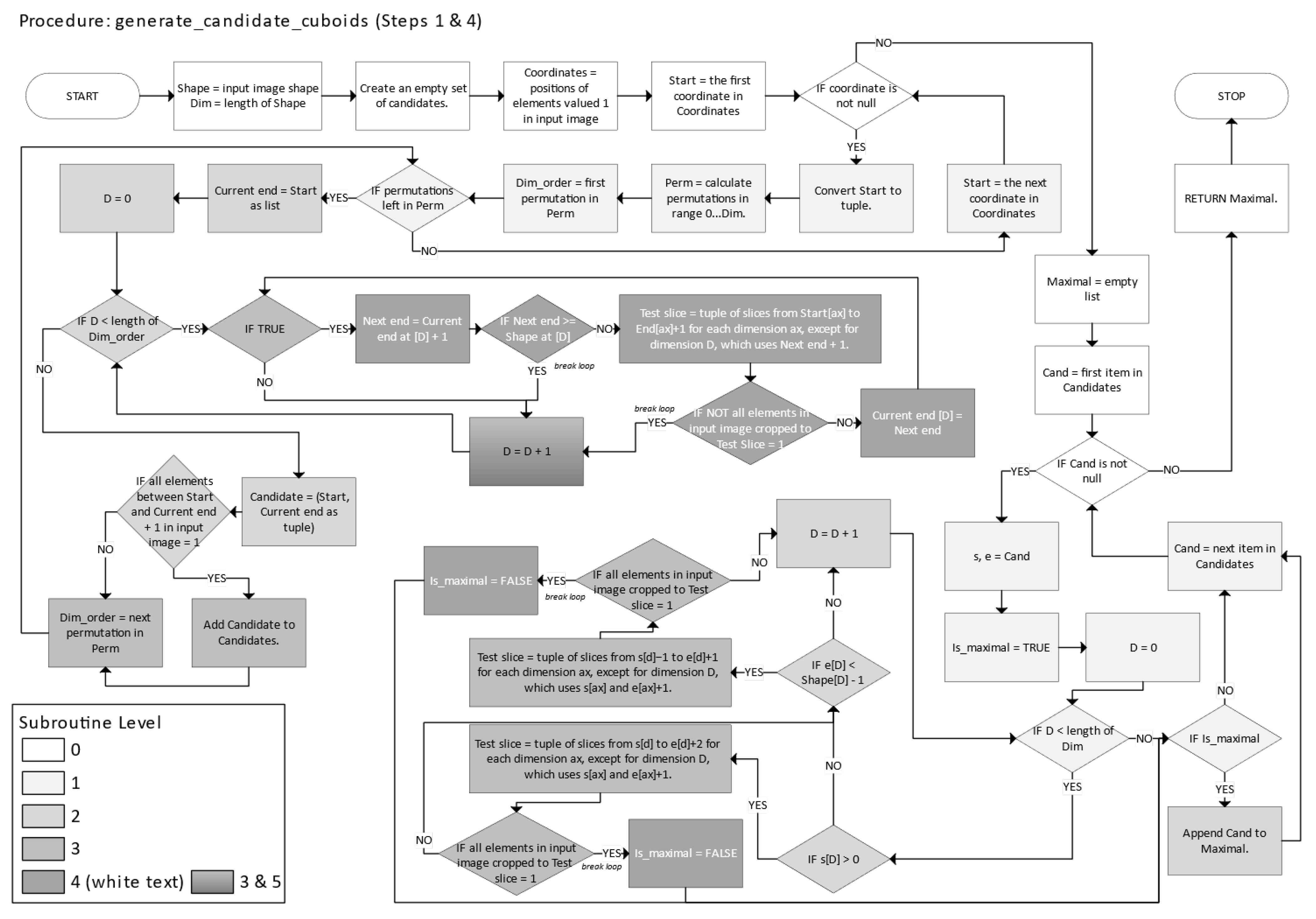

Figure 23 depicts the generate_candidate_cuboids function responsible for generating candidate n-cuboids for the ILP solver.

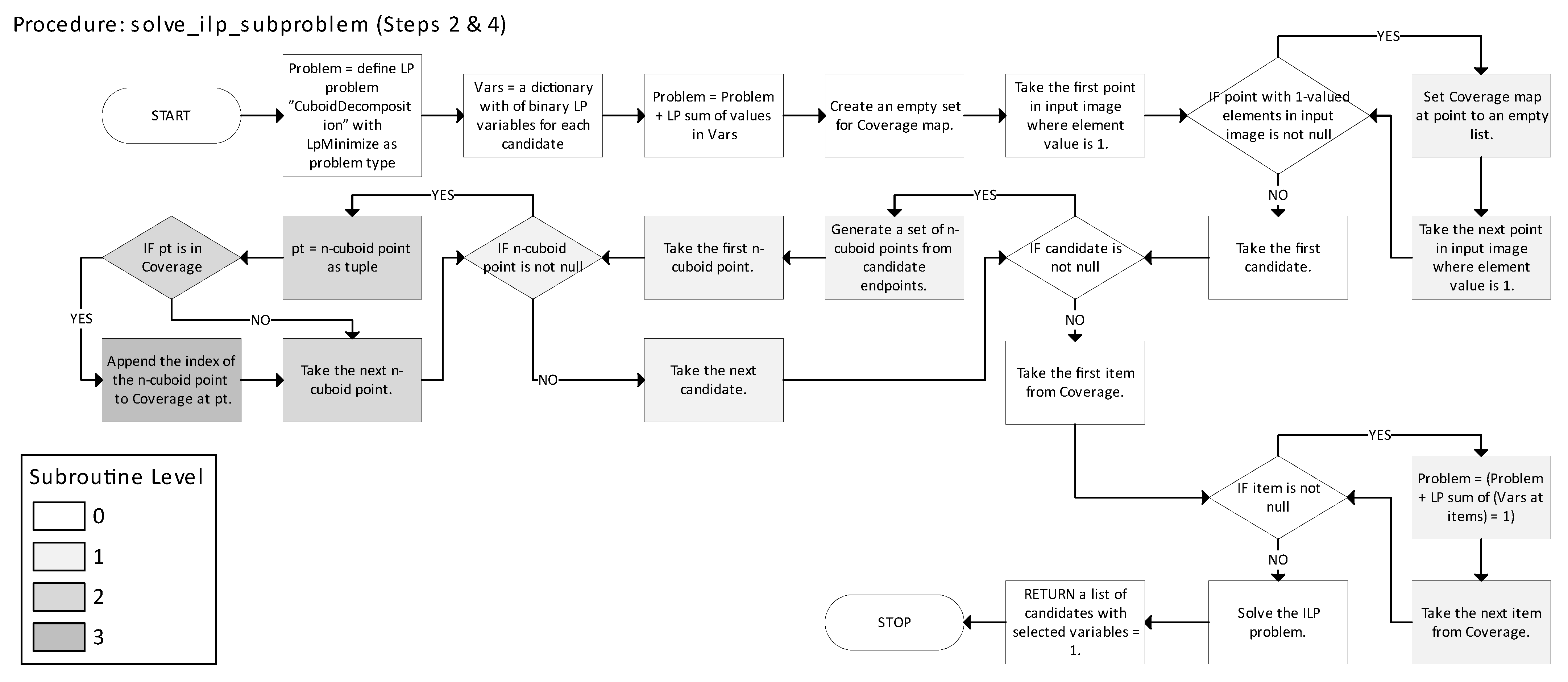

Figure 24 describes the solve_ilp_subproblem function, which applies the ILP technique to determine an optimal cover of the current image region. In

Figure 21 and

Figure 24, Steps 1–5 correspond to the five parts of the IILP strategy described earlier: (1) subproblem definition, (2) solving minimal exact cover for 1s, (3) removal and labeling of covered 1s, (4) region validation and re-decomposition, and (5) continuation (until completion). In

Figure 20 and

Figure 21, these steps are color-coded, and blocks involving multiple parts are shown with gradient fills. The number in parentheses in the legend indicates how many blocks belong to each step. In

Figure 22 and

Figure 24, color indicates nesting levels of code structure. Gradient shading in these figures signals that a block corresponds to multiple levels of nesting or indentation in the source code.

Figure 25 provides a top-level function call graph of the IILP method. Each node is labeled with an F* identifier referring to the corresponding figure. Functions are arranged in tiers based on the call depth from the main procedure. For example, reaching evaluate_result (F22) from decompose_iilp (F20) requires two levels of calls. Execution proceeds in a clockwise, depth-first order. Superimposed numbers indicate the sequence of function calls and returns and are placed directly on the arrows representing those transitions; for instance, F21 is invoked at step 1, which calls F22 at step 2, and control returns to F20 at step 8. It should be noted that when discussing

Figure 25, “steps” refers to the execution order in the top-level call graph. This differs from the five decomposition steps defined earlier and used in

Figure 21 and

Figure 24.

2.3. Testing

The methods were tested by running the dataset five times, recording the following metrics:

- -

Runtime in seconds;

- -

Label count (before and after optimization);

- -

Mean region size (before and after optimization);

- -

Label reduction count.

The average of each metric was computed for further analysis.

The Greedy PQ method used the label function in [

37] for connected-component labeling.

Each decomposition result underwent optimization and label reduction, as described in [

27].

- -

Optimization: Combine adjacent n-cuboids into a single, larger n-cuboid if the result remains a valid partition.

- -

Label reduction: Reassigned shape labels sequentially to eliminate gaps (e.g., if the original labels were 2, 4, and 5, the corresponding post-reduction values became 1, 2, and 3).

To validate partition correctness, the algorithm

Iterated through all labels,

Computed the axis-aligned bounding box for each labeled region, and

Verified that all elements within the bounding box were foreground pixels with the same label.

This validation procedure is documented and used in [

27].

In addition to the validation method described above, an internal visualization-based inspection was performed using a custom tool implemented with [

34,

35,

39,

40]. This step verified that the resulting n-cuboids fully covered the target regions without overlap or omission.

All tests were conducted on a Dell Latitude 7350 laptop running Windows 11 Enterprise.

2.4. The Use of Generative AI

Generative AI was used to generate and debug code and assist in mathematical formulation, data analysis, interpretation, and text editing, including grammar, spelling, punctuation, and formatting.

3. Results

Table 2 highlights the four methods (pure Greedy, Greedy BT, Greedy PQ, and IILP). The label counts, region sizes, and reductions were calculated according to the procedure described in [

27]. Next to each value, we place an icon to indicate the best result in time, label count, or mean region size, and the worst result in reductions. No icon is shown if all four methods perform equally in each category.

For example, in the first image, the clock beside the time of the pure greedy method indicates it was the fastest among the new techniques. No icons appear beside any label or mean region sizes because all methods operated equally in those categories. Meanwhile, the minus-sign icon beside Greedy PQ’s reduction count shows that it required a label reduction step, unlike the other techniques in this case.

Detailed runtime statistics, including standard deviations and minimum–maximum ranges for each image–method combination, are provided in

Table S1 in the

Supplementary Materials.

4. Discussion

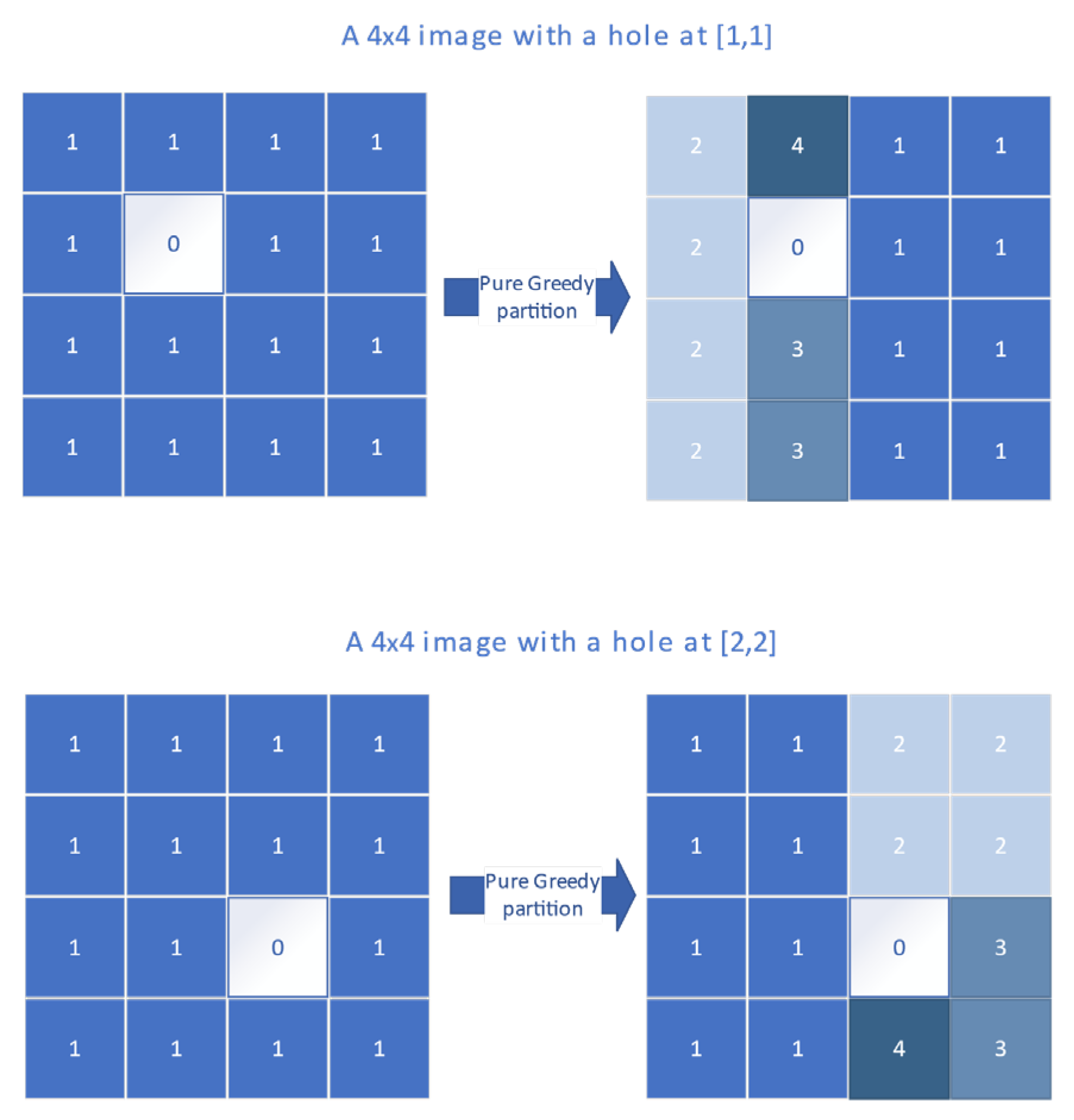

All four new methods produce the optimal solution on trivial or simple images (Box 9 × 9 × 9, Holed, Cross3D, Cross2D, Squares, Cubes, Hypershape, and Lines) and the intermediately complex image (Randomly Generated), differing only in runtime. However, pure greedy is the only one that consistently yields optimal results for more intricate shapes (Steps, the three Holed with Planes images).

Somewhat unexpectedly, the IILP method produced more final partitions than the purely greedy approach on more intricate images. While ILP is theoretically expected to match or outperform greedy methods, the iterative setup negates this advantage. The results suggest that iteration may cause the solver to become trapped in suboptimal solutions, especially under time constraints or with limited iteration budgets, thus sacrificing global optimality.

There are several runtime surprises; for example, Greedy BT took 98 s on the simple Box 9 × 9 × 9 image, while the pure greedy method (and Special and General I from the old techniques) completed the task in milliseconds. This discrepancy likely reflects the overhead of Greedy BT’s heuristics: bidirectional expansion, recursive DFS, prioritization by largest available expansion, upper-bound pruning, and caching. Yet, Greedy BT is not always the slowest—it performs well on some 2D and 3D shapes and the 4D Hypershape. The key factor appears to be how effectively the shape allows the search space to collapse through pruning. The method performs well if the object has large open regions or an easily partitionable structure. Otherwise, the branching factor may explode. This explains why Greedy BT is sometimes the fastest of the four new methods (all images in the dataset except Lines, Holed with Planes, Box 9 × 9 × 9) and sometimes impractical (all three Holed with Planes images, Box 9 × 9 × 9). Notably, while the method was the fastest on Steps, it yielded more n-cuboids than the other greedy approaches and IILP. The method’s sensitivity to shape geometry suggests its performance is highly nonlinear and difficult to predict. Greedy PQ was the fastest on all three Holed with Planes images, but nothing else.

IILP shows moderate performance in some cases but is often slower than average. (Meanwhile, out of the old methods, General II can also jump to multi-second times for shapes like Hypershape). It seems to be the slowest of the four new methods, except on dense images, where Greedy BT can be significantly slower. These spikes in runtimes reinforce that shape geometry drastically affects the branching factor for Greedy BT and the integer program’s complexity for IILP.

These trends are quantitatively reflected in the runtime variability reported in

Table S1, where several methods exhibit significantly higher standard deviations on specific shapes, reinforcing their sensitivity to geometric complexity and branching behavior. Notably, the pure greedy method showed the least variability in average runtime and standard deviations across the three Holed with Planes images, suggesting a degree of orientation robustness. In contrast, IILP, while not always the slowest overall, tended to have consistently high runtimes across images, yet demonstrated the least overall variability in the ratio between standard deviation and mean runtime.

Despite using a priority queue for more informed selection, Greedy PQ required label reduction for every test image – an undesirable trait, as it adds post-processing time and integration complexity. Surprisingly, even IILP required a label reduction step for Holed with Planes XY.

In some cases, greedy and Greedy PQ tie for the fewest partitions if label reduction is considered (Holed with Planes YZ/XZ, Steps). In others, Greedy BT is as good as these two approaches or yields the same result but faster (Holed, Cross3D/2D, Randomly Generated, Squares, Cubes, Hypershape, Lines). On Holed with Planes XY, the pure greedy method was the winner. Conversely, Greedy BT returned equal or worse results in significantly higher runtime than the other methods for Box 9 × 9 × 9 and all Holed with Planes variants. In Steps, Greedy BT lags in partition count, yet is the fastest in runtime. These patterns suggest that each method’s heuristics exploit different structural cues in data. No single method dominates clearly across all metrics–runtime, region size, or region count.

Although [

27] reports runtimes for shapes on multiple orientations, it does not explicitly examine the sensitivity of its methods to input rotation. This study shows that none of the tested algorithms, including ours, are strictly rotation-invariant. The pure greedy method was the only approach consistently producing optimal results for all Holed with Planes images, which depict the same object in different orientations. This suggests a degree of orientation independence. However, further testing with two asymmetric 2D images, one created by rotating the other by 180°, revealed that even this method is not fully invariant to orientation. While it still produced optimal partitions, the results differed (

Figure 26). Other methods showed similar orientation sensitivity.

Beyond their theoretical appeal, the proposed bitmap decomposition methods are relevant to practical systems in electronics, embedded vision, and computational geometry. Their ability to generate compact, disjoint, axis-aligned n-cuboid representations of binary data in any dimension makes them suitable for hardware-constrained applications such as FPGA-based image segmentation, on-chip spatial pattern-matching, and data partitioning for multicore hardware acceleration. In VLSI design automation, the fast decomposition of occupancy maps or logical regions can significantly reduce design complexity and improve simulation throughput. Similarly, in autonomous systems or robotics, efficiently parsing multidimensional sensor data into interpretable subregions allows for simplified control logic and real-time object tracking. These areas benefit from fast, predictable, and deterministic shape decomposition techniques that minimize the number of geometric primitives used, directly supporting storage and transmission efficiency.

A notable application is FPGA-based image segmentation, where hardware logic constraints require minimal and regularized data representations. Decomposing binary feature masks into a few axis-aligned regions enables compact lookup-table mapping, reducing logic depth and memory usage. Our methods—particularly the pure greedy algorithm—achieve high compression ratios without post-optimization, making them attractive for real-time edge processing.

A second application domain is multidimensional data preprocessing in computer vision and sensor fusion. Systems operating on 3D, 4D, or higher-dimensional arrays, such as those used in volumetric imaging, autonomous navigation, or medical diagnostics, require efficient region labeling before classification or feature extraction. The ability to produce compact region decompositions across high-dimensional spaces directly contributes to more efficient region-based CNN architectures or patch-wise statistical analysis. Our methods suit this setting well due to their generalizability and consistent performance across dimensions.

From a systems design perspective, bitmap decomposition also plays a key role in signal encoding, particularly in formats where binary masks represent activation patterns, attention regions, or sparse signals. By reducing such masks into a few axis-aligned primitives, our methods can serve as a lossless compression step, enabling bandwidth savings in communication-constrained systems, such as sensor networks or wireless embedded devices.

On-chip memory optimization is a persistent bottleneck in digital vision systems, especially those implemented in ASIC or SoC designs. The proposed algorithms reduce storage fragmentation by representing complex shapes as minimized sets of regular geometric blocks, which are easier to align in embedded DRAM or SRAM tiles. This simplification reduces memory access overhead and aligns with burst-friendly memory access patterns.

Finally, in circuit design automation and CAD tools, shape decomposition underpins layout partitioning for parallel synthesis, simulation, or routing. Efficiently dividing logic regions, thermal maps, or timing graphs into decomposable regions allows tools to assign these blocks to compute clusters, evaluate spatial constraints, or optimize for thermal isolation—all of which benefit from the deterministic and compact decomposition schemes proposed in this study.

The outcomes of the tests in this work can be summarized as follows:

- -

The pure greedy approach emerges as the most robust method, producing (near-) optimal partitions even on complex shapes, never being the slowest, and requiring no post-processing.

- -

Greedy BT is fast on some shapes, thanks to optimizations (especially, aggressive pruning). Yet, it suffers from high variance and long runtimes in dense or irregular shapes and does not guarantee better partitions than the other three methods.

- -

Greedy PQ and IILP each required label reduction in some cases, suggesting susceptibility to suboptimal paths or solver limitations. Greedy methods often outperformed the IILP in terms of speed and result optimality.

These results highlight a practical lesson: theoretically more powerful methods (e.g., with backtracking, prioritization, or ILP) do not always outperform a carefully designed, simple heuristic—in this case, a greedy method—tailored to the problem structure.

5. Limitations and Future Work

Despite their advantages, the proposed methods have limitations that merit further investigation.

First, the methods are specifically designed for bitmap (raster) data. Processing vector or mesh-based inputs requires rasterization or conversion, such as staircase triangulation or other pre-processing tools, such as those in [

41,

42,

43].

Second, the algorithms operate strictly on axis-aligned n-cuboids, limiting their applicability to arbitrarily rotated or skewed shapes. Adapting the methods to support oblique partitions via coordinate conversions, for instance, could enhance flexibility for applications in geometry processing or computer graphics.

Third, the proposed methods do not distinguish between nested or overlapping regions. Consequently, each shape in multi-shape images must be processed separately. This can be achieved as a preprocessing step using connected-component labeling (CCL) [

44], which assigns unique labels to connected foreground regions, enabling independent processing of each shape. A widely used implementation is available in scikit-image [

37]. By contrast, a NumPy-based alternative is provided in the

Supplementary Materials for educational use or cases where external dependencies should be avoided. Yet, it is not optimized for large-scale processing.

Fourth, the methods assume binary input (foreground vs. background). While this is sufficient for shape decomposition tasks, many real-world segmentation problems involve multi-class or multi-label data. A straightforward extension is to apply the decomposition pipeline separately to each labeled class, effectively treating each label as a unique binary mask. This enables per-class analysis while preserving the logic of the existing methods. Developing native support for multi-class decomposition could broaden applicability to domains such as medical imaging, materials science, and semantic scene analysis.

Fifth, while the methods generalize to arbitrary dimensionality n ∈ ℕ, they have only been empirically validated on synthetic datasets up to 4D. In theory, they correctly support 5D, 6D, and beyond. However, memory usage and processing time grow rapidly with increasing n, posing practical limitations. Real-world high-dimensional data, such as results from scientific simulation, sensor fusion, or medical imaging, would offer a valuable benchmark for testing scalability and robustness.

Sixth, the evaluation relied entirely on synthetic datasets due to the absence of publicly available real-world n-dimensional binary datasets with known ground-truth decompositions. As with most methods addressing n-dimensional binary image decomposition, real-world validation remains limited by the availability of such datasets. The test cases were constructed to capture various degrees and types of geometric and topological complexity (e.g., holed, concave, and rotated structures). Nonetheless, future validation of real-world data from fields such as robotics, volumetric imaging, or computational materials science would be beneficial for assessing generalization and impact.

Seventh, the greedy methods are heuristic and do not guarantee global optimality. Although no failure cases were observed in the tested shapes, it is theoretically possible that the pure greedy strategy may produce suboptimal decompositions in fragmented, adversarial, or finely tessellated shapes, especially when early large selections block better downstream configurations. Systematic exploration of such corner cases, including formal characterizations, is left as future work. Hybrid strategies incorporating delayed commitment, deferred decisions, or limited local backtracking may help address this limitation.

Eighth, the iterative ILP (IILP) method, while conceptually combining the optimality guarantees of ILP with iterative scalability, forfeits full optimality due to the subdivision of the problem into successive subproblems. IILP is best understood as an iterative wrapper around ILP subproblems, and its global result may differ from the solution to a single monolithic ILP. Moreover, it remains computationally expensive; in tests, it did not outperform simpler heuristics in practice.

Finally, Greedy PQ does not support bidirectional expansion due to its reliance on priority queues, which do not naturally accommodate reversibility. This limitation is structural and inherent to the algorithm design, and it is explained in more detail in

Section 2.2.3. Alternative global heuristics compatible with queued expansion strategies could be explored as an enhancement.

Additionally, future work may investigate exploring and incorporating custom seed-point strategies for shape-guided decomposition. This has potential utility in applications such as autonomous path planning, robot task decomposition, and efficient region-based scheduling.

Together, these methods define a rich space for future investigation at the intersection of efficient decomposition, optimality, and practical deployment.