Observation of Human–Robot Interactions at a Science Museum: A Dual-Level Analytical Approach

Abstract

1. Introduction

2. Related Works

3. Behavior Coding Scheme

3.1. Initial Identification of Visitor Behaviors

3.2. Refinement of Behavior Coding Scheme

3.3. Video Tagging

- The tagging process is based on the subject’s behavior. All actions become significant once the subject acknowledges the presence of the robot. Therefore, observations begin when the subject’s face is oriented toward the robot.

- The behavior code “Pass” was used when a visitor noticed the robot, as judged from the direction of the visitor’s head, but continued to move past it without halting.

- If the visitor followed the robot and eventually stopped while looking at the robot, “F-AP” was tagged sequentially. Conversely, if the visitor started to follow the robot but then diverged onto a different path, “F” was tagged.

- The behavior code “None” was tagged only after an approach or follow action. It marked cases in which no gesture or touch occurred after the visitor approached the robot, and it also denoted the absence of interaction or the interval between different interactions.

- Consecutive occurrences of the same interaction, even when separated by intervals, were treated as a single action and tagged once.
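The merging rule in the last item can be illustrated with a minimal Python sketch. The function names `collapse_tags` and `sequence_label`, the list-of-strings representation, and the use of `"N"` as the interval marker are assumptions made for illustration only; this is not the tagging tool used in the study.

```python
from typing import List

INTERVAL = "N"  # assumed marker for the "None" code


def _dedupe(codes: List[str]) -> List[str]:
    """Drop immediate repeats so each run of one code appears once."""
    out: List[str] = []
    for code in codes:
        if not out or out[-1] != code:
            out.append(code)
    return out


def collapse_tags(raw: List[str]) -> List[str]:
    """Merge repeated occurrences of the same interaction into one tag.

    An interval ("N") is dropped only when the codes on both sides of it
    are identical; an interval between different interactions is kept.
    """
    codes = _dedupe(raw)
    kept = [
        code
        for i, code in enumerate(codes)
        if not (
            code == INTERVAL
            and 0 < i < len(codes) - 1
            and codes[i - 1] == codes[i + 1]
        )
    ]
    return _dedupe(kept)


def sequence_label(raw: List[str]) -> str:
    """Join the collapsed tags with hyphens, as in the "F-AP" notation."""
    return "-".join(collapse_tags(raw))


# Hypothetical raw tags for one visitor: follow, approach, touch, pause, touch, gesture.
example = ["F", "AP", "T", "N", "T", "G"]
label = sequence_label(example)
```

Under these assumptions, the two touches separated by a pause are reduced to one "T", while a pause between a touch and a gesture would be preserved as "N".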

4. Observation Results

4.1. Environment

4.2. Group-Level Behavioral Observation

4.2.1. Gender Difference

4.2.2. Age Difference

4.3. Individual-Level Behavioral Observation

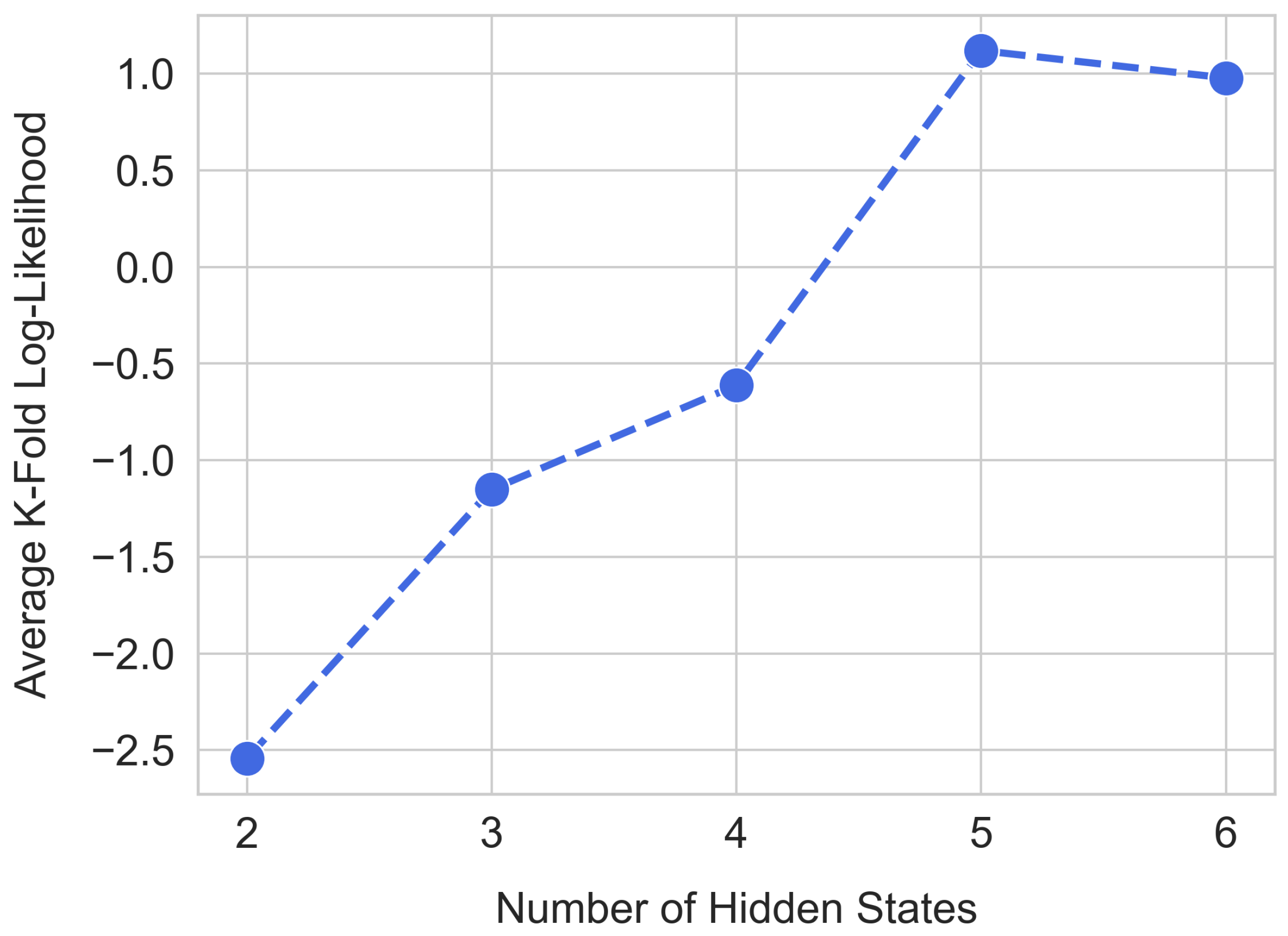

4.3.1. Model Selection and Data Preprocessing

4.3.2. Model Training

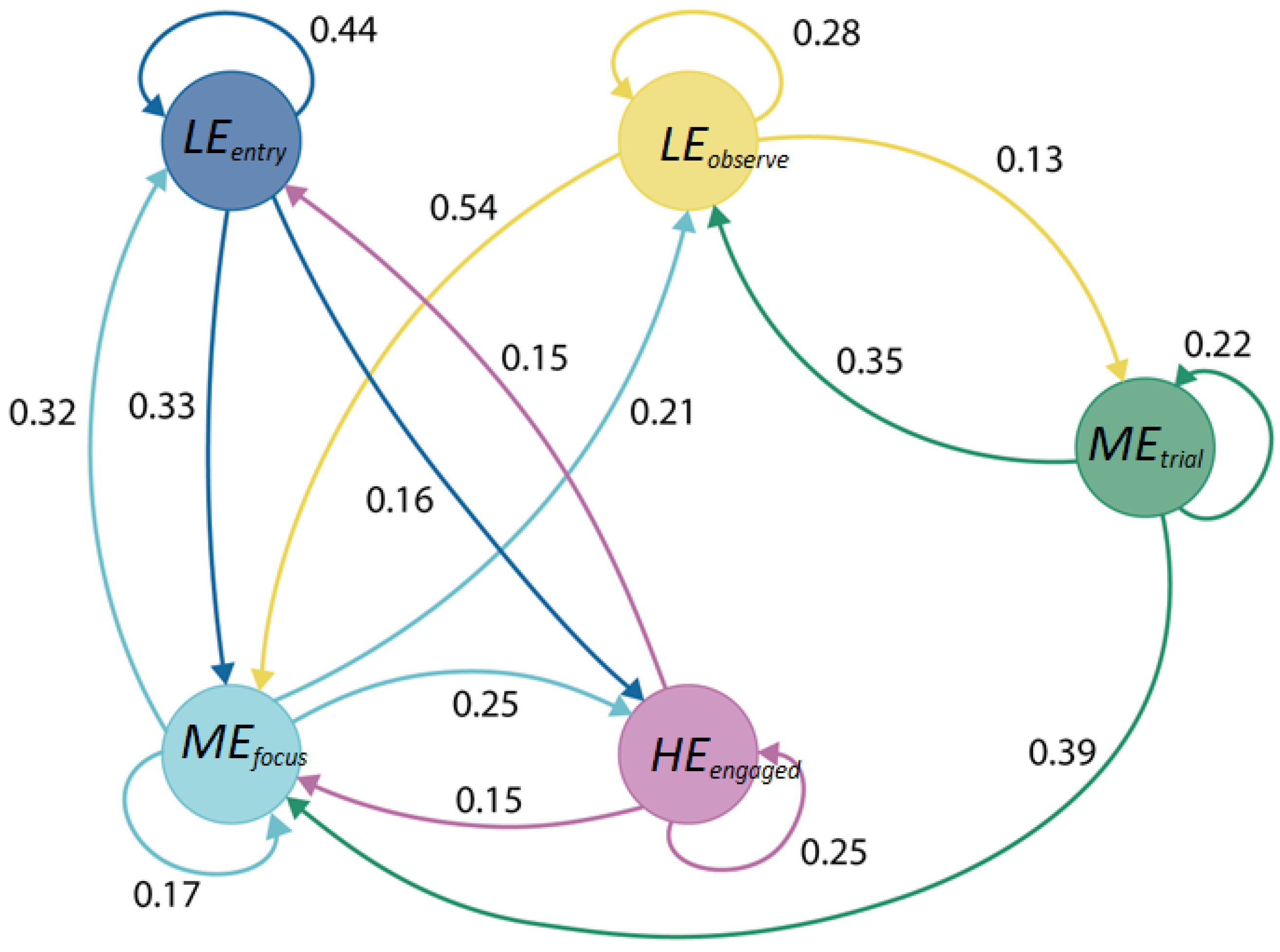

4.3.3. Model Interpretation

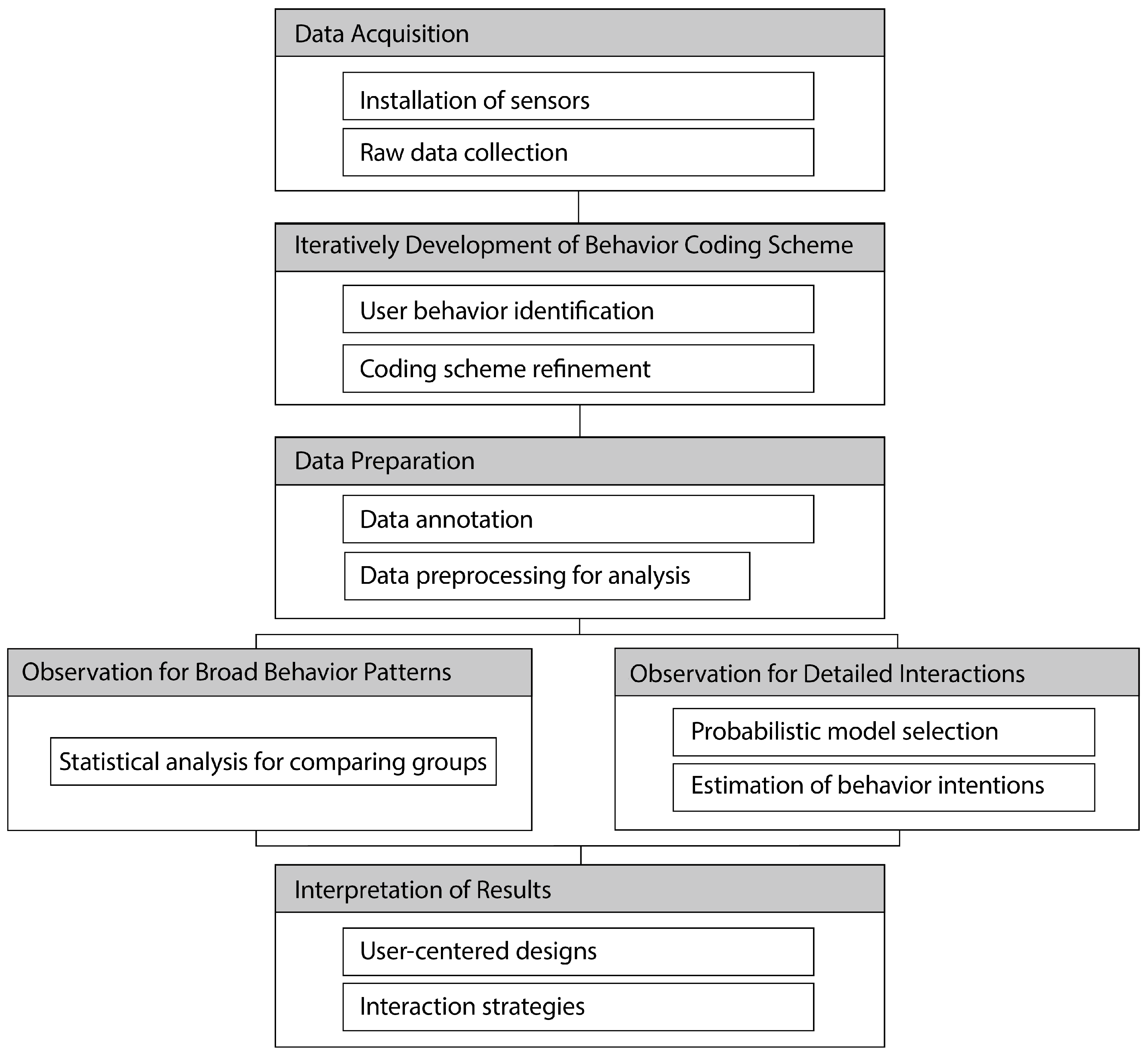

4.4. A Guide to Observation Studies Using Our Approach

4.4.1. Development of Behavior Coding Scheme

4.4.2. Group-Level Observation for Broad Behavior Patterns

4.4.3. Individual-Level Observation for Detailed Interaction Dynamics

5. Discussion

5.1. Behavior-Driven, User-Centered Design

5.2. Adaptive, Dynamic Interaction Strategies

5.3. Utility of Time-Based Engagement Modeling

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Types of Visitor Behavior | Snapshot from CCTV |
|---|---|
| A person avoiding the robot after recognizing where the robot is. |  |
| A person passing by the robot without knowing where the robot is. |  |
| A person greeting the robot by waving hands. |  |
| A person touching the screen after following the robot while it moves. |  |
| A person touching the screen after approaching the robot. |  |
| Two persons touching the screen after approaching the robot. |  |
| A person pointing to the screen to invite another person to touch it together. |  |

| Item | Descriptions |
|---|---|
| Grammar 1 | When the robot performs (action), the visitor (gazes/directs head) while (maintaining distance). |
| Grammar 2 | (Interaction attempt) is made. |

| Group | Code | Descriptions |
|---|---|---|
| Physical proximity | AP (approach) | Look at the robot’s location, approach it, and stop in front of it. |
| Physical proximity | P (pass) | When the robot is stationary, look at it and immediately walk past it. |
| Physical proximity | AV (avoid) | When the robot is moving, step aside in the direction it is heading. |
| Physical proximity | F (follow) | Follow the robot as it moves in the same direction. |
| Interaction attempts | T (touch) | Touch the robot’s screen or body. |
| Interaction attempts | G (gesture) | Make gestures toward the robot (e.g., waving, nodding, or raising the arms). |
| Interaction attempts | N (none) | Remain still and do nothing to interact with the robot. |
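For readers who want to work with the coding scheme programmatically, the table can be encoded as a small lookup structure. The sketch below is one possible representation; the names `CODING_SCHEME`, `group_of`, and `validate_tags` are hypothetical and are not part of the original study.

```python
from typing import Dict, List, Tuple

# Code -> (behavior name, group), transcribed from the coding scheme table.
CODING_SCHEME: Dict[str, Tuple[str, str]] = {
    "AP": ("approach", "Physical proximity"),
    "P": ("pass", "Physical proximity"),
    "AV": ("avoid", "Physical proximity"),
    "F": ("follow", "Physical proximity"),
    "T": ("touch", "Interaction attempts"),
    "G": ("gesture", "Interaction attempts"),
    "N": ("none", "Interaction attempts"),
}


def group_of(code: str) -> str:
    """Return the behavior group a code belongs to."""
    return CODING_SCHEME[code][1]


def validate_tags(tags: List[str]) -> List[str]:
    """Return the tags that are not defined in the coding scheme."""
    return [tag for tag in tags if tag not in CODING_SCHEME]


# Hypothetical tagged sequence containing one typo ("TT").
unknown = validate_tags(["F", "AP", "TT", "G"])
```

A check of this kind can catch mistyped codes before the tagged sequences are passed to later analysis steps.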

| Factors | Test Results |
|---|---|
| Percent Agreement (%) | 84.19244 |
| Scott’s Pi | 0.7998 |
| Cohen’s Kappa | 0.7998 |
| Krippendorff’s Alpha (Nominal) | 0.7998 |
| Number of Agreements | 980 |
| Number of Disagreements | 184 |
| Number of Cases | 1164 |
| Number of Decisions | 2328 |
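The percent agreement in the table follows directly from the counts: 980 agreements out of 1164 cases, with 2 × 1164 = 2328 coding decisions across two coders. The Python sketch below reproduces that figure and shows how Cohen's kappa is computed from two coders' labels. The function names and the four-item toy label lists are hypothetical; the study's raw tags are not reproduced here, so the kappa value in the table is not recomputed.

```python
from collections import Counter
from typing import Sequence


def percent_agreement(agreements: int, cases: int) -> float:
    """Share of cases on which both coders assigned the same code, in percent."""
    return 100.0 * agreements / cases


def cohen_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Cohen's kappa: (p_o - p_e) / (1 - p_e) for two coders, nominal codes."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of cases.")
    n = len(coder_a)
    # Observed agreement: fraction of cases with identical codes.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected chance agreement from each coder's own code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (p_o - p_e) / (1.0 - p_e)


# Counts taken from the table above.
pa = percent_agreement(980, 1164)

# Hypothetical toy labels, for illustration only.
toy_a = ["AP", "AP", "T", "N"]
toy_b = ["AP", "T", "T", "N"]
toy_kappa = cohen_kappa(toy_a, toy_b)
```

Kappa corrects the raw agreement for the agreement expected by chance, which is why it is lower than the percent agreement when a few codes dominate the data.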

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yoon, H.; Shim, G.; Lee, H.; Kim, M.-G.; Kim, S. Observation of Human–Robot Interactions at a Science Museum: A Dual-Level Analytical Approach. Electronics 2025, 14, 2368. https://doi.org/10.3390/electronics14122368