Abstract

Cohesive human–robot collaboration can be achieved through seamless communication between human and robot partners. We posit that the design of human–robot communication (HRCom) can take inspiration from human communication to create more intuitive systems. A key component of HRCom systems is perception models developed using machine learning. Being data-driven, these models suffer from a dearth of comprehensive, labelled datasets, while models trained on standard, publicly available datasets do not generalize well to application-specific scenarios. Complex interactions and real-world variability lead to shifts in data that require the models to undergo domain adaptation. Existing domain adaptation techniques do not account for incommensurable modes of communication between humans and robot perception systems. To address these challenges, a novel framework is presented that leverages off-the-shelf domain adaptation techniques and uses statistical measures to start and stop the training of models when they encounter domain-shifted data. Statistically informed multimodal (domain adaptation by transfer) learning (SIMLea) takes inspiration from human communication and uses human feedback to auto-label data for iterative domain adaptation. The framework can handle incommensurable multimodal inputs, is mode and model agnostic, and allows statistically informed extension of datasets, leading to more intuitive and naturalistic HRCom systems.

1. Introduction

While automation led to the development of robots (and other embodied computer systems) that perform repetitive, non-ergonomic, and dangerous jobs in the industrial sector [1], human operators remain unparalleled in carrying out precise tasks that require dexterity and flexibility. The complementary capabilities of human and robot agents form the basis for designing shared workspaces in which the two types of agents collaborate. The ultimate goal of human–robot communication (HRCom) is to achieve the seamless team dynamics and communication fluency of an all-human team, as pursued in the broader field of human–robot collaboration (HRC) [2,3]. Our previous work [4], which critiqued HRCom against natural human communication through the lens of complexity theory, argued that HRCom lacks two important characteristics: feedback and multiplicity. Multiplicity refers to the variety of cultures, languages, and traditions that leads to large variability in human communication behaviour, while feedback is the circularity of actions in communication that is used to regulate the agents' actions. Robot perception systems developed using supervised learning (SL) and deep convolutional neural networks (CNNs) must be able to handle such variability in human communication data but fail to do so because of shortcomings in how SL-based models are trained and developed. This paper aims to mitigate some of these challenges through a framework inspired by characteristics of human communication such as multiplicity and feedback.

Challenges to SL in the context of Human–Robot Communication: Supervised learning models are predominantly trained under the assumption that they will operate in a closed-world scenario, i.e., that the test data they encounter will be drawn from the same distribution as the data on which they were trained [3]. Such data are referred to as in-distribution (ID), but real-world applications, with the dynamics and uncertainties of a continually changing environment, seldom generate ID data. Instead, test data are often drawn from a distribution different from that of the source, i.e., from a domain-shifted or out-of-distribution (OOD) distribution. This open-world setting [5] typically leads to poor model performance, even when the model is well-trained on ID data. Distributional shifts can take two forms: the perception system may encounter an instance or object of a class it has not been trained on (semantic shift), or instances of the trained classes drawn from a changed distribution (covariate shift). This work deals only with covariate shift, hereon referred to as ‘shift’. Covariate-shifted data lead to degraded prediction accuracy. Updating models with shifted data faces the additional challenge that, being data-driven, deep CNNs require large amounts of labelled data. Thus, labelling new shifted data every time the perception scene changes is a very time- and resource-consuming endeavour.

To alleviate the challenges posed by shifted data and by the high cost of gathering and labelling data for re-training robot perception models, this work develops a novel framework that leverages existing machine-learning domain adaptation techniques to ensure that the robot perception model performs as well in the real world as it does in the hermetic lab conditions in which it was developed. In particular, the framework aims to increase the model's specificity to data from the human(s) interacting with it within that application. It takes inspiration from natural human communication, uses real-time human feedback to auto-label shifted data, and uses computational resources for re-training only when needed. The framework is primarily designed for “industrial” systems since, in industrial settings, communication is likely to consist mostly of short commands, and nuances of emotion in communication signals need not be dealt with.

The paper is organised as follows: Section 2 contains a discussion on related works, followed by the methodology in Section 3, which presents the contributions, assumptions, design considerations of the framework, and then the framework. Section 4 presents the application of the framework to a communication task (hand gestures), Section 5 contains several studies carried out in the context of this task, and Section 6 ends the paper with conclusions and discussions on future works.

2. Background and Related Works

The dearth of labelled datasets for training has been effectively tackled using transfer learning (TL), wherein a pre-trained model is used as the starting point for training on a much smaller labelled dataset. This is an extremely common step in deep learning, the idea being to re-use a model that has already learned general features, such as edges, shapes, and colours, to improve computational efficiency. ImageNet [6], with 1000 object classes, 1,281,167 training images, 50,000 validation images, and 100,000 test images, is a typical dataset used for this purpose.

Currently, machine-learning (ML) models detect and classify multimodal inputs by learning a generic feature representation from a large dataset, such as ImageNet, with over a million labelled images, or MS COCO [7], and then fine-tuning it for the specific application. This pipeline is followed for almost all supervised perception tasks, such as semantic segmentation, object detection, and pose estimation. Neural network architectures have been developed to leverage these features and achieve high accuracies on ID data.

Domain adaptation (DA) is a class of techniques that aims to align features between a labelled source domain and a (typically unlabelled) target domain so as to increase the performance of the model in both domains. Essentially, DA is a special case of TL. When the target domain is fully annotated, the setting is called supervised DA. In [8], the researchers introduced a classification and contrastive semantic alignment loss that guides samples from different domains but of the same class to map close together in the feature space created from the input data. In unsupervised DA (UDA), labels from the target domain are completely missing. Ref. [9] developed a methodology based on paired RGB images in both the source and target domains. In another study, the authors proposed a novel residual transfer network to simultaneously learn adaptive classifiers and transferable features [10], assuming that the source and target classifiers differ by a small residual function.

One class of DA techniques, called iterative algorithms, aims to “auto-label” target domains iteratively. Co-training for domain adaptation (CODA) was presented in [11]: it slowly adapts the training set from the source to the target domain by including the target instances with the highest confidence scores. Co-training [12], as an example of unsupervised DA, assumes the existence of two separate views of a single object and one trained classifier per view. At each iteration, only the inputs whose confidence score exceeds the threshold for exactly one of the two classifiers are moved to the training set; thus, one classifier provides labels for data on which the other is not confident. CODA does the same by training two classifiers on different sets of features of the same view.
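The confidence-based selection step shared by co-training and CODA can be sketched as follows. This is a minimal NumPy illustration, not the implementation from [11] or [12]: the function name `select_confident_disagreements`, the 0.9 threshold, and the representation of each classifier's output as rows of class probabilities are assumptions.

```python
import numpy as np


def select_confident_disagreements(probs_a, probs_b, threshold=0.9):
    """Pick unlabelled samples that exactly one of two classifiers is confident about.

    probs_a, probs_b: arrays of shape (n_samples, n_classes) holding each
    classifier's class probabilities for the same unlabelled inputs.
    Returns the selected indices and the pseudo-labels supplied by the
    confident classifier. The threshold value is a hypothetical choice.
    """
    a_confident = probs_a.max(axis=1) >= threshold
    b_confident = probs_b.max(axis=1) >= threshold
    only_a = a_confident & ~b_confident  # A labels data that B is unsure about
    only_b = b_confident & ~a_confident  # B labels data that A is unsure about
    selected = np.flatnonzero(only_a | only_b)
    # Take the label from whichever classifier was the confident one.
    labels = np.where(only_a, probs_a.argmax(axis=1), probs_b.argmax(axis=1))
    return selected, labels[selected]


probs_a = np.array([[0.95, 0.05], [0.60, 0.40], [0.97, 0.03], [0.50, 0.50]])
probs_b = np.array([[0.20, 0.80], [0.05, 0.95], [0.98, 0.02], [0.55, 0.45]])
idx, pseudo = select_confident_disagreements(probs_a, probs_b)
print(idx, pseudo)  # [0 1] [0 1]
```

In this sketch, samples on which both classifiers are already confident (index 2) or neither is confident (index 3) are skipped.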

The discussion above shows that if “paired” sources of data exist, or multiple views of the same object or scene can be used, then off-the-shelf domain adaptation methods may be used to enrich the model with shifted data. Therein lies the challenge, since such extensive, synchronized, labelled data are not available for HRCom. Additionally, multimodal data are often incommensurable, as in the case of facial expressions and voice commands, or hand gestures and voice commands, and are thus difficult to use as synchronized datasets for model training. In such cases, an off-the-shelf application of domain adaptation techniques may not be feasible. This work focuses on cases where incommensurable modes of communication are present and standard DA algorithms cannot be used to update the model.

3. Methodology

In order to carry out domain adaptation, the framework must detect domain-shifted data. These shifted or out-of-distribution (OOD) data are unlabelled. Where labelled test data are available, SoftMax probabilities are used to evaluate model performance. For covariate-shifted data, however, the literature [13] indicates that the SoftMax function in neural network classifiers leads to OOD inputs being classified with high confidence. Therefore, statistical measures for OOD detection must be used in conjunction with heuristic decisions to identify OOD data [14]. Our framework uses this analysis to meet the design requirement of deciding when to start and stop training the model on OOD data. A similarity metric (S) is used to quantify and visualize the covariate domain shift that occurs when the model encounters new (user) data. This is elaborated upon in Section 3.4.
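A small numerical illustration of why SoftMax confidence is a weak OOD signal: SoftMax depends only on the differences between logits, so scaling the logits up pushes the top probability towards 1 regardless of whether the input resembles the training data. The logit values below are hypothetical and do not come from the paper's models.

```python
import numpy as np


def softmax(logits):
    """Numerically stable SoftMax over a 1-D array of logits."""
    shifted = logits - logits.max()
    exp = np.exp(shifted)
    return exp / exp.sum()


# Hypothetical logits for an in-distribution input.
logits_id = np.array([4.0, 1.0, 0.5])
# Hypothetical logits for a shifted input whose activations happen to be larger:
# the ranking of classes is unchanged, only the scale grows.
logits_shifted = 3.0 * logits_id

conf_id = softmax(logits_id).max()
conf_shifted = softmax(logits_shifted).max()
print(f"{conf_id:.3f} {conf_shifted:.5f}")  # 0.926 0.99985
```

A threshold on the maximum SoftMax probability would therefore accept this shifted input even more readily than the in-distribution one, which is why statistical measures are used instead.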

The shortcomings of supervised learning (SL) for HRCom include, inter alia, the lack of large, labelled datasets; even where labelled datasets are available, the paired or otherwise multi-view labelled datasets needed to handle domain shifts are challenging to obtain. Thus, unlabelled data of the new domain must be used in conjunction with labelled data to adapt to the new domain. As the human or the work-cell environment changes, the composition of the scene changes, leading to a shift in domain. To keep the data drift caused by the dynamic environment from turning into calibration drift, the ML model must learn continuously [15], which requires the framework to be iterative. The cost of labelling and training prohibits a constant state of online learning, something that generalization and adaptation techniques do not take into consideration. In technical terms, practitioners must stop training once the model reaches an appropriate minimum; otherwise, the error begins to rise again. Thus, the adaptation framework must track when to start and stop training the model. Our previous work [16] presented iterative transfer learning with feedback-generated labels using PF-HRCom, but learning was verified in a supervised manner using labelled test data. Such data are not always available, so an unsupervised formulation is needed to verify that the model has learnt the shifted data. These design considerations informed the development of the novel framework presented in this paper.

3.1. Contributions

The literature describes human–robot communication (HRCom) achieved through verbal speech, gestural signals, haptic communication, and screen-based interfaces [2,17,18]. According to the scoping review in [17], operators in industry establish workplace routines for communication, which need to be updated when robots are introduced; in HRCom, such routines are established through robot perception systems. In an HRCom setup, a covariate shift in the data distribution may arise from a change in any component of the scene (human or environment) or in the sensor capturing the information. Instead of rejecting such shifted observations, the communication model must be updated with the typical behaviours of the human(s) it encounters. In other words, much like the adjustment behaviours in an all-human team, the models must be adapted, or “personalized”, to the behaviour of the human partner for a more humanistic design of industrial systems.

With these considerations in mind, and given the shortcomings of current DA algorithms, this paper proposes a novel framework for human–robot communication, framed as a use case of domain adaptation: SIMLea, statistically informed multimodal (domain adaptation by transfer) learning. Its contributions are as follows.

- SIMLea leverages large, publicly available multimodal datasets and high-accuracy models trained on them to provide feedback-driven labelling of shifted data. Using real user feedback to auto-label user data during operation mimics a key component of natural human communication, in which feedback is used to continuously update the knowledge associated with the shared task and its status, and should reduce mislabelling. This allows the model's learnt feature representations to be extended with those of new, covariate domain-shifted data and the model to be personalized to the collaborating human, making communication more natural for the human.

- SIMLea is designed to use feedback from incommensurable modes of communication.

- A statistical measure of similarity drives the adaptation framework, so that it learns only relevant data and starts and stops the computationally heavy learning process only when required. The implementation of this aspect is presented in Section 5.2.

- SIMLea allows empirical thresholds and standards to be combined with application-specific heuristics. It is an add-on to the ML model and requires no change to its architecture.

This work distinguishes itself not by introducing a new domain adaptation (DA) technique but by presenting a novel framework that strategically combines existing DA and similarity measurement methods. The framework enables these techniques to be applied effectively to human–robot collaboration (HRC) scenarios, which inherently present complexities beyond those of traditional DA tasks. Through a feedback-driven auto-labelling approach, demonstrated on hand gesture recognition, the framework opens up the potential for adaptation across diverse human communication datasets.

3.2. Assumptions of SIMLea

The framework has been developed under the following assumptions, adapted from those in [19] for HRC scenarios.

- As mentioned previously, the system only encounters covariate-shifted data. This means that all commands from the human are “correct” in the lexical sense and will fall into one of the pre-trained classes.

- The deployed base model (the model trained on a standard, publicly available dataset) is well-trained on that dataset, i.e., it performs well on its test set. This assumption is extended to the iteratively generated models: since the shifted data come from the same application and are similar to the original data, the training parameters can be re-used for TL without change. Here, “well-trained” means that the models achieve the trade-off between bias and variance required by the application, as determined from tribal knowledge of the application.

- The ML classifier that understands (recognizes) feedback from the human has high variance and high accuracy.

Table 1 presents a qualitative comparison between some existing domain adaptation techniques and the proposed framework, which can handle incommensurable modes of data when labelling unlabelled data and uses unsupervised statistical measures to start and stop training. The assumptions listed for each technique concern its sources of data, its models, and the availability of labels for training.

Table 1.

Qualitative comparison of domain adaptation techniques with proposed domain adaptation framework.

3.3. Metric for an Informed Approach to Domain Adaptation

In the absence of labelled test datasets, unsupervised formulations for quantifying domain shift can support an informed approach to DA [20,21,22]. CNNs learn data by building feature representation spaces specific to the datasets. Thus, measuring statistical distance in this representation space, rather than in the data space, may better capture the shift in the distribution. This distance can be expressed as a similarity measure between the datasets. Small visual domain shifts become more pronounced as the data pass through the trained model. This is because deep-learning architectures are highly parameterized and can learn the finer details of the data, thereby losing variance in favour of a bias towards the training data. As the data travel deeper into the model, the representation space becomes increasingly abstract, and finally the fully connected layers use these representations to assign a class in a classification task. The vast collection of interdependent weights and biases renders the model highly sensitive to small variations in the visual data. Thus, perturbations that are perceptually small (to the human) may lead to large statistical domain shifts in the model's understanding. SIMLea leverages this behaviour of CNNs, using the similarity metric between original and shifted data to start and stop training.

3.4. Covariate Domain Shift as a Measure of Similarity

A statistical distance between the original training set (the in-distribution (ID) or “source” data) and the new or “target” data can be used to determine the similarity (S) between the source and target domains. A universal definition of similarity to a given dataset may not be meaningful, because the representation spaces used to compare datasets depend heavily on the model's architecture, training, and hyperparameter values. Thus, “similarity” must be defined empirically according to the application and its requirements. For the various types of metrics that have been developed, the reader is referred to [3,13,23,24,25,26,27,28,29,30].

Conservative approaches should accept a narrower range of features as similar, while less conservative ones may tolerate higher variance. The application-specific thresholds are defined in Section 4, where validation tests on HG data are discussed. The contribution of the paper lies in the novel framework for domain adaptation, not in the formulation of the specific metric used to determine S.

3.5. Factor for Cessation of Domain Adaptation

The larger the value of S, the higher the similarity between the datasets. This measure is used to determine whether the adapted model newly generated by an iteration of SIMLea has learnt the features of the new dataset. If, after an iteration, S is still small, new data are collected and another iteration of training is carried out. If S has increased enough to lie within the threshold at which one can confidently say that the model has learnt the new features, the iterative training is stopped, and the communication system begins using the newly generated model for operation.
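The cessation rule can be written as a small decision function. This is a sketch under stated assumptions: the paper leaves the exact form of S open, so the mapping S = 1 / (1 + R) from a non-negative shift distance R, the function names, and the threshold values are hypothetical. A more conservative application would simply choose a higher `s_threshold`.

```python
def similarity_from_shift(shift_r: float) -> float:
    """Hypothetical monotone mapping from a distance R >= 0 to a similarity S in (0, 1].

    S = 1 when the source and target representations coincide (R = 0) and
    decreases towards 0 as the shift grows, so that larger S means more similar.
    """
    if shift_r < 0:
        raise ValueError("a statistical distance cannot be negative")
    return 1.0 / (1.0 + shift_r)


def cease_adaptation(shift_r: float, s_threshold: float) -> bool:
    """True if the adapted model is similar enough to stop iterating.

    False means new user data should be collected for another iteration.
    """
    return similarity_from_shift(shift_r) >= s_threshold


# Hypothetical shift values measured after successive iterations.
for r in (1.5, 0.6, 0.1):
    print(r, round(similarity_from_shift(r), 3), cease_adaptation(r, s_threshold=0.8))
```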

Using an unsupervised statistical distance between the representation spaces of the source and target distributions to determine S allows the framework to be model agnostic, because the distance is computed by an add-on component that requires no change to the model's architecture or hyperparameters. S can then be used to monitor the execution of the framework and the performance of the model. Whether to run this monitoring analysis continually or at certain intervals is at the discretion of the engineer.

3.6. Criterion for Feasibility of Transfer Learning

The criterion for the feasibility of transfer learning (TL) depends on the application parameters. The threshold could be determined using the size of the dataset used, the number of training epochs required, or the training time needed to reach a particular performance metric; these are typically determined empirically. For the purpose of this paper, we used the number of epochs to determine the feasibility of transfer learning. Because dataset sizes were kept the same for re-training, while image sizes and resolutions could differ across datasets, we compared the number of epochs needed to achieve the same increase in accuracy. How many epochs, and how large an increase in accuracy, are heuristic choices that the engineer must make based on application requirements and constraints. Section 4 demonstrates this on a hand gesture recognition task.
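One way to operationalize the epoch-based criterion is sketched below: count how many epochs of re-training are needed before validation accuracy improves by a chosen margin, and deem TL feasible only if that count fits within an epoch budget. The function names, the accuracy curve, the margin, and the budget are hypothetical values for illustration.

```python
from typing import Optional, Sequence


def epochs_to_gain(val_accuracy: Sequence[float], start_accuracy: float, gain: float) -> Optional[int]:
    """Return the first (1-based) epoch whose accuracy reaches start_accuracy + gain, or None."""
    target = start_accuracy + gain
    for epoch, acc in enumerate(val_accuracy, start=1):
        if acc >= target:
            return epoch
    return None


def transfer_learning_feasible(val_accuracy, start_accuracy, gain, max_epochs) -> bool:
    """TL is deemed feasible if the required gain is reached within max_epochs."""
    needed = epochs_to_gain(val_accuracy, start_accuracy, gain)
    return needed is not None and needed <= max_epochs


# Hypothetical validation-accuracy curve recorded while re-training on user data.
curve = [0.58, 0.66, 0.77, 0.80, 0.81]
print(epochs_to_gain(curve, start_accuracy=0.55, gain=0.2))       # 3
print(transfer_learning_feasible(curve, 0.55, 0.2, max_epochs=5))  # True
print(transfer_learning_feasible(curve, 0.55, 0.2, max_epochs=2))  # False
```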

3.7. SIMLea Framework

The proposed novel framework for personalization through domain adaptation in HRCom consists of the following steps.

- Train an ML model on a standard, publicly available dataset such that it performs well on ID (source) data. This is the base model (model0).

- Collect the new user data and store them in memory. These data are unlabelled since they are generated in real time by the user interacting with the robot. Calculate the similarity score (S) between the trained (in-distribution) data and the incoming user (covariate-shifted) data.

- If the similarity score is higher than a pre-set threshold (that is application and model specific), then the model can be effectively deployed and used for inferencing with the new data. If, on the other hand, the data are dissimilar, then transfer learning may be used if deemed feasible.

- The next step is a checkpoint to test if transfer learning would be effective for domain adaptation. The similarity score obtained in step 2 is used again. If the covariate-shifted dataset is similar to the in-distribution data, then iterative re-training can be used. If the datasets are very dissimilar, then instead of transfer learning the model should be trained from scratch and the new model deployed. Such a case may indicate a significant shift in the domain data, and either the new data needs to be rejected or the model needs to be changed.

- For re-training, to keep the dataset small, an empirically determined small number of instances per class of the covariate-shifted data are auto-labelled by the robot asking the user for multimodal (in this case, verbal) feedback, as per the work in [16].

- The model is iteratively re-trained with the new batch of covariate-shifted user images mixed with a larger batch of the source dataset or in-distribution data, as per the work in [16].

- Once the new model is generated, the representation space of this model is used to gauge the similarity of the two datasets, and the loop is re-traced. This ensures that a minimal amount of user data is required for effective learning of the model and is auto-labelled on a need-only basis.

The framework ensures that the model stops learning iteratively and consuming resources once either:

- Case 1: the model has learnt the features of the target dataset well; or

- Case 2: the model can no longer benefit from transfer learning because the datasets are too dissimilar.
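The steps listed above, together with the two stopping cases, can be sketched as a single loop. This is a hypothetical skeleton rather than the authors' implementation: the callables `collect_user_data`, `similarity`, `auto_label`, and `retrain`, the `SIMLeaConfig` fields, and the iteration cap are all assumptions standing in for application-specific components.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple


@dataclass
class SIMLeaConfig:
    deploy_threshold: float    # S at or above this: deploy the current model (Case 1)
    transfer_threshold: float  # S below this: too dissimilar for TL (Case 2)
    max_iterations: int = 10   # hypothetical safety cap on the number of iterations


def simlea_loop(
    model: Any,
    collect_user_data: Callable[[], Any],
    similarity: Callable[[Any, Any], float],
    auto_label: Callable[[Any], Any],
    retrain: Callable[[Any, Any], Any],
    cfg: SIMLeaConfig,
) -> Tuple[Any, str, List[float]]:
    """Run the iterative steps and report why the loop stopped.

    All callables are hypothetical hooks supplied by the application:
    - collect_user_data: returns a new batch of unlabelled user data;
    - similarity: S between source data and user data in the representation
      space of `model` (larger S means more similar);
    - auto_label: labels a few instances per class using user feedback;
    - retrain: fine-tunes `model` on the labelled batch mixed with source data.
    """
    history: List[float] = []
    for _ in range(cfg.max_iterations):
        user_data = collect_user_data()
        s = similarity(model, user_data)
        history.append(s)
        if s >= cfg.deploy_threshold:
            return model, "deploy", history  # Case 1: shifted features learnt
        if s < cfg.transfer_threshold:
            # Case 2: TL will not help; train from scratch or reject the data.
            return model, "train_from_scratch_or_reject", history
        labelled = auto_label(user_data)
        model = retrain(model, labelled)
    return model, "max_iterations_reached", history


# Toy stand-ins: the "model" is an iteration counter whose S improves with re-training.
scores = [0.5, 0.7, 0.9]
model, status, history = simlea_loop(
    model=0,
    collect_user_data=lambda: "batch",
    similarity=lambda m, data: scores[m],
    auto_label=lambda data: (data, "labels"),
    retrain=lambda m, labelled: m + 1,
    cfg=SIMLeaConfig(deploy_threshold=0.85, transfer_threshold=0.3),
)
print(model, status, history)  # 2 deploy [0.5, 0.7, 0.9]
```

Passing the components in as callables keeps the loop itself model agnostic, in the spirit of the framework being an add-on to an existing model.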

Larger batches of data may also be used and would be beneficial for faster DA, and any similarity index may be used, making the framework quite flexible for deployment. To save resources, and potentially down-time while the robot perception system analyses new data, the similarity analysis can be triggered manually when a new user is added, the work-cell changes, or the sensor changes, instead of being run automatically on all incoming data. As the model is deployed for long periods and its variance increases (with relevant data), the engineer can choose to trigger the analysis only periodically, accepting that some changes will not lead to new re-training.
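The triggering policy described above, with manual triggers on scene changes plus an optional periodic check, might be encoded as follows. The enum members mirror the three changes named in the text; the function name and the frame-count period are hypothetical.

```python
from enum import Enum, auto
from typing import Optional


class SceneChange(Enum):
    """Events after which the similarity analysis is triggered."""
    NEW_USER = auto()
    WORK_CELL_CHANGED = auto()
    SENSOR_CHANGED = auto()


def should_run_similarity_analysis(
    event: Optional[SceneChange],
    frames_since_last_check: int,
    period: Optional[int] = None,
) -> bool:
    """Decide whether to run the (costly) similarity analysis on incoming data.

    A scene-change event always triggers it; otherwise it runs only if a
    periodic check is configured and the period has elapsed. Counting in
    frames is a hypothetical choice; wall-clock time would work equally well.
    """
    if event is not None:
        return True
    return period is not None and frames_since_last_check >= period
```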

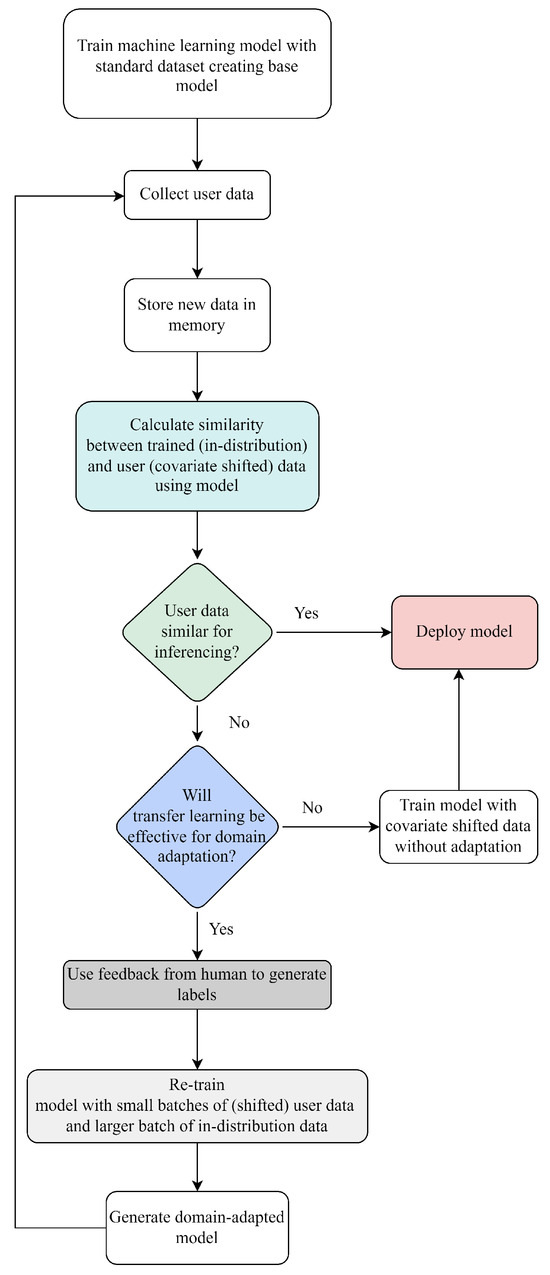

Furthermore, while human-generated datasets are the primary focus of this work, the framework can be used in continuous learning in other applications as long as a feedback-giving agent exists that can be used for auto-labelling. Validation tests have been carried out using hand gesture datasets and are examined in the next section. Figure 1 presents the consolidated framework of SIMLea.

Figure 1.

The iterative framework for SIMLea: Statistically informed multimodal (domain adaptation by transfer) learning involving the collection of user data, finding similarity with trained data, determining the effectiveness of transfer learning for domain adaptation, and training the model based on the decisions of the framework.

4. Validation of SIMLea with Hand Gesture Recognition Data

Following the literature reviews [2,17], hand gestures (HGs) are used here as the means of communication between humans and robots, since they leave human workers free to go about their tasks without cumbersome headsets or wires and are an intuitive means of communication.

4.1. Variance in HG Datasets

For the presented work, an HG classifier based on VGG16 [31] was trained on a publicly available hand gesture dataset, the Image database for tiny hand gesture recognition [32], hereon referred to as TinyHand or TH. The dataset comprises seven hand gestures performed by 40 participants (male and female) with their right hands: fist, l, ok, palm, pointer, thumb down, and thumb up. RGB videos were collected, with 1400 colour frames for each gesture. These hand gestures may be considered quite typical for communicating with a robot or other intelligent system. The creators of the dataset added variability by having gestures performed in different locations in the images and by positioning participants in front of a number of different backgrounds, both cluttered and clean (solid coloured), with variations in illumination. The human face and body occupy the majority of the image, while the hand occupies only about 10% of its pixels. In a dynamic industrial environment, the human may not always be positioned centrally or hold their hands close to the camera; the dataset was therefore selected to mimic these inconsistencies in sensor data. Of the 40 individuals, 25 were recorded seated in front of a clean, uncluttered background; this subset of TH is referred to as the TinyHand Uncluttered Dataset (THUC). The remaining 15 were recorded standing in front of various cluttered backgrounds and are referred to as THC (TinyHand Cluttered). For the tests carried out for SIMLea, gestures from 23 THUC participants were split into training and validation sets in a 70:30 ratio, and the remaining two participants were used as the test set. The final training and validation sets together contained 62,035 images, and the test set contained 4719.
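A participant-level hold-out of the kind described can be sketched as follows. It assumes, since the text does not say, that the 70:30 training/validation split is drawn at image level from the 23 training participants, while the two test participants are excluded entirely; the sample tuple layout, the function name, and the seed are hypothetical.

```python
import random
from typing import List, Sequence, Set, Tuple

Sample = Tuple[int, str, str]  # (participant_id, image_path, gesture_label)


def split_by_participant(
    samples: Sequence[Sample],
    test_participants: Set[int],
    val_fraction: float = 0.3,
    seed: int = 0,
) -> Tuple[List[Sample], List[Sample], List[Sample]]:
    """Hold out whole participants for testing; split the rest at image level.

    Keeping test participants out of training and validation entirely means the
    test set measures performance on people the model has never seen.
    """
    test = [s for s in samples if s[0] in test_participants]
    pool = [s for s in samples if s[0] not in test_participants]
    random.Random(seed).shuffle(pool)  # seeded for a reproducible split
    n_val = round(len(pool) * val_fraction)
    return pool[n_val:], pool[:n_val], test


# Toy example: 25 participants with 10 images each; participants 23 and 24 held out.
samples = [(p, f"p{p}_img{i}.png", "fist") for p in range(25) for i in range(10)]
train, val, test = split_by_participant(samples, test_participants={23, 24})
print(len(train), len(val), len(test))  # 161 69 20
```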

The model was trained using a stochastic gradient descent (SGD) optimizer for 13 epochs, with a learning rate of 0.01 and a batch size of 16. The data augmentations applied to the THUC training set were lateral shifts, so that the model would not overfit to expecting the human at the centre of the scene; shears, so that the fish-eye effect of certain cameras could be accounted for; zoom, to mimic the varying distance of the human from the camera in a dynamic environment; and small rotations and brightness shifts, to allow for dynamic lighting conditions. The trained VGG16 model (model0) was then tested on the THUC test set and achieved an accuracy of 0.96.
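The stated training settings and two of the listed augmentations (lateral shift and brightness change) are sketched below in NumPy for illustration. The paper does not give the augmentation magnitudes or the library used, so the function names and the shift and brightness ranges are hypothetical; shear, zoom, and rotation would be added in the same way, typically with an image-processing library.

```python
import numpy as np

# Training settings as stated in the text.
TRAIN_CONFIG = {"optimizer": "SGD", "learning_rate": 0.01, "batch_size": 16, "epochs": 13}


def lateral_shift(image: np.ndarray, shift_px: int) -> np.ndarray:
    """Shift an (H, W, C) image horizontally by shift_px pixels (positive = right).

    Vacated columns are filled with zeros, so the human is no longer centred.
    """
    out = np.zeros_like(image)
    if shift_px > 0:
        out[:, shift_px:] = image[:, :-shift_px]
    elif shift_px < 0:
        out[:, :shift_px] = image[:, -shift_px:]
    else:
        out[:] = image
    return out


def brightness_shift(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale the intensities of a uint8 image, clipping to the valid [0, 255] range."""
    scaled = image.astype(np.float32) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


def random_augment(image: np.ndarray, rng: np.random.Generator,
                   max_shift_frac: float = 0.2,
                   brightness_range: tuple = (0.8, 1.2)) -> np.ndarray:
    """Apply a random lateral shift and brightness change (hypothetical ranges)."""
    max_shift = int(max_shift_frac * image.shape[1])
    shift = int(rng.integers(-max_shift, max_shift + 1))
    factor = float(rng.uniform(*brightness_range))
    return brightness_shift(lateral_shift(image, shift), factor)
```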

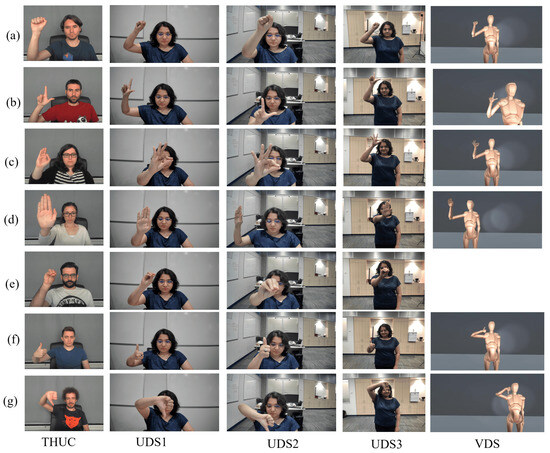

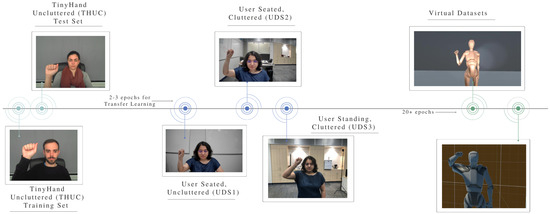

In order to simulate covariate domain shifts, three user datasets were created, each consisting of the author performing the seven gestures. In the first, only the human in the scene (the author) is changed, with no change to the environment: the background was uncluttered and a solid shade of grey similar to that of the source training set, and the user was seated and gestured with their right hand. This dataset is denoted User Dataset 1 (UDS1). To simulate a change in both components of the scene, User Dataset 2 (UDS2) consisted of the user (the author) seated in front of a cluttered background that differs from the backgrounds of both THUC and THC. To add further variation, User Dataset 3 (UDS3) had the user standing in front of a cluttered background. Finally, to demonstrate a situation in which TL is not useful, a virtual environment (VEnv) dataset (VDS) was also created using a skin-tone-coloured humanoid performing all the gestures in the above-mentioned classes except pointer, since a “pointing” gesture is usually built into VR environments and the default one was used for the VR application. Figure 2 provides sample images from all the datasets, grouped by class.

Figure 2.

Sample images and classes of hand gesture classification datasets: TinyHand Uncluttered Dataset (THUC), User Seated, Uncluttered (UDS1), User Seated, Cluttered (UDS2), User Standing, Cluttered (UDS3), and Virtual (VDS), showing visual differences in the datasets: (a) fist, (b) l, (c) ok, (d) palm, (e) pointer, (f) thumb up, (g) thumb down.

Apart from differences in the human or humanoid, in the environment of the scene, and in the “correct” gestures themselves (evident in the varied configurations of the arm joints and their angles with respect to the body and the camera), a difference in the sensor also leads to a covariate domain shift. Note that the resolutions of THUC, UDS1 and 2, and UDS3 differ, although all images are rescaled to (224, 224, 3) when passed through the model, where 3 is the number of RGB channels. The VDS images are captured through a virtual sensor and are thus more amenable to customized specifications, which could be set closer to those of the original or source dataset.

Left-handed gestures were not included because, while some gestures, such as ok, fist, and palm, remain semantically invariant when performed with the left hand, others do not. For example, an l performed with the left hand in the same way as the right-handed one (i.e., index finger pointing upwards, thumb pointing towards the body, and the remaining fingers curled downwards and facing outwards) no longer semantically means l. Under natural circumstances, a human would not form such laterally variant gestures in the same way with either hand. Thus, to retain focus solely on covariate shift, only right-handed gestures were considered in this study. Even where lateral inversion is used as a data augmentation tool in Section 5.3, this behaviour of the l class is ignored, and semantic shift is left for future work.

4.2. Covariate Domain Shifts in User Data

The model naming conventions are as follows:

- model0 or base model is trained using standard dataset THUC in all tests, except in Section 5.3, where both THUC and THC are used.

- Iterative re-training of model0 on user-labelled data mixed with a portion of standard data generates modeln, where n refers to the number of iterations.

model0 is used to determine the domain shifts in the user data. Based on (human) visual inspection, similarity to THUC would be expected to be highest for UDS1, followed by UDS2, UDS3, and finally VDS. This hypothesis is tested using the covariate shift metric.

Shift Analysis with THUC Test Set as Source

Stacke et al. proposed an unsupervised measure called representation shift (RShift) to quantify the statistical distance between the feature representation spaces of source and target domains [19]. For each dataset, the shift metric (R) is calculated from the KL divergence between the outputs of max pooling layers, averaged over all filters of a given layer. The outputs of convolution layers are not used because of their high dimensionality, which would make the analyses computationally heavy and, in turn, the framework impractical during operation. Where the distributions contain zero values, a small constant (0.001) was added for numerical stability. One caveat should be kept in mind: the discrepancy calculated through RShift reflects the model's view of the datasets, not an objective or human one.

The formulation for RShift, given as follows, has been reproduced from [19]. Assume the layers of a CNN are denoted by

Let the layer activations of a trained model for layer l and filter k produced by an input x be denoted by:

The mean value of each activation per layer for a given filter and data is given by

where h and w denote the height and weight of the layer activation.

Next, assuming that the activations over all input data can be fitted into a normal distribution, denotes the continuous distribution of and

- is the input data, where n is the sample size. A similar formulation can be done for a second dataset:

- generating , with m denoting the new sample size.

The representation shift, R, is defined as the mean discrepancy, D, between the distributions over all filters, k, in a given layer, l

where D is the discrepancy or distance metric, which is calculated using Kullback–Leibler (KL) divergence.

KL divergence is defined between two discrete probability distributions, P and Q, as:

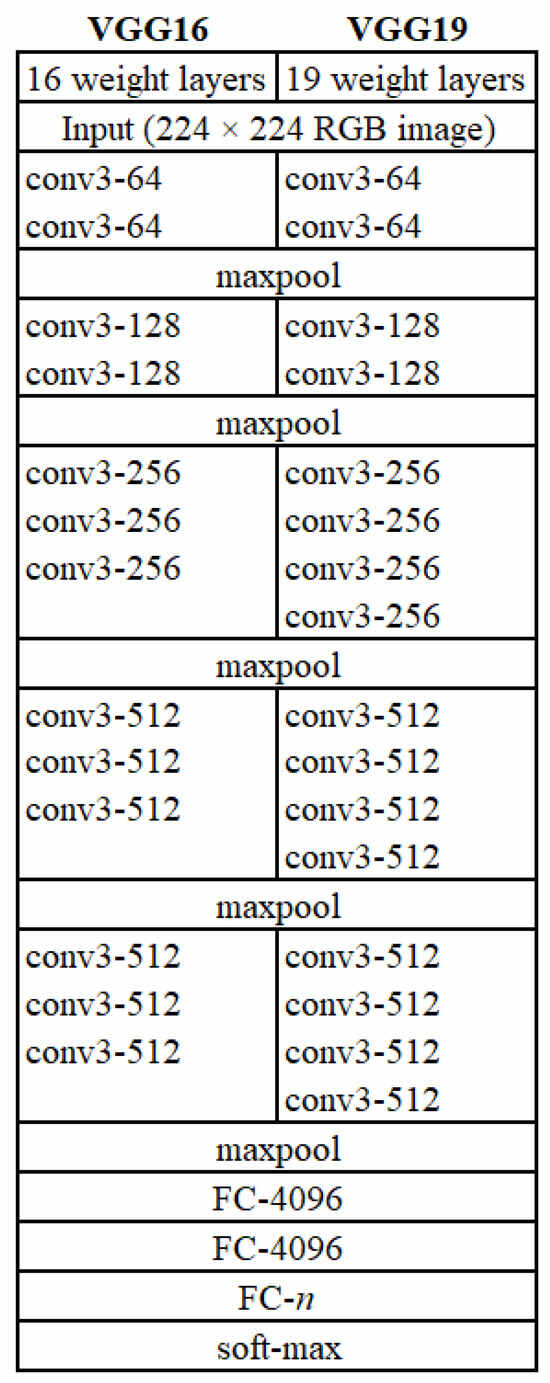

VGG16 [31] was used to classify the hand gestures, and SIMLea was applied to a trained VGG16 model. The architecture contains five convolutional blocks, each ending with a max pooling (MaxPool) layer (Figure 3). In the figure (reproduced from [31]), “conv3” denotes a convolution with a 3 × 3 kernel and “FC” a fully connected layer. Recall that R is larger for more dissimilar (more shifted) datasets, and vice versa. The following steps are taken to analyse each layer, where “layer” refers to a MaxPool layer.

Figure 3.

Architecture of VGG16 and VGG19 adapted from [31]. Convolution layers are followed by ReLU activations, which are not shown here. n refers to number of classes.

- All images in the dataset are passed, unlabelled, through model0, and the layer outputs are obtained by inference. This is done for the source dataset (THUC) and the target datasets (UDS1, 2, and 3, and VDS). For each filter, the mean and standard deviation of the (spatially averaged) activations are then computed over all images; these statistics represent the central tendency and spread of that filter's activations, and a normal distribution is fitted using them.

- KL divergence is calculated between pairs of source and target datasets for each filter of a layer.

- The mean of KL divergence values over all filters per layer is obtained, thereby generating one value per source-target pair per layer.
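The three steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: MaxPool outputs are assumed to be available as an array of shape (N, H, W, K) (channels last), the two fitted normal densities are assumed to be evaluated on a shared grid spanning ±5 standard deviations of both distributions, and the 0.001 constant from the text is assumed to replace exactly-zero density values before normalization. The names `filter_means`, `representation_shift`, and `n_points` are illustrative.

```python
import numpy as np

EPS = 1e-3  # constant for zero values, as in the text; where it is applied is assumed


def filter_means(acts: np.ndarray) -> np.ndarray:
    """Average MaxPool outputs of shape (N, H, W, K) over H and W -> (N, K)."""
    return acts.mean(axis=(1, 2))


def gaussian_pdf(x: np.ndarray, mu: float, sigma: float) -> np.ndarray:
    """Density of N(mu, sigma^2) evaluated at the points x."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))


def discrete_kl(p: np.ndarray, q: np.ndarray) -> float:
    """KL(P || Q) after turning two density curves on one grid into distributions."""
    p = np.where(p == 0, EPS, p)  # avoid log(0) and division by zero
    q = np.where(q == 0, EPS, q)
    p, q = p / p.sum(), q / q.sum()
    return float(np.sum(p * np.log(p / q)))


def representation_shift(src_acts: np.ndarray, tgt_acts: np.ndarray,
                         n_points: int = 1000) -> float:
    """R for one layer: mean over filters of KL(source Gaussian || target Gaussian)."""
    src, tgt = filter_means(src_acts), filter_means(tgt_acts)
    kls = []
    for k in range(src.shape[1]):
        # Step 1: fit a normal distribution per filter and per dataset
        mu_p, sd_p = src[:, k].mean(), src[:, k].std() + 1e-8
        mu_q, sd_q = tgt[:, k].mean(), tgt[:, k].std() + 1e-8
        # Step 2: KL divergence on a grid covering both distributions
        lo = min(mu_p - 5 * sd_p, mu_q - 5 * sd_q)
        hi = max(mu_p + 5 * sd_p, mu_q + 5 * sd_q)
        grid = np.linspace(lo, hi, n_points)
        kls.append(discrete_kl(gaussian_pdf(grid, mu_p, sd_p),
                               gaussian_pdf(grid, mu_q, sd_q)))
    # Step 3: average over all filters of the layer
    return float(np.mean(kls))


rng = np.random.default_rng(0)
source = rng.normal(1.0, 0.2, size=(200, 4, 4, 8))  # stand-in MaxPool outputs
print(representation_shift(source, source))  # 0.0 (identical data)
print(representation_shift(source, source + 0.1) < representation_shift(source, source + 0.3))  # True
```

Evaluating the fitted densities on a grid mirrors the discrete KL definition and the zero-value constant described in the text; for two normal distributions a closed-form KL divergence would avoid the grid altogether.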

KL divergence is not symmetric; thus, the source must be kept fixed to obtain consistent estimates of change. Because the analysis serves the DA of the model, the training sets change at each iterative re-training step. Therefore, the THUC test set was kept as the source for all calculations. For the R analysis of THUC itself, the target dataset is the THUC training set. To ensure that the general statistical behaviour does not depend on which split of TH is used, the above analysis was also carried out with the THUC training set as the source (Appendix A). The subsequent tests could not be repeated with the THUC training set as the source, since the training set of each model during re-training differs from that of model0.
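The asymmetry can be made concrete with the standard closed-form KL divergence between two univariate normal distributions. The sketch below uses illustrative parameters, not values from this study; swapping the roles of source and target changes the result, which is why the source is held fixed.

```python
import math


def gaussian_kl(mu_p: float, sd_p: float, mu_q: float, sd_q: float) -> float:
    """Closed-form KL(P || Q) for P = N(mu_p, sd_p^2) and Q = N(mu_q, sd_q^2)."""
    return (math.log(sd_q / sd_p)
            + (sd_p ** 2 + (mu_p - mu_q) ** 2) / (2 * sd_q ** 2)
            - 0.5)


# A narrow "source" and a wider "target" distribution (illustrative numbers)
forward = gaussian_kl(0.0, 1.0, 0.0, 2.0)  # KL(source || target)
reverse = gaussian_kl(0.0, 2.0, 0.0, 1.0)  # KL(target || source)
print(f"{forward:.3f} {reverse:.3f}")      # 0.318 0.807
```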

model0 was used for inference on the shifted datasets, with a small batch of labelled data, to examine the relation between R and accuracy. The R and accuracy values for all datasets are presented in Table 2. As is evident, our intuition about the similarity of the datasets is reflected most clearly in the R values for layers 2 and 3. The THUC test set has the smallest shift from the THUC training set, which corresponds to the highest accuracy (0.96). In layer 2, this is followed by progressively higher discrepancy values and correspondingly lower accuracy values for UDS1 (87.26, 0.58), UDS2 (486.05, 0.48), UDS3 (943.40, 0.16), and VDS (10,571.15, 0.09). This trend holds in all layers, with some deviations. Some deviation is to be expected deeper in the model, since a large amount of information is discarded as the model maps the input to one of the classes. This is done intentionally, through layers such as max pooling and batch normalization, to reduce the dimensionality of the data and to suppress insignificant changes in the input. In addition, features in the deeper layers are more abstract, which makes them harder to use for interpreting the model's behaviour. Conversely, the initial convolution layers capture very low-level, generic features, and they too are poorly suited for interpreting domain shifts.
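As a quick check of the inverse relation described above, the Spearman rank correlation between the layer-2 R values and the model0 accuracies of the four shifted datasets can be computed from the numbers quoted in the text. This minimal pure-Python sketch assumes no tied values; with only four points it illustrates monotonicity rather than establishing statistical significance.

```python
def ranks(values):
    """Rank values from 1 (smallest) to n; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result


def spearman_rho(x, y):
    """Spearman rank correlation for tie-free data."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * d2 / (n * (n ** 2 - 1))


# Layer-2 R and model0 accuracy for UDS1, UDS2, UDS3, and VDS (quoted above)
r_layer2 = [87.26, 486.05, 943.40, 10571.15]
accuracy = [0.58, 0.48, 0.16, 0.09]
print(spearman_rho(r_layer2, accuracy))  # -1.0: higher shift, lower accuracy
```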

Table 2.

Covariate shift (R) and accuracy values for TinyHand Uncluttered Dataset (THUC), User Seated, Uncluttered (UDS1), User Seated, Cluttered (UDS2), User Standing, Cluttered (UDS3), and Virtual Datasets (VDS) using the THUC test set as source.

For this work, the second and third MaxPool layers gave intuitive results (as attested by Table 2 and by the later studies in this paper) and are therefore used for analysis. The first layer follows the intuition, except for the discrepancies of UDS2 and UDS3. The fourth layer indicates a higher similarity for VDS, which is certainly not supported by the accuracy score for VDS. The fifth layer reflects the desired trend, except for UDS3 and VDS. Note that the R values decrease for all datasets deeper into the model, a consequence of the information lost as the model approximates the input towards the pre-learnt classes.

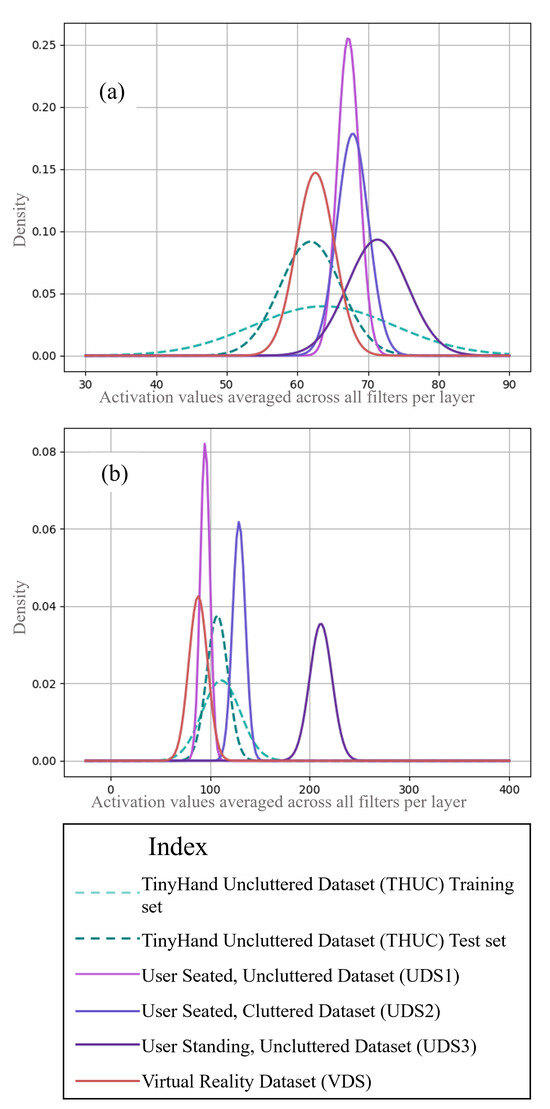

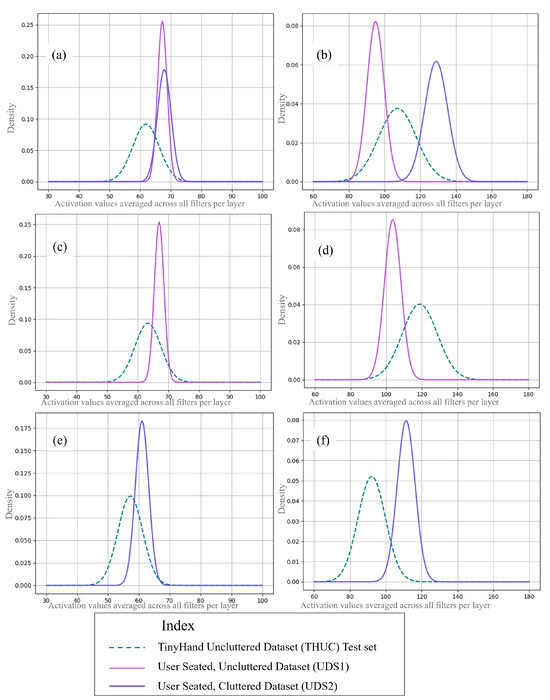

To gain insight into the statistical shifts between THUC and the user datasets, the normal distributions of each dataset's activations in the second and third MaxPool layers are plotted in Figure 4. In Figure 4a (second MaxPool layer), the standard deviation of the THUC training set is the largest, since it contains the most variation. The spread of the THUC test set is smaller, but still larger than those of the shifted datasets. The same holds for the third layer. UDS3 is the most dissimilar of the user datasets, as is evident from the shape of its distribution (more variation, hence flatter, and a shifted mean). Thus, the more visual variations are added to the scene, the further the dataset distribution shifts statistically, leading to lower accuracy. Layer 3 (Figure 4b) provides the clearest indication of a covariate shift consistent with the visual inspection hypothesis. The lower standard deviations of the user datasets are also a consequence of their being generated from short RGB videos of a single user. Note that different x-axis scales are used for better visualization. In (a) and (b), VDS appears distinct from the user datasets: its mean is shifted from that of the THUC training set, and it has more variance than the user datasets.

Figure 4.

Normal distributions representing the activations of each MaxPool layer for the source and target datasets: (a) MaxPool layer 2; (b) MaxPool layer 3. The horizontal axis ranges from 30 to 90 in (a) and from 0 to 400 in (b); the vertical axis ranges from 0.00 to 0.25 in (a) and from 0.00 to 0.09 in (b).

Batches of around 200 unlabelled images (covering all classes) were used for the statistical analyses, which corresponds to only about five to seven seconds of video per gesture. Smaller batches ensure that only an acceptable amount of computational resources is dedicated to the statistical analysis during online learning of the model. Section 5.4 examines the effect of batch size. This shift analysis constitutes step 2 of the SIMLea framework: the similarity metric is used to decide whether the model has learnt the new dataset and whether TL for DA is a feasible course of action.
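The step-2 decision can be sketched as a simple threshold rule. The sketch assumes, hypothetically, that thresholds are expressed as multiples of a baseline R measured between the THUC test and training sets; the function name `step2_decision` and the ratios `keep_ratio` and `tl_ratio` are illustrative placeholders, since in practice they would be set empirically for each application, model, and layer.

```python
from enum import Enum


class Action(Enum):
    KEEP = "no adaptation needed"
    TRANSFER = "adapt with transfer learning"
    RETRAIN = "train from scratch"


def step2_decision(r_target: float, r_baseline: float,
                   keep_ratio: float = 2.0, tl_ratio: float = 100.0) -> Action:
    """Map a layer's shift value R to an action (hypothetical thresholds)."""
    ratio = r_target / r_baseline
    if ratio <= keep_ratio:   # close to the in-domain shift: model already fits
        return Action.KEEP
    if ratio <= tl_ratio:     # shifted, but within reach of a few TL epochs
        return Action.TRANSFER
    return Action.RETRAIN     # too far from the learnt representation space


for r in (15.0, 500.0, 5000.0):
    print(r, step2_decision(r, r_baseline=10.0).name)
```

Expressing the thresholds relative to an in-domain baseline keeps the rule independent of the absolute scale of R, which the text notes differs between layers and models.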

5. Results

SIMLea was applied to a hand gesture recognition task in which the ML model constituting the robot perception system encounters new users, changes in the collaborative work-cell, and changes in the sensors. SIMLea enabled the detection of these changes, which manifested as covariate shifts in the incoming data distribution, and the choice of the means to incorporate them into the model. The validation tests in this work indicate the trends to be expected when human-generated communication data in the form of RGB images are handled using deep CNNs. However, while the steps of the framework are generic and applicable to a wide variety of HRCom scenarios, the architecture and type of classifier will dictate the parameters of transfer learning (TL), the similarity metric used, and the shift values encountered. Table 3 summarizes the studies carried out in this section. The datasets derived from the publicly available TH and THC datasets, and the studies based on them, mimic changes to the work-cell of a human–robot collaboration scenario: the addition of new human workers and changes in the environment of the work-cell. This is achieved by cluttering the background behind the gesturing human, changing the humans in the dataset, and having the humans stand or sit to add more variability. Finally, to test more extreme cases of covariate domain shift, virtual reality datasets are used.

Table 3.

Studies carried out with SIMLea and their corresponding subsections.

5.1. Application of SIMLea to User Datasets

In this work, voice commands (VC) are used to generate feedback. The third assumption holds for voice command classifiers trained on large, publicly available datasets of simple commands. For example, VC classifiers can be trained on the Google Speech Commands dataset [33], which contains 65,000 s of recorded and annotated data from thousands of participants. In comparison, datasets for other modes of communication, such as application-specific hand gesture (HG) datasets like TinyHand, contain data from only 40 individuals [32]. This disparity in data leads to a disparity in model performance, which SIMLea can help address.

Performance metrics: for the hand gesture (HG) datasets used in this paper, 2 to 3 epochs were required to improve prediction accuracy by 20% or more. The more dissimilar the datasets to be adapted, the longer the model can be expected to need to train, and hence the larger the number of epochs. For illustrating SIMLea on HG, the number of epochs up to which TL for DA is considered feasible is therefore set at about 3.

For TL to work effectively, data from all classes must be used in every iteration; for human communication data similar to the training data, small batches of shifted data suffice for the model to learn. Furthermore, the TL batches must contain a larger fraction of data from the source dataset. SIMLea was implemented and validated on UDS1 and UDS2. Following the results of previous work on personalization in HRCom [16], empirically determined batches of 20 user images plus 100 THUC training set images per class, with the user images auto-labelled through feedback, were used for iterative re-training.
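A re-training batch with the composition described above (20 feedback-labelled user images and 100 source images per class) could be assembled as below. This is a hypothetical sketch: images are represented by identifiers, `build_retraining_batch` is an illustrative name, and the user images are assumed to already carry the labels obtained through feedback.

```python
import random


def build_retraining_batch(user_by_class, source_by_class,
                           n_user=20, n_source=100, seed=None):
    """Mix feedback-labelled user images with source images for every class.

    user_by_class and source_by_class map a class label to a list of image ids.
    """
    rng = random.Random(seed)
    batch = []
    for label, user_images in user_by_class.items():
        batch += [(img, label) for img in rng.sample(user_images, n_user)]
        batch += [(img, label) for img in rng.sample(source_by_class[label], n_source)]
    rng.shuffle(batch)  # avoid class-ordered mini-batches during fine-tuning
    return batch


classes = ["fist", "l", "ok", "palm", "pointer", "thumb up", "thumb down"]
user = {c: [f"user/{c}/{i}" for i in range(30)] for c in classes}
thuc = {c: [f"thuc/{c}/{i}" for i in range(500)] for c in classes}
batch = build_retraining_batch(user, thuc, seed=0)
print(len(batch))  # 840 = 7 classes x (20 + 100)
```

Every class appears in every batch, and source images outnumber user images five to one, matching the two requirements stated above.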

When model0 was deployed and encountered UDS1, R was calculated for all layers, along with accuracy, as shown in Table 4. Since the R values indicated a domain shift relative to the shift score of the THUC training set, DA was required to counter it. TL was used as the first approach, on an ad hoc basis. VC classifier-enabled feedback can be extended to the seven HG classes, since the class names are commonly used words. Feedback from the human's voice commands was simulated to label the data, which consisted of 20 images per gesture class. Alternatively, simpler visual or haptic interfaces, such as touchscreens, may be used for feedback instead of an ML model.

Table 4.

New covariate shift (R) and accuracy values for User Seated, Uncluttered (UDS1) on iteratively trained model1.

After labelling, TL was applied by freezing all layers of model0 except the fully connected and classification layers. Training for two epochs produced model1. The shift calculations were then repeated with model1 on another batch of the same user data (UDS1); the results are presented in Table 4. For all layers, the R value decreased, indicating that the datasets are less dissimilar with respect to the new model and that the model now captures more of the variance of the user data. For validation, a new batch of UDS1 was labelled and tested with model1, giving an accuracy of 0.73, a 25.8% increase after one iteration and two epochs of training.
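The measure, adapt, and re-measure cycle described above can be summarized as a loop. In this hypothetical sketch, the perception model, the shift computation, the feedback-based labelling, and the fine-tuning step are passed in as callables (`next_batch`, `shift`, `label_by_feedback`, and `fine_tune` are illustrative names, not an existing API); mixing in source data would happen inside `fine_tune`, and `r_stop` is an application-specific threshold.

```python
from typing import Any, Callable, List, Tuple


def simlea_adapt(model: Any,
                 next_batch: Callable[[], list],
                 shift: Callable[[Any, list], float],
                 label_by_feedback: Callable[[list], list],
                 fine_tune: Callable[[Any, list, int], Any],
                 r_stop: float,
                 max_iters: int = 5,
                 epochs: int = 2) -> Tuple[Any, List[float]]:
    """Re-train only while the measured shift R stays above r_stop."""
    history = []
    for _ in range(max_iters):
        batch = next_batch()        # fresh, unlabelled batch of user data
        r = shift(model, batch)     # step 2: representation shift
        history.append(r)
        if r <= r_stop:             # shift small enough: stop learning
            break
        labelled = label_by_feedback(batch)         # auto-label via human feedback
        model = fine_tune(model, labelled, epochs)  # TL on the labelled batch
    return model, history


# Toy stand-ins: each fine-tuning step divides the "model's" shift by four
final, hist = simlea_adapt(
    {"r": 100.0},
    next_batch=lambda: ["frame"] * 3,
    shift=lambda m, b: m["r"],
    label_by_feedback=lambda b: [(x, "palm") for x in b],
    fine_tune=lambda m, labelled, e: {"r": m["r"] / 4},
    r_stop=10.0,
)
print(hist)  # [100.0, 25.0, 6.25]
```

Each iteration draws a new batch for the shift measurement, as in the text, where model1 was evaluated on another batch of UDS1 rather than the batch it was trained on.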

This implementation gave an empirical idea of the thresholds for the feasibility of TL for this particular dataset and model, and an indication of the accuracy to be expected from a given R value. Note also that the accuracy on the THUC test set remains high (0.97 for model1 for UDS1 and 0.94 for UDS2). The framework can then be deployed further or stopped, depending on the threshold set for the application. A similar approach was taken for UDS2, which also showed a reduction in R for all layers (Table 5), with a new accuracy of 0.59 (an increase of 22.9%) after two epochs.
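The reported gains are relative to the model0 accuracies quoted for Table 2 (0.58 for UDS1 and 0.48 for UDS2), not absolute percentage-point differences, as the following arithmetic shows:

$$
\frac{0.73 - 0.58}{0.58} \approx 0.2586, \qquad \frac{0.59 - 0.48}{0.48} \approx 0.2292,
$$

consistent with the reported 25.8% and 22.9%, and corresponding to absolute gains of 15 and 11 percentage points, respectively.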

Table 5.

New covariate shift (R) and accuracy values for User Seated, Cluttered (UDS2) on iteratively trained model1.

Figure 5 juxtaposes the distributions of activations of the second and third MaxPool layers for the UDS1, UDS2, and THUC test datasets: (a) and (b) are for model0, (c) and (d) for model1 adapted to UDS1, and (e) and (f) for model1 adapted to UDS2. Note that model1 is a different model for each of the two datasets; the name is reused as a variable name within the iterative framework. The axis ranges of each panel are given in the figure caption.

Figure 5.

Normal distributions of activations of the second and third MaxPool layers juxtaposed for TinyHand Uncluttered (THUC), User Seated, Uncluttered (UDS1), User Seated, Cluttered (UDS2) datasets with respect to each layer before iterative retraining (a,b) and after (c,d): UDS1 and (e,f): UDS2. The ranges in the vertical axes in (a–f) are as follows: 0.00 to 0.25, 0.00 to 0.08, 0.00 to 0.25, 0.00 to 0.08, 0.00 to 0.175, and 0.00 to 0.08, respectively. The ranges in horizontal axes in (a–f) are as follows: 30 to 100, 60 to 180, 30 to 100, 60 to 180, 30 to 100, and 60 to 180, respectively.

A decrease in (learnt) variance in THUC can be observed in the standard deviations of THUC in (d), (e), and (f) with respect to (b), (a), and (b) again. This stems from the model’s loss of learnt features and not a change in the source dataset itself. This is a consequence of TL. Based on such indicators, the designer may add a higher number of THUC images when creating batches for re-training. Be that as it may, in all instances, the distance between the mean values of THUC and the user datasets decreased, thus validating a decrease in the perceived shift of the model when handled using SIMLea.

5.2. Application of SIMLea to Virtual Reality Datasets

To study a case in which the task is the same (hand gestures with the same labels) but the data are so covariate shifted that transfer learning is not feasible and training from scratch is more effective, a virtual reality dataset of hand gestures was developed. Consider the following case: in an HRC or, more generally, a human–machine interaction scenario, a virtual reality (VR) environment (VEnv) linked to the real-world environment (REnv) may contain a representation of the human. The real HGs in the REnv may be recreated in the VEnv and then recognized by an ML model, much like REnv gestures. The VEnv may thus be conceived as having two parts: the representation of the human through a humanoid model, and the setting of the immersive scene, which includes the light(s), the virtual sensor(s), other interacting object(s), and artefacts such as walls, floor, and ceiling, their colours, and so on. To simulate the dynamic nature of the industrial setting or work-cell, the dataset must represent variability in the positioning of the humanoid and of the hand, in the degree to which the gestures are performed, and in the setting of the work-cell itself. This yields an HG dataset whose gestures humans recognize as belonging to the same classes as the REnv dataset, but which is so dissimilar in the representation space of the model that TL is not feasible for adaptation. This is reflected in S values that are much lower than those for HG datasets in the REnv, and is further confirmed when attempting TL, where ≥20 epochs are required to achieve comparable gains in accuracy. For this study, two datasets were created: one using a generic silver-coloured humanoid with a generic background, and the other closer to the features learnt by the model, i.e., a humanoid with a more “natural” skin tone [34].

Even though VDS was curated to make its images as close as possible to the source (the THUC training set), its shift values in Table 2 are still very high, indicating a very large covariate shift (∼76,071%+ of the distance between the THUC training and test sets). TL is not effective for VDS, since a 10% gain in accuracy required 21 epochs. Therefore, in terms of the feasibility of TL, such a dataset may be considered to be at the limit of usefulness. Figure 6 qualitatively represents the domain shift from the source (THUC training set) to the farthest target datasets (VDS with the “skin-tone” humanoid, and further still with the silver-coloured humanoid). Most REnv HG datasets will fall somewhere between the extremes discussed above, and heuristics in the form of design thresholds will be required to tailor the framework to the application.

Figure 6.

Qualitative representation of covariate domain shifts encountered in hand gesture classification, showing the relative shifts and corresponding similarity of the standard and shifted datasets used.

5.3. Application of SIMLea to a Simulated Long-Serving Model

SIMLea is designed to enable continuous learning of an HRCom model. A long-serving model, initially trained on a simpler dataset such as THUC, is expected to keep learning the features of new users and work-cell environments during operation, while still detecting domain changes (where relevant enough to be handled) and processing OOD data. To simulate this situation, VGG19 [31] was trained on the training sets of both THUC and THC. The training and validation sets contained 147,807 images split 70:30, and the test set contained 63,341 images. The hyperparameters remained the same, except for the number of epochs, which was 20 in this case. A deeper model, with three additional convolution layers, was chosen to account for the larger variability in data that the model would have encountered during its operation. THC contains 15 individuals (different from those in THUC) and seven different cluttered backgrounds. The new user datasets (UDS1, 2, and 3) contain individuals and backgrounds that differ from all of these combinations, so SIMLea should still be required. A note of caution: the relation between R and accuracy is not model agnostic, so the shift values in this analysis are not comparable with those of the previous analysis, since differences in architecture and training sets lead to substantially different models.

This VGG19 model was trained with lateral inversion as data augmentation to enable recognition of gestures performed with the left hand. In this case, UDS1, 2, and 3 also contained left-handed gestures to increase the variance of the data. The discrepancy values of these complex, shifted datasets were evaluated; Table 6 and Table 7 present the values grouped by MaxPool layer.

Table 6.

Covariate shift (R) and accuracy values for TinyHand Uncluttered Dataset (THUC), User Seated, Uncluttered (UDS1), User Seated, Cluttered (UDS2), User Standing, Cluttered (UDS3), and Virtual Datasets (VDS) on model0 trained on both THUC and THC (TinyHand Cluttered Dataset) with THUC as the source distribution for shift calculations.

Table 7.

Covariate shift (R) and accuracy values for TinyHand Cluttered Dataset (THC), User Seated, Uncluttered (UDS1), User Seated, Cluttered (UDS2), User Standing, Cluttered (UDS3), and Virtual Datasets (VDS) on model0 trained on both THUC (TinyHand Uncluttered Dataset) and THC with THUC as the source distribution for shift calculations.

Like VGG16, VGG19 contains five convolution blocks, each ending with a MaxPool layer. The following trends can be observed from the data in Table 6 and Table 7.

- There is a distribution shift between the model's own training sets, i.e., between THUC and THC (R values of 46.29, 30.18, and 11.02 for layers 1–3, respectively). This shift is the smallest among the pairs because the model was trained on both datasets, although in terms of visible similarity, THUC and UDS1 would be the most similar. In addition, the image sizes of the two training datasets differ (THUC: 640 × 480 pixels; THC: 1920 × 1080 pixels), indicating a change in the sensors capturing the data.

- The covariate shift values for UDS1_R (right-handed gestures) are lower than those for UDS1_L (left-handed gestures) in layers 1 and 2 (134.27 vs. 201.43 for layer 1 and 53.63 vs. 68.53 for layer 2). This is logical, since the original training sets contain only right-handed gestures, so the model may be biased toward them.

- UDS1 is more similar to the THUC training set than UDS2 or UDS3 are, and similarity decreases in that order. This behaviour is attributed to the similar, uncluttered, clean backgrounds of THUC and UDS1, as the layer 1 shift values show: 134.27 for UDS1_R, 329.49 for UDS2, and 610.39 for UDS3. The same trend holds for layer 2.

- THC, with its cluttered backgrounds and standing humans, is interpreted as most similar to UDS3, followed by UDS2, and finally UDS1, which shows a large domain shift. The deeper layers follow the same trend. This is expected, since UDS3 contains the user standing and gesturing in front of a cluttered background and is thus structurally most similar to THC. For example, the layer 1 shift values are 62.79, 178.75, and 1897.07 for UDS3, UDS2, and UDS1_R, respectively.

- VDS is the least similar to THC or THUC and reflects results from the previous studies. Shift values for VDS are the largest in both tables for all three layers as compared to other datasets.

- Accuracy scores for the user datasets are higher than in the previous study, which is also expected, since the model captures more variance. Even so, the metric still detects a shift in the distribution of the incoming data. UDS2 and UDS3 may be used for DA, while UDS1 can be classified with the current model. For DA, a mix of THUC, THC, and UDS1 can serve as the “old” data, with UDS2 or UDS3 as the “new” data.

5.4. Examination of Batch Size for Shift Calculation

The sample of images in a dataset should be statistically representative of the population under consideration. Under the constraints of sparse data and limited computation time during operation, this is a highly pertinent design consideration. All of the preceding tests used about 200 images per batch for the statistical analysis. A final study compared a batch of 420 images of UDS1 left-handed gestures with a smaller batch of 210 images. Table 8 contains the R values indicating domain shifts with respect to the VGG19 model trained on THC and THUC, as described in Section 5.3. For a given layer, and with either THC or THUC as the source set, the R values of the smaller and larger batches differ by at most 21.8% (layer 2, with THUC as the source set). Moreover, the discrepancy values follow the same trend for either batch size. Hence, the smaller number of images was deemed safe to use for the analysis. A third reason supporting small batches is that user data generated from a few seconds of a single user gesturing contain significantly less variance than large, publicly available datasets. Consequently, more complex datasets require larger batches, whereas smaller batches may suffice for simpler ones.
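The two criteria used above, a bounded relative difference in R between batch sizes and an unchanged trend across layers, can be checked with a small helper. The sketch is hypothetical: the difference is assumed to be taken relative to the larger batch, the 25% tolerance is a placeholder, and the example numbers are illustrative, not the values in Table 8.

```python
def _order(values):
    """Indices of values sorted ascending, i.e. the trend across layers."""
    return sorted(range(len(values)), key=lambda i: values[i])


def batch_size_check(r_small, r_large, max_rel_diff=0.25):
    """Compare per-layer R values from a small and a large batch.

    Returns the largest relative difference (w.r.t. the large batch) and whether
    the small batch is acceptable: difference within tolerance and same trend.
    """
    rel = [abs(s - l) / l for s, l in zip(r_small, r_large)]
    same_trend = _order(r_small) == _order(r_large)
    return max(rel), same_trend and max(rel) <= max_rel_diff


# Illustrative R values for layers 1-3 (not taken from Table 8)
worst, ok = batch_size_check([200.0, 70.0, 30.0], [190.0, 60.0, 28.0])
print(round(worst, 3), ok)  # 0.167 True
```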

Table 8.

Covariate shift (R) for User Seated, Uncluttered Datasets with Left-Handed Gestures (UDS1_L) on model0 trained on both THUC (TinyHand Uncluttered Dataset) and THC (TinyHand Cluttered) with THUC and THC as source distributions for examination of batch size of target distribution.

6. Conclusions and Future Work

A novel domain adaptation framework, SIMLea, is presented that leverages large, publicly available multimodal datasets and high-accuracy models trained on them to turn feedback from the human into auto-labels during continuous operation. In contrast to current research on domain adaptation and domain generalization, SIMLea is designed to use feedback from multiple, incommensurable modes of communication. This makes the framework mode agnostic and flexible in its application, in addition to reducing mislabelling.

Domain adaptation allows the model's learnt feature representations to be extended with those of new, covariate domain-shifted data. For human–robot collaboration, this allows the model to be personalized to the collaborating human. A statistical measure of similarity drives the adaptation framework, so models under SIMLea learn only relevant data and start and stop the computationally heavy learning process only when required. During continuous operation, model learning is thus statistically and heuristically driven. SIMLea allows empirical thresholds and standards to be combined with application-specific heuristics. It is an add-on to the ML model and requires no change to its architecture. Finally, while human-generated datasets are the primary focus of this work, the framework can be used for DA in other applications, as long as a feedback-giving agent exists that can be used for auto-labelling. The results show a lowering of covariate shift values between the training and shifted data on iterative re-training with feedback-enabled labelling. For the user dataset with a seated user and uncluttered background, accuracy increased by 25.8% after one iteration and two epochs of training, and for the user dataset with a cluttered background, accuracy increased by 22.9% after two epochs using SIMLea.

Several works in HRC have made establishing context a key component of the HRCom setup. In future work, additional contextual information available to the robot could reduce the reliance on heuristics. For example, if the robot's perception system infers from context that the incoming communication is not relevant to the task (e.g., the human is speaking to another agent about a task outside the current robot's purview), the robot can simply reject the data, without expending computational resources on SIMLea to determine whether the data are similar enough to be adapted.

To provide a consolidated command from multiple input modes, the inputs must be combined through fusion. Combining complementary input modes will make communication more reliable, reduce the complexity of the robot's understanding of human behaviour, and hence improve the overall robustness of the HRC system. Fusion techniques and multimodal communication can also help with datasets of lower quality than required, with errors arising from faulty sensors, and with robustness in diverse environments. For example, in noisy environments, speech and vision data can be fused with more weight placed on the vision data.

The loss of variance contained in the large, standard dataset during the continued application of SIMLea is a limitation of the framework. From a safety standpoint, the higher specificity of the model is an asset, the trade-off being that, as the model becomes more specific (towards the humans working with it), TL under SIMLea will need to be used more and more. This is an inherent limitation of the DA, DG, and TL techniques when dealing with shifted datasets that are much smaller than the source ones. From an industrial perspective, calibration drift is tackled through the obsolescence of legacy information and the tuning of the model to the new incoming data. Nonetheless, TL under SIMLea with portions of “old” data ensures the persistence of features from those datasets, while gradually lessening them over the operation life of the model.

Given the changing variance of the models, future work may add another checkpoint to SIMLea that stops further learning once the representation space of the current model contains so little variance that it is highly biased towards its training data. Otherwise, all incoming data may be flagged as too dissimilar, requiring the model to be trained from scratch and further reducing the variance in the learnt features. The model would encounter such a situation only after multiple changes in the incoming datasets. Statistical analysis of the feature space, together with heuristics, may be used to indicate when the model has reached this high-bias, low-variance state.

Future work also includes a study of the scalability of the framework, in which its processing times are calculated and tracked to assess its suitability for real-time application. Because the re-training datasets were kept small and the number of epochs was low, the iterative re-training runs did not take significant amounts of time; nonetheless, formal analyses aimed at real-time operation will be carried out in future work. Although tests were conducted on a simulated long-serving model, more work is needed on this front, particularly since computational costs rise as more data are added.
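Tracking processing times could start from something as simple as timing each re-training iteration. The sketch below is an illustrative assumption, not part of the reported experiments: `time_adaptation_iterations`, `dummy_retrain_step`, and the 5 s budget are hypothetical placeholders for a real fine-tuning step and a real-time requirement.

```python
import time

def time_adaptation_iterations(batches, retrain_step, budget_s=None):
    """Record the wall-clock time of each re-training iteration.

    retrain_step: hypothetical callable that adapts the model on one batch.
    budget_s: optional hypothetical per-iteration real-time budget in seconds.
    Returns the list of durations and whether every iteration met the budget.
    """
    durations = []
    for batch in batches:
        start = time.perf_counter()  # monotonic, high-resolution clock
        retrain_step(batch)
        durations.append(time.perf_counter() - start)
    within_budget = budget_s is None or max(durations, default=0.0) <= budget_s
    return durations, within_budget

def dummy_retrain_step(batch):
    # Stand-in for fine-tuning: cost grows with the amount of data in the batch
    sum(x * x for x in batch)

batches = [list(range(n)) for n in (10_000, 20_000, 40_000)]
durations, ok = time_adaptation_iterations(batches, dummy_retrain_step, budget_s=5.0)
print([f"{d:.4f}s" for d in durations], ok)
```

Logging durations per iteration, rather than a single total, makes it possible to see whether cost grows as data accumulate over the model's operational life.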

Author Contributions

D.M., development of methodology presented, formal analysis, data curation, investigation, software development of implemented framework and model, visualization, writing—original draft; H.N., funding acquisition, project administration, supervision, writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the UBC Office of the Vice-President, Research and Innovation, in the form of seed funding to establish research on the digitalization of manufacturing.

Institutional Review Board Statement

Not applicable, since the datasets used are publicly available, were created by other researchers, and are cited as such in the text. The user datasets used for testing feature the authors of the paper.

Informed Consent Statement

Not applicable.

Data Availability Statement

The new user data generated and used in this study are available on request from the corresponding author because of user privacy.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Alternate Shift Analysis with THUC Training Set as Source

The domain shift calculations were repeated with the THUC training set as the source to verify that the THUC test and training sets are drawn from the same (or nearly the same) distribution, so that the test set can be used as a representative of the THUC dataset. Table A1 lists the covariate representation shift values for this analysis. The trends follow the intuition about the similarity between the datasets, especially for layers 2 and 3, as in the corresponding analysis that uses the THUC test set as the source.

While either the THUC training set or the THUC test set is effective as the source before re-training for gauging the similarity between the source and shifted data, this no longer holds after re-training. As mentioned earlier, the "training set" is then no longer just THUC; rather, it is a mixture of THUC training images and auto-labelled shifted user images. Thus, to keep the benchmarking parameters invariant, the THUC test set was used for the calculations. In addition, the effect of source variance on the divergence can be examined mathematically.

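For univariate Gaussian source and target distributions, $P_s = \mathcal{N}(\mu_s, \sigma_s^2)$ and $P_t = \mathcal{N}(\mu_t, \sigma_t^2)$, the KL divergence can be written as:

$$D_{KL}(P_s \,\|\, P_t) = \ln\frac{\sigma_t}{\sigma_s} + \frac{\sigma_s^2 + (\mu_s - \mu_t)^2}{2\sigma_t^2} - \frac{1}{2}$$

From Figure 4, it can be noted that the standard deviation of THUC, $\sigma_s$, is large owing to its greater variance in features, while that of the user datasets or VDS, $\sigma_t$, is smaller, so that $\sigma_s > \sigma_t$. To determine whether $D_{KL}$ increases monotonically with $\sigma_s$, we take its first-order derivative with respect to $\sigma_s$, keeping $\sigma_t$ constant and the means of the source and target distributions equal ($\mu_s = \mu_t$):

$$\frac{\partial D_{KL}}{\partial \sigma_s} = -\frac{1}{\sigma_s} + \frac{\sigma_s}{\sigma_t^2} = \frac{\sigma_s^2 - \sigma_t^2}{\sigma_s\,\sigma_t^2}$$

For $D_{KL}$ to be monotonically increasing in $\sigma_s$, $\partial D_{KL}/\partial \sigma_s$ must be positive over the range of $\sigma_s$ considered. Since $\sigma_s, \sigma_t > 0$, this requires:

$$\sigma_s > \sigma_t$$

This inequality holds for the data being handled, as noted above. Therefore, for the same $\sigma_t$:

$$\sigma_{s,1} > \sigma_{s,2} > \sigma_t \;\Longrightarrow\; D_{KL}(\sigma_{s,1}) > D_{KL}(\sigma_{s,2})$$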

Thus, as the variance of the source dataset decreases, the divergence decreases, all other factors being unchanged. Consequently, a source dataset without high variability leads to indications of smaller covariate shift, that is, lower R values. This explains the lower R values obtained in the analysis that uses the THUC test set rather than the training set as the source.
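The monotonicity argument can be checked numerically. The sketch below evaluates the closed-form KL divergence between two univariate Gaussians, $D_{KL}(\mathcal{N}(\mu_s, \sigma_s^2) \,\|\, \mathcal{N}(\mu_t, \sigma_t^2))$, with equal means, together with its analytical derivative in $\sigma_s$. The Gaussian form and the specific standard deviations are illustrative assumptions, not values measured on THUC or the user datasets.

```python
import math

def gaussian_kl(sigma_s, sigma_t, mu_s=0.0, mu_t=0.0):
    """Closed-form KL( N(mu_s, sigma_s^2) || N(mu_t, sigma_t^2) )."""
    return (math.log(sigma_t / sigma_s)
            + (sigma_s ** 2 + (mu_s - mu_t) ** 2) / (2 * sigma_t ** 2)
            - 0.5)

def dkl_dsigma_s(sigma_s, sigma_t):
    """Analytical derivative of the equal-mean KL with respect to sigma_s."""
    return (sigma_s ** 2 - sigma_t ** 2) / (sigma_s * sigma_t ** 2)

sigma_t = 1.0  # fixed, smaller target spread (e.g. a user dataset)
for sigma_s in (2.0, 1.5, 1.0):  # source spread shrinking towards sigma_t
    print(f"sigma_s={sigma_s}: KL={gaussian_kl(sigma_s, sigma_t):.4f}, "
          f"dKL/dsigma_s={dkl_dsigma_s(sigma_s, sigma_t):.4f}")
```

As $\sigma_s$ shrinks from 2.0 to 1.5 to 1.0 with $\sigma_t = 1$, the divergence falls from about 0.81 to 0.22 to 0, and the derivative stays positive until $\sigma_s = \sigma_t$, where it reaches zero.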

The THUC training set does contain high variability in features, but accessing a representative set for analysis every time during operation would be time-consuming. In some cases, a trained model may be available without access to its training data. With such cases in mind, the THUC test set was used as the source for SIMLea. Furthermore, because all other factors are kept constant, the effect of the standard deviation of the THUC test set applies equally to every step of the adaptation, which keeps the process consistent. Thus, for this application, using the THUC test set as the source for further DA calculations was deemed satisfactory.

Table A1.

Covariate shift (R) and accuracy values for TinyHand Uncluttered Dataset (THUC), User Seated, Uncluttered (UDS1), User Seated, Cluttered (UDS2), User Standing, Cluttered (UDS3), and Virtual Datasets (VDS) using THUC training set as source.

| Tested On | THUC | UDS1 | UDS2 | UDS3 | VDS |
|---|---|---|---|---|---|
| Accuracy | 0.96 | 0.58 | 0.48 | 0.16 | 0.09 |
| R, Layer 1 | 146.08 | 417.86 | 3863.28 | 4689.43 | 8211.66 |
| R, Layer 2 | 183.72 | 241.45 | 3037.89 | 8296.30 | 4701616.77 |
| R, Layer 3 | 1.63 | 45.87 | 93.03 | 72.51 | 409.55 |
| R, Layer 4 | 3.98 | 10.94 | 10.65 | 25.16 | 12.11 |
| R, Layer 5 | 0.18 | 1.72 | 3.29 | 4.94 | 5.83 |

Layer n refers to the n-th MaxPool layer of the network.
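Table A1 reports R separately for each MaxPool layer. The definition of R is not restated in this appendix, so the sketch below only illustrates one plausible layer-wise computation under stated assumptions: each image is summarized per channel by its spatially averaged activation, a Gaussian is fitted per channel for each dataset, and the per-channel KL divergences are averaged. The function names and synthetic activations are hypothetical, and the resulting magnitudes are not comparable with Table A1.

```python
import numpy as np

def channel_kl(mu_s, sd_s, mu_t, sd_t):
    """Elementwise KL(N(mu_s, sd_s^2) || N(mu_t, sd_t^2)) over channels."""
    return np.log(sd_t / sd_s) + (sd_s ** 2 + (mu_s - mu_t) ** 2) / (2 * sd_t ** 2) - 0.5

def layer_shift(src_acts, tgt_acts, eps=1e-8):
    """Hypothetical shift score for one layer.

    src_acts, tgt_acts: activations of one MaxPool layer, shape (N, C, H, W).
    """
    src = np.asarray(src_acts, dtype=float).mean(axis=(2, 3))  # (N_src, C)
    tgt = np.asarray(tgt_acts, dtype=float).mean(axis=(2, 3))  # (N_tgt, C)
    kl = channel_kl(src.mean(axis=0), src.std(axis=0) + eps,
                    tgt.mean(axis=0), tgt.std(axis=0) + eps)
    return float(kl.mean())  # average over channels

rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(200, 8, 4, 4))      # synthetic "source" activations
same = rng.normal(0.0, 1.0, size=(200, 8, 4, 4))     # drawn from the same distribution
shifted = rng.normal(0.5, 1.0, size=(200, 8, 4, 4))  # mean-shifted "target"
print(layer_shift(src, same), layer_shift(src, shifted))
```

Computing the score per layer in this way allows shallow and deep layers to be compared, in the spirit of the per-layer rows of Table A1; the small `eps` only guards against division by zero for dead channels.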

References

1. Ullah, I.; Adhikari, D.; Khan, H.; Anwar, M.S.; Ahmad, S.; Bai, X. Mobile robot localization: Current challenges and future prospective. Comput. Sci. Rev. 2024, 53, 100651.

2. Mukherjee, D.; Gupta, K.; Chang, L.H.; Najjaran, H. A Survey of Robot Learning Strategies for Human-Robot Collaboration in Industrial Settings. Robot. Comput. Integr. Manuf. 2022, 73, 102231.

3. Mukherjee, D. Statistically-Informed Multimodal Domain Adaptation in Industrial Human-Robot Collaboration Environments. Ph.D. Thesis, University of British Columbia, Vancouver, BC, Canada, 2023.

4. Mukherjee, D.; Gupta, K.; Najjaran, H. A Critical Analysis of Industrial Human-Robot Communication and Its Quest for Naturalness Through the Lens of Complexity Theory. Front. Robot. AI 2022, 9, 477.

5. Drummond, N.; Shearer, R. The Open World Assumption; Technical report; University of Manchester: Manchester, UK, 2006.

6. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. (IJCV) 2015, 115, 211–252.

7. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision – ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

8. Motiian, S.; Piccirilli, M.; Adjeroh, D.A.; Doretto, G. Unified Deep Supervised Domain Adaptation and Generalization. arXiv 2017, arXiv:1709.10190.

9. Kothandaraman, D.; Nambiar, A.; Mittal, A. Domain Adaptive Knowledge Distillation for Driving Scene Semantic Segmentation. arXiv 2020, arXiv:2011.08007.

10. Long, M.; Zhu, H.; Wang, J.; Jordan, M.I. Unsupervised Domain Adaptation with Residual Transfer Networks. arXiv 2016, arXiv:1602.04433.

11. Chen, M.; Weinberger, K.Q.; Blitzer, J. Co-training for domain adaptation. Adv. Neural Inf. Process. Syst. 2011, 24. Available online: https://papers.nips.cc/paper_files/paper/2011/hash/93fb9d4b16aa750c7475b6d601c35c2c-Abstract.html (accessed on 1 March 2025).

12. Blum, A.; Mitchell, T. Combining labeled and unlabeled data with co-training. In Proceedings of the Eleventh Annual Conference on Computational Learning Theory, Madison, WI, USA, 24–26 July 1998; pp. 92–100.

13. Schwaiger, A.; Sinhamahapatra, P.; Gansloser, J.; Roscher, K. Is uncertainty quantification in deep learning sufficient for out-of-distribution detection? AISafety@IJCAI 2020, 54, 1–8.

14. Hendrycks, D.; Gimpel, K. A Baseline for Detecting Misclassified and Out-of-Distribution Examples in Neural Networks. arXiv 2016, arXiv:1610.02136.

15. Chi, S.; Tian, Y.; Wang, F.; Zhou, T.; Jin, S.; Li, J. A novel lifelong machine learning-based method to eliminate calibration drift in clinical prediction models. Artif. Intell. Med. 2022, 125, 102256.

16. Mukherjee, D.; Hong, J.; Vats, H.; Bae, S.; Najjaran, H. Personalization of industrial human–robot communication through domain adaptation based on user feedback. User Model. User Adapt. Interact. 2024, 34, 1327–1367.

17. Panagou, S.; Neumann, W.P.; Fruggiero, F. A scoping review of human robot interaction research towards Industry 5.0 human-centric workplaces. Int. J. Prod. Res. 2024, 62, 974–990.

18. Mukherjee, D.; Gupta, K.; Najjaran, H. An AI-powered Hierarchical Communication Framework for Robust Human-Robot Collaboration in Industrial Settings. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 29 August–2 September 2022; pp. 1321–1326.

19. Stacke, K.; Eilertsen, G.; Unger, J.; Lundström, C. Measuring Domain Shift for Deep Learning in Histopathology. IEEE J. Biomed. Health Inform. 2021, 25, 325–336.

20. Stacke, K.; Eilertsen, G.; Unger, J.; Lundström, C. A Closer Look at Domain Shift for Deep Learning in Histopathology. arXiv 2019, arXiv:1909.11575.

21. Nisar, Z.; Vasiljevic, J.; Gancarski, P.; Lampert, T. Towards Measuring Domain Shift in Histopathological Stain Translation in an Unsupervised Manner. In Proceedings of the 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), Kolkata, India, 28–31 March 2022.

22. Ketykó, I.; Kovács, F. On the Metrics and Adaptation Methods for Domain Divergences of sEMG-based Gesture Recognition. arXiv 2019, arXiv:1912.08914.

23. Vangipuram, S.K.; Appusamy, R. A Survey on Similarity Measures and Machine Learning Algorithms for Classification and Prediction. In Proceedings of the International Conference on Data Science, E-Learning and Information Systems 2021, New York, NY, USA, 5–7 April 2021; pp. 198–204.

24. Li, X.; Yang, X.; Ma, Z.; Xue, J.H. Deep metric learning for few-shot image classification: A Review of recent developments. Pattern Recognit. 2023, 138, 109381.

25. Chechik, G.; Sharma, V.; Shalit, U.; Bengio, S. Large scale online learning of image similarity through ranking. J. Mach. Learn. Res. 2010, 11, 1–27.

26. Ben-David, S.; Blitzer, J.; Crammer, K.; Pereira, F. Analysis of representations for domain adaptation. Adv. Neural Inf. Process. Syst. 2006, 19. Available online: https://dl.acm.org/doi/10.5555/2976456.2976474 (accessed on 18 March 2025).

27. Harmouch, M. 17 Types of Similarity and Dissimilarity Measures Used in Data Science. 2021. Available online: https://towardsdatascience.com/17-types-of-similarity-and-dissimilarity-measures-used-in-data-science-3eb914d2681/ (accessed on 1 March 2025).

28. Jiang, L.; Guo, Z.; Wu, W.; Liu, Z.; Liu, Z.; Loy, C.C.; Yang, S.; Xiong, Y.; Xia, W.; Chen, B.; et al. DeeperForensics Challenge 2020 on Real-World Face Forgery Detection: Methods and Results. arXiv 2021, arXiv:2102.09471.

29. Perera, P.; Nallapati, R.; Xiang, B. OCGAN: One-class Novelty Detection Using GANs with Constrained Latent Representations. arXiv 2019, arXiv:1903.08550.

30. Aggarwal, C.C.; Yu, P.S. Outlier detection for high dimensional data. In Proceedings of the 2001 ACM SIGMOD International Conference on Management of Data, Santa Barbara, CA, USA, 21–24 May 2001; pp. 37–46.

31. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

32. Bao, P.; Maqueda, A.I.; del Blanco, C.R.; García, N. Tiny hand gesture recognition without localization via a deep convolutional network. IEEE Trans. Consum. Electron. 2017, 63, 251–257.

33. Warden, P. Speech Commands: A Dataset for Limited-Vocabulary Speech Recognition. arXiv 2018, arXiv:1804.03209.

34. Mukherjee, D.; Singhai, R.; Najjaran, H. Systematic Adaptation of Communication-focused Machine Learning Models from Real to Virtual Environments for Human-Robot Collaboration. arXiv 2023, arXiv:2307.11327.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).